Abstract

Portfolio optimization is about building an investment decision on a set of candidate assets with finite capital. Generally, investors should devise rational compromise to return and risk for their investments. Therefore, it can be cast as a biobjective problem. In this work, both the expected return and conditional value-at-risk (CVaR) are considered as the optimization objectives. Although the objective of CVaR can be optimized with existing techniques such as linear programming optimizers, the involvement of practical constraints induces challenges to exact mathematical methods. Hence, we propose a new algorithm named F-MOEA/D, which is based on a Pareto front evolution strategy and the decomposition based multiobjective evolutionary algorithm. This strategy involves two major components, i.e., constructing local Pareto fronts through exact methods and picking the best one via decomposition approaches. The empirical study shows F-MOEA/D can obtain better approximations of the test instances against several alternative multiobjective evolutionary algorithms with a same time budget. Meanwhile, on two large instances with 7964 and 9090 assets, F-MOEA/D still performs well given that a multiobjective mathematical method does not finish in 7 days.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Portfolio optimization models the investment decision mathematically, in which investors are going to allocate their finite capital into a set of assets such as stocks, funds and derivatives. The decision is supposed to devise a compromise to both return and risk. Modern portfolio theory is introduced by Markowitz [1], and his mean-variance (MV) model lays the foundation of the investment research. In the MV model, return and risk of an asset is statistically quantified with its expected return and standard deviation [2, 3]. Various alternative risk measures in addition to variance are also considered by Markowitz. However, due to computational problems, Markowitz adopts variance as the risk measure in his analyzes [4]. Variance is not theoretically robust because it evaluates both positive and negative returns as risk when the distributions of returns are asymmetric [5, 6]. Therefore, the downside risk measures, only considering relatively poor returns, are developed [7]. The notable downside risk measures involve semicovariance [8], lower partial moments [4], Value-at-Risk (VaR) [9] and Conditional Value-at-Risk (CVaR) [10].

Among these measures, VaR is popular and it has achieved a high status of being adopted in the industry field. Nevertheless, it meets several significant problems. First, it is unstable with empirical discreteness loss [11]. Secondly, it fails to be coherent [12]. Thirdly, minimizing a VaR is a computational complex task because it is non-convex and has many local minima [13]. On the contrary, these problems are no longer the obstacles when CVaR is employed [11]. Hence, we adopt CVaR as the measure of risk in this work. With a given confidence level 99%, if the VaR of a portfolio is 10,000 dollars, it means that with a 99% chance the loss will not exceed 10,000 dollars. However, the CVaR denotes the conditional expectation of losses above 10,000 dollars. It should be noted that the optimization of CVaR involves the optimization of VaR because these definitions ensure that VaR will never exceeds CVaR.

Basic portfolio problems can be easily solved through mathematical programming methods [10, 14]. Notwithstanding, in the real investment, this basic model is extended when various trading restrictions and preferences of investors are introduced as constraints. The efficiency of exact mathematical methods will deteriorate when constraints are included. Further, the deterioration will be catastrophic for large-scale portfolio problems if a cardinality constraint, restricting the number of selected assets, is involved [15]. For these constrained portfolio problems, they have been intensively studied in the past several decades [3, 16]. Since they are non-convex in most cases, heuristics, such as evolutionary algorithms (EAs), and hybrid algorithms are thus broadly adopted for them.

In the field of portfolio optimization, researchers are used to devise tailored EAs for specific constraints and models (resulting in different objective functions). Most of the studies focus on the MV model [3, 16,17,18,19]. Chang et al. develop three heuristic algorithms based on genetic algorithms, tabu search and simulated annealing for cardinality constrained portfolio optimization. The results indicate that no algorithm outperformed others [20]. This work is among the first to solve the constrained portfolio problem via EAs. Chen et al. devise a tailored variable representation and initialization scheme for the EA. These not only make the generated solutions naturally satisfy the constraints but also significantly expedite the convergence of the algorithm [21]. Kalayci et al. present a hybrid metaheuristic algorithm, it is composed of elitism mechanism from genetic algorithms, modification rate from artificial bee colony optimization approach and the Gaussian formulation from continuous ant colony optimization. The comparisons against other methods denote the proposed algorithm is efficient [22]. Drezewski et al. design an agent-based co-evolutionary multiobjective algorithm for portfolio problems. This algorithm uses two subpopulation to optimize the objectives and employs a competition mechanism for resources. The results show that the algorithm can find good solutions with a high level of diversity [23].

In the past few years, downside risk measures have been investigated more frequently [7]. EAs are also adapted for these problems. Lwin et al. propose a learning guided nonparametric approach (MODE-GL) for the mean-VaR model. The algorithm adopts a learning mechanism for the selection of assets and it shows the potential for good convergence [13]. Najafi and Mushakhian develop a hybrid algorithm with genetic algorithm and particle swarm optimization algorithm for the portfolio problem, measuring the risk with both semivariance and CVaR. Computational results show that the algorithm approximated the Pareto front (PF) well [24]. Mohuya et al. present a new bi-objective fuzzy portfolio model, considering Sharp ratio and VaR as the objectives. Meanwhile, they solve this model through three EAs. According to their observations, multiobjective cellular genetic algorithm is the best among these employed EAs [25]. Konstantinos Liagkouras develops a three-dimensional encoding multiobjective evolutionary algorithm (TDMEA). The algorithm is expected to be efficient on the large-scale problems, because the three-dimensional encoding can keep the length of chromosome invariant. This algorithm is applied to MV, mean-VaR and mean-CVaR model with the cardinality constraint and the results obtained are significant better than two compared well-known MOEAs [26].

Since EAs belong to a heuristic algorithm cluster, these algorithms generally can only get approximate optimal solutions. Therefore, researchers also attempt to improve the accuracy of the EAs with the help of exact mathematical methods.

The hybrid algorithms for portfolio optimization can be divided into two categories regarding the algorithm framework. Moral-Escudero et al. present a hybrid strategy to make use of a genetic algorithm and a quadratic programming optimizer. The genetic algorithm is adopted to tackle the cardinality constraint and the quadratic programming optimizer is implemented for finding the optimal capital weights of the selected assets [27]. Gaspero et al. incorporate a hill climbing strategy with a quadratic programming optimizer. The asset combinations are determined by a hill climbing strategy and the optimal allocations of capital are obtained from the quadratic optimizer [28]. These two hybrid algorithms demand multiple runs for aggregation functions to approximate the PF. These hybrid algorithms based on the single-objective framework may not be efficient enough. On the other hand, Branke et al. propose a hybrid MOEA for the MV model, basing on the multiobjective framework. It is able to approximate the PF once it is implemented. Notwithstanding, their algorithm can only work for the MV model with a continuous PF, because it involves frequent judgements of the intersections for local PFs, which are almost non-existent when the PF is discrete [29].

In this work, we propose a hybrid algorithm for constrained portfolio problems. Specifically, a multiobjective mathematical method, AUGMECON2 [30], and a linear programming optimizer are utilized to construct the local PFs for every determined asset combination. Then, the decomposition approach is applied to rank these local PFs [31]. This proposed MOEA, named as F-MOEA/D, maintains a local PF for each individual. It is different from general point-based MOEA, preserving only one point for an individual. F-MOEA/D employs an exact mathematical method for finding the optimal capital weights of selected assets. So it might be more accuracy than general MOEAs. On the other hand, it takes the advantages of MOEAs and thus may require less computational resources than an exact mathematical method on large-scale problems.

The rest of this paper is organized as follows. The next section introduces the definitions of multiobjective optimization and CVaR. In the subsequent section, a practical mean-CVaR model is presented followed by which the proposed F-MOEA/D, including the main framework, the decomposition approach and the generate operators for this front-based MOEA is detailed. The empirical results are presented and discussed next. The final section concludes the paper.

Problem formulation

This section introduces the optimization model studied in the paper.

Multiobjective optimization

A multiobjective optimization problemFootnote 1 is stated as

where \(\Omega \) is the decision space, \(F:\Omega \rightarrow {\mathbb {R}}^m\) consists of m real-valued objective functions and \({\mathbb {R}}^m\) is the objective space. Since the objective values are vectors rather than scalars, a partial order relation, dominance, is introduced. For two solutions a and b, the solution a is considered to dominate the solution b if and only if \(f_i(a)\le f_i(b)\) for all \(\ i \in \{1,\ldots ,m\}\) and \(f_j(a) < f_j(b)\) for at least one \(\ j \in \{1,\ldots ,m\}\). The optimal (non-dominated) solutions of this problem constitute a Pareto set (PS) and the optimal objective values compose a Pareto front (PF) [14, 32].

Risk measures

Variance is an important and widely studied risk measure for the portfolio problem [3, 19]. Nonetheless, this risk measure assumes that the distribution for return of assets should be normal. For example, Fig. 1 exemplifies the variance risk measure for a normal distributed asset. According to the perception of risk for investors, the proportion with lower return than the expected return is considered as the risk. This risk measure is applicable when the distribution is symmetrical about the mean line, otherwise the variance can barely depict the downside risk.

VaR measures the maximum downside risk of a portfolio with a given confidence level and CVaR is the expected loss above the VaR. Figure 2 illustrates the VaR and CVaR intuitively. The vertical axis is about the frequency and the horizontal is about the loss. It depicts a distribution of the loss when the confidence level is \(\alpha \) and the maximum downside risk is \(\beta \).

Formally, assume there are n available assets. \(\mathbf{r }\) denotes the return of the assets and its probability distribution is assumed as \(p(\mathbf{r })\) for convenience. Define \(\mathbf{w }=(w_1,\ldots ,w_n)^{\mathrm{T}}\) as the invested portfolio weight. \(f(\mathbf{w },\mathbf{r })=-\mathbf{r }^{\mathrm{T}}\mathbf{w }\) is the loss and thus the cumulative distribution function is given as

where \(\beta \in {\mathbb {R}}\).

For a given confidence level \(\alpha \), CVaR of the loss is

where \({\mathrm{VaR}}_{\alpha }= {\mathrm{inf}}\{\beta | \Psi (\mathbf{w },\beta ) \ge \alpha \}\). Further, Eq. (1) can be approximated by sampling the probability distribution in \(\mathbf{r }\) [10]. The approximated alteration of Eq. (1) can be stated as

where \((\cdot )^+\) indicates only the positive numbers are considered in this expectation.

Nevertheless, directly minimizing CVaR via Eq. (2) is unachievable with existing mathematical programming methods because it is a conditional expectation instead of a direct formula. Rockafellar and Uryasev; hence, remodel it in a linear formulation [10]. Let \(\varGamma \) be the set of assets, \(|\varGamma |=n\), and \(\varPi \) be the set of time intervals, \(|\varPi |=t\). The sampling from real markets generates a collection of normalized return matrix \(\mathbf{R }\) as

With the return matrix Eq. (3), the linear formulation for CVaR is given as

where \(\phi \) and \(\gamma _i\) that \(i\in \varPi \) are auxiliary variables. They have proved the solutions for these two CVaR models are same [10].

Practical mean-CVaR model

To build a practical mean-CVaR model, a normalized stock market indices over time are included for measuring the loss. Further, four practical constraints are also included [15, 18]. They are (i) cardinality constraint: it restricts the number of assets (K) for composing a portfolio, (ii) floor and ceiling constraint: it determines the minimum and maximum limits of the invested proportion for each asset, (iii) pre-assignment constraint: it denotes which asset must be selected regarding the preference of investors, and (iv) round lot constraint: it demands each asset should be held as multiples of trading lots.

Thereby, the practical mean-CVaR model, involving the normalized stock market index \(\varvec{\zeta }=(\zeta _1,\ldots ,\zeta _t)\) is stated as

where the stock market indices, \(\varvec{\zeta }\), is considered in the risk CVaR. The return R is inverted for minimization and the vector \(\varvec{\mu }=(\mu _1,\ldots ,\mu _n)^{\mathrm{T}}\) includes the mean values for every rows in the return matrix. Equation (6) requires that all the capital should be invested. Equation (7) is the cardinality constraint (i.e., just K assets are selected) and \(\mathbf{s }=\{s_1,\ldots ,s_{n}\}^{\mathrm{T}}\) is an indicator vector; \(s_i=1\) if the asset i is selected, and \(s_i=0\) otherwise. Equation (8) is the floor and ceiling constraint. Besides, Eq. (9) represents that the asset i must be included in a portfolio if \(z_i=1\). It is a pre-assignment constraint. Then Eq. (10) defines the round lot constraint and \(\tau \) is a multiple. The multiples for every assets are set to be a same constant, with which 1 is divisible, since it will be really hard to handle flexible ones. In that case, it will be beyond the scope of the main discussion in this work. Finally, Eq. (11), which is the discrete constraint, implies that \(s_i\) must be binary. In summary, the decision variables in this practical mean-CVaR model are selection variable s (constrained to Eqs. (7), (9) and (11)), weight variable w (constrained to Eqs. (6), (8) and (10)), and auxiliary variables \(\phi \) and \({\varvec{\gamma }}\).

Note that we evaluate a return of one portfolio by the historical data, although there are some alternatives [1, 33, 34]. Because this article mainly discusses about developing an algorithm for the mean-CVaR model, discussions of different measures for the return will be beyond the scope of this article.

Local PF evolution

Constructing the local PFs

The main computational burden for the exact mathematical method on the problem \(({\mathcal {P}})\) is introduced by the integer variables (cardinality constraint), casting it as a mixed-integer problem (MIP). The demanded computational resource of mathematical methods for MIPs, such as branch-and-bound and cut plane methods, grows exponentially when the amount of the integer variables increases [35, 36].

According to the problem \(({\mathcal {P}})\), there are two pivotal variables (s and w) and they are integer and real numbers. For a portfolio, the solution can be presented as \((\mathbf{s }, \mathbf{w })\). Fortunately, mathematical methods can still be efficient if the integer variables s, denoting the combinations of selected assets, are handled in advance. For instance, Fig. 3a exemplifies three sub-objective spaces for three different combinations of selected assets, \(\mathbf{s }^1\), \(\mathbf{s }^2\) and \(\mathbf{s }^3\) on a real-world portfolio problem. A solution with determinate selected assets and indeterminate weights is presented as \((\mathbf{s }, \cdot )\). The solutions, bundling a variable s with all feasible weights w, will constitute the sub-objective space of \((\mathbf{s }, \cdot )\). The optimal solutions for each sub-objective space will constitute the local PFs, as shown in Fig. 3b. Specifically, the local PFs for variables \(\mathbf{s }^1\) and \(\mathbf{s }^2\) are two curves, and the local PF for variable \(\mathbf{s }^3\) is a data point. For mathematical methods, it is much easier to find the local PFs for the sub-objective spaces than finding the global PF for the original problem \(({\mathcal {P}})\), when the integer variables are determined in advance.

In particular, when the integer part, variable \(\mathbf{s }\), can be found, the problem \(({\mathcal {P}})\) can be converted into the following problem with an additional \(\varepsilon \)-constraint.

where the objective R is involved as a constraint, \(\varGamma ' \in \varGamma \), and \(|\varGamma '|=K\). In the problem \(({\mathcal {P}}_{\varepsilon })\), since the n-dimensional binary variables \(\mathbf{s }\) is eliminated, much less computational resource is required when the exact mathematical method is invoked. There are some alternative algorithms for the \(\varepsilon \)-constraint method. Most of the algorithms will approximate the PF via setting a number of different values of \(\varepsilon \) [14]. These different values of \(\varepsilon \) are called grids.

AUGMECON2 is employed in F-MOEA/D to deal with the problem \(({\mathcal {P}}_{\varepsilon })\). It is a multiobjective mathematical method based on \(\varepsilon \)-method [30]. It inherits some novel strategy from AUGMECON, escaping from weakly Pareto optimal solutions and redundant iterations [37]. Further, AUGMECON2 enhances the computational efficiency since many redundant grids can avoided. To solve the problem \(({\mathcal {P}}_{\varepsilon })\), AUGMECON2 is combined with a linear programming optimizer from Matlab.

According to the discussion above, we can construct an estimation of the local PF with a number of grids when the integer variable \(\mathbf{s }\) is determined. In the next subsection, the method about ranking the local PFs will be presented.

Ranking the local PFs

The optimum of the problem \(({\mathcal {P}}_{\varepsilon })\) contains a set of local Pareto optimal solutions rather than a data point. Therefore, we need to rank the corresponding local PFs for the integer variable \(\mathbf{s }\) appropriately for the environmental selection in MOEAs. Although there should be many different shapes of the local PFs, without loss of generality, we discuss how to perform the elitist selection among local PFs as illustrated in Fig. 4. These curves denote three local PFs for different \(\mathbf{s }\) and all of them includes infinite points. Performing the elitist selection among these local PFs is tricky for existing algorithms. We exploit the decomposition strategy to decompose the multiobjective problem into several scalar optimization subproblems [31, 38].

Suppose a local PF \({\mathcal {F}}\) is constructed when the integer variable \(\mathbf{s }\) is determined. In a minimization problem, we have to evaluate all the points of the local PF \({\mathcal {F}}\) for a scalar subproblem. Then the point with the minimal value can stand for the local PF on the subproblem. In this manner, the comparison between the local PFs is converted into the comparison of some representatives and any decomposition method is applicable. In this work, the Tchebycheff approach is used and it is stated as

where \({\mathcal {F}}\) is the local PF, \(F=(f_1,\ldots ,f_m)\) is an objective vector. \(\lambda \) is a weight vector, \(\lambda =(\lambda _1,\ldots ,\lambda _m)^{\mathrm{T}}\), and \(z^*_i\) is the optimal value of the ith objective. The right side of Eq. (18) involves two parts. First, all the points F in the local PF \({\mathcal {F}}\) are evaluated by the Tchebycheff approach. Second, the minimal value for the Tchebycheff approach can be determined via traversing the all the points of the local PF. If \(g({\mathcal {F}}^1|\lambda ,z^*)\) is smaller than \(g({\mathcal {F}}^2|\lambda ,z^*)\), the local PF \({\mathcal {F}}^1\) is considered to be better than local PF \({\mathcal {F}}^2\).

Figure 4 depicts this elitist selection for two direction vectors (\(\mathbf{v }^1\) and \(\mathbf{v }^2\)), corresponding to two weight vectors. In Fig. 4a, as for local PF\(^1\), point A has the smallest Tchebycheff value with the direction vector \(\mathbf{v }^1\). For local PF\(^2\) and PF\(^3\), points B and C have the smallest Tchebycheff values. Since the smaller \(g({\mathcal {F}}|\lambda ,z^*)\) the better, we can hence conclude the local PF\(^1\) is the best while the point A has the smallest value for the Tchebycheff approach. For the same reason, the local PF\(^2\) is the best in the Fig. 4b.

Framework of F-MOEA/D

MOEA/D is a decomposition-based multiobjective evolutionary algorithm, in which variables are optimized iteratively [31, 39, 40]. The main idea is using the neighborhood information to assist the search of every individual in the population. At each generation, MOEA/D maintains N solutions \(x^1,\ldots ,x^N\), where \(x^i\) is the solution to subproblem i. Assume each subproblem is a minimization problem and the objective function of subproblem is \(g^i(x)\). According to the distances among weight vectors, a T-neighborhood of each solution can be determined since each one corresponds to a weight vector. Thereby, a solution in the T-neighborhood of solution \(x^i\) is defined as a T-neighbor of \(x^i\). The main loop of a basic MOEA/D framework works as follows.

For each subproblem i:

-

1.

Mating: Select some solutions from the neighborhood of subproblem i and generate new solution \(x_{{\mathrm{new}}}^i\) with the selected ones via reproduction operators.

-

2.

Evaluation: For each subproblem j in the T-neighborhood of subproblem i, evaluate \(g^j(x^i_{{\mathrm{new}}})\).

-

3.

Replacement: For each solution \(x^j\) in the T-neighborhood of subproblem i, replace \(x^j\) with \(x^i_{{\mathrm{new}}}\) if \(g^j(x^i_{{\mathrm{new}}})<g^j(x^j)\).

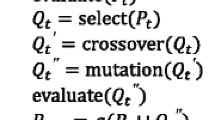

We take MOEA/D-DE in [41] as the basic of F-MOEA/D and Algorithm 1 summarizes F-MOEA/D. Weight vectors and neighborhood structure are initialized first (line 1). Then, the population is initialized as \(x^1,\ldots ,x^N\) and the corresponding local PFs \({\mathcal {F}}\) are obtained with the function Getfront() (line 2). The function Getfront() is based on AUGMECON2 and a linear optimizer. As for the inputs, \({\mathcal {P}}\) is the problem data, \(x^i\) is the integer variable \(\mathbf{s }\), and \(\lceil \root 2 \of {N} \rceil \) is the number of grids for the estimated local PF. For more details, audiences can refer to [30]. Afterwards, the reference point z and the fitness value \(FV^i\) for every subproblem i are initialized (line 3). Lines 4–27 describe the main loop of F-MOEA/D. From lines 6–10, the indices of the mating and replacement pool P are determined. Next, new candidates are generated and their local PFs are estimated (lines 11 and 12). The generate operators are detailed in “Representation and generate operators”. From lines 13–17, the reference point z is updated. Then, no more than \(n_r\) subproblems will be updated if the new candidate is better (lines 18–25). A more complete re-estimation of the local PFs will be performed by setting the grid number as N instead of \(\lceil \root 2 \of {N} \rceil \) (line 28). Finally, \(N^2\) points from all the local PFs are collected and N best solutions based on non-dominated sorting and the crowding distance are output (line 29).

As illustrated in Fig. 5, one example of performing F-MOEA/D on the constrained portfolio problem is presented. The iterative optimization process of F-MOEA/D is distinctively different from the general point-based MOEA. In each generation, each line is a local PF and each local PF corresponds to an individual. These individuals (local PFs) are maintained and evolved instead of points. From Fig. 5a–d (from generation 1 to 26), these sets of individuals (local PFs) gradually converge to the global PF.

Representation and generate operators

Various alternative representations can be employed for the binary vector \(\mathbf{s }\). We follow and slightly alter the representation and generate operators in [15]. They are tailored for the constrained portfolio optimization and the simulator experiments have demonstrated their effectiveness. Specifically, a n-dimensional continuous vector x is employed for the binary vector \(\mathbf{s }\). If L pre-assigned assets are selected, among the assets which are not pre-assigned, the \(K-L\) assets with highest values are selected. Then two generate operators are implemented with same chances.

First, generate operators for continuous variables can be directly employed here. They are differential evolution (DE) [42] and polynomial mutation [43], which never use prior knowledge. They are defined as follows:

-

O1

\(u_i=x^3_i+F\times (x^1_i-x^2_i)\)

\(x_{{\mathrm{new}},i}=\mathrm{polymt}(u_i,\eta _m,p_m)\),

where \(x^1\), \(x^2\) and \(x^3\) are three solutions randomly selected from the population, F is a scaling factor in DE, \(\mathrm{polymt}(u_i,\eta _m,p_m)\) is a polynomial mutation function on \(x_i\) with an index parameter \(\eta _m\) and a mutation probability \(p_m\), and \(x_{{\mathrm{new}}}\) is the new solution.

Second, a tailored operator that utilizes the known information of the portfolio optimization problem is slightly altered as follows.

-

O2

Swap the values of \(x_i\) and \(x_j\): \(x_i\rightleftharpoons x_j\), where the asset i is randomly chosen from the selected assets and the asset j is chosen randomly from the non-selected assets through one of the following strategies

-

S1

Choose an asset which has the highest return (\(\mu _j\))

-

S2

Choose an asset which has the lowest CVaR\(_{\alpha }\)

-

S3

Choose an asset which has the lowest VaR\(_{\alpha }.\)

-

S1

Empirical study

In the experimental studies, F-MOEA/DFootnote 2 is compared with NSGA-II, MOEA/D, SMS-EMOA, CCS-MOEA/D, MODE-GL, TDMEA and AUGMECON2&LP.

-

NSGA-II [44] is among the most popular MOEAs. It is based on the non-dominated sorting and crowding distance measure.

-

MOEA/D [31] is a decomposition-based MOEA. It decomposes a multiobjective problem with aggregation approaches and optimizes the decomposed problems simultaneously.

-

SMS-EMOA [45] is an indicator-based MOEA. It evaluates the generated solutions with hypervolume, thereby this algorithm searches in the direction of the best hypervolume.

-

Compressed Coding Scheme (CCS) is a tailored representation for MOEAs on portfolio optimization [15]. It maps a real-valued vector to both selection and allocation of assets. In this manner, the dependence among variables is expected to be utilized among the search. CCS has been incorporated into three mainstream MOEA frameworks [39]. In this work, CCS-MOEA/D is adopted.

-

The efficient learning-guided hybrid multi-objective evolutionary algorithm (MODE-GL) is proposed to solve portfolio optimization problems with real-world constraints [13]. In this work, a learning-guided strategy is devised to promote the algorithm search towards promising regions.

-

A three-dimensional encoding multiobjective evolutionary algorithm (TDMEA) is devised for the portfolio optimization problem [26]. A coding structure, specially developed to keep the size of chromosome invariant to the size of test instances, makes it efficient for large-scale problems.

-

AUGMECON2 is a multiobjective mathematical method based on \(\varepsilon \)-method [30].

The constraint set is considered as same as in [15, 18], it is cardinality \({K=10}\), floor \(\epsilon _i=0.01\), ceiling \(\delta _i=1.0\), pre-assignment \({z_{30}=1}\) and round lot \(\tau =0.008\). Besides, three values of \(\alpha \) are commonly considered: 0.90, 0.95 and 0.99, and \(\alpha =0.99\) is chosen in this paper.

Concerning the parameters, they are summarized in Table 1 and almost of them are set same as in the original literature. These parameters are the population size, parameters for generate operators, neighborhood size of MOEA/D, maximum replacement size, a parameter for dynamic neighborhood and the number of grids in AUGMECON2. MOEAs are independently implemented for 31 times. The stop criteria are set as 2000 s for the MOEAs and 7 days for the exact mathematical method.

Note that MOEAs are implemented on the Eq. (2) instead of the linear model introduced by Rockafellar and Uryasev. Because non-liner objective function is not difficult for MOEAs yet the linear model includes too much constraints.

Benchmark problems

The benchmarks like OR-libraryFootnote 3 are widely used and play a significant role in promoting the study of portfolio optimization. However, a distinct feature of the financial markets nowadays is that the scale of problems raises dramatically year after year. This requires us to tackle large-scale problems, involving thousands candidate assets. Hence, we test the compared algorithms in practical stock markets (Chinese stock markets) nowadays. The data is composed of daily close prices of assets and the CSI 300 Index from 11 December 2009 to 8 December 2020.Footnote 4 The available assets arrange from 475 to 9090 while both stocks and funds are considered. The details of the data are listed in the Table 2. The start and end dates are presented in the form of day-month-year.

Performance measures

Regarding the performance measures, two metrics are applied, Hypervolume (HV) [46] and Generational Distance (GD) [47], they are easy to use in PlatEMO [48]. HV prefers heuristics because it takes the diversity of solutions into account. Heuristics, like EAs, always pay much attention to the diversity of solutions while exact methods hardly do it [14, 49]. It is given as

where Q is the set of obtained solutions of algorithms, and \(hc_i\) is the hypercube bounded by solution i and the reference point r. Better solutions lead to higher HV. On the other hand, GD evaluates the convergence ability of algorithms. In this problem, it tends to prefer exact mathematical programming approaches since exact methods are going to find the optimum if possible yet heuristics search for approximations. It is defined as

where Q is the set of obtained solutions of algorithms and \(d_i\) is the shortest Euclidean distance among solution i and the representatives of PF. Better solutions lead to lower GD.

Note that the implementations of HV and GD require the true PFs or reference points. The PFs are obtained by implementing AUGMECON2&LP with 100 grids. However, they are unavailable while running the exact method after 7 days for some large-scale problems in this work. For these cases, all the solutions from two MOEAs with 31 independent runs are collected to construct the temporary PFs. Concerning the reference point, it is recommended to set the reference point r slightly dominated by the nadir point of the PF. Specifically, r is set as (1.1, 1.1).

Simulation experiments

In the experimental studies, we discuss about the running time of the exact mathematical method on the constrained portfolio problems. Then the performance of the implemented algorithms are compared numerically and graphically.

Figure 6 shows the running time of AUGMECON2&LP on D1 to D8. The exact mathematical method is very effective for small problems. It just takes several hundred seconds for addressing D1, D2 and D3. Nevertheless, the required times rise non-linearly. It takes more than 1 h to solve D5 and D6, while the demanded running times raise to 32 h for D7 and D8. Furthermore, the exact mathematical method even can not finish in 7 days for the largest two problems. These computational costs for large-scale problems are unaffordable when the investors are going do some high frequency trading operations. This confirms the necessity of devising efficient heuristic algorithms.

Tables 3 and 4 summarize the results of seven algorithms regarding HV and GD. The results of AUGMECON2&LP are not reported in these tables but are plotted in the figures as the true PFs for comparison. Because if the exact mathematical method completes, the HV and GD of it must be the best since it can find the proved optimal solutions for these MIPs.

Concerning HV, F-MOEA/D outperforms other six algorithms on almost all the problems while its performance is similar with CCS-MOEA/D and worse than TDMEA on \(D_{7}\). The three MOEAs, CCS-MOEA/D, MODE-GL and TDMEA, tailored for the constrained portfolio optimization outperform the generic MOEAs. This result is expected since the tailored MOEAs either utilize specialized representation nor make use of some priori information, such as the return and risk of assets. Among these tailored MOEAs, CCS-MOEA/D and TDMEA have similar performance while MODE-GL is defeated by them on all problems. The solutions obtained by SMS-EMOA are worst while NSGA-II and MOEA/D perform similarly. It is rational because the CPU time is set as the stop criterion and SMS-EMOA will calculate the performance metrics frequently, occupying lots of computational resources. Moreover, the Wilcoxon rank-sum test at 5% significant level is also adopted. Symbols ‘+, −, \(\sim \)’ means the performance of the corresponding algorithm is better than, worse than or similar to F-MOEA/D. For instance, ‘0/9/1’ denotes the CCS-MOEA/D is worse than F-MOEA/D on 9 problems, and competitive with F-MOEA/D on 1 problem. F-MOEA/D is still the best algorithm regarding the Wilcoxon rank-sum test.

Regarding GD, similar conclusion can be made from the Table 4. The results reconfirm the superiority of F-MOEA/D since it has better performance on almost problems and hardly loses to others in terms of the employed Wilcoxon rank-sum test. The three tailored MOEAs still performs well and their performance is better than three generic MOEAs. Meanwhile, SMS-EMOA still can not compete with other algorithms on all the problems.

In Tables 3 and 4, F-MOEA/D is the best algorithm on most test problems, but it is worse than some MOEAs on a few datasets. There are probably two reasons why F-MOEA/D sometimes fails. (1) Because F-MOEA/D can only ensure the solutions for the linear programming problem are optimal but the optimality for the selection of assets from EAs is not guaranteed. (2) In a same time budget, the number of sampling times for the selection of assets is very limited in F-MOEA/D compared to MOEAs since constructing the local PFs is time consuming.

Figure 7 illustrates the obtained efficient fronts of every algorithm, hence, an intuitive comparison can be made. Approximated fronts with median HVs for every algorithm, except MOEA/D and SMS-EMOA, are plotted. MOEA/D and SMS-EMOA are omitted because the performance of three generic MOEAs is similar. Due to the space limit, fronts on 6 problems are plotted. In Fig. 7a–d, the PFs are the solutions obtained by AUGMECON2&LP. On small-scale problems, \(D_1\) and \(D_3\), the solutions of F-MOEA/D almost lie on the true PFs. It indicates the optimal solutions are almost found by F-MOEA/D. Except MODE-GL, all the other algorithms perform similarly and their solutions are close to the PFs. The solutions from MODE-GL are frustrating when they are far away from the PFs and the diversity is not good. On \(D_5\) and \(D_7\), the solutions obtained by F-MOEA/D are not bad. Despite of the limitation times for sampling the variables in EAs, the approximated PFs obtained by F-MOEA/D are not far from the PFs and they are good distributed. CCS-MOEA/D and TDMEA perform much worse than F-MOEA/D on \(D_5\) and a bit better than F-MOEA/D on \(D_7\). Both NSGA-II and MODE-GL have poor performance on these two problems, but NSGA-II is now defeated by MODE-GL. Concerning \(D_9\) and \(D_{10}\), these two problems involve large number of candidate assets, the PFs are composed of the non-dominated solutions from all MOEAs with all independent implementations. The solutions of F-MOEA/D are very close to the PFs, while there are certain gaps between the solutions from the other algorithms and the PFs. The solutions of CCS-MOEA/D, TDMEA, MODE-GL are acceptable, but those of NSGA-II are very far from the PFs. These reconfirm the superiority of F-MOEA/D.

Ablation study

Besides the comparison among peer algorithms, this subsection intends to analyze the swap operator (O2) for the F-MOEA/D. There are three swap strategies based on the priori information of the data, they are devised for searching the asset with the highest return, the lowest CVaR and the lowest VaR, respectively. We hence perform an ablation study on these strategies. Due to the space limitation, the simulation experiments are implemented on 4 problems, \(D_1\), \(D_3\), \(D_5\), and \(D_7\).

Table 5 shows the results, the first, second, third and all the swap strategies are eliminated. We denote these methods as F-MOEA/D\(_{\{S2,S3\}}\), F-MOEA/D\(_{\{S1,S3\}}\), F-MOEA/D\(_{\{S1,S2\}}\), F-MOEA/D\(_\emptyset \) respectively. The results indicate that the first swap strategy play the most important role, because once it is eliminated the solutions become significant worse compared to the original F-MOEA/D on these 4 problems. The algorithm will get exact benefit from the first strategy because the objective, return R, in the portfolio optimization is a linear function. It means we can directly get the best solution for the return R with a greedy method. On the other hand, since the risk CVaR is not a linear function, a portfolio can not be guaranteed to have the lowest CVaR when each asset in the portfolio has a lowest CVaR. Therefore, the second and third strategy will not make such contributions as the first one. Nevertheless, the results in the table denote the algorithm will perform better if these two strategy are included.

Figure 8 illustrates the front obtained by each algorithm. Compared to the original F-MOEA/D, the fronts obtained by the algorithm without the swap operator will distinctly shift to the upper right. Since the upper side implies lower return and the right side denotes higher risk, these results indicate that the swap operator assists the algorithm search for better solutions. Furthermore, on \(D_7\), the solutions obtained by the algorithm without the first swap strategy are very far away from the high return area, this indicates in the large-scale problem the first swap strategy will help the algorithm search for the high return solutions efficiently.

A bit more experiments with different reproduction operators

So far, we have compared F-MOEA/D with three generic and two tailored MOEAs. In this subsection, comparisons among F-MOEA/D and three variants of MOEA/D, namely MOEA/D-DE [41], MOEA/D-PSO [50] and MOEA/D-GA [31], are also made. These variants have shown excellent performance on some traditional black box problems and the parameters of these reproduction operators are kept the same as in the literature.

These three variants of MOEA/D and F-MOEA/D are implemented for 4 problems, \(D_1\), \(D_5\), \(D_7\) and \(D_{10}\). Statistical results are provided in Table 6 and graphical results are presented in Fig. 9. In the Table 6, it is easy to tell that all these variants of MOEA/D with different reproduction operators are worse than F-MOEA/D not only on the mean values of HVs but also on the Wilcoxon rank-sum-test. In Fig. 9, the solutions of these three variants of MOEA/D roughly approximate the PF on \(D_1\), but they can hardly reach the PFs, on \(D_5\), \(D_7\) and \(D_{10}\). These results are almost anticipated, because these invoked reproduction operators are generic for black box problems and they are not tailored for portfolio optimization. For the small-scale problem, D1 (\(N=475\)), these reproduction operators are barely workable, but for the large-scale problems, \(D_5\), \(D_7\) and \(D_{10}\) (\(N\ge 3406\)), these operators almost fail.

Conclusion

This work models the portfolio problem practically. It characterizes the risk of the problem with a popular and easy to solve measure, CVaR. CVaR is more recognized than the variance measure in the industry filed and it is easier to be solved for existing optimization tools. Further, four real-world constraints are included in the problem. This constrained mean-CVaR model is an MIP and it induces challenges to optimization algorithms. Hence, we propose a Pareto front evolution strategy and it is embedded into MOEA/D. This novel algorithm is named F-MOEA/D and it facilitates the complementary advantages in the exact and heuristic optimization realms. Two parts are involved in this algorithms, i.e., constructing local PF through exact methods and picking the best one via decomposition approaches. Specifically, the local PFs are constructed via AUGMECON2 when the integer variables are determined by the EAs. Then, the best local PF is picked through the decomposition approach. Experimental studies demonstrate F-MOEA/D is more scalable than the mathematical method when the latter one even can not be completed in 7 days on large-scale problems. Furthermore, the performance of F-MOEA/D is much better than the alternative MOEAs on most of the problems.

For the future work, we are planning to extend this single-period model to multi-period since it is a fundamental requirement of the investors in the real financial markets. Moreover, we believe this kind of hybrid algorithm is suitable for many practical MIPs. So we will continue to find suitable MIPs and propose efficient algorithms based on the basic idea of uniting exact and heuristic methods.

Notes

Without loss of generality, the minimization problem is discussed in this work.

Available at https://github.com/CYLOL2019/F-MOEAD.

References

Markowitz H (1952) Portfolio selection. J Financ 7:77–91

He C, Tian Y, Wang H, Jin Y (2020) A repository of real-world datasets for data-driven evolutionary multiobjective optimization. Complex Intell Syst 6(1):189–197

Kolm PN, Tütüncü R, Fabozzi FJ (2014) 60 years of portfolio optimization: practical challenges and current trends. Eur J Oper Res 234(2):356–371

Harlow WV (1991) Asset allocation in a downside-risk framework. Financ Anal J 47(5):28–40

Bakshi G, Kapadia N, Madan D (2003) Stock return characteristics, skew laws, and the differential pricing of individual equity options. Rev Financ Stud 16(1):101–143

Fama EF (1965) The behavior of stock-market prices. J Bus 38(1):34–105

Krokhmal P, Zabarankin M, Uryasev S (2013) Modeling and optimization of risk. In: Handbook of the fundamentals of financial decision making: part II, pp 555–600. World Scientific, Singapore

Estrada J (2008) Mean-semivariance optimization: a heuristic approach. J Appl Financ (Former Financ Pract Educ) 18(1):57–72

RiskMetrics T (1996) Technical document, 4th edn. JP Morgan Inc., New York

Rockafellar RT, Uryasev S et al (2000) Optimization of conditional value-at-risk. J Risk 2:21–42

Rockafellar RT, Uryasev S (2002) Conditional value-at-risk for general loss distributions. J Bank Financ 26(7):1443–1471

Artzner P, Delbaen F, Eber J-M, Heath D (1999) Coherent measures of risk. Math Financ 9(3):203–228

Lwin KT, Qu R, MacCarthy BL (2017) Mean-VaR portfolio optimization: a nonparametric approach. Eur J Oper Res 260(2):751–766

Miettinen K (2012) Nonlinear multiobjective optimization, vol 12. Springer Science & Business Media, Berlin

Chen Y, Zhou A, Das S (2021) Utilizing dependence among variables in evolutionary algorithms for mixed-integer programming: a case study on multi-objective constrained portfolio optimization. Swarm Evolut Comput 100928

Ponsich A, Jaimes AL, Coello CAC (2013) A survey on multiobjective evolutionary algorithms for the solution of the portfolio optimization problem and other finance and economics applications. IEEE Trans Evolut Comput 17(3):321–344

Streichert F, Ulmer H, Zell A (2004) Evolutionary algorithms and the cardinality constrained portfolio optimization problem. In: Operations research proceedings 2003. Springer, Berlin, pp. 253–260

Lwin K, Qu R, Kendall G (2014) A learning-guided multi-objective evolutionary algorithm for constrained portfolio optimization. Appl Soft Comput 24:757–772

Ertenlice O, Kalayci CB (2018) A survey of swarm intelligence for portfolio optimization: algorithms and applications. Swarm Evolut Comput 39:36–52

Chang T-J, Meade N, Beasley JE, Sharaiha YM (2000) Heuristics for cardinality constrained portfolio optimisation. Comput Oper Res 27(13):1271–1302

Chen Y, Singh HK, Zhou A, Ray T (2021) A fast converging evolutionary algorithm for constrained multiobjective portfolio optimization. In: Evolutionary multi-criterion optimization: 11th international conference, EMO 2021. Springer International Publishing, Berlin, pp 283–295

Kalayci CB, Polat O, Akbay MA (2020) An efficient hybrid metaheuristic algorithm for cardinality constrained portfolio optimization. Swarm Evolut Comput 54:100662

Dreżewski R, Doroz K (2017) An agent-based co-evolutionary multi-objective algorithm for portfolio optimization. Symmetry 9(9):168

Najafi AA, Mushakhian S (2015) Multi-stage stochastic mean–semivariance-CVaR portfolio optimization under transaction costs. Appl Math Comput 256:445–458

Kar MB, Kar S, Guo S, Li X, Majumder S (2019) A new bi-objective fuzzy portfolio selection model and its solution through evolutionary algorithms. Soft Comput 23(12):4367–4381

Liagkouras K (2019) A new three-dimensional encoding multiobjective evolutionary algorithm with application to the portfolio optimization problem. Knowl Based Syst 163:186–203

Moral-Escudero R, Ruiz-Torrubiano R, Suárez A (2006) Selection of optimal investment portfolios with cardinality constraints. In: 2006 IEEE international conference on evolutionary computation. IEEE, pp. 2382–2388

Gaspero LD, Tollo GD, Roli A, Schaerf A (2011) Hybrid metaheuristics for constrained portfolio selection problems. Quant Financ 11(10):1473–1487

Branke J, Scheckenbach B, Stein M, Deb K, Schmeck H (2009) Portfolio optimization with an envelope-based multi-objective evolutionary algorithm. Eur J Oper Res 199(3):684–693

Mavrotas G, Florios K (2013) An improved version of the augmented \(\varepsilon \)-constraint method (augmecon2) for finding the exact Pareto set in multi-objective integer programming problems. Appl Math Comput 219(18):9652–9669

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evolut Comput 11(6):712–731

Li W, Meng X, Huang Y, Mahmoodi S (2021) Knowledge-guided multiobjective particle swarm optimization with fusion learning strategies. Complex Intell Syst 7(3):1223–1239

Krokhmal P, Palmquist J, Uryasev S (2002) Portfolio optimization with conditional value-at-risk objective and constraints. J Risk 4:43–68

Balezentiene L, Streimikiene D, Balezentis T (2013) Fuzzy decision support methodology for sustainable energy crop selection. Renew Sustain Energy Rev 17:83–93

Jünger M, Liebling TM, Naddef D, Nemhauser GL, Pulleyblank WR, Reinelt G, Rinaldi G, Wolsey LA (2009) 50 Years of integer programming 1958–2008: from the early years to the state-of-the-art. Springer Science & Business Media, Berlin

Achterberg T, Koch T, Martin A (2005) Branching rules revisited. Oper Res Lett 33(1):42–54

Mavrotas G (2009) Effective implementation of the \(\varepsilon \)-constraint method in multi-objective mathematical programming problems. Appl Math Comput 213(2):455–465

Chen Y, Zhou A (2021) Variable division and optimization for constrained multiobjective portfolio problems. Comput Res Repos arXiv:2101.08552

Zhou A, Qu B-Y, Li H, Zhao S-Z, Suganthan PN, Zhang Q (2011) Multiobjective evolutionary algorithms: a survey of the state of the art. Swarm Evolut Comput 1(1):32–49

Shufen Q, Chaoli S, Zhang G, He X, Tan Y (2020) A modified particle swarm optimization based on decomposition with different ideal points for many-objective optimization problems. Complex Intell Syst 6(2):263–274

Li H, Zhang Q (2009) Multiobjective optimization problems with complicated Pareto sets, MOEA/D and NSGA-II. IEEE Trans Evolut Comput 13(2):284–302

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Deb K, Deb D (2014) Analysing mutation schemes for real-parameter genetic algorithms. Int J Artif Intell Soft Comput 4(1):1–28

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evolut Comput 6(2):182–197

Beume N, Naujoks B, Emmerich M (2007) SMS-EMOA: multiobjective selection based on dominated hypervolume multiobjective selection based on dominated hypervolume. Eur J Oper Res 181(3):1653–1669

Zitzler E, Thiele L (1999) Multiobjective evolutionary algorithms: a comparative case study and the strength Pareto approach. IEEE Trans Evolut Comput 3(4):257–271

Van Veldhuizen DA (1999) Multiobjective evolutionary algorithms: classifications, analyses, and new innovations. Technical report, Air Force Institute of Technology

Tian Y, Cheng R, Zhang X, Jin Y (2017) PlatEMO: a MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput Intell Mag 12(4):73–87

Ishibuchi H, Imada R, Setoguchi Y, Nojima Y (2018) How to specify a reference point in hypervolume calculation for fair performance comparison. Evolut Comput 26(3):411–440

Peng W, Zhang Q (2008) A decomposition-based multi-objective particle swarm optimization algorithm for continuous optimization problems. In: 2008 IEEE International Conference on Granular Computing. IEEE, pp 534–537

Acknowledgements

This work is supported by the Scientific and Technological Innovation 2030 Major Projects under Grant no. 2018AAA0100902, the Science and Technology Commission of Shanghai Municipality under Grant no. 19511120600, and the National Natural Science Foundation of China under Grant no. 61731009, 61673180, 61907015.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, Y., Zhou, A. Multiobjective portfolio optimization via Pareto front evolution. Complex Intell. Syst. 8, 4301–4317 (2022). https://doi.org/10.1007/s40747-022-00715-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-022-00715-8