Abstract

Probabilistic linguistic term sets (PLTSs) provide an effective model for computing with words and for expressing, by means of probability information, the uncertainty pervasive in natural language. In this paper, to avoid loss of information, we redefine the classical PLTSs by multiple probability distributions from an ambiguity perspective and present some basic operations using Archimedean t-(co)norms. Unlike classical PLTSs, the reformulated PLTSs need not be normalized beforehand for further investigation. Moreover, PLTSs based on multiple probability distributions make it easy to incorporate the different attitudes of the decision-makers (DMs) into their score values and deviation degrees, and thus into comparisons. The Decision-Making Trial and Evaluation Laboratory (DEMATEL) method is then extended to the reformulated PLTS framework by incorporating probability information. With these newly developed elements, a DEMATEL-based multiple attribute decision-making method is proposed. An illustrative example and a comparative analysis show that the method over the reformulated PLTSs is feasible and valid, and that it has the advantage of processing information without loss (i.e., without normalization) and of fully exploring the DMs' preferences and knowledge.


Introduction

Decision-making is human-centered and carries inherent uncertainty, with vague information expressed in natural language. To date, many models have been developed to represent ill-structured information with uncertainty and vagueness. The first is Zadeh's famous fuzzy linguistic model [59], which relies on membership functions to express non-crisp words or sentences in human language and has been a milestone in qualitative analysis for decision-making. Since then, a formal methodology for reasoning, computing and making decisions with natural language-based information, known as computing with words (CWW), has emerged and soon attracted attention in various fields. Notably, many linguistic models have been introduced to handle pervasive linguistic qualitative problems; they fall into two categories: single linguistic term-based models and multiple linguistic term-based models. Besides Zadeh's classical fuzzy linguistic variables, the single linguistic term-based models include the symbolic linguistic computing model [56], the virtual linguistic computing model [55] and the 2-tuple linguistic model [21]. These models represent a linguistic variable by a single linguistic term, which implies that one has to present his/her opinions without any hesitation. In practice, however, this is not the case. Owing to incomplete information or knowledge, people may be uncertain or hesitant about linguistic expressions, and a single linguistic term cannot portray the situation accurately. Multiple linguistic term-based models were therefore proposed to cover the uncertainty in complex linguistic expressions, such as the hesitant fuzzy linguistic term set (HFLTS) [41], which was mathematically reformulated as the hesitant fuzzy linguistic element (HFLE) [32].
HFLTSs use a set of potential linguistic terms to express vague and complex linguistic statements, such as “between medium and tall” and “at least slightly good”. The many theoretical and practical results on the topic [31] show that HFLTSs or HFLEs provide an effective way to capture the uncertainty in natural language.

To express the preferences of DMs more flexibly, HFLTSs have been extended to incorporate additional information that differentiates the given linguistic terms. Typical extensions are the PLTS [38] (equivalent to the proportional linguistic distribution assessment [19, 52]) and the proportional HFLTS [7]. In particular, the PLTS proposed by Pang et al. [38] assigns probabilities to the linguistic terms of an HFLTS and describes the vagueness in linguistic statements more accurately than an HFLTS. Consequently, since its introduction, the PLTS has attracted extensive attention from scholars in various fields and has substantially advanced research on CWW [30, 37]. The related investigations fall into three categories. The first focuses on PLTS operations and notations [17, 28, 37, 38, 54, 58, 61], which are the basis for CWW and information aggregation. The second concerns PLTS-based decision-making methods: many classical methods have been tailored to PLTSs, such as the PLTS-based versions of MULTIMOORA [51], TODIM [36], ORESTE [49], VIKOR [63], ELECTRE II [33], ELECTRE III [28], LINMAP [29], QUALIFLEX [14], the gained and lost dominance score method [50] and DEMATEL [9], which make PLTSs more convenient for various applications. The third concerns extensions or variants of PLTSs, such as the probabilistic uncertain linguistic term set [34], the probabilistic linguistic vector-term set [60], the interval-valued PLTS [3], the uncertain PLTS [23] and the dual PLTS [53]. Research on PLTSs is ongoing vigorously; for more comprehensive and detailed summaries, we recommend the recent surveys [30, 37].

PLTSs have the advantage of accounting for ignorance in the probabilistic information caused by partial information (when the probabilities of the given linguistic terms sum to less than one); consequently, normalization of PLTSs has been regarded as necessary for further investigation. Many normalization methods have been proposed to tackle incomplete probabilistic information in PLTSs, such as average assignment [38], full-set assignment [28, 62], power-set assignment [37], envelope assignment [37] and attitudes assignment [45]. However, these normalization methods lose the information about the ignorance of probabilities, because that ignorance is eliminated by normalization. The authors of [37] therefore posed the open problem of whether normalization is necessary. Since ignorance of probability information is practically inevitable, it is vital to provide a reasonable processing framework that incurs no information loss; this is precisely what drives us to reconsider, from a different perspective, how the underlying probability information of PLTSs is processed.

In general, a PLTS consists of a set of linguistic terms with a probability distribution whose probabilities sum to one, which suggests that people have exact and complete knowledge of the probabilistic information. If the probabilities sum to less than one, then, according to [38], people have partial ignorance as a result of incomplete information or knowledge. Indeed, partial ignorance means that the ‘probabilistic information’ for the linguistic terms is non-probabilistic and refers to a non-additive measure or capacity [16, 43]. In other words, in this case a PLTS can be seen as a set of linguistic terms with a set of probability distributions. Hence, in this work we reformulate the PLTS from the perspective of ambiguity and provide a new insight into handling the ignorance of probability information through non-additive probabilities or capacities (alternatively, multiple probability distributions). Furthermore, the classical DEMATEL method is extended with the reformulated PLTSs. In detail, the probability information of the PLTSs is incorporated to tackle the uncertainty in the linguistic evaluations of the pair-wise comparisons and is then used to synthesize the prominence-relation analysis.

Throughout the paper, we focus on the reformulation of the classical PLTSs and introduce a DEMATEL-based multiple attribute decision-making (MADM) method. In summary, the following aspects of this work can be highlighted.

(1) The redefinition of the PLTS from the perspective of ambiguity. The classical PLTS is equivalently reformulated as a set of potential linguistic terms with multiple probability distributions. Since the lower bound of the accompanying probability distributions recovers the ignorance of probability information, the reformulation does not lead to any information loss. It is worth noting that, unlike in the classical PLTS literature, the reformulated PLTSs can be operated on and aggregated using Archimedean t-(co)norms without any probability normalization.

(2) The redefinition of the PLTS by multiple probability distributions facilitates modeling the behavioral attitudes of DMs. Specifically, the score values and deviation degrees of PLTSs are coupled with an optimism index, through which the behavioral attitudes of the decision-makers can be considered. In this way, the optimistic, moderate or pessimistic attitudes of the DMs can be incorporated into PLTS-based problem solving.

(3) The extension of the DEMATEL technique to the reformulated PLTS framework. Using the probability information of the PLTSs, the classical DEMATEL method is extended. A family of initial direct relation matrices is obtained lexicographically from the linguistic terms of the PLTSs used in the influence evaluations, and the resulting prominences and relations of each attribute are then weighted by the corresponding probabilities and synthesized.

Here, we focus on reformulating the classical PLTS by transforming the ignorance of probabilities into multiple probability distributions from an ambiguity perspective. The reformulation expresses the probability information accurately and thus takes a step forward in exploring the experts' preferences and knowledge. Further, as the comparison in Table 1 shows, the DEMATEL-based aggregation method using the reformulated PLTSs does not need to normalize the PLTSs beforehand and can incorporate the different attitudes of the DMs, so it incurs no information loss in the process. Better exploration and utilization of information is therefore the intrinsic motivation for employing the reformulated PLTSs in DEMATEL. Compared with DEMATEL methods based on the classical PLTS [9], on single linguistic terms or on numerical expressions, the method with the reformulated PLTS manifests the experts' preferences and knowledge more effectively and may thus improve the precision of decision-making.

The paper is organized as follows. As a preliminary, the next section justifies the link between ambiguity and the ignorance of probability information in PLTSs. The redefinition of the PLTS and some related notions are presented in the subsequent section, followed by the t-(co)norm-based operations over the reformulated PLTSs. Then the DEMATEL technique is extended using the reformulated PLTSs, and a PLTS DEMATEL-based aggregation method for decision-making is proposed. The penultimate section presents an illustrative example of the method, and the final section gives some concluding remarks.

Ambiguity and the ignorance of the probability information about PLTSs

In the economics and psychology literature, the dominant theories of choice have been expected utility (EU) theory and subjective expected utility (SEU) theory. EU assumes that the relevant probabilities are known, whereas in SEU the probabilities of states are subjective or personal. In practice, decision problems in which probabilities are objectively given are rare, so SEU is much more widely applied than EU. Nevertheless, there is much empirical evidence against SEU concerning the sharp distinction between known and unknown probabilities. This distinction goes by various names, such as risk vs. uncertainty by Frank Knight [25] and unambiguous vs. ambiguous probabilities [13]. Generally, the term ambiguity refers to the case in which probabilities are unknown or only partially known. Moreover, how much decision-makers know about the probabilities substantially influences their decisions [5]. The ignorance of probability, a vital feature of PLTSs, can be seen as a snapshot of ambiguity. Therefore, ambiguity can serve as a point of departure for new insights into PLTSs.

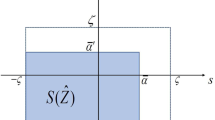

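In general, a PLTS over a linguistic term set (LTS) \(S=\{s_0,s_1,\ldots ,s_\tau \}\) has the form

\(L(p)=\{s_{\alpha _i}(p_i)\mid s_{\alpha _i}\in S,\ p_i\ge 0,\ i=1,2,\ldots ,m,\ \sum _{i=1}^mp_i\le 1\}\)    (1)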

When \(\sum _{i=1}^mp_i= 1\), the PLTS has a set of linguistic terms with a probability distribution, which suggests that people have exact and complete knowledge of the probabilistic information; when \(\sum _{i=1}^mp_i< 1\), according to [38], people have partial ignorance as a result of incomplete information or knowledge. More precisely, partial ignorance refers to a situation in which the probabilities are unknown or only partially known, which Ellsberg [13] called ambiguity in the experimental results known as the Ellsberg paradox. The Ellsberg paradox shows that, under ambiguity, decision-makers may rely on non-probabilistic measures, the so-called non-additive probabilities [43] or capacities [16], defined as set functions \(v:\Sigma \rightarrow [0,1]\) satisfying \(v(\emptyset )=0\), \(v(S)=1\), and \(v(E)\le v(E')\) whenever \(E\subseteq E'\). Here \(\Sigma \) is the event algebra of a state space S. It has been shown [42] that a capacity v can be interpreted as a lower envelope of a set \(\hbox {core}(v)\) of possible probabilities P under consideration, i.e., \(v(E)=\inf _{P\in \hbox {core}(v)}\{P(E)\}.\) Intuitively, decision-makers facing ambiguity or partial probability entertain multiple probabilities as potential beliefs. Therefore, \(\sum _{i=1}^mp_i< 1\) in PLTSs actually means that the ‘probabilistic information’ for the linguistic terms is non-probabilistic and should be treated as a non-additive measure or capacity. In other words, in this case a PLTS can be seen as a set of linguistic terms with a family of probability distributions.

For illustration, we provide the following example which is adapted from [38].

Example 1

In evaluating the overall comfort of a vehicle (e.g., Honda XR-V), one can use a PLTS such as

\(\{\text {slightly high}(0.1),\ \text {high}(0.65),\ \text {very high}(0.2)\}\)    (2)

From an ambiguity perspective, 0.1, 0.65 and 0.2 can be seen as the capacities of the underlying linguistic terms, that is, \(v(\text {slightly high})=0.1\), \(v(\text {high})=0.65\) and \(v(\text {very high})=0.2\). Note that \(0.1+0.65+0.2<1\). Since a capacity can be interpreted as a lower bound on a set of potential probability distributions, we assume here two probability distributions \(P_1\) and \(P_2\) for these linguistic terms, with \(P_1(\text {slightly high})=0.1\), \(P_1(\text {high})=0.7\), \(P_1(\text {very high})=0.2\), and \(P_2(\text {slightly high})=0.15\), \(P_2(\text {high})=0.65\), \(P_2(\text {very high})=0.2\). Then it holds that

\(v(\text {slightly high})=\min \{P_1(\text {slightly high}), P_2(\text {slightly high})\}\);

\(v(\text {high})=\min \{P_1(\text {high}),P_2(\text {high})\}\);

\(v(\text {very high})=\min \{P_1(\text {very high}),P_2(\text {very high})\}\).

Hence the PLTS in (2) can be regarded as carrying multiple probability distributions (\(P_1\) and \(P_2\)) over these linguistic terms, and it can be expressed equivalently in the multiple distribution-based form \(\{\{\text {slightly high}(0.1),\text {high}(0.7),\text {very high}(0.2)\},\{\text {slightly high}(0.15),\text {high}(0.65),\text {very high}(0.2)\}\}.\)
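The lower-envelope relation in Example 1 can be checked mechanically. The Python sketch below is only an illustration, not code from the paper: each probability distribution is a dictionary from linguistic term to probability, the hypothetical helper `lower_envelope` takes the term-wise minimum over the distributions (i.e., the capacity v), and one minus the sum of the capacities is the ignored probability mass. It assumes that all distributions are defined on the same terms.

```python
from typing import Dict, List

def lower_envelope(distributions: List[Dict[str, float]]) -> Dict[str, float]:
    """Capacity of each term: the minimum probability over all candidate distributions."""
    terms = distributions[0].keys()  # assumes every distribution covers the same terms
    return {t: min(d[t] for d in distributions) for t in terms}

# The two distributions assumed in Example 1.
P1 = {"slightly high": 0.1, "high": 0.7, "very high": 0.2}
P2 = {"slightly high": 0.15, "high": 0.65, "very high": 0.2}

v = lower_envelope([P1, P2])
print(v)                              # {'slightly high': 0.1, 'high': 0.65, 'very high': 0.2}
print(round(1 - sum(v.values()), 2))  # 0.05, the ignored probability mass
```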

The reformulation of PLTSs and the pertaining notations

Based on the above justification, a PLTS in the form of Eq. (1) can be redefined as follows.

Definition 1

Let \(S=\{s_0,s_1,\ldots ,s_\tau \}\) be an LTS. A PLTS is defined by

\({\mathscr {L}}(p)=\{L_j(p)\mid j=1,2,\ldots ,k\}\)    (3)

with \(k\ge 1\), \(L_j(p)=\{s_{\alpha _i}(p_{ij})\mid s_{\alpha _i}\in S,\ i=1,2,\ldots ,m,\ p_{ij}\ge 0,\ \sum _{i=1}^mp_{ij}= 1\}\) and \(p_i=\min _jp_{ij}\) for each i.

By this definition, if \(k=1\), then \({\mathscr {L}}(p)\) reduces to the case \(\sum _{i=1}^mp_i=1\) in Eq. (1). In general, people have only partial or incomplete information about the alternatives and can hardly obtain a precise probability distribution over the potential linguistic terms. Instead, they may settle on a set of possible probability distributions as a compromise. Thus, the redefinition is practically and behaviorally reasonable. Additionally, if \(m=1\), i.e., only one linguistic term is given, its probability is necessarily 1, which indicates complete belief in that linguistic term. In this case, we abbreviate \(s_\alpha (1)\) to \(s_\alpha \) by omitting the probability 1. Here, partial belief is assumed to arise only when multiple linguistic terms are given.

From an ambiguity perspective, the reformulation of the PLTS transforms the ignorance of probabilities into multiple probability distributions; that is, the PLTS becomes a set of linguistic terms with multiple probability distributions. Note that \(p_i=\min _jp_{ij}\) implies \(\sum _{i=1}^mp_i=\sum _{i=1}^m\min _jp_{ij}\le 1\). Thus, the multiple probability distribution representation of a PLTS loses no information about the ignorance of probabilities, and it offers a convenient way around the probability normalization problem: with the reformulation, probability normalization of PLTSs is no longer necessary and can be skipped in further investigations. In [38], the authors provided a normalization method to handle the ignored probability information and suggested that alternative normalization methods may exist. However, these normalization methods assign the ignored probabilities to various linguistic terms, thereby removing the ignorance. In other words, normalizing a PLTS causes considerable loss of information about the ignored probabilities. Thus, Liao et al. [30] adopted a wait-and-see attitude toward normalization and posed the open problem of whether it is necessary to normalize PLTSs. Here, the reformulation provides an ambiguity perspective on the partial ignorance of probabilistic information, treating partial probability as a set of multiple probability distributions. Therefore, the redefined PLTS offers a compromise solution to the open problem [30, 37] on PLTS normalization through the multiple probability distribution-based representation. In the rest of the paper, we focus on PLTSs in the form of (3).

To normalize PLTSs with different sets of linguistic terms, we simply add the unshared linguistic terms and assign them zero probabilities. The dotted symbol \(\dot{{\mathscr {L}}}(p)\) denotes a normalized PLTS.

Example 2

Let \({\mathscr {L}}_1(p)=\{\{s_4(0.2),s_3(0.4),s_2(0.4)\},\{s_4(0.2),s_3(0.5),s_2(0.3)\},\{s_4(0.3),s_3(0.4),s_2(0.3)\}\}\), which is equivalent to \(L_1(p)=\{s_4(0.2),s_3(0.4),s_2(0.3)\}\), and \({\mathscr {L}}_2(p)=\{\{s_5(0.3),s_4(0.4),s_3(0.3)\},\{s_5(0.2),s_4(0.6),s_3(0.2)\},\{s_5(0.3),s_4(0.5),s_3(0.2)\}\}\), which is equivalent to \(L_2(p)=\{s_5(0.2),s_4(0.4),s_3(0.2)\}\). For normalization, we add the unshared linguistic terms \(s_5\) and \(s_2\) to \({\mathscr {L}}_1(p)\) and \({\mathscr {L}}_2(p)\), respectively, and assign them zero probabilities. Then we obtain \(\dot{{\mathscr {L}}}_1(p)=\{\{s_5(0),s_4(0.2),s_3(0.4),s_2(0.4)\},\{s_5(0),s_4(0.2),s_3(0.5),s_2(0.3)\},\{s_5(0),s_4(0.3),s_3(0.4),s_2(0.3)\}\}\) and \(\dot{{\mathscr {L}}}_2(p)=\{\{s_5(0.3),s_4(0.4),s_3(0.3),s_2(0)\},\{s_5(0.2),s_4(0.6),s_3(0.2),s_2(0)\},\{s_5(0.3),s_4(0.5),s_3(0.2),s_2(0)\}\}.\)
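The two operations used in Example 2, reading off the equivalent classical PLTS via \(p_i=\min _jp_{ij}\) and padding unshared terms with zero probabilities, can be sketched in Python as follows. This is an illustration under our own conventions, not the paper's code: a term \(s_\alpha \) is represented by its subscript \(\alpha \), a reformulated PLTS is a list of dictionaries (one per distribution), and the names `to_classical` and `pad_terms` are hypothetical.

```python
from typing import Dict, List, Tuple

Dist = Dict[int, float]  # subscript alpha -> probability of s_alpha
PLTS = List[Dist]        # reformulated PLTS: one dict per probability distribution

def to_classical(plts: PLTS) -> Dist:
    """Equivalent classical PLTS: p_i = min_j p_ij (a missing term counts as 0)."""
    terms = sorted({a for d in plts for a in d}, reverse=True)
    return {a: min(d.get(a, 0.0) for d in plts) for a in terms}

def pad_terms(plts1: PLTS, plts2: PLTS) -> Tuple[PLTS, PLTS]:
    """Normalize two PLTSs by adding each other's unshared terms with probability 0."""
    terms = sorted({a for d in plts1 + plts2 for a in d}, reverse=True)

    def pad(plts: PLTS) -> PLTS:
        return [{a: d.get(a, 0.0) for a in terms} for d in plts]

    return pad(plts1), pad(plts2)

L1 = [{4: 0.2, 3: 0.4, 2: 0.4}, {4: 0.2, 3: 0.5, 2: 0.3}, {4: 0.3, 3: 0.4, 2: 0.3}]
L2 = [{5: 0.3, 4: 0.4, 3: 0.3}, {5: 0.2, 4: 0.6, 3: 0.2}, {5: 0.3, 4: 0.5, 3: 0.2}]

print(to_classical(L1))  # {4: 0.2, 3: 0.4, 2: 0.3}
print(to_classical(L2))  # {5: 0.2, 4: 0.4, 3: 0.2}
dot_L1, dot_L2 = pad_terms(L1, L2)
print(dot_L1[0])         # {5: 0.0, 4: 0.2, 3: 0.4, 2: 0.4}
print(dot_L2[0])         # {5: 0.3, 4: 0.4, 3: 0.3, 2: 0.0}
```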

Remark 1

In practice, classical PLTSs can be settled statistically in applications [38]. Analogously, how to settle reformulated PLTSs statistically is vital for applications. Given a classical PLTS, there are uncountably many ways to distribute the ignored probability so as to derive probability distributions. One feasible way is to assign the whole ignored probability to each of the underlying linguistic terms in turn. For instance, in Example 1 (\(s_3=\) slightly high, \(s_4=\) high, \(s_5=\) very high), we can assign the ignored probability 0.05 to \(s_3\), \(s_4\) and \(s_5\), respectively, and obtain the reformulated PLTS \(\{\{s_3(0.15),s_4(0.65),s_5(0.2)\},\{s_3(0.1),s_4(0.7),s_5(0.2)\},\{s_3(0.1),s_4(0.65),s_5(0.25)\}\}\).
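The construction described in Remark 1 can also be automated. In the sketch below, the hypothetical helper `spread_ignorance` creates one distribution per linguistic term by giving that term the whole ignored mass \(1-\sum _ip_i\); this is only the feasible option mentioned in the remark, not the only admissible one. Terms are written by their subscripts, and taking term-wise minima of the result recovers the classical PLTS, which is what makes the reformulation lossless.

```python
from typing import Dict, List

def spread_ignorance(classical: Dict[int, float]) -> List[Dict[int, float]]:
    """Remark 1: for each term in turn, add the whole ignored mass 1 - sum(p) to it."""
    gap = 1.0 - sum(classical.values())
    return [
        {a: p + (gap if a == target else 0.0) for a, p in classical.items()}
        for target in classical
    ]

# Example 1 with s_3 = slightly high, s_4 = high, s_5 = very high.
classical = {3: 0.1, 4: 0.65, 5: 0.2}
reformulated = spread_ignorance(classical)
for dist in reformulated:
    print({a: round(p, 2) for a, p in dist.items()})
# {3: 0.15, 4: 0.65, 5: 0.2}
# {3: 0.1, 4: 0.7, 5: 0.2}
# {3: 0.1, 4: 0.65, 5: 0.25}

# Term-wise minima give back the classical PLTS.
print({a: min(d[a] for d in reformulated) for a in classical})  # {3: 0.1, 4: 0.65, 5: 0.2}
```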

To provide a ranking scheme for comparison, we introduce score values and deviation degrees for the reformulated PLTSs, into which the behavioral (optimistic or pessimistic) attitudes of DMs can be incorporated.

Definition 2

Let \(S=\{s_0,s_1,\ldots ,s_\tau \}\) be an LTS and let the PLTS be \({\mathscr {L}}(p)= \{L_j(p)\mid j=1,2,\ldots ,k\}\) with \(L_j(p)=\{s_{\alpha _i}(p_{ij})\mid s_{\alpha _i}\in S,\ i=1,2,\ldots ,m,\ p_{ij}\ge 0,\ \sum _{i=1}^mp_{ij}= 1\}\). Then the worst score value of \({\mathscr {L}}(p)\) is defined to be

the best score value of \({\mathscr {L}}(p)\) is defined to be

Generally, the score value \(SV_\pi [{\mathscr {L}}(p)]\) of \({\mathscr {L}}(p)\) with the optimism index \(\pi \in [0,1]\) is given by

In the section “Ambiguity and the ignorance of the probability information about PLTSs”, the worst-case representation of the Choquet integral with respect to the capacity indicates ambiguity aversion. However, recent empirical evidence shows that people may also exhibit ambiguity-loving or ambiguity-neutral behavior [40]. The optimism index in the score value is therefore needed to express the DM's degree of optimism: the greater the index, the more optimistic the DM. Different choices of the optimism index thus represent different attitudes of the DM.
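Because the explicit formulas of Definition 2 are not reproduced in this text, the sketch below rests on an assumption: the worst and best score values are taken as the minimum and maximum of the expected subscripts \(\bar{\alpha }_j=\sum _{i}\alpha _i p_{ij}\) (as defined in Definition 3) over the distributions, and \(SV_\pi \) as the convex combination \((1-\pi )\,\text {WSV}+\pi \,\text {BSV}\). These choices reproduce the values reported in Example 3, but they are our reading rather than a quotation of the definition. Scores are returned as plain subscripts instead of terms \(s_\gamma \), and `score_values` is a hypothetical name.

```python
from typing import Dict, List, Tuple

def score_values(plts: List[Dict[int, float]], pi: float) -> Tuple[float, float, float]:
    """Return (WSV, BSV, SV_pi) as subscripts.

    Assumed forms: WSV = min_j alpha_bar_j, BSV = max_j alpha_bar_j,
    SV_pi = (1 - pi) * WSV + pi * BSV, with alpha_bar_j = sum_i alpha_i * p_ij.
    """
    if not 0.0 <= pi <= 1.0:
        raise ValueError("the optimism index pi must lie in [0, 1]")
    means = [sum(a * p for a, p in dist.items()) for dist in plts]
    wsv, bsv = min(means), max(means)
    return wsv, bsv, (1 - pi) * wsv + pi * bsv

# L_1(p) from Example 3 with a moderate attitude (pi = 0.5).
L1 = [{4: 0.2, 3: 0.4, 2: 0.4}, {4: 0.2, 3: 0.5, 2: 0.3}, {4: 0.3, 3: 0.4, 2: 0.3}]
print([round(x, 4) for x in score_values(L1, 0.5)])  # [2.8, 3.0, 2.9]
```

Setting `pi=0` or `pi=1` returns the worst or best score value, matching the pessimistic and optimistic extremes described above.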

Definition 3

Let \(S=\{s_0,s_1,\ldots ,s_\tau \}\) be an LTS and let the PLTS be \({\mathscr {L}}(p)= \{L_j(p)\mid j=1,2,\ldots ,k\}\) with \(L_j(p)=\{s_{\alpha _i}(p_{ij})\mid s_{\alpha _i}\in S,\ i=1,2,\ldots ,m,\ p_{ij}\ge 0,\ \sum _{i=1}^mp_{ij}= 1\}\). Then the worst deviation degree of \({\mathscr {L}}(p)\) is defined to be

where \(\sigma _j=\sqrt{\sum _{i=1}^m(\alpha _i-\bar{\alpha }_j)^2p_{ij}}, \bar{\alpha }_j=\sum _{i=1}^m\alpha _i p_{ij}\); the best deviation degree of \({\mathscr {L}}(p)\) is defined to be

Generally, the deviation degree \(\sigma _\pi [{\mathscr {L}}(p)]\) of \({\mathscr {L}}(p)\) with the optimism index \(\pi \in [0,1]\) is given by

Definition 4

(Comparison of PLTSs) Given two PLTSs \({\mathscr {L}}_1(p)\) and \({\mathscr {L}}_2(p)\), the ranking scheme with optimism index \(\pi \in [0,1]\) for them is listed as follows.

1. If \(SV_\pi [{\mathscr {L}}_1(p)]> SV_\pi [{\mathscr {L}}_2(p)]\), then \({\mathscr {L}}_1(p)\succ {\mathscr {L}}_2(p)\);

2. If \(SV_\pi [{\mathscr {L}}_1(p)]< SV_\pi [{\mathscr {L}}_2(p)]\), then \({\mathscr {L}}_1(p)\prec {\mathscr {L}}_2(p)\);

3. If \(SV_\pi [{\mathscr {L}}_1(p)]= SV_\pi [{\mathscr {L}}_2(p)]\), then

(a) if \(\sigma _\pi [{\mathscr {L}}_1(p)]> \sigma _\pi [{\mathscr {L}}_2(p)]\), then \({\mathscr {L}}_1(p)\prec {\mathscr {L}}_2(p)\);

(b) if \(\sigma _\pi [{\mathscr {L}}_1(p)]< \sigma _\pi [{\mathscr {L}}_2(p)]\), then \({\mathscr {L}}_1(p)\succ {\mathscr {L}}_2(p)\);

(c) if \(\sigma _\pi [{\mathscr {L}}_1(p)]= \sigma _\pi [{\mathscr {L}}_2(p)]\), then \({\mathscr {L}}_1(p)\sim {\mathscr {L}}_2(p)\) (indifference).

It can be checked that normalization does not affect the score values and deviation degrees of PLTSs, because the added terms carry zero probabilities; hence it does not change their ranking. The optimism index \(\pi \), however, which indicates the DM's attitude, can substantially affect the ranking of PLTSs. The following example highlights this point.

Example 3

Let \({\mathscr {L}}_1(p)=\{\{s_4(0.2),s_3(0.4),s_2(0.4)\},\{s_4(0.2),s_3(0.5),s_2(0.3)\},\{s_4(0.3),s_3(0.4),s_2(0.3)\}\}\), which is equivalent to \(L_1(p)=\{s_4(0.2),s_3(0.4),s_2(0.3)\}\), and \({\mathscr {L}}_2(p)=\{\{s_4(0.4),s_3(0.4),s_1(0.2)\},\{s_4(0.5),s_3(0.3),s_1(0.2)\},\{s_4(0.4),s_3(0.25),s_1(0.35)\}\}\), which is equivalent to \(L_2(p)=\{s_4(0.4),s_3(0.25),s_1(0.2)\}\). Then \(\text {WSV}[{\mathscr {L}}_1(p)]=\min \{s_{2.8},s_{2.9},s_{3}\}=s_{2.8}\), \(\text {BSV}[{\mathscr {L}}_1(p)]=s_{3}\), \(\sigma _w[{\mathscr {L}}_1(p)]=\max \{0.7483,0.7,0.7746\}=0.7746\), \(\sigma _b[{\mathscr {L}}_1(p)]=0.7\);

\(\text {WSV}[{\mathscr {L}}_2(p)]=\min \{s_{3},s_{3.1},s_{2.7}\}=s_{2.7}\), \(\text {BSV}[{\mathscr {L}}_2(p)]=s_{3.1}\), \(\sigma _w[{\mathscr {L}}_2(p)]=\max \{1.0954,1.1358,1.3077\}=1.3077\), \(\sigma _b[{\mathscr {L}}_2(p)]=1.0954\). Then,

(1) for \(\pi =0\) (a pessimistic attitude), \({\mathscr {L}}_1(p) \succ {\mathscr {L}}_2(p)\) since \(\text {WSV}[{\mathscr {L}}_1(p)]>\text {WSV}[{\mathscr {L}}_2(p)]\);

(2) for \(\pi =0.5\) (a moderate attitude), \({\mathscr {L}}_1(p) \succ {\mathscr {L}}_2(p)\) since \(SV_\pi [{\mathscr {L}}_1(p)]=SV_\pi [{\mathscr {L}}_2(p)]=s_{2.9}\) and \(\sigma _\pi [{\mathscr {L}}_1(p)]=0.7373<\sigma _\pi [{\mathscr {L}}_2(p)]=1.2016\);

(3) for \(\pi =1\) (an optimistic attitude), \({\mathscr {L}}_1(p) \prec {\mathscr {L}}_2(p)\) since \(\text {BSV}[{\mathscr {L}}_1(p)]<\text {BSV}[{\mathscr {L}}_2(p)]\).
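Example 3 can be reproduced end to end with the Python sketch below, which implements the ranking scheme of Definition 4. Because the formulas of Definitions 2 and 3 for \(\text {WSV}\), \(\text {BSV}\), \(SV_\pi \), \(\sigma _w\), \(\sigma _b\) and \(\sigma _\pi \) are not shown in this text, the code assumes the forms that match the reported numbers: minima and maxima over the distributions, combined convexly with weights \(1-\pi \) and \(\pi \). The tolerance `tol` used to declare two floating-point values equal is our addition, and all function names are hypothetical; `compare` returns '>' for \({\mathscr {L}}_1(p)\succ {\mathscr {L}}_2(p)\), '<' for \(\prec \) and '~' for indifference.

```python
import math
from typing import Dict, List, Tuple

Dist = Dict[int, float]  # subscript alpha -> probability of s_alpha

def mean_and_sigma(dist: Dist) -> Tuple[float, float]:
    """alpha_bar_j and sigma_j of one distribution (Definition 3)."""
    mean = sum(a * p for a, p in dist.items())
    return mean, math.sqrt(sum((a - mean) ** 2 * p for a, p in dist.items()))

def sv_and_sigma(plts: List[Dist], pi: float) -> Tuple[float, float]:
    """Assumed SV_pi and sigma_pi: convex combinations of the worst and best values."""
    means, sigmas = zip(*(mean_and_sigma(d) for d in plts))
    sv = (1 - pi) * min(means) + pi * max(means)         # worst score = min mean
    sigma = (1 - pi) * max(sigmas) + pi * min(sigmas)    # worst deviation = max sigma
    return sv, sigma

def compare(l1: List[Dist], l2: List[Dist], pi: float, tol: float = 1e-9) -> str:
    """Definition 4: the higher score wins; on a tie, the smaller deviation wins."""
    sv1, sigma1 = sv_and_sigma(l1, pi)
    sv2, sigma2 = sv_and_sigma(l2, pi)
    if abs(sv1 - sv2) > tol:
        return ">" if sv1 > sv2 else "<"
    if abs(sigma1 - sigma2) > tol:
        return ">" if sigma1 < sigma2 else "<"
    return "~"

L1 = [{4: 0.2, 3: 0.4, 2: 0.4}, {4: 0.2, 3: 0.5, 2: 0.3}, {4: 0.3, 3: 0.4, 2: 0.3}]
L2 = [{4: 0.4, 3: 0.4, 1: 0.2}, {4: 0.5, 3: 0.3, 1: 0.2}, {4: 0.4, 3: 0.25, 1: 0.35}]
for pi in (0.0, 0.5, 1.0):
    print(pi, compare(L1, L2, pi))
# 0.0 >
# 0.5 >
# 1.0 <
```

The ranking flips as \(\pi \) moves from 0 to 1, which is the attitude dependence the example is meant to show.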

Definition 5

Let \({\mathscr {L}}_1(p)= \{L_{j_1}(p)\mid j_1=1,2,\ldots ,k_1\}\) with \(L_{j_1}(p)=\{s_{\alpha _{i}}(p_{ij_1})\mid s_{\alpha _{i}}\in S,\ i=1,2,\ldots ,m,\ p_{ij_1} \ge 0,\ \sum _{i=1}^{m}p_{ij_1}= 1\}\), and \({\mathscr {L}}_2(p)= \{L_{j_2}(p)\mid j_2=1,2,\ldots ,k_2\}\) with \(L_{j_2}(p)=\{s_{\alpha _{i}}(p_{ij_2})\mid s_{\alpha _{i}}\in S,\ i=1,2,\ldots ,m,\ p_{ij_2} \ge 0,\ \sum _{i=1}^{m}p_{ij_2}= 1\}\) be two PLTSs with the same set of linguistic terms in ascending order. Then the distance between \({\mathscr {L}}_1(p)\) and \({\mathscr {L}}_2(p)\) is defined by

Here, since zero probabilities can be assigned to unshared linguistic terms without affecting the ranking, the two PLTSs are assumed to have the same set of ordered linguistic terms. In addition, the distance between PLTSs has the following properties.

Theorem 1

Let \({\mathscr {L}}_1(p),{\mathscr {L}}_2(p) \) be two PLTSs by (3), then it holds that,

1. \(d[{\mathscr {L}}_1(p),{\mathscr {L}}_2(p)]=0\) if and only if \({\mathscr {L}}_1(p)={\mathscr {L}}_2(p)\), in the sense that both PLTSs have the same probability distributions (for each \(1\le j_1\le k_1\) and \(1\le j_2\le k_2\), \(p_{ij_1}=p_{ij_2}\) for all \(i=1,2,\ldots ,m\));

2. \(d[{\mathscr {L}}_1(p),{\mathscr {L}}_2(p)]=d[{\mathscr {L}}_2(p),{\mathscr {L}}_1(p)].\)

The operations over the reformulated PLTSs

In every field, information aggregation relies extensively on the underlying operations, and PLTSs are no exception: since their introduction, their basic operations have been one of the hot topics in the area. The recent work by Mi et al. [37] provides a comprehensive summary of the topic. The typical operations are based on crisp values obtained from the linguistic terms by an appropriate transformation, usually the (scaled) subscripts of the linguistic terms, as in the operations of [17, 38, 50] (Footnote 1). Such a transformation may lose some of the intrinsic linguistic meaning, but it is intuitive and simple to apply. Here, the operations for the reformulated PLTSs are defined over the subscripts and the underlying probabilities in an element-by-element way, using an important class of aggregation operators, the t-(co)norms [24, 35, 48], whose notation is given in the Appendix.

Definition 6

(T-(co)norm-based operations for PLTSs) Let the linguistic term set be \(S=\{s_i\mid i=0,1,\ldots ,n\}\), and let \({\mathscr {L}}(p)= \{L_j(p)\mid j=1,2,\ldots ,k \}\) with \(L_j(p)=\{s_{\alpha _i}(p_{ij})\mid s_{\alpha _i}\in S,\ i=1,2,\ldots ,m,\ p_{ij}\ge 0,\ \sum _{i=1}^mp_{ij}= 1\}\); \({\mathscr {L}}_1(p)= \{L_{j_1}(p)\mid j_1=1,2,\ldots ,k_1 \}\) with \(L_{j_1}(p)=\{s_{\alpha _{i_1}}(p_{i_1j_1})\mid s_{\alpha _{i_1}}\in S,\ i_1=1,2,\ldots ,m_1,\ p_{i_1j_1}\ge 0,\ \sum _{i_1=1}^{m_1}p_{i_1j_1}= 1\}\); and \({\mathscr {L}}_2(p)= \{L_{j_2}(p)\mid j_2=1,2,\ldots ,k_2\}\) with \(L_{j_2}(p)=\{s_{\alpha _{i_2}}(p_{i_2j_2})\mid s_{\alpha _{i_2}}\in S,\ i_2=1,2,\ldots ,m_2,\ p_{i_2j_2}\ge 0,\ \sum _{i_2=1}^{m_2}p_{i_2j_2}= 1\}\) be three PLTSs, let \(\mu \ge 0\), and let T (resp. C) be an Archimedean t-norm (resp. t-conorm) with additive generator t (resp. c). Then

(i) \({\mathscr {L}}_1(p) \oplus {\mathscr {L}}_2(p)=\{L_{j_1j_2}(p)\mid j_1=1,2,\ldots ,k_1;\ j_2=1,2,\ldots ,k_2\}\), where \(L_{j_1j_2}(p)=\{s_\gamma \left( p_{i_1j_1}p_{i_2j_2}\right) \mid \gamma =nC(\frac{\alpha _{i_1}}{n},\frac{\alpha _{i_2}}{n})=nc^{-1}(c(\frac{\alpha _{i_1}}{n})+c(\frac{\alpha _{i_2}}{n})),\ i_1=1,2,\ldots ,m_1;\ i_2=1,2,\ldots ,m_2\}\);

(ii) \(\mu {\mathscr {L}}(p)=\{ L_j(p)\mid j=1,2,\ldots ,k\}\) with \(L_j(p)= \{s_\gamma (p_{ij})\mid \gamma = nc^{-1}(\mu c(\frac{\alpha _{i}}{n})),\ i=1,2,\ldots ,m\}\);

(iii) \({\mathscr {L}}_1(p) \odot {\mathscr {L}}_2(p)=\{L_{j_1j_2}(p)\mid j_1=1,2,\ldots ,k_1;\ j_2=1,2,\ldots ,k_2\}\), where \(L_{j_1j_2}(p)=\{s_\gamma \left( p_{i_1j_1}p_{i_2j_2}\right) \mid \gamma =nT(\frac{\alpha _{i_1}}{n},\frac{\alpha _{i_2}}{n})=nt^{-1}(t(\frac{\alpha _{i_1}}{n})+t(\frac{\alpha _{i_2}}{n})),\ i_1=1,2,\ldots ,m_1;\ i_2=1,2,\ldots ,m_2\}\);

(iv) \({\mathscr {L}}^\mu (p)=\{L_j(p)\mid j=1,2,\ldots ,k\}\) with \(L_j(p)= \{s_\gamma (p_{ij})\mid \gamma = nt^{-1}(\mu t(\frac{\alpha _{i}}{n})),\ i=1,2,\ldots ,m\}.\)

By these definitions, the operations are closed over PLTSs in the form of (3). Take \({\mathscr {L}}_1(p) \oplus {\mathscr {L}}_2(p)\) as an example: for each resulting element \(L_{j_1j_2}(p)\), we have \(p_{i_1j_1}p_{i_2j_2}\ge 0\) and \(\sum _{i_1=1}^{m_1}\sum _{i_2=1}^{m_2}p_{i_1j_1}p_{i_2j_2}=\sum _{i_1=1}^{m_1}p_{i_1j_1}\sum _{i_2=1}^{m_2}p_{i_2j_2}=1,\) which implies that \({\mathscr {L}}_1(p) \oplus {\mathscr {L}}_2(p)\) is a reformulated PLTS. In fact, these operations act separately on the linguistic terms and on the probabilities. The operations \(\odot \) and \(\oplus \) may produce repeated linguistic terms, which can be combined by summing their probabilities. For illustration, only the Frank and Hamacher t-(co)norms are used in the further analysis. Moreover, the following properties of these operations, namely commutativity, distributivity and associativity, can be derived from the properties of the underlying t-(co)norms or their generators.

Theorem 2

Let \({\mathscr {L}}(p),{\mathscr {L}}_i(p)(i=1,2,3)\) be PLTSs by (3), \(\mu ,\mu _1,\mu _2\ge 0,\) then it holds that,

(1) \({\mathscr {L}}_1(p)\oplus {\mathscr {L}}_2(p)= {\mathscr {L}}_2(p)\oplus {\mathscr {L}}_1(p),\) \({\mathscr {L}}_1(p)\odot {\mathscr {L}}_2(p)={\mathscr {L}}_2(p)\odot {\mathscr {L}}_1(p);\)

(2) \(\mu ({\mathscr {L}}_1(p)\oplus {\mathscr {L}}_2(p))= \mu ({\mathscr {L}}_1(p))\oplus \mu ({\mathscr {L}}_2(p)),\) \(({\mathscr {L}}_1(p)\odot {\mathscr {L}}_2(p))^\mu = ({\mathscr {L}}_1(p))^\mu \odot ({\mathscr {L}}_2(p))^\mu ;\)

(3) \(\mu _1{\mathscr {L}}(p)\oplus \mu _2{\mathscr {L}}(p)= (\mu _1+\mu _2){\mathscr {L}}(p),\) \({\mathscr {L}}^{\mu _1}(p)\odot {\mathscr {L}}^{\mu _2}(p)= {\mathscr {L}}^{\mu _1+\mu _2}(p);\)

(4) \(({\mathscr {L}}_1(p)\oplus {\mathscr {L}}_2(p)) \oplus {\mathscr {L}}_3(p)={\mathscr {L}}_1(p)\oplus ({\mathscr {L}}_2(p) \oplus {\mathscr {L}}_3(p)),\) \(({\mathscr {L}}_1(p)\odot {\mathscr {L}}_2(p))\odot {\mathscr {L}}_3(p)= {\mathscr {L}}_1(p)\odot ({\mathscr {L}}_2(p)\odot {\mathscr {L}}_3(p)).\)

Proof

The results follow directly from Definition 6 and the properties of the underlying t-(co)norms. \(\square \)

Using the operations in Definition 6, we can define average operators for information aggregation, such as the weighted average and weighted geometric average operators for PLTSs.

Definition 7

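Let \({\mathscr {L}}_i(p)\ (i=1,2,\ldots ,k)\) be PLTSs. Then the PLTS weighted average (PLTSWA) and PLTS weighted geometric average (PLTSWGA) operators are given, respectively, by

\(\hbox {PLTSWA}({\mathscr {L}}_1(p),{\mathscr {L}}_2(p),\ldots ,{\mathscr {L}}_k(p))=\mathop {\oplus }_{i=1}^k w_i{\mathscr {L}}_i(p),\qquad \hbox {PLTSWGA}({\mathscr {L}}_1(p),{\mathscr {L}}_2(p),\ldots ,{\mathscr {L}}_k(p))=\mathop {\odot }_{i=1}^k {\mathscr {L}}_i^{w_i}(p),\)

where \(w=(w_1,w_2,\ldots ,w_k)^{\mathrm{T}}\) is the weighting vector with \(w_i\ge 0\) and \(\sum _{i=1}^k w_i=1\).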
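As an illustration of Definition 7, the sketch below instantiates both operators with the product t-(co)norm, for which scalar multiplication and power on \([0,1]\) reduce to \(\mu x=1-(1-x)^{\mu }\) and \(x^{\mu }\). It is a sketch under stated assumptions, not the paper's implementation: terms are encoded by subscripts rescaled by a hypothetical maximum `TAU`, and the term and probability parts are combined as in the closure discussion after (3), with one output distribution for each choice of input distributions.

```python
import math
from itertools import product

TAU = 4  # assumed largest subscript; a subscript alpha is rescaled to alpha / TAU


def wa_scalar(values, weights):
    """Weighted sum under the product t-conorm: 1 - prod (1 - x_i)^(w_i)."""
    return 1 - math.prod((1 - x) ** w for x, w in zip(values, weights))


def wga_scalar(values, weights):
    """Weighted product under the product t-norm: prod x_i^(w_i)."""
    return math.prod(x ** w for x, w in zip(values, weights))


def aggregate(plts_list, weights, scalar_op):
    """Aggregate PLTSs given as lists of {subscript: probability} dicts.

    One output distribution is produced for every choice of one distribution
    per argument; within it, every choice of one term per argument yields the
    term scalar_op(...) with the product of the chosen probabilities.
    """
    result = []
    for dists in product(*plts_list):
        merged = {}
        for combo in product(*(d.items() for d in dists)):
            values = [alpha / TAU for alpha, _ in combo]
            prob = math.prod(p for _, p in combo)
            term = round(scalar_op(values, weights) * TAU, 10)  # merge float-equal terms
            merged[term] = merged.get(term, 0.0) + prob
        result.append(merged)
    return result


L1 = [{1: 0.4, 3: 0.6}]              # one distribution
L2 = [{2: 1.0}, {2: 0.3, 4: 0.7}]    # two distributions
w = [0.6, 0.4]
print("PLTSWA :", aggregate([L1, L2], w, wa_scalar))
print("PLTSWGA:", aggregate([L1, L2], w, wga_scalar))
```

Both operators are idempotent on identical single-term arguments, and for the same inputs the geometric version never exceeds the arithmetic one, which mirrors the compensation behavior discussed in the case study.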

MADM using the reformulated PLTSs

In this part, to illustrate the feasibility of the PLTS-based method in applications, we use the reformulated PLTSs to build a PLTS DEMATEL-based framework for MADM.

As usual, we assume that \(\mathbf{X }=\{x_1,x_2,\ldots ,x_m\}\) is a set of alternatives and \(\mathbf{A }=\{a_1,a_2,\ldots ,a_n\}\) is a set of attributes with the attribute weighting vector \(w=(w_1,w_2,\ldots ,w_n)^{\mathrm{T}}\). The DM evaluates the alternative \(x_i\) on the attribute \(a_j\) with the PLTS \({\mathscr {L}}_{ij}(p)\), so the decision matrix is \(\left( {\mathscr {L}}_{ij}(p)\right) _{m\times n}\), whose rows correspond to the alternatives and whose columns correspond to the attributes,

where \({\mathscr {L}}_{ij}(p)=\{L_{ij}^{(j_1)}|j_1=1,2,\ldots ,k_1\}\) with \(L_{ij}^{(j_1)}=\{s_{\alpha _{i_1}}(p_{ij}^{(i_1j_1)})|s_{\alpha _{i_1}}\in S,\ i_1=1,2,\ldots ,m_{ij},\) \( p_{ij}^{(i_1j_1)}\ge 0,\ \sum _{i_1=1}^{m_{ij}}p_{ij}^{(i_1j_1)}= 1\}\). In the following, we present the DEMATEL-based aggregation method for decision-making.

Some essentials on the classical DEMATEL

DEMATEL was introduced in the 1970s [15, 44] to solve complicated problems involving interrelated attributes with causal relationships. The method is usually used to visualize complex causal relationships by separating the attributes into cause and effect groups and to present the degree of influence of each attribute. DEMATEL is also employed to prioritize alternatives in decision-making.

The scale for pair-wise comparison is assumed to consist of linguistic terms such as 'No influence', 'Low influence', 'Moderate influence', 'High influence' and 'Extremely high influence', with the grades denoted by the integers 0, 1, 2, 3, 4, 5. To facilitate the matrix operations, we omit the prefix s of the linguistic terms and use the numerical values directly. The initial direct relation matrix Z over n attributes is a square matrix of order n, obtained by pair-wise comparison of the influences between attributes and their directions. The element \(z_{ij}\) is the degree to which the attribute \(a_i\) affects the attribute \(a_j\), and the diagonal elements are \(z_{ii}=0\). That is, \(Z=(z_{ij})_{n\times n}\) with \(z_{ii}=0\ (i=1,2,\ldots ,n)\).

From the initial direct relation matrix Z, we obtain the normalized direct relation matrix

\(X=\dfrac{Z}{\max _{1\le i\le n}\sum _{j=1}^n z_{ij}}\)

such that

- \(\lim _{t\rightarrow \infty }X^t=O\) (the null matrix);
- \(\lim _{t\rightarrow \infty }(E+X+X^2+\cdots +X^t)=(E-X)^{-1}\), where E is the identity matrix.
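The second property can be seen numerically: since \((E-X)(E+X+\cdots +X^t)=E-X^{t+1}\), the partial sums approach \((E-X)^{-1}\) exactly when \(X^t\rightarrow O\). The Python sketch below uses exact fractions and the matrix \(Z_p^{(1)}\) of Example 4. It assumes that Z is normalized by its largest row sum, which is the normalization that reproduces the segmental total relation matrices reported in Example 4.

```python
from fractions import Fraction

Z = [[0, 3, 3], [4, 0, 4], [4, 2, 0]]       # Z_p^(1) from Example 4
n = len(Z)
s = max(sum(row) for row in Z)              # assumed normalizer: largest row sum
X = [[Fraction(z, s) for z in row] for row in Z]
E = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def residual(t):
    """Largest entry of |(E - X)(E + X + ... + X^t) - E|, i.e. of X^(t+1)."""
    S = [row[:] for row in E]   # running partial sum
    P = [row[:] for row in E]   # current power X^k
    for _ in range(t):
        P = matmul(P, X)
        S = [[S[i][j] + P[i][j] for j in range(n)] for i in range(n)]
    E_minus_X = [[E[i][j] - X[i][j] for j in range(n)] for i in range(n)]
    R = matmul(E_minus_X, S)
    return max(abs(R[i][j] - E[i][j]) for i in range(n) for j in range(n))


for t in (5, 20, 80):
    print(t, float(residual(t)))
```

The residual shrinks geometrically because the spectral radius of this X is below 1, even though one of its rows sums to exactly 1.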

In general, the normalized direct relation matrix can be regarded as a sub-stochastic matrix obtained from the transition matrix of an absorbing Markov chain by deleting all rows and columns associated with the absorbing states. The total relation matrix T is then calculated by

\(T=\lim _{t\rightarrow \infty }(X+X^2+\cdots +X^t)=X(E-X)^{-1}.\)

By this equation, the element \(\tau _{ij}\) of T represents the overall degree to which the attribute \(a_i\) affects the attribute \(a_j\). Thus, \(c_i=\sum _{j=1}^n\tau _{ij}\) is the overall degree to which the attribute \(a_i\) affects the other attributes, and \(h_j=\sum _{i=1}^n\tau _{ij}\) is the overall degree to which the attribute \(a_j\) is affected by the other attributes. The prominence \(P_i\) of the attribute \(a_i\), defined by \(P_i=c_i+h_i\), indicates the importance of \(a_i\): the larger \(P_i\), the more important \(a_i\). The relation \(R_i\) of the attribute \(a_i\), defined by \(R_i=c_i-h_i\), distinguishes cause attributes from effect attributes. If \(R_i>0\), then \(a_i\) belongs to the cause group; if \(R_i<0\), then \(a_i\) belongs to the effect group. A cause diagram, obtained by plotting the ordered pairs \((P_i,R_i)\) in the prominence-relation space, visualizes the complicated causal relationships among the attributes and thereby supports reasonable decision-making.
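The classical procedure fits in a few lines. The following Python sketch uses exact rational arithmetic, a small hand-written Gauss-Jordan inverse, and normalization by the largest row sum (an assumption that reproduces Example 4). It computes \(T=X(E-X)^{-1}\), the prominences \(P_i\) and the relations \(R_i\). Applied to \(Z_p^{(1)}\) of Example 4, it returns \(T_p^{(1)}\) as reported there, and for this segmental matrix only \(a_2\) has \(R_i>0\).

```python
from fractions import Fraction


def inverse(M):
    """Gauss-Jordan inverse of a small square matrix of Fractions."""
    n = len(M)
    A = [list(row) + [Fraction(int(i == j)) for j in range(n)] for i, row in enumerate(M)]
    for c in range(n):
        pivot = next(r for r in range(c, n) if A[r][c] != 0)
        A[c], A[pivot] = A[pivot], A[c]
        pv = A[c][c]
        A[c] = [v / pv for v in A[c]]
        for r in range(n):
            if r != c and A[r][c] != 0:
                f = A[r][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    return [row[n:] for row in A]


def dematel(Z):
    """Return T = X(E - X)^(-1), prominences P_i = c_i + h_i, relations R_i = c_i - h_i."""
    n = len(Z)
    s = max(sum(row) for row in Z)  # assumed normalizer: largest row sum
    X = [[Fraction(z, s) for z in row] for row in Z]
    inv = inverse([[int(i == j) - X[i][j] for j in range(n)] for i in range(n)])
    T = [[sum(X[i][k] * inv[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    c = [sum(T[i]) for i in range(n)]                       # influence exerted
    h = [sum(T[j][i] for j in range(n)) for i in range(n)]  # influence received
    P = [a + b for a, b in zip(c, h)]
    R = [a - b for a, b in zip(c, h)]
    return T, P, R


T, P, R = dematel([[0, 3, 3], [4, 0, 4], [4, 2, 0]])  # Z_p^(1) from Example 4
print([[round(float(t), 4) for t in row] for row in T])
print("cause attributes:", [f"a{i + 1}" for i, r in enumerate(R) if r > 0])
```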

DEMATEL is able to explore the overall effect (influence) of factors and has been successfully employed in various problems in different fields. Recently, the DEMATEL methodology has been deepened and strengthened by adapting it to various scenarios [2, 4, 6, 8, 18, 20, 27, 46] and by combining it with related methodologies such as the analytic network process (ANP) [47] and association rule mining (ARM) [1]. A newly emerging direction is DEMATEL with both subjectivity and objectivity [10, 11]. In [11], the basic belief assignment function and Dezert–Smarandache theory are used to derive the group initial direct relation matrix, and the resulting group DEMATEL, which combines subjective (experts') and objective (pair-factors') assessments, reaches a prescribed consensus level through a feedback mechanism. Notably, linguistic DEMATEL methods [8, 20, 46] have proved effective and reasonable in applications.

PLTS-based DEMATEL method

Since DEMATEL is an important direction among PLTS-based methods [30], it is worthwhile to develop DEMATEL under PLTSs. Here we introduce the reformulated PLTS-based DEMATEL method step by step.

STEP 1. Formulation of the PLTS-based initial direct relation matrix. Assume that PLTSs are used in the pair-wise comparison of the attributes. This gives the PLTS-based initial direct relation matrix \(\left( {\mathscr {L}}_{ij}(p)\right) _{m\times m}\),

where \({\mathscr {L}}_{ij}(p)=\{L^{(ij)}_{k_{ij}}|k_{ij}=1,2,\ldots ,l_{ij}\}\) with \(L^{(ij)}_{k_{ij}}=\{{\alpha _{t_{ij}}^{(ij)}}(p^{(ij)}_{k_{ij}t_{ij}})|t_{ij}=1,2,\ldots ,\tau _{ij}\}\) and, in particular, \({\mathscr {L}}_{ii}(p)=\{0\}\), i.e., \(l_{ii}=\tau _{ii}=1\), \(p^{(ii)}_{k_{ii}t_{ii}}=1\) and \(\alpha _{t_{ii}}^{(ii)}=0\).

STEP 2. Derivation of the segmental initial direct relation matrices with probability. For each choice of indices \(t_{ij}\in \{1,2,\ldots ,\tau _{ij}\}\ (i,j=1,2,\ldots , m)\), we obtain a segmental initial direct relation matrix with probability. These matrices are lexicographically ordered by the linguistic terms \(\alpha _{t_{ij}}^{(ij)}\) taken from the PLTSs \({\mathscr {L}}_{ij}(p)\) and have the form

\(Z_p^{(I)}=\left( \alpha _{t_{ij}}^{(ij)}\right) _{p^{(I)}},\)

where \(\alpha _{t_{ii}}^{(ii)}=0\), the subscript \(p^{(I)}=\prod _{i=1}^m\prod _{j=1}^m \left( \frac{1}{l_{ij}}\sum _{k_{ij}=1}^{l_{ij}}p^{(ij)}_{k_{ij}t_{ij}}\right) \) is the associated probability, and \(I=\left\{ \begin{array}{cccc} t_{11} &{} t_{12}&{}\ldots &{} t_{1m}\\ t_{21} &{} t_{22}&{}\ldots &{} t_{2m}\\ \vdots &{}\vdots &{} &{}\vdots \\ t_{m1} &{}t_{m2} &{} \ldots &{} t_{mm}\\ \end{array} \right\} \) is an index notation. Since \(\sum _{t_{ij}=1}^{\tau _{ij}}\frac{1}{l_{ij}}\sum _{k_{ij}=1}^{l_{ij}}p^{(ij)}_{k_{ij}t_{ij}}=1\) for every entry, the probabilities \(p^{(I)}\) of all segmental matrices sum to 1.
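STEP 2 is a Cartesian product over the candidate terms of all entries. The Python sketch below enumerates the segmental matrices and their probabilities \(p^{(I)}\) under a hypothetical encoding of each entry as (terms, list of distributions). Since the PLTS matrix of Example 4 is not reproduced here, the input is made up so that the averaged probabilities (0.45/0.55 for entry (1,2) and 0.35/0.65 for entry (3,1)) match the segmental matrices of Example 4. The enumeration order follows `itertools.product` and need not coincide with the numbering used in the example.

```python
from fractions import Fraction
from itertools import product
from math import prod


def segmental_matrices(plts_matrix):
    """Yield (Z, p) for every index choice I = (t_ij), in lexicographic order.

    Each cell is (terms, dists): terms = [alpha_1, ..., alpha_tau] and dists is a
    list of l probability vectors over those terms (hypothetical encoding).
    A term's cell probability is its average over the l distributions, and
    p^(I) is the product of these averages over all cells.
    """
    m = len(plts_matrix)
    cells = [plts_matrix[i][j] for i in range(m) for j in range(m)]
    avg = [[sum(d[t] for d in dists) / len(dists) for t in range(len(terms))]
           for terms, dists in cells]
    for I in product(*(range(len(terms)) for terms, _ in cells)):
        flat = [terms[t] for (terms, _), t in zip(cells, I)]
        Z = [flat[i * m:(i + 1) * m] for i in range(m)]
        yield Z, prod(a[t] for a, t in zip(avg, I))


F = Fraction


def certain(alpha):
    """A cell holding a single term with probability 1."""
    return ([alpha], [[F(1)]])


# Hypothetical input whose averaged probabilities match Example 4.
Zp = [
    [certain(0), ([3, 4], [[F("0.4"), F("0.6")], [F("0.5"), F("0.5")]]), certain(3)],
    [certain(4), certain(0), certain(4)],
    [([4, 5], [[F("0.35"), F("0.65")]]), certain(2), certain(0)],
]
for Z, p in segmental_matrices(Zp):
    print(Z, float(p))
```

The number of yielded matrices is the product of the numbers of candidate terms over all entries, here \(2\times 2=4\).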

STEP 3. Derivation of the segmental prominences and segmental relations. Ignoring the probabilistic information, the segmental initial direct relation matrices in Eq. (9) reduce to classical initial direct relation matrices. Applying the classical DEMATEL method to each of them yields the corresponding total relation matrix \(T_{p}^{(I)}=\left( \tau _{ij}\right) _{ p^{(I)}}\), hereafter called the segmental total relation matrix. Note that each segmental total relation matrix inherits the probability of the corresponding segmental initial direct relation matrix. The segmental prominence \(P_{i,p}^{(I)}=\sum _j\tau _{ij}+\sum _j\tau _{ji}\) and the segmental relation \(R_{i,p}^{(I)}=\sum _j\tau _{ij}-\sum _j\tau _{ji}\) with probability \(p^{(I)}\) for the attribute \(a_i\) are then obtained from these segmental total relation matrices.

STEP 4. Synthesis of the segmental prominences and segmental relations. Using the underlying probabilities, we obtain the synthesized prominence and the synthesized relation, respectively, as \(P_i=\sum _{I}P_{i,p}^{(I)}p^{(I)}\) and \(R_i=\sum _{I}R_{i,p}^{(I)}p^{(I)}\). The synthesized prominence measures the overall degree to which an attribute influences, and is influenced by, the others, and therefore provides a basis for weighting the attributes.

STEP 5. The determination of the attribute weights. Here, the weight \(w_i\) for \(a_i\) can be calculated by

Equivalently, we can first derive the synthesized total relation matrix T as the probability-weighted average of the segmental total relation matrices, that is,

\(T=\sum _{I}p^{(I)}T_p^{(I)},\)

from which the synthesized prominence can also be obtained, because the prominence and the relation depend linearly on the total relation matrix.
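The equivalence of the two routes can be checked directly. The Python sketch below computes the synthesized prominences and relations once from the segmental values (STEP 4) and once from the synthesized matrix \(T=\sum _I p^{(I)}T_p^{(I)}\), using two made-up \(2\times 2\) segmental total relation matrices with made-up probabilities. With exact fractions the two results coincide.

```python
from fractions import Fraction


def prominence_relation(T):
    """Return (P, R) with P_i = c_i + h_i and R_i = c_i - h_i."""
    n = len(T)
    c = [sum(T[i]) for i in range(n)]
    h = [sum(T[j][i] for j in range(n)) for i in range(n)]
    return [a + b for a, b in zip(c, h)], [a - b for a, b in zip(c, h)]


def synthesize(segments):
    """segments: list of (T^(I), p^(I)). Return (P, R) computed by both routes."""
    n = len(segments[0][0])
    # Route 1 (STEP 4): probability-weighted segmental prominences and relations.
    P1, R1 = [0] * n, [0] * n
    for Tk, p in segments:
        P, R = prominence_relation(Tk)
        P1 = [x + p * y for x, y in zip(P1, P)]
        R1 = [x + p * y for x, y in zip(R1, R)]
    # Route 2: prominences and relations of the synthesized total relation matrix.
    T_syn = [[sum(p * Tk[i][j] for Tk, p in segments) for j in range(n)] for i in range(n)]
    return (P1, R1), prominence_relation(T_syn)


F = Fraction
segs = [  # made-up 2 x 2 segmental total relation matrices with probabilities
    ([[F(1, 2), F(1)], [F(1, 4), F(0)]], F(1, 3)),
    ([[F(2), F(1, 2)], [F(1), F(3, 2)]], F(2, 3)),
]
route1, route2 = synthesize(segs)
print(route1)
print(route2)
```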

Example 4

Assume the PLTS-based initial direct relation matrix is given by

then the segmental initial direct relation matrices can be obtained as follows.

\( Z_p^{(1)}=\left( \begin{array}{ccc} 0 &{} 3&{} 3\\ 4 &{} 0&{} 4\\ 4 &{}2 &{} 0\\ \end{array} \right) _{0.45\times 0.35}\), \( Z_p^{(2)}=\left( \begin{array}{ccc} 0 &{} 4&{} 3\\ 4 &{} 0&{} 4\\ 4 &{}2 &{} 0\\ \end{array} \right) _{0.55\times 0.35}\),

\( Z_p^{(3)}=\left( \begin{array}{ccc} 0 &{} 3&{} 3\\ 4 &{} 0&{} 4\\ 5 &{}2 &{} 0\\ \end{array} \right) _{0.45\times 0.65}\), \( Z_p^{(4)}=\left( \begin{array}{ccc} 0 &{} 4&{} 3\\ 4 &{} 0&{} 4\\ 5 &{}2 &{} 0\\ \end{array} \right) _{0.55\times 0.65}\).

By the classical DEMATEL method, we can get the segmental total relation matrix for each segmental initial direct relation matrix, i.e.,

\( T_p^{(1)}=\left( \begin{array}{ccc} 1.4348 &{} 1.3043 &{} 1.5652\\ 2.0870 &{} 1.2609 &{} 1.9130\\ 1.7391 &{} 1.2174 &{} 1.2609\\ \end{array} \right) _{0.45\times 0.35}\)

\( T_p^{(2)}=\left( \begin{array}{ccc} 2.2941 &{} 2.2353 &{} 2.3529\\ 2.8235 &{} 2.0588 &{} 2.5882\\ 2.3529 &{} 1.8824 &{} 1.8235\\ \end{array} \right) _{0.55\times 0.35}\)

\( T_p^{(3)}=\left( \begin{array}{ccc} 2.0270 &{} 1.6216 &{} 1.9459\\ 2.8108 &{} 1.6486 &{} 2.3784\\ 2.5946 &{} 1.6757 &{} 1.8108\\ \end{array} \right) _{0.45\times 0.65}\)

\( T_p^{(4)}=\left( \begin{array}{ccc} 3.6667&{} 3.1667 &{} 3.3333\\ 4.3333&{} 3.0833 &{} 3.6667\\ 4.0000&{} 3.0000 &{} 3.0000\\ \end{array} \right) _{0.55\times 0.65}.\)

The segmental and synthesized prominences of the attributes are listed in Table 2. The synthesized prominences can also be obtained from the synthesized total relation matrix \( T=\left( \begin{array}{ccc} 2.5713 &{} 2.2421 &{} 2.4603\\ 3.2436 &{} 2.1794 &{} 2.8060\\ 2.9158 &{} 2.1167 &{} 2.1518\\ \end{array} \right) \) by (11).
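As a check, the synthesized total relation matrix can be recomputed from the four segmental total relation matrices and their probabilities. The short Python sketch below uses the four-decimal values printed in Example 4, so it agrees with the reported T only up to rounding.

```python
# Segmental total relation matrices of Example 4, keyed by their probability factors.
T_seg = {
    (0.45, 0.35): [[1.4348, 1.3043, 1.5652], [2.0870, 1.2609, 1.9130], [1.7391, 1.2174, 1.2609]],
    (0.55, 0.35): [[2.2941, 2.2353, 2.3529], [2.8235, 2.0588, 2.5882], [2.3529, 1.8824, 1.8235]],
    (0.45, 0.65): [[2.0270, 1.6216, 1.9459], [2.8108, 1.6486, 2.3784], [2.5946, 1.6757, 1.8108]],
    (0.55, 0.65): [[3.6667, 3.1667, 3.3333], [4.3333, 3.0833, 3.6667], [4.0000, 3.0000, 3.0000]],
}
# Synthesized matrix: T = sum_I p^(I) T_p^(I), with p^(I) the product of the two factors.
T = [[sum(a * b * Tk[i][j] for (a, b), Tk in T_seg.items()) for j in range(3)] for i in range(3)]
for row in T:
    print([round(x, 4) for x in row])
```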

Then, by (10), the weights of \(a_1,a_2,a_3\) are \(w_1=0.3521\), \(w_2=0.3255\) and \(w_3= 0.3224\).

The DEMATEL-based MADM method

With the above DEMATEL method, a PLTS-based MADM method can be obtained as follows.

STEP 1: The DMs (or a group of experts) provide the decision matrix, each element of which is a reformulated PLTS evaluating an alternative on an attribute, together with the initial direct relation matrix \(\mathbf{R }\) given by (8) in terms of the reformulated PLTSs.

STEP 2: Obtain the attribute weights \(w=(w_1,w_2, \ldots ,w_n)^{\mathrm{T}}\) by the above DEMATEL method based on the reformulated PLTSs.

STEP 3: Aggregate the overall attribute value \(Z_i(w)\) of each alternative \(x_i\) with the obtained attribute weights \(w=(w_1,w_2,\ldots ,w_n)^{\mathrm{T}}\), that is, \(Z_i(w)=\hbox {PLTSWA}({\mathscr {L}}_{i1}(p), {\mathscr {L}}_{i2}(p),\ldots ,{\mathscr {L}}_{in}(p))= \mathop {\oplus }_{j=1}^nw_j{\mathscr {L}}_{ij}(p)\) or \(Z_i(w)=\hbox {PLTSWGA}({\mathscr {L}}_{i1}(p), {\mathscr {L}}_{i2}(p),\ldots ,{\mathscr {L}}_{in}(p))= \mathop {\odot }_{j=1}^n {\mathscr {L}}^{w_j}_{ij}(p)\). Rank the alternatives \(x_i\) with the ranking scheme of Definition 4, which is based on the score values and deviation degrees of the overall attribute values \(Z_i(w)\). The best alternative is then selected according to the DMs' attitudes, as expressed by the optimism index.

Illustrative case analysis

In this part, we provide an illustrative example, adapted from [38, 39], to show the feasibility and validity of the reformulated PLTS-based method.

Case study

The example concerns the selection of strategic projects, which are evaluated with respect to four attributes: investment position \((a_1)\), profitability (\(a_2\)), investment risk (\(a_3\)) and social responsibility (\(a_4\)). Members of the board of directors are asked to evaluate three candidate projects \(x_i\ (i=1,2,3)\). Using the DEMATEL-based MADM method, the selection proceeds as follows.

STEP 1: The board of directors constructs the decision matrix using the reformulated PLTSs, as shown in Table 3. Since the attribute weights are completely unknown, the board also provides the initial direct relation matrix in PLTS form (see Table 4), which the proposed DEMATEL method uses to determine the attribute weighting vector.

STEP 2: Determination of the attribute weights by the PLTS-based DEMATEL method. From the PLTS-based initial direct relation matrix, we first obtain \(2^{11}\) segmental initial direct relation matrices with probabilities (see Notes), from which the corresponding segmental total relation matrices are calculated. The resulting synthesized total relation matrix is \(T= \left( \begin{array}{cccc} 1.0068 &{} 1.1717&{} 1.1410&{} 1.0807\\ 1.2957 &{} 1.0593&{} 1.2313 &{} 1.0671\\ 1.5327 &{} 1.4826 &{} 1.2006 &{} 1.2832\\ 1.5300 &{} 1.6363 &{} 1.5523&{} 1.1397\\ \end{array} \right) .\) The synthesized prominences of the attributes \(a_1,a_2,a_3,a_4\) are then 9.7654, 10.0033, 10.6243 and 10.4290, respectively. Thus, the weighting vector of these attributes is \(w=( 0.2392, 0.2450, 0.2603, 0.2555)^{\mathrm{T}}.\)
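The prominences and weights reported in STEP 2 can be recomputed from the synthesized matrix T. The Python sketch below assumes the weighting rule \(w_i=P_i/\sum _k P_k\), since Eq. (10) is not reproduced in this section; this rule reproduces the reported weighting vector to four decimals. The relations \(R_i\) are printed as well: \(a_3\) and \(a_4\) come out with positive relations, that is, in the cause group.

```python
T = [
    [1.0068, 1.1717, 1.1410, 1.0807],
    [1.2957, 1.0593, 1.2313, 1.0671],
    [1.5327, 1.4826, 1.2006, 1.2832],
    [1.5300, 1.6363, 1.5523, 1.1397],
]
n = len(T)
given = [sum(row) for row in T]                               # c_i: influence exerted
received = [sum(T[i][j] for i in range(n)) for j in range(n)]  # h_j: influence received
P = [c + h for c, h in zip(given, received)]                   # prominences
R = [c - h for c, h in zip(given, received)]                   # relations
w = [p / sum(P) for p in P]  # assumed weighting rule: w_i = P_i / sum_k P_k
print("P =", [round(p, 4) for p in P])
print("R =", [round(r, 4) for r in R])
print("w =", [round(x, 4) for x in w])
```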

STEP 3: With the obtained weighting vector, we use the PLTSWA or PLTSWGA operator to obtain the overall attribute value \(Z_i(w)\) of each alternative \(x_i\). The underlying operations can use Frank or Hamacher t-(co)norms with different parameters, and different optimism indices \(\pi \in [0,1]\) can be used in ranking the evaluations. The results obtained with the parameterized Frank t-(co)norms under different optimism indices are listed in Table 5.

Remarks on the results

For the PLTSWA-based results, the ranking is robust to the parameter of the Frank t-(co)norm and even to the optimism index, which suggests that the PLTSWA operator, like the classical weighted average, has a strong compensation effect. Whether compensation is acceptable is therefore the key criterion for choosing between PLTSWA and PLTSWGA: PLTSWA works much better when compensation is allowable, whereas PLTSWGA is recommended when extreme results may lead to tremendous losses and compensation is unreasonable. Since the problem here concerns the long-term selection of strategic projects, the results obtained with PLTSWGA are considered more reliable.

For the PLTSWGA-based results, the optimism index affects the ranking, while the parameter of the Frank t-(co)norms does not. For an optimistic DM, the best alternative is \(x_3\), whereas for a less optimistic or pessimistic DM it is \(x_1\). In both cases, the parameter of the Frank t-(co)norms has little influence on the results, so for computational simplicity we recommend \(\theta =1\), for which the t-(co)norm is the product t-(co)norm. Similar results (see Table 6) are obtained with the Hamacher t-(co)norms, which include the Einstein t-(co)norm (\(\delta =0\)). In practice, it suffices to employ typical t-(co)norms such as the product or Einstein t-(co)norms.

Note that for the alternative \(x_1\), every evaluation in the decision matrix carries complete knowledge of the probabilities, so the score values of its overall evaluations do not depend on the DMs' attitudes. By contrast, for the alternatives \(x_2\) and \(x_3\), whose evaluations carry partial probabilities, the score values of the overall evaluations change with the DMs' attitudes, as modeled by the optimism index. Therefore, the reformulated PLTSs based on multiple probability distributions have the advantage of incorporating the DMs' attitudes into the solution.

Comparison analysis

In this part, we compare the results of the case study with those of existing methods. Since this work employs the reformulated PLTSs defined by (3), to compare with the methods based on the classical PLTSs by Pang et al. [38], we equivalently transform the reformulated PLTSs in the dataset into classical ones, which may then require normalization, and apply the corresponding methods to rank the alternatives. Following the remarks above, we use the results of the product t-(co)norm-based PLTSWGA operator in the comparison (see Item 5 in Table 7).

In [38], the extended TOPSIS method ranks the alternatives by their closeness coefficients, and the maximum deviation-based aggregation method relies on the PLWA operator with attribute weights obtained by the maximum deviation method. Both methods yield the ranking \(x_1\succ x_3\succ x_2\) (see Items 1 and 2 in Table 7), which is consistent with the results of this work for the optimism indices \(\pi =1\) and \(\pi =0.5\).

Other existing basic PLTS operations can also be used in the maximum deviation method [38]. With the novel operations based on the scaled subscripts of linguistic terms by Gou and Xu [17], the ranking is \(x_3\succ x_1\succ x_2\) (see Item 3 in Table 7), which is consistent with the result of this work for the optimism index \(\pi =0\). In [35], Liu and Teng developed Archimedean t-(co)norm-based operations of PLTSs and, on this basis, introduced the Muirhead mean operators (PLAMM). Using the product t-(co)norm-based PLAMM with parameter vector \(P=(1,1,0,0)\) (i.e., the probabilistic linguistic Bonferroni mean operator) yields \(x_1\succ x_3\succ x_2\) (see Item 4 in Table 7).

The results of the method in this work are thus consistent with those of the existing methods. It is worth noting, however, that the classical PLTSs are routinely normalized before the operations, which necessarily leads to information loss: the partial probabilistic information, or the ignorance of probability, which is a vital feature of PLTSs, is eliminated. By contrast, because the reformulated PLTSs are represented by multiple probability distributions over the potential linguistic terms, they need no normalization. Therefore, the method using the reformulated PLTSs makes full use of the probability information without any normalization. Moreover, the (optimistic, moderate or pessimistic) attitudes of the DMs can be incorporated into the ranking scheme, so the obtained results are compatible with these attitudes. However, the proposed method must cope with the computational complexity of the underlying calculations caused by the curse of dimensionality.

Concluding remarks

Probabilistic linguistic term set (PLTS) provides a much more effective model to compute with words and to express the uncertainty in the pervasive natural language by probability information. In this paper, to avoid loss of information, we redefine the classical PLTSs by multiple probability distributions from an ambiguity perspective and present some basic operations using Archimedean t-(co)norms. Different from the classical PLTSs, the reformulated PLTSs need not be normalized beforehand for further investigations. Moreover, the multiple probability distributions based PLTSs facilitate the incorporation of the different attitudes of the DMs in their score values and deviations, and thus in the comparisons. In particular, the reformulated PLTSs are incorporated into DEMATEL. Because the reformulation expresses the probability information accurately, it makes better use of the experts' preferences and knowledge. Further, the reformulated PLTSs require no pre-stage normalization and thus cause no information loss in the process. Therefore, compared with the existing DEMATEL methods under classical PLTSs or numerical expressions, DEMATEL based on the reformulated PLTSs exploits the experts' probabilistic knowledge more accurately and without information loss.

From an ambiguity perspective, the paper concentrates on reformulating the classical PLTSs, which offers a new insight into PLTS-based methods and applications. The reformulated PLTSs provide a way to eliminate the pre-stage normalization and to proceed with further investigations without any information loss. Thus, some known methods for PLTS decision-making (see Table 1) and some extensions of PLTSs [3, 23, 34, 53, 60] could also be restructured with the reformulated PLTSs in this work. In future work, we will investigate extended or improved DEMATEL methods [1, 10,11,12, 47] using the reformulated PLTSs.

Notes

The number of segmental initial direct relation matrices equals the product of the numbers of candidate linguistic terms over all entries of the initial direct relation matrix, so it grows exponentially with the number of attributes and quickly leads to high computational complexity. The method is therefore more practical for problems with a small number of attributes.

References

Aaldering LJ, Leker J, Song CH (2018) Analyzing the impact of industry sectors on the composition of business ecosystem: a combined approach using ARM and DEMATEL. Expert Syst Appl 100:17–29

Asan U, Kadaifci C, Bozdag E, Soyer A, Serdarasan S (2018) A new approach to DEMATEL based on interval-valued hesitant fuzzy sets. Appl Soft Comput 66:34–49

Bai C, Zhang R, Shen S, Huang C, Fan X (2018) Interval-valued probabilistic linguistic term sets in multi-criteria group decision making. Int J Intell Syst 33(6):1301–1321

Bai C, Sarkis J (2013) A grey-based DEMATEL model for evaluating business process management critical success factors. Int J Prod Econ 146(1):281–292

Camerer C, Weber M (1992) Recent developments in modeling preferences: uncertainty and ambiguity. J Risk Uncertain 5(4):325–370

Chang B, Chang C-W, Wu C-H (2011) Fuzzy DEMATEL method for developing supplier selection criteria. Expert Syst Appl 38(3):1850–1858

Chen Z-S, Chin K-S, Li Y-L, Yang Y (2016) Proportional hesitant fuzzy linguistic term set for multiple criteria group decision making. Inf Sci 357:61–87

Ding X-F, Liu H-C (2018) A 2-dimension uncertain linguistic DEMATEL method for identifying critical success factors in emergency management. Appl Soft Comput 71:386–395

Dong Y, Zheng X, Xu Z, Chen W, Shi H, Gong K (2021) A novel decision-making framework based on probabilistic linguistic term set for selecting sustainable supplier considering social credit. Technol Econ Dev Econ 27(6):1447–1480

Du Y-W, Zhou W (2019) New improved DEMATEL method based on both subjective experience and objective data. Eng Appl Artif Intell 83:57–71

Du Y-W, Zhou W (2019) DSmT-based group DEMATEL method with reaching consensus. Group Decis Negot 28(6):1201–1230

Du Y-W, Li X-X (2021) Hierarchical DEMATEL method for complex systems. Expert Syst Appl 167:113871

Ellsberg D (1961) Risk, ambiguity, and the Savage axioms. Q J Econ 75(4):643–669

Feng X, Liu Q, Wei C (2019) Probabilistic linguistic QUALIFLEX approach with possibility degree comparison. J Intell Fuzzy Syst 36(1):719–730

Gabus A, Fontela E (1972) World problems, an invitation to further thought within the framework of DEMATEL. Battelle Geneva Research Centre

Gilboa I, Marinacci M (2016) Ambiguity and the Bayesian paradigm. In: Arló-Costa H, Hendricks V, van Benthem J (eds) Readings in formal epistemology. Springer, Berlin, pp 385–439

Gou X, Xu Z (2016) Novel basic operational laws for linguistic terms, hesitant fuzzy linguistic term sets and probabilistic linguistic term sets. Inf Sci 372:407–427

Govindan K, Khodaverdi R, Vafadarnikjoo A (2015) Intuitionistic fuzzy based DEMATEL method for developing green practices and performances in a green supply chain. Expert Syst Appl 42(20):7207–7220

Guo W-T, Huynh V-N, Sriboonchitta S (2017) A proportional linguistic distribution based model for multiple attribute decision making under linguistic uncertainty. Ann Oper Res 256(2):305–328

Han W, Sun Y, Xie H, Che Z (2018) Hesitant fuzzy linguistic group DEMATEL method with multi-granular evaluation scales. Int J Fuzzy Syst 20(7):2187–2201

Herrera F, Martínez L (2000) A 2-tuple fuzzy linguistic representation model for computing with words. IEEE Trans Fuzzy Syst 8(6):746–752

Jiang L, Liao H (2020) Mixed fuzzy least absolute regression analysis with quantitative and probabilistic linguistic information. Fuzzy Sets Syst 387:35–48

Jin C, Wang H, Xu Z (2019) Uncertain probabilistic linguistic term sets in group decision making. Int J Fuzzy Syst 21(4):1241–1258

Klement EP, Mesiar R, Pap E (2013) Triangular norms, vol 8. Springer Science & Business Media, Berlin

Knight FH (1921) Risk, uncertainty and profit. Houghton Mifflin, Boston

Li P, Wei C (2019) An emergency decision-making method based on DS evidence theory for probabilistic linguistic term sets. Int J Disaster Risk Reduct 37:101178

Li Y, Hu Y, Zhang X, Deng Y, Mahadevan S (2014) An evidential DEMATEL method to identify critical success factors in emergency management. Appl Soft Comput 22:504–510

Liao H, Jiang L, Lev B, Fujita H (2019) Novel operations of PLTSs based on the disparity degrees of linguistic terms and their use in designing the probabilistic linguistic ELECTRE III method. Appl Soft Comput 80:450–464

Liao H, Jiang L, Xu Z, Xu J, Herrera F (2017) A linear programming method for multiple criteria decision making with probabilistic linguistic information. Inf Sci 415:341–355

Liao H, Mi X, Xu Z (2020) A survey of decision-making methods with probabilistic linguistic information: bibliometrics, preliminaries, methodologies, applications and future directions. Fuzzy Optim Decis Mak 19(1):81–134

Liao H, Xu Z, Herrera-Viedma E, Herrera F (2018) Hesitant fuzzy linguistic term set and its application in decision making: a state-of-the-art survey. Int J Fuzzy Syst 20(7):2084–2110

Liao H, Xu Z, Zeng X-J, Merigó JM (2015) Qualitative decision making with correlation coefficients of hesitant fuzzy linguistic term sets. Knowl Based Syst 76:127–138

Lin M, Chen Z, Liao H, Xu Z (2019) ELECTRE II method to deal with probabilistic linguistic term sets and its application to edge computing. Nonlinear Dyn 96(3):2125–2143

Lin M, Wang H, Xu Z, Yao Z, Huang J (2018) Clustering algorithms based on correlation coefficients for probabilistic linguistic term sets. Int J Intell Syst 33(12):2402–2424

Liu P, Teng F (2018) Some Muirhead mean operators for probabilistic linguistic term sets and their applications to multiple attribute decision-making. Appl Soft Comput 68:396–431

Liu P, Teng F (2019) Probabilistic linguistic TODIM method for selecting products through online product reviews. Inf Sci 485:441–455

Mi X, Liao H, Wu X, Xu Z (2020) Probabilistic linguistic information fusion: a survey on aggregation operators in terms of principles, definitions, classifications, applications, and challenges. Int J Intell Syst 35(3):529–556

Pang Q, Wang H, Xu Z (2016) Probabilistic linguistic term sets in multi-attribute group decision making. Inf Sci 369:128–143

Parreiras RO, Ekel PY, Martini JSC, Palhares RM (2010) A flexible consensus scheme for multicriteria group decision making under linguistic assessments. Inf Sci 180(7):1075–1089

Potamites E, Zhang B (2012) Heterogeneous ambiguity attitudes: a field experiment among small-scale stock investors in China. Rev Econ Des 16(2–3):193–213

Rodriguez RM, Martinez L, Herrera F (2011) Hesitant fuzzy linguistic term sets for decision making. IEEE Trans Fuzzy Syst 20(1):109–119

Schmeidler D (1986) Integral representation without additivity. Proc Am Math Soc 97(2):255–261

Schmeidler D (1989) Subjective probability and expected utility without additivity. Econom J Econom Soc 57(3):571–587

Si S-L, You X-Y, Liu H-C, Zhang P (2018) DEMATEL technique: a systematic review of the state-of-the-art literature on methodologies and applications. Math Probl Eng 1:1–33

Song Y, Li G (2019) A large-scale group decision-making with incomplete multi-granular probabilistic linguistic term sets and its application in sustainable supplier selection. J Oper Res Soc 70(5):827–841

Suo W-L, Feng B, Fan Z-P (2012) Extension of the DEMATEL method in an uncertain linguistic environment. Soft Comput 16(3):471–483

Supeekit T, Somboonwiwat T, Kritchanchai D (2016) DEMATEL-modified ANP to evaluate internal hospital supply chain performance. Comput Ind Eng 102:318–330

Wan S-P, Yi Z-H (2015) Power average of trapezoidal intuitionistic fuzzy numbers using strict t-norms and t-conorms. IEEE Trans Fuzzy Syst 24(5):1035–1047

Wu X, Liao H (2018) An approach to quality function deployment based on probabilistic linguistic term sets and ORESTE method for multi-expert multi-criteria decision making. Inf Fusion 43:13–26

Wu X, Liao H (2019) A consensus-based probabilistic linguistic gained and lost dominance score method. Eur J Oper Res 272(3):1017–1027

Wu X, Liao H, Xu Z, Hafezalkotob A, Herrera F (2018) Probabilistic linguistic MULTIMOORA: a multicriteria decision making method based on the probabilistic linguistic expectation function and the improved Borda rule. IEEE Trans Fuzzy Syst 26(6):3688–3702

Wu Y, Li C-C, Chen X, Dong Y (2018) Group decision making based on linguistic distributions and hesitant assessments: maximizing the support degree with an accuracy constraint. Inf Fusion 41:151–160

Xie W, Xu Z, Ren Z, Wang H (2018) Probabilistic linguistic analytic hierarchy process and its application on the performance assessment of Xiongan new area. Int J Inf Technol Decis Mak 17(06):1693–1724

Xu Z, He Y, Wang X (2019) An overview of probabilistic-based expressions for qualitative decision-making: techniques, comparisons and developments. Int J Mach Learn Cybern 10(6):1513–1528

Xu Z (2004) EOWA and EOWG operators for aggregating linguistic labels based on linguistic preference relations. Int J Uncertain Fuzziness Knowl Based Syst 12(06):791–810

Yager RR (1981) Concepts, theory, and techniques: a new methodology for ordinal multiobjective decisions based on fuzzy sets. Decis Sci 12(4):589–600

Yi Z-H, Qin F, Li W-C (2008) Generalizations to the constructions of t-norms: rotation(-annihilation) construction. Fuzzy Sets Syst 159(13):1619–1630

Yu W, Zhang H, Li B (2019) Operators and comparisons of probabilistic linguistic term sets. Int J Intell Syst 34(7):1476–1504

Zadeh LA (1975) The concept of a linguistic variable and its application to approximate reasoning-I. Inf Sci 8(3):199–249

Zhai Y, Xu Z, Liao H (2016) Probabilistic linguistic vector-term set and its application in group decision making with multi-granular linguistic information. Appl Soft Comput 49:801–816

Zhang X (2018) A novel probabilistic linguistic approach for large-scale group decision making with incomplete weight information. Int J Fuzzy Syst 20(7):2245–2256

Zhang X, Liao H, Xu B, Xiong M (2020) A probabilistic linguistic-based deviation method for multi-expert qualitative decision making with aspirations. Appl Soft Comput 93(3):106362

Zhang X, Xing X (2017) Probabilistic linguistic VIKOR method to evaluate green supply chain initiatives. Sustainability 9(7):1231

Acknowledgements

The author is very grateful to Prof. Yaochu Jin (Editor-in-Chief) and the anonymous reviewers for their careful work and insightful comments, which improved the manuscript. Thanks also go to Prof. Huchang Liao (Sichuan University) for his kind help in preparing an early version of the manuscript. The author deeply appreciates the guidance of Prof. Hongquan Li (Hunan Normal University) and Prof. Feng Qin (Jiangxi Normal University).

Ethics declarations

Conflict of interest

The author declares no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work is supported by the PhD Research Startup Foundation of Nanchang Normal University under Grant NSBSJJ2020015, and partially supported by the Science and Technology Project of the Jiangxi Provincial Educational Department of China under Grant GJJ212609, the Humanities and Social Sciences Projects for Universities in Jiangxi Province of China under Grant GL18212, and the Key R&D Project of the Jiangxi Provincial Department of Science and Technology under Grant 20192BBEL50040.

Appendix: Introduction on triangular (co)norms

Triangular norms and conorms (t-(co)norms) [24, 57] are binary operations on the unit interval, i.e., maps \([0,1]^2\rightarrow [0,1]\), that are commutative, monotonic and associative, with neutral element 1 for t-norms and 0 for t-conorms; they are widely used in fuzzy set theory and information aggregation. A t-norm T and a t-conorm C are dual in the sense that \(C(x,y)=1-T(1-x,1-y)\). In particular, continuous Archimedean t-norms can be additively generated as \(T(x,y)=t^{-1}(\min (t(x)+t(y),t(0)))\), where the generator \(t:[0,1]\rightarrow [0,\infty ]\) is a continuous strictly decreasing function with \(t(1)=0\). Similarly, continuous Archimedean t-conorms can be additively generated as \(C(x,y)=c^{-1}(\min (c(x)+c(y),c(1)))\), where the generator \(c:[0,1]\rightarrow [0,\infty ]\) is a continuous strictly increasing function with \(c(0)=0\). Here we recall the notation for the Hamacher and Frank t-norms and t-conorms [24] (Table 8).
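To make the additive-generator construction and the duality concrete, the following is a minimal Python sketch, not code from this paper. It assumes two textbook generators chosen purely for illustration: \(t(x)=-\ln x\) (with \(t(0)=\infty\)), which generates the product t-norm \(T_P(x,y)=xy\), and \(t(x)=1-x\) (with \(t(0)=1\)), which generates the Łukasiewicz t-norm \(\max (0,x+y-1)\). The helper names (`t_norm_from_generator`, `t_conorm_from_generator`, `dual_conorm`, `t_prod`, `t_luk`) are hypothetical. The dual t-conorm is built twice, once from \(C(x,y)=1-T(1-x,1-y)\) and once from the increasing generator \(c(x)=t(1-x)\), and both should agree.

```python
import math
from typing import Callable

BinOp = Callable[[float, float], float]
UnOp = Callable[[float], float]


def t_norm_from_generator(t: UnOp, t_inv: UnOp) -> BinOp:
    """T(x, y) = t^{-1}(min(t(x) + t(y), t(0))) for a decreasing generator t with t(1) = 0."""
    return lambda x, y: t_inv(min(t(x) + t(y), t(0.0)))


def t_conorm_from_generator(c: UnOp, c_inv: UnOp) -> BinOp:
    """C(x, y) = c^{-1}(min(c(x) + c(y), c(1))) for an increasing generator c with c(0) = 0."""
    return lambda x, y: c_inv(min(c(x) + c(y), c(1.0)))


def dual_conorm(T: BinOp) -> BinOp:
    """Dual t-conorm C(x, y) = 1 - T(1 - x, 1 - y)."""
    return lambda x, y: 1.0 - T(1.0 - x, 1.0 - y)


# Illustrative generator for the product t-norm: t(x) = -ln x, with t(0) = +inf.
def t_prod(x: float) -> float:
    return math.inf if x == 0.0 else -math.log(x)


def t_prod_inv(u: float) -> float:
    return math.exp(-u)  # math.exp(-math.inf) == 0.0


# Illustrative generator for the Lukasiewicz t-norm: t(x) = 1 - x, with t(0) = 1.
def t_luk(x: float) -> float:
    return 1.0 - x


def t_luk_inv(u: float) -> float:
    return 1.0 - u


T_P = t_norm_from_generator(t_prod, t_prod_inv)  # xy
T_L = t_norm_from_generator(t_luk, t_luk_inv)    # max(0, x + y - 1)

# Dual of T_P (x + y - xy), via duality and via the generator c(x) = t_prod(1 - x).
C_P = dual_conorm(T_P)
C_P_gen = t_conorm_from_generator(lambda x: t_prod(1.0 - x),
                                  lambda u: 1.0 - t_prod_inv(u))

if __name__ == "__main__":
    print(T_P(0.5, 0.4), T_L(0.8, 0.7), C_P(0.5, 0.4), C_P_gen(0.5, 0.4))
```

The cap at \(t(0)\) only matters for bounded generators such as \(1-x\): there it clips the sum and produces the zero region of the Łukasiewicz t-norm, whereas for \(-\ln x\) the cap is infinite and never binds.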

In particular, for the Hamacher t-(co)norm, if \(\delta =2\), then \(T_\delta ^H\) (\(C_\delta ^H\)) reduces to the Einstein t-(co)norm \(T_E\) (\(C_E\)); if \(\delta =1\), it reduces to the product t-(co)norm \(T_P\) (\(C_P\)), which is also obtained from the Frank t-(co)norm in the limit \(\theta \rightarrow 1\).
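The special cases of the Hamacher and Frank families can be checked numerically. The sketch below assumes the commonly cited parametrizations \(T_\delta ^H(x,y)=\frac{xy}{\delta +(1-\delta )(x+y-xy)}\) for \(\delta \ge 0\) and \(T_\theta ^F(x,y)=\log _\theta \big (1+\frac{(\theta ^x-1)(\theta ^y-1)}{\theta -1}\big )\) for \(\theta >0,\ \theta \ne 1\). These are assumptions and should be checked against the notation of Table 8. Under these forms, \(\delta =1\) gives \(xy\), \(\delta =2\) gives the Einstein t-norm \(\frac{xy}{1+(1-x)(1-y)}\), and the Frank t-norm approaches \(xy\) as \(\theta \rightarrow 1\). The function names are hypothetical.

```python
import math


def hamacher_t(x: float, y: float, delta: float) -> float:
    """Hamacher t-norm xy / (delta + (1 - delta)(x + y - xy)), delta >= 0 (assumed form)."""
    if delta == 0 and x == 0 and y == 0:
        return 0.0  # delta = 0 (Hamacher product) is 0/0 here; 0 by convention
    return x * y / (delta + (1 - delta) * (x + y - x * y))


def hamacher_c(x: float, y: float, delta: float) -> float:
    """Dual Hamacher t-conorm via C(x, y) = 1 - T(1 - x, 1 - y)."""
    return 1.0 - hamacher_t(1.0 - x, 1.0 - y, delta)


def einstein_t(x: float, y: float) -> float:
    return x * y / (1 + (1 - x) * (1 - y))


def einstein_c(x: float, y: float) -> float:
    return (x + y) / (1 + x * y)


def frank_t(x: float, y: float, theta: float) -> float:
    """Frank t-norm log_theta(1 + (theta^x - 1)(theta^y - 1)/(theta - 1)), theta > 0, theta != 1."""
    return math.log(1 + (theta**x - 1) * (theta**y - 1) / (theta - 1), theta)


if __name__ == "__main__":
    x, y = 0.6, 0.7
    print(hamacher_t(x, y, 1.0), x * y)             # delta = 1 vs product
    print(hamacher_t(x, y, 2.0), einstein_t(x, y))  # delta = 2 vs Einstein
    print(frank_t(x, y, 1 + 1e-6), x * y)           # theta close to 1 vs product
```

The Frank case \(\theta =1\) is a removable singularity of the formula, so it can only be approached numerically, here with \(\theta =1+10^{-6}\).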

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yi, Z. Decision-making based on probabilistic linguistic term sets without loss of information. Complex Intell. Syst. 8, 2435–2449 (2022). https://doi.org/10.1007/s40747-022-00656-2
