Abstract

The motion intensity of the patient is significant for the trajectory control of an exoskeleton robot during rehabilitation, as it may strongly influence the training effect and human–robot interaction. To design rehabilitation training tasks according to the patient’s condition, a novel control method for a rehabilitation exoskeleton robot is designed based on a motion intensity perception model. The motion signal of the robot and the heart rate signal of the patient are collected and fused into multi-modal information as the input layer vector of a deep learning framework, which is used for the human–robot interaction model of the control system. A previously designed 6-degree-of-freedom (DOF) upper limb rehabilitation exoskeleton robot is used to implement the test. The parameters of the model are iteratively optimized by grouping the experimental data, and the identification effect of the model is analyzed and compared. The average recognition accuracy of the proposed model reaches 99.0% on the training data set and 95.7% on the test data set. The experimental results show that the proposed motion intensity perception model based on a deep neural network (DNN) and the trajectory control method can improve the performance of human–robot interaction, and that it is possible to further improve the effect of rehabilitation training.

Introduction

Compared with traditional rehabilitation training, rehabilitation exoskeleton robots can provide more scientific and reasonable rehabilitation training for patients while reducing the workload of therapists [1, 2], and they have become one of the most popular research topics [3,4,5]. Many research groups around the world have worked in this area [6,7,8], and exoskeleton robots for rehabilitating different body parts have been presented [9,10,11]. To improve the rehabilitation training and safety of exoskeleton robots, researchers have been exploring new design and control methods for human–robot interaction systems [12,13,14].

The German Research Center for Artificial Intelligence (DFKI) developed a double-arm exoskeleton rehabilitation robot named RECUPERA. Through its modular design, patients with hemiplegia can conduct rehabilitation training in both standing and sitting postures, in two rehabilitation modes: teaching and mirror image [15, 16]. Mehran et al. [17, 18] designed a 7-DOF upper limb rehabilitation exoskeleton robot and studied an adaptive neural network fast sliding mode control method. Aiguo et al. [19, 20] designed the WAM upper limb rehabilitation exoskeleton robot. Using a purpose-designed sensor to detect the motion state of the patient’s limb, together with a corresponding posture warning controller, the robot can immediately release the restraint on the patient’s limb if abnormal conditions occur during movement. Guoliang et al. [21, 22] developed a flexible 6-DOF exoskeleton robot whose shoulder joints are based on a 3RPS parallel mechanism and whose joints are all driven by pneumatic cylinders to achieve flexible motion control of each joint. To improve the interaction and control performance of exoskeleton robots, researchers have proposed control methods based on electroencephalogram (EEG) [23, 24], electromyogram (EMG) [25, 26] and other motion intention recognition methods, but these do not consider the influence of the wearer’s motor function on control. In this study, we propose a bionic control method for the upper limb exoskeleton robot [27] and a motion intensity classification method based on multi-modal information, which includes the motion signal of the robot and the heart rate signal of the patient [28]. The proposed method can reproduce daily motion trajectories and provides a new way to improve human–robot interaction performance.

Existing control methods for upper limb rehabilitation exoskeleton robots have improved human–robot interaction performance [29, 30], but the rehabilitation training process lacks perception of the patient’s motor function, which limits the effect and safety of the training. In this study, a novel control method for a rehabilitation exoskeleton robot is designed based on a motion intensity perception model. The main contributions of the study are as follows: (1) To reflect the patient’s motion intensity more comprehensively and accurately, the kinematic acceleration signal and the heart rate signal of the patient are collected simultaneously to constitute the multi-modal vector. (2) Taking the multi-modal vector as input, the motion intensity perception model is built on a DNN model, which perceives motion intensity and classifies it into three categories: strong, moderate and weak. (3) According to the perceived motion intensity, the control system is designed to optimize the motion trajectory of the exoskeleton robot in real time, and an online test is conducted to verify the effectiveness of the proposed method.

The proposed method makes it feasible to control the motion state of the exoskeleton robot according to the patient’s condition and to improve human–robot interaction performance and safety.

Design of multi-modal information fusion vector

In medical theory, motion intensity refers to the degree of exertion and the tension of the human body when executing actions, and it is mainly determined by the strength and fatigue of the subject. Motion intensity directly affects how strongly the current movement stimulates the human body, and moderate motion intensity can effectively promote the improvement and recovery of motor function. However, if the motion intensity is too high and exceeds the limit that the human body can withstand for a long time, it will cause motor function to decline [31]. During rehabilitation training, keeping the motion intensity moderate can improve the stimulation of exercise on the human body, prevent secondary injury and improve the safety of the training.

Human motion intensity is mainly determined by the real-time motion posture, the patient’s degree of recovery and the degree of exercise fatigue, and it is measured mainly from the patient’s physiological state and real-time motion state. In the rehabilitation medical field, most human–robot interaction processes rely on either the patient’s physiological signals or motion signals [32, 33]. Physiological signals used alone show large individual differences, and motion signals lag in intention recognition and trajectory control. In this study, the kinematic acceleration signal and the physiological heart rate signal are used simultaneously to form a multi-modal vector, corresponding to the patient’s motion state and physiological state, respectively. Combining the advantages of the two signals, the multi-modal vector is used as the input layer of the deep learning model.

Figure 1 shows the process of obtaining motion intensity. Considering the patient’s exertion and fatigue during exercise, motion intensity is divided into three categories: strong, moderate and weak. The finger clip photoelectric pulse sensor Heat Rate Clamp is used to collect heart rate signals, whose information is mainly concentrated in the time domain and frequency domain. To extract the heart rate information accurately and efficiently, four eigenvalues of the heart rate signal are extracted from the time domain and frequency domain. First, the time domain standard deviation (SD) is obtained as follows:

where \(x_i\) is the value of the heart rate signal at the i-th point of the time series, N is the total number of sampling points in the time series, and μ is the average value of the signal over the period.

Furthermore, the time domain approximate entropy (ApEn) is extracted to express the complexity of the time series. The one-dimensional discrete heart rate signal a(1), a(2),…, a(N), obtained by equal-interval sampling, is reconstructed into m-dimensional vectors A(1), A(2),…, A(N − m + 1), where m = 3 is the reconstructed vector dimension, and the reconstructed vectors satisfying the following condition are counted:

where d[A(i), A(j)] is the vector distance, which is defined as the maximum absolute difference of each dimension in the two reconstruction vectors, r = 0.2 × SD represents the measure of similarity, \(j \in \left[ {1,N - m + 1} \right]\).

The disordered state variable in m dimension is

ApEn is the difference between the disordered state variables with higher and lower reconstruction dimensions:

Considering the periodicity and real-time performance of the heart rate signal, the fast Fourier transform is used to obtain the frequency domain signal, and the root mean square frequency (RMSF) and frequency standard deviation (RVF) are extracted as frequency domain eigenvalues:

where f is sampling frequency of heart rate signal, and S is the corresponding amplitude.
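The four heart rate eigenvalues described above can be sketched in a few lines of Python. This is only an illustrative sketch under several assumptions that the text does not fix: SD divides by N, the ApEn counting condition is taken as d[A(i), A(j)] ≤ r, ApEn is taken as the lower-dimension disorder variable minus the higher-dimension one, RMSF and RVF are computed as amplitude-weighted statistics over the one-sided spectrum with f read as the frequency of each spectral bin, and a naive discrete Fourier transform stands in for the FFT to avoid external dependencies. All function names are hypothetical.

```python
import cmath
import math


def std_dev(x):
    """Time-domain standard deviation (assumed population form, dividing by N)."""
    mu = sum(x) / len(x)
    return math.sqrt(sum((v - mu) ** 2 for v in x) / len(x))


def _phi(a, m, r):
    """Average log-fraction of m-dimensional vectors lying within r of each vector."""
    n_vec = len(a) - m + 1
    vecs = [a[i:i + m] for i in range(n_vec)]
    total = 0.0
    for vi in vecs:
        # Distance = largest absolute difference over the m dimensions.
        close = sum(1 for vj in vecs
                    if max(abs(p - q) for p, q in zip(vi, vj)) <= r)
        total += math.log(close / n_vec)
    return total / n_vec


def approximate_entropy(a, m=3, r_factor=0.2):
    """ApEn with m = 3 and r = 0.2 * SD, the values stated in the text."""
    r = r_factor * std_dev(a)
    return _phi(a, m, r) - _phi(a, m + 1, r)


def amplitude_spectrum(x, fs):
    """One-sided amplitude spectrum of the mean-removed signal (naive DFT)."""
    n = len(x)
    mu = sum(x) / n
    freqs, amps = [], []
    for k in range(1, n // 2 + 1):
        coeff = sum((v - mu) * cmath.exp(-2j * math.pi * k * i / n)
                    for i, v in enumerate(x))
        freqs.append(k * fs / n)
        amps.append(abs(coeff))
    return freqs, amps


def rmsf_and_rvf(x, fs):
    """Amplitude-weighted RMS frequency and frequency standard deviation."""
    freqs, amps = amplitude_spectrum(x, fs)
    total = sum(amps)
    if total == 0.0:  # flat signal: no spectral content to weight
        return 0.0, 0.0
    rmsf = math.sqrt(sum(f * f * s for f, s in zip(freqs, amps)) / total)
    centroid = sum(f * s for f, s in zip(freqs, amps)) / total
    rvf = math.sqrt(sum((f - centroid) ** 2 * s
                        for f, s in zip(freqs, amps)) / total)
    return rmsf, rvf


def heart_rate_features(x, fs):
    """Return the four heart rate eigenvalues [SD, ApEn, RMSF, RVF]."""
    rmsf, rvf = rmsf_and_rvf(x, fs)
    return [std_dev(x), approximate_entropy(x), rmsf, rvf]
```

For a pure sinusoid whose frequency falls exactly on a spectral bin, this sketch returns an RMSF close to that frequency and an RVF close to zero, which is a convenient sanity check before applying it to recorded heart rate data.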

The kinematic eigenvalues come from the extension/flexion DOFs of the elbow joint and shoulder joint, which are commonly used in daily life. They are extracted from both the integrated encoder of the disc motor and the encoder mounted on the back of the stepper motor. A change in human motion intensity leads to a relative angle difference between the body and the exoskeleton at the joint. Therefore, the relative velocity difference is extracted to predict the motion intensity. To avoid misclassifying the motion intensity because of a sudden acceleration or deceleration of the joint at a particular time, all angle signal data in the 3 s preceding a given moment are collected, the difference between the collected angle signal and the angle signal of the expected trajectory is calculated, and its average value is taken as the relative velocity difference. After data standardization, the multi-modal fusion vector is imported into the motion intensity perception model as the input layer, and finally the real-time motion intensity is perceived and classified.
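The windowed averaging described above might look as follows. This is a minimal sketch, assuming the measured and expected angle sequences are sampled at the same rate and aligned sample by sample; the function name and the sampling-rate parameter `fs` are hypothetical.

```python
def mean_angle_deviation(measured, expected, fs, window_s=3.0):
    """Average (measured - expected) joint angle over the last window_s seconds.

    measured, expected: equally long, time-aligned angle sequences (assumption).
    fs: sampling rate in Hz (hypothetical parameter, not given in the text).
    """
    n = min(len(measured), len(expected), int(round(window_s * fs)))
    if n == 0:
        raise ValueError("need at least one sample in the window")
    recent = zip(measured[-n:], expected[-n:])
    return sum(m - e for m, e in recent) / n
```

Averaging over the whole window is what suppresses a short acceleration or deceleration spike: a single outlying sample changes the result by only 1/n of its size.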

Because the data come from different sources, the dimensions of the multi-modal vector differ in numerical scale. The gradient descent method [7] is needed for the subsequent iterative optimization of the model. Data with large differences between dimensions lead to unstable gradients when imported into the classification model, which greatly affects the convergence rate of the model. Therefore, the vector can only be used as the input layer of the model after the data are preprocessed. The mean and standard deviation of the eigenvalues after Z-Score standardization are shown in Fig. 2. The abscissa corresponds to the six eigenvalues extracted from the multi-modal information fusion vectors, the ordinate represents the dimensionless value of the vectors after data standardization, and the three colors represent different motion intensity labels. It can be seen from the figure that the average values of the first four eigenvalues, extracted from the heart rate signal, differ significantly between labels and have small variances. Therefore, the heart rate eigenvalues of the multi-modal vector are more discriminative, while the last two eigenvalues, extracted from the motion signal, are more scattered and less discriminative because of hysteresis and the lack of periodicity of rehabilitation actions. This indicates that the heart rate signal has a higher correlation with the motion intensity defined in this study.
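Z-Score standardization of the fusion vectors can be sketched as below. The sketch assumes that the per-dimension mean and standard deviation are estimated once from the training vectors and then reused for new vectors; the text does not state this, and the function names are hypothetical.

```python
import math


def fit_zscore(rows):
    """Per-dimension mean and population standard deviation of the training vectors."""
    n, dims = len(rows), len(rows[0])
    means = [sum(r[d] for r in rows) / n for d in range(dims)]
    stds = [math.sqrt(sum((r[d] - means[d]) ** 2 for r in rows) / n)
            for d in range(dims)]
    return means, stds


def apply_zscore(row, means, stds):
    """Map one vector to dimensionless values; constant dimensions map to 0."""
    return [(v - m) / s if s > 0 else 0.0
            for v, m, s in zip(row, means, stds)]
```

After this step every dimension has zero mean and unit variance on the training data, so a heart rate eigenvalue on the order of tens and an angle deviation on the order of fractions contribute gradients of comparable size.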

Motion intensity perception model based on deep learning

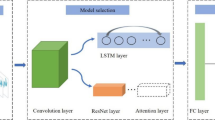

The primary function of deep learning is to perform classification based on deeper model structure. Deep learning includes a variety of algorithms, where the input of convolutional neural network (CNN) is tensor, and deep belief network (DBN) is used to evaluate classification probability. In this study, the input of the motion intensity perception model is the multi-modal fusion vector, and the expected output is the specific classification result. Therefore, the DNN method is used to implement the model in this study.

A DNN is composed of perceptron-type neurons, which are organized into an input layer, hidden layers and an output layer. Based on modeling experience, the number of neurons in the three layers is set to 10, 8 and 4, respectively. To alleviate the vanishing and exploding gradient problems, the piecewise-linear activation function ReLU is selected for the first two layers of the network and Softmax for the last layer [34].

Forward propagation of perception model

The forward propagation algorithm is mainly used to solve the output of the next layer through the output of the previous layer. Figure 3 shows the basic framework of DNN model constructed in this study.

Generalizing the forward relationship, for the j neurons in layer i−1, the output \(a_k^{i}\) of the k-th neuron in layer i is

where the superscript denotes the layer index of the neural network and the subscript denotes the neuron index within the layer; z is the linear operation result of a single neuron; b is the bias added in the linear operation; ω is the linear operation coefficient (weight) of the neuron, and the two numbers in its subscript index the coefficient connecting the k-th neuron in layer i to the l-th neuron in layer i−1; σ is the neuronal activation function [35]; if i = 2, the input a from the previous layer is the input-layer vector x.

For each layer of the neural network, matrix operation is simpler than direct algebraic operation. Therefore, assuming that there are j neurons in layer i−1 and k neurons in layer i, the outputs of layer i−1 form a vector \(a^{i-1}\) with dimension j × 1. Similarly, the outputs of layer i form a vector \(a^{i}\) with dimension k × 1. The linear coefficients of layer i compose the operation matrix \(W^{i}\) with dimension k × j, so that the product \(W^{i} a^{i-1}\) has dimension k × 1. The offset coefficients b of layer i compose the vector \(b^{i}\) with dimension k × 1. The pre-activation results of layer i compose the vector \(z^{i}\) with dimension k × 1. Then, the forward propagation in matrix form is \(a^{i} = \sigma(z^{i}) = \sigma(W^{i} a^{i-1} + b^{i})\).
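A dependency-free sketch of this layer-by-layer forward pass is given below. It assumes a six-dimensional input (the fusion vector) followed by layers of 10, 8 and 4 neurons with ReLU, ReLU and Softmax activations, as stated in the text; the random initialization range, the seed and all function names are illustrative assumptions rather than the authors' settings.

```python
import math
import random


def relu(z):
    return [max(0.0, v) for v in z]


def softmax(z):
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def affine(W, a, b):
    """z = W a + b, with W stored as k rows of length j."""
    return [sum(w * x for w, x in zip(row, a)) + bk for row, bk in zip(W, b)]


def init_layer(n_in, n_out, rng):
    """Random weights (assumed range) and zero biases for one layer."""
    W = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return W, [0.0] * n_out


def forward(x, layers):
    """Propagate x through all layers; ReLU on hidden layers, Softmax on the last."""
    a = x
    for idx, (W, b) in enumerate(layers):
        z = affine(W, a, b)
        a = softmax(z) if idx == len(layers) - 1 else relu(z)
    return a


rng = random.Random(0)
sizes = [6, 10, 8, 4]  # input width 6 is an assumption based on the fusion vector
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
probs = forward([0.2, -1.0, 0.5, 0.0, 1.3, -0.7], layers)
```

Storing each weight matrix as k rows of length j keeps the code aligned with the dimension bookkeeping in the paragraph above: each row produces one entry of the k × 1 vector z.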

Backward propagation of perception model

The DNN is deployed by establishing the forward propagation relationship. The unknown parameters of the neural network are set to random initial values during deployment, so the untrained network does not yet produce consistent classifications. Therefore, it is necessary to calculate the deviation between the output layer data and the predetermined label with a loss function. The deviation is used to optimize the parameters of the neural network, which is the backward propagation process. In a DNN, gradient descent is often used to find the extreme value of the loss function [36].

The primary function of the motion intensity perception model is classification. To make the classification labels more distinct, one-hot coding is adopted to represent the different motion intensity labels, and the cross-entropy is therefore used as the loss function in the optimization process [37].

The specific calculation formula of the cross-entropy loss function is [37]

where n is the number of classification categories; \(y_c\) is the classification indicator variable, which is 1 if the classification results are consistent and 0 otherwise; c is the current category; \(p_c\) is the prediction probability of the current model for category c.
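With the symbols defined above, the standard cross-entropy for a one-hot label is the negative sum over categories of \(y_c \log(p_c)\), and its partial derivative with respect to \(p_c\) is \(-y_c / p_c\). The sketch below assumes this standard form; the label order and the small clipping constant `eps` are illustrative assumptions.

```python
import math

LABELS = ["weak", "moderate", "strong"]  # label order is an assumption


def one_hot(label):
    """Encode a motion intensity label as a one-hot indicator vector."""
    return [1.0 if name == label else 0.0 for name in LABELS]


def cross_entropy(y, p, eps=1e-12):
    """Loss = -sum_c y_c * log(p_c); eps guards against log(0)."""
    return -sum(yc * math.log(max(pc, eps)) for yc, pc in zip(y, p))


def cross_entropy_grad_p(y, p, eps=1e-12):
    """Gradient of the loss with respect to each predicted probability."""
    return [-yc / max(pc, eps) for yc, pc in zip(y, p)]
```

Because only one entry of the one-hot vector is 1, the loss reduces to the negative log-probability assigned to the true category, and the gradient is non-zero only in that position.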

Because the classification indicator variable \(y_c\) can only be 1 or 0, and the values of p under the classification labels are independent of each other, the gradient vector can be further simplified, and the gradient of the loss function with respect to p is

In practice, the quantities to be optimized are the neuron parameters ω and b of each layer of the neural network. The loss function is a function of the classification indicator variable y and the prediction probability p, and it is not directly expressed in terms of the neuron parameters of each layer. Therefore, the partial derivatives need to be calculated step by step with the chain rule for composite functions. It can be obtained that

The first term of Eq. (11) has been solved above. The second term is the derivative of the activation function with respect to the result of the linear operation. Because the ReLU activation function is piecewise linear, this derivative is simple to evaluate: it is 1 where the linear operation result is positive and 0 otherwise. Therefore, it can be obtained that

The third term in Eq. (11) is the derivation of the linear operation result to the weight parameter of the neuron, which can be easily obtained as the corresponding value of the input layer when the front layer of the current neural network is the input layer.
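The chain rule above can be checked numerically on a small example. The sketch below assumes the common combination of a Softmax output layer with cross-entropy loss, for which the product of the first two chain-rule terms simplifies to p − y, and it propagates the error through a ReLU layer using the 0/1 derivative. Function names and the example numbers in the usage note are hypothetical.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def affine(W, a, b):
    return [sum(w * x for w, x in zip(row, a)) + bk for row, bk in zip(W, b)]


def loss(W, b, a_prev, y):
    """Cross-entropy of a Softmax output layer fed by a_prev."""
    p = softmax(affine(W, a_prev, b))
    return -sum(yc * math.log(pc) for yc, pc in zip(y, p))


def output_layer_gradients(W, b, a_prev, y):
    """Analytic gradients of the loss for the output-layer weights and biases."""
    p = softmax(affine(W, a_prev, b))
    delta = [pc - yc for pc, yc in zip(p, y)]      # dLoss/dz for Softmax + cross-entropy
    dW = [[d * a for a in a_prev] for d in delta]  # third term: dz/dW is the layer input
    db = list(delta)
    return dW, db


def hidden_delta(W_next, delta_next, z_hidden):
    """Back-propagate through a ReLU layer: (W_next^T delta_next) * relu'(z)."""
    back = [sum(W_next[k][l] * delta_next[k] for k in range(len(delta_next)))
            for l in range(len(z_hidden))]
    return [g if z > 0 else 0.0 for g, z in zip(back, z_hidden)]
```

A quick way to validate such a derivation is to perturb one weight by a small step in both directions and compare the resulting central-difference slope of `loss` with the corresponding entry of `dW`; the two should agree to several decimal places.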

Gradient descent optimization algorithm

Many optimization algorithms have been proposed to update the internal parameters of the model, to overcome slow gradient descent and to keep the model stable. The Adam algorithm combines the advantages of the Momentum and RMSProp algorithms, retains the hyperparameters of both, and adds a bias correction to the intermediate quantities; it is commonly used in deep learning models. Therefore, the Adam optimization algorithm is adopted in this study, and its implementation is as follows.

The exponential weighted average values in the Momentum algorithm are

where dW and db are error gradients of neuron parameters W and b obtained by backward propagation, respectively; β1 is the hyper parameter of the algorithm with the default value of 0.9.

The RMSprop algorithm is used to update the iterative weight S,

where the initial value of the iterative weight S is 0, which will be updated continuously with the progress of gradient descent. β2 is the hyper parameter of RMSprop algorithm with the default value of 0.999.

The Adam algorithm corrects the gradient values for their initialization bias. Adding the superscript “corrected” to the gradient correction terms, it can be obtained that

where t is the current iteration time.

Similarly, the gradient correction terms of neuron parameter b are, respectively,

The neuron parameters W and b, updated according to the learning rate α, are
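The update sequence described in this subsection can be sketched for a single scalar parameter as follows. The sketch uses the widely published form of Adam with β1 = 0.9 and β2 = 0.999 as in the text; the small constant `eps` in the denominator, the default learning rate and the toy quadratic objective are assumptions added for illustration.

```python
import math


def adam_step(w, grad, state, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update of a scalar parameter w given its gradient."""
    state["t"] += 1
    t = state["t"]
    # Momentum: exponentially weighted average of the gradient.
    state["v"] = beta1 * state["v"] + (1 - beta1) * grad
    # RMSprop: exponentially weighted average of the squared gradient.
    state["s"] = beta2 * state["s"] + (1 - beta2) * grad * grad
    # Bias correction compensates for the zero initial values.
    v_hat = state["v"] / (1 - beta1 ** t)
    s_hat = state["s"] / (1 - beta2 ** t)
    return w - alpha * v_hat / (math.sqrt(s_hat) + eps)


def minimize_quadratic(steps, alpha):
    """Toy objective (w - 3)^2, used only to exercise the update rule."""
    w, state = 0.0, {"t": 0, "v": 0.0, "s": 0.0}
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        w = adam_step(w, grad, state, alpha=alpha)
    return w
```

On the first iteration the bias-corrected terms reduce to the raw gradient and its square, so the step length is approximately α regardless of the gradient magnitude; this is one reason Adam is relatively insensitive to the scale of the loss.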

Analysis of motion intensity perception model

The motion intensity perception model is constructed based on DNN, and Fig. 4 shows the specific structure of DNN based on motion intensity perception.

The motion intensity is divided into three categories: strong, moderate and weak. The signal acquisition method differs for each intensity. Weak motion intensity is defined as the subject completing the entire motion trajectory without actively exerting force during the whole rehabilitation training process; the next round of data collection is conducted 3 min after each group of data is collected. For moderate motion intensity, the maximum output torque of the motor is limited so that the exoskeleton can only move under no load. During rehabilitation training, the subject feels the assistance from the exoskeleton and can complete the whole rehabilitation trajectory with less effort. After several continuous experiments, the subject rests and data collection is paused. For strong motion intensity, the output torque of the motor on the rehabilitation exoskeleton is further restricted, so that the exoskeleton can hardly drive itself through the rehabilitation training. The subjects need to actively complete the whole rehabilitation trajectory in continuous experiments, and mainly the data collected while the subjects are fatigued are used.

The action of drinking water requires the collaborative participation of the four main joints of the human upper limb, which is a typical action in rehabilitation training. Therefore, taking drinking water as the training action, as shown in Fig. 5, a total of four healthy volunteers (22–24 years old) are selected for the test, including three males and one female. One of the volunteers (male, 23 years old) is selected as the subject for online test, and the other three volunteers are selected for offline test.

To simulate the rehabilitation scene of patients with upper limb muscular weakness, two sandbags weighing 1.0 kg are tied to the middle of the upper arm and forearm of the subjects, and the motor output torque is limited. During model training and testing, once the subject is wearing the exoskeleton robot and heart rate sensor, the operator uses the host computer to make the exoskeleton output the motor torque corresponding to each motion intensity and move along the predetermined rehabilitation trajectory. At the same time, the operator instructs the subject on how much force to exert under each motion intensity. 600 groups of rehabilitation tests are performed under each motion intensity.

To give the model enough data to converge, 300 sets of multi-modal vector data (training data) with classification labels are collected under each motion intensity for model training. In addition, 300 sets of multi-modal vector data (test data) under each motion intensity are used for model testing, so a total of 1800 sets of multi-modal vector data are used for model construction and analysis. The average recognition accuracy of the perception model reaches 99.0% on the training data set and 95.7% on the test data set, and the accuracy fluctuates within a stable range.

To compare the recognition accuracy of different algorithm models and different types of fusion vectors side by side, the six-dimensional vectors are divided into three groups. The first two dimensions, the time domain standard deviation and approximate entropy of the heart rate signal, are the time domain eigenvalues (TDE); the middle two dimensions, the root mean square frequency and frequency domain standard deviation, are the frequency domain eigenvalues (FDE); and the last two dimensions, the joint velocity differences from the motion signal, are the angular velocity deviation (AVD). The identification effect of classification perception is measured, and the radar chart of the identification accuracy distribution on the test data is obtained, as shown in Fig. 6.

The identification accuracy data of each model and multi-modal vector combination are shown in Table 1. Comparing the different mathematical models, the classification accuracy of the DNN model is much higher than that of k-nearest neighbor (KNN) and support vector machine (SVM), mainly because the algorithm complexity of the DNN is much higher than that of the other two; the training and classification test time of the DNN model is also longer. Comparing the different vector combinations, the identification accuracy of the full multi-modal fusion vector is significantly higher than that of any combination of two feature groups. The identification accuracy of the combination of all heart rate eigenvalues is also higher than that of the other combinations, which again indicates that the heart rate signal has a higher correlation with the motion intensity defined in this study. In addition, adding kinematic signals does not reduce the overall identification accuracy, and the eigenvalues of the kinematic signals mainly serve to compensate for individual differences in the physiological signals. Therefore, the DNN model combined with the multi-modal fusion vector of all dimensions obtains the highest identification accuracy.

After comparing the different classification models, we analyze and compare the different classification labels within a single classification model. The confusion matrix is drawn by feeding the multi-modal fusion vectors of all dimensions to the DNN model, which has the highest classification accuracy among the models above. The confusion matrix, also known as the error matrix, is mainly used to evaluate the accuracy of a classification model. This square matrix shows intuitively how accurately the model classifies data with each label. The identification accuracy of the perception model for each motion intensity label is shown in Fig. 7.

In Fig. 7, the abscissa represents the motion intensity label predicted by the perception model, and the ordinate represents the actual label of the multi-modal vector. The percentage values in the blocks represent the sensitivity of the model, and the numbers on the right side of the confusion matrix represent the precision and the false positive rate of the model. The confusion matrix shows that the perception model recognizes the weak motion intensity label well, and that the identification error mainly comes from confusion between strong and moderate motion intensity; the error is within an acceptable range. Analysis suggests that the reason for the error is that the distinction between strong and moderate motion intensity was too vague when the sample data were collected.
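The per-label quantities read from a confusion matrix can be computed as in the sketch below. It follows the layout described above, with rows as actual labels and columns as predicted labels; the function names are hypothetical, and any counts passed to it in examples are made up and are not the values of Fig. 7.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples with actual class i predicted as class j."""
    n = len(cm)
    metrics = []
    for c in range(n):
        tp = cm[c][c]
        actual = sum(cm[c])                       # row sum: all samples of class c
        predicted = sum(cm[r][c] for r in range(n))  # column sum: all predictions of c
        metrics.append({
            "sensitivity": tp / actual if actual else 0.0,
            "precision": tp / predicted if predicted else 0.0,
        })
    return metrics


def accuracy(cm):
    """Overall accuracy: correctly classified samples over all samples."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total
```

For instance, with the hypothetical matrix `[[50, 0, 0], [1, 45, 4], [0, 5, 45]]`, the first class has a sensitivity of 1.0 while the other two have 0.9 each, which mirrors the qualitative pattern described above: errors concentrate in the confusion between two neighbouring labels.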

Based on the above analysis of the classification accuracy of the perception model, it can be seen that the perception model is suitable for the motion intensity classification requirements defined in this study.

Control experiment based on motion intensity perception

Overall design of control system

A master–slave control scheme that combines centralized and distributed control in parallel is adopted for the control system. A PC is used as the main controller to directly control the integrated joint motors, combined with an STM32 embedded controller that serves as the stepper motor control system. The control system can be divided into four main modules: main controller module, embedded controller module, stepper motor module and joint motor module. The structure diagram of the control system is shown in Fig. 8.

The main controller module is implemented on the PC. Its main functions include determining the parameters used for rehabilitation training, processing the collected sensor signal data and importing the vector into the motion intensity perception model to update the motion intensity in real time. The main controller module communicates with the joint motor module through a USB-to-CAN interface to perform position control. The main controller also communicates with the embedded controller module through a serial port.

The core task of embedded controller is to provide output enable signal, direction control signal and pulse control signal to the stepper motor driver through STM32. The encoder of the stepper motor is connected with the pin of the embedded controller, which collects the feedback signal of the encoder. The embedded controller and the main controller module can perform real-time synchronization control of multiple joint motors through serial port connection.

The stepper motor module is composed of a stepper motor driver and an encoder. The driver needs multi-channel PWM signals from the embedded controller to change the operating state of the motor. The encoder mounted at the end of the stepper motor detects the current motion position and feeds it back to prevent the motor from stalling. After ADAMS dynamics simulation, the 86 stepper motor and 54 stepper motor are selected to achieve the function.

The joint motor module is a compact combination of disc motor driver, harmonic reducer and encoder. The disc joint motor communicates over the CAN bus protocol, receiving control signals and returning position feedback signals. The CAN bus is connected directly to the PC through a CAN-to-USB adapter, and the main controller sends the position control signal to the motor. After ADAMS dynamics simulation, INNFOS disc joint motors are selected to achieve the function.

Experimental design of rehabilitation exercise based on motion intensity

The motion intensity perception model has achieved good results in offline test, but in the actual scenarios, the online model is needed to perform the real-time interaction. In this study, the rehabilitation training scene is simulated, and the model program interface is added to the control program of upper limb rehabilitation exoskeleton robot. The performance of the perception model is observed through online rehabilitation training.

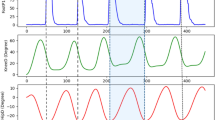

The volunteer (male, 23 years old) is selected as the online test subject. Before the test, the operator helps the subject fit his left arm into the exoskeleton and adjusts the overall length for comfort. The sandbags are tied on during the experiment, and the heart rate sensor is worn on the left hand. The designed rehabilitation experiment mainly targets the flexion/extension DOF of the shoulder joint and the DOF of the elbow joint. First, to develop the demonstration reproduction mode, the water drinking action is selected for the rehabilitation training; the specific action decomposition is shown in Fig. 9. The motor movement angle during rehabilitation training is collected, as shown in Fig. 10, and is used as the training data for motion control. The zero position corresponds to the free-hanging position of the upper limb, and the encoder data have been converted into joint motion angle data. M1, M2 and M3 of the shoulder joint correspond to the DOFs of abduction/adduction, flexion/extension, and internal/external rotation. The collected trajectory is saved in the corresponding file and output to the control system as the expected trajectory.

Next, the rehabilitation training is performed. The initial motion intensity is set to moderate, and the online perception model updates the real-time motion intensity every 5 s. The output torque of the exoskeleton is adjusted according to the perceived motion intensity of the subject. Each rehabilitation training trajectory is one rehabilitation cycle; the online test carries out five consecutive rehabilitation cycles and pauses for 5 s after each cycle is completed.

The Lanczos resampling algorithm is used to up-sample the rehabilitation motion trajectory so that the position increment can be adjusted. If the set of input points is x, the weight of the Lanczos window function for each point is

where \(\sin {\text{c}}(x) = \frac{{\sin ({\uppi }x)}}{{{\uppi }x}}\); a is the hyper parameter of the algorithm, which can be chosen as 2 or 3, corresponding to the adjustment of the reduced interpolation or enlarged interpolation. The demand is the up-sampling, where a = 3.

The required point set is then reconstructed; the reconstruction function is

where S(x) is the resampled value at position x; \(s_i\) is the sampled value at the original position i.
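A compact sketch of Lanczos up-sampling with a = 3 is shown below. It assumes the usual definitions: the window weight is sinc(x)·sinc(x/a) for −a < x < a and 0 elsewhere, and the resampled value at x is the sum of \(s_i\) times the window weight at x − i over the 2a samples nearest to x. Clamping indices at the ends of the trajectory and the integer `factor` parameter are illustrative choices not taken from the text.

```python
import math


def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def lanczos_kernel(x, a=3):
    """Lanczos window weight; zero outside the open interval (-a, a)."""
    return sinc(x) * sinc(x / a) if -a < x < a else 0.0


def lanczos_interpolate(samples, x, a=3):
    """Resampled value S(x) from the 2a original samples nearest to x."""
    i0 = math.floor(x)
    total = 0.0
    for i in range(i0 - a + 1, i0 + a + 1):
        idx = min(max(i, 0), len(samples) - 1)  # clamp at the trajectory ends
        total += samples[idx] * lanczos_kernel(x - i, a)
    return total


def upsample(samples, factor, a=3):
    """Insert factor - 1 interpolated points between consecutive samples."""
    n_out = (len(samples) - 1) * factor + 1
    return [lanczos_interpolate(samples, k / factor, a) for k in range(n_out)]
```

Because the window weight is 1 at zero offset and (numerically) 0 at every other integer offset, the original trajectory points are reproduced at their own positions and only the intermediate points are new.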

The purpose of resampling is to increase the number of trajectory points, so that the system can change the output of the corresponding position control signal according to the motion intensity and thereby change the output velocity of the motor.

In testing, the thread created in the main program for data collection, multi-modal fusion and standardization, and identification with the perception model takes about 1.8 s to run. The control frequency of the motor is 200 Hz, and the thread resource utilization meets expectations. The collection cycle of the heart rate signal is 3 s. Because the patient’s motion intensity is updated every 5 s, the control system responds quickly enough. The experimental device is built as shown in Fig. 11.

The five continuous periodic motion trajectories recorded by the encoder are shown in Fig. 12. In the above experimental scheme, the heart rate signal and the patient’s motion signal are collected, and the intensity data obtained by the online motion intensity perception model are shown in Table 2. The data in the table represent the duration of each motion intensity during the rehabilitation cycle. Figure 12 shows the trajectory of the simulated patient’s rehabilitation training driven by the motor over five rehabilitation cycles. In the first three rehabilitation cycles, the simulated patient still has full physical strength, so rehabilitation training is carried out faster. Weak motion intensity is detected only during some stretching movements, and the overall motion intensity is maintained at a moderate level. In contrast, during the last two rehabilitation cycles, the percentage of weak intensity increases and the output rate of the corresponding trajectory points decreases; the overall motion trajectory is smoother and a rehabilitation cycle takes more time to complete. Therefore, it is feasible to apply the motion intensity perception model proposed in this study to the control of the upper limb rehabilitation exoskeleton robot, and trajectory control and optimization based on the patient’s motion intensity can be performed.

Conclusion

In this study, a motion intensity perception model based on multi-modal information fusion is proposed by fusing the acceleration signal and the heart rate signal, and it is applied to the trajectory planning and control of an upper limb rehabilitation exoskeleton robot. Using the 6-DOF upper limb exoskeleton robot previously developed in the laboratory, a multi-modal information fusion perception system is built to carry out a series of tests. The results show that the collected motion intensity data basically conform to the actual laws of human motion, and that the average recognition accuracy of motion intensity is greatly improved by the DNN model designed in this study. The average recognition accuracy reaches up to 99.0% on the training data set and 95.7% on the test data set. Taking a water-drinking action as an example, rehabilitation training in teaching mode is implemented through software and algorithm design, and the motion velocity is optimized according to the results of motion intensity perception. The algorithm of the system takes about 1.8 s to run. The motor control frequency is 200 Hz, and the thread resource utilization meets expectations. The results show that the proposed motion intensity perception model can be applied to upper limb rehabilitation exoskeleton robots, where it can improve the rehabilitation training effect and human–robot interaction performance. Our future work will focus on adding EMG signals to the multi-modal vector to analyze patients’ motion intention and intensity, as well as on compliant motion control.

Availability of data and material

The datasets used or analyzed during the current study are available from the corresponding author on reasonable request.

Code availability

The code used during the current study is available from the corresponding author on reasonable request.

References

Wang WD, Li HH, Kong DZ, Xiao MH, Zhang P (2020) A novel fatigue detection method for rehabilitation training of upper limb exoskeleton robot using multi-information fusion. Int J Adv Robot Syst 17

Shi D, Zhang WX, Zhang W, Ju LH, Ding XL (2021) Human-centred adaptive control of lower limb rehabilitation robot based on human-robot interaction dynamic model. Mech Mach Theory 162

Tschiersky M, Hekman EEG, Brouwer DM, Herder JL, Suzumori K (2020) A compact McKibben muscle based bending actuator for close-to-body application in assistive wearable robots. IEEE Robot Autom Lett 5:3042–3049

Wang D, Meng Q, Meng Q, Li X, Yu H (2018) Design and development of a portable exoskeleton for hand rehabilitation. IEEE Trans Neural Syst Rehabil Eng 26:2376–2386

Zhou LB, Chen WH, Wang JH, Bai SP, Yu HY, Zhang YP (2018) A novel precision measuring parallel mechanism for the closed-loop control of a biologically inspired lower limb exoskeleton. IEEE-ASME Trans Mechatron 23:2693–2703

Louie DR, Eng JJ (2016) Powered robotic exoskeletons in post-stroke rehabilitation of gait: a scoping review. J Neuroeng Rehabil 13

Alamdari A, Haghighi R, Krovi V (2019) Gravity-balancing of elastic articulated-cable leg-orthosis emulator. Mech Mach Theory 131:351–370

Zhang WX, Zhang W, Ding XL, Sun L (2020) Optimization of the rotational asymmetric parallel mechanism for hip rehabilitation with force transmission factors. J Mechan Robot-Trans ASME 12

Khazoom C, Veronneau C, Bigue JPL, Grenier J, Girard A, Plante JS (2019) Design and control of a multifunctional ankle exoskeleton powered by magnetorheological actuators to assist walking, jumping, and landing. IEEE Robot Autom Lett 4:3083–3090

Ranzani R, Lambercy O, Metzger JC, Califfi A, Regazzi S, Dinacci D, Petrillo C, Rossi P, Conti FM, Gassert R (2020) Neurocognitive robot-assisted rehabilitation of hand function: a randomized control trial on motor recovery in subacute stroke. J Neuroeng Rehabil 17:115

Shi D, Zhang WX, Zhang W, Ding XL (2019) A review on lower limb rehabilitation exoskeleton robots. Chin J Mech Eng 32

Xing H, Torabi A, Ding L, Gao H, Deng Z, Mushahwar VK, Tavakoli M (2021) An admittance-controlled wheeled mobile manipulator for mobility assistance: human–robot interaction estimation and redundancy resolution for enhanced force exertion ability. Mechatronics 74

Huang Y, Song R, Argha A, Celler BG, Savkin AV, Su SW (2021) Human motion intent description based on bumpless switching mechanism for rehabilitation robot. IEEE Trans Neural Syst Rehabil Eng 29:673–682

Wang WD, Li HH, Zhao CZ, Kong DZ, Zhang P (2021) Interval estimation of motion intensity variation using the improved inception-V3 model. IEEE Access 9:66017–66031

Bai SP, Christensen S, Ul Islam MR (2017) An upper-body exoskeleton with a novel shoulder mechanism for assistive applications. In: 2017 IEEE International Conference on Advanced Intelligent Mechatronics (AIM):1041–1046

Shivesh K, Hendrik WH, Mathias T, Marc S, Heiner P, Martin M, Elsa AK, Frank K (2019) Modular design and decentralized control of the recupera exoskeleton for stroke rehabilitation. Appl Sci 9

Rahmani M, Rahman MH (2020) Adaptive neural network fast fractional sliding mode control of a 7-DOF exoskeleton robot. Int J Control Autom Syst 18:124–133

Rahman MH, Rahman MJ, Cristobal OL, Saad M, Kenné JP, Archambault PS (2015) Development of a whole arm wearable robotic exoskeleton for rehabilitation and to assist upper limb movements. Robotica 33:19–39

Shi K, Song A, Li Y, Li H, Chen D, Zhu L (2021) A cable-driven three-DOF wrist rehabilitation exoskeleton with improved performance. Front Neurorobot 15:664062

Bai J, Song AG, Wang T, Li HJ (2019) A novel backstepping adaptive impedance control for an upper limb rehabilitation robot. Comput Electr Eng 80

Tao GL, Shang C, Meng DY, Zhou CC (2017) Posture control of a 3-RPS pneumatic parallel platform with parameter initialization and an adaptive robust method. Front Inf Technol Electr Eng 18:303–316

Tao G (2005) Modeling and controlling of parallel manipulator joint driven by pneumatic muscles. Chin J Mech Eng 18:537–541

Li ZJ, Li JJ, Zhao SN, Yuan YX, Kang Y, Chen CLP (2019) Adaptive neural control of a kinematically redundant exoskeleton robot using brain-machine interfaces. IEEE Trans Neural Netw Learn Syst 30:3558–3571

Soekadar SR, Witkowski M, Gomez C, Opisso E, Medina J, Cortese M, Cempini M, Carrozza MC, Cohen LG, Birbaumer N, Vitiello N (2016) Hybrid EEG/EOG-based brain/neural hand exoskeleton restores fully independent daily living activities after quadriplegia. Sci Robot 1

Song R, Tong KY, Hu X, Zhou W (2013) Myoelectrically controlled wrist robot for stroke rehabilitation. J Neuroeng Rehabil 10:52

D A, R S, W GJ (2017) Movement performance of human-robot cooperation control based on EMG-driven hill-type and proportional models for an ankle power-assist exoskeleton robot. IEEE Trans Neural Syst Rehabil Eng 25:1125–1134

Wang WD, Qin L, Yuan XQ, Ming X, Sun TS, Liu YF (2019) Bionic control of exoskeleton robot based on motion intention for rehabilitation training. Adv Robot 33:590–601

Wang WD, Li HH, Xiao MH, Chu Y, Yuan XQ, Ming X, Zhang B (2020) Design and verification of a human-robot interaction system for upper limb exoskeleton rehabilitation. Med Eng Phys 79:19–25

Gomez-Rodriguez M, Peters J, Hill J, Scholkopf B, Gharabaghi A, Grosse-Wentrup M (2011) Closing the sensorimotor loop: haptic feedback facilitates decoding of motor imagery. J Neural Eng 8

Cui X, Chen WH, Jin X, Agrawal SK (2017) Design of a 7-DOF cable-driven arm exoskeleton (CAREX-7) and a controller for dexterous motion training or assistance. IEEE-ASME Trans Mechatron 22:161–172

Thomas R, Johnsen LK, Geertsen SS, Christiansen L, Ritz C, Roig M, Lundbye-Jensen J (2016) Acute exercise and motor memory consolidation: the role of exercise intensity. PLoS ONE 11:e0159589

Escalona MJ, Brosseau R, Vermette M, Comtois AS, Duclos C, Aubertin-Leheudre M, Gagnon DH (2018) Cardiorespiratory demand and rate of perceived exertion during overground walking with a robotic exoskeleton in long-term manual wheelchair users with chronic spinal cord injury: a cross-sectional study. Ann Phys Rehabil Med 61:215–223

Wang F, Barkana DE, Sarkar N (2010) Impact of visual error augmentation when integrated with assist-as-needed training method in robot-assisted rehabilitation. IEEE Trans Neural Syst Rehabil Eng 18:571–579

Amin J, Sharif M, Yasmin M, Fernandes SL (2018) Big data analysis for brain tumor detection: deep convolutional neural networks. Fut Gen Comput Syst Int J of Esci 87:290–297

Ozkul F, Palaska Y, Masazade E, Erol-Barkana D (2019) Exploring dynamic difficulty adjustment mechanism for rehabilitation tasks using physiological measures and subjective ratings. IET Signal Proc 13:378–386

Udendhran R, Balamurugan M (2021) Towards secure deep learning architecture for smart farming-based applications. Complex Intell Syst 7:659–666

Song ZB, Guo SX, Pang MY, Zhang SY, Xiao N, Gao BF, Shi LW (2014) Implementation of resistance training using an upper-limb exoskeleton rehabilitation device for elbow joint. J Med Biol Eng 34:188–196

Acknowledgements

This work was supported by the Shaanxi Provincial Key R&D Program, under Grant no. 2020KW-058; Natural Science Foundation of Shaanxi Province, under Grant no. 2020JM-131; and the Fundamental Research Funds for the Central Universities, under Grant no. 31020200503001.

Funding

Shaanxi Provincial Key R&D Program (Grant No. 2020KW-058), Natural Science Foundation of Shaanxi Province (Grant No. 2020JM-131), Fundamental Research Funds for the Central Universities (31020200503001).

Author information

Contributions

Not applicable.

Ethics declarations

Conflict of interest

On behalf of all the authors, the corresponding author states that there is no conflict of interest.

Ethics approval

The experimental protocol was established according to the ethical guidelines of the Helsinki Declaration and was approved by the Human Ethics Committee of Northwestern Polytechnical University. The reference number of the approval is 202001001.

Consent to participate

Written informed consent was obtained from individual or guardian participants.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, W., Zhang, J., Wang, X. et al. Motion intensity modeling and trajectory control of upper limb rehabilitation exoskeleton robot based on multi-modal information. Complex Intell. Syst. 8, 2091–2103 (2022). https://doi.org/10.1007/s40747-021-00632-2

DOI: https://doi.org/10.1007/s40747-021-00632-2