Abstract

The Histology Classifier app was developed to recreate laboratory sessions on digital platforms. This mobile app uses convolutional neural networks (CNNs) to help students verify their identification of the basic histological tissues. Further work will include measuring any educational benefits of this resource across the medical student cohort.

At many medical schools, classroom lectures and practical sessions have rapidly migrated to online teaching in response to the COVID-19 pandemic. Despite the many benefits of distance learning, educators soon recognized that it also has certain limitations [1]. Laboratory sessions in visual-centric subjects such as histology and pathology are difficult to replace with common distance learning platforms, because interpreting images is a skill that students master only after repeated exposure to a large number of images, often under an instructor's guidance. Alongside this modern approach to teaching, institutions should take advantage of digital technologies such as cloud computing and artificial intelligence (AI) to create an enhanced educational experience.

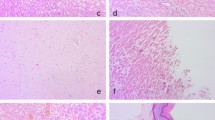

Histology courses usually introduce students to the basic tissue types, providing them with a foundation for understanding the structure and function of complex organs. To help students correctly identify the basic histological tissues, I developed the Histology Classifier app (https://play.google.com/store/apps/details?id=com.app.bima). This Android application uses deep convolutional neural networks (CNNs) to quickly and accurately identify histological images through a smartphone camera. In addition, a website (https://bimascope.wordpress.com/2020/07/15/remote-lab/) provides sample micrographs for each topic, which students can display on any screen to test the application, thus recreating a laboratory session.

For image recognition, I chose MobileNetV2 [2], a memory-efficient CNN architecture designed for mobile applications, and used it to train five models covering subclasses of the basic tissues: epithelia, glands, connective tissue, muscle tissue, and nervous tissue. Each model was trained on histological images from the Virtual Microscopy Learning Resources of the University of Michigan and the University of British Columbia [3, 4]. Training, validation, and testing of every model were carried out with the TensorFlow framework (v1.14.0). After training, the test accuracy of each model was recorded; comparing these values indicated how well each model performed on its corresponding dataset (see the references in Table 1 for details of the evaluation protocol). The user interface wraps the TensorFlow Lite API in an easy-to-use design (Fig. 1).
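To make the evaluation step concrete, here is a minimal Python sketch of how test accuracy could be computed and compared across models. It assumes each model's predictions on its held-out test images are already available as lists of class labels; the function names, the per-class breakdown, and the input format are hypothetical illustrations rather than code from the app, and the actual evaluation protocol may differ.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def _check_lengths(y_true: Sequence[str], y_pred: Sequence[str]) -> None:
    """Reject mismatched or empty label lists before scoring."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("the test set is empty")


def compute_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of test images whose predicted class matches the true class."""
    _check_lengths(y_true, y_pred)
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def per_class_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Accuracy within each true class (i.e. the recall for that class)."""
    _check_lengths(y_true, y_pred)
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / count for label, count in totals.items()}


def rank_models(
    results: Dict[str, Tuple[Sequence[str], Sequence[str]]],
) -> List[Tuple[str, float]]:
    """Return (model name, test accuracy) pairs, best model first.

    `results` maps a hypothetical model name (e.g. "epithelia") to a
    (true labels, predicted labels) pair for that model's test split.
    """
    scores = [
        (name, compute_accuracy(y_true, y_pred))
        for name, (y_true, y_pred) in results.items()
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

The per-class breakdown is an optional addition: a single accuracy figure can hide a subclass that a model consistently confuses with another.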

The app was built by BIMA, a biomedical informatics group in Venezuela, and trialed with students enrolled in the Histology course of the University of the Andes (ULA) School of Medicine, with content adapted to the course's recommended bibliography. When the campus was closed due to the pandemic, illustrated step-by-step instructions were sent to the students, encouraging them to use the app to validate their own analysis and identification results. Within the app, users first select one of the five models from a drop-down list, which enables the device's camera for image classification. A thresholding step was also integrated: if the match probability for a specific tissue exceeds 80%, the app displays a text description corresponding to the observed image (Fig. 1).
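The 80% thresholding rule can be sketched as follows. This is an illustrative Python version, not the app's Android/TensorFlow Lite code; it assumes the selected model returns one probability per tissue class (for example, from a softmax output layer), and the helper names, label list, and description dictionary are hypothetical. A `softmax` helper is included for the case where a model emits raw scores instead of probabilities.

```python
import math
from typing import Dict, List, Optional, Sequence

# Cut-off from the text: a description is shown only if the top match
# probability is greater than 80%.
MATCH_THRESHOLD = 0.80


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def describe_if_confident(
    probabilities: Sequence[float],
    labels: Sequence[str],
    descriptions: Dict[str, str],
    threshold: float = MATCH_THRESHOLD,
) -> Optional[str]:
    """Return the description of the most probable tissue class, or None
    if that class's probability does not exceed the threshold."""
    if len(probabilities) != len(labels):
        raise ValueError("need exactly one probability per label")
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    if probabilities[best] > threshold:
        return descriptions[labels[best]]
    return None


# Hypothetical labels and descriptions for a muscle-tissue model.
MUSCLE_LABELS = ["skeletal muscle", "cardiac muscle", "smooth muscle"]
MUSCLE_DESCRIPTIONS = {
    "skeletal muscle": "Long multinucleated fibers with peripheral nuclei and cross-striations.",
    "cardiac muscle": "Branched striated cells with central nuclei and intercalated discs.",
    "smooth muscle": "Spindle-shaped cells with a single central nucleus and no striations.",
}
```

The strict comparison mirrors the "> 80%" rule as stated, so a top probability of exactly 0.80 returns `None`, which a user interface would treat as "no description shown".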

This digital tool was well received by both students and faculty. In particular, students praised the app as an unconventional self-review tool. One student mentioned that she found this innovation an entertaining way to assess her competence in recognizing cells and tissues. Feedback from course instructors was also positive; they asked about adding interactive quizzes and making the app available for iOS devices. The next steps under development include a randomized controlled trial to analyze the app's effectiveness in aiding students' learning. Because low-quality or ambiguous images can confuse the Histology Classifier app and be mislabeled, upcoming versions will aim to improve the models' recognition ability while also introducing features requested by users.

I believe that this learning method gives students practical experience with AI-assisted tools of the kind used in research and medical diagnostics, while the insights and inputs of users generate valuable data. Smartphone-based approaches to histopathological image diagnosis have shown promising results [5]; implementations such as this one could therefore become a future clinical option for fast and reliable analysis of large numbers of samples.

Data Availability

The image datasets generated and/or analyzed during the current project are available from the corresponding author upon request.

References

1. de Jong PG. Impact of moving to online learning on the way educators teach. Med Sci Educ. 2020;30:1003–4. https://doi.org/10.1007/s40670-020-01027-7.

2. Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L. MobileNetV2: inverted residuals and linear bottlenecks. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT; 2018. pp. 4510–20. https://doi.org/10.1109/CVPR.2018.00474.

3. Michigan Histology and Virtual Microscopy Learning Resources. University of Michigan Medical School, Ann Arbor, MI. 2020. http://histology.sites.uofmhosting.net. Accessed 20 Nov 2019.

4. Histology and Virtual Microscopy Learning Resources. University of British Columbia Faculty of Medicine, Vancouver, BC. 2020. https://cps.med.ubc.ca/virtual-histology/. Accessed 02 Dec 2019.

5. Jiang YQ, Xiong JH, Li HY, Yang XH, Yu WT, Gao M, et al. Recognizing basal cell carcinoma on smartphone-captured digital histopathology images with a deep neural network. Br J Dermatol. 2020;182(3):754–62. https://doi.org/10.1111/bjd.18026.

Acknowledgments

The author would like to thank Jose Gabriel Rujano for his helpful advice and manuscript editing as well as Charan Ghumman, Dr. Javier Salinas, and the Histology Department of the University of the Andes for their feedback and assistance on this work.

Funding

This project was financed solely by the author.

Ethics declarations

Conflict of Interest

The author declares that he has no conflict of interest.

Ethical Approval

NA

Informed Consent

NA

Code Availability

All code is available from the corresponding author upon reasonable request.

About this article

Cite this article

Rujano-Balza, M.A. Histology Classifier App: Remote Laboratory Sessions Using Artificial Neural Networks. Med.Sci.Educ. 31, 305–307 (2021). https://doi.org/10.1007/s40670-021-01206-0
