Abstract

We present a novel approach to the system inversion problem for linear, scalar (i.e. single-input, single-output, or SISO) plants. The problem is formulated as a constrained optimization program whose objective function is the transition time between the initial and the final values of the system’s output, and whose constraints are (i) a threshold on the input intensity and (ii) the requirement that the system’s output interpolates a given set of points. The system’s input is assumed to be a piecewise constant signal. It is formally proved that, in this frame, the input intensity is a decreasing function of the transition time. This result lets us propose an algorithm that, by a bisection search, finds the optimal transition time for the given constraints. The algorithm is purely algebraic, and it does not require the system to be minimum phase or hyperbolic. It can deal with time-varying systems too, although in this case it has to be viewed as a heuristic technique, and it can also be used in a model-free approach. Numerical simulations are reported that illustrate its performance. Finally, an application to a mobile robotics problem is presented, where, using a linearizing pre-controller, we show that the proposed approach can also be applied to nonlinear problems.



1 Introduction

Systems inversion, or output tracking, has been a long-studied topic in control theory since the seminal work of Brockett and Mesarović [1], where the term reproducibility was used to denote it. Broadly speaking, it consists of finding, for a given system, the input that produces the desired output (thus justifying the name system inversion).

This technique is most often applied to systems already equipped with a feedback controller, which takes care of stability and disturbance rejection, but that need better tracking in the transient phase, which makes a feed-forward action appropriate.

The systems inversion problem has been solved for linear SISO systems by Brockett [2], and since then it has been generalized to multivariable linear systems by several authors, e.g. Sain and Massey [3], and Silverman and Payne [4].

Motivated by practical problems in the control of flexible structures, the extension to multivariable nonlinear systems has been realized: see Hirschorn’s paper [5] for the theoretical aspects, and Di Benedetto and Lucibello’s [6] for an application to the endpoint control of a flexible-arm manipulator. In the latter, the system under consideration is also time-varying.

More recently, the system inversion problem has been cast into an optimization frame; for example, Iamratanakul and Devasia [7] have investigated the minimum-time and minimum-energy output tracking problem for linear, multi-input, multi-output (MIMO) systems, yet constrained to square plants, i.e. plants having the same number of inputs and outputs. Afterwards, in [8] it has been shown that the output minimum transition time for nonlinear square MIMO systems under a constraint on the input intensity can be improved beyond the performance of the ‘bang-bang control’ by using pre- and post-actuation.

Returning to linear SISO systems, which are the subject of this paper, the system inversion problem has often been cast as transferring the system’s output from an initial value to a final one under some additional criteria, such as constraints on the shape of the output (for instance, not peaking, or having bounded derivatives [9]), or using minimum input energy [10].

The approach of ‘imposing the shape’ of the output taken in [9] has been extended recently in [11], where nonlinear scalar systems are considered, with the constraints fulfilment embedded into the input design optimization problem, and in [12], where the behavioural approach has been exploited to minimize the transition time and enforce a given smoothness degree of the input.

The above problems are made more difficult by the presence of right-half-plane zeros in the plant, which are common, for example, in the linearized models of flexible structures and cannot be moved by the feedback controller: see [13] for an application. As explained in that paper, the transient tracking performance of linear MIMO systems is limited by their right-half-plane zeros, and a measure of performance that involves those zeros only can be given.

The limit case of nonhyperbolic systems, i.e. systems having zeros on the imaginary axis, which can cause the input signal to become very large [14], has been considered by Jetto et al.: see [15, 16]. In particular, the latter is one of the very few papers we are aware of that casts the inversion problem into a discrete-time frame, and assumes an ‘a priori’ structure of the input as a spline function. See also Marconi et al. [17] for a geometric approach to the problem for linear, discrete-time SISO systems.

In this paper, for SISO time-invariant systems we present an interpolation approach to the output tracking problem: the system’s output is forced to pass through a set of points taken from an ideal output, using a piecewise-constant input that minimizes the transition time, subject to a constraint on its intensity.

As already said, constraining the input intensity is often considered in the inversion literature, owing to its relevance in practical problems: see, e.g. [18], where a saturation approach is used, or [19], where constraints on both the input and its change rate are imposed.

The use of piecewise-constant input functions, also known as block-pulse functions (BPF), gives some advantages [20], or complies with some constraints [21], as far as the structure of the controller is concerned. Piecewise-constant input functions are widely used, for example, in mobile robotics, where robots usually travel at a constant speed for most of their journey.

The main result of this contribution is a locally exact relationship between the transition time and the control effort needed for the transition. That relationship allows us to propose an algorithm that provides the minimum transition time for a given number of output values to be interpolated and a given bound on the input, and computes the values of the control signal segments.

Unlike the previously cited papers, owing to its purely algebraic nature, the algorithm we propose does not rely upon the stability and/or the minimum-phase property of the controlled system. It can be applied to time-varying systems as well, although in that case the relationship between the transition time and the control effort does not apply rigorously, and it has to be viewed as a heuristic approach. It is also worth remarking that the algorithm can be used in a model-free context, because it only requires the knowledge of the impulse response of the sampled-data system: see Remark 1 in the next section.

A discussion about the behaviour of the system’s output far from the interpolation points is given, and a numerical comparison of the algorithm performance versus a method from the recent literature is also presented, which shows the effectiveness of the proposed approach.

Finally, an application of the proposed control approach to mobile robotics is presented, where a unicycle robot is driven to track a given trajectory. The method is applied to the input–output linearized model obtained by a feedback linearization technique [22], and then the optimal signal is used to feed the nonlinear system. This suggests that our approach can be straightforwardly applied also to nonlinear inversion problems when a linearizing-decoupling pre-controller is available.

2 Problem statement

Let \(\Sigma \) be the continuous-time, linear time-invariant SISO system of order n described by the state-space model

\[ \dot{x}(t) = A\,x(t) + b\,u(t), \qquad y(t) = c'\,x(t), \]

where \(x\in {\textbf {R}}^n\) is the state vector, \(u\in {\textbf {R}}\) and \(y\in {\textbf {R}}\) are the input and output signals, respectively, with \(A\in \mathbf {R}^{n\times n}\), b and \(c\in \mathbf {R}^n\). We assume \(x(0) = 0\) as the initial state, which in turn implies \(y(0) = 0\).

For a given \(T > 0\), let \(t_k = kT, k \in \mathbf {N}\), be a set of equally spaced time instants, and assume that the input u is constant in each interval \([t_k, t_{k+1})\), i.e. \(u(t) \equiv u(t_k)\) in that interval. Then the output of system \(\Sigma \) at the time instants \(t_k > 0\) is:

For this system, the minimum-time, output interpolation problem we consider is:

Problem. Given a set \(\{y^*_k, \ k = 1, \ldots ,m\}\) of desired output values, find the smallest interval size T and the corresponding input sequence \(\{u(t_0),\ldots ,u(t_{m-1})\}\), such that \(y(t_k) = y^*_k\), and a threshold on the input intensity is fulfilled: \(\vert u(t_k)\vert \le U_M, k = 0, \ldots , m-1\).

In other words, the interval size \(T^*\) has to be chosen that solves the optimization problem:

where \(y(t_k), k = 1, \ldots , m\), are given by Eq. (1).

To solve the problem we first note that, as the matrix A is constant, we have, independently of k:

and, denoting by \(\theta _T\) the integral at the r.h.s. of the previous formula, we obtain by iteration:

Finally, defining \(h_{p}(T) = c' \text {e}^{A(p-1)T} \theta _T\), we get

\[ y(t_k) = \sum_{i=0}^{k-1} h_{k-i}(T)\, u(t_i), \qquad k = 1, \ldots , m. \qquad (3) \]

The sequence \(\{h_p(T), p \in {\textbf {N}}\}\), which depends on the choice of T, is the impulse response sequence of the sampled-data system corresponding to the continuous time system \(\Sigma \) for that value of T. Also notice that, if we let

then we have \(h_p(T) = h(pT), p \in {\textbf {N}}\).
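As an illustration, the sequence \(h_p(T) = c' \text {e}^{A(p-1)T} \theta _T\) can be computed from a state-space model with a few lines of code. The sketch below is not the authors' implementation: it uses only the Python standard library, and it obtains \(\text {e}^{AT}\) and \(\theta _T\) together from the exponential of the augmented matrix \([A\; b;\, 0\; 0]\,T\), a standard identity. The function names and the double-integrator test plant are assumptions made for the example.

```python
def mat_mul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def mat_exp(M, terms=20, squarings=10):
    """exp(M) by scaling and squaring with a truncated Taylor series."""
    n = len(M)
    scale = 2.0 ** squarings
    S = [[M[i][j] / scale for j in range(n)] for i in range(n)]
    E = [[float(i == j) for j in range(n)] for i in range(n)]   # running sum
    P = [row[:] for row in E]                                    # S^k / k!
    for k in range(1, terms + 1):
        P = [[v / k for v in row] for row in mat_mul(P, S)]
        E = [[E[i][j] + P[i][j] for j in range(n)] for i in range(n)]
    for _ in range(squarings):
        E = mat_mul(E, E)
    return E


def sampled_impulse_response(A, b, c, T, m):
    """Return [h_1(T), ..., h_m(T)] with h_p(T) = c' e^{A(p-1)T} theta_T."""
    n = len(A)
    # Augmented matrix [[A, b], [0, 0]] * T: its exponential contains
    # e^{AT} (top-left block) and theta_T (top-right column).
    aug = [[A[i][j] * T for j in range(n)] + [b[i] * T] for i in range(n)]
    aug.append([0.0] * (n + 1))
    E = mat_exp(aug)
    Phi = [row[:n] for row in E[:n]]          # e^{AT}
    v = [E[i][n] for i in range(n)]           # theta_T, then e^{A(p-1)T} theta_T
    h = []
    for _ in range(m):
        h.append(sum(ci * vi for ci, vi in zip(c, v)))
        v = [sum(Phi[i][j] * v[j] for j in range(n)) for i in range(n)]
    return h


# Double integrator (y'' = u), for which h_p(T) = (p - 1/2) T^2.
h = sampled_impulse_response([[0.0, 1.0], [0.0, 0.0]], [0.0, 1.0], [1.0, 0.0], T=0.1, m=4)
```

For the double integrator the result can be checked by hand, since the step response is \(t^2/2\) and its increments over consecutive intervals are \((p - 1/2)T^2\).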

Remark 1

The generic value \(h_p(T)\) can be seen as the difference between two consecutive values of the step response \(y_s\) of the system, i.e.:

\[ h_p(T) = y_s(pT) - y_s((p-1)T). \]

This allows the values \(h_p(T)\) to be obtained also from a real-world experiment or a numerical simulation.
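The model-free route of Remark 1 amounts to differencing samples of the step response. A minimal sketch follows, assuming the step response is available as a function of time; in an experiment it would be a list of measured samples instead. The first-order plant \(1/(s+1)\) is a hypothetical example, not one of the paper's test cases.

```python
import math


def impulse_from_step(step_response, T, m):
    """h_p(T) = y_s(pT) - y_s((p-1)T), p = 1..m, from a step-response function."""
    samples = [step_response(p * T) for p in range(m + 1)]
    return [samples[p] - samples[p - 1] for p in range(1, m + 1)]


# Hypothetical plant 1/(s+1): unit-step response y_s(t) = 1 - exp(-t).
def y_step(t):
    return 1.0 - math.exp(-t)


h = impulse_from_step(y_step, T=0.5, m=3)
```

Because the differences telescope, the sum of the first p values returns the step response at \(t = pT\), which gives a quick sanity check on measured data.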

In view of Eq. (3), the constraint \(y(t_k) = y^*_k\) is:

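which is a linear algebraic system of the form \(H_T U = Y^*\), where \(H_T\) is the lower-triangular Toeplitz matrix

\[ H_T = \begin{bmatrix} h_1(T) & 0 & \cdots & 0 \\ h_2(T) & h_1(T) & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ h_m(T) & h_{m-1}(T) & \cdots & h_1(T) \end{bmatrix} \qquad (6) \]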

and \(U = [u(t_0) \cdots u(t_{m-1})]', \;\; Y^* = [y_1^* \cdots y_m^*]'\).

Remark 2

Notice that the matrix \(H_T\) is nonsingular if and only if \(h_1(T) = c' \theta _T\ne 0\), i.e. if the step response of system \(\Sigma \) does not vanish at \(t = T\).

If \(h_1(T) \ne 0\), then the system \(H_T\, U = Y^*\) has a unique solution, and we rewrite Problem (2) in the more compact form

3 Main result

It is intuitive, on the basis of physical considerations, that the smaller we take T, the larger the maximum input size \(\Vert U\Vert _\infty \) becomes; this norm is bounded by [23, p. 345]

\[ \Vert U\Vert _\infty \le \Vert H_T^{-1}\Vert _\infty \, \Vert Y^*\Vert _\infty . \qquad (8) \]

Where the infinity norm of \(H_T^{-1}\) is concerned, we will prove the following result.

Proposition 1

For sufficiently small values of T, the infinity norm of \(H^{-1}_T\) satisfies

where q is the order of the first derivative of h that does not vanish at the origin, and \(\eta \) is independent of T.

Proof

See Appendix. \(\square \)

Remark 3

It is worth noticing that the value q in Proposition 1 is equal to the relative degree of the system.

As a consequence of this result and of Eq. (8), increasing T makes, as expected, the upper bound on the control effort smaller, thus enlarging the feasible region of Problem (7), provided that T is small enough for Proposition 1 to hold. This is the rationale behind the algorithm to solve Problem (7) presented in the next section.

The numerical solution of the Toeplitz linear system \(H_T U = Y^*\) can be carried out either by any standard technique for triangular systems, with a computational cost of \(O(m^2)\) elementary operations, or by the tailored Commenges-Monsion algorithm [24], which requires \(O(m \log (m))\) flops.
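The \(O(m^2)\) option is plain forward substitution, which only needs the first column of \(H_T\). The sketch below illustrates that standard technique; it is not the Commenges-Monsion algorithm, and the function name and the numerical values are chosen only for the example.

```python
def solve_toeplitz_lower(h, y_star):
    """Solve H_T U = Y* by forward substitution.

    h      : [h_1(T), ..., h_m(T)], first column of H_T (h[0] must be nonzero)
    y_star : [y*_1, ..., y*_m]
    """
    if h[0] == 0.0:
        raise ValueError("h_1(T) = 0: H_T is singular")
    u = []
    for k in range(len(y_star)):
        # Row k+1 reads: sum_{i=0}^{k} h_{k-i+1} u_i = y*_{k+1}
        acc = sum(h[k - i] * u[i] for i in range(k))
        u.append((y_star[k] - acc) / h[0])
    return u


u = solve_toeplitz_lower([0.5, 0.25, 0.125], [1.0, 1.0, 1.0])
# u == [2.0, 1.0, 1.0]
```

The explicit check on `h[0]` mirrors Remark 2: the system is solvable if and only if \(h_1(T) \ne 0\).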

4 Algorithm

The result of Sect. 3 enables us to propose an iterative algorithm to solve Problem (7). The idea is to start the search for the solution from a small value of T, which will probably violate the constraint on the input, and then to increase that value by repeatedly doubling it until the constraint is satisfied.

In view of Proposition 1, each time T is doubled the intensity of the input needed for the control decreases, until a feasible solution is found, provided that T is small enough for Proposition 1 to apply. Formally, we have the following result about the local convergence of the algorithm.

Proposition 2

If the solution \(T^*\) of Problem (7) is small enough for Proposition 1 to hold, owing to the continuity of the objective function, the sequence generated by the proposed algorithm converges to it.

The above result, however, cannot guarantee the convergence to the solution of any instance of Problem (7), because the optimal solution could exist, but be large enough that the approximation given by Proposition 1 does not hold (that essentially depends on the time constants of the system). In that case the proposed algorithm has to be viewed as a heuristic procedure.
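The search can be sketched as follows. This is one possible reading of the procedure, not the authors' code: a doubling phase starting from \(T_{min}\), followed by a bisection between the last infeasible and the first feasible value, stopped when the bracket is narrower than \(\varepsilon \). The names `min_interval`, `h_of_T` and the guard `T_max` are hypothetical, and the first-order plant is only a test case for which the answer is known in closed form.

```python
import math


def max_input(h, y_star):
    """||U||_inf for the solution of the lower-triangular Toeplitz system H_T U = Y*."""
    u = []
    for k in range(len(y_star)):
        acc = sum(h[k - i] * u[i] for i in range(k))
        u.append((y_star[k] - acc) / h[0])
    return max(abs(v) for v in u)


def min_interval(h_of_T, y_star, U_M, T_min=1e-8, eps=1e-6, T_max=1e6):
    """Doubling phase, then bisection; returns a feasible T within eps of the switch point."""
    def feasible(T):
        return max_input(h_of_T(T), y_star) <= U_M

    lo, hi = None, T_min
    while not feasible(hi):                 # doubling phase
        lo, hi = hi, 2.0 * hi
        if hi > T_max:
            raise RuntimeError("no feasible T found below T_max")
    if lo is None:                          # T_min itself is already feasible
        return hi
    while hi - lo > eps:                    # bisection phase
        mid = 0.5 * (lo + hi)
        if feasible(mid):
            hi = mid
        else:
            lo = mid
    return hi


# Hypothetical plant 1/(s+1): h_p(T) = exp(-(p-1)T) * (1 - exp(-T)).
def h_first_order(T, m=4):
    return [math.exp(-(p - 1) * T) * (1.0 - math.exp(-T)) for p in range(1, m + 1)]


y_star = [0.25, 0.5, 0.75, 1.0]
T_opt = min_interval(h_first_order, y_star, U_M=2.0)
```

For this test plant the largest input is \(1 + 0.25\,\text {e}^{-T}/(1-\text {e}^{-T})\), so the constraint becomes active at \(T = -\ln 0.8 \approx 0.2231\), which the search recovers. The bisection is only meaningful where the input norm decreases with T, which is what Proposition 1 provides for small T.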

5 Output behaviour analysis

A limitation of the proposed method is that it guarantees the exact matching between the real output and the ideal one at the time instants \(t_k\), but not in the inner points of any interval \([t_{k}, t_{k+1})\), because no specification is given for those points.

However, it is generally desirable that the values of the output in the open interval \((t_{k}, t_{k+1})\) are not too far from the values \(y_{k}^*\) attained at \(t = t_{k}\), or similarly at \(t = t_{k+1}\).

It is intuitive, and it has been shown in [25], that this can be obtained by making T small enough, provided that this is allowed by the constraints. Here we give a bound on the distance

which accounts for the variability of the output to the right of each interpolation point.

In that interval, the system’s output is given by

and taking its first-order Taylor approximation around the instants \(t_k\) we get, for \(t \in [t_{k},t_{k+1})\),

The computation of \(\dot{y}(t_k)\) yields

and, by taking into account the constraint on the input size, we get

which is a bound that can be computed independently of the goal \(Y^*\) to be reached (although it requires the interval length T to be fixed), or

which is a sharper bound that can be obtained once the target \(Y^*\) has been fixed and the input has been computed.

Denoting by \(Q_1(k,T,U_M)\) the first sum and by \(Q_2(k,T,U)\) the second one, we have two bounds for \(e_k(t)\):

which clearly vanish at \(t = t_k\) and are maximized as \(t \rightarrow t_{k+1}\); in the latter case we have

Thus, for a given T and a given threshold \(U_M\) on the input size, the first formula gives an a priori worst-case error within each interval.

6 The time-varying case

The proposed approach can be extended to time-varying linear SISO systems described by

with the initial condition \(x(t_0) = 0\). Given again a grid of equally spaced points \(t_k = kT, k \in \mathbf {N}\), and a piecewise constant input sequence \(\{u(t_0),\ldots ,u(t_{m-1})\}\), we can write

where \(\Phi \) is the state transition matrix [26] of the system. Denoting by \(M(t_k,t_{k-1})\) the integral in the above formula we have

and we can again iterate, starting from \(x(t_0) = 0\), to obtain the solution at the time instants \(t_k\)

where the composition property \(\Phi (t,\sigma ) \Phi (\sigma ,\xi ) = \Phi (t,\xi )\) and \(\Phi (t,t) = I\) have been used [26].

Defining \(h_{k,i}(T) = c(t_k) \Phi (t_k,t_{i+1}) M(t_{i+1},t_i)\), the interpolation condition can be written also for the system \(\Sigma _t\)

which is again a lower triangular system, although the matrix \(H_T = \{h_{i,j}(T)\}, i,j = 1, \ldots , m\), is no longer a Toeplitz matrix. This implies that the proposed algorithm can still be used to find the optimal input sequence, but it has to be regarded as a heuristic, because the result of Proposition 1 does not apply.
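When the transition matrix is not available in closed form, the entries of \(H_T\) can be obtained by simulation: by linearity, the response at the grid points to a unit block pulse applied on the i-th interval gives one column of the matrix. The sketch below illustrates this under simplifying assumptions: a scalar plant \(\dot{x} = a(t)x + b(t)u\), \(y = c(t)x\), a fixed-step Runge-Kutta integrator, and hypothetical function names. The example system is invented for the illustration.

```python
def rk4_step(f, t, x, dt):
    """One classical Runge-Kutta step for the scalar ODE x' = f(t, x)."""
    k1 = f(t, x)
    k2 = f(t + dt / 2, x + dt * k1 / 2)
    k3 = f(t + dt / 2, x + dt * k2 / 2)
    k4 = f(t + dt, x + dt * k3)
    return x + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6


def block_pulse_matrix(a, b, c, T, m, substeps=200):
    """Lower-triangular H with H[k][i] = y(t_{k+1}) for a unit pulse on [t_i, t_{i+1}).

    Hypothetical scalar plant: x' = a(t) x + b(t) u, y = c(t) x, x(0) = 0.
    """
    dt = T / substeps
    H = [[0.0] * m for _ in range(m)]
    for i in range(m):
        x = 0.0                                   # state is still zero at t_i
        for k in range(i, m):
            u = 1.0 if k == i else 0.0            # pulse active only on interval i
            for s in range(substeps):
                t = k * T + s * dt
                x = rk4_step(lambda tt, xx: a(tt) * xx + b(tt) * u, t, x, dt)
            H[k][i] = c((k + 1) * T) * x
    return H


# Hypothetical time-varying example: x' = -x / (1 + t) + u, y = x.
H = block_pulse_matrix(lambda t: -1.0 / (1.0 + t), lambda t: 1.0, lambda t: 1.0, T=0.5, m=2)
```

In this example the two diagonal entries differ (about 0.4167 and 0.4375), which shows concretely why the matrix loses the Toeplitz structure in the time-varying case.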

7 Numerical experiments

In this section we report the results of three numerical experiments, aimed at investigating the effectiveness of the proposed algorithm in solving the minimum-time interpolation problem. The computations have been performed in MATLAB running on a standard PC. We denote by \(\tau = mT\) the length of the control interval, and in all experiments we take \(T_{min} = 10^{-8}\) and \(\varepsilon = 10^{-6}\).

In the three experiments, the ideal output is the polynomial function defined in the interval \([0, \tau ]\) proposed in [9]

That function and its first p derivatives vanish at \(t = 0\), the first p derivatives vanish also at \(t = \tau \), and the function is monotonically increasing independently of p, thus representing a smooth transition from the initial to the final values of the output.
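A polynomial with exactly these properties is the normalized integral of \(s^p(\tau - s)^p\), whose derivative is non-negative on \([0,\tau ]\) and has zeros of order p at both ends. The sketch below evaluates it in expanded form. The unit final value is an assumption made here, and so is the identification with the function of [9]; the scaling used in Eq. (12) may differ.

```python
from math import factorial


def transition_polynomial(t, tau, p):
    """Normalized integral of s^p (tau - s)^p: rises smoothly from 0 at t = 0 to 1 at t = tau."""
    s = t / tau
    scale = factorial(2 * p + 1) / factorial(p)
    total = 0.0
    for i in range(p + 1):
        coeff = (-1) ** (p - i) / (factorial(i) * factorial(p - i) * (2 * p - i + 1))
        total += coeff * s ** (2 * p - i + 1)
    return scale * total


# Desired output values on a grid of m points, with tau = m * T.
m, T = 8, 0.1
y_star = [transition_polynomial(k * T, m * T, p=1) for k in range(1, m + 1)]
```

With \(p = 1\) the expression reduces to \(3(t/\tau )^2 - 2(t/\tau )^3\), and with \(p = 3\) to \(35 s^4 - 84 s^5 + 70 s^6 - 20 s^7\) with \(s = t/\tau \).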

The parameters to be set for each experiment are the input threshold \(U_M\), the number of desired output values m and the parameter p of (12). Following [9], the latter will be taken equal to the relative degree of the plant.

Remark 4

It is worth reporting that the three experiments have been performed also using the model-free approach illustrated in Remark 1, obtaining exactly the same results shown in this section.

7.1 Experiment 1

In the first numerical experiment we consider the problem proposed in [27], where a third-order nonminimum phase system is considered, described by the transfer function

the ideal output function \(y_d(t,\tau )\) from which we have taken the desired output values \(y_k^*\) is polynomial (12) with \(p = 1\), i.e.

The approach pursued in that paper relies on the concept of cause/effect pairs associated with the system, and it is aimed at finding, among all input/output pairs satisfying some smoothness property, the one that minimizes the transition time between two set points.

In this experiment we set \(m = 8\), \(U_M = 1.4\). Figure 1 shows the error \(y(t) - y_d(t,\tau )\) and the piecewise constant control signal. The minimum control time found by the proposed algorithm is \(\tau ^* = 0.47\) s, which is half the value \(\tau ^* = 0.94\) s obtained in [27] using \(U_M = 1.2\).

We remark that the method in [27] generates a smooth control signal, unlike our approach, which returns a BPF that is less expensive to generate.

7.2 Experiment 2

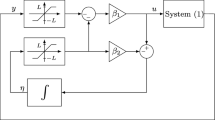

In the second experiment we have considered the problem proposed in [9], where a plant with transfer function

is controlled by the (proper) PID regulator

with \(K_c = 3.6172, \delta = 0.4323\) and \(\omega _n = 5.1073\), in a unity-feedback scheme that gives rise to a sixth-order system with a relative degree of three.

The value \(p = 3\), equal to the relative degree of the closed-loop plant, has been taken in Eq. (12), yielding the ideal output function

This problem has been solved in [9] using a bisection search scheme similar to the one proposed here, which involves a global optimization search in a single variable at each step, and there the optimal value \(\tau ^* = 0.367\) s has been found, using a threshold \(U_M = 3\) on the input intensity.

We have used for our algorithm the threshold value \(U_M = 2.8\) and \(m = 5\), and have obtained \(\tau ^* = 0.358\) s, which is slightly better than the value obtained in the cited paper.

Figure 2 shows the functions y(t) and \(y_d(t,\tau )\) and the piecewise constant control signal. It can be seen that, owing to a pair of complex poles with very low damping (\(\delta =0.15\)), the system output peaks in the time intervals between the interpolation points.

The last comment made for the previous numerical experiment also applies to this one.

7.3 Experiment 3

In the third experiment we consider the problem presented in [28], where a rocket must follow a one-dimensional position profile, and the rocket’s mass is time-varying because of the fuel consumption during the journey.

It is assumed that the rocket’s mass varies according to

where \(m_R\) is the mass of the empty rocket, \(m_F\) is the initial mass of the fuel, and \(\alpha \) is the fuel’s consumption rate.

On denoting by z(t) the position of the rocket and by \(\zeta \) the viscous friction coefficient, the rocket’s motion is governed by (see Note 1)

and, returning to the dot notation for the time derivatives:

Suppose that a proportional position controller with gain K has been wrapped around the plant, obtaining the model

on defining \(x(t) = [z(t)\; \dot{z}(t)]'\), the last equation can be re-written in state-space form (10) with the positions

The plant’s physical parameters are \(m_R = 1.5\) kg, \(m_F = 1\) kg, \(\zeta = 10^{-5}\) N s/m, \(\alpha = 0.5\,\hbox {s}^{-1}\), and the proportional controller is \(K = 5\). The ideal output trajectory in this case is given again by Eq. (12) with \(p = 4\), which is an arbitrary choice, although the concept of relative degree has been introduced also for time-varying systems [30].

The numerical computation of functions \(h_{ij}\) of Eq. (11) for this model has been performed by a simulation, using the integrator ode45 of MATLAB, assuming as input a suitable set of m block-pulse functions. The parameters of the algorithm we have used in this case are \(m = 8\) and \(U_M = 12\). The optimal value of \(\tau \) found by our method is \(\tau ^* = 1.41\) s, and the tracking error and the input are shown in Fig. 3.

8 An application to mobile robotics

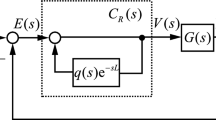

In this section, the application of the proposed approach to the control problem of the unicycle mobile robot is considered. That kind of mobile robot is modelled as in [22]

where \(x_r, y_r, \theta _r\) represent the robot’s pose, i.e. the robot centre’s coordinates in the plane and its heading, while \(v_r\) and \(\omega _r\) are the robot’s inputs. In particular, \(v_r\) is the driving velocity, i.e. the modulus (including the sign) of the velocity vector of the contact point, whereas the steering velocity \(\omega _r\) is the angular velocity of the wheel around the vertical axis passing through the robot’s centre: see Fig. 4. Assuming that the robot’s inputs are constrained by \(\vert v_r(t)\vert \le V\) and \(\vert \omega _r(t)\vert \le \Omega \), the goal is to design a control law that drives the robot along a desired trajectory \((x^*(t), y^*(t))\).

To this aim, the standard input–output linearization method [22] has been used: by applying the control law

robot’s model (14) is straightforwardly reformulated as

where \((x_B, y_B)\) are the coordinates of a point B located along the sagittal axis of the unicycle at a distance \(b > 0\) from its centre. Note that the robot’s heading angle \(\theta _r\) cannot be controlled by this technique.

The proposed method is then applied to linearized and decoupled model (16) to drive the robot along a desired path; the resulting control scheme is shown in Fig. 5. The constraints to impose on the ‘virtual inputs’ \(u_x\) and \(u_y\) to satisfy the real constraints on \(v_r\) and \(\omega _r\) can be obtained by writing linearization law (15) explicitly

and obtaining

It follows easily that by imposing the ‘virtual constraints’ \(\vert u_x(t)\vert \le U_M\) and \(\vert u_y(t)\vert \le U_M\), with \(U_M \le \min \{V,\Omega \, b \}/2\), also the real constraints will be satisfied.
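The bound can be checked numerically. The sketch below assumes the standard input-output linearizing law for the unicycle given in [22], namely \(v_r = u_x\cos \theta _r + u_y\sin \theta _r\) and \(\omega _r = (-u_x\sin \theta _r + u_y\cos \theta _r)/b\); that form is an assumption about law (15), and the function name is hypothetical. The numerical values are those used in the simulation of this section.

```python
import math


def real_inputs(u_x, u_y, theta, b):
    """Map virtual inputs to (v_r, omega_r), assuming the standard linearizing law."""
    v = u_x * math.cos(theta) + u_y * math.sin(theta)
    omega = (-u_x * math.sin(theta) + u_y * math.cos(theta)) / b
    return v, omega


V, Omega, b = 2.0, math.pi, 0.5          # constraints and offset of point B
U_M = min(V, Omega * b) / 2.0            # largest admissible virtual bound

# Sweep the heading and the four corners of the virtual-input box.
worst_v = worst_omega = 0.0
for step in range(360):
    theta = math.radians(step)
    for u_x in (-U_M, U_M):
        for u_y in (-U_M, U_M):
            v, omega = real_inputs(u_x, u_y, theta, b)
            worst_v = max(worst_v, abs(v))
            worst_omega = max(worst_omega, abs(omega))
```

Under the assumed law, the triangle inequality gives \(\vert v_r\vert \le 2U_M\) and \(\vert \omega _r\vert \le 2U_M/b\), which is where the factor 1/2 in the bound comes from; the sweep confirms that both real constraints hold for every heading.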

The BPF control laws are then designed independently on the \(x_B\) and \(y_B\) axes using single integrator-based evolution (16). Two candidate values for T are obtained, namely \(T_x\) and \(T_y\), one for each axis. To be compliant with the desired trajectory on both axes, the value of T is chosen as the largest of them, and then the virtual signals \(u_x(t)\) and \(u_y(t)\) are computed.

In Fig. 6 the reference trajectory and the robot motion are shown. The constraints used in this simulation were \(V = 2\) m s\({}^{-1}\), \(\Omega = \pi \) rad s\({}^{-1}\), and \(b = 0.5\) m. A total of \(m = 25\) input pulses have been used, and \(T^* = 1.46\) s has been obtained.

The resulting control inputs \(u_x(t)\) and \(u_y(t)\) are reported in Fig. 7, and as expected, they are compliant with the imposed constraints.

The evolution of \((x_B, y_B)\) coincides with the reference trajectory at the points \((x^*(kT),y^*(kT))\), while the robot’s centre trajectory error satisfies

as it is expected due to the input–output linearization.

9 Conclusions

We have proposed a control methodology to solve the inversion problem for linear SISO systems with a minimum-time criterion and a bounded piecewise-constant input. We have shown that, in the proposed setting, the norm of the input is a decreasing function of the control interval length, a property that is often assumed true on the basis of physical considerations but that is formally proven only in specific cases.

Two distinguishing features of the proposed method are that it does not rely on any assumption, besides linearity, on the plant, and that it can be used in a model-free context, as the only data needed are the step response of the system.

Furthermore, it has been applied successfully to the control of a nonlinear system for which a linearizing/decoupling controller can be devised.

Last but not least, the proposed approach generates a control signal of the BPF type, which requires a much simpler controller for its generation in a practical implementation, making it appealing for industrial applications.

The current research aims at two goals: (i) to extend the proposed approach to MIMO systems, and (ii) to propose a suitable optimization algorithm to replace the naive bisection search.

Code Availability

The Matlab code for the numerical experiments is available upon request.

Change history

12 October 2022

Missing Open Access funding information has been added in the Funding Note.

Notes

Actually, this is an approximate model of the rocket, as the momentum is neglected: see [29].

References

Brockett RW, Mesarović MD (1965) The reproducibility of multivariable control systems. J Math Anal Appl 11:548–563. https://doi.org/10.1016/0022-247X(65)90104-6

Brockett RW (1965) Poles, zeros and feedback: state-space interpretation. IEEE Trans Autom Control 10(2):129–135. https://doi.org/10.1109/TAC.1965.1098118

Sain MK, Massey JL (1969) Invertibility of linear time-invariant dynamical systems. IEEE Trans Autom Control 14(2):141–149. https://doi.org/10.1109/TAC.1969.1099133

Silverman LM, Payne HJ (1971) Input-output structure of linear systems with application to the decoupling problem. SIAM J Contr 9(2):199–233. https://doi.org/10.1137/0309017

Hirschorn RM (1979) Invertibility of observable multivariable nonlinear systems. IEEE Trans Autom Control 24(6):855–865. https://doi.org/10.1109/TAC.1982.1102901

Di Benedetto MD, Lucibello P (1993) Inversion of nonlinear time-varying systems. IEEE Trans Autom Control 38(8):1259–1264. https://doi.org/10.1109/9.233163

Iamratanakul D, Devasia S (2004) Minimum-time/energy output-transitions in linear systems. In: Proceedings of American control conference 2004, Boston, MA, pp 4831–4836. https://doi.org/10.23919/ACC.2004.1384078

Devasia S (2011) Nonlinear minimum-time control with pre- and post-actuation. Automatica 47(7):1379–1387. https://doi.org/10.1016/j.automatica.2011.02.022

Piazzi A, Visioli A (2001) Optimal noncausal set-point regulation of scalar systems. Automatica 37(1):121–127. https://doi.org/10.1016/S0005-1098(00)00130-8

Perez H, Devasia S (2003) Optimal output-transitions for linear systems. Automatica 39(2):181–192. https://doi.org/10.1016/S0005-1098(02)00240-6

Himmel A, Sager S, Sundmacher K (2020) Time-minimal set point transition for nonlinear SISO systems under different constraints. Automatica 114:108806. https://doi.org/10.1016/j.automatica.2020.108806

Minari A, Piazzi A, Costalunga A (2020) Polynomial interpolation for inversion-based control. Eur J Control 56:62–72. https://doi.org/10.1016/j.ejcon.2020.01.007

Qiu L, Davison EJ (1993) Performance limitations of non-minimum phase systems in the servomechanism problem. Automatica 29(2):337–349. https://doi.org/10.1016/0005-1098(93)90127-F

Devasia S (1997) Output tracking with nonhyperbolic and near nonhyperbolic internal dynamics: helicopter hover control. J Guid Control Dyn 20(3):573–580. https://doi.org/10.2514/2.4079

Jetto L, Orsini V, Romagnoli R (2015) Optimal transient performance under output set-point reset. Int J Robust Nonlinear Control 26(13):2788–2806. https://doi.org/10.1002/rnc.3475

Jetto L, Orsini V, Romagnoli R (2015) Spline based pseudo-inversion of sampled data non minimum phase systems for an almost exact output tracking. Asian J Control 17(5):1866–1879. https://doi.org/10.1002/asjc.1079

Marconi L, Marro G, Melchiorri C (2001) A solution technique for almost perfect tracking of non-minimum-phase, discrete-time linear systems. Int J Control 74(5):496–506. https://doi.org/10.1080/00207170010014557

Graichen K, Zeitz M (2008) Feedforward control design for finite-time transition problems of nonlinear systems with input and output constraints. IEEE Trans Autom Control 53(5):1273–1278. https://doi.org/10.1109/TAC.2008.921044

Himmel A, Sager S, Sundmacher K (2020) Time-minimal set point transition for nonlinear SISO systems under different constraints. Automatica 114(4):108806. https://doi.org/10.1016/j.automatica.2020.108806

Azhmyakov V, Basin M, Reincke-Collon C (2014) Optimal LQ-type switched control design for a class of linear systems with piecewise constant inputs. In: Proceedings of IFAC world congress 2014, Cape Town, South Africa, pp 6976–6981. https://doi.org/10.3182/20140824-6-ZA-1003.00515

Stanton SA, Marchand BG (2010) Finite set control transcription for optimal control applications. J Spacecr Rockets 47:457–471. https://doi.org/10.2514/1.44056

Siciliano B, Sciavicco L, Villani L, Oriolo G (2009) Robotics, modelling, planning and control. Springer, Berlin. https://doi.org/10.1007/978-1-84628-642-1

Horn RA, Johnson CR (2013) Matrix analysis, 2nd edn. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511810817

Commenges D, Monsion M (1984) Fast inversion of triangular Toeplitz matrices. IEEE Trans Autom Control 29(3):250–251. https://doi.org/10.1109/TAC.1984.1103499

Deb A, Sarkar G, Sen SK (1995) Linearly pulse-width modulated block pulse functions and their application to linear SISO feedback control system identification. IEE Proc Control Theory Appl 142(1):44–50. https://doi.org/10.1049/ip-cta:19951497

Brockett RW (1970) Finite dimensional linear systems. SIAM. https://doi.org/10.1137/1.9781611973884

Piazzi A, Visioli A (2005) Using stable input-output inversion for minimum-time feedforward constrained regulation of scalar systems. Automatica 41(2):305–313. https://doi.org/10.1016/j.automatica.2004.10.009

Forbes JR, Damaren CJ (2010) Passive linear time-varying systems: state-space realizations, stability in feedback, and controller synthesis. In: Proceedings of American control conference 2010, Baltimore, MD, pp 1097–1104. https://doi.org/10.1109/ACC.2010.5530792

Kleppner D, Kolenkow R (2014) An introduction to mechanics. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511794780

Ilchmann A, Mueller M (2007) Time-varying linear systems: relative degree and normal form. IEEE Trans Autom Control 52(5):840–851. https://doi.org/10.1109/TAC.2007.895843

Acknowledgements

The authors sincerely thank the referees for the constructive comments to the early version of this paper. They also wish to thank their former colleague and mentor professor Luciano Carotenuto of Università della Calabria, Italy, for the helpful discussions.

Funding

Open access funding provided by Università della Calabria within the CRUI-CARE Agreement.

Author information


Contributions

All authors contributed equally to this work. All authors have read and agreed to the proofs of the manuscript.


Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Appendix


Preliminaries on Toeplitz matrices

Let \(H_T\) be the lower triangular Toeplitz matrix of order m defined by Eq. (6), characterized by the elements \(\{h_1(T), \ldots , h_m(T)\}\) which define its first column: all the other entries are constant along the descending diagonals, i.e. \((H_T)_{ij} = h_{i-j+1}(T), i \ge j\).

That matrix is obviously invertible if and only if \(h_1 \ne 0\), and its inverse is also a lower triangular Toeplitz matrix: see [23, p. 34].

From now on, let \(h_1(T) \ne 0\) and \(B_T = H_T^{-1}\), and let us drop the dependence of the matrices on T. It is known (see the above citation) that the entries \(\{b_1, \ldots , b_m\}\) of the first column of \(B_T\), which define the whole inverse: \((B_T)_{ij} = b_{i-j+1}\), \(i \ge j\), are given by:

in particular, the second and the third entries are:

In our case we have \(\{h_p = h(pT)\), \(p = 1, \ldots , m\}\), where the function h is given by Eq. (4), so the Toeplitz matrix is:

We underline that Proposition 1 of Sect. 3 holds for any function h of class \(C^q, q \ge 0\), on the interval [0, mT].
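The behaviour stated in Proposition 1 can be observed numerically. The sketch below computes the first column of the inverse by the standard recursion obtained from imposing \(H_T B_T = I\) on that column (assumed here to be equivalent to Eq. (17)), and uses the fact that the infinity norm of a lower triangular Toeplitz matrix is the absolute sum of its first column. The function names and the first-order test plant \(1/(s+1)\), which has relative degree one, are assumptions of the example.

```python
import math


def toeplitz_inverse_column(h):
    """First column [b_1, ..., b_m] of the inverse of the lower-triangular Toeplitz
    matrix whose first column is h = [h_1, ..., h_m], with h_1 != 0."""
    b = [1.0 / h[0]]
    for p in range(1, len(h)):
        # Entry p of the first column of H B must vanish for p >= 1.
        acc = sum(h[p - j] * b[j] for j in range(p))
        b.append(-acc / h[0])
    return b


def inverse_inf_norm(h):
    """Infinity norm of the inverse: the largest absolute row sum, attained on the last row."""
    return sum(abs(v) for v in toeplitz_inverse_column(h))


def h_first_order(T, m=5):
    """Hypothetical plant 1/(s+1): h_p(T) = exp(-(p-1)T) * (1 - exp(-T))."""
    return [math.exp(-(p - 1) * T) * (1.0 - math.exp(-T)) for p in range(1, m + 1)]


# norm * T^q with q = 1 should level off as T shrinks.
scaled = [inverse_inf_norm(h_first_order(T)) * T for T in (1e-1, 1e-2, 1e-3)]
```

For this plant the inverse is bidiagonal and its norm equals \((1+\text {e}^{-T})/(1-\text {e}^{-T})\), so the scaled values approach 2 as T decreases, consistent with a norm that grows like \(1/T^q\) with \(q = 1\).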

Proof of Proposition 1

As \(\{h_p = h(pT)\}\), the direct computation of the first \(b_p\)’s gives

Now two cases arise.

Case 1: \(h(0) \ne 0\). In this case, for sufficiently small T we can write \(h(pT) \approx h(0) + p\, h^{(1)}(0)\, T\), \(p = 1, \ldots , m\); as a consequence, entries \(b_1, b_2, b_3\) become

If T is small enough that \(h^{(1)}(0)\, T\) can be neglected with respect to h(0), we have

and it is easily shown by induction that all the elements \(b_p\) vanish for \(p \ge 3\). As a consequence, matrix \(B_T\) is a bidiagonal matrix that does not depend on T, and its infinity norm is approximated by

which proves the thesis for Case 1 with the position \(\eta (0) = 2/\vert h(0)\vert \).

Case 2: \(h(0) = 0\) and q is the order of the first derivative that does not vanish at the origin. In this case, for sufficiently small T we can write

and

thus, the approximation of the first \(b_p\)’s yields

The structure of those elements lets us conjecture that

where \(\gamma :{\textbf {N}}^2 \rightarrow {\textbf {Z}}\). This is true for \(p = 1\) with \(\gamma (1,q) = -1\); using the induction principle, we suppose that this is true up to \(p-1\), and then we compute the generic \(b_p\) by Eq. (17), obtaining:

thus our conjecture is proved by the induction property on defining recursively

As Eq. (18) has now been proved, we can write explicitly the norm of \(B_T\)

which is of the form of Eq. (9) on defining

\(\square \)

It can also be shown by induction that if \(q = 1\) we get \(b_p \approx 0\) for all \(p \ge 4\), and if \(q = 2\) we obtain \(b_{p+1} \approx - b_p\) for all \(p \ge 4\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

D’Alfonso, L., Fedele, G., Pugliese, P. et al. Interpolation-based, minimum-time piecewise constant control of linear continuous-time SISO systems. Int. J. Dynam. Control 11, 574–584 (2023). https://doi.org/10.1007/s40435-022-01012-5


DOI: https://doi.org/10.1007/s40435-022-01012-5