Abstract

Discrete-choice experiments (DCEs) have become a commonly used instrument in health economics and patient-preference analysis, addressing a wide range of policy questions. An important question when setting up a DCE is the size of the sample needed to answer the research question of interest. Although theory exists as to the calculation of sample size requirements for stated choice data, it does not address the issue of minimum sample size requirements in terms of the statistical power of hypothesis tests on the estimated coefficients. The purpose of this paper is threefold: (1) to provide insight into whether and how researchers have dealt with sample size calculations for healthcare-related DCE studies; (2) to introduce and explain the required sample size for parameter estimates in DCEs; and (3) to provide a step-by-step guide for the calculation of the minimum sample size requirements for DCEs in health care.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

The minimum sample size needed for a discrete-choice experiment (DCE) depends on the specific hypotheses to be tested. |

DCE practitioners should realize that a small size effect may still be meaningful, but that a limited sample size prevents detection of such small effects. |

Policy makers should not make a decision on non-significant outcomes without considering whether the study had a reasonable power to detect the anticipated outcome. |

1 Introduction

Discrete-choice experiments (DCEs) have become a commonly used instrument in health economics and patient-preference analysis, addressing a wide range of policy questions [1, 2]. DCEs allow for a quantitative elicitation of individuals’ preferences for health care interventions, services, or policies. The DCE approach combines consumer theory [3], random utility theory [4], experimental design theory [5], and econometric analysis [1]. See Louviere et al. [6], Hensher et al. [7], Rose and Bliemer [8], Lancsar and Louviere [9], and Ryan et al. [10] for further details on conducting a DCE.

DCE-based research in health care is often concerned about establishing the impact of certain healthcare interventions and aspects (i.e., attributes) thereof on patients’ decisions [11–20]. Consequently, a typical research question is to establish whether or not individuals are indifferent between two attribute levels. For instance: Do patients prefer delivery at home more than in a hospital?; Do patients prefer a medical specialist over an nurse practitioner?; Do patients prefer every 5 year screening over every 10 year screening?; Do patients prefer a weekly oral medication over a monthly injection?; Do patients prefer the explanation of their medical results through a face-to-face contact more than through a letter? As a result, an important design question is the size of the sample needed to answer such a research question. When considering the required sample size, DCE practitioners need to be confident that they have sufficient statistical power to detect a difference in preferences when this difference is sufficiently large. A practical solution (that does not require any sample size calculations) is to simply maximize the sample size given the research budget at hand, i.e., trying to overpower the study as much as possible. This is beneficial for reasons other than statistical precision (e.g. to facilitate in-depth analysis). However, particularly in the health care area, the number of eligible patients and healthcare professionals is generally limited. Although theory exists as to the calculation of sample size requirements for stated choice data, it does not address the issue of minimum sample size requirements in terms of testing for specific hypotheses based on the parameter estimates produced [21].

The purpose of this paper is threefold. The first objective is to provide insight into whether and how researchers have dealt with sample size calculations for health care-related DCE studies. The second objective is to introduce and explain the required sample size for parameter estimates in DCEs. The final objective of this manuscript is to provide a step-by-step guide for the calculation of the minimum sample size requirements for DCEs in healthcare.

2 Literature Review

2.1 Methods

To gain insight into the current approaches to sample size determination, we reviewed health care-related DCE studies published in 2012. Older literature was ignored, as the research frontier for methodological issues has shifted a lot over the past years [1, 22]. MEDLINE was used to identify healthcare-related DCE studies, replicating the methodology of two comprehensive reviews of the healthcare DCE literature [1, 2]. The following search terms were used: conjoint, conjoint analysis, conjoint measurement, conjoint studies, conjoint choice experiment, part-worth utilities, functional measurement, paired comparisons, pairwise choices, discrete choice experiment, dce, discrete choice mode(l)ling, discrete choice conjoint experiment, and stated preference. Studies were included if they were choice-based, published as a full-text English language article, and applied to healthcare. Consideration was given to background information of the studies, and detailed consideration was given to whether and how sample size calculations were conducted. We also briefly describe the methods that have been used to obtain sample size estimates so far.

2.2 Literature Review Results

The search generated 505 possible references. After reading abstracts or full articles, 69 references met the inclusion criteria. The appendix shows the full list of references [Electronic Supplementary Material (ESM) 1]. Table 1 summarizes the review data. Most DCE studies were from the UK, with the USA, Canada, and Australia also major contributors. Studies having 4–6 attributes and 9–16 choice sets per respondent were commonly used in the published healthcare-related DCE studies in 2012. The sample sizes differed substantially between the DCE studies.

Of 69 DCEs, 22 (32 %) had sample sizes smaller than 100 respondents, whereas 16 (23 %) of the 69 DCEs had sample sizes larger than 600 respondents; six (9 %) DCEs even had sample sizes larger than 1000 respondents. More than 70 % of the DCE studies (49 of 69) did not (clearly) report whether and what kind of sample size method was used; 12 % of the studies (8 of 69) just referred to other DCE studies to explain the sample size used. For example, Huicho et al. [23] mentioned that “Based on the experience of previous studies [24, 25], we aimed for a sample size of 80 nurses and midwives”, and Bridges et al. [26] mentioned “In a previously published pilot study, the conjoint analysis approach was shown to be both feasible and functional in a very low sample size (n = 20) [27]”. In 13 % of the DCE studies (9 of 69 [28–36]), one or more of the following rules of thumb were used to estimate the minimum sample size required: that proposed by (1) Johnson and Orme [37, 38]; (2) Pearmain et al. [39]; and/or (3) Lancsar and Louviere [9].

In short, the rule of thumb as proposed by Johnson and Orme [37, 38] suggests that the sample size required for the main effects depends on the number of choice tasks (t), the number of alternatives (a), and the number of analysis cells (c) according to the following equation:

When considering main effects, ‘c’ is equal to the largest number of levels for any of the attributes. When considering all two-way interactions, ‘c’ is equal to the largest product of levels of any two attributes [38].

The rule of thumb proposed by Pearmain et al. [39] suggests that, for DCE designs, sample sizes over 100 are able to provide a basis for modeling preference data, whereas Lancsar and Louviere [9] mentioned “our empirical experience is that one rarely requires more than 20 respondents per questionnaire version to estimate reliable models, but undertaking significant post hoc analysis to identify and estimate co-variate effects invariably requires larger sample size”.

Four of 69 (6 %) reviewed DCE studies used a parametric approach to estimate the minimum sample size required (a parametric approach can be used if one assumes, for example based on the law of large numbers, that the focal quantity—an estimated probability or coefficient—is Normally distributed. This assumption facilitates the derivation of the minimum sample sizes required). That is, three studies used the parametric approach as proposed by Louviere et al. [6] and one study [40] reported the parametric approach as proposed by Rose and Bliemer [21]. Louviere et al. [6] assume the study is being conducted to measure a choice probability with some desired level of accuracy. The asymptotic sampling distribution (i.e., the distribution as sample size N → ∞) of a proportion p N, obtained by a random sample of size N, is Normal with mean p (the true population proportion) and variance pq/N, where q = 1−p. The minimum sample size to estimate the true proportion within α 1 % of the true value p with a probability α 2 or greater has to satisfy the requirement that Prob(|p N−p| ≤ α1 p) ≥ α 2, which can be calculated using the following equation:

where Φ −1 is the inverse cumulative Normal distribution function, and r is the number of choice sets per respondent. Hence, the parametric approach as proposed by Louviere et al. [6] suggests that the sample size required for the main effects depends on the number of choice sets per respondent (r), the true population proportion (p), the one minus true population proportion (q), the inverse cumulative Normal distribution function (Φ −1), the allowed deviation from the true population proportion (α 1), and the significance level (α 2).

The parametric approach that has been recently introduced by Rose and Bliemer [21] focuses on the minimum sample size required based on the most critical parameter (i.e., to be able to determine whether each parameter value is statistically significant from zero). This parametric approach can only be used if prior parameter estimates are available and not equal to zero. The minimum required sample size to state with 95 % certainty that a parameter estimate is different from zero can be determined according to the following equation:

where γ k is the parameter estimate of attribute k, and Σ γk is the corresponding variance of the parameter estimate of attribute k.

2.3 Comment on the State of Play

The disadvantage of using one of the rules of thumb mentioned in paragraph 2.2 is that such rules are not intended to be strictly accurate or reliable. The parametric approach as proposed by Louviere et al. [6] is not suitable for determining the minimum required sample size for coefficients in DCEs, as this approach focuses on choice probabilities and does not address the issue of minimum sample size requirements in terms of testing for specific hypotheses based on the parameter estimates produced. The parametric approach for minimum sample size calculation proposed by Rose and Bliemer [21] is solely based on the most critical parameter, so it is not specific to a certain hypothesis. It also does not depend on a desired power level for the hypothesis tests of interest.

3 Determining Required Sample Sizes for Discrete-Choice Experiments (DCEs): Theory

In this section we explain the analysis needed to determine the minimum sample size requirements in terms of testing for specific hypotheses for coefficients in DCEs. Our proposed approach is more general than the parametric approaches mentioned in Sect. 2, as it can be used for any particular hypothesis that is relevant to the researcher. We outline which elements are required before such a minimum sample size can be determined, why these elements are needed, and how to calculate the required sample size. To provide a step-by-step guide that is useful for researchers from all different kinds of backgrounds, we strive to keep the number of formulas in this section as low as possible. Nevertheless, a comprehensive explanation of the minimum sample size calculation for coefficients in DCEs can be found in the appendix (ESM 2).

3.1 Required Elements for Estimating Minimum Sample Size

Before the minimum sample size for coefficients in a DCE can be calculated, the following five elements are needed:

-

Significance level (α)

-

Statistical power level (1−β)

-

Statistical model used in the DCE analysis [e.g., multinomial logit (MNL) model, mixed logit (MIXL) model, generalized multinomial logit (G-MNL) model]

-

Initial belief about the parameter values

-

The DCE design.

3.1.1 Significance Level (α)

The significance level α sets the probability for an incorrect rejection of a true null hypothesis. For example, if one wants to be 95 % confident that the null hypothesis will not be rejected when it is true, α needs to be set at 1−0.95 = 0.05 (i.e. 5 %). Conversely, if one decides to perform a hypothesis test at a 1−α confidence level, there is by definition an α probability of finding a significant deviation when there is in fact no true effect. Perhaps unsurprisingly, the smaller the imposed value of α (i.e., the more certainty one requires), the larger the minimum required sample size will be.

3.1.2 Statistical Power Level (1−β)

β indicates the probability of failing to reject a null hypothesis when the null hypothesis is actually false. The chosen value of beta is related to the statistical power of a test (which is defined as 1−β). As we want to assess whether a parameter value (coefficient) is significantly different from zero, we can define the sample size that enables us to find a significant deviation from zero in at least (1−β) × 100 % of the cases. For example, a statistical power of 0.8 (or 80 %) means that a study (when conducted repeatedly over time) is likely to produce a statistically significant result eight times out of ten. A larger statistical power level will increase the minimum sample size needed.

3.1.3 Statistical Model Used in the DCE Analysis

The calculation of the minimum required sample size also depends on the type of statistical model that will be used to analyze the DCE data (e.g., MNL, MIXL, G-MNL). The type of statistical model affects the number of parameters that needs to be estimated, the corresponding parameter values, and the parameter interpretation. As a consequence, the estimation precision of the parameters, which we will characterize through the variance covariance matrix of the estimated parameters, also depends on the statistical model that is used. In order to properly determine the estimation precision of each of the parameters, the statistical model needs to be specified.

3.1.4 Initial Belief About the Parameter Values

Of course, if the true values of the parameters (coefficients) were known, one would not need to execute the DCE. Nevertheless, before a minimum sample size can be determined, an initial estimate of the parameter values is required for two reasons. First, in models that are nonlinear in the parameters, such as choice models, the asymptotic variance–covariance matrix (AVC) depends on the values of the parameters themselves. This AVC is an intermediate stage in the sample size calculation (see Sect. 3.2 for more details), and reflects the expected accuracy of the statistical estimates obtained using the statistical model as identified under Sect. 3.1.3. Second, before a power calculation can be done, one has to describe a specific hypothesis and the power one wants to achieve given a certain degree of misspecification (i.e., the degree to which the true coefficient value deviates from its hypothesized value). As null hypothesis, we will use the hypothesis that there is no influence so the coefficient equals zero. The initial estimate of the parameter value can then be used as value for the effect size. The closer to zero the effect size is, the more difficult it will be to find a significant effect and hence the larger the minimum sample size will be. To obtain some insight into these parameter values, a small pilot DCE study—for example with 20–40 respondents—may be helpful.

3.1.5 DCE Design

The large literature on efficient design generation indicates the importance of the design in getting accurate estimates and powerful tests. The DCE design is described by the number of choice sets, the number of alternatives per choice set, the number of attributes, and the combination of the attribute levels in each choice set. The DCE design has a direct influence on the AVC, which affects the estimation precision of the parameters, and hence will have a direct influence on the minimum sample size required.Footnote 1

3.2 Sample Size Calculation for DCEs

Once all five required elements mentioned in Sect. 3.1 have been determined, the minimum required sample size for the estimated coefficients in a DCE can be calculated. First, as an intermediate part of the sample size calculation, the AVC has to be established. That is, the statistical model (Sect. 3.1.3), the initial belief on the parameter values, denoted with γ (Sect. 3.1.4), and the DCE design (Sect. 3.1.5), are all needed to infer the AVC matrix, \( \sum_{\gamma } \), of the estimated parameters. Details on how to construct the variance–covariance matrix from this information can be found, for example, in McFadden [4] for MNL and in Bliemer and Rose [41] for panel MIXL. A variance–covariance matrix is a square matrix that contains the variances and covariances associated with all the estimated coefficients. The diagonal elements of this matrix contain the variances of the estimated coefficients, and the off-diagonal elements capture the covariances between all possible pairs of coefficients. For hypothesis tests on individual coefficients, we only need the diagonal elements of \( \sum_{\gamma } \), which we denote by Σγk for the kth diagonal element.

Once the AVC, \( \sum_{\gamma } \), of the estimated parameters has been established and the confidence level (α), the power level (1−β), and the effect sizes (δ) are set, the minimum required sample size (N) for the estimated coefficients in a DCE can be calculated (see Eq. 4).

Each of the elements in this sample size calculation intuitively makes sense. In particular, with a larger effect size δ, a smaller sample size (N) will suffice to have enough power to find a significant deviation. Testing at a higher confidence level α increases z 1−α ,Footnote 2 and thus increases the minimum required sample size (N). The same holds when more statistical power is desired, as this increases z 1−β .Footnote 3 When the variance-covariance matrix contains smaller variance (\( \sum_{{\gamma {\text{k}}}} \)) the minimum sample size (N) required decreases, as the estimates will be more precise. Smaller values for \( \sum_{{\gamma {\text{k}}}} \) can be obtained from using more choice sets, more alternatives per choice set or a more efficient design.

4 Determining Required Sample Sizes for DCEs: A Practical Example

In this section, a practical example is provided to explain, step-by-step, how the minimum sample size requirement for a DCE study can be calculated. This is illustrated using R-code, which can also be found at http://www.erim.eur.nl/ecmc.

The DCE study used for this illustration concerns a DCE about patients’ preferences for preventive osteoporosis drug treatment [12]. In this DCE study, patients had to choose between drug treatment alternatives that differed in five treatment attributes: route of drug administration, effectiveness, side effects (nausea), treatment duration, and out-of-pocket costs. The DCE design was orthogonal and contained 16 choice sets. Each choice set consisted of two unlabeled drug treatment alternatives and an opt-out option.

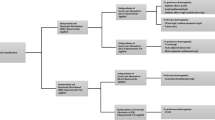

In what follows, we show in seven steps how the minimum sample size for coefficients can be calculated for the DCE on patients’ preferences for preventive osteoporosis drug treatment.

-

Step 1

Significance Level (α)

We first have to set the confidence through α. In the illustration, we choose α = 0.05. The resulting confidence level is 95 %, assuming a one-tailed testFootnote 4 (Box 1)

-

Step 2

Statistical Power Level (1−β)

The second step is to choose the statistical power level. For our illustration, we opt for a standard statistical power level of 80 % (i.e., β = 0.20, hence 1−β = 0.80) (Box 2).

-

Step 3

Statistical Model Used in the DCE Analysis

The third step is to choose the statistical model to analyze the DCE data. For our illustration, we opt for an MNL model. In the R code, this affects the way the AVC needs to be calculated, which is outlined in step 6

-

Step 4

Initial Belief About the Parameter Values

The fourth step concerns the initial beliefs about the parameter values. The DCE illustration regarding patients’ preferences for preventive osteoporosis drug treatment contains five attributes (two categorical attributes and three linear attributes) [12], resulting in eight parameters to be estimated (see Table 2 column ‘parameter label’). We use the point estimates of the parameters as our guess of the coefficients and the effect sizes δ (see Table 2 column ‘initial belief parameter value’) (Box 3)

Table 2 Alternatives, attributes and levels for preventive osteoporosis drug treatment, their parameter labels, initial belief about parameter values, and discrete-choice experiment design codes (based on de Bekker-Grob et al. [12])

-

Step 5

The DCE design

The fifth step focuses on the DCE design. The DCE design requires eight parameters to be estimated (ncoefficients = 8). Each choice set contains three alternatives (nalts = 3); that is, two drug treatment alternatives, and one opt-out alternative. The DCE design contains 16 choice sets (nchoices = 16) (Box 4)

-

The DCE design should be coded in a text-file in such a way that it can be read correctly into R. That is, the DCE design should contain one row for each alternative. So, there should be nalts × nchoices rows (see Table 3 as an example for our illustration, which contains 48 rows (i.e., 3 alternatives × 16 choice sets); rows 1–3 correspond to choice set 1, rows 4–6 correspond to choice set 2, etc.)

Table 3 DCE design Each row should contain the coded attribute levels for that alternative. See Table 3 for how the DCE design for our illustration was coded (columns A–F). For example, row 1 corresponds to the first preventive drug treatment alternative in choice set 1: a drug treatment alternative (value 1, column A) that should be taken as a tablet every week (value 1, column B1), which will result in a 5 % reduction of a hip fracture (value 5, column C) without side effects (value 0, column D), for which the drug treatment duration will be 10 years (value 10, column E) and out-of-pocket costs of €120 are required (value 120, column F). Be aware that only the DCE design (i.e., the ‘white part’ of Table 3) should be in a text file, so that it can be read correctly in R (Box 5)

-

-

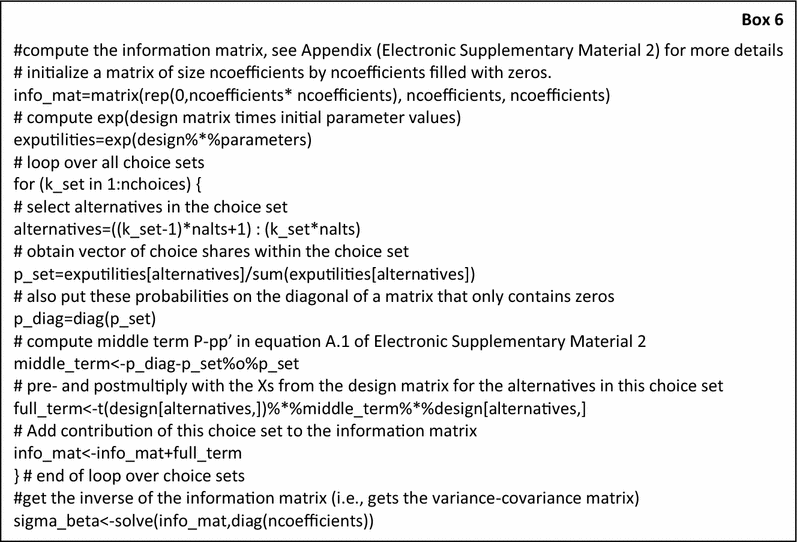

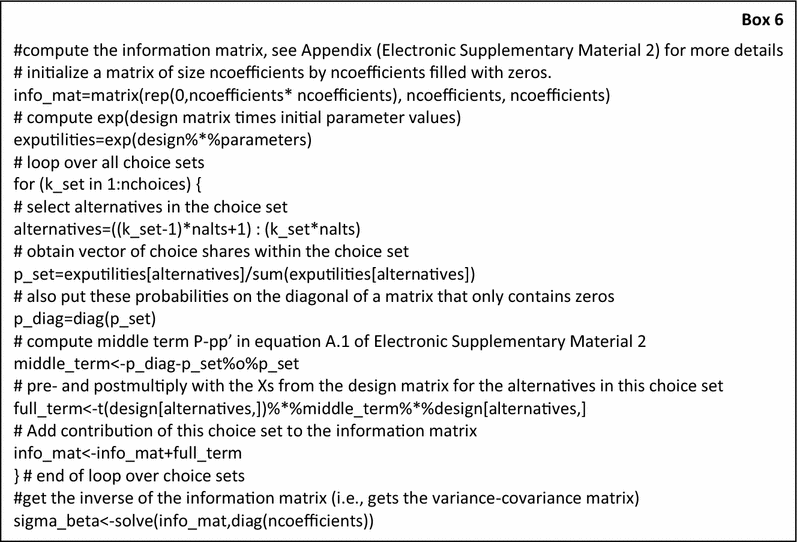

Step 6

Estimation Accuracy

Having our statistical model, our initial beliefs about the parameter values (i.e., our guess of the effect sizes) and our DCE design matrix, we are able to compute the AVC matrix (\( \sum_{\gamma } \)) (Box 6)

-

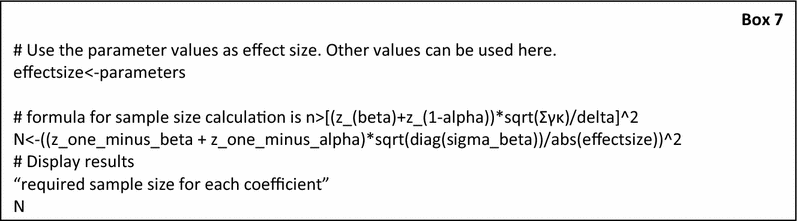

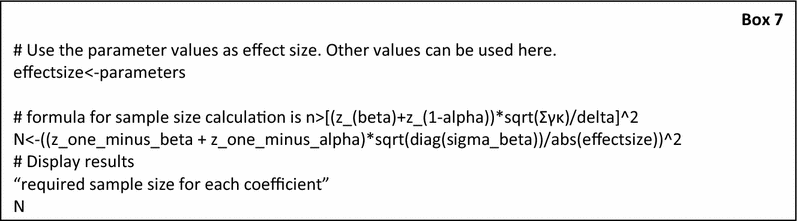

Step 7

Sample Size Calculation

The final step is to calculate the required sample size for the MNL coefficients in our DCE. Hereto we use Eq. 4 (Box 7)

-

The results of the minimum sample size required to obtain the desired power level for finding an effect when testing at a specific confidence level for each parameter are shown in Table 4. To illustrate the impact of the probability that we will find a significant effect given a specific effect size, we also computed the required sample size for the statistical power level 1−β equal to 0.6, 0.7, and 0.9. Additionally, we also computed the required sample sizes assuming a significance level α of 0.1, 0.025, and 0.01

Table 4 Minimum sample size required to obtain the desired power level 1−β for finding an effect when testing at a specific confidence level 1−α As can be seen from Table 4, one needs a minimum sample size of 190 respondents with a statistical power of 0.8 and assuming an α = 0.05, whether ‘injection every 4 months’ is significantly different from ‘tablet once a month (reference attribute level)’ (Table 4, column B2). If a smaller sample size of, for example, 111 respondents were to be used and no significant result to be found for this parameter, one has a statistical power of 0.6, assuming an α = 0.05, to conclude that respondents do not prefer ‘tablet every month’ over ‘injection every 4 months’. As a proof of principle, we compared the standard errors and confidence intervals from the actual study [12] against the predicted standard errors and confidence intervals. The results showed that they were quite similar (Table 5), which gives further evidence that our sample size calculation makes sense.

Table 5 Parameter estimates and precision from an actual discrete-choice experiment study [12] relative to those predicted by the sample size calculations

-

5 Discussion

In this paper, we have summarized how researchers have dealt with sample size calculations for health care-related DCE studies. We found that more than 70 % of the health care-related DCE studies published in 2012 did not (clearly) report whether and what kind of sample size method was used. Just 6 % of the health care-related DCE studies published in 2012 used a parametric approach for sample size estimation. Nevertheless, the parametric approaches used were not suitable as a power calculation for determining the minimum required sample size for hypothesis testing for coefficients based on DCEs. To fill in this gap, we explained the analysis needed to determine the required sample size in DCEs from a hypothesis testing perspective. That is, we clarified that the following five elements are needed before such a minimum sample size can be determined: significance level (α), statistical power level (1−β), statistical model used in the DCE analysis, initial belief about the parameter values, and the DCE design. An important feature of the resulting sample size formula is that the required sample size tends to grow exponentially. For example, when one wants a certain power level to detect an effect that is 50 % smaller, the required sample will be four times larger.

To build a bridge between theory and practice, we created a generic R-code as a practical tool for researchers to be able to determine the minimum required sample size for coefficients in DCEs. We then illustrate step-by-step how the sample size requirement can be obtained using our R-code. Although the R-code presented in this paper is for MNL only, the theory is also suitable for other choice models, such as the nested logit, mixed logit, scaled-MNL, or generalized-MNL.

Our approach for determining the minimum required sample size for coefficients in DCEs can also be extended to functions of parameters. For example, one might want to know whether patients are willing to pay a specific amount to increase effectiveness by 10 %. In order to test such a hypothesis, confidence intervals for a willingness-to-pay measure are needed. Once how these will be inferred from the limiting distribution of the parameters [42] is determined, ΣWTP (instead of Σγ) is known and the required sample size can be computed.

From a practical point of view, in health care-related DCEs, the number of patients and physicians that can be approached is often given, and sometimes rather small. Especially in these cases, our tool could indicate that power will be low. Using efficient designs (striving for small values for \( \sum_{{\gamma {\text{k}}}} \)), more alternatives per choice set, or clear wording and layout are ways to increase the power that is achieved.

The approach presented in this paper can also be used to reverse engineer the power that a specific design has for a given sample size. This can help researchers who find an insignificant result to ensure that they had sufficient power to detect a reasonably sized effect.

6 Conclusion

The use of sample size calculations for healthcare-related DCE studies is largely lacking. We have shown how sample size calculations can be conducted for DCEs when researchers are interested in testing whether a particular attribute (level) affects the choices that patients or physicians make. Such sample size calculations should be executed far more often than is currently the case in healthcare, as under-powered studies may lead to false insights and incorrect decisions for policy makers.

Notes

All aspects of our sample size calculation are conditional on the design of the experiment and the implementation in a questionnaire. The survey design will have an impact on the precision of the parameters that should be accounted for through its effect on the anticipated parameter values. Also, the model specification has an impact on the precision of the parameters.

The value of α (Sect. 3.1.1) is used to determine the corresponding quantile of the Normal distribution (z 1−α ) that is needed in the sample size calculations. The value of z 1−α for a given α can be found in the basic statistics textbooks or easily calculated in Microsoft Excel® using the formula NORMSINV(1−α). The value of z 1−α for an α of 0.05 equals 1.64.

In the computation of the sample size, we need z 1−β , the quantile of the Normal distribution with Φ(z 1−β ) = 1−β. Here again, Φ denotes the cumulative distribution function of the Normal distribution. Accordingly, the value for z 1–β for a given 1–β can be found in the basic statistics textbooks or easily calculated in Microsoft Excel® using the formula NORMSINV(1−β); e.g., assuming a statistical power level of 80 %, the value z 1−β is 0.84 [i.e., NORMSINV(0.8)].

A one-tailed test is used if only deviations in one direction are considered possible; in contrast, a two-tailed test is used if deviations of the estimated parameter in either direction from zero are considered theoretically possible. Be aware that, for a two-tailed test, the alpha level should be divided by 2 (i.e., α/2).

References

de Bekker-Grob EW, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ. 2012;21(2):145–72.

Clark MD, Determann D, Petrou S, Moro D, de Bekker-Grob EW. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32(9):883–902.

Lancaster KJ. A new approach to consumer theory. J Polit Econ. 1966;74(2):132–57.

McFadden D. Conditional logit analysis of qualitative choice behavior. In: Zarembka P, editor. Frontiers in econometrics. New York: Academic Press; 1974. p. 105–42.

Reed Johnson F, Lancsar E, Marshall D, Kilambi V, Muhlbacher A, Regier DA, et al. Constructing experimental designs for discrete-choice experiments: report of the ISPOR conjoint analysis experimental design good research practices task force. Value Health. 2013;16(1):3–13.

Louviere J, Hensher DA, Swait JD. Stated choice methods: analysis and application. Cambridge: Cambridge University Press; 2000.

Hensher DA, Rose JM, Greene WH. Applied choice analysis: a primer. Cambridge: Cambridge University Press; 2005.

Rose JM, Bliemer MCJ. Constructing efficient stated choice experimental designs. Transp Rev. 2009;29(5):587–617.

Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user’s guide. Pharmacoeconomics. 2008;26(8):661–77.

Ryan M, Gerards K, Amaya-Amaya M, editors. Using discrete choice experiments to value health and health care. Dordrecht: Springer; 2008.

Oteng B, Marra F, Lynd LD, Ogilvie G, Patrick D, Marra CA. Evaluating societal preferences for human papillomavirus vaccine and cervical smear test screening programme. Sex Transm Infect. 2011;87(1):52–7.

de Bekker-Grob EW, Essink-Bot ML, Meerding WJ, Pols HA, Koes BW, Steyerberg EW. Patients’ preferences for osteoporosis drug treatment: a discrete choice experiment. Osteoporos Int. 2008;19(7):1029–37.

Guimaraes C, Marra CA, Gill S, Simpson S, Meneilly G, Queiroz RH, et al. A discrete choice experiment evaluation of patients’ preferences for different risk, benefit, and delivery attributes of insulin therapy for diabetes management. Patient Prefer Adherence. 2010;4:433–40.

Hiligsmann M, Dellaert BG, Dirksen CD, van der Weijden T, Goemaere S, Reginster JY, et al. Patients’ preferences for osteoporosis drug treatment: a discrete-choice experiment. Arthritis Res Ther. 2014;16(1):R36.

van Dam L, Hol L, de Bekker-Grob EW, Steyerberg EW, Kuipers EJ, Habbema JD, et al. What determines individuals’ preferences for colorectal cancer screening programmes? A discrete choice experiment. Eur J Cancer. 2010;46(1):150–9.

Bessen T, Chen G, Street J, Eliott J, Karnon J, Keefe D, et al. What sort of follow-up services would Australian breast cancer survivors prefer if we could no longer offer long-term specialist-based care? A discrete choice experiment. Br J Cancer. 2014;110(4):859–67.

Kimman ML, Dellaert BG, Boersma LJ, Lambin P, Dirksen CD. Follow-up after treatment for breast cancer: one strategy fits all? An investigation of patient preferences using a discrete choice experiment. Acta Oncol. 2010;49(3):328–37.

Dixon S, Nancarrow SA, Enderby PM, Moran AM, Parker SG. Assessing patient preferences for the delivery of different community-based models of care using a discrete choice experiment. Health Expect. 2013. doi:10.1111/hex.12096.

de Bekker-Grob EW, Hofman R, Donkers B, van Ballegooijen M, Helmerhorst TJ, Raat H, et al. Girls’ preferences for HPV vaccination: a discrete choice experiment. Vaccine. 2010;28(41):6692–7.

Yeo ST, Edwards RT, Fargher EA, Luzio SD, Thomas RL, Owens DR. Preferences of people with diabetes for diabetic retinopathy screening: a discrete choice experiment. Diabet Med. 2012;29(7):869–77.

Rose JM, Bliemer MCJ. Sample size requirements for stated choice experiments. Transportation. 2013;40:1021–41.

Ryan M, Gerard K. Using discrete choice experiments to value health care programmes: current practice and future research reflections. Appl Health Econ Health Policy. 2003;2(1):55–64.

Huicho L, Miranda JJ, Diez-Canseco F, Lema C, Lescano AG, Lagarde M, et al. Job preferences of nurses and midwives for taking up a rural job in Peru: a discrete choice experiment. PLoS One. 2012;7(12):e50315.

Blaauw D, Erasmus E, Pagaiya N, Tangcharoensathein V, Mullei K, Mudhune S, et al. Policy interventions that attract nurses to rural areas: a multicountry discrete choice experiment. Bull World Health Organ. 2010;88(5):350–6.

Scott A. Eliciting GPs’ preferences for pecuniary and non-pecuniary job characteristics. J Health Econ. 2001;20(3):329–47.

Bridges JF, Dong L, Gallego G, Blauvelt BM, Joy SM, Pawlik TM. Prioritizing strategies for comprehensive liver cancer control in Asia: a conjoint analysis. BMC Health Serv Res. 2012;12:376.

Bridges JF, Gallego G, Kudo M, Okita K, Han KH, Ye SL, et al. Identifying and prioritizing strategies for comprehensive liver cancer control in Asia. BMC Health Serv Res. 2011;11:298.

Bridges JF, Mohamed AF, Finnern HW, Woehl A, Hauber AB. Patients’ preferences for treatment outcomes for advanced non-small cell lung cancer: a conjoint analysis. Lung Cancer. 2012;77(1):224–31.

Bridges JF, Searle SC, Selck FW, Martinson NA. Designing family-centered male circumcision services: a conjoint analysis approach. Patient. 2012;5(2):101–11.

Gerard K, Tinelli M, Latter S, Blenkinsopp A, Smith A. Valuing the extended role of prescribing pharmacist in general practice: results from a discrete choice experiment. Value Health. 2012;15(5):699–707.

Landfeldt E, Jablonowska B, Norlander E, Persdotter-Eberg K, Thurin-Kjellberg A, Wramsby M, et al. Patient preferences for characteristics differentiating ovarian stimulation treatments. Hum Reprod. 2012;27(3):760–9.

Manjunath R, Yang JC, Ettinger AB. Patients’ preferences for treatment outcomes of add-on antiepileptic drugs: a conjoint analysis. Epilepsy Behav. 2012;24(4):474–9.

Philips H, Mahr D, Remmen R, Weverbergh M, De Graeve D, Van Royen P. Predicting the place of out-of-hours care–a market simulation based on discrete choice analysis. Health Policy. 2012;106(3):284–90.

Robyn PJ, Barnighausen T, Souares A, Savadogo G, Bicaba B, Sie A, et al. Health worker preferences for community-based health insurance payment mechanisms: a discrete choice experiment. BMC Health Serv Res. 2012;12:159.

Rockers PC, Jaskiewicz W, Wurts L, Kruk ME, Mgomella GS, Ntalazi F, et al. Preferences for working in rural clinics among trainee health professionals in Uganda: a discrete choice experiment. BMC Health Serv Res. 2012;12:212.

Tinelli M, Ozolins M, Bath-Hextall F, Williams HC. What determines patient preferences for treating low risk basal cell carcinoma when comparing surgery vs imiquimod? A discrete choice experiment survey from the SINS trial. BMC Dermatol. 2012;12:19.

Orme B. Sample size issues for conjoint analysis studies. Sequim: Sawtooth Software Technical Paper; 1998.

Johnson R, Orme B. Getting the most from CBC. Sequim: Sawtooth Software Research Paper Series, Sawtooth Software; 2003.

Pearmain D, Swanson J, Kroes E, Bradley M. Stated preference techniques: a guide to practice. 2nd ed. Steer Davies Gleave and Hague Consulting Group. 1991.

Pedersen LB, Kjaer T, Kragstrup J, Gyrd-Hansen D. Do general practitioners know patients’ preferences? An empirical study on the agency relationship at an aggregate level using a discrete choice experiment. Value Health. 2012;15(3):514–23.

Bliemer MCJ, Rose JM. Construction of experimental designs for mixed logit models allowing for correlation across choice observations. Transp Res B Methodol. 2010;44(6):720–34.

de Bekker-Grob EW, Rose JM, Bliemer MC. A closer look at decision and analyst error by including nonlinearities in discrete choice models: implications on willingness-to-pay estimates derived from discrete choice data in healthcare. Pharmacoeconomics. 2013;31(12):1169–83.

Acknowledgments

The authors thank Marie-Louise Essink-Bot and Ewout Steyerberg for their support regarding the osteoporosis drug treatment DCE study, Domino Determann for her support regarding the identification of healthcare-related DCE studies published in 2012, and Chris Carswell and John Bridges for their invitation to write this article. None of the authors have competing interests. This study was not supported by any external sources or funds.

Author contributions

EW de Bekker-Grob designed the study, conducted the review and DCE study, contributed to the analyses, and drafted the manuscript. B Donkers designed the study, performed the formulas, R-code and analyses, and drafted the manuscript. MF Jonker contributed to the R-code, the analyses, and to the writing of the manuscript. EA Stolk contributed to the writing of the manuscript. EW de Bekker-Grob and B Donkers have full access to all of the data in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis. EW de Bekker-Grob acts as the overall guarantor.

Author information

Authors and Affiliations

Corresponding author

Additional information

E. W. de Bekker-Grob and B. Donkers contributed equally to this work.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

de Bekker-Grob, E.W., Donkers, B., Jonker, M.F. et al. Sample Size Requirements for Discrete-Choice Experiments in Healthcare: a Practical Guide. Patient 8, 373–384 (2015). https://doi.org/10.1007/s40271-015-0118-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40271-015-0118-z