Abstract

Introduction

Simulation training is an important component of medical education. Previous studies have shown diagnostic simulation training for direct and indirect funduscopy to be an effective training method. In this prospective controlled trial, we investigated the effect of simulator-based fundus biomicroscopy training.

Methods

After completing a 1-week ophthalmology clerkship, medical students at Saarland University Medical Center (n = 30) were block-randomized into two groups: the traditional group received supervised training examining the fundus of classmates using a slit lamp; the simulator group was trained using the Slit Lamp Simulator. All participants had to pass an Objective Structured Clinical Examination (OSCE); two masked ophthalmological faculty trainers graded the students’ skills when examining a patient’s fundus using a slit lamp. A subjective assessment form and post-assessment surveys were obtained. Data were described using median (interquartile range [IQR]).

Results

Twenty-five students (n = 14 in the simulator group, n = 11 in the traditional group) were eligible for statistical analysis. Interrater reliability was significant for the overall score as well as for all subtasks (p ≤ 0.002) except subtask 1 (p = 0.12). The overall performance of medical students in the fundus biomicroscopy OSCE was rated significantly higher in the simulator group (27.0 [5.25]/28.0 [3.0] vs. 20.0 [7.5]/16.0 [10.0]) by both observers, with p = 0.003 for observer 1 and p < 0.001 for observer 2 (interrater reliability: p < 0.001). For all subtasks, the scores given to students trained using the simulator were consistently higher than those given to students trained traditionally. The students’ post-assessment forms confirmed these results. According to the students, the practical background of fundus biomicroscopy (p = 0.04) as well as the identification (p < 0.001) and localization (p < 0.001) of pathologies could be learned significantly better with the simulator.

Conclusions

Traditional supervised methods are well complemented by simulation training. Our data indicate that the simulator helps with first patient contacts and enhances students’ capacity to examine the fundus biomicroscopically.

Simulator-based training can enhance student education without additional burden on classmates or patients.

A significant effect of simulator-based training was already proven for direct and indirect ophthalmoscopy but the effect of fundus biomicroscopy training has not yet been studied.

The aim of this study was to evaluate the effectiveness of simulator-based fundus biomicroscopy training with the slit lamp.

Training on the Eyesi Slit Lamp Simulator significantly improved students’ examination techniques in fundus biomicroscopy and their ability to locate pathological fundus lesions.

Further studies should be conducted to evaluate whether longer training periods result in superior technical skills and findings, as well as a significant improvement in correct clinical diagnosis.

Introduction

Ophthalmoscopy has a long history in ophthalmology [1, 2]. In contrast to direct ophthalmoscopy [3], indirect ophthalmoscopy [4], including biomicroscopic funduscopy with the slit lamp [5, 6], offers a binocular stereoscopic fundus evaluation with a wide field of view [7]. The different examination options should also be offered to students, not only to increase their interest in this special field of ophthalmology but also to consolidate their knowledge of ophthalmological diagnoses (e.g., fundus hypertonicus, diabetic retinopathy) with relevance to almost all other medical subspecialties [8].

This is crucial, as previous research demonstrated that medical students and practicing physicians experience a lack of confidence in their ophthalmoscopic abilities due to inadequate training during medical school [9,10,11].

Well-developed medical specialist training is necessary to ensure highly qualified physicians for the future [12]. Nevertheless, a high workload combined with a shortage of time and staff in clinical settings often makes it difficult to introduce medical students or residents to the various examination methods in a structured and well-supervised way [13]. Many students feel that they have not received adequate training with regard to conducting physical examinations, making a diagnosis, and establishing the correct management of various diseases [14].

Additionally, clinical medicine is increasingly focused on patient safety and quality, and patients may be worried that students and residents are training on them [15]. Especially in the early stages of education, ethical concerns arise about using patients for training purposes. Physical examination of peers is an alternative method to improve students' skills, but usually only healthy eyes can be inspected and willingness to be examined is low [16].

Therefore, simulator-based training can offer a useful complement to reduce barriers to medical education without extra strain on classmates or even patients. The impact and educational utility of simulator-based medical education have grown in the past 40 years and are likely to increase in the future [17]. Lee et al. published a systematic review and analyzed studies with different training modalities: virtual reality, wet lab, dry-lab models, and e-learning [18]. Models with the strongest validity evidence were the Eyesi Surgical, Eyesi Direct Ophthalmoscope, and Eye Surgical Skills Assessment Test. Regarding the Eyesi training simulators (Haag-Streit Simulation, Mannheim, Germany), various studies have already demonstrated a significant effect for both surgical [19,20,21] and diagnostic skills in direct and indirect ophthalmoscopy [22,23,24,25]. In 2022, the Eyesi Slit Lamp Simulator was launched on the market. The simulator is integrated into a BQ 900 slit-lamp model from Haag-Streit Diagnostics and therefore offers all the functions of a real slit lamp: anterior chamber examination, gonioscopy, and fundus biomicroscopy (Fig. 1).

Students can practice posterior segment examinations using a 90D ophthalmoscope lens with the Eyesi Slit Lamp simulator. To visualize the retina, they must insert the 90D lens into the virtual slit beam and then slowly move it towards the model eye until they see a focused image through the slit-lamp microscope

After demonstrating a significant training effect for the Eyesi Slit Lamp simulator in anterior chamber examination [26], we sought to evaluate the effectiveness of simulator-based fundus biomicroscopy training. The study design and subject group (students) remained unchanged to ensure equal conditions for both studies. However, we included an additional OSCE observer to assess interrater reliability.

Methods

This prospective randomized controlled trial was conducted in accordance with the Declaration of Helsinki. The Ethics Committee of the Landesärztekammer Hessen reviewed the study (2022-3167-AF) and determined that ethical approval is not required. All study participants provided informed written consent. Additionally, consent for publication of clinical pictures was given by the patient volunteers.

In total, 243 medical students in their eighth semester at Saarland University Medical Center were invited to participate in the study via e-mail. Eligibility was restricted to those who had previously completed a comprehensive 1-week ophthalmology clerkship at the same institution, consisting of lectures and practical sessions on ophthalmologic examination and imaging methods, as well as ophthalmologic diseases and emergencies. Thirty positive responses were obtained. Block randomization was used. Students were assigned to two treatment groups: the traditional training group and the simulator-based training group with a 1:1 allocation ratio. A block size of six students was set. Out of 30 students, two students withdrew and 28 met the inclusion criteria and were included in the study (Supplemental Fig. S1). Written informed consent was obtained from all participants prior to enrollment.
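The allocation procedure described above (permuted blocks of six, 1:1 ratio) can be sketched as follows. This is only an illustrative sketch, not the authors' actual randomization tool: the function name `block_randomize`, the arm labels, and the seed are assumptions introduced here.

```python
import random

def block_randomize(n_participants, block_size=6,
                    arms=("simulator", "traditional"), seed=None):
    """Permuted-block randomization with equal allocation within each block.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    per_arm = block_size // len(arms)
    assignments = []
    while len(assignments) < n_participants:
        # Each block contains every arm equally often, in random order.
        block = list(arms) * per_arm
        rng.shuffle(block)
        assignments.extend(block)
    # A final partial block is truncated, which may leave a small imbalance.
    return assignments[:n_participants]

# Example: 30 responders allocated in five complete blocks of six.
allocation = block_randomize(30, block_size=6, seed=2022)
```

With complete blocks, the two arms stay balanced after every sixth participant; withdrawals after allocation, as reported in this study, can still leave the analyzed groups unequal.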

Five 60-min courses for 2–3 students were held, both for the simulator-based training group and for the traditional training group. Each training group was guided by one ophthalmology-certified faculty instructor.

The students were given the opportunity to complete the alternative training after the OSCE examination, in which all participated.

Traditional Training Structure

The traditional training began with learning essential examination techniques to perform a systematic fundus examination. This included adjusting the refraction on the eyepieces and the interpupillary distance, positioning the patient correctly at the slit lamp, adjusting the light to the patient's eye, optimally positioning the 90D lens between the patient's eye and the slit, performing lateral and vertical translation, adapting slit width and slit length, and interpreting the inverted fundus image. In the next step, the essential fundus structures had to be localized and described. These structures include the macula, optic disc, superior and inferior temporal vascular arcade, upper and lower nasal vascular arcade, and the equatorial region/outer periphery (vortex veins). The structures were identified through mutual examinations. For this purpose, at least one eye of each student had to be dilated. For the last part of the training, the faculty instructor presented clinical images relevant to the students' curriculum and discussed fundus pathologies, including glaucoma, papilledema, dry AMD, wet AMD, diabetic retinopathy (background and high risk), toxoplasmosis, branch vein occlusion, and hypertensive retinopathy.

Simulator-Based Training Structure

The simulator-based course started with abstract tasks to learn the different examination techniques. To this end, each slit-lamp function was set as a separate task using a gamified teaching method: students had to find and identify common items found in a virtual ophthalmologist’s office. Once a level was completed, the acquired function was retained for subsequent tasks. This step-by-step approach allowed students to become familiar with more and more slit-lamp functions as they progressed through the course. After completing all selected abstract tasks for device handling, the students had to examine and diagnose virtual patients suffering from the same diseases, with associated fundus pathologies, that were shown as clinical pictures to the traditional group (Fig. 2). Each virtual patient was presented with a brief medical history, current symptoms, as well as their visual acuity and intraocular pressure values.

a Glaucomatous optic nerve (left) taken during the simulator course compared to a real fundus biomicroscopy image (right). b Toxoplasmosis scar (left) taken during the simulator course compared to a real fundus biomicroscopy image (right). c Non-proliferative diabetic retinopathy (left) taken during the simulator course compared to a real fundus biomicroscopy image (right). d Dry age-related macular degeneration (left) taken during the simulator course compared to a real fundus biomicroscopy image (right)

OSCE

An OSCE for fundus biomicroscopy was developed to assess the skills of both training groups. We used the OSCE form for direct ophthalmoscopy developed by Boden et al. [22]. This form was previously adapted for slit-lamp examination in our prior study analyzing the impact of anterior chamber examination using the Eyesi Slit Lamp [26]. For this study, the form was extended to include fundus biomicroscopy (Table 1).

The following rubrics were created to guide the masked observer in assigning scores for the student’s fundus biomicroscopy skills: preparation for fundus examination (max six points), finding relevant structures of the fundus (max five points), describing the structures found (max eight points), correct diagnosis of the patient volunteer’s disease (max three points), explanation of ophthalmic procedures and appropriate application such as pupil dilation (max six points), and the correct diagnosis of five clinical images randomly chosen from nine images (max five points). For this purpose, images of the same disease patterns were used in the simulator and traditional course, but varied fundus photographs were taken for the OSCE. Scores per task were assigned depending on the difficulty level and importance for the fundus biomicroscopy examination (Table 1). All the rubrics were added up to calculate the total score (max 33 points) for the overall performance.
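The way the six rubrics add up to the 33-point total can be illustrated with a short sketch. The maximum points are taken from the paragraph above; the dictionary keys, the function name `osce_total`, and the example sub-scores are hypothetical and do not come from the study data.

```python
# Maximum points per rubric, as listed in the text (keys are assumed names).
MAX_POINTS = {
    "preparation": 6,
    "finding_structures": 5,
    "describing_structures": 8,
    "patient_diagnosis": 3,
    "procedure_explanation": 6,
    "image_diagnosis": 5,
}

def osce_total(subscores):
    """Validate each rubric score against its maximum and return the total."""
    if set(subscores) != set(MAX_POINTS):
        raise ValueError("subscores must contain exactly the six rubrics")
    for task, points in subscores.items():
        if not 0 <= points <= MAX_POINTS[task]:
            raise ValueError(f"{task}: {points} outside 0..{MAX_POINTS[task]}")
    return sum(subscores.values())

# Hypothetical student, for illustration only.
example = {
    "preparation": 5,
    "finding_structures": 4,
    "describing_structures": 6,
    "patient_diagnosis": 3,
    "procedure_explanation": 6,
    "image_diagnosis": 4,
}
total = osce_total(example)
percent = 100 * total / sum(MAX_POINTS.values())
```

Expressing the total as a percentage of the maximum makes scores comparable with OSCE forms that use a different point scale.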

The students’ OSCE performance was evaluated by two independent observers. The first masked observer (observer 1) was a resident of the Department of Ophthalmology of the Saarland University Medical Center in his fourth year.

The second masked observer (observer 2) was a professor of ophthalmology with over 20 years of experience in medical student training, who volunteered to assess the OSCE. The examination room was on a separate floor to ensure that the observers had no contact with students during the program. Ten volunteers were recruited from the inpatients for the OSCE. To eliminate any potential exchange of diagnostic information among students, each subgroup examined a separate patient.

Post-assessment Survey

After attending the OSCE and both courses, each student filled out a post-assessment survey which was designed following the principles of survey tool development from the Medical Didactics course of the Goethe University Frankfurt. The course supervisor ensured that each student responded only once. The following categories were collected and evaluated: usefulness of the fundus biomicroscopy simulator course, increase in knowledge, effectiveness of abstract tasks, training of fundus biomicroscopy techniques, training of necessary techniques in the context of pathologies, three-dimensional localization of pathologies, efficacy of multimedia learning of pathologies, efficacy of independent examination training, preparation for a real fundus biomicroscopy examination, ability to recognize diseases with fundus biomicroscopy after simulator training, proportion of medical training on simulators. The students were asked to assign point values to each question. The questionnaire responses were scored on a scale from 1 to 7. A score of 1 represented low importance, whereas 7 represented very high importance.

Additionally, a self-assessment form was distributed to the students to compare the effectiveness of the simulator versus the traditional course with regard to gaining curriculum-relevant skills such as applying fundus biomicroscopy techniques, examining a healthy eye, recognizing a pathology, and assessing pathology location. Open-ended queries explored the benefits of fundus biomicroscopy simulator training and traditional training, as well as proposals for improvement.

Data and Statistical Analysis

The medical student volunteers received written and oral information about the study and were informed of their right to withdraw their participation at any time. The study data were only accessible to the authors and confidentiality was maintained by pseudonymizing the materials of the OSCE examination and survey forms. The data were analyzed using Excel, IBM SPSS Statistics v.28, and BiAS for Windows v. 11.12. A Fisher’s exact test was performed to investigate the homogeneity of the two training groups.

The primary outcome measures were the exam grades of both the simulator and traditional groups. Initially, the scores were tested for normal distribution using the Shapiro–Wilk test. As the scores were not normally distributed, the statistical analysis relied on the exact Wilcoxon–Mann–Whitney U test to identify differences between the objective OSCE scores of the simulator and traditional groups [27, 28]. The data were described using median (interquartile range [IQR]).
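For readers unfamiliar with the rank-based comparison, the following sketch shows how the U statistic is obtained from mid-ranks. It is a simplified illustration, not the exact test used in the study (which was run in statistical software): it computes only the statistic and a normal-approximation z value without tie correction, and all function names and the toy scores are assumptions.

```python
from math import sqrt

def midranks(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks

def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two independent samples."""
    ranks = midranks(list(x) + list(y))
    n1, n2 = len(x), len(y)
    rank_sum_x = sum(ranks[:n1])
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    return u_x, n1 * n2 - u_x

def z_from_u(u, n1, n2):
    """Normal approximation of U (no tie correction; illustration only)."""
    mean = n1 * n2 / 2
    sd = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean) / sd

# Toy scores, not study data.
u_sim, u_trad = mann_whitney_u([27, 28, 30], [20, 16, 22])
z = z_from_u(u_sim, 3, 3)
```

For small samples with ties, as in this trial, an exact or permutation-based p value from dedicated software is preferable to the approximation shown here.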

Additionally, Rosenthal's effect size was calculated, with values of 0.1 indicating a small effect, 0.3 indicating a medium effect, and 0.5 indicating a large effect [29]. The Spearman correlation coefficient was computed between the masked observers for interrater reliability (IRR), using a two-sided test with Edgeworth approximation. Secondary outcomes included students’ subjective assessment of the efficacy of the traditional versus the simulator course. Binomial tests were used for comparison. Post-assessment surveys were analyzed descriptively, with frequencies presented in contingency tables. A significance level of p < 0.05 was assumed for all tests.
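As a brief illustration of the effect size mentioned above, the sketch below assumes the commonly used form r = |z| / sqrt(N), where z is the standardized test statistic of the rank test and N the total number of observations. The function names, the "negligible" label below 0.1, and the example z value are assumptions for illustration, not values from this study.

```python
from math import sqrt

def rosenthal_r(z, n_total):
    """Effect size r = |z| / sqrt(N) for a rank-based two-group test."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return abs(z) / sqrt(n_total)

def classify_effect(r):
    """Map r to the thresholds quoted in the text (0.1, 0.3, 0.5)."""
    if r >= 0.5:
        return "large"
    if r >= 0.3:
        return "medium"
    if r >= 0.1:
        return "small"
    return "negligible"  # label for r < 0.1 is an assumption

# Hypothetical z value with the 25 analyzed students.
r = rosenthal_r(3.0, 25)
label = classify_effect(r)
```

Because r is scaled by the sample size, it allows effects to be compared across subtasks even when the p values differ between observers.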

Results

A total of 28 medical students in their eighth semester were enrolled in the study and randomized to simulator-based (n = 14) or traditional (n = 14) training.

Out of 28 students, two were unable to complete the entire course, including the OSCE, and one had to be excluded from the statistical analysis due to a significant language barrier. Twenty-five students [simulator group (n = 14), traditionally trained group (n = 11)] remained for statistical analysis.

Regarding the characteristics of the simulator versus the traditionally trained group (Table 2), there was no statistically significant difference in prior fundus biomicroscopy experience (p = 0.18), previous experience with the subject “ophthalmology” (p = 0.34) or presence of own eye diseases (p = 0.66). Furthermore, the planned subspecialty for medical residency is listed in Table 2.

The overall performance (total score) of the medical students in the fundus biomicroscopy OSCE was rated significantly higher in the simulator group (27.0 [5.25]/28.0 [3.0] vs. 20.0 [7.5]/16.0 [10.0]) by both observers, with p = 0.003 for observer 1 and p < 0.001 for observer 2 (interrater reliability: p < 0.001). The highest overall performance score (33.0/33.0) was assigned by both observers to the same student, a student in the simulator group. Table 3 summarizes the scores assigned to the students in each group.

The scores given to students trained on simulators were consistently higher than those given to students trained traditionally. Interrater reliability was significant for all subtasks (p ≤ 0.002) except subtask 1 “preparation for fundus examination” (p = 0.12). For this subtask, no significant difference could be found by observer 1 (5.5 [2.0] vs. 5.0 [2.0], p = 0.85), in contrast to observer 2 (5.0 [1.0] vs. 3.0 [2.0], p < 0.001).

For subtask 2 “finding of relevant fundus structures”, statistical significance was narrowly missed for observer 1 (3.5 [1.86] vs. 2.0 [1.0], p = 0.06), but was reached for observer 2 (4.0 [0.75] vs. 1.0 [1.5], p < 0.001); interrater reliability: p = 0.002.

Both observers scored the simulator group significantly higher (p = 0.009/p < 0.001) for subtask 3 “correct description of the structures found” (5.0 [1.75]/6.0 [1.75] vs. 4.0 [2.0]/3.0 [3.0]); interrater reliability: p < 0.001.

For subtask 4 “accurate diagnosis of the examined patient”, statistical significance was reached for observer 1 (3.0 [0.75] vs. 0.0 [1.75], p = 0.02), but narrowly missed for observer 2 (3.0 [0.75] vs. 1.0 [2.0], p = 0.05); interrater reliability: p < 0.001.

Regarding subtask 5 “comments on students’ examination approach”, no significant difference was found for the scores assigned by observer 1 (6.0 [0.0] vs. 6.0 [2.0], p = 0.13), in contrast to observer 2 (6.0 [0.0] vs. 4.0 [2.0], p = 0.001); interrater reliability: p < 0.001.

No significant difference between the simulator and traditional group was seen for subtask 6 “correct recognition of diseases on five clinical fundus pictures” (4.25 [0.88]/5.0 [1.0] vs. 4.0 [1.0]/4.0 [1.5]) for both observers with an interrater reliability of p < 0.001.

The students’ self-assessment forms showed similar subjective results. Students felt significantly better trained in the use of biomicroscopy and its practical background (p = 0.04), in the detection of fundus pathologies (p < 0.001) and in the correct localization of pathologies using the biomicroscopy simulator (p < 0.001).

Learning how to perform an examination of a healthy eye showed no statistical difference between the two learning methods (p = 0.42).

All 25 students returned their questionnaires. Point values for each question were scored on a scale from 1 to 7 (from 1 = “does not apply at all” to 7 = “fully applies”). Both groups, the simulator group, which was trained on the slit lamp simulator prior to taking the OSCE, and the traditional group, which received simulator-based slit lamp training after taking the OSCE, reported a primarily positive experience with the slit lamp simulator course and would recommend it to others (7.0 [0.0]). They noticed an increase in knowledge over the training period (7.0 [0.0]), the abstract tasks helped them to get a better feeling for the procedure (7.0 [0.0]), and they felt able to understand the biomicroscopy techniques in general (7.0 [1.0]) as well as in the context of pathologies (7.0 [1.0]). They gained a better sense of the location of the pathologies (7.0 [0.0]) and their typical manifestation (7.0 [0.0]). As a result, they felt more prepared to identify diseases using fundus biomicroscopy after the simulator training (7.0 [1.0]). The interactive multimedia learning approach helped them memorize the different fundus pathologies (7.0 [0.0]). The majority would like to have more simulated medical training during their medical studies (7.0 [0.0]). The detailed results can be found in Table 4.

As stated in the open field responses, the students felt they needed more simulator training time to be adequately prepared to perform fundus biomicroscopy on real patients (6.0 [1.0]).

Based on the open field responses, other major topics among the stated advantages of simulator training were the ability to learn to visualize simulated fundus pathologies and not just inspect healthy eyes during the mutual examinations (14 [56.0%] of 25 responses), as well as the ability to train unrestrictedly without burdening patients or fellow students (12 [48.0%] of 25 responses). Additionally, eight of 25 [32.0%] students reported that they could improve their proficiency in using the slit lamp and 90D lens more quickly by receiving direct feedback from the simulator on their settings and the fundus structures found.

The primary benefit of examining real patients during slit-lamp training, as reported by 20 out of 25 respondents [80.0%], was the opportunity to interact with patients, which helped to alleviate inhibitions and fears associated with real patient contact. The students were only able to practice positioning the patient's head in front of the real slit lamp, as the mannequin's head is already attached to the simulator. This was reported by five out of 25 respondents (20%).

Discussion

Various publications have shown the added value of simulator training compared to e-learning or labs [13, 18, 30,31,32,33]. In virtual reality, a non-existing environment is simulated, whereas augmented reality involves haptic elements from the real world to enhance the experience [34]. In a computerized literature search using PubMed, we found previous studies that investigated the effect of training on the Eyesi Direct and Indirect Ophthalmoscope, but no study that dealt with the effect of fundus biomicroscopy training with the Slit Lamp Simulator, launched in 2022.

For direct ophthalmoscopy training, studies have shown that examination technique improves with the use of a simulator as a supplemental learning tool.

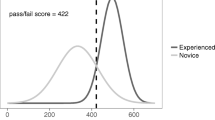

Boden et al. showed that the simulator-trained group achieved significantly higher scores compared to the traditionally trained group [22]. They randomized 34 medical students during their ophthalmological internship for a 45-min classical and simulator training following a 5-min introduction. The traditionally trained group achieved a learning success rate of 78% on the subsequent OSCE, while the simulator-based group achieved a higher score of 91% with less variation in all sub-disciplines. These results are comparable to our study; here the success rate of the traditionally trained group was 62% for observer 1 and 52% for observer 2, whereas the simulator-based group achieved a significantly higher score of 80% for observer 1 and 85% for observer 2. Overall, the average total score was slightly lower for both groups. This could be attributed to the more challenging examination technique of indirect fundus biomicroscopy in comparison to direct funduscopy.

Boden et al. also underlined the advantages of patient- and instructor-independent training possibilities, i.e., a reduction of light exposure for patients and test subjects, as well as a standardized and controlled mediation of physiological and pathological findings.

In Howell and associates’ study, medical student volunteers underwent a longer training period with the direct ophthalmoscope simulator [24]. After a 1-h didactic instruction course, 17 students were randomized to an additional hour of training on a direct ophthalmoscope simulator, and another 16 students to a supervised practice examining classmates. After a 1-week independent student practice using the assigned training methods, masked ophthalmologist observers assessed the students’ ophthalmoscopy skills. They identified better performance among students in the simulator-trained group regarding three measures: longer independent practice time, technique (ability to handle a direct ophthalmoscope and conduct an examination) with p = 0.034, and, based on the results of one patient examination, the ability to locate fundus lesions accurately (p = 0.013). An improved practical technique for fundus biomicroscopy following simulation training could also be shown in the present study. The simulator trained group was able to better visualize fundus structures (observer 1 p = 0.06; observer 2 p < 0.001) and to describe typical medical findings (observer 1 p = 0.009; observer 2 p < 0.001). Training time was predetermined in our study, but in the open field questions, students also requested longer training times for fundus biomicroscopy with the Eyesi Slit Lamp simulator.

Longer training periods could also be necessary to demonstrate not only superior technical skills and findings, as mentioned above, but also a significant improvement regarding the correct clinical diagnosis.

Learning practical skills is much easier for direct ophthalmoscopy than for indirect ophthalmoscopy. In a previous study using the Eyesi Direct and Eyesi Indirect simulator, we demonstrated improved management of simulated clinical cases after a 4-h training period for direct and indirect ophthalmoscopy. However, statistical significance was only observed for direct ophthalmoscopy [23]. The present study found similar results, with significantly better technical skills and medical findings but no statistical difference in diagnosing diseases. The limited duration of the course may have contributed to this issue. A more extended training period, lasting several weeks and providing opportunities to practice and repeat the identification of various pathologies, could enhance students’ ability to identify correct and differential diagnoses.

The technical skills of the simulator group were significantly better compared to the traditional group. This may be attributed to the direct objective performance assessment provided by the simulator regarding the students’ settings and fundus structures found, as well as the design of the tiers, which are methodically built on one another. Training units with the Eyesi Slit Lamp’s comprehensive and modular curriculum to examine and diagnose simulated fundus pathologies (other than training on healthy eyes of fellow students) and the ability to learn unrestrictedly without burdening patients or fellow students (e.g., by dilating the pupil) were also seen as advantages by the students, as stated in their open field responses.

The strengths of our study include its randomized design, comparing fundus biomicroscopy simulator training to traditional training allowing equal training times for both groups. All attendees completed a 1-week ophthalmology clerkship first to ensure equal prerequisites. An objective assessment of acquired skills was performed by two masked observers to prove interrater reliability. A significant interrater reliability could be shown for all tasks except for “preparation for fundus examination”, including rather soft evaluation criteria such as the correct positioning of the patient, the slit light, and the 90D lens.

Patient volunteers were used for the OSCE in order to verify the transferability of examining simulated patients on real patients. In addition to the objective results, the study also evaluated the subjective assessment of the effectiveness of simulated fundus biomicroscopy training. The subjective assessment corresponded to the objective results. Students reported feeling better prepared for everyday clinical practice and requested more simulation training in the future.

The limitations of this study include the relatively small number of student volunteers and the limited training time. Nevertheless, statistically significant improvements in practical techniques and localization of fundus lesions were observed for the simulator group compared to the traditional group. However, no significant improvement was observed in diagnosing patients. The students reported subjectively that they were able to learn pathologies more effectively using the simulation slit lamp. It is recommended that further studies investigate the potential benefits of longer training periods, e.g., over weeks or months. The scoring system of our study was adapted from the direct ophthalmoscopy OSCE template of Boden et al. [22]. However, it remains a non-validated scoring system. The validity evidence for the slit-lamp simulator has to be evaluated carefully along existing formal validation frameworks [35]. So far, the Eyesi Indirect Ophthalmoscope Simulator [36] and Eyesi Surgical Simulator [37,38,39] have been verified for construct and face validity in former studies.

Overall, simulation tools can enhance the possibilities of student training. They can serve as an alternative to real patients. As an advantage, trainees can make mistakes and learn from them without the fear of harming the patient [31]. The students can train systematically in a self-guided manner according to their own learning speed and can subjectively develop a significantly higher level of confidence after the simulation training [40]. Based on our experience in this and previous studies [22, 23, 26, 41], a lecture on simulator training was included in the 1-week ophthalmology clerkship for students at Saarland University, Germany, and well-attended simulator training courses are offered on a voluntary basis 1 day after the clinical clerkship.

Conclusions

In summary, our results demonstrate that training on the Eyesi Slit Lamp Simulator significantly enhances practical skills in fundus biomicroscopy and thereby the ability to locate fundus lesions accurately. Further studies are needed to verify whether an extended training period of weeks or months would result in improved diagnosis. Students also subjectively reported a significant improvement in practical skills.

The Eyesi Slit Lamp Simulator can be a valuable supplement to traditional training methods. Students can independently train fundus biomicroscopy without the need for suitable patients or a constantly present instructor and thus become better prepared for everyday clinical practice.

Data Availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Keeler CR. The ophthalmoscope in the lifetime of Hermann von Helmholtz. Arch Ophthalmol. 2002;120(2):194–201.

Pearce JM. The ophthalmoscope: Helmholtz’s Augenspiegel. Eur Neurol. 2009;61:244–9.

Orlans HO. Direct ophthalmoscopy should be taught within the context of its limitations. Eye (Lond). 2016;30:326–7.

Jacklin HN. 125 years of indirect ophthalmoscopy. Ann Ophthalmol. 1979;11(643–6):649–50.

Kercheval DB, Terry JE. Essentials of slit lamp biomicroscopy. J Am Optom Assoc. 1977;48:1383–9.

Hruby K. Biomicroscopy of the ocular fundus with the slit lamp. Klin Monbl Augenheilkd Augenarztl Fortbild. 1958;132:244–5.

Cordero I. Understanding and caring for an indirect ophthalmoscope. Community Eye Health. 2016;29:57.

Mackay DD, Garza PS, Bruce BB, Newman NJ, Biousse V. The demise of direct ophthalmoscopy: a modern clinical challenge. Neurol Clin Pract. 2015;5:150–7.

Wu EH, Fagan MJ, Reinert SE, Diaz JA. Self-confidence in and perceived utility of the physical examination: a comparison of medical students, residents, and faculty internists. J Gen Intern Med. 2007;22:1725–30.

Shuttleworth GN, Marsh GW. How effective is undergraduate and postgraduate teaching in ophthalmology? Eye (Lond). 1997;11:744–50.

Stern GA. Teaching ophthalmology to primary care physicians. The Association of University Professors of Ophthalmology Education Committee. Arch Ophthalmol. 1995;113:722–4.

Seitz B, Turner C, Hamon L, et al. Leadership in ophthalmology training: opportunities and risks of medical specialist education. Ophthalmologie. 2023;120:906–19.

Teagle AR, George M, Gainsborough N, Haq I, Okorie M. Preparing medical students for clinical practice: easing the transition. Perspect Med Educ. 2017;6:277–80.

Van Way CW. Thoughts on medical education. Mo Med. 2017;114:417–8 (Erratum in: Mo Med. 2018; 115:28).

Okuda Y, Bryson EO, DeMaria S Jr, et al. The utility of simulation in medical education: what is the evidence? Mt Sinai J Med. 2009;76:330–43.

Burggraf M, Kristin J, Wegner A, et al. Willingness of medical students to be examined in a physical examination course. BMC Med Educ. 2018;18:246.

McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ. A critical review of simulation-based medical education research: 2003–2009. Med Educ. 2010;44:50–63.

Lee R, Raison N, Yan W, et al. A systematic review of simulation-based training tools for technical and non-technical skills in ophthalmology. Eye (Lond). 2020;34:1737–59.

Ferris JD, Donachie PH, Johnston RL, Barnes B, Olaitan M, Sparrow JM. Royal College of Ophthalmologists’ National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br J Ophthalmol. 2020;104:324–9.

Jacobsen MF, Konge L, Bach-Holm D, et al. Correlation of virtual reality performance with real-life cataract surgery performance. J Cataract Refract Surg. 2019;45:1246–51.

McCannel C, Reed D, Goldman DR. Ophthalmic surgery simulator training improves resident performance of capsulorhexis in the operating room. Ophthalmology. 2013;120:2456–61.

Boden KT, Rickmann A, Fries FN, et al. Evaluation of a virtual reality simulator for learning direct ophthalmoscopy in student teaching. Ophthalmologe. 2020;117:44–9.

Deuchler S, Sebode C, Ackermann H, et al. Combination of simulation-based and online learning in ophthalmology: efficiency of simulation in combination with independent online learning within the framework of EyesiNet in student education. Ophthalmologe. 2022;119:20–9.

Howell GL, Chávez G, McCannel CA, et al. Prospective, randomized trial comparing simulator-based versus traditional teaching of direct ophthalmoscopy for medical students. Am J Ophthalmol. 2022;238:187–96.

Rai AS, Rai AS, Mavrikakis E, Lam WC. Teaching binocular indirect ophthalmoscopy to novice residents using an augmented reality simulator. Can J Ophthalmol. 2017;52:430–4.

Deuchler S, Dail YA, Koch F, et al. Efficacy of simulator-based slit lamp training for medical students: a prospective, randomized trial. Ophthalmol Ther. 2023;12:2171–86.

Sachs L. Angewandte Statistik. 11th ed. Berlin: Springer; 2003.

Hollander M, Wolfe DA. Nonparametric statistical methods. New York: Wiley; 1999.

Rosenthal R. Meta-analytic procedures for social research. Applied Social Research Methods Series 6. Newbury Park: Sage; 1991.

Bienstock J, Heuer A. A review on the evolution of simulation-based training to help build a safer future. Medicine (Baltimore). 2022;101:e29503.

Al-Elq AH. Simulation-based medical teaching and learning. J Fam Community Med. 2010;17:35–40.

Ayaz O, Ismail FW. Healthcare simulation: a key to the future of medical education—a review. Adv Med Educ Pract. 2022;13:301–8.

Serna-Ojeda JC, Graue-Hernández EO, Guzmán-Salas PJ, Rodríguez-Loaiza JL. Simulation training in ophthalmology. Gac Med Mex. 2017;153:111–5.

Brigham TJ. Reality check: basics of augmented, virtual, and mixed reality. Med Ref Serv Q. 2017;36:171–8.

Cook DA, Hatala R. Validation of educational assessments: a primer for simulation and beyond. Adv Simul (Lond). 2016;1:31.

Chou J, Kosowsky T, Payal AR, Gonzalez Gonzalez LA, Daly MK. Construct and face validity of the Eyesi Indirect Ophthalmoscope Simulator. Retina. 2017;37:1967–76.

Colné J, Conart JB, Luc A, Perrenot C, Berrod JP, Angioi-Duprez K. EyeSi surgical simulator: construct validity of capsulorhexis, phacoemulsification and irrigation and aspiration modules. J Fr Ophtalmol. 2019;42:49–56.

Cissé C, Angioi K, Luc A, Berrod JP, Conart JB. EYESI surgical simulator: validity evidence of the vitreoretinal modules. Acta Ophthalmol. 2019;97:e277–82.

Ahmed TM, Rehman Siddiqui MA. The role of simulators in improving vitreoretinal surgery training—a systematic review. J Pak Med Assoc. 2021;71:S106–11.

Yu JH, Chang HJ, Kim SS, et al. Effects of high-fidelity simulation education on medical students’ anxiety and confidence. PLoS ONE. 2021;13(16): e0251078.

Deuchler S, Scholtz J, Ackermann H, Seitz B, Koch F. Implementation of microsurgery simulation in an ophthalmology clerkship in Germany: a prospective, exploratory study. BMC Med Educ. 2022;22:599.

Acknowledgements

We thank all study participants as well as the patient volunteers for their involvement in the study.

Medical Writing, Editorial, and Other Assistance

The manuscript was edited for proper English language, grammar, punctuation, spelling, and overall style by Christina Turner, management assistant of the Saarland University Medical Center, Department of Ophthalmology. No funding was received for this assistance.

Funding

The Eyesi Slit Lamp simulator was provided by Haag-Streit Simulation for the duration of the study. No funding was received for the execution of the study. The journal’s Rapid Service Fee was funded by the authors.

Author information

Contributions

Conceptualization: Svenja Deuchler, Frank Koch, Berthold Seitz, Yaser Abu Dail and Claudia Buedel; methodology: Svenja Deuchler, Frank Koch, Elias Flockerzi, Tim Berger, Albéric Sneyers and Yaser Abu Dail; formal analysis: Svenja Deuchler and Hanns Ackermann; writing (original draft preparation): Svenja Deuchler, Frank Koch and Claudia Buedel; writing (review and editing): Berthold Seitz; supervision: Svenja Deuchler, Frank Koch, Elias Flockerzi and Berthold Seitz. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of Interest

Frank Koch, Berthold Seitz, Elias Flockerzi, Yaser Abu Dail, Tim Berger, Albéric Sneyers, and Svenja Deuchler are unpaid consultants for Haag-Streit Simulation. Hanns Ackermann and Claudia Buedel have no competing interests to declare.

Ethical Approval

This prospective randomized controlled trial was conducted in accordance with the Helsinki Declaration of 1964 and its later amendments. The study was evaluated by the Ethics Committee of Landesärztekammer Hessen (number 2022-3167-AF), and it was deemed that ethical approval was not required. All study participants provided informed written consent. Additionally, consent for publication of clinical pictures was given by the patient volunteers.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Deuchler, S., Dail, Y.A., Berger, T. et al. Simulator-Based Versus Traditional Training of Fundus Biomicroscopy for Medical Students: A Prospective Randomized Trial. Ophthalmol Ther 13, 1601–1617 (2024). https://doi.org/10.1007/s40123-024-00944-9
