Abstract

We propose a scheme for numerically solving hyperbolic partial differential equations (HPDEs) with given initial conditions. The operational matrix of differentiation for exponential Jacobi functions is derived, and a collocation method is then used to transform the given HPDE into a linear system of equations. The advantages of the exponential Jacobi spectral collocation method over other techniques are discussed, and the convergence and error analyses are presented in detail. The validity and accuracy of the proposed method are checked through numerical experiments.

Introduction

Hyperbolic partial differential equations (HPDEs) constitute an important subclass of partial differential equations. HPDEs are used in many disciplines of science and engineering, such as the transmission and propagation of electrical signals [1], wave propagation [2], hypoelastic solids [3], astrophysics [4], process engineering [5], acoustic transmission [6] and random walk theory [7]. HPDEs are also used to model the vibrational motion of structures (e.g., beams, machines and buildings) and form the basis of fundamental equations of atomic physics [8, 9]. Recently, the study of exact and numerical solutions of both hyperbolic and parabolic PDEs has received increasing attention [10,11,12,13,14,15].

Spectral techniques have been successfully applied to approximate the solutions of differential problems defined on unbounded domains. For problems with sufficiently smooth solutions, they exhibit exponential rates of convergence, high accuracy and low computational cost. Doha et al. [16] used a Jacobi rational spectral technique for solving Lane–Emden initial value problems, arising in astrophysics, on a semi-infinite interval. Hafez et al. [17] applied a new collocation scheme for solving hyperbolic equations of second order in a semi-infinite domain. Doha et al. [18] proposed a new spectral Jacobi rational-Gauss collocation method for solving multi-pantograph delay differential equations on the half line. Bhrawy et al. [19] solved some higher-order ordinary differential equations using a new exponential Jacobi pseudospectral method.

In this study, we use exponential Jacobi functions to solve HPDEs numerically. The operational matrices of derivatives and products of exponential Jacobi functions are derived. These matrices are combined with the collocation approach to evaluate the solutions of the HPDEs. The collocation method [20,21,22,23,24] is an effective technique for numerically approximating different kinds of equations.

The paper is organized as follows. In the next section, we present some notation and other mathematical facts. The “Operational matrix of differentiation for exponential Jacobi” section is devoted to the operational matrix of differentiation for exponential Jacobi functions. In the “Implementation of the method” section, this operational matrix is used in combination with the exponential Jacobi collocation method to solve the HPDEs. The error analysis is carried out in the “Error analysis” section. Two numerical examples are given in the “Numerical results” section. Finally, some concluding remarks are given in the “Conclusion” section.

Mathematical preliminaries

Here, we list some useful mathematical relations and identities needed in the construction of the exponential Jacobi operational matrix.

Exponential Jacobi functions

Consider the standard classical Jacobi polynomials \(J^{(\rho ,\sigma )}_k(z)\) on the interval \([-1,1]\) with the weight function \(\omega ^{(\rho ,\sigma )}(z)=(1-z)^{\rho }(1+z)^{\sigma }, \rho ,\sigma >-1\),

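the set \(\{J^{(\rho ,\sigma )}_k(z):k=0,1,\ldots \}\) forms a complete orthogonal system in the weighted Hilbert space \(L_{\omega ^{(\rho ,\sigma )}}^2[-1,1]\) equipped with the inner product

\[(u,v)_{\omega ^{(\rho ,\sigma )}}=\int _{-1}^{1}u(z)\,v(z)\,\omega ^{(\rho ,\sigma )}(z)\,dz,\]

and the norm

\[\Vert u\Vert _{\omega ^{(\rho ,\sigma )}}=(u,u)_{\omega ^{(\rho ,\sigma )}}^{1/2}.\]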

We define the exponential Jacobi functions by replacing z with \(1-2 e^{-\frac{x}{L}}\), and we denote \(J^{(\rho ,\sigma )}_i (1-2 e^{-\frac{x}{L}})\) by \(\Upsilon ^{(\rho ,\sigma )}_i(x)\), \(x\in [0,\infty )\). The functions \(\Upsilon ^{(\rho ,\sigma )}_i(x)\) may then be generated by the following recurrence relation:

where

and

The exponential Jacobi functions \(\Upsilon ^{(\rho ,\sigma )}_i(x)\) of degree i can be written as

where

The set \(\{\Upsilon _{i}^{(\rho ,\sigma )}(x): i=0,1,\ldots \}\) satisfies the following orthogonality relation:

where

and \(\delta _{ij}\) is the Kronecker delta.
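The definition and the orthogonality property above can be checked numerically. The following is a minimal sketch, assuming the standard normalization of the classical Jacobi polynomials and their standard three-term recurrence (which may be written differently from the recurrence used in this paper). The function names `jacobi`, `exp_jacobi` and `inner` are hypothetical. The weight is the one obtained from the change of variables \(z=1-2e^{-x/L}\) and may differ from the paper's weight by a constant factor; the midpoint rule is only suitable for \(\rho ,\sigma \ge 0\).

```python
import math

def jacobi(n, a, b, z):
    """Classical Jacobi polynomial P_n^{(a,b)}(z), standard normalization,
    evaluated with the standard three-term recurrence (assumed here)."""
    p_prev = 1.0
    p = 0.5 * (a + b + 2.0) * z + 0.5 * (a - b)
    if n == 0:
        return p_prev
    for k in range(1, n):
        s = 2 * k + a + b
        c1 = 2.0 * (k + 1) * (k + a + b + 1) * s
        c2 = (s + 1.0) * ((s + 2.0) * s * z + a * a - b * b)
        c3 = 2.0 * (k + a) * (k + b) * (s + 2.0)
        p_prev, p = p, (c2 * p - c3 * p_prev) / c1
    return p

def exp_jacobi(i, rho, sigma, x, L=1.0):
    """Exponential Jacobi function: the Jacobi polynomial at z = 1 - 2 exp(-x/L)."""
    return jacobi(i, rho, sigma, 1.0 - 2.0 * math.exp(-x / L))

def inner(i, j, rho, sigma, n=4000):
    """Midpoint-rule approximation of the weighted inner product of two
    exponential Jacobi functions on [0, inf). With s = exp(-x/L) the integral
    becomes int_0^1 P_i P_j (2s)^rho (2 - 2s)^sigma 2 ds, independent of L."""
    h = 1.0 / n
    total = 0.0
    for m in range(n):
        s = (m + 0.5) * h
        z = 1.0 - 2.0 * s
        w = (2.0 * s) ** rho * (2.0 * (1.0 - s)) ** sigma
        total += jacobi(i, rho, sigma, z) * jacobi(j, rho, sigma, z) * w * 2.0 * h
    return total
```

For example, `inner(1, 2, 0.0, 0.0)` is close to zero, while `inner(2, 2, 0.0, 0.0)` approximates the Legendre norm \(2/5\).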

Function approximation

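Approximating u(x) by the first \(N+1\) exponential Jacobi functions yields

\[u(x)\simeq u_N(x)=\sum _{j=0}^{N}c_j\,\Upsilon ^{(\rho ,\sigma )}_j(x)=C^{T}\phi (x),\]

where C and \(\phi (x)\) are the vector of unknown coefficients and the exponential Jacobi function vector, respectively, given by

\[C^{T}=[c_0,c_1,\ldots ,c_N],\]

and

\[\phi (x)=[\Upsilon ^{(\rho ,\sigma )}_0(x),\Upsilon ^{(\rho ,\sigma )}_1(x),\ldots ,\Upsilon ^{(\rho ,\sigma )}_N(x)]^{T}.\qquad (7)\]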

Operational matrix of differentiation for exponential Jacobi

Here, we report the derivation of the operational matrix of derivatives of the exponential Jacobi functions, which is essential to our numerical scheme.

Theorem 1

Let \(\phi (x)\) be the exponential Jacobi vector defined in (7). The derivative of the vector \(\phi (x)\) can be expressed by

where \(\mathbf{D }\) is the \((N+1)\times (N+1)\) operational matrix of the derivative. The nonzero elements \(d_{k\,\ell }\) for \(0\le k, \ell \le N\) are given as follows:

It is easily noted that \(\mathbf{D }\) is a lower-Hessenberg matrix.

Proof

See Bhrawy et al. [19].
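As an illustration of Theorem 1, the smallest nontrivial case can be worked out by hand directly from the definition rather than from the closed-form entries. The sketch below assumes \(\rho =\sigma =0\) (exponential Legendre functions with the standard Legendre normalization) and \(N=2\). With \(z=1-2e^{-x/L}\) we have \(dz/dx=(1-z)/L\), and \(\Upsilon _0=1\), \(\Upsilon _1=z\), \(\Upsilon _2=(3z^2-1)/2\), so that

\[\Upsilon _0'(x)=0,\qquad \Upsilon _1'(x)=\frac{1-z}{L}=\frac{1}{L}\left( \Upsilon _0-\Upsilon _1\right) ,\qquad \Upsilon _2'(x)=\frac{3z(1-z)}{L}=\frac{1}{L}\left( -\Upsilon _0+3\Upsilon _1-2\Upsilon _2\right) ,\]

where the last identity uses \(3z^2=2\Upsilon _2+\Upsilon _0\). Collecting the coefficients row by row gives

\[\mathbf{D }=\frac{1}{L}\begin{pmatrix} 0 &amp; 0 &amp; 0\\ 1 &amp; -1 &amp; 0\\ -1 &amp; 3 &amp; -2 \end{pmatrix}.\]

In this small case the matrix is lower triangular, which is a special case of a lower-Hessenberg matrix.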

The class of exponential Jacobi functions contains many orthogonal function families as direct special cases; these cases are reported in the following corollaries:

Corollary 1

(Legendre Case) If \(\rho = \sigma = 0\), then the nonzero elements \(d_{k\,\ell }\), \(0\le k,\ell \le N\), of the operational matrix of the exponential Legendre functions are given as follows:

Corollary 2

(ChebyshevT Case) If \(\rho = \sigma =- \frac{1}{2}\), then the nonzero elements \(d_{k\,\ell }\), \(0\le k,\ell \le N\), of the operational matrix of the exponential Chebyshev functions of the first kind are given as follows:

Corollary 3

(ChebyshevU Case) If \(\rho = \sigma = \frac{1}{2}\), then the nonzero elements \(d_{k\,\ell }\), \(0\le k,\ell \le N\), of the operational matrix of the exponential Chebyshev functions of the second kind are given as follows:

Corollary 4

(ChebyshevV Case) If \(\rho = -\frac{1}{2},\ \sigma = \frac{1}{2}\), then the nonzero elements \(d_{k\,\ell }\) for \(0\le k,\ell \le N\) are given as follows:

Corollary 5

(ChebyshevW Case) If \(\rho = \frac{1}{2},\ \sigma =- \frac{1}{2}\), then the nonzero elements \(d_{k\,\ell }\) for \(0\le k,\ell \le N\) are given as follows:

Remark 1

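The operational matrix for the r-th derivative can be derived as

\[\frac{d^{r}\phi (x)}{dx^{r}}=\left( {\mathbf{D }}^{(1)}\right) ^{r}\phi (x),\]

where \(r \in {\mathbb {N}}\) and the superscript in \({\mathbf{D }}^{(1)}\) denotes matrix powers. Thus,

\[{\mathbf{D }}^{(r)}=\left( {\mathbf{D }}^{(1)}\right) ^{r},\qquad r=1,2,\ldots .\]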
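The matrix-power relation in Remark 1 can be checked on a small case. The sketch below is an illustration under stated assumptions: it uses a \(3\times 3\) differentiation matrix derived by hand for the exponential Legendre basis (\(\rho =\sigma =0\), \(N=2\), \(L=1\)), not the paper's closed-form entries, and the helper names `matmul`, `matpow`, `phi` and `phi_second_exact` are hypothetical.

```python
import math

# Hand-derived first-derivative matrix for rho = sigma = 0, N = 2, L = 1
# (an assumption for illustration; not taken from the closed-form entries).
D = [[0.0, 0.0, 0.0],
     [1.0, -1.0, 0.0],
     [-1.0, 3.0, -2.0]]

def matmul(A, B):
    """Plain dense matrix product of two square lists of lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def matpow(A, r):
    """r-th matrix power by repeated multiplication (r >= 0)."""
    n = len(A)
    R = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(r):
        R = matmul(R, A)
    return R

def phi(x):
    """Basis vector [Y_0, Y_1, Y_2] with z = 1 - 2 exp(-x)."""
    z = 1.0 - 2.0 * math.exp(-x)
    return [1.0, z, 0.5 * (3.0 * z * z - 1.0)]

def phi_second_exact(x):
    """Second derivatives obtained by differentiating the basis directly."""
    e = math.exp(-x)
    return [0.0, -2.0 * e, 24.0 * e * e - 6.0 * e]

D2 = matpow(D, 2)  # operational matrix of the second derivative

def phi_second_operational(x):
    """Second derivatives obtained as D^2 applied to the basis vector."""
    p = phi(x)
    return [sum(D2[k][l] * p[l] for l in range(3)) for k in range(3)]
```

Comparing `phi_second_operational(x)` with `phi_second_exact(x)` at any \(x\ge 0\) shows agreement to rounding error, which is the content of the remark for \(r=2\) in this small case.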

Implementation of the method

The aim of this section is to derive an exponential Jacobi spectral collocation scheme, based on the operational matrix of derivatives of the exponential Jacobi functions, for numerically solving HPDEs on the half line. Let us consider HPDEs of the form [25]

subject to the initial conditions

We approximate \(v(x,t),\ \frac{\partial v(x,t)}{\partial t}\) and \(\frac{\partial v(x,t)}{\partial x}\) by the double exponential Jacobi functions as

where \(\mathbf{C }^{T}\) is an \((N + 1)\times (M + 1)\) unknown matrix. Using Eqs. (14), (15) and (16), we can write

We now apply the collocation procedure to Eqs. (17)–(19). Suppose \(x^{(\rho _1,\sigma _1)}_{i}\ (\ 0\leqslant i\leqslant M)\) are the exponential Jacobi collocation points of \(\Upsilon _{i}^{(\rho _1,\sigma _1)}(x)\) and \(t^{(\rho _2,\sigma _2)}_{j}\ (0\leqslant j\leqslant N-1)\) are the exponential Jacobi collocation points of \(\Upsilon _{j}^{(\rho _2,\sigma _2)}(t)\). Substituting these collocation points into (17)–(19), the collocation scheme can be written as:

This yields an algebraic system of \((N + 1)\times (M + 1)\) equations in the unknown double exponential Jacobi coefficients \(c_{ij}, i=0,1,\ldots ,M;\,j=0,1,\ldots ,N,\) which can be solved by any standard iterative technique, such as Newton’s method. Consequently, the approximate solution \(v_{N,M}(x,t)\) can be evaluated.
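The way an operational matrix turns a differential problem into an algebraic system can be seen on a reduced, one-dimensional analogue. The sketch below is not the double-expansion scheme of this section: it assumes the test problem \(u'(x)+u(x)=0\), \(u(0)=1\) (chosen here only for illustration, with exact solution \(e^{-x}\)), the exponential Legendre basis with \(N=2\) and \(L=1\), a hand-derived differentiation matrix, and collocation points taken as the images of the roots of the Legendre polynomial of degree 2. All names (`D`, `phi`, `dphi`, `solve`, `u_approx`) are hypothetical.

```python
import math

# Hand-derived differentiation matrix for rho = sigma = 0, N = 2, L = 1
# (illustrative assumption, not the paper's closed-form entries).
D = [[0.0, 0.0, 0.0],
     [1.0, -1.0, 0.0],
     [-1.0, 3.0, -2.0]]

def phi(x):
    """Basis vector [Y_0, Y_1, Y_2] with z = 1 - 2 exp(-x)."""
    z = 1.0 - 2.0 * math.exp(-x)
    return [1.0, z, 0.5 * (3.0 * z * z - 1.0)]

def dphi(x):
    """Derivative of the basis vector, computed as D applied to phi(x)."""
    p = phi(x)
    return [sum(D[k][l] * p[l] for l in range(3)) for k in range(3)]

def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x

# Collocation points: x-images of the roots z = -1/sqrt(3), +1/sqrt(3).
z_nodes = [-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)]
x_nodes = [-math.log((1.0 - z) / 2.0) for z in z_nodes]

# Row 0 enforces the initial condition; the remaining rows enforce
# the residual u'(x_m) + u(x_m) = 0 at the collocation points.
A = [phi(0.0)]
b = [1.0]
for xm in x_nodes:
    p, dp = phi(xm), dphi(xm)
    A.append([dp[k] + p[k] for k in range(3)])
    b.append(0.0)

c = solve(A, b)  # expansion coefficients c_0, c_1, c_2

def u_approx(x):
    """Evaluate the collocation solution C^T phi(x)."""
    return sum(ck * pk for ck, pk in zip(c, phi(x)))
```

Because \(e^{-x}=\tfrac{1}{2}\Upsilon _0(x)-\tfrac{1}{2}\Upsilon _1(x)\) lies in the span of this basis, the computed coefficients reproduce the exact solution up to rounding. For the HPDE case the same idea applies with a tensor-product basis and one equation per pair of collocation points.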

Error analysis

Here, we discuss the convergence rate of the suggested double basis expansion. To this end, the following lemmas are needed:

Lemma 1

The following definite integral is valid:

where \((a)_i\) denotes the Pochhammer symbol, i.e., \((a)_i=\Gamma (a+i)/\Gamma (a).\)

Lemma 2

For all \(\rho >-1\), there exist two generic constants \(0<\kappa _1<\kappa _2\) such that:

Lemma 3

If \(\rho ,\sigma >-1\), then \(\mid \Upsilon _{i}^{(\rho ,\sigma )}(x)\mid \le J/i^q\), where \(q=\max (\rho ,\sigma ,-\frac{1}{2})\) and J is a generic positive constant.

In the following theorem, we establish the decay rate of the unknown expansion coefficients of the approximate solution, under certain constraints on the smooth exact solution of the problem.

Theorem 2

If v(x, t) is separable, i.e., \(v(x,t)=v_1(x)\,v_2(t)\), and \(v_1, v_2\) are of exponential order, in the sense that there exist positive constants \(A_1, A_2, \mu _1\) and \(\mu _2\) such that \(|v_1(x)|\le A_1\,e^{-\mu _1\,x}\) and \(|v_2(t)|\le A_2\,e^{-\mu _2\,t}\), then the expansion coefficients in (14) satisfy the following estimate:

Proof

By the hypothesis of the theorem, we have

applying the inner product, and by the orthogonality relation (3), we get,

i.e.,

where,

Applying integration by parts to \(I^{(\rho _1,\sigma _1)}_1(i)\) and \(I^{(\rho _2,\sigma _2)}_2(j)\), and using the fact that \(v_1\) and \(v_2\) are of exponential order, the integral formula in Lemma 1 and repeated use of the estimate in Lemma 2 on both integrals, we obtain the stated estimate, which proves the theorem. \(\square\)

In the next theorem, based on the result of the previous theorem, we establish the convergence of the approximate solution as the number of retained modes increases.

Theorem 3

If \(\min (\rho _1+2\mu _1,\rho _2+2\mu _2)> \frac{1}{2}\) and \(-1<\max (\rho _1,\rho _2,\sigma _1,\sigma _2)<-\frac{1}{2}\), then the series in (14) converges absolutely.

Proof

We show that the series \(\displaystyle \sum _{i=0}^{\infty }\displaystyle \sum _{j=0}^{\infty }c_{ij}\,\Upsilon _{i}^{(\rho _1,\sigma _1)}(x) \,\Upsilon _{j}^{(\rho _2,\sigma _2)}(t)\) converges absolutely.

By the estimate in Theorem 2, using Lemma 3, then

which completes the proof of the theorem. \(\square\)

In the next theorem, we bound the difference between two consecutive approximate solutions, in order to establish stability as the number of retained modes increases.

Theorem 4

If \(\min (\rho _1+2\mu _1,\rho _2+2\mu _2)>\frac{1}{4}\) and \(-1<\max (\rho _1,\rho _2,\sigma _1,\sigma _2)<-\frac{1}{2}\), then

Proof

By the triangle inequality, we have

Applying Lemma 2 and Lemma 3, respectively, to the two norms on the right-hand side of the latter inequality, and using the result of Theorem 3, we get

which completes the proof of the theorem. \(\square\)

Numerical results

In this section, we test our algorithm on two numerical experiments to check the applicability and accuracy of the proposed scheme. Comparison of the numerical results obtained by the suggested technique with those obtained by the generalized Laguerre–Gauss–Radau collocation approach [25] indicates that the presented scheme is effective and convenient. We therefore consider the proposed scheme more appropriate for solving these kinds of problems.

The absolute errors in the given tables are

\[E(x,t)=\left| v(x,t)-v_{N,M}(x,t)\right| ,\]

where v(x, t) and \(v_{N,M}(x,t)\) are the exact solution and the numerical solution, respectively, at the point (x, t). Moreover, the maximum absolute errors are given by

\[M_E=\max _{(x,t)}E(x,t).\]

Example 1

[25] Consider the first-order hyperbolic equation of the form

subject to initial conditions,

where

The exact solution is given by

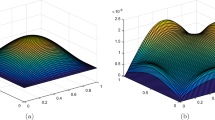

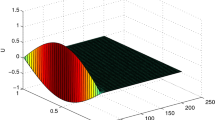

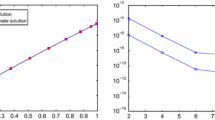

In Tables 1, 2 and 3, we give the absolute errors with \(\rho _1=\sigma _1=\rho _2= \sigma _2=-\frac{1}{2}\) (first kind exponential Chebyshev functions), \(\rho _1=\sigma _1=\rho _2= \sigma _2=0\) (exponential Legendre functions) and \(\rho _1=\sigma _1=\rho _2= \sigma _2=\frac{1}{2}\) (second kind exponential Chebyshev functions), respectively, at \(N=M=16\). Moreover, the results obtained by our method are compared with those obtained by the generalized Laguerre–Gauss–Radau collocation method [25]. Figure 1 shows the \(L^{\infty }\) error versus \(N = M\) and \(\rho _1=\sigma _1=\rho _2=\sigma _2\).

Fig. 1 \(L^{\infty }\) error for Example 1 versus \(N = M\) and \(\rho _1=\sigma _1= \rho _2=\sigma _2\)

Example 2

[25] Consider the following first-order hyperbolic equation

subject to initial conditions,

The exact solution is given by

Table 4 lists the results obtained by our method in terms of absolute errors at \(N = M = 16\) for different values of \(\rho _1,\sigma _1,\rho _2,\sigma _2,x\) and t. Figure 2 shows the \(L^{\infty }\) error versus \(\rho _1=\sigma _1=\rho _2=\sigma _2\) and \(N=M\). Moreover, the results in Table 5 are more accurate than those obtained by the generalized Laguerre–Gauss–Radau collocation method [25].

Fig. 2 \(L^{\infty }\) error for Example 2 versus \(N = M\) and \(\rho _1=\sigma _1= \rho _2=\sigma _2\)

Conclusion

We developed an accurate numerical technique and applied it to hyperbolic partial differential equations. The proposed operational matrix, in combination with the exponential Jacobi spectral collocation approach, reduces the solution of first-order hyperbolic partial differential equations on a semi-infinite domain to an algebraic system of equations, which can be solved more easily. The operational matrices of derivatives of exponential Legendre and ChebyshevT, U, V and W functions can be obtained as direct special cases of the operational matrix of exponential Jacobi functions. The numerical results demonstrate the high efficiency and accuracy of our approach.

References

Jordan, P.M., Puri, A.: Digital signal propagation in dispersive media. J. Appl. Phys. 85, 1273–1282 (1999)

Weston, V.H., He, S.: Wave splitting of the telegraph equation in R3 and its application to inverse scattering. Inverse Probl. 9, 789–812 (1993)

Yu, S.T.J., Yang, L., Lowe, R.L., Bechtel, S.E.: Numerical simulation of linear and nonlinear waves in hypoelastic solids by the CESE method. Wave Motion 47, 168–182 (2010)

Bonazzola, S., Gourgoulhon, E., Marck, J.-A.: Spectral methods in general relativistic astrophysics. J. Comput. Appl. Math. 109, 433–473 (1999)

Zhang, T., Tadé, M.O., Tian, Y.-C., Zang, H.: High-resolution method for numerically solving PDEs in process engineering. Comput. Chem. Eng. 32, 2403–2408 (2008)

Oberguggenberger, M.: Hyperbolic systems with discontinuous coefficients: generalized solutions and a transmission problem in acoustics. J. Math. Anal. Appl. 142, 452–467 (1989)

Banasiak, J., Mika, J.R.: Singularly perturbed telegraph equations with applications in the random walk theory. J. Appl. Math. Stoch. Anal. 11, 9–28 (1998)

Lakestani, M., Sarray, B.N.: Numerical solution of telegraph equation using interpolating scaling function. Comput. Math. Appl. 60, 1964–1972 (2010)

Mittal, R.C., Bhatia, R.: A numerical study of two dimensional hyperbolic telegraph equation by modified B-spline differential quadrature method. Appl. Math. Comput. 244, 976–997 (2014)

Abd-Elhameed, W.M., Doha, E.H., Youssri, Y.H., Bassuony, M.A.: New Tchebyshev–Galerkin operational matrix method for solving linear and nonlinear hyperbolic telegraph type equations. Numer. Methods Partial Differ. Equ. 32(6), 1553–1571 (2016)

Doha, E.H., Abd-Elhameed, W.M., Youssri, Y.H.: Fully Legendre spectral Galerkin algorithm for solving linear one-dimensional telegraph type equation. Int. J. Comput. Methods 16(8), 1850118 (2019)

Youssri, Y.H., Abd-Elhameed, W.M.: Numerical spectral Legendre–Galerkin algorithm for solving time fractional Telegraph equation. Rom. J. Phys. 63(3–4), 107 (2018)

Mu, L., Ye, X.: A simple finite element method for linear hyperbolic problems. J. Comput. Appl. Math. 330, 330–339 (2018)

Qin, X., Duan, X., Hu, G., Su, L., Wang, X.: An element-free Galerkin method for solving the two-dimensional hyperbolic problem. Appl. Math. Comput. 321, 106–120 (2018)

Hafez, R.M.: Numerical solution of linear and nonlinear hyperbolic telegraph type equations with variable coefficients using shifted Jacobi collocation method. Comput. Appl. Math. 37(4), 5253–5273 (2018)

Doha, E.H., Bhrawy, A.H., Hafez, R.M., Van Gorder, R.A.: A Jacobi rational pseudospectral method for Lane–Emden initial value problems arising in astrophysics on a semi-infinite interval. Comput. Appl. Math. 33, 607–619 (2014)

Hafez, R.M., Abdelkawy, M.A., Doha, E.H., Bhrawy, A.H.: A new collocation scheme for solving hyperbolic equations of second order in a semi-infinite domain. Rom. Rep. Phys. 68, 112–127 (2016)

Doha, E.H., Bhrawy, A.H., Hafez, R.M.: Numerical algorithm for solving multi-pantograph delay equations on the half-line using Jacobi rational functions with convergence analysis. Acta Math. Appl. Sin. Engl. Ser. 33, 297–310 (2017)

Bhrawy, A.H., Hafez, R.M., Alzaidy, J.F.: A new exponential Jacobi pseudospectral method for solving high-order ordinary differential equations. Adv. Differ. Equ. 2015, 152 (2015)

Bhrawy, A.H., Doha, E.H., Baleanu, D., Hafez, R.M.: A highly accurate Jacobi collocation algorithm for systems of high-order linear differential–difference equations with mixed initial conditions. Math. Methods Appl. Sci. 38, 3022–3032 (2015)

Hafez, R.M., Ezz-Eldien, S.S., Bhrawy, A.H., Ahmed, E.A., Baleanu, D.: A Jacobi Gauss–Lobatto and Gauss–Radau collocation algorithm for solving fractional Fokker–Planck equations. Nonlinear Dyn. 82, 1431–1440 (2015)

Bhrawy, A.H., Zaky, M.A.: An improved collocation method for multi-dimensional space-time variable-order fractional Schrödinger equations. Appl. Numer. Math. 111, 197–218 (2017)

Doha, E.H., Bhrawy, A.H., Hafez, R.M., Van Gorder, R.A.: Jacobi rational-Gauss collocation method for Lane–Emden equations of astrophysical significance. Nonlinear Anal. Model. Control 19, 537–550 (2014)

Bhrawy, A.H., Doha, E.H., Abdelkawy, M.A., Hafez, R.M.: An efficient collocation algorithm for multidimensional wave type equations with nonlocal conservation conditions. Appl. Math. Model. 39, 5616–5635 (2015)

Bhrawy, A.H., Hafez, R.M., Alzahrani, E.O., Baleanu, D., Alzahrani, A.A.: Generalized Laguerre–Gauss–Radau scheme for first order hyperbolic equations on semi-infinite domains. Rom. J. Phys. 60, 918–934 (2015)

Acknowledgements

The authors are very grateful to the anonymous referees for careful reviewing and crucial comments, which enabled us to improve the manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Youssri, Y.H., Hafez, R.M. Exponential Jacobi spectral method for hyperbolic partial differential equations. Math Sci 13, 347–354 (2019). https://doi.org/10.1007/s40096-019-00304-w

DOI: https://doi.org/10.1007/s40096-019-00304-w

Keywords

- First-order partial differential equations

- Exponential Jacobi functions

- Operational matrix of differentiation

- Hessenberg matrix

- Convergence analysis