Abstract

We develop a provably efficient importance sampling scheme that estimates exit probabilities of solutions to small-noise stochastic reaction–diffusion equations from scaled neighborhoods of a stable equilibrium. The moderate deviation scaling allows for a local approximation of the nonlinear dynamics by their linearized version. In addition, we identify a finite-dimensional subspace where exits take place with high probability. Using stochastic control and variational methods we show that our scheme performs well both in the zero noise limit and pre-asymptotically. Simulation studies for stochastically perturbed bistable dynamics illustrate the theoretical results.



1 Introduction

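In this paper we are concerned with the problem of rare event simulation for the stochastic reaction–diffusion equation (SRDE)

\[
\begin{cases}
\partial _t X^\epsilon (t,\xi ) = {\mathcal {A}}X^\epsilon (t,\xi ) + f\big (X^\epsilon (t,\xi )\big ) + \sqrt{\epsilon }\,{\dot{W}}(t,\xi ), & (t,\xi )\in (0,\infty )\times (0,\ell ),\\
{\mathcal {N}}X^\epsilon (t,\cdot ) = 0 \ \text {on } \partial (0,\ell ), & t>0,\\
X^\epsilon (0,\xi ) = x(\xi ), & \xi \in (0,\ell ),
\end{cases}
\tag{1}
\]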

where \(\epsilon \ll 1,\) \({\mathcal {A}}\) is a uniformly elliptic second-order differential operator, \(f:\mathbb {R}\rightarrow \mathbb {R}\) is a dissipative nonlinearity with polynomial growth and \({\dot{W}}\) is a stochastic forcing term of intensity \(\sqrt{\epsilon }\) modeled by space-time white noise. The mixed boundary conditions are given by the linear operator \({\mathcal {N}}\) which acts on functions defined on the boundary \(\partial (0,\ell )\) (see Sect. 2 for more details), and the initial datum \(x:(0,\ell )\rightarrow \mathbb {R}\) is a continuous function in the kernel of \({\mathcal {N}}.\)

Systems like (1) are of interest because they exhibit metastable behavior. When the associated noiseless dynamics are non-trivial and \(\epsilon >0\), the stochastic forcing can induce transitions between neighborhoods of metastable states. As \(\epsilon \rightarrow 0\), transitions and exits from domains of attraction occur with very small probabilities, and rigorous asymptotic analysis of exit times and places is possible within the framework of large deviations or potential theory (see e.g. [18, 28, 31, 32] and [4, 16, 27, 35, 47], as well as references therein, for results in metastability theory in finite and infinite dimensions, respectively).

In practice, efficient simulation of such events is challenging. On the one hand, Large Deviation Principles (LDPs) characterize the exponential decay rates of probabilities in the limit as \(\epsilon \rightarrow 0\) but ignore the effect of prefactors which can be significant (see [23]). On the other hand, as \(\epsilon \) decreases, standard Monte-Carlo schemes require an increasingly large sample size in order to maintain a small relative error per sample. For this reason, accelerated and adaptive methods such as importance sampling or multi-level splitting become essential when it comes to rare events. For more details on the general theory and applications of such methods in a number of different models, the interested reader is referred to the book [10].
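The growth of the required sample size can be quantified by an elementary computation that holds for any crude Monte Carlo estimator (it is not specific to this paper): the empirical frequency of an event of probability p over N independent samples has relative error \(\sqrt{(1-p)/(Np)}\). The hypothetical helper below simply inverts this formula.

```python
import math


def crude_mc_sample_size(p: float, rel_err: float) -> int:
    """Sample size N for which the crude Monte Carlo estimator of a probability p
    has relative error sqrt((1 - p) / (N * p)) no larger than rel_err."""
    if not 0.0 < p < 1.0 or rel_err <= 0.0:
        raise ValueError("need 0 < p < 1 and rel_err > 0")
    return math.ceil((1.0 - p) / (p * rel_err**2))


# The required sample size grows like 1/p as the target probability shrinks.
for p in (1e-2, 1e-4, 1e-6):
    print(f"p = {p:.0e}: N = {crude_mc_sample_size(p, 0.1):,}")
```

For a 10% relative error, each hundredfold decrease of p multiplies the cost by roughly one hundred, which is what motivates the accelerated methods discussed here.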

In the present work, we aim to develop a provably efficient importance sampling scheme that computes exit probabilities of \(X^\epsilon \) from scaled neighborhoods of a stable equilibrium point \(x^*\). In particular, let \(X^\epsilon _x\) denote the unique (mild) solution of (1) with initial condition x, let \(D\subset L^2(0,\ell )\) and let

\[ \tau ^\epsilon _x:=\inf \{t>0: X^\epsilon _x(t)\notin D\}. \]

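For \(T, L>0,\) we focus on the estimation of probabilities \(\mathbb {P}[\tau _{x^*}^\epsilon \le T ]\) in the case where \(D=D_\epsilon \) with

\[ D_\epsilon :=\big \{x\in L^2(0,\ell ): \Vert x-x^*\Vert _{L^2(0,\ell )}<\sqrt{\epsilon }h(\epsilon )L\big \}. \tag{2} \]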

The scaling \(h(\epsilon )\) is chosen so that \(h(\epsilon )\rightarrow \infty \) and \(\sqrt{\epsilon }h(\epsilon )\rightarrow 0\) as \(\epsilon \rightarrow 0.\) In this limit, exit probabilities from such domains lie in an asymptotic regime that interpolates between the Central Limit Theorem (CLT) and the LDP. To be precise, let \(X^0_x\) denote the (deterministic) solution of (1) with \(\epsilon =0\) and define a family of centered and re-scaled processes

\[ \eta ^\epsilon _x:=\frac{X^\epsilon _x-X^0_x}{\sqrt{\epsilon }\,h(\epsilon )},\quad \epsilon \in (0,1). \tag{3} \]

The borderline choices \(h(\epsilon )=1/\sqrt{\epsilon }\) and \(h(\epsilon )=1\), which are excluded above, correspond to large and Gaussian deviations of \(X^{\epsilon }\), respectively.

Exits of \(X^\epsilon \) from D are then equivalent to exits of \(\eta _x^\epsilon \) from an \(L^2\)-ball of radius L around 0, and large deviations of the family \(\{\eta _x^\epsilon \}_{\epsilon \in (0,1)}\) are called moderate deviations of \(\{X_x^\epsilon \}_{\epsilon \in (0,1)}.\) Moderate Deviation Principles (MDPs) have been studied in many different contexts, such as multiscale and interacting particle systems, Markov processes with jumps, small-noise stochastic dynamics, statistical estimation, option pricing and stochastic recursive algorithms; see e.g. [30, 55] for SRDEs as well as [7, 11, 20, 29, 33, 34, 36, 38, 44].

Importance sampling is a variance-reduction accelerated Monte-Carlo method and its objective is to minimize the variance of the estimator by carefully chosen changes of measure. Such changes of measure "push" the dynamics towards trajectories that realize the rare event of interest. This procedure transforms tail events to more typical events, thus allowing for more efficient sampling. The simulation outcomes are then weighted by likelihood ratios so that the importance sampling estimators remain unbiased under the new probability measures. Importance sampling schemes for events in the large and moderate deviation regimes have been developed for finite-dimensional systems in [21, 23, 49, 50, 53]. In [21, 50], the authors observed that moderate-deviation based schemes provide a viable and simpler alternative to their large-deviation based counterparts, in cases where both are applicable. This is due to the fact that the MDP action functional, which characterizes exponential decay rates of probabilities, takes a much simpler form. In turn, this allows for more tractable and straightforward design of optimal changes of measure.
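The mechanism described above can be seen on a textbook example that is unrelated to SRDEs and chosen purely for illustration: estimating \(\mathbb {P}[Z>a]\) for \(Z\sim N(0,1)\). Under the shifted measure \(Z\sim N(a,1)\) the event is typical, and each sample is reweighted by the likelihood ratio \(\varphi (z)/\varphi (z-a)=e^{-az+a^2/2}\) (with \(\varphi \) the standard normal density), which keeps the estimator unbiased. The function names below are hypothetical.

```python
import math

import numpy as np


def tail_prob_crude(a, n, rng):
    """Crude Monte Carlo estimate of P[Z > a], Z ~ N(0, 1)."""
    z = rng.standard_normal(n)
    return np.mean(z > a)


def tail_prob_is(a, n, rng):
    """Importance sampling estimate of P[Z > a], Z ~ N(0, 1), sampling from N(a, 1).

    Returns (estimate, standard error)."""
    z = rng.standard_normal(n) + a        # samples under the shifted measure
    lr = np.exp(-a * z + 0.5 * a * a)     # likelihood ratio dP/dP_shift at z
    w = (z > a) * lr                      # weighted indicator of the rare event
    return w.mean(), w.std(ddof=1) / math.sqrt(n)


rng = np.random.default_rng(1)
a = 4.0
exact = 0.5 * math.erfc(a / math.sqrt(2))  # about 3.17e-5
est, se = tail_prob_is(a, 100_000, rng)
print(exact, est, se, tail_prob_crude(a, 100_000, rng))
```

With the same budget, the crude estimator typically sees only a handful of hits, while the reweighted estimator attains a relative standard error below one percent.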

Importance sampling for SRDEs presents new challenges due to infinite dimensionality combined with the nonlinearity of the dynamics. Our work is close to [46], where a large deviation based scheme was developed for linear equations (i.e. when \(f=0\)). There, the authors show that efficient changes of measure need to accomplish both variance and dimension reduction. For example, changes of measure that force infinitely many modes of the dynamics lead to estimators with very large variance when \(\epsilon \) is small. A possible workaround is to show that exits from D take place in a finite-dimensional submanifold of \(\partial D\) with high probability. This was achieved in the linear case of [46], where it was proved that, under a sufficiently large spectral gap, exit from D happens in the direction of the eigenvector \(e_1\) of \(-{\mathcal {A}}\) corresponding to the smallest non-zero eigenvalue. Similar results regarding the exit direction for (finite-dimensional) SDEs with a linear drift have been proved in [51] (see also Remark 5 below).

To the best of our knowledge, importance sampling for nonlinear SRDEs is rigorously studied here for the first time. The main difficulty in designing large deviation-based schemes for such equations lies in the task of identifying a finite-dimensional exit submanifold (if any). We are able to overcome this obstacle by working in the moderate deviation regime. As we show in the sequel, the latter is equivalent to linearizing the dynamics in a neighborhood of the equilibrium \(x^*\). Consequently, the results of [46] can be applied locally at the cost of a linearization error which is, however, negligible as \(\epsilon \rightarrow 0\). In cases where both LDP and MDP-based schemes are available, one may think of the tradeoff between the two as follows. Moderate deviations cover the regime between the central limit theorem and large deviations, so they are appropriate for characterizing events that are rare, but not so rare that they would fall in the large deviations regime. On the other hand, moderate deviation schemes are in general more tractable due to the asymptotic linearization of the dynamics that takes place. In our setting, this tradeoff is reflected in the fact that we only consider exit domains (2) whose radius shrinks to zero as \(\epsilon \rightarrow 0.\) Furthermore, once \(\epsilon \) is small enough that \(\sqrt{\epsilon }h(\epsilon )L<1\), every path starting at \(x^*\) that exits a ball of radius 1 must first exit the ball of radius \(\sqrt{\epsilon }h(\epsilon )L\); hence the probability of the latter event is at least as large as that of the former. Therefore, the MDP importance sampling schemes described in this paper can provide a quantitative upper bound for the LDP exit probabilities, which are much more difficult to characterize.

The design of an importance sampling scheme and the proof of its good asymptotic and pre-asymptotic performance constitute the main contribution of this paper. In the course of our analysis, we prove an MDP for additive-noise SRDEs with a non-Lipschitz nonlinearity which, to the best of our knowledge, is not available in the literature (see Theorem 3.1 and Remark 11). Furthermore, our theory is applied to the stochastic Allen–Cahn (also known as real Ginzburg-Landau or Chafee-Infante) equation and supplemented by simulation studies. In contrast to the linear case, there are a number of interesting cases where the aforementioned spectral gap is not satisfied. Another novel feature of this work is the construction of changes of measure that perform well asymptotically (i.e. as \(\epsilon \rightarrow 0\)) in the absence of this condition (see Hypothesis 3(c\({}^\prime \)) below).

The rest of this paper is organized as follows: In Sect. 2 we fix the notation and state our assumptions. In the first part of Sect. 3 we introduce moderate deviations and subsolution-based importance sampling and then state and prove our results on the asymptotic theory of the scheme. Section 4 is devoted to the implementation and pre-asymptotic performance analysis of our scheme. In Sect. 5 we apply the developed theory to the case where f is, up to a sign, the derivative of a double-well potential. Our examples include the stochastic Allen–Cahn equation (which features a cubic nonlinearity) with different boundary conditions as well as SRDEs with higher order polynomial nonlinearities. The results of simulation studies are then presented in Sect. 6. Finally, Appendix A collects the proofs of some useful lemmas.

2 Notation and assumptions

Let \(\ell >0.\) The Hilbert space \(L^2(0,\ell )\) endowed with its usual inner product will be denoted by \((\mathcal {H},\langle \cdot ,\cdot \rangle _\mathcal {H})\). The Banach space \(C[0,\ell ],\) endowed with the supremum norm, is denoted by \({\mathcal {E}}.\) The norm of a Banach space \({\mathcal {X}}\) will be denoted by \( \Vert \cdot \Vert _{{\mathcal {X}}}\) and the closed ball of radius \(R>0\) and center \(x_0\in {\mathcal {X}}\), i.e. the set \(\{x\in {\mathcal {X}} : \Vert x-x_0\Vert _{{\mathcal {X}}}\le R\}\), by \(B_{\mathcal {X}}(x_0,R)\). We use \(\mathring{D},{\bar{D}},\partial D \) to denote interior, closure and boundary of a set \(D\subset {\mathcal {X}}\) respectively. The lattice notation \(\wedge , \vee \) is used to indicate minimum and maximum respectively.

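For \(\theta > 0,\) \(p\in [1,\infty ),\) we denote by \(W^{p,\theta }(0,\ell )\) the fractional Sobolev space of \(x\in L^p(0,\ell )\) such that

\[ [x]_{p,\theta }:=\bigg (\int _0^\ell \int _0^\ell \frac{|x(\xi )-x(\zeta )|^p}{|\xi -\zeta |^{1+\theta p}}\,d\xi \,d\zeta \bigg )^{1/p}<\infty . \]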

\(W^{p,\theta }(0,\ell ),\) endowed with the norm \(\Vert \cdot \Vert _{p,\theta }:=\Vert \cdot \Vert _{L^p(0,\ell )}+[\cdot ]_{p,\theta },\) is a Banach space. \(W^{2,\theta }(0,\ell )\) is a Hilbert space and is denoted by \(H^{\theta }(0,\ell ).\) Moreover, for \(T>0\) and \(\beta \in [0,1)\), we denote by \(C^\beta ([0,T];{\mathcal {X}})\) the space of \(\beta \)-Hölder continuous \({\mathcal {X}}\)-valued functions defined on the interval [0, T]. \(C^\beta ([0,T];{\mathcal {X}}),\) endowed with the norm

\[ \Vert y\Vert _{C^\beta ([0,T];{\mathcal {X}})}:=\sup _{t\in [0,T]}\Vert y(t)\Vert _{{\mathcal {X}}}+\sup _{0\le s<t\le T}\frac{\Vert y(t)-y(s)\Vert _{{\mathcal {X}}}}{(t-s)^\beta }, \]

is a Banach space.

For any two Banach spaces \({\mathcal {X}}, {\mathcal {Y}}\) we denote the space of linear bounded operators \(B: {\mathcal {X}}\rightarrow {\mathcal {Y}}\) by \({\mathscr {L}}({\mathcal {X}}; {\mathcal {Y}})\). The latter is a Banach space when endowed with the norm \(\Vert B\Vert _{{\mathscr {L}}({\mathcal {X}}; {\mathcal {Y}})}:=\sup _{x\in B_{\mathcal {X}}(0,1)}\Vert Bx\Vert _{{\mathcal {Y}}}\). When the domain coincides with the co-domain, we use the simpler notation \({\mathscr {L}}({\mathcal {X}}).\) The spaces of trace-class and Hilbert-Schmidt linear operators \(B:\mathcal {H}\rightarrow \mathcal {H}\) are denoted by \({\mathscr {L}}_1(\mathcal {H})\) and \({\mathscr {L}}_2(\mathcal {H})\) respectively. The former is a Banach space when endowed with the norm \(\Vert B\Vert _{{\mathscr {L}}_1(\mathcal {H})}:=\text {tr}(\sqrt{B^*B})\) while the latter is a Hilbert space when endowed with the inner product \(\langle B_1, B_2\rangle _{{\mathscr {L}}_2(\mathcal {H})}:= \text {tr}( B_2^* B_1)\).

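The operator \({\mathcal {A}}\) in (1) is a uniformly elliptic second-order differential operator in divergence form. In particular,

\[ {\mathcal {A}}x(\xi )=\frac{d}{d\xi }\Big (a(\xi )\frac{dx}{d\xi }(\xi )\Big ),\quad \xi \in (0,\ell ), \]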

with \(a\in C^1(0,\ell )\) and \(\inf _{\xi \in (0,\ell )}a(\xi )>0\). The operator \({\mathcal {N}}\) acts on the boundary \(\{0,\ell \}\) and can be either the identity operator (corresponding to Dirichlet boundary conditions), first-order differential operators of the type

for some \(b,c\in C^1[0,\ell ]\) such that \(b\ne 0\) on \(\{0,\ell \}\) (corresponding to Neumann or Robin boundary conditions) or

for periodic boundary conditions. We denote by A the realization of the differential operator \({\mathcal {A}}\) in \(\mathcal {H}\), endowed with the boundary condition \({\mathcal {N}}\). It is defined on a dense subspace \(Dom(A)\subset \mathcal {H}\) that contains

and it generates a \(C_0\) semigroup of operators \(S=\{S(t)\}_{t\ge 0}\subset {\mathscr {L}}(\mathcal {H})\). Moreover, the part of A in \(\overline{Dom(A)}\subset \mathcal {E},\) where the closure is taken in the topology of \(\mathcal {E},\) generates either a \(C_0\) or an analytic semigroup for which we use the same notation (see e.g. A.27 in [17] for a definition). Regarding the spectral properties of A, we make the following assumptions:

Hypothesis 1(a)

In view of (4), the operator \(-A\) is self-adjoint. As a result, there exists a countable complete orthonormal basis \(\{e_{n}\}_{n\in \mathbb {N}}\subset \mathcal {H}\) of eigenvectors of \(-A\). The corresponding sequence of nonnegative eigenvalues is denoted by \(\{a_{n}\}_{n\in \mathbb {N}}\).

Hypothesis 1(b)

The eigenvectors satisfy

Remark 1

Without loss of generality, we can replace the operator A by \({\tilde{A}}=A-cI\) for some \(c>0\) and the reaction term f in (1) by \({\tilde{f}}(x(\xi )):=f(x(\xi ))+cx(\xi )\). The model is invariant under this transformation and, in light of Hypothesis 1(a), it follows that \(\Vert {\tilde{S}}(t)\Vert _{{\mathscr {L}}(\mathcal {H})}\le e^{-c t}\). Throughout the rest of this work we will be using \({\tilde{A}},{\tilde{S}}\) and \( {\tilde{f}}\) with no further distinction in notation.

Let \(\theta \ge 0\). In view of Hypothesis 1(a) and the previous remark, \(-A\), restricted to its image, has a densely defined bounded inverse \((-A)^{-1}\) which can then be uniquely extended to all of \(\mathcal {H}\). The fractional power \((-A)^{-\theta }\) is defined via interpolation and is also injective. Letting \((-A)^{\frac{\theta }{2}}:= ((-A)^{-\frac{\theta }{2}})^{-1}\) we define \(\mathcal {H}^\theta := Dom((-A)^\frac{\theta }{2})= Range((-A)^{-\frac{\theta }{2}})\subset \mathcal {H}\). The norm \(\Vert x\Vert _{\mathcal {H}^\theta }:=\big \Vert (-A)^\frac{\theta }{2}x\big \Vert _\mathcal {H}\) turns \(\mathcal {H}^\theta \) into a Banach space and is equivalent to the graph norm (see [41], Chapter 2.2).
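Since \(-A\) is self-adjoint with eigenpairs \((a_n,e_n)\) (Hypothesis 1(a), shifted as in Remark 1), the norm above can be evaluated in eigen-coordinates: \(\Vert x\Vert _{\mathcal {H}^\theta }^2=\sum _n a_n^\theta \langle x,e_n\rangle _\mathcal {H}^2\). The sketch below does this numerically for an assumed model case, the Dirichlet Laplacian on \((0,\ell )\) shifted by c, with the series truncated at `n_modes`; the operator, the shift and the truncation are illustrative assumptions rather than choices made in this paper.

```python
import numpy as np


def h_theta_norm(x_vals, xi, theta, ell, c=1.0, n_modes=200):
    """Truncated spectral norm ||x||_{H^theta} = (sum_n a_n^theta <x, e_n>^2)^(1/2).

    Illustrative assumption: A = d^2/dxi^2 - c on (0, ell) with Dirichlet conditions,
    so that -A e_n = a_n e_n with a_n = (n pi / ell)^2 + c and
    e_n(xi) = sqrt(2 / ell) sin(n pi xi / ell).  x_vals are samples of x on the
    uniform grid xi, which includes both endpoints.
    """
    n = np.arange(1, n_modes + 1)
    a_n = (n * np.pi / ell) ** 2 + c
    e_n = np.sqrt(2.0 / ell) * np.sin(np.pi * np.outer(n, xi) / ell)  # (n_modes, len(xi))
    dx = xi[1] - xi[0]
    w = np.full(xi.size, dx)   # trapezoidal quadrature weights
    w[[0, -1]] = dx / 2
    coeffs = (e_n * x_vals) @ w  # <x, e_n>_H for n = 1, ..., n_modes
    return np.sqrt(np.sum(a_n**theta * coeffs**2))


ell = 1.0
xi = np.linspace(0.0, ell, 2001)
x2 = np.sqrt(2.0 / ell) * np.sin(2 * np.pi * xi / ell)  # the eigenvector e_2
print(h_theta_norm(x2, xi, 0.5, ell))                   # approximately a_2^(1/4)
```

For an eigenvector the series collapses to a single term, so \(\Vert e_2\Vert _{\mathcal {H}^\theta }=a_2^{\theta /2}\), which gives a simple consistency check.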

Remark 2

For \(\theta \in (0,\frac{1}{2})\) the spaces \(H^\theta (0,\ell )\) and \(\mathcal {H}^\theta \) coincide via the identification

which holds with equivalence of norms. The latter implies that, for each \(t\ge 0\), the linear operator \(S(t)-I\) belongs to \({\mathscr {L}}(H^\theta ;\mathcal {H})\) and there exists a constant \(C>0\) such that

The analytic semigroup S possesses the following regularizing properties (see e.g. Section 4.1.1 in [13]):

(i) For \(0\le s\le r\le \frac{1}{2}\) and \(t>0\), S maps \(H^{s}(0,\ell )\) to \(H^{r}(0,\ell )\) and

for some positive constants \(c_{r,s}, C_{r,s}\).

(ii) S is ultracontractive, i.e. for \(t>0,\) S(t) maps \(\mathcal {H}\) to \(L^{\infty }(0,\ell )\) and furthermore, for any \(1\le p\le r\le \infty \),

The next set of assumptions concerns the nonlinear reaction term in (1).

Hypothesis 2(a)

\(f:\mathbb {R}\rightarrow \mathbb {R}\) is twice continuously differentiable and

\[ f=f_1+f_2, \]

where \(f_1:\mathbb {R}\rightarrow \mathbb {R}\) is globally Lipschitz continuous and \(f_2:\mathbb {R}\rightarrow \mathbb {R}\) is a non-increasing function.

Hypothesis 2(b)

There exist \(C_f>0\) and \(p_0\ge 3\) such that for all \( x\in \mathbb {R}\) and \(i\in \{0,1,2 \}\)

For \(p\ge 1\), f induces a superposition (or Nemytskii) operator \(F:{\mathcal {E}}\rightarrow L^p(0,\ell )\) defined by \(F(x)(\xi ):=f(x(\xi )),\) \(\xi \in (0,\ell ).\) In view of Hypotheses 2(a) and 2(b), F is twice Gâteaux differentiable along any direction in \({\mathcal {E}}\) and (with some abuse of notation) its Gâteaux differentials are given by \(D^{i}F=\partial ^{i}_xf\), \(i=1,2\).

The last set of assumptions concerns the stability properties of the deterministic and linearized dynamics governed by (1), after setting \(\epsilon =0.\)

Hypothesis 3(a)

There exists at least one asymptotically stable equilibrium \(x^*\in Dom(A)\) of (1) solving the elliptic Sturm-Liouville problem \( Ax+F(x)=0.\)

Hypothesis 3(b)

The linear self-adjoint operator \(-A-DF(x^*)\) has a countable, non-decreasing sequence of nonnegative eigenvalues \(\{a_n^f\}_{n\in \mathbb {N}}\) corresponding to a complete orthonormal set of eigenvectors \(\{e^f_n\}_{n\in \mathbb {N}}\subset {\mathcal {E}}.\) Therefore, the equilibrium \(x^*\) is asymptotically stable.

Hypothesis 3(c)

The first two eigenvalues of the self-adjoint operator \(-A-DF(x^*)\) satisfy \(3a_1^f<a_2^f.\)

This spectral gap provides a sufficient condition that allows us to identify a one-dimensional exit direction for limiting trajectories (see Lemma 3.4 below). A weaker condition under which our results continue to hold is \(2a_1^f<a_2^f\) (see Remark 7). In fact, our asymptotic results continue to hold under the following relaxed spectral gap:

Hypothesis 3(c\({}^\prime \))

There exists \(k_0\ge 1\) such that \(3a_1^f<a_{k_0+1}^f\) and \(a_1^f<a_2^f.\)

Note that Hypothesis 3(c) trivially implies Hypothesis 3(c\({}^\prime \)) with \(k_0=1\). The latter will be used throughout Sect. 3 to prove asymptotic results. In Sect. 4 we restrict the pre-asymptotic analysis to schemes that work under Hypothesis 3(c).
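Hypotheses 3(c) and 3(c\({}^\prime \)) are finite checks on the ordered eigenvalues of \(-A-DF(x^*)\). The sketch below computes the smallest admissible \(k_0\) for an assumed example that is not taken from this section: \({\mathcal {A}}=\partial _\xi ^2\) with Neumann conditions on \((0,\ell )\), \(f(x)=x-x^3\) and the constant equilibrium \(x^*=1\), for which \(f'(x^*)=-2\) and the eigenvalues are \(a_n^f=2+((n-1)\pi /\ell )^2\). In this example \(3a_1^f<a_2^f\) holds exactly when \(\ell <\pi /2\). The helper names are hypothetical.

```python
import numpy as np


def neumann_allen_cahn_eigs(ell, n_eigs=10):
    """Ordered eigenvalues a_1^f <= a_2^f <= ... of -A - DF(x*) in an assumed example:
    A = d^2/dxi^2 with Neumann conditions on (0, ell), f(x) = x - x^3 and the
    constant equilibrium x* = 1, so that f'(x*) = -2.  The eigenvectors are
    cos(k pi xi / ell), k = 0, 1, ..., with eigenvalues (k pi / ell)^2 - f'(x*)."""
    k = np.arange(n_eigs)
    return (k * np.pi / ell) ** 2 + 2.0


def smallest_k0(eigs):
    """Smallest k0 >= 1 with a_1^f < a_2^f and 3 a_1^f < a_{k0+1}^f (Hypothesis 3(c')),
    searched among the supplied sorted eigenvalues; None if no such k0 is found.
    k0 == 1 means that the stronger Hypothesis 3(c) holds."""
    a = np.asarray(eigs, dtype=float)
    if not a[0] < a[1]:
        return None
    for k0 in range(1, a.size):
        if 3.0 * a[0] < a[k0]:  # a[k0] is a_{k0+1}^f in the paper's 1-based indexing
            return k0
    return None


for ell in (1.0, 3.0):
    print(ell, smallest_k0(neumann_allen_cahn_eigs(ell)))
```

For \(\ell =1\) the strong gap holds (\(k_0=1\)); for \(\ell =3\) it fails, but Hypothesis 3(c\({}^\prime \)) still holds with \(k_0=2\), since \(a_3^f=2+4\pi ^2/9>6=3a_1^f\).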

Turning to the stochastic forcing, let \((\Omega ,{\mathscr {F}}, {\mathscr {F}}_{t\ge 0}, \mathbb {P})\) be a complete filtered probability space. The space-time white noise \({\dot{W}}\) is understood as the time-derivative of a cylindrical Wiener process \(W:[0,\infty )\times \mathcal {H}\rightarrow L^2(\Omega )\) in the sense of distributions. The latter is a centered Gaussian family of random variables with covariance given by

\[ \mathbb {E}\big [W(t_1,\chi _1)W(t_2,\chi _2)\big ]=(t_1\wedge t_2)\,\langle \chi _1,\chi _2\rangle _\mathcal {H} \]

for \((t_i,\chi _i)\in [0,\infty )\times \mathcal {H}, i=1,2.\) Given a separable Hilbert space \((\mathcal {H}_1, \langle .\;,.\rangle _{\mathcal {H}_1})\) such that \(\mathcal {H}\) is a linear subspace of \(\mathcal {H}_1\) and the inclusion map \(\mathcal {H}\overset{i}{\rightarrow }\mathcal {H}_1\) is Hilbert-Schmidt, W can be identified with the \(\mathcal {H}_1-\)valued Wiener process

with covariance operator \(Q=ii^*\in {\mathscr {L}}_1(\mathcal {H}_1)\). This identification is assumed throughout the rest of this paper without further distinction in notation.

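Having introduced the necessary notation, we can recast (1) as a stochastic evolution equation on \({\mathcal {E}}\) given by

\[ dX^\epsilon (t)=\big (AX^\epsilon (t)+F(X^\epsilon (t))\big )dt+\sqrt{\epsilon }\,dW(t),\quad X^\epsilon (0)=x. \tag{8} \]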

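A mild solution to the latter is defined as a process \(X^\epsilon \) satisfying, for each \(\epsilon \) and all \(t\in [0,T],\)

\[ X^\epsilon (t)=S(t)x+\int _0^tS(t-s)F(X^\epsilon (s))\,ds+\sqrt{\epsilon }\int _0^tS(t-s)\,dW(s) \]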

with probability 1. The last term is known as a stochastic convolution and will be frequently denoted by \(W_A.\) Our assumptions guarantee that the \({\mathcal {E}}\)-valued paths of \(W_A\) are continuous with probability 1 and

This can be proved by the stochastic factorization method of Da Prato-Zabczyk [17] (see also Theorem B.6 in [45]). Moreover, for each \(\epsilon >0,\) (8) has a unique mild solution taking values in \(C([0,T];{\mathcal {E}})\) with probability 1 (see e.g. Theorem 2.2 in [14]).
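A sample path of (8) can be approximated numerically. The sketch below rests on assumptions that are not taken from this section: \({\mathcal {A}}=\partial _\xi ^2\) with Dirichlet conditions, \(f(x)=x-x^3\) (the Allen–Cahn nonlinearity mentioned in the introduction), finite differences in space and a semi-implicit Euler step in time that is implicit in A and explicit in F, mirroring the structure of the mild formulation. It is not necessarily the discretization used in Sect. 6. For Dirichlet conditions on \((0,1)\), \(x^*=0\) is a stable equilibrium, since the linearization \(-\partial _\xi ^2-1\) has eigenvalues \((n\pi )^2-1>0\). The scaling \(h(\epsilon )=\epsilon ^{-1/4}\) below is a hypothetical choice satisfying \(h(\epsilon )\rightarrow \infty \) and \(\sqrt{\epsilon }h(\epsilon )\rightarrow 0\).

```python
import numpy as np


def simulate_srde(eps, x0, ell=1.0, T=1.0, n_space=100, n_time=1000, seed=0,
                  f=lambda x: x - x**3):
    """Sample path of dX = (X_xixi + f(X)) dt + sqrt(eps) dW on (0, ell) with
    X = 0 at both endpoints (assumed Dirichlet conditions) and space-time white noise.

    Finite differences in space, semi-implicit Euler in time: implicit in the
    Laplacian, explicit in f.  x0 holds the n_space - 1 interior values of the
    initial datum.  Returns the time grid and X at the interior grid points.
    """
    rng = np.random.default_rng(seed)
    dx, dt = ell / n_space, T / n_time
    m = n_space - 1
    lap = (np.diag(np.full(m, -2.0)) + np.diag(np.ones(m - 1), 1)
           + np.diag(np.ones(m - 1), -1)) / dx**2
    step = np.linalg.inv(np.eye(m) - dt * lap)  # (I - dt A_h)^{-1}
    X = np.empty((n_time + 1, m))
    X[0] = x0
    for k in range(n_time):
        # Space-time white noise on a cell of width dx: independent N(0, dt / dx).
        dW = rng.normal(0.0, np.sqrt(dt / dx), size=m)
        X[k + 1] = step @ (X[k] + dt * f(X[k]) + np.sqrt(eps) * dW)
    return np.linspace(0.0, T, n_time + 1), X


def l2_norm(v, dx):
    """Discrete approximation of the L^2(0, ell) norm along the last axis."""
    return np.sqrt(dx * np.sum(v**2, axis=-1))


ell, n_space, eps = 1.0, 100, 1e-3
dx = ell / n_space
xi = dx * np.arange(1, n_space)
t, X = simulate_srde(eps=eps, x0=np.zeros(n_space - 1), ell=ell, n_space=n_space)
dist = l2_norm(X, dx)                   # ||X(t) - x*||_H with x* = 0
radius = np.sqrt(eps) * eps**-0.25      # sqrt(eps) h(eps) L with h(eps) = eps^(-1/4), L = 1
idx = np.flatnonzero(dist >= radius)
exit_time = t[idx[0]] if idx.size else None  # None if no exit is observed before T
```

With these parameters an exit is typically not observed at all, which illustrates why plain simulation of such paths is an inefficient way to estimate exit probabilities.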

3 Moderate deviations, importance sampling and asymptotic theory

3.1 General theory and main results

In this section we present some theoretical aspects of subsolution-based importance sampling in the moderate deviation regime, applied to our problem of interest. First, we recall the notion of a Moderate Deviation Principle (MDP).

Definition 3.1

Let \(T>0,\) \({\mathcal {X}}=\mathcal {H}\) or \({\mathcal {E}}, x\in {\mathcal {X}}\) and a functional \({\mathcal {S}}_{x,T}:C([0,T];{\mathcal {X}})\rightarrow [0,\infty ]\) with compact sub-level sets.

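(i) We say that the collection of \(C([0,T];{\mathcal {X}})\)-valued random elements \(\{X^\epsilon \}_{\epsilon \ll 1}\) satisfies an MDP with action functional \({\mathcal {S}}_{x,T}\) if, for all continuous and bounded \(g: C([0,T];{\mathcal {X}})\rightarrow \mathbb {R}\) and all scalings \(h(\epsilon )\) such that \(h(\epsilon )\rightarrow \infty \) and \(\sqrt{\epsilon }h(\epsilon )\rightarrow 0\) as \(\epsilon \rightarrow 0\),

\[ \lim _{\epsilon \rightarrow 0}-\frac{1}{h^2(\epsilon )}\log \mathbb {E}\Big [\exp \big (-h^2(\epsilon )\,g(\eta ^\epsilon _x)\big )\Big ]=\inf _{\phi \in C([0,T];{\mathcal {X}})}\big \{{\mathcal {S}}_{x,T}(\phi )+g(\phi )\big \}, \]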

where \(\eta _x^{\epsilon }\) is defined as in (3).

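(ii) A Borel set \(E\subset C([0,T];{\mathcal {X}})\) will be called an \({\mathcal {S}}_{x,T}\)-continuity set if

\[ \inf _{\phi \in \mathring{E}}{\mathcal {S}}_{x,T}(\phi )=\inf _{\phi \in {\bar{E}}}{\mathcal {S}}_{x,T}(\phi ). \]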

As mentioned in Sect. 1 we aim to compute probabilities of the form

for \(\epsilon \ll 1, T>0,\) where \( \tau _{x^*}^\epsilon =\inf \{ t>0: X_{x^*}^{\epsilon }(t)\notin D\}\) and

for some \(L>0.\) Passing to the moderate deviation process \(\eta ^\epsilon _x\) and recalling that \(x^*\) is a (stable) equilibrium of \(X^0_x\) we see that

and

As will be shown in Sect. 3.4, \(\eta ^\epsilon _{x^*}\) converges, as \(\epsilon \rightarrow 0\), to the solution of a linear deterministic PDE with zero initial condition. Since 0 is the unique fixed point of this PDE, the limit process is bound to stay at 0 and \(\lim _{\epsilon \rightarrow 0} P(\epsilon )=0.\) This is why accelerated methods that estimate \(P(\epsilon )\) when \(\epsilon \) is small are useful.

In this paper, we will only work with unbiased estimators. Hence, minimizing the variance of the estimator is equivalent to minimizing the second moment. As we show below, an upper bound for the exponential decay rate of the second moment of any unbiased estimator can be determined in terms of the action functional \({\mathcal {S}}_{x,T}.\)

Lemma 3.1

Let \(P(\epsilon )\) be as in (12) and let \({\hat{P}}(\epsilon )\) be an unbiased estimator of \(P(\epsilon )\) with respect to a probability measure \(\bar{\mathbb {P}}\) defined on \((\Omega , {\mathscr {F}}).\) For any \(\phi \in C([0,T];{\mathcal {X}}),\) let \(\tau _\phi =\inf \{t>0: \phi (t)\notin \mathring{B}_{\mathcal {H}}(0,L) \}\) and

If \(\{X^\epsilon \}\) satisfies an MDP with action functional \({\mathcal {S}}_{x,T}\) and \(E=\{ \phi \in C([0,T];\mathcal {H}): \tau _{\phi }\le T\}\) is a \({\mathcal {S}}_{x,T}-\)continuity set then

where \({\bar{\mathbb {E}}}\) denotes expectation with respect to the measure \(\bar{\mathbb {P}}.\)

Proof

We have

Now, for any unbiased estimator \({\hat{P}}(\epsilon ),\)

where we used Jensen’s inequality. Thus

where we used the continuity property of E in the last equality. \(\square \)
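For readability, the chain of (in)equalities behind Lemma 3.1 can be spelled out as follows. This is a sketch that assumes, as the notation in (15) suggests, that \(G_T(0,0)=\inf _{\phi \in E}{\mathcal {S}}_{x^*,T}(\phi )\), and that the MDP applied to the continuity set E yields \(h^{-2}(\epsilon )\log P(\epsilon )\rightarrow -\inf _{\phi \in E}{\mathcal {S}}_{x^*,T}(\phi )\):

\[
\begin{aligned}
\bar{\mathbb {E}}\big [{\hat{P}}(\epsilon )^2\big ] &\ge \big (\bar{\mathbb {E}}[{\hat{P}}(\epsilon )]\big )^2 = P(\epsilon )^2 &&\text{(Jensen's inequality and unbiasedness)},\\
\limsup _{\epsilon \rightarrow 0}\,-\frac{1}{h^2(\epsilon )}\log \bar{\mathbb {E}}\big [{\hat{P}}(\epsilon )^2\big ] &\le \lim _{\epsilon \rightarrow 0}\,-\frac{2}{h^2(\epsilon )}\log P(\epsilon ) = 2\inf _{\phi \in E}{\mathcal {S}}_{x^*,T}(\phi ) = 2G_T(0,0). &&
\end{aligned}
\]

In words: no unbiased estimator can have a second moment that decays faster than the square of the probability it estimates, and the MDP converts this into the rate \(2G_T(0,0)\).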

As in finite dimensions (see e.g. the discussion in Section 2.2 of [23]), the previous lemma shows that \(2G_T(0,0)\) is the best possible exponential decay rate of the second moment of any unbiased estimator. In turn, this motivates the following criterion for asymptotic optimality.

Definition 3.2

An unbiased estimator \({\hat{P}}(\epsilon )\) of \(P(\epsilon )\) defined on a probability space \((\Omega , {\mathscr {F}}, {\bar{\mathbb {P}}})\) will be called asymptotically optimal if

In other words, an estimator is asymptotically optimal if its second moment achieves the best possible exponential decay rate in the limit as \(\epsilon \rightarrow 0\).

Importance sampling involves changes of measure chosen to guarantee that the corresponding estimators achieve optimal (or nearly optimal) asymptotic behavior. Given a measurable feedback control (or change of measure) \(u:[0,T]\times \mathcal {H}\rightarrow \mathcal {H}\) that is bounded on bounded subsets of \(\mathcal {H},\) we define a family of probability measures \(\{\mathbb {P}^\epsilon \}_{\epsilon >0}\) on \((\Omega , {\mathscr {F}})\) such that, for all \(\epsilon \), \(\mathbb {P}^\epsilon \ll \mathbb {P}\) on \({\mathscr {F}}_T\) and

Using these new measures, it is straightforward to verify that

defined on \(( \Omega , {\mathscr {F}}_T, \mathbb {P}^{\epsilon } )\), is an unbiased estimator of \(\mathbb {P}[\tau ^{\epsilon }_{x^*}\le T]\). Its second moment is given by

As we show in the next lemma, \(Q^{\epsilon }(u)\) admits a variational stochastic control representation, which will be useful for studying its asymptotic behavior. A similar variational formula can be found in (2.5) of [46].

Lemma 3.2

Let \(u:[0,T]\times \mathcal {H}\rightarrow \mathcal {H}\) be a measurable feedback control that is bounded on bounded subsets of \(\mathcal {H}\), uniformly in \(t\in [0,T]\). Then for all \(\epsilon >0\)

where \({\mathcal {A}}\) is the collection of all \(\mathcal {H}\)-valued, \({\mathscr {F}}_{t\ge 0}\)-adapted processes v defined on [0, T] such that

with probability 1, \(\hat{\eta }^{\epsilon ,v}_{x^*}\) solves

and

Proof

Let \(\epsilon >0.\) From the Cameron-Martin-Girsanov theorem,

where

is a cylindrical Wiener process under \(\mathbb {P}^\epsilon \). Using yet another change of measure with

we can write

where \(\hat{\eta }^{\epsilon }_{x^*}\) solves

and \(\hat{\tau }^{\epsilon }_{x^*}\) denotes the corresponding exit time for \(\hat{\eta }^{\epsilon }_{x^*}\). This follows, once again, from the Cameron-Martin-Girsanov theorem, as

is a cylindrical Wiener process under the measure \({\tilde{\mathbb {P}}}^\epsilon \). From (19) we see that the second moment of the estimator can be written as an exponential functional of the driving noise and, as such, it admits the variational representation (17) (see (2.5) in [46] as well as (14) in [50] for the finite-dimensional case). \(\square \)
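The change-of-measure construction can be illustrated on a one-dimensional toy model, \(d\eta =-a\eta \,dt+h^{-1}dW\), \(\eta (0)=0\), which mimics the linearized moderate deviation dynamics along a single mode. The drift a, the scaling h and the feedback control \(u(\eta )=2a\eta \) (which reverses the drift along the most likely exit path of this toy model) are illustrative assumptions; in particular, u is not the control (25). Paths are simulated under the controlled dynamics and weighted by the discretized Girsanov likelihood ratio \(d\mathbb {P}/d\mathbb {Q}=\exp \big (-h\sum u\,\Delta {\tilde{W}}-\tfrac{h^2}{2}\sum u^2\Delta t\big )\), which is exact for the Euler scheme, so the weighted estimator is unbiased for the discretized exit probability. The function name is hypothetical.

```python
import numpy as np


def exit_prob_is(a, h, L, T, dt, n_paths, use_control=True, seed=0):
    """Estimate P[sup_{t <= T} |eta(t)| >= L] for the toy linear model
        d eta = -a eta dt + (1/h) dW,   eta(0) = 0,
    by simulating d eta = (-a eta + u(eta)) dt + (1/h) dW~ with the illustrative
    feedback u(eta) = 2 a eta and weighting each path by the discretized
    likelihood ratio dP/dQ = exp(-h sum u dW~ - (h^2/2) sum u^2 dt).
    use_control=False gives crude Monte Carlo.  Returns (estimate, standard error)."""
    rng = np.random.default_rng(seed)
    eta = np.zeros(n_paths)
    log_lr = np.zeros(n_paths)
    alive = np.ones(n_paths, dtype=bool)  # paths that have not exited yet
    hit = np.zeros(n_paths, dtype=bool)   # paths that exited before time T
    for _ in range(int(round(T / dt))):
        dW = rng.normal(0.0, np.sqrt(dt), n_paths)
        u = 2.0 * a * eta if use_control else np.zeros(n_paths)
        # Stop updating the weight and the state once a path has exited.
        log_lr = np.where(alive, log_lr - h * u * dW - 0.5 * h**2 * u**2 * dt, log_lr)
        eta = np.where(alive, eta + (-a * eta + u) * dt + dW / h, eta)
        exited = alive & (np.abs(eta) >= L)
        hit |= exited
        alive &= ~exited
    w = np.where(hit, np.exp(log_lr), 0.0)
    return w.mean(), w.std(ddof=1) / np.sqrt(n_paths)


p_is, se_is = exit_prob_is(a=1.0, h=2.0, L=1.0, T=2.0, dt=0.005, n_paths=20_000)
p_mc, se_mc = exit_prob_is(a=1.0, h=2.0, L=1.0, T=2.0, dt=0.005, n_paths=20_000,
                           use_control=False, seed=1)
print(p_is, se_is, p_mc, se_mc)
```

Both estimators target the same discretized probability; the parameters are deliberately mild so that crude Monte Carlo remains usable as a cross-check. As h grows, only the weighted estimator keeps a usable relative error.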

The form of the MDP action functional provides essential information for choosing changes of measure u that perform well asymptotically. In particular, if for all \(\phi \) with \({\mathcal {S}}_{x,T}(\phi )<\infty \) there exists a (local) Lagrangian \({\mathcal {L}}_x\) defined on a subset of \({\mathcal {X}}\times \mathcal {H},\) such that

then "good" changes of measure are connected to subsolutions of the PDE

with

Here, \(\mathbb {H}_x\) denotes the Hamiltonian corresponding to \({\mathcal {L}}_x\) via Legendre transform (up to a sign). In the problems we consider, the latter are not well-defined on the whole space but rather on a subset \({\mathcal {K}}\times \mathcal {H}\subset \mathcal {H}\times \mathcal {H},\) see e.g. (23) below. The notion of subsolution is meant in the sense of the following definition.

Definition 3.3

A subsolution of (21) is any \(U:[0,T]\times {\mathcal {K}}\rightarrow \mathbb {R}\) such that for all \((t,\eta ),\) \(U(\cdot ,\eta )\in C^1(0,T)\), \(U(t,\cdot )\in C^1({\mathcal {K}})\) in the sense of Fréchet differentiation and satisfies

The interested reader is referred to [25] for the original development of subsolution-based importance sampling. As we will show below (Theorem 3.1 and Remark 11), when \(x=x^*\), the MDP action functional takes the form (20) with

and the corresponding Hamiltonian is given by

A direct consequence of (20) is that we can construct an explicit stationary subsolution in terms of the corresponding quasipotential. The latter is given by

and \(V_{x^*}(\eta )=\infty \) otherwise. A physical interpretation of \(V_{x^*}(\eta )\) is that of the minimal "energy" required to push a path from 0 to the state \(\eta \) and its explicit form is a consequence of the fact that (8) is, in our setting, a gradient system (see e.g. [16, 17] Section 12.2.3 for SRDEs). In view of Hypotheses 1(a), 1(b), 3(a), 3(b) it follows that

is a subsolution of (21) on \({\mathcal {K}}=Dom(A)\). The final condition is satisfied since \(a_1^f=\inf _{n\in \mathbb {N}}a_n^f.\)
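The role of \(a_1^f\) can be seen from a short computation in the eigenbasis \(\{e_n^f\}\) of Hypothesis 3(b). It assumes that the quasipotential has the form familiar from gradient systems, \(V_{x^*}(\eta )=\Vert (-A-DF(x^*))^{1/2}\eta \Vert _\mathcal {H}^2\) for \(\eta \in Dom((-A-DF(x^*))^{1/2})\); this form is an assumption here. Writing \(\eta =\sum _n c_ne_n^f\),

\[
V_{x^*}(\eta )=\sum _{n\in \mathbb {N}}a_n^fc_n^2\;\ge \;a_1^f\sum _{n\in \mathbb {N}}c_n^2=a_1^f\Vert \eta \Vert _\mathcal {H}^2,\qquad \text{so that}\qquad \min _{\Vert \eta \Vert _\mathcal {H}=L}V_{x^*}(\eta )=a_1^fL^2,
\]

with equality exactly when \(c_n=0\) for every n with \(a_n^f>a_1^f\). In particular, if \(a_1^f<a_2^f\), the minimum over the sphere of radius L is attained only at \(\eta =\pm Le_1^f\), consistent with exits near the direction \(e_1^f\).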

Remark 3

In finite-dimensional systems, feedback controls (or changes of measure) defined by \(u(t,\eta )=-D_\eta U(t,\eta )\) lead to nearly optimal asymptotic behavior (see [22] Section 2.3, [23] Theorem 2.4 for large-deviation and [50] Theorem 3.1 for moderate deviation-based schemes). A first issue that appears in infinite dimensions is that \(u(t, \hat{\eta }^{\epsilon ,v}_{x^*}(t))\) is not well-defined since with probability 1 and for all t, \(\hat{\eta }^{\epsilon ,v}_{x^*}(t)\notin Dom(A)\). The latter is a consequence of the spatial irregularity of the noise.

Throughout the rest of this paper, \(P^f_n:\mathcal {H}\rightarrow \mathcal {H}\) denotes the orthogonal projection onto the \(n\)-dimensional subspace \(\text {span}\{ e^f_j\}_{j=1}^{n}\), and we consider the "projected" quasipotential \(V_{x^*}(P^f_n\eta )=V_{x^*}(\langle \eta , e_1^f\rangle _\mathcal {H}e_1^f),\) as well as the subsolution \(U(t,P^f_n\eta )\) of (21) (with \({\mathcal {K}}=P_n^f\mathcal {H}\)). The changes of measure we will use are given by

with \(k_0\) as in Hypothesis 3(c\({}^\prime \)). For implementation purposes, \(u_{k_0}\) is replaced by a sequence \(u_{k_0}^\epsilon \) that converges to \(u_{k_0}\) as \(\epsilon \rightarrow 0\). For more details on the choice of \(u_{1}^\epsilon \) see (63) and the discussion in Sect. 4 below.
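Numerically, the projection \(P^f_n\) amounts to keeping the first n coefficients of a function in the eigenbasis \(\{e^f_j\}\). The sketch below assumes a finite-difference discretization of \(-A-DF(x^*)=-\partial _\xi ^2-f'(x^*)\) with Dirichlet conditions and a constant equilibrium; the defaults correspond to the hypothetical example \(f(x)=x-x^3\), \(x^*=0\), for which the continuous first eigenvalue is \(a_1^f=\pi ^2-1\). Eigenpairs come from `numpy.linalg.eigh`, which returns eigenvalues in ascending order and orthonormal eigenvectors.

```python
import numpy as np


def fd_linearization(ell=1.0, n_space=200, fprime_at_eq=1.0):
    """Finite-difference matrix of -A - DF(x*) = -d^2/dxi^2 - f'(x*) on the interior
    grid points, for Dirichlet conditions and a constant equilibrium x*.  The
    defaults correspond to the assumed example f(x) = x - x^3, x* = 0."""
    dx = ell / n_space
    m = n_space - 1
    lap = (np.diag(np.full(m, -2.0)) + np.diag(np.ones(m - 1), 1)
           + np.diag(np.ones(m - 1), -1)) / dx**2
    return -lap - fprime_at_eq * np.eye(m), dx


def project(eta, eigvecs, n):
    """Orthogonal projection of eta onto the span of the first n columns of eigvecs.
    The columns are Euclidean-orthonormal, hence also orthogonal for the discrete
    L^2 inner product dx * sum(u * v)."""
    V = eigvecs[:, :n]
    return V @ (V.T @ eta)


M, dx = fd_linearization()
a_f, e_f = np.linalg.eigh(M)  # eigenvalues in ascending order: a_1^f, a_2^f, ...
xi = dx * np.arange(1, M.shape[0] + 1)
eta = xi * (1.0 - xi) + 0.1 * np.sin(5 * np.pi * xi)  # arbitrary test profile
p1 = project(eta, e_f, 1)  # component of eta along e_1^f only
```

Keeping only the first few columns is what makes a projected control cheap to evaluate: it depends on a handful of coefficients instead of the full (rough) state.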

We can now present our main results on the asymptotic behavior of the scheme.

Theorem 3.1

(Moderate Deviations) Let \(T>0, L>0\) as in (13), \(k_0\) as in Hypothesis 3(c\({}^\prime \)), \(u_{k_0}\) as in (25), \(Q^\epsilon \) as in (16) and \(B_\mathcal {H}(0,L)\subset \mathcal {H}\) denote the closed ball of radius L centered at the origin. Moreover, let \(u_{k_0}^\epsilon :[0,T]\times \mathcal {H}\rightarrow \mathcal {H}\) be a sequence that converges pointwise and uniformly over bounded subsets of \(\mathcal {H}\) to \(u_{k_0}\),

and

Under Hypotheses 1(a),(b), 2(a),(b), 3(a),(b),(c\({}^\prime \)) we have

with the convention that the infimum over the empty set is \(\infty .\)

Remark 4

A few comments on (27) are in order: (1) If \(y\in H^1((0,T);\mathcal {H})\cap L^2([0,T];Dom(A)),\) the set \({\mathcal {C}}_{y,x^*}\) reduces to the singleton \(\{{\bar{v}}(t):= {\dot{y}}(t)-Ay(t)-DF(x^*)y(t)-u(t,y(t))\}\) and for any \(y\notin H^1((0,T);\mathcal {H})\cap L^2([0,T];Dom(A)),\) \({\mathcal {C}}_{y,x^*}\) is empty. (2) Using the same notation, it follows that the right-hand side of (27) can be expressed as

(3) Since the functional on the right-hand side involves only the values of y on \([0,\tau ]\) it is straightforward to see that the infimum can in fact be taken over paths \(y\in C([0,\tau ];\mathcal {H})\) that satisfy the constraints in (26).

Using the moderate deviation asymptotics of Theorem 3.1 we can then prove the following:

Theorem 3.2

(Near asymptotic optimality) Let \(L,T>0\), \(k_0,u_{k_0},u_{k_0}^\epsilon :[0,T]\times \mathcal {H}\rightarrow \mathcal {H}\) as in Theorem 3.1, \({\mathcal {A}}\) as in Lemma 3.2, U as in (24) and \(G_T\) as in (15). For any sequence \(\{v^\epsilon \}\subset {\mathcal {A}}\) such that

we have

Moreover, we have the second moment bounds

where \(U(0,0)\le G_T(0,0)\) and \(G_T(0,0)\longrightarrow U(0,0)\) as \(T\rightarrow \infty .\)

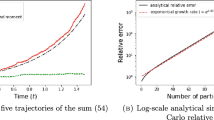

The first statement above asserts that the limiting controlled trajectories exit the domain D through the boundary near the direction of the eigenvector \(e_1^f\) (see Hypotheses 3(c), 3(c\({}^\prime \))). Moreover, (30) shows that, for any finite time horizon T, our scheme is close to asymptotic optimality in the sense of Definition 3.2, and achieves optimal behavior in the limit \(\epsilon \rightarrow 0, T\rightarrow \infty \). Near asymptotic optimality is a common feature of importance sampling schemes for continuous-time dynamics, even in finite dimensions. This is mainly a consequence of using subsolutions of (21) instead of exact solutions, which are rarely available in explicit form. Our numerical studies indicate that near asymptotic optimality leads to substantially better performance than standard Monte Carlo.

Remark 5

The moderate deviation regime allows us to work with the exit problem of a linear equation instead of that of the initial nonlinear SRDE (1). The "drift" of this linear equation is given by \(A+DF(x^*)\) and thus the dominant eigenpairs of this operator govern the exit time and exit place asymptotics. As mentioned in the introduction, similar statements have been proved for finite-dimensional linear equations in [51] (see e.g. Theorem 6).

3.2 On the asymptotic exit direction

In this section we study the limiting variational problem appearing on the right-hand side of (27). In particular, we will show that, under Hypothesis 3c’, changes of measure that force the dynamics in the \(e_1^f\) direction lead to minimal paths that exit from the ball \(\mathring{B}_\mathcal {H}(0,L)\) through the same direction. From this point on we will only use the notation \({\mathcal {S}}_{x,T}\) to denote the explicit action functional

Moving on to the variational problem in (27), we let \(I^{k_0}:{\mathcal {T}}\subset C([0,T];\mathcal {H})\rightarrow \mathbb {R},\)

and seek to characterize \(\arg \min _{y\in {\mathcal {T}}}I^{k_0}(y).\) For the first part of this section we consider the case \(k_0=1\) covered by Hypothesis 3(c). The more general setting of Hypothesis 3c’ will be studied in Proposition 3.1 below. For the sake of simplicity we will drop the superscript \(k_0\) and write \(I\equiv I^{1}\) and \(u\equiv u_{1}\) unless otherwise stated.

A first observation is that \(I(y)<\infty \) if and only if \(y\in H^1((0,T);\mathcal {H})\cap L^2([0,T];Dom(A))\), and for all such y the infimum above is uniquely attained by

(see also Remark 4 above). Therefore, in view of (25), we can re-express I as follows:

The last two terms in the last display are equal due to (25). Thus,

It is straightforward to verify that \(\arg \min _{y\in {\mathcal {T}}}I(y)\ne \varnothing ,\) i.e. the minimum value of I over the set \({\mathcal {T}}\subset C([0,T];\mathcal {H})\) is attained in \({\mathcal {T}}\). Indeed, \({\mathcal {S}}_{x^*,\cdot }:[0,T]\times C([0,T];\mathcal {H})\rightarrow [0, \infty ]\) is lower-semicontinuous and the second summand in (34) defines a continuous functional on the same set. Thus, I is itself lower-semicontinuous and, furthermore, \({\mathcal {T}}\) is closed in the topology of \(C([0,T];\mathcal {H})\) (recall that \(B_\mathcal {H}(0,L)\) in (26) is a closed ball in \(\mathcal {H}\)).

Remark 6

We shall proceed to the characterization of minimizers in three steps. First we minimize over paths y with \(y(0)=0\) and \(y(\tau )=z\in \partial B_{\mathcal {H}}(0,L)\). Then we minimize over the exit place z and finally over the time \(\tau \) at which the path y hits the boundary \(\partial B_{\mathcal {H}}(0,L)\) of the closed ball \(B_{\mathcal {H}}(0,L)\). At this point, we emphasize that, in contrast to \(\tau ^\epsilon _{x^*}\) (14), \(\tau _\phi \) (Lemma 3.1), \(\hat{\tau }^{\epsilon , v}_{x^*}\) (Lemma 3.2) and \(\hat{\tau }^{\epsilon , v^\epsilon }_{x^*}\) (28), it is not known a priori whether the time \(\tau \) is the first exit time of y from the open ball \(\mathring{B}_\mathcal {H}(0,L)\). We will show in Lemma 3.4 and Proposition 3.1 below that this is true for minimizing paths.

Lemma 3.3

Let \(y^*\in \arg \min \{ I(y): y\in C([0,\tau ];\mathcal {H}), y(0)=0, y(\tau )=z\}.\) Then

Proof

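The fact that we minimize over \(y\in C([0,\tau ];\mathcal {H})\) instead of \(C([0,T];\mathcal {H})\) is justified by item (3) of Remark 4. Next, note that \(y^*_{z,\tau }\in C([0,\tau ];\mathcal {H})\) since \(\sinh \) is continuous and increasing: each coordinate of \(y^*_{z,\tau }\) is continuous in t and bounded in absolute value by \(|\langle z, e_k^f\rangle _\mathcal {H}|\), so its expansion in the eigenbasis converges uniformly on \([0,\tau ]\). In particular,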

Proceeding to the proof we have, in view of (34),

with \({\mathcal {L}}_{x^*}\) as in (22). Minimizers are then governed by the Euler-Lagrange equation

which boils down to

Projecting onto the eigenbasis \(\{e_k^f\}_{k\in \mathbb {N}}\) of \(A+DF(x^*)\), we obtain

Letting \(y_k=\langle y, e_k^f\rangle _\mathcal {H},z_k=\langle z, e_k^f\rangle _\mathcal {H},\) the general solution of the latter has the form \( y_k(t)=c_1e^{a_k^ft}+c_2e^{-a_k^{f}t} \) and taking into account the initial and terminal conditions we obtain

Thus,

\(\square \)
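For the reader’s convenience, the step from the general solution \(y_k(t)=c_1e^{a_k^ft}+c_2e^{-a_k^{f}t}\) to the boundary-value solution can be spelled out as follows (a short derivation assuming \(a_k^f\ne 0\) and \(\tau >0\), so that \(\sinh (a_k^f\tau )\ne 0\)):

\[\begin{aligned} y_k(0)=0&\;\Longrightarrow \; c_2=-c_1 \;\Longrightarrow \; y_k(t)=2c_1\sinh (a_k^f t),\\ y_k(\tau )=z_k&\;\Longrightarrow \; c_1=\frac{z_k}{2\sinh (a_k^f\tau )} \;\Longrightarrow \; y_k(t)=z_k\,\frac{\sinh (a_k^f t)}{\sinh (a_k^f \tau )},\qquad t\in [0,\tau ]. \end{aligned}\]

For \(a_k^f>0\) the ratio \(\sinh (a_k^f t)/\sinh (a_k^f\tau )\) increases from 0 to 1 on \([0,\tau ]\), so each coordinate moves monotonically from 0 to \(z_k\).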

The next lemma is concerned with the exit direction when Hypothesis 3(c) holds.

Lemma 3.4

Let \(T>0,\) I as in (32) and \(u,{\mathcal {T}}, {\mathcal {C}}_{y,x^*}\) as in Theorem 3.1. Under Hypothesis 3(c), any \(y^*\in \arg \min \{I(y); y\in {\mathcal {T}} \} \) first exits \(\mathring{B}_\mathcal {H}(0,L)\) at \(\tau =T\) in the direction of the eigenvector \(e_1^f\) (recall Remark 6), i.e. for all \(k\ge 2\),

Moreover, \(\Vert y^*(t)\Vert _{\mathcal {H}}<L\) for all \(t<T\) and \(\Vert y^*(T)\Vert _{\mathcal {H}}=L.\)

Proof

Let \(\phi ^*=\phi ^*_{z,\tau }\) be a minimizer provided by Lemma 3.3. Notice that, since the Euler-Lagrange equations provide necessary conditions for minimality, any \(\phi ^*\in \arg \min \{ I(y): y\in C([0,\tau ];\mathcal {H}), y(0)=0, y(\tau )=z\}\) will be of this form. After straightforward algebra we obtain

Now for each fixed \(\tau \), Hypothesis 3(c) guarantees that this quadratic form is minimized for \(z^*\in \partial B_{\mathcal {H}}(0,L)\) such that \(z^*_k=0\) for all \(k\ge 2\) and \(z_1^*=\pm L\) (see e.g. Theorem 3.4 in [46]). Then,

is minimized for the largest possible \(\tau \), i.e. for \(\tau =T.\) Hence, since the order in which the variables are minimized does not change the value of the minimum, we have \(\min _{y\in {\mathcal {T}}}I(y)= I(\phi ^*_{z^*,T})\) and the minimizers \(y^*=\phi ^*_{z^*,T}\) enjoy the desired properties. In particular, since \(z^*_k=0\) for all \(k\ge 2\) and \(z^*_1=\pm L\), the explicit form of \(\phi ^*_{z^*,T}\) gives \(\Vert y^*(t)\Vert _{\mathcal {H}}=L\sinh (a_1^ft)/\sinh (a_1^fT)<L\) for all \(t<T\), because \(\sinh \) is strictly increasing. Finally, note that any element \(y^*\in \arg \min \{I(y); y\in {\mathcal {T}} \} \) is of the form \(\phi ^*_{z^*, T}.\) Indeed, fix the initial and terminal values \(y^*(0)=0, y^*(\tau )=z\in \partial B_\mathcal {H}(0, L)\) and assume that \(y^*\) does not satisfy the Euler-Lagrange equations (35). Since the latter provide necessary conditions for minimality, \(y^*\) is not a minimizer. Moreover, the previous calculations show that if \(\tau <T\) or if \(z_k \ne 0\) for some \(k\ge 2\), then \(y^*\) cannot be a minimizer of I. The proof is complete.\(\square \)
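The three-step minimization of Remark 6 can be illustrated numerically in a deliberately simplified setting. The sketch below is not the authors’ code and rests on an assumption we cannot check against the missing displays: each mode carries the uncontrolled Lagrangian \(\tfrac12(\dot y_k+a_k^f y_k)^2\) (i.e. \(A+DF(x^*)\) acts as \(-a_k^f\) on \(e_k^f\)), and the change-of-measure term in I is dropped. Under this assumption the sinh path has cost \(a_k^f z_k^2/(1-e^{-2a_k^f\tau })\); the code checks this by quadrature, minimizes over the sphere for each \(\tau \), and then over \(\tau \le T\). All function names and eigenvalues are hypothetical.

```python
import math


def sinh_path(a, z, tau, t):
    """Boundary-value solution y(t) = z sinh(a t) / sinh(a tau), with y(0) = 0, y(tau) = z."""
    return z * math.sinh(a * t) / math.sinh(a * tau)


def sinh_path_velocity(a, z, tau, t):
    """Time derivative of sinh_path."""
    return z * a * math.cosh(a * t) / math.sinh(a * tau)


def mode_cost_numeric(a, z, tau, n=20000):
    """Midpoint-rule value of (1/2) * int_0^tau (y' + a y)^2 dt along the sinh path.

    ASSUMPTION (not from the paper): the per-mode Lagrangian is (1/2)(y' + a y)^2,
    i.e. the uncontrolled action with A + DF(x*) acting as -a on this mode.
    """
    dt = tau / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * dt
        residual = sinh_path_velocity(a, z, tau, t) + a * sinh_path(a, z, tau, t)
        total += 0.5 * residual * residual * dt
    return total


def mode_cost_closed(a, z, tau):
    """Closed form of the same integral: a z^2 / (1 - exp(-2 a tau))."""
    return a * z * z / (1.0 - math.exp(-2.0 * a * tau))


def minimize_over_sphere_and_time(eigs, L, T, n_tau=200):
    """Minimize sum_k w_k(tau) z_k^2 over ||z|| = L and tau on a grid in (0, T].

    For fixed tau the minimum over the sphere is L^2 * min_k w_k(tau), attained when z
    points along the mode with the smallest weight w_k(tau) = mode_cost_closed(a_k, 1, tau).
    Returns (minimal value, minimizing tau, index of the minimizing mode).
    """
    best = None
    for j in range(1, n_tau + 1):
        tau = T * j / n_tau
        weights = [mode_cost_closed(a, 1.0, tau) for a in eigs]
        k = min(range(len(eigs)), key=lambda i: weights[i])
        value = L * L * weights[k]
        if best is None or value < best[0]:
            best = (value, tau, k)
    return best


if __name__ == "__main__":
    print(mode_cost_numeric(1.5, 0.8, 2.0), mode_cost_closed(1.5, 0.8, 2.0))
    print(minimize_over_sphere_and_time([1.0, 3.0, 6.0], L=1.0, T=5.0))
```

With hypothetical eigenvalues [1.0, 3.0, 6.0], L = 1 and T = 5, the minimum is attained on the first mode at \(\tau =T\), mirroring the structure of Lemma 3.4. Because the control term is omitted, this toy computation cannot reproduce the switch to \(e_2^f\) described in Lemma 3.5.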

As mentioned above, the previous lemma implies that, for any minimizing path \(y^*\), \(\tau \) is in fact the first exit time from the open ball \(\mathring{B}_\mathcal {H}(0,L),\) i.e. \(\tau =\inf \{t\in [0,T]: y^*(t)\notin \mathring{B}_\mathcal {H}(0,L) \}\), and furthermore \(\tau =T\).

Remark 7

If the sampling time T is large enough, the results of Lemma 3.4 as well as Theorems 3.1, 3.2 remain true under the weaker spectral gap assumption that \(2a_1^f<a_k^f\) for all \(k\ge 2.\) Since we are interested in schemes that perform well for large values of T, this generalization comes at no cost. For more details on this relaxed condition see [46, Theorem 3.9].

Up to this point we have worked under Hypothesis 3(c) to show that minimizers of the functional I lie on the one-dimensional subspace where the change of measure u acts. In the absence of a sufficiently large spectral gap the situation is more complicated. In particular, if the sampling time T is large enough, the minimizers can be orthogonal to the direction in which u acts. In other words, forcing the system towards its physical exit direction \(e_1^f\) might actually lead, under the change of measure, to controlled trajectories that exit through a subspace orthogonal to \(e_1^f\). This is proved in the following lemma.

Lemma 3.5

Assume that the eigenvalues \(\{a^f_k\}_{k\in \mathbb {N}}\) are strictly increasing, \(a_2^f\le 2a_1^f\) and let

If \(T> T^*\), then any minimizer \(y^*\in \arg \min \{I(y); y\in {\mathcal {T}} \} \) satisfies \(\Vert y^*(t)\Vert _{\mathcal {H}}<L\) for all \(t<T\) and \(\Vert y^*(T)\Vert _{\mathcal {H}}=L.\) Moreover, \(y^*\) first exits \(\mathring{B}_\mathcal {H}(0,L)\) at \(\tau =T\) in the direction of the eigenvector \(e_2^f\) (recall Remark 6), i.e. for all \(k\ne 2,\)

Proof

As in the proof of Lemma 3.4 we have

We claim that, without loss of generality, we can consider \(\tau \in (T^*,T].\) Assuming the latter for now, we can compare the weights \(\lambda _k^f\) to conclude that

and since \(x\mapsto x/(1-e^{-2\tau x})\) is (strictly) increasing on \((0,\infty )\) for all \(\tau >0,\) it follows that

Therefore, the quadratic form is minimized for \(z^*\in \partial B_\mathcal {H}(0,L)\) such that \(z^*_k=0\) for all \(k\ne 2\) and \(z^*_2=\pm L\). Consequently

and

Since \(\lambda _2^f\le \lambda _k^f\) for all \(k\ge 2\) it follows that

which follows from (37) by setting \(\tau =T>T^*.\) Since the infimum is achieved at \(\tau =T\), the combination of (38) and (39) concludes the proof. \(\square \)
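The monotonicity of \(x\mapsto x/(1-e^{-2\tau x})\), used in the proof above and in Proposition 3.1 below, follows from a one-line computation. For fixed \(\tau >0\) set \(g_\tau (x)=x/(1-e^{-2\tau x})\) for \(x>0\), and write \(s=2\tau x\):

\[ g_\tau '(x)=\frac{1-e^{-s}-s e^{-s}}{(1-e^{-s})^2},\qquad \frac{d}{ds}\Big (1-(1+s)e^{-s}\Big )=s e^{-s}>0 \quad \text {for } s>0. \]

The numerator vanishes at \(s=0\) and is therefore positive for \(s>0\), so \(g_\tau \) is strictly increasing on \((0,\infty )\). Similarly, for fixed \(x>0\),

\[ \partial _\tau g_\tau (x)=-\frac{2x^2e^{-2\tau x}}{(1-e^{-2\tau x})^2}<0, \]

so each weight of this form decreases in \(\tau \).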

Remark 8

Lemma 3.5 highlights the importance of sufficient spectral gaps for the design of efficient changes of measure. If Hypothesis 3(c) fails, a scheme that forces the \(e_1^f\) direction will be far from optimal and is expected to produce large errors for small values of \(\epsilon .\) Under the assumptions of that lemma, one can repeat the arguments of the proof above to show

If the ratio \(2a_1^f/a_2^f\) is large, this bound translates to sub-optimal performance as \(\epsilon \rightarrow 0\), which does not improve as \(T\rightarrow \infty .\) Moreover, as we will see in Sect. 5, this ratio depends non-trivially on the interval length \(\ell \) and is indeed large when \(\ell \) is moderately small. This behavior is caused by the linearization of the dynamics and is completely absent when \(f=0.\) For an example that satisfies the assumptions of Lemma 3.5, see Sect. 5.1.

Before we conclude this section, we consider once again the situation where the eigenvalues \(\{a_k^f\}_{k\in \mathbb {N}}\) do not satisfy Hypothesis 3(c) but Hypothesis 3c’ holds instead. We show that the conclusions of Lemma 3.4 can be recovered by projecting onto the higher-dimensional eigenspace of \(A+DF(x^*)\) spanned by the eigenvectors corresponding to the first \(k_0\) eigenvalues.

Proposition 3.1

Let \(k_0\) be as in Hypothesis 3c’, U as in (24) and \(u_{k_0}\) as in (25). Under Hypothesis 3c’, any minimizer \(y^*\in \arg \min \{I^{k_0}(y); y\in {\mathcal {T}} \}\) satisfies the same properties as in Lemma 3.4.

Proof

Following the computations in (33), which carry over verbatim, we see that

Since the second term is constant for each fixed value of the exit point \(y(\tau ),\) the Euler-Lagrange equations and minimizers for this functional are then identical to those derived in Lemma 3.3 for I. Thus, for any minimizing path \(\phi ^*_{z,\tau }\) that hits the point \(z=(z_k)_{k\in \mathbb {N}}\in \partial B_{\mathcal {H}}(0,L)\) at time \(\tau \in [0,T]\) we have

Comparing the weights \(\lambda ^f_{k_0,j}\) we see that for all \(1<j\le k_0\)

which holds since \(x\mapsto x/(1-e^{-2\tau x})\) is (strictly) increasing on \((0,\infty )\) for all \(\tau >0\) and \(a_1^f<a_2^f\le a_j^f\) for any \(j\ge 2\). In order to show that minimizers exit in the direction of \(e_1^f\), it remains to compare \(\lambda ^f_{k_0,1}\) with \(\lambda ^f_{k_0,j}\) for \(j\ge k_0+1.\) Since \(\lambda ^f_{k_0,k_0+1}\le \lambda ^f_{k_0,k_0+2}\le \dots \), it suffices to consider \(\lambda ^f_{k_0,k_0+1}.\) In view of Hypothesis 3c’ and Theorem 3.4 of [46], we conclude that

for all \(\tau \in [0,T]\). The proof is complete.\(\square \)

3.3 Tightness of \(\hat{\eta }_{x^*}^{\epsilon , v^\epsilon }\)

Let \(v^\epsilon \) be a sequence in \({\mathcal {A}}\) satisfying the assumptions of Theorem 3.2, \(u_{k_0}\) as in (25) and \(u_{k_0}^\epsilon :[0,T]\times \mathcal {H}\rightarrow \mathcal {H}\) be a sequence that converges pointwise and uniformly over bounded subsets of \(\mathcal {H}\) to \(u_{k_0}.\) The goal of this section is to prove tightness estimates for the collection \(\{ \hat{\eta }_{x^*}^{\epsilon , v^\epsilon }:\epsilon <\epsilon _0 \}\) of \(C([0,T];{\mathcal {X}})-\)valued random elements. Throughout the rest of this section we drop the index \(k_0\) and write \(u\equiv u_{k_0}, u^\epsilon \equiv u^{\epsilon }_{k_0} .\)

Recall that for each \(\epsilon ,\) \(\hat{\eta }_{x^*}^{\epsilon , v^\epsilon }\) is the unique mild solution of the controlled equation (18) with \(v=v^\epsilon , u=u^\epsilon .\) Existence and uniqueness are once again provided by Theorem 2.2 of [14] (see also Theorem 7.1 of [45]). The following lemma guarantees that, for \(\epsilon \) small, the sequence \(v^{\epsilon }\) is bounded in \(L^2\).

Lemma 3.6

There exists \(\epsilon _0>0\) and a constant \(C>0\) such that

Proof

In view of the variational representation (17) any approximate minimizer \(v^\epsilon \in {\mathcal {A}}\) satisfies

Now from the MDP for bounded functionals (see Definition 3.1 as well as Remark 11 below), along with Lemma 3.1, there exists a constant \(C>0\) such that, for \(\epsilon \) sufficiently small,

Hence, the estimate follows from the uniform convergence of \(u^\epsilon \) to u, the uniform boundedness of u on bounded subsets of \(\mathcal {H}\), and the fact that \(\hat{\tau }^{\epsilon ,v^\epsilon }_{x^*}\le T\) with probability 1. \(\square \)

Remark 9

Without loss of generality, we can trivially extend the controls \(v^{\epsilon }\) to [0, T] by letting \(v^{\epsilon }(t)=0 \) for \(t\in [ \hat{\tau }^{\epsilon ,v^\epsilon }_{x^*}, T ].\) This convention will be in use for the rest of this section.

We shall now proceed to the proof of tightness estimates.

Lemma 3.7

Let \(p\ge 1\). For all \(T>0\), there exist \(\epsilon _0>0\) and \(\alpha ,\beta >0\) such that

Proof

Using the mild formulation we have

We now fix a version of the process \( \Psi ^{\epsilon ,v^\epsilon }(t,\xi )\) and work path-by-path. The paths of \(\Psi ^{\epsilon ,v^\epsilon }\) are weakly differentiable with probability 1 and

with \({\mathcal {A}}\) as in (4). Next, let \(t\in [0,T]\) and choose \(\xi _t\in [0,\ell ]\) such that

In view of Proposition A.1 in [45] (see also Proposition D.4 of [17]) we can estimate the left derivative of the supremum norm \(\Vert \Psi ^{\epsilon ,v^\epsilon }(t)\Vert _{\mathcal {E}}\) by

From the uniform ellipticity of \({\mathcal {A}}\) we have, for all \( t\in [0,T]\), \({\mathcal {A}}\Psi ^{\epsilon ,v^\epsilon }(t,\xi _t) \text {sign}\big (\Psi ^{\epsilon ,v^\epsilon }(t,\xi _t)\big )\le 0.\) Thus, in view of Hypothesis 2(a),

where \(M_{f_1}\) is the Lipschitz constant of \(f_1\). To proceed, we distinguish the following two cases:

Case 1:

Since \(f_2\) is non-increasing,

Hence,

Case 2:

In this case it is straightforward to verify that

The reader is referred to the proof of Theorem 6.1 of [45] for a similar argument. The latter, along with the optimality of \(\xi _t\), yields

Setting \(\Xi ^{\epsilon ,v^\epsilon }(t):=\max \{ \big \Vert U^{\epsilon }+W_A/h(\epsilon )\big \Vert _{C([0,T];\mathcal {E})}, \big \Vert \Psi ^{\epsilon ,v^\epsilon }(t)\big \Vert _{\mathcal {E}} \}\), we can combine (43), (44) and the mean value inequality to obtain

By Grönwall’s inequality,

where \(C_{T,\phi }=e^{2M_{f_1} T}\). Since the latter holds for all \(t\in [0,T]\) we obtain

Turning to the control term,

for any \(\theta >1/2\). This is a consequence of the embedding \(W^{\theta ,p}(O)\hookrightarrow {\mathcal {E}}\) which holds for smooth domains \(O\subset \mathbb {R}^d\) and all \(\theta >d/p\). From the smoothing property (6), the Cauchy-Schwarz inequality, the uniform convergence of \(u^\epsilon \) to u and (25) we have

which holds w.p. 1 for \(\theta <1\), \(\epsilon \) sufficiently small and \(\rho >0\). As for the stochastic convolution term, since \(h(\epsilon )\rightarrow \infty \), (10) yields

for \(\epsilon \) small and some \(C>0\) independent of \(\epsilon \). The estimate is a consequence of the Sobolev embedding theorem along with heat kernel estimates and the stochastic factorization formula. Combining (42), (45), (46), Lemma 3.6 and Remark 9 we obtain

and \(h(\epsilon )\rightarrow \infty \) as \(\epsilon \rightarrow 0\). Another application of Grönwall’s inequality leads to

which is the first estimate in (41). Note here that C does not depend on \(x^*\). Turning to the spatial Hölder regularity, an application of Taylor’s theorem for Gâteaux derivatives yields

for some \(\theta _0\in (0,1)\). Let \(\theta >1/2\) and \(\alpha =(2\theta -1)/2\). By virtue of the Sobolev embedding theorem (see e.g. Theorem 8.2 in [19]) and Hypothesis 2(b) we have

In view of (47),

Repeating arguments similar to those used in (46), we see that

Moreover, we have the following well-known spatial equicontinuity estimate for the stochastic convolution

The reader is referred to [17], Theorems 5.16 and 5.22, for the proof and a detailed discussion of regularity properties of stochastic convolutions. Combining the latter with (49) and (50), we deduce that for each \(\epsilon >0, t\in [0,T]\), \(\hat{\eta }^{\epsilon ,v^{\epsilon }}_{x^*}(t)\in C^\alpha \) w.p. 1 and furthermore

for some sufficiently small \(\epsilon _0\). It remains to study the temporal equicontinuity of \(\hat{\eta }^{\epsilon ,v^{\epsilon }}_{x^*}\). Letting \(s<t\in [0,T]\) we have

Hence,

From the estimates preceding (49) and the arguments in (46) we obtain

and

respectively. As for the stochastic convolution, there exists \(\beta \in (0,1)\) such that

(see e.g. [17], Theorem 5.22). Finally, let \(\theta >0, \beta \in (0,1/2)\) such that \(\beta +\theta /2<1\). From the Sobolev embedding theorem and (5)

Following the derivation of the estimates (49), (50), (51) (see also Lemma A.3 in [30]) we deduce that

From the latter and (52)-(55), there exists a sufficiently small \(\epsilon _0\) and \(\beta >0\) such that

This proves the last estimate in (41) and completes the proof. \(\square \)

From Lemma 3.7, along with an infinite-dimensional version of the Arzelà–Ascoli theorem, it follows that the family of laws of the controlled processes \(\{\hat{\eta }^{\epsilon ,v^\epsilon }_{x^*}\}_{\epsilon }\) is concentrated on compact subsets of \(C([0,T];{\mathcal {E}}),\) uniformly over sufficiently small values of \(\epsilon \). Thus, in view of Prokhorov’s theorem (Theorem 3.3 below), it forms a relatively compact set in the topology of weak convergence of measures in \(C([0,T];{\mathcal {E}})\). In the next section we aim to characterize the limit points as \(\epsilon \rightarrow 0\).

3.4 Limiting behavior of \(\hat{\eta }_{x^*}^{\epsilon , v^\epsilon }\)

Before we proceed to the main body of this section let us recall the notion of a tight family of probability measures and the classical theorem of Prokhorov.

Definition 3.4

Let \({\mathcal {Z}}\) be a Polish space, \(\Pi \subset {\mathscr {P}}({\mathcal {Z}})\) a set of Borel probability measures on \({\mathcal {Z}}\) and \(\{P_n\}_{n\in \mathbb {N}}\subset \Pi .\) We say that (i) \(P_n\) converges weakly to a measure \(P\in {\mathscr {P}}({\mathcal {Z}})\) if for every \(f\in C_b({\mathcal {Z}})\)

\[\lim _{n\rightarrow \infty }\int _{{\mathcal {Z}}} f\,dP_n=\int _{{\mathcal {Z}}} f\,dP;\]

(ii) \(\Pi \) is tight if for each \(\epsilon >0\) there exists a compact set \(K_\epsilon \subset {\mathcal {Z}}\) such that for all \(P\in \Pi \),

\[P(K_\epsilon )\ge 1-\epsilon .\]

Prokhorov’s theorem asserts that the notions of tightness and relative weak sequential compactness are equivalent for Borel measures on Polish spaces.

Theorem 3.3

(Prokhorov) Let \({\mathcal {Z}}\) be a Polish space and \(\Pi \subset {\mathscr {P}}({\mathcal {Z}})\) be a tight family of Borel probability measures. Then every sequence in \(\Pi \) contains a weakly convergent subsequence.

Lemma 3.8

Let \(\epsilon _0\) be sufficiently small, \(v^\epsilon \) be a sequence in \({\mathcal {A}}\) satisfying the assumptions of Theorem 3.2, u as in (25) and \(u^\epsilon :[0,T]\times \mathcal {H}\rightarrow \mathcal {H}\) be a sequence that converges pointwise and uniformly over bounded subsets of \(\mathcal {H}\) to u. Any sequence in \( \{(\hat{\eta }^{\epsilon ,v^\epsilon }_{x^*}, v^{\epsilon })\}_{\epsilon <\epsilon _0}\) has a further subsequence that converges in distribution in \(C([0,T];{\mathcal {E}})\times L^2([0,T];\mathcal {H})\) to a pair \((\hat{\eta }^{v^0}_{x^*}, v^0)\) in the product of uniform and weak topologies. Moreover:

(i) \(\hat{\eta }^{v^0}_{x^*}\) is equal in law to the (unique) solution of

(ii) Any sequence in \(\{\hat{\tau }^{\epsilon ,v^\epsilon }_{x^*}\;;\epsilon <\epsilon _0\}\) has a further subsequence that converges in distribution to a [0, T]-valued random variable \(\hat{\tau }^{v^0}\) such that

and for all \(t<\hat{\tau }^{v^0},\) \(\hat{\eta }^{v^0}_{x^*}(t)\in B_\mathcal {H}(0,L)\) with probability 1 (recall that \(B_\mathcal {H}(0,L)\) denotes a closed ball on \(\mathcal {H}\)).

Proof

Starting from the controls \(v^\epsilon ,\) Lemma 3.6 along with Remark 9 yields

Since any bounded subset of \(L^2([0,T];\mathcal {H})\) is relatively compact in the weak topology, we deduce from the discussion after Lemma 3.7 that the family of laws of the pairs \(\{(\hat{\eta }^{\epsilon ,v^\epsilon }_{x^*}, v^{\epsilon })\}_{\epsilon <\epsilon _0}\) is tight. By virtue of Prokhorov’s theorem, any sequence of such elements contains a subsequence (denoted with the same notation) that converges in distribution to a pair \((\hat{\eta }_{x^*}, v^{0})\) of \(C([0,T];{\mathcal {E}})\times L^2([0,T];\mathcal {H})\)-valued random elements. We remark here that \(L^2([0,T];\mathcal {H})\) with the weak topology is not globally metrizable, hence not a Polish space, and Prokhorov’s theorem is not directly applicable. However, the same conclusions can be drawn from a more general version of the theorem (e.g. Theorem 8.6.7 in [8]). Invoking Skorokhod’s theorem, we can now assume that this convergence happens almost surely. This theorem involves the introduction of a new probability space on which the convergence takes place; for the sake of convenience, this will not be reflected in our notation. We will now characterize the law of \(\hat{\eta }_{x^*}\).

(i) Recall that for all \(t\in [0,T]\)

with probability 1. Starting from the last term, the estimate (10) yields \(\frac{1}{h(\epsilon )}W_A\longrightarrow 0\) in \(L^p(\Omega ; C([0,T];{\mathcal {E}}))\) for any \(p\ge 1\). Next, from Lemma 4.7 in [46] we have

almost surely in \(C([0,T];{\mathcal {E}}).\) As for the term involving the changes of measure \(u^\epsilon \)

The first term on the right-hand side converges to 0 by our assumptions along with (47). The almost sure convergence of \(\hat{\eta }^{\epsilon ,v^\epsilon }_{x^*}\) and the continuity of u (see (25)), along with the dominated convergence theorem, imply the convergence of the second term to 0. Next, in view of (48), Hypothesis 2(b) and the dominated convergence theorem, we have

as \(\epsilon \rightarrow 0\). Uniqueness of solutions to (56), along with a subsequence argument, completes the proof.

(ii) Since [0, T] is compact in the standard topology, the family of [0, T]-valued random variables \(\{\hat{\tau }^{\epsilon ,v^\epsilon }_{x^*}\}_{\epsilon <\epsilon _0}\) is tight. Invoking Prokhorov’s and Skorokhod’s theorems once again, any sequence in this family has a subsequence that converges almost surely to a [0, T]-valued random variable \(\hat{\tau }^{v^0}_{x^*}.\) From the almost sure convergence of \(\hat{\eta }^{\epsilon ,v^{\epsilon }}_{x^*}\) and the definition of \(\hat{\tau }^{\epsilon ,v^\epsilon }_{x^*}\) (see Lemma 3.2), \(\hat{\eta }^{\epsilon ,v^{\epsilon }}_{x^*}\big (\hat{\tau }^{\epsilon ,v^{\epsilon }}_{x^*}\big )\longrightarrow \hat{\eta }_{x^*}^{ v^0}\big (\hat{\tau }^{v^0}_{x^*}\big )\in \partial B_{\mathcal {H}}(0,L)\) almost surely (the latter being a closed set). Moreover, for any \(t<\hat{\tau }^{v^0}_{x^*},\) there exist \(\delta >0\) and \(\epsilon _0>0\) sufficiently small such that \(t\le \hat{\tau }^{v^0}_{x^*}-\delta <\hat{\tau }^{\epsilon ,v^{\epsilon }}_{x^*}\) for all \(\epsilon \le \epsilon _0\) on a set of probability 1. Thus, for \(\epsilon \) sufficiently small, \(\{ \hat{\eta }^{\epsilon ,v^{\epsilon }}_{x^*}\big (t\big ) \}_{\epsilon }\subset {B_{\mathcal {H}}(0,L)}\), and the pointwise limit satisfies \(\hat{\eta }^{v^{0}}_{x^*}\big (t\big )\in B_\mathcal {H}(0,L)\) with probability 1. \(\square \)

Remark 10

A simple consequence of Lemma 3.8 is that the moderate deviation process \(\eta ^{\epsilon }_{x}\) (3), which results from setting \(u=v^\epsilon =0\) in (18), converges as \(\epsilon \rightarrow 0\) to the solution of the linear deterministic PDE \({\dot{\phi }}(t) = [A +DF(X^0_x(t))]\phi (t)\) with zero initial condition, i.e. \(\eta ^{\epsilon }_{x}\rightarrow 0.\)

3.5 Proof of Theorem 3.1

Before we move on to the proof we remind the reader that the index \(k_0\) has been dropped.

Let \(\epsilon >0\). Returning to (17), choose a sequence \(\{v^\epsilon \}\subset {\mathcal {A}}\) of approximate minimizers such that (28) holds. Since \(u^\epsilon \) converges uniformly to u over bounded subsets, for any \(\delta >0\) there exists \(\epsilon _0\) sufficiently small such that for all \(\epsilon <\epsilon _0\)

From the variational representation (17), Lemma 3.6 and the assumptions on \(u^\epsilon \) and u there exists \(\epsilon _0\) sufficiently small such that

Thus, there exists a sequence in \(\epsilon \) over which the left-hand side in (57) converges to \(\liminf _{\epsilon \rightarrow 0} -\log Q^{\epsilon }(u^\epsilon )/h^2(\epsilon )\). Since the functional \({\mathcal {J}}: C([0,T];\mathcal {E})\times L^2([0,T];\mathcal {H})\times [0, T]\rightarrow \mathbb {R}\),

is lower semi-continuous in the product of uniform, weak and standard topologies, we can pass to a further subsequence and apply the Portmanteau lemma along with Lemma 3.8 to obtain

with \({\mathcal {T}}\) as in (26). Since \(\delta \) is arbitrary, the upper bound is complete. To obtain a lower bound we will use the conclusions of Proposition 3.1 for the limiting variational problem. To this end let \(y^*\) satisfy

As we mentioned in Sect. 3.2, the optimization problem on the left-hand side has an explicit solution attained by

and from Proposition 3.1, \(T=\inf \{ t>0: \Vert y^*(t)\Vert _{\mathcal {H}}=L\}=\tau _{y^*}\). Now consider the processes \(\hat{\eta }^{\epsilon , {\bar{v}}}_{x^*}\) controlled by \({\bar{v}}\). From Lemmas 3.7, 3.8, \(\{\hat{\eta }^{\epsilon , {\bar{v}}}_{x^*};\epsilon >0 \}\) is tight and converges in distribution to a process \(\hat{\eta }^{{\bar{v}}}_{x^*}.\) From the choice of \({\bar{v}}\) and uniqueness of solutions it follows that \(\hat{\eta }^{{\bar{v}}}_{x^*}=y^*\) with probability 1. Moreover, the exit times \(\hat{\tau }^{\epsilon , {\bar{v}}}_{x^*}\) converge in distribution to a random time \(\hat{\tau }^{{\bar{v}}}\) which is no less than the first exit time of \(y^*\) from \(\mathring{B}_\mathcal {H}(0,L)\). Since the latter is equal to T it follows that \(\hat{\tau }^{{\bar{v}}}=T\) with probability 1. Thus

where the second inequality follows from lower semi-continuity. Combining (58) and (60) allows us to conclude.

Remark 11

Theorem 3.1 is essentially equivalent to an MDP for the family \(\{X^\epsilon \}_{\epsilon }\) of solutions of (8) in the space \(C([0,T];{\mathcal {E}}).\) The latter is an asymptotic statement for exponential functionals of \(g(X^\epsilon ),\) where \(g: C([0,T];{\mathcal {E}})\rightarrow \mathbb {R}\) is continuous and bounded (see Definition 3.1), while the former covers exit probabilities and corresponds to the choice \(g={\tilde{g}}\) with

The case of bounded continuous test functions is in fact simpler, does not require analysis of the limiting variational problem, and can be proved using arguments very similar to those used above. To be precise, for any continuous, bounded \(g: C([0,T];{\mathcal {E}})\rightarrow \mathbb {R}\), the variational representation (17) takes the form

according to the classical results of [12]. The controlled process \(\eta ^{\epsilon ,v}\) solves (18) with \(u=0\), and \({\mathcal {A}}\) is a collection of square-integrable adapted controls. The tightness and limiting statements of Lemmas 3.7, 3.8 carry over verbatim after setting \(u=0\), and (11) then follows with the same action functional (31) by proving an upper and a lower bound as above. In particular, the upper bound is a consequence of lower semicontinuity and the lower bound follows by considering the minimizing control \({\bar{v}}\) in (59). In fact, this simpler MDP is used to obtain Lemma 3.6 above, which is important for the case of unbounded functionals that we consider here.

3.6 Proof of Theorem 3.2

Let \(\{v^\epsilon \}\subset {\mathcal {A}}\) satisfy (28). From Lemma 3.8, Theorem 3.1 and the lower semi-continuity argument in (58) we know that the triples \((\hat{\eta }^{\epsilon ,v^\epsilon }_{x^*}, v^{\epsilon }, \hat{\tau }^{\epsilon ,v^\epsilon }_{x^*})\) converge in distribution to a triple \((\hat{\eta }^{v^0}_{x^*}, v^{0}, \hat{\tau }^{v^0}_{x^*} )\) and

Invoking Lemma 3.8 once again we have \(\hat{\eta }^{v^0}_{x^*}\in {\mathcal {T}}\) and \(v^0\in {\mathcal {C}}_{\hat{\eta }^{v^0}_{x^*},x^*}\) with probability 1. Since the left-hand side is the infimum over all such paths and controls it follows that

with probability 1. Thus, from Proposition 3.1 we can conclude that \(\hat{\tau }^{\epsilon ,v^\epsilon }_{x^*}\rightarrow T\) in probability as \(\epsilon \rightarrow 0\), \(\big \langle \hat{\eta }^{v^0}_{x^*}(T), e_1^f \big \rangle ^2_\mathcal {H}=L^2\) with probability 1 and (29) follows.

It remains to prove (30). We start from the upper bound, which is a consequence of Lemma 3.1 provided that \(E=\{ \phi \in C([0,T];\mathcal {H}): \tau _{\phi }\le T\}\) is an \({\mathcal {S}}_{x^*,T}\)-continuity set. This property can be verified from the analysis of Sect. 3.2. In particular, Lemmas 3.3, 3.4 and Proposition 3.1 remain true after setting the second summand in (34) or (40) equal to 0. Hence, the infima of the action functional over \(\{\tau _{\phi }\le T\}, \{\tau _{\phi }<T\} \) and \(\{\tau _{\phi }=T\}\) coincide and the estimate follows. As for the lower bound, we combine Theorem 3.1, (36) and (24) to obtain

and

The latter shows that the lower bound actually holds with equality, hence the proof is complete.

4 Implementation and pre-asymptotic analysis of the scheme

4.1 Implementation issues and exponential mollification

In Sect. 3, we demonstrated that, under fairly general spectral gap conditions, an importance sampling scheme using the change of measure \(u_{k_0}\) (25) achieves nearly optimal asymptotic behavior as the noise intensity \(\epsilon \rightarrow 0\). However, changes of measure based only on the quasipotential subsolution U (24) can lead to poor pre-asymptotic performance. This issue is present even in finite dimensions and is related to the behavior of the controlled dynamics near the origin. In [23], the authors demonstrated that, for certain choices of controls v, the second moment of the estimator degrades over time. In these situations, the system tends to spend a large amount of time near the attractor, thus accumulating a large running cost that inflates the variance. As a result, for fixed \(\epsilon >0\), the pre-exponential terms that are ignored by the asymptotic bounds (30) dominate and can even lead to errors that increase exponentially as T grows. For more details the reader is referred to the discussion in [23], pp. 2919–2921.

In infinite dimensions, an additional challenge appears when the changes of measure act on the full space \(\mathcal {H}.\) As we will see in Lemma 4.1 below, in order to prove that the second moment of a scheme behaves well for any fixed \(\epsilon >0,\) one needs to have good control over the quantity

where \(Z_{x^*}\) denotes a subsolution used for the analysis of the scheme. However, any radial function \(Z:\mathcal {H}\rightarrow \mathbb {R}\) such that \(Z(\eta )={\bar{Z}}(\Vert \eta \Vert _\mathcal {H})\), with \({\bar{Z}}''<0,\) satisfies \(\text {tr}\big [ D^2_\eta Z(\eta )\big ]=-\infty \). Thus, apart from dealing with the difficulties related to unbounded operators (see Remark 3), changes of measure for SRDEs that effectively accomplish dimension reduction are necessary for provably efficient performance.
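To see concretely why the trace blows up, recall that on \(\mathbb {R}^d\) a radial function \(Z(\eta )={\bar{Z}}(r)\), \(r=\Vert \eta \Vert \), satisfies \(\text {tr}[D^2Z(\eta )]={\bar{Z}}''(r)+(d-1){\bar{Z}}'(r)/r\) for \(r>0\). For a smooth radial function \({\bar{Z}}'(0)=0\), so \({\bar{Z}}''<0\) forces \({\bar{Z}}'(r)<0\) for \(r>0\), and the \((d-1)\)-term drives the trace to \(-\infty \) as \(d\rightarrow \infty \). The sketch below (not from the paper; the profile zbar is hypothetical) compares a central-difference Laplacian with this formula in several dimensions.

```python
import numpy as np


def zbar(r):
    """Hypothetical radial profile with zbar'(r) < 0 and zbar''(r) < 0 for r > 0."""
    return -0.5 * r**2 - 0.25 * r**4


def zbar_prime(r):
    return -r - r**3


def zbar_second(r):
    return -1.0 - 3.0 * r**2


def hessian_trace_fd(d, r=0.5, h=1e-4):
    """Central-difference trace of the Hessian of Z(eta) = zbar(|eta|) on R^d at eta = r e_1."""
    eta = np.zeros(d)
    eta[0] = r
    z0 = zbar(np.linalg.norm(eta))
    total = 0.0
    for i in range(d):
        step = np.zeros(d)
        step[i] = h
        total += (zbar(np.linalg.norm(eta + step)) - 2.0 * z0
                  + zbar(np.linalg.norm(eta - step))) / h**2
    return total


def hessian_trace_formula(d, r=0.5):
    """tr D^2 Z = zbar''(r) + (d - 1) zbar'(r) / r for a radial function on R^d."""
    return zbar_second(r) + (d - 1) * zbar_prime(r) / r


if __name__ == "__main__":
    for d in (1, 10, 100):
        print(d, hessian_trace_fd(d), hessian_trace_formula(d))
```

The trace decreases linearly in d, which is the finite-dimensional shadow of \(\text {tr}[D^2_\eta Z(\eta )]=-\infty \) in \(\mathcal {H}\); a change of measure acting only on finitely many directions avoids this term.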

In this section we construct a scheme under Hypothesis 3(c), i.e. our changes of measure only force the \(e_1^f\) direction. From this point on it is understood that \(u\equiv u_1\) and \(u^\epsilon _{1}\equiv u^\epsilon .\) In order to deal with the aforementioned issues, our changes of measure \(u^\epsilon \) will meet the following criteria: 1) The projected-quasipotential subsolution (denoted below by \(F_1\)) will be used in regions of space that are sufficiently far from the origin. 2) A constant subsolution \(F^{\epsilon }_2\) will dominate near zero; since it is constant, it does not influence the dynamics until they enter the region where \(F_1\) dominates. 3) To avoid issues arising from lack of smoothness, the combination of \(F_1,F^{\epsilon }_2\) should be appropriately mollified. 4) As \(\epsilon \rightarrow 0\), the changes of measure \(u^\epsilon \) converge to the asymptotically nearly optimal u. A suitable choice is provided by the exponential mollification of \(F_1,F_2^\epsilon \).

To be precise, we define for \(a_1^f,e_1^f\) as in Hypothesis 3(c), \(\kappa \in (0,1)\) and \(\delta =\delta (\epsilon )>0\)

and consider the exponential mollification

We implement our scheme using the change of measure

where

\(\delta =2/h^2(\epsilon )\) is the mollification parameter and \(\kappa \) is a parameter that controls the size of the neighborhood outside of which \(F_1\) dominates.
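Since the displays defining \(F_1\), \(F_2^\epsilon \) and \(U^\delta \) are not reproduced here, the following Python sketch assumes the exponential mollification commonly used in the importance sampling literature, \(U^\delta =-\delta \log \big (e^{-F_1/\delta }+e^{-F_2^\epsilon /\delta }\big )\), with weight \(\rho =e^{-F_1/\delta }/\big (e^{-F_1/\delta }+e^{-F_2^\epsilon /\delta }\big )\). The one-dimensional functions `F1` and `F2` are hypothetical placeholders, not the paper's subsolutions; they only mimic criteria 1) and 2), in that the constant `F2` is the smaller one near zero and `F1` is the smaller one farther out. The sketch illustrates that \(U^\delta \) stays within \(\delta \log 2\) of \(\min (F_1,F_2^\epsilon )\) and that its derivative is \(\rho F_1'\), so the control is essentially zero where the constant subsolution dominates.

```python
import math


def log_add_exp(a: float, b: float) -> float:
    """Numerically stable log(exp(a) + exp(b))."""
    m = max(a, b)
    return m + math.log1p(math.exp(-abs(a - b)))


def exp_mollify(f1: float, f2: float, delta: float) -> tuple[float, float]:
    """Assumed exponential mollification of min(f1, f2).

    Returns U = -delta * log(exp(-f1/delta) + exp(-f2/delta)) and the weight
    rho = exp(-f1/delta) / (exp(-f1/delta) + exp(-f2/delta)), which lies in [0, 1].
    """
    u = -delta * log_add_exp(-f1 / delta, -f2 / delta)
    # Since u <= f1, the exponent below is <= 0 and cannot overflow.
    rho = math.exp((u - f1) / delta)
    return u, rho


# Hypothetical 1D placeholders (not the paper's F_1, F_2^eps).
def F1(x: float) -> float:
    return 2.0 * (1.0 - x * x)


def dF1(x: float) -> float:
    return -4.0 * x


F2 = 1.5            # constant subsolution: smaller than F1 near x = 0
h = 10.0            # illustrative value of the scaling h(eps)
delta = 2.0 / h**2  # delta = 2 / h^2, as in the text

for x in (0.0, 0.5, 0.9):
    u, rho = exp_mollify(F1(x), F2, delta)
    # With F2 constant, dU/dx = rho * F1'(x): the control is ~0 where F2 dominates.
    print(f"x={x:.1f}  U={u:.4f}  min={min(F1(x), F2):.4f}  rho={rho:.3e}")
```

Working with `log1p` and the shifted maximum keeps the computation stable even when \(F/\delta \) is large, which is the relevant regime here since \(\delta =2/h^2(\epsilon )\rightarrow 0\).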

In order to derive non-asymptotic bounds for the second moment of the estimator, we will use the following min/max representation for the Hamiltonian

(see e.g. [23, 24]) and for any smooth functions \(U_{x^*},Z_{x^*}:[0,T]\times \mathcal {H}\rightarrow \mathbb {R}\) we let \(u(t,\eta )=-D_\eta U_{x^*}(t,\eta )\) and \(p=D_\eta Z_{x^*}(t,\eta )\). Thus we obtain

A consequence of this expression is the following pre-asymptotic bound for the second moment:

Lemma 4.1

For any smooth functions \(U_{x^*},Z_{x^*}:[0,T]\times \mathcal {H}\rightarrow \mathbb {R},\) \({\mathscr {D}}_{x^*}\) as in (61) and some \(\theta _0\in (0,1)\) let

and

For all \(\epsilon >0\) we have

The proof makes use of Itô’s formula and is deferred to Appendix A.

Remark 12

The term \({\mathscr {H}}_{x^*}^{\epsilon }\) accounts for the error coming from the local approximation of the nonlinear dynamics by their linearized version around the stable equilibrium \(x^*.\) A significant part of this section is devoted to the pre-asymptotic control of this term.

The rest of this section is devoted to the pre-asymptotic analysis of \(Q^{\epsilon }(u^\epsilon )\) based on the lower bound (67) with \(U_{x^*}=U^\delta (t,\eta ),\)

4.2 Performance analysis of the scheme

We now recall the definition of the random times

where \(\hat{\eta }^{\epsilon ,v}_{x^*}\) solves (18). Before stating the main result of this section, we define exponential negligibility, a concept that will be used frequently in the sequel.

Definition 4.1

A term will be called exponentially negligible (a) in the moderate deviations range if it can be bounded from above in absolute value by \(C_1e^{- c_2h^2(\epsilon )}/h^2(\epsilon )\), where \(C_1<\infty , c_2>0\); (b) in the large deviations range if (a) holds with \(1/h^2(\epsilon )\) replaced by \(\epsilon \), i.e. if it is bounded in absolute value by \(C_1\epsilon e^{-c_2/\epsilon }\).

Remark 13

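Since \(\sqrt{\epsilon }h(\epsilon )\rightarrow 0\) as \(\epsilon \rightarrow 0,\) we have \(\epsilon \le 1/h^2(\epsilon )\), and hence \(\epsilon e^{-c_2/\epsilon }\le e^{-c_2h^2(\epsilon )}/h^2(\epsilon )\), for \(\epsilon \) sufficiently small. Therefore, exponential negligibility in the large deviations range implies exponential negligibility in the moderate deviations range.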
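As a sanity check of Remark 13 (an illustration, not a proof), one can compare the two bounds of Definition 4.1 on a logarithmic scale for the hypothetical choices \(C_1=c_2=1\) and \(h(\epsilon )=\epsilon ^{-1/4}\), which satisfies \(\sqrt{\epsilon }h(\epsilon )=\epsilon ^{1/4}\rightarrow 0\). Working with logarithms avoids floating-point underflow of \(e^{-1/\epsilon }\) for small \(\epsilon \).

```python
import math


def log_ld_bound(eps: float, c: float = 1.0) -> float:
    """log of eps * exp(-c / eps): Definition 4.1(b) with C_1 = 1, c_2 = c."""
    return math.log(eps) - c / eps


def log_md_bound(eps: float, h: float, c: float = 1.0) -> float:
    """log of exp(-c h^2) / h^2: Definition 4.1(a) with C_1 = 1, c_2 = c."""
    return -c * h**2 - 2.0 * math.log(h)


def h_power(eps: float, gamma: float = 0.25) -> float:
    """Hypothetical moderate deviation scaling h(eps) = eps^(-gamma)."""
    return eps ** (-gamma)


# eps ranges from about 0.56 down to 1e-6.
eps_grid = [10.0 ** (-k / 4) for k in range(1, 25)]
ok = all(log_ld_bound(e) <= log_md_bound(e, h_power(e)) for e in eps_grid)
print(ok)  # prints True
```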

The analysis of this section is summarized in the following theorem. Its proof is postponed to the end of this section and is preceded by several auxiliary estimates.

Theorem 4.1

Let \(T,\alpha ,\zeta _0,\epsilon >0\) and \(u^\epsilon (t,\eta )=-D_\eta U^{\delta (\epsilon )}(t,\eta )\) with \(U^\delta \) defined in (62). Assume that \(\delta =2/h^2(\epsilon ), \kappa \in (0,1-\alpha ), \zeta \in (\zeta _0,1/2)\) and \(\epsilon \) is sufficiently small to have \(h^{2(\kappa +\alpha -1)}(\epsilon )\le \frac{9a_1^f}{2}(\zeta _0-2\zeta _0^2)\wedge \frac{a^f_1}{2}.\) Then, up to exponentially negligible terms in the moderate deviations range,

Moreover, if \(h(\epsilon )\) is such that \(\sqrt{\epsilon }h^3(\epsilon )\longrightarrow 0\) as \(\epsilon \rightarrow 0,\) then for \(\epsilon \) sufficiently small we have

Remark 14

Note that, for a small fixed \(\epsilon ,\) the bound (69) shows that, in theory, the second moment may degrade as the sampling time T grows. This degradation is caused by the linearization error (65) and suggests that, in practice, good performance requires balancing \(\epsilon \) against T. Fortunately, (70) shows that this theoretical degradation disappears if the scaling \(h(\epsilon )\) grows slowly enough that \(\sqrt{\epsilon }h^3(\epsilon )\rightarrow 0\). Moreover, the simulation studies of Sect. 6 show that our scheme performs well for large T even when this growth assumption is not satisfied.
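To see what the growth condition means concretely, consider the illustrative power-law scaling \(h(\epsilon )=\epsilon ^{-\gamma }\) with \(\gamma >0\); this family is a hypothetical example and is not prescribed by the analysis above.

```latex
\sqrt{\epsilon}\,h(\epsilon)=\epsilon^{\frac12-\gamma}\longrightarrow 0 \iff \gamma<\tfrac12,
\qquad
\sqrt{\epsilon}\,h^{3}(\epsilon)=\epsilon^{\frac12-3\gamma}\longrightarrow 0 \iff \gamma<\tfrac16 .
```

Within this family, Theorem 4.1 yields the T-uniform bound (70) for \(\gamma \in (0,1/6)\), while for \(\gamma \in [1/6,1/2)\) it only yields the T-dependent bound (69).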

The following lemma collects a few straightforward computations that will be used below. Its proof can be found in Appendix A.

Lemma 4.2

For all \((t,\eta )\in [0,T]\times \mathcal {H},\zeta \in (0,1),\) \(U^\delta , Z\) as in (62), (68) and some \(\theta _0\in (0,1)\) we have

Moving on to the main body of the analysis, let \(B_{\infty }(x^*,1)\) denote an open \(L^\infty -\)ball of radius 1 centered at \(x^*\) and

Returning to (67), we have the following decomposition

Remark 15

This decomposition allows us to deal with the cubic power of \(\eta \) that appears in (65). This term is problematic because we only control the spatial \(L^2-\)norm of the moderate deviation process. In particular, estimates based on the a priori bound (47) would introduce T-dependent constants, which are undesirable for the pre-asymptotic analysis.

The last term in (72) concerns the behavior of the controlled process \(\hat{\eta }^{\epsilon ,v}\) on the event that it exits an \(L^\infty -\)ball of radius \(1/(\sqrt{\epsilon }h(\epsilon ))\) before it exits \(\mathring{B}_{\mathcal {H}}(0,L)\). Since this event is very rare in the moderate deviations range, we expect this term to be exponentially negligible. This claim is proved in the following proposition.

Proposition 4.1

The term

is exponentially negligible in the moderate deviations range for \(\epsilon \) sufficiently small.

Proof

Let \(t\in [0,T], \eta \in L^\infty \cap B_\mathcal {H}(0,L)\), \(\epsilon \) small enough to have \(\sqrt{\epsilon }h(\epsilon )<1.\) In view of (7),

Moreover, from (71) we have

where we used that \(\zeta ,\rho \in (0,1)\) and \(h(\epsilon )>1\). Combining the last two estimates, we deduce that for any \(v\in {\mathcal {A}},\)

An application of Hölder’s inequality along with (41) yields

Recall now that \({\hat{X}}_{x^*}^{\epsilon , v}\) solves

and, as \(\epsilon \rightarrow 0\), \(\{{\hat{X}}_{x^*}^{\epsilon , v}\}_{\epsilon >0}\) satisfies a large deviation principle in \(C([0,T];L^\infty (0,\ell ))\) with action functional \(\widetilde{{\mathcal {S}}}_{x^*,T}: C([0,T];L^\infty (0,\ell ))\rightarrow [0,\infty ] \) given by

where the convention \(\inf \varnothing =+\infty \) is in use (see e.g. [14], Theorems 6.2, 6.3). Passing to a convergent subsequence if necessary, we deduce that

where \({\mathcal {B}}_{\infty }(x^*,L):=\{\phi \in C([0,T];\mathcal {H}): \sup _{t\in [0,T]}\Vert \phi (t)-x^*\Vert _{L^\infty }<1\}.\) Hence, for \(\epsilon \) sufficiently small

or equivalently

Finally, we claim that \(\inf _{\phi \in {\mathcal {B}}_{\infty }(x^*,L)^c}\widetilde{{\mathcal {S}}}_{x^*,T}(\phi )\!>\!0\). Indeed, since the action functional is lower semi-continuous (see Lemma 5.1, [14]) and \({\mathcal {B}}_{\infty }(x^*,L)^c\subset C([0,T];L^\infty (0,\ell ))\) is closed, there exists a minimizer \(\phi ^*\in {\mathcal {B}}_{\infty }(x^*,L)^c.\) Furthermore, there exists \(u^*\in {\mathcal {P}}_{\phi ^*}\) such that

The last inequality fails if and only if \(u^*=0\) almost everywhere in \([0,T]\times [0,\ell ].\) Since \(x^*\) is an equilibrium of the uncontrolled system, the latter implies that \(\phi ^*(t)=x^*\) for all \(t\in [0,T],\) hence \(\phi ^*\notin {\mathcal {B}}_{\infty }(x^*,L)^c.\) This contradicts the initial choice of \(\phi ^*\) and concludes the argument. Therefore, the term of interest is exponentially negligible in the large deviations range and hence, by Remark 13, also in the moderate deviations range. \(\square \)

Next, we turn our attention to the third term in (72). The linearization error in this term is easier to control, since the process \(\sqrt{\epsilon }h(\epsilon )\hat{\eta }^{\epsilon ,v}\) is uniformly bounded by 1 in \(L^\infty -\)norm. This fact is used in the following lemma, whose proof can be found in Appendix A.

Lemma 4.3

There exists a constant \(C=C_{x^*,\ell ,f}>0\) such that, for all \(\eta \in B_{\infty }(0,1/(\sqrt{\epsilon }h(\epsilon )))\) and \(\epsilon \) sufficiently small, we have

As for \( {\mathfrak {H}}_{x^*}^{\epsilon }( U^\delta , Z)(t,\eta )- {\mathscr {H}}_{x^*}^{\epsilon }(Z_{x^*})(t,\eta ),\) straightforward algebra along with the arguments of Lemma 4.2 of [23] yield

where the quantity \(\beta _0(\eta ):=\rho ^\epsilon (\eta )\big (1-\rho ^\epsilon (\eta )\big )\big \Vert D_\eta F_1(\eta )\big \Vert ^2_{\mathcal {H}}\) is nonnegative since \(\rho ^\epsilon \in [0,1],\) and \(\gamma _1:={\mathscr {D}}_{x^*}(F_1)(\eta )=-a_1^f/h^2(\epsilon ).\) Combining the latter with (73) and substituting \(\delta =2/h^2(\epsilon )\) and
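The nonnegativity of \(\beta _0\) mirrors a general identity for exponential mollifications. Assuming the form \(U^\delta =-\delta \log \big (e^{-F_1/\delta }+e^{-F_2^\epsilon /\delta }\big )\) with \(F_2^\epsilon \) constant (an assumption, since (62) is not reproduced here), a one-dimensional calculation gives \((U^\delta )''=\rho F_1''-\delta ^{-1}\rho (1-\rho )|F_1'|^2\), so the mollification contributes the nonpositive term \(-\beta _0/\delta =-(h^2(\epsilon )/2)\beta _0\) when \(\delta =2/h^2(\epsilon )\). The Python sketch below checks this identity by finite differences for a hypothetical placeholder \(F_1\); it does not reproduce the paper's bound (74).

```python
import math


# Hypothetical placeholders (not the paper's subsolutions).
def F1(x: float) -> float:
    return 2.0 * (1.0 - x * x)


def dF1(x: float) -> float:
    return -4.0 * x


def d2F1(x: float) -> float:
    return -4.0


F2 = 1.5  # constant subsolution, so its derivatives vanish


def U(x: float, delta: float) -> float:
    """Assumed exponential mollification of min(F1, F2), computed stably."""
    a, b = -F1(x) / delta, -F2 / delta
    return -delta * (max(a, b) + math.log1p(math.exp(-abs(a - b))))


def rho(x: float, delta: float) -> float:
    """Weight attached to F1; lies in [0, 1]."""
    return math.exp((U(x, delta) - F1(x)) / delta)


def second_derivative_formula(x: float, delta: float) -> float:
    """rho * F1'' - beta0 / delta, with beta0 = rho (1 - rho) |F1'|^2 >= 0."""
    r = rho(x, delta)
    beta0 = r * (1.0 - r) * dF1(x) ** 2
    return r * d2F1(x) - beta0 / delta


delta, s = 0.1, 1e-4
points = (0.3, 0.45, 0.5, 0.55, 0.7)
# Central second difference of U compared against the closed-form expression.
max_err = max(
    abs((U(x + s, delta) - 2.0 * U(x, delta) + U(x - s, delta)) / s**2
        - second_derivative_formula(x, delta))
    for x in points
)
print(f"max |finite difference - formula| = {max_err:.2e}")
```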

we obtain the lower bound

At this point we partition \(B_\mathcal {H}(0,L)=B_1^f\cup B_2^f\cup B_3^f,\) where

and the constants \(\alpha ,\zeta , \kappa \in (0,1), K<0\) will be chosen later. The rest of this section studies the right-hand side of (74) on each component separately.

Lemma 4.4

Let \(\epsilon >0\) small enough to have \(\sqrt{\epsilon }h(\epsilon )<1\) and \( \zeta \in (0,1/2)\). For all \(\eta \in B_1^f\) (75), \( t\in [0,T]\) we have

up to terms that are exponentially negligible in the moderate deviations range.

We address the region \(B_3^f\) in the following lemma.

Lemma 4.5

Let \(\kappa \in (0,1),\) \(K=-\ln 3,\zeta _0>0,\) \(\zeta \in [\zeta _0,1/2),\) \(\epsilon >0\) small enough to have \(\sqrt{\epsilon }h(\epsilon )<1\) and \(h^{2(\kappa -1)}(\epsilon )\le \frac{9a_1^f}{2}(\zeta _0-2\zeta _0^2).\) For all \(\eta \in B_3^f\) (75), \(t\in [0,T]\) we have either

or, if \(h(\epsilon )\) is such that \(\sqrt{\epsilon }h^3(\epsilon )\rightarrow 0,\) then for sufficiently small \(\epsilon \) we have

It remains to study the region \(B_2^f.\) This is the most problematic region, since there is no guarantee that the weight \(\rho ^\epsilon \) is either exponentially negligible or of order one. The analysis is deferred to Appendix A.

Lemma 4.6

Let \(\alpha \in (0,1), \kappa <1-\alpha , K=-\ln 3,\) \(\zeta \in (\zeta _0,1/2),\) \(\epsilon >0\) small enough to have \(\sqrt{\epsilon }h(\epsilon )<1\) and \(h^{2(\kappa +\alpha -1)}(\epsilon )\le \frac{a^f_1}{2}.\) For all \(\eta \in B_2^f\) (75), \( t\in [0,T]\) we have either

where C does not depend on \(\epsilon \), or, if \(\sqrt{\epsilon }h^3(\epsilon )\longrightarrow 0\), then for \(\epsilon \) sufficiently small we have

Combining the three previous lemmas, we arrive at the following estimate for the third term in (72).

Lemma 4.7

There exists a constant C independent of \(T>0\) such that for \(\epsilon \) sufficiently small,

up to exponentially negligible terms in the moderate deviations range. Moreover, if \(h(\epsilon )\) is such that \(\lim _{\epsilon \rightarrow 0}\sqrt{\epsilon }h^3(\epsilon )=0\) then, for \(\epsilon \) sufficiently small,

up to exponentially negligible terms in the moderate deviations range.

Proof

(i) From Lemmas 4.4, 4.6(i), 4.5(i) we have

with probability 1, up to exponentially negligible terms. Since \(\hat{\tau }^{\epsilon ,v}_{x^*}\wedge \tau _{\infty }^{\epsilon }\le T\) with probability 1 and the constant is deterministic, the estimate follows by taking expectation.

(ii) The estimate follows from Lemmas 4.4, 4.6(ii), 4.5(ii). \(\square \)

We conclude this section with the proof of Theorem 4.1.

Proof of Theorem 4.1

In view of Lemma 4.1 and Lemma 4.7(i), (72) yields

up to exponentially negligible terms. In view of (68), we have \(Z\big (t,\eta \big )=(1-\zeta )U^\delta (t,\eta ).\) From Theorem 3.2 we have \(\lim _{\epsilon \rightarrow 0}\mathbb {E}[Z\big (\hat{\tau }^{\epsilon ,v}_{x^*},\hat{\eta }_{x^*}^{\epsilon , v}(\hat{\tau }^{\epsilon ,v}_{x^*})\big )]=0\). Thus for \(\epsilon \) sufficiently small we may write

As for the first term, since \(U^\delta \) is the exponential mollification of two functions, Lemma 4.1 of [23] gives that

Finally, the improved bound (70) follows by invoking Lemma 4.7(ii). \(\square \)
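The smallness condition on \(\epsilon \) in Theorem 4.1 is a lower bound on \(h(\epsilon )\): since \(\kappa +\alpha -1<0\), the map \(h\mapsto h^{2(\kappa +\alpha -1)}\) is decreasing. The Python helper below (the name `min_h_theorem_4_1` is hypothetical) computes the resulting threshold and checks, for the sample parameters shown, that it also meets the conditions of Lemmas 4.5 and 4.6; in general this follows from \(h>1\), which gives \(h^{2(\kappa -1)}\le h^{2(\kappa +\alpha -1)}\). The sketch assumes \(a_1^f>0\) and \(\zeta _0\in (0,1/2)\), so that the right-hand side of the condition is positive; the parameter values are arbitrary.

```python
def min_h_theorem_4_1(a1: float, zeta0: float, kappa: float, alpha: float) -> float:
    """Smallest h >= 1 with h**(2*(kappa+alpha-1)) <= min(4.5*a1*(zeta0-2*zeta0**2), a1/2)."""
    if a1 <= 0 or not 0 < zeta0 < 0.5:
        raise ValueError("need a1 > 0 and zeta0 in (0, 1/2)")
    if not (0 < alpha < 1 and 0 < kappa < 1 - alpha):
        raise ValueError("need alpha in (0, 1) and kappa in (0, 1 - alpha)")
    bound = min(4.5 * a1 * (zeta0 - 2 * zeta0**2), a1 / 2)
    p = 2 * (kappa + alpha - 1)  # strictly negative exponent
    # h**p is decreasing in h, so h**p <= bound  <=>  h >= bound**(1/p).
    return max(1.0, bound ** (1 / p))


a1, zeta0, kappa, alpha = 1.0, 0.1, 0.3, 0.2  # arbitrary sample values
h_min = min_h_theorem_4_1(a1, zeta0, kappa, alpha)
lemma_4_5_ok = h_min ** (2 * (kappa - 1)) <= 4.5 * a1 * (zeta0 - 2 * zeta0**2)
lemma_4_6_ok = h_min ** (2 * (kappa + alpha - 1)) <= a1 / 2
print(h_min, lemma_4_5_ok, lemma_4_6_ok)
```

Any \(\epsilon \) with \(h(\epsilon )\ge \) `h_min` then satisfies the hypothesis of the theorem for these parameters.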

5 The case of a double-well potential

In this section we specialize our results to SRDEs in which the differential operator is \({\mathcal {A}}=\Delta \) (i.e. the second derivative operator in one spatial dimension) and the reaction term takes the form \(f=-V'_f,\) where \(V_f\) is a double-well potential such as the one depicted below. This choice is possible in view of Hypotheses 2(a) and 2(b), which allow arbitrary polynomial growth. Thus, we assume that \(V_f\) has two global minima and a local maximum which, for simplicity, is assumed to lie at the origin. Without loss of generality, we take \(f'(0)=-V''_f(0)=1\). Such SRDEs arise as scaling limits of particle systems with nearest-neighbor coupling that evolve in the inverted potential \(-V_f\) (see e.g. [4], Chapter 1) and provide one of the simplest examples of non-trivial dynamical behavior.
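For concreteness, a standard example (an assumption here; the specific potential used in the simulations is not shown in this excerpt) is the quartic \(V_f(x)=x^4/4-x^2/2\), which gives the Allen–Cahn nonlinearity \(f(x)=x-x^3\). The Python snippet checks the properties required above: critical points at \(-1,0,1\), a local maximum at the origin, global minima at \(\pm 1\), and \(f'(0)=1\).

```python
def V_f(x: float) -> float:
    """Hypothetical quartic double-well potential."""
    return 0.25 * x**4 - 0.5 * x**2


def f(x: float) -> float:
    """Reaction term f = -V_f'."""
    return x - x**3


s = 1e-5
# Central difference for f'(0); the text requires f'(0) = -V_f''(0) = 1.
f_prime_0 = (f(s) - f(-s)) / (2 * s)
# Second differences of V_f at the critical points (negative: maximum, positive: minimum).
V_second = {c: (V_f(c + s) - 2 * V_f(c) + V_f(c - s)) / s**2 for c in (-1.0, 0.0, 1.0)}
critical_points = [c for c in (-1.0, 0.0, 1.0) if f(c) == 0.0]
print(f_prime_0, V_second, critical_points)
```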