Abstract

We prove an asymptotic coupling theorem for the 2-dimensional Allen–Cahn equation perturbed by a small space-time white noise. We show that with overwhelming probability two profiles that start close to the minimisers of the potential of the deterministic system contract exponentially fast in a suitable topology. In the 1-dimensional case a similar result was shown in Martinelli et al. (Commun Math Phys 120(1):25–69, 1988; J Stat Phys 55(3–4):477–504, 1989). It is well-known that in two or more dimensions solutions of this equation are distribution-valued, and the equation has to be interpreted in a renormalised sense. Formally, this renormalisation corresponds to moving the minima of the potential infinitely far apart and making them infinitely deep. We show that despite this renormalisation, solutions behave like perturbations of the deterministic system without renormalisation: they spend large stretches of time close to the minimisers of the (un-renormalised) potential and the exponential contraction rate of different profiles is given by the second derivative of the potential in these points. As an application we prove an Eyring–Kramers law for the transition times between the stable solutions of the deterministic system for fixed initial conditions.

1 Introduction

We are interested in the behaviour of solutions to the Allen–Cahn equation, perturbed by a small noise term. The deterministic equation is given by

\[ \partial_t X = \Delta X - (X^3 - X), \tag{1.1} \]

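and it is well-known that (1.1) is a gradient flow with respect to the potential

\[ V(X) = \int \Big( \tfrac{1}{2} |\nabla X(z)|^2 - \tfrac{1}{2} X(z)^2 + \tfrac{1}{4} X(z)^4 \Big) \, \mathrm{d}z. \tag{1.2} \]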

The fluctuation-dissipation theorem suggests an additive Gaussian space-time white noise \(\xi \) as a natural random perturbation of (1.1); so we consider

for a small parameter \(\varepsilon >0\).

In the 1-dimensional case, i.e. the case where the solution X depends on time and a 1-dimensional spatial argument, the behaviour of solutions to (1.3) is well-understood. Solutions exhibit the phenomenon of metastability, i.e. they typically spend large stretches of time close to the minimisers of the potential (1.2) with rare and relatively quick noise-induced transitions between them. Early contributions go back to the 1980s, when Faris and Jona-Lasinio [9] studied the system on the level of large deviations.

We are particularly interested in the “exponential loss of memory property” first observed by Martinelli, Olivieri and Scoppola in [17, 18]. They studied the flow map induced by (1.3), i.e. the random map \(x \mapsto X(t;x)\) which associates to any initial condition the corresponding solution at time t, and showed that for large t the map becomes essentially constant. They also showed that with overwhelming probability, solutions that start within the basin of attraction of the same minimiser of V contract exponentially fast, with exponential rate given by the smallest eigenvalue of the linearisation of V in this minimiser. This implies for example that the law of such solutions at large times is essentially insensitive to the precise location at which they are started.

It is very natural to consider higher dimensional analogues of (1.3), but unfortunately for space dimension \(d \ge 2\), Eq. (1.3) is ill-posed. In fact, for \(d \ge 2\) the space-time white noise becomes so irregular that solutions have to be interpreted in the sense of Schwartz distributions, and the interpretation of the nonlinear term is a priori unclear. This kind of singular stochastic partial differential equation (SPDE) has received a lot of attention recently (see e.g. [6, 10, 12]). The solution proposed in these works is to renormalise the equation, by removing some infinite terms, formally leading to the equation

Note that formally, this renormalisation corresponds to moving the minima of the double-well potential out to \(\pm \infty \) and making them infinitely deep at the same time. So at first glance, it seems unclear why these renormalised distribution-valued solutions should exhibit similar behaviour to the 1-dimensional function-valued solutions of (1.3).
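To make this formal picture concrete, here is a minimal worked example. It assumes, purely for illustration, that the renormalisation replaces the pointwise nonlinearity \(x^3 - x\) by \(x^3 - (1+c)x\) for a constant \(c>0\) that is eventually sent to infinity; the symbols \(V_c\) and c are not notation from the text.

\[ V_c(x) = \tfrac{1}{4} x^4 - \tfrac{1+c}{2} x^2, \qquad V_c'(x) = x^3 - (1+c) x = 0 \iff x \in \{0, \pm \sqrt{1+c}\}, \qquad V_c(\pm \sqrt{1+c}) = -\tfrac{1}{4} (1+c)^2 . \]

Under this assumption the minima sit at \(\pm \sqrt{1+c}\) and have depth \(\tfrac{1}{4}(1+c)^2\), so both the location and the depth diverge as \(c \rightarrow \infty \).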

In [14] Hairer and the second named author studied the small \(\varepsilon \) asymptotics for (1.4) for space dimension \(d=2\) and 3 on the level of Freidlin–Wentzell type large deviations. They obtained a large deviation principle with rate function \(\mathcal {I}\) given by

In fact, a result in a similar spirit had already appeared in the 90s [15]. The striking fact is that this rate function is exactly the 2-dimensional version of the rate function obtained in the 1-dimensional case [9]. The infinite renormalisation constant does not affect the rate functional. This result implies that for small \(\varepsilon \) solutions of the renormalised SPDE (1.4) stay close to solutions of the deterministic PDE (1.1) suggesting that (1.4) may indeed be the natural small noise perturbation of (1.1).

In this article we consider (1.4) over a 2-dimensional torus \(\mathbb {T}^2 = \mathbb {R}^2/L\mathbb {Z}^2\) for \(L<2 \pi \). It is known that under this assumption on the torus size L, the deterministic equation (1.1) has exactly three stationary solutions, namely the constant profiles \(-1,0,1\) (see [16, Appendix B.1]). Here \(\pm 1\) are stable minimisers of V and 0 is unstable. We prove that in the small noise regime with overwhelming probability solutions that start close to the same stable minimiser \(\pm 1\) contract exponentially fast. The exponential contraction rate is arbitrarily close to 2, the second derivative of the double-well \(x \mapsto \frac{1}{4} x^4 - \frac{1}{2} x^2\) in \(\pm 1\). This is precisely the 2-dimensional version of [17, Corollary 3.1].
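The value 2 of the contraction rate can be checked by a short computation. The sketch below uses only the double-well from the text, written here as f (a symbol chosen for illustration), and the standard linearisation of a gradient flow around a constant profile.

\[ f(x) = \tfrac{1}{4} x^4 - \tfrac{1}{2} x^2, \qquad f'(x) = x^3 - x, \qquad f''(x) = 3x^2 - 1, \qquad f''(\pm 1) = 2, \qquad f''(0) = -1 . \]

Linearising (1.1) around a constant profile \(u \in \{-1,0,1\}\) gives \(\partial _t h = \Delta h - f''(u) h\). Small perturbations of \(\pm 1\) therefore decay at rate at least 2, while the constant perturbation of 0 grows at rate 1.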

On a technical level we work with the Da Prato–Debussche decomposition (see Sect. 2 for more details). An immediate observation is that differences of any two profiles have much better regularity than the solutions themselves. We split the time axis into random “good” and “bad” intervals depending on whether a reference profile is close to \(\pm 1\) or not. The key idea is that on “good” intervals solutions should contract exponentially, while they should not diverge too fast on “bad” intervals. Furthermore, “good” intervals should be much longer than “bad” intervals.

The control on the “good” intervals is relatively straightforward: the exponential contraction follows by linearising the equation and the fact that these intervals are typically long follows from exponential moment bounds on the explicit stochastic objects appearing in the Da Prato–Debussche approach. The control on the “bad” intervals is much more involved: in the 1-dimensional case two profiles cannot diverge too fast, because the second derivative of the double-well potential is bounded from below. But in the 2-dimensional case, where solutions are distribution-valued, there is no obvious counterpart of this property. Instead we use a strong a priori estimate obtained in our previous work [24] and the local Lipschitz continuity of the non-linearity. Ultimately this yields an exponential growth bound where the exponential rate is given by a polynomial in the explicit stochastic objects. We use a large deviation estimate to prove that these intervals cannot be too long. In the final step we show that the exponential contraction holds for all t if a certain random walk with positive drift stays positive for all times. This random walk is then analysed using techniques developed for the classical Cramér–Lundberg model in risk theory.

The original motivation for our work was to prove an Eyring–Kramers law for the transition times of X. In [2] Berglund, Di Gesù and the second named author studied spectral Galerkin approximations \(X_N\) of (1.4) and obtained explicit estimates on the expected first transition times from a neighbourhood of \(-1\) to a neighbourhood of 1. These estimates give a precise asymptotic as \(\varepsilon \rightarrow 0\) and hold uniformly in the discretisation parameter N. Their method was based on the potential theoretic approach developed in the finite-dimensional context by Bovier et al. in [3]. This approach relies heavily on the reversibility of the dynamics and provides explicit formulas for the expected transition times in terms of certain integrals of the reversible measure. The key observation in [2] was that in the context of (1.3) these integrals can be analysed uniformly in the parameter N using the classical Nelson’s estimate [22] from constructive Quantum Field Theory. However, the result in [2] was not optimal for the following two reasons: First, it does not allow one to pass to the limit as \(N\rightarrow \infty \) to retrieve the estimate for the transition times of X. Second, and more importantly, the bounds could only be obtained for a certain N-dependent choice of initial distribution on the neighbourhood of \(-1\). This problem is inherent to the potential theoretic approach, which only yields an exact formula for the diffusion started in this so-called normalised equilibrium measure. In fact, a large part of the original work [3] was dedicated to removing this problem using regularity theory for the finite-dimensional transition probabilities.

In this paper we overcome these two barriers. We first justify the passage to the limit \(N\rightarrow \infty \) based on our previous work [24]: we use the strong a priori estimates on the level of the approximation \(X_N\) and the support theorem obtained there to prove uniform integrability of the transition times of \(X_N\). The only difficulty here comes from the action of the Galerkin projection on the non-linearity, which does not allow one to test the equation with powers greater than 1. To remove the unnatural assumption on the initial distribution we make use of our main result, the exponential contraction estimate. This estimate allows us to couple the solution started with an arbitrary but fixed initial condition with the solution started in the normalised equilibrium measure.

1.1 Outline

In Sect. 2 we briefly review the solution theory of (1.4). In Sect. 3 we state our main results, that is, the exponential loss of memory, Theorem 3.1, and the Eyring–Kramers law, Theorem 3.5. In Sect. 4 we prove Theorem 3.1 based on some auxiliary propositions. These propositions are proved in Sects. 5 and 6. Finally, in Sect. 7 we prove the Eyring–Kramers law, Theorem 3.5, generalising [2, Theorem 2.3]. Several known results that are used throughout this article as well as some additional technical statements can be found in the Appendix.

1.2 Notation

We fix a torus \(\mathbb {T}^2 = \mathbb {R}^2/L\mathbb {Z}^2\) of size \(0<L<2\pi \). All function spaces are defined over \(\mathbb {T}^2\). We write \(\mathcal {C}^\infty \) for the space of smooth functions and \(L^p, p\in [1,\infty ]\), for the space of p-integrable periodic functions endowed with the usual norm \(\Vert \cdot \Vert _{L^p}\) and the usual interpretation if \(p=\infty \).

We denote by \(\mathcal {B}^\alpha _{p,q}\) the (inhomogeneous) Besov space of regularity \(\alpha \) and exponents \(p,q\in [1,\infty ]\) with norm \(\Vert \cdot \Vert _{\mathcal {B}^\alpha _{p,q}}\) (see Definition A.1). We write \(\mathcal {C}^\alpha \) and \(\Vert \cdot \Vert _{\mathcal {C}^\alpha }\) to denote the space \(\mathcal {B}^\alpha _{\infty ,\infty }\) and the corresponding norm. Many useful results about Besov spaces that we repeatedly use throughout the article can be found in Appendix A.

For any Banach space \((V, \Vert \cdot \Vert _V)\) we denote by \(B_V(x_0;\delta )\) the open ball \(\{x\in V: \Vert x-x_0\Vert _V < \delta \}\) and by \({\bar{B}}_V(x_0;\delta )\) its closure.

Throughout this article we write C for a positive constant which might change from line to line. In proofs we sometimes write \(\lesssim \) instead of \(\le C\). We also write \(a\vee b\) and \(a\wedge b\) to denote the maximum and the minimum of a and b.

In several statements we write \(\pm 1\) to signify that the statement holds true for either choice of \(+1\) or \(-1\).

2 Preliminaries

Fix a probability space \((\Omega ,\mathcal {F},\mathbb {P})\) and let \(\xi \) be a space-time white noise defined over \(\Omega \). More precisely, \(\xi \) is a family \(\{\xi (\phi )\}_{\phi \in L^2((0,\infty )\times \mathbb {T}^2)}\) of centred Gaussian random variables such that

A natural filtration \(\{\mathcal {F}_t\}_{t\ge 0}\) is given by the usual augmentation (as in [23, Chapter 1.4]) of

We interpret solutions of (1.4) following [6] and [20]. We write \(X( \cdot ;x)\) for the solution started in x and use the Da Prato–Debussche decomposition of \(X(\cdot ;x)\) into a remainder term v and a term built from the solution of the stochastic heat equation

The remainder term v solves

where the stochastic coefficients are the 2nd and 3rd Wick powers of the solution to the stochastic heat equation. These random distributions can be constructed as limits of renormalised powers of a spatial Galerkin approximation of the solution to the stochastic heat equation, and \(\mathfrak {R}_N\) is a renormalisation constant which diverges logarithmically in the regularisation parameter N. The value of \(\mathfrak {R}_N\) is given by

Note that the Galerkin approximation is stationary in the space variable z, hence the expectation is independent of z. We refer the reader to [6, Lemma 3.2], [24, Section 2] for more details on the construction of the Wick powers. We recall that the stochastic objects can be realised as continuous (in time) processes taking values in \(\mathcal {C}^{-\alpha }\) for \(\alpha >0\) and that \(\mathbb {P}\)-almost surely for every \(T>0\) and \(\alpha '>0\)

The blow-up of the stochastic objects for t close to 0 is due to the fact that we define them with zero initial condition, but we work with a time-independent renormalisation constant \(\mathfrak {R}_N\) (see (2.3)). We define the stochastic heat equation with a Laplacian with mass 1 because this allows us to prove exponential moment bounds of the stochastic objects which hold uniformly in time (see Proposition D.1). Throughout the paper we use a single symbol to refer to all the stochastic objects simultaneously. In this notation (2.4) turns into

We fix \(\alpha _0\in (0,\frac{1}{3})\) (to measure the regularity of the initial condition x in \(\mathcal {C}^{-\alpha _0}\)), \(\beta >0\) (to measure the regularity of v in \(\mathcal {C}^\beta \)) and \(\gamma >0\) (to measure the blow-up of \(\Vert v(t;x)\Vert _{\mathcal {C}^\beta }\) for t close to 0) such that

We also assume that \(\alpha '>0\) and \(\alpha >0\) in (2.4) satisfy

In [24, Theorems 3.3 and 3.9] it was shown that for every \(x\in \mathcal {C}^{-\alpha _0}\) there exists a unique solution \(v\in C\left( (0,\infty );\mathcal {C}^\beta \right) \) of (2.2) such that for every \(T>0\)

Remark 2.1

In Condition (2.5) \(\beta \) has to be strictly less than \(\frac{2}{3}\). This is necessary if one wants to treat all of the terms arising in a fixed point problem for (2.2) with the same norm for v. A simple post-processing of [24, Theorems 3.3 and 3.9] shows that in fact v is continuous in time taking values in \(\mathcal {C}^{2-\lambda }\) for any \(\lambda > \alpha \).

Equations (2.1), (2.2) suggest that indeed X can be seen as a perturbation of the Allen–Cahn equation (1.1), because the stochastic terms in (2.2) all appear with a positive power of \(\varepsilon \). It is important to note that v is much more regular than X. The irregular part of \(X(\cdot ;x)\) is the stochastic heat equation term in the decomposition, which is defined with zero initial condition and hence does not depend on x. Therefore differences of solutions are much more regular than solutions themselves.

We repeatedly work with restarted stochastic terms: for \(s>0\) we define the restarted stochastic heat equation term as the solution of

and consider its Wick powers. By [24, Proposition 2.3] for every \(s>0\), the restarted stochastic objects are independent of \(\mathcal {F}_s\) and equal in law to the original ones. For \(t\ge s\) we can define a restarted remainder \(v_s(t;X(s;x))\) through the analogous decomposition of \(X(t;x)\), in which the restarted stochastic term replaces the original one. Rearranging (2.2) and using the pathwise identities in [24, Corollary 2.4] one can see that \(v_s\) solves

In [24, Theorem 4.2] this is used to prove the Markov property for \(X(\cdot ;x)\).

3 Main results

In this article we prove the following main theorem.

Theorem 3.1

For every \(\kappa >0\) there exist \(\delta _0, a_0, C>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

Proof

See Sect. 4.2. \(\square \)

This theorem is a variant of [17, Corollary 3.1] in space dimension \(d=2\), but in that work the supremum is taken over both x and y inside the probability measure. We also obtain this version of the theorem as a corollary.

Corollary 3.2

For every \(\kappa >0\) there exist \(\delta _0,a_0,C>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

Proof

See Sect. 4.2. \(\square \)

Remark 3.3

The restriction \(t\ge 1\) in Theorem 3.1 appears only because we measure \(y-x\) in a lower regularity norm than \(X(t;y) - X(t;x)\). To prove the theorem we first prove Theorem 4.9, where we assume that \(y-x\in \mathcal {C}^\beta \), and in this case we prove a bound which holds for every \(t>0\).

Remark 3.4

Theorem 3.1 is an asymptotic coupling of solutions that start close to the same minimiser. In [17, Proposition 3.4] it was shown that in the 1-dimensional case, solutions which start with initial conditions x and y close to different minimisers also contract exponentially fast, but only after time \(T_\varepsilon \propto \mathrm {e}^{[(V(0)-V(\pm 1))+\eta ]/\varepsilon }\) for any \(\eta >0\). This is the “typical” time needed for one of the two profiles to jump close to the other minimiser. We expect that Theorem 3.1 and the large deviation theory developed in [14] could be combined to prove a similar result in the case \(d=2\).
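For orientation, the energy barrier in this exponent can be evaluated explicitly on constant profiles. This is a sketch under the assumption that V in (1.2) is the usual Allen–Cahn energy \(V(X) = \int _\Lambda \big( \frac{1}{2} |\nabla X|^2 + \frac{1}{4} X^4 - \frac{1}{2} X^2 \big) \, \mathrm {d}z\) over a spatial domain \(\Lambda \) on which constants are admissible; the symbol \(\Lambda \) is introduced here for illustration only.

\[ V(0) = 0, \qquad V(\pm 1) = \int _\Lambda \Big( \tfrac{1}{4} - \tfrac{1}{2} \Big) \, \mathrm {d}z = -\tfrac{1}{4} |\Lambda |, \qquad V(0) - V(\pm 1) = \tfrac{1}{4} |\Lambda | . \]

Under this assumption the barrier equals a quarter of the volume of the domain, which would be \(L^2/4\) on the torus \(\mathbb {T}^2\) of size L.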

As an application of Theorem 3.1 we prove an Eyring–Kramers law for the transition times of X. Before we state our main result in this direction let us briefly introduce some extra notation.

For \(\delta \in (0,1/2)\) and \(\alpha >0\) we define the symmetric subsets \(A(\alpha ;\delta )\) and \(B(\alpha ;\delta )\) of \(\mathcal {C}^{-\alpha }\) by

where \(D_\perp \) is the closed ball of radius \(\delta \) in \(\mathcal {C}^{-\alpha }\) and \({\bar{f}} = L^{-2} \langle f, 1 \rangle \). For \(x\in A(\alpha ;\delta )\) we define the transition time

Last, for \(k\in \mathbb {Z}^2\) we let

The sequences \(\{\lambda _k\}_{k\in \mathbb {Z}^2}\) and \(\{\nu _k\}_{k\in \mathbb {Z}^2}\) are the eigenvalues of the operators \(-\Delta -1 \) and \(-\Delta + 2\) endowed with periodic boundary conditions.
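As a quick numerical sanity check, the following Python sketch tabulates these eigenvalues. It assumes the standard Fourier eigenvalues \((2\pi /L)^2 |k|^2\) of \(-\Delta \) on \(\mathbb {T}^2\); the function name `eigenvalues`, the cutoff `kmax` and the sample value L = 6 are illustrative choices, not taken from the text.

```python
import math


def eigenvalues(L, kmax):
    """Return dicts {k: lambda_k} and {k: nu_k} for k in [-kmax, kmax]^2.

    Assumption: -Laplacian on the torus R^2 / (L Z^2) has the Fourier
    eigenvalues (2*pi/L)^2 * |k|^2, k in Z^2.
    """
    lam, nu = {}, {}
    for k1 in range(-kmax, kmax + 1):
        for k2 in range(-kmax, kmax + 1):
            laplace = (2 * math.pi / L) ** 2 * (k1 * k1 + k2 * k2)
            lam[(k1, k2)] = laplace - 1.0  # eigenvalue of -Delta - 1
            nu[(k1, k2)] = laplace + 2.0   # eigenvalue of -Delta + 2
    return lam, nu


# Sample torus size below the threshold 2*pi (about 6.283).
lam, nu = eigenvalues(L=6.0, kmax=3)
negative_modes = [k for k, value in lam.items() if value < 0]
```

For \(L<2\pi \) the only negative \(\lambda _k\) in this table is the one at \(k=0\), matching the single unstable direction of the profile 0, while all \(\nu _k\) are at least 2.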

With this notation at hand the Eyring–Kramers law can be expressed as follows. Notice that by symmetry the same result holds if we swap the neighbourhoods of \(-1\) and 1 below.

Theorem 3.5

There exists \(\delta _0>0\) such that the following holds. For every \(\alpha \in (0,\alpha _0)\) and \(\delta \in (0,\delta _0)\) there exist \(c_+,c_->0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

Proof

See Sect. 7.3. \(\square \)

4 Proof of the exponential loss of memory

In this section we prove the exponential loss of memory. In Sect. 4.1 we present the basic ingredients needed in the proof and in Sect. 4.2 we give the proofs of Theorem 3.1 and Corollary 3.2.

4.1 Methodology

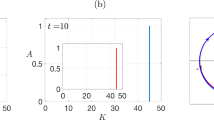

We define two sequences \(\{\nu _i(x)\}_{i\ge 1}\) and \(\{\rho _i(x)\}_{i\ge 1}\) of stopping times which partition our time axis and allow us to keep track of the time spent close to and away from the minimisers \(\pm 1\) (see Fig. 1 for a sketch). On the “good” intervals \([\rho _{i-1}(x), \nu _i(x)]\) we require both the restarted diagrams to be small and the restarted remainder \(v_{\rho _{i-1}(x)}\) to be close to \(\pm 1\). The “bad” intervals \([\nu _i(x),\rho _i(x)]\) end when \(X(\cdot ;x)\) re-enters a small neighbourhood of the minimisers. The stopping times \(\rho _i(x)\) are defined in terms of the \(\mathcal {C}^{-\alpha _0}\) norm for \(X(\cdot ;x)\), while we define good intervals in terms of the stronger \(\mathcal {C}^\beta \) topology for \(v_{\rho _{i-1}(x)}\). To connect the two, we need to allow for a blow-up close to the starting point of the “good” intervals.

Definition 4.1

For \(x\in \mathcal {C}^{-\alpha _0}\) we define the sequence of stopping times \(\{\rho _i(x)\}_{i\ge 0}, \{\nu _i(x)\}_{i\ge 1}\) recursively by \(\rho _0(x) = 0\) and

We now define the time increments

The process \(X( \cdot ; x)\) is expected to spend long time intervals close to the minimisers \(\pm 1\), which corresponds to large values of \(\tau _i(x)\). Large values of \(\sigma _i(x)\) are “atypical”. This behaviour is established in Propositions 6.3 and 6.6.

The following proposition shows contraction on the “good” intervals. We distinguish between the cases (4.2) and (4.3) for \(y-x\) that lie in \(\mathcal {C}^\beta \) and \(\mathcal {C}^{-\alpha _0}\) respectively. The Da Prato–Debussche decomposition shows that differences of any two profiles lie in \(\mathcal {C}^\beta \) for any \(t>0\) but at \(t=0\) they maintain the irregularity of the initial conditions. Hence we only use (4.3) on the first “good” interval.

Proposition 4.2

For every \(\kappa >0\) there exist \(\delta _0,\delta _1,\delta _2>0\) and \(C>0\) such that if \(\Vert x-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}}\le \delta _0\) and \(y-x\in \mathcal {C}^\beta , \Vert y-x\Vert _{\mathcal {C}^\beta } \le \delta _0\) then

for every \(t\le \tau _1(x)\) defined with respect to \(\delta _1\) and \(\delta _2\). If we only assume that \(\Vert y-x\Vert _{\mathcal {C}^{-\alpha _0}}\le \delta _0\) then

for every \(t\le \tau _1(x)\).

Proof

See Sect. 5.1. \(\square \)

Our next aim is to control the growth of the differences on the “bad” intervals in terms of the stochastic objects. This is done by partitioning the intervals \([\nu _i(x), \rho _i(x)]\) into tiles of length one. To achieve independence we restart the stochastic objects at the starting point of each tile.

Definition 4.3

For \(k\ge 0\) and \(\rho \ge \nu \ge 0\) let \(t_k = \nu +k\). For \(k \ge 1\) we define a random variable \(L_k(\nu ,\rho )\) by

In our analysis we use a second tiling defined by setting \(s_k= t_k + \frac{1}{2} \), i.e. the tiles \([t_k,t_{k+1}]\) and \([s_k, s_{k+1}]\) overlap. In order to bound \(X(t;y) - X(t;x)\) on a time interval \([t_k,s_k]\) we restart the stochastic objects at \(s_{k-1}\) and write \(X(t;y) - X(t;x) = v_{s_{k-1}}(t;X(s_{k-1};y)) - v_{s_{k-1}}(t;X(s_{k-1};x))\). In Lemma 5.1 we upgrade the strong a priori bound obtained in [24, Proposition 3.7] to get a control on the \(\mathcal {C}^\beta \) norm of both remainders. This bound holds uniformly over all possible values of \(X(s_{k-1};y)\) and \(X(s_{k-1};x)\) and while the bound allows for a blow-up for times t close to \(s_{k-1}\) it holds uniformly over all times in \([t_k,s_k]\). Ultimately, the bound only depends on \(L_k(\nu +\frac{1}{2},\rho )\) in a polynomial way as shown in Fig. 2. Then we can use the local Lipschitz property of the non-linearity in (2.2) to bound the exponential growth rate of \(X(t;y) - X(t;x)\). For the first interval \([t_0,t_1]\) we do not use this trick, because we want to avoid bounds that depend on the realisation of the white noise outside of \([\nu , \rho ]\). On this interval, we make use of an a priori assumption that we have some control on \(\Vert X(\nu ;y)\Vert _{\mathcal {C}^{-\alpha _0}}\) and \(\Vert X(\nu ;x)\Vert _{\mathcal {C}^{-\alpha _0}}\).

Fig. 2 Bounds on the \(\mathcal {C}^\beta \) norm of the restarted remainder v on the overlapping tiles of the partition of \([\nu ,\rho ]\). On a time interval \([t_k,s_k]\) we restart the stochastic objects at time \(s_{k-1}\) and bound \(v_{s_{k-1}}\) by a polynomial function of \(L_k\left( \nu +\frac{1}{2},\rho \right) \). On a time interval \([s_k,t_{k+1}]\) we restart the stochastic objects at time \(t_k\) and bound \(v_{t_k}\) by a polynomial function of \(L_k\left( \nu ,\rho \right) \).
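The bookkeeping behind the two overlapping tilings can be sketched in a few lines of Python. The helper `restart_time` is hypothetical and only encodes the rule described above; in particular, treating the first tile as restarted at \(\nu \) itself is an assumption made for illustration.

```python
import math


def restart_time(t, nu):
    """Return the time at which the stochastic objects are restarted
    in order to bound the remainder at time t >= nu.

    Tiles: t_k = nu + k and s_k = t_k + 1/2.
    - First tile [t_0, t_1): no earlier restart is used; we model this
      as restarting at nu itself (assumption for illustration).
    - On [t_k, s_k) with k >= 1: restart at s_{k-1} = t_k - 1/2.
    - On [s_k, t_{k+1}) with k >= 1: restart at t_k.
    """
    if t < nu:
        raise ValueError("t must be at least nu")
    k = math.floor(t - nu)
    if k == 0:
        return nu
    t_k = nu + k
    s_k = t_k + 0.5
    return t_k - 0.5 if t < s_k else t_k


# Example with nu = 0: times in [1, 1.5) use s_0 = 0.5, times in [1.5, 2) use t_1 = 1.
example = [restart_time(t, 0.0) for t in (0.25, 1.25, 1.75, 2.0)]
```

For every \(t \ge \nu + 1\) the returned restart time lies between 1/2 and 1 time units in the past, which is why a blow-up of the bound close to the restart time does not affect the estimate on the tile.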

Proposition 4.4

Let \(R>0\). Then there exists a constant \(C\equiv C(R)>0\) such that for every \(\Vert X(\nu ;x)\Vert _{\mathcal {C}^{-\alpha _0}}, \Vert X(\nu ;y)\Vert _{\mathcal {C}^{-\alpha _0}} \le R, \rho >\nu \ge 0\) and \(t\in [\nu ,\rho ]\)

where

for \(L_k\) as in (4.4), and for some constants \(p_0\ge 1\) and \(c_0\equiv c_0(R),L_0\equiv L_0(R)\ge 0\).

Proof

See Sect. 5.2. \(\square \)

If we assume that \(y-x\in \mathcal {C}^\beta \), combining the estimates in Propositions 4.2 and 4.4 suggests the bound

for any \(N\ge 1\). If we can show that the exponents satisfy

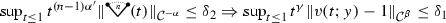

then (4.7) yields exponential contraction at time \(\rho _N(x)\) with rate \(2-\kappa \). The difference of the right hand side and the left hand side of the last inequality is given by the random walk \(S_N(x)\) in the next definition.

Definition 4.5

Let \(\Vert x-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0\). We define the random walk \((S_N(x))_{N\ge 1}\) by

where \(M_0 = 2\log C\) for \(C>0\) as in Propositions 4.2 and 4.4.

Fig. 3 “Typical” realisations of a random walk \(S_N = \sum _{i\le N} (f_i - g_i)\) for \(f_i \sim \mathrm {e}^{0.5/\varepsilon }\exp (1), g_i \sim \mathrm {e}^{0.1/\varepsilon } \mathrm {Weibull}(0.5,1), N=50\) and \(\varepsilon =0.01\). The choice of a Weibull distribution here captures the fact that the random variables \(L(\nu _i(x),\rho _i(x);\sigma _i(x)) + (2-\kappa ) \sigma _i(x) + M_0\) in Definition 4.5 have stretched exponential tails as shown in Proposition 6.7.

The next proposition shows that the random walk \(S_N(x)\) stays positive for every \(N \ge 1\) with overwhelming probability (see Fig. 3 for an illustration). The proof is based on a variant of the classical Cramér–Lundberg model in risk theory (see [8, Chapter 1.2]). In this classical model a random walk \(S_N=\sum _{i\le N}(f_i - g_i)\) with i.i.d. exponential random variables \(f_i\) and i.i.d. non-negative random variables \(g_i\) is considered. The probability for \(S_N\) to stay positive for every \(N\ge 1\) can be calculated explicitly in terms of the expectations of \(f_i\) and \(g_i\) using a renewal equation. In our case we use the Markov property and Propositions 6.3 and 6.7 to compare the random walk \(S_N(x)\) in Definition 4.5 to this classical case.
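The classical comparison model is easy to simulate. The sketch below is not the authors' code; it draws one path with the parameters quoted for the illustration in Fig. 3 (\(f_i \sim \mathrm {e}^{0.5/\varepsilon }\exp (1)\), \(g_i \sim \mathrm {e}^{0.1/\varepsilon } \mathrm {Weibull}(0.5,1)\), \(N=50\), \(\varepsilon =0.01\)), reading Weibull(0.5, 1) as shape 0.5 and scale 1, which is an assumption. The function name and the seed are illustrative.

```python
import math
import random


def simulate_walk(eps=0.01, n_steps=50, seed=0):
    """Simulate S_N = sum_{i<=N} (f_i - g_i) for N = 1, ..., n_steps.

    f_i ~ exp(0.5/eps) * Exp(1)                      (gains on "good" intervals)
    g_i ~ exp(0.1/eps) * Weibull(shape=0.5, scale=1) (losses; assumed reading)
    """
    rng = random.Random(seed)
    f_scale = math.exp(0.5 / eps)
    g_scale = math.exp(0.1 / eps)
    path, s = [], 0.0
    for _ in range(n_steps):
        f = f_scale * rng.expovariate(1.0)
        # random.weibullvariate(alpha, beta): alpha is the scale, beta the shape.
        g = g_scale * rng.weibullvariate(1.0, 0.5)
        s += f - g
        path.append(s)
    return path


path = simulate_walk()
stays_positive = min(path) > 0
```

Because the gains \(f_i\) exceed the losses \(g_i\) by a factor of order \(\mathrm {e}^{0.4/\varepsilon }\), a path that dips below zero is extremely unlikely at this value of \(\varepsilon \).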

Remark 4.6

If the family \(\{L(\nu _i(x),\rho _i(x);\sigma _i(x)) + (2-\kappa ) \sigma _i(x) + M_0\}_{i\ge 1}\) had exponential moments, a simple exponential Chebyshev argument would imply the following proposition without any reference to the Cramér–Lundberg model. However, by (4.4) and (4.6) one sees that \(L(\nu _i(x),\rho _i(x);\sigma _i(x))\) is a polynomial of potentially high degree in the explicit stochastic objects (which are themselves polynomials of the Gaussian noise \(\xi \)). Hence, we cannot expect more than stretched exponential moments, and indeed, such bounds are established in Proposition 6.7. In the proof of the next proposition we also use an exponential Chebyshev argument, but only to compare \(\frac{\kappa }{2}\tau _i(x)\) with a suitable exponential random variable which does not depend on x.

Proposition 4.7

For every \(\kappa >0\) there exist \(a_0>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

Proof

See Sect. 6.3. \(\square \)

4.2 Proofs of Theorem 3.1 and Corollary 3.2

We first treat the case where \(y-x\in \mathcal {C}^\beta \): let \(x\in \mathcal {C}^{-\alpha _0}\) such that \(\Vert x-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0\) and let y be such that \(y-x\in \mathcal {C}^\beta \) and \(\Vert y-x\Vert _{\mathcal {C}^\beta }\le \delta _0\). We also write \(Y(t) = X(t;y) - X(t;x)\). We consider the event

for \(S_N(x)\) as in Definition 4.5.

We first prove the following proposition which provides explicit estimates on the differences at the stopping times \(\nu _N(x)\) and \(\rho _N(x)\) for every \(N\ge 1\) and \(\omega \in \mathcal {S}(x)\) by iterating Propositions 4.2 and 4.4. To shorten the notation we drop the explicit dependence on the starting point x in the stopping times \(\nu _N\) and \(\rho _N\) and the random walk \(S_N\). We also drop the dependence on the realisation \(\omega \) but we assume throughout that \(\omega \in \mathcal {S}(x)\).

Proposition 4.8

For any \(\kappa >0\) let \(C>0\) be as in Proposition 4.2. Then for every \(\omega \in \mathcal {S}(x)\) and \(N\ge 1\)

Proof

We prove our claim by induction on \(N\ge 1\), observing that it is obvious for \(N=0\).

To prove (4.10) for \(N+1\) we first notice that by the definition of \(\rho _N\) we have that \(\Vert X^\varepsilon (\rho _N;x)-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}}\le \delta _0\) and since \(\omega \in \mathcal {S}(x)\) (4.11) implies that \(\Vert Y(\rho _N)\Vert _{\mathcal {C}^\beta } \le \delta _0\). Hence we can use (4.2) to get

Combining with the estimate on \(\Vert Y(\rho _N)\Vert _{\mathcal {C}^\beta }\) the above implies (4.10) for \(N+1\).

To prove (4.11) for \(N+1\) we first notice that by Proposition 6.2 \(\Vert X(\nu _{N+1};x)\Vert _{\mathcal {C}^{-\alpha _0}}\le 2\delta _1+1\). This bound, (4.10) for \(N+1\) and the triangle inequality imply that \(\Vert X(\nu _{N+1};y)\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0 + 2\delta _1+1\). Hence we can use Proposition 4.4 for \(\nu = \nu _{N+1}, \rho = \rho _{N+1}\) and \(R=\delta _0 + 2\delta _1+1\) to obtain

If we combine with (4.10) for \(N+1\) we have that

We then rearrange the terms to obtain (4.11), which completes the proof. \(\square \)

We are ready to prove the following version of Theorem 3.1 for sufficiently smooth initial conditions.

Theorem 4.9

For every \(\kappa >0\) there exist \(\delta _0, a_0, C>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

Proof

Let \(\omega \in \mathcal {S}(x)\) as in (4.9). For any \(t>0\) there exists \(N\equiv N(\omega )\ge 0\) such that \(t\in [\rho _N, \nu _{N+1})\) or \(t\in [\nu _{N+1}, \rho _{N+1})\).

If \(t\in [\rho _N, \nu _{N+1})\) then

If \(t\in [\nu _{N+1}, \rho _{N+1})\) then

By Proposition 4.7 there exist \(a_0>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

which completes the proof. \(\square \)

We are now ready to prove Theorem 3.1 and Corollary 3.2.

Proof of Theorem 3.1

This is a consequence of (4.3), Proposition 6.3 and Theorem 4.9. Let \(\delta _1,\delta _2>0\) sufficiently small such that \(\delta _1+\delta _2<\delta _0\) and assume that \(\tau _1(x)\ge 1\). By the definition of \(\tau _1(x)\)

If we also choose \(\delta _0'< \delta _0\) by (4.3) we have that for every \(\Vert y-x\Vert _{\mathcal {C}^{-\alpha _0}}\le \delta _0'\)

The probability of the event \(\{\tau _1(x) \ge 1\}\) can be estimated from below by Proposition 6.3 uniformly in \(\Vert x-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}}\le \delta _0'\). Combining with Theorem 4.9 completes the proof. \(\square \)

Proof of Corollary 3.2

We only prove the case where initial conditions are close to the minimiser 1. We fix \(\delta _0',\delta _1'>0\) such that \(2\delta _0' <\delta _0\) and \(\delta _0'+\delta _1'< \delta _1\). By Proposition 6.2, if we choose \(\delta _2\) sufficiently small then

uniformly for \(\Vert y-1\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0'\).


This together with (4.3) implies that for every \(x,y\in B_{\mathcal {C}^{-\alpha _0}}(1;\delta _0')\)

Let

\(t\ge 1\) and \(y\in B_{\mathcal {C}^{-\alpha _0}}(1;\delta _0')\). Then

\(\sup _{s \le t \le T} (t-s)^\gamma \Vert v_s(t;X(s;1))- (\pm 1)\Vert _{\mathcal {C}^\beta }\le \delta _1' \Rightarrow \sup _{s \le t \le T} (t-s)^\gamma \Vert v_s(t;X(s;y))- (\pm 1)\Vert _{\mathcal {C}^\beta }\le \delta _1\) for \(T,s\ge 1\).

\(\Vert X(t;1)-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0' \Rightarrow \Vert X(t;y)-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0\).

This implies that if we consider the process X(t; y) for \(t\ge 1\), the times \(\nu _i(X(1;y))\) and \(\rho _i(X(1;y))\) of Definition 4.1 for \(\delta _0,\delta _1\) and \(\delta _2\) can be replaced by the times \(\nu _i(X(1;1))\) and \(\rho _i(X(1;1))\) for \(\delta _0',\delta _1'\) and the same \(\delta _2\). Hence the corresponding random walk \(S_N(X(1;y))\) in Definition 4.5 can be replaced by \(S_N(X(1;1))\).

We can now repeat the proof of Theorem 4.9 for the difference \(X(t;y) - X(t;x), t\ge 1\), step by step, replacing the event in (4.9) by

This allows us to prove that

uniformly in \(y,x\in B_{\mathcal {C}^{-\alpha _0}}(1;\delta _0')\).

To estimate the event in (4.12) we use Theorem 3.1 and Propositions 6.1 and 4.7. This completes the proof. \(\square \)

5 Pathwise estimates on the difference of two profiles

In this section we prove Propositions 4.2 and 4.4. Our analysis here is pathwise and uses no probabilistic tools.

5.1 Proof of Proposition 4.2

Proof of Proposition 4.2

We only prove (4.3). The proof of (4.2) follows the same strategy. In that case \(\Vert Y(t)\Vert _{\mathcal {C}^\beta }\) does not blow up close to 0, so the argument poses no extra difficulties and we omit it.

Let \(Y(t) = X(t;y) - X(t;x)\) and notice that from (2.2) we get

We use the identity \(v(\cdot ;y) = v(\cdot ;x) +Y\) to rewrite this equation in the form

where \(\mathtt {Error}(v(\cdot ;x);Y) = -Y^3 - 3v(\cdot ;x) Y^2\) collects all the terms which are higher order in Y. Then

We set

Let \(\iota = \inf \{t>0: (t\wedge 1)^\gamma \Vert Y(t)\Vert _{\mathcal {C}^\beta } > \zeta \}\) for \(1\ge \zeta >\delta _0\) and notice that for \(t\le \tau _1(x) \wedge \iota \) using (5.1) we get

where we also use that for \(s\le t\)

Choosing \(\zeta \le {\tilde{\kappa }}/ C_1\) and \(\delta _2 \le \tilde{\kappa }/ (C_2\vee C_3)\) we have

Then, for \(t\le \tau _1(x) \wedge \iota \), by the generalised Gronwall inequality, Lemma B.1, applied to \(f(t) = (t\wedge 1)^\gamma \Vert Y(t)\Vert _{\mathcal {C}^{\beta }}\), there exists \(c>0\) such that

We now fix \(\delta _1>0\) such that \(c \tilde{\kappa }^{\frac{1}{1-\frac{\alpha +\beta }{2}-3\gamma }} \le \frac{\kappa }{2}\). This implies that for \(t\le \tau _1(x) \wedge \iota \)

Finally choosing \(\delta _0\) sufficiently small we furthermore notice that \(\tau _1(x) \wedge \iota = \tau _1(x)\), which completes the proof of (4.3). \(\square \)
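The algebra behind the splitting of the cubic nonlinearity into a part that is linear in Y and the remainder \(\mathtt {Error}(v(\cdot ;x);Y)\) is elementary and can be checked pointwise. The following minimal sketch treats v and Y as real numbers rather than functions, which is an assumption made purely for illustration; the function names are hypothetical and not part of the paper.

```python
import random


def cubic_difference(v, y):
    """Difference of the cubic nonlinearity: -(v + y)^3 + v^3."""
    return -(v + y) ** 3 + v ** 3


def error_term(v, y):
    """Higher-order part Error(v; Y) = -Y^3 - 3 v Y^2 from the proof."""
    return -y ** 3 - 3.0 * v * y ** 2


def max_decomposition_gap(samples=1000, seed=0):
    """Largest gap between -(v+Y)^3 + v^3 and -3 v^2 Y + Error(v; Y)."""
    rng = random.Random(seed)
    gap = 0.0
    for _ in range(samples):
        v = rng.uniform(-2.0, 2.0)   # toy range for the profile value
        y = rng.uniform(-1.0, 1.0)   # toy range for the difference
        linear_part = -3.0 * v ** 2 * y
        gap = max(gap, abs(cubic_difference(v, y) - (linear_part + error_term(v, y))))
    return gap
```

Up to floating-point rounding the gap vanishes, which reflects that the remainder is exactly quadratic and cubic in Y and is therefore small compared with the linear part whenever Y is small.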

5.2 Proof of Proposition 4.4

Before we proceed to the proof of Proposition 4.4 we need the following lemma which upgrades the a priori estimates in [24, Proposition 3.7]. Here and below we let \(S(t) = \mathrm {e}^{\Delta t}\).

Lemma 5.1

There exist \(\alpha ,\gamma ',C>0\) and \(p_0\ge 1\) such that if  then


Proof

Throughout this proof we simply write v(t) to denote v(t; x). We first need bounds on \(\Vert v(t)\Vert _{L^p}\), for p sufficiently large, and \(\int _s^t \Vert \nabla v(r)\Vert _{L^2}^2 \,\mathrm {d}r\). These bounds can be obtained by classical energy estimates. A bound on \(\Vert v(t)\Vert _{L^p}\) has already been obtained in [24, Proposition 3.7], which states that for every \(p\ge 2\) even

for some exponents \(p_n\ge 1\). To bound \(\int _s^t \Vert \nabla v(r)\Vert _{L^2}^2 \,\mathrm {d}r\) we need to slightly modify the strategy used in the proof of [24, Proposition 3.7]. In particular, combining [24, Equations (3.13) and (3.22)] and integrating from s to t we obtain the energy inequality,

which implies that

We now upgrade these bounds to bounds on \(\Vert v(t)\Vert _{\mathcal {C}^\beta }\). Using the mild form of (2.2) we have for \(1\ge t>s>0\)

To estimate \(\Vert v(t)\Vert _{\mathcal {C}^\beta }\) we use the \(L^p\) bound (5.2), the energy inequality (5.3) and the embedding \(\mathcal {B}^1_{2,\infty }\) to bound the terms appearing on the right hand side of the last inequality as shown below.

We treat each term in (5.4) separately. Below p may change from term to term and \(\alpha ,\lambda \) can be taken arbitrarily small. We write \(p_1\) and \(p_2\) for conjugate exponents of p, i.e. \(\frac{1}{p} = \frac{1}{p_1}+\frac{1}{p_2}\). We also denote by \((1\vee L)^{p_0}\) a polynomial of degree \(p_0\ge 1\) in the variable \(1\vee L\) where the value of \(p_0\) may change from line to line.

Term \({I_1}\):

Term \({I_2}\):

Term \({I_3}\):

Term \({I_4}\):

Term \({I_5}\):

Term \({I_6}\):

Term \({I_7}\):

Using Proposition A.9, (5.2) and (5.3) we notice that

Combining the above and choosing \(s=t/2\) we find \(\gamma ' > 0\) such that

which completes the proof. \(\square \)

Proof of Proposition 4.4

We denote by \((1\vee L)^{p_0}\) a polynomial of degree \(p_0\ge 1\) in the variable \(1\vee L\) where the value of \(p_0\) may change from line to line.

For \(k\ge 0\) recall that \(t_k = \nu + k\) and \(s_k = t_k + \frac{1}{2}\). As before, we write \(Y(t) = X(t;y) - X(t;x)\).

Let \(t\in (t_k,s_k], k\ge 1\). We restart the stochastic terms at time \(s_{k-1}\) and write \(Y(t) = v_{s_{k-1}}(t;{\tilde{y}}) - v_{s_{k-1}}(t;{\tilde{x}})\) where for simplicity \({\tilde{y}} = X(s_{k-1};y)\) and \({\tilde{x}} = X(s_{k-1};x)\). Together with (2.7), this implies that

Using the mild form of the above equation, now starting at \(t_k = s_{k-1}+\frac{1}{2}\), we get

By Lemma 5.1 there exists \(\gamma '>0\) such that

Combining the above we get

By the generalised Gronwall inequality, Lemma B.1, there exists \(c_0>0\) such that

Following the same strategy we prove that for \(t\in [s_k,t_{k+1}], k\ge 1\),

Finally, we also need a bound for \(t\in [t_0,t_1]\). To obtain an estimate which does not depend on any information before time \(t_0\) we use local solution theory. By [24, Theorem 3.3] there exists \(t_*\in (t_0,t_1)\) such that

and furthermore we can take

By Lemma 5.1 we also have that

Combining these two bounds we get

where the implicit constant depends on R. Note that \(\gamma <\frac{1}{3}\) whereas \(\gamma '\) is much larger. We write \(Y(t) = v_{t_0}(t;y)-v_{t_0}(t;x)\) and use the mild form starting at \(t_0\). We then use (5.7) to bound \(\Vert v_{t_0}(t;\cdot )\Vert _{\mathcal {C}^\beta }\) on \([t_0,t_1]\) which implies the estimate

The extra term \((r-t_0)^{-2\gamma }\) in the last inequality appears because of the blow-up of \(v_{t_0}(t;\cdot )\) and  for t close to \(t_0\). By the generalised Gronwall inequality, Lemma B.1, we obtain that


For arbitrary \(t\in [\nu ,\rho ]\) we glue together (5.5), (5.6) and (5.8) to get

for some \(L_0>0\) which collects the implicit constants in the inequalities. \(\square \)

6 Random walk estimates

In this section we prove Proposition 4.7 based mainly on probabilistic arguments. In Sects. 6.1 and 6.2 we provide estimates on \(\frac{\kappa }{2} \tau _i(x)\) and \(L(\nu _i(x),\rho _i(x);\sigma _i(x)) + (2-\kappa ) \sigma _i(x) + M_0\) from Definition 4.5. In Sect. 6.3 we use these estimates to prove Proposition 4.7.

6.1 Estimates on the exit times

Proposition 6.1

Let \(\delta >0\) and  . Then there exist \(a_0>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)


Proof

First notice that for \(N\ge 1\)

By Proposition D.1 and the exponential Chebyshev inequality there exists \(a_0>0\) such that for every \(k\ge 0\)

Hence

and choosing \(N = \mathrm {e}^{3a_0/\varepsilon }\) completes the proof. \(\square \)

Proposition 6.2

For \(\delta _1>0\) sufficiently small there exist \(\delta _0,\delta _2>0\) such that if

then for every \(\Vert x-(\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0\)

and

Proof

Let \(u(t) = v(t;x) - (\pm 1)\). By an exact expansion of \(-v^3+v\) around \(\pm 1\) we have that

where \(\mathtt {Error}(u) = -u^3\mp 3u^2\) and \(\Vert \mathtt {Error}(u)\Vert _{\mathcal {C}^\beta } \lesssim \Vert u\Vert _{\mathcal {C}^\beta }^3+\Vert u\Vert _{\mathcal {C}^\beta }^2\). Let \(T>0\) and \(\iota = \inf \{t>0: (t\wedge 1)^\gamma \Vert u(t)\Vert _{\mathcal {C}^\beta } \ge \delta _1\}\) for some \(\delta _1>0\) which we fix below. Using the mild form of (6.2) we get

If we furthermore assume (6.1) for \(t\le T\wedge \iota \) we obtain that

Then Lemma B.2 implies the bound

Choosing \(\delta _0< \frac{\delta _1}{4C}, \delta _1<\frac{1}{4C}\) and \(\delta _2<\frac{\delta _1}{4C}\) this implies that \(\sup _{t\le T\wedge \iota } (t\wedge 1)^\gamma \Vert u(t)\Vert _{\mathcal {C}^\beta } < \delta _1\) which in turn implies that \(\iota \ge T\) and proves the first bound.

To prove the second bound we notice that for every \(t\le T\)

Hence it suffices to prove that \(\sup _{t\le T} \Vert u(t)\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _1\). Using again the mild form of (6.2) we get

for every \(t\le T\). Plugging in (6.1) and the bound \(\sup _{t\le T} (t\wedge 1)^\gamma \Vert u(t)\Vert _{\mathcal {C}^\beta }\le \delta _1\) the last inequality implies

Using again Lemma B.2 we obtain that \(\sup _{t\le T}\Vert u(t)\Vert _{\mathcal {C}^{-\alpha _0}} < \delta _1\), which completes the proof. \(\square \)
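To see why the linearisation around a minimiser produces the contraction rate 2, it can help to look at a scalar caricature. The sketch below is a toy under stated assumptions: it drops the Laplacian and all stochastic terms, works around the minimiser \(+1\) only, and integrates \(u' = -2u + \mathtt {Error}(u)\) with an explicit Euler scheme. Expanding \(-v^3+v\) at \(v=1+u\) gives \(-2u-3u^2-u^3\), which is what the code uses; the function names and step size are hypothetical.

```python
import math


def error_near_plus_one(u):
    """Nonlinear remainder of -v^3 + v around v = 1, with u = v - 1."""
    return -u ** 3 - 3.0 * u ** 2


def evolve(u0, t_end, dt=1e-3):
    """Explicit Euler for the scalar toy equation u' = -2 u + Error(u)."""
    u = u0
    for _ in range(int(round(t_end / dt))):
        u += dt * (-2.0 * u + error_near_plus_one(u))
    return u


def decay_ratio(u0=0.1, t_end=2.0):
    """Ratio of u(t_end) to the purely linear prediction u0 * exp(-2 t_end)."""
    return evolve(u0, t_end) / (u0 * math.exp(-2.0 * t_end))
```

For small initial data the ratio stays close to 1, so the remainder only perturbs the exponential decay at rate 2 without destroying it, which is the mechanism exploited in the proof.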

Proposition 6.3

For every \(\kappa >0\) and \(\delta _1>0\) sufficiently small there exist \(a_0,\delta _0,\delta _2>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

where \(\tau _1(x)\) is given by (4.1).

Proof

We first notice that there exists \(\varepsilon _0>0\) such that for every \(\varepsilon \le \varepsilon _0\)

The last probability can be estimated by Propositions 6.2 and 6.1 for \(\delta =\delta _2\). \(\square \)

6.2 Estimates on the entry times

In this section we use large deviation theory and in particular a lower bound of the form

where \(\aleph \) is a compact subset of \(\mathcal {C}^{-\alpha }\) and \(\mathcal {A}(T;x)\subset \{f:(0,T) \rightarrow \mathcal {C}^{-\alpha }\}\) is open. This bound is an immediate consequence of [14] and the remark that the solution map

is jointly continuous on compact time intervals. This estimate implies a “nice” lower bound for the probabilities \(\mathbb {P}(X(\cdot ;x)\in \mathcal {A}(T;x))\) if a suitable path \(f\in \mathcal {A}(T;x)\) is chosen.

In the next proposition we use the lower bound (6.3) for suitable sets \(\aleph \) and \(\mathcal {A}(T;x)\) to estimate probabilities of the entry time of X in a neighbourhood of \(\pm 1\). In particular, we construct a path \(f(\cdot ;x)\) and obtain bounds on \(I(f(\cdot ;x))\) uniformly in \(x\in \aleph \). This construction is similar in spirit to the one used in [9, proof of Theorem 9.1] for the 1-dimensional analogue of (1.4), although here we consider a slightly different event and the initial conditions are not regular functions since they lie in \(\mathcal {C}^{-\alpha _0}\). For this reason we make use of the smoothing properties of the deterministic flow given by Proposition C.3.

Proposition 6.4

Let \(\delta _0>0\) and \(\sigma (x) = \inf \big \{t > 0 : \min _{x_*\in \{-1,1\}} \Vert X(t;x) - x_*\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0 \big \}\). For every \(R,b>0\) there exists \(T_0>0\) such that

Proof

First notice that

By the large deviation estimate (6.3) it suffices to bound

We construct a suitable path \(g\in \mathcal {A}(T_0;x)\) and we use the trivial inequality

We now give the construction of g which involves five different steps. In Steps 1, 3 and 5, g follows the deterministic flow. The contribution of these steps to the energy functional I is zero. In Steps 2 and 4, g is constructed by linear interpolation. The contribution of these steps is estimated by Lemma 6.5. Below we write \(X_{det}(\cdot ;x)\) to denote the solution of (1.1) with initial condition x. We also pass through the space \(\mathcal {B}^1_{2,2}\) to use convergence results for \(X_{det}(\cdot ;x)\) which hold in this topology (see Propositions C.1 and C.2).

Step 1 (Smoothness of initial condition via the deterministic flow):

Let \(\tau _1=1\). For \(t\in [0,\tau _1]\) we set \(g(t;x) = X_{det}(t;x)\). By Proposition C.3 there exist \(C\equiv C(R)>0\) and \(\lambda >0\) such that

Step 2 (Reach points that lead to a stationary solution):

By Step 1 \(g(\tau _1;x)\in B_{\mathcal {C}^{2+\lambda }}(0;C)\) uniformly for \(\Vert x\Vert _{\mathcal {C}^{-\alpha _0}}\le R\). Let \(\delta >0\) to be fixed below. By compactness there exists \(\{y_i\}_{1\le i\le N}\) such that \(B_{\mathcal {C}^{2+\lambda }}(0;C)\) is covered by \(\cup _{1\le i\le N} B_{\mathcal {B}^1_{2,2}}(y_i;\delta )\). Here we use that \(\mathcal {C}^{2+\lambda }\) is compactly embedded in \(\mathcal {B}^1_{2,2}\) (see Proposition A.8).

Without loss of generality we assume that \(\{y_i\}_{1\le i\le N}\) is such that \(y_i\in \mathcal {C}^\infty \) and \(X_{det}(t;y_i)\) converges to one of the stationary solutions \(-1,0,1\) in \(\mathcal {B}^1_{2,2}\). Otherwise we choose \(y_i^*\in B_{\mathcal {B}^1_{2,2}}(y_i;\delta )\), \(1\le i\le N\), such that \(y_i^* \in \mathcal {C}^\infty \) and relabel them. This is possible because of Proposition C.1.

Let \(\tau _2=\tau _1+\tau \), for \(\tau >0\) which we fix below. For \(t\in [\tau _1,\tau _2]\) we set \(g(t;x) = g(\tau _1;x) + \frac{t- \tau _1}{\tau _2-\tau _1} (y_i - g(\tau _1;x))\), where \(y_i\) is such that \(g(\tau _1;x) \in B_{\mathcal {B}^1_{2,2}}(y_i;\delta )\).

Step 3 (Follow the deterministic flow to reach a stationary solution):

Let \(T_i^*\) be such that \(X_{det}(t;y_i) \in B_{\mathcal {B}^1_{2,2}}(x_*;\delta )\) for every \(t\ge T_i^*\), where \(x_*\in \{-1,0,1\}\) is the limit of \(X_{det}(t;y_i)\) in \(\mathcal {B}^1_{2,2}\), for \(\{y_i\}_{1\le i\le N}\) as in Step 2. Let \(\tau _3=\tau _2+ \max _{1\le i\le N}T_i^*\vee 1\). For \(t\in [\tau _2,\tau _3]\) we set \(g(t;x) = X_{det}(t- \tau _2;y_i)\). If \(X_{det}(\tau _3-\tau _2;y_i) \in B_{\mathcal {B}^1_{2,2}}(\pm 1;\delta )\) we stop here. Otherwise \(X_{det}(\tau _3-\tau _2;y_i)\in B_{\mathcal {B}^1_{2,2}}(0;\delta )\cap B_{\mathcal {C}^{2+\lambda }}(0;C)\) (here we use again Proposition C.3 to ensure that \(X_{det}(\tau _3-\tau _2;y_i)\in B_{\mathcal {C}^{2+\lambda }}(0;C)\)) and we proceed to Steps 4 and 5.

Step 4 (If an unstable solution is reached move to a point nearby which leads to a stable solution):

We choose \(y_0\in B_{\mathcal {B}^1_{2,2}}(0;\delta )\) such that \(y_0\in \mathcal {C}^\infty \) and \(X_{det}(t;y_0)\) converges to either 1 or \(-1\) in \(\mathcal {B}^1_{2,2}\). This is possible because of Proposition C.2.

Let \(\tau _4 = \tau _3 +\tau \) for \(\tau >0\) as in Step 2 which we fix below. For \(t\in [\tau _3,\tau _4]\) we set \(g(t;x) = g(\tau _3;x) + \frac{t - \tau _3}{\tau _4 - \tau _3} (y_0 - g(\tau _3;x))\).

Step 5 (Follow the deterministic flow again to finally reach a stable solution):

Let \(T_0^*\) be such that \(X_{det}(t;y_0)\in B_{\mathcal {B}^1_{2,2}}(\pm 1;\delta )\) for every \(t\ge T_0^*\), where \(y_0\) is as in Step 4. Let \(\tau _5 = \tau _4+T_0^*\vee 1\). For \(t\in [\tau _4,\tau _5]\) we set \(g(t;x) = X_{det}(t-\tau _4;y_0)\).

For the path \(g(\cdot ;x)\) constructed above we see that \(g(t;x)\in B_{\mathcal {B}^1_{2,2}}(\pm 1;\delta )\) for every \(t \ge \tau _5\) and every \(\Vert x\Vert _{\mathcal {C}^{-\alpha _0}}\le R\). This implies that \(\Vert g(t;x) - (\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}} <C\delta \) since by (A.5), \(\mathcal {B}^1_{2,2} \subset \mathcal {C}^{-\alpha _0}\). We now choose \(\delta >0\) such that \(C \delta < \delta _0\) and let \(T_0=\tau _5+1\). Then \(g\in \mathcal {A}(T_0;x)\).

To bound \(I(g(\cdot ;x))\) we split our time interval based on the construction of g, i.e. \(I_k=[\tau _{k-1},\tau _k]\) for \(k=1,\ldots ,4\), with \(\tau _0=0\), and \(I_5=[\tau _4,T_0]\). We first notice that for \(k=1,3,5\)

since on these intervals we follow the deterministic flow. For the remaining two intervals, i.e. \(k=2,4\), we first notice that by construction \(\Vert g(\tau _{k-1};x)\Vert _{\mathcal {C}^{2+\lambda }},\Vert g(\tau _k;x)\Vert _{\mathcal {C}^{2+\lambda }}\le C\). By (A.3), \(\mathcal {C}^{2+\lambda } \subset \mathcal {B}^2_{\infty ,2}\) for every \(\lambda >0\), hence we also have that \(\Vert g(\tau _{k-1};x)\Vert _{\mathcal {B}^2_{\infty ,2}}, \Vert g(\tau _k;x)\Vert _{\mathcal {B}^2_{\infty ,2}}\le C\). We can now choose \(\tau \) in Steps 2 and 4 according to Lemma 6.5, which implies that

Hence

For \(b>0\) we choose \(\delta \) even smaller to ensure that \(C\delta < b\). Finally, by (6.3) there exists \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

which completes the proof. \(\square \)
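The cost accounting in the construction above (flow segments are free, interpolation segments cost of order \(\delta \)) can be illustrated on a scalar toy model. The sketch below is not the infinite-dimensional construction: it assumes the scalar flow \(x' = x - x^3\), a discretised cost \(\frac{1}{2}\int |g' - (g-g^3)|^2\,\mathrm {d}t\) whose normalisation is an assumption, and it only mimics Steps 4 and 5, leaving the unstable point 0 by linear interpolation over a time \(\tau =\delta \) and then following the flow. All names are hypothetical.

```python
def drift(x):
    """Deterministic drift of the scalar toy flow x' = x - x^3."""
    return x - x ** 3


def flow_segment(x0, duration, dt):
    """Explicit Euler path following the deterministic flow."""
    path = [x0]
    for _ in range(int(round(duration / dt))):
        path.append(path[-1] + dt * drift(path[-1]))
    return path


def linear_segment(x0, x1, duration, dt):
    """Linear interpolation from x0 to x1 over the given duration."""
    n = int(round(duration / dt))
    return [x0 + (x1 - x0) * k / n for k in range(n + 1)]


def action(path, dt):
    """Discretised cost 0.5 * sum |g' - drift(g)|^2 dt (normalisation assumed)."""
    total = 0.0
    for a, b in zip(path, path[1:]):
        total += 0.5 * ((b - a) / dt - drift(a)) ** 2 * dt
    return total


def build_path(delta=0.05, dt=1e-3):
    """Toy analogue of Steps 4 and 5: leave the unstable point 0, then flow to 1."""
    interp = linear_segment(0.0, delta, duration=delta, dt=dt)  # Step 4, tau = delta
    flow = flow_segment(interp[-1], duration=10.0, dt=dt)       # Step 5
    return interp, flow
```

In this toy the flow segment contributes essentially nothing to the cost, the interpolation segment contributes an amount bounded by \(\delta \), and the endpoint lies within \(\delta \) of the stable point 1, which mirrors the structure of the bound on \(I(g(\cdot ;x))\).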

Lemma 6.5

([9, Lemma 9.2]) Let \(f(t) = x + \frac{t}{\tau } (y-x)\) such that \(\Vert x\Vert _{\mathcal {B}^2_{2,2}}, \Vert y\Vert _{\mathcal {B}^2_{2,2}} \le R\) and \(\Vert x-y\Vert _{L^2} \le \delta \). There exist \(\tau >0\) and \(C\equiv C(R)\) such that

Proof

We first notice that \(\partial _t f(t) = \frac{1}{\tau } (y-x)\), hence \(\Vert \partial _t f(t)\Vert _{L^2} \le \frac{1}{\tau } \delta \). For the term \(\Delta f(t)\) we have

where we use that the Besov space \(\mathcal {B}^2_{2,2}\) coincides with the Sobolev space \(H^2\). This is immediate from Definition A.1 for \(p=q=2\) if we write \(\Vert f*\eta _k\Vert _{L^2}\) using Plancherel’s identity. For the term \(f(t)^3 - f(t)\) we have

Hence for \(C\equiv C(R)\)

Choosing \(\tau = \delta \) completes the proof. \(\square \)

In the next proposition we estimate the tails of the entry time of X in a neighbourhood of \(\pm 1\) uniformly in the initial condition x. This is achieved by Proposition 6.4 and the Markov property combined with [24, Corollary 3.10] which implies that after time \(t=1\) the process \(X(\cdot ;x)\) enters a compact subset of the state space with positive probability uniformly in x.

Proposition 6.6

Let \(\delta _0>0\) and \(\sigma (x) = \inf \big \{t > 0 : \min _{x_*\in \{-1,1\}} \Vert X(t;x) - x_*\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0 \big \}\). For every \(b>0\) there exist \(T_0>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

for every \(m\ge 1\).

Proof

By [24, Corollary 3.10] we know that \(\sup _{x\in \mathcal {C}^{-\alpha _0}} \sup _{t\in (0,1]} t^{\frac{p}{2}} \mathbb {E}\Vert X(t;x)\Vert _{L^p}^p <\infty \), for every \(p\ge 2\), and the bound is uniform in \(\varepsilon \in (0,1]\) since it only depends polynomially on \(\sqrt{\varepsilon }\). Hence by a simple application of Markov’s inequality there exists \(R_0>0\) such that

By Proposition 6.4 for every \(b>0\) there exist \(T_0>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

Then for every \(x\in \mathcal {C}^{-\alpha _0}\) and \(\varepsilon \le \varepsilon _0\)

Using the Markov property successively implies for every \(m\ge 1\) and \(x\in \mathcal {C}^{-\alpha _0}\)

Combining (6.6) and (6.7) we obtain that

The last inequality completes the proof if we relabel b and \(T_0\). \(\square \)
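The successive use of the Markov property in this proof is what turns a uniform one-block entry probability into a geometric tail. A minimal sketch of that mechanism on a finite Markov chain follows. The chain, its transition matrix and the constant `P_MIN` are hypothetical: state 2 plays the role of the neighbourhood of \(\pm 1\), one step of the chain plays the role of one time block, and `P_MIN` is a uniform lower bound on the one-step entry probability.

```python
def survival_probabilities(transition, target, start, blocks):
    """P(target not hit within m blocks), m = 0..blocks, for a finite chain."""
    states = range(len(transition))
    # mass[s] = probability of sitting at s without having visited the target.
    mass = [1.0 if s == start and s != target else 0.0 for s in states]
    survival = [sum(mass)]
    for _ in range(blocks):
        new_mass = [0.0] * len(transition)
        for s in states:
            for t in states:
                if t != target:
                    new_mass[t] += mass[s] * transition[s][t]
        mass = new_mass
        survival.append(sum(mass))
    return survival


# Hypothetical 3-state chain; state 2 is the (absorbing) target set.
TRANSITION = [
    [0.5, 0.2, 0.3],
    [0.1, 0.4, 0.5],
    [0.0, 0.0, 1.0],
]
P_MIN = 0.3  # every non-target state enters the target with probability >= 0.3
```

Whatever the starting state, the probability of not having entered the target after m blocks is at most `(1 - P_MIN) ** m`, which is the discrete counterpart of the tail bound holding for every \(m\ge 1\).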

Proposition 6.7

Let \(\delta _0>0, \nu _1(x), \rho _1(x)\) as in Definition 4.1, \(\sigma _1(x)\) as in (4.1) and \(L(\nu _1(x),\rho _1(x);\sigma _1(x))\) as in (4.6). For every \(\kappa , M_0,b>0\) there exist \(T_0 > 0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

for every \(m\ge 1\) and \(p_0\ge 1\) as in (4.6).

Proof

We first condition on \(\nu _1(x)\) to obtain the bound

where \(\sigma (x) = \inf \left\{ t > 0 : \min _{x_*\in \{-1,1\}} \Vert X(t;x) - x_*\Vert _{\mathcal {C}^{-\alpha _0}} \le \delta _0 \right\} \). Let \(T_0 \ge 1\) to be fixed below and notice that for any \(T_1>0\)

for some \(C>0\), where in the second inequality we use concavity of the mapping \(g\mapsto g^{\frac{1}{p_0}}\) and the fact that \(L_k(l,\sigma )\) is increasing in \(\sigma \) by Definition 4.3. By Proposition 6.6 we can choose \(T_1>0\) and \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

We also notice that

where in the first inequality we use that \(L_k(l,mT_1) \le L_k(l,l+k)\), for every \(1\le k\le \lfloor m T_1 \rfloor \), and in the second we use an exponential Chebyshev inequality, independence and equality in law of the \(L_k(l,l+k)\)’s. For any \(T>0\) we choose \(c\equiv c(n)>0\) according to Proposition D.1, \(T_0\) sufficiently large and \(\varepsilon _0\in (0,1)\) sufficiently small such that for every \(\varepsilon \le \varepsilon _0\)

Combining all the previous inequalities we obtain that

This completes the proof if we relabel b since T is arbitrary. \(\square \)

6.3 Proof of Proposition 4.7

In this section we set

In this notation the random walk \(S_N(x)\) in Definition 4.5 is given by \(\sum _{i\le N} (f_i(x) - g_i(x))\).

To prove Proposition 4.7 we first consider a sequence of i.i.d. random variables \(\{{\tilde{f}}_i\}_{i\ge 1}\) such that \(\tilde{f}_1 \sim \exp (1)\). We furthermore assume that the family \(\{\tilde{f}_i\}_{i\ge 1}\) is independent from both \(\{f_i(x)\}_{i\ge 1}\) and \(\{g_i(x)\}_{i\ge 1}\). For \(\lambda >0\) which we fix later on, we set

In the proof of Proposition 4.7 below we compare the random walk \(S_N(x)\) with \({\tilde{S}}_N(x)\). The idea is that \(\sum _{i\le N} f_i(x)\) behaves like \(\lambda \sum _{i\le N} \tilde{f}_i\) for suitable \(\lambda >0\).

In the next proposition we estimate the new random walk \(\tilde{S}_N(x)\) using stochastic dominance. In particular we assume that the family of random variables \(\{g_i(x)\}_{i\ge 1}\) is stochastically dominated by a family of i.i.d. random variables \(\{\tilde{g}_i\}_{i\ge 1}\) which does not depend on x and obtain a lower bound on \(\mathbb {P}(-{\tilde{S}}_N(x) \le u \text { for every } N\ge 1)\).

From now on we denote by \(\mu _Z\) the law of a random variable Z.

Proposition 6.8

Assume that there exists a family of i.i.d. random variables \(\{{\tilde{g}}_i\}_{i\ge 1}\), independent from both \(\{g_i(x)\}_{i\ge 1}\) and \(\{{\tilde{f}}_i\}_{i \ge 1}\), such that

for every \(g\ge 0\). Let \({\tilde{S}}_N = \lambda \sum _{i\le N} \tilde{f}_i - \sum _{i\le N} {\tilde{g}}_i\). Then

Proof

Let

We first prove that for every \(N\ge 1\) and every x

For \(N = 1\) we have that

Let us assume that (6.8) holds for N. Let \(\partial B_0 = \{y: \Vert y- (\pm 1)\Vert _{\mathcal {C}^{-\alpha _0}} =\delta _0\}\). Conditioning on \(\left( {\tilde{f}}_1, g_1(x), X(\nu _2(x);x)\right) \) and using independence of \({\tilde{f}}_1\) from the joint law of \(\left( g_1(x), X(\nu _2(x);x)\right) \) we notice that

In the last equality above we use that \(G_N(u+\lambda f-g)\) does not depend on y, hence we can drop the integral with respect to y. Let

Then for fixed \(u,f\ge 0, H\) is decreasing with respect to g. By Lemma E.1

Integrating the last inequality with respect to f with \(\mu _{{\tilde{f}}_1}\) and combining with (6.9) we obtain

which proves (6.8). If we now take \(N\rightarrow \infty \) in (6.8) we get for arbitrary x

which completes the proof. \(\square \)

In the next proposition we prove existence of a family of random variables \(\{{\tilde{g}}_i\}_{i\ge 1}\) that satisfy the assumption of Proposition 6.8 and estimate their first moment.

Proposition 6.9

There exists a family of i.i.d. random variables \(\{\tilde{g}_i\}_{i\ge 1}\), independent from both \(\{g_i(x)\}_{i\ge 1}\) and \(\{{\tilde{f}}_i\}_{i\ge 1}\), such that

and furthermore for every \(b>0\) there exist \(\varepsilon _0\in (0,1)\) and \(C>0\) such that for every \(\varepsilon \le \varepsilon _0\)

Proof

We first notice that by the Markov property

Let F(g) be the right continuous version of the increasing function \(1 - \sup _{x\in \mathcal {C}^{-\alpha _0}} \mathbb {P}(g_1(x) \ge g)\). We consider a family of i.i.d. random variables \(\{\tilde{g}_i\}_{i\ge 1}\), independent from both \(\{g_i(x)\}_{i\ge 1}\) and \(\{{\tilde{f}}_i\}_{i\ge 1}\), such that \(\mathbb {P}({\tilde{g}}_i\le g) = F(g)\). To estimate \(\mathbb {E}{\tilde{g}}_1\) let \(c_\varepsilon >0\) to be fixed below. We notice that

For \(b>0\) we choose \(T_0>0\) and \(\varepsilon _0\in (0,1)\) as in Proposition 6.7. Then for every \(\varepsilon \le \varepsilon _0\)

where in the last inequality we use Proposition 6.7 to estimate \(\mathbb {P}\left( g_1(x)^\frac{1}{p_0} \ge mT_0\right) \). We now choose \(c_\varepsilon >0\) such that \(c_\varepsilon T_0 = \log \left( 1+\mathrm {e}^{-b/\varepsilon }\right) \). Then

Finally, by (6.10) we obtain that

which completes the proof if we relabel b. \(\square \)

Remark 6.10

In the proof of Proposition 6.9 we use stretched exponential moments of \({\tilde{g}}_1\), although we only need first moments (see Lemma 6.12 below). This simplifies our calculations.

From now on we let \({\tilde{S}}_N = \lambda \sum _{i\le N} {\tilde{f}}_i - \sum _{i\le N} {\tilde{g}}_i\) for \(\{{\tilde{g}}_i\}_{i\ge 1}\) as in Proposition 6.9.

In the next proposition we explicitly compute the probability \(\mathbb {P}(-{\tilde{S}}_N \le 0 \text { for every } N\ge 1)\). The proof is essentially the same as the classical Cramér–Lundberg estimate (see [8, Chapter 1.2]). We present it here for the reader’s convenience.

Proposition 6.11

For the random walk \({\tilde{S}}_N\) the following estimate holds,

Proof

Let \(G(u) = \mathbb {P}(-{\tilde{S}}_N \le u \text { for every } N \ge 1)\). Conditioning on \(({\tilde{f}}_1,{\tilde{g}}_1)\) and using independence we notice that

where in the last equality we use that \({\tilde{f}}_1\sim \exp (1)\) and we also make the change of variables \({\bar{f}} = u+\lambda f\). This implies that G(u) is differentiable with respect to u and in particular

Integrating the last equation from 0 to u we obtain that

Let \(F(g) := \mu _{{\tilde{g}}_1}([0,g])\). A simple integration by parts implies

Combining (6.12) and (6.13) we get

By taking \(u\rightarrow \infty \) in the last equation and using the dominated convergence theorem and the law of large numbers we finally obtain

which completes the proof. \(\square \)
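The classical Cramér–Lundberg formula with zero initial capital predicts that a walk of the form \(\lambda \sum _{i\le N} {\tilde{f}}_i - \sum _{i\le N} {\tilde{g}}_i\) with \({\tilde{f}}_i\sim \exp (1)\) stays non-negative for all \(N\ge 1\) with probability \(1-\mathbb {E}{\tilde{g}}_1/\lambda \) when \(\lambda >\mathbb {E}{\tilde{g}}_1\). The following Monte Carlo sketch is a sanity check of that classical statement under toy assumptions: the \({\tilde{g}}_i\) are taken exponential with mean `claim_mean` (the law constructed in Proposition 6.9 is different), the infinite horizon is truncated at `steps`, and all names and parameter values are hypothetical.

```python
import random


def survives(lam, claim_mean, steps, rng):
    """True if lam * sum(f_i) - sum(g_i) stays >= 0 for N = 1..steps."""
    s = 0.0
    for _ in range(steps):
        s += lam * rng.expovariate(1.0)          # f_i ~ exp(1)
        s -= rng.expovariate(1.0 / claim_mean)   # toy choice: g_i exponential
        if s < 0.0:
            return False
    return True


def estimate_survival(lam=2.0, claim_mean=1.0, paths=4000, steps=300, seed=1):
    """Monte Carlo estimate of P(walk >= 0 for every N <= steps)."""
    rng = random.Random(seed)
    hits = sum(survives(lam, claim_mean, steps, rng) for _ in range(paths))
    return hits / paths
```

With \(\lambda =2\) and mean 1 the estimate should be close to \(1/2\) up to Monte Carlo error. Increasing \(\lambda \) pushes the probability towards 1, which is how the parameter \(\lambda \) is used in the proof of Proposition 4.7.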

Combining Propositions 6.8, 6.9 and 6.11 we obtain the following lemma.

Lemma 6.12

For any \(b>0\) there exist \(\varepsilon _0\in (0,1)\) and \(C>0\) such that for every \(\varepsilon \le \varepsilon _0\)

Proof

By Propositions 6.8, 6.9 and 6.11,

Moreover, by Proposition 6.9 for every \(b>0\) there exist \(\varepsilon _0\in (0,1)\) and \(C>0\) such that for every \(\varepsilon \le \varepsilon _0, \mathbb {E}{\tilde{g}}_1\le C \mathrm {e}^{b/\varepsilon }\) which completes the proof. \(\square \)

We are now ready to prove Proposition 4.7 which is the main goal of this section.

Proof of Proposition 4.7

We estimate \(\mathbb {P}(S_N(x) \le 0 \text { for some } N\ge 1)\) in the following way,

The second term on the right hand side can be estimated by Lemma 6.12 which provides a bound of the form

For the first term we notice that

By Markov’s inequality, independence of \(\{f_i(x)\}_{i\ge 1}\) and \(\{{\tilde{f}}_i\}_{i\ge 1}\) and equality in law of the \({\tilde{f}}_i\)’s the last inequality implies that

Let \(\varepsilon _0\in (0,1)\) as in Proposition 6.3. For the term \(I_N(x)\) we notice that for every \(\varepsilon \le \varepsilon _0\)

where in the first inequality we use the Markov property and in the last we use Proposition 6.3. If we choose \(\frac{1}{2\lambda }=\mathrm {e}^{-(2a_0-b)/\varepsilon }\) and choose \(\varepsilon _0\in (0,1)\) even smaller the last inequality implies that for every \(\varepsilon \le \varepsilon _0\)

Combining with (6.16) we find \(\varepsilon _0\in (0,1)\) such that for every \(\varepsilon \le \varepsilon _0\)

Finally (6.14), (6.15) and (6.17) imply that

which completes the proof since b is arbitrary. \(\square \)

7 Proof of the Eyring–Kramers law

In this section we prove the Eyring–Kramers law, Theorem 3.5. We first need to introduce some additional tools.

We consider the spatial Galerkin approximation \(X_N(\cdot ;x)\) of \(X(\cdot ;x)\) given by

where \(\Pi _N\) is the projection on \(\{f \in L^2: f(z)=\sum _{|k|\le N} {\hat{f}}(k) L^{-2}\mathrm {e}^{2\pi \mathrm {i}k\cdot z/L}\}, \xi _N = \Pi _N \xi , x_N = \Pi _N x\) and \(\mathfrak {R}_N\) is as in (2.3). Here for \(k\in \mathbb {Z}^2\) we set \(|k|=|k_1|\vee |k_2|\). In this notation we have that \(\Pi _N f = f*D_N\), where \(D_N\) is the 2-dimensional square Dirichlet kernel given by \(D_N(z) = \sum _{|k|\le N} L^{-2}\mathrm {e}^{2\pi \mathrm {i}k\cdot z/L}\).
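Because the cut-off \(|k|=|k_1|\vee |k_2|\le N\) is a square in frequency space, the kernel \(D_N\) factorises into a product of two 1-dimensional Dirichlet kernels. The sketch below checks this numerically at a single point; it is an illustration only, with a hypothetical evaluation point, side length and function names, and it uses the standard closed form \(\sum _{|k|\le N}\mathrm {e}^{2\pi \mathrm {i}kz/L} = \sin ((2N+1)\pi z/L)/\sin (\pi z/L)\).

```python
import cmath
import math


def dirichlet_1d(z, n, length):
    """Closed form of sum_{|k| <= n} exp(2 pi i k z / L) in one dimension."""
    s = math.sin(math.pi * z / length)
    if abs(s) < 1e-12:
        return float(2 * n + 1)  # limiting value at multiples of L
    return math.sin((2 * n + 1) * math.pi * z / length) / s


def square_dirichlet_sum(z1, z2, n, length):
    """D_N(z) summed directly over the square max(|k_1|, |k_2|) <= n."""
    total = 0.0 + 0.0j
    for k1 in range(-n, n + 1):
        for k2 in range(-n, n + 1):
            phase = 2.0 * math.pi * (k1 * z1 + k2 * z2) / length
            total += cmath.exp(1j * phase)
    return total / length ** 2


def square_dirichlet_product(z1, z2, n, length):
    """Same kernel written as a product of two 1-d Dirichlet kernels."""
    return dirichlet_1d(z1, n, length) * dirichlet_1d(z2, n, length) / length ** 2
```

The direct sum is real up to rounding and agrees with the product form, so convolution with \(D_N\) acts separately in each coordinate.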

To treat (7.1) we write  for


Then \(v_N(\cdot ;x)\) solves

where  and  .

As in (3.1) and (3.2), for \(\delta \in (0,1/2)\) and \(\alpha >0\) we define the symmetric subsets A and B of \(\mathcal {C}^{-\alpha }\) by

where \(D_\perp \) is the closed ball of radius \(\delta \) in \(\mathcal {C}^{-\alpha }\) and \({\bar{f}} = L^{-2} \langle f, 1 \rangle \). To simplify the notation in this section, we have dropped the dependence of A and B on the parameters \(\alpha \) and \(\delta \). We will only write \(A(\alpha ;\delta )\) and \(B(\alpha ;\delta )\) if we need to specify the values of these parameters. For \(x\in A\) we define

and

Last, recall that for \(k\in \mathbb {Z}^2\) (see (3.3)),

The next theorem is essentially [2, Theorem 2.3].

Theorem 7.1

([2, Theorem 2.3]) Let \(0< L < 2\pi \). For every \(\alpha >0, \delta \in (0,1/2)\) and \(\varepsilon \in (0,1)\) there exists a sequence \(\{\mu _{\varepsilon ,N}\}_{N\ge 1}\) of probability measures concentrated on \(\partial A\) such that

where the constants \(c_+\) and \(c_-\) are uniform in \(\varepsilon \).

Proof

The proof of (7.5) is given in [2, Sections 4 and 5], with the following two modifications.

In [2], the sets A and B are defined as in (7.3) and (7.4) with \(D_\perp \) replaced by a ball in \(H^s\) for \(s<0\). The explicit form of \(D_\perp \) is only used in [2, Lemma 5.9]. There the authors consider the 0-mean Gaussian measure \(\gamma ^\perp _0\) with quadratic form \(\frac{1}{2\varepsilon } \left( \Vert \nabla f\Vert _{L^2}^2 - \Vert f-\bar{f}\Vert _{L^2}^2\right) \), and prove that \(D_\perp \) has probability bounded from below by \(1- c\varepsilon ^2\). Here we assume that \(D_\perp \) is a ball in \(\mathcal {C}^{-\alpha }\). To obtain the same estimate for this set, we first notice that the random field f associated with the measure \(\gamma ^\perp _0\) satisfies

$$\begin{aligned} \mathbb {E}\langle f, L^{-2}\mathrm {e}^{2\mathrm {i}\pi k \cdot /L} \rangle \lesssim \frac{\varepsilon \log \varepsilon ^{-1} \log \lambda _k}{1+\lambda _k}, \end{aligned}$$

for every \(k\in \mathbb {Z}^2\), where the implicit constant depends on L. This decay of the Fourier modes of f and [21, Proposition 3.6] imply that the measure \(\gamma ^\perp _0\) is concentrated in \(\mathcal {C}^{-\alpha }\), for every \(\alpha >0\), which in turn implies [2, Lemma 5.9] for the set \(D_\perp \) considered here.

In [2], the authors consider (7.1) with \(\mathfrak {R}_N\) replaced by

$$\begin{aligned} C_N = \frac{1}{L^2} \sum _{|k|\le N} \frac{1}{|\lambda _k|} \end{aligned}$$

and obtain (7.5) with the pre-factor given by

$$\begin{aligned} \frac{2\pi }{|\lambda _0|} \sqrt{\prod _{k\in \mathbb {Z}^2}\frac{|\lambda _k|}{\nu _k} \exp \left\{ \frac{\nu _k-\lambda _k}{\lambda _k}\right\} } = \lim _{N\rightarrow \infty } \frac{2\pi }{|\lambda _0|} \sqrt{\prod _{|k|\le N}\frac{|\lambda _k|}{\nu _k}} \exp \left\{ \frac{3 L^2 C_N}{2}\right\} . \end{aligned}$$

In our case one can check by (2.3) that \(\mathfrak {R}_N\) is given by

$$\begin{aligned} \mathfrak {R}_N = \frac{1}{L^2} \sum _{|k|\le N} \frac{1}{|\lambda _k+2|}. \end{aligned}$$

According to [2, Remark 2.5] this choice of renormalisation constant modifies [2, Theorem 2.3] by multiplying the pre-factor there with

$$\begin{aligned} \exp \left\{ -3 L^2 \lim _{N\rightarrow \infty } (\mathfrak {R}_N - C_N)/2\lambda _0\right\} . \end{aligned}$$

\(\square \)
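To see why the limit \(\lim _{N\rightarrow \infty }(\mathfrak {R}_N - C_N)\) can be expected to exist, one can compare the two constants mode by mode. The computation below is only a sketch. It assumes, without restating (3.3), that \(\lambda _k\) grows like \(|k|^2\) and that \(\lambda _k\le 0\) for at most finitely many k. For every mode with \(\lambda _k>0\),

$$\begin{aligned} \frac{1}{|\lambda _k+2|} - \frac{1}{|\lambda _k|} = \frac{1}{\lambda _k+2} - \frac{1}{\lambda _k} = -\frac{2}{\lambda _k(\lambda _k+2)}. \end{aligned}$$

Under the growth assumption the right-hand side is of order \(|k|^{-4}\), which is summable over \(\mathbb {Z}^2\). Hence \(\mathfrak {R}_N - C_N\) converges as \(N\rightarrow \infty \), even though \(\mathfrak {R}_N\) and \(C_N\) each diverge logarithmically in N.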

Remark 7.2

The finite dimensional measure \(\mu _{\varepsilon ,N}\) in (7.5) is given by

where \(\rho _{A,B}\) is a probability measure concentrated on \(\partial A\), called the equilibrium measure, and \(\mathrm {cap}_A(B)\) is a normalisation constant. Under this measure and the assumption that the sets A and B are symmetric, the integrals appearing in (7.5) can be rewritten using potential theory as

This formula is derived in [2, Section 3] and it is then analysed to obtain (7.5).

Theorem 3.5 generalises (7.5) to the limiting process \(X(\cdot ;x)\) for a fixed initial condition x in a suitable neighbourhood of \(-1\). To prove this theorem, we first fix \(\alpha \in (0,\alpha _0)\) and pass to the limit as \(N\rightarrow \infty \) in (7.5) to prove a version of (3.4) where the initial condition x is averaged with respect to a measure \(\mu _\varepsilon \) concentrated on a closed ball with respect to the weaker topology \(\mathcal {C}^{-\alpha _0}\) (see Proposition 7.7). This measure is the weak limit, up to a subsequence, of the measures \(\mu _{\varepsilon ,N}\) in Theorem 7.1. We then use the exponential loss of memory, Theorem 3.1, to pass from averages of initial conditions with respect to the limiting measure \(\mu _\varepsilon \) to fixed initial conditions.

The rest of this section is structured as follows. In Sect. 7.1 we prove convergence of the Galerkin approximations \(X_N(\cdot ;x_N)\) and obtain estimates uniform in the initial condition x and the regularisation parameter N. In Sect. 7.2 we prove uniform integrability of the stopping times \(\tau _B(X(\cdot ;x))\) and pass to the limit as \(N\rightarrow \infty \) in (7.5). Finally in Sect. 7.3 we prove Theorem 3.5.

7.1 Convergence of the Galerkin scheme and a priori estimates

In the next proposition we prove convergence of \(X_N(\cdot ;x_N)\) to \(X(\cdot ;x)\) in \(C([0,T];\mathcal {C}^{-\alpha })\) using convergence of the stochastic objects, which is proven in [24, Proposition 2.3]. This is a technical result and the proof is given in the Appendix.

Proposition 7.3

Let \(\aleph \subset \mathcal {C}^{-\alpha _0}\) be bounded and assume that for every \(x\in \aleph \), there exists a sequence \(\{x_N\}_{N\ge 1}\) such that \(x_N\rightarrow x\) uniformly in x. Then for every \(\alpha \in (0,\alpha _0)\) and \(0<s<T\)

in probability.

Proof

See Appendix F. \(\square \)

The next proposition provides a bound for \(X_N(\cdot ;x)\) uniformly in the initial condition x and the regularisation parameter N in the \(\mathcal {B}^{-\alpha }_{2,2}\) norm, for \(0<\alpha <\alpha _0\). This result has already been established in [24, Corollary 3.10] for the limiting process \(X(\cdot ;x)\) in the \(\mathcal {C}^{-\alpha }\) norm. There (2.2) is tested with \(v(\cdot ;x)^{p-1}\), for \(p\ge 2\) even, to bound \(\Vert v(\cdot ;x)\Vert _{L^p}\) by using the “good” sign of the non-linear term \(-v^3\). In the case of (7.2) this argument allows us to bound \(\Vert v_N(\cdot ;x)\Vert _{L^p}\) for \(p=2\) only, because of the projection \(\Pi _N\) in front of the non-linearity.

Proposition 7.4

For every \(\alpha \in (0,\alpha _0]\) and \(p\ge 1\) we have that

Proof

Proceeding exactly as in the proof of [24, Proposition 3.7] we first show that there exist \(\alpha \in (0,1)\) and \(p_n\ge 1\) such that for every \(t\in (0,1)\)

for every \(\alpha '\in (0,1)\), uniformly in \(x\in \mathcal {C}^{-\alpha _0}\). We then proceed as in the proof of [24, Corollary 3.10] and use (7.7) to prove (7.6). The only difference is that here we use the norm \(\Vert \cdot \Vert _{\mathcal {B}^{-\alpha }_{2,2}}\) and the embedding \(L^2\hookrightarrow \mathcal {B}^{-\alpha }_{2,2}\) on the level of \(v_N(\cdot ;x)\) together with the fact that

for every \(\alpha ,\alpha '>0\) and \(p\ge 1\), which is immediate from [24, Proposition 2.2, Proposition 2.3]. \(\square \)

7.2 Passing to the limit

In this section we pass to the limit as \(N\rightarrow \infty \) in (7.5) using uniform integrability of the stopping time \(\tau _B(X_N(\cdot ;x))\). To obtain uniform integrability we prove exponential moment bounds for \(\tau _B(X_N(\cdot ;x))\) uniformly in the initial condition \(x \in \mathcal {C}^{-\alpha _0}\) and the regularisation parameter N. We first bound \(\mathbb {P}\left( \tau _B(X_N(\cdot ;x)) \ge 1\right) \) using a support theorem and a strong a priori bound for \(X_N(\cdot ;x)\) in \(\mathcal {C}^{-\alpha }\). A support theorem for the limiting process \(X(\cdot ;x)\) has already been established in [24, Corollary 6.4]. To use it for \(X_N(\cdot ;x)\) we combine it with the convergence result in Proposition 7.3. To obtain a strong a priori bound for \(X_N(\cdot ;x)\) in \(\mathcal {C}^{-\alpha }\) we first use Proposition 7.4, which implies the bound in \(\mathcal {B}^{-\alpha }_{2,2}\), and then use Proposition G.2 to pass from the \(\mathcal {B}^{-\alpha }_{2,2}\) norm to the \(\mathcal {C}^{-\alpha }\) norm.

Proposition 7.5

For every \(\alpha \in (0,\alpha _0), \delta \in (0,1/2)\) and \(\varepsilon \in (0,1)\) there exist \(c_0 \in (0,1)\) and \(N_0\ge 1\) such that for every \(N\ge N_0\)

Proof

Let \(\alpha \in (0,\alpha _0)\) and let \(\aleph \) be a compact subset of \(\mathcal {C}^{-\alpha _0}\) which we fix below. Using the Markov property

The proof is complete if for every \(N\ge N_0\)

We notice that there exists \(\delta '>0\) such that for any \(y\in \aleph \)

Here we use that if \(\Vert X(1/2;y)-1\Vert _{\mathcal {C}^{-\alpha }},\Vert X_N(1/2;y) - X(1/2;y)\Vert _{\mathcal {C}^{-\alpha }} \le \delta '\), then \(X_N(1/2;y) \in B\) for \(\delta '\) sufficiently small. By the support theorem [24, Corollary 6.4] there exists \(c_1 \equiv c_1(\delta ,\varepsilon )>0\) such that

On the other hand Proposition 7.3 implies convergence in probability of \(X_N(1/2;y)\) to \(X(1/2;y)\) in \(\mathcal {C}^{-\alpha }\) uniformly in \(y\in \aleph \). Hence there exists \(N_0\ge 1\) such that for every \(N\ge N_0\)

Plugging (7.10) and (7.11) into (7.9) implies the first bound in (7.8).

We now prove the second bound in (7.8). By the Markov inequality for every \(R>0\)

By (7.6) the expectation on the right hand side of the last inequality is uniformly bounded over \(x\in \mathcal {C}^{-\alpha _0}\) and \(N\ge 1\). Thus choosing \(R>0\) large enough

By Proposition G.2 for every \(K,R>0\) there exists \(C\equiv C(K,R)\) such that

Choosing K sufficiently large, combining the last inequality with [24, Propositions 2.2 and 2.3] and using the Markov inequality, we obtain that

Using the Markov property and (7.12) and (7.13) we get for arbitrary \(x\in \mathcal {C}^{-\alpha _0}\)

We finally notice that for every \(\alpha <\alpha _0\) the set \(\aleph = \{f\in \mathcal {C}^{-\alpha _0}: \Vert f\Vert _{\mathcal {C}^{-\alpha }}\le C\}\) is compact in \(\mathcal {C}^{-\alpha _0}\) which implies the second bound in (7.8). \(\square \)

In the next corollary we use Proposition 7.5 to prove exponential moments for the stopping time \(\tau _B(X_N(\cdot ;x))\).

Corollary 7.6

For every \(\delta >0\) and \(\varepsilon \in (0,1)\) there exist \(\eta _0>0\) and \(N_0\ge 1\) such that

Proof

By the Markov property we have that

Iterating this inequality and using Proposition 7.5 we obtain that

Then

and the proof is complete if we choose \(\eta _0<\log c_0^{-1}\). \(\square \)
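The summation step behind the choice \(\eta _0<\log c_0^{-1}\) can be sketched as follows. As an assumption on the form of the bound, suppose that Proposition 7.5 provides \(\sup _{x}\mathbb {P}\left( \tau _B(X_N(\cdot ;x))\ge 1\right) \le c_0\) for every \(N\ge N_0\), and write \(\tau =\tau _B(X_N(\cdot ;x))\) for short. Iterating the Markov property then gives \(\mathbb {P}(\tau \ge n)\le c_0^n\) for every integer \(n\ge 0\), so that for \(0<\eta _0<\log c_0^{-1}\)

$$\begin{aligned} \mathbb {E}\exp \{\eta _0\tau \} \le \sum _{n\ge 0} \mathrm {e}^{\eta _0(n+1)}\, \mathbb {P}(n\le \tau < n+1) \le \mathrm {e}^{\eta _0}\sum _{n\ge 0} \left( \mathrm {e}^{\eta _0}c_0\right) ^n = \frac{\mathrm {e}^{\eta _0}}{1-\mathrm {e}^{\eta _0}c_0} < \infty . \end{aligned}$$

The right-hand side depends neither on x nor on \(N\ge N_0\), which is the uniformity needed for the uniform integrability argument.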

In the next proposition we pass to the limit as \(N\rightarrow \infty \) in (7.5). Here we use Corollary 7.6, which implies uniform integrability of \(\tau _B(X_N(\cdot ;x))\), and the weak convergence of the measures \(\mu _{\varepsilon ,N}\).

Proposition 7.7

For every \(\alpha \in (0,\alpha _0), \delta \in (0,1/2)\) except possibly a countable subset, and \(\varepsilon \in (0,1)\) there exists a probability measure \(\mu _\varepsilon \in \mathcal {M}_1\left( A(\alpha _0;\delta )\right) \) such that

where the constants \(c_+\) and \(c_-\) are uniform in \(\varepsilon \).

Proof

We only prove the upper bound in (7.14). The lower bound follows similarly.

Let \(\alpha \in (0,\alpha _0)\) and \(\delta \in (0,1/2)\). Using the compact embedding \(\mathcal {C}^{-\alpha } \hookrightarrow \mathcal {C}^{-\alpha _0}\) (see Proposition A.8), for any \(\alpha <\alpha _0\), we have that \(A(\alpha ;\delta ) \subset A(\alpha _0;\delta )\). Let \(\{\mu _{\varepsilon ,N}\}_{N\ge 1}\) be the family of probability measures in (7.5). Using again the compact embedding \(\mathcal {C}^{-\alpha } \hookrightarrow \mathcal {C}^{-\alpha _0}\), for any \(\alpha <\alpha _0\), this family is trivially tight since it is concentrated on \(\partial A(\alpha ;\delta )\). Hence there exists \(\mu _\varepsilon \in \mathcal {M}_1\left( A(\alpha _0;\delta )\right) \) such that \(\mu _{\varepsilon ,N} {\mathop {\rightarrow }\limits ^{\text {weak}}} \mu _\varepsilon \) up to a subsequence.

By Skorokhod’s representation theorem (see [7, Theorem 2.4]) there exist a probability space \((\Omega _{\mu _\varepsilon }, \mathcal {F}_{\mu _\varepsilon }, \mathbb {P}_{\mu _\varepsilon })\) and random variables \(\{x_N\}_{N\ge 1}\) and x taking values in \(A(\alpha _0;\delta )\) such that \(x_N{\mathop {=}\limits ^{\text {law}}} \mu _{\varepsilon ,N}\), \(x{\mathop {=}\limits ^{\text {law}}} \mu _\varepsilon \) and \(x_N\rightarrow x\) \(\mathbb {P}_{\mu _\varepsilon }\)-almost surely in \(\mathcal {C}^{-\alpha _0}\). If we denote by \(\mathbb {E}_{\mathbb {P}\otimes \mathbb {P}_{\mu _\varepsilon }}\) the expectation with respect to the probability measure \(\mathbb {P}\otimes \mathbb {P}_{\mu _\varepsilon }\), we have that

By Proposition 7.3, \(X_N(\cdot ;x_N)\) converges to \(X(\cdot ;x)\) \(\mathbb {P}\otimes \mathbb {P}_{\mu _\varepsilon }\)-almost surely on compact time intervals of \((0,\infty )\) up to a subsequence. Let

and notice that for \(x(t) = L^{-2}\langle X(t;x), 1\rangle \)

As in [20, Proof of Theorem 6.1] the last set is at most countable, hence \(\tau _{B(\alpha ;\delta )}(X_N(\cdot ;x_N)) \rightarrow \tau _{B(\alpha ;\delta )}(X(\cdot ;x))\) \(\mathbb {P}\otimes \mathbb {P}_{\mu _\varepsilon }\)-almost surely up to a subsequence, except possibly for countably many \(\delta \in (0,1/2)\).

By Corollary 7.6 the family \(\{\tau _{B(\alpha ;\delta )}(X_N(\cdot ;x))\}_{N\ge N_0}\) is uniformly integrable. Hence by Vitali’s convergence theorem (see [5, Theorem 4.5.4]) we obtain that

Combining with (7.5) and (7.15) the proof of the upper bound is complete. \(\square \)
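For illustration, here is one standard way to see that a uniform exponential moment implies uniform integrability; it is not necessarily the route taken in the cited references. Let \(\{\tau _N\}_{N\ge N_0}\) be non-negative random variables and assume that \(\sup _{N\ge N_0}\mathbb {E}\exp \{\eta _0\tau _N\}\le M<\infty \), where M is a name introduced here for the bound. Using \(t^2\le 2\eta _0^{-2}\mathrm {e}^{\eta _0 t}\) for \(t\ge 0\), we get for every \(K>0\)

$$\begin{aligned} \sup _{N\ge N_0}\mathbb {E}\left[ \tau _N \mathbf {1}_{\{\tau _N>K\}}\right] \le \frac{1}{K}\sup _{N\ge N_0}\mathbb {E}\left[ \tau _N^2\right] \le \frac{2M}{\eta _0^2 K}, \end{aligned}$$

and the right-hand side tends to 0 as \(K\rightarrow \infty \).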

7.3 Proof of Theorem 3.5

In this section we combine Proposition 7.7 and Theorem 3.1 to prove Theorem 3.5. The idea we use here was first implemented in the 1-dimensional case in [4]. Generally speaking, if we restrict ourselves to the event where the first transition from a neighbourhood of \(-1\) to a neighbourhood of 1 happens after the exponential loss of memory, \(\tau _{B(\alpha ;\delta )}(X(\cdot ;x))\) behaves like \(\int \tau _{B(\alpha ;\delta )}(X(\cdot ;x)) \, \mu _{\varepsilon }(\,\mathrm {d}x)\) for \(x\in A(\alpha _0;\delta )\). The probability of this event is quantified by Theorem 3.1 and Proposition 7.8. On the complement of this event the transition time \(\tau _{B(\alpha ;\delta )}(X(\cdot ;x))\) is estimated using Proposition 7.9.