Abstract

Content-based image retrieval (CBIR) systems aim to retrieve the most similar images in a collection, given a query image. Since users are interested in the returned images placed at the first positions of ranked lists (which usually are the most relevant ones), the effectiveness of these systems is very dependent on the accuracy of ranking approaches. This paper presents a novel re-ranking algorithm aiming to exploit contextual information for improving the effectiveness of rankings computed by CBIR systems. In our approach, ranked lists and distance scores are used to create context images, later used for retrieving contextual information. We also show that our re-ranking method can be applied to other tasks, such as (a) combining ranked lists obtained using different image descriptors (rank aggregation) and (b) combining post-processing methods. Conducted experiments involving shape, color, and texture descriptors and comparisons with other post-processing methods demonstrate the effectiveness of our method.

1 Introduction

The continuous decrease in storage device costs and the technological improvements in image acquisition and sharing facilities have enabled the dissemination of very large digital image collections, accessible through various technologies. In this scenario, effective and efficient systems for searching and organizing these contents are of great interest.

Content-based image retrieval (CBIR) can be seen as any technology that helps to search and organize digital picture archives by means of their visual content [11]. In general, given a query image, a CBIR system aims to retrieve the most similar images in a collection by taking into account image visual properties (such as shape, color, and texture). Collection images are ranked in decreasing order of similarity, according to a given image descriptor. An image content descriptor is characterized by [8]: (a) an extraction algorithm that encodes image features into feature vectors and (b) a similarity measure used to compare two images. The similarity between two images is computed as a function of the distance of their feature vectors.

A direct way to improve the effectiveness of CBIR systems consists in using more accurate features for describing images. Another possibility is related to the definition of similarity (or distance) functions that would be able to measure the distance between feature vectors in a more effective way.

Commonly, CBIR systems compute similarity considering only pairs of images. User perception, on the other hand, usually considers the query specification and the query responses in a given context. In interactive applications, the use of context can play an important role [1]. Context can be broadly defined as all information about the whole situation relevant to an application and its set of users. In information retrieval and recommendation systems, contextual information such as geographic information, user profiles, and relationships among users and objects can be used for improving the effectiveness of the obtained results. In a CBIR scenario, relationships among images, encoded in ranked lists, can be used for extracting contextual information.

In this paper, we present a new post-processing method that re-ranks images by taking into account contextual information encoded in ranked lists and in the distances among images. We propose a novel approach for retrieving contextual information, by creating a gray scale image representation of distance matrices computed by CBIR descriptors (referred to in this paper as the context image). The context image is constructed for the \(k\)-nearest neighbors of a query image and analyzed using image processing techniques. The use of image processing techniques for contextual information representation and processing is an important novelty of our work. Our method uses distance matrices computed by CBIR descriptors that are later processed considering their image representation. The median filter, for instance, which is a well-known non-linear filter often used for removing noise, is exploited in our approach to improve the quality of distance scores. Basically, we treat “wrong” distances as “noise” in the context image, and the median filter is used for filtering this noise out. In fact, a very large number of image processing techniques can be used for extracting useful information from context images. We believe that our strategy opens a new area of investigation related to the use of image processing approaches for analyzing distances computed by CBIR descriptors, in tasks such as image re-ranking, rank aggregation, and clustering.

We evaluated the proposed method on shape, color, and texture descriptors. Experimental results demonstrate that the proposed method can be used in several CBIR tasks, since it yields better effectiveness results than various post-processing algorithms recently proposed in the literature.

This paper differs from previous works [29, 32] with regard to the following aspects: (a) it presents and discusses in more detail the main concepts of the proposed methods, (b) it extends both re-ranking and rank aggregation algorithms by considering more contextual information, and (c) it presents new experimental results that outperform the original methods.

The paper is organized as follows. Section 2 discusses related work and Sect. 3 presents the problem definition. Section 4.1 describes the contextual information representation, while Sects. 4.2 and 4.3 describe the re-ranking and rank aggregation methods, respectively. Section 4.4 discusses how to use our approach for combining post-processing methods. Experimental design and results are reported in Sect. 5. Finally, Sect. 6 presents conclusions and future work.

2 Related work

This section discusses related work. Section 2.1 discusses the re-ranking approaches and Sect. 2.2 describes the rank aggregation methods.

2.1 Re-ranking

Recently, several approaches have been proposed for performing re-ranking tasks on various information retrieval systems [3, 12, 20, 29, 33, 34, 36, 41]. In general, these methods perform a post-processing analysis that uses an initial ranking and exploits additional information (e.g., relationships among items, user profiles) for improving the effectiveness of ranked lists.

In the Information Retrieval scenario, the term “global ranking” was proposed in [34] for designating a ranking model that takes all the documents together as its input, instead of only individual objects. In other words, a global ranking uses not only the information of documents but also the relation information among them.

The continuous conditional random field (CRF) model was proposed in [34] for conducting the learning task in global ranking. This model is defined as a conditional probability distribution over ranking scores of objects. It represents the content information of objects as well as the relation information between objects, necessary for global ranking. A global ranking framework that solves the problem via data fusion was proposed in [10]. The main idea of the approach is to take each retrieved document as a pseudo-information retrieval system. Each document generates a pseudo-ranked list by a global function. A data fusion algorithm is then adapted to generate the final ranked list.

Inter-document similarity is considered in [12] and a clustering approach is applied for regularizing retrieval scores. In [45], a semi-supervised label propagation algorithm [50] was applied for re-ranking documents in information retrieval applications.

In the CBIR scenario, several methods have also been proposed for post-processing retrieval tasks, considering relationships among images. A graph transduction learning approach is introduced in [48]. The algorithm computes the shape similarity of a pair of shapes in the context of other shapes as opposed to considering only pairwise relations. The influence among shape similarities in an image collection is analyzed in [46]. Markov chains are used to perform a diffusion process on a graph formed by a set of shapes, where the influences of other shapes are propagated. The approach introduces a locally constrained diffusion process and a method for densifying the shape space by adding synthetic points. A shortest path propagation algorithm was proposed in [44], which is a graph-based algorithm for shape/object retrieval. Given a query object and a target database object, it explicitly finds the shortest path between them in the distance manifold of the database objects. Then a new distance measure is learned based on the shortest path and is used to replace the original distance measure. Another approach, based on propagating the similarity information in a weighted graph, is proposed in [47] and called affinity learning. Instead of propagating the similarity information on the original graph, it uses a tensor product graph (TPG) obtained by the tensor product of the original graph with itself.

A method that exploits the shape similarity scores is proposed in [21]. This method uses an unsupervised clustering algorithm, aiming to capture the manifold structure of the image relations by defining a neighborhood for each data point in terms of a mutual \(k\)-nearest neighbor graph. The Distance Optimization Algorithm (DOA) is presented in [31]. DOA considers an iterative clustering approach based on distance correlation and on the similarity of ranked lists. The algorithm exploits the fact that if two images are similar, their distances to other images, and therefore their ranked lists, should be similar as well.

Recently, contextual information has also been considered for improving the effectiveness of image retrieval [19, 33, 36, 49]. The objective of these methods is to somehow mimic human behavior in judging the similarity among objects by considering specific contexts. More specifically, the notion of context can refer to updating image similarity measures by taking into account information encoded in the ranked lists defined by a CBIR system [36]. Similar to approaches based on global ranking, these methods use information about relationships among images for re-ranking. In [33], the notion of context refers to the nearest neighbors of a query image. A similarity measure is proposed for assessing how similar two ranked lists are. An extension of this approach was proposed in [36]. A clustering method is used for representing the contextual information. In [33], a family of contextual measures of similarity between distributions is introduced. These contextual measures are then used in the image retrieval problem as a re-ranking method.

The contextual re-ranking algorithm proposed in this paper aims to exploit contextual information for image re-ranking tasks. An important novelty of the contextual re-ranking algorithm consists in the use of image processing techniques for contextual information representation and processing. The proposed method is flexible in the sense that it can be easily tailored to different CBIR tasks, considering shape, color and texture descriptors. Furthermore, it can also be used for rank aggregation and for combining post-processing methods.

2.2 Rank aggregation

Different CBIR descriptors produce different rankings. Further, it is intuitive that different descriptors may provide different but complementary information about images, and therefore their combination may improve ranking performance. An approach for improving CBIR systems consists in using rank aggregation techniques. Basically, rank aggregation approaches aim to combine different rankings in order to obtain a more accurate one.

Although the rank aggregation problem has a long and interesting history that goes back at least two centuries [14, 26], it has been receiving great attention from the computing community in the last few decades. Rank aggregation is being employed in many new applications [14, 26], such as document filtering, spam webpage detection, meta-search, word association finding, multiple search, biological databases, and similarity search. Commonly, different rank aggregation approaches consider that objects highly ranked in many ranked lists are likely to be relevant [7]. For estimating the relevance of an object, given a ranked list, both rank positions [6] and retrieval scores [16] are considered.

Recently, learning to rank approaches have also been considered [15]. Their objective is to use machine learning techniques to combine different CBIR descriptors. Rank aggregation can also be thought of as an unsupervised regression, in which the goal is to find an aggregate ranking that minimizes the distance to each of the given ranked lists [37]. It can also be seen as the problem of finding a ranking of a set of elements that is “closest to” a given set of input rankings of the elements [13, 14, 35].

In general, using supervised or unsupervised techniques, rank aggregation methods consider only scores or positions for producing new rankings. The rich contextual information encoded in relationships among images is ignored. In this paper, we exploit these relationships for rank aggregation.

Different from the aforementioned methods, in our work, the similarity (in terms of effectiveness measures) between descriptors to be combined is considered for rank aggregation tasks. It is expected that uncorrelated systems would produce different rankings of the relevant objects, even when the overlap in the provided ranked lists is high. This observation is consistent with the statement that the combination with the lowest error occurs when the classifiers are independent and non-correlated [7].

3 Problem definition

This section presents a formal definition for problems discussed in this paper. Section 3.1 presents a definition of the re-ranking problem considering contextual information. Section 3.2 presents a definition of the rank aggregation problem.

3.1 Re-ranking method

Let \(\mathcal{ C} =\{\text{img}_{1}, {\text{img}}_{2},\dots , {\text{img}}_{N}\}\) be an image collection.

Let \(\mathcal{ D} \) be an image descriptor which can be defined [8] as a tuple \((\epsilon ,\rho )\), where

- \(\epsilon : \hat{I}\rightarrow \mathbb{ R} ^{n}\) is a function, which extracts a feature vector \(v_{\hat{I}}\) from an image \(\hat{I}\).
- \(\rho : \mathbb{ R} ^{n} \times \mathbb{ R} ^{n} \rightarrow \mathbb{ R} \) is a distance function that computes the distance between two images as a function of the distance between their corresponding feature vectors.

In order to obtain the distance between two images \({\text{img}}_{i}\) and \({\text{img}}_{j}\) it is necessary to compute the value of \(\rho (\epsilon ({\text{img}}_{i}), \epsilon ({\text{img}}_{j}))\). For simplicity and readability purposes we use the notation \(\rho ({\text{img}}_{i}, {\text{img}}_{j})\) throughout the paper.

The distance \(\rho ({\text{img}}_{i}, {\text{img}}_{j})\) between every pair of images \({\text{img}}_{i}, {\text{img}}_{j} \in \mathcal{ C} \) can be computed to obtain an \(N \times N\) distance matrix \(A\). Given a query image \({\text{img}}_{q}\), we can compute a ranked list \(R_{{\text{img}}_{q}}\) in response to the posed query by taking into account the distance matrix \(A\). The ranked list \(R_{{\text{img}}_{q}}=\{{\text{img}}_{i}, {\text{img}}_{j}, \dots , {\text{img}}_{N}\}\) can be defined as a permutation of the collection \(\mathcal{ C} \), such that, if \({\text{img}}_{i}\) is ranked higher than \({\text{img}}_{j}\), then \(\rho ({\text{img}}_{q}, {\text{img}}_{i}) < \rho ({\text{img}}_{q}, {\text{img}}_{j})\). We can also take each image \({\text{img}}_{i} \in \mathcal{ C} \) as a query image \({\text{img}}_{q}\), in order to obtain a set \(\mathcal{ R} = \{R_{{\text{img}}_{1}},R_{{\text{img}}_{2}},\dots ,R_{{\text{img}}_{N}}\}\) of ranked lists, one for each image \({\text{img}}_{i} (1 \le i \le N)\) of collection \(\mathcal{ C} \). A re-ranking method that considers relations among all images in a collection can be represented by a function \(f_{r}\) that takes as input the distance matrix \(A\) and the set of ranked lists \(\mathcal{ R} \) and computes a new distance matrix \(\hat{A}\):

\[\hat{A} = f_{r}(A, \mathcal{ R} )\]

Based on the distance matrix \(\hat{A}\), collection images can be re-ranked, i.e., a new set of ranked lists can be obtained. The contextual re-ranking algorithm, detailed in Sect. 4.2, consists in an implementation of function \(f_{r}\).
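The definitions above can be made concrete with a small sketch. It is only an illustration under stated assumptions: the Euclidean distance stands in for the descriptor distance \(\rho\), the feature vectors are toy values, and the function names `distance_matrix` and `ranked_lists` are hypothetical.

```python
from math import dist  # Euclidean distance, used here only as a stand-in for rho


def distance_matrix(features):
    """Return the N x N matrix A with A[i][j] = rho(img_i, img_j)."""
    return [[dist(u, v) for v in features] for u in features]


def ranked_lists(A):
    """Return one ranked list per query image: indices sorted by increasing distance."""
    n = len(A)
    return [sorted(range(n), key=lambda j: A[q][j]) for q in range(n)]


# Toy 2-D feature vectors for four hypothetical images.
features = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.2, 5.0)]
A = distance_matrix(features)
R = ranked_lists(A)
print(R[0])  # ranked list obtained when image 0 is the query
```

Any implementation of \(f_{r}\) would take `A` (and the lists in `R`, which can be recomputed from `A`) and return a new matrix of the same shape.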

3.2 Rank aggregation method

Let \(\mathcal{ C} \) be an image collection and let \(\mathcal{ D} = \{D_1, D_2, \dots , D_m\}\) be a set of \(m\) image descriptors. The set of descriptors \(\mathcal{ D} \) can be used for computing a set of distance matrices \(\mathcal{ A} = \{A_1, A_2, \dots , A_m\}\). As discussed in the previous subsection, for each distance matrix \(A_{i} \in \mathcal{ A} \), a set of ranked lists \(\mathcal{ R} _{i} = \{R_{1},R_{2},\dots ,R_{N}\}\) can be computed. Let \(\mathcal{ R} _\mathcal{ A} = \{\mathcal{ R} _{1}, \mathcal{ R} _{2}, \dots , \mathcal{ R} _{m}\}\) be a set of sets of ranked lists (one set \(\mathcal{ R} _{i}\) for each matrix \(A_{i}\)). The objective of rank aggregation methods that consider relationships among images is to use the sets \(\mathcal{ A} \) and \(\mathcal{ R} _\mathcal{ A} \) as input for computing a new distance matrix \(\hat{A}_{c} \):

\[\hat{A}_{c} = f_{a}(\mathcal{ A} , \mathcal{ R} _\mathcal{ A} )\]

Based on the combined distance matrix \(\hat{A}_{c}\), a new set of ranked lists can be computed. The Contextual Rank Aggregation Algorithm, detailed in Sect. 4.3, consists in an implementation of function \(f_{a}\).

4 Contextual methods

This section presents our methods for image re-ranking and rank aggregation considering contextual information. Section 4.1 discusses the contextual information representation used by our methods. Section 4.2 presents the re-ranking algorithm while Sect. 4.3 presents the rank aggregation algorithm. Finally, Sect. 4.4 discusses the use of our approach for combining re-ranking methods.

4.1 Contextual information representation

Let \(\mathcal{ C} \) be an image collection and let \(\mathcal{ D} \) be an image descriptor. The distance function \(\rho \) defined by \(\mathcal{ D} \) can be used for computing the distance \(\rho ({\text{img}}_{i}, {\text{img}}_{j})\) among all images \({\text{img}}_{i}, {\text{img}}_{j} \in \mathcal{ C} \) in order to obtain an \(N \times N\) distance matrix \(A\).

Our goal is to represent the distance matrix \(A\) as a gray scale image and to analyze this image for extracting contextual information using image processing techniques. For the gray scale image representation, referenced in this paper as context image \(\hat{I}\), we consider two reference images \({\text{img}}_{i}, {\text{img}}_{j} \in \mathcal{ C} \).

Let the context image \(\hat{I}\) be a gray scale image defined by the pair \((D_{I},f)\), where \(D_{I}\) is a finite set of pixels (points in \(\mathbb{ N} ^{2}\), defined by a pair \((x,y)\)) and \(f: D_{I} \rightarrow \mathbb{ R} \) is a function that assigns to each pixel \(p \in D_{I}\) a real number. We define the values of \(f\) function in terms of the distance function \(\rho \) (encoded into matrix \(A\)) and reference images \({\text{img}}_{i}, {\text{img}}_{j} \in \mathcal{ C} \).

Let \(R_{i}=\{{\text{img}}_{i_1}, {\text{img}}_{i_2}, \dots , {\text{img}}_{i_N}\}\) be the ranked list defined by matrix \(A\) considering the reference image \({\text{img}}_{i}\) as query image; and \(R_{j}=\{{\text{img}}_{j_1}, {\text{img}}_{j_2}, \dots , {\text{img}}_{j_N}\}\) the ranked list of reference image \({\text{img}}_{j}\). The axes of the context image \(\hat{I}\) are ordered according to the ranked lists \(R_{i}\) and \(R_{j}\). Let \({\text{img}}_{i_x} \in R_{i}\) be the image at position \(x\) of ranked list \(R_{i}\) and \({\text{img}}_{j_y} \in R_{j}\) the image at position \(y\) of ranked list \(R_{j}\). The value of \(f(x,y)\) (the function that defines the gray level of pixel \(p (x,y)\)) is then defined as follows: \(f(x,y) = \bar{\rho }({\text{img}}_{i_x}, {\text{img}}_{j_y})\), where \(\bar{\rho }\) is the distance function \(\rho \) normalized in the interval [0, 255].

An example, considering two similar reference images (from MPEG-7 dataset [23]), is illustrated in Fig. 1. The respective gray scale image representing matrix \(A\) is illustrated in Fig. 2. An analogous example for non-similar images is shown in Figs. 3 and 4.
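As a minimal sketch of how a context image could be built from \(A\) and two ranked lists, consider the code below. The function name `context_image` is hypothetical, and the normalization to [0, 255] by dividing by the largest distance in \(A\) is an assumption, since the text does not state which normalization is used.

```python
def context_image(A, R_i, R_j, size):
    """Build the top-left size x size corner of the context image.

    Pixel (x, y) holds the distance between the image at position x of R_i
    and the image at position y of R_j, rescaled to [0, 255].
    Assumption: rescaling divides by the largest distance found in A.
    """
    max_d = max(max(row) for row in A) or 1.0  # guard against an all-zero matrix
    return [[255.0 * A[R_i[x]][R_j[y]] / max_d for y in range(size)]
            for x in range(size)]


# Toy distance matrix for three hypothetical images.
A = [[0.0, 1.0, 4.0],
     [1.0, 0.0, 3.0],
     [4.0, 3.0, 0.0]]
R_0 = [0, 1, 2]  # ranked list of reference image 0
R_1 = [1, 0, 2]  # ranked list of reference image 1
corner = context_image(A, R_0, R_1, size=2)
print(corner)
```

With two similar reference images, the values in this corner are small, which corresponds to dark pixels.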

The context images can represent a great source of information about an image collection and the distances among images. A single context image contains information about all distances among images and their spatial relationship defined by the ranked lists of the reference images. In other words, a single pixel can relate four collection images: the two reference images (that define the position of the pixel, according to their ranked lists) and the two images whose distance defines the grayscale value of the pixel. Another important advantage of this image representation is the possibility of using a large number of image processing techniques.

In this paper, our goal is to exploit useful contextual information provided by context images. Low distance values (similar images) are associated with dark pixels in the image, while high values (non-similar images) are associated with lighter pixels. Considering two similar images as reference images, the beginning of their two ranked lists should contain similar images as well. This behavior creates a dark region at the top left corner of a context image (as we can observe in Fig. 2). This region represents a neighborhood of similar images with low distances.

The top left corner represents the images at the first positions of the ranked lists of the two reference images, where accuracy is higher than in any other region of the context image. We aim to characterize contextual information by analyzing this region using image processing techniques. This information will be used by the re-ranking method presented in the next section.

Other regions of context images could also be of interest. Considering similar reference images, the region close to the main diagonal, for example, contains more dark pixels (low distances) than the rest of the image. Since the ranked lists of the reference images are similar, pixels close to the main diagonal represent distances between similar images. The use of other regions of context images in image re-ranking tasks is left as future work.

4.2 The contextual re-ranking algorithm

Given an image \({\text{img}}_{i} \in \mathcal{ C} \), we aim to process contextual information of \({\text{img}}_{i}\) by constructing context images for each one of its k-nearest neighbors (based on distance matrix \(A\)). We use an affinity matrix \(W\) to store the results of processing contextual information. Let \(N\) be the size of collection \(\mathcal{ C} \). The affinity matrix \(W\) is an \(N \times N\) matrix where \(W[k,l]\) represents the similarity between images \({\text{img}}_k\) and \({\text{img}}_l\).

We use image processing techniques to process the context images built from \({\text{img}}_i\) and each one of its k-nearest neighbors, and then update the affinity matrix \(W\). The same process is performed for all \({\text{img}}_{i} \in \mathcal{ C} \). Once all images of \(\mathcal{ C} \) have been processed, the affinity matrix \(W\) is used as input for computing a new distance matrix \(A_{t+1}\) (where \(t\) indicates the current iteration).

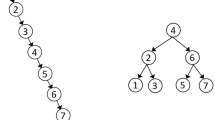

Based on the new distance matrix \(A_{t+1}\), a new set of ranked lists is computed. These steps are repeated for several iterations. Finally, after a number \(T\) of iterations, a re-ranking is performed based on the final distance matrix \(A_{T}\) in order to obtain the final set of ranked lists. The main steps of the contextual re-ranking algorithm are illustrated in Fig. 5. Algorithm 1 outlines the complete re-ranking method, which is detailed below.

The affinity matrix \(W\) is initialized with value 1 for all positions in Line 4. Context images are created in Line 7, as explained in Sect. 4.1, considering \({\text{img}}_i\) (image being processed) and \({\text{img}}_j\) (current neighbor of \({\text{img}}_i\)) as reference images. The parameter \(L\) refers to the size of the square in the top left corner of context image that will be analyzed.

Image processing techniques are applied to context images in Line 8. Our goal is to identify dense regions of dark pixels. Dark pixels indicate low distance values and, therefore, similar images. These regions represent the set of similar images at the first positions of both ranked lists whose distances to each other are low. We use a threshold for obtaining a binary image and then identify dark pixels. The threshold \(l\) is computed as the average distance value normalized by the maximum distance value contained in the \(L \times L\) square in the top left corner of the context image:

\[l = \frac{\mathrm{avg}(f(p,q))}{\max (f(p,q))}\]

with \(p,q < L\).
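The thresholding step can be sketched as follows. This is a hedged illustration, not the authors' implementation: it assumes the threshold is the average of the corner values divided by their maximum, and that a pixel becomes black (value 0) when its own value divided by the maximum does not exceed that threshold. The name `binarize_corner` is hypothetical.

```python
def binarize_corner(corner):
    """Threshold an L x L context-image corner into black (0) and white (1).

    Assumptions: threshold l = average / maximum of the corner values, and a
    pixel is black when its value divided by the maximum is <= l.
    """
    values = [v for row in corner for v in row]
    max_v = max(values)
    if max_v == 0:
        # All distances are zero: every pixel is black.
        return [[0 for _ in row] for row in corner]
    l = (sum(values) / len(values)) / max_v
    return [[0 if v / max_v <= l else 1 for v in row] for row in corner]


corner = [[10.0, 20.0],
          [30.0, 200.0]]
binary = binarize_corner(corner)
print(binary)
```

Under these assumptions the rule is equivalent to marking as black every pixel whose distance is at most the average distance in the corner.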

Next, we use a median filter for determining regions of dense black pixels. The non-linear median filter, often used for removing noise, is used in our approach to correct distances among images. Basically, we treat “wrong” distances as “noise” and use the median filter to filter this noise out. More specifically, consider a dense region of black pixels at the top left corner of a context image. It represents a set of similar images (low distances) at the top positions of the ranked lists of the reference images. Consider a white pixel in this region, indicating a high distance between two images. By taking into account the contextual information given by the region of the pixel (position and other close pixels), it is very likely that the distance represented by this pixel is incorrect. In this scenario, the median filter replaces the white pixel by a black pixel. Similar reasoning can be applied to isolated black pixels in white regions. We should note that, in extreme situations, in which the CBIR descriptors completely confuse similar and non-similar images, there is less contextual information available in the context images.

Figure 6 illustrates an example of a binary image and Fig. 7 shows the same image after applying the median filter (with a \(3 \times 3\) mask).
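The effect of a \(3 \times 3\) median filter on a binary image can be illustrated with the sketch below. The border handling (clamping indices, i.e., replicating edge pixels) is an assumption, since the text does not say how borders are treated, and `median_filter_3x3` is a hypothetical name.

```python
def median_filter_3x3(img):
    """Apply a 3 x 3 median filter to a 2-D list of pixel values.

    Assumption: borders are handled by clamping indices (edge replication),
    so every window always contains nine values.
    """
    h, w = len(img), len(img[0])

    def px(r, c):
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = sorted(px(r + dr, c + dc)
                            for dr in (-1, 0, 1) for dc in (-1, 0, 1))
            row.append(window[4])  # middle of nine sorted values
        out.append(row)
    return out


# 0 = black (low distance), 1 = white (high distance).
binary = [
    [0, 0, 0, 1],
    [0, 1, 0, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
]
filtered = median_filter_3x3(binary)
print(filtered[1][1])  # the isolated white pixel inside the black region
```

The isolated white pixel at position (1, 1) is surrounded by black pixels, so the filter turns it black, which is the "noise removal" behavior described above.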

Line 9 updates the affinity matrix \(W\) based on the context images. For updating, only black pixels (and their positions) are considered. The objective is to give more relevance to pixels next to the origin \((0,0)\), i.e., pixels that represent the beginning of ranked lists. The importance of neighbors should also be considered: neighbors at first positions should be considered more relevant when updating \(W\).

Let \({\text{img}}_i \in \mathcal{ C} \) be the current image being processed. Let \({\text{img}}_j\) be the \(k\)-th neighbor of \({\text{img}}_i\) (such that \(k < K\)). Let \({\text{img}}_i\) and \({\text{img}}_j\) be reference images and let \(\hat{I}(D_{I},f)\) be the context image after thresholding and applying the median filter. Let \(L\) be the size of the top left corner square that should be processed and let \(p(x,y) \!\in \! D_{I}\) be a black pixel \((f(x,y)\!=\!0)\), such that \(x,y < L\). The pixel \(p(x,y)\) represents the distance between images \({\text{img}}_x\) and \({\text{img}}_y\), where \({\text{img}}_x\) is the image at position \(x\) of the ranked list \(R_i\) and \({\text{img}}_y\) is the image at position \(y\) of the ranked list \(R_j\).

Let \(H = \sqrt{2 \times L^2}\) be the maximum distance of a pixel \(p(x,y)\) to the origin \((0,0)\), as illustrated in Fig. 8. Let \(W[x,y]\) represent the similarity between images \({\text{img}}_{x}\) and \({\text{img}}_{y}\). Then, for each black pixel \(p(x,y)\), the matrix \(W\) receives five updates: the most relevant one refers to the similarity between images \({\text{img}}_x\) and \({\text{img}}_y\); two updates refer to the relationship between the reference image \({\text{img}}_i\) and images \({\text{img}}_x\) and \({\text{img}}_y\); and two updates refer to the relationship between the reference image \({\text{img}}_j\) and images \({\text{img}}_x\) and \({\text{img}}_y\). Figure 9 illustrates the relationship among these images provided by each black pixel in the context image. The update of the similarity score between \({\text{img}}_x\) and \({\text{img}}_y\) (the most relevant one) is computed as follows:

\[W[x,y] \leftarrow W[x,y] + (K-k) \times \frac{H}{\sqrt{x^2+y^2}} \qquad (4)\]

Note that low values of \(k, x, y\) (the beginning of ranked lists) lead to high increments of \(W\). The smallest increments occur when \(k\) has high values and \(x = y = L\). In this case, the term \(H/\sqrt{x^2 + y^2}\) is equal to 1. The remaining four updates (relationship among reference images and images \({\text{img}}_x, {\text{img}}_y\)) are computed as follows:

\[W[r,s] \leftarrow W[r,s] + \frac{1}{4}\,(K-k) \times \frac{H}{\sqrt{x^2+y^2}}, \qquad r \in \{i,j\},\; s \in \{x,y\}\]

Observe that these four updates together have the same weight as the first update. They are computed based on the positions of \({\text{img}}_x\) and \({\text{img}}_y\) (\(x,y\)) in the ranked lists of other images (reference images \({\text{img}}_i, {\text{img}}_j\)), while the first update is given by a pixel that represents the distance between images \({\text{img}}_x\) and \({\text{img}}_y\).
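The five updates per black pixel can be sketched as below. Several points are assumptions made for illustration only: the increment is taken to be \((K-k) \times H/\sqrt{x^2+y^2}\), each of the four secondary updates receives one quarter of it, pixel positions are 1-based (so the origin itself is never divided by), and \(k\) is a neighbor index with \(k < K\). The name `update_affinity` is hypothetical.

```python
from math import sqrt


def update_affinity(W, binary, R_i, R_j, i, j, k, K):
    """Accumulate in W the five updates produced by each black pixel.

    binary[x-1][y-1] == 0 marks a black pixel at 1-based position (x, y).
    Assumed increment: (K - k) * H / sqrt(x^2 + y^2), with H = sqrt(2 * L^2).
    """
    L = len(binary)
    H = sqrt(2 * L * L)
    for x in range(1, L + 1):
        for y in range(1, L + 1):
            if binary[x - 1][y - 1] != 0:
                continue  # only black pixels contribute
            img_x, img_y = R_i[x - 1], R_j[y - 1]
            inc = (K - k) * H / sqrt(x * x + y * y)
            W[img_x][img_y] += inc  # main update
            for ref in (i, j):  # four secondary updates, a quarter each
                for other in (img_x, img_y):
                    W[ref][other] += inc / 4


# Toy setting: 3 images, a 2 x 2 corner with a single black pixel at (2, 2).
W = [[1.0] * 3 for _ in range(3)]
binary = [[1, 1], [1, 0]]
update_affinity(W, binary, R_i=[0, 1, 2], R_j=[1, 0, 2], i=0, j=1, k=1, K=2)
print(W)
```

In this toy case the black pixel sits at \(x = y = L\) with \(K - k = 1\), so the main increment is exactly 1, matching the smallest increment mentioned in the text.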

When all images have been processed, and therefore an iteration has finished, the affinity matrix \(W\) presents high values for similar images. However, there may be positions of \(W\) that were not updated (e.g., in the case of non-similar reference images) and still have the initial value 1. The new distance matrix \(A_{t+1}\) (Line 12 of Algorithm 1) is computed as follows:

\[A_{t+1}[x,y] = \begin{cases} 1 + \bar{A}_{t}[x,y], & \text{if } W[x,y] = 1 \\ 2 / W[x,y], & \text{if } W[x,y] > 1 \end{cases}\]

where \(\bar{A}_{t}\) is the distance matrix \(A_{t}\) normalized in the interval [0, 1]. When \(W[x,y] = 1\), i.e., \(W[x,y]\) was not updated by Eq. 4, we use the old distance matrix \(A_{t}\) for determining values of \(A_{t+1}\). Otherwise (when \(W[x,y] > 1\)), values of new distance matrix \(A_{t+1}\) are equal to the inverse of the values found in the affinity matrix \(W\). Since the smallest increment for \(W\) is 1 (and therefore \(W[x,y] = 2\)), the largest value of a new distance in \(A_{t+1}\) is \(0.5\). Therefore, we normalize the new distance values in the interval [0, 1] by multiplying distances by 2. \(A_{t+1}\) will have values in the interval [0, 2]: (a) in the interval [0, 1], if \(W[x,y] > 1\), and (b) in the interval [1, 2], if \(W[x,y] = 1\). A last operation is performed on the new distance matrix \(A_{t+1}\) for ensuring the symmetry of distances between images \((\rho (x,y) = \rho (y,x))\):

Finally, a re-ranking is performed based on the values of \(A_{t+1}\) (Line 15 of Algorithm 1). At the end of \(T\) iterations, a newly computed distance matrix \(A_{T}\) and a new set of ranked lists are obtained.
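The conversion from the affinity matrix \(W\) back to distances can be sketched as follows, based on the description above: entries that were never updated keep the old normalized distance shifted into [1, 2], and updated entries become \(2/W[x,y]\). Two details are assumptions of this sketch: \(A_{t}\) is normalized by dividing by its maximum, and symmetry is enforced by taking the minimum of the two mirrored entries. The name `next_distance_matrix` is hypothetical.

```python
def next_distance_matrix(A, W):
    """Compute the new distance matrix from the old one and the affinity matrix.

    Entries with W > 1 become 2 / W (values in [0, 1]); entries never updated
    (W == 1) become 1 + normalized old distance (values in [1, 2]).
    Assumptions: max-normalization of A, and symmetry enforced with min().
    """
    n = len(A)
    max_d = max(max(row) for row in A) or 1.0
    new = [[2.0 / W[x][y] if W[x][y] > 1 else 1.0 + A[x][y] / max_d
            for y in range(n)] for x in range(n)]
    for x in range(n):
        for y in range(x + 1, n):
            new[x][y] = new[y][x] = min(new[x][y], new[y][x])
    return new


A_t = [[0.0, 2.0],
       [2.0, 0.0]]
W = [[5, 4],
     [1, 5]]  # W[1][0] was never updated
A_next = next_distance_matrix(A_t, W)
print(A_next)
```

Here the entry for (1, 0) would be 2.0 on its own, but the symmetry step aligns it with the updated entry (0, 1).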

4.3 The contextual rank aggregation algorithm

The presented re-ranking algorithm can be easily tailored to rank aggregation tasks. In this section, we present the contextual rank aggregation algorithm, aiming to combine the results of different descriptors. The main idea consists in using the same iterative approach based on context images, but using the affinity matrix \(W\) for accumulating updates of different descriptors at the first iteration.

Algorithm 2 outlines the rank aggregation algorithm. We can observe that the algorithm is very similar to the re-ranking algorithm (Algorithm 1). It also considers an iterative approach and uses context images for processing contextual information. Note that the main difference lies in lines 8–13 of Algorithm 2, which are executed only at the first iteration, when the matrices \(A_{d} \in \mathcal{ A} \) of different descriptors are being combined.

4.4 Combining post-processing methods

We defined a generic re-ranking algorithm as an implementation of a function \(f_{r} (A,\mathcal{ R} )\) in Sect. 3.1. Both the input and output of the function \(f_{r}\) are given by a distance matrix (since the set of ranked lists \(\mathcal{ R} \) can be computed based on a distance matrix).

In this way, a matrix obtained from another post- processing method (other implementation of \(f_{r}\)) can be submitted to our re-ranking algorithm. Different approaches may exploit different relationships among images and further improve the effectiveness of CBIR systems. Contextual information can be exploited by our re-ranking algorithm even after other methods have already been processed. We present experiments for validating this conjecture in Sect. 5.4.
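Because every implementation of \(f_{r}\) maps a distance matrix to a distance matrix, combining methods amounts to function composition. The sketch below illustrates this with hypothetical names (`chain`, `ranked_lists`) and two toy stand-in methods (`symmetrize`, `rescale`) that are not the post-processing methods evaluated in this paper.

```python
from typing import Callable, List

Matrix = List[List[float]]
ReRanker = Callable[[Matrix], Matrix]


def ranked_lists(A: Matrix) -> List[List[int]]:
    """Derive the set of ranked lists from a distance matrix."""
    return [sorted(range(len(row)), key=row.__getitem__) for row in A]


def chain(*methods: ReRanker) -> ReRanker:
    """Compose post-processing methods: the output of one feeds the next."""
    def combined(A: Matrix) -> Matrix:
        for f in methods:
            A = f(A)
        return A
    return combined


def symmetrize(A: Matrix) -> Matrix:
    """Toy method: keep the smaller of the two directed distances."""
    n = len(A)
    return [[min(A[x][y], A[y][x]) for y in range(n)] for x in range(n)]


def rescale(A: Matrix) -> Matrix:
    """Toy method: divide all distances by the largest one."""
    m = max(max(row) for row in A) or 1.0
    return [[v / m for v in row] for row in A]


pipeline = chain(symmetrize, rescale)
A_final = pipeline([[0.0, 4.0], [2.0, 0.0]])
```

In the setting of Sect. 5.4, the first element of the chain would be another post-processing method and the last one the contextual re-ranking algorithm.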

5 Experimental evaluation

In this section, we present the set of conducted experiments for demonstrating the effectiveness of our method. We analyzed and evaluated our method under several aspects. In Sect. 5.1, we present an analysis of the Contextual Algorithm considering: the impact of parameters and image processing techniques on the re-ranking algorithm; and a brief discussion about complexity and efficiency.

In Sect. 5.2, we discuss the experimental results for our re-ranking method. Section 5.2.1 presents results of the use of our method for several shape descriptors, considering the well-known MPEG-7 dataset [23]. Sections 5.2.2 and 5.2.3 aim to validate the hypothesis that our method can be used in general image retrieval tasks. In addition to shape descriptors, we conducted experiments with color and texture descriptors.

Section 5.3 presents experimental results of our method on rank aggregation tasks. Section 5.4 presents experimental results of our re-ranking method combined with other post-processing methods. Finally, in Sect. 5.5, we compare our results with related state-of-the-art post-processing and rank aggregation methods.

All experiments were conducted considering all images in the collections as query images. Results presented in the paper (MAP and Recall@40 scores) represent an average score.

5.1 Experiment 1: analysis of contextual re-ranking algorithm

In this section, we evaluated the contextual re-ranking algorithm with regard to different aspects. Section 5.1.1 analyzes the impact of parameters in effectiveness results. Section 5.1.2 evaluates the relevance of image processing techniques for the algorithm. Section 5.1.3 discusses aspects of efficiency and computational complexity.

5.1.1 Impact of parameters

The execution of Algorithms 1 and 2 considers three parameters: (a) \(K\): number of neighbors used as reference images, (b) \(L\): size of the top-left square of the context image to be analyzed, and (c) \(T\): number of iterations for which the algorithm is executed.

To evaluate the influence of different parameter settings on the retrieval scores and to determine the best parameter values, we conducted a set of experiments. We use the MPEG-7 dataset [23] with the so-called bullseye score (Recall@40), which counts all matching objects within the 40 most similar candidates. The MPEG-7 dataset consists of 1,400 silhouette images grouped into 70 classes of 20 different shapes each. Since each class consists of 20 objects, the retrieval score is normalized by the highest possible number of hits. For distance computation, we used the CFD [30] shape descriptor.
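For concreteness, the bullseye score can be computed as in the following sketch. The function name and argument layout are hypothetical, and the sketch assumes that the query image itself, being part of the collection, counts as one of the possible hits.

```python
from typing import List, Sequence


def bullseye_score(
    ranked_lists: Sequence[Sequence[int]],
    labels: Sequence[int],
    top: int = 40,
    class_size: int = 20,
) -> float:
    """Fraction of same-class images found within the first `top` positions,
    averaged over all queries (every image is used as a query)."""
    hits = 0
    for query, ranking in enumerate(ranked_lists):
        hits += sum(1 for i in ranking[:top] if labels[i] == labels[query])
    # Normalize by the highest possible number of hits.
    return hits / (len(ranked_lists) * class_size)


# Toy collection: 4 images, 2 classes of 2 images, top-2 window.
labels = [0, 0, 1, 1]
rankings = [[0, 1, 2, 3], [1, 2, 0, 3], [2, 3, 0, 1], [3, 0, 2, 1]]
score = bullseye_score(rankings, labels, top=2, class_size=2)
```

For MPEG-7, the defaults `top=40` and `class_size=20` correspond to the setting described above.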

Retrieval scores are computed by varying the parameters \(K\) in the interval [1, 10] and \(L\) in the interval [1, 60] (with increments of 5) for each iteration. Figures 10, 11, 12, and 13 show surfaces that represent retrieval scores for iterations 1, 2, 3, and 4, respectively. For each iteration, the best retrieval score was determined.

We observed that the best retrieval scores increased along the iterations and that the parameters converged to the values \(K=7\) and \(L=25\). Figure 14 illustrates the evolution of precision along the iterations of the re-ranking algorithm. The best retrieval score, 95.71%, was reached at iteration \(T=5\). Note that these parameters may change for datasets with very different sizes. The parameter values \(K=7, L=25\), and \(T=5\) were used for all experiments, except for the Soccer color dataset (described in Sect. 5.2.3). Since this dataset is much smaller than the others, we used \(K=3\).

5.1.2 Impact of image processing techniques

In this section, we aim to evaluate the impact of the image processing techniques on the effectiveness results. For the experiments, we consider the MPEG-7 [23] dataset (with Recall@40 score), the CFD [30] shape descriptor, and the parameter values defined in Sect. 5.1.1. We evaluated the method with regard to the following aspects:

- Median filter: we have disabled the median filter (considering only the thresholding). The retrieval score obtained was 93.94%.

- Thresholding: we have disabled the thresholding and filter steps (considering updating for all pixels in context images). The effectiveness result obtained was 92.89%.

- Masks of median filter: we evaluated the effectiveness of the method for different sizes of masks (3, 5, and 7). We have obtained for masks 3, 5, and 7, respectively, 95.71, 95.36, and 95.18%.

The experimental results demonstrate the positive impact of the image processing techniques on the effectiveness of the contextual re-ranking algorithm. The best retrieval score was obtained when thresholding and a median filter with a \(3 \times 3\) mask are used.

5.1.3 Aspects of efficiency

This paper focuses on the presentation of the contextual re-ranking algorithm and its effectiveness evaluation. The focus on effectiveness is justified by the fact that the execution of the algorithm is expected to be off-line, as in other post-processing methods [44]. This subsection aims to briefly discuss some aspects of efficiency and computational complexity.

Let \(\mathcal{ C} \) be an image collection with \(N\) images. The number of context images that should be processed is equal to \((N \times K \times T)\). The size of the context images, which determines the number of updates in matrix \(W\), is given by \(L^2\) pixels. Since the parameters \(K, T\), and \(L\) have fixed values independent of \(N\), the asymptotic computational complexity of the main steps of the algorithm (image processing and \(W\) matrix updating steps) is \(O(N)\). Other steps of the algorithm have different complexities. The matrices \(A\) and \(W\) are recomputed \((O(N^{2}))\) at each iteration. The re-ranking step performs a sort operation \((O (N {\text{ log}}\,N))\) for each image, giving \(O(N^{2} {\text{ log}}\,N)\) overall. However, these steps admit optimizations: since the updates to matrix \(W\) affect only a small subset of positions (depending on the size \(L^2\) of the context image), the matrices do not need to be fully recomputed and the ranked lists do not need to be fully sorted again. The contextual re-ranking algorithm can also be massively parallelized, since there is no dependence between the processing of different context images in the same iteration. Optimizations and parallelization issues will be investigated in future work.
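As a rough worked example (an illustration, not a measurement reported in the experiments), the MPEG-7 setting used in this paper (\(N=1{,}400\), \(K=7\), \(L=25\), \(T=5\)) gives the counts below. The bound on the number of \(W\) updates assumes that every pixel of every context image triggers an update, which presumably overestimates the real number, since thresholding restricts the updates to a subset of the pixels.

\[
N \times K \times T = 1{,}400 \times 7 \times 5 = 49{,}000 \text{ context images}
\]

\[
49{,}000 \times L^{2} = 49{,}000 \times 625 = 30{,}625{,}000 \text{ updates of } W \text{ at most}
\]

\[
N^{2} = 1{,}400^{2} = 1{,}960{,}000 \text{ entries in each of } A \text{ and } W
\]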

Also note that other post-processing methods use matrix multiplication approaches [46, 48] and graph algorithms [44], both with complexity \(O(N^3)\).

We evaluated the computation time of the contextual re-ranking algorithm for the MPEG-7 dataset \((N=1{,}400)\), using the parameters defined in Sect. 5.1.1 (\(K=7, L=25\), and \(T=5\)), running a C implementation on a Linux PC with a Core 2 Quad processor. This execution took approximately 6 s.

5.2 Experiment 2: re-ranking

In this section, we present a set of experiments conducted to demonstrate the effectiveness of our method. Various post-processing methods [21, 28, 46, 48] have been evaluated considering only one type of visual property (usually, either color or shape). We aim to evaluate the use of our method in a general way for several CBIR tasks. We compared results for several descriptors (shape, color, and texture) in different datasets. The measure adopted is mean average precision (MAP), which corresponds geometrically to the average area under the precision \(\times \) recall curves over the different queries. Table 1 presents results for 11 image descriptors in three different datasets. As can be observed in Table 1, the contextual re-ranking method yields positive effectiveness gains for all descriptors (including shape, color, and texture). The gains range from +1.37 to +18.90%, with 8.57% on average. We conducted a paired \(t\) test and concluded, with 99.9% confidence, that the difference between the mean values before and after re-ranking is statistically significant. The next subsections present the descriptors and datasets used for the shape, color, and texture experiments.
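The MAP measure can be made concrete with the short sketch below. It uses the common non-interpolated definition of average precision (precision taken at each position where a relevant image appears, averaged over the relevant images); whether the evaluation in this paper used exactly this variant is an assumption, and the function names are hypothetical.

```python
from typing import List, Sequence, Set


def average_precision(ranking: Sequence[int], relevant: Set[int]) -> float:
    """Average of the precision values at the positions of relevant images."""
    hits = 0
    total = 0.0
    for position, image in enumerate(ranking, start=1):
        if image in relevant:
            hits += 1
            total += hits / position  # precision at this recall point
    return total / len(relevant) if relevant else 0.0


def mean_average_precision(
    rankings: Sequence[Sequence[int]], relevant_sets: Sequence[Set[int]]
) -> float:
    """Mean of the average precision over all queries."""
    scores = [average_precision(r, s) for r, s in zip(rankings, relevant_sets)]
    return sum(scores) / len(scores)


# Two toy queries: relevant images {1, 3} and {1}, respectively.
rankings = [[1, 2, 3, 4], [2, 1]]
relevant_sets = [{1, 3}, {1}]
map_score = mean_average_precision(rankings, relevant_sets)
```

In the toy example, the first query has average precision \((1/1 + 2/3)/2\) and the second \(1/2\), so the MAP is their mean, about 0.667.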

5.2.1 Shape descriptors

We evaluate the use of our method with six shape descriptors considering the MPEG-7 dataset [23]: beam angle statistics (BAS) [2], segment saliences (SS) [9], inner distance shape context (IDSC) [24], contour features descriptor (CFD) [30], articulation-invariant representation (AIR) [17], and aspect shape context (ASC) [25]. Results of the bullseye score for all descriptors are presented in Table 2. Note that the effectiveness gains are always positive and substantial, ranging from +5.29 to +16.80%, with 10.56% on average. Figure 15 presents the percentage gain obtained by the contextual re-ranking algorithm for the CFD [30] descriptor considering each of the 70 shape classes in the MPEG-7 dataset. Note that the bullseye score improved by more than 30% for several classes.

Fig. 15 Contextual re-ranking percent gain for CFD [30] shape descriptor on the MPEG-7 classes

The iterative behavior of the contextual re-ranking algorithm can be observed in the results illustrated in Fig. 16. The figure shows the evolution of the rankings along the iterations. The first row presents 20 results for a query image (first column) according to the CFD [30] shape descriptor. The remaining rows present the results for each iteration of the contextual re-ranking algorithm. We can observe a significant improvement in precision, from 40% (on the ranking computed by the CFD [30] descriptor) to 100% at the fifth iteration of the re-ranking algorithm.

5.2.2 Texture descriptors

The experiments considered three texture descriptors: local binary patterns (LBP) [27], local activity spectrum (LAS) [40], and color co-occurrence matrix (CCOM) [22]. We used the Brodatz [5] dataset, a popular dataset for the evaluation of texture descriptors. The Brodatz dataset is composed of 111 different textures. Each texture is divided into 16 blocks, such that 1,776 images are considered. Our re-ranking method presents positive gains, presented in Table 1, ranging from +1.37 to +10.60%.

5.2.3 Color descriptors

Three color descriptors were considered for our evaluation: auto color correlograms (ACC) [18], border/interior pixel classification (BIC) [38], and global color histogram (GCH) [39]. The experiments were conducted on a database used in [43] and composed of images from seven soccer teams, containing 40 images per class. We can observe positive gains for all color descriptors, presented in Table 1, ranging from +2.42 to +9.63%.

5.3 Experiment 3: rank aggregation

This section aims to evaluate the use of our re-ranking method to combine different CBIR descriptors. We selected two descriptors for each visual property (shape, color, and texture): the descriptors with the best effectiveness results were selected (except for the MPEG-7 dataset, for which the AIR [17] descriptor yields results very close to the maximum scores). Table 3 presents the MAP scores obtained for rank aggregation (in bold) considering these descriptors. We can observe significant gains compared with the results of each descriptor in isolation. Figure 17 illustrates the precision \(\times \) recall curves of the shape descriptors CFD [30] and ASC [25] in different situations: before and after using the contextual re-ranking algorithm, and after using it for rank aggregation. As can be observed, for both re-ranking and rank aggregation, very significant gains in terms of precision have been achieved.

5.4 Experiment 4: combining post-processing methods

In this section, we aim to evaluate the use of our re-ranking method combined with other post-processing methods. We considered two post-processing approaches: DOA [30] and Mutual kNN graph [21]. Table 4 presents the results for MAP and Recall@40 measures. The gains are positive, ranging from +0.30 to +0.90%.

5.5 Experiment 5: comparison with other approaches

We also evaluated our method in comparison with other state-of-the-art post-processing methods. We used the MPEG-7 dataset with the bullseye score again. Table 5 presents results of our contextual re-ranking algorithm (in bold) and several other post-processing methods in different tasks (re-ranking, rank aggregation, and combining post-processing methods). We also present the retrieval scores for some descriptors that have been used as input for these methods. We can observe that the contextual re-ranking method presents high effectiveness scores when compared with state-of-the-art approaches. Note that our method has the best effectiveness performance when compared with all other post-processing methods in rank aggregation tasks.

6 Conclusions

In this work, we have presented a new re-ranking method based on contextual information. The main idea consists in creating grayscale image representations of distance matrices and performing a re-ranking based on information extracted from these images. We conducted a large set of experiments, considering several descriptors and datasets. Experimental results demonstrate the applicability of our method to several image retrieval tasks based on shape, color, and texture descriptors. The proposed method achieves very high effectiveness when compared with state-of-the-art post-processing methods on well-known datasets.

Future work focuses on: (a) parallelizing and optimizing the proposed algorithm, (b) using other image processing techniques, such as dynamic thresholding and other filtering approaches, (c) analyzing other regions of context images, and (d) investigating the use of context images for other applications (for clustering and computing the similarity between ranked lists, for example).

References

Abowd GD, Dey AK, Brown PJ, Davies N, Smith M, Steggles P (1999) Towards a better understanding of context and context-awareness. In: Proceedings of the 1st international symposium on handheld and ubiquitous Computing, HUC’99, pp 304–307

Arica N, Vural FTY (2003) Bas: a perceptual shape descriptor based on the beam angle statistics. Pattern Recogn Lett 24(9–10):1627–1639

Bai X, Wang B, Wang X, Liu W, Tu Z (2010) Co-transduction for shape retrieval. ECCV 3:328–341

Belongie S, Malik J, Puzicha J (2002) Shape matching and object recognition using shape contexts. PAMI 24(4):509–522

Brodatz P (1966) Textures: a photographic album for artists and designers. Dover, USA

Coppersmith D, Fleischer LK, Rudra A (2010) Ordering by weighted number of wins gives a good ranking for weighted tournaments. ACM Trans Algorithms 6:55:1–55:13

Croft WB (2002) Combining approaches to information retrieval. In: Croft WB (ed) Advances in information retrieval. The information retrieval, vol 7. Springer, USA, pp 1–36

da S Torres R, Falcão AX (2006) Content-based image retrieval: theory and applications. Revista de Informática Teórica e Aplicada 13(2):161–185

da Torres RS, Falcão AX (2007) Contour salience descriptors for effective image retrieval and analysis. Image Vis Comput 25(1):3–13

Dai HJ, Lai PT, Tsai RTH, Hsu WL (2010) Global ranking via data fusion. In: Proceedings of the 23rd international conference on computational linguistics: posters, COLING’10, pp 223–231

Datta R, Joshi D, Li J, Wang JZ (2008) Image retrieval: ideas, influences, and trends of the new age. ACM Comput Surv 40:5:1–5:60

Diaz F (2005) Regularizing ad hoc retrieval scores. In: CIKM’05, pp 672–679

Dwork C, Kumar R, Naor M, Sivakumar D (2001) Rank aggregation methods for the web. In: Proceedings of the 10th international conference on World Wide Web. ACM, New York, WWW’01, pp 613–622. doi:10.1145/371920.372165

Fagin R, Kumar R, Mahdian M, Sivakumar D, Vee E (2004) Comparing and aggregating rankings with ties. In: Proceedings of the twenty-third ACM SIGMOD-SIGACT-SIGART symposium on Principles of database systems, PODS’04, pp 47–58

Faria FF, Veloso A, Almeida HM, Valle E, da S Torres R, Gonçalves MA, Meira W Jr (2010) Learning to rank for content-based image retrieval. In: MIR’10, pp 285–294

Fox EA, Shaw JA (1994) Combination of multiple searches. In: The second text retrieval conference (TREC-2), NIST, NIST Special Publication, vol 500–215, pp 243–252

Gopalan R, Turaga P, Chellappa R (2010) Articulation-invariant representation of non-planar shapes. In: Proceedings of the 11th European conference on computer vision: part III, ECCV’10, pp 286–299

Huang J, Kumar SR, Mitra M, Zhu WJ, Zabih R (1997) Image indexing using color correlograms. In: CVPR’97, p 762

Jégou H, Harzallah H, Schmid C (2007) A contextual dissimilarity measure for accurate and efficient image search. In: CVPR, pp 1–8

Ji S, Zhou K, Liao C, Zheng Z, Xue GR, Chapelle O, Sun G, Zha H (2009) Global ranking by exploiting user clicks. In: SIGIR’09, pp 35–42

Kontschieder P, Donoser M, Bischof H (2009) Beyond pairwise shape similarity analysis. ACCV’09, pp 655–666

Kovalev V, Volmer S (1998) Color co-occurrence descriptors for querying-by-example. In: MMM’98, p 32

Latecki LJ, Lakämper R, Eckhardt U (2000) Shape descriptors for non-rigid shapes with a single closed contour. In: CVPR, pp 424–429

Ling H, Jacobs DW (2007) Shape classification using the inner-distance. PAMI 29(2):286–299. doi:10.1109/TPAMI.2007.41

Ling H, Yang X, Latecki LJ (2010) Balancing deformability and discriminability for shape matching. ECCV 3:411–424

Liu YT, Liu TY, Qin T, Ma ZM, Li H (2007) Supervised rank aggregation. In: WWW 2007, pp 481–490

Ojala T, Pietikäinen M, Mäenpää T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. PAMI 24(7):971–987

Park G, Baek Y, Lee HK (2005) Re-ranking algorithm using post-retrieval clustering for content-based image retrieval. Inf Process Manag 41(2):177–194

Pedronette DCG, da S Torres R (2010) Exploiting contextual information for image re-ranking. CIARP 1:541–548

Pedronette DCG, da S Torres R (2010) Shape retrieval using contour features and distance optimization. In: VISAPP, vol 1, pp 197–202

Pedronette DCG, da S Torres R (2011) Exploiting clustering approaches for image re-ranking. J Vis Languages Comput 22(6):453–466

Pedronette DCG, da S Torres R (2011) Exploiting contextual information for rank aggregation. ICIP, pp 97–100

Perronnin F, Liu Y, Renders JM (2009) A family of contextual measures of similarity between distributions with application to image retrieval. CVPR, pp 2358–2365

Qin T, Liu TY, Zhang XD, Wang DS, Li H (2008) Global ranking using continuous conditional random fields. In: NIPS, pp 1281–1288

Schalekamp F, Zuylen A (1998) Rank aggregation: together we’re strong. In: Proceedings of the 11th ALENEX, pp 38–51

Schwander O, Nielsen F (2010) Reranking with contextual dissimilarity measures from representational Bregman k-means. In: VISAPP, vol 1, pp 118–122

Sculley D (2007) Rank aggregation for similar items. In: SDM

Stehling RO, Nascimento MA, Falcão AX (2002) A compact and efficient image retrieval approach based on border/interior pixel classification. In: CIKM’02, pp 102–109

Swain MJ, Ballard DH (1991) Color indexing. IJCV 7(1):11–32

Tao B, Dickinson BW (2000) Texture recognition and image retrieval using gradient indexing. JVCIR 11(3):327–342

Temlyakov A, Munsell BC, Waggoner JW, Wang S (2010) Two perceptually motivated strategies for shape classification. CVPR 1:2289–2296

Tu Z, Yuille AL (2004) Shape matching and recognition-using generative models and informative features. ECCV, pp 195–209

van de Weijer J, Schmid C (2006) Coloring local feature extraction. In: ECCV, vol Part II. Springer, Berlin, pp 334–348

Wang J, Li Y, Bai X, Zhang Y, Wang C, Tang N (2011) Learning context-sensitive similarity by shortest path propagation. Pattern Recogn 44:2367–2374

Yang L, Ji D, Zhou G, Nie Y, Xiao G (2006) Document re-ranking using cluster validation and label propagation. In: CIKM’06, pp 690–697

Yang X, Koknar-Tezel S, Latecki LJ (2009) Locally constrained diffusion process on locally densified distance spaces with applications to shape retrieval. In: CVPR, pp 357–364

Yang X, Latecki LJ (2011) Affinity learning on a tensor product graph with applications to shape and image retrieval. In: IEEE conference on computer vision and pattern recognition (CVPR), pp 2369–2376

Yang X, Bai X, Latecki LJ, Tu Z (2008) Improving shape retrieval by learning graph transduction. ECCV 5305:788–801

Zhao D, Lin Z, Tang X (2007) Contextual distance for data perception. In: ICCV

Zhu X (2005) Semi-supervised learning with graphs. PhD thesis, Carnegie Mellon University, Pittsburgh, PA, USA

Acknowledgments

Authors thank FAEPEX, CAPES, FAPESP, CNPq, and AMD for financial support. Authors also thank DGA-UNICAMP for its support in this work.

Cite this article

Pedronette, D.C.G., Torres, R.d.S. Exploiting contextual information for image re-ranking and rank aggregation. Int J Multimed Info Retr 1, 115–128 (2012). https://doi.org/10.1007/s13735-012-0002-8

DOI: https://doi.org/10.1007/s13735-012-0002-8