Abstract

Purpose of Review

Adequate nutrition knowledge may influence the dietary behaviour, performance, and health of athletes. Assessment of the nutrition knowledge of athletes can inform practice and provide a quantitative way to evaluate education interventions. This article aims to review nutrition knowledge questionnaires published in the last 5 years to identify advances, possible improvements in questionnaire development and design, and challenges that remain.

Recent Findings

Twelve new or modified questionnaires were identified. All had undergone validity and reliability testing. Advancements included quantitative measures of content validity and Rasch analysis. Online questionnaires were common, with at least seven using this format. Other advances included the use of images (n = 2), automated scored feedback (n = 1), and applied questions.

Summary

While advancements have been made in validation and reliability testing and electronic delivery, new questionnaires would benefit from interactive and attractive features including images, provision of electronic feedback, and applied questions.



Introduction

In sport, nutrition and performance are inextricably linked [1]. Dietary intake supplies the energy and nutrients necessary to meet training demands, to allow tissues to adapt, repair, and grow, and to promote immune function. During competition, emphasis is placed on nutrition strategies that delay or prevent performance decrements related to fatigue or dehydration [2]. Planned nutrition strategies have been shown to improve performance compared with ad libitum strategies [3, 4].

Hunger, taste, cost, and convenience are important determinants of food selection; however, an athlete’s choices are more complex because they must also consider performance expectations, effects on physique, stage of training, and proximity to competition [5]. Given the range of factors that influence food selection, knowledge of nutrition is necessary to help inform food choices. Without knowledge of the benefits of certain foods and nutrients, or of the potential individual benefits of consuming them, athletes are unable to make considered decisions about including them in their diet [6].

Nutrition knowledge and dietary quality have been shown to be positively associated in both the general population and athletes [7, 8], although this relationship has been difficult to quantify due to the limited availability of validated instruments for this purpose [9]. Several recent studies have reported improvements in nutrition knowledge and dietary intake after educational interventions across a variety of sports and athlete types [10–13], which further suggests that improvements in nutrition knowledge are linked to improvements in dietary intake. However, to give meaning to these results, knowledge must be assessed and quantified accurately.

Sports nutritionists (SN) and other practitioners working with athletes, such as coaches and trainers, often act as educators. Nutrition knowledge assessment provides a means to measure progress over time, to quantify the effectiveness of the education provided [14, 15], and to pitch nutrition education at an appropriate level, especially in group settings. SN, who often work with large numbers of athletes in teams or at institutions [16], may benefit from using knowledge assessment tools to help prioritise athletes who require more urgent intervention.

Several reviews investigating nutrition knowledge in athletes have repeatedly identified the need for valid and reliable nutrition knowledge assessment tools for athletes [7, 17]. A 2016 review found that tools used to measure nutrition knowledge in athletes were inadequately validated, making it difficult to ascertain athletes’ nutrition knowledge [18]. This manuscript aims to summarise nutrition knowledge questionnaires published in the last 5 years to identify advances, areas where questionnaire development can still be improved, and challenges that remain in this research space.

Search Strategy and Results

A search across two databases (PubMed and Web of Science) was conducted using terms including (“nutrition knowledge” OR “nutrition assessment” OR “knowledge assessment”) AND (“athlet*” OR “sport*”) AND (“questionnaire” OR “tool” OR “instrument”). Results were limited to the last 5 years (2016 onwards). To be included, articles had to describe the development of a nutrition knowledge questionnaire for any athlete population from any country and be published in English. Abstracts, theses, and reviews were excluded. Where possible, a copy of the questionnaire was retrieved.

Twelve questionnaires were identified through the search. Information describing each questionnaire, including population, athlete type, delivery platform, assessment areas, questionnaire length, question types, scoring, and validity and reliability testing, was extracted in duplicate by two authors (R.T., K.B.). The Nutrition for Sport Knowledge Questionnaire (NSKQ) [19] and its abridged version (A-NSKQ) [20], both by Trakman et al., were treated as separate questionnaires for the purpose of this review.

Results

A summary of the twelve questionnaires included in this review is provided in Table 1. Questionnaires have been developed by researchers from around the world (Australia, n = 3 [19–21]; UK, n = 2 [22, 23]; Turkey, n = 2 [24, 25]; Italy, n = 2 [26, 27]; and Spain [28•], Finland [29], and the USA [30], n = 1 each), with the majority targeting all athlete types (n = 8). The remaining questionnaires specifically targeted endurance [29], ultra-endurance [22], sports team [28•], and track and field athletes [23]. One questionnaire was aimed specifically at early adolescents [27], and two targeted adolescents/youths as well as adults [26, 28•].

Most of the questionnaires were newly developed (n = 8), with the remainder being modifications of previously validated instruments: one was a shortened version of the original [20], two were adapted for Turkish audiences [24, 25], and one was altered to suit ultra-endurance athletes [22].

Seven studies described distribution of the questionnaire as online, electronic, or via an email link [19–22, 28•, 29, 30]; however, further details were rarely provided. Qualtrics™ (Provo, UT, USA) and FileMaker Pro™ (Cupertino, CA, USA) were the platforms used for three questionnaires [19, 21, 30].

Validity and Reliability Measures of Questionnaires

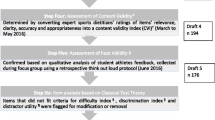

For the newly developed questionnaires (n = 8), an expert panel was always used to establish face validity (does the questionnaire appear to measure what it claims to?) and/or content validity (is the questionnaire representative of the domain being assessed?) [19, 21, 23, 26, 27, 28•, 29, 30]. The PEAKS-NQ differed in that focus groups with SN from elite sporting institutions were conducted to inform item generation, resulting in high consensus and no item deletions during refinement via a modified Delphi process [31]. These experts also identified other desirable features for nutrition knowledge questionnaires: a modular approach allowing selection of the most relevant assessment areas, a rotating question bank, visual appeal (e.g. images), immediate feedback, and electronic deployment.

Although the use of an expert panel is considered appropriate for establishing content validity [32, 33••], the expert groups used in the literature are diverse, including SN with differing levels of experience [23], experts from multiple career backgrounds [28•], and psychologists and paediatricians [26]. These experts’ training in sports nutrition was not described. In most questionnaires, content validity was established qualitatively; however, three recent instruments also included quantitative measures [19, 21, 28•]. The content validity index (CVI) was applied differently in each study: for the NSKQ, an expert panel of nutritionists rated each item [19]; for the NUKYA [28•], CVI was assessed for both individual items (I-CVI) and the whole scale (S-CVI); and for the PEAKS-NQ [21], each section was rated by SN and the ratings averaged to establish an overall S-CVI.

Construct validity, which refers to how well a questionnaire measures the construct it is intended to measure [34, 35], was most often supported using known-groups validity, also referred to as discriminative validity or validation by extreme groups [32, 36]. Most studies recruited a “high knowledge” group, such as practising nutritionists or nutrition students, and a comparison group expected to have lower nutrition knowledge, such as athletes or university students with no nutrition training. Demonstrating that a questionnaire can distinguish between differing levels of knowledge supports construct validity [32, 33••]. All questionnaires utilised this method except that of Okta and Yildiz [24], who translated and modified a questionnaire that had previously been validated using this method [37]. The A-NSKQ did not repeat known-groups testing after its initial development because it was developed from previously collected data [20].
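To illustrate the logic of known-groups testing, the following minimal Python sketch compares total scores from a group expected to have high knowledge with a group expected to have lower knowledge, using a one-sided permutation test. The scores are invented, and the permutation test is an assumption chosen because it needs only the standard library; the reviewed studies may have used other statistical tests.

```python
import random


def known_groups_test(high, low, n_perm=10_000, seed=1):
    """One-sided permutation test: does the 'high knowledge' group score higher?

    Returns (observed mean difference, permutation p-value).
    """
    def mean(xs):
        return sum(xs) / len(xs)

    observed = mean(high) - mean(low)
    pooled = list(high) + list(low)
    n_high = len(high)
    rng = random.Random(seed)  # fixed seed makes the result reproducible
    at_least_as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign the group labels
        diff = mean(pooled[:n_high]) - mean(pooled[n_high:])
        if diff >= observed:
            at_least_as_extreme += 1
    # add-one correction so the estimated p-value is never exactly zero
    p_value = (at_least_as_extreme + 1) / (n_perm + 1)
    return observed, p_value


# Hypothetical total scores (out of 50), for illustration only
nutritionists = [44, 41, 46, 39, 43, 45, 40, 42]
athletes = [29, 33, 27, 31, 35, 30, 28, 32]
diff, p = known_groups_test(nutritionists, athletes)
print(f"mean difference = {diff:.2f}, p = {p:.4f}")
```

A small p-value indicates that the higher-knowledge group scored higher than would be expected if group membership were irrelevant, which is the pattern known-groups validity looks for.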

Most questionnaires (n = 11) used a test–retest method to establish reliability [19–23, 25–27, 28•, 29, 30], with the interval between administrations ranging from 10 days [19] to 5 weeks [29]. Cronbach’s α was most often used to measure the internal consistency of the instrument [21, 23, 24, 26, 27, 28•, 29, 30], followed by the Kuder–Richardson Formula 20 (KR-20) [19, 24, 25].
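The internal consistency and test–retest statistics named above can be computed with a few lines of standard-library Python. The sketch below assumes each respondent’s answers are coded 0/1 per item and uses population variances throughout, under which Cronbach’s α and KR-20 give the same value for dichotomous items. For test–retest, it computes ICC(2,1) (two-way random effects, absolute agreement, single measure), which is one common choice rather than necessarily the statistic used by the reviewed studies; all data are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha; responses is a list of respondents, each a list of item scores."""
    k = len(responses[0])
    item_vars = [pvariance([r[i] for r in responses]) for i in range(k)]
    total_var = pvariance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def kr20(responses):
    """Kuder-Richardson Formula 20 for 0/1 items: each item variance is p * (1 - p)."""
    n, k = len(responses), len(responses[0])
    pq = 0.0
    for i in range(k):
        p = sum(r[i] for r in responses) / n  # proportion answering item i correctly
        pq += p * (1 - p)
    total_var = pvariance([sum(r) for r in responses])
    return k / (k - 1) * (1 - pq / total_var)


def icc_2_1(scores):
    """ICC(2,1) from a two-way ANOVA; scores is a list of respondents,
    each [score at session 1, score at session 2, ...]."""
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(r) / k for r in scores]
    col_means = [sum(r[j] for r in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between respondents
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions
    ss_total = sum((x - grand) ** 2 for r in scores for x in r)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical 0/1 responses: 6 respondents x 5 items
responses = [
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
print(f"alpha = {cronbach_alpha(responses):.3f}, KR-20 = {kr20(responses):.3f}")

# Hypothetical total scores at two administrations: [test, retest]
retest = [[38, 40], [25, 27], [44, 43], [31, 30], [36, 39], [28, 29]]
print(f"ICC(2,1) = {icc_2_1(retest):.3f}")
```

Because ICC(2,1) measures absolute agreement, a systematic shift between sessions (for example, everyone scoring higher at retest) lowers it, whereas a simple correlation would not detect that shift.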

Rasch analysis was used to support the psychometric properties of four instruments [19–21, 28•]; however, the way the analysis was conducted and the statistics reported were inconsistent between studies. Rasch analysis is well suited to questionnaire development: it can be used to evaluate construct validity, to refine test items by identifying non-discriminating items and questions that do not perform as expected, and to assess reliability [38, 39, 40•].

Other methods of refining questionnaires included a difficulty index to identify items that were too easy or too hard and/or a discrimination index to assess how well items distinguish between high- and low-performing respondents [19, 24, 26, 27, 28•, 29, 30]. One study used a “think out loud” protocol to collect qualitative feedback on the questionnaire from student athletes [19], and another assessed readability using the Gunning Fog Index and the Flesch–Kincaid Grade Level [30].
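The difficulty and discrimination indices described above can be sketched as follows. This assumes 0/1 item scoring and uses the common upper/lower-group form of the discrimination index (top and bottom 27% of respondents by total score); the 27% split, the flagging cut-offs, and the response data are illustrative assumptions, not values reported by the reviewed studies.

```python
def difficulty_index(item_scores):
    """Proportion of respondents answering the item correctly (0 to 1)."""
    return sum(item_scores) / len(item_scores)


def discrimination_index(responses, item, fraction=0.27):
    """D = p(upper) - p(lower), where the groups are the top and bottom
    `fraction` of respondents ranked by total score (ties keep input order)."""
    ranked = sorted(responses, key=sum, reverse=True)
    g = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:g], ranked[-g:]
    p_upper = sum(r[item] for r in upper) / g
    p_lower = sum(r[item] for r in lower) / g
    return p_upper - p_lower


def flag_item(p, d, p_range=(0.2, 0.9), min_d=0.2):
    """Illustrative cut-offs only: flag items that are too hard, too easy,
    or poorly discriminating."""
    flags = []
    if p < p_range[0]:
        flags.append("too hard")
    if p > p_range[1]:
        flags.append("too easy")
    if d < min_d:
        flags.append("poor discrimination")
    return flags


# Hypothetical 0/1 responses: 10 respondents x 4 items
responses = [
    [1, 1, 1, 0], [1, 1, 1, 1], [1, 1, 0, 0], [1, 0, 1, 0], [1, 1, 0, 1],
    [1, 0, 0, 0], [1, 1, 0, 0], [1, 0, 0, 1], [0, 0, 0, 0], [1, 0, 0, 0],
]
for i in range(len(responses[0])):
    p = difficulty_index([r[i] for r in responses])
    d = discrimination_index(responses, i)
    print(f"item {i}: p = {p:.2f}, D = {d:.2f}, flags = {flag_item(p, d)}")
```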

All questionnaires focussed on both general and sports nutrition knowledge, with the exception of one that focussed on sports nutrition knowledge only [30]. Nutrients (including macronutrients and micronutrients) and fluid/hydration were assessed in all questionnaires. Other frequently assessed topics included recovery nutrition (n = 5) [22, 24–26, 29], weight management (n = 7) [19–22, 25, 29, 30], and supplements (n = 9) [19–22, 24–27, 29]. Few studies commented on question type or the type of knowledge tested, e.g. factual (declarative) or procedural (“how to”) [41]. Procedural knowledge has been identified as important for demonstrating understanding of nutritional concepts [31].

The number of questions varied (range 26–89; mean 59 ± 18 items), and the true number of items could be greater, as some questions contained multiple sub-questions. For example, “The carbohydrate content of these foods is:” was reported as one question but contained six sub-questions [26]. Completion time, reported in five studies [19–21, 23, 28•], ranged from 12 to 25 min. Many questionnaires predominantly used dichotomous answers (true/false, high/low, agree/disagree), most with an “unsure” option [19, 23, 24, 26, 27, 30]. Half the instruments included multiple choice questions (MCQs) [19–21, 23, 27, 28•]. Scoring was reported for nine questionnaires [19–22, 25–27, 28•, 30], the majority awarding +1 for each correct answer and 0 for incorrect or “I don’t know” answers; two studies reported negative scoring for incorrect answers [28•, 30]. The PEAKS-NQ was the only questionnaire to report automated scoring and feedback on completion [21]. This feedback displayed scores and identified strengths and weaknesses across knowledge domains; these features were reported after the questionnaire’s validation [42•].
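As a minimal sketch of the scoring rules described above, the function below awards +1 for each correct answer and 0 for “unsure” or unanswered items, with an optional penalty for incorrect answers. The answer key, item identifiers, and penalty value are hypothetical; the two studies that used negative scoring may have applied different penalties.

```python
def score(answers, key, penalty=0.0, unsure="unsure"):
    """Total score: +1 per correct answer, 0 for 'unsure' or unanswered
    items, and -penalty per incorrect answer (penalty=0 gives 0/1 scoring)."""
    total = 0.0
    for item, correct_answer in key.items():
        given = answers.get(item)
        if given is None or given == unsure:
            continue  # unanswered or "unsure" earns 0
        total += 1 if given == correct_answer else -penalty
    return total


# Hypothetical answer key and one athlete's responses
key = {"q1": "true", "q2": "false", "q3": "b", "q4": "a"}
answers = {"q1": "true", "q2": "true", "q3": "unsure", "q4": "a"}
print(score(answers, key))               # 2.0 with 0/1 scoring
print(score(answers, key, penalty=1.0))  # 1.0 with hypothetical negative marking
```

Keeping the penalty as a parameter makes it easy to report both scoring schemes from the same responses when comparing with published norms.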

The use of images was mentioned in two studies [19, 21]: the NSKQ included pictures to reduce participant fatigue, and the PEAKS-NQ included visual aids to improve respondent comprehension.

Overview of Current Questionnaire Design and Suggestions for Future Developments

In the last 5 years, advances have occurred in the way athlete nutrition knowledge is assessed. More extensive validity and reliability testing, and refinement of questionnaires via more diverse and sensitive techniques, appear to have helped address problems identified in previous systematic reviews [7, 17, 18]. Remaining areas for improvement in sports nutrition questionnaire development include focussing on “how to” knowledge questions, reducing reliance on dichotomous items, minimising ambiguous questions, incorporating electronic features, and using consistent methodology when translating and/or modifying questionnaires.

Testing of Validity and Reliability

A review of studies published before 2016 identified limited testing of validity and reliability; in contrast, most newly developed questionnaires in the present review utilised at least four techniques: an expert review panel for content validity, comparison of groups expected to perform differently to support construct validity, test–retest to assess questionnaire stability, and assessment of internal consistency. Tools modified from previously validated instruments underwent less testing prior to use [20, 22, 24, 25].

Most authors consulted a panel of experts to establish content validity. This could be problematic because what defines an expert is contextual: a wide range of experts was used in validating different questionnaires, including SN with varying levels of experience and other health professionals who may have limited nutrition training [23, 24, 28•, 29]. Focus groups offer an alternative. Trakman and colleagues [19] used focus groups to assess the clarity of the NSKQ after the item pool was generated, whereas the authors of the PEAKS-NQ used focus groups with SN to inform the initial item pool [31]. Focus groups can help generate a representative item pool because participants stimulate each other’s thinking, providing more diverse perspectives that can uncover researchers’ “blind spots” and reduce researcher bias [43–45]. This method remains underutilised in this research area.

The use of quantitative methods to measure content validity has become more common. The content validity index (CVI), whether for the whole scale or for individual items, was assessed in three questionnaires, each in a slightly different manner [19, 21, 28•]. It may be too early to establish a standard practice for how CVI should be assessed; however, those developing new questionnaires should include this technique to complement methods that use an expert panel. Considerations for how CVI can be implemented have been described previously [33••, 46, 47].
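One widely described way to compute CVI asks each expert to rate every item’s relevance on a 4-point scale; the I-CVI is the proportion of experts rating an item 3 or 4, and the S-CVI can be summarised either as the mean of the I-CVIs (S-CVI/Ave) or as the proportion of items rated relevant by every expert (S-CVI/UA). The Python sketch below assumes this convention and uses invented ratings and an illustrative cut-off; the reviewed studies each applied CVI somewhat differently, so this is one variant rather than their method.

```python
def item_cvi(ratings):
    """I-CVI: share of experts rating the item 3 or 4 on a 4-point
    relevance scale (1 = not relevant ... 4 = highly relevant)."""
    return sum(1 for r in ratings if r >= 3) / len(ratings)


def scale_cvi(item_ratings):
    """Return (S-CVI/Ave, S-CVI/UA) for a dict: item -> list of expert ratings."""
    i_cvis = [item_cvi(r) for r in item_ratings.values()]
    s_cvi_ave = sum(i_cvis) / len(i_cvis)                      # mean I-CVI
    s_cvi_ua = sum(1 for v in i_cvis if v == 1.0) / len(i_cvis)  # universal agreement
    return s_cvi_ave, s_cvi_ua


# Hypothetical ratings from five experts for four items
ratings = {
    "q1": [4, 4, 3, 4, 4],
    "q2": [4, 3, 2, 4, 3],
    "q3": [2, 2, 3, 1, 2],
    "q4": [3, 4, 4, 3, 4],
}
CUT_OFF = 0.78  # illustrative threshold for revising or dropping an item
for item, r in ratings.items():
    value = item_cvi(r)
    print(f"{item}: I-CVI = {value:.2f}{'  (review)' if value < CUT_OFF else ''}")
s_ave, s_ua = scale_cvi(ratings)
print(f"S-CVI/Ave = {s_ave:.2f}, S-CVI/UA = {s_ua:.2f}")
```

Reporting both scale-level summaries makes it clearer how strict the agreement criterion was, which is one source of the variation between studies noted above.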

Another notable advance has been the inclusion of Rasch analysis, a form of item response theory (IRT), in four recent questionnaires. IRT techniques consider the difficulty of individual questions in relation to person ability [44]. Evaluation of the psychometric properties of a questionnaire has traditionally been conducted via classical test theory (CTT) techniques, which analyse whole scales based on total score, so that a respondent’s ability is determined by overall performance [48]. Because knowledge is a non-linear construct, relying on CTT techniques can be problematic: they do not provide insight into how individuals perform on each item in relation to their ability [49]. Rasch modelling presumes that more difficult items are less likely to be answered correctly, and easier items more likely; therefore, a respondent’s performance can be predicted from item difficulty and person ability [38]. From a questionnaire development perspective, Rasch analysis has been used to support construct validity, to rank questions by difficulty and fit in order to identify potentially problematic or non-discriminating items, and to assess reliability via person and item separation reliability scores [19–21, 28•]. These reliability scores are similar to Cronbach’s α but have the advantage of accounting for the non-linear nature of the data [40•]. The four studies conducted different Rasch analyses, which can be attributed to the different computer programs that perform this analysis [33••]. Given its advantages over traditional techniques, developers of nutrition knowledge questionnaires should consider using a combination of IRT and CTT techniques when testing validity.
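The core of the dichotomous Rasch model is a single equation linking person ability θ and item difficulty b (both in logits) to the probability of a correct answer, P = 1 / (1 + e^−(θ − b)). The sketch below implements that equation and a crude starting estimate of item difficulties (the centred log-odds of an incorrect answer). This is an illustration under simplifying assumptions, not a full Rasch calibration: dedicated software refines such estimates iteratively (e.g. by joint or conditional maximum likelihood) and reports fit and separation statistics. The response data are hypothetical.

```python
import math


def rasch_probability(theta, b):
    """Dichotomous Rasch model: P(correct) for a person of ability theta
    answering an item of difficulty b, both in logits."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def initial_item_difficulties(responses):
    """Crude starting estimates of item difficulty (logits): the log-odds of
    an incorrect answer, centred so that mean difficulty is 0.
    Not a full calibration; Rasch software refines these iteratively."""
    n, k = len(responses), len(responses[0])
    raw = []
    for i in range(k):
        correct = sum(r[i] for r in responses)
        if correct in (0, n):
            # items everyone got right (or wrong) have no finite estimate
            raise ValueError(f"item {i} has an extreme score")
        raw.append(math.log((n - correct) / correct))
    centre = sum(raw) / k
    return [d - centre for d in raw]


# Hypothetical 0/1 responses: 6 respondents x 5 items
responses = [
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 0, 0, 0],
    [1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
for i, b in enumerate(initial_item_difficulties(responses)):
    print(f"item {i}: difficulty {b:+.2f} logits, "
          f"P(correct | theta = 0) = {rasch_probability(0.0, b):.2f}")
```

When ability equals difficulty the predicted probability is exactly 0.5, and it falls as items get harder, which is the ordering assumption described above.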

Beyond developments in validity and reliability testing, several opportunities remain to improve knowledge assessment in athletes. These include changes to the types of questions asked and the use of images, electronic features, and modular question banks.

A Focus on Assessing Practical (“How to”) Nutrition Knowledge

For most questionnaires, it was unclear whether they focussed on factual (declarative) or procedural (practical/“how to”) knowledge [41]. The ability to apply nutrition principles (procedural knowledge) is not possible without factual knowledge [41]; however, a focus on facts alone (e.g. questions such as “what percentage of your diet should be made up from carbohydrate?”) [23] may limit the usefulness of the information obtained [19]. That is, the ability to recall facts is unlikely to truly reflect an understanding of nutrition or the ability to select foods conducive to good health and performance. Greater practical nutrition knowledge has recently been associated with higher diet quality [50]. The assessment of both factual and application-based sports nutrition knowledge should remain a focus of future questionnaires.

Reducing Reliance on Dichotomous Item Formats

Most questionnaires used dichotomous response formats with an “unsure” option. There are arguments for and against including an “unsure” option: inclusion may decrease the likelihood of guessing, whereas exclusion may mean that respondents who are not confident in their answer skip the question [32, 33••]. Where response options are limited, as in dichotomous questions, the effectiveness of the measure may be reduced, as a respondent who guesses has a 50% chance of being correct. MCQs with four to five answer options have been suggested as appropriate if there are enough plausible distractors [32, 33••]. Research comparing MCQs with multiple-answer MCQs (e.g. “Select all statements that are correct”) in a programming course suggested no differences in preference for question type, that multiple-answer MCQs potentially reduced correct guessing, and that this question format could be useful for providing formative feedback [51, 52]. Future questionnaires could consider a mix of MCQs and multiple-answer MCQs, analysis of which could provide insight into knowledge gaps and misunderstandings in the area assessed.
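The 50% figure can be made concrete with a binomial calculation. Assuming a respondent guesses every item at random with equally likely options, the sketch below gives the expected score and the probability of reaching at least 12 of 20 correct (60%) for 2-, 4-, and 5-option items; the 20-item length and 60% threshold are arbitrary choices for illustration.

```python
from math import comb


def p_score_at_least(n_items, n_options, min_correct):
    """Probability that pure guessing gives at least `min_correct` correct
    answers on `n_items` items, each with `n_options` equally likely options."""
    p = 1 / n_options
    return sum(
        comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(min_correct, n_items + 1)
    )


for options in (2, 4, 5):
    expected = 20 / options  # mean number correct from guessing alone
    prob = p_score_at_least(20, options, 12)
    print(f"{options} options: expected {expected:.1f}/20 by guessing, "
          f"P(at least 12 correct) = {prob:.4f}")
```

Under these assumptions, about one in four pure guessers would reach 60% on a true/false format, whereas the chance is far smaller with four or five plausible options.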

Reducing Ambiguous Questions

The assessment of nutrient knowledge was prevalent in the reviewed questionnaires. In some, respondents were asked to determine whether a food is “high” or “low” in a certain nutrient, or whether it is a “good source” of a nutrient [23, 24, 26]. This may be ambiguous because what constitutes a high or low content, or a good or poor source, of a nutrient is not always clear. For example, a question such as “Do these foods contain a high or low content of protein? (Beans/Pulses)” [19] can be difficult to answer: legumes contain substantially more carbohydrate than protein, so they could be considered “low” in protein compared with meat, but “high” in the context of a vegetarian/vegan diet. Providing dietary context, as well as moving away from dichotomous answers, may minimise these ambiguities.

The Use of Images to Enhance Readability and Engagement

Only two questionnaires specifically mentioned the use of pictures [19, 21]. Images, visual elements, and interactive screen design could be leveraged to decrease respondent fatigue and monotony, resulting in fewer incomplete responses and less nonresponse bias [53–55]. Only one questionnaire specifically assessed readability [30]. Images could also reduce ambiguity in interpreting questions and improve comprehension [56, 57], helping to ensure that an athlete’s knowledge score is not hindered by their level of literacy and increasing accessibility. Visuals have been used successfully in a general nutrition knowledge instrument; however, they remain largely underutilised in athlete-specific questionnaires [58]. Combining visuals with readability tests or audio narration of questions is recommended in the development of future questionnaires.
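Readability formulas such as those mentioned in this review are simple functions of word, sentence, and syllable counts. The sketch below implements the standard Flesch–Kincaid Grade Level and Gunning Fog formulas. The syllable counter is a rough vowel-group heuristic, and the “complex word” rule (three or more syllables) ignores the usual exclusions for proper nouns and common suffixes, so results are approximate; the example question is hypothetical.

```python
import re


def count_syllables(word):
    """Rough heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(1, count)


def text_counts(text):
    """Return (words, sentences, syllables, complex_words) for a passage."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    syllables = [count_syllables(w) for w in words]
    complex_words = sum(1 for s in syllables if s >= 3)
    return len(words), sentences, sum(syllables), complex_words


def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid Grade Level (approximate US school grade)."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def gunning_fog(words, sentences, complex_words):
    """Gunning Fog Index; complex_words = words of three or more syllables."""
    return 0.4 * ((words / sentences) + 100 * (complex_words / words))


question = ("Which of these foods is the best source of carbohydrate "
            "to eat before training? Choose one answer.")
w, s, syl, cx = text_counts(question)
print(f"FKGL = {flesch_kincaid_grade(w, s, syl):.1f}, "
      f"Fog = {gunning_fog(w, s, cx):.1f}")
```

Because the formulas reward short sentences and short words, they can flag items worth simplifying, but they say nothing about whether an accompanying image is clear.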

Greater Incorporation of Electronic Features

A key benefit of athlete nutrition knowledge assessment is the identification of strengths and potential gaps in understanding. Several studies reported that questionnaires were deployed electronically, which presents an opportunity to return timely, automated, and personalised feedback to respondents; however, to date, only one questionnaire has implemented this [42•]. When offered personalised feedback, or something else tangible or meaningful, respondents may answer more truthfully or be more likely to complete the questionnaire [59, 60]. The benefits of feedback in improving learning outcomes are well established [61], and for athletes, feedback can provide a catalyst for self-learning and/or an opportunity to engage with a nutrition professional for assistance [62]. Studies in non-athletes have demonstrated the effectiveness of computer-generated, scored, and personalised feedback in improving diet and lifestyle factors [63, 64]. Feedback also benefits SN in athlete education by providing insight into the effectiveness of, and potential gaps in, their education.
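The domain-level feedback discussed above can be sketched in a few lines. This assumes each item is tagged with a knowledge domain and scored correct or incorrect; the domain names, item identifiers, and the 75%/50% thresholds for labelling strengths and weaknesses are hypothetical, not those used by the PEAKS-NQ.

```python
def domain_feedback(item_results, item_domains, strong=0.75, weak=0.5):
    """Summarise percentage correct per knowledge domain and label each one.
    item_results: item -> True/False; item_domains: item -> domain name.
    The strong/weak thresholds are illustrative only."""
    totals, correct = {}, {}
    for item, is_correct in item_results.items():
        domain = item_domains[item]
        totals[domain] = totals.get(domain, 0) + 1
        correct[domain] = correct.get(domain, 0) + int(is_correct)
    report = {}
    for domain, n_items in totals.items():
        share = correct[domain] / n_items
        if share >= strong:
            label = "strength"
        elif share < weak:
            label = "area to work on"
        else:
            label = "developing"
        report[domain] = (round(100 * share), label)
    return report


# Hypothetical item-to-domain mapping and one athlete's results
domains = {"q1": "hydration", "q2": "hydration", "q7": "hydration", "q8": "hydration",
           "q3": "recovery", "q4": "recovery", "q5": "supplements", "q6": "supplements"}
results = {"q1": True, "q2": True, "q7": True, "q8": False,
           "q3": True, "q4": False, "q5": False, "q6": False}
for domain, (pct, label) in domain_feedback(results, domains).items():
    print(f"{domain}: {pct}% ({label})")
```

The same summary could be aggregated across a squad to show a practitioner which domains most athletes find difficult.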

Assessment by Modules

Electronic platforms may make it feasible to offer a “modular” approach to nutrition knowledge testing, a feature that practitioners have reported they would like to see in questionnaires [31]. Using this approach, practitioners or researchers administering a questionnaire could select the topics most relevant to the needs of the athlete. For example, a module on competition nutrition may be more relevant leading into a season, whereas a supplements module may not be necessary for a younger group of athletes. By developing modules, a questionnaire could be expanded to assess specific knowledge areas or sport types, such as nutrition for travel or for endurance athletes. Establishing the validity and reliability of individual modules would allow each set of questions to be used as a standalone assessment. Further psychometric testing should be conducted to assess whether modules deployed as a series to form a questionnaire remain valid and reliable.
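A modular questionnaire can be represented simply as a bank of independently scored modules from which the administrator selects. The sketch below is a hypothetical illustration: the module names and item identifiers are invented, and each module is scored separately so that it could be reported as a standalone assessment.

```python
# Hypothetical module bank: module name -> item identifiers
MODULES = {
    "everyday_nutrition": ["en1", "en2", "en3"],
    "competition_nutrition": ["cn1", "cn2"],
    "supplements": ["su1", "su2", "su3"],
    "travel": ["tr1", "tr2"],
}


def build_questionnaire(selected):
    """Assemble (module, item) pairs from the selected modules, in order."""
    unknown = [m for m in selected if m not in MODULES]
    if unknown:
        raise KeyError(f"unknown modules: {unknown}")
    return [(m, item) for m in selected for item in MODULES[m]]


def module_scores(questionnaire, correct_items):
    """Score each module separately as (number correct, number of items)."""
    scores = {}
    for module, item in questionnaire:
        right, total = scores.get(module, (0, 0))
        scores[module] = (right + (item in correct_items), total + 1)
    return scores


# e.g. a younger squad heading into a season: no supplements module
questionnaire = build_questionnaire(["everyday_nutrition", "competition_nutrition"])
print(module_scores(questionnaire, correct_items={"en1", "en3", "cn2"}))
# {'everyday_nutrition': (2, 3), 'competition_nutrition': (1, 2)}
```

Keeping module scores separate mirrors the point above that each module would need its own validity and reliability evidence before being used alone.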

The development of a question bank that provides a rotating or randomly generated set of psychometrically tested items assessing the same construct would further improve nutrition knowledge assessment, because when the same items are administered repeatedly, it is not possible to rule out respondents learning the test without truly improving their knowledge. Although test–retest reliability was used to demonstrate the stability of many questionnaires in this review, the development of a question bank would necessitate parallel-form reliability, in which the correlation between original and alternate versions of an instrument is examined [35, 65]. Item response theory techniques have been used in the validation of question banks in other fields [66, 67], which may be relevant given the increased reliance on Rasch techniques identified in this review.
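One way to build alternate forms from a calibrated item bank, sketched below under stated assumptions, is to sort items by difficulty (e.g. Rasch logits), pair adjacent items, and randomly assign one item of each pair to each form so that the forms are matched in difficulty. Parallel-form reliability is then the correlation between respondents’ scores on the two forms. The item bank, difficulties, pairing strategy, and scores are hypothetical illustrations.

```python
import random


def split_parallel_forms(bank, seed=None):
    """Split an item bank into two forms matched on difficulty.

    bank: list of (item_id, difficulty) pairs, e.g. Rasch difficulties in logits.
    Items are sorted by difficulty and taken in adjacent pairs; one item of each
    pair goes to each form at random. With an odd number of items, the hardest
    item is left out.
    """
    rng = random.Random(seed)
    ordered = sorted(bank, key=lambda item: item[1])
    form_a, form_b = [], []
    for i in range(0, len(ordered) - 1, 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)
        form_a.append(pair[0])
        form_b.append(pair[1])
    return form_a, form_b


def pearson(x, y):
    """Pearson correlation between respondents' scores on the two forms."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical bank of ten calibrated items
bank = [(f"q{i}", d) for i, d in enumerate(
    [-2.1, -1.8, -1.0, -0.9, -0.2, 0.0, 0.6, 0.7, 1.5, 1.9])]
form_a, form_b = split_parallel_forms(bank, seed=3)
print("form A:", [item for item, _ in form_a])
print("form B:", [item for item, _ in form_b])

# Hypothetical totals for the same six athletes on each form
scores_a = [4, 2, 5, 3, 1, 4]
scores_b = [3, 2, 5, 3, 1, 5]
print(f"parallel-form r = {pearson(scores_a, scores_b):.2f}")
```

Matching forms on calibrated difficulty is what allows a rotating bank to be used for repeated testing without the score shifting simply because one form happens to be easier.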

Modification of Knowledge Questionnaires

Developing a new tool for a specific population is often impractical given the time, resources, and expertise required. Existing measures are therefore likely to be modified or adapted to suit the population being examined. Where modifications have occurred, it is essential that the measure is reassessed for validity and reliability, with the extent of testing matched to the level of modification. A three-level classification system proposed by Coons et al. [68] suggests that minor modifications are those that do not change content or meaning, such as changing the font or medium of delivery; moderate modifications include splitting items or altering wording or item order; and substantial modifications include removing or changing items, their response options, or their wording. Where translation is necessary to reach diverse population groups, the process should be documented. Sousa et al. [69] have proposed a seven-step framework for the translation, adaptation, and validation of instruments: steps 1–5 may be relevant to researchers adapting questionnaires into their own language, and steps 6 and 7 describe the validation of the translated instrument. Of particular importance for nutrition-related questionnaires, food items should be culturally aligned and reflect the food supply and terminology used in the country [70]. For example, when modifying a questionnaire between US and Australian populations, terms such as prawn/shrimp and soft drink/soda should be changed as appropriate, and units converted between imperial and metric. Given the time-sensitive nature of research, an “in-depth pre-test” conducted on a small sample may be adequate to determine whether modifications were appropriate, and to collect psychometric properties such as item-scale correlations and reliability measures before large-scale deployment [71].
It has also been suggested that validity tests should match those conducted on the original measure; using different tests may still demonstrate validity but makes comparison with the original difficult [71]. That said, testing questionnaire validity beyond what was conducted on the original should not be discouraged.

Conclusion

Assessment of nutrition knowledge is an important component of providing support to athletes. Nutrition knowledge questionnaires may allow screening of large groups of athletes, both to prioritise individuals who need advice sooner and to evaluate the effectiveness of advice and education initiatives. This review identified 12 new or modified questionnaires published in the last 5 years, with advances including more sophisticated validity and reliability testing techniques and the advantageous use of electronic features. Areas needing improvement include refining question formats and using questions that assess procedural (practical application) knowledge rather than focussing solely on declarative (factual) knowledge. Future advances could include accessibility options for electronic questionnaires, images to enhance readability and engagement, a modular questionnaire structure to provide adaptability, and further development of electronic feedback.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

Thomas DT, Erdman KA, Burke LM. Position of the Academy of Nutrition and Dietetics, Dietitians of Canada, and the American College of Sports Medicine: nutrition and athletic performance. J Acad Nutr Diet. 2016;116(3):501–28. https://doi.org/10.1016/j.jand.2015.12.006.

Maughan R. The athlete’s diet: nutritional goals and dietary strategies. Proc Nutr Soc. 2002;61(1):87–96.

Hottenrott K, Hass E, Kraus M, Neumann G, Steiner M, Knechtle B. A scientific nutrition strategy improves time trial performance by ≈6% when compared with a self-chosen nutrition strategy in trained cyclists: a randomized cross-over study. Appl Physiol Nutr Metab. 2012;37(4):637–45.

Hansen EA, Emanuelsen A, Gertsen RM, Sørensen SSR. Improved marathon performance by in-race nutritional strategy intervention. Int J Sport Nutr Exerc Metab. 2014;24(6):645–55.

Birkenhead KL, Slater G. A review of factors influencing athletes’ food choices. Sports Med. 2015;45(11):1511–22. https://doi.org/10.1007/s40279-015-0372-1.

Wansink B, Westgren RE, Cheney MM. Hierarchy of nutritional knowledge that relates to the consumption of a functional food. Nutrition. 2005;21(2):264–8. https://doi.org/10.1016/j.nut.2004.06.022.

Heaney S, O’Connor H, Michael S, Gifford J, Naughton G. Nutrition knowledge in athletes: a systematic review. Int J Sport Nutr Exerc Metab. 2011;21(3):248–61.

Wardle J, Parmenter K, Waller J. Nutrition knowledge and food intake. Appetite. 2000;34(3):269–75. https://doi.org/10.1006/appe.1999.0311.

Spronk I, Kullen C, Burdon C, O’Connor H. Relationship between nutrition knowledge and dietary intake. Br J Nutr. 2014;111(10):1713–26.

Rossi FE, Landreth A, Beam S, Jones T, Norton L, Cholewa JM. The effects of a sports nutrition education intervention on nutritional status, sport nutrition knowledge, body composition, and performance during off season training in NCAA Division I baseball players. J Sports Sci Med. 2017;16(1):60–8.

Valliant MW, Pittman H, Wenzel RK, Garner BH. Nutrition education by a registered dietitian improves dietary intake and nutrition knowledge of a NCAA female volleyball team. Nutrients. 2012;4(6):506–16. https://doi.org/10.3390/nu4060506.

Philippou E, Middleton N, Pistos C, Andreou E, Petrou M. The impact of nutrition education on nutrition knowledge and adherence to the Mediterranean Diet in adolescent competitive swimmers. J Sci Med Sport. 2017;20(4):328–32. https://doi.org/10.1016/j.jsams.2016.08.023.

Nascimento M, Silva D, Ribeiro S, Nunes M, Almeida M, Mendes-Netto R. Effect of a nutritional intervention in athlete’s body composition, eating behaviour and nutritional knowledge: a comparison between adults and adolescents. Nutrients. 2016;8(9):535. https://doi.org/10.3390/nu8090535.

Wirth KR, Perkins D. Knowledge surveys: an indispensable course design and assessment tool. Innovations in the Scholarship of Teaching and Learning. 2005:1–12.

Nuhfer E, Knipp D. The knowledge survey: a tool for all reasons. To Improve the Academy. 2003;21(1):59–78.

Hull MV, Jagim AR, Oliver JM, Greenwood M, Busteed DR, Jones MT. Gender differences and access to a sports dietitian influence dietary habits of collegiate athletes. J Int Soc Sports Nutr. 2016;13:38. https://doi.org/10.1186/s12970-016-0149-4.

Tam R, Beck KL, Manore MM, Gifford J, Flood VM, O’Connor H. Effectiveness of education interventions designed to improve nutrition knowledge in athletes: a systematic review. Sports Med. 2019;49(11):1769–86. https://doi.org/10.1007/s40279-019-01157-y.

Trakman GL, Forsyth A, Devlin BL, Belski R. A systematic review of athletes’ and coaches’ nutrition knowledge and reflections on the quality of current nutrition knowledge measures. Nutrients. 2016;8(9):570. https://doi.org/10.3390/nu8090570.

Trakman GL, Forsyth A, Hoye R, Belski R. The nutrition for sport knowledge questionnaire (NSKQ): development and validation using classical test theory and Rasch analysis. J Int Soc Sports Nutr. 2017;14. https://doi.org/10.1186/s12970-017-0182-y.

Trakman GL, Forsyth A, Hoye R, Belski R. Development and validation of a brief general and sports nutrition knowledge questionnaire and assessment of athletes’ nutrition knowledge. J Int Soc Sports Nutr. 2018;15(1):17. https://doi.org/10.1186/s12970-018-0223-1.

Tam R, Beck K, Scanlan JN, Hamilton T, Prvan T, Flood V, et al. The Platform to Evaluate Athlete Knowledge of Sports Nutrition Questionnaire: a reliable and valid electronic sports nutrition knowledge questionnaire for athletes. Br J Nutr. 2020:1–11. https://doi.org/10.1017/S0007114520004286.

Blennerhassett C, McNaughton LR, Cronin L, Sparks SA. Development and implementation of a nutrition knowledge questionnaire for ultraendurance athletes. Int J Sport Nutr Exerc Metab. 2018:1–7. https://doi.org/10.1123/ijsnem.2017-0322.

Furber M, Roberts J, Roberts M. A valid and reliable nutrition knowledge questionnaire for track and field athletes. BMC Nutrition. 2017;3(1). https://doi.org/10.1186/s40795-017-0156-0.

Okta PG, Yildiz E. The validity and reliability study of the Turkish version of the General and Sport Nutrition Knowledge Questionnaire (GeSNK). Prog Nutr. 2021;23(1).

Ozener B, Karabulut E, Kocahan T, Bilgic P. Validity and reliability of the Sports Nutrition Knowledge Questionnaire for the Turkish athletes. Marmara Med J. 2021;34(1):45–50.

Calella P, Iacullo VM, Valerio G. Validation of a General and Sport Nutrition Knowledge Questionnaire in adolescents and young adults: GeSNK. Nutrients. 2017;9(5). https://doi.org/10.3390/nu9050439.

Rosi A, Ferraris C, Guglielmetti M, Meroni E, Charron M, Menta R, et al. Validation of a General and Sports Nutrition Knowledge Questionnaire in Italian early adolescents. Nutrients. 2020;12(10):3121. https://doi.org/10.3390/nu12103121.

Vázquez-Espino K, Fernández-Tena C, Lizarraga-Dallo MA, Farran-Codina A. Development and validation of a short sport nutrition knowledge questionnaire for athletes. Nutrients. 2020;12(11):3561. This is a good example of a recently developed questionnaire that has utilised the Rasch model and quantitative content validity testing.

Heikkilä M, Valve R, Lehtovirta M, Fogelholm M. Development of a nutrition knowledge questionnaire for young endurance athletes and their coaches. Scand J Med Sci Sports. 2018;28(3):873–80. https://doi.org/10.1111/sms.12987.

Karpinski CA, Dolins KR, Bachman J. Development and validation of a 49-item sports nutrition knowledge instrument (49-SNKI) for adult athletes. Top Clin Nutr. 2019;34(3):174–85. https://doi.org/10.1097/tin.0000000000000180.

Tam R, Beck KL, Gifford JA, Flood VM, O’Connor HT. Development of an electronic questionnaire to assess sports nutrition knowledge in athletes. J Am Coll Nutr. 2020;39(7):636–44. https://doi.org/10.1080/07315724.2020.1723451.

Parmenter K, Wardle J. Evaluation and design of nutrition knowledge measures. J Nutr Educ. 2000;32(5):269.

Trakman GL, Forsyth A, Hoye R, Belski R. Developing and validating a nutrition knowledge questionnaire: key methods and considerations. Public Health Nutr. 2017;20(15):2670–9. https://doi.org/10.1017/S1368980017001471. This reference reports on methods that can be used in the development of new questionnaires, and how validity and reliability can be assessed. It is valuable for informing the development of future questionnaires.

Kimberlin CL, Winterstein AG. Validity and reliability of measurement instruments used in research. Am J Health-Syst Pharm. 2008;65(23):2276–84. https://doi.org/10.2146/ajhp070364.

Heale R, Twycross A. Validity and reliability in quantitative studies. Evid Based Nurs. 2015;18(3):66–7. https://doi.org/10.1136/eb-2015-102129.

Davidson M. Known-groups validity. In: Michalos AC, editor. Encyclopedia of quality of life and well-being research. Dordrecht: Springer Netherlands; 2014. p. 3481–2.

Zinn C, Schofield G, Wall C. Development of a psychometrically valid and reliable sports nutrition knowledge questionnaire. J Sci Med Sport. 2005;8(3):346–51. https://doi.org/10.1016/S1440-2440(05)80045-3.

Boone WJ. Rasch analysis for instrument development: why, when, and how? CBE—Life Sciences Education. 2016;15(4):rm4.

Bond TG. Applying the Rasch model: fundamental measurement in the human sciences. 3rd ed. Hoboken: Taylor and Francis; 2015.

Khine MS. Rasch measurement. 1st ed. Singapore: Springer Singapore; 2020. This resource is valuable for researchers looking to better understand Rasch analysis and its utility in validity and reliability testing of questionnaires.

Worsley A. Nutrition knowledge and food consumption: can nutrition knowledge change food behaviour? Asia Pac J Clin Nutr. 2002;11 Suppl 3:S579–85. https://doi.org/10.1046/j.1440-6047.11.supp3.7.x.

Tam R, Flood VM, Beck KL, O'Connor HT, Gifford JA. Measuring the sports nutrition knowledge of elite Australian athletes using the Platform to Evaluate Athlete Knowledge of Sports Nutrition Questionnaire. Nutr Diet. 2021:1–9. https://doi.org/10.1111/1747-0080.12687. This reference is important for demonstrating the feasibility of incorporating automated feedback and scoring into a nutrition knowledge questionnaire.

Hoyt WT, Mallinckrodt B. Improving the quality of research in counseling psychology: conceptual and methodological issues. American Psychological Association; 2012.

Mallinckrodt B, Miles J, Recabarren D. Using focus groups and Rasch item response theory to improve instrument development. Couns Psychol. 2016;44(2):146. https://doi.org/10.1177/0011000015596437.

Miles J, Mallinckrodt B, Recabarren D. Reconsidering focus groups and Rasch model item response theory in instrument development. Couns Psychol. 2016;44(2):226. https://doi.org/10.1177/0011000016631121.

Almanasreh E, Moles R, Chen TF. Evaluation of methods used for estimating content validity. Res Social Adm Pharm. 2019;15(2):214–21. https://doi.org/10.1016/j.sapharm.2018.03.066.

Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health. 2007;30(4):459–67. https://doi.org/10.1002/nur.20199.

Tavakol M, Dennick R. Post-examination analysis of objective tests. Med Teach. 2011;33(6):447–58. https://doi.org/10.3109/0142159X.2011.564682.

Tavakol M, Dennick R. Psychometric evaluation of a knowledge based examination using Rasch analysis: an illustrative guide: AMEE Guide No. 72. Med Teach. 2013;35(1):e838–48. https://doi.org/10.3109/0142159X.2012.737488.

Deroover K, Bucher T, Vandelanotte C, de Vries H, Duncan MJ. Practical nutrition knowledge mediates the relationship between sociodemographic characteristics and diet quality in adults: a cross-sectional analysis. Am J Health Promot. 2019;34(1):59–62. https://doi.org/10.1177/0890117119878074.

Wong C, Denny P, Luxton-Reilly A, Whalley J. The impact of multiple choice question design on predictions of performance. ACM; 2021.

Petersen A, Craig M, Denny P. Employing multiple-answer multiple choice questions. Proceedings of the 2016 ACM Conference on Innovation and Technology in Computer Science Education; 2016.

Deutskens E, de Ruyter K, Wetzels M, Oosterveld P. Response rate and response quality of internet-based surveys: an experimental study. Mark Lett. 2004;15(1):21–36. https://doi.org/10.1023/B:MARK.0000021968.86465.00.

Vicente P, Reis E. Using questionnaire design to fight nonresponse bias in web surveys. Soc Sci Comput Rev. 2010;28(2):251–67.

Angelovska J, Mavrikiou PM. Can creative web survey questionnaire design improve the response quality? University of Amsterdam, AIAS Working Paper. 2013;131.

Naska A, Valanou E, Peppa E, Katsoulis M, Barbouni A, Trichopoulou A. Evaluation of a digital food photography atlas used as portion size measurement aid in dietary surveys in Greece. Public Health Nutr. 2016;19(13):2369–76. https://doi.org/10.1017/S1368980016000227.

Foster E, Matthews JNS, Nelson M, Harris JM, Mathers JC, Adamson AJ. Accuracy of estimates of food portion size using food photographs – the importance of using age-appropriate tools. Public Health Nutr. 2006;9(4):509–14. https://doi.org/10.1079/PHN2005872.

Franklin J, Holman C, Tam R, Gifford J, Prvan T, Stuart-Smith W, et al. Validation of the e-NutLit, an electronic tool to assess nutrition literacy. J Nutr Educ Behav. 2020;52(6):607–14. https://doi.org/10.1016/j.jneb.2019.10.008.

Bälter O, Fondell E, Bälter K. Feedback in web-based questionnaires as incentive to increase compliance in studies on lifestyle factors. Public Health Nutr. 2012;15(6):982–8.

Conrad FG, Couper MP, Tourangeau R, Galesic M, Yan T. Interactive feedback can improve the quality of responses in web surveys. Proc Surv Res Meth Sect Am Statist Ass. 2005.

Black P, Wiliam D. Assessment and classroom learning. Assessment in Education: principles, policy & practice. 1998;5(1):7–74.

Nicol DJ, Macfarlane-Dick D. Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud High Educ. 2006;31(2):199–218.

Parekh S, Vandelanotte C, King D, Boyle FM. Improving diet, physical activity and other lifestyle behaviours using computer-tailored advice in general practice: a randomised controlled trial. Int J Behav Nutr Phys Act. 2012;9:108. https://doi.org/10.1186/1479-5868-9-108.

van der Haar S, Hoevenaars FPM, van den Brink WJ, van den Broek T, Timmer M, Boorsma A, et al. Exploring the potential of personalized dietary advice for health improvement in motivated individuals with premetabolic syndrome: pretest-posttest study. JMIR Form Res. 2021;5(6):e25043. https://doi.org/10.2196/25043.

Costa AS, Fimm B, Friesen P, Soundjock H, Rottschy C, Gross T, et al. Alternate-form reliability of the Montreal cognitive assessment screening test in a clinical setting. Dement Geriatr Cogn Disord. 2012;33(6):379–84.

Roberts C, Zoanetti N, Rothnie I. Validating a multiple mini-interview question bank assessing entry-level reasoning skills in candidates for graduate-entry medicine and dentistry programmes. Med Educ. 2009;43(4):350–9. https://doi.org/10.1111/j.1365-2923.2009.03292.x.

Sung VW, Griffith JW, Rogers RG, Raker CA, Clark MA. Item bank development, calibration and validation for patient-reported outcomes in female urinary incontinence. Qual Life Res. 2016;25(7):1645–54. https://doi.org/10.1007/s11136-015-1222-1.

Coons SJ, Gwaltney CJ, Hays RD, Lundy JJ, Sloan JA, Revicki DA, et al. Recommendations on evidence needed to support measurement equivalence between electronic and paper-based patient-reported outcome (PRO) measures: ISPOR ePRO Good Research Practices Task Force report. Value Health. 2009;12(4):419–29. https://doi.org/10.1111/j.1524-4733.2008.00470.x.

Sousa VD, Rojjanasrirat W. Translation, adaptation and validation of instruments or scales for use in cross-cultural health care research: a clear and user-friendly guideline. J Eval Clin Pract. 2011;17(2):268–74. https://doi.org/10.1111/j.1365-2753.2010.01434.x.

Teufel NI. Development of culturally competent food-frequency questionnaires. Am J Clin Nutr. 1997;65(4):1173S–8S. https://doi.org/10.1093/ajcn/65.4.1173s.

Stewart AL, Thrasher AD, Goldberg J, Shea JA. A framework for understanding modifications to measures for diverse populations. J Aging Health. 2012;24(6):992–1017. https://doi.org/10.1177/0898264312440321.

Acknowledgements

The authors would like to acknowledge the contributions of Ruby Williams and Elysia Coupland (Australian Catholic University) for their assistance during data extraction.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Sports Nutrition

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tam, R., Gifford, J.A. & Beck, K.L. Recent Developments in the Assessment of Nutrition Knowledge in Athletes. Curr Nutr Rep 11, 241–252 (2022). https://doi.org/10.1007/s13668-022-00397-1

DOI: https://doi.org/10.1007/s13668-022-00397-1