Abstract

In this work the Monte Carlo method is introduced for numerical evaluation of fractional-order derivatives. A general framework for using this method is presented and illustrated by several examples. The proposed method can be used for numerical evaluation of the Grünwald-Letnikov fractional derivatives, the Riemann-Liouville fractional derivatives, and also of the Caputo fractional derivatives, when they are equivalent to the Riemann-Liouville derivatives. The proposed method can be enhanced using standard approaches for the classical Monte Carlo method, and it also allows easy parallelization, which gives it high potential for applications of fractional calculus.

1 Introduction

Surprisingly, although there is a vast literature on the Monte Carlo method for integration, to the best of our knowledge there are no works on using the Monte Carlo method for differentiation.

This paper is devoted to introducing the numerical evaluation of the Grünwald-Letnikov fractional derivatives by the Monte Carlo method.

It is well known and documented that the Grünwald-Letnikov fractional derivatives play an important role in various numerical methods and approximations of the Riemann-Liouville and Caputo fractional derivatives, based on fractional-order differences, see [11] or [1, 2, 9, 10].

In this work, however, we develop a completely different approach. After recalling the general framework and the necessary notions, we introduce a stochastic interpretation of the Grünwald-Letnikov definition of fractional-order differentiation. We then show that evaluating a fractional-order derivative at a given point can be replaced by the study of a certain stochastic process.

Then we outline the basic scheme of the proposed Monte Carlo approach and provide the algorithm for computations. A close look at the individual stages of this algorithm reveals an unexpected link between our Monte Carlo method for fractional differentiation, on the one hand, and the known finite difference methods on non-equidistant or nested grids, on the other. In particular, in both cases the function values are evaluated at nodes that are denser near the current point and sparser towards the beginning of the considered interval.

Implementing the method as a MATLAB toolbox allowed us to experiment with the functions most frequently used in applications of fractional-order modeling. These include Heaviside's unit step function, the power function, the exponential function, the trigonometric functions, and the Mittag-Leffler function. The examples demonstrate excellent agreement between the exact fractional-order derivatives of these functions and the numerical results obtained by the proposed method.

In the concluding remarks we emphasize two important aspects. First, the proposed method can be enhanced using standard approaches for improving the classical Monte Carlo method, such as variance reduction by importance sampling, stratified sampling, control variates, or antithetic sampling. Second, the proposed method is easy to parallelize, so parallel algorithms can finally be used in applications of fractional calculus.

2 Grünwald-Letnikov fractional derivatives

Let \(\alpha > 0\), and let

where \(\hat{f}\) is the Fourier transform of f. For \(f \in F_0\) define \(f^{(\alpha )} = g\) if \(g \in L_1\) and \((-i\omega )^\alpha \hat{f}(\omega ) = \hat{g}(\omega )\) for \(\omega \in \mathbb {R}\). The function \(f^{(\alpha )}\) is the Riemann-Liouville fractional derivative of f; it is uniquely determined because the Fourier transform is unique.

Similarly, let us define

where \(\check{f}(s) = \int _{0}^\infty e^{st} f(t) dt\) denotes the Laplace transform of f. For \(f \in F_0^{+}\) define \(f^{(\alpha )} = g\) if \(g \in L_1(\mathbb {R}_{+})\) and \((-s)^\alpha \check{f}(s) = \check{g}(s)\) for \(\text{ Re } (s) \le 0\).

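In order to calculate the fractional-order derivative of f, one can use the Grünwald-Letnikov fractional derivative. It is presented in [11] as a non-local operator on \(L_1(\mathbb {R})\) given by

$$\begin{aligned} D^\alpha f(t) = \lim _{h \rightarrow 0} A_h^\alpha f(t), \end{aligned}$$(2.1)

where

$$\begin{aligned} A_h^\alpha f(t) = \frac{1}{h^\alpha } \sum _{k=0}^{\infty } w_k f(t - kh), \quad h > 0, \end{aligned}$$(2.2)

and

$$\begin{aligned} w_k = (-1)^k \binom{\alpha }{k} = \frac{(-1)^k\, \alpha (\alpha -1) \cdots (\alpha -k+1)}{k!}, \quad w_0 = 1 . \end{aligned}$$(2.3)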

When (2.1) is applied to a function \(f \in F_0^{+}\), we extend f to \(L_1(\mathbb {R})\) by setting \(f(t)=0\) for \(t < 0\). Hence the operator (2.1) can be regarded as an operator on \(L_1 (\mathbb {R}_{+})\), see [1, 2, 9, 11] for more details.

In particular, the operator (2.1) is well defined for the class F of bounded functions f such that f and its derivatives of order up to \(n>1+\alpha \) exist and are absolutely integrable. The Fourier transform of the resulting derivative is \((ik)^\alpha \hat{f} (k)\), see, e.g., [9, pp. 22–23].

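We apply the Stirling approximation

$$\begin{aligned} \Gamma (x+1) \sim \sqrt{2\pi x} \left( \frac{x}{e} \right) ^{x}, \quad x \rightarrow \infty , \end{aligned}$$

to \(w_k = \frac{\Gamma (k-\alpha )}{\Gamma (-\alpha )\, \Gamma (k+1)}\) (\(\alpha \notin \mathbb {N}\)). This gives

$$\begin{aligned} w_k \sim \frac{k^{-\alpha -1}}{\Gamma (-\alpha )} = -\frac{\alpha }{\Gamma (1-\alpha )}\, k^{-\alpha -1}, \quad k \rightarrow \infty . \end{aligned}$$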

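The binomial series

$$\begin{aligned} (1-z)^\alpha = \sum _{k=0}^{\infty } (-1)^k \binom{\alpha }{k} z^k = \sum _{k=0}^{\infty } w_k z^k \end{aligned}$$

converges for any complex \(|z| \le 1\) and any \(\alpha > 0\). Thus for \(z = 1\) we have \(\sum _{k=0}^{\infty } w_k = (1-1)^\alpha = 0\). Since \(w_0 = 1\), it follows that

$$\begin{aligned} \sum _{k=1}^{\infty } w_k = -1 . \end{aligned}$$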

This has been noticed by Machado [8].
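Numerically, the weights \(w_k\) are most easily generated by the recurrence \(w_0 = 1\), \(w_k = \left( 1 - \frac{\alpha +1}{k}\right) w_{k-1}\). This follows from (2.3) because \(\binom{\alpha }{k} = \binom{\alpha }{k-1}\frac{\alpha -k+1}{k}\).

The Python sketch below is a hypothetical illustration, not code from the MCFD toolbox. It uses this recurrence and checks two facts stated above. First, the partial sums of \(w_k\) tend to 0, but only slowly, like \(n^{-\alpha }\). Second, \(w_k\) follows the Stirling asymptotics \(-\alpha k^{-\alpha -1}/\Gamma (1-\alpha )\).

```python
import math

def gl_weights(alpha, n):
    """Return [w_0, ..., w_n] with w_k = (-1)^k * binom(alpha, k), via the recurrence."""
    w = [1.0]
    for k in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w

alpha = 0.5
w = gl_weights(alpha, 100_000)

# Partial sums w_0 + ... + w_n approach (1 - 1)^alpha = 0, but only like n^(-alpha).
for n in (10, 1_000, 100_000):
    print(n, sum(w[: n + 1]))

# Ratio of w_k to its Stirling-based asymptotics -alpha / Gamma(1 - alpha) * k^(-alpha - 1).
k = 1_000
print(w[k] / (-alpha / math.gamma(1.0 - alpha) * k ** (-alpha - 1.0)))
```

The slow decay of the partial sums foreshadows the heavy-tailed distribution used in Section 3.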

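Denoting \(p_k=-w_k\) (\(k = 1, 2, \ldots \)), we have

$$\begin{aligned} \sum _{k=1}^{\infty } p_k = 1, \end{aligned}$$(2.4)

where \(p_k = p_k(\alpha ) > 0\) for all \(0<\alpha <1\). For \(1<\alpha <2\), however, some \(p_k(\alpha )\) are negative: for example, \(p_1 = \alpha \) but \(p_2 = \alpha (1-\alpha )/2 < 0\). Note that [11]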

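Using (2.4), we can obtain the following relationship:

$$\begin{aligned} A_h^\alpha f(t) = \frac{1}{h^\alpha } \sum _{k=1}^{\infty } p_k \left[ f(t) - f(t - kh) \right] . \end{aligned}$$

Since \(h^{-\alpha } p_k \sim \frac{\alpha }{\Gamma (1-\alpha )} (kh)^{-\alpha -1} h\) as \(k \rightarrow \infty \), this motivates the general form of the fractional-order derivative (\(0<\alpha <1\)):

$$\begin{aligned} D^\alpha f(t) = \frac{\alpha }{\Gamma (1-\alpha )} \int _0^\infty \left[ f(t) - f(t - y) \right] y^{-\alpha -1}\, dy . \end{aligned}$$

Integration by parts gives the Caputo form of the fractional derivative:

$$\begin{aligned} {}^{C}D^{\alpha } f(t) = \frac{1}{\Gamma (1-\alpha )} \int _0^\infty f'(t - y)\, y^{-\alpha }\, dy , \end{aligned}$$

which is just the regularized form of the Riemann–Liouville fractional derivative:

$$\begin{aligned} {}^{RL}D^{\alpha } f(t) = \frac{1}{\Gamma (1-\alpha )} \frac{d}{dt} \int _0^\infty f(t - y)\, y^{-\alpha }\, dy . \end{aligned}$$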

Thus, in the numerical analysis of fractional differential equations, the Grünwald-Letnikov fractional derivative can be regarded as an approximation of the Caputo or the Riemann-Liouville fractional derivative. In particular, if \(f \in F\) (or \(F^{+}\)), then the non-local operator \(A_h^\alpha f\) defined in (2.2) converges to \(^{RL}D^{\alpha } f\) or \(^{C}D^{\alpha } f\) in the \(L_1(\mathbb {R})\) (or \(L_1(\mathbb {R}_{+})\)) norm as \(h \rightarrow 0\), see [9, 11] for more details.
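The convergence of \(A_h^\alpha f\) as \(h \rightarrow 0\) can be observed with a small deterministic computation. The following Python sketch is our own illustration, with hypothetical names. It evaluates the sum (2.2) for a function that vanishes on \((-\infty , 0)\); there the sum is finite, since terms with \(k > t/h\) are zero. The weights come from the recurrence \(w_k = (1 - \frac{\alpha +1}{k}) w_{k-1}\), \(w_0 = 1\).

The result is compared with the known derivative of \(f(t) = t\), \(t \ge 0\), namely \(t^{1-\alpha }/\Gamma (2-\alpha )\).

```python
import math

def gl_derivative(f, t, alpha, h):
    """Grünwald-Letnikov sum (2.2) for a function f that vanishes on (-inf, 0).

    Terms with k > t / h evaluate f at negative arguments and are dropped.
    """
    n = int(t / h + 1e-9)             # small guard against round-off in t / h
    w, total = 1.0, f(t)              # k = 0 term, w_0 = 1
    for k in range(1, n + 1):
        w *= 1.0 - (alpha + 1.0) / k  # w_k from w_{k-1}
        total += w * f(t - k * h)
    return total / h ** alpha

def ramp(s):
    """f(s) = s for s >= 0 and 0 otherwise."""
    return max(s, 0.0)

alpha, t = 0.5, 1.0
exact = t ** (1.0 - alpha) / math.gamma(2.0 - alpha)  # D^alpha t = t^(1-alpha) / Gamma(2-alpha)
for h in (0.1, 0.01, 0.001):
    approx = gl_derivative(ramp, t, alpha, h)
    print(f"h = {h:<6} A_h = {approx:.6f}  error = {abs(approx - exact):.2e}")
```

The error shrinks roughly in proportion to h, which is the first-order accuracy usually associated with the Grünwald-Letnikov approximation.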

3 Monte Carlo approach to the Grünwald-Letnikov fractional derivatives

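Let Y be a discrete random variable such that

$$\begin{aligned} \mathbb {P}(Y = k) = p_k = p_k(\alpha ), \quad k = 1, 2, \ldots \end{aligned}$$(3.1)

This is a probability distribution for \(0<\alpha \le 1\), because then \(p_k \ge 0\) and, by (2.4), \(\sum _{k=1}^{\infty } p_k = 1\). Note that \(\mathbb {E}\,Y = \infty \) for \(0<\alpha <1\), where \(\mathbb {E}\) denotes the mathematical expectation.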
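The divergence of the mean comes from the heavy tail of (3.1). Since \(p_k = -w_k\) and, by Stirling's formula, \(w_k \sim -\alpha k^{-\alpha -1}/\Gamma (1-\alpha )\), a short worked derivation reads:

```latex
k\, p_k \sim \frac{\alpha}{\Gamma(1-\alpha)}\, k^{-\alpha}, \quad k \rightarrow \infty,
\qquad\text{so}\qquad
\mathbb{E}\,Y = \sum_{k=1}^{\infty} k\, p_k = \infty \quad (0<\alpha<1),

\mathbb{P}(Y > n) = \sum_{k=n+1}^{\infty} p_k \sim \frac{n^{-\alpha}}{\Gamma(1-\alpha)}, \quad n \rightarrow \infty .
```

The first line uses the divergence of \(\sum k^{-\alpha }\) for \(\alpha \le 1\). The second line shows why very large values of Y, and hence evaluation points \(t - Yh\) far from t, occur with non-negligible probability.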

Given \(f \in F_0\) (or \(F_0^+\) or F), we define the stochastic process

$$\begin{aligned} \{ f(t - Yh),\ t \in \mathbb {R} \} . \end{aligned}$$

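Then, if \(\mathbb {E}\, |f(t - Yh)| < \infty \), we have

$$\begin{aligned} A_h^\alpha f(t) = \frac{1}{h^\alpha } \left[ f(t) - \mathbb {E}\, f(t - Yh) \right] , \end{aligned}$$

where

$$\begin{aligned} \mathbb {E}\, f(t - Yh) = \sum _{k=1}^{\infty } p_k\, f(t - kh) . \end{aligned}$$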

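We assume that for \(f \in F_0\) (or \(F_0^+\) or F)

$$\begin{aligned} \mathbb {E}\, |f(t - Yh)| = \sum _{k=1}^{\infty } p_k\, |f(t - kh)| < \infty \end{aligned}$$

for any fixed t and h.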

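Let \(Y_1\), \(Y_2\), ..., \(Y_n\), ... be independent copies of the random variable Y. Then, by the strong law of large numbers,

$$\begin{aligned} \frac{1}{N} \sum _{k=1}^{N} f(t - Y_k h) \longrightarrow \mathbb {E}\, f(t - Yh), \quad N \rightarrow \infty , \end{aligned}$$

with probability one for any fixed t and h, and hence

$$\begin{aligned} A_{N,h}^\alpha f(t) = \frac{1}{h^\alpha } \left[ f(t) - \frac{1}{N} \sum _{k=1}^{N} f(t - Y_k h) \right] \longrightarrow A_h^\alpha f(t), \quad N \rightarrow \infty , \end{aligned}$$

with probability one, where \(A_h^\alpha f\) is defined by (2.2).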

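Moreover, if \(f \in F_0\) (or \(F_0^+\) or F) and \(\mathrm {Var}\, f(t-Yh) < \infty \), then by the central limit theorem and Slutsky's lemma, as \(N \rightarrow \infty \),

$$\begin{aligned} \frac{h^\alpha \sqrt{N}}{\sqrt{v_N}} \left( A_{N,h}^\alpha f(t) - A_h^\alpha f(t) \right) \overset{D}{\longrightarrow } N(0, 1), \end{aligned}$$

where \(\overset{D}{\longrightarrow }\) denotes convergence in distribution and N(0, 1) is the standard normal law.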

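Here \(v_N\) is the sample variance

$$\begin{aligned} v_N = \frac{1}{N-1} \sum _{k=1}^{N} \left( f(t - Y_k h) - \bar{f}_N \right) ^2, \qquad \bar{f}_N = \frac{1}{N} \sum _{k=1}^{N} f(t - Y_k h) . \end{aligned}$$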

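Thus, for a given \(\varepsilon \in (0, 1)\),

$$\begin{aligned} \lim _{N \rightarrow \infty } \mathbb {P} \left( \left| A_{N,h}^\alpha f(t) - A_h^\alpha f(t) \right| \le \Phi _{1-\frac{\varepsilon }{2}} \frac{\sqrt{v_N}}{h^\alpha \sqrt{N}} \right) = 1 - \varepsilon , \end{aligned}$$

where \(\Phi _{1-\frac{\varepsilon }{2}}\) is the \((1-\frac{\varepsilon }{2})\)-quantile of the standard normal law. Hence, for large N, the following asymptotic confidence interval holds with probability approximately \(1-\varepsilon \):

$$\begin{aligned} A_{N,h}^\alpha f(t) - \Phi _{1-\frac{\varepsilon }{2}} \frac{\sqrt{v_N}}{h^\alpha \sqrt{N}} \le A_h^\alpha f(t) \le A_{N,h}^\alpha f(t) + \Phi _{1-\frac{\varepsilon }{2}} \frac{\sqrt{v_N}}{h^\alpha \sqrt{N}} . \end{aligned}$$

For example, if \(\varepsilon = 0.05\), then \(\Phi _{1-\frac{\varepsilon }{2}} \approx 1.96\).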
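The asymptotic confidence interval can be computed from one batch of simulated values. Below is a minimal Python sketch that assumes the values \(f(t - Y_k h)\) have already been generated; the function name and argument layout are hypothetical. It uses the sample variance with divisor \(N-1\) and the normal quantile from Python's standard library.

```python
import math
import statistics
from statistics import NormalDist

def estimate_with_ci(f_t, values, h, alpha, eps=0.05):
    """Return (A_{N,h}^alpha f(t), lower, upper) for the asymptotic (1 - eps) interval.

    f_t    -- the value f(t)
    values -- the simulated values f(t - Y_k h), k = 1, ..., N, with N >= 2
    """
    n = len(values)
    mean = statistics.fmean(values)
    v_n = statistics.variance(values, xbar=mean)  # sample variance, divisor N - 1
    estimate = (f_t - mean) / h ** alpha          # Monte Carlo estimator A_{N,h}^alpha f(t)
    z = NormalDist().inv_cdf(1.0 - eps / 2.0)     # quantile Phi_{1 - eps/2}
    half_width = z * math.sqrt(v_n / n) / h ** alpha
    return estimate, estimate - half_width, estimate + half_width

# Toy input: four simulated values of f(t - Y_k h) with f(t) = 1, h = 0.01, alpha = 0.5.
est, lower, upper = estimate_with_ci(1.0, [1.0, 0.0, 1.0, 1.0], h=0.01, alpha=0.5)
print(est, lower, upper)
```

With only four values the normal approximation is of course not justified. The toy call merely shows the arithmetic: mean 0.75, \(h^{-\alpha } = 10\), so the estimate is 2.5.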

4 The basic scheme of the method

The above results can be used as the basis of the Monte Carlo method for numerical approximation and computation of the Grünwald-Letnikov fractional derivatives.

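Indeed, we can replace the samples \(Y_1\), \(Y_2\), ..., \(Y_N\) by their Monte Carlo simulations. To simulate the random variable Y with distribution (3.1), we introduce the cumulative distribution function

$$\begin{aligned} F_j = \mathbb {P}(Y \le j) = \sum _{i=1}^{j} p_i, \quad j = 1, 2, \ldots , \end{aligned}$$

where \(p_k=p_k(\alpha )\) are defined in (3.1). Then, for \(0<\alpha <1\),

$$\begin{aligned} p_1 = F_1 = \alpha , \qquad p_j = F_j - F_{j-1} \ (j \ge 2), \qquad F_1 < F_2 < \cdots < 1 . \end{aligned}$$

If U is a random variable uniformly distributed on [0, 1], then

$$\begin{aligned} \mathbb {P}\left( U \le F_1 \right) = p_1, \qquad \mathbb {P}\left( F_{j-1} < U \le F_j \right) = F_j - F_{j-1} = p_j, \quad j \ge 2, \end{aligned}$$

and hence, to generate \(Y \in \{ 1, 2, \ldots \}\), we set

$$\begin{aligned} Y = 1 \ \text{ if } \ U \le F_1, \qquad Y = j \ \text{ if } \ F_{j-1} < U \le F_j \ (j \ge 2). \end{aligned}$$(4.1)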
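Rule (4.1) is the classical inverse-transform method for a discrete distribution. The Python sketch below is our illustration with hypothetical names, not the toolbox code. It tabulates \(F_j\) through the recurrence \(w_k = (1 - \frac{\alpha +1}{k}) w_{k-1}\), \(w_0 = 1\), with \(p_k = -w_k\), and locates U by binary search.

Because \(1 - F_j\) decays only like \(j^{-\alpha }\), the table has to be truncated at some level m. As an assumption of this sketch, every draw with \(U > F_m\) is returned as \(m + 1\). Nothing is lost when f vanishes on \((-\infty , 0)\) and \(m \ge t/h\), since then \(f(t - Yh) = 0\) for all \(Y > m\).

```python
import bisect
import random

def cumulative_probabilities(alpha, m):
    """Return [F_1, ..., F_m], F_j = p_1 + ... + p_j with p_k = -w_k, 0 < alpha < 1."""
    F, w, total = [], 1.0, 0.0
    for k in range(1, m + 1):
        w *= 1.0 - (alpha + 1.0) / k   # w_k from w_{k-1}, starting at w_0 = 1
        total -= w                     # add p_k = -w_k
        F.append(total)
    return F

def sample_y(F, rng):
    """Inverse-transform draw following (4.1).

    bisect_left returns the first index i with u <= F[i]; in the 1-based notation
    of (4.1) this means u lies in (F_i, F_{i+1}] (left endpoint 0 when i = 0),
    so Y = i + 1. A draw u > F_m returns m + 1, standing for every Y beyond m.
    """
    u = rng.random()  # uniform on [0, 1)
    return bisect.bisect_left(F, u) + 1

rng = random.Random(1)
F = cumulative_probabilities(0.5, 1000)
ys = [sample_y(F, rng) for _ in range(100_000)]
print(ys.count(1) / len(ys), ys.count(2) / len(ys))  # compare with p_1 = 0.5, p_2 = 0.125
```

With a fixed seed, the empirical frequencies of Y = 1 and Y = 2 can be compared with \(p_1 = \alpha \) and \(p_2 = \alpha (1-\alpha )/2\).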

Each trial (draw) of the proposed Monte Carlo method includes the following steps.

1. Evaluate \(p_i=p_i(\alpha )\) defined in (3.1).

2. Evaluate \(F_j = \sum \limits _{i=1}^{j} p_i\).

3. Generate N independent random numbers uniformly distributed on [0, 1], and compute the corresponding values \(Y_i\) using (4.1).

4. Evaluate the expression

$$\begin{aligned} A_{N,h}^\alpha f(t) = \frac{1}{h^\alpha } \left[ f(t) - \frac{1}{N} \sum _{k=1}^{N} f(t - Y_k h) \right] \,. \end{aligned}$$(4.2)

After repeating steps 1–4 K times (trials), the mean of the obtained K values gives an approximation of the value of the fractional derivative of order \(\alpha \), \(0<\alpha \le 1\), at point t.
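The full procedure can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the MCFD toolbox [13]. We assume \(0<\alpha <1\) and \(f(s) = 0\) for \(s < 0\). This lets us tabulate \(F_j\) only up to \(m = \lfloor t/h \rfloor \) and lump all larger values of Y into \(Y = m+1\), where \(f(t - Yh) = 0\).

Steps 1–2 do not depend on the random draws, so this sketch computes them once rather than in every trial. The function and parameter names are hypothetical.

```python
import bisect
import math
import random
import statistics

def mc_gl_derivative(f, t, alpha, h, n_samples, n_trials, seed=0):
    """Monte Carlo sketch of steps 1-4 repeated over K = n_trials trials.

    Assumes 0 < alpha < 1 and f(s) = 0 for s < 0.
    """
    m = int(t / h + 1e-9)
    # Steps 1-2: p_k = -w_k and the cumulative values F_j, computed once.
    F, w, total = [], 1.0, 0.0
    for k in range(1, m + 1):
        w *= 1.0 - (alpha + 1.0) / k
        total -= w
        F.append(total)
    rng = random.Random(seed)
    trial_values = []
    for _ in range(n_trials):
        # Step 3: Y_i via rule (4.1); Y = m + 1 stands for "beyond t / h", where f = 0.
        ys = [bisect.bisect_left(F, rng.random()) + 1 for _ in range(n_samples)]
        # Step 4: estimator (4.2).
        mean = statistics.fmean([f(t - y * h) for y in ys])
        trial_values.append((f(t) - mean) / h ** alpha)
    return statistics.fmean(trial_values), trial_values

def heaviside(s):
    return 1.0 if s >= 0.0 else 0.0

estimate, trials = mc_gl_derivative(heaviside, 1.0, 0.5, 0.01, n_samples=2000, n_trials=50, seed=1)
exact = 1.0 ** (-0.5) / math.gamma(0.5)  # D^alpha H(t) = t^(-alpha) / Gamma(1 - alpha) at t = 1
print(estimate, exact)
```

The mean of K trials of N samples each is the same estimator as a single run with KN samples. The individual trial values in `trials` are still useful, for instance for plotting the spread of the draws as in the examples below.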

5 Close look at the stages of implementation

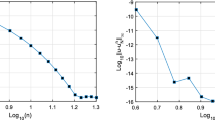

The proposed algorithm has been implemented in MATLAB [13]; this allows useful visualizations and numerical experiments with the functions that are frequently used in the fractional calculus and in fractional-order differential equations.

The approximation (4.2) involves the mean of the values of f evaluated at the points \(t_k = t - Y_k h\) (\(k = 1, \ldots , N\)), where t is the current point of interest. In other words, all the function values involved are taken with the same weight.

The values \(F_j\) divide the interval [0, 1] into subintervals of unequal length. In Fig. 1 those divisions of [0, 1] are shown for the orders \(\alpha =0.3\), \(\alpha =0.5\), and \(\alpha =0.7\). The first point is \(F_1 = \alpha \), and the points \(F_j\) then become denser towards 1. Uniform random numbers falling near 0 therefore produce small values of \(Y_k\); in particular, \(Y_k = 1\) whenever the random number does not exceed \(\alpha \). Those falling near 1 land in ever shorter subintervals and produce large values of \(Y_k\).

As a result, the points \(t_k\) at which the function f(t) is evaluated are distributed non-uniformly over the interval [0, t]. Figs. 2, 3, and 4 show examples of the distribution of \(t_k\) over [0, t] in some trials (draws) for \(t=5\) and \(\alpha =0.3\), \(\alpha =0.5\), \(\alpha =0.7\). We observe that the density of the points \(t_k\) near the current point t increases with increasing \(\alpha \).
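The non-uniform placement of the nodes \(t_k\) can also be checked numerically. The Python sketch below is an illustration with hypothetical names; the step \(h = 0.01\) and the sample size are our own choices, not values taken from the figures. For \(t = 5\) and the three orders of Figs. 2–4, it draws N = 10000 nodes and reports the share of them that lies within distance 1 of t.

```python
import bisect
import random

def draw_nodes(t, alpha, h, n, rng):
    """Return the points t_k = t - Y_k h that fall inside [0, t] among n draws.

    Assumes 0 < alpha < 1; draws with Y_k > t / h give t_k < 0 and are
    discarded, since f vanishes there.
    """
    m = int(t / h + 1e-9)
    F, w, total = [], 1.0, 0.0
    for k in range(1, m + 1):
        w *= 1.0 - (alpha + 1.0) / k   # w_k
        total -= w                     # F_k = F_{k-1} + p_k
        F.append(total)
    nodes = []
    for _ in range(n):
        y = bisect.bisect_left(F, rng.random()) + 1  # rule (4.1)
        if y <= m:
            nodes.append(t - y * h)
    return nodes

rng = random.Random(2022)
t, h, n = 5.0, 0.01, 10_000
share_near_t = {}
for alpha in (0.3, 0.5, 0.7):
    nodes = draw_nodes(t, alpha, h, n, rng)
    # fraction of all n draws that land in [t - 1, t]
    share_near_t[alpha] = sum(1 for x in nodes if x >= t - 1.0) / n
    print(f"alpha = {alpha}: {len(nodes)} nodes in [0, t], share in [t-1, t] = {share_near_t[alpha]:.3f}")
```

The share grows with \(\alpha \). This agrees with the tail estimate \(\mathbb {P}(Y > n) \sim n^{-\alpha }/\Gamma (1-\alpha )\), which decreases as \(\alpha \) increases.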

The overall picture resembles some recent efforts in finite difference methods based on non-equidistant grids or nested meshes [3,4,5,6,7, 14]. The common feature is a higher density of discretization nodes near the current point and a lower density far from it; see [4, Fig. 1] and [7, Fig. 2]. However, in the aforementioned papers the function values at the discretization nodes are taken with different weights, while in the proposed Monte Carlo approach all weights are the same.

The dependence of the density of nodes on \(\alpha \) suggests that finite difference approaches using non-uniform grids or nested meshes could be improved if they took into account the order \(\alpha \) of fractional differentiation.

6 Examples

The following examples demonstrate the use of the proposed Monte Carlo method for fractional-order differentiation. In all examples, the results of the computations are compared with the exact fractional-order derivatives of the function under fractional-order differentiation. The Mittag-Leffler function [11]

$$\begin{aligned} E_{\alpha ,\beta }(z) = \sum _{k=0}^{\infty } \frac{z^k}{\Gamma (\alpha k + \beta )}, \quad \alpha > 0, \ \beta > 0, \end{aligned}$$

which appears in some of the examples, is computed using [12]. In all examples the considered interval is sufficiently large, namely \(t \in [0, 10]\). The exact fractional derivatives are plotted using solid lines, and the results of the proposed Monte Carlo method are shown by bold points. The results of K individual trials (draws) are shown by vertically oriented small points (in all examples, \(K=100\)). The confidence intervals are shown by short horizontal lines above and below the bold points.
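The reference curves in the examples need the Mittag-Leffler function. The Python sketch below uses a plain truncated power series. This is our simplification, not the algorithm of [12]. It is adequate for moderate arguments but loses some accuracy to cancellation as |z| grows for negative z, e.g. in \(E_{2,\beta }(-t^2)\) near \(t = 10\).

The sketch also collects the standard closed-form Riemann-Liouville derivatives (lower terminal 0, \(t > 0\)) for Examples 1, 2, 3 and 5. It assumes these examples use H(t), \(t^\nu \), \(e^{\lambda t}\), \(\sin t\) and \(\cos t\). All names are hypothetical.

```python
import math

def rgamma(x):
    """1 / Gamma(x), taken as 0 at the poles x = 0, -1, -2, ..."""
    if x <= 0 and x == math.floor(x):
        return 0.0
    return 1.0 / math.gamma(x)

def mittag_leffler(a, b, z, tol=1e-16):
    """E_{a,b}(z) = sum_k z^k / Gamma(a k + b) by a truncated power series.

    Only suitable for moderate |z|: cancellation grows with |z| for negative z.
    """
    total, k = 0.0, 0
    while a * k + b < 170.0:  # math.gamma overflows just above 171
        term = z ** k * rgamma(a * k + b)
        total += term
        if k > 10 and abs(term) < tol * max(1.0, abs(total)):
            break
        k += 1
    return total

# Exact Riemann-Liouville derivatives of order alpha (lower terminal 0, t > 0).
def d_heaviside(t, alpha):
    return t ** (-alpha) * rgamma(1.0 - alpha)

def d_power(t, alpha, nu):       # f(t) = t^nu, nu > -1
    return math.gamma(nu + 1.0) * rgamma(nu + 1.0 - alpha) * t ** (nu - alpha)

def d_exp(t, alpha, lam):        # f(t) = exp(lam t)
    return t ** (-alpha) * mittag_leffler(1.0, 1.0 - alpha, lam * t)

def d_sin(t, alpha):             # formula (A)
    return t ** (1.0 - alpha) * mittag_leffler(2.0, 2.0 - alpha, -t * t)

def d_cos(t, alpha):             # formula (B)
    return t ** (-alpha) * mittag_leffler(2.0, 1.0 - alpha, -t * t)

print(d_sin(1.5, 1.0), math.cos(1.5))  # order 1 recovers the classical derivative
```

Setting \(\alpha = 1\) is a convenient sanity check: the formulas then reduce to the classical derivatives, e.g. \(D^1 \sin t = \cos t\).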

6.1 Example 1. The Heaviside function

The result for \(\alpha = 0.2\) is shown in Fig. 5, and for \(\alpha = 0.5\) in Fig. 6. Since \(H(t) = 1\) for \(t>0\), these figures represent, in fact, the Grünwald-Letnikov (and Riemann-Liouville) fractional-order derivative of a constant. We see that in this case the sample variance of the values obtained in individual trials (draws) is very small.
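For reference, the exact derivative in this comparison is the classical Riemann-Liouville formula for the unit step function (lower terminal 0). The case \(\alpha = 0.5\) gives a worked instance:

```latex
D^{\alpha} H(t) = \frac{t^{-\alpha}}{\Gamma(1-\alpha)}, \quad t > 0;
\qquad
D^{0.5} H(t) = \frac{t^{-1/2}}{\Gamma(1/2)} = \frac{1}{\sqrt{\pi t}} .
```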

6.2 Example 2. The power function

The result for \(\nu = 1.3\) and \(\alpha = 0.5\) is shown in Fig. 7. In this case, the sample variance of the values obtained in individual trials (draws) increases, but the confidence intervals remain sufficiently small.
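Assuming the test function in this example is \(f(t) = t^{\nu }\), the exact derivative follows from the standard power rule, valid for \(\nu > -1\) and \(t > 0\). (The parameter \(\nu \) suggests this, but the formula of the function is not reproduced here.) For the parameters of Fig. 7:

```latex
D^{\alpha} t^{\nu} = \frac{\Gamma(\nu+1)}{\Gamma(\nu+1-\alpha)}\, t^{\nu-\alpha},
\qquad
D^{0.5} t^{1.3} = \frac{\Gamma(2.3)}{\Gamma(1.8)}\, t^{0.8} \approx 1.2527\, t^{0.8} .
```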

6.3 Example 3. The exponential function

The result for \(\lambda = 0.1\) and \(\alpha = 0.5\) is shown in Fig. 8. The sample variance of the values obtained in individual trials (draws) increases, but the confidence intervals remain sufficiently small.
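Assuming the test function here is \(f(t) = e^{\lambda t}\), its exact derivative follows by applying the power rule term by term to the exponential series:

```latex
D^{\alpha} e^{\lambda t} = \sum_{k=0}^{\infty} \frac{\lambda^{k}\, t^{k-\alpha}}{\Gamma(k+1-\alpha)}
= t^{-\alpha} E_{1,1-\alpha}(\lambda t),
\qquad
D^{0.5} e^{0.1 t} = t^{-1/2} E_{1,1/2}(0.1\, t).
```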

6.4 Example 4. The Mittag-Leffler function

The result for \(\lambda = 0.1\) and \(\alpha = 0.5\) is shown in Fig. 9. We see that in this case the sample variance of the values obtained in individual trials (draws) is very small, and the same holds for the confidence intervals.

The result for \(\lambda = 0.1\) and \(\alpha = 0.7\) is shown in Fig. 10. In this case, although the sample variance of the values obtained in individual trials (draws) is relatively large, the confidence intervals are still sufficiently small.

6.5 Example 5. The trigonometric functions

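We use \(\sin (t) = t E_{2,2} (-t^2)\) and \(\cos (t) = E_{2,1} (-t^2)\), together with the formula

$$\begin{aligned} D^\alpha \left( t^{\beta -1} E_{\mu ,\beta }(\lambda t^{\mu }) \right) = t^{\beta -\alpha -1} E_{\mu ,\beta -\alpha }(\lambda t^{\mu }), \end{aligned}$$

which follows by differentiating the series term by term. This gives:

$$\begin{aligned} D^\alpha \sin (t) = t^{1-\alpha } E_{2, 2-\alpha } (-t^2), \end{aligned}$$(A)

$$\begin{aligned} D^\alpha \cos (t) = t^{-\alpha } E_{2, 1-\alpha } (-t^2). \end{aligned}$$(B)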

The results for (A) and (B) are shown in Fig. 11 and Fig. 12, respectively. We see that in this case also the sample variance of the values obtained in individual trials (draws) is very small, and the same holds for the confidence intervals.

Overall, these examples show that the proposed Monte Carlo method for fractional-order differentiation works well for various kinds of functions that are important for applications of the fractional calculus, and for functions of various kinds of behavior.

7 Concluding remarks: a way to parallelization

In this work, the Monte Carlo method is proposed for approximation and computation of fractional-order derivatives. It can be used for evaluation of all three types of fractional-order derivatives, that usually appear in applications: the Grünwald-Letnikov, the Riemann-Liouville, and also the Caputo fractional derivatives, when they are equivalent to the Riemann-Liouville derivatives [11, Section 3.1].

The proposed method is implemented in the form of a toolbox for MATLAB and illustrated on several examples. This opens the way to developing a family of Monte Carlo methods for fractional calculus, using standard enhancements such as variance reduction by importance sampling, stratified sampling, control variates, or antithetic sampling.

By its nature, the proposed Monte Carlo method for fractional-order differentiation allows parallelization of computations on multi-core processors, GPUs, computer grids, and parallel computers, and therefore has high potential for applications.
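Parallelization is straightforward because the trials are independent. The Python sketch below rests on our own assumptions: hypothetical names, one separately seeded generator per trial, and threads used only so that the example runs anywhere. It distributes the K trials of estimator (4.2) over a worker pool. The results are identical to those of a sequential loop with the same seeds.

```python
import bisect
import random
import statistics
from concurrent.futures import ThreadPoolExecutor

def make_table(alpha, m):
    """[F_1, ..., F_m] for p_k = -w_k, 0 < alpha < 1."""
    F, w, total = [], 1.0, 0.0
    for k in range(1, m + 1):
        w *= 1.0 - (alpha + 1.0) / k
        total -= w
        F.append(total)
    return F

def one_trial(f, t, alpha, h, F, n_samples, seed):
    """One trial of estimator (4.2) with its own, separately seeded generator.

    Assumes f(s) = 0 for s < 0, so draws beyond the table contribute zero.
    """
    rng = random.Random(seed)
    ys = [bisect.bisect_left(F, rng.random()) + 1 for _ in range(n_samples)]  # rule (4.1)
    mean = statistics.fmean([f(t - y * h) for y in ys])
    return (f(t) - mean) / h ** alpha

def parallel_trials(f, t, alpha, h, n_samples, n_trials, base_seed=0, workers=4):
    """Run the K = n_trials independent trials on a pool of workers."""
    F = make_table(alpha, int(t / h + 1e-9))  # shared, read-only table
    seeds = [base_seed + i for i in range(n_trials)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda s: one_trial(f, t, alpha, h, F, n_samples, s), seeds))
    return statistics.fmean(values), values

def heaviside(s):
    return 1.0 if s >= 0.0 else 0.0

estimate, values = parallel_trials(heaviside, 1.0, 0.5, 0.01, n_samples=1000, n_trials=20)
print(estimate)
```

For CPU-bound pure-Python work, threads give little speedup because of the global interpreter lock. `concurrent.futures.ProcessPoolExecutor` with module-level worker functions (lambdas cannot be pickled) is the usual substitute. GPUs or clusters follow the same pattern of independent random streams. Consecutive integer seeds are only a simple choice; NumPy's `SeedSequence.spawn` is a more principled way to derive independent streams.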

References

[1] Baeumer, B., Haase, M., Kovacs, M.: Unbounded functional calculus for bounded groups with applications. J. of Evolution Equations 9, 71–195 (2009). https://doi.org/10.1007/s00028-009-0012-z

[2] Baeumer, B., Kovacs, M., Sankaranarayanan, H.: Higher order Grünwald approximations of fractional derivatives and fractional powers of operators. Trans. of the Amer. Math. Soc. 367(2), 813–834 (2015). https://doi.org/10.1090/S0002-9947-2014-05887-X

[3] Diethelm, K., Freed, A.: An efficient algorithm for the evaluation of convolution integrals. Computers and Math. with Appl. 51, 51–72 (2006). https://doi.org/10.1016/j.camwa.2005.07.010

[4] Diethelm, K., Garrappa, R., Stynes, M.: Good (and not so good) practices in computational methods for fractional calculus. Mathematics 8, Art. 324, 1–21 (2020). https://doi.org/10.3390/math8030324

[5] Ford, N., Simpson, A.: The numerical solution of fractional differential equations: speed versus accuracy. Numerical Algorithms 26, 333–346 (2001). https://doi.org/10.1023/A:1016601312158

[6] Li, X.C., Chen, W.: Nested meshes for numerical approximation of space fractional differential equations. The Eur. Phys. J. Special Topics 193, 221–228 (2011). https://doi.org/10.1140/epjst/e2011-01393-3

[7] MacDonald, Ch., Bhattacharya, N., Sprouse, B.P., Silva, G.A.: Efficient computation of the Grünwald-Letnikov fractional diffusion derivative using adaptive time step memory. J. of Comput. Phys. 297, 221–236 (2015). https://doi.org/10.1016/j.jcp.2015.04.048

[8] Machado, J.A.T.: A probabilistic interpretation of the fractional-order differentiation. Fract. Calc. Appl. Anal. 6(1), 73–80 (2003)

[9] Meerschaert, M., Sikorskii, A.: Stochastic Models for Fractional Calculus, 2nd edn. De Gruyter, Berlin (2019)

[10] Meerschaert, M., Scheffler, H.P., Tadjeran, C.: Finite difference methods for two-dimensional fractional dispersion equation. J. of Comput. Phys. 211, 249–261 (2006). https://doi.org/10.1016/j.jcp.2005.05.017

[11] Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999). ISBN 0125588402

[12] Podlubny, I., Kacenak, M.: Mittag-Leffler function. MATLAB Central File Exchange, Submission No. 8738 (2006). https://www.mathworks.com/matlabcentral/fileexchange/8738. Retrieved March 19, 2022

[13] Podlubny, I.: MCFD Toolbox. MATLAB Central File Exchange, Submission No. 108264 (2022). https://www.mathworks.com/matlabcentral/fileexchange/108264. Retrieved March 19, 2022

[14] Sprouse, B.P., MacDonald, Ch., Silva, G.A.: Computational efficiency of fractional diffusion using adaptive time step memory. Proc. of the 4th IFAC Workshop Fractional Differentiation and its Applications, Badajoz, Spain, 18–20 October 2010 (Eds. I. Podlubny, B. Vinagre, Y.Q. Chen, V. Feliu, I. Tejado). Art. No. FDA10-051

Acknowledgements

The authors would like to thank the Isaac Newton Institute for Mathematical Sciences for support and hospitality during the programme Fractional Differential Equations (FDE2), when work on this paper was undertaken. This work was supported by EPSRC grant number EP/R014604/1. Nikolai Leonenko is also partially supported by LMS grant 42997 and ARC grant DP220101680. The work of Igor Podlubny is also supported by grants VEGA 1/0365/19, APVV-18-0526, and 040TUKE-4/2021.

Ethics declarations

Conflict of Interest Statement

The authors declare that they have no conflict of interest.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Leonenko, N., Podlubny, I. Monte Carlo method for fractional-order differentiation. Fract Calc Appl Anal 25, 346–361 (2022). https://doi.org/10.1007/s13540-022-00017-3

DOI: https://doi.org/10.1007/s13540-022-00017-3

Keywords

- Fractional calculus (primary)

- Fractional differentiation

- Numerical computations

- Monte Carlo method

- Stochastic processes