Abstract

This study draws on quantitative reasoning research to explain how secondary mathematics preservice teachers’ (PSTs) modelling competencies changed as they participated in a teacher education programme that integrated modelling experience. Adopting a mixed methods approach, we documented the competencies of 110 PSTs in Vietnam using an adapted Modelling Competencies Questionnaire. The results show that PSTs improved their real-world-problem-statement, formulating-a-model, solving-mathematics, and interpreting-outcomes competencies. When demonstrating their formulating-a-model and interpreting-outcomes competencies, PSTs showed enhanced quantitative reasoning by properly interpreting the quantities and their relationships using different representations. In addition, the analysis showed a statistically significant correlation between PSTs’ modelling competencies and quantitative reasoning. Suggestions for programme design to enhance modelling competencies are included.

Introduction

The importance of developing students’ abilities to use mathematics in everyday life has been recognised internationally (ACARA, 2022; OECD, 2013). Students therefore need ample opportunities to connect the real and mathematical worlds via modelling and application. In Vietnam, modelling competence has recently been featured in the curriculum (Vietnam Ministry of Education & Training, 2018). The curriculum calls for students to develop the following competencies: (a) specify mathematical models (e.g. formulas, equations, tables, and graphs) in real-world situations, (b) solve the mathematical problems that emerge from their models, and (c) represent and evaluate their solutions in the real world. We have prepared secondary preservice teachers (PSTs) for future teaching to meet the reformed curriculum. Our previous studies (e.g., Tran et al., 2018) focused on the design stage of reforming a teacher education programme. This study reports the efficacy of the programme on PSTs’ modelling competencies.

Worldwide studies have focused on the modelling competencies of school students (e.g. Hankeln et al., 2019; Zöttl et al., 2011), post-secondary students (e.g. Crouch & Haines, 2005; Haines et al., 2003), and teachers (Chinnappan, 2010; Yoon et al., 2010) in small-scale qualitative studies. Recurring findings show that modellers often struggle to transition between the mathematical and real worlds (Gould & Wasserman, 2014; Haines & Crouch, 2005). The challenges might stem from the demand to attend to real-world entities and use mathematical objects to describe, explain, and analyse them (Galbraith & Stillman, 2006). This transition involves quantitative reasoning, that is, quantifying and analysing the relationships between important quantities (e.g. Thompson, 2011). Therefore, quantitative reasoning research provides a promising lens to understand how modellers transition between the mathematical and real worlds.

Thompson (2011) regards modelling as mathematics in the context of quantitative reasoning, in which mathematical notations and methods are used to reason about relationships among quantities. Despite this argument, few studies link the two traditions by examining modelling through the quantitative reasoning lens (Czocher & Hardison, 2021; Larson, 2013). Moreover, the few that have been undertaken are qualitative studies providing evidence of using quantitative reasoning in analysing students’ thinking in modelling tasks. Therefore, this study draws on the quantitative reasoning lens to explain how PSTs’ competencies change as they participate in a teacher education programme that integrates modelling experience. This study also addresses the research gap suggested by Cevikbas et al. (2022), that there is ‘no worldwide accepted evidence on the effects of short- and long-term mathematical modelling examples and courses in school and higher education on the development of modelling competencies’ (p. 206). This study addresses the following research questions:

1. What secondary PSTs’ modelling competencies change when participating in the teacher education programme?

2. How do their modelling competencies change when reasoning quantitatively during the transitions between the real and mathematical worlds?

3. What is the relationship between modelling competencies and quantitative reasoning?

Literature review

Mathematical modelling cycles and modelling competencies

A common definition of mathematical modelling and applications has yet to be reached. However, ‘a wider definition would include posing and solving open-ended questions, quantitative tasks linked to real problems, and engaging in applied problem solving generally’ (Haines & Crouch, 2007, p. 417). Questions, tasks, and problems ‘encompass not only practical problems but also problems of a more intellectual nature that aim at describing, explaining, understanding or even designing parts of the world, including issues and questions pertaining to scientific disciplines’ (Niss et al., 2007, p. 8).

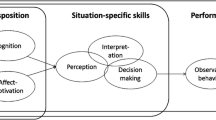

A modelling cycle describes the relationship between the mathematical and real worlds as a cyclic process of solving real problems using mathematics, comprising different phases (or steps) (Kaiser, 2020). Researchers (Blum & Borromeo Ferri, 2009; Galbraith & Stillman, 2006; Haines et al., 2001; Kaiser & Stender, 2013) have described this process using different cycles. Typical phases of the cycles include reconstructing a real-life situation, deciding on an appropriate mathematical model, working in a mathematical environment, interpreting and evaluating results, adjusting the model, and repeating the process until a reasonable result is obtained. The cycles differ in terminology and fine-grained details. For example, Haines et al. (2001) illustrated seven phases, referred to as mathematical modelling skills, each captured in a rectangle (Fig. 1). The starting point of this cycle is a problem in the ‘real world’ where solvers specify variables, constraints, and assumptions. The problem is translated into the mathematical world to formulate an appropriate model. A corresponding mathematical solution is produced, and the outcomes are interpreted back to the original situation. Then, the modeller evaluates and reports the solution, or revisits and refines their model.

Fig. 1 Modelling cycle (Haines et al., 2001, p. 368)

Modelling competencies are considered ‘the competencies to solve real-world problems using mathematics’ (Kaiser, 2020, p. 554). Different approaches to modelling competencies exist (Cevikbas et al., 2022; Kaiser & Brand, 2015). In their review, Kaiser and Brand (2015) distinguished four main strands of competencies research, namely (a) the introduction of modelling competencies and an overall comprehensive concept of competencies by the Danish KOM project, (b) the measurement of modelling skills and development of measurement instruments by a British-Australian group, (c) the development of comprehensive concepts based on sub-competencies and their evaluation by a German modelling group, and (d) the integration of metacognition into modelling competencies by an Australian modelling group. The common theme is that all strands (a) include cognitive skills and (b) share a similar approach in using some modelling cycles to describe modelling competencies.

The four research strands followed two approaches to fostering competence: holistic and atomistic (Blomhøj & Jensen, 2007). In a holistic approach, modellers experience the entire cycle when solving problems (e.g. Kaiser & Brand, 2015). In an atomistic approach, modelling competencies are divided into component competencies (e.g. Haines et al., 2001) and the evaluation focuses on only one or some components. Some research has focused on fostering modelling competencies for school students (e.g. Kaiser, 2007), undergraduate students (e.g. Haines et al., 2001), and teachers (Anhalt et al., 2018; Aydin-Güc & Baki, 2019; Durandt & Lautenbach, 2020). Studies focusing on PSTs (e.g. Aydin-Güc & Baki, 2019; Haines et al., 2001; Kaiser, 2007) did not explicitly investigate changes but described PSTs’ competencies at one point in their projects, such as their engagement with a holistic modelling task (Anhalt et al., 2018; Govender, 2020), or word problems (Winter & Venkat, 2013).

Researchers have argued the advantages and disadvantages of the two approaches (e.g. Hankeln et al., 2019). The holistic approach is better for evaluating the ability to conduct an entire cycle. Researchers describe and analyse modellers’ thinking, reasoning, and blockages when modellers go through the cycle (e.g. Galbraith & Stillman, 2006; Mhakure & Jakobsen, 2021). For instance, based on their empirical data, Galbraith and Stillman proposed a framework for identifying student blockages during the cycle, showing modellers’ reasoning. From the real-world situation to real-world problem statement, modellers clarify the context of the problem by acting out, simulating, representing, and discussing the problem situation; simplifying assumptions; and identifying and specifying strategic entities. During the transition from the real-world problem statement to the mathematical model, they identify relevant variables for inclusion in the mathematical model, represent elements mathematically, and perceive the appropriate mathematical model to be used. From the mathematical solution to its real-world meaning, they identify and contextualise interim and final mathematical results with real-world counterparts, integrating arguments to justify their interpretation. Stillman et al. (2013) further specified the level of intensity, that is, the ‘robustness’ of the blockages, especially during the formulation phase of the modelling cycle. These qualitative studies are rich in detail about modellers’ thinking and reasoning when engaging in modelling activities.

In contrast, the atomistic approach has the advantage of gauging component competencies of many modellers. Researchers have developed standardised instruments to measure modelling competencies at scale. For example, Haines and colleagues developed and validated multiple-choice questions to assess four competencies, namely (a) real-world-problem-statement, (b) formulating-a-model, (c) solving-mathematics, and (d) interpreting-outcomes (Haines & Crouch, 2005; Haines et al., 2000). These studies show that the instruments provided an overview of students’ competencies, focusing exclusively on cognitive skills, at key modelling phases (Haines et al., 2000). The findings reveal what competencies modellers have when dealing with these tasks (in test conditions). However, limited information is provided regarding how the modellers reason with the tasks.

Research (e.g. Galbraith & Stillman, 2006; Haines et al., 2003) shows that students had difficulties transitioning between the mathematical and real worlds. It was challenging for students to consider and select which real-world aspect to mathematise, as this needed some level of abstraction, to decide on the mathematics to use, and to check the solutions against reality. The challenges require modellers to deal with quantities and their relationships when selecting entities to mathematise and checking their mathematical solutions against the real-world context. Indeed, Maaß (2006, p. 116) argued that modellers need to:

- Recognise quantities that influence the situation
- Name them and identify key variables to construct relations between the variables
- Mathematise relevant quantities and their relations
- Simplify relevant quantities and their relations, if necessary

Therefore, we discuss how the quantitative reasoning research tradition can provide nuanced ways to investigate how modellers reason and think in test conditions when they transition between the mathematical and real worlds, exhibiting their formulating-a-model and interpreting-outcomes competencies.

Quantitative reasoning

Quantitative reasoning refers to quantifying and reasoning about the relationship between quantities (Thompson, 2011). Quantitative reasoning includes conceptualising a situation (e.g. aircraft landing), quantities (e.g. distance from a stacking point), and relationships between quantities (e.g. distance and time). Quantities are measurable attributes of an object, and quantification is assigning numerical values to quantities.

Previous quantitative reasoning research has examined students’ understanding of variables (e.g. Michelsen, 2006; White & Mitchelmore, 1996), functions (e.g. Ariza et al., 2015; Carlson et al., 2002; Johnson, 2015), and rate of change (Carlson et al., 2002; Herbert & Pierce, 2011; Mkhatshwa & Doerr, 2018). These studies have found that students did not refer to quantities but perceived variables as algebraic symbols to be manipulated (e.g. Michelsen, 2006; White & Mitchelmore, 1996). They often confused quantities, such as rate and amount (e.g. Mkhatshwa & Doerr, 2018), and had difficulties interpreting rate quantities (e.g. Herbert & Pierce, 2011). Furthermore, students struggled using graphs and formulas to represent and understand relationships between quantities (Ariza et al., 2015; Carlson et al., 2002; Johnson, 2015). Students tended to interpret relationships as linear even though the graph was not (e.g. Johnson, 2015). These studies suggest that, for further investigation, researchers could use representations and their transitions as appropriate contexts for students to express their understanding of quantities and relationships.

Quantitative reasoning researchers have focused on mental images (Moore & Carlson, 2012) to examine how students approach novel problems. For example, Moore and Carlson used interviews to investigate students’ mental images when producing a formula to reason about the volume of a box. Mkhatshwa (2020) argues that these tasks often have ‘geometric’ structures, such as the volume of shapes, but are not embedded in other real-world contexts that require students to map quantities and their relationships into mathematical objects. Put another way, students deal with the connection between the mathematical and real worlds and engage in mathematical modelling (cf. Blum & Borromeo Ferri, 2009) when reasoning quantitatively about real-world contexts.

Building on this promising synergy, a few studies have examined the connection between the two traditions. Analysing a pair of students working on a modelling activity, Larson (2013) focused on characterising the relationship between their quantitative reasoning and models. She found that the quantities play a central role in students’ models, serving as ‘a central mechanism in model development’ (p. 116). Hagena (2015) built on Larson’s (2013) argument by designing an intervention study to investigate the impact of measurement sense (which involves quantitative reasoning) on modelling competencies. Hagena found that PSTs who focused on measurement sense on length and area outperformed their counterparts on a modelling test. Finally, Czocher and Hardison (2021) highlighted the situation-specific attributes students attend to during modelling. The researchers found that the quantities projected onto the situation formed the basis of students’ conception of the modelling space, referred to as a set of mathematical models a student generates within the task.

In summary, the atomistic approach, although appropriate to investigate the ‘what’ component of modelling competencies of many modellers, has not adequately examined how the modellers reason when solving modelling tasks. In addition, a few studies have suggested a connection between quantitative reasoning and modelling competencies. Therefore, using quantitative reasoning can help understand modelling competencies, especially when modellers transition between the mathematical and real worlds. By doing this, we also address the need for more research focusing on students’ quantitative reasoning when they engage in modelling problems (Mkhatshwa, 2020). In the current study, we focus on the modelling competencies that modellers exhibit in test conditions, described by Haines and colleagues as modelling skills, acknowledging that other components exist.

Methodology

We adopted a mixed methods approach (Leech & Onwuegbuzie, 2008), using both quantitative and qualitative data to examine changes in PSTs’ reasoning, because it helps answer both the ‘what’ and the ‘how’ questions. Quantitatively, we collected PSTs’ performance on a questionnaire measuring modelling competencies. Qualitatively, we collected open-response data on PSTs’ reasoning when transitioning between the mathematical and real worlds. Our focus is primarily on documenting the changes and linking the changes to PSTs’ modelling experiences in the programme. We also seek to find the relationship between modelling competencies and quantitative reasoning. We describe the programme setting, the research instrument, and data collection and analysis.

Setting and participants

A total of 110 PSTs, 39 males and 71 females, in the secondary mathematics teaching programme at a university in central Vietnam participated in the study. The four-year programme includes 40 courses (2–4 credit hours each). We integrated mathematical modelling (as conceived by Haines & Crouch, 2007) with a focus on quantitative reasoning in four mathematics methods courses, Mathematics Teaching Methods and Mathematics Curriculum Development (semester 1 third year, 2017–2018), Assessment of Mathematics Learning (semester 2 third year, 2017–2018), and New Trends in Mathematics Teaching and Learning (semester 1 fourth year, 2018–2019).

Mathematical modelling focusing on quantitative reasoning

The PSTs engaged in various modelling problems (cf. Niss et al., 2007; Tran & Dougherty, 2014) that offer rich quantitative reasoning opportunities (Thompson, 2011). They worked with the problems as active learners and thought about pedagogical challenges in implementing them in future teaching (Table 1).

In semester 1 third year, PSTs engaged, as learners, with modelling problems adopted from international textbooks. Some 15 problems were integrated throughout the semester, one per week, of which 60% addressed the formulating-a-model and 40% the interpreting-outcomes competencies. For example, when solving the aviation problem, ‘Suppose a pilot begins a flight along a path due north, flying at 250 miles per hour. If the wind is blowing due east at 20 miles per hour, what is the resultant velocity and direction of the plane?’ (Boyd et al., 2008, p. 537), PSTs mapped the real-world quantities, direction (north, east) and speed (250 miles per hour, 20 miles per hour), to the mathematical world (vectors in the coordinate plane) and reasoned about the relationships between the two vectors. This problem required the formulating-a-model competence.
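The mapping from real-world quantities to vectors in the aviation problem can be illustrated with a short calculation. The following is a minimal sketch rather than the PSTs' actual working; the variable names are our own, and only the two speeds and directions come from the quoted problem.

```python
import math

# Velocity components in miles per hour, taken from the quoted problem:
# the plane heads due north and the wind blows due east.
north = 250.0
east = 20.0

# The resultant speed is the magnitude of the vector sum (Pythagoras).
speed = math.hypot(east, north)

# The direction is expressed as an angle measured east of due north.
angle_east_of_north = math.degrees(math.atan2(east, north))

print(f"resultant speed: {speed:.1f} mph")
print(f"direction: {angle_east_of_north:.1f} degrees east of north")
```

The two perpendicular vectors combine into a resultant of roughly 251 miles per hour, pointing a few degrees east of north, which is the relationship between quantities that the formulating-a-model step has to capture.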

PSTs also engaged with problems that required the interpreting-outcomes competence when transitioning from the mathematical world to the real world. For instance, PSTs solved the problem:

Students in the Hamilton High School science club tried to raise $240 to buy a special eyepiece for the high-powered telescope. The school offered to pay club members for an after-school work project that would clean up a nearby park and recreation centre building. Because the outdoor work was harder and dirtier, the deal would pay $16 for each outdoor worker and $10 for each indoor worker. The club had 18 members eager to work on the project. The diagram below shows graphs of solutions of the equations 16x + 10y = 240 and x + y = 18. [Graphs are provided.] Use the graphs to estimate a solution (x, y) for the system of equations. Explain what the solution tells about the science club’s fund-raising situation. (Hirsch et al., 2008, p. 51)

In this problem, after estimating the solution of the system of equations (solving-mathematics), PSTs interpreted the solution as the numbers of club members who need to work outdoors and indoors, respectively, if they all work and want to raise $240 (interpreting-outcomes).
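The estimate read from the graphs can be checked algebraically. The sketch below solves the two quoted equations with Cramer's rule; the coefficient names are hypothetical, and reading x as outdoor workers and y as indoor workers is our inference from the payment rates in the problem statement.

```python
# Coefficients of the two equations quoted in the problem:
#   16x + 10y = 240   (money raised, in dollars)
#     x +   y = 18    (club members working)
a1, b1, c1 = 16, 10, 240
a2, b2, c2 = 1, 1, 18

# Cramer's rule for a 2x2 linear system.
det = a1 * b2 - a2 * b1
x = (c1 * b2 - c2 * b1) / det  # outdoor workers
y = (a1 * c2 - a2 * c1) / det  # indoor workers

print(f"x = {x:g} outdoor workers, y = {y:g} indoor workers")
```

Under these assumptions the intersection is (10, 8): ten members working outdoors and eight indoors raise exactly $240 with all 18 members taking part.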

In semester 2 third year, we introduced the PISA modelling cycle (OECD, 2013) for PSTs to reflect on and use the cycle to examine students’ engagement in real-world problems. This cycle was chosen due to its simplicity, and it has been introduced in our education system since Vietnam’s first participation in PISA 2012. Although used as an assessment framework, the cycle is applicable in helping PSTs understand how students go through the modelling process. We used PISA problems that offer rich opportunities for quantitative reasoning. For example, PSTs engaged in the Speed of Racing Car (OECD, 2013) to interpret the speed when the racing car travelled over different tracks and mapped it to a given graph. When solving this problem, PSTs were also engaged with the interpreting-outcomes competency when reasoning quantitatively. They mapped the graph to the real-world quantities, distance and speed. In addition, they reasoned about the relationships between the quantities, such as finding ‘the lowest speed recorded during the second lap’. PSTs interpreted how the second lap (distance) was represented as one interval on the graph, and read the lowest speed as the minimum point on that interval. Ten PISA problems were discussed in this semester with eight requiring PSTs to engage in formulating-a-model and two interpreting-outcomes.

In addition to solving the PISA problems, PSTs experienced five standard-application problems (cf. Niss et al., 2007; Tran & Dougherty, 2014). For instance, they engaged in the birthday box (standard-application task) as they considered real-life aspects to design a box containing a birthday cake with provided dimensions. PSTs calculated and minimised the amount of carton paper needed to design the box. In the end, they made the box and tested their design. PSTs went through the entire modelling cycle. PSTs started with the problem of designing a box with the minimum amount of paper (real-world-problem-statement). Next, they associated the amount of paper with the surface area using the constraint of A4 paper size and dimensions of the cake; PSTs assumed the shapes of the box; most used a rectangular prism, some used a cylinder, and a few assumed other shapes (real-world-problem-statement). The real-world problem became finding the shape’s dimensions so that the surface area was a minimum (formulating-a-model). PSTs solved the problem by using a derivative to find the minimum of a function of one of the box’s dimensions (solving-mathematics). Finally, the value was translated to other real-world dimensions of the box (interpreting-outcomes). The PSTs then made the box and tested it using the cake (evaluating-solution). Some PSTs presented their design and justified their solution (reporting). Others thought about improving their design but needed more time to finish it (refining-model). In this problem, PSTs engaged with several quantities, such as the amount of paper, shape, and dimensions associated with the shape, and modelled their relationships using functions.

In semester 1 fourth year, PSTs experienced a true-modelling project (cf. Niss et al., 2007; Tran & Dougherty, 2014) where they designed parking spaces for the university. The report on this task can be found elsewhere (Tran et al., 2018). PSTs experienced an entire modelling cycle and focused on essential quantities, such as the parking area, number and type of vehicles, and the associated space for each type and budget, and found ways to express the relationships between quantities.

Regarding pedagogical knowledge, PSTs integrated real-world problems in one lesson plan and reflected on the inclusion of real-world problems during their third-year field observation. They taught a lesson focusing on using real-world situations in their field teaching in their fourth year. In addition, in semester 1 fourth year, they discussed how to assess peer modelling projects using a rubric developed by the research team (reported elsewhere, Nguyen et al., 2019). For the current study, we focus on PSTs as modellers.

Instrument

We adapted the Modelling Competencies Questionnaire (Crouch & Haines, 2003) because this instrument had been used with undergraduate students in the UK and had shown validity and reliability. A difference in the test conditions was that the undergraduate students could use graphing calculators when answering the questions, but the PSTs did not. Items were chosen and adapted to fit the Vietnamese context so that PSTs had adequate experience of each situation (either direct experience or knowledge of the phenomenon). We kept seven items and replaced the Sprinter item in test A with the Aircraft item in test B because of its familiarity to the PSTs. Haines et al. (2001) maintained that pairs of test items perform individually in a comparable manner; therefore, this substitution does not change the instrument’s validity. Table 2 maps each item to the modelling competencies (Crouch & Haines, 2003).

These items in test conditions require modellers to use mathematics (e.g. variables, functions, graphs) to understand, describe, and explain the real world (e.g. sunflower growth, aircraft). The items were drawn from the second strand of research in modelling competencies reviewed above.

The first two items measure the real-world-problem-statement competence. The Bus Stop item asked PSTs to specify assumptions (e.g. shelter, route, and weather) to simplify the real-world problem of choosing locations. Likewise, the Bicycle item asked PSTs to consider real-world factors that addressed the smoothness of the ride.

Three items measure the formulating-a-model competence when the modellers transition from the real world to the mathematical world. First, the Supermarket item asked PSTs to choose a specific simulation method for customers’ waiting time at five checkouts. Second, the Evacuation item asked PSTs to specify parameters, variables, or constants in the elapsed time model; the number of people evacuated; and the time of the day without solving the problem. Third, the Ferry item asked PSTs to formulate an objective function to represent the revenue given the deck space restriction and the cost for each vehicle when using the ferry.

The Sunflower-Formula item was situated in the transition between the mathematical and real worlds (measuring interpreting-outcomes). PSTs chose a formula to represent the height of a sunflower while it grew. Similarly, the Aircraft item asked PSTs to choose a graph representing the relationship between the speed and the distance from the stacking situation to the arrival at the terminal.

The Electricity Cable item asked PSTs to choose an object that could pass beneath a cable hanging between two pylons, given the relationship between the distance from the centre of the two pylons and the height of the cable above the ground. This item was considered to measure solving-mathematics.

Informed by quantitative reasoning research and the challenges students faced in previous modelling studies, we added an open-ended item asking PSTs to sketch the development of a sunflower before the item requiring them to choose a formula that models sunflower development. This item, Sunflower-Sketching, situated in the transition from the real world to the mathematical world (formulating-a-model), was to explore if PSTs could connect their reasoning when sketching with the choice of formula (Sunflower-Formula). In addition, we asked PSTs to justify their choice to collect their reasoning in two items measuring the transition from the mathematical to the real world: Sunflower-Formula and Aircraft. Overall, these three open-ended prompts (Fig. 2) were used to examine how PSTs transitioned between the mathematical and real worlds (associated with the formulating-a-model and interpreting-outcomes modelling competencies) and their use of multiple representations, including verbal, tabular, graphical, and symbolic, showing quantitative reasoning.

Data collection

The instrument was translated into Vietnamese and piloted to check language issues, appropriate contexts, and completion time. After content validation, we administered the adapted questionnaire in a paper-and-pencil format, and PSTs had 60 min to finish the test. Due to a significant time gap between the pre- and post-administrations and the recommendations of repeated applications of the same test to provide comparative results across cohorts (Crouch & Haines, 2003), we used only one form (revised test A). The tests were administered at the beginning of semester 1, year 3 (September 2017), and at the end of their last semester in year 4 (May 2019). A total of 110 PSTs finished both tests.

Data analysis

Quantitative analysis

We applied partial-credit coding (0, 1, 2) to each item measuring modelling competencies (Crouch & Haines, 2003). Descriptive statistics and paired t-tests were run for each item to explore the statistical significance of PSTs’ changes in each item. This analysis addressed research question 1 about what competencies change.
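To make the per-item comparison concrete, the following sketch computes a paired t statistic from pre-test and post-test scores on a single item. It is an illustration only: the function name and the five pairs of scores are hypothetical, not data from the study, and obtaining a p-value would additionally require the t distribution (for example from a statistics package).

```python
import math
from statistics import mean, stdev

def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1

# Hypothetical partial-credit scores (0, 1, 2) for five PSTs on one item.
pre_scores = [0, 1, 1, 2, 0]
post_scores = [1, 2, 1, 2, 2]

t_stat, df = paired_t(pre_scores, post_scores)
print(f"t = {t_stat:.2f}, df = {df}")
```

The statistic is the mean of the within-person differences divided by its standard error, so it reflects change for the same PSTs across the two administrations rather than a comparison of two independent groups.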

After coding the open-ended responses to the three items examining quantitative reasoning (described in the next section), we obtained the quantitative reasoning score by adding the scores associated with the structure of observed learning outcomes (SOLO) taxonomy coding. In the post-test, nearly 100% of PSTs achieved the highest score in four out of eight items, so the post-test data did not provide adequate nuance about PSTs’ modelling competencies. We therefore used the pre-test data to find the relationship between modelling competencies (the sum of the scores on the eight items) and quantitative reasoning. A bivariate test was run to detect the relationship. This analysis addressed sub-research question 2.
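The two composite scores and their association can be sketched as follows. The specific bivariate statistic is not named here, so Pearson's r is used purely as an assumption; the function name and the scores for four PSTs are likewise hypothetical.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical pre-test data for four PSTs.
# Eight questionnaire items, each coded 0, 1 or 2.
item_scores = [
    [1, 0, 1, 0, 1, 0, 1, 0],
    [1, 1, 1, 1, 1, 1, 1, 1],
    [2, 1, 2, 1, 2, 1, 2, 1],
    [2, 2, 2, 2, 2, 2, 2, 2],
]
# Three open-ended prompts, each given a SOLO-based score of 0, 1 or 2.
solo_scores = [
    [0, 0, 1],
    [1, 0, 1],
    [1, 1, 0],
    [2, 2, 1],
]

modelling_totals = [sum(row) for row in item_scores]  # range 0 to 16
qr_totals = [sum(row) for row in solo_scores]         # range 0 to 6

r = pearson_r(modelling_totals, qr_totals)
print(f"r = {r:.3f}")
```

Because both totals are sums of ordinal codes, a rank-based coefficient would be an equally defensible choice; the sketch only shows how the two composite scores enter a bivariate analysis.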

Qualitative analysis

Responses to the three open-ended items were analysed to document how PSTs reasoned when showing their formulating-a-model and interpreting-outcomes competencies, and how this reasoning changed from pre-test to post-test. First, we investigated PSTs’ writing and sketches to document how they transitioned between the mathematical and real worlds. Informed by quantitative reasoning research, we investigated how PSTs attended to the meaning of quantities and coordinated the quantities to reason about their relationships via graphs, verbal descriptions, and formulas. We first applied emergent coding to survey the quantities and the relationships.

To describe patterns from data collected from 110 PSTs at two time points, we found the SOLO taxonomy (Biggs & Collis, 1989) appropriate to code for the number of quantities PSTs reasoned about and how PSTs coordinated them when solving the problems. The SOLO framework has been used successfully in previous research to document the growth in complexity of performance in learning tasks. We adapted the SOLO framework describing the hierarchy of responses: (i) pre-structural responses do not address any quantity of the task; (ii) unistructural responses employ a single quantity of the task and do not recognise conflict should it occur; (iii) multi-structural responses sequentially consider more than one quantity and recognise conflict if it occurs but cannot resolve it; and (iv) relational responses create connections among the quantities to form an integrated whole and resolve conflict should it occur.

Sunflower-Sketching

First, we examined PSTs’ graphs, coding for the appropriateness of the quantities modelled: (a) height (the initial height, non-negative, the stationary point of the flower when it reaches its maximum) and (b) rate (increasing in height and varied rates of change (how fast the plant height increases/decreases)). We also referenced PSTs’ graphs to typical graphs in the Vietnamese curriculum (e.g. linear, quadratic, and exponential), as PSTs described the relationships between quantities (height and time).

Second, we documented how PSTs’ reasoning changed from pre-test to post-test using the revised SOLO taxonomy (Fig. 3). When sketching a graph to represent the growth, PSTs went through sequential time intervals, each independent of the others. No conflict occurred, so we combined the last two levels of the SOLO taxonomy. Finally, we grouped their reasoning into idiosyncratic (0), incomplete (1), and complete (2) levels. PSTs who did not provide reasoning or included reasoning inconsistent with the graph were coded at the lowest level. Responses at the incomplete level attended to some quantities of the graph in their reasoning. Responses at the complete level attended to all the quantities above appropriately in their reasoning. Each SOLO level is associated with one score (0, 1, 2) for each item.
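The three-level rule for Sunflower-Sketching can be written down as a small function. This is a sketch of the rubric's logic only, not an automated version of the coding, which relied on the researchers' judgement; the quantity labels and the function name are hypothetical.

```python
# Quantities named in the coding description for Sunflower-Sketching.
QUANTITIES = frozenset({
    "initial height",
    "non-negative height",
    "maximum height",
    "increasing height",
    "varied rate of change",
})

def solo_score(attended, consistent_with_graph=True):
    """Map a response to 0 (idiosyncratic), 1 (incomplete) or 2 (complete)."""
    attended = set(attended) & QUANTITIES  # ignore labels outside the rubric
    if not attended or not consistent_with_graph:
        return 0  # no reasoning, or reasoning inconsistent with the graph
    if attended == QUANTITIES:
        return 2  # every quantity attended to appropriately
    return 1      # some, but not all, quantities attended to

# Hypothetical responses.
print(solo_score([]))                                       # 0
print(solo_score(["initial height", "non-negative height",
                  "increasing height"]))                    # 1
print(solo_score(QUANTITIES))                               # 2
```

A response that attends to only three of the five quantities therefore scores 1, however accurate those three are, which is why a linear graph with a plausible starting point is still coded as incomplete.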

PST37 (Fig. 3a) produced the graph without justification, coded as idiosyncratic. PST24 (Fig. 3b) drew a linear graph passing through the origin and stated that the plant grew over time. This PST attended to three quantities (initial height, non-negative, and increasing height) but did not consider others, thus producing an incomplete graph. At the complete level, PST06 (Fig. 3c) drew a graph that included three intervals: the plant grew quickly, grew slowly when flowering, and then ‘nearly not developed’. The other two quantities (non-negative and initial height) were not stated explicitly but expressed in the graph. This PST used a smooth graph instead of several linear intervals, as shown in Fig. 3a.
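The three-level coding described above can be sketched in a few lines of Python. This is only an illustration of the decision rule as we read it from the description, not the coding instrument itself; the function name, the quantity labels, and the two boolean flags are hypothetical.

```python
# Hypothetical labels for the five quantities named in the coding description.
QUANTITIES = frozenset({
    "initial_height",
    "non_negative_height",
    "maximum_height",
    "increasing_height",
    "varied_rate_of_change",
})


def solo_level(attended, has_reasoning=True, consistent_with_graph=True):
    """Return 0 (idiosyncratic), 1 (incomplete), or 2 (complete).

    `attended` holds the quantities a response addresses, whether stated
    in the reasoning or expressed in the graph (an assumption of this sketch).
    """
    attended = set(attended)
    unknown = attended - QUANTITIES
    if unknown:
        raise ValueError(f"unknown quantities: {sorted(unknown)}")
    # No reasoning, or reasoning inconsistent with the graph: lowest level.
    if not has_reasoning or not consistent_with_graph or not attended:
        return 0
    # All quantities attended to: complete; otherwise incomplete.
    return 2 if attended == QUANTITIES else 1


# The three examples discussed above, encoded under the same assumptions.
examples = {
    "PST37": solo_level([], has_reasoning=False),
    "PST24": solo_level(
        ["initial_height", "non_negative_height", "increasing_height"]
    ),
    "PST06": solo_level(QUANTITIES),
}
print(examples)
```

Each level maps directly to the item score (0, 1, or 2), so the returned value can be summed across items.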

Sunflower-Formula

We investigated how PSTs justified why they chose or eliminated formulas. We then checked if they started with the given formula and validated it with the real world (interpreting-outcomes) or considered real-world quantities and mapped them to the formula’s related parts (formulating-a-model). The quantities that could be considered, in symbolic or verbal language, included height (initial height, \(f(0)=0\); positive height, \(f(t)>0\); and maximum height, \(\lim f(t)=A\)) and rate (increasing height, \(f^{\prime}(t)>0\); and varied rate of change, \(f^{\prime\prime}(t)\ne 0\)). We applied the revised SOLO taxonomy to investigate the changes from pre-test to post-test. An idiosyncratic level did not provide any reason, or the reasoning did not make sense. An incomplete level attended to some quantities that were inadequate to confirm the selection or elimination of options. A complete level provided adequate reasoning regarding the quantities to confirm a correct choice or eliminate others. We also focused on PSTs’ use of symbolic language (e.g. \(\lim f(t)=A\)) or verbal language (e.g. the plant approached the maximum height).
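To make the five quantities concrete, the sketch below checks them numerically for two candidate functions. Both candidates, \(1-e^{-t}\) and a straight line, are hypothetical illustrations chosen here and are not claimed to be options from the item; the sampling grid and tolerance are likewise assumptions.

```python
import math


def check_quantities(f, ts, A, tol=1e-3):
    """Check the five quantities on increasing sample times ts (ts[0] == 0)."""
    values = [f(t) for t in ts]
    steps = [b - a for a, b in zip(values, values[1:])]  # changes in height
    return {
        # f(0) = 0
        "initial_height": abs(values[0]) < tol,
        # f(t) > 0 for t > 0
        "positive_height": all(v > 0 for v in values[1:]),
        # f(t) approaches A: close to A and no longer changing at the end
        "maximum_height": abs(values[-1] - A) < tol and abs(steps[-1]) < tol,
        # f'(t) > 0: every step upwards
        "increasing_height": all(s > 0 for s in steps),
        # f''(t) != 0: the steps are not all the same size
        "varied_rate": max(steps) - min(steps) > tol,
    }


ts = [0.5 * i for i in range(41)]  # t = 0, 0.5, ..., 20
saturating = check_quantities(lambda t: 1 - math.exp(-t), ts, A=1.0)
linear = check_quantities(lambda t: t / 20, ts, A=1.0)

print("saturating:", saturating)
print("linear:    ", linear)
```

The saturating candidate satisfies all five checks, whereas the linear candidate fails the maximum-height and varied-rate checks. That is the kind of argument a PST can give, in symbols or in words, to eliminate a constant-growth formula.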

Aircraft

We investigated how PSTs justified why a graph was appropriate to model the aircraft behaviour or eliminated an inappropriate graph. We then noted if they started with the graph and validated it with real-world quantities (interpreting-outcomes) or considered real-world quantities and mapped them to the related parts of the graph (formulating-a-model). In this item, PSTs reasoned which quantities (speed, time, and distance (from the ground or the stacking point)) were associated with the axes. There were four graph types, (x, f(x)): (a) distance from the stacking point and speed; (b) distance from the ground and speed; (c) time and speed; and (d) time and distance from the ground or inconsistent application of quantities to the axis. We applied the revised SOLO taxonomy to code the reasoning. Reasoning that did not address the task or make sense was coded as idiosyncratic. An incomplete level reasoning addressed only one correct quantity, and a complete level addressed two quantities and their relationship correctly. We then documented changes in reasoning from the pre- to the post-test.

These analyses address research question 2 by using the ways PSTs reasoned quantitatively when transitioning between the mathematical and real worlds (exhibiting modelling competencies in test conditions).

Results

What modelling competencies changed?

Table 3 summarises PSTs’ performance on the eight items in the pre-test. For the two items measuring the real-world-problem-statement competence, they performed better on Bus Stop (mean 1.43) than Bicycle (mean 0.72). For the three items measuring the formulating-a-model competence, PSTs performed better on the Ferry situation (mean 1.6) when they constructed equations to represent the situation compared with the other two when they specified models (0.82) or variables (0.84) to formulate a mathematical model. For the two items measuring the interpreting-outcomes competence, PSTs performed better when a formula (1.00) instead of a graph (0.88) was involved. Finally, they performed moderately well on the item measuring the solving-mathematics competence (1.12) when they substituted the related dimension of the object into the table to find the appropriate height.

PSTs’ performance increased significantly on all items (p < 0.05) from the pre-test to the post-test (Table 4). The most notable improvement occurred on two items measuring interpreting-outcomes (Sunflower-Formula and Aircraft) and solving-mathematics competencies (Electricity Cable). In contrast, the lowest gains were found on two items addressing real-world-problem-statement (Bus Stop and Bicycle Wheel). Medium gains were found on the three remaining items measuring the formulating-a-model competence. In the post-test, nearly all PSTs chose a correct response for the Bus Stop, Ferry, Sunflower-Formula, and Electricity Cable items.

How did modelling competencies change when reasoning quantitatively during transitioning between the mathematical and real worlds?

Sunflower-Graph: Transitioning from the real world to the mathematical world

PSTs sketched a graph of a sunflower’s height over time and explained their sketching. Some 108 PSTs created a graph in the post-test compared with 103 who did so in the pre-test. More PSTs drew appropriate graphs representing the growth in the post-test than in the pre-test (63 vs. 23). An appropriate graph should consist of three intervals that coordinate two quantities, height and time: increasing rate of change, decreasing rate of change, and approaching a constant.

About half of the PSTs (53/103 pre-test and 55/108 post-test) used piecewise linear graphs to model the intervals. Some 16 PSTs used a quadratic graph in the pre-test, whereas no PST used this graph in the post-test (Fig. 4).

PST88 (Fig. 4a) attended to how the plant grew differently over time using piecewise linear graphs. She showed consistency between her reasoning and graph. In contrast, PST15 (Fig. 4b) reasoned that the plant height increased until flowering in the (0, t) interval and then decreased, creating the quadratic graph.

We examined what quantities the PSTs attended to and the consistency between their reasoning and graph (Table 5). More PSTs moved to the complete level in the post-test (57.3%) compared with those in the pre-test (20.9%). In contrast, about half of the PSTs (49.1%) were coded as idiosyncratic in the pre-test. More PSTs explicitly attended to all but the non-negative height quantities when reasoning about and sketching graphs in the post-test. More PSTs explicitly used the initial height in their reasoning (32 in the post-test and 4 in the pre-test). Some 63 PSTs took the varied rate of change into account in the post-test (vs. 23 in the pre-test). Twice as many PSTs reasoned about the maximum height of the plant (38 in the pre-test and 80 in the post-test).

Sunflower-Formula: Tendency to start from the mathematical world

Crouch and Haines (2003) used the Sunflower-Formula item to measure the interpreting-outcomes competence. More PSTs justified their choice in the post-test (108) compared with those in the pre-test (69). The PSTs started with a mathematical model (symbolic) and interpreted the mathematical outcomes into the real world to check if the model worked. For example, in her reasoning, PST41 evaluated \(f\left(1\right)\) (height of the flower at one time unit) and \(f(0)\) (initial height) and justified the constant growth to eliminate options B, C, D, and E. She then checked three quantities (\(f\left(0\right)\), \({f}^{\prime}\left(t\right)>0\) (height increasing), and \(f\left(t\right)<1\) (height reaching maximum)) with option A to confirm her selection (Fig. 5).

In contrast, nine PSTs used their previously produced graph (Sunflower-Sketching) to choose the option (D or E) that matched their graph. This reasoning shows the formulating-a-model competence as they started with the real world, sketched the graph (in the mathematical world), and then chose a formula to match the graph.

The revised SOLO taxonomy coding shows that more PSTs moved to a higher level in the post-test (Table 6). More PSTs’ reasoning was coded as complete (57.3%) in the post-test compared with that in the pre-test (0.9%). This means they attended to the above quantities and their relationships in the post-test. In contrast, 68.2% of the reasoning was coded as idiosyncratic and 30.9% as incomplete in the pre-test.
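As a quick arithmetic check on the percentages reported above, the following sketch converts them into implied head counts. It assumes that every percentage uses all 110 PSTs as its denominator; the counts are therefore derived here, not taken from Table 6, and the names are illustrative.

```python
N_PSTS = 110  # assumption: all 110 PSTs form the denominator

# Pre-test percentages for the Sunflower-Formula item, as reported in the text.
pre_test_pct = {"idiosyncratic": 68.2, "incomplete": 30.9, "complete": 0.9}


def implied_count(pct, n=N_PSTS):
    """Nearest whole number of PSTs corresponding to a reported percentage."""
    return round(pct / 100 * n)


pre_test_counts = {level: implied_count(p) for level, p in pre_test_pct.items()}
post_test_complete = implied_count(57.3)

print(pre_test_counts, sum(pre_test_counts.values()), post_test_complete)
```

Under that assumption, the three pre-test levels account for all 110 PSTs (75, 34, and 1), and the post-test complete level corresponds to 63 PSTs.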

Not all PSTs explicitly used symbolic representations, such as \(f\left(0\right)\), \({f}^{\prime}\left(t\right)\), \({f}^{{\prime\prime} }(t)\), and \({\text{lim}}f\left(t\right) =A\), in both tests. Only two PSTs used \({f}^{\prime}\left(t\right)\) in the pre-test, whereas 65 used this symbol in the post-test; no PST used \({f}^{{\prime\prime} }\left(t\right)\) in their reasoning, relying instead on verbal language (real-world quantities). The number of PSTs using \({\text{lim}}f\left(t\right)=A\) fell from 18 in the pre-test to 12 in the post-test. Seven PSTs used both \({f}^{\prime}\left(t\right)\) and \({\text{lim}}f\left(t\right)\) in the post-test, whereas no one used the combination in the pre-test. Where PSTs did not use symbols, they said ‘the plant height increased over time’, ‘the height increased slowly/fast in the intervals’, or ‘reached a maximum height then stopped increasing’. The results revealed that these PSTs attended to the quantities but did not explicitly connect them with a symbolic representation.

Aircraft: Tendency to start from the real world

Crouch and Haines (2003) used the Aircraft item to measure the interpreting-outcomes competence. However, all PSTs moved from the real world to the mathematical world, showing the formulating-a-model competence. They mapped the real-world quantities to the related parts of the graph. No PST started with a graph and returned to the real world to confirm if the graph was appropriate to the situation. For example, PST56 reasoned that the aircraft first travelled around a fixed height with a constant speed; therefore, the graph should be parallel to the x-axis in this interval. PST56 focused on the constant speed and how it was represented in the graph (parallel to the x-axis). Then, the aircraft started to land and reduced its speed (quantity); thus, the graph should be linear with a negative slope (showing relationships between two quantities, speed and time). Finally, when the aircraft moved along the ground to the terminal, the speed was minimal and approached 0. For these reasons, PST56 chose option D (Fig. 6).

The given graph shows the plane’s distance from the stacking point on the x-axis and the aircraft’s speed on the y-axis. However, only one PST read the quantity associated with the x-axis correctly in the post-test. Fewer PSTs read the x-axis as the distance from the ground (20 to 4 from the pre-test to the post-test). In contrast, more (66) PSTs considered the x-axis as time in the post-test compared with 1 in the pre-test. In the pre-test, over half of the PSTs correctly interpreted the y-axis as indicating the aircraft’s speed (58) compared with nearly all (107) in the post-test. A few PSTs read the y-axis as the distance from the ground in both tests (6 and 2 for pre- and post-tests, respectively). In Table 7, the bolded data indicates the correct interpretation.

Only one PST read the graph correctly in the post-test (0 in the pre-test). Others interpreted the graphs (x, y) as (a) time and distance from the ground or trajectory of the aircraft (6 vs. 2, pre- and post-tests, respectively); (b) time and speed (38 vs. 102, pre- and post-tests, respectively); and (c) distance from the ground and speed (20 vs. 4, pre- and post-tests, respectively). The distance from the ground and speed graph was inappropriate. This interpretation indicates the initial distance was 0, which was inconsistent with the context as the aircraft was above the ground. Some PSTs were unclear about which quantity the axis referred to and misapplied it in different places (Fig. 7).

PST91 (Fig. 7a) chose option D regarding the x-axis as the distance from the stacking point and the y-axis as the speed. PST70 (Fig. 7b) chose option C because ‘the aircraft increased its height before decreasing, after that it approached the ground.’ This PST referred to the x-axis as time (implicitly) and the y-axis as the height from the ground. PST49 (Fig. 7c) chose option D and reasoned how the speed (y-axis) changed over time, ‘it was constant, decreased, and finally approached 0 when the aircraft went into the terminal.’ PST106 (Fig. 7d) provided an indefinite quantity when associating the distance from the ground and the speed with the y-axis. She referred to the height and speed as constant at first; after that, the height decreased (the y-axis), and the speed reduced. We considered the reasoning about the speed reduction referred to the y-axis because the x-axis indicated an increasing quantity.

Only one PST (0.9%) was coded at the highest SOLO taxonomy level in the post-test, and no one achieved this level in the pre-test. On the other hand, nearly all PSTs achieved the incomplete level in the post-test (92.7%), whereas more PSTs were at the idiosyncratic level in the pre-test (65.5%) (Table 8).

Relationship between modelling competencies and quantitative reasoning

The bivariate test for the modelling competency score and the sum of the three quantitative reasoning items shows that the Pearson correlation was 0.236 (p = 0.013). This finding shows a positive linear relationship between the two aspects and that this relationship was statistically significant (p < 0.05). This means that when PSTs perform better in one aspect, they tend to perform better in the other. However, this relationship was weak.
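The reported correlation can be unpacked with the standard conversion from Pearson's r to a t statistic, \(t = r\sqrt{n-2}/\sqrt{1-r^{2}}\). The sketch below is a minimal illustration that assumes all 110 PSTs contributed a pair of scores (n = 110); the function name is hypothetical.

```python
import math


def correlation_summary(r, n):
    """Convert Pearson's r into a t statistic and the shared variance r^2."""
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1 - r * r)
    return {"df": df, "t": t, "r_squared": r * r}


# r is the reported value; n = 110 is an assumption about the sample used.
summary = correlation_summary(r=0.236, n=110)
print(summary)
```

Under this assumption, t is about 2.52 on 108 degrees of freedom, which is consistent with a two-tailed p between 0.01 and 0.02. The shared variance is about 0.056, so the two scores share under 6% of their variance, which is why the relationship is described as weak despite being statistically significant.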

Discussion and implications

Improvement of modelling competencies

This study investigated what and how PSTs’ modelling competencies changed as they participated in a teacher education programme that integrated mathematical modelling experiences focusing on quantitative reasoning. The PSTs’ performance increases were statistically significant in all four modelling competencies: real-world-problem-statement, formulating-a-model, solving-mathematics, and interpreting-outcomes. The results might be explained by the programme that exposed PSTs as learners to the modelling cycle, solving real-world problems, and thinking about the pedagogical challenges of implementing modelling tasks in their future teaching.

During the last two years of the programme, PSTs engaged in part of the cycle focusing on the transition between the mathematical and real worlds when solving problems from international textbooks and PISA. PSTs also engaged in the entire modelling cycle, such as solving standard-application (e.g. birthday box) and true-modelling tasks (e.g. designing university parking). In addition, when introducing the PISA modelling cycle and competencies, we also linked them back to the problems they had solved. Likewise, when PSTs analysed their peers’ projects, they reflected on the competencies and how their competencies could be improved.

The results resonate with Hagena’s (2015) finding that PSTs’ modelling competencies improved when they focused on measurement sense. However, Hagena’s study investigated which modelling competencies changed without detailing how they changed. Our results also confirmed Ikeda’s (2015) conjecture that using PISA problems enhances modelling competencies, and we add that students must engage in other modelling problems to develop the competencies. Our study also adds to the literature about how PSTs’ modelling experiences contribute to their educational effects (Ikeda & Stephens, 2021). However, Ikeda and Stephens focused on PSTs’ perception of the use of modelling in teaching, not on how modelling competencies change.

More experience with the beginning modelling phases

Although significantly improving their performance, PSTs still had difficulty with three items: (a) Bicycle when they simplified variables to form a real model; (b) Evacuation when they specified parameters, variables, and constants to be included in their mathematical model; and (c) Supermarket when they found a simulation method to address the problem (means were 0.99, 1.17, and 1.33, respectively (out of 2)). The findings confirm previous studies (Haines et al., 2000, 2001) showing that undergraduate students struggled with the beginning phases of the modelling process when they specified assumptions in the real problem or posed assumptions, variables, and factors to formulate a mathematical model. The result suggests that the programme experience should engage PSTs more in the earlier phases of the modelling cycle. In particular, teacher educators could provide ample experience for PSTs to engage with understanding and simplifying/structuring (cf. Blum & Borromeo Ferri, 2009), that is, to work with the nuances in the real world to deal with a real problem, a situation model, and a real model, before formulating a mathematical model.

For simplicity of implementation, we adopted the PISA cycle when introducing modelling to PSTs. This cycle helped PSTs understand mathematical modelling that can be integrated into school curricula, but more nuanced experience was needed. Haines et al. (2003) found that more than 63% of their participants did not show any evidence that the relationship between the mathematical and real worlds was considered, or they interpreted it simply in real-world terms or entirely in terms of reasoning in mathematics without reference to the needs of the model. This lack of connection between the mathematical and real worlds may explain the challenges PSTs had when answering the items. In addition, their undergraduate participants regarded the items as interesting but not necessarily mathematics-related. This finding points to the need to help PSTs connect the mathematical and real worlds and expand their vision of what mathematics can entail, which calls for further investigations.

Enhancing modelling competencies expressed in quantitative reasoning when transitioning between the mathematical and real worlds

When showing their formulating-a-model and interpreting-outcomes competencies, PSTs enhanced their quantitative reasoning. For example, when sketching a graph to model sunflower growth, more PSTs moved to a higher level on the revised SOLO taxonomy. They coordinated more than one quantity in their reasoning and produced a graph that was consistent with their reasoning. The finding enriches previous understanding (Haines et al., 2001) of how modellers reason when dealing with real-world items answered under test conditions. It highlights that quantities can serve as a signpost to predict blockages and identify appropriate interventions to support modellers (cf. Stillman et al., 2013). The improvement can be associated with the experience throughout the programme when quantities and their relationships were a focus. In many instances (e.g. Aviation problem), we explicitly required PSTs to point out the quantities in the real world and map them to the mathematical world. We also asked PSTs to think about using their mathematical knowledge to represent the relationships between quantities.

The quantitative reasoning literature (e.g. Moore & Carlson, 2012) points to students’ confusion between amount and rate of change and a strong tendency to over-generalise linear phenomena to non-linear processes (Johnson, 2015) when they over-apply a constant rate of change in situations. However, PSTs in our study did distinguish them (height of the plants vs. the changes of height). Furthermore, they used different slopes to showcase their reasoning, although they did not attend to the differentiability (smoothness) of the functions. The choice of contexts (e.g. speed, the rate of growth over time) might impact how learners distinguish between the quantities. In particular, making quantities explicit when solving problems is crucial.

Although PSTs did attend to the rate of change, half of the PSTs used linear piecewise functions in both tests, and some used quadratic functions in the pre-test. The findings resonate with the types of functions PSTs often faced in their prior experiences with the Vietnamese curriculum as school students (Vietnam Ministry of Education & Training, 2006). Vietnamese students rarely find a function to model a real-world situation. Instead, they are exposed to familiar functions, such as polynomial and exponential, focusing on technical mathematics, such as drawing graphs and evaluating values. This finding suggests that teacher education programmes should include more opportunities for PSTs to use different functions to model data and check which models best fit the data. Here, curve-fitting technology can be utilised instead of letting PSTs rely on familiar functions they know (especially from school mathematics) to model them.
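The kind of curve-fitting activity suggested above could look like the following sketch, which fits a straight line and a logistic curve to the same data and compares their squared errors. The weekly heights are invented for illustration only and are not data from this study; the grid search stands in for whatever curve-fitting technology a programme might adopt.

```python
import math


def sse(ys, preds):
    """Sum of squared errors between observed and predicted heights."""
    return sum((y - p) ** 2 for y, p in zip(ys, preds))


def fit_linear(ts, ys):
    """Ordinary least-squares line y = a + b*t."""
    n = len(ts)
    mt, my = sum(ts) / n, sum(ys) / n
    b = sum((t - mt) * (y - my) for t, y in zip(ts, ys))
    b /= sum((t - mt) ** 2 for t in ts)
    a = my - b * mt
    return lambda t: a + b * t


def fit_logistic(ts, ys):
    """Fit y = A / (1 + exp(-k*(t - t0))) by a coarse grid search.

    For fixed k and t0 the best A has a closed form (least squares).
    """
    best = None
    for i in range(1, 21):        # k = 0.1, 0.2, ..., 2.0
        k = 0.1 * i
        for j in range(25):       # t0 = 0.0, 0.5, ..., 12.0
            t0 = 0.5 * j
            g = [1 / (1 + math.exp(-k * (t - t0))) for t in ts]
            A = sum(gi * y for gi, y in zip(g, ys)) / sum(gi * gi for gi in g)
            err = sse(ys, [A * gi for gi in g])
            if best is None or err < best[0]:
                best = (err, A, k, t0)
    _, A, k, t0 = best
    return lambda t: A / (1 + math.exp(-k * (t - t0)))


# Hypothetical weekly sunflower heights in cm (invented for this sketch).
weeks = list(range(13))
heights = [3, 6, 13, 26, 50, 88, 135, 180, 215, 236, 247, 252, 255]

linear_model = fit_linear(weeks, heights)
logistic_model = fit_logistic(weeks, heights)

sse_linear = sse(heights, [linear_model(t) for t in weeks])
sse_logistic = sse(heights, [logistic_model(t) for t in weeks])

print(f"linear SSE:   {sse_linear:.1f}")
print(f"logistic SSE: {sse_logistic:.1f}")
print(f"linear prediction at week 0: {linear_model(0):.1f} cm")
```

On these invented data the logistic curve fits far better than the line, and the fitted line predicts a negative height at week 0. Comparing fits in this way lets PSTs connect a model's error back to the real-world quantities (non-negative height, maximum height, varied rate of change) rather than defaulting to a familiar function.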

When choosing a graph in the Aircraft item, some PSTs had difficulty interpreting the quantities they were referring to and tended to read the x-axis as time. This finding is similar to one experience in the programme when PSTs had difficulties in the Racing task. This difficulty might be explained by the heavy use of time as an x variable, and students did not have enough opportunities to engage with other quantities (Mkhatshwa, 2020).

Identifying the relations between the quantities constituted another point of PSTs’ confusion when solving the Aircraft problem. Only one PST achieved the highest revised SOLO taxonomy level. Moore (2014) found that students relied on non-quantitative features when relating graphs to corresponding physical situations. The students in Moore’s study did not conceive graphs as emergent representations of covarying quantities but approached graphs as objects in and of themselves (e.g. the graph as a continuous motion of the fan). We also found similar reasoning in some PSTs, who regarded the graph as the aircraft’s trajectory. The results suggested that PSTs might need more experience to deal with rate in contexts where time is not considered. Quantitative reasoning researchers (e.g. Moore & Carlson, 2012) added that we must help learners construct a well-developed image of a problem’s context to form a conceptual foundation for interpreting formulas and graphs.

Compared with the study by Haines et al. (2003), our PSTs performed better than their undergraduate counterparts in the Sunflower-Formula item. The difference between our study and the previous one was that undergraduate students could use a graphing calculator to draw the graph from the formula and choose an appropriate one to model the growth. In this study, PSTs without a graphing calculator used a numerical and symbolic approach. In addition, PSTs tended to use verbal reasoning more than symbolic language in justifying why they chose a formula. Aspinwall and Miller (1997, 2001) argued that students’ attempts to express their thinking in words without using derivative and integral symbols enriched their understanding of connections between graphic, algebraic, and numeric representations. Future studies can explore how students and teachers transition between verbal and symbolic representations in these rich modelling tasks.

The analysis found a weak yet statistically significant positive relationship between modelling competencies and quantitative reasoning. This finding suggests that the two aspects can be developed in relation to each other. This result also expands the findings from a few qualitative studies (e.g. Larson, 2013), highlighting the synergy between the two aspects. Future studies can further investigate other relationships between the two aspects, such as whether one helps develop the other or whether the two should be developed in parallel.

Some limitations of the present study can be addressed in the future. The items measured only four competencies; some were missing, such as evaluating-solutions, refining-their-model, or reporting. In addition, although we explained the changes observed in the two years, our study could not provide strong evidence for the causal relationships between the intervention and the changes in modelling competencies without a control cohort. Future studies can use an experimental design with a control group to investigate the impact, thereby providing more robust evidence. Finally, as we focused on teachers as learners, future research can continue to discuss the knowledge needed for teaching modelling.

Conclusion

The contribution of this study lies in investigating how modellers reason during two phases of the modelling process when we measure modelling competencies using the atomistic approach. It pinpoints that quantitative reasoning can serve as a critical lens to explain how the PSTs navigated in this process. PSTs’ reasoning in the open-ended responses provided more nuanced information on how modellers solved the problems, and validated which modelling competencies were exhibited when PSTs engaged in the process. By adopting a quantitative measure, we also built further understanding of the relationship between modelling competencies and quantitative reasoning. Finally, the study provides initial evidence of the effectiveness of a teacher education programme on PSTs’ modelling competencies, which was called for by Cevikbas et al. (2022).

Data availability

The data that support the findings of this study are available from the National Foundation for Science and Technology Development (NAFOSTED) Vietnam, but restrictions apply to the availability of these data, which were used under licence for the current study and so are not publicly available. The data are, however, available from the authors upon reasonable request.

References

ACARA. (2022). The Australian curriculum. Retrieved March 30, 2023, from https://www.v9.australiancurriculum.edu.au/

Anhalt, C. O., Cortez, R., & Bennett, A. B. (2018). The emergence of mathematical modelling competencies: An investigation of prospective secondary mathematics teachers. Mathematical Thinking and Learning, 20(3), 202–221. https://doi.org/10.1080/10986065.2018.1474532

Ariza, A., Llinares, S., & Valls, J. (2015). Students’ understanding of the function-derivative relationship when learning economic concepts. Mathematics Education Research Journal, 27(4), 615–635. https://doi.org/10.1007/s13394-015-0156-9

Aspinwall, L., & Miller, D. (1997). Students’ positive reliance on writing as a process to learn first semester calculus. Journal of Instructional Psychology, 24(4), 253–261.

Aspinwall, L., & Miller, D. (2001). Diagnosing conflict factors in calculus through students’ writings: One teacher’s reflections. Journal of Mathematical Behavior, 20(1), 89–107. https://doi.org/10.1016/S0732-3123(01)00063-3

Aydin-Güc, F., & Baki, A. (2019). Evaluation of the learning environment designed to develop student mathematics teachers’ mathematical modelling competencies. Teaching Mathematics and Its Applications, 38(4), 191–215. https://doi.org/10.1093/teamat/hry002

Biggs, J., & Collis, K. (1989). Towards a model of school-based curriculum development and assessment using the SOLO taxonomy. Australian Journal of Education, 33(2), 151–163.

Blomhøj, M., & Jensen, T. H. (2007). What’s all the fuss about competencies? In W. Blum, P. L. Galbraith, H.-W. Henn, & M. Niss (Eds.), Modelling and applications in mathematics education (pp. 45–56). Springer.

Blum, W., & Borromeo Ferri, R. (2009). Mathematical modelling: Can it be taught and learnt? Journal of Mathematical Modelling and Application, 1(1), 45–58.

Boyd, J. C., Cummins, J., Malloy, E. C., Carter, A. J., Flores, A., Hovsepian, V., & Zike, D. (2008). Geometry. Glencoe McGraw-Hill.

Carlson, M. P., Jacobs, S., Coe, E., Larsen, S., & Hsu, E. (2002). Applying covariational reasoning while modeling dynamic events: A framework and a study. Journal for Research in Mathematics Education, 33(5), 352–378. https://doi.org/10.2307/4149958

Cevikbas, M., Kaiser, G., & Schukajlow, S. (2022). A systematic literature review of the current discussion on mathematical modelling competencies: State-of-the-art developments in conceptualising, measuring, and fostering. Educational Studies in Mathematics, 109(2), 205–236. https://doi.org/10.1007/s10649-021-10104-6

Chinnappan, M. (2010). Cognitive load and modelling of an algebra problem. Mathematics Education Research Journal, 22(2), 8–23. https://doi.org/10.1007/BF03217563

Crouch, R. M., & Haines, C. (2003). Do you know which students are good mathematical modellers? Some research developments (No. 83): Technical Report.

Crouch, R., & Haines, C. (2005). Mathematical modelling: Transitions between the real world and the mathematical model. International Journal of Mathematical Education in Science and Technology, 35(2), 197–206. https://doi.org/10.1080/00207390310001638322

Czocher, J. A., & Hardison, H. L. (2021). Attending to quantities through the modelling space. In F. Leung, G. Stillman, G. Kaiser, & K. L. Wong (Eds.), Mathematical modelling education in East and West (pp. 263–272). Springer.

Durandt, R., & Lautenbach, G. V. (2020). Preservice teachers’ sense-making of mathematical modelling through a design-based research strategy. In G. A. Stillman, G. Kaiser, & C. E. Lampen (Eds.), Mathematical modelling education and sense-making (pp. 431–441). Springer.

Galbraith, P., & Stillman, G. (2006). A framework for identifying student blockages during transitions in the modelling process. ZDM: The International Journal on Mathematics Education, 38(2), 143–162. https://doi.org/10.1007/BF02655886

Gould, H., & Wasserman, N. H. (2014). Striking a balance: Students’ tendencies to oversimplify or overcomplicate in mathematical modelling. Journal of Mathematics Education at Teachers’ College, 5(1), 27–34. https://doi.org/10.7916/jmetc.v5i1.640

Govender, R. (2020). Mathematical modelling: A ‘growing tree’ for creative and flexible thinking in preservice mathematics teachers. In G. A. Stillman, G. Kaiser, & C. E. Lampen (Eds.), Mathematical modelling education and sense-making (pp. 443–453). Springer.

Hagena, M. (2015). Improving mathematical modelling by fostering measurement sense: An intervention study with preservice mathematics teachers. In G. A. Stillman, W. Blum, & M. S. Biembengut (Eds.), Mathematical modelling in education research and practice (pp. 185–194). Springer.

Haines, C., & Crouch, R. (2005). Applying mathematics: Making multiple-choice questions work. Teaching Mathematics and Its Applications, 24(2–3), 107–113. https://doi.org/10.1093/teamat/hri004

Haines, C., Crouch, R., & Davis, J. (2001). Understanding students’ modeling skills. In J. F. Matos, S. K. Houston, & W. Blum (Eds.), Modelling and mathematics education: ICTMA 9—Applications in science and technology (pp. 366–380). Horwood Publishing.

Haines, C., & Crouch, R. (2007). Mathematical modelling and applications: Ability and competence frameworks. In W. Blum, P. L. Galbraith, H.-W. Henn, & M. Niss (Eds.), Modelling and applications in mathematics education: The 14th ICMI study (Vol. 10, pp. 417–424). Springer.

Haines, C., Crouch, R., & Davis, J. (2000). Mathematical modeling skills: A research instrument. University of Hertfordshire, Department of Mathematics Technical Report No. 55. University of Hertfordshire.

Haines, C., Crouch, R., & Fitzharris, A. (2003). Deconstructing mathematical modelling: Approaches to problem solving. In Q.-X. Ye, W. Blum, K. Houston, & Q.-Y. Jiang (Eds.), Mathematical modelling in education and culture (pp. 41–53). Horwood Publishing.

Hankeln, C., Adamek, C., & Greefrath, G. (2019). Assessing sub-competencies of mathematical modelling—Development of a new test instrument. In G. A. Stillman & J. P. Brown (Eds.), Lines of inquiry in mathematical modelling research in education (pp. 143–160). Springer.

Herbert, S., & Pierce, R. (2011). What is rate? Does context or representation matter? Mathematics Education Research Journal, 23(4), 455–477. https://doi.org/10.1007/s13394-011-0026-z

Hirsch, R. C., Fey, T. J., Hart, W. E., Schoen, L. H., Watkins, E. A., Ritsema, E. B., Walker, K. R., Keller, S., Marcus, R., Coxford, F. A., & Burrill, G. (2008). Core-Plus Mathematics: Contemporary mathematics in context, Course 2. McGraw-Hill Glencoe.

Ikeda, T. (2015). Applying PISA ideas to classroom teaching of mathematical modelling. In K. Stacey & R. Turner (Eds.), Assessing mathematical literacy: The PISA experience (pp. 221–238). Springer.

Ikeda, T., & Stephens, M. (2021). Investigating preservice teachers’ experiences with the “A4 Paper Format” modelling task. In F. K. S. Leung, G. A. Stillman, G. Kaiser, & K. L. Wong (Eds.), Mathematical modelling education in East and West (pp. 293–304). Springer.

Johnson, H. L. (2015). Secondary students’ quantification of ratio and rate: A framework for reasoning about change in covarying quantities. Mathematical Thinking and Learning, 17(1), 64–90. https://doi.org/10.1080/10986065.2015.98194

Kaiser, G. (2007). Modelling and modelling competencies in school. In C. Haines, P. Galbraith, W. Blum, & S. Khan (Eds.), Mathematical modelling: Education, engineering and economics (pp. 110–119). Horwood Publishing.

Kaiser, G. (2020). Mathematical modelling and applications in education. In S. Lerman (Ed.), Encyclopedia of mathematics education (pp. 553–561). Springer.

Kaiser, G., & Brand, S. (2015). Modelling competencies: Past development and further perspectives. In G. A. Stillman, W. Blum, & M. S. Biembengut (Eds.), Mathematical modelling in education research and practice (pp. 129–149). Springer.

Kaiser, G., & Stender, P. (2013). Complex modelling problems in co-operative, self-directed learning environments. In G. Stillman, G. Kaiser, W. Blum, & J. Brown (Eds.), Teaching mathematical modelling: Connecting to research and practice (pp. 277–293). Springer.

Larson, C. (2013). Modeling and quantitative reasoning: The summer jobs problem. In R. Lesh, P. L. Galbraith, C. R. Haines, & A. Hurford (Eds.), Modeling students’ mathematical modeling competencies (pp. 111–118). Springer.

Leech, N., & Onwuegbuzie, A. (2008). A typology of mixed methods research designs. Quality and Quantity, 43(2), 265–275. https://doi.org/10.1007/s11135-007-9105-3

Maaß, K. (2006). What are modelling competencies? ZDM – The International Journal on Mathematics Education, 38(2), 113–142. https://doi.org/10.1007/BF02655885

Mhakure, D., & Jakobsen, A. (2021). Using the modelling activity diagram framework to characterise students’ activities: A case for geometrical constructions. In F. K. S. Leung, G. A. Stillman, G. Kaiser, & K. L. Wong (Eds.), Mathematical modelling education in East and West (pp. 413–422). Springer.

Michelsen, C. (2006). Functions: A modelling tool in mathematics and science. ZDM – The International Journal on Mathematics Education, 38(3), 269–280. https://doi.org/10.1007/BF02652810

Mkhatshwa, T. P. (2020). Calculus students’ quantitative reasoning in the context of solving related rates of change problems. Mathematical Thinking and Learning, 22(2), 139–161. https://doi.org/10.1080/10986065.2019.1658055

Mkhatshwa, T., & Doerr, H. (2018). Undergraduate students’ quantitative reasoning in economic contexts. Mathematical Thinking and Learning, 20(2), 142–161. https://doi.org/10.1080/10986065.2018.1442642

Moore, K. C. (2014). Quantitative reasoning and the sine function: The case of Zac. Journal for Research in Mathematics Education, 45(1), 102–138. https://doi.org/10.5951/jresematheduc.45.1.0102

Moore, K. C., & Carlson, M. P. (2012). Students’ images of problem contexts when solving applied problems. Journal of Mathematical Behavior, 31(1), 48–59. https://doi.org/10.1016/j.jmathb.2011.09.001

Nguyen, A., Nguyen, D., Ta, P., & Tran, T. (2019). Preservice teachers engage in a project-based task: Elucidate mathematical literacy in a reformed teacher education program. International Electronic Journal of Mathematics Education, 14(3), 657–666.

Niss, M., Blum, W., & Galbraith, P. (2007). Introduction. In W. Blum, P. L. Galbraith, H.-W. Henn, & M. Niss (Eds.), Modelling and applications in mathematics education: The 14th ICMI study (Vol. 10, pp. 3–32). Springer.

OECD. (2013). Mathematics framework. In OECD PISA 2012 assessment framework (pp. 83–123). OECD Publishing.

Stillman, G., Brown, J., & Galbraith, P. (2013). Identifying challenges within transition phases of mathematical modeling activities at year 9. In R. Lesh, P. L. Galbraith, C. R. Haines, & A. Hurford (Eds.), Modeling students’ mathematical modeling competencies: ICTMA 13 (pp. 385–398). Springer.

Thompson, P. W. (2011). Quantitative reasoning and mathematical modeling. In S. Chamberlin, L. L. Hatfield, & S. Belbase (Eds.), New perspectives and directions for collaborative research in mathematics education: WISDOM^e Monographs (Vol. 1, pp. 33–57). University of Wyoming.

Tran, D., & Dougherty, B. J. (2014). Authenticity of mathematical modeling. The Mathematics Teacher, 107(9), 672–678. https://doi.org/10.5951/mathteacher.107.9.0672

Tran, D., Nguyen, T. T. A., Nguyen, T. D., Ta, T. M. P., & Nguyen, G. N. T. (2018). Preparing preservice teachers to teach mathematical literacy: A reform in a teacher education program. In Y. Shimizu & R. Vithal (Eds.), Proceedings of the twenty-fourth ICMI Study school mathematics curriculum reforms: Challenges, changes and opportunities (pp. 405–412). Tsukuba, Japan.

Vietnam Ministry of Education and Training. (2006). School mathematics curriculum. Hanoi, Vietnam.

Vietnam Ministry of Education and Training. (2018). School mathematics curriculum. Hanoi, Vietnam.

White, P., & Mitchelmore, M. (1996). Conceptual knowledge in introductory calculus. Journal for Research in Mathematics Education, 27(1), 79–95. https://doi.org/10.2307/749199

Winter, M., & Venkat, H. (2013). Preservice teacher learning for mathematical modelling. In G. A. Stillman, G. Kaiser, W. Blum, & J. P. Brown (Eds.), Teaching mathematical modelling: Connecting to research and practice (pp. 395–404). Springer.

Yoon, C., Dreyfus, T., & Thomas, M. O. (2010). How high is the tramping track? Mathematising and applying in a calculus model-eliciting activity. Mathematics Education Research Journal, 22(2), 141–157. https://doi.org/10.1007/BF03217571

Zöttl, L., Ufer, S., & Reiss, K. (2011). Assessing modelling competencies using a multidimensional IRT approach. In G. Kaiser, W. Blum, R. Borromeo Ferri, & G. Stillman (Eds.), Trends in the teaching and learning of mathematical modelling (pp. 427–437). Springer.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Ethics declarations

Ethics approval

Ethics approval was waived by the University of Education, Hue University, as it is not currently required.

Consent to participate

The researchers explained the study to the preservice teachers in a plain language statement, and the preservice teachers agreed to the consent form.

Consent for publication

The plain language statements provided details of participation and use of data in publications.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nguyen, A.T.T., Tran, D. Quantitative reasoning as a lens to examine changes in modelling competencies of secondary preservice teachers. Math Ed Res J (2024). https://doi.org/10.1007/s13394-023-00481-x

DOI: https://doi.org/10.1007/s13394-023-00481-x