Abstract

In this paper we solve the quickest drift change detection problem for a multivariate Lévy process consisting of both continuous (Gaussian) and jump components in the Bayesian approach. We do it for a general 0-modified continuous prior distribution of the change point as well as for a random post-change drift parameter. As in the classical setting, our criterion of optimality is based on a probability of false alarm and an expected delay of the detection, which is then reformulated in terms of a posterior probability of the change point. We find a generator of the posterior probability, which in the case of a general prior distribution is inhomogeneous in time. The main solving technique uses optimal stopping theory and is based on solving a certain free-boundary problem. We also construct a Generalized Shiryaev-Roberts statistic, which can be used in applications. The paper is supplemented by two examples, one of which is further used to analyze Polish life tables (after proper calibration) and detect the drift change in the correlated force of mortality of men and women jointly.

1 Introduction

Quickest detection problems (also called disorder problems) try to answer the question: how can one detect significant changes in an observed system? We assume that the system is described by a certain probabilistic model, and the goal is to use appropriate statistical methods to find the change in an optimal way. First, however, it is necessary to specify what optimal means in that context. Such problems are natural in many applications, such as economics, finance or engineering. One of the classical approaches to disorder problems is based on drift change detection and on the Bayesian approach; see Shiryaev [29, 30], where Brownian motion with linear drift is considered and the drift changes at an exponentially distributed random time. The original problem was first reformulated there in terms of a free-boundary problem. Then it was solved using optimal stopping methods. All details of this analysis are also given in the surveys [35, 37] (see also references therein). Apart from the Bayesian method, the minimax approach has also been studied in the context of detection problems. This method identifies the optimal detection time using the so-called cumulative sum (CUSUM) strategy; see e.g. Page [20], Beibel [3], Shiryaev [32] or Moustakides [18] in the Wiener case, or El Karoui et al. [14] in the Poisson case. The book of Poor and Hadjiliadis [26] gathers many approaches to these quickest detection problems. In this paper we choose the first, Bayesian, approach.

Our first main goal is to analyze the quickest drift change detection problem for multivariate processes, taking into account the dependence between components. We also allow a general 0-modified continuous prior distribution of the change point, as well as a random post-change drift parameter.

Most work on detection problems in the Bayesian setting has been devoted to one-dimensional processes consisting of only a continuous (Gaussian) part or only jumps; see e.g. Beibel [2], Shiryaev [30] or [36, Chap. 4] or Poor and Hadjiliadis [26]. Only some particular cases of jump models without a diffusion component have been analysed so far, e.g. by Gal’chuk and Rozovskii [11], Peskir and Shiryaev [23] or Bayraktar et al. [4] for the Poisson process, by Gapeev [12] for the compound Poisson process with exponential jumps or by Dayanik and Sezer [7] for a more general compound Poisson problem. Later, Krawiec et al. [15] allowed the observed process to have jumps in addition to the diffusion component. This is very important in many applications appearing in actuarial science, finance, etc. Lévy processes have appeared in the context of optimal detection in other recent works as well. In particular, in [5] Buonaguidi studies the disorder problem for pure jump Lévy processes with completely monotone jumps. In this case, the solution to the disorder problem for a hyperexponential process is used to approximate that of the original problem. The efficiency of the proposed approximation scheme is investigated for some popular Lévy processes, such as the gamma, inverse Gaussian, variance-gamma and CGMY processes. Moreover, in [10] Figueroa-López and Ólafsson prove that the CUSUM procedure is optimal in Lorden’s sense for change-point detection for Lévy processes. Interestingly, their approach is based on approximating the continuous-time problem by a suitable sequence of change-point problems with equispaced sampling points, for which a CUSUM procedure is shown to be optimal. Similar ideas can be found in [6, 9, 41]. Still, all of these results concern the one-dimensional case only. This paper removes this limitation.

In addition, we assume that the drift change point \(\theta \) has a general 0-modified continuous prior distribution G. In most works it has been assumed that \(\theta \) can have only a (0-modified) exponential distribution. Such an assumption makes the free-boundary problem time-homogeneous due to the lack of memory property, which fails in the general case. Furthermore, as in Dayanik et al. [8], we assume that the direction of disorder is a random vector \(\zeta \) with known prior multivariate distribution function H(r). That is, after the change of drift, a new drift is chosen according to the law H. In the case when H is a one-point distribution we end up with the classical question where the post-change drift is fixed and different from zero. In the case when H is supported on two points in \({\mathbb R}^d\), the post-change drift may take one of two possible values with known weights. Even for \(d=1\) this additional feature of our model gives much more freedom and has not been analyzed in detail yet.
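The role of the lack of memory property can be made explicit with a short worked computation. The block below is only an illustration: it assumes the (0-modified) exponential prior has an atom \(G(0)\) at zero and rate \(\lambda >0\) afterwards, where \(\lambda \) is a symbol introduced here for the rate. Under that assumption the hazard rate of \(\theta \) is constant in time, which is the reason the time variable drops out of the problem.

```latex
G(t) = 1 - \bigl(1 - G(0)\bigr)\, e^{-\lambda t}, \quad t \ge 0,
\qquad\Longrightarrow\qquad
\frac{G'(t)}{1 - G(t)} \;=\; -\frac{d}{dt}\log\bigl(1 - G(t)\bigr) \;=\; \lambda .
```

For a general continuous G the ratio \(G'(t)/(1-G(t))\) depends on t, so the corresponding problem is inhomogeneous in time.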

The methodology used in this paper is based on transferring the detection problem to a certain free-boundary problem. More formally, in this paper we consider the process \(X=(X_t)_{t\ge 0}\) with

where \(X^{\infty }=(X^{\infty }_t)_{t\ge 0}\) and \(X^{(0,r)}=(X^{(0,r)}_t)_{t\ge 0}\) are both independent jump-diffusion processes taking values in \({\mathbb R}^d\). We assume that \(X^{\infty }\) and \(X^{(0,r)}\) are related with each other via the exponential change of measure described e.g. in Palmowski and Rolski [21]. This change of measure can be seen as a form of the drift change between \(X^{\infty }\) and \(X^{(0,r)}\) with additional change in jump distribution. The parameter r corresponds to the rate (direction) of disorder that can be observed after time \(\theta \).

Let \(\theta \) have an atom at zero with mass \(x>0\). We choose the classical optimality criterion based on both the probability of a false alarm and the mean delay time. That is, in this paper, we are going to find an optimal detection rule \(\tau ^*\in {\mathcal {T}}\) for which the following infimum is attained

where \({\mathcal {T}}\) is the family of stopping times and \(c>0\) is a fixed number. The measure \({\overline{{\mathbb P}}}^{G,H}\) will be formally introduced later. First, we transform the above detection problem into the following optimal stopping problem

for the a posteriori probability process \(\pi =(\pi _t)_{t\ge 0}\), which will also be formally introduced later. The subscript x associated with \({\overline{{\mathbb E}}}^{G,H}\) indicates that the process \(\pi \) starts at x. In the next step, using the change of measure technique and stochastic calculus, we identify the infinitesimal generator of the Markov process \(\pi \). This part contains results of independent interest on properties of the posterior process \(\pi \) that are related to the multidimensionality of the process X. In the classical case with exponential distribution G, \(\pi \) is time-homogeneous with generator \({\mathcal {A}}\). Finally, we formulate the free-boundary value problem, which in the time-homogeneous case is as follows

with the boundary conditions

for some optimal level \(A^*\), which allows us to identify the threshold optimal alarm rule as

We first generalize the above free-boundary problem and then solve it for two basic models: two-dimensional Brownian motion with known post-change drift and two-dimensional Brownian motion with downward exponential jumps.

Our second main goal is to apply the solution of the above multivariate detection problem to the analysis of a correlated change of drift in the force of mortality of men and women. The life expectancies of men and women are widely recognized as dependent on each other. For example, married people statistically live longer than single ones. Since many insurance products are designed for married couples, it is crucial to detect a change in the mortality rate of couples. Indeed, the observed improvements in longevity produce challenges related to the capital requirements that have to be set up to face this long-term risk, and to creating new ways to cross-hedge or to transfer part of the longevity risk to reinsurers or to financial markets. To do this we need to perform accurate longevity projections and hence to predict the change of the drift observed in prospective life tables (national ones or the specific ones used in insurance companies). In this paper we analyze the Polish life tables for both men and women jointly. We proceed as follows. We take the logarithm of the force of mortality of men and women, creating a two-dimensional process, which is then modeled by a jump-diffusion process. This process consists of an observed two-dimensional drift that can be calibrated from the historical data and a random zero-mean Lévy-type perturbation. Based on the preceding theoretical work we construct a statistical and numerical procedure based on the generalized version of the Shiryaev-Roberts statistic introduced by Shiryaev [29, 30] and Roberts [27]; see also Polunchenko and Tartakovsky [24], Shiryaev [33], Pollak and Tartakovsky [25] and Moustakides et al. [19]. Precisely, we start from a continuous statistic derived from the solution of the optimal detection problem in continuous time. Then we take discrete moments \(0<t_1<t_2<\ldots <t_N\), construct an auxiliary statistic and raise the alarm when it exceeds a certain threshold \(A^*\) identified in the first part of the paper.

The set-up used in the examples is, however, simplified compared to the theory presented in the previous sections. The applications focus mainly on the multi-dimensionality of the presented problem, to show how one can analyse the mortality of men and women jointly. The distribution of the change time \(\theta \) is limited to the classical (0-modified) exponential one.

The paper is organized as follows. In Sect. 2 we describe the basic setting of the problem and introduce the main definitions and notation. In this section we also formulate the main theoretical results of the paper. Section 3 is devoted to the construction of the Generalized Shiryaev-Roberts statistic. To apply it, we first need to find some density processes related to the process X before and after the drift change. This is done in Sect. 3 as well. Particular examples are analyzed in Sect. 4. Next, in Sect. 5, we give an application of the theoretical results to real data from life tables. We finish our paper with some technical proofs given in Sect. 6.

2 Model description and main results

The main observable process is a regime-switching d-dimensional process \(X=(X_t)_{t\ge 0}\). It changes its behavior at a random moment \(\theta \) in the following way:

where \(X^{\infty }\) and \(X^{(0,r)}\) are two different independent Lévy processes related with each other via exponential change of measure specified later. The parameter r describes the drift after time \(\theta \). We will also assume that in general drift r is driven by a random vector \(\zeta \) with a given distribution H(r), which we formally introduce later. Moreover, this drift is chosen at time \(t=0\). Further, \(\theta \) is independent of the pre- and post-change random processes.

We assume the following model when the post-change drift equals r. The process that we observe after the change of drift is a d-dimensional process \(X^{(0,r)} = (X^{(0,r)}_t)_{t\ge 0} = (X^{(0,r)}_{t,1},\ldots ,X^{(0,r)}_{t,d})_{t\ge 0}\) defined as

where

- \(W^r=(W_t^{r})_{t\ge 0}=(W_{t,1}^{r},\ldots ,W_{t,d}^{r})^T\) is a vector of standard independent Brownian motions,

- \(\sigma =(\sigma _{i,j})_{i,j=1,\ldots ,d}\) is a matrix of real numbers, responsible for the correlation of the diffusion components of \(X^{(0,r)}_{t,1},\ldots ,X^{(0,r)}_{t,d}\); we assume that \(\sigma _{ii}>0\) for all \(i=1,\ldots ,d\),

- \(r=(r_1,\ldots ,r_d)^T\) is a vector of an additional drift,

- \(N^{(0,r)}=(N_t^{(0,r)})_{t\ge 0}\) is a Poisson process with intensity \(\mu ^r\),

- \((J_k^{(0,r)})_{k\ge 1}\) is a sequence of i.i.d. random vectors responsible for jump sizes; we denote each coordinate of \(J_k^{(0,r)}\) by \(J_{k,i}^{(0,r)}\) for \(i=1,\ldots ,d\) and its distribution by \(F^{r}_i\) with mean \(m^{r}_i\); we also denote by \(F^{r}\) a joint distribution of vector \(J_k^{(0,r)}\) and by \(m^{r}=(m^r_1,\ldots ,m^r_d)^T\) its mean.

We assume that all components of \(X_t^{(0,r)}\) are stochastically independent, i.e. \(W_t^{r}\), \(N_t^{(0,r)}\) and the sequence \((J_k^{(0,r)})_{k=1,2,\ldots }\) are independent.

Similarly, we assume that the process that we observe prior to the drift change is a d-dimensional process \(X^{\infty } = (X^{\infty }_t)_{t\ge 0} = (X^{\infty }_{t,1},\ldots ,X^{\infty }_{t,d})_{t\ge 0}\) defined as

where

- \(W^{\infty }=(W_t^{\infty })_{t\ge 0}\) is a vector of standard independent Brownian motions,

- matrix \(\sigma \) is the same as for the process \(X^{(0,r)}\),

- \(N^{\infty }=(N_t^{\infty })_{t\ge 0}\) is a Poisson process with intensity \(\mu ^{\infty }\),

- \((J_k^{\infty })_{k\ge 1}\) is a sequence of i.i.d. random vectors, where each coordinate \(J_{k,i}^{\infty }\) of \(J_k^{\infty }\) has distribution \(F^{\infty }_i\) with mean \(m^{\infty }_i\); we also denote by \(F^{\infty }\) a joint distribution of vector \(J_k^{\infty }\) and by \(m^{\infty }=(m^{\infty }_1,\ldots ,m^{\infty }_d)^T\) its mean.

To formally construct the model with a drift change described above, we follow the ideas of Zhitlukhin and Shiryaev [42]. Precisely, we consider a filtered measurable space \((\Omega , \mathcal {F}, \{\mathcal {F}_t\}_{t\ge 0})\) with a right-continuous filtration \(\{\mathcal {F}_t\}_{t\ge 0}\), on which we define a stochastic system with disorder as follows. First, on this space we introduce probability measures \({\mathbb P}^{\infty }\) and \({\mathbb P}^{(0,r)}\) for \(r\in {\mathbb R}^d\) with their restrictions to \({\mathcal {F}}_t\) given by \({\mathbb P}^{\infty }_t:={\mathbb P}^{\infty }|_{\mathcal {F}_t}\) and \({\mathbb P}^{(0,r)}_t:={\mathbb P}^{(0,r)}|_{\mathcal {F}_t}\). We assume that for each \(t\ge 0\) the restrictions \({\mathbb P}^{\infty }_t\) and \({\mathbb P}^{(0,r)}_t\) are equivalent. The measure \({\mathbb P}^{\infty }\) corresponds to the case when there is no drift change in the system at all and \({\mathbb P}^{(0,r)}\) describes the measure under which there is a drift r present from the beginning (i.e. from \(t=0\)). In the following we assume that these measures correspond to the laws of the processes \(X^{\infty }\) and \(X^{(0,r)}\) described above, respectively. We also introduce a probability measure \({\mathbb P}\) that dominates \({\mathbb P}^{\infty }\) and \({\mathbb P}^{(0,r)}\) for each \(r\in {\mathbb R}^d\) and such that the restriction \({\mathbb P}_t:={\mathbb P}|_{\mathcal {F}_t}\) is equivalent to \({\mathbb P}^{\infty }_t\) and \({\mathbb P}^{(0,r)}_t\) for each \(t\ge 0\).

We define the Radon-Nikodym derivatives

Furthermore, for \(s\in (0,\infty )\) we define

Finally, for any fixed \(s\in (0,\infty )\) and \(r\in {\mathbb R}^d\), taking a consistent family of probability measures \(({\mathbb P}_t^{(s,r)})_{t\ge 0}\) defined via

by Kolmogorov’s existence theorem we can define measures \({\mathbb P}^{(s,r)}\) such that \({\mathbb P}^{(s,r)}|_{\mathcal {F}_t}={\mathbb P}^{(s,r)}_t\). Note that for \(t<s\) and all \(r\in {\mathbb R}^d\) the following equality holds

since disorder after time t does not affect the behavior of the system before time t.

We consider the Bayesian framework, that is, we assume that the moment of disorder is a random variable \(\theta \) with a given distribution function denoted by G(s) on \(({\mathbb R}_+,\mathcal {B}({\mathbb R}_+))\). We assume that G(s) is continuous for \(s>0\) with right derivative \(G'(0)>0\). Similarly, we assume that the rate (direction) of disorder is a random vector \(\zeta \) with multivariate distribution function H(r) on \(({\mathbb R}^d,\mathcal {B}({\mathbb R}^d))\). Hence, to capture this additional randomness we have to introduce an extended filtered probability space \(({\overline{\Omega }},{\overline{\mathcal {F}}},\{\overline{\mathcal {F}_t}\}_{t\ge 0},{\overline{{\mathbb P}}}^{G,H})\) such that

Measure \({\overline{{\mathbb P}}}^{G,H}\) is defined for \(A\in \mathcal {F}, B\in \mathcal {B}({\mathbb R}_+)\) and \(C\in \mathcal {B}({\mathbb R}^d)\) as follows

On this extended space the random variables \(\theta \) and \(\zeta \) are defined by \(\theta (\omega ,s,r):=s\) and \(\zeta (\omega ,s,r):=r\) with \({\overline{{\mathbb P}}}^{G,H}(\theta \le s)=G(s)\) and \({\overline{{\mathbb P}}}^{G,H}(\zeta _1\le r_1,\ldots ,\zeta _d\le r_d)=H(r)\) for \(\zeta =(\zeta _1,\ldots ,\zeta _d)\) and \(r=(r_1,\ldots ,r_d)\). Observe that the measure \({\overline{{\mathbb P}}}^{G,H}\) describes formally the process X defined in (2).

In the problem of the quickest detection we are looking for an optimal stopping time \(\tau ^*\) that minimizes certain optimality criterion. We consider a classical criterion, which incorporates both the probability of false alarm and the mean delay time. Let \(\mathcal {T}\) denote the class of all stopping times with respect to the filtration \(\{\overline{\mathcal {F}_t}\}_{t\ge 0}\). Our problem can be stated as follows:

Problem 1

For a given \(c>0\) calculate the optimal value function

and find the optimal stopping time \(\tau ^*\) for which the above infimum is attained.

Above \(\overline{{\mathbb E}}^{G,H}\) means the expectation with respect to \(\overline{{\mathbb P}}^{G,H}\).

A key role in solving this problem is played by the posterior probability process \(\pi =(\pi _t)_{t\ge 0}\) defined as

We denote \(x:=\pi _0=G(0)\) and add a subscript x to \(\overline{{\mathbb E}}^{G,H}_x\) to emphasize it. Using this posterior probability, one can reformulate criterion (8) into the following, equivalent form:

Problem 2

For a given \(c>0\) find the optimal value function

and the optimal stopping time \(\tau ^*\) such that

That is, formally, the following result holds true.

Lemma 1

The criterion given in Problem 1 is equivalent to the criterion given in Problem 2.

Although the proof follows classical arguments, we include it in Sect. 6 for completeness.
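The classical argument behind Lemma 1 rests on two identities, sketched below for orientation. The sketch assumes that \(\pi _t\) is the conditional probability of \(\{\theta \le t\}\) given the observations up to time t, and that \(\tau \) is a stopping time of the observation filtration with finite expectation; it is an outline of the standard reasoning, not a substitute for the proof in Sect. 6.

```latex
\overline{\mathbb P}^{G,H}(\tau < \theta)
  = \overline{\mathbb E}^{G,H}\bigl[\mathbf 1_{\{\theta > \tau\}}\bigr]
  = \overline{\mathbb E}^{G,H}\bigl[1 - \pi_\tau\bigr],
\qquad
\overline{\mathbb E}^{G,H}\bigl[(\tau - \theta)^+\bigr]
  = \overline{\mathbb E}^{G,H}\Bigl[\int_0^{\tau} \mathbf 1_{\{\theta \le s\}}\, ds\Bigr]
  = \overline{\mathbb E}^{G,H}\Bigl[\int_0^{\tau} \pi_s \, ds\Bigr].
```

Adding the first identity to c times the second expresses the false alarm probability plus the weighted mean delay as the expectation of \(1-\pi _\tau + c\int _0^\tau \pi _s\,ds\), which involves the process \(\pi \) only.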

Below we formulate the main theorem that connects Problem 2 to a particular free-boundary problem. It is based on general optimal stopping theory in a similar way to Theorem 1 in Krawiec et al. [15], which it extends. However, for a general distribution G(s) of the moment \(\theta \) (continuous for \(s>0\) with right derivative \(G'(0)>0\)), the optimal stopping problem and its solution are time-dependent. The problem reduces to the time-independent case for the (0-modified) exponential distribution G. We will prove the theorem in Sect. 6.

Theorem 1

Let \(\left( \frac{\partial }{\partial t}+{\mathcal {A}}\right) \) be a Dynkin generator of the Markov process \((t,\pi _t)_{t\ge 0}\). Then the optimal value function \(V^*(x)\) from Problem 2 equals \(f_0(x)\), where \(f_t(x)\) solves the free-boundary problem

with the boundary conditions

Furthermore, the optimal stopping time for Problem 2 is given by

If G is the (0-modified) exponential distribution, then \(V^*(x)\) solves the above free-boundary problem for the unique point \(A^*~\in ~(0,1]\) not depending on time with the optimal stopping time given by

Further, in this case \(f_t(x)=f_0(x)=f(x)\) and additionally the following condition holds

See also Peskir and Shiryaev [22, Chap. VI. 22], Krylov [16, p. 41], Strulovici and Szydlowski [40, Thm. 4] and [1] for details.

It is known that the Dynkin generator is an extension of an infinitesimal generator in the sense of their domains. Following [22, Chap. III] and the discussion on page 131 of [22] (see also the proof of [13, Prop. 2.6]), we can conclude that the optimal value function \(V^*(t,x)\) satisfies (10), where \(\left( \frac{\partial }{\partial t} + {\mathcal {A}}\right) \) is an infinitesimal generator, as long as there exists a unique solution of (10) lying in the domain of the infinitesimal generator.

Now, to formulate the above free-boundary problem properly, we have to identify the infinitesimal generator \(\left( \frac{\partial }{\partial t} + {\mathcal {A}}\right) \) and its domain. They are given in the next theorem. We use the notation \(f_t(x) = f(t,x)\) for functions \(f: ([0,\infty ), [0,1]) \rightarrow {\mathbb {R}}\).

Theorem 2

The infinitesimal generator of the Markov process \((t,\pi _t)_{t\ge 0}\) is given by \(\frac{\partial }{\partial t}f_t(x) +{\mathcal {A}} f_t(x)\) for

and for functions \(f_t\in {\mathcal {C}}^2\). If G(s) is the (0-modified) exponential distribution, then \((\pi _t)_{t\ge 0}\) is a Markov process with generator \({\mathcal {A}}\) given as above with term \(-(1-x)(\log (1-G(t)))'\) substituted by \(G'(0)\) for functions \(f_t(x)=f(x)\) not depending on \(t\ge 0\).

We will prove this theorem later in Sect. 6.

Assume that we can find a unique solution of (10)-(12) in the class \({\mathcal {C}}^2\) with \({\mathcal {A}}\) given in (16). Then by the above considerations this solution equals the value function \(V^*(t,x)\). Therefore, in the final step we focus on the simple time-homogeneous case of an exponentially distributed change time: we solve (10)-(12) uniquely for some specific choices of model parameters and then find the optimal threshold \(A^*\) and hence the optimal alarm time. This allows us to construct a Generalized Shiryaev-Roberts statistic in this general set-up. Later we apply it to detect the changes of drift in the joint (correlated) mortality of men and women based on life tables.

3 Generalized Shiryaev-Roberts statistic

Following Zhitlukhin and Shiryaev [42] and Shiryaev [31, II.7] and using the generalized Bayes theorem, the following equality for process \(\pi \) defined in (9) is satisfied

We will give another representation of the process \(\pi \) in terms of the process \(L^r=(L_t^r)_{t\ge 0}\) defined by

To find the above Radon-Nikodym derivative such that the process X defined in (2) indeed admits representation (3) under the measure \({\mathbb P}^{(0,r)}\) and (4) under \({\mathbb P}^{\infty }\), we assume that for given \(r=(r_1,\ldots ,r_d)\in {\mathbb R}^d\) the following relation holds

where for \(x=(x_1,\ldots ,x_d)\) the function \(h_r(x): {\mathbb R}^d\rightarrow {\mathbb R}\) is given by

Above, the coefficients \(z_{r,1},\ldots ,z_{r,d}\) solve the following system of equations:

Theorem 3

Assume that (19) holds for given \(r\in {\mathbb R}^d\) and that the Radon-Nikodym derivative \(L^r=(L_t^r)_{t\ge 0}\) defined in (18) is given by

where

Then the process X defined in (2) admits representation (3) under the measure \({\mathbb P}^{(0,r)}\) and (4) under \({\mathbb P}^{\infty }\).

The proof will be given in Sect. 6.

Having identified the density process \(L^r\) defined in (18) in the above theorem, we introduce an auxiliary process

Then by (6), (17) and (18) the following representation of \(\pi _t\) holds true

where the last equality follows from the definition of \(L_t^{(s,r)}\) in (6) for \(t<s\). By Itô’s formula applied to (24) we obtain that \(\psi ^r_t\) solves the following SDE

The construction of the classical Shiryaev-Roberts statistic (SR) is described and analyzed in detail e.g. by Shiryaev [33], Pollak and Tartakovsky [25] and Moustakides et al. [19]. In this paper we consider the Generalized Shiryaev-Roberts statistic (GSR). We start the whole construction by taking the discrete-time data \(X_{t_i}\in {\mathbb {R}}^d\) observed at moments \(0=t_0<t_1<\ldots <t_n\), where n is a fixed integer. We assume that \(t_i-t_{i-1}=1\) for \(i=1,\ldots ,n\). Let \(x_k:=X_{t_k}-X_{t_{k-1}}\) for \(k=1,\ldots ,n\). Since X is a d-dimensional process, \(x_k\) is a d-dimensional vector \(x_k=(x_{k,1},\ldots ,x_{k,d})\).

Considering a discrete analogue of (24) we define the following statistic

where from equation (22) we take

for \(n>0\) and \(L_0^r=1\). Above \(G(0)=x\) corresponds to an atom at 0.

For convenience it can be also calculated recursively as follows:

Recall from Theorem 1 that the optimal stopping time is given by

for some optimal level \(A^*\). Therefore from identity (25) we can introduce the following Generalized Shiryaev-Roberts statistic

and raise the alarm of the drift change at the optimal time of the form

Note that formally, we first choose a direction r of the new drift according to the distribution H. Then we apply the statistic \({\widetilde{\psi }}_n^r\) to obtain the GSR statistic \({\widetilde{\pi }}_n= \frac{{\widetilde{\psi }}_n^r}{{\widetilde{\psi }}_n^r+1-G(n)}\) and hence raise the alarm at the optimal level \(A^*(n)\).
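The recursive construction described above can be illustrated with a minimal Python sketch. It is restricted to the jump-free two-dimensional Gaussian case with a (0-modified) exponential prior, and it is one possible discretisation rather than a transcription of the formulas of this section. Two assumptions are made: prior mass falling in \((n-1,n]\) is taken to affect the n-th increment, and the one-step likelihood ratio is the standard Girsanov factor \(\exp (z^Tx_n-\tfrac{1}{2}z^T\Sigma z)\) with \(\Sigma =\sigma \sigma ^T\) and \(\Sigma z=r\). The names `solve_2x2`, `prior_cdf`, `gsr_path` and `first_alarm`, and the parameters `x0` and `lam`, are hypothetical and introduced only for illustration.

```python
import math

def solve_2x2(a, b):
    """Solve the 2x2 linear system a z = b by Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [(b[0] * a[1][1] - a[0][1] * b[1]) / det,
            (a[0][0] * b[1] - a[1][0] * b[0]) / det]

def prior_cdf(t, x0, lam):
    """Assumed 0-modified exponential prior: atom x0 at zero, rate lam afterwards."""
    return 1.0 - (1.0 - x0) * math.exp(-lam * t)

def gsr_path(increments, cov, r, x0, lam):
    """Return the discretised posterior [pi_0, ..., pi_n] for unit-spaced data."""
    z = solve_2x2(cov, r)                      # z solves cov z = r
    k = 0.5 * (z[0] * r[0] + z[1] * r[1])      # (1/2) z^T cov z = (1/2) z^T r
    psi = x0                                   # psi_0 = G(0)
    path = [psi / (psi + 1.0 - prior_cdf(0, x0, lam))]
    for n, x in enumerate(increments, start=1):
        step = math.exp(z[0] * x[0] + z[1] * x[1] - k)   # one-step ratio L_n / L_{n-1}
        d_g = prior_cdf(n, x0, lam) - prior_cdf(n - 1, x0, lam)
        psi = (psi + d_g) * step               # recursive update of psi
        path.append(psi / (psi + 1.0 - prior_cdf(n, x0, lam)))
    return path

def first_alarm(path, threshold):
    """Index of the first time the statistic reaches the threshold, else None."""
    return next((n for n, p in enumerate(path) if p >= threshold), None)
```

A quick sanity check of this sketch is that for \(r=0\) the observations carry no information, so the statistic coincides with the prior G(n) at every step.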

We emphasize that the GSR statistic is more appropriate for the longevity modeling analyzed in this article than the standard one (i.e. SR). Indeed, the classical statistic is a particular case in which \(\theta \) has an exponential distribution with parameter \(\lambda \) tending to 0. The latter case corresponds to letting the mean of the change point \(\theta \) tend to \(\infty \), so that \(\theta \) becomes conditionally uniform; see e.g. Shiryaev [33]. Still, in longevity modeling it is more likely that life tables will need to be revised more often, and therefore keeping the dependence on \(\lambda >0\) in our statistic seems to be much more appropriate. For similar reasons we also prefer to fix the average moment of the drift change \(\theta \) instead of fixing the expected moment of the revision time \(\tau \).

To apply the above strategy we will focus on the exponential time of drift change. In this case we have to identify the optimal alarm level \(A^*\) in the first step and hence we have to solve the free-boundary value problem (10)–(12). We analyze two particular examples in the next section.

4 Examples

4.1 Two-dimensional Brownian motion

Consider the process X without jumps (i.e. with jump intensities \(\mu ^{\infty }=\mu ^r=0\)). In terms of processes \(X^{(0,r)}\) and \(X^{\infty }\) given in (3) and (4) it means that

Assume that

Then the first coordinate \(X^{(0,r)}_{t,1}\) is a Brownian motion with drift and with variance \(\sigma ^2_1\) and the second coordinate \(X^{(0,r)}_{t,2}\) is also a Brownian motion with drift and with variance \(\sigma ^2_2\). The correlation of the Brownian motions on both coordinates is equal to \(\rho \). Process \(X^{\infty }\) has similar characteristics but without any drift.
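To make this setting concrete, the following Python sketch builds one matrix \(\sigma \) that produces variances \(\sigma _1^2\), \(\sigma _2^2\) and correlation \(\rho \), and simulates unit-time increments of X with a drift switched on after a change time. The lower-triangular (Cholesky) form of \(\sigma \) is an assumption made here for illustration and need not coincide with the matrix used in the paper; the change time is restricted to an integer for simplicity, and the helper names `cholesky_2x2` and `simulate_increments` are hypothetical.

```python
import math
import random

def cholesky_2x2(s1, s2, rho):
    """Lower-triangular sigma with sigma sigma^T = [[s1^2, rho s1 s2], [rho s1 s2, s2^2]]."""
    return [[s1, 0.0],
            [rho * s2, s2 * math.sqrt(1.0 - rho * rho)]]

def simulate_increments(n, theta, r, sigma, rng):
    """Unit-time increments of X; the drift r is active for steps k > theta."""
    out = []
    for k in range(1, n + 1):
        g1, g2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)   # independent N(0, 1) draws
        drift = r if k > theta else (0.0, 0.0)
        out.append((drift[0] + sigma[0][0] * g1,
                    drift[1] + sigma[1][0] * g1 + sigma[1][1] * g2))
    return out
```

For example, `simulate_increments(30, 15, (0.5, 0.3), cholesky_2x2(1.0, 1.5, 0.6), random.Random(1))` returns 30 two-dimensional increments whose mean shifts from zero to (0.5, 0.3) after step 15.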

Next, assume that, conditioned on \(\theta >0\), \(\theta \) is exponentially distributed with parameter \(\lambda >0\), i.e.

and that there is only one possible post-change drift \(r_0\in {\mathbb {R}}^2\), that is

where \(\delta \) means a Dirac measure. Then the generator of process \(\pi \) according to (16) is equal to

where \(z_{r_{0},1}\) and \(z_{r_{0},2}\) solve the following system

Our goal is to solve the boundary value problem (10)–(12) where generator \({\mathcal {A}}\) is given by (28). Note that the system (10) takes now the following form

where

Observe that the above equations allow us to refer to the classical Shiryaev problem, with our constant B included. Hence, from Shiryaev [29, 35] it follows that the solution of the above equation is given by

where

for

The exact values of function y(x) can be found numerically, while the threshold \(A^*\) can be found from the equation \(y(A^*)=-1\), which is the boundary condition (12).
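The numerical step mentioned above can be sketched in Python as follows. The sketch assumes that \(y=f'\) satisfies the classical Shiryaev first-order equation \(\lambda (1-x)\,y+\gamma \,x^2(1-x)^2\,y'=-cx\) with \(y(0+)=0\), where `gamma` is a hypothetical name for the coefficient of the second-order term of the generator (it plays the role of the constant B up to the normalisation used in the paper). Under this assumption y has an explicit integral form, which is evaluated by the midpoint rule, and \(A^*\) is located by bisection. The function names and the grid size are illustrative choices.

```python
import math

def alpha(u):
    """Antiderivative of 1 / (u^2 (1 - u)) on (0, 1)."""
    return math.log(u / (1.0 - u)) - 1.0 / u

def y(x, c, lam, gamma, n=4000):
    """Solution of the assumed first-order ODE at x, via the midpoint rule on (0, x)."""
    big_lambda = lam / gamma
    a_x = alpha(x)
    h = x / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h
        # alpha is increasing, so the exponent is non-positive and cannot overflow
        total += math.exp(big_lambda * (alpha(u) - a_x)) / (u * (1.0 - u) ** 2)
    return -(c / gamma) * total * h

def threshold(c, lam, gamma, tol=1e-10):
    """Bisection for the root of y(A) = -1 on (0, 1)."""
    lo, hi = 1e-6, 1.0 - 1e-6
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if y(mid, c, lam, gamma) > -1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

In this sketch a larger delay cost c yields a smaller threshold, in line with the interpretation that costly delays favour earlier alarms.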

4.2 Two-dimensional Brownian motion with one-sided jumps

The second example concerns similar 2-dimensional Brownian motion model as in the previous example, but with additional exponential jumps. Assume that \(\mu ^{\infty },\mu ^r>0\) and

In other words, jump sizes on each coordinate \(j\in \{1,2\}\) of process \(X^{\infty }\) are independent of each other and distributed exponentially with mean \(w_j>0\). Additionally, we assume as in the previous example that

and that there is only one possible post-change drift \(r_0\in {\mathbb {R}}^2\), that is

Jump distribution given by (29) together with Theorem 3 allows us to formulate the following lemma.

Lemma 2

Assume that the jump distribution \(F^{\infty }\) of the process \(X^{\infty }\) is given by (29) and the jump intensity is equal to \(\mu ^{\infty }\). Assume also that there exists a vector \(z_{r_0}=(z_{r_0,1},\ldots ,z_{r_0,d})\) satisfying the system (21) such that \((\forall _{1\le j \le 2})(|w_jz_{r_0,j}|<1)\). Then the following distribution function \(F^{r_0}\) and intensity \(\mu ^{r_0}\) satisfy the condition (19):

Proof

By the combination of (19) and (20) we obtain that

which can be rearranged to

Now it is sufficient to observe that the above formula is equal to the product \(\mu ^{r_0} F^{r_0}\) given by (30) and that \(F^{r_0}\) is indeed a proper distribution by the assumption that \((\forall _{1\le j \le 2})\) \((|w_jz_{r_0,j}|<1)\). \(\square \)

Remark 1

Considering the jump distributions \(F^{\infty }\) and \(F^{r_0}\) given by (29) and (30), the system (21) consists of equations

where

and

Remark 2

Distribution \(F^{r_0}\) given by (30) has similar characteristics to \(F^{\infty }\). More precisely: jumps on both coordinates \(X^{(0,r_0)}_{t,1}\) and \(X^{(0,r_0)}_{t,2}\) are independent, exponentially distributed with means \(\frac{w_1}{1-w_1z_{r_0,1}}\) and \(\frac{w_2}{1-w_2z_{r_0,2}}\), respectively.
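The means stated in Remark 2 can be checked by a one-line computation for a single coordinate. The block below is a sketch that assumes the post-change jump measure is obtained from the pre-change one by an exponential tilt \(e^{zu}\) in each coordinate, with \(w>0\) the pre-change mean and \(wz<1\); the symbols w, z and u are generic stand-ins for \(w_j\), \(z_{r_0,j}\) and the jump size.

```latex
\int_0^\infty e^{z u}\,\frac{1}{w}\,e^{-u/w}\,du \;=\; \frac{1}{1 - w z},
\qquad
\frac{e^{z u}\,\frac{1}{w}\,e^{-u/w}}{\frac{1}{1 - w z}}
  \;=\; \frac{1 - w z}{w}\,\exp\!\Bigl(-\frac{1 - w z}{w}\,u\Bigr), \quad u \ge 0 .
```

The normalised density is again exponential, now with mean \(\frac{w}{1-wz}\), while the normalising factor \(\frac{1}{1-wz}\) is absorbed into the jump intensity.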

The generator \({\mathcal {A}}\) given by (16) for jump distributions specified above can be expressed as

The integral part of \({\mathcal {A}}\) can be further simplified. For \(\alpha _1,\alpha _2>0\) we define the following integrals

and

Lemma 3

Assume that \(\alpha _1, \alpha _2, z_{r,1}, z_{r,2} > 0\) and \(\frac{\alpha _1}{z_{r,1}}\ne \frac{\alpha _2}{z_{r,2}}\). Then for \(x\in (0,1]\),

for \(\beta _1=\frac{\alpha _1}{z_{r,1}}\) and \(\beta _2=\frac{\alpha _2}{z_{r,2}}\) and

Using arguments similar to those in Krawiec et al. [15], one can show that there exists a unique solution of the system of equations (10)-(12); hence the whole estimation procedure can be applied.

Remark 3

In Lemma 3 we restrict calculations to the case \(\beta _1\ne \beta _2\), but similar transformations of the integral may be made for the case \(\beta _1=\beta _2\) as well. The difference will appear in the distribution of random variable \(S^r\) present in the proof (see Sect. 6) being the sum of two exponential random variables.

Denote \(\gamma _i:=\frac{1}{z_{r_0,i}w_i}\) for \(i\in \{1,2\}\). Then from Lemma 3 the generator \({\mathcal {A}}\) given in (31) can be rewritten as follows

The equation \({\mathcal {A}}f(x)=-cx\) in the free-boundary value problem can be further simplified to get rid of the integrals and then solved numerically to find the threshold \(A^*\). We believe that this particular case can be solved numerically in a similar way to the numerical analysis described in Krawiec et al. [15], since here we obtain an equation of the same order and with similar characteristics. However, in this article we focus our applications on the previous example, which is used in practice in the next section.

Remark 4

The results of the above example are derived under the assumption of positive exponential jumps. However, the whole analysis can also be conducted for negative exponential jumps, i.e. for the distribution

Then we can use part of Lemma 3 concerning \(I_-^r(x)\) to derive the generator \({\mathcal {A}}\) given by

5 Application to the force of mortality

We now give an important application, which concerns modeling of the force of mortality process. We analyze the joint force of mortality of men and women. We observe this process over the past decades and check if and when there have been significant changes of drift.

To achieve this goal, we introduce a two-dimensional process of the force of mortality \(\mu :=(\mu _t)_{t\ge 0}=((\mu _t^1,\mu _t^2))_{t\ge 0}\). We interpret this process as follows:

- the first coordinate \(\mu _t^1\) represents the force of mortality of men, while the second one \(\mu _t^2\) represents the force of mortality of women (of course they are correlated),

- the time t runs through consecutive years of life tables, e.g. if \(t=0\) corresponds to the year 1990, then \(t=10\) corresponds to the year 2000,

- the age of people is fixed for a given process \(\mu \), i.e. if \(\mu _0\) concerns 50-year-old men and women, then \(\mu _{10}\) also concerns 50-year-old men and women, but in another year.

The representation of the force of mortality process is given by

where \(\log {\bar{\mu }}_t:=(\log {\bar{\mu }}_t^1, \log {\bar{\mu }}_t^2)\) is a deterministic part equal to

Above, \(a_0=(a_0^1, a_0^2)\) is a known initial force of mortality vector of men and women and \(a_1=(a_1^1, a_1^2)\) is the vector of historical drift per year. It is worth mentioning here that our model is similar to the Lee-Carter model (for fixed age \(\omega \), cf. [17]):

where \(a_{\omega }\) is a chosen number, \(k_t\) is a certain univariate time series and \(\epsilon _{\omega ,t}\) is a random error. However, the Lee-Carter method focuses on modeling the deterministic part of the force of mortality, while our detection procedure concerns monitoring the random perturbation over time, and more precisely detecting the moment when it changes substantially. Moreover, the Lee-Carter model is univariate, in contrast to our two-dimensional mortality process.

In our numerical analysis the stochastic part \(X_t\) will be modeled by the two-dimensional Brownian motion analyzed in Example 4.1. We apply this model to the life tables downloaded from the Statistics Poland website [39]. We would like to emphasize that in this article we focus our applications on multivariate modeling of both men and women jointly. For this reason we simplify other assumptions here, such as the distribution of \(\theta \), the random post-change drift or the presence of jumps in the model. However, as we have mentioned in Example 4.2, adding jumps to the model is also possible, but results in equations that are much more difficult to solve. To apply the model from Example 4.2 one needs to solve the equation with the generator given by (32). This equation has a form similar to the one that was solved in Krawiec et al. [15] for the univariate case. There it was solved numerically after a thorough analysis. A similar method should be applicable here for the model introduced in Example 4.2.

The first step concerns the model calibration. We start with some historical values of the force of mortality \({\hat{\mu }}_0,\ldots ,{\hat{\mu }}_n\), where each \({\hat{\mu }}_i=({\hat{\mu }}_{i,1},{\hat{\mu }}_{i,2})\) is a two-dimensional vector (one coordinate for men and one for women). We estimate \(a_1\) as the mean value of the log-increments of \({\hat{\mu }}_0,\ldots ,{\hat{\mu }}_n\). Precisely,

where

A little more attention is needed to calibrate the stochastic part X, which includes correlation. Denote

and the increments

We estimate \(\sigma _1\) as the standard deviation of the vector \((x_{1,1},x_{2,1},\ldots ,x_{n,1})\). Similarly, \(\sigma _2\) is calculated as the standard deviation of the vector \((x_{1,2},x_{2,2},\ldots ,x_{n,2})\). Finally, we calculate \(\rho \) as the sample Pearson correlation coefficient of the vectors \((x_{1,1},x_{2,1},\ldots ,x_{n,1})\) and \((x_{1,2},x_{2,2},\ldots ,x_{n,2})\).
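The calibration steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: the function name `calibrate` is hypothetical, observations are taken to be yearly (time step 1), the \(x_{i,j}\) are taken to be yearly increments of the detrended log-values, and the standard deviation uses the \(n-1\) normalization, which the text does not specify.

```python
import math
from statistics import mean, stdev


def calibrate(mu_hat):
    """Estimate (a1, sigma, rho) from yearly two-dimensional observations.

    mu_hat: list of (mu_1, mu_2) pairs of strictly positive forces of mortality.
    """
    if len(mu_hat) < 3:
        raise ValueError("need at least three observations")
    logs = [(math.log(m1), math.log(m2)) for m1, m2 in mu_hat]
    # Yearly log-increments, one list per coordinate.
    inc = [[b[j] - a[j] for a, b in zip(logs, logs[1:])] for j in range(2)]
    # Historical drift per year: mean log-increment.
    a1 = (mean(inc[0]), mean(inc[1]))
    # Removing a constant drift does not change the spread of the increments,
    # so sigma is computed from the same lists (assumed n - 1 normalization).
    sigma = (stdev(inc[0]), stdev(inc[1]))
    # Sample Pearson correlation of the centred increments.
    c0 = [v - a1[0] for v in inc[0]]
    c1 = [v - a1[1] for v in inc[1]]
    cov = sum(u * v for u, v in zip(c0, c1))
    rho = cov / math.sqrt(sum(u * u for u in c0) * sum(v * v for v in c1))
    return a1, sigma, rho
```

The function expects the observations from the calibration window only (for instance the years 1990–2000 used later in this section), with men in the first coordinate and women in the second.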

There are still some model parameters that have to be chosen a priori. In particular, we have to specify the anticipated post-change drift \(r_0\), the probability \(x=\pi _0={\overline{{\mathbb P}}}^{G,H}(\theta =0)\) that the drift change occurs immediately, the parameter \(\lambda >0\) of the exponential distribution of \(\theta \) and the parameter c present in the criterion stated in Problem 2.

We set their values as follows:

- \(\lambda =0.1\). This is the reciprocal of the mean of the distribution of \(\theta \) conditioned on \(\theta \) being strictly positive. This choice reflects the expectation that the drift will change in 10 years on average.

- \(x (={\overline{{\mathbb P}}}^{G,H}(\theta =0))=0.1\). This parameter should be rather small (unless we expect the drift to change very soon).

- \(c=0.1\). This is the weight of the mean delay time in the optimality criterion stated in Problem 1. It reflects how large a delay we can accept compared with the risk of a false alarm. We have chosen a rather small value and tied it to \(\lambda \) by setting \(c=\lambda \).

- Post-change drift after the moment \(\theta \): we have tied the anticipated value of \(r_0\) to \(\sigma \) by setting \(r_0=(\sigma _1,\sigma _2)\). In practice we suggest adjusting the choice of \(r_0\) based on a sensitivity analysis of, e.g., the price of an insurance contract.

In Table 1 we summarize all parameters that were used in the numerical analysis (both calibrated and arbitrarily chosen ones). The calibration interval was set to the years 1990–2000.

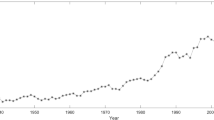

In Fig. 1 we present an example plot of the force of mortality for women at age 60 over the years 1990–2017. Most of the time it is decreasing, but we can observe a stabilization period around the years 2002–2009. According to (33), we first take the logarithm of the force of mortality, separate the deterministic linear part and then model the remaining part by the process X given by (27). Figure 2 presents historical observations of this remaining part for the same data as in Fig. 1.
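The detrending step described above can be illustrated as follows. This is only a sketch: the function name `residual_process` is hypothetical, the deterministic part is assumed to start at the first observed value (so that the residual is zero at \(t=0\)), and observations are assumed to be yearly.

```python
import math


def residual_process(mu_hat, a1):
    """Return X_t = log(mu_t) - log(mu_0) - a1 * t for each coordinate.

    mu_hat: list of (mu_1, mu_2) pairs, one per year, strictly positive.
    a1:     pair of historical yearly drifts of the log force of mortality.
    """
    log0 = [math.log(v) for v in mu_hat[0]]
    return [
        tuple(math.log(v) - log0[j] - a1[j] * t for j, v in enumerate(obs))
        for t, obs in enumerate(mu_hat)
    ]
```

If the data follow the calibrated linear trend exactly, every residual is zero; a persistent departure from zero is what the detection procedure is meant to pick up.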

The results of the detection algorithm for the force of mortality of 60-year-old men and women jointly are presented in Fig. 3. The change of drift for the given parameters was detected in the year 2006 (red vertical line in the first two plots). The threshold \(A^*\) for the optimal stopping time is here equal to 0.85, which is indicated by the red horizontal line in the third plot presenting the values of \(\pi =(\pi _t)_{t\in \{1990,\ldots ,2017\}}\).

Note that the calibration of parameters (including the historical drift) was done for the interval 1990–2000, when the force of mortality was mostly decreasing. After the year 2002 it stayed at a stable level for several years, which was detected as a change of drift. This change of behavior is even more evident in Fig. 2, where we can observe that the process X is mostly increasing over the years 2002–2009. This example shows that our detection method does not necessarily raise the alarm after the first observed deviation, but rather once it becomes more evident that the change of drift has actually happened. Therefore, it copes well with cases of gradually changing drift, as long as the observed process eventually deviates significantly from the model.
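The behavior of the posterior path and the alarm rule discussed above can be imitated on yearly data with a discrete-time Bayesian recursion. The sketch below is not the paper's continuous-time formula: it assumes Gaussian increments with covariance built from \(\sigma _1,\sigma _2,\rho \), a fixed post-change drift \(r_0\), and it approximates the exponential prior by a geometric one with per-step probability \(1-e^{-\lambda \,\Delta t}\). The function name `detect` is hypothetical, and the default threshold 0.85 is simply the value of \(A^*\) reported above for the parameters of this example.

```python
import math


def detect(increments, r0, sigma, rho, lam=0.1, pi0=0.1, threshold=0.85, dt=1.0):
    """Return (alarm_index, path), where path[k] is the posterior after k steps.

    increments: list of (dx1, dx2) increments of the residual process X.
    alarm_index is the first k with path[k] >= threshold, or None.
    """
    s1, s2 = sigma
    det = (s1 * s2) ** 2 * (1.0 - rho ** 2)
    # w = Sigma^{-1} r0 for Sigma = [[s1^2, rho s1 s2], [rho s1 s2, s2^2]].
    w1 = (s2 ** 2 * r0[0] - rho * s1 * s2 * r0[1]) / det
    w2 = (s1 ** 2 * r0[1] - rho * s1 * s2 * r0[0]) / det
    quad = w1 * r0[0] + w2 * r0[1]      # r0^T Sigma^{-1} r0
    p = 1.0 - math.exp(-lam * dt)       # chance that the change falls in one step
    pi, path, alarm = pi0, [pi0], None
    for k, (dx1, dx2) in enumerate(increments, start=1):
        # Likelihood ratio of one increment: N(r0 dt, Sigma dt) vs N(0, Sigma dt).
        lr = math.exp(w1 * dx1 + w2 * dx2 - 0.5 * quad * dt)
        prior = pi + (1.0 - pi) * p     # probability the change has occurred by now
        pi = prior * lr / (prior * lr + (1.0 - prior))
        path.append(pi)
        if alarm is None and pi >= threshold:
            alarm = k
    return alarm, path
```

In this sketch a single increment in the direction of \(r_0\) raises the posterior only moderately, while a run of such increments pushes it over the threshold, which matches the qualitative behavior described above. For other parameter sets the threshold would have to be obtained from the free-boundary problem rather than reused.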

An important note is needed at the end. This procedure depends strongly on the parameters chosen for the model, e.g. the post-change drift vector \(r_0\), which was chosen depending on \(\sigma _1\) and \(\sigma _2\) to give the appropriate order of magnitude. Furthermore, the theoretical results contained in this article allow us to consider a random drift. One good example may be a distribution of drift concentrated on several points around \(\sigma _1\) and \(\sigma _2\) at each coordinate. It gives much more freedom, since it is not clear whether a fixed magnitude of drift change equal to the historical standard deviation is enough.

Similarly, one may assume a more general distribution of \(\theta \) or, assuming a (0-modified) exponential distribution, connect \(\lambda \) to the historically observed mean time between significant drift changes. Of course, this may be impossible if none or very few changes were observed in the past. A thorough analysis of such cases is beyond the scope of this article. However, as far as applications are concerned, the goal should be to choose parameters that allow the drift change to be detected before it is too late. So if there is not enough historical data, one may arbitrarily choose a rather small mean of the prior distribution (e.g. 10 years as in our example), which should result in quicker detection. Then in the worst-case scenario one will recalibrate the model too soon, obtaining parameters similar to those before the recalibration.

A full analysis of the impact of individual parameters on the results should be the subject of further research, developing the applications of the detection method described in our paper.

6 Proofs

Proof of Lemma 1

Note that

Moreover, observe that by Tonelli’s theorem we have:

Putting together (36) and (37) completes the proof. \(\square \)

Proof of Theorem 1

We start from the observation that the process \(((s,\pi _s))_{s\ge 0}\) is Markov, which follows from Theorem 2. Let

Then \(V^*(x)=V^*(0,x)\). Moreover, for fixed \(t\ge 0\) the optimal value function \(V^*(t,x)\) is concave, which follows from [15, Lem. 3] and the assumption that the distribution function \(G(t)\) of \(\theta \) is continuous for \(t>0\). Observe that from Theorem 2 it follows that \(((s,\pi _s))_{s\ge 0}\) is stochastically continuous and thus the function \((t, x)\rightarrow \overline{{\mathbb E}}^{G,H}\left\{ \left[ 1-\pi _{\tau }+c\int _0^{\tau }\pi _s\textrm{d}s\right] \bigg |\pi _t=x\right\} \) is continuous for any fixed stopping time \(\tau \). Thus from [22, Rem. 2.10, p. 48] we know that the value function \(V^*(t,x)\) is lsc. Let

be the open continuation set and \(D = C^c\) be the stopping set. From [22, Cor. 2.9, p. 46] we know that \(C=[0,A^*(t))\) and that the stopping rule given by

is optimal for Problem 2. Moreover, we have

and by [22, Chap. III] the optimal value function \(V^*(t,x)\) satisfies the following system

where \({\mathcal {A}}\) is a Dynkin generator. Using the same arguments as in the proofs of [15, Lem. 6 and Lem. 7] we can prove the boundary conditions \(f_t(A^{*}(t)-)=1-A^*(t)\) and \(f^\prime _t(A^{*}(t)-)=-1\).

Finally, if G is exponential, then \((\pi _s)_{s\ge 0}\) is Markov by Theorem 2. We can then repeat the same arguments, simply taking \(V^*(x)\) instead of \(V^*(t,x)\) above. Moreover, (15) follows from the same arguments as in the proof of [15, Lem. 6]. This completes the proof. \(\square \)

Proof of Theorem 2

First, we find the SDE satisfied by the process \(\pi _t^r\). By the definition of the process X, for each \(i=1,\ldots ,d\) we get

Denote the continuous part of the process by an additional upper index c. Then

For the process \(L^r\) given in (22), by Itô’s formula we obtain

where

By (26) we conclude that

Recall that by (25) we have

Then, using Itô’s formula once again we obtain

Moreover,

Together with the system of equations (21) it produces

The jump part of \(\pi _t\) is equal to \(\int _{{\mathbb R}^d}\Delta \pi _t^r\textrm{d}H(r)\), where

Using Itô’s formula one more time completes the proof. \(\square \)

Proof of Theorem 3

The proof is based on the technique of exponential change of measure described in Palmowski and Rolski [21].

First, we will prove that the process \((L_t^r)_{t\ge 0}\) satisfies the following representation

for the function \(h(x):=h_r(x)\) given in (20), where \({\mathcal {A}}^{\infty }\) is an extended generator of the process X under \({\mathbb P}^{\infty }\) and h is in its domain since it is twice continuously differentiable. Then from Theorem 4.2 of Palmowski and Rolski [21] it follows that the generator of X under \({\mathbb P}^{(0,r)}\) is related to \({\mathcal {A}}^{\infty }\) by

On the other hand, from the definition of the infinitesimal generator, or using Theorem 31.5 in Sato [28], it follows that for a twice continuously differentiable function \(f(x_1,\ldots ,x_d):{\mathbb R}^d\rightarrow {\mathbb R}\) the generators \({\mathcal {A}}^{\infty }\) and \({\mathcal {A}}^r\) are given by

For \(h_r(x)\) given by (20) we obtain

Further, since

and

then

Hence, we obtain

and thus \(L_t^r\) given in (22) indeed satisfies the representation (40) for function \(h_r(x)\) given by (20).

To finish the proof it is sufficient to show that the generator \({\mathcal {A}}^r\) given by (43) indeed coincides with the generator given in (41) for \(h(x)=h_r(x)\). First, by (23) we get

Second, (42) produces

Hence

Finally, using the system of equations (21) completes the proof. \(\square \)

Proof of Lemma 3

First observe that \(I_+^r(x)\) is equal to the expectation

where \(T_1\) and \(T_2\) are two independent random variables with exponential distributions \(\textrm{Exp}(\alpha _1)\) and \(\textrm{Exp}(\alpha _2)\), respectively. Then \(z_{r,1}T_1\sim \textrm{Exp}\left( \frac{\alpha _1}{z_{r,1}}\right) \), \(z_{r,2}T_2\sim \textrm{Exp}\left( \frac{\alpha _2}{z_{r,2}}\right) \) and the density of \(S^r:=\sum _{i=1}^2z_{r,i}T_i\) is given by

Hence, the expectation (44) equals

Next, we can integrate the above integral by parts to obtain

and by substitution \(v:=\frac{xe^y}{x(e^y-1)+1}\) (hence \(y=\ln \left( \frac{v(1-x)}{x(1-v)}\right) \)) we derive

which completes the first part of the proof.
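The substitution used above can be checked directly. The following short derivation is a sketch that assumes \(x\in (0,1)\) and that the integral in y runs over \((0,\infty )\), as for the density of \(S^r\):

```latex
v=\frac{xe^{y}}{x(e^{y}-1)+1}
\;\Longleftrightarrow\;
v(1-x)=xe^{y}(1-v)
\;\Longleftrightarrow\;
e^{y}=\frac{v(1-x)}{x(1-v)},
\qquad
\mathrm{d}y=\frac{\mathrm{d}v}{v(1-v)},
\qquad
y=0\mapsto v=x,\quad y\to\infty\mapsto v\to 1 .
```

Equivalently, \(\frac{v}{1-v}=e^{y}\frac{x}{1-x}\), so the new variable v ranges over \([x,1)\).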

The formula for \(I_-^r(x)\) can be derived by substitution \(u:=-y\) to get

which, by the same arguments as for \(I_+^r(x)\), is equal to

Integration by parts together with substitution of \(v:=\frac{xe^{-y}}{x(e^{-y}-1)+1}\) (hence \(y=-\ln \left( \frac{v(1-x)}{x(1-v)}\right) \)) gives

which completes the second part of the proof. \(\square \)

References

De Angelis T, Peskir G (2020) Global \(C^1\) regularity of the value function in optimal stopping problems. Ann Appl Probab 30(3):1007–1031

Beibel M (1994) Bayes problems in change-point models for the Wiener process. In: Lecture notes-monograph series, pp 1–6

Beibel M (1996) A note on Ritov’s Bayes approach to the minimax property of the cusum procedure. Ann Stat 24(4):1804–1812

Bayraktar E, Dayanik S, Karatzas I (2005) The standard Poisson disorder problem revisited. Stoch Process Appl 115(9):1437–1450

Buonaguidi B (2020) The disorder problem for purely jump Lévy processes with completely monotone jumps. J Stat Plann Inference 205:203–218

Coquet F, Toldo S (2007) Convergence of values in optimal stopping and convergence of optimal stopping times. Electron J Probab 12:207–228

Dayanik S, Sezer SO (2006) Compound Poisson disorder problem. Math Oper Res 31(4):649–672

Dayanik S, Goulding C, Poor HV (2008) Bayesian sequential change diagnosis. Math Oper Res 33(2)

Dolinsky Y (2010) Applications of weak convergence for hedging of game options. Ann Appl Probab 20:1891–1906

Figueroa-López JE, Ólafsson S (2019) Change-point detection for Lévy processes. Ann Appl Probab 29(2):717–738

Gal’chuk LI, Rozovskii B (1971) The “disorder” problem for a Poisson process. Theory Probab Appl 16(4):712–716

Gapeev PV (2005) The disorder problem for compound Poisson processes with exponential jumps. Ann Appl Probab 15(1A):487–499

Jacka SD (1991) Optimal stopping and the American put. Math Finance 1(2):1–14

El Karoui N, Loisel S, Salhi Y (2017) Minimax optimality in robust detection of a disorder time in Poisson rate. Ann Appl Probab 27(4):2515–2538

Krawiec M, Palmowski Z, Płociniczak L (2018) Quickest drift change detection in Lévy-type force of mortality model. Appl Math Comput 338:432–450

Krylov N (1980) Controlled diffusion processes. Springer, Berlin

Lee RD, Carter LR (1992) Modeling and forecasting US mortality. J Am Stat Assoc 87(419):659–671

Moustakides GV (2004) Optimality of the CUSUM procedure in continuous time. Ann Stat 302–315

Moustakides GV, Polunchenko AS, Tartakovsky AG (2009) Numerical comparison of CUSUM and Shiryaev-Roberts procedures for detecting changes in distributions. Commun Stat Theory Methods 38(16–17):3225–3239

Page ES (1954) Continuous inspection schemes. Biometrika 41(1–2):100–115

Palmowski Z, Rolski T (2002) A technique for exponential change of measure for Markov processes. Bernoulli 8(6):767–785

Peskir G, Shiryaev AN (2006) Optimal stopping and free-boundary problems. Springer, Berlin

Peskir G, Shiryaev AN (2002) Solving the Poisson disorder problem. In: Advances in finance and stochastics. Springer, Berlin, pp 295–312

Polunchenko AS, Tartakovsky AG (2012) State-of-the-art in sequential change-point detection. Methodol Comput Appl Probab 14(3):649–684

Pollak M, Tartakovsky AG (2009) Optimality properties of the Shiryaev–Roberts procedure. Statistica Sinica 1729–1739

Poor HV, Hadjiliadis O (2009) Quickest detection, vol 40. Cambridge University Press, Cambridge

Roberts S (1966) A comparison of some control chart procedures. Technometrics 8(3):411–430

Sato K (1999) Lévy processes and infinitely divisible distributions. In: Cambridge studies in advanced mathematics, vol 68. Cambridge University Press, Cambridge

Shiryaev AN (1961) The problem of the most rapid detection of a disturbance in a stationary process. Sov Math Dokl 2:795–799

Shiryaev AN (1963) On optimum methods in quickest detection problems. Theory Probab Appl 8(1):22–46

Shiryaev AN (1996) Probability, volume 95 of graduate texts in mathematics

Shiryaev AN (1996) Minimax optimality of the method of cumulative sums (cusum) in the case of continuous time. Russ Math Surv 51(4):750

Shiryaev AN (2002) Quickest detection problems in the technical analysis of the financial data. In: Mathematical finance—Bachelier congress 2000. Springer, Berlin, pp 487–521

Shiryaev AN (2004) A remark on the quickest detection problems. Stat Decis 22(1/2004):79–82

Shiryaev AN (2006) From “disorder” to nonlinear filtering and martingale theory. In: Mathematical events of the twentieth century. Springer, Berlin, pp 371–397

Shiryaev AN (2007) Optimal stopping rules, vol 8. Springer, New York

Shiryaev AN (2010) Quickest detection problems: fifty years later. Seq Anal 29(4):345–385

Shkolnikov V, Barbieri M, Wilmoth J. The Human Mortality Database. http://www.mortality.org/

Statistics Poland. Life expectancy in Poland. http://www.stat.gov.pl/en/topics/population/life-expectancy/life-expectancy-in-poland,1,3.html

Strulovici B, Szydlowski M (2015) On the smoothness of value functions and the existence of optimal strategies in diffusion models. J Econ Theory 159:1016–1055

Szimayer A, Maller RA (2007) Finite approximation schemes for Lévy processes, and their application to optimal stopping problems. Stoch Process Appl 117:1422–1447

Zhitlukhin M, Shiryaev AN (2013) Bayesian disorder problems on filtered probability spaces. Theory Probab Appl 57(3):497–511

Acknowledgements

This work is partially supported by National Science Centre, Poland, under grants No. 2018/29/B/ST1/00756 (2019–2022) and 2016/23/N/HS4/02106 (2017–2020).


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Krawiec, M., Palmowski, Z. Multivariate Lévy-type drift change detection and mortality modeling. Eur. Actuar. J. 14, 175–203 (2024). https://doi.org/10.1007/s13385-023-00350-8
