Abstract

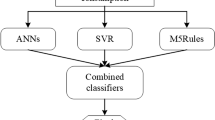

Energy is a precious resource that must be used mindfully, so that efficiency improves and wastage is curbed. Globally, multi-storeyed buildings are among the largest energy consumers. A large share of a building's energy is consumed in maintaining the desired indoor temperature for occupants' comfort, which requires meeting the building's heating load and cooling load. Minimizing these loads reduces energy consumption and optimizes energy usage. Several building characteristics strongly affect the heating load and cooling load requirements. This paper presents a systematic approach for analysing the building factors that play a vital role in energy consumption, followed by traditional machine learning and modern ensemble learning approaches for predicting energy consumption in residential buildings. The results reveal that the ensemble techniques outperform the traditional machine learning techniques by an appreciable margin, most markedly for heating load. The accuracies for predicting heating load and cooling load, respectively, were 88.59% and 85.26% with multiple linear regression, 82.38% and 89.32% with support vector regression, and 91.91% and 94.47% with K-nearest neighbours. The ensemble techniques achieved higher accuracies: 99.74% and 94.79% with random forests, 99.73% and 96.22% with gradient boosting machines, and 99.75% and 95.94% with extreme gradient boosting.
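Multiple linear regression, the simplest baseline in this comparison, fits a prediction equation by ordinary least squares. The following is a minimal sketch of that fit in plain Python, assuming hypothetical toy data rather than the paper's dataset; the function names `fit_mlr` and `predict_mlr` are illustrative and not taken from the paper. It solves the normal equations directly with Gauss-Jordan elimination.

```python
def fit_mlr(X, y):
    """Ordinary least squares: return [b0, b1, ..., bp] for y ~ b0 + sum(bi * xi)."""
    # Prepend a column of ones so that b0 acts as the intercept.
    A = [[1.0] + [float(v) for v in row] for row in X]
    p = len(A[0])
    # Normal equations: (A^T A) b = A^T y.
    ata = [[sum(r[i] * r[j] for r in A) for j in range(p)] for i in range(p)]
    aty = [sum(r[i] * t for r, t in zip(A, y)) for i in range(p)]
    # Augmented matrix, solved by Gauss-Jordan elimination with partial pivoting.
    M = [ata[i] + [aty[i]] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(p):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    # The coefficient block is now diagonal; divide out the pivots.
    return [M[i][p] / M[i][i] for i in range(p)]


def predict_mlr(coef, x):
    """Evaluate the fitted linear equation for one record x."""
    return coef[0] + sum(b * v for b, v in zip(coef[1:], x))


# Hypothetical noise-free data generated from y = 2 + 3*x1 - x2.
X = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)]
y = [3, 7, 7, 11, 12]
coef = fit_mlr(X, y)
print([round(c, 6) for c in coef])  # → [2.0, 3.0, -1.0]
```

On this noise-free data the fit recovers the generating coefficients. For real work, `numpy.linalg.lstsq` or scikit-learn's `LinearRegression` solve the same least-squares problem more robustly than explicitly forming the normal equations.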

Data Availability

Yes, data are available.

Code Availability

Yes, code is available.

Author information

Contributions

Mrinal Pandey and Monika Goyal conducted the research and analysed the data. Monika Goyal performed the literature survey and experiments. Mrinal Pandey performed the statistical analysis. Mrinal Pandey and Monika Goyal wrote the article.

Appendix

1.1 Sample Calculations for Model Evaluation

The sample calculations using the formulae in Eqs. 9–13 are described here. Table 6 contains the observed values of the response variables Y1 and Y2 from the dataset and the values predicted by the KNN algorithm. The model-evaluation calculations based on Table 6 were performed manually on samples of 20 and 100 records, taken as records 1–20 and 1–100, respectively.
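In its basic form, a KNN prediction of a response such as Y1 is the average response of the k training records closest to the query record. The sketch below illustrates that rule in plain Python under stated assumptions: Euclidean distance, an unweighted mean, and k = 3 as an illustrative default (the value of k and any distance weighting used in the paper are not given in this section). The function `knn_predict` and the toy data are hypothetical.

```python
import math


def knn_predict(train_X, train_y, query, k=3):
    """Predict a numeric response as the mean target of the k nearest
    training records (plain, unweighted KNN regression)."""
    # Pair each training target with its Euclidean distance to the query.
    ranked = sorted(
        (math.dist(x, query), y) for x, y in zip(train_X, train_y)
    )
    # Average the targets of the k closest records.
    nearest = ranked[:k]
    return sum(y for _, y in nearest) / len(nearest)


# Hypothetical toy data: two clusters of records with different loads.
train_X = [(0, 0), (1, 0), (0, 1), (5, 5), (6, 5)]
train_y = [10.0, 12.0, 11.0, 30.0, 32.0]
print(knn_predict(train_X, train_y, (0.2, 0.2)))     # → 11.0
print(knn_predict(train_X, train_y, (5.5, 5), k=2))  # → 31.0
```

Because distances combine all features directly, features on very different scales are usually standardised before KNN is applied; otherwise the features with the largest numeric ranges dominate the neighbour search.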

Applying Eqs. 9–13 to the observed values of Y1 and the values predicted using KNN gives the following results for the first 20 records:

Applying Eqs. 9–13 to the observed values of Y1 and the values predicted using KNN gives the following results for the first 100 records:
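The extracted text does not reproduce Eqs. 9–13 or the numerical results of these manual calculations. Assuming those equations are standard regression error metrics (the exact set is an assumption here, not confirmed by this section), the following sketch shows how such metrics can be computed on the first n records of an observed/predicted pair, mirroring the 20- and 100-record samples described above. The function name `evaluate` and the four sample values are hypothetical and are not taken from Table 6 or Table 7.

```python
import math


def evaluate(observed, predicted, n):
    """Regression error metrics on the first n observed/predicted pairs."""
    obs, pred = observed[:n], predicted[:n]
    m = len(obs)
    errors = [o - p for o, p in zip(obs, pred)]
    mse = sum(e * e for e in errors) / m
    mean_obs = sum(obs) / m
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return {
        "MAE": sum(abs(e) for e in errors) / m,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        # MAPE assumes no observed value is zero (reasonable for positive loads).
        "MAPE": 100 * sum(abs(e / o) for e, o in zip(errors, obs)) / m,
        # Coefficient of determination: 1 - SS_res / SS_tot.
        "R2": 1 - sum(e * e for e in errors) / ss_tot,
    }


# Hypothetical observed and predicted Y1 values.
observed = [15.0, 20.0, 25.0, 30.0]
predicted = [14.0, 21.0, 25.0, 32.0]
for n in (2, 4):  # small stand-ins for the 20- and 100-record samples
    print(n, evaluate(observed, predicted, n))
```

Evaluating the same predictions on a short prefix and a longer one, as the appendix does, shows how much the metric values depend on which records happen to fall in the sample.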

The same formulae (Eqs. 9–13) were also applied to the XGBoost predictions. Table 7 contains the observed values of the response variables Y1 and Y2 from the dataset and the values predicted by the XGBoost algorithm. The model-evaluation calculations based on Table 7 were performed manually on samples of 20 and 100 records, taken as records 1–20 and 1–100, respectively.
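XGBoost builds an additive ensemble of regression trees, each new tree fitted to correct the errors of the current ensemble, and adds refinements such as a regularised objective and a second-order (gradient and Hessian) approximation of the loss. The sketch below is not XGBoost. It is plain gradient boosting with depth-1 trees (stumps) under squared-error loss, meant only to show the core boosting loop that gradient boosting machines and XGBoost share. The helper names, the toy data, and the settings `n_rounds=50` and `learning_rate=0.1` are illustrative assumptions, not the paper's configuration.

```python
def fit_stump(X, residuals):
    """Best single-feature threshold split (a depth-1 regression tree)
    for the current residuals, scored by sum of squared errors."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= t]
            right = [r for row, r in zip(X, residuals) if row[j] > t]
            if not left or not right:
                continue  # a split must put records on both sides
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, lv, rv)
    if best is None:  # no valid split: predict the mean residual everywhere
        mean = sum(residuals) / len(residuals)
        return (0, float("inf"), mean, mean)
    return best[1:]  # (feature index, threshold, left value, right value)


def stump_predict(stump, row):
    j, t, lv, rv = stump
    return lv if row[j] <= t else rv


def fit_gbm(X, y, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared loss: start from the mean, then
    repeatedly fit a stump to the residuals (the negative gradient)."""
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [t - p for t, p in zip(y, preds)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        # Shrink each stump's contribution by the learning rate.
        preds = [p + learning_rate * stump_predict(stump, row)
                 for p, row in zip(preds, X)]
    return base, learning_rate, stumps


def predict_gbm(model, row):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, row) for s in stumps)


# Hypothetical one-feature toy data with a step in the response.
X = [(1,), (2,), (3,), (4,), (5,), (6,)]
y = [10.0, 10.0, 10.0, 20.0, 20.0, 20.0]
model = fit_gbm(X, y)
print(predict_gbm(model, (2,)), predict_gbm(model, (5,)))
```

On this step-shaped data every stump splits at the step, so each round moves the predictions 10% of the remaining distance toward the targets; after 50 rounds they sit within about 0.03 of 10 and 20. The small learning rate trades more rounds for smoother, less overfit updates, which is the same shrinkage idea that XGBoost exposes as its learning-rate parameter.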

Applying Eqs. 9–13 to the observed values of Y1 and the values predicted using XGBoost gives the following results for the first 20 records:

Applying Eqs. 9–13 to the observed values of Y1 and the values predicted using XGBoost gives the following results for the first 100 records:

About this article

Cite this article

Goyal, M., Pandey, M. A Systematic Analysis for Energy Performance Predictions in Residential Buildings Using Ensemble Learning. Arab J Sci Eng 46, 3155–3168 (2021). https://doi.org/10.1007/s13369-020-05069-2

DOI: https://doi.org/10.1007/s13369-020-05069-2