Abstract

Schizophrenia is a severe mental illness that can cause lifelong disability. Most recent studies on electroencephalogram (EEG)-based diagnosis of schizophrenia rely on bespoke, hand-crafted feature extraction techniques. Such manual feature extraction methods are time-consuming, imprecise, and limited in their ability to balance accuracy and efficiency. To address this issue, this study introduces a deep residual network (deep ResNet) based feature extraction design that automatically extracts representative features from EEG signal data for identifying schizophrenia. The proposed method consists of three stages: signal pre-processing by an average filtering method, extraction of hidden patterns of EEG signals by the deep ResNet, and classification of schizophrenia by a softmax layer. To assess the quality of the obtained deep features, the ResNet softmax classifier and several machine learning (ML) techniques are applied to the same feature set. Experimental results on a Kaggle schizophrenia EEG dataset show that the deep features with a support vector machine classifier achieve the highest performance (99.23% accuracy), exceeding that of the ResNet classifier. Furthermore, the proposed model performs better than existing approaches. The findings suggest that the proposed strategy is capable of discovering important biomarkers for automatic diagnosis of schizophrenia from EEG, which will aid the development of a computer-assisted diagnostic system for specialists.

Introduction

Schizophrenia is a complex and severe mental illness that affects about 1 in 100 Australians (between 150,000 and 200,000 people) [1, 2] and approximately 20 million people worldwide [3]. People with schizophrenia mostly experience hallucinations, delusions, disorganized speech and behaviour, and movement disorders [3]. Patients face significant problems in daily living at diverse levels of social, cognitive, emotional, physiological, and psychological functioning [4]. It has been estimated that 20%–40% of patients with schizophrenia attempt suicide at least once, and 5%–10% die by suicide [5]. According to a World Health Organization (WHO) report, schizophrenia is linked with considerable disability that leads to high health care expenses globally [3]. There is no cure for schizophrenia, but it can be treated and managed with long-term medication, which places a heavy load on the health care system and on patients’ families [6]. Thus, accurate and early diagnosis is needed for better treatment, which can significantly improve patients’ survival and quality of life and reduce health care costs.

There are several techniques to diagnose schizophrenia, such as the interview method, computed tomography (CT), magnetic resonance imaging (MRI), and electroencephalography (EEG). Among them, the interview method is not reliable because it is a manual process that is laborious, onerous, and subject to error and bias. The neuroimaging techniques (e.g. MRI, CT) are expensive and require additional recording and computational time compared to the EEG technique [7, 8, 9, 10, 11]. Currently, EEG has emerged as the reference standard for diagnosis of schizophrenia owing to its high temporal resolution, non-invasiveness, and relatively low financial cost compared to other tests [12]. The EEG technique records the electrical activity of the brain through electrodes placed on the scalp [4]. The resulting EEG recordings convey information about the state of the brain, and different brain-related disorders generate different patterns of EEG signals. An EEG recording contains huge volumes of dynamic data for studying brain function. Usually, this large amount of data is assessed by visual inspection, which takes a long time, is subject to human error, and reduces decision-making reliability [13, 14]. As yet, there is no reliable way of identifying schizophrenia from EEG data automatically, rapidly, and accurately. Therefore, the motivation of this study is to develop an automatic schizophrenia detection scheme using EEG signal data.

In recent years, many research studies have been performed to acquire informative features from EEG signals for the detection of schizophrenia [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28]. These studies reported various algorithms based on various features to characterize the.

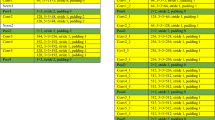

EEG-based brain region. Some of the past works in the area of schizophrenia detection are reported in as shown in Table 1. In literature, it is observed that most of the research work in the schizophrenia detection from EEG signals are based on bespoke/hand-crafted feature extraction methods such as wavelet transformation, fast Fourier transformation, power spectral density, spatial pattern of network, Kolmogorov complexity, entropy, etc. [15, 16, 17, 18, 19, 20, 21, 22, 23] where the methods are manually chosen based on the researcher’s expertise. The hand-crafted feature extraction methods cannot form intellectual high levels of representations of EEG signals to discover deep concealed characteristics from data that can achieve better performance. Sometimes it is difficult to choose effective feature extraction methods for different structures of EEG data and this is labor-intensive and time-consuming. Moreover, the methods underperform when the large datasets are used. In literature, very few research works have been performed on deep learning (DL) for detection of schizophrenia in EEG (e.g. we found few works [24, 26, 28]) but the methods are still limited in their ability to balance the efficiency and accuracy of schizophrenia detection. In addition, we also explored some recent works of schizophrenia detection that used Magnetic resonance images (MRI) data [29, 30, 31, 32, 33, 34] as reported in Table 1. Their performances weren’t really up to par.

Hence, this study introduces a deep learning-based feature extraction method employing a deep residual network (deep ResNet) model for the detection of schizophrenia using EEG signal data. The main advantage of the DL method is that there is no need to manually extract features from the signals. The network learns to extract features during training, which can help to enhance the speed and effectiveness of supervised learning. A deep ResNet is a special form of neural network that helps to handle more advanced DL tasks and models. Among neural network architectures, the deep ResNet model is capable of producing better performance through a network with many layers (an optimum number of layers) by overcoming the “vanishing gradient” problem. The proposed framework is designed with three phases: signal pre-processing, extraction of hidden patterns from EEG signals, and classification of schizophrenia. This study employs an average filtering method to pre-process the raw EEG data. The deep ResNet framework is developed to automatically extract concealed, significant features from EEG signals in order to distinguish schizophrenia patients from normal control subjects. The obtained deep ResNet features are used as input to the softmax classifier. To further assess the performance, the deep ResNet feature set is also fed into several ML techniques. A detailed explanation of these methods, together with the motivation for considering them in this study, is provided in the Methodology section.

The main contributions of this work are summarized as follows: (1) for the first time, we introduce a deep ResNet based DL framework for mining deeper features from EEG to automatically distinguish schizophrenia patients from normal control subjects; (2) we identify a suitable classifier for the obtained deep feature set from among DL and ML classifiers; (3) we investigate the performance of the deep ResNet feature set with several ML methods for the detection of schizophrenia; (4) we improve identification performance over state-of-the-art methods.

Methodology

The aim of this study is to develop a deep ResNet based DL framework that can use EEG signal data to automatically and efficiently distinguish schizophrenia patients from normal control subjects while improving classification performance. The architecture of the proposed deep ResNet based framework for the diagnosis of schizophrenia is presented in Fig. 1, and the configurations of the proposed networks are outlined in Table 2. A brief description of the proposed framework is provided below.

Deep learning (DL) framework

The key characteristic of the DL model is that it automatically extracts effective features, which is beneficial for large volumes of data. The proposed DL framework consists of three steps, as shown in Fig. 1: (1) signal pre-processing; (2) extraction of hidden patterns from EEG signals; and (3) classification of schizophrenia. Step 1 is signal pre-processing, where the noise and artifacts of the EEG signals are removed by an average filtering method. Step 2 is feature extraction, where hidden patterns are extracted from the denoised signals using the deep ResNet model to enable efficient detection of schizophrenia. Finally, step 3 performs the classification task, where the extracted deep features are fed to a softmax classifier to distinguish schizophrenia patients from normal control subjects. The same feature set is also fed as input to several ML classification methods for schizophrenia classification. A detailed description of the three steps is provided below:

Step 1. Signal pre-processing

EEG data are intrinsically noisy and often disturbed by artifacts. Noise and artifacts may bias the analysis of the signals and lead to incorrect conclusions. The key aim of pre-processing is to minimise or, ideally, eliminate noise and artifacts (e.g. outliers) from the signals. This process standardizes the data so that they can be easily used to create an appropriate model. This study employs an average filter to reduce noise in the EEG data. In the average filtering process, we smooth each signal (Sg) with a kernel size KLen = 12 using Eq. (1), and the smoothed signal is sampled as \(\widehat{\mathrm{Sg}}\) with an interval length IntLen = KLen = 12 using Eq. (2). The values of KLen and IntLen were determined by trial and error so that the final matrix has a size of about 200. The filtered signal data then serve as the input matrix for feature extraction in the deep ResNet model, which is discussed in the next step. This filter was chosen because it is straightforward, intuitive, and simple to use: it smooths the signals and reduces intensity variation, which may remove unrepresentative (unwanted) information from the signals.
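The smoothing and sampling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the function name `average_filter`, the forward-looking window, and the absence of edge padding are all assumptions. With IntLen = KLen the result equals the mean of consecutive non-overlapping blocks of 12 samples, so a 3072-sample trial is reduced to 256 values.

```python
def average_filter(signal, k_len=12, int_len=12):
    """Smooth with a k_len-point moving average, then keep every int_len-th value.

    Assumption: the window starts at the current sample and no padding is used.
    """
    if k_len <= 0 or int_len <= 0:
        raise ValueError("k_len and int_len must be positive")
    n_windows = len(signal) - k_len + 1
    # Moving average over every full window of length k_len.
    smoothed = [sum(signal[i:i + k_len]) / k_len for i in range(n_windows)]
    # Sample the smoothed signal at a fixed interval.
    return smoothed[::int_len]


if __name__ == "__main__":
    trial = [float(v) for v in range(3072)]  # synthetic stand-in for one EEG trial
    print(len(average_filter(trial)))
```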

Step 2. Extraction of hidden patterns of EEG signals by deep resnet model

This step aims to extract salient features robustly and automatically from EEG data to enable efficient detection of schizophrenia. Feature extraction is the most important stage of a classification task. A ‘feature’ set represents the denoised EEG signals by an ideally small number of relevant values that describe task-related information. Traditional hand-crafted feature extraction methods choose features manually from the data, which is tedious and unsophisticated. DL-based methods extract meaningful features automatically and directly from the EEG data in less time, which enables better recognition performance than the traditional methods. This study introduces a deep ResNet architecture to extract significant, representative features from the denoised EEG signals. To the best of our knowledge, this is the first time the deep ResNet has been employed for the detection of schizophrenia from EEG signals. Previously, this method was employed for image recognition [34, 35, 36, 37, 38, 39] and epilepsy detection [40], but it had not yet been applied to schizophrenia detection.

The motivation for using the deep ResNet model in this study is that it belongs to a family of extremely deep architectures that show convincing accuracy and good convergence behavior at considerably increased depth. Deep ResNets are built from an advanced residual unit with full pre-activation [35], where identity mappings are implemented as skip connections to ease the vanishing/exploding gradient problem [41]. With the deep ResNet, building a better network can be as easy as adding more layers to obtain representative, hierarchical, discriminative features from the data. In the deep ResNet structure, the feature of any deeper unit is expressed as the feature of any shallower unit plus the sum of the preceding residual responses. This characteristic helps to substantially improve performance on ultra-deep networks with more than 1000 layers compared to earlier residual methods [42]. The deep ResNet is able to optimize and gain accuracy from considerably increased depth [35].

The ResNet is constructed from the feedforward deep neural networks developed in [35], where each layer uses different operations to extract information from the previous layers and the network also has a unique residual operation. The networks use skip connections, or shortcuts, to jump over some layers. A detailed description of the deep ResNet architecture is available in refs [35, 43]. Typically, a ResNet involves convolutional (Conv) layers, rectified linear unit (ReLU) layers, batch normalization layers, and layer skips, as demonstrated in Fig. 2.

Figure 2 illustrates an example of the residual learning module. In this figure, ‘X’ is the input mapping and F(X) is a residual function. As depicted in Fig. 2, after the two branches (the input mapping ‘X’ and the residual function F(X)) are combined, they pass through a nonlinear transformation (the ReLU activation function) to form the complete residual learning module. The input is X, a shortcut is added after X, and the output of the block is superimposed upon the input. Therefore, the output of the block becomes F(X) + X, and what the network weight parameters need to learn is F(X). The most important element of Fig. 2 is the ‘skip connection’ that implements the identity mapping. This identity mapping does not have any parameters and simply adds the output of the previous layer to the layer ahead [35]. In the proposed model, residual blocks are used throughout the network as the basic units of the deep ResNet, defined as follows [44]:

\[ Y = F\left(X, \left\{W_{i}\right\}\right) + X \qquad (3) \]

Here X denotes the input vector and Y denotes the output vector of the given layers. The function F(X, {Wi}) symbolizes the residual mapping to be learned. In Eq. (3), the dimensions of X and F must be identical.
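To make the residual mapping concrete, the sketch below computes ReLU(F(X) + X) for a toy block. It is an illustration only: the paper's blocks use convolutional and batch normalization layers, whereas this sketch substitutes two small dense layers for F, and the names `linear`, `relu`, and `residual_block` are hypothetical. It shows that when the learned weights are zero the block falls back to the identity for non-negative inputs, which is why adding residual blocks does not make the mapping harder to learn.

```python
def relu(vector):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in vector]


def linear(weights, vector):
    """Matrix-vector product; weights is a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def residual_block(x, w1, w2):
    """Return relu(F(x) + x), where F(x) = W2 * relu(W1 * x).

    Dense layers stand in for the convolutional layers of a real ResNet block.
    """
    f = linear(w2, relu(linear(w1, x)))
    if len(f) != len(x):
        raise ValueError("F(x) and x must have the same dimension")
    # Skip connection: add the input to the residual branch, then activate.
    return relu([fi + xi for fi, xi in zip(f, x)])


if __name__ == "__main__":
    x = [1.0, 2.0, 0.5]
    zeros = [[0.0] * 3 for _ in range(3)]
    print(residual_block(x, zeros, zeros))  # the block passes x through unchanged
```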

The structure of the deep ResNet model designed for this study is reported in Table 2. The table shows the network settings and how the network, with its large number of parameters, is applied to the data. This study extracts deep features using five convolutional layers with several kernels; the activation function is then applied to the convolved results to generate the feature maps. The pooling layer receives the features from the previous layers. The average pooling layer uses the average value from each cluster of neurons of the previous layer. Finally, two fully connected layers are used, and their outputs are fed to the softmax function for the classification task.

Step 3. Classification of schizophrenia

This stage performs the schizophrenia classification task using the obtained deep feature set. As shown in Fig. 1, after five convolutional layers and one average pooling layer, there are two fully connected layers, which form the final part of the deep ResNet model and produce the outputs: a probability distribution over all possible categories for the given input. In the final layer, this study uses a softmax function, which outputs a value between 0 and 1 per class. These outputs are the predicted probabilities that the signal belongs to each class, where a higher probability indicates greater confidence that the input belongs to that class [45]. As seen in Fig. 1, the output vector of the preceding fully connected layer is used as input to the softmax function. The following formula generates the estimated probabilities at the output of the softmax function:

\[ P\left(y = j \mid x\right) = \frac{\exp\left(w_{j}^{\mathsf{T}} x + b_{j}\right)}{\sum_{k=1}^{K} \exp\left(w_{k}^{\mathsf{T}} x + b_{k}\right)} \]

where \(y\) refers to the target class, x refers to a feature vector, \(w_{j}\) and \(b_{j}\) refer to the weight parameter and the bias term of class \(j\), and \(K\) is the number of classes (here \(K = 2\)).
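The softmax step can be illustrated with a short sketch. The function names, the two-class weight matrix, and the example feature vector are hypothetical and chosen only for illustration; subtracting the maximum score before exponentiation is a common numerical-stability device and is not something the paper states.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_probabilities(x, weights, biases):
    """Softmax over the linear scores w_j . x + b_j, one score per class."""
    scores = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    return softmax(scores)


if __name__ == "__main__":
    # Hypothetical two-dimensional deep feature and two-class parameters.
    probs = class_probabilities([1.0, -1.0], [[0.5, 0.0], [0.0, 0.5]], [0.0, 0.0])
    print(probs)
```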

This study uses the binary cross-entropy (BCE) between estimates and targets as the loss function [46], which is given by:

\[ \mathrm{BCE} = -\frac{1}{N_{0}} \sum_{i=1}^{N_{0}} \left[ t_{i} \log\left(p_{i}\right) + \left(1 - t_{i}\right) \log\left(1 - p_{i}\right) \right] \]

where \({N}_{0}\) indicates the total number of training samples, \({p}_{i}\) indicates the estimated result for each sample, and \({t}_{i}\) indicates the target class in \(\left\{\mathrm{0,1}\right\}\), where 0 refers to normal control and 1 refers to schizophrenia patient.
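As a quick numerical check of the loss, the sketch below evaluates the standard binary cross-entropy for a list of predicted probabilities and binary targets. The function name and the clipping constant `eps` are assumptions added for illustration; clipping simply guards against taking the logarithm of zero.

```python
import math


def binary_cross_entropy(predictions, targets, eps=1e-12):
    """Mean binary cross-entropy between probabilities and 0/1 targets."""
    if not predictions or len(predictions) != len(targets):
        raise ValueError("predictions and targets must be non-empty and equal in length")
    total = 0.0
    for p, t in zip(predictions, targets):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite
        total += t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return -total / len(predictions)


if __name__ == "__main__":
    # An uninformative prediction of 0.5 for every sample gives a loss of log(2).
    print(binary_cross_entropy([0.5, 0.5], [0, 1]))
```

More confident correct predictions give a smaller loss, which is the behaviour the training procedure exploits.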

To investigate the efficacy of the obtained deep ResNet feature set, this study also fed the same feature set to each of five ML classification methods: support vector machine (SVM), k-nearest neighbour (k-NN), decision tree (DT), linear discriminant (LD), and naive Bayes (NB). These classifiers were chosen because they are straightforward and very effective to implement. In addition, these ML classifiers learn quickly and effectively from all the training inputs in the classification process [12, 13, 14, 47, 48].

Step 4. Performance evaluation

In this step, the performance of the proposed model is evaluated using standard measurements: accuracy, sensitivity, specificity, precision (positive predictive value), false positive rate (false alarm rate), false negative rate (miss rate), F1 score, and the area under the receiver operating characteristic (ROC) curve [12, 49, 50, 51, 52].
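For reference, the standard definitions of these measures are given below. They are the conventional formulas rather than equations quoted from the paper, and they assume that schizophrenia is treated as the positive class, with TP, TN, FP, and FN denoting the numbers of true positives, true negatives, false positives, and false negatives.

\[
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Sensitivity} &= \frac{TP}{TP + FN}, \\
\text{Specificity} &= \frac{TN}{TN + FP}, &
\text{Precision} &= \frac{TP}{TP + FP}, \\
\text{False positive rate} &= \frac{FP}{FP + TN}, &
\text{False negative rate} &= \frac{FN}{FN + TP}, \\
\text{F1 score} &= \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}.
\end{aligned}
\]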

Results

This section first presents a brief description of the data used in this study. It then describes the implementation of the method, including the parameter settings of the proposed deep ResNet model. The feature extraction process is discussed at the end of this section. The obtained results are discussed in the Discussion section.

Data acquisition

This study used an EEG signal database from the Kaggle data source. The database consists of EEG data from 81 subjects, of whom 49 are schizophrenia patients and 32 are normal control persons. Of the 81 subjects, 14 are female and 67 are male. The average age is 39 and the average education is 14.5 years. EEG data were collected from a total of 70 channels, including 64 channels on the scalp and 6 channels around the eyes and nose. The data were continuously digitized at 1024 Hz and referenced off-line to averaged earlobe electrodes. The length of each signal was 3 s. EEG was measured 100 times under each of three conditions: (1) the subject pressed a button to generate a tone; (2) the subject passively listened to the same tone; (3) the subject pressed a button without generating the tone. In this study, we test only condition 1 to classify schizophrenia (SZ) patients and healthy control (HC) subjects because the HC subjects generate a press button tone while the SZ patients did not [53]. A detailed description of this dataset is available in references [25] and [53].

Implementation

In this study, all experiments were performed in MATLAB (2018b) on a PC with a six-core Intel i7 processor and 32 GB of memory. The machine was equipped with an NVIDIA RTX 2060 GPU with 6 GB of memory. The deep ResNet model was run in the MATLAB Deep Learning Toolbox. The proposed method was implemented on the schizophrenia EEG dataset. As mentioned before, this dataset contains EEG recordings of 81 subjects, including 32 normal control subjects and 49 schizophrenia patients. The EEG recordings of the normal control subjects contain 3108 trials, with 3072 samples per trial and 70 channels, and the recordings of the schizophrenia subjects contain 4608 trials, with 3072 samples per trial and 70 channels. An example of how the data are processed and transformed by the proposed model is given below for Subject 1.

For example, the recording of Subject 1 consists of 887,808 × 70 data points (samples × channels), which was transformed into a matrix with dimensions 70 × 3072 × 289 (channels × samples × images). The number of images, 289, is obtained as 887,808/3072. After applying the average filter, the raw signal data matrix was converted to a matrix of size 70 × 256 × 289. Afterward, the 70 × 256 × 289 matrix was resized to 224 × 224 × 3 × 289 (height × width × color layers × images) to be compatible with the deep ResNet input size. A 70 × 256 grey-scale image is shown in Fig. 3a, and this image is resized to 224 × 224 in grey-scale format as shown in Fig. 3b. As the grey-scale format is not compatible with ResNet inputs, the grey-scale images are concatenated to 224 × 224 × 3 (height × width × color depth) as shown in Fig. 3c. Here the color depth is generated by stacking the same grey-scale image three times. In this work, the “imresize” function was used to perform the resize operation in the MATLAB environment. The “imresize” function supports nearest-neighbor, bilinear, and bicubic interpolation; bicubic interpolation was used in this study. Figure 4 shows, for Subject 1, how the signal data were transformed into image data for the deep ResNet.
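The shape bookkeeping in this example can be checked with a few lines of code. The sketch below is illustrative only and uses hypothetical helper names: it verifies the trial count and the filtered trial length, and shows how one grey-scale image is copied into three identical channels. The bicubic resize to 224 × 224 performed by MATLAB's “imresize” is deliberately left out.

```python
def count_trials(total_samples, samples_per_trial=3072):
    """Number of fixed-length trials contained in a continuous recording."""
    if total_samples % samples_per_trial != 0:
        raise ValueError("recording length is not a multiple of the trial length")
    return total_samples // samples_per_trial


def grey_to_three_channels(image):
    """Copy a 2-D grey-scale image (list of rows) into height x width x 3."""
    return [[[pixel, pixel, pixel] for pixel in row] for row in image]


if __name__ == "__main__":
    print(count_trials(887_808))   # trials (images) for Subject 1
    print(3072 // 12)              # samples per trial after filtering with KLen = IntLen = 12
    tiny = [[0.1, 0.2], [0.3, 0.4]]  # stand-in for one resized grey-scale image
    print(grey_to_three_channels(tiny)[1][0])
```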

Following a similar process for all 81 subjects, the whole dataset was transformed into an image matrix of size 224 × 224 × 3 × 23,201 (height × width × color layers × image samples). This image data was then divided into three parts, training, validation, and testing, with ratios of 70%, 10%, and 20%, respectively. Table 3 provides the sizes of the three groups of data. The training dataset was used for the learning process of the proposed model, and the validation dataset was regarded as a part of the training set and used to tune the parameters of the model and to avoid overfitting. Generally, the validation dataset helps to provide an unbiased evaluation of the model’s fit. The testing dataset was used for performance evaluation.

To demonstrate the superiority of the proposed model, this study conducted extensive experiments and investigated the performance of the deep ResNet method alongside several ML methods. The hyper-parameters of the deep ResNet structure are provided in Table 2. All of them were tuned on the training set to obtain the optimal training accuracy. Figure 5 displays an example of the training and validation accuracy of the deep ResNet classifier over the iterations. During training, there was no significant improvement in validation accuracy in some iterations. We set the learning rate to 0.0001 for the training stage and used a batch size of one sample. We used a brute-force search to determine the optimal number of filters and kernel sizes. In the DL environment, the softmax function was used after the fully connected (FC) layer for classification.

As mentioned before, besides the DL classifier, this study also uses five ML classifiers (SVM, k-NN, DT, LD, and NB) to compare classification performance on the obtained deep features. We selected the linear kernel as the kernel function for the SVM because, after extensive experiments with several kernels (linear, polynomial, and radial basis), we found that the linear kernel suits the acquired feature set better than the other kernel functions. For k-NN, we used the Euclidean distance as the distance measure and set k to 1 after some experimental investigation. Since there is no standard rule for choosing k, we used an empirical method with k values ranging from 1 to 20 and selected the k value that provides the lowest error rate, as the optimal model is the one with the lowest error rate. For the DT, LD, and NB classifiers, we used the default parameter settings in MATLAB because we could not find any standard rule for choosing the parameter values of those classifiers.
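The empirical choice of k can be sketched as a simple search over candidate values. This is a minimal illustration, not the MATLAB procedure used in the study: the function names, the use of a separate held-out set for the error rate, and the toy two-dimensional data are all assumptions. Squared Euclidean distance is used for ranking neighbours, which gives the same ordering as Euclidean distance.

```python
from collections import Counter


def knn_predict(train_x, train_y, query, k):
    """Predict the majority label among the k nearest training points."""
    order = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], query)),
    )
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def select_k(train_x, train_y, val_x, val_y, k_values=range(1, 21)):
    """Return the smallest k with the lowest error rate on the held-out data."""
    best_k, best_error = None, None
    for k in k_values:
        if k > len(train_x):
            break  # cannot use more neighbours than training points
        wrong = sum(knn_predict(train_x, train_y, q, k) != y
                    for q, y in zip(val_x, val_y))
        error = wrong / len(val_x)
        if best_error is None or error < best_error:
            best_k, best_error = k, error
    return best_k, best_error


if __name__ == "__main__":
    # Toy two-feature data standing in for the 23201 x 2 deep feature set.
    train_x = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.9, 1.0]]
    train_y = [0, 0, 1, 1]
    print(select_k(train_x, train_y, [[0.05, 0.1], [0.95, 0.9]], [0, 1]))
```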

Feature extraction

This section describes how the EEG signal data are converted into a deep feature set by the deep ResNet model. Figure 6 presents a diagram of how the data are processed and converted into the feature set; the sizes of the data are also indicated in this figure. As can be seen in Fig. 6, the proposed model generates two deep learning features, and the size of the obtained deep feature set is 23,201 × 2. To estimate the performance of the classification models, this study applied a 10-fold cross-validation technique to the obtained deep feature set. In 10-fold cross-validation, the original sample is randomly partitioned into 10 folds of equal size. Of the 10 folds, a single fold is held out as the validation data for assessing the models, and the remaining 9 folds are used as the training data. The cross-validation process is then repeated 10 times, with each of the 10 folds used exactly once as the validation data. The average of the results over the 10 folds is taken as the overall performance estimate.
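The partitioning used in 10-fold cross-validation can be sketched as below. The function name and the fixed random seed are assumptions for illustration; because 23,201 is not divisible by 10, the folds in this sketch differ in size by at most one sample.

```python
import random


def k_fold_indices(n_samples, n_folds=10, seed=0):
    """Yield (train_indices, validation_indices) for each fold.

    Every sample index appears in exactly one validation fold.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # random partition, reproducible via seed
    folds = [indices[i::n_folds] for i in range(n_folds)]
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


if __name__ == "__main__":
    for train, validation in k_fold_indices(23_201):
        print(len(train), len(validation))
```

A classifier would be fitted on each `train` subset and scored on the matching `validation` subset, and the ten scores averaged.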

Figure 7 presents box plots showing the distribution of the two obtained deep features, deep feature 1 and deep feature 2, in the Control and Schizophrenia categories. It is apparent from Fig. 7 that the control and schizophrenia groups show the same distribution pattern, which is symmetric, but a considerable difference is observed between the central values of the two groups. The box plots illustrate that the feature values differ significantly between the two groups, which makes efficient classification easier.

Discussion

This section discusses the experimental results obtained with the proposed schizophrenia detection scheme. The results are based on a binary (two-class) classification process. Here, the schizophrenia signals are considered as one class and the normal control signals as the other class, i.e. classification of schizophrenia versus control.

Table 4 presents the overall classification results for the obtained deep features with the softmax classifier of the deep ResNet model (DL classifier) and with each of the five ML classifiers: SVM, k-NN, DT, LD, and NB. In this table, the overall performances are reported in terms of accuracy, sensitivity, specificity, precision, and F1-score. It is observed from Table 4 that the proposed deep ResNet features deliver better performance with each of the reported ML classifiers than with the DL classifier. As can be seen from Table 4, in most cases the highest classification performances among the reported classifiers are achieved by the SVM classifier: 99.23% accuracy, 99.02% specificity, 99.36% precision, and 99.36% F1-score. On the other hand, the DL classifier yields the lowest performances, with accuracy, specificity, precision, and F1-score values of 97.48%, 97.90%, 98.58%, and 97.88%, respectively. The highest sensitivity (99.44%) is obtained by the LD classifier, while the lowest sensitivity (97.19%) is obtained by the DL classifier. Thus, the results in Table 4 demonstrate that the deep ResNet features work better with the ML classifiers than with the DL classifier. The overall accuracy is increased by 1.75% for the ML-based classifier compared to the DL scheme.

To show more detailed, class-specific performance, Fig. 8 presents a confusion matrix for each of the reported classification methods for the tenfold cross-validation procedure. These figures display how each label of data in the testing sets is predicted. The total of each row represents the number of data points predicted for that category, while each column represents the true category of the data. As seen in Fig. 8a, 2702 (98.60%) schizophrenia class data points are correctly identified by the deep ResNet classification model and 1822 (95.90%) normal control class data points are correctly classified by the model. On the other hand, 39 (1.4%) normal control data points are incorrectly identified as schizophrenia, and 78 (4.1%) schizophrenia data points are misclassified as normal control by the deep ResNet classifier. Similarly, the class-specific performance of the other classification methods (SVM, k-NN, DT, LD, and NB) is shown in Fig. 8b–f, respectively.

To provide further information about the classification performance, the false positive rate and false negative rate of the proposed models are illustrated in Fig. 9. Lower false positive and false negative rates indicate a better classification method. It is seen from this figure that the lowest false positive rate (0.98%) is obtained by the SVM classifier, while the LD classifier produces the lowest false negative rate (0.56%). The highest false positive rate (2.1%) and the highest false negative rate (2.81%) are obtained by the deep ResNet classifier (DL technique). On both measures, the deep features with the SVM classifier (ML technique) perform better than the DL method, in line with the previous performance measurements.
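The reported values for the deep ResNet classifier can be reproduced from the confusion-matrix counts quoted for Fig. 8a. The sketch below assumes schizophrenia is the positive class, so that 2702 are true positives, 78 false negatives, 39 false positives, and 1822 true negatives; the function name is hypothetical and the formulas are the conventional ones.

```python
def classification_metrics(tp, fn, fp, tn):
    """Standard binary classification measures from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "false_positive_rate": fp / (fp + tn),
        "false_negative_rate": fn / (fn + tp),
    }


if __name__ == "__main__":
    # Counts quoted in the text for the deep ResNet (softmax) classifier.
    metrics = classification_metrics(tp=2702, fn=78, fp=39, tn=1822)
    for name, value in metrics.items():
        print(f"{name}: {100 * value:.2f}%")
```

Rounded to two decimals, these counts give the 97.48% accuracy, 97.19% sensitivity, 97.90% specificity, 98.58% precision, and 97.88% F1-score reported for the DL classifier, together with the 2.1% false positive rate and 2.81% false negative rate.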

To further validate the efficacy of the proposed model, Fig. 10 presents ROC curves comparing the performance of the deep ResNet, SVM, k-NN, DT, LD, and NB classifiers on the same deep feature set. The ROC curve is drawn with the false positive rate on the X axis and the true positive rate (sensitivity) on the Y axis. The overall performance of a classifier is measured by the area under the ROC curve, which lies between 0 and 1 (a larger area indicates better performance). Figure 11 displays the area under the curve (AUC) for the reported classifiers. In Fig. 11, the vertical lines on top of the bars show the standard error among the classifiers. As can be seen in Figs. 10 and 11, the highest AUC, 99.96% (close to 100%), is obtained by three ML classifiers: SVM, LD, and NB. The k-NN classifier yields the lowest AUC for the same feature set.
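The area under the ROC curve can also be computed directly from classifier scores, without drawing the curve, because it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below uses this rank-based formulation; the function name and the toy scores are illustrative assumptions and are not taken from the study.

```python
def roc_auc(scores, labels):
    """AUC via pairwise comparison of positive and negative scores.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    # Perfectly separated toy scores give the maximum AUC.
    print(roc_auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0]))
```

The pairwise loop is quadratic in the number of samples, so for a feature set of this size a sort-based implementation would be preferable in practice.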

To assess the computational cost of the proposed models, this study compares the execution (running) time of the various classification methods in the training and testing phases. It is seen from Table 5 that, in both training and testing, the deep ResNet (DL classifier) took more time than the reported ML classifiers. The highest training time (14,749 s) and the highest testing time (20.50 s) were recorded for the deep ResNet classifier. The LD classifier took the lowest time (0.5310 s) in training and the NB classifier took the lowest time (0.01563 s) in testing. The SVM classifier took the second lowest time (0.0313 s) in testing. It is worth mentioning that the time of the ResNet model includes both feature extraction and classification, whereas the times of the ML methods cover only the classification process.

In this study, in most circumstances, the proposed deep features perform better with the ML classifiers than with the DL classifier. In particular, the deep feature set with the SVM algorithm produced higher performance than the other reported classifiers. The high classification performance of the SVM algorithm indicates that the deep features are highly discriminative between the two classes, schizophrenia and normal control. Thus, we argue that the deep features obtained from the ResNet model are a highly representative description of the EEG signals and that the SVM classifier is the best choice, among those tested, for distinguishing schizophrenia EEG signals from normal control signals.

Comparison with the existing methods for the same dataset

This section compares our proposed method with existing methods evaluated on the same Kaggle schizophrenia EEG dataset used in this study. Figure 12 presents the comparison, including the overall accuracy of the proposed method and the existing methods. Siuly et al. [54] developed empirical mode decomposition-based features with an ensemble bagged tree classifier for the detection of schizophrenia from EEG signals. They obtained an accuracy, sensitivity, and specificity of 89.59%, 89.76%, and 89.32%, respectively. Khare et al. [55] introduced a method based on empirical wavelet transformation and SVM for the detection of schizophrenia from EEG signals. Their method achieved an accuracy of 88.70%, a sensitivity of 91.13%, and a specificity of 89.29%. For EEG-based schizophrenia identification, Khare et al. [56] developed a flexible tuneable Q wavelet transform (F-TQWT) based methodology with least square support vector machine (F-LSSVM) that achieved 91.39% accuracy, 92.65% sensitivity, and 93.22% specificity. An optimised extreme learning machine (OELM) algorithm with a resilient variational mode decomposition (RVMD) foundation was proposed by Khare et al. [57] and achieved an overall accuracy of 92.30%. In [58], Khare et al. proposed a convolutional neural network (CNN) model based on a time–frequency analysis technique for detecting schizophrenia in EEG signals and obtained an overall accuracy of 93.36%. Figure 12 shows that our proposed model yielded the highest performance scores among the compared methods, with an accuracy of 99.23%, a sensitivity of 99.36%, and a specificity of 99.02%. The accuracy of our proposed model is 10.53 percentage points higher than that of Khare et al. [55] and 9.64 percentage points higher than that of Siuly et al. [54].
In summary, deep ResNet features of EEG signals combined with an SVM classifier can serve as an appropriate measure for correctly distinguishing schizophrenic subjects from healthy control (HC) subjects.
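
The accuracy comparison above is simple arithmetic on the reported values, and the following sketch makes it explicit. It is offered as an illustration only: the accuracies are the ones quoted in this section, while the dictionary and function names are hypothetical. It also separates an absolute gain in percentage points from a relative gain expressed as a percentage of the baseline, since the two are easily confused.

```python
# Overall accuracies (%) quoted in this section for the same Kaggle dataset.
reported_accuracy = {
    "Siuly et al. [54]": 89.59,
    "Khare et al. [55]": 88.70,
    "Khare et al. [56]": 91.39,
    "Khare et al. [57]": 92.30,
    "Khare et al. [58]": 93.36,
}
PROPOSED_ACCURACY = 99.23  # deep ResNet features with the SVM classifier


def percentage_point_gain(proposed: float, baseline: float) -> float:
    """Absolute difference between two accuracies, in percentage points."""
    return round(proposed - baseline, 2)


def relative_gain_percent(proposed: float, baseline: float) -> float:
    """Difference expressed as a percentage of the baseline accuracy."""
    return round(100.0 * (proposed - baseline) / baseline, 2)


point_gains = {
    name: percentage_point_gain(PROPOSED_ACCURACY, acc)
    for name, acc in reported_accuracy.items()
}
relative_gains = {
    name: relative_gain_percent(PROPOSED_ACCURACY, acc)
    for name, acc in reported_accuracy.items()
}

for name in reported_accuracy:
    print(f"{name}: +{point_gains[name]} points, +{relative_gains[name]}% relative")
```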

Conclusions

In this study, a deep ResNet-based DL framework is proposed to learn robust feature representations of EEG signals for automatically distinguishing schizophrenia subjects from normal control subjects with improved performance. The proposed network architecture combines convolutional layers, residual connections, average pooling, and fully connected layers, which provide good convergence with high performance. The proposed model can automatically extract effective features, which is advantageous for large-scale data. We extracted deep features using the deep ResNet model and then used them as input to the softmax function for classification. To examine the performance of the obtained deep features, we also applied the same feature set to five ML methods separately: SVM, k-NN, DT, LD, and NB. The performance of the proposed method was evaluated on a benchmark schizophrenia EEG dataset from Kaggle. Extensive experiments were conducted, and the results reveal that the SVM classifier with the acquired deep feature set produces the highest accuracy among the reported classifiers, 99.23%, whereas the softmax classifier of the ResNet model achieves 97.48%. The SVM model with the deep feature set also produces the lowest false positive rate (0.98%), compared with 2.1% for the deep ResNet model. Moreover, we measured the computational cost of the proposed models. The DL-based model took far longer for training (14,749.00 s) and testing (20.50 s) than the reported ML-based methods. The findings of this study indicate that the deep features perform better with ML classifiers than with the DL classifier in schizophrenia detection. The results indicate that the proposed method is capable of discovering hidden, important biomarkers from EEG for automatic detection of schizophrenia, which can assist technologists in building a software system.

Data availability

This study used the Kaggle dataset "EEG data from basic sensory task in Schizophrenia", which is publicly available at https://www.kaggle.com/datasets/broach/button-tone-sz. Users must first register with Kaggle to access the dataset.

References

BetterHealth channel, https://www.betterhealth.vic.gov.au/health/conditionsandtreatments/schizophrenia.

Healthdirect 2018, Schizophrenia, Healthdirect, viewed https://www.healthdirect.gov.au/schizophrenia. Accessed 4 Feb 2020

World Health Organization (WHO) 2019, schizophrenia, WHO, viewed https://www.who.int/news-room/fact-sheets/detail/schizophrenia. Accessed 5 Feb 2020

Goshvarpour A, Goshvarpour A (2020) Schizophrenia diagnosis using innovative EEG feature-level fusion schemes. Phys Eng Sci Med 43:227–238. https://doi.org/10.1007/s13246-019-00839-1

Tibbetts PE (2013) Principles of cognitive neuroscience. Second Edition / Principles of neuroscience. Fifth Edition. Q Rev Biol 88:139–140

McGlashan TH (1998) Early detection and intervention of schizophrenia: rationale and research. Br J Psychiatry 172:3–6

Oh SL, Vicnesh J, Ciaccio EJ, Yuvaraj R, Acharya UR (2019) Deep convolutional neural network model for automated diagnosis of schizophrenia using EEG signals. Appl Sci 9(14):2870

Alvi MA, Siuly S, Wang H, Wang K, Whittaker F (2022) A Deep learning based framework for diagnosis of mild cognitive impairment. Knowledge based Syst. https://doi.org/10.1016/j.knosys.2022.108815

Talo M, Baloglu UB, Yıldırım Ö, Acharya UR (2019) Application of deep transfer learning for automated brain abnormality classification using MR images. Cogn Syst Res 54:176–188

Gudigar A, Raghavendra U, San TR, Ciaccio EJ, Acharya UR (2019) Application of multiresolution analysis for automated detection of brain abnormality using MR images: a comparative study. Future Gener Comput Syst 90:359–367

Acharya UR, Sree SV, Ang PCA, Yanti R, Suri JS (2012) Application of non-linear and wavelet based features for the automated identification of epileptic EEG signals. Int J Neural Syst 22(2):1250002

Siuly S, Li Y, Zhang Y (2016) EEG signal analysis and classification: techniques and applications. Health Information Science, Springer Nature, US (ISBN 978-3-319-47653-7)

Sadiq MT, Akbari H, Siuly S, Yousaf A, Rehman AU (2021) A novel computer-aided diagnosis framework for EEG-based identification of neural diseases. Comput Biol Med 138:104922

Alvi AM, Siuly S, Wang H (2022) Neurological abnormality detection from electroencephalography data: a review. Artif Intell Rev 55:2275–2312. https://doi.org/10.1007/s10462-021-10062-8

Ruiz J, de Miras AJ, Ibáñez-Molina MFS, Iglesias-Parro S (2023) Schizophrenia classification using machine learning on resting state EEG signal. Biomedical Signal Process Control. https://doi.org/10.1016/j.bspc.2022.104233

Akbari H, Ghofrani S, Zakalvand P, Tariq Sadiq M (2021) Schizophrenia recognition based on the phase space dynamic of EEG signals and graphical features. Biomed Signal Process Control 69:102917

Olejarczyk E, Jernajczyk W (2017) EEG in schizophrenia. RepOD

Olejarczyk E, Jernajczyk W (2017) Graph-based analysis of brain connectivity in schizophrenia. PLoS ONE 12:e0188629

Kim K, Duc NT, Choi M, Lee B (2021) EEG microstate features for schizophrenia classification. PLoS ONE 16:e0251842

Buettner R, Beil D, Scholtz S, Djemai A (2020) Development of a machine learning based algorithm to accurately detect schizophrenia based on one-minute EEG recordings. In: HICSS-53 proceedings: 53rd Hawaii International conference on system sciences

Krishnan PT, Joseph Raj AN, Balasubramanian P, Chen Y (2020) Schizophrenia detection using multivariate empirical mode decomposition and entropy measures from multichannel EEG signal. Biocybern Biomed Eng 40:1124–1139

Jahmunah V, Oh SL, Rajinikanth V, Ciaccio EJ, Cheong KH, Arunkumar N, Acharya UR (2019) Automated detection of schizophrenia using nonlinear signal processing methods. Artif Intell Med 100:101698

Li F, Wang J, Liao Y, Yi C, Jiang Y, Si Y, Peng W, Yao D, Zhang Y, Dong W, Xu P (2019) Differentiation of schizophrenia by combining the spatial EEG brain network patterns of rest and task P300. IEEE Trans Neural Syst Rehabil Eng 27:594–602

Ko D-W, Yang J-J (2022) EEG-based schizophrenia diagnosis through time series image conversion and deep learning. Electronics 11:2265

Kaggle website, https://www.kaggle.com/broach/button-tone-sz.

Aslan Z, Akin M (2020) Automatic detection of schizophrenia by applying deep learning over spectrogram images of EEG signals. Trait Du Signal 37:235–244

Borisov SV, Kaplan AY, Gorbachevskaya NL, Kozlova IA (2005) Analysis of EEG structural synchrony in adolescents with schizophrenic disorders. Hum Physiol 31:255–261

Phang C-R, Ting C-M, Samdin SB, Ombao H (2019) Classification of eeg-based effective brain connectivity in schizophrenia using deep neural networks. 9th International IEEE/EMBS conference on neural engineering (NER). IEEE, San Francisco, pp 401–406

Levman J, Jennings M, Rouse E, Berger D, Kabaria P, Nangaku M, Gondra I, Takahashi E (2022) A morphological study of schizophrenia with magnetic resonance imaging, advanced analytics, and machine learning. Front Neurosci 16:926426. https://doi.org/10.3389/fnins.2022.926426

Center for Biomedical Research Excellence (COBRE); COBRE data set available. http://fcon_1000.projects.nitrc.org/indi/retro/cobre.html

Ghanbari M, Pilevar AH, Bathaeian N (2022) Diagnosis of schizophrenia using brain resting-state fMRI with activity maps based on deep learning. SIViP. https://doi.org/10.1007/s11760-022-02229-9

Zheng J, Wei X, Wang J, Lin H, Pan H, Shi Y (2021) Diagnosis of schizophrenia based on deep learning using fMRI. Comput Math Methods Med 9:8437260. https://doi.org/10.1155/2021/8437260. PMID: 34795793; PMCID: PMC8594998

Oh J, Oh B-L, Lee K-U, Chae J-H, Yun K (2020) Identifying schizophrenia using structural MRI with a deep learning algorithm. Front Psychiatry 11:16

Lei D, Pinaya WHL, Young J, van Amelsvoort T, Marcelis M, Donohoe G, Mothersill DO, Corvin A, Vieira S, Huang X et al (2020) Integrating machining learning and multimodal neuroimaging to detect schizophrenia at the level of the individual. Hum Brain Mapp 41:1119–1135

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: CVPR

Qin FW, Gao NN, Peng Y, Wu ZZ, Shen SY, Grudtsin A (2018) Fine-grained leukocyte classification with deep residual learning for microscopic images. Comput Methods Programs Biomed 162(8):243–252

Surinta O, Khamket T (2019) Recognizing pornographic images using deep convolutional neural networks. The 4th International conference on digital arts, media and technology and 2nd ECTI Northern section conference on electrical, electronics, computer and telecommunications engineering. IEEE, Nan, pp 150–154

Lee S, Jang G (2017) Recognition model based on residual networks for cursive hanja recognition. International conference on information and communication technology convergence (ICTC). IEEE, Jeju, pp 579–583

Liu B, Yao K, Huang M, Zhang J, Li Y, Li R (2018) Gastric pathology image recognition based on deep residual networks. In: IEEE 42nd Annual Computer Software and Applications Conference (COMPSAC), vol 02, pp 408–412

Lu D, Triesch J (2019) Residual deep convolutional neural network for EEG signal classification in epilepsy. https://arxiv.org/abs/1903.08100

Srivastava RK, Greff K, Schmidhuber J (2015) Highway networks. arXiv:1505.00387 [cs.LG]

Yu X, Yu Z, Ramalingam S (2018) Learning strict identity mappings in deep residual networks. arXiv:1804.01661 [cs.CV]

He K, Zhang X, Ren S, Sun J (2016) Identity mappings in deep residual networks. European conference on computer vision. Springer International Publishing, Cham, pp 630–645

Zeng H, Yang C, Dai G, Qin F, Zhang J, Kong W (2018) EEG classification of driver mental states by deep learning. Cogn Neurodyn 12:597–606

Bridle JS (1990) Probabilistic interpretation of feedforward classification network outputs, with relationships to statistical pattern recognition. In: Soulié FF, Hérault J (eds) Neurocomputing. Springer, Heidelberg, pp 227–236

Cai G, Guo Y, Chen W, Zeng H, Zhou Y, Lu Y (2019) Neutrosophic set based deep learning in mammogram analysis. In: Neutrosophic set in medical image analysis, chap 14, pp 287–310

Vapnik V (2000) The nature of statistical learning theory. Springer-Verlag, New York

Cover T, Hart P (1967) Nearest neighbor pattern classification. IEEE Trans Inform Theory 13(1):21–27

Siuly S, Bajaj V, Sengur A, Zhang Y (2019) An advanced analysis system for identifying alcoholic brain state through EEG signals. Int J Autom Comput 16:737–747

Siuly S, Li Y (2014) A novel statistical framework for multiclass EEG signal classification. Eng Appl Artif Intell 34:154–167

Supriya S, Siuly S, Wang H, Zhang Y (2018) EEG Sleep stages analysis and classification based on weighed complex network features. IEEE Trans Emerg Top Comput Intell. https://doi.org/10.1109/TETCI.2018.2876529

Siuly S, Li Y (2015) Designing a robust feature extraction method based on optimum allocation and principal component analysis for epileptic EEG signal classification. Comput Methods Programs Biomed 119:29–42

Ford JM, Palzes VA, Roach BJ, Mathalon DH (2014) Did I do that? Abnormal predictive processes in schizophrenia when button pressing to deliver a tone. Schizophr Bull 40(4):804–812

Siuly S, Khare SK, Bajaj V, Wang H, Zhang Y (2020) A Computerized method for automatic detection of schizophrenia using EEG signals. IEEE Trans Neural syst Rehabilitation Eng 28(11):2390–2400. https://doi.org/10.1109/TNSRE.2020.3022715

Khare SK, Bajaj V, Siuly S, Sinha GR (2020) Classification of schizophrenia patients through empirical wavelet transformation using electroencephalogram signals. In: Bajaj V, Sinha GR (eds) Modelling and analysis of active biopotential signals in healthcare, vol 1. IOP Science, Bristol, pp 1–26. https://doi.org/10.1088/978-0-7503-3279-8ch1

Khare SK, Bajaj V (2021) A self-learned decomposition and classification model for schizophrenia diagnosis. Comput Methods Programs Biomed 211:106450

Khare SK, Bajaj V (2022) A hybrid decision support system for automatic detection of schizophrenia using EEG signals. Comput Biol Med. https://doi.org/10.1016/j.compbiomed.2021.105028

Khare SK, Bajaj V, Acharya UR (2021) SPWVD-CNN for automated detection of schizophrenia patients using EEG signals. IEEE Trans Instrum Meas. https://doi.org/10.1109/TIM.2021.3070608

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

All authors contributed to the study conception and design. Idea development, material preparation, data collection, analysis, and assessments were performed by SS, YG, OFA, YL, PW and HW. The first draft of the manuscript was written by SS, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical approval

This study used a publicly available dataset to test the performance of the proposed methodology. Therefore, no ethical approval is required.

Consent to participate

Not applicable.

Consent to publish

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Siuly, S., Guo, Y., Alcin, O.F. et al. Exploring deep residual network based features for automatic schizophrenia detection from EEG. Phys Eng Sci Med 46, 561–574 (2023). https://doi.org/10.1007/s13246-023-01225-8

DOI: https://doi.org/10.1007/s13246-023-01225-8