Abstract

Purpose

Breathing parameters change with activity and posture, but currently available solutions can perform measurements only during static conditions.

Methods

This article presents an innovative wearable sensor system consisting of three inertial measurement units that simultaneously estimates respiratory rate (RR) in static and dynamic conditions and performs human activity recognition (HAR) with the same sensing principle. Two units detect chest wall breathing-related movements (one on the thorax, one on the abdomen); the third, the reference unit, is placed on the lower back. All units compute the quaternions describing the subject’s movement and send data continuously to an app with the ANT transmission protocol. The 20 healthy subjects involved in the research (9 men, 11 women) were between 23 and 54 years old, with mean age 26.8 years, mean height 172.5 cm and mean weight 66.9 kg. Data from these subjects during different postures or activities were collected and analyzed to extract RR.

Results

Statistically significant differences between dynamic activities (“walking slow”, “walking fast”, “running” and “cycling”) and static postures were detected (p < 0.05), confirming the obtained measurements are in line with physiology even during dynamic activities. Data from the reference unit only and from all three units were used as inputs to artificial intelligence methods for HAR. When the data from the reference unit were used, the Gated Recurrent Unit was the best performing method (97% accuracy). With three units, a 1D Convolutional Neural Network was the best performing (99% accuracy).

Conclusion

Overall, the proposed solution shows it is possible to perform simultaneous HAR and RR measurements in static and dynamic conditions with the same sensor system.

Introduction

A wearable device is a technology that can be worn, incorporated in clothes or as an accessory, and provides a series of signals and data regarding the subject’s status and health, as well as data regarding the surrounding environment.1 By means of wearables, it is possible to obtain physiological parameters without interfering with daily life activities, to reduce the obtrusiveness to a minimum and to enable a continuous and extended monitoring. Wearables find applications in several fields, including health, wellbeing, and fitness.4

Examples of parameters which can be measured are body and skin temperature, respiratory rate (RR), heart rate (HR) and pulse rate (PR), arterial blood pressure (ABP), blood glucose concentration, galvanic skin response (GSR) or electrodermal activity (EDA), peripheral capillary oxygen saturation (SpO2), photoplethysmogram (PPG), electrocardiogram (ECG), electroencephalogram (EEG), electromyogram (EMG),18 as well as parameters regarding the surrounding environment such as temperature, humidity, air pressure, and concentrations of various pollutants.12

Wearables can be integrated in telemonitoring systems, which usually follow the so-called two-hop architecture.24 The name derives from the two steps of data transmission: the first from the sensors to a gateway, performed through a sensor-manager link technology, and the second from the gateway to the data management section, through Wi-Fi or cellular link technologies such as 4G and 5G.5 The resulting networks are called Wireless Body Area Networks, or WBANs.1

Remote Monitoring of Respiratory Parameters

In the healthcare field, the opportunity to identify abnormalities in RR is fundamental to forecast cardiac arrest,22 exacerbations,23 admissions to the Intensive Care Unit, and other adverse clinical events.39

Despite the relevance of RR as a prognostic factor, the current gold standard for measuring RR is counting the breaths performed in one minute through auscultation or observation, which is not suitable for prolonged monitoring outside the clinical environment. An alternative is the use of dedicated devices, but a limitation found in several studies is that physiological parameters are usually detected with spot measurements while the subject is at rest, although physical activity is known to influence cardiorespiratory function.21,33

Systems for continuous monitoring of breathing can use wearables based on different technologies: PPG-derived signals (e.g., in smartwatches31), respiratory inductance plethysmography (RIP),41 resistance-based sensors,11,19,42 capacitance-based sensors,32 inertial measurement units (IMUs),16,42 or fiber optic sensors.29 Some of these sensors can be embedded in garments.8 Although many solutions have been proposed, there is still a paucity of commercially available devices dedicated to respiratory parameters. The most common commercially available solutions are smartwatches with PPG sensors, but the PPG signal is reliable for RR estimation only in resting conditions, because of the excessive motion artifacts present when subjects perform dynamic activities. Moreover, because the intensity of the PPG-derived respiratory signal decreases with increasing RR, high RR is difficult to detect accurately from PPG signals, especially for values higher than 30 breaths per minute (bpm).27 Other technologies have different limitations. Most devices described in the literature that are based on chest wall movement acquire the respiratory signal with only one degree of freedom. However, different regions of the chest wall are known to contribute to breathing, and the level of contribution changes with posture.37 In RIP, two bands are applied, one at the thoracic level and one at the abdominal level, but slippage of the bands can lead to inaccurate readings.38

The advantage of using wearable devices is that they are not cumbersome and can be used to monitor physiological parameters during daily life activities and outside of clinical settings. However, as respiratory parameters are known to change during different activities and in different postures,20 having a system that combines respiratory parameters in static and dynamic conditions and human activity recognition (HAR) would provide even more clinically relevant information.

Activity Recognition Systems

The current wearable technologies that can be used to implement HAR can be sensor-based, vision-based, or radio-based. Sensor-based technologies are the ones that can be used without environmental constraints. Linear accelerations13 and angular velocities26 can be detected via micro-electro-mechanical systems (MEMS), which measure either capacitance changes or the deflection of magnetically excited comb structures. Their use is based on demonstrated relationships between accelerometer output and energy expenditure in studies on gait analysis and ergonomics.44 Barometric pressure sensors, on the other hand, can be particularly useful in fall detection.30 Data obtained from the employed sensors can then be fed to an artificial intelligence algorithm based on machine or deep learning techniques.

Aim of the Work

The present research work has three main goals: the first is to present an advanced prototype suitable for continuous monitoring of respiratory parameters in static and dynamic conditions; the second is to perform HAR from the same raw sensor data used for respiratory monitoring; the third is to use the knowledge of the performed activity to fine-tune an RR estimation algorithm and thus improve accuracy. The first goal was addressed by optimizing a previously validated IMU-based technology, while the second was addressed with artificial intelligence methods. Both are described in the "Materials and Methods" section. The third goal is presented from the post-processing point of view in the "Results" section, and a possible workflow for future implementations is discussed in the "Discussion" section.

Materials and Methods

Dataset

The 20 healthy subjects involved in the research (9 men, 11 women) were between 23 and 54 years old at the time of the study, with mean age 26.8 years, mean height 172.5 cm and mean weight 66.9 kg. The experimentation was approved by the Ethical Committee of Politecnico di Milano (Protocol number: 20/2020) and all participants signed an informed consent form.

The protocol, shown in Fig. 1, included seven static postures (sitting with support, sitting without support, supine, prone, left decubitus, right decubitus, standing) and five dynamic activities (walking slow at 4 km/h, walking fast at 6 km/h, running, climbing up and down the stairs, cycling). Each activity lasted 5 min. The walking and running activities were performed on a treadmill, while the cycling activity was performed on an ergometer.

Hardware, Firmware, and Data Transmission

The present work exploits a wearable respiratory Holter based on three Inertial Measurement Units (IMUs).2 Two sensor units are aimed at detecting chest wall breathing-related movements, one located on the thorax and the other on the abdomen; the last IMU is placed in a position not involved in respiratory motion but integral with body movement.15,16

Data from the 9-axis IMUs were previously validated to extract respiratory parameters in static conditions. An algorithm for the offline processing of the obtained data was developed and validated with Opto-Electronic Plethysmography (OEP) on healthy subjects.17 A comparison with OEP for the breathing frequency estimation demonstrated that the device based on the inertial measurement units (IMU-based device) provided optimal results in terms of mean absolute errors (< 2 breaths/min) and correlation (r > 0.963). However, raw data were never exploited in terms of HAR, which is of great interest in combination with respiratory parameters.

In this paper, the reference unit is placed on the lower back because its movement is integral with that of the trunk, but not involved in respiratory movement. The main components of each unit of the device are an inertial measurement unit (ICM-20948) and a microcontroller module with an integrated ANT transceiver (MDBT42Q, based on the nRF52832 microcontroller). The device is attached to the skin of the subject with disposable ECG electrodes. The three units composing the wearable system, once closed, are represented in Fig. 2 (top). In the same figure (bottom left), the thoracic and the abdominal units are shown while a subject is wearing them. The thoracic unit is placed at the level of the abdominal rib cage, while the abdominal unit is placed next to the belly button. Fig. 2 also shows the reference unit placed on the lower back of the same subject (bottom right).

Data coming from the three units are collected either by means of an ANT USB2 Stick that is plugged into a personal computer during the acquisitions or by an Android smartphone that supports ANT.

The raw sensor data read by the IMU consist of three accelerometer components, three gyroscope components and three magnetometer components, and they are sent to the nRF52832 at a rate of 40 Hz. The microcontroller then computes the 9-axis quaternion with the algorithm developed by Madgwick et al.28 and transmits one quaternion out of four to the USB2 stick or smartphone through the ANT communication protocol, resulting in a 10 Hz transmission frequency.

The USB2 Stick or the smartphone receives the data sent by the nRF52832 and is configured as the master, while the peripheral units work as the slaves. The topology of the network is called Shared Channel and is shown in Fig. 3. The master channel has a message rate of 30 Hz, so that each of the three units is addressed in its own time slot at 10 Hz.

Each component of a transmitted quaternion is a floating-point value ranging from −1 to 1 and is transmitted in one byte of the data payload. The payload also contains a counter, incremented every four quaternions computed at 40 Hz (that is, once per transmission), which identifies the n-th transmission.
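The decimation and packing described above can be illustrated with a minimal sketch. The source does not give the quantization rule or the payload layout, so the scale factor of 127, the little-endian `4bB` layout, the 8-bit counter, and the function names `pack_quaternion`, `unpack_quaternion` and `decimate` are all assumptions made for illustration only.

```python
import struct

SCALE = 127  # assumed scaling: maps [-1, 1] onto the signed-byte range


def pack_quaternion(q, counter):
    """Pack four components in [-1, 1] and a counter into 5 bytes (hypothetical layout)."""
    comps = [max(-SCALE, min(SCALE, round(c * SCALE))) for c in q]
    return struct.pack("<4bB", *comps, counter % 256)


def unpack_quaternion(payload):
    """Inverse of pack_quaternion: returns (components, counter)."""
    *comps, counter = struct.unpack("<4bB", payload)
    return [c / SCALE for c in comps], counter


def decimate(samples, factor=4):
    """Keep one sample out of `factor` (40 Hz -> 10 Hz when factor is 4)."""
    return samples[::factor]
```

With one byte per component the resolution is about 1/127, which is the price paid for fitting the whole quaternion and the counter in a single short payload.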

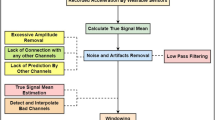

Respiratory Signal Processing

The extraction of the respiratory parameters from the data collected by the units is performed offline using software that implements the previously validated algorithm by Cesareo et al.17 In the cited work, the estimation had a mean absolute error < 2 breaths/min with respect to a gold standard measurement (optoelectronic plethysmography) and was tested only in static conditions. The parameters are the same as those used in the previously validated algorithm in static conditions.

Preliminary validation data during dynamic activities (walking and running at different speeds) showed good agreement between the presented IMU-based system and a Cosmed K5 metabolic cart, as shown in a work by Angelucci et al.7 For dynamic conditions, the algorithm by Cesareo et al.17 was fine-tuned by including the knowledge of the performed activity in the respiratory signal processing.6 In particular, the variations are in the cut-off frequencies of some of the implemented filters. The whole processing algorithm can be subdivided into four main parts: pre-processing, dimension reduction, spectrum analysis, and processing.

In the pre-processing phase, the data are divided by unit of origin and organized in four arrays, in which missing data are replaced by interpolation. The quaternions are created by combining the arrays. Then, the window to be analyzed is manually selected to avoid the transient phase necessary for the quaternion to stabilize. The same selection was used to train the HAR algorithm that is presented in the next section. An example of window selection for a single unit is shown in Fig. 4.

Afterwards, the quaternion product is computed, providing the orientations of the thoracic and abdominal unit referred to the orientation of the reference unit. (1) and (2) show how these computations are performed:

‘Th’ indicates the thoracic unit, ‘Ab’ the abdominal unit, and ‘Ref’ the reference unit. \({}_{y}{}^{x}\widehat{q}\) is the quaternion describing the position of a generic point x with respect to a generic point y, and all quaternions are expressed in the same way. For instance, \({}_{{{\text{Ref}}}}^{{{\text{Th}}}} \hat{q}\) describes the position of the thoracic unit with respect to the reference unit. * refers to the quaternion conjugate, and ⊗ to the quaternion multiplication.
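As an illustration of this step, the sketch below computes the orientation of a chest unit relative to the reference unit using the conjugate and the quaternion product. It assumes scalar-first unit quaternions `[w, x, y, z]` and the product order `conj(q_ref) ⊗ q_unit`; the order used in the original equations may differ, and the function names are hypothetical.

```python
import numpy as np


def q_conj(q):
    """Conjugate of a scalar-first quaternion [w, x, y, z]."""
    w, x, y, z = q
    return np.array([w, -x, -y, -z])


def q_mult(a, b):
    """Hamilton product a ⊗ b of two scalar-first quaternions."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return np.array([
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    ])


def relative_orientation(q_unit, q_ref):
    """Orientation of a chest unit expressed relative to the reference unit.

    The product order is an assumption; the opposite order is equally common.
    """
    return q_mult(q_conj(q_ref), q_unit)
```

When the chest unit and the reference unit have the same orientation, the result is the identity quaternion, which is why trunk movements shared by all units largely cancel out.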

After the quaternion product, the non-respiratory movements are reduced because angular changes are referred to the reference unit, which does not detect breathing-related motions but is integral with trunk movement. Then, the baseline of each quaternion component is computed with a moving average over 97 samples and subtracted from it to remove the residual non-breathing movement. The resulting components are the input for the dimension reduction block.
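A minimal sketch of the baseline removal follows. It assumes a centered moving average with edge padding; the source states only the window length of 97 samples, so the handling of the edges and the function name are assumptions.

```python
import numpy as np


def remove_baseline(x, window=97):
    """Subtract a centered moving-average baseline from a 1-D signal.

    Edge padding (repeating the first and last sample) is an assumption;
    it keeps the output the same length as the input.
    """
    x = np.asarray(x, dtype=float)
    kernel = np.ones(window) / window
    padded = np.pad(x, window // 2, mode="edge")
    baseline = np.convolve(padded, kernel, mode="valid")
    return x - baseline
```

At the 10 Hz transmission rate, 97 samples correspond to about 9.7 s, so components slower than a few breaths are treated as baseline.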

To reduce the dimension of the dataset, Principal Component Analysis (PCA)36,43 is performed. The first component, the one that explains the greatest amount of variance, is computed for the thorax and for the abdomen, is considered as the respiratory signal, and constitutes the basis for the spectrum analysis.
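The dimension reduction can be sketched as follows, assuming the four baseline-corrected quaternion components of one unit are stacked as the columns of an array. PCA is computed here through a singular value decomposition of the centered data; the function name and the returned explained-variance ratio are illustrative additions.

```python
import numpy as np


def first_principal_component(components):
    """Project an (n_samples, n_components) array on its first principal axis.

    Returns the projected 1-D signal and the fraction of variance it explains.
    """
    centered = components - components.mean(axis=0)
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s[0] ** 2 / np.sum(s ** 2)
    return centered @ vt[0], explained
```

The sign of a principal component is arbitrary, so the extracted signal may be inverted with respect to inspiration and expiration; this does not affect frequency estimation.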

The generated signals are filtered with a third-order Savitzky–Golay FIR (Finite Impulse Response) smoothing filter with a window length of 31 samples. This filter uses the linear least squares method to fit successive subsets of adjacent data with a third-order polynomial. In this way, the noise is decreased without altering the shape of the signal or the height of its peaks. Then, the mean (fmean) and the standard deviation (fstd) of the inverse of the distances between subsequent peaks are computed to obtain a frequency estimate. fmean and fstd are used to compute fthresh, and the procedure is performed both for the thoracic component and the abdominal component as in (3):

\( f_{{\text{thresh}}\_{\text{min}}} \) is arbitrarily set at different values for static postures (0.05 Hz, as in Cesareo’s algorithm) or dynamic activities (0.2 Hz for walking and cycling, 0.4 Hz for running), so that a better filtering of low-frequency components of dynamic activities is guaranteed.

Once the threshold frequencies of both the thoracic and the abdominal unit are obtained, the low-frequency threshold is computed as the minimum between the abdominal low threshold and the thoracic low threshold. The use of a low threshold helps in the identification of the power spectral density (PSD) peak related to the RR and does not consider very low frequency peaks, often caused by movement artifacts.
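The threshold computation can be sketched as below. The smoothing parameters and the minimum thresholds are those stated in the text; the rule `max(f_thresh_min, f_mean - f_std)` is an assumption standing in for equation (3), which is not reproduced here, and the function names and the stair-free condition labels are hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks, savgol_filter

FS = 10.0  # transmission rate of the quaternions (Hz)

# Minimum thresholds stated in the text (Hz)
F_THRESH_MIN = {"static": 0.05, "walking": 0.2, "cycling": 0.2, "running": 0.4}


def rough_frequency(signal, fs=FS):
    """Mean and standard deviation of the inverse peak-to-peak distances (Hz)."""
    smooth = savgol_filter(signal, window_length=31, polyorder=3)
    peaks, _ = find_peaks(smooth)
    inst_freq = fs / np.diff(peaks)
    return inst_freq.mean(), inst_freq.std()


def low_threshold(f_mean, f_std, condition):
    """Assumed combination rule: never go below the condition-specific minimum."""
    return max(F_THRESH_MIN[condition], f_mean - f_std)


def combined_low_threshold(thr_thorax, thr_abdomen):
    """The text takes the minimum of the thoracic and abdominal thresholds."""
    return min(thr_thorax, thr_abdomen)
```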

Subsequently, the PSD estimate is computed using Welch's method, with a Hamming window, a window size of 300 samples, and an overlap of 50 samples.
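With SciPy, this estimate can be sketched as follows. The window type, segment length and overlap are taken from the text; the 10 Hz sampling rate follows from the transmission frequency, and the function name is hypothetical.

```python
import numpy as np
from scipy.signal import welch

FS = 10.0  # sampling rate of the received quaternions (Hz)


def respiratory_psd(signal, fs=FS):
    """Welch PSD with a Hamming window, 300-sample segments, 50-sample overlap."""
    freqs, psd = welch(signal, fs=fs, window="hamming", nperseg=300, noverlap=50)
    return freqs, psd
```

With 300-sample segments at 10 Hz, the frequency resolution is 10/300 ≈ 0.033 Hz, that is, about 2 breaths/min.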

The PSD maximum in the interval between the computed low threshold and a maximum (1 Hz for static postures, 0.75 Hz for walking and cycling, 1.4 Hz for running) is identified (fpeak). This value is used to build the adaptive band-pass filter settings (centered in fpeak). The upper and lower cut-off frequencies, for both thorax and abdomen, are obtained as in (4) and (5):

The final processing block comprises all the processes intended to extract breathing frequency and other respiratory parameters from the signals obtained after the dimension reduction block.

The first step is the application of the band-pass filter with the previously set cut-off frequencies \({f}_{\text{U}}\) and \({f}_{\text{L}}\). Since the frequencies are dependent on \({f}_{\text{peak}}\), the result is an adaptive filter, based on the specific analysed recording.
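A sketch of such an adaptive filter is given below. The source does not state the filter family, its order, or the band edges defined by (4) and (5), so the zero-phase Butterworth design, `order=2`, and the symmetric `half_width` around the spectral peak are assumptions chosen only to illustrate the idea.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def adaptive_bandpass(signal, f_peak, half_width=0.1, fs=10.0, order=2):
    """Zero-phase band-pass centered on f_peak (all design choices are assumptions)."""
    f_low = max(f_peak - half_width, 0.01)       # keep the lower edge above 0 Hz
    f_high = min(f_peak + half_width, 0.45 * fs)  # keep the upper edge below Nyquist
    sos = butter(order, [f_low, f_high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, signal)
```

Because the pass band follows the spectral peak of each recording, the same code adapts to slow breathing at rest and fast breathing during exercise.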

Then, a parametric tuning based on the \({f}_{\text{peak}}\) value is performed. This is necessary for the subsequent steps of filtering and of maxima and minima detection. In particular, the parameters involved are the window length (in samples) of the third-order Savitzky–Golay filter, the minimum peak distance, and the minimum peak prominence. The peak detection keeps the tallest peak in the signal and ignores all other peaks within the chosen distance from it, while the minimum prominence threshold sets a measure of the relative importance a peak must have to be retained; a more detailed description of the parameters can be found in the work by Cesareo et al.17

Afterwards, the filtered signals are further smoothed with a third-order Savitzky–Golay FIR filter to optimize the subsequent detection of maxima and minima points, which are identified by applying the parameters previously set. In addition to the thoracic and the abdominal signals, the process is repeated for the sum of the two signals after they have been filtered with the Savitzky–Golay filter. RR is thus obtained breath-by-breath, and the values obtained for each posture or activity are reported in the "Results" section.
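The breath-by-breath computation can be sketched with SciPy's peak detection. The default values of `min_distance_s` and `min_prominence` are placeholders, since in the described algorithm these parameters are tuned from the spectral peak; the function name is hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks


def breath_by_breath_rr(signal, fs=10.0, min_distance_s=1.0, min_prominence=0.1):
    """Respiratory rate (breaths/min) for each pair of consecutive maxima."""
    distance = max(1, int(min_distance_s * fs))
    peaks, _ = find_peaks(signal, distance=distance, prominence=min_prominence)
    breath_durations = np.diff(peaks) / fs  # seconds between consecutive maxima
    return 60.0 / breath_durations
```

For a clean 0.25 Hz oscillation sampled at 10 Hz, maxima are 40 samples apart, so every breath yields 15 breaths/min.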

Human Activity Recognition Algorithm

After the window selection, shown in Fig. 4, the data processing to train the activity recognition algorithm is different from the one for the respiratory analysis. All parameters of the algorithms are determined empirically to maximize the outcome.

The next step involves the creation of a single large dataset containing all the activities, properly labeled, for all the subjects. In a first phase, the algorithm is run on the data of the reference unit, because it can be considered representative of the subject’s positions. Secondly, the algorithm is trained on the signals coming from all three units (thoracic, abdominal, and reference). In both cases, the dataset is obtained by merging the tasks “sitting without support” and “sitting with support” in a single label called "sitting", and the tasks “walking at 4 km/h” and “walking at 6 km/h” in a label called "walking", so the final dataset has 10 labels. This is done to increase the variability of the signal during the training process, so that the algorithm can be more robust in distinguishing a person sitting with a back support from a supine one, and a fast walk from a run.

The resulting dataset is unbalanced, because the labels "sitting" and "walking" have about twice the data of the other labels. The imbalance is kept in the case with one unit, while the data are balanced for the training with three units. The balancing procedure consists of reducing the samples of each label to the number available for the activity with the lowest amount of data.
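This kind of balancing can be sketched as random undersampling. The source does not say how the retained samples are chosen, so the random selection, the seed, and the function name are assumptions.

```python
import numpy as np


def balance_by_undersampling(labels, seed=42):
    """Return sorted indices that keep the same number of samples for every label."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(labels, return_counts=True)
    n_keep = counts.min()  # size of the least represented label
    keep = np.concatenate([
        rng.choice(np.flatnonzero(labels == c), n_keep, replace=False)
        for c in classes
    ])
    return np.sort(keep)
```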

The implemented preprocessing steps are data standardization, label encoding and segmentation. Standardization is performed with (6), \(z = (x - \mu)/\sigma\), so that data are centered on 0 and properly scaled. \(\mu\) is the mean and \(\sigma\) is the standard deviation.

The data are then segmented into non-overlapping windows of 200 samples, equal to 20 s of recording.

After these steps, the data are split into training and test sets: 80% of the data are used for the training set and the remaining 20% for the test set. The seed of the random generator was set to 42.
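The preprocessing chain (standardization, segmentation, random split) can be sketched with NumPy alone. The library actually used for the split is not stated in the text, so the permutation-based split below is an assumption, as is labeling each window with the label of its first sample; all function names are hypothetical.

```python
import numpy as np


def standardize(data):
    """Column-wise z-score: zero mean and unit standard deviation."""
    return (data - data.mean(axis=0)) / data.std(axis=0)


def segment(data, labels, window=200):
    """Cut (n_samples, n_channels) data into non-overlapping windows.

    Assumes every window contains a single activity, so the label of the
    first sample is used for the whole window.
    """
    n = len(data) // window
    X = data[: n * window].reshape(n, window, data.shape[1])
    y = np.asarray(labels)[: n * window].reshape(n, window)[:, 0]
    return X, y


def split_indices(n_windows, test_fraction=0.2, seed=42):
    """Reproducible random split into training and test window indices."""
    order = np.random.default_rng(seed).permutation(n_windows)
    n_test = int(round(n_windows * test_fraction))
    return order[n_test:], order[:n_test]
```

At 10 Hz, a 200-sample window spans 20 s, so a 5-minute activity yields 15 windows per subject.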

In machine learning methods the feature extraction must be performed before the model training. The selected features are both in time domain and frequency domain for one unit, while only the ones in time domain were used for the three units.

The time domain features are extracted from the time series of the signal and are the following: mean, standard deviation, variance, kurtosis, skewness, peak-to-peak distance, median, interquartile range. The frequency domain features are extracted from the Fast Fourier Transform (FFT) of the signal and are the following: mean, standard deviation, skewness, maxima and minima of the FFT, mean and maximum of the power spectral density.
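The listed features can be computed for one window of one signal channel as sketched below. Interpreting "peak-to-peak distance" as the difference between the maximum and the minimum, using excess kurtosis, and estimating the power spectral density as the squared FFT magnitude divided by the window length are assumptions; the function names are hypothetical.

```python
import numpy as np
from scipy.stats import iqr, kurtosis, skew


def time_features(x):
    """Mean, std, variance, excess kurtosis, skewness, peak-to-peak, median, IQR."""
    return np.array([
        x.mean(), x.std(), x.var(), kurtosis(x), skew(x),
        x.max() - x.min(), np.median(x), iqr(x),
    ])


def frequency_features(x):
    """Mean, std, skewness, max and min of the FFT magnitude; mean and max PSD."""
    magnitude = np.abs(np.fft.rfft(x))
    psd = magnitude ** 2 / len(x)  # simple periodogram-style estimate
    return np.array([
        magnitude.mean(), magnitude.std(), skew(magnitude),
        magnitude.max(), magnitude.min(), psd.mean(), psd.max(),
    ])
```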

Three machine learning methods are used: a K-Nearest Neighbor classifier (KNN), a Random Forest classifier (RF) and a Support Vector Machine (SVM).

In the case of the KNN classifier, the metric chosen for the computation of the distance is the Euclidean metric.34 The optimal number of neighbors K is around 5, since the accuracy score decreased for larger values.

In the case of the RF classifier,40 the splitting rule to create the nodes of the trees that compose the forest is the Gini Criterion. Afterwards, in each node the corresponding attribute is chosen by minimizing the impurity, as it is traditionally done with RF classifiers.

Since the data of this research project cannot be separated linearly in the original space, to develop a SVM a kernel is used. In this case, the Radial Basis Function Kernel is used, which can be expressed mathematically as (7):

where \(\sigma\) is the hyperparameter that sets the kernel width (\(\sigma^2\) being the variance) and \(\Vert {X}_{1}-{X}_{2}\Vert\) is the Euclidean distance between two points \({X}_{1}\) and \({X}_{2}\). In this case, distance is used as an equivalent of dissimilarity: as the distance between the points increases, they become less similar. By default, \(\sigma\) is taken equal to one, so the kernel is a bell-shaped curve that decays rapidly as the distance increases and is approximately 0 for distances greater than 4.
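The behavior described here can be checked numerically with the standard form of the Radial Basis Function kernel, \(K(X_1, X_2) = \exp(-\Vert X_1 - X_2\Vert^2 / (2\sigma^2))\). This form is assumed to match equation (7), which is not reproduced here; the function name is hypothetical.

```python
import numpy as np


def rbf_kernel(x1, x2, sigma=1.0):
    """Standard RBF kernel: exp(-||x1 - x2||^2 / (2 * sigma^2))."""
    squared_distance = np.sum((np.asarray(x1, dtype=float) - np.asarray(x2, dtype=float)) ** 2)
    return np.exp(-squared_distance / (2.0 * sigma ** 2))
```

With \(\sigma = 1\), two identical points give a similarity of 1, while two points at distance 4 give about 0.0003, which is why the kernel is treated as vanishing beyond that distance.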

Five additional networks are created using deep learning methods; their characteristics are shown in detail in Fig. 5. The networks used are the following: a 1D Convolutional Neural Network (1DCNN), a 2D Convolutional Neural Network (2DCNN), a single-layer Long Short Term Memory (BASE LSTM), a multi-layer Long Short Term Memory (MULTI LSTM) and a Gated Recurrent Unit (GRU).

All networks use the Sequential Model to construct a plain stack of layers where each layer has exactly one input tensor and one output tensor. During the optimization part of the algorithm, the error at the current state must be iteratively estimated. For all networks, the chosen loss function is the Sparse Multiclass Cross-Entropy Loss as in (8), used to calculate the model’s loss such that the weights can be updated to minimize the loss on subsequent evaluations. The Cross-Entropy loss is defined as:

where \(w\) refers to the model parameters, \({y}_{i}\) is the true label and \({\widehat{y}}_{i}\) is the predicted label.
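For reference, the sketch below evaluates a sparse multiclass cross-entropy on integer labels and predicted class probabilities. It uses the usual definition, the mean over samples of the negative log-probability assigned to the true class, which is assumed to correspond to equation (8); the function name and the clipping constant are illustrative.

```python
import numpy as np


def sparse_categorical_crossentropy(y_true, y_prob, eps=1e-12):
    """Mean negative log-probability assigned to the true class.

    y_true: integer class indices, shape (n_samples,)
    y_prob: predicted probabilities, shape (n_samples, n_classes)
    """
    y_true = np.asarray(y_true)
    y_prob = np.clip(np.asarray(y_prob, dtype=float), eps, 1.0)  # avoid log(0)
    picked = y_prob[np.arange(len(y_true)), y_true]
    return -np.mean(np.log(picked))
```

A network that predicts a uniform distribution over the 10 labels has a loss of ln(10) ≈ 2.30, which is a useful baseline when reading training curves.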

After that, to reduce the loss, an optimizer is used to adjust the neural network’s attributes, such as weights and learning rate. The optimizer used for the CNNs and the GRU is Adam,25 which is based on adaptive estimates of lower-order moments. For the LSTM networks, the RMSprop optimizer is used. The batch size is a hyperparameter that defines the number of samples taken from the training dataset to train the network before updating the internal model parameters; the chosen value is 16.

Questionnaire

An evaluation questionnaire was given to the subjects participating in the study to collect impressions about usability, acceptance, and wearability of the wearable system. It is based on the System Usability Scale (SUS)14 and consists of 10 items, with odd-numbered items phrased positively and even-numbered items phrased negatively. The evaluation criteria of the SUS usability questionnaire were maintained in this application. In particular, the 10 items included in the ad-hoc questionnaire for the device evaluation are listed in Table 1.

The items are presented as 5-point scales numbered from 1 (“Strongly disagree”) to 5 (“Strongly agree”) and the subject had to give a score to each item. Age, gender, weight, and height were asked at the beginning of the questionnaire.
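Since the SUS evaluation criteria were maintained, the score of one respondent can be computed with the standard SUS rule: positively phrased (odd) items contribute the response minus 1, negatively phrased (even) items contribute 5 minus the response, and the sum is multiplied by 2.5 to obtain a 0 to 100 scale. The sketch below assumes the ad-hoc items follow this rule exactly; the function name is hypothetical.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:          # odd items are phrased positively
            total += response - 1
        else:                      # even items are phrased negatively
            total += 5 - response
    return total * 2.5
```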

Results

Respiratory Rate

RR was studied for the 20 involved subjects in the different postures and activities. Due to the unfavorable signal-to-noise ratio, parameters could not be extracted in the case of climbing stairs with the previously validated algorithm, therefore those values are not included in the analysis. The dataset presented puts together the two sitting positions but separates “walking slow” and “walking fast” to show the sensitivity of the respiratory analysis algorithm to the different levels of effort. The boxplots of the median values obtained for each subject in the different conditions are shown in Fig. 6.

Because both the Shapiro–Wilk normality test and the Brown–Forsythe equal variance test failed (p < 0.05), the distributions were compared with one another with a non-parametric Friedman test. The differences among the groups were greater than would be expected by chance; there is a statistically significant difference (p ≤ 0.001).

To isolate the group or groups that differ from the others, the Bonferroni t-test was used as multiple comparison procedure, and the p-values obtained with these comparisons were analyzed. The dynamic activities (“walking slow”, “walking fast”, “running” and “cycling”) differ significantly from the static postures (p < 0.05 in all cases), but not always from one another. “Walking slow” and “walking fast” do not significantly differ from “cycling” (p = 1.000) or from each other (p = 1.000). This result confirms what is known in the literature,9,10 i.e. that RR increases during physical activity and that this phenomenon is more evident when the activity is more demanding (during “running”). Also, there is a statistically significant difference between the “supine” and the “prone” positions (p = 0.045) and between the “lying right” and the “prone” positions (p = 0.049). This is likely because the processing algorithm is designed to analyze the movement of the two units on the front of the body with respect to the reference unit, while in the prone position the dorsal movement also contributes to ventilation.3

Human Activity Recognition Algorithm

The results obtained with the previously introduced methods with one unit are reported in Table 2.

Looking at the accuracy, the best performing model is the GRU.

The results obtained with the previously introduced methods with three units are reported in Table 3. From the confusion matrix that can be obtained considering every individual position or activity, the highest confusion happens when the sitting position is predicted as supine, and vice versa. This is observed both in the case with one unit and in the case with three units. This is probably because the posture "sitting" includes data obtained when the subjects were sitting with and without a back support.

A direct comparison between the HAR methods developed with one unit and those developed with three is shown in Fig. 7.

In this case, all methods have better performances when compared to the case with only one unit. The best performing one is the 1DCNN. It must be considered also that the features extracted for the three units are only concerning time, which suggests that the inclusion of the frequency features could further improve the accuracy.

Questionnaire

The subjects found the device easy to wear and place; however, most stated that they needed support to manage the device. Nearly eighty percent asserted that the attachment method makes placement easier and improves wearability. Moreover, nobody reported irritation or itching in the device mounting areas, and almost no one wanted to take the device off during activities. Around 70% of the participants believe the device would not interfere with their daily activities and that they would be able to sleep while wearing it. Almost everyone believes that it is possible to wear the device for a long time, but only a few say they can use it independently, probably because the reference unit is placed on the lower back, so another person is needed to place the unit properly at the beginning of each acquisition. The actual results are shown in Fig. 8 in comparison to the ideal ones.

Discussion

In the presented sensor system,15,16 two sensor units are aimed at detecting chest wall breathing-related movements, one located on the thorax and the other on the abdomen; the last IMU is placed in a position not involved in respiratory motion but integral with body movement, most often on the lower back. The influence of posture on chest wall motion has been extensively studied in the literature. It was previously shown that most of the chest wall volume change is distributed in the thoracic compartment in vertical postures and in the abdominal compartment in horizontal postures.37 For this reason, the system’s configuration is particularly advantageous for a thorough analysis of the chest movement during breathing in different postures, since it can account for the different contributions of the chest wall compartments in different positions.

The algorithm to extract breathing parameters produced results that confirm what is known in the literature,9,35 i.e., that the breathing frequency is higher in dynamic conditions and increases with increasing effort. Furthermore, it must be noted that most systems are not able to provide measurements of RR during demanding dynamic activities like running.

With a previous knowledge of the performed activity, the respiratory signal processing can be fine-tuned, and this system is able to accurately measure this signal even during dynamic activity. A future improvement of the project includes a new artificial intelligence algorithm that combines HAR to automatically decide how to process respiratory data.

A drawback of the current signal processing algorithm is that it requires manual selection of the analysis window, but this can be easily overcome by implementing a sliding window algorithm.

This system is advantageous both for sports and medical applications, due to its ability to measure this parameter in a broad range of situations. However, the signal-to-noise ratio is too low while climbing the stairs and the algorithm could not be applied.

The results obtained showed an overall good capability to recognize different activities, independently of the age or the gender of the subjects. Although only time-domain features, and not frequency-domain ones, were used in the case with three units, the comparison between the use of a single unit and the use of three showed that the second case works better, with higher accuracy and f1-score both for machine and deep learning methods. The dataset is however small and was collected during dynamic activities with a prominent frequency component, like running or walking at a fixed speed. Data should be collected from more subjects and in more diverse conditions to make the algorithm robust for use outside of laboratory settings. A final consideration regards the first step of the data preparation: removing the initial transient might have led to an overestimation of the accuracy.

This work can be considered original with respect to the state of the art of HAR because many studies work with the output data of accelerometers and gyroscopes, while few use only quaternion data. The three units send only quaternion data, which reduces the dimension of the dataset (4 values instead of the 9 of the IMU). Using only the quaternion makes it possible to send all the needed data in a single packet without losing information. Quaternions distinguish quite well between orientation-related activities, such as lying left and lying right, or supine and prone. It is also worth noting that the device combines breath analysis with activity recognition, making it very innovative.
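To make concrete why a single quaternion is enough to separate orientation-related postures, the sketch below rotates two body-fixed axes into the world frame and inspects their vertical components. It is only an illustration under stated assumptions, not the classifier used in this work: the quaternion is assumed to be a unit quaternion (w, x, y, z) mapping sensor-frame vectors to a world frame with z pointing up, the sensor z axis is assumed to point out of the chest, the sensor x axis is assumed to point toward the subject's left, and the function names are hypothetical.

```python
import numpy as np

# Assumed sensor-frame axes (hypothetical mounting convention).
ANTERIOR_AXIS = np.array([0.0, 0.0, 1.0])  # points out of the chest
LEFT_AXIS = np.array([1.0, 0.0, 0.0])      # points toward the subject's left

def rotate_vector(q, v):
    """Rotate vector v by the unit quaternion q = (w, x, y, z)."""
    q = np.asarray(q, dtype=float)
    q = q / np.linalg.norm(q)               # guard against small normalization errors
    w, u = q[0], q[1:]
    # Expanded form of q * v * conj(q) for a unit quaternion.
    return v + 2.0 * w * np.cross(u, v) + 2.0 * np.cross(u, np.cross(u, v))

def classify_lying_posture(q):
    """Label a lying posture from the vertical components of two body axes.

    Only meaningful when the subject is known to be lying down; upright
    postures would need additional rules.
    """
    anterior_up = rotate_vector(q, ANTERIOR_AXIS)[2]
    left_up = rotate_vector(q, LEFT_AXIS)[2]
    if abs(anterior_up) >= abs(left_up):
        return "supine" if anterior_up > 0 else "prone"
    # Left axis pointing down means the subject lies on the left side.
    return "lying left" if left_up < 0 else "lying right"

if __name__ == "__main__":
    half = np.sqrt(0.5)
    print(classify_lying_posture((1.0, 0.0, 0.0, 0.0)))    # chest axis along world z
    print(classify_lying_posture((half, 0.0, half, 0.0)))  # +90 degrees about y
```

Because the four quaternion components already encode the full orientation, no accelerometer, gyroscope or magnetometer channel is needed for this kind of distinction.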

A limitation of this work is that the respiratory parameters were not compared with a gold standard, so the accuracy of the adapted algorithm cannot be assessed. However, the technical feasibility of this solution was demonstrated. A trial on healthy subjects during dynamic activities is needed to fully validate the algorithm for dynamic activities. Subsequently, a trial on patients can provide physiological results that are clinically significant and that can be effectively used for telemedicine purposes without the supervision of the clinician. The proposed device could be applied in the case of chronic respiratory diseases, such as COPD or asthma, but also in cardiac diseases in which RR is predictive of an adverse event, such as heart failure or cardiac arrest. It is also possible to test this solution for home monitoring of the acute phase, the rehabilitation phase, and the long-term clinical outcomes of COVID-19. A feature needed for patient monitoring is the generation of real-time alerts; however, a pilot study is needed to gather the clinical data required to determine which thresholds or trends constitute a critical event.

References

Aliverti, A. Wearable technology: role in respiratory health and disease. Breathe. 13:e27–e36, 2017.

Aliverti, A., and A. Cesareo. A wearable device for the continuous monitoring of the respiratory rate. 2020.

Aliverti, A., R. Dellacà, P. Pelosi, D. Chiumello, L. Gattinoni, and A. Pedotti. Compartmental analysis of breathing in the supine and prone positions by optoelectronic plethysmography. Ann. Biomed. Eng. 29:60–70, 2001.

Aliverti, A., M. Evangelisti, and A. Angelucci. Wearable tech for long-distance runners. In: The Running Athlete: A Comprehensive Overview of Running in Different Sports, edited by G. L. Canata, H. Jones, W. Krutsch, P. Thoreux, and A. Vascellari. Berlin: Springer, 2022, pp. 77–89.

Angelucci, A., and A. Aliverti. Telemonitoring systems for respiratory patients: technological aspects. Pulmonology. 26:221–232, 2020.

Angelucci, A., A. Aliverti, D. Froio, and F. Moro. Metodo per il monitoraggio della frequenza respiratoria di una persona. Patent: 102021000029204, 2021.

Angelucci, A., F. Camuncoli, M. Galli, and A. Aliverti. A wearable system for respiratory signal filtering based on activity: a preliminary validation. IEEE Int. Workshop Sport. 2022. https://doi.org/10.1109/star53492.2022.9860001.

Angelucci, A., M. Cavicchioli, I. A. Cintorrino, G. Lauricella, C. Rossi, S. Strati, and A. Aliverti. Smart textiles and sensorized garments for physiological monitoring: a review of available solutions and techniques. Sensors (Switzerland). 21:1–23, 2021.

Angelucci, A., D. Kuller, and A. Aliverti. A home telemedicine system for continuous respiratory monitoring. IEEE J. Biomed. Heal. Informatics. 2020. https://doi.org/10.1109/JBHI.2020.3012621.

Angelucci, A., D. Kuller, and A. Aliverti. Respiratory rate and tidal volume change with posture and activity during daily life. Eur. Respir. J. 56:2130, 2020.

Antonelli, A., D. Guilizzoni, A. Angelucci, G. Melloni, F. Mazza, A. Stanzi, M. Venturino, D. Kuller, and A. Aliverti. Comparison between the Airgo™ device and a metabolic cart during rest and exercise. Sensors. 2020.

Bernasconi, S., A. Angelucci, and A. Aliverti. A scoping review on wearable devices for environmental monitoring and their application for health and wellness. Sensors. 22:5994, 2022.

Bouten, C. V. C., K. T. M. Koekkoek, M. Verduin, R. Kodde, and J. D. Janssen. A triaxial accelerometer and portable data processing unit for the assessment of daily physical activity. IEEE Trans. Biomed. Eng. 44:136–147, 1997.

Brooke, J. SUS-A quick and dirty usability scale. Usability Eval. Ind. 189:4–7, 1996.

Cesareo, A., E. Biffi, D. Cuesta-Frau, M. G. D’Angelo, and A. Aliverti. A novel acquisition platform for long-term breathing frequency monitoring based on inertial measurement units. Med. Biol. Eng. Comput. 58:785–804, 2020.

Cesareo, A., S. Gandolfi, I. Pini, E. Biffi, G. Reni, and A. Aliverti. A novel, low cost, wearable contact-based device for breathing frequency monitoring. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. EMBS 2402–2405, 2017. https://doi.org/10.1109/EMBC.2017.8037340

Cesareo, A., Y. Previtali, E. Biffi, and A. Aliverti. Assessment of breathing parameters using an inertial measurement unit (IMU)-based system. Sensors (Switzerland). 19:1–24, 2019.

Chan, M., D. Estève, J.-Y. Fourniols, C. Escriba, and E. Campo. Smart wearable systems: current status and future challenges. Artif. Intell. Med. 56:137–156, 2012.

Chu, M., T. Nguyen, V. Pandey, Y. Zhou, H. N. Pham, R. Bar-Yoseph, S. Radom-Aizik, R. Jain, D. M. Cooper, and M. Khine. Respiration rate and volume measurements using wearable strain sensors. NPJ Digit. Med. 2:1–9, 2019.

Contini, M., A. Angelucci, A. Aliverti, P. Gugliandolo, B. Pezzuto, G. Berna, S. Romani, C. C. Tedesco, and P. Agostoni. Comparison between PtCO2 and PaCO2 and derived parameters in heart failure patients during exercise: a preliminary study. Sensors. 21:6666, 2021.

Faria, I., C. Gaspar, M. Zamith, I. Matias, R. C. D. Neves, F. Rodrigues, and C. Bárbara. TELEMOLD project: oximetry and exercise telemonitoring to improve long-term oxygen therapy. Telemed. e-Health. 20:626–632, 2014.

Fieselmann, J. F., M. S. Hendryx, C. M. Helms, and D. S. Wakefield. Respiratory rate predicts cardiopulmonary arrest for internal medicine inpatients. J. Gen. Intern. Med. 8:354–360, 1993.

Gálvez-Barrón, C., F. Villar-Álvarez, J. Ribas, F. Formiga, D. Chivite, R. Boixeda, C. Iborra, and A. Rodríguez-Molinero. Effort oxygen saturation and effort heart rate to detect exacerbations of chronic obstructive pulmonary disease or congestive heart failure. J. Clin. Med. 8(1):42, 2019.

Gerhardt, U., R. Breitschwerdt, and O. Thomas. mHealth engineering: a technology review. J. Inf. Technol. Theory Appl. 19:5, 2018.

Kingma, D. P., and J. Ba. Adam: a method for stochastic optimization. arXiv:1412.6980, 2014.

Kunze, K., G. Bahle, P. Lukowicz, and K. Partridge. Can magnetic field sensors replace gyroscopes in wearable sensing applications? Proc. Int. Symp. Wearable Comput. ISWC. 2010. https://doi.org/10.1109/ISWC.2010.5665859.

Liu, H., J. Allen, D. Zheng, and F. Chen. Recent development of respiratory rate measurement technologies. Physiol. Meas. 40:07TR01, 2019.

Madgwick, S. O. H., A. J. L. Harrison, and R. Vaidyanathan. Estimation of IMU and MARG orientation using a gradient descent algorithm. IEEE Int. Conf. Rehabil. Robot. 2011.

Massaroni, C., C. Venanzi, A. P. Silvatti, D. L. Presti, P. Saccomandi, D. Formica, F. Giurazza, M. A. Caponero, and E. Schena. Smart textile for respiratory monitoring and thoraco-abdominal motion pattern evaluation. J. Biophoton. 11:1–12, 2018.

Massé, F., R. R. Gonzenbach, A. Arami, A. Paraschiv-Ionescu, A. R. Luft, and K. Aminian. Improving activity recognition using a wearable barometric pressure sensor in mobility-impaired stroke patients. J. Neuroeng. Rehabil. 12:1–15, 2015.

Mishra, T., M. Wang, A. A. Metwally, G. K. Bogu, A. W. Brooks, A. Bahmani, A. Alavi, A. Celli, E. Higgs, O. Dagan-Rosenfeld, B. Fay, S. Kirkpatrick, R. Kellogg, M. Gibson, T. Wang, E. M. Hunting, P. Mamic, A. B. Ganz, B. Rolnik, X. Li, and M. P. Snyder. Pre-symptomatic detection of COVID-19 from smartwatch data. Nat. Biomed. Eng. 4:1208–1220, 2020.

Naranjo-Hernández, D., A. Talaminos-Barroso, J. Reina-Tosina, L. M. Roa, G. Barbarov-Rostan, P. Cejudo-Ramos, E. Márquez-Martín, and F. Ortega-Ruiz. Smart vest for respiratory rate monitoring of COPD patients based on non-contact capacitive sensing. Sensors (Switzerland). 18:1–24, 2018.

Nicolò, A., C. Massaroni, and L. Passfield. Respiratory frequency during exercise: the neglected physiological measure. Front. Physiol. 8:922, 2017.

Peterson, L. E. K-nearest neighbor. Scholarpedia. 4:1883, 2009.

Qi, W., and A. Aliverti. A multimodal wearable system for continuous and real-time breathing pattern monitoring during daily activity. IEEE J. Biomed. Heal. Informatics. 2019. https://doi.org/10.1109/JBHI.2019.2963048.

Ringnér, M. What is principal component analysis? Nat. Biotechnol. 26:303–304, 2008.

Romei, M., A. L. Mauro, M. G. D’Angelo, A. C. Turconi, N. Bresolin, A. Pedotti, and A. Aliverti. Effects of gender and posture on thoraco-abdominal kinematics during quiet breathing in healthy adults. Respir. Physiol. Neurobiol. 172:184–191, 2010.

Sackner, M. A., H. Watson, A. S. Belsito, D. Feinerman, M. Suarez, G. Gonzalez, F. Bizousky, and B. Krieger. Calibration of respiratory inductive plethysmograph during natural breathing. J. Appl. Physiol. 66:410–420, 1989.

Shah, S. A., C. Velardo, A. Farmer, and L. Tarassenko. Exacerbations in chronic obstructive pulmonary disease: identification and prediction using a digital health system. J. Med. Internet Res. 19:1–14, 2017.

Vercellis, C. Business Intelligence: Data Mining and Optimization for Decision Making. New York: Wiley Online Library, 2009.

Villar, R., T. Beltrame, and R. L. Hughson. Validation of the Hexoskin wearable vest during lying, sitting, standing, and walking activities. Appl. Physiol. Nutr. Metab. 40:1019–1024, 2015.

Whitlock, J., J. Sill, and S. Jain. A-spiro: towards continuous respiration monitoring. Smart Heal. 15:100105, 2020.

Wold, S., K. Esbensen, and P. Geladi. Principal component analysis. Chemom. Intell. Lab. Syst. 2:37–52, 1987.

Zago, M., A. F. R. Kleiner, and P. A. Federolf. Editorial: machine learning approaches to human movement analysis. Front. Bioeng. Biotechnol. 8:638793, 2021.

Acknowledgments

The authors thank Federico Moro, Dario Froio, Alessandro Pascone, Luca Testa and all the other members of e-Novia S.p.A. for their valuable support, advice, and cooperation during this research project.

Funding

Open access funding provided by Politecnico di Milano within the CRUI-CARE Agreement. Andrea Aliverti is an inventor of a granted patent owned by Politecnico di Milano. Alessandra Angelucci and Andrea Aliverti are inventors of a patent application owned by e-Novia S.p.A. e-Novia S.p.A. partially supported the work with a research grant.

Additional information

Associate Editor Christian Zemlin oversaw the review of this article.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Angelucci, A., Aliverti, A. An IMU-Based Wearable System for Respiratory Rate Estimation in Static and Dynamic Conditions. Cardiovasc Eng Tech 14, 351–363 (2023). https://doi.org/10.1007/s13239-023-00657-3
