Abstract

The prisoner’s dilemma, the snowdrift game, and the stag hunt are two-player symmetric games that are often considered as prototypical examples of cooperative dilemmas across disciplines. However, surprisingly little consensus exists about the precise mathematical meaning of the words “cooperation” and “cooperative dilemma” for these and other binary-action symmetric games, in particular when considering interactions among more than two players. Here, we propose definitions of these terms and explore their evolutionary consequences on the equilibrium structure of cooperative dilemmas in relation to social optimality. We show that our definition of cooperative dilemma encompasses a large class of collective action games often discussed in the literature, including congestion games, games with participation synergies, and public goods games. One of our main results is that regardless of the number of players, all cooperative dilemmas—including multi-player generalizations of the prisoner’s dilemma, the snowdrift game, and the stag hunt—feature inefficient equilibria where cooperation is underprovided, but cannot have equilibria in which cooperation is overprovided. We also find simple conditions for full cooperation to be socially optimal in a cooperative dilemma. Our framework and results unify, simplify, and extend previous work on the structure and properties of cooperative dilemmas with binary actions and two or more players.



1 Introduction

Cooperative (or social) dilemmas can be informally described as situations where there is a tension between individual and collective interest regarding the cooperative behavior of individuals within a group [13, 32, 35, 48, 62, 80]. The tension arises because cooperation can benefit the whole group but individuals might prefer to reduce their own cooperation and exploit the cooperative behavior of others. Examples of cooperative dilemmas include the private provision of public goods [6, 51], the management of common resources [52], vigilance and sentinel behavior [11], voting [55], protests, and other kinds of political participation [14], vaccination [70], mask-wearing during virus pandemics [76], and many more.

Given their ubiquity, the study of cooperative dilemmas and their resolution has attracted enormous attention in economics, political science, anthropology, psychology, sociology, and evolutionary biology. Across these different disciplines, game theory has emerged as the standard way of formalizing and thinking about cooperative dilemmas [26, 43, 82]. Within this perspective, a social interaction is conceptualized as a game whose equilibria predict the strategic behavior of individuals in the long run. Such equilibria emerge as a result of individual rationality, individual or social learning, or evolution acting on a population. The literature on cooperative dilemmas has used different equilibrium concepts, including the Nash equilibrium (NE), the evolutionarily stable strategy (ESS), and the asymptotically stable equilibrium (ASE) of the replicator dynamic [75]. Here, we use the ESS as our equilibrium concept and guiding principle. In simple terms, an ESS is a strategy such that if all members of a population adopt it, then no rare alternative strategy would fare better [42]. The ESS is a refinement of the (symmetric) NE and, for the games we consider in this paper, is equivalent to the ASE [9].

Arguably, the simplest game-theoretic representation of a cooperative dilemma is as a symmetric game of complete information between players who choose simultaneously between two alternative actions or strategies (“cooperation” and “defection”), i.e., a multi-player matrix game [8, 9, 27, 57]. The most paradigmatic example of such two-strategy cooperative dilemmas is the two-player prisoner’s dilemma [64]. In this game, “defection” is a dominant strategy (so that it is individually optimal to defect regardless of the co-player’s choice) and hence the only ESS (so that a population of defectors cannot be invaded by mutants cooperating with some probability). However, mutual “cooperation” yields higher payoffs to both players and can be, for certain payoff constellations, the socially optimal outcome. The (two-player) prisoner’s dilemma captures the essence of a cooperative dilemma in the starkest possible way, with a population trapped at a unique ESS featuring no cooperative behavior while expected payoffs would be maximized at some positive level of cooperation.

Although much earlier work focused exclusively on the prisoner’s dilemma, it has been realized that in many situations two other two-player games can be better representations of cooperative dilemmas occurring in nature or society: the snowdrift game [21] (or the game of chicken, [65]), and the stag hunt [71] (or assurance game). While the prisoner’s dilemma is characterized by both greed (an incentive to defect if the co-player cooperates) and fear (a disincentive to cooperate if the co-player defects), the snowdrift game is characterized only by greed (but not fear) and the stag hunt is characterized only by fear (but not greed). These different incentive structures lead to different ESS patterns. First, for the snowdrift game, there is a unique ESS characterized by a population where there is some cooperation, although less than what would maximize the expected payoff. Hence, in contrast to the prisoner’s dilemma, some level of cooperation is evolutionarily stable. However, as in the prisoner’s dilemma, this stable level of cooperation is lower than the socially optimal level. Second, for the stag hunt, there are two ESSs: the first with no cooperation, and the second with full cooperation, and where the fully cooperative ESS coincides with the socially optimal level of cooperation. Hence, in contrast to the prisoner’s dilemma, the socially optimal level of cooperation is evolutionarily stable. However, as in the prisoner’s dilemma, the population can be trapped at the equilibrium where nobody cooperates. Taken together, the prisoner’s dilemma, the snowdrift game, and the stag hunt constitute the three paradigmatic examples used to describe and think about cooperative dilemmas in two-player interactions [35].

In light of the wealth of research on cooperative and social dilemmas that has been published in recent decades, one would have anticipated a broad consensus regarding how to precisely define concepts such as “cooperation” and “cooperative dilemma”—at the very least for symmetric matrix games. However, this does not appear to be the case. In fact, there are multiple coexisting definitions [13, 48, 61] that are often at odds about the status of an action as cooperative (or not) or of a game as a cooperative dilemma (or not). Moving from two to more than two players only exacerbates the problem. Part of the issue is that many definitions proceed axiomatically by suggesting ways to classify games as cooperative dilemmas if given payoff inequalities hold, while other definitions emphasize the equilibrium structure (e.g., the ESS pattern) in relation to the location of socially optimal strategies that maximize expected payoffs. Such ambiguity is similar (and not unrelated) to the one surrounding different interpretations of the term “altruism” in evolutionary biology [34].

Here, we build on previous work [13, 33,34,35, 57, 59,60,61] to propose definitions of “cooperation,” “social dilemma,” and “cooperative dilemma” that are internally consistent and that are useful to characterize the outcome of social interactions. We also provide results that make it easy to identify cooperative dilemmas, and propose multi-player generalizations of the trinity of games used in social dilemma research, namely the prisoner’s dilemma, the snowdrift game, and the stag hunt; we also show that these multi-player cooperative dilemmas have properties similar to those of their two-player counterparts. We consider several classes of collective action games previously discussed in the literature and show that all of these games fall into the class of cooperative dilemmas we define. We also identify simple conditions for mutual cooperation to be socially optimal, i.e., for full cooperation to maximize the expected average payoff. We end by asking whether it is the case, as it is for the two-player prisoner’s dilemma, the snowdrift game, and the stag hunt, that cooperation is always underprovided at inefficient equilibria of a cooperative dilemma. A similar question has been asked before, although for more specific classes of cooperative dilemmas, by Gradstein and Nitzan [28] and Anderson and Engers [1].

2 Defining Cooperative Dilemmas

2.1 Games, Payoffs, and Strategies

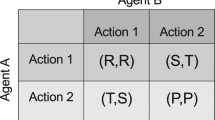

We consider normal form games with two pure strategies (or actions, or choices) denoted by \(\mathcal {C}\) and \(\mathcal {D}\). We focus on symmetric games among \(n \ge 2\) players where all players assume the same role in the game, and where the payoff of any player depends only on its own choice and on the numbers of players choosing the two available actions. We write \(C_k\) (resp. \(D_k\)) for the payoff of a player choosing \(\mathcal {C}\) (resp. \(\mathcal {D}\)) when k co-players choose \(\mathcal {C}\). Payoffs can be written in matrix form as

\[
\begin{array}{c|cccccc}
\text{Number of co-players choosing } \mathcal {C} & 0 & 1 & \cdots & k & \cdots & n-1 \\
\hline
\mathcal {C} & C_0 & C_1 & \cdots & C_k & \cdots & C_{n-1} \\
\mathcal {D} & D_0 & D_1 & \cdots & D_k & \cdots & D_{n-1}
\end{array}
\tag{1}
\]

We collect the payoffs in the payoff sequences \({\varvec{C}} = (C_0,C_1,\ldots ,C_{n-1}) \in \mathbb {R}^{n}\) and \({\varvec{D}} = (D_0,D_1,\ldots ,D_{n-1}) \in \mathbb {R}^{n}\). We assume that \({\varvec{C}} \ne {\varvec{D}}\) holds, so as to exclude the uninteresting case where payoffs are independent of the chosen actions. However, \(C_k = D_k\) may hold for some values of \(k=0,1,\ldots ,n-1\), i.e., payoffs can be “non-generic.” In a similar spirit, we assume that \({\varvec{C}}\) and \({\varvec{D}}\) are not simultaneously constant, so as to exclude the uninteresting case where both payoff sequences are independent of k and hence of the actions chosen by co-players. Throughout, the word “game” should be understood as referring to such a two-strategy symmetric game.

We consider mixed strategies represented by \(x \in [0,1]\), where x is the probability that a player chooses \(\mathcal {C}\) (and \(1-x\) the probability that a player chooses \(\mathcal {D}\)). Thus, pure strategy \(\mathcal {D}\) (resp. \(\mathcal {C}\)) corresponds to mixed strategy \(x=0\) (resp. \(x=1\)). We call mixed strategies with \(x \in (0,1)\) totally mixed strategies. Our main focus will be on symmetric strategy profiles in which all players adopt the same mixed strategy x. In particular, we will be interested in mixed strategies that are evolutionarily stable when used by all players (i.e., evolutionarily stable strategies, ESSs) and their relation to those strategy profiles that maximize expected average payoff over all symmetric strategy profiles. We refer to the latter symmetric strategy profiles as social optima (see Sect. 3.3 for a formal definition).

2.2 What is Cooperation?

Given a game as described above, when can we say that action \(\mathcal {C}\) corresponds to “cooperation” and action \(\mathcal {D}\) to “defection”? To answer this question, we propose the following definition of a cooperative action (and hence of “cooperation”), which we adopt throughout.

Definition 1

(Cooperation) Action \(\mathcal {C}\) is cooperative if and only if (i) mutual \(\mathcal {C}\) is preferred over mutual \(\mathcal {D}\), i.e.,

\[ C_{n-1} > D_0 \tag{2} \]

holds, and (ii) action \(\mathcal {C}\) induces “positive individual externalities,” i.e.,

\[ C_{k+1} \ge C_k \quad \text{and} \quad D_{k+1} \ge D_k \quad \text{for all } k = 0,1,\ldots ,n-2 \tag{3} \]

hold, with at least one of these inequalities being strict.

Definition 1 comprises two conditions. First, condition (i) means that players obtain a larger payoff if they all choose \(\mathcal {C}\) than if they all choose \(\mathcal {D}\). This condition is often encountered as part of previous definitions of multi-player “social dilemmas,” “cooperative dilemmas” or “cooperation games,” and hence implicitly included as a property of a cooperative action [13, 33, 48, 60, 61]. Second, condition (ii) is the requirement that the payoffs to \(\mathcal {C}\)-players and \(\mathcal {D}\)-players are non-decreasing (and at least sometimes increasing) in the number of cooperating co-players. In other words: a unilateral switch from defection to cooperation by a focal player will never decrease and will sometimes increase the payoff of a given co-player. Both stronger and weaker versions of condition (ii) of Definition 1 (and the underlying concept of positive individual externalities) have previously appeared in the literature to characterize the meaning of a cooperative action (or cooperative type) in game-theoretic and population genetics models of multi-player cooperation. A stronger version of condition (ii) (namely that the payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\) are both strictly increasing, i.e., Eq. (3) but with strict inequalities) appears as part of the “individual-centered” interpretation of altruism proposed by [34]. A weaker version of this condition (namely that the payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\) are both non-decreasing without the additional requirement that one inequality holds strictly) has appeared as part of the definitions of “n-player social dilemmas” [33], “cooperation games” [60], and “multi-player social dilemmas” [61]. 
Our formulation of condition (ii) sits in between these previous formulations by excluding cases where payoffs are totally insensitive to the number of cooperators among co-players while allowing for cases where the payoffs to players could be, in some contexts, constant with respect to an increase in the number of cooperators. This is the case, for instance, of the volunteer’s dilemma and the teamwork dilemma we discuss in Sect. 5.3.
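Because Definition 1 is a finite list of payoff inequalities, it can be checked mechanically. The following is a minimal Python sketch, not code from this paper: the function name `is_cooperative` and the list representation of \({\varvec{C}}\) and \({\varvec{D}}\) (list index k is the number of cooperating co-players) are our own assumptions. It uses the payoff sequences of the five-player game of Fig. 1 as input.

```python
from fractions import Fraction


def is_cooperative(C, D):
    """Check Definition 1 for payoff sequences C = (C_0, ..., C_{n-1}) and D = (D_0, ..., D_{n-1}).

    List index k is the number of co-players choosing C.
    """
    n = len(C)
    if len(D) != n or n < 2:
        raise ValueError("C and D must both have length n >= 2")
    # Condition (i): mutual C is preferred over mutual D, i.e. C_{n-1} > D_0.
    mutual_c_preferred = C[-1] > D[0]
    # Condition (ii): C and D are non-decreasing in k ...
    steps = [C[k + 1] - C[k] for k in range(n - 1)] + [D[k + 1] - D[k] for k in range(n - 1)]
    non_decreasing = all(step >= 0 for step in steps)
    # ... with at least one of these inequalities strict.
    some_strict = any(step > 0 for step in steps)
    return mutual_c_preferred and non_decreasing and some_strict


# Payoff sequences of the five-player example in Fig. 1 (exact arithmetic).
C_fig1 = [Fraction(0)] + [Fraction(2, 3)] * 4
D_fig1 = [0, 0, 1, 1, 1]
print(is_cooperative(C_fig1, D_fig1))  # True
```

Exact `Fraction` arithmetic avoids rounding artifacts when testing the weak inequalities of condition (ii).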

2.3 What is a Cooperative Dilemma?

Not all games where \(\mathcal {C}\) is cooperative pose a social dilemma in the sense that there is a conflict between the individual and the collective interest to choose the cooperative action. To illustrate, consider a two-player game with payoff matrix

\[
\begin{array}{c|cc}
 & \mathcal {C} & \mathcal {D} \\
\hline
\mathcal {C} & C_1 & C_0 \\
\mathcal {D} & D_1 & D_0
\end{array}
\tag{4}
\]

satisfying \(C_1> C_0> D_1 > D_0\). In such a “harmony game” [37], action \(\mathcal {C}\) is cooperative and the symmetric strategy profile in which both players cooperate achieves the highest possible payoff (namely \(C_1\)) for both players. Hence, \(x = 1\) is the social optimum, and it is in the collective interest of the players that they both cooperate. At the same time, due to the payoff inequalities \(C_0 > D_0\) and \(C_1 > D_1\), cooperation is dominant. Hence, no matter what the other player does, it is in the individual interest of each player to choose \(\mathcal {C}\). Individual interest and the collective interest are thus perfectly aligned and there is no discernible dilemma.

The example of the harmony game suggests defining a cooperative dilemma by adding to Definition 1 the requirement that action \(\mathcal {C}\) is not dominant. This is, for instance, the approach followed by Płatkowski [61, Axiom 3]. As we will explain in Sect. 4, for games with more than two players such an approach is not satisfactory from an evolutionary perspective. Instead, to capture the intuition that a social dilemma should describe a situation where there is a conflict between the individual and the collective interests, we introduce the following definition:

Definition 2

(Social dilemma) A game is a social dilemma if it has an ESS \(x^*\) that is not a social optimum \(\hat{x}\).

Definition 2 is similar to the one given by Kollock [35, p. 184], who defines a social dilemma as a game having “at least one deficient equilibrium.” It is clear that in the harmony game the unique ESS is \(x = 1\) and thus coincides with the social optimum. Hence, the harmony game is not a social dilemma according to Definition 2. The same is true for the “prisoner’s delight” [72], i.e., the two-player game with payoffs satisfying \(C_1> D_1> C_0 > D_0\), in which action \(\mathcal {C}\) is cooperative and the unique ESS \(x=1\) again coincides with the social optimum.
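For two-player games, both sides of Definition 2 can be computed in closed form, because the private gain function is linear in x and the expected average payoff is quadratic. The sketch below is our own illustration, under stated assumptions: payoffs are passed as `(C0, C1, D0, D1)`, the helper names are hypothetical, and the ESS helper only covers the generic case \(G_0 \ne 0\), \(G_1 \ne 0\). It reproduces the conclusion above for the harmony game and the prisoner's delight, and contrasts them with a prisoner's dilemma.

```python
def ess_two_player(C0, C1, D0, D1):
    """ESSs of a two-player game, assuming G_0 = C0 - D0 and G_1 = C1 - D1 are both nonzero."""
    G0, G1 = C0 - D0, C1 - D1
    if G0 == 0 or G1 == 0:
        raise ValueError("non-generic payoffs are not handled in this sketch")
    ess = []
    if G0 < 0:                       # g < 0 near x = 0: full defection resists invasion
        ess.append(0.0)
    if G0 > 0 > G1:                  # g changes sign from + to -: mixed ESS at the root of g
        ess.append(G0 / (G0 - G1))
    if G1 > 0:                       # g > 0 near x = 1: full cooperation resists invasion
        ess.append(1.0)
    return ess


def social_optimum_two_player(C0, C1, D0, D1):
    """Maximiser on [0, 1] of w(x) = (1-x)^2 D0 + x(1-x)(C0 + D1) + x^2 C1."""
    def w(x):
        return (1 - x) ** 2 * D0 + x * (1 - x) * (C0 + D1) + x**2 * C1

    S0, S1 = C0 + D1 - 2 * D0, 2 * C1 - C0 - D1  # social gains S_0 and S_1
    candidates = [0.0, 1.0]
    if S0 != S1:
        stationary = S0 / (S0 - S1)              # root of s(x) = S0 (1 - x) + S1 x
        if 0 < stationary < 1:
            candidates.append(stationary)
    return max(candidates, key=w)


def is_social_dilemma(C0, C1, D0, D1, tol=1e-12):
    """Definition 2: some ESS differs from the social optimum."""
    x_hat = social_optimum_two_player(C0, C1, D0, D1)
    return any(abs(x - x_hat) > tol for x in ess_two_player(C0, C1, D0, D1))


print(is_social_dilemma(2, 3, 0, 1))  # harmony game (C1 > C0 > D1 > D0): False
print(is_social_dilemma(1, 3, 0, 2))  # prisoner's delight (C1 > D1 > C0 > D0): False
print(is_social_dilemma(0, 3, 1, 5))  # prisoner's dilemma (D1 > C1 > D0 > C0): True
```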

Building on Definitions 1 and 2, we define a cooperative dilemma as follows:

Definition 3

(Cooperative dilemma) A cooperative dilemma is a social dilemma in which \(\mathcal {C}\) is cooperative.

For the case of two-player games, much of the previous literature on social dilemmas has identified the prisoner’s dilemma, the snowdrift game (or chicken), and the stag hunt (or assurance game) as the prototypical examples of cooperative dilemmas [35, 61]. Definition 3 is in line with this literature. Indeed, as we prove later (see Proposition 2), these are the only three different two-player games that are cooperative dilemmas according to our definition. Table 1 records the payoff inequalities that we use to define these games. Note that our definition of a prisoner’s dilemma is more permissive than the standard definition, which requires \(D_1> C_1> D_0 > C_0\). Similarly, the standard definitions of a snowdrift game and a stag hunt would require all four inequalities in the corresponding lines of Table 1 to be strict. Our definitions are in line with allowing weak inequalities in condition (ii) of Definition 1 and avoid cumbersome case distinctions.

In Sects. 4–6, we will explore the consequences of Definition 3 for the general class of games defined in Sect. 2.1. Doing so for games with more than two players is much more challenging than for the two-player case and requires additional tools and definitions. We introduce these in the following section.

3 Methods

Here, we introduce the concepts and tools that we use to derive our results. First, we briefly explain the sign terminology that we will use to describe sequences and functions. Second, we define the private gain function, which determines the ESS structure of the game. Third, we define the social gain function, which determines the social optimum. Fourth, we define the external gain function, which is the difference between the social gain function and the private gain function. Fifth, and finally, we briefly introduce the Bernstein transform of a sequence. Bernstein transforms are important for our analysis because they provide a link between the sign structure of sequences determined by the payoffs of a game and the various gain functions that matter for our analysis. See Fig. 1 for an illustrative example of some of the notions we introduce below.

Fig. 1 Five-player game with payoff sequences \({\varvec{C}}=(0,2/3,2/3,2/3,2/3)\) and \({\varvec{D}}=(0,0,1,1,1)\). This is an example of the public goods games considered in Sect. 5.3 with benefit sequence given by \({\varvec{b}}=(0,0,1,1,1,1)\) and cost sequence given by \({\varvec{c}}=(0,1/3,1/3,1/3,1/3)\). Action \(\mathcal {C}\) is cooperative (Definition 1): (i) \(C_4 = 2/3 > 0 = D_0\) holds, and (ii) \({\varvec{C}}\) as well as \({\varvec{D}}\) are non-decreasing sequences, each with at least one strict increase. The game has a unique totally mixed ESS \(x^*\) and a unique social optimum \(\hat{x}\) satisfying \(0< x^*< \hat{x} < 1\). Since \(x^* \ne \hat{x}\), the game is a social dilemma (Definition 2) and, since \(\mathcal {C}\) is cooperative, also a cooperative dilemma (Definition 3). Left panel: The expected payoffs to \(\mathcal {C}\)-players (\(w_C(x)\)) and to \(\mathcal {D}\)-players (\(w_D(x)\)) are, respectively, the Bernstein transforms of the payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\). \(w_C(x^*)=w_D(x^*)\) holds at the totally mixed ESS \(x^*\) (the “indifference condition” of totally mixed ESSs). The social optimum \(\hat{x}\) is the global maximum of the expected average payoff w(x). Right panel: The private gain function g(x), the social gain function s(x), and the external gain function h(x) are, respectively, the Bernstein transforms of the private gain sequence \({\varvec{G}}\), the social gain sequence \({\varvec{S}}\), and the external gain sequence \({\varvec{H}}\)

3.1 Sign Patterns of Sequences and Functions

We will make frequent use of specific language to describe the sign properties of both sequences (e.g., the private, social, and external gain sequences defined below) and functions (e.g., the private, social, and external gain functions defined below). For example, for a given sequence \({\varvec{A}} = (A_0,A_1,\ldots ,A_{n-1}) \in \mathbb {R}^{n}\), the initial (resp. final) sign of \({\varvec{A}}\) refers to the sign of the first (resp. last) nonzero element of \({\varvec{A}}\), and the sign pattern of \({\varvec{A}}\) refers to the sequence of signs of the nonzero elements of \({\varvec{A}}\) after consecutive repeated signs are removed. We will also refer to a sequence as positive (resp. negative) if all of its elements are non-negative (resp. non-positive) and at least one element is positive (resp. negative). We refer the reader to “Appendix A” for more formal definitions of these and related concepts. Similar sign notions apply to real functions; see “Appendix B” for formal definitions (restricted to polynomials on the unit interval, which are the class of real functions relevant for our analysis).

3.2 Private Gains

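The expected payoff to a \(\mathcal {C}\)-player when all co-players play x is

\[ w_C(x) = \sum _{k=0}^{n-1} \binom{n-1}{k} x^k (1-x)^{n-1-k} C_k. \tag{5} \]

Similarly, the expected payoff to a \(\mathcal {D}\)-player when all co-players play x is

\[ w_D(x) = \sum _{k=0}^{n-1} \binom{n-1}{k} x^k (1-x)^{n-1-k} D_k. \tag{6} \]

We call the difference between these two expected payoffs the private gain function and denote it by g. It is given by

\[ g(x) = w_C(x) - w_D(x) = \sum _{k=0}^{n-1} \binom{n-1}{k} x^k (1-x)^{n-1-k} G_k, \tag{7} \]

where

\[ G_k = C_k - D_k \tag{8} \]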

is the difference between the payoff to a \(\mathcal {C}\)-player interacting with k other \(\mathcal {C}\)-players and the payoff to a \(\mathcal {D}\)-player interacting with k other \(\mathcal {C}\)-players. Such a difference can be interpreted as the private gain enjoyed by a focal player who switches its action from \(\mathcal {D}\) to \(\mathcal {C}\) when k co-players play \(\mathcal {C}\) and the remaining \(n-1-k\) co-players play \(\mathcal {D}\). We collect the terms \(G_k\) in the private gain sequence \({\varvec{G}} = {\varvec{C}}-{\varvec{D}} = (G_0,G_1,\ldots ,G_{n-1}) \in \mathbb {R}^{n}\).

We are interested in the private gain function (7) because it determines the ESS structure of the game (see Lemma 3 in “Appendix C” for a formal statement). In particular, pure-strategy \(x=0\) (resp. \(x=1\)) is an ESS if and only if g is negative (resp. positive) in a small neighborhood around \(x=0\) (resp. \(x=1\)), while a totally mixed strategy \(x^*\) is an ESS if and only if g changes sign from positive to negative around \(x^*\). Hence, totally mixed ESSs are roots of g (i.e., a totally mixed ESS \(x^*\) is a solution of \(g(x^*)=0\)). Since the private gain function (7) is a polynomial of degree at most \(n-1\), finding totally mixed ESSs involves solving a polynomial equation of degree at most \(n-1\).
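The ESS characterization above translates into a short numerical routine. The sketch below is ours, not the paper's, and the names `bernstein`, `initial_sign`, and `find_ess` are hypothetical. It evaluates g as a Bernstein polynomial and reads off the endpoint ESSs from the initial and final signs of \({\varvec{G}}\), which give the sign of g just inside each endpoint. It then locates totally mixed ESSs as sign changes of g from positive to negative on a grid and refines each one by bisection. The sketch assumes that such roots are simple and farther apart than the grid spacing, so degenerate cases are not handled. Applied to the five-player game of Fig. 1, it finds a single totally mixed ESS.

```python
from math import comb


def bernstein(seq, x):
    """Evaluate sum_k binom(m, k) seq[k] x^k (1 - x)^(m - k), where m = len(seq) - 1."""
    m = len(seq) - 1
    return sum(comb(m, k) * a * x**k * (1 - x) ** (m - k) for k, a in enumerate(seq))


def initial_sign(seq):
    """Sign (+1 or -1) of the first nonzero element of seq, or 0 if there is none."""
    for a in seq:
        if a != 0:
            return 1 if a > 0 else -1
    return 0


def find_ess(C, D, grid=10_000, tol=1e-12):
    """Numerical sketch: ESSs of the game, read off from the private gain function g."""
    G = [c - d for c, d in zip(C, D)]
    ess = []
    # x = 0 is an ESS iff g < 0 just to the right of 0, i.e. iff the initial sign of G is negative.
    if initial_sign(G) < 0:
        ess.append(0.0)
    # Totally mixed ESSs: sign changes of g from + to - between consecutive grid points.
    xs = [i / grid for i in range(grid + 1)]
    gs = [bernstein(G, x) for x in xs]
    for i in range(grid):
        if gs[i] > 0 > gs[i + 1]:
            lo, hi = xs[i], xs[i + 1]
            while hi - lo > tol:  # bisection keeps g(lo) > 0 >= g(hi)
                mid = (lo + hi) / 2
                if bernstein(G, mid) > 0:
                    lo = mid
                else:
                    hi = mid
            ess.append((lo + hi) / 2)
    # x = 1 is an ESS iff g > 0 just to the left of 1, i.e. iff the final sign of G is positive.
    if initial_sign(reversed(G)) > 0:
        ess.append(1.0)
    return ess


C_fig1 = [0, 2 / 3, 2 / 3, 2 / 3, 2 / 3]
D_fig1 = [0, 0, 1, 1, 1]
print(find_ess(C_fig1, D_fig1))  # one totally mixed ESS, approximately 0.447
```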

3.3 Social Gains

The expected average payoff of a player playing mixed strategy x when all co-players also play x is

\[ w(x) = x\, w_C(x) + (1-x)\, w_D(x), \tag{9} \]

which can also be interpreted as the expected population payoff in a population where a proportion x of individuals are of type \(\mathcal {C}\) and a proportion \(1-x\) are of type \(\mathcal {D}\). A social optimum is a mixed strategy

\[ \hat{x} \in \arg \max _{x \in [0,1]} w(x) \tag{10} \]

that maximizes w. For ease of exposition, we will assume throughout that the social optimum is unique, i.e., that the expected average payoff w has a single global maximum.

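The expected average payoff can be rewritten as

\[ w(x) = \frac{1}{n} \sum _{i=0}^{n} \binom{n}{i} x^i (1-x)^{n-i} T_i, \tag{11} \]

where

\[ T_i = i\, C_{i-1} + (n-i)\, D_i \tag{12} \]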

represents the total payoff to the n players when i players choose \(\mathcal {C}\) and \(n-i\) choose \(\mathcal {D}\) (and where we have set \(C_{-1} = D_n = 0\)). We collect the elements \(T_i\) in the total payoff sequence \({\varvec{T}} = (T_0,T_1,\ldots ,T_{n}) \in \mathbb {R}^{n+1}\). The average payoff to the n players when i players choose \(\mathcal {C}\) and \(n-i\) choose \(\mathcal {D}\) is then given by \(T_i/n\).

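Taking the derivative of the expression for the expected average payoff in (11) and simplifying, we obtain

\[ w'(x) = s(x) = \sum _{k=0}^{n-1} \binom{n-1}{k} x^k (1-x)^{n-1-k} S_k, \tag{13} \]

where

\[ S_k = T_{k+1} - T_k \tag{14} \]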

is the first forward difference of the total payoffs. The terms (14) can be interpreted as the gains in total payoff to all players caused by a focal player that switches its action from \(\mathcal {D}\) to \(\mathcal {C}\) when k co-players play \(\mathcal {C}\) and the remaining \(n-1-k\) co-players play \(\mathcal {D}\). For future reference, we collect such social gains (14) in the social gain sequence \({\varvec{S}} = (S_0,S_1,\ldots ,S_{n-1}) \in \mathbb {R}^{n}\), and call s the social gain function.

Candidate social optima correspond to either one of the two pure strategies (i.e., \(\hat{x}=0\) or \(\hat{x}=1\)) or to a totally mixed strategy \(\hat{x} \in (0,1)\) satisfying the first-order condition \(s(\hat{x}) = 0\). Moreover, a social optimum must be a local maximum of w. This observation leads to a link between the sign pattern of s and social optimality (see Lemma 4 of “Appendix C”) that parallels the link between the sign pattern of g and evolutionary stability discussed above. In particular, a totally mixed social optimum \(\hat{x}\) is a root of s. Since the social gain function (13) is a polynomial of degree at most \(n-1\), finding totally mixed social optima involves solving a polynomial equation of degree at most \(n-1\).
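The social side can be sketched in the same way. In the following illustrative Python code (helper names are our own, not from the paper), `total_payoffs` implements (12) with the convention \(C_{-1} = D_n = 0\), `social_gains` implements (14), w is evaluated via (11), and the social optimum is located by a plain grid search. The grid search relies on the assumption, made in the text, that w has a unique global maximum. A finite-difference check confirms numerically that \(w' = s\) for the Fig. 1 game.

```python
from math import comb


def bernstein(seq, x):
    """Evaluate sum_k binom(m, k) seq[k] x^k (1 - x)^(m - k), where m = len(seq) - 1."""
    m = len(seq) - 1
    return sum(comb(m, k) * a * x**k * (1 - x) ** (m - k) for k, a in enumerate(seq))


def total_payoffs(C, D):
    """T_i = i * C_{i-1} + (n - i) * D_i for i = 0..n, with C_{-1} = D_n = 0."""
    n = len(C)
    return [i * (C[i - 1] if i > 0 else 0) + (n - i) * (D[i] if i < n else 0) for i in range(n + 1)]


def social_gains(C, D):
    """S_k = T_{k+1} - T_k for k = 0..n-1."""
    T = total_payoffs(C, D)
    return [T[k + 1] - T[k] for k in range(len(C))]


def expected_average_payoff(C, D, x):
    """w(x) = (1/n) times the Bernstein transform of T, as in (11)."""
    return bernstein(total_payoffs(C, D), x) / len(C)


def social_optimum(C, D, grid=10_000):
    """Grid search for the maximiser of w on [0, 1]; assumes a unique global maximum."""
    return max((i / grid for i in range(grid + 1)), key=lambda x: expected_average_payoff(C, D, x))


C_fig1 = [0, 2 / 3, 2 / 3, 2 / 3, 2 / 3]
D_fig1 = [0, 0, 1, 1, 1]
S = social_gains(C_fig1, D_fig1)

# Central finite-difference check that w'(x) = s(x) at an interior point.
x, h = 0.3, 1e-6
dw = (expected_average_payoff(C_fig1, D_fig1, x + h) - expected_average_payoff(C_fig1, D_fig1, x - h)) / (2 * h)
print(abs(dw - bernstein(S, x)) < 1e-6)  # True
print(social_optimum(C_fig1, D_fig1))    # approximately 0.707
```

For the Fig. 1 game this places \(\hat{x}\) near 0.707, above the totally mixed ESS near 0.447, in line with \(0< x^*< \hat{x} < 1\) in the figure caption.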

3.4 External Gains

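The private gain function and social gain function both depend on the game structure given by the payoff sequences. It will be useful for our purposes to make this link explicit by expressing the social gain function as

\[ s(x) = g(x) + h(x), \tag{15} \]

where

\[ S_k = G_k + H_k \tag{16} \]

and

\[ h(x) = \sum _{k=0}^{n-1} \binom{n-1}{k} x^k (1-x)^{n-1-k} H_k, \tag{17} \]

with

\[ H_k = k\, \Delta C_{k-1} + (n-1-k)\, \Delta D_k, \tag{18} \]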

where \(\Delta C_{k-1} = C_k - C_{k-1}\), \(\Delta D_k = D_{k+1} - D_k\), and \(C_{-1} = D_n = 0\). The terms (18) can be interpreted as the gains in payoff accrued to co-players when a focal player switches from playing \(\mathcal {D}\) to playing \(\mathcal {C}\) when k co-players play \(\mathcal {C}\) and all other co-players play \(\mathcal {D}\), and are thus equal to the social gains minus the private gains. We call these terms the external gains, collect them in the external gain sequence \({\varvec{H}} = (H_0,H_1,\ldots ,H_{n-1}) \in \mathbb {R}^{n}\), and call h the external gain function. Equation (15) expresses the social gain function as the sum of the private gain function and the external gain function.
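Since the Bernstein transform is linear, identity (15) holds coefficient by coefficient, \(S_k = G_k + H_k\), which is easy to confirm with exact arithmetic. The sketch below is our own illustrative code (the names `total_payoffs` and `gain_sequences` are hypothetical). It computes \({\varvec{H}}\) from (18) with the convention \(C_{-1} = D_n = 0\). It checks the identity for the Fig. 1 game and for randomly drawn integer payoffs, and shows that for the Fig. 1 game the external gains are non-negative with one positive entry, anticipating Lemma 2.

```python
import random
from fractions import Fraction


def total_payoffs(C, D):
    """T_i = i * C_{i-1} + (n - i) * D_i for i = 0..n, with C_{-1} = D_n = 0."""
    n = len(C)
    return [i * (C[i - 1] if i > 0 else 0) + (n - i) * (D[i] if i < n else 0) for i in range(n + 1)]


def gain_sequences(C, D):
    """Return the private (G), social (S), and external (H) gain sequences."""
    n = len(C)
    T = total_payoffs(C, D)
    G = [C[k] - D[k] for k in range(n)]
    S = [T[k + 1] - T[k] for k in range(n)]
    # H_k = k (C_k - C_{k-1}) + (n - 1 - k)(D_{k+1} - D_k); boundary terms carry zero weight.
    H = [k * (C[k] - C[k - 1] if k > 0 else 0) + (n - 1 - k) * (D[k + 1] - D[k] if k < n - 1 else 0)
         for k in range(n)]
    return G, S, H


# Fig. 1 game with exact fractions.
C_fig1 = [Fraction(0)] + [Fraction(2, 3)] * 4
D_fig1 = [0, 0, 1, 1, 1]
G, S, H = gain_sequences(C_fig1, D_fig1)
print([str(h) for h in H])  # ['0', '11/3', '0', '0', '0']
assert all(s == g + h for g, s, h in zip(G, S, H))
assert all(h >= 0 for h in H) and any(h > 0 for h in H)  # H is positive here

# The identity S = G + H holds for arbitrary payoffs, not only cooperative ones.
random.seed(0)
for _ in range(1000):
    n = random.randint(2, 8)
    C = [random.randint(-10, 10) for _ in range(n)]
    D = [random.randint(-10, 10) for _ in range(n)]
    G, S, H = gain_sequences(C, D)
    assert S == [g + h for g, h in zip(G, H)]
```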

3.5 Bernstein Transforms

The expected payoffs to \(\mathcal {C}\)-players (5) and to \(\mathcal {D}\)-players (6) are polynomials in Bernstein form in the mixed strategy x, with coefficients given by the payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\), respectively; i.e., the expected payoff to \(\mathcal {C}\)-players, \(w_C(x)\) (resp. to \(\mathcal {D}\)-players, \(w_D(x)\)), is the Bernstein transform of the payoff sequence \({\varvec{C}}\) (resp. \({\varvec{D}}\)). Likewise, the private gain function (7), the social gain function (13), and the external gain function (17) are the Bernstein transforms of the private gain sequence \({\varvec{G}}\), the social gain sequence \({\varvec{S}}\), and the external gain sequence \({\varvec{H}}\), respectively. Bernstein transforms are endowed with many shape-preserving properties linking the sign patterns of the sequences of coefficients and the sign patterns of the respective polynomials [24, 57], including preservation of initial and final signs, preservation of positivity, and the variation-diminishing property (see “Appendix D”). These properties imply a tight link between the sign pattern of the private gain sequence \({\varvec{G}}\) and the private gain function g, and between the social gain sequence \({\varvec{S}}\) and the social gain function s (see Appendices A and B for our sign terminology when applied to sequences and polynomials). This link implies, via Lemmas 3 and 4 in “Appendix C,” a connection between the sign pattern of the private gain sequence \({\varvec{G}}\) and the ESS structure of the game on the one hand, and a connection between the sign pattern of the social gain sequence \({\varvec{S}}\) and the location of the social optimum on the other hand. In many cases of interest, this allows us to identify a game as a cooperative dilemma and to extract key information about ESSs and social optima without the need for a more involved analysis (e.g., without the need for explicitly solving the polynomial equations needed to verify whether a totally mixed strategy is an ESS or a social optimum).
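Two of the shape-preserving properties mentioned above are easy to probe numerically. A Bernstein polynomial equals its first and last coefficients at \(x=0\) and \(x=1\), and it never has more sign changes on (0,1) than its coefficient sequence has (variation diminishing). The sketch below samples random coefficient sequences. It is an illustration, not a proof: grid sampling can only undercount the sign changes of the polynomial. The helper names are our own.

```python
import random
from math import comb


def bernstein(seq, x):
    """Evaluate sum_k binom(m, k) seq[k] x^k (1 - x)^(m - k), where m = len(seq) - 1."""
    m = len(seq) - 1
    return sum(comb(m, k) * a * x**k * (1 - x) ** (m - k) for k, a in enumerate(seq))


def sign_changes(values, eps=0.0):
    """Count sign changes in a sequence, ignoring entries with absolute value <= eps."""
    signs = [v > 0 for v in values if abs(v) > eps]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


random.seed(1)
for _ in range(500):
    A = [random.uniform(-1, 1) for _ in range(random.randint(2, 7))]
    # Endpoint interpolation: the polynomial equals A_0 at x = 0 and A_m at x = 1.
    assert bernstein(A, 0.0) == A[0] and bernstein(A, 1.0) == A[-1]
    # Variation diminishing: sign changes of the polynomial on (0, 1) <= sign changes of A.
    samples = [bernstein(A, i / 1000) for i in range(1, 1000)]
    assert sign_changes(samples, eps=1e-12) <= sign_changes(A)
print("all checks passed")
```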

4 Identifying Cooperative Dilemmas

Having introduced our methods, we begin our investigation of multi-player cooperative dilemmas. We start by noting and recording two simple consequences of action \(\mathcal {C}\) being cooperative. First, since mutual \(\mathcal {C}\) is preferred over mutual \(\mathcal {D}\) if action \(\mathcal {C}\) is cooperative, it follows that the social optimum is greater than zero, i.e., that some cooperation is required to maximize the expected average payoff:

Lemma 1

Suppose mutual \(\mathcal {C}\) is preferred over mutual \(\mathcal {D}\), i.e., condition (2) holds. Then, \(\hat{x} > 0\).

Proof

See “Appendix E.” \(\square \)

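Second, if \(\mathcal {C}\) is cooperative, then it induces positive individual externalities, which in turn implies that the external gain sequence is positive: by (18), every \(H_k\) is non-negative, and a strict increase \(C_{j+1} > C_j\) (resp. \(D_{j+1} > D_j\)) for some \(j \in \{0,1,\ldots ,n-2\}\) makes \(H_{j+1}\) (resp. \(H_j\)) strictly positive. This implies (by the preservation of positivity property of Bernstein transforms, see Lemma 5.5) that the external gain function (17) is positive. Thus, we have: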

Lemma 2

Suppose that \(\mathcal {C}\) induces positive individual externalities, i.e., condition (3) holds (with at least one inequality being strict). Then, \(h>0\) holds; i.e., \(h(x) \ge 0\) holds for all \(x \in [0,1]\) with the inequality being strict for all \(x \in (0,1)\).

Lemma 2 implies (via identity (15) and, hence, \(g(x)=s(x)-h(x)\)) that the private gain function is strictly smaller than the social gain function for all \(x \in (0,1)\). Using Lemmas 1 and 2 together with Lemma 3 in “Appendix C” allows us to prove the following characterization result of multi-player cooperative dilemmas.

Proposition 1

Suppose that action \(\mathcal {C}\) is cooperative. Then, the following three statements are equivalent:

- (i) The game is a cooperative dilemma.
- (ii) There exists \(x \in [0,1]\) such that \(g(x) < 0\).
- (iii) \(x = 1\) is not the only ESS of the game.

Proof

See “Appendix E.” \(\square \)

Proposition 1 provides two alternative necessary and sufficient conditions for a game with a cooperative action to be a cooperative dilemma. Condition (ii) is that the private gain function is negative for at least some value of its domain. In other words, players must have an ex-ante incentive (in terms of their private gain in expected payoff) to choose \(\mathcal {D}\) over \(\mathcal {C}\) for at least some symmetric mixed-strategy profile played by co-players. Condition (iii) is that full cooperation (\(x=1\)) is not the only ESS. This encompasses two possibilities. The first is that \(x=1\) is not an ESS (as in the prisoner’s dilemma or the snowdrift game). The second is that \(x=1\) is an ESS but there exists at least one alternative ESS \(x^*\) satisfying \(x^* < 1\) (as in the stag hunt). We note that the equivalence between conditions (i) and (iii) in the statement of Proposition 1 would be trivial if it were the case that \(\mathcal {C}\) being cooperative implied that full cooperation is the social optimum (\(\hat{x}=1\)). However, this is not so. For instance, it can be verified by direct calculation for the prisoner’s dilemma and the snowdrift game that full cooperation is the social optimum for these games if and only if \(2 C_1 \ge C_0 + D_1\) holds. Section 6.1 provides further illustration.
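The two-player claim above can be spot-checked numerically. The following sketch is our own; the sampling range and the helper names are assumptions. It draws random prisoner's dilemmas with the standard ordering \(D_1> C_1> D_0 > C_0\) and random snowdrift games with \(D_1> C_1> C_0 > D_0\), maximizes the quadratic w(x) exactly, and compares the outcome \(\hat{x} = 1\) with the condition \(2 C_1 \ge C_0 + D_1\). Near-ties are skipped because floating-point rounding could flip the comparison there.

```python
import random


def w(C0, C1, D0, D1, x):
    """Expected average payoff of a two-player game when both players cooperate with probability x."""
    return (1 - x) ** 2 * D0 + x * (1 - x) * (C0 + D1) + x**2 * C1


def social_optimum(C0, C1, D0, D1):
    """Exact maximiser of the quadratic w on [0, 1]."""
    S0, S1 = C0 + D1 - 2 * D0, 2 * C1 - C0 - D1  # social gains; s(x) = S0 (1 - x) + S1 x
    candidates = [0.0, 1.0]
    if S0 != S1:
        root = S0 / (S0 - S1)
        if 0 < root < 1:
            candidates.append(root)
    return max(candidates, key=lambda x: w(C0, C1, D0, D1, x))


random.seed(0)
checked = 0
for game in ("prisoner's dilemma", "snowdrift game"):
    for _ in range(10_000):
        a, b, c, d = sorted(random.uniform(0, 10) for _ in range(4))  # a <= b <= c <= d
        if game == "prisoner's dilemma":
            C0, D0, C1, D1 = a, b, c, d  # D1 > C1 > D0 > C0
        else:
            D0, C0, C1, D1 = a, b, c, d  # D1 > C1 > C0 > D0
        if abs(2 * C1 - C0 - D1) < 1e-3:
            continue  # skip near-ties
        assert (social_optimum(C0, C1, D0, D1) == 1.0) == (2 * C1 >= C0 + D1)
        checked += 1
print(checked, "random games agree with the condition 2*C1 >= C0 + D1")
```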

Supposing that action \(\mathcal {C}\) is cooperative (which is straightforward to check), condition (ii) in Proposition 1 allows us to identify a cooperative dilemma by inspecting the sign pattern of the private gain function. Although, in general, this check is simpler than explicitly identifying the ESS and the social optimum, and comparing them (as required by Definition 2), it can still be a non-trivial task requiring a numerical instead of an analytical treatment. Fortunately, in many cases of interest, a direct inspection of the sign pattern of the private gain sequence might be enough to identify a cooperative dilemma. Two-player games are one such case. Indeed, we have:

Proposition 2

(Two-player cooperative dilemmas) Suppose that \(n=2\) and that action \(\mathcal {C}\) is cooperative. Then, the game is a social dilemma (and hence a cooperative dilemma) if and only if \({\varvec{G}}\) is not positive, i.e., if and only if

\[ G_0 < 0 \quad \text{or} \quad G_1 < 0. \tag{19} \]

Further, condition (19) holds if and only if the game is a prisoner’s dilemma, a snowdrift game or a stag hunt as defined in Table 1.

Proof

See “Appendix E.” \(\square \)

Condition (19) means that either \(D_0 > C_0\) or \(D_1 > C_1\) holds, i.e., that \(\mathcal {C}\) does not weakly dominate \(\mathcal {D}\). For two-player games, the absence of such a dominance relation is necessary and sufficient for players to have a strict ex-ante incentive to choose \(\mathcal {D}\) rather than \(\mathcal {C}\) for some mixed strategy of their co-player, ensuring that condition (ii) in Proposition 1 is satisfied. Table 2 records the sign patterns of the gain sequence associated with the three kinds of two-player cooperative dilemmas.
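For \(n=2\) this equivalence takes one line. The private gain function is the Bernstein polynomial of the private gain sequence \(G_k = C_k - D_k\) (the form used in Example 1), and for two players it reduces to a linear interpolation:

```latex
g(x) = \sum_{k=0}^{n-1} \binom{n-1}{k} x^k (1-x)^{n-1-k} G_k ,
\qquad\text{so for } n = 2:\qquad
g(x) = (1-x)\,G_0 + x\,G_1 .
```

A linear function is negative somewhere on \([0,1]\) if and only if it is negative at an endpoint, i.e., if and only if \(G_0 < 0\) or \(G_1 < 0\), which is condition (19). For \(n > 2\), \(g\) has degree \(n-1\). A negative interior coefficient \(G_k\) therefore need not make \(g\) negative anywhere.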

One might hope that replacing condition (19) by

\[ G_k < 0 \quad \text{for some } k \in \left\{ 0,1,\ldots ,n-1\right\} \tag{20} \]

yields a counterpart to Proposition 2 for multi-player games. Condition (20) again excludes the possibility that action \(\mathcal {C}\) weakly dominates \(\mathcal {D}\) and has been previously considered as part of the definition of a cooperative dilemma. For instance, condition (20) appears as part of the definition of “multi-player social dilemma” proposed by Płatkowski [61, Axiom 3].

It is easy to verify that condition (20) is indeed a necessary condition for a game to be a cooperative dilemma (in the sense of our definition): If condition (20) fails, then \(g(x) \ge 0\) holds for all \(x \in [0,1]\), so that condition (ii) in Proposition 1 implies that the game is not a cooperative dilemma. We thus have:

Corollary 1

Suppose that action \(\mathcal {C}\) is cooperative. If the game is a cooperative dilemma, then condition (20) holds.

Proof

See “Appendix E.” \(\square \)

However, for \(n > 2\), the absence of a weak dominance relationship between \(\mathcal {C}\) and \(\mathcal {D}\) (i.e., condition (20)) does not exclude the possibility that the social optimum coincides with the unique ESS. From an evolutionary perspective, condition (20) is thus not sufficient to imply a divergence between the collective interest and the behavior induced by individual interest. The underlying reason is that there may be no ex-ante incentive to defect (i.e., the gain function g remains positive for all x) despite some ex-post incentives to defect (condition (20)). Technically, this is due to the variation-diminishing property of Bernstein transforms (see Lemma 5.5): the gain function can have fewer sign changes than the gain sequence. The following example illustrates this possibility (see also Fig. 2).

Three-player game considered in Example 1. Left panel: Action \(\mathcal {C}\) is cooperative (Definition 1), since (i) \(C_2 > D_0\), and (ii) both the payoff sequence \({\varvec{C}}\) and the payoff sequence \({\varvec{D}}\) are increasing. Additionally, \(G_1 < 0\) holds: individuals have an ex-post incentive to defect if exactly one co-player cooperates. Right panel: The game is not a cooperative dilemma (Definition 3), since the unique ESS \(x^*=1\) coincides with the social optimum \(\hat{x}=1\), which maximizes the expected average payoff w(x). Since the private gain function g(x) is never negative in the unit interval, individuals have no ex-ante incentive to defect

Example 1

Consider the three-player game with payoff sequences \({\varvec{C}}=(1/2,1,2)\) and \({\varvec{D}}=(-1/2,5/4,3/2)\) (Fig. 2). The private gain sequence is then \({\varvec{G}}=(1,-1/4,1/2)\). By Definition 1, action \(\mathcal {C}\) is cooperative. Additionally, players have an ex-post incentive to defect when exactly one of their co-players cooperates (\(G_1 < 0\)). However, the private gain function simplifies to \(g(x)=1-\frac{5}{2}x+2x^2\). This function is positive on its whole domain (its discriminant, \(25/4-8\), is negative), so players have no ex-ante incentive to defect. By Proposition 1, the game is therefore not a cooperative dilemma according to Definition 3: its unique ESS coincides with the social optimum \(\hat{x}=1\).
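The calculation in Example 1 can be reproduced with exact rational arithmetic. The sketch below assumes that the private gains are \(G_k = C_k - D_k\) and that g is their Bernstein polynomial, as in the example. The helper names are hypothetical, and the grid evaluation is a numerical sanity check, not a proof (the negative discriminant provides the proof).

```python
from fractions import Fraction as F
from math import comb

def gain_sequence(C, D):
    """Private gains G_k = C_k - D_k for k = 0, ..., n-1."""
    return [c - d for c, d in zip(C, D)]

def gain_function(G, x):
    """Bernstein polynomial g(x) = sum_k binom(n-1, k) x^k (1-x)^(n-1-k) G_k."""
    m = len(G) - 1  # number of co-players, n - 1
    return sum(comb(m, k) * x**k * (1 - x)**(m - k) * Gk for k, Gk in enumerate(G))

# Payoff sequences of Example 1 (n = 3)
C = [F(1, 2), F(1), F(2)]
D = [F(-1, 2), F(5, 4), F(3, 2)]
G = gain_sequence(C, D)
print(G)  # [Fraction(1, 1), Fraction(-1, 4), Fraction(1, 2)]

# Evaluate g exactly on a grid of [0, 1]; the minimum is attained at x = 5/8
grid = [F(i, 1000) for i in range(1001)]
g_min = min(gain_function(G, x) for x in grid)
print(g_min)  # 7/32
```

Although \(G_1 < 0\), the smallest value of g on the grid is \(7/32 > 0\).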

While the generalization of (19) to (20) fails to be sufficient for a multi-player game with a cooperative action to be a cooperative dilemma, an alternative generalization of (19) yields such a sufficient condition. Specifically, consider the condition that \(G_0 < 0\) or \(G_{n-1} < 0\) holds, which for \(n=2\) is equivalent to (19). A Bernstein polynomial equals its first and last coefficients at the endpoints of the unit interval, i.e., \(g(0) = G_0\) and \(g(1) = G_{n-1}\). Hence \(G_0 < 0\) is equivalent to \(g(0) < 0\), and \(G_{n-1} < 0\) is equivalent to \(g(1) < 0\). By Proposition 1, the game is a cooperative dilemma when either of these two conditions holds. More generally, it suffices that the initial sign or the final sign of \({\varvec{G}}\) is negative. This ensures that \(g(x) < 0\) holds for sufficiently small \(x > 0\) or for sufficiently large \(x < 1\), respectively. Hence, we obtain:

Corollary 2

Suppose that action \(\mathcal {C}\) is cooperative. If either the initial or the final sign of \({\varvec{G}}\) is negative, then the game is a cooperative dilemma.

Proof

See “Appendix E.” \(\square \)

The simplest among the multi-player games identified as cooperative dilemmas by Corollary 2 are the ones in which \({\varvec{G}}\) is negative (ensuring that both the initial and final sign of \({\varvec{G}}\) are negative) or the ones having only one sign change (ensuring that either the initial sign or the final sign is negative). Such games are the natural generalizations of the three kinds of two-player cooperative dilemmas to the multi-player case (cf. Table 2, which shows that for the prisoner’s dilemma \({\varvec{G}}\) is negative, whereas \({\varvec{G}}\) has one sign change from positive to negative for the snowdrift game and one sign change from negative to positive for the stag hunt). We are thus led to define:

Definition 4

(Multi-player prisoner’s dilemmas, snowdrift games, and stag hunts) Let action \(\mathcal {C}\) be cooperative and \(n > 2\).

1. The game is a multi-player prisoner’s dilemma if \({\varvec{G}}\) is negative.

2. The game is a multi-player snowdrift game if \({\varvec{G}}\) has a single sign change from positive to negative.

3. The game is a multi-player stag hunt if \({\varvec{G}}\) has a single sign change from negative to positive.

Definition 4 expands the definition of prisoner’s dilemmas, snowdrift games, and stag hunts to interactions among any number of players. These definitions are related (but not equal) to previous definitions of such multi-player games (for some discussion, see “Appendix F”).
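Definition 4, together with Corollaries 1 and 2, can be read as a decision procedure on the sign pattern of \({\varvec{G}}\). The following is a minimal sketch, assuming action \(\mathcal {C}\) has already been checked to be cooperative. The sign pattern is taken over the nonzero entries of \({\varvec{G}}\) with repeated signs collapsed. The function names and returned labels are illustrative.

```python
def sign_pattern(G):
    """Signs of the nonzero entries of G, consecutive repeats collapsed,
    e.g. (1, -1/4, 1/2) -> [1, -1, 1]. Zero entries are skipped."""
    pattern = []
    for g in G:
        s = (g > 0) - (g < 0)
        if s != 0 and (not pattern or pattern[-1] != s):
            pattern.append(s)
    return pattern

def classify(G):
    """Classify a game with a cooperative action from its private gains:
    Definition 4 first, then Corollaries 1 and 2 as fallbacks."""
    p = sign_pattern(G)
    if p == [-1]:
        return "prisoner's dilemma"
    if p == [1, -1]:
        return "snowdrift game"
    if p == [-1, 1]:
        return "stag hunt"
    if -1 not in p:
        return "not a cooperative dilemma (Corollary 1)"
    if p[0] == -1 or p[-1] == -1:
        return "cooperative dilemma (Corollary 2)"
    return "undetermined: inspect g(x) directly (Proposition 1)"
```

For example, the gain sequence \((1,-1/4,1/2)\) of Example 1 falls into the last case. For \(n=2\), the first three branches reproduce the sign patterns recorded in Table 2.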

To see that the multi-player cooperative dilemmas defined in Definition 4 are similar to their two-player counterparts, we offer the following result, which demonstrates that the ESS structures of these games are identical to those of the corresponding two-player cooperative dilemmas.

Proposition 3

(ESS structure of (multi-player) prisoner’s dilemmas, snowdrift games, and stag hunts)

1. A (multi-player) prisoner’s dilemma has exactly one ESS, namely \(x^*=0\).

2. A (multi-player) snowdrift game has exactly one ESS \(x^* \in (0,1)\).

3. A (multi-player) stag hunt has two ESSs, namely \(x_1^*=0\) and \(x_2^*=1\).

Proof

See “Appendix E.” \(\square \)
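Proposition 3 can be illustrated numerically by locating ESSs from the sign changes of g. The sketch assumes the usual characterization for symmetric binary-action games in the non-degenerate case:

- \(x=0\) is an ESS if \(g(0) < 0\);
- \(x=1\) is an ESS if \(g(1) > 0\);
- an interior \(x^*\) is an ESS if g changes sign from positive to negative at \(x^*\).

Tangencies and zero endpoint gains are ignored, and the function names are hypothetical.

```python
from math import comb

def gain_function(G, x):
    """Bernstein polynomial g(x) = sum_k binom(n-1, k) x^k (1-x)^(n-1-k) G_k."""
    m = len(G) - 1
    return sum(comb(m, k) * x**k * (1 - x)**(m - k) * Gk for k, Gk in enumerate(G))

def bisect_root(f, a, b, tol=1e-12):
    """Root of f in [a, b], assuming f(a) > 0 > f(b)."""
    while b - a > tol:
        mid = 0.5 * (a + b)
        if f(mid) > 0:
            a = mid
        else:
            b = mid
    return 0.5 * (a + b)

def find_ess(G, grid=2000):
    """Numerically locate the ESSs from the sign changes of g (non-degenerate case)."""
    g = lambda x: gain_function(G, x)
    ess = []
    if g(0.0) < 0:              # full defection resists invasion
        ess.append(0.0)
    xs = [i / grid for i in range(grid + 1)]
    for a, b in zip(xs, xs[1:]):
        if g(a) > 0 > g(b):     # sign change from + to -: stable interior point
            ess.append(bisect_root(g, a, b))
    if g(1.0) > 0:              # full cooperation resists invasion
        ess.append(1.0)
    return ess

print(find_ess([-1.0, -1.0, -1.0]))  # [0.0]
```

Consider the gain sequences \((1/3,1/3,1/3,-2/3,-2/3,-2/3)\) and \((-1/3,-1/3,-1/3,2/3,2/3,2/3)\), obtained from the value sequences and \(\gamma = 1/3\) in the captions of Figs. 3 and 4. For the first, `find_ess` returns a single interior point of about 0.41; for the second, it returns `[0.0, 1.0]`. Both results are in line with parts 2 and 3 of Proposition 3.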

5 Collective Action Games as Examples of Cooperative Dilemmas

We have given a definition of a cooperative dilemma together with conditions for a multi-player game to qualify as such. In this section, we review several classes of collective action games and show how all of them represent cooperative dilemmas for suitable parameter values. Moreover, we show that many of these collective action games belong to the categories of multi-player prisoner’s dilemmas, snowdrift games, and stag hunts defined in Definition 4 and characterized in Proposition 3.

5.1 Participation Games with Negative Externalities (Congestion Games)

Six-player congestion game with value sequence \({\varvec{v}}=(1,1,1,0,0,0)\) and payoff to choosing “out” of \(\gamma =1/3\). Left panel: Payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\), payoff functions \(w_C(x)\) and \(w_D(x)\), and expected average payoff w(x). Right panel: Private, social and external gain sequences \({\varvec{G}}\), \({\varvec{S}}\), and \({\varvec{H}}\), and corresponding private, social, and external gain functions g(x), s(x), and h(x). Action \(\mathcal {C}\) is cooperative as (per Definition 1) (i) \(C_5 = 1/3 > 0 = D_0\) holds, and (ii) \({\varvec{C}}\) is constant and \({\varvec{D}}\) is increasing. Since \({\varvec{G}}\) has a single sign change from positive to negative, the game is classified as a multi-player snowdrift game (Definition 4.2), having a totally mixed ESS \(x^*\) (Proposition 3.2). The social optimum \(\hat{x}\) features a higher probability of cooperating than the unique ESS, i.e., \(x^* < \hat{x}\)

As a first example of collective action games, consider the participation games with negative externalities to other participants (or congestion games) discussed by Anderson and Engers [1, Section 3]. This class of games includes, among others, the threshold participation game with “negative feedback” of Dindo and Tuinstra [19] and the El Farol bar problem [3]; it also provides a simple formalization of the famous “tragedy of the commons” [31]. Playing \(\mathcal {D}\) (to participate, or to choose “in”) means to take part in an activity such as entering a market, exploiting a common resource, driving, or going to a bar (see Fig. 3 for an example). Playing \(\mathcal {C}\) (to abstain from participating, or to stay “out”) means to refrain from taking part in such an activity. The payoff to choosing “out” is a constant \(\gamma > 0\) (which Anderson and Engers [1] normalize to zero). The payoff to choosing “in” is a decreasing function of the total number of \(\mathcal {D}\)-players. Thus, participants generate negative externalities for other participants. The payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\) can then be written as

\[ C_k = \gamma , \qquad D_k = v_{n-1-k}, \qquad k \in \left\{ 0,1,\ldots ,n-1\right\} , \tag{21} \]

where \(v_\ell \) for \(\ell \in \left\{ 0,1,\ldots ,n-1\right\} \) is the value of the activity to a participant (\(\mathcal {D}\)-player) given that \(\ell \) co-players also participate. By assumption, the sequence \({\varvec{v}}=(v_0,v_1,\ldots ,v_{n-1}) \in \mathbb {R}^n\) is decreasing and such that \(v_0> \gamma > v_{n-1}\) holds, so that the payoff to play “in” if everybody else plays “out” is greater than the payoff to play “out,” which in turn is greater than the payoff to play “in” if everybody else plays “in.” It follows that \({\varvec{C}}\) is constant and \({\varvec{D}}\) is increasing, and hence that \(\mathcal {C}\) induces positive individual externalities. Additionally, since \(C_{n-1} = \gamma > v_{n-1} = D_0\) holds, action \(\mathcal {C}\) (staying “out”) is cooperative.

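The private gains are given by

\[ G_k = C_k - D_k = \gamma - v_{n-1-k}, \qquad k \in \left\{ 0,1,\ldots ,n-1\right\} . \tag{22} \]
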
The private gain sequence \({\varvec{G}}\) is thus decreasing and has sign pattern \((1,-1)\), i.e., \({\varvec{G}}\) changes sign exactly once from positive to negative. In other words, players have an incentive to participate in the activity (entering a market, exploiting a common resource, driving, going to a bar) as long as not too many others decide likewise. Since not participating (playing \(\mathcal {C}\)) is cooperative and \({\varvec{G}}\) has a single sign change from positive to negative, congestion games are particular instances of snowdrift games (Definition 4.2). It then follows from Proposition 3.2 that congestion games are all characterized by a unique ESS \(x^*\) that is totally mixed.
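The construction of a congestion game can be checked directly for the parameters of Fig. 3. The sketch makes two assumptions:

- The payoffs are \(C_k = \gamma\) and \(D_k = v_{n-1-k}\), as described above: a participant facing k abstaining co-players shares the activity with \(n-1-k\) others.
- `is_cooperative` encodes our reading of Definition 1 as used in the figure captions. It requires \(C_{n-1} > D_0\), and requires that \({\varvec{C}}\) and \({\varvec{D}}\) never decrease, with at least one strict increase.

The function names are hypothetical.

```python
from fractions import Fraction as F

def congestion_game(v, gamma):
    """C_k = gamma ('out'); D_k = v[n-1-k] ('in' with n-1-k other participants)."""
    n = len(v)
    C = [gamma] * n
    D = [v[n - 1 - k] for k in range(n)]
    return C, D

def is_cooperative(C, D):
    """Our reading of Definition 1: (i) C_{n-1} > D_0; (ii) C and D never
    decrease in k, and at least one of them strictly increases somewhere."""
    diffs = [b - a for seq in (C, D) for a, b in zip(seq, seq[1:])]
    return C[-1] > D[0] and all(d >= 0 for d in diffs) and any(d > 0 for d in diffs)

v, gamma = [1, 1, 1, 0, 0, 0], F(1, 3)   # parameters of Fig. 3
C, D = congestion_game(v, gamma)
G = [c - d for c, d in zip(C, D)]
print(is_cooperative(C, D))  # True
print([str(g) for g in G])   # ['1/3', '1/3', '1/3', '-2/3', '-2/3', '-2/3']
```

The single sign change of \({\varvec{G}}\) from positive to negative is the snowdrift pattern of Definition 4.2.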

5.2 Games with Participation Synergies (Strategic Complements in Participation)

Six-player game with participation synergies with value sequence \({\varvec{v}}=(0,0,0,1,1,1)\) and payoff to choosing “out” of \(\gamma =1/3\). Left panel: Payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\), payoff functions \(w_C(x)\) and \(w_D(x)\), and expected average payoff w(x). Right panel: Private, social and external gain sequences \({\varvec{G}}\), \({\varvec{S}}\), and \({\varvec{H}}\), and corresponding private, social, and external gain functions g(x), s(x), and h(x). Action \(\mathcal {C}\) is cooperative as (per Definition 1) (i) \(C_5 = 1 > 1/3 = D_0\) holds, and (ii) \({\varvec{D}}\) is constant and \({\varvec{C}}\) is increasing. Since \({\varvec{G}}\) has a single sign change from negative to positive, the game is classified as a multi-player stag hunt (Definition 4.3). By Proposition 3.3, the game has two ESSs: \(x_1^*=0\) and \(x_2^*=1\). While \(x_2^*=1\) coincides with the social optimum, \(x_1^*=0\) is a socially inefficient ESS, where cooperation is underprovided

As a second example of collective action games, consider the participation games with positive externalities to other participants discussed by Anderson and Engers [1, Section 4]. These games are the counterpart to those discussed in Sect. 5.1, and include the “club goods” studied by Peña et al. [59] and De Jaegher [16], and the “n-person stag hunt game” of Luo et al. [38]. Let us in this case label \(\mathcal {C}\) the decision to participate, or to choose “in,” and \(\mathcal {D}\) the decision to abstain from participating, or staying “out.” As for congestion games, the payoff to staying “out” is a constant \(\gamma > 0\) (that Anderson and Engers [1] normalize to zero). The payoff to choosing “in” is now increasing in the number of other \(\mathcal {C}\)-players. Thus, participants generate positive externalities to other participants (see Fig. 4 for an example). The payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\) are given by

\[ C_k = v_{k+1}, \qquad D_k = \gamma , \qquad k \in \left\{ 0,1,\ldots ,n-1\right\} , \tag{23} \]

where \(v_i\) for \(i \in \left\{ 1,\ldots ,n\right\} \) is the value of the activity to a participant (\(\mathcal {C}\)-player) given the total number i of participants among players (including the focal player). By assumption, the sequence \({\varvec{v}}=(v_1,\ldots ,v_{n}) \in \mathbb {R}^{n}\) is increasing and satisfies \(v_1< \gamma < v_{n}\). Thus the payoff to play “in” if everybody else plays “out” is smaller than the payoff to play “out,” which in turn is smaller than the payoff to play “in” if everybody else plays “in.” It follows that \({\varvec{C}}\) is increasing and \({\varvec{D}}\) is constant, and hence that \(\mathcal {C}\) induces positive individual externalities. Additionally, since \(C_{n-1} = v_{n} > \gamma = D_0\) also holds, action \(\mathcal {C}\) (choosing “in”) is cooperative.

The private gains are given by

\[ G_k = C_k - D_k = v_{k+1} - \gamma , \qquad k \in \left\{ 0,1,\ldots ,n-1\right\} . \tag{24} \]

The private gain sequence \({\varvec{G}}\) has sign pattern \((-1,1)\), i.e., it has a single sign change from negative to positive. In this case, players have an incentive to participate in the activity as long as sufficiently many others also decide to do so. Since participating (playing \(\mathcal {C}\)) is cooperative and \({\varvec{G}}\) has a single sign change from negative to positive, games with participation synergies are particular instances of stag hunts (Definition 4.3). It then follows from Proposition 3.3 that games with participation synergies are all characterized by two ESSs: \(x_1^*=0\) and \(x_2^*=1\).

5.3 Public Goods Games

Six-player public goods game with benefit sequence \({\varvec{b}}=(0,0,0,1,1,1,1)\) and cost sequence \({\varvec{c}}=(1/7,1/7,1/7,1/7,1/7,1/7)\), i.e., a “teamwork dilemma” with \(\theta =3\) and \(\gamma =1/7\). Left panel: Payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\), payoff functions \(w_C(x)\) and \(w_D(x)\), and expected average payoff w(x). Right panel: Private, social and external gain sequences \({\varvec{G}}\), \({\varvec{S}}\), and \({\varvec{H}}\), and corresponding private, social, and external gain functions g(x), s(x), and h(x). Action \(\mathcal {C}\) is cooperative as (per Definition 1) (i) \(C_5 = 6/7 > 0 = D_0\) holds, and (ii) both \({\varvec{C}}\) and \({\varvec{D}}\) are increasing. \({\varvec{G}}\) has two sign changes: the first from negative to positive, and the second from positive to negative. The game has two ESSs: \(x=0\) and a totally mixed ESS \(x^*\). The game also features a unique and totally mixed social optimum \(\hat{x}\). Note that \(0< x^* < \hat{x}\) holds, i.e., there is underprovision of cooperation at both ESSs

As a third and final example of collective action games, consider public goods games where playing \(\mathcal {C}\) means to voluntarily contribute to a public good while playing \(\mathcal {D}\) means to shirk [2, 15, 16, 20, 28, 32, 39, 53, 57, 63, 69, 73, 74, 81]. Contributing entails a cost \(c_i \ge 0\) to each \(\mathcal {C}\)-player, while all players (both \(\mathcal {C}\)-players and \(\mathcal {D}\)-players) enjoy a benefit \(b_i \ge 0\), where \(0 \le i \le n\) denotes the total number of \(\mathcal {C}\)-players among players. The payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\) are then given by

\[ C_k = b_{k+1} - c_{k+1}, \qquad D_k = b_k, \qquad k \in \left\{ 0,1,\ldots ,n-1\right\} . \tag{25} \]

We collect the costs in the cost sequence \({\varvec{c}}=(c_1,\ldots ,c_n) \in \mathbb {R}^{n}\) and the benefits in the benefit sequence \({\varvec{b}}=(b_0,b_1,\ldots ,b_n) \in \mathbb {R}^{n+1}\). We assume that \({\varvec{b}}\) is increasing, so that the larger the number of \(\mathcal {C}\)-players, the larger the value of the public good that is provided. We also assume that \({\varvec{c}}\) is non-increasing, so that increasing the number of \(\mathcal {C}\)-players never increases the cost associated with contributing. We further assume that \(b_{n}-b_0 > c_{n}\) holds. That is, the difference between the value of the public good if everybody contributes and its value if nobody contributes exceeds the personal cost if everybody contributes. See Figs. 1 and 5 for examples.

Since benefits \({\varvec{b}}\) are increasing and costs \({\varvec{c}}\) are non-increasing, the payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\) are increasing: every player is better off the more other players contribute to the public good. It follows that action \(\mathcal {C}\) generates positive individual externalities. Since, additionally, \(b_{n}-b_0 > c_{n}\) holds, we have \(C_{n-1} = b_n - c_n > b_0 = D_0\), so action \(\mathcal {C}\) is cooperative.

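The private gains are given by

\[ G_k = C_k - D_k = b_{k+1} - b_k - c_{k+1}, \qquad k \in \left\{ 0,1,\ldots ,n-1\right\} . \tag{26} \]
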
The sign pattern of the private gain sequence \({\varvec{G}}\) depends on the particular shapes of the benefit and the cost sequences, and in particular on how the marginal benefit of contributing \(\Delta b_k=b_{k+1}-b_k\) scales with the number of other contributors k and compares to the cost \(c_{k+1}\). Particular examples are given below.
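The payoff and gain sequences of a public goods game can be assembled directly from \({\varvec{b}}\) and \({\varvec{c}}\). The sketch assumes \(C_k = b_{k+1} - c_{k+1}\) and \(D_k = b_k\), as described above. It is applied to the teamwork dilemma of Fig. 5 to reproduce the values \(C_5 = 6/7\) and \(D_0 = 0\) quoted in its caption. The function name is hypothetical.

```python
from fractions import Fraction as F

def public_goods(b, c):
    """Payoffs and private gains of a public goods game with benefits
    b = (b_0, ..., b_n) and costs c = (c_1, ..., c_n).
    Python lists are 0-based, so the cost c_{k+1} is stored in c[k]."""
    n = len(c)
    C = [b[k + 1] - c[k] for k in range(n)]   # C_k = b_{k+1} - c_{k+1}
    D = [b[k] for k in range(n)]              # D_k = b_k
    G = [Ck - Dk for Ck, Dk in zip(C, D)]     # G_k = b_{k+1} - b_k - c_{k+1}
    return C, D, G

# Teamwork dilemma of Fig. 5: n = 6, threshold 3, fixed cost 1/7
b = [0, 0, 0, 1, 1, 1, 1]
c = [F(1, 7)] * 6
C, D, G = public_goods(b, c)
print(C[-1], D[0])  # 6/7 0
```

The resulting \({\varvec{G}}\) equals \(6/7\) at \(k=2\) and \(-1/7\) elsewhere, which gives the two sign changes noted in the caption of Fig. 5.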

Public goods games with concave benefits and fixed costs

Consider a public goods game where \({\varvec{b}}\) is concave (i.e., \(\Delta ^2 {\varvec{b}}\) is negative) and \({\varvec{c}}\) is a constant of value \(\gamma >0\) (i.e., \({\varvec{c}} = (\gamma ,\gamma ,\ldots ,\gamma )\)), as assumed, for instance, by Gradstein and Nitzan [28] and Motro [45]. Then \({\varvec{G}}\) is decreasing. If costs are high (\(\gamma \ge \Delta b_0\)), \({\varvec{G}}\) is negative, and the game is a prisoner’s dilemma (Definition 4.1). If costs are low (\(\gamma \le \Delta b_{n-1}\)), \({\varvec{G}}\) is positive, and the game is not a cooperative dilemma according to Corollary 1. If costs are intermediate (i.e., \(\Delta b_{n-1}< \gamma < \Delta b_0\) holds), \({\varvec{G}}\) has a single sign change from positive to negative, and the game is a snowdrift game (Definition 4.2). In this case, players have an individual incentive to contribute to the public good if there are relatively few contributors, and they have an incentive to shirk if there are relatively many contributors.

Public goods games with convex benefits

As a second subclass of public goods games, suppose that \({\varvec{b}}\) is convex (i.e., \(\Delta ^2 {\varvec{b}}\) is positive). Then, without the need of further assumptions on the cost sequence, \({\varvec{G}}\) is increasing. If costs are high (\(c_n \ge \Delta b_{n-1}\)), \({\varvec{G}}\) is negative, and the game is a prisoner’s dilemma (Definition 4.1). If costs are low (\(c_1 \le \Delta b_0\)), \({\varvec{G}}\) is positive, and the game does not constitute a cooperative dilemma (Corollary 1). If costs are intermediate (i.e., \(\Delta b_0 < c_1\) and \(\Delta b_{n-1} > c_n\) hold), \({\varvec{G}}\) has a single sign change from negative to positive, and the game is a stag hunt (Definition 4.3).

Public goods games with sigmoid benefits and fixed costs

As a third subclass of public goods games, suppose that \({\varvec{b}}\) is first convex, then concave (i.e., \(\Delta ^2 {\varvec{b}}\) has a single sign change from positive to negative), and \({\varvec{c}}\) is constant of value \(\gamma >0\) (i.e., \({\varvec{c}} = (\gamma ,\gamma ,\ldots ,\gamma )\)). Examples include models studied by Pacheco et al. [53] and Archetti and Scheuring [2], where the benefit sequence first accelerates and then decelerates with the number of contributors. In this case, the private gain sequence is unimodal, i.e., first increasing, then decreasing. Then, depending on how the cost of contributing \(\gamma \) relates to \(\Delta {\varvec{b}}\), we have the following cases. If costs are high (\(\gamma \ge \max _{k} \Delta b_k\)), \({\varvec{G}}\) is negative, and the game is a prisoner’s dilemma (Definition 4.1). If costs are low (\(\gamma \le \min _{k} \Delta b_k\)), \({\varvec{G}}\) is positive, and the game does not constitute a cooperative dilemma (Corollary 1). If costs are intermediate (i.e., \(\min _{k} \Delta b_k< \gamma < \max _{k} \Delta b_k\) holds), then the sign pattern of \({\varvec{G}}\) depends on the relative position of \(\Delta b_0\) and \(\Delta b_{n-1}\) with respect to \(\gamma \), as follows. If \(\Delta b_0 \ge \gamma \) and \(\Delta b_{n-1} < \gamma \), then \({\varvec{G}}\) has a single sign change from positive to negative, and the game is a snowdrift game (Definition 4.2). If \(\Delta b_0 < \gamma \) and \(\Delta b_{n-1} \ge \gamma \), then \({\varvec{G}}\) has a single sign change from negative to positive, and the game is a stag hunt (Definition 4.3). Finally, if \(\max \left\{ \Delta b_0, \Delta b_{n-1}\right\} < \gamma \), then the sign pattern of \({\varvec{G}}\) is \((-1,1,-1)\), i.e., the private gain sequence is first negative, then positive, and then negative again. 
This case, where \({\varvec{G}}\) has two sign changes (the first from negative to positive, the second from positive to negative), differs from the previous examples: the game cannot be classified as a prisoner’s dilemma, a snowdrift game, or a stag hunt. Here, players have an incentive to contribute to the public good only if sufficiently many (but not too many) other players also contribute. Nevertheless, the game is a cooperative dilemma by Corollary 2, since the initial sign of \({\varvec{G}}\) is negative. Moreover, the ESS structure of the game can also be characterized using Bernstein transforms [57, Results 4 and 5]. If \(\gamma \) is sufficiently low, the game has two ESSs: \(x_1^*=0\) and \(x_2^* \in (0,1)\). If \(\gamma \) is sufficiently large, the game has a unique ESS \(x^*=0\).

Threshold public goods games with fixed costs

A noteworthy example of a public goods game with sigmoid benefits and fixed costs is the threshold public goods game with fixed costs and no refunds [5, 50, 54, 74]. In this game, contributors pay a non-refundable cost equal to \(0< \gamma < 1\) and the public good is provided if and only if the number of contributors reaches an exogenous threshold \(\theta \), in which case all players get the same benefit (normalized to one) from the provision of the public good. The cost sequence is thus given by \({\varvec{c}} = (\gamma ,\gamma ,\ldots ,\gamma )\) and the benefit sequence by

\[ b_i = \llbracket i \ge \theta \rrbracket , \qquad i \in \left\{ 0,1,\ldots ,n\right\} , \tag{27} \]

where \(\llbracket \cdot \rrbracket \) denotes the Iverson bracket, i.e., \(\llbracket X \rrbracket = 1\) if X is true and \(\llbracket X \rrbracket = 0\) if X is false. If \(\theta =1\) (only one contributor is required), the game is known as the “volunteer’s dilemma” [18]. In this case, \({\varvec{b}}\) is concave, \(\Delta b_{n-1} = 0< \gamma < 1 = \Delta b_0\) holds, and the game is an instance of the public goods games with concave benefits and fixed intermediate costs presented above. In particular, the sign pattern of \({\varvec{G}}\) is \((1,-1)\) and the game is a snowdrift game. Alternatively, if \(\theta =n\) (all players must contribute), then \({\varvec{b}}\) is convex, both \(\Delta b_{0} = 0 < \gamma = c_1\) and \(\Delta b_{n-1} = 1 > \gamma = c_n\) hold, and the game is an instance of the public goods games with convex benefits and intermediate costs presented above. In particular, the sign pattern of \({\varvec{G}}\) is \((-1,1)\) and the game is a stag hunt. Finally, if \(1<\theta <n\) holds (more than one contributor, but fewer than all players, is needed), the game is an instance of the public goods games with sigmoid benefits and fixed intermediate costs presented above. In this case, the game is sometimes referred to as a “teamwork dilemma” [46, 50]. The private gains are \(G_k = -\gamma < 0\) for \(k \ne \theta -1\) and \(G_{\theta -1}=1-\gamma >0\), so the sign pattern of \({\varvec{G}}\) is \((-1,1,-1)\). Here, individuals have an incentive to contribute to the public good if and only if exactly \(\theta -1\) other players contribute, as only in that scenario is their contribution pivotal.
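The three regimes of the threshold public goods game can be checked by computing the private gains from the step benefit \(b_i = \llbracket i \ge \theta \rrbracket\) and a fixed cost \(\gamma\). The sketch uses \(n = 6\) and \(\gamma = 1/7\), the values of Fig. 5, and compares \(\theta = 1\), \(\theta = 3\), and \(\theta = n\). The helper names are hypothetical, and `sign_pattern` collapses repeated signs and skips zeros.

```python
from fractions import Fraction as F

def threshold_gains(n, theta, gamma):
    """Private gains G_k = b_{k+1} - b_k - gamma with step benefits b_i = [i >= theta]."""
    b = [int(i >= theta) for i in range(n + 1)]
    return [b[k + 1] - b[k] - gamma for k in range(n)]

def sign_pattern(G):
    """Signs of the nonzero entries of G with consecutive repeats collapsed."""
    out = []
    for g in G:
        s = (g > 0) - (g < 0)
        if s and (not out or out[-1] != s):
            out.append(s)
    return out

n, gamma = 6, F(1, 7)
for theta in (1, 3, n):
    print(theta, sign_pattern(threshold_gains(n, theta, gamma)))
# 1 [1, -1]       volunteer's dilemma: snowdrift game
# 3 [-1, 1, -1]   teamwork dilemma
# 6 [-1, 1]       all players must contribute: stag hunt
```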

6 Cooperation and Social Optimality in Cooperative Dilemmas

In this section, we address two questions about the socially optimal probability of cooperation in a cooperative dilemma. First, we investigate whether or not the social optimum features full cooperation (\(\hat{x} = 1\)). While we provide a simple condition for the social optimality of full cooperation, our analysis also reveals that not all cooperative dilemmas satisfy \(\hat{x}=1\). This observation raises our second question, namely whether it could happen that a cooperative dilemma has an ESS \(x^*\) featuring overprovision of cooperation in the sense that \(x^* > \hat{x}\) holds. We show that this is impossible.

6.1 When is Full Cooperation Socially Optimal?

We say that full cooperation is socially optimal if \(\hat{x} = 1\) is the social optimum. It is intuitive that full cooperation should be socially optimal if, no matter which pure-strategy profile we consider, switching the action of a single player from \(\mathcal {D}\) to \(\mathcal {C}\) never decreases and sometimes increases the total payoff of all players. This intuition is correct. Formally, requiring that the social gain sequence \({\varvec{S}} = (S_0, S_1, \ldots , S_{n-1})\), which we have defined in Sect. 3.3, is positive, i.e., that

\[ S_k \ge 0 \quad \text{for all } k \in \left\{ 0,1,\ldots ,n-1\right\} \tag{28} \]

holds with at least one strict inequality, suffices for the optimality of full cooperation. We thus have:

Proposition 4

Suppose \({\varvec{S}}\) is positive. Then, the social optimum satisfies \(\hat{x} = 1\).

Proof

See “Appendix G.” \(\square \)

To illustrate the application of Proposition 4, consider the public goods games presented in Sect. 5.3. For these games, the total payoffs are found by substituting (25) into (12) and simplifying. They are given by

\[ n\,b_i - i\,c_i, \qquad i \in \left\{ 0,1,\ldots ,n\right\} , \tag{29} \]

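where we set \(c_0=0\), i.e., by the difference between the total benefits (\(n b_i\)) and the total costs (\(ic_i\)) in a group of n players, i of which contribute to the collective action. The social gains thus satisfy

\[ S_k = n\,(b_{k+1} - b_k) - \left[ (k+1)\,c_{k+1} - k\,c_k \right] , \qquad k \in \left\{ 0,1,\ldots ,n-1\right\} . \tag{30} \]
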
Since the benefit sequence \({\varvec{b}}\) is increasing, a sufficient condition for \({\varvec{S}}\) to be positive is that

holds, i.e., that the total costs borne by contributors are non-increasing in the number of contributors. If condition (31) holds, it follows from Proposition 4 that full cooperation is socially optimal. This is the case, for instance, if there is “cost sharing” [81], i.e., if the cost sequence is given by \(c_i = \gamma /i\) for some constant \(\gamma > 0\).

As a counterpart to Proposition 4, we can also provide a simple sufficient condition implying that full cooperation is not socially optimal:

Proposition 5

If \(S_{n-1} < 0\) holds, then the social optimum satisfies \(\hat{x} < 1\).

Proof

See “Appendix G.” \(\square \)

The condition \(S_{n-1} < 0\) in the statement of Proposition 5 holds in many cooperative dilemmas. For instance, in two-player games, we have \(S_{n-1} = S_1 = 2 C_1 - C_0 -D_1\), so that full cooperation is not socially optimal in prisoner’s dilemmas and snowdrift games with \(2 C_1 < C_0 + D_1\). More interestingly, Proposition 5 directly implies that the volunteer’s dilemma and the teamwork dilemmas discussed in Sect. 5.3 are examples of multi-player cooperative dilemmas in which full cooperation is not socially optimal. Indeed, for such games, \(\Delta b_{n-1} = 0\) (there is no additional collective benefit generated if the number of cooperators among players increases from \(n-1\) to n) and \(c_k = \gamma \) for all \(k=1,\ldots ,n\) (costs are fixed) hold. Substituting these values into equation (30) for \(k=n-1\), we obtain \(S_{n-1} = -\gamma \), so that Proposition 5 applies. See also the examples illustrated in Figs. 1, 3, and 5.
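The value \(S_{n-1} = -\gamma\) can be verified for the teamwork dilemma of Fig. 5 and for the corresponding volunteer’s dilemma. The sketch computes the total payoffs \(n b_i - i c_i\) (with \(c_0 = 0\)) and takes their first differences to obtain the social gains. The function name is hypothetical.

```python
from fractions import Fraction as F

def social_gains(b, c):
    """Social gains S_k = T_{k+1} - T_k, where T_i = n b_i - i c_i is the total
    payoff with i contributors. Costs are passed as (c_1, ..., c_n); c_0 = 0."""
    n = len(c)
    cc = [0] + list(c)                                      # cc[i] = c_i
    total = [n * b[i] - i * cc[i] for i in range(n + 1)]
    return [total[k + 1] - total[k] for k in range(n)]

# Teamwork dilemma of Fig. 5 (n = 6, theta = 3, gamma = 1/7)
b = [0, 0, 0, 1, 1, 1, 1]
S = social_gains(b, [F(1, 7)] * 6)
print(S[-1])  # -1/7, i.e. S_{n-1} = -gamma, so Proposition 5 gives x_hat < 1
```

Replacing the benefit sequence with \((0,1,1,1,1,1,1)\) (the volunteer’s dilemma, \(\theta = 1\)) again gives \(S_{n-1} = -1/7\).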

6.2 Can Cooperation be Overprovided at Equilibrium?

We have made it a defining feature of a cooperative action that it induces positive individual externalities (condition (ii) in Definition 1). These positive externalities are not internalized in the private gains that determine evolutionary stability. Intuition therefore suggests that whenever action \(\mathcal {C}\) is cooperative, the probability of cooperation \(x^*\) at an ESS cannot be higher than the socially optimal probability of cooperation \(\hat{x}\). The following result shows that this intuition is correct.

Proposition 6

Suppose action \(\mathcal {C}\) is cooperative. Let \(x^*\) be an ESS and \(\hat{x}\) the social optimum. Then, \(x^* < \hat{x}\) holds unless \(x^* = \hat{x} = 1\).

Proof

See “Appendix G.” \(\square \)

Note that Proposition 6 not only excludes the possibility of overprovision of cooperation at an ESS \((x^* > \hat{x})\), but also establishes the only case in which an ESS can agree with the social optimum: the case in which the individual incentives to cooperate are so strong that full cooperation is an ESS. The definition of a social dilemma excludes the possibility that the game has a unique ESS coinciding with the social optimum. It follows that every cooperative dilemma falls into one of two cases:

(i) all ESSs feature underprovision of cooperation, \(x^* < \hat{x}\) (as in the two-player prisoner’s dilemma and snowdrift game); or

(ii) there exists one ESS, namely \(x^* = 1\), which coincides with the social optimum, while all other ESSs (of which at least one exists) feature underprovision of cooperation (as in the two-player stag hunt).
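Proposition 6 can be illustrated numerically on the teamwork dilemma of Fig. 5 (\(n=6\), \(\theta=3\), \(\gamma=1/7\)). The sketch assumes \(w(x) = x\,w_C(x) + (1-x)\,w_D(x)\), with \(w_C\) and \(w_D\) the Bernstein polynomials of \({\varvec{C}}\) and \({\varvec{D}}\). It finds \(\hat{x}\) by grid search and the interior ESS as the positive-to-negative crossing of g; both are grid approximations with step \(10^{-4}\).

```python
from math import comb

def bernstein(coeffs, x):
    """sum_k binom(m, k) x^k (1-x)^(m-k) coeffs[k], with m = len(coeffs) - 1."""
    m = len(coeffs) - 1
    return sum(comb(m, k) * x**k * (1 - x)**(m - k) * a for k, a in enumerate(coeffs))

# Teamwork dilemma of Fig. 5: b_i = [i >= theta], fixed cost gamma
n, theta, gamma = 6, 3, 1 / 7
C = [float(k + 1 >= theta) - gamma for k in range(n)]  # C_k = b_{k+1} - gamma
D = [float(k >= theta) for k in range(n)]              # D_k = b_k
G = [c - d for c, d in zip(C, D)]                      # private gains

def w(x):
    """Expected average payoff, assumed to be x * w_C(x) + (1 - x) * w_D(x)."""
    return x * bernstein(C, x) + (1 - x) * bernstein(D, x)

grid = [i / 10_000 for i in range(10_001)]
x_hat = max(grid, key=w)                     # social optimum by grid search

g_vals = [bernstein(G, x) for x in grid]
# Stable interior rest point: g crosses from positive to negative
crossings = [grid[i] for i in range(len(grid) - 1) if g_vals[i] > 0 > g_vals[i + 1]]
x_star = crossings[-1]

print(round(x_star, 2), round(x_hat, 2))  # 0.69 0.85
print(bernstein(G, 0.0) < 0)              # True: x = 0 is also an ESS
```

Both ESSs, \(x = 0\) and \(x^* \approx 0.69\), lie strictly below \(\hat{x} \approx 0.85\), as Proposition 6 requires.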

We find it noteworthy and surprising that (in contrast to all other results in this paper) Proposition 6 fails under a more permissive definition of a cooperative action. Specifically, it fails if condition (ii) in Definition 1 is replaced with the weaker requirement that action \(\mathcal {C}\) induces positive aggregate externalities, i.e., that switching one player’s action from \(\mathcal {D}\) to \(\mathcal {C}\) never decreases but sometimes increases the sum of the co-players’ payoffs. “Appendix H” expands on this.

7 Discussion

We have revisited the questions of what is cooperation, and what is a cooperative dilemma [34, 48, 61], in the context of binary-action multi-player games, and analyzed some of the evolutionary consequences of such definitions in the absence of any additional mechanism to promote the evolution of cooperation. Our contributions are sixfold.

First, we defined an action to be cooperative if two conditions hold (Definition 1). The first condition is that mutual cooperation must provide higher payoffs than mutual defection [13]. The second condition is that cooperation must provide what we have called “positive individual externalities,” that is, a player switching from defection to cooperation must never decrease and sometimes increase the payoff of each co-player for any profile of pure strategies adopted by co-players [33, 34, 60, 61, 77]. Building on this definition of a cooperative action, we then defined a cooperative dilemma as a game with a cooperative action that is also a social dilemma (Definition 3). In turn, we defined a social dilemma as a game featuring at least one ESS that is not socially optimal, in the sense that it does not maximize the expected average payoff (Definition 2). This definition of social dilemma is similar to the definition given by Kollock [35] but adapted to our evolutionary (and symmetric) setup. We illustrated our definitions with two-player games, and showed that the prisoner’s dilemma, the snowdrift game, and the stag hunt are cooperative dilemmas according to our definition. Moreover, these three classes of games are the only kinds of cooperative dilemmas that can arise when the number of players is two.

Second, we provided simple conditions guaranteeing that a game with a cooperative action is a cooperative dilemma. A necessary and sufficient condition is that, ex-ante, players have individual incentives to defect (Proposition 1). For two-player games (and two-player games only), this condition boils down to requiring that, ex-post, players have individual incentives to defect (Proposition 2), which can be easily verified by an inspection of inequalities involving the payoffs from the game. Moving to more than two players, we provided both simple necessary (but not sufficient) and simple sufficient (but not necessary) conditions for a game to be a cooperative dilemma, given in terms of the ex-post individual incentives to defect, and hence in terms of simple inequalities involving the payoffs from the game. The necessary condition is that individuals have some ex-post incentive to defect (Corollary 1). The sufficient condition is that individuals have an ex-post incentive to defect either if everybody else defects, or if everybody else cooperates (Corollary 2).
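The ex-post tests of Corollaries 1 and 2 involve only the signs of the private gains \(G_k = C_k - D_k\), where \(C_k\) and \(D_k\) denote the payoffs to a cooperator and a defector when k co-players cooperate. The Python sketch below (function name and example payoffs are ours) assumes the game already has a cooperative action. When only the necessary test passes, the ex-post conditions are inconclusive and the ex-ante criterion of Proposition 1 has to be checked instead. For \(n = 2\) the two tests coincide, because \(k = 0\) and \(k = n-1\) are then the only cases, which is consistent with Proposition 2 holding for two-player games only.

```python
from typing import Sequence, Tuple


def ex_post_tests(C: Sequence[float], D: Sequence[float]) -> Tuple[bool, bool]:
    """Return (necessary, sufficient) ex-post tests for a game whose action C
    is already known to be cooperative. C[k], D[k]: payoffs when k co-players cooperate."""
    G = [c - d for c, d in zip(C, D)]  # private gains G_k = C_k - D_k
    # Corollary 1 (necessary): an ex-post incentive to defect for some k.
    necessary = any(g < 0 for g in G)
    # Corollary 2 (sufficient): an incentive to defect when all others defect
    # (k = 0) or when all others cooperate (k = n - 1).
    sufficient = G[0] < 0 or G[-1] < 0
    return necessary, sufficient


print(ex_post_tests([0, 3], [1, 5]))        # (True, True): a cooperative dilemma
print(ex_post_tests([1, 1, 3], [0, 2, 2]))  # (True, False): inconclusive
print(ex_post_tests([1, 2], [0, 1]))        # (False, False): not a dilemma
```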

Third, we proposed definitions of prisoner’s dilemmas, snowdrift games, and stag hunts for more than two players (Definition 4). In all cases, the multi-player game has a cooperative action and an ex-post incentive structure reminiscent of its two-player counterpart, stated in terms of inequalities between the payoffs of the game. In prisoner’s dilemmas, defection is (weakly) dominant. Individual incentives are thus characterized by both greed (the temptation to exploit cooperators) and fear (of being exploited by non-cooperators). Snowdrift games are characterized by greed only, with incentives to defect if sufficiently many others cooperate. Stag hunts are characterized by fear only, with disincentives to cooperate if not enough others cooperate. We showed that in all cases the ESS structure of each of these games mirrors that of the respective two-player game (Proposition 3): the multi-player prisoner’s dilemma has a unique ESS, where cooperation is absent; the multi-player snowdrift game has a unique ESS, which is totally mixed (so that players cooperate with a positive probability); and the multi-player stag hunt has two ESSs, full defection and full cooperation.
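The informal description of these three classes in terms of greed and fear translates into sign patterns of the private gain sequence \(G_k = C_k - D_k\): defection pays for every k in a prisoner’s dilemma; cooperation pays when few co-players cooperate and defection pays when many do in a snowdrift game; and the reverse holds in a stag hunt. The Python sketch below classifies a gain sequence by these patterns. It is a simplification under stated assumptions: it presumes the game already has a cooperative action, it drops zero entries rather than reproducing the exact weak and strict inequalities of Definition 4, and the labels and function names are ours.

```python
from typing import Sequence, Tuple


def sign_pattern(G: Sequence[float]) -> Tuple[int, ...]:
    """Signs of the private gains G_k, with zeros dropped and repeats merged."""
    pattern = []
    for g in G:
        s = (g > 0) - (g < 0)  # +1, -1, or 0
        if s != 0 and (not pattern or pattern[-1] != s):
            pattern.append(s)
    return tuple(pattern)


def classify(G: Sequence[float]) -> str:
    """Label a game with a cooperative action by the sign changes of G."""
    labels = {
        (-1,): "prisoner's dilemma",    # defect whatever others do: greed and fear
        (1, -1): "snowdrift game",      # defect only if enough others cooperate: greed
        (-1, 1): "stag hunt",           # cooperate only if enough others cooperate: fear
        (-1, 1, -1): "stagdrift game",  # two sign changes, as in some public goods games
    }
    return labels.get(sign_pattern(G), "other")


print(classify([1, -2]))      # snowdrift game
print(classify([-1, 2, -1]))  # stagdrift game
```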

Fourth, we (i) reviewed three main classes of binary-action collective action games often discussed in economics and evolutionary biology, (ii) showed that they all fall (for suitable parameter values) within the category of cooperative dilemmas that we defined, and (iii) indicated how most of them fall within the classes of multi-player prisoner’s dilemmas, snowdrift games, and stag hunts that we defined. The first class comprises congestion games (Sect. 5.1), where taking part in an activity generates negative externalities for other participants; these are special cases of snowdrift games. This observation, together with Proposition 3.2, recovers and generalizes Anderson and Engers [1, Proposition 1], who proved the existence and uniqueness of a symmetric NE for the class of congestion games we discussed. The second class comprises games with participation synergies (Sect. 5.2), where taking part in an activity generates positive externalities for other participants; these are special cases of stag hunts. This observation, together with Proposition 3.3, (i) recovers and strengthens Anderson and Engers [1, Proposition 7], who proved that \(x=0\) and \(x=1\) are symmetric NE for games with participation synergies, and (ii) provides a simpler proof of the result in Luo et al. [38, Appendix A] characterizing the ASE of the replicator dynamic of their “n-person stag hunt” game. The third and final class comprises the widely studied public goods games (Sect. 5.3), where cooperating generates positive externalities for all other players, including individuals that do not cooperate and instead free ride on the contributions of cooperators.
Here, depending on the particular shape of the benefit (or production) function, the game can be a prisoner’s dilemma, a snowdrift game, a stag hunt, or a different kind of game, characterized by a private gain sequence with two sign changes: the first from negative to positive, the second from positive to negative. Such a game (which may be called a “stagdrift game,” as it combines properties of both the snowdrift game and the stag hunt) can have two ESSs: the pure equilibrium where everybody defects, and a totally mixed equilibrium where players cooperate with a positive probability.
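The ESS structures described above can be read off the gain function \(g(x) = \sum_{k=0}^{n-1} \binom{n-1}{k} x^k (1-x)^{n-1-k} G_k\), the expected private gain from cooperating when each co-player cooperates with probability x. The Python sketch below assumes the standard generic characterization of ESSs for symmetric binary-action games in terms of this function (\(x=0\) is an ESS if \(G_0 < 0\), \(x=1\) is an ESS if \(G_{n-1} > 0\), and an interior x is an ESS if g falls through zero there) rather than reproducing the paper’s proofs; ties and tangential zeros are ignored. The gain sequence (−1, 2, −1) is a hypothetical three-player stagdrift example.

```python
from math import comb
from typing import List, Sequence


def gain_function(G: Sequence[float], x: float) -> float:
    """g(x): expected private gain from cooperating when each of the n - 1
    co-players cooperates independently with probability x."""
    m = len(G) - 1
    return sum(comb(m, k) * x**k * (1 - x) ** (m - k) * G[k] for k in range(m + 1))


def ess_points(G: Sequence[float], grid: int = 1000, tol: float = 1e-12) -> List[float]:
    """ESSs under the generic characterization (no zero G_0 or G_{n-1}, no tangencies)."""
    ess = []
    if G[0] < 0:  # g(0) = G_0 < 0: full defection resists invasion by cooperators
        ess.append(0.0)
    xs = [i / grid for i in range(grid + 1)]
    for a, b in zip(xs, xs[1:]):
        # An interior ESS is a zero where g falls from positive to non-positive.
        if gain_function(G, a) > 0 >= gain_function(G, b):
            lo, hi = a, b
            while hi - lo > tol:  # bisection keeps g(lo) > 0 >= g(hi)
                mid = (lo + hi) / 2
                if gain_function(G, mid) > 0:
                    lo = mid
                else:
                    hi = mid
            ess.append((lo + hi) / 2)
    if G[-1] > 0:  # g(1) = G_{n-1} > 0: full cooperation resists invasion by defectors
        ess.append(1.0)
    return ess


# Hypothetical stagdrift game: two ESSs, 0.0 and (3 + sqrt(3)) / 6, about 0.7887.
print(ess_points([-1, 2, -1]))
```

For this example \(g(x) = -1 + 6x - 6x^2\), whose larger root \((3+\sqrt{3})/6\) is where g falls through zero; the smaller root, where g rises through zero, separates the two basins of attraction and is not an ESS.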

Fifth, we provided simple sufficient conditions for full cooperation to be socially optimal, and for the social optimum to be totally mixed. The sufficient condition for full cooperation to be socially optimal is that the social gains are positive (Proposition 4). This means that switching the action of a focal player from defection to cooperation never makes the players, taken as a block and including the focal, worse off, and sometimes makes them better off. The sufficient condition for the social optimum to be totally mixed is that the total payoff to players when all players cooperate is smaller than the total payoff when all but one player cooperates (Proposition 5). This proposition illustrates that, for many cooperative dilemmas, the socially optimal strategy may not be full cooperation, but rather a mixed strategy in which individuals defect with some positive probability. This is already the case for subclasses of two-player prisoner’s dilemmas and snowdrift games, and it holds for a wide class of multi-player cooperative dilemmas as well.
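Propositions 4 and 5 can be checked numerically. The Python sketch below assumes that the expected average payoff is obtained by letting the number j of cooperators among the n players be binomially distributed, with total payoff \(T_j = jC_{j-1} + (n-j)D_j\) (where \(C_k\) and \(D_k\) are the payoffs to a cooperator and a defector when k co-players cooperate), and it reads the social gains as the differences \(T_{j+1} - T_j\), consistent with their description above as the change in the total payoff of all players when one player switches to cooperation. The optimum is found by a crude grid search, which avoids assuming concavity. The two example games (a two-player prisoner’s dilemma and a three-player volunteer’s dilemma with benefit 2 and cost 1) and all function names are ours.

```python
from math import comb
from typing import List, Sequence


def total_payoffs(C: Sequence[float], D: Sequence[float]) -> List[float]:
    """T_j = j*C_{j-1} + (n-j)*D_j: sum of all payoffs when j of n players cooperate."""
    n = len(C)
    return [(j * C[j - 1] if j > 0 else 0) + ((n - j) * D[j] if j < n else 0)
            for j in range(n + 1)]


def average_payoff(T: Sequence[float], x: float) -> float:
    """Expected average payoff when each player cooperates with probability x."""
    n = len(T) - 1
    return sum(comb(n, j) * x**j * (1 - x) ** (n - j) * T[j] for j in range(n + 1)) / n


def social_optimum(C, D, grid: int = 10_000) -> float:
    """Grid-search maximizer of the expected average payoff on [0, 1]."""
    T = total_payoffs(C, D)
    return max((i / grid for i in range(grid + 1)), key=lambda x: average_payoff(T, x))


def social_gains_positive(C, D) -> bool:
    """Proposition 4's hypothesis, read as T_{j+1} - T_j >= 0 for all j, some > 0."""
    T = total_payoffs(C, D)
    steps = [b - a for a, b in zip(T, T[1:])]
    return all(s >= 0 for s in steps) and any(s > 0 for s in steps)


def prop5_hypothesis(C, D) -> bool:
    """Proposition 5's hypothesis: T_n < T_{n-1}."""
    T = total_payoffs(C, D)
    return T[-1] < T[-2]


pd = ([0, 3], [1, 5])               # two-player prisoner's dilemma
volunteer = ([1, 1, 1], [0, 2, 2])  # three-player volunteer's dilemma, b = 2, c = 1
print(social_gains_positive(*pd), social_optimum(*pd))           # True 1.0
print(prop5_hypothesis(*volunteer), social_optimum(*volunteer))  # True, then roughly 0.59
```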

Sixth, and finally, we investigated whether cooperation is always underprovided at an inefficient ESS of a multi-player cooperative dilemma. We found that the answer is affirmative for all cooperative dilemmas as we defined them, just as it is for two-player cooperative dilemmas. In other words, cooperation can never be overprovided at equilibrium, and it is always underprovided unless the equilibrium coincides with the social optimum (Proposition 6). Our result recovers, and generalizes to any cooperative dilemma, both Gradstein and Nitzan [28, Proposition 7] and Anderson and Engers [1, Proposition 2], who proved, respectively, underprovision at equilibrium for the class of public goods games with concave benefits and fixed intermediate costs introduced in Sect. 5, and excessive participation at equilibrium in the class of congestion games considered in Sect. 5.1.
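The direction of the inequality in Proposition 6 can be seen in closed form in a volunteer’s dilemma, a threshold public goods game in which a good worth b to everyone is produced if at least one player pays a cost c, with \(0 < c < b\); this example is our choice and is not one analyzed in this section. With \(C_k = b - c\) for all k, \(D_0 = 0\), and \(D_k = b\) for \(k \ge 1\), the interior ESS solves \((1-x^*)^{n-1} = c/b\), while the expected average payoff \(b[1-(1-x)^n] - cx\) is concave and maximized where \((1-\hat{x})^{n-1} = c/(nb)\). Since \(c/(nb) < c/b\), we get \(x^* < \hat{x}\) for every \(n \ge 2\): cooperation is underprovided, never overprovided. The Python sketch below (function names are ours) evaluates both expressions.

```python
def volunteer_ess(n: int, b: float, c: float) -> float:
    """Interior ESS x* of the n-player volunteer's dilemma: (1 - x*)^(n-1) = c/b."""
    if n < 2 or not 0 < c < b:
        raise ValueError("need n >= 2 and 0 < c < b")
    return 1 - (c / b) ** (1 / (n - 1))


def volunteer_optimum(n: int, b: float, c: float) -> float:
    """Maximizer of the average payoff b*(1 - (1 - x)^n) - c*x: (1 - x)^(n-1) = c/(n*b)."""
    if n < 2 or not 0 < c < b:
        raise ValueError("need n >= 2 and 0 < c < b")
    return 1 - (c / (n * b)) ** (1 / (n - 1))


for n in (2, 3, 5, 10):
    x_star, x_hat = volunteer_ess(n, 2.0, 1.0), volunteer_optimum(n, 2.0, 1.0)
    # Underprovision: the ESS lies strictly below the social optimum.
    print(f"n = {n:2d}: ESS {x_star:.3f} < optimum {x_hat:.3f}")
```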

Previous definitions of cooperative dilemmas have proceeded axiomatically, defining cooperative dilemmas solely in terms of payoff inequalities [13, 48, 61, 62]. We find these definitions either too restrictive or too permissive. Dawes [13] defines a “social dilemma game” as a game satisfying (i) \(C_{n-1} > D_0\) (i.e., our condition (2)) together with (ii) \(C_k < D_k\) for all \(k \in \left\{ 0,1,\ldots ,n-1\right\} \), i.e., the condition that the private gain sequence \({\varvec{G}}\) is negative. While this definition includes the multi-player prisoner’s dilemmas characterized above (and other games having \(x=0\) as the unique ESS and a social optimum satisfying \(\hat{x}>0\)), many of the collective action games we have reviewed in this paper, including (multi-player) snowdrift games and stag hunts, would not qualify as social dilemmas under it. Nowak [48, p. 2] (see also Rand and Nowak [62, Box 1]) defines a “cooperative dilemma” as a game satisfying (i) \(C_{n-1} > D_0\) (i.e., our condition (2)) together with (iia) \(C_k < D_k\) for some \(k \in \left\{ 0,1,\ldots ,n-1\right\} \) or (iib) \(C_{k+1} < D_k\) for some \(k \in \left\{ 0,1,\ldots ,n-2\right\} \). Condition (iia) is equivalent to condition (20) (i.e., there is some ex-post incentive to defect, so that cooperation does not weakly dominate defection), while condition (iib) means that “in any mixed group defectors have a higher payoff than cooperators.” Płatkowski [61, Definition 1] defines a “multiplayer social dilemma” as a game satisfying conditions (i) and (iia) in the definition by Nowak [48], and additionally requires that the payoff sequences \({\varvec{C}}\) and \({\varvec{D}}\) are both non-decreasing (i.e., our condition (3) without requiring any strict inequality).
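The three payoff-inequality definitions just quoted are easy to encode, which makes it straightforward to see which games each one admits. The Python sketch below transcribes them literally from the conditions above, with \(C_k\) and \(D_k\) the payoffs to a cooperator and a defector when k co-players cooperate; the function names are ours. The two-player snowdrift game with \(C = (1, 2)\) and \(D = (0, 4)\), a hypothetical example, is a dilemma for Nowak and for Płatkowski but not for Dawes.

```python
from typing import Sequence


def dawes(C: Sequence[float], D: Sequence[float]) -> bool:
    # (i) C_{n-1} > D_0 and (ii) C_k < D_k for every k.
    return C[-1] > D[0] and all(c < d for c, d in zip(C, D))


def nowak(C: Sequence[float], D: Sequence[float]) -> bool:
    n = len(C)
    some_ex_post = any(C[k] < D[k] for k in range(n))          # (iia)
    mixed_groups = any(C[k + 1] < D[k] for k in range(n - 1))  # (iib)
    return C[-1] > D[0] and (some_ex_post or mixed_groups)


def platkowski(C: Sequence[float], D: Sequence[float]) -> bool:
    n = len(C)
    # (i) and (iia) as in Nowak's definition, plus C and D both non-decreasing.
    monotone = all(C[k] <= C[k + 1] and D[k] <= D[k + 1] for k in range(n - 1))
    return C[-1] > D[0] and any(C[k] < D[k] for k in range(n)) and monotone


snow = ([1, 2], [0, 4])  # hypothetical two-player snowdrift game
print(dawes(*snow), nowak(*snow), platkowski(*snow))  # False True True
```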
The latter two definitions label as dilemmas many games where the unique ESS coincides with the unique social optimum, and hence games where there is no apparent conflict between individual and collective interests, such as the three-player game in Example 1 (see also Fig. 2). This game is both a cooperative dilemma sensu Nowak [48] and a multi-player social dilemma sensu Płatkowski [61], but not a cooperative dilemma according to our definition. By requiring that cooperative dilemmas are social dilemmas sensu Definition 2, and by Proposition 1, our definition of cooperative dilemma excludes such cooperative “non-dilemmas.”

Our definition of cooperative action is related to the “individual-centered” definition of altruism for trait-group models in population genetics proposed by Kerr et al. [34], based on previous work by Uyenoyama and Feldman [77]. More specifically, our condition that cooperation generates “positive individual externalities” (3) is essentially identical to conditions 7 and 8 in [34], which measure what they refer to as the “benefit of altruism” (Footnote 6). Kerr et al. [34] considered two other definitions of altruism: the “focal-complement” and the “multi-level” definitions. In our framework, the way these alternative definitions measure the benefit of altruism gives rise to alternative definitions of a cooperative action, and hence of a cooperative dilemma (by linking the new definition of cooperative action to our definition of social dilemma). Consider first the focal-complement definition of altruism proposed by Kerr et al. [34], based on previous work by Matessi and Karlin [41]. In this case, the benefit of altruism is equated with the generation of “positive aggregate externalities” (see the end of Sect. 6.2 and “Appendix H”). Indeed, our condition that cooperation generates “positive aggregate externalities” (36) is essentially identical to condition 2 in [34] (Footnote 7). As we pointed out in Sect. 6.2 and “Appendix H,” we could replace condition (ii) in our definition of a cooperative action with the requirement that cooperation generates positive aggregate externalities. If we made that change, all of our results would continue to hold except the impossibility of overprovision of cooperation at an ESS (Proposition 6). As Example 4 in “Appendix H” illustrates, if cooperation requires positive aggregate externalities instead of positive individual externalities, cooperation can be overprovided in stag hunt games.
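Positive individual externalities imply positive aggregate externalities, but not conversely. To see this, note that if a focal player switches from defection to cooperation while k of its co-players cooperate, the k cooperating co-players move from \(C_{k-1}\) to \(C_k\) and the \(n-1-k\) defecting co-players move from \(D_k\) to \(D_{k+1}\), so the sum of the co-players’ payoffs changes by \(E_k = k(C_k - C_{k-1}) + (n-1-k)(D_{k+1} - D_k)\). This is our derivation from the verbal description of aggregate externalities, not a transcription of the paper’s condition (36). Adding the private gain \(G_k = C_k - D_k\) gives \(T_{k+1} - T_k\), the change in the total payoff \(T_j = jC_{j-1} + (n-j)D_j\) of all players, which is how we read the social gains of Proposition 4. The Python sketch below (function names ours) checks both kinds of externalities, and this identity, on a hypothetical three-player game in which defectors lose when the number of cooperating co-players rises from one to two, so that externalities are positive in aggregate but not individually.

```python
from typing import List, Sequence


def increments(seq: Sequence[float]) -> List[float]:
    return [b - a for a, b in zip(seq, seq[1:])]


def individual_externalities_positive(C, D) -> bool:
    """Condition (ii) of Definition 1: C and D non-decreasing, some strict increase."""
    steps = increments(C) + increments(D)
    return all(s >= 0 for s in steps) and any(s > 0 for s in steps)


def aggregate_externalities(C, D) -> List[float]:
    """E_k: change in the sum of co-players' payoffs when a focal player switches
    from D to C while k of its n - 1 co-players cooperate."""
    n = len(C)
    return [(k * (C[k] - C[k - 1]) if k > 0 else 0)
            + ((n - 1 - k) * (D[k + 1] - D[k]) if k < n - 1 else 0)
            for k in range(n)]


def aggregate_externalities_positive(C, D) -> bool:
    E = aggregate_externalities(C, D)
    return all(e >= 0 for e in E) and any(e > 0 for e in E)


def social_gains(C, D) -> List[float]:
    """T_{k+1} - T_k, with T_j = j*C_{j-1} + (n-j)*D_j the total payoff of all players."""
    n = len(C)
    T = [(j * C[j - 1] if j > 0 else 0) + ((n - j) * D[j] if j < n else 0)
         for j in range(n + 1)]
    return increments(T)


C, D = [0, 4, 5], [0, 3, 2]  # hypothetical three-player game
print(individual_externalities_positive(C, D))  # False: D_2 < D_1
print(aggregate_externalities(C, D))            # [6, 3, 2]
# Social gain = private gain + aggregate externality, term by term.
G = [c - d for c, d in zip(C, D)]
print(social_gains(C, D) == [g + e for g, e in zip(G, aggregate_externalities(C, D))])  # True
```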

Consider next the multi-level definition of altruism proposed by Kerr et al. [34], based on previous work by Matessi and Jayakar [40] and Cohen and Eshel [12], among others. In this definition (condition 4 in [34]), the benefit of altruism is equated with the condition that the total payoff to players strictly increases with the number of cooperators, which is equivalent to requiring that the social gains (14) are strictly positive. Suppose we were to replace condition (ii) in our definition of a cooperative action with the requirement that the social gain sequence is positive (not necessarily strictly). Then, by Proposition 4, full cooperation would be socially optimal, and all of our results would continue to hold (except, obviously, Proposition 5). In particular, since the social optimum would feature full cooperation, it would follow trivially that any ESS different from full cooperation features underprovision of cooperation, and that overprovision of cooperation at an inefficient ESS is impossible.