Abstract

We discuss the numerical solution to a class of continuous time finite state mean field games. We apply the deep neural network (DNN) approach to solving the fully coupled forward and backward ordinary differential equation system that characterizes the equilibrium value function and probability measure of the finite state mean field game. We prove that the error between the true solution and the approximate solution is linear in the square root of the DNN loss function. We give an example of applying the DNN method to solve an optimal market making problem with a terminal rank-based trading volume reward.


1 Introduction

There has been extensive research on mean field games (MFG) since their introduction by Lasry and Lions [21] and Huang et al. [18] as the limit of symmetric nonzero-sum noncooperative N-player dynamic games when the number of players tends to infinity; see Carmona and Delarue [4] and Guéant et al. [16] for excellent introductions to MFG. Current research focuses mainly on two areas. One is to study the existence and uniqueness of the MFG equilibrium through the partial differential equation (PDE) system that characterizes the equilibrium value function and mean field state, see Lasry and Lions [21]. The other is to analyze the convergence of the stochastic differential game among a large but finite number of players to the MFG limit as the number of players tends to infinity, as well as the numerical approximation of MFG, see Achdou et al. [1] and Achdou and Capuzzo-Dolcetta [2]. MFG theory has been applied to many modeling problems in economics, politics, social welfare and other areas, see, for example, Guéant [14] and Lasry et al. [22].

In this paper, we focus on MFG with a finite time horizon, in which the continuous time state dynamics of each agent take values in a finite set, under fully symmetric payoffs and complete information. Gomes et al. [12] first study finite state MFG, prove the existence and uniqueness of the Nash equilibrium through the coupled forward and backward ordinary differential equation (FBODE) system, and show the convergence of the N-player game’s Nash equilibrium to that of the limiting MFG when N tends to infinity and the time horizon is small. The authors of [8] analyze the MFG with a probabilistic approach. Carmona and Wang [7] tackle both the mean field of states and that of controls, proving the existence of an equilibrium via a backward stochastic differential equation and its uniqueness when the Hamiltonian does not depend on the mean field of controls. Carmona and Wang [6] analyze finite state MFG between one major player and an infinite number of minor players. Cardaliaguet et al. [3] make a breakthrough in convergence analysis for a diffusion model with common noise and characterize the equilibrium with the master equation and its regular solution. Cecchin and Pelino [9] apply the master equation to obtain the convergence of feedback Nash equilibria in the finite state space, which extends the convergence result in Gomes et al. [12] without requiring the time horizon to be sufficiently small.

Despite the progress on existence, uniqueness and convergence of the Nash equilibrium of finite state MFG, there is still a considerable obstacle to approximating the N-player game with a simpler MFG. One main difficulty is that the Nash equilibrium of a finite state MFG is characterized by an FBODE system with both initial and terminal conditions, which in general has no analytical solution and is difficult to solve numerically. A commonly used method for solving FBODEs is the shooting method, but it tends to work well only when the dimension is low and the boundary conditions are simple; it fails in our case. Gomes and Saude [13] propose a numerical scheme to solve finite state MFG under monotonicity conditions that do not hold in many applications.

There has been active research in recent years on using deep neural networks (DNN) to solve PDEs and ODEs with different boundary conditions, see, e.g., Lagaris et al. [19], Malek and Beidokhti [27], Lee and Kang [24], Lagaris et al. [20]. Since FBODE systems share features with PDEs, we are motivated to use DNN to numerically solve the FBODE system of the finite state MFG problem. Sirignano and Spiliopoulos [30] propose the DGM (deep Galerkin method) to solve high-dimensional PDEs with a mesh-free DNN and show the convergence of approximate solutions to the true solution under some conditions; the method is similar in spirit to the Galerkin method, except that the solution is approximated by a neural network instead of a linear combination of basis functions. Reference [5] provides a comprehensive literature review of deep learning methods applied to MFG. Many papers apply the DGM approach to numerically solve high-dimensional PDEs derived from different types of MFG (see, e.g., Han et al. [17], Ruthotto et al. [29]), while others apply DNN to solve the corresponding BSDEs (see, e.g., Fouque and Zhang [11], Lauriere [23]). Most of these papers provide numerical results without a rigorous proof for the numerical solutions. There is no guarantee that the neural network approximation converges to the true solution, and the approximation may be inaccurate even when the loss function is already small, since no relation has been established between the loss function and the error between the approximate and true solutions. Li et al. [25] and Li et al. [26] prove the strong and uniform convergence of the DGM approach. In parallel to this paper, Mishra and Molinaro [28] focus on the error estimation of physics-informed neural networks (PINN), an approach closely related to DGM. For PDEs satisfying certain conditions, they provide an abstract framework that relates the loss function of the neural network to the error between the true solution and the approximate solution generated by the neural network, and they prove error bounds for several specific types of PDEs. Their regularity assumptions on the PDEs are strong (see Assumption 2.1 in Mishra and Molinaro [28]) and are not necessarily satisfied by the FBODE system derived in this paper. To the best of our knowledge, no existing literature addresses the error between the approximation and the true solution via the loss function for FBODEs derived from continuous time finite state MFG problems. We provide such an error estimate to fill this gap.

The main result of the paper, Theorem 2.6, states that the error between the true solution \((\theta , p)\) of the FBODE system and the DNN approximate solution \(({\tilde{\theta }}, {\tilde{p}})\) is linear in the square root of the loss function of the DNN method, which gives the magnitude of the error bound for the DNN approximation as well as a convergence result. To bridge \(\theta \) and \({\tilde{\theta }}\), we use the master equation for \(\theta \) in Cecchin and Pelino [9] and prove that \({\tilde{\theta }}\) satisfies a similar equation. Cecchin and Pelino [9] prove that the equilibrium of the finite-player finite state game converges to that of the corresponding MFG; the former satisfies a backward ODE system, while the latter satisfies an FBODE system, which is equivalent to a backward PDE (the master equation) and can therefore be compared with the backward ODE system. In contrast, we want to estimate the error between the true solution of the MFG and its DNN approximation, both of which satisfy FBODE systems, the one for the DNN approximation having extra error terms compared with the one for the true solution. We leverage the master equations to connect the two FBODE systems and carry out the error analysis. Because of the perturbation terms in the FBODE system, \({\tilde{p}}\) may become negative, which is excluded in [9, Theorem 6], and we find a new way to bypass that difficulty.

As an application, we use the DNN to numerically solve an optimal market making problem in the same framework as Guéant [15], except that the terminal reward depends on the trading volume ranking determined by a so-called market maker incentive program, a contract designed by the exchange to encourage market makers to provide more liquidity (i.e., trading volume). El Euch et al. [10] discuss the market maker incentive contract and analyze how an exchange should optimally set the commission fee schedule for market makers. The trading volume ranking-related reward, commonly seen in the market maker incentive programs of various exchanges, is not considered in El Euch et al. [10]. In this paper, we use a finite state MFG to model the competition between market makers in the presence of the trading volume ranking reward and solve for the Nash equilibrium using the DNN approach. The results may help exchanges design better incentive programs by better understanding how market makers respond to the contract.

The rest of the paper is organized as follows. In Sect. 2, we formulate the finite state MFG model and state the main result of the paper, Theorem 2.6, on the error estimation of DNN approximate solution. In Sect. 3, we discuss an application in the optimal market making with rank-based trading volume reward. Section 4 contains the proofs of Theorems 2.4, 2.5, 2.6 and Proposition 3.1. Section 5 concludes.

2 Model and Main Results

We define a finite state MFG in continuous time similar to the one in Cecchin and Pelino [9]. The finite state space is \(\Sigma = \{1, \ldots , K\}\), and the state of the reference game player is denoted by Z, which is a Markov chain. A player at state z decides the switching intensities through feedback controls \(\lambda : [0, T] \times \Sigma \rightarrow ({\mathbb {R}}^{+})^{K}\). The dynamics of the player are given by

where \(N^{z}_{t}\) is a Poisson process with controlled intensity \(\lambda _{z}(t, Z_{t})\).

If state z cannot access some states, we simply set the corresponding components of the intensity vector to zero. The probability measure on the mean field of states is a function \(p: [0, T] \rightarrow P(\Sigma )\), where \(P(\Sigma ) := \{ p \in {\mathbb {R}}^{K}: p_{z} \ge 0, \sum _{z \in \Sigma } p_{z} = 1 \}\) is the set of probability measures on \(\Sigma \).

Starting at time \(t \in [0, T]\) and given any probability measure p on the mean field of states, a game player whose controlled state process \(Z_{t}\) starts at state z wants to optimize

where \({\mathbb {E}}_t[\cdot ]\) is the conditional expectation given the initial state \(Z_{t} = z\) at time t, F is the running payoff, G the terminal payoff and \({\mathcal {A}}\) the admissible control set containing all measurable functions \(\lambda : [0, T] \times \Sigma \rightarrow ({\mathbb {R}}^{+})^{K}\). We assume that for any \(z \in \Sigma \), \(F(z, \lambda )\) is bounded above and does not depend on \(\lambda _{z}\), the zth component of \(\lambda \). Define \(\theta : [0, T] \rightarrow {\mathbb {R}}^{K}\) by \(\theta (t) = (\theta _{1}(t), \ldots , \theta _{K}(t))\). According to Cecchin and Pelino [9], the equilibrium value function \(\theta \) and the mean field probability p satisfy the following FBODE system:

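where \(\Delta ^{z}\) is the difference operator, defined as \(\Delta ^{z} \theta (t) := (\theta _{1}(t) - \theta _{z}(t), \ldots , \theta _{K}(t) - \theta _{z}(t))\),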

and \(H: \Sigma \times {\mathbb {R}}^{K} \rightarrow {\mathbb {R}}\) is the Hamiltonian function, defined for any \(\mu \in {\mathbb {R}}^{K}\) satisfying \(\mu _{z} = 0\) as

and \(\lambda ^{*}(z, \mu ) = (\lambda ^{*}_{1}(z, \mu ), \ldots , \lambda ^{*}_{K}(z, \mu ))\) is the optimizer of \(H(z, \mu )\) except for \(\lambda ^{*}_{z}(z, \mu )\), which can take any value, since in the proof of our main result we always have \(\mu _z = [\Delta ^{z} \theta (t)]_{z} = \theta _z(t) - \theta _z(t) = 0\) and \(F(z, \lambda )\) is independent of \(\lambda _z\). For notational convenience, we define

The backward equation in (2.2) comes from the optimization problem (2.1) given p, and the forward equation comes from the consistency condition for the probability measure p on the mean field of states when everyone follows the equilibrium strategy. According to [12, Proposition 1], if H is differentiable and \(\lambda ^{*}(z, \mu )\) is positive except for the zth element, then for \(y \ne z\) we have

where \(\lambda ^{*}_{y}(z, \mu )\) is the intensity from state z to state y and \([D_{\mu } H(z, \mu )]_y\) is the yth component of the gradient \(D_{\mu } H(z, \mu )\). In the proofs of the main results, we always have \(\mu _z = 0\) whenever we use \(H(z, \mu )\), \(D_{\mu } H(z, \mu )\) or the Hessian matrix \(D^2_{\mu \mu } H(z, \mu )\). For simplicity of the proofs, with a slight abuse of notation, we follow Cecchin and Pelino [9] and artificially define

Then we can conclude that

and the feedback control \(\lambda (t, z) = \lambda ^{*}(z, \Delta ^{z} \theta (t))\) under the equilibrium.
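To make the objects in (2.2) concrete, the following minimal Python/NumPy sketch implements the difference operator \(\Delta ^{z}\) (with \([\Delta ^{z}\theta ]_y = \theta _y - \theta _z\), consistent with \([\Delta ^{z}\theta ]_z = 0\) above), the rate matrix induced by the feedback control \(\lambda (t, z) = \lambda ^{*}(z, \Delta ^{z} \theta (t))\), and the Kolmogorov forward equation \(\mathrm{d}p/\mathrm{d}t = p\,Q\). It rests on assumptions: states are indexed from 0 rather than 1, we assume the forward part of (2.2) takes this standard form, and `lam_star_example` is a hypothetical stand-in for \(\lambda ^{*}\), not the optimizer of any particular Hamiltonian.

```python
import numpy as np


def difference_operator(theta, z):
    """Delta^z theta = (theta_1 - theta_z, ..., theta_K - theta_z); entry z is 0."""
    return theta - theta[z]


def rate_matrix(theta, lam_star):
    """Q[z, y] = lambda*_y(z, Delta^z theta) for y != z, Q[z, z] = -sum_{y != z} Q[z, y]."""
    K = theta.shape[0]
    Q = np.zeros((K, K))
    for z in range(K):
        rates = np.array(lam_star(z, difference_operator(theta, z)), dtype=float)
        rates[z] = 0.0          # lambda*_z is arbitrary and plays no role
        Q[z] = rates
        Q[z, z] = -rates.sum()  # rows of a generator sum to zero
    return Q


def forward_rhs(theta, p, lam_star):
    """Kolmogorov forward equation dp/dt = p Q under the feedback control."""
    return p @ rate_matrix(theta, lam_star)


def lam_star_example(z, mu):
    """Hypothetical positive rates that increase with the value gain mu_y."""
    return np.exp(mu)
```

Because each row of Q sums to zero, \(\sum _z \mathrm{d}p_z/\mathrm{d}t = 0\), so the forward dynamics conserve total probability.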

We next assume H, G and \(\lambda ^{*}\) satisfy the following assumptions.

Assumption 2.1

Assume that, under (2.3), \(H(z, \mu )\) has a unique optimizer \(\lambda ^{*}(z, \mu )\) for every \(\mu \). H is \(C^{2}\) in \(\mu \) on bounded sets, H, \(D_{\mu } H\) and \(D^{2}_{\mu \mu } H\) are locally Lipschitz in \(\mu \), and the second derivative is bounded away from 0 on bounded sets, i.e., for every bounded set there exists a constant C such that for any \(\mu \) in that set satisfying \(\mu _z = 0\), we have

Moreover, assume that G is differentiable and that there exists a constant C such that, when p is bounded, its directional derivative along any vector w satisfies

and that G is decreasing in p, i.e., for all \(p, {\bar{p}} \in {\mathbb {R}}^{K}\),

Remark 2.2

H satisfies \(H(z, \mu ) \ge H(z, {\bar{\mu }})\) for any \(z \in \Sigma \) if two vectors \(\mu = (\mu _1, \ldots , \mu _K)\) and \({\bar{\mu }} = ({\bar{\mu }}_1, \ldots , {\bar{\mu }}_K)\) satisfy

Then from [12, Proposition 2], the solution to (2.2) has an a priori bound \(C_{GH}\) as long as H has the property in Remark 2.2 and G is bounded for all p(T) in the compact set \([0, 1]^{K}\). \(C_{GH}\) is defined as

where the norm \(\Vert \cdot \Vert \) is the \(\infty \) norm. G is bounded because it is continuous and defined on a compact set. For given H and G, since \(\theta \) satisfies the ODE system (2.2) and H is locally Lipschitz continuous by Assumption 2.1, \(\frac{\mathrm{d} \theta _{z}(t)}{\mathrm{d} t}\) is also bounded. Similarly, as \(D_{\mu } H\) and \(\frac{\mathrm{d} \theta _{z}(t)}{\mathrm{d} t}\) are bounded, \(\frac{\mathrm{d}^2 \theta _{z}(t)}{\mathrm{d} t^2}\) is bounded as well. A similar argument on p and \(\lambda ^{*}\) shows that \(\frac{\mathrm{d} p_{z}(t)}{\mathrm{d} t}\) and \(\frac{\mathrm{d}^2 p_{z}(t)}{\mathrm{d} t^2}\) are also bounded. Hence, for given H and G, there exist constants \(C_{\theta GH}\) and \(C_{p GH}\) such that

We summarize [9, Theorem 1] and [12, Theorem 2] in the following theorem, stated without proof.

Theorem 2.3

Under Assumption 2.1, ODE system (2.2) has a unique solution \((\theta , p)\) for any initial value \(p(t_{0}) \in P(\Sigma )\) and the MFG has a unique Nash equilibrium point.

We assume in the rest of the paper that Assumption 2.1 holds, which guarantees the existence, uniqueness and convergence of the finite state MFG. However, to find the equilibrium we need to solve (2.2), which generally does not have an analytical solution. Since (2.2) is an FBODE system, with an initial condition on p and a terminal condition on \(\theta \), it cannot be solved numerically by simple time-stepping discretization. We apply the deep neural network approach of Sirignano and Spiliopoulos [30] to solve (2.2) numerically.

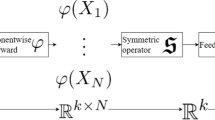

Define two sets of neural network functions as

where \(\nu : {\mathbb {R}} \rightarrow {\mathbb {R}}\) is a three times continuously differentiable activation function, and \(\nu _1, \nu _2: {\mathbb {R}} \rightarrow {\mathbb {R}}\) are two strictly increasing, three times continuously differentiable activation functions whose inverses \(\nu _1^{-1}\) and \(\nu _2^{-1}\) are twice continuously differentiable. They satisfy

where e is an arbitrarily small constant.
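A minimal sketch of such a two-layer parametrization, assuming the tanh activations used in the numerical tests (\(\nu = \tanh \), \(\nu _1(x) = a\tanh x + b\), \(\nu _2(x) = (\tanh x + 1)/2\)). One plausible reading of the index ranges (2n hidden units, 2K - 1 outputs) is that n hidden units feed the K components of \({\tilde{\theta }}\) and the other n feed the first K - 1 components of \({\tilde{p}}\), with \({\tilde{p}}_K = 1 - \sum _{z < K} {\tilde{p}}_z\). This split, the initialization and the values of a, b are assumptions for illustration only.

```python
import numpy as np


def nu1(x, a=5.0, b=0.0):
    """nu_1(x) = a tanh(x) + b; a and b are placeholder values."""
    return a * np.tanh(x) + b


def nu2(x):
    """nu_2(x) = (tanh(x) + 1) / 2, which maps into (0, 1)."""
    return (np.tanh(x) + 1.0) / 2.0


def init_params(K, n, rng):
    """Hypothetical parameter layout: 2n hidden units, K theta outputs, K-1 p outputs."""
    return {
        "alpha": rng.normal(size=2 * n),
        "c": rng.normal(size=2 * n),
        "beta_theta": rng.normal(size=(K, n)) / np.sqrt(n),
        "beta_p": rng.normal(size=(K - 1, n)) / np.sqrt(n),
    }


def forward(params, t):
    """Return (theta_tilde, p_tilde), each of shape (len(t), K)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    hidden = np.tanh(np.outer(t, params["alpha"]) + params["c"])  # (M, 2n)
    n = hidden.shape[1] // 2
    theta = nu1(hidden[:, :n] @ params["beta_theta"].T)           # (M, K)
    p_head = nu2(hidden[:, n:] @ params["beta_p"].T)              # (M, K-1), in (0, 1)
    p_last = 1.0 - p_head.sum(axis=1, keepdims=True)              # p_K = 1 - sum_{z<K} p_z
    return theta, np.concatenate([p_head, p_last], axis=1)


def negative_part_last(p):
    """(p_K)^- = -p_K * 1{p_K <= 0}, the quantity penalized in the loss."""
    return np.where(p[:, -1] <= 0.0, -p[:, -1], 0.0)
```

Since \(\nu _2\) only keeps \({\tilde{p}}_z \in (0, 1)\) for \(z < K\), the last component \({\tilde{p}}_K\) can still become negative, which is why a penalty on \(({\tilde{p}}_K)^{-}\) is useful.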

In the numerical tests of this paper, we use the hyperbolic tangent \(\tanh \) for the activation functions; in particular, \(\nu _1(x)=a\tanh x +b\) for some constants a, b and \(\nu _2(x)=(\tanh x +1)/2\). We approximate the solution \((\theta , p)\) to (2.2) numerically by \(({\tilde{\theta }}^{(N)}, {\tilde{p}}^{(N)})\), which satisfies

By considering both the differential operators and boundary conditions in (2.2), we define the loss function \(\Psi \) for any approximate solution \(({\tilde{\theta }}, {\tilde{p}})\) as

where \(({\tilde{p}}_{K}(t))^{-}:= - {\tilde{p}}_{K}(t) \mathbb {1}_{ \{ {\tilde{p}}_{K}(t) \le 0 \} }\) is the negative part of \({\tilde{p}}_{K}(t)\), and \(B_{\theta }\), \(B_{p}\) can be any constants that satisfy

where constants \(C_{\theta GH}\) and \(C_{p GH}\) are from (2.8). Then it follows

Both the integral term and the maximum term in (2.11) can be calculated via Monte Carlo simulation. In practice, we compute these two terms with an approach similar to that of Sirignano and Spiliopoulos [30] to increase the robustness of training. For a given N, the structure of the neural network is fixed. We train the network by finding the values of \(\{ \beta _{j, i} \}^{2 K - 1, 2 n}_{i, j = 1}\), \(\{ \alpha _{i} \}^{2 n}_{i = 1}\) and \(\{ c_{i} \}^{2 n}_{i = 1}\) that determine \(({\tilde{\theta }}^{(N)}, {\tilde{p}}^{(N)})\) and minimize \(\Psi \). For the true solution \((\theta , p)\), \(\Psi (\theta , p) = 0\). Since \((\theta , p)\) exists and is unique, it is the unique minimizer of \(\Psi \), with minimal value \(\Psi (\theta , p) = 0\). Theorem 2.4 below is a convergence result similar to Theorem 7.1 in Sirignano and Spiliopoulos [30].
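The integral and maximum terms of a loss such as (2.11) can be estimated from uniformly sampled times. The function below is a generic sketch, not the authors' code: `residual` is a hypothetical callable returning the pointwise residual of the ODE system (or of a derivative constraint) at each sampled time. The sample mean multiplied by T is an unbiased estimate of the time integral, whereas the sample maximum only bounds the true supremum from below.

```python
import numpy as np


def mc_integral_and_max(residual, T, num_samples, rng):
    """Monte Carlo estimates of int_0^T |r(t)|^2 dt and max_{t in [0, T]} |r(t)|.

    `residual` maps an array of times of shape (M,) to values of shape (M,) or (M, d).
    """
    t = rng.uniform(0.0, T, size=num_samples)        # mesh-free random sample times
    r = np.asarray(residual(t), dtype=float).reshape(num_samples, -1)
    sq_norm = np.sum(r ** 2, axis=1)                 # |r(t_m)|^2 for each sample
    integral = T * sq_norm.mean()                    # unbiased estimate of the integral
    maximum = np.sqrt(sq_norm).max()                 # lower estimate of the supremum
    return integral, maximum


rng = np.random.default_rng(0)
integral, maximum = mc_integral_and_max(lambda t: t, T=2.0, num_samples=200_000, rng=rng)
```

For the toy residual \(r(t) = t\) on [0, 2], the integral estimate should be close to 8/3 and the maximum close to (and never above) 2. Drawing fresh sample times at every training step, as in mesh-free training, is what keeps the estimator from overfitting a fixed grid.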

Theorem 2.4

There exists a sequence of \(({\tilde{\theta }}^{(N)}, {\tilde{p}}^{(N)})\) defined in (2.10) such that

Proof

See Sect. 4.

When the loss function \(\Psi \) is sufficiently small, the uniform bounds on the first and second derivatives of the approximating functions imply that the maximum error over the time interval is also small.
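The step from a small integrated error to a small maximum error can be illustrated by a standard argument. This is a sketch of the idea, not the precise statement or constants of Theorem 2.5: let e be a scalar error function on [0, T] with Lipschitz constant L and \(\int _0^T e(t)^2\,\mathrm{d}t \le \varepsilon \).

```latex
Let $M = |e(\hat t)| = \max_{t \in [0,T]} |e(t)|$. Lipschitz continuity gives
\[
  |e(t)| \ \ge\ M - L\,|t - \hat t| \ \ge\ 0 \qquad \text{whenever } |t - \hat t| \le M/L .
\]
Case 1: $M/L \le T/2$. One of $[\hat t - M/L, \hat t]$ and $[\hat t, \hat t + M/L]$ lies in $[0, T]$, so
\[
  \varepsilon \ \ge\ \int_0^T e(t)^2\,\mathrm{d}t
  \ \ge\ \int_0^{M/L} (M - L s)^2\,\mathrm{d}s
  \ =\ \frac{M^3}{3L},
  \qquad\text{hence}\qquad
  \max_{t \in [0,T]} |e(t)| \ \le\ (3 L \varepsilon)^{1/3}.
\]
Case 2: $M/L > T/2$. An interval of length $T/2$ with endpoint $\hat t$ lies in $[0, T]$,
and on it $|e(\hat t \pm s)| \ge M - Ls > L(T/2 - s) \ge 0$ for $s \in [0, T/2]$, so
\[
  \varepsilon \ \ge\ \int_0^{T/2} L^2 (T/2 - s)^2\,\mathrm{d}s \ =\ \frac{L^2 T^3}{24},
\]
which is impossible when $\varepsilon < L^2 T^3 / 24$.
```

In this sketch, a smallness condition on \(\varepsilon \) is what rules out the second case, in the same spirit as the threshold \(\varepsilon _{0}\) in Theorem 2.5.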

Theorem 2.5

There exists a constant \(\varepsilon _{0}\) such that for any \(\varepsilon < \varepsilon _{0}\), if \(\Psi < \varepsilon \), then there exists a constant C such that for all \(t \in [t_{0}, T]\) and \(z \in \Sigma \), we have

where \(\Vert \cdot \Vert \) is the infinity norm.

Proof

See Sect. 4.

Note that the constant C in Theorem 2.5 depends on the FBODE system and the bound of its true solution, but is independent of the DNN structure; in this sense, Theorem 2.5 is an algorithm-independent result. We now state our main result on the error estimation for the numerical solution to the finite state mean field game.

Theorem 2.6

For every \(t \in [t_{0}, T]\) and \(z \in \Sigma \), assume \({\tilde{\theta }}(t)\) and \({\tilde{p}}(t)\) satisfy:

where \(p_{0} \in P(\Sigma )\), \({\tilde{p}}_{K}(t) = 1 - \sum _{z \ne K} {\tilde{p}}_{z}(t)\) and \({\tilde{p}}_{z}(t) \in [0, 1]\) for \(z < K\). Then there exist uniform constants B and \(N_{0}\) such that when \(N > N_{0}\) and

we have for all \(t \in [t_{0}, T]\) and \(z \in \Sigma \),

Proof

See Sect. 4.

Note that the constant B depends on the FBODE system and the bound of its true solution, but is independent of the DNN structure, which implies that Theorem 2.6 is an algorithm-independent result.

Combining Theorems 2.4 and 2.6, we immediately have the following result.

Theorem 2.7

The sequence \(({\tilde{\theta }}^{(N)}, {\tilde{p}}^{(N)})\) defined in (2.10) converges uniformly to the true solution \((\theta , p)\) of the FBODE (2.2), that is, for \(t \in [0, T]\),

Remark 2.8

Although we only prove the convergence and error estimation results for a two-layer neural network structure characterized by \({\varvec{\Theta }}^{n}(\nu _1, \nu )\) and \({\mathbf {P}}^{n}(\nu _2, \nu )\), the idea and the proof can be easily adapted to more sophisticated neural network models (more layers, LSTM, etc.) as they share similar structures.

3 Application: Optimal Market Making with Rank-Based Reward

The model setting is similar to Guéant [15], except that the exchange provides an incentive reward for market making. The terminal payoffs of market makers depend on their trading volumes and rankings in the market, so the optimization problems of different market makers are coupled. A finite-player game is in general difficult to solve due to its high dimensionality, but MFG provides a good approximation, see [8, 9] and [12]. We therefore use MFG as a proxy to solve the optimal market making problem.

Consider a continuum family of market makers \(\Omega _{mm}\) who keep quoting bid/ask limit orders, and select one of them as the reference market maker. Assume the asset price \(S_t\) follows a Brownian motion with initial value S,

where \(W_{t}\) is a standard Brownian motion adapted to the natural filtration \(\{ {\mathcal {F}}^{W}_{t} \}_{t \in {\mathbb {R}}_{+} }\) and \(\sigma \) is the volatility of the stock. Assume \(\delta ^{a}_{t}\) and \(\delta ^{b}_{t}\) are the ask/bid spreads, which are controls chosen by the reference market maker. Denote by \(N_{t}^{a}\) and \(N_{t}^{b}\) the jump processes for buy/sell market order arrivals to the reference market maker, with intensities \(\Lambda (\delta ^{a}_{t})\) and \(\Lambda (\delta ^{b}_{t})\), respectively. Assume \(\Lambda : {\mathbb {R}} \rightarrow {\mathbb {R}}\) is decreasing and continuously differentiable, has a continuous inverse function, and satisfies:

The reference market maker has state variables \((X_{t}, q_{t}, v_{t})\), where \(X_t\) is the cash account, \(q_t\) the inventory and \(v_t\) the accumulated trading volume, with initial value (x, q, v). We assume \(q_{t}\) takes values only in the finite set \({\mathbf {Q}} = \{-Q, \ldots , Q\}\) and \(v_{t}\) only in the finite set \({\mathbf {V}}:= \{0, \ldots , v_{\max }\}\); any trading volume above \(v_{\max }\) is not counted in the reward calculation. Denote by \(I^{b}:= \mathbb {1}_{q + 1 \in {\mathbf {Q}}}\) and \(I^{a}:= \mathbb {1}_{q - 1 \in {\mathbf {Q}}}\) the indicators of the market maker’s buying and selling capabilities.
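The discrete state space \({\mathbf {Q}} \times {\mathbf {V}}\), the indicators \(I^{b}, I^{a}\), and an enumeration of (q, v) into \(\{1, \ldots , K\}\) with \(K = (2Q+1)(v_{\max }+1)\), as used in the reduction to a finite state MFG later in this section, can be sketched as follows. The row-by-row ordering is one illustrative choice of the bijection, and `after_fill` is a hypothetical helper that assumes each fill trades one unit and adds one unit of volume, capped at \(v_{\max }\); the actual dynamics are those given by the equations in the text.

```python
def make_state_map(Q, v_max):
    """Enumerate (q, v) in {-Q, ..., Q} x {0, ..., v_max} as z in {1, ..., K}.

    Row-by-row ordering is one illustrative choice of the bijection.
    """
    to_z, to_qv = {}, {}
    z = 1
    for q in range(-Q, Q + 1):
        for v in range(v_max + 1):
            to_z[(q, v)] = z
            to_qv[z] = (q, v)
            z += 1
    return to_z, to_qv


def indicators(q, Q):
    """Return (I^b, I^a) = (1{q + 1 in Q}, 1{q - 1 in Q}) for q in {-Q, ..., Q}."""
    return int(q + 1 <= Q), int(q - 1 >= -Q)


def after_fill(q, v, side, v_max):
    """Hypothetical post-fill state: one unit traded, volume capped at v_max."""
    dq = -1 if side == "ask" else 1  # ask fill: sell one unit; bid fill: buy one unit
    return q + dq, min(v + 1, v_max)
```

With the benchmark capacity \(Q = 1\) and \(v_{\max } = 10\), this gives K = 33 states.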

The dynamics of \(X_{t}\) are given by

those of \(q_{t}\) by

and those of \(v_t\) by

Denote by \(p(t, q_{t}, v_{t})\) the probability measure on the mean field of discrete states \((q_{t}, v_{t})\) for all market makers. The reference market maker wants to solve the following optimization problem:

where \(X_T+q_TS_T\) is the cash value at time T, \(l (|q_{T}|)\) the terminal inventory holding penalty with l an increasing convex function on \({\mathbb {R}}_+\) with \(l(0)=0\), \(\gamma \sigma ^{2} \int _{0}^{T} q_{t}^{2} dt\) the accumulated running inventory holding penalty with \(\gamma \) a positive constant representing the risk aversion level, and \(R(v_{T})\) the cash reward given by the exchange as an incentive for market makers to increase trading volume \(v_T\). We consider the rank-based trading volume reward, defined by

where \(1 - \sum _{j = v_{T}}^{v_{\max }} \sum _{i = -Q}^{Q} p(T, i, j)\) is the percentage of market makers that the reference market maker exceeds in trading volumes and R a positive constant representing the maximum reward set by the exchange.
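The fraction of market makers exceeded by the reference market maker, \(1 - \sum _{j = v_{T}}^{v_{\max }} \sum _{i = -Q}^{Q} p(T, i, j)\), can be computed directly from the terminal distribution stored as a \((2Q+1) \times (v_{\max }+1)\) array (rows q = -Q, ..., Q, columns v = 0, ..., \(v_{\max }\)). In the sketch below, `rank_reward` assumes, for illustration only, that the reward equals R times this fraction, which is consistent with R being the maximum reward; the exact form is the one given in (3.3).

```python
import numpy as np


def fraction_exceeded(pT, v_T):
    """1 - sum_{j >= v_T} sum_i p(T, i, j): share of market makers with volume below v_T.

    pT has shape (2Q + 1, v_max + 1); column j corresponds to terminal volume j.
    """
    return 1.0 - pT[:, v_T:].sum()


def rank_reward(pT, v_T, R):
    """Hypothetical rank-based reward: R times the share of market makers exceeded."""
    return R * fraction_exceeded(pT, v_T)


pT_uniform = np.full((3, 11), 1.0 / 33.0)  # Q = 1, v_max = 10, uniform for illustration
```

Under the uniform toy distribution, a market maker with \(v_T = 0\) exceeds nobody, while one with \(v_T = 5\) exceeds the 15/33 of market makers whose volume lies in {0, ..., 4}.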

Using the martingale property, (3.2) can be reduced to \(x + q S + \theta (0, q, v)\), where \(\theta \) is the value function defined by

and \({\mathbb {E}}_t[\cdot ]\) is the conditional expectation given \(q_{t} = q, v_{t} = v\).

We assume that market makers use closed-loop feedback controls, i.e., when a market maker is in state (q, v),

Since the only relevant states are \(q_{t}\) and \(v_{t}\) that both take values in finite sets, the problem can be reduced to a continuous time finite state MFG discussed in Cecchin and Pelino [9] by reformulating some notations as follows. Define \(K:= (2 Q + 1) (v_{\max } + 1)\) and \(\Sigma := \{1, \cdots , K\}\). There is a one-to-one mapping \(Z: {\mathbf {Q}} \times {\mathbf {V}} \rightarrow \Sigma \): For every \((q, v) \in {\mathbf {Q}} \times {\mathbf {V}}\), there exists \(z \in \Sigma \) such that

and for every \(z \in \Sigma \), there exists \((q, v) \in {\mathbf {Q}} \times {\mathbf {V}}\) such that

The state (q, v) is then represented by the state z. The value function \(\theta \) and the probability measure on the mean field of states p are reformulated as \(\theta , p: [0, T] \rightarrow {\mathbb {R}}^{K}\), where

Define \(\lambda \) as

where \(\lambda \) satisfies

\(\beta ^{a}(z)\) and \(\beta ^{b}(z)\) are defined as the two accessible states from state z,

Define F and G as

The optimal market making problem is now reduced to a continuous time finite state MFG discussed in Sect. 2 of this paper. Denote the game as \(G_{R}\). We have the following result.

Proposition 3.1

\(G_{R}\) satisfies Assumption 2.1.

Proof

See Sect. 4.

According to Cecchin and Pelino [9], the Nash equilibria of the MFG \(G_{R}\) and of the finite-player game exist and are unique, and the N-player game converges to the limiting MFG at rate \(O(\frac{1}{N})\). We can therefore solve the FBODE system corresponding to the finite state MFG in (3.5) numerically by the DNN technique and obtain the equilibrium value function and the probability of the mean field.

We next carry out numerical tests. We use an LSTM (long short-term memory) neural network to approximate the solution \((\theta , p)\). Denote the function constructed by the LSTM neural network by \(({\tilde{\theta }}(t, \beta ), {\tilde{p}}(t, \beta ))\), where \(\beta \) is the set of neural network parameters. The network is designed as follows: layer 0 is the input \(t \in [0, T]\), and layer k with output \(h_k\) is given by

with the initial values \(c_{0} = h_{0} = 0\), where the operator \(\circ \) is the element-wise product, \(\sigma \) denotes scaled \(\tanh \) activation functions (hence satisfying all assumptions in our main results), \(t \in [0, T]\) is the input to the LSTM network, \(f_k \in {\mathbb {R}}^{h}\) the forget gate’s activation vector, \(i_k \in {\mathbb {R}}^{h}\) the input/update gate’s activation vector, \(o_k \in {\mathbb {R}}^{h}\) the output gate’s activation vector, \(h_k \in {\mathbb {R}}^{h}\) the hidden state vector, \({\tilde{c}}_k \in {\mathbb {R}}^{h}\) the cell input activation vector, \(c_k \in {\mathbb {R}}^{h}\) the cell state vector, \(W \in {\mathbb {R}}^{h \times 1}, U \in {\mathbb {R}}^{h \times h}, b \in {\mathbb {R}}^{h}\) the weight matrices and bias vector to be learned during training, and h the number of hidden units. An LSTM network is a more elaborate architecture than a traditional feedforward neural network and can approximate complicated functions more accurately; for our model, it performs better than a traditional network.

We use an LSTM network with 3 layers and 32 nodes per layer. The network is trained by a stochastic gradient method on mesh-free, randomly sampled points in [0, T]; this randomness adds to the robustness of the network. The detailed training procedure is similar to that in Sirignano and Spiliopoulos [30].
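A minimal NumPy sketch of the forward pass of such a stack (3 layers, 32 hidden units), assuming the standard LSTM gate equations with the scalar time t fed into every layer (consistent with \(W \in {\mathbb {R}}^{h \times 1}\)) and separate weights per layer. The gates use the logistic sigmoid written as the scaled tanh \((\tanh (x/2)+1)/2\). The initialization is arbitrary, and the readout from the final hidden state to \(({\tilde{\theta }}, {\tilde{p}})\) is not specified here.

```python
import numpy as np


def sigmoid(x):
    """Logistic sigmoid written as a scaled tanh: (tanh(x / 2) + 1) / 2."""
    return 0.5 * (np.tanh(0.5 * x) + 1.0)


def init_lstm(num_layers, h, rng):
    """One dict of (W, U, b) per gate and per layer; small random weights, zero biases."""
    layers = []
    for _ in range(num_layers):
        layer = {}
        for gate in ("f", "i", "o", "c"):
            layer["W_" + gate] = rng.normal(scale=0.1, size=(h, 1))
            layer["U_" + gate] = rng.normal(scale=0.1, size=(h, h))
            layer["b_" + gate] = np.zeros(h)
        layers.append(layer)
    return layers


def lstm_features(layers, t):
    """Stack of LSTM-style layers, each receiving the scalar input t and h_{k-1}."""
    h_dim = layers[0]["U_f"].shape[0]
    x = np.array([float(t)])
    h = np.zeros(h_dim)  # h_0 = 0
    c = np.zeros(h_dim)  # c_0 = 0
    for layer in layers:
        f = sigmoid(layer["W_f"] @ x + layer["U_f"] @ h + layer["b_f"])        # forget gate
        i = sigmoid(layer["W_i"] @ x + layer["U_i"] @ h + layer["b_i"])        # input gate
        o = sigmoid(layer["W_o"] @ x + layer["U_o"] @ h + layer["b_o"])        # output gate
        c_tilde = np.tanh(layer["W_c"] @ x + layer["U_c"] @ h + layer["b_c"])  # cell input
        c = f * c + i * c_tilde  # element-wise products
        h = o * np.tanh(c)
    return h


rng = np.random.default_rng(0)
layers = init_lstm(num_layers=3, h=32, rng=rng)
h_final = lstm_features(layers, 0.5)
```

One possible readout composes the final hidden state with an affine map followed by activations such as \(\nu _1, \nu _2\) from Sect. 2; this is an assumption, not a description of the authors' implementation.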

The market order arrival intensity function is given by \( \Lambda (\delta ):= A e^{-k \delta }\) and the terminal inventory penalty function by \(l(q):= a q^2\). We assume all market makers start with zero inventory and zero trading volume. The benchmark parameters are \(S=20\), hourly volatility \(\sigma =0.01\), \(\gamma =1\), \(T=10\) hours, capacity \(Q=1\), \(v_{\max }=10\), \(k=2\), \(a=2\), \(A=0.5\), and \(R=2\).
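With the exponential intensity \(\Lambda (\delta ) = A e^{-k\delta }\), the optimal spread has a closed form under an additional assumption. Suppose, as in the framework of Guéant [15], that on each side the market maker maximizes \(\delta \mapsto \Lambda (\delta )(\delta + \mu )\), where \(\mu \) is the change in the value function caused by a fill (for example, \(\theta \) at the post-fill state minus \(\theta \) at the current state). The first-order condition \(A e^{-k\delta }(1 - k(\delta + \mu )) = 0\) gives \(\delta ^{*} = 1/k - \mu \) and maximal value \((A/k)e^{k\mu - 1}\). The sketch below checks this against a grid search with A and k from the benchmark data; the per-side objective is our assumption.

```python
import numpy as np

A, k = 0.5, 2.0  # benchmark intensity parameters from the text


def intensity(delta):
    """Order arrival intensity Lambda(delta) = A exp(-k delta), decreasing in delta."""
    return A * np.exp(-k * delta)


def intensity_inverse(lam):
    """Lambda^{-1}(lam) = -log(lam / A) / k, defined for lam > 0."""
    return -np.log(lam / A) / k


def optimal_spread(mu):
    """Maximizer of delta -> Lambda(delta) * (delta + mu) (assumed per-side objective)."""
    return 1.0 / k - mu


def side_value(mu):
    """Maximal value (A / k) * exp(k * mu - 1) of the same objective."""
    return (A / k) * np.exp(k * mu - 1.0)


grid = np.linspace(-5.0, 5.0, 200_001)
```

A larger post-fill value gain \(\mu \) lowers the optimal quote, since the market maker is then more eager to trade.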

Most exchanges’ incentive programs use one of two typical trading volume reward schemes: the rank-based trading volume reward in (3.3), and the linear trading volume reward, defined by

Since \(R_L(v_{T})\) is independent of the mean field of states, the FBODE system for the value function and the probability of the mean field of states is decoupled and can be solved numerically with a standard Euler scheme. We next compare the value functions, optimal bid/ask spreads and probability distributions of trading volumes under three trading volume reward schemes: 1. no trading volume reward (\(R = 0\), benchmark case), 2. linear trading volume reward (R in (3.6)), and 3. rank-based trading volume reward (R in (3.3)). The rank-based reward introduces competition between market makers, whereas the linear reward does not. The training result is satisfactory, with an average loss below 0.003.
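Because the terminal condition no longer depends on p in the linear-reward case, \(\theta \) can be computed first by marching backward from T, after which p is propagated forward using the computed \(\theta \). The following is a minimal explicit Euler sketch of this two-pass scheme; `theta_rhs` and `p_rhs` are hypothetical callables standing in for the right-hand sides of the backward and forward equations (taken autonomous for simplicity), and the toy system is not the market making model.

```python
import numpy as np


def solve_decoupled(theta_T, theta_rhs, p0, p_rhs, T, num_steps):
    """Two-pass explicit Euler scheme for a decoupled forward-backward ODE system.

    theta' = theta_rhs(theta) with terminal value theta(T) = theta_T (no dependence on p),
    p'     = p_rhs(theta, p)  with initial value p(0) = p0.
    """
    dt = T / num_steps
    theta = np.empty((num_steps + 1, len(theta_T)))
    theta[-1] = theta_T
    for n in range(num_steps, 0, -1):        # backward pass: from T down to 0
        theta[n - 1] = theta[n] - dt * theta_rhs(theta[n])
    p = np.empty((num_steps + 1, len(p0)))
    p[0] = p0
    for n in range(num_steps):               # forward pass: from 0 up to T
        p[n + 1] = p[n] + dt * p_rhs(theta[n], p[n])
    return theta, p


# Toy check: theta' = -theta gives theta(0) = theta(T) * e^T; p is a two-state chain.
rates = np.array([[-1.0, 1.0], [2.0, -2.0]])
theta, p = solve_decoupled(
    theta_T=np.array([1.0]),
    theta_rhs=lambda th: -th,
    p0=np.array([1.0, 0.0]),
    p_rhs=lambda th, pr: pr @ rates,
    T=1.0,
    num_steps=10_000,
)
```

In the coupled rank-based case this two-pass ordering breaks down, because the terminal value of \(\theta \) depends on p(T), which is only known after the forward pass.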

Figures 1 and 2 show the value functions \(\theta (t, q, v)\) for fixed \(q=0,1\) and varying v, where ‘Benchmark’ denotes no trading volume reward, ‘\(v = 0\) lin’ the linear reward with initial trading volume \(v = 0\), and ‘\(v = 0\)’ the rank-based reward with initial trading volume \(v = 0\). The introduction of the market incentive R clearly increases the value functions of market makers, and the higher the initial trading volume v, the higher the value function \(\theta \). Even for market makers with initial trading volume \(v = 0\), the value functions are higher than the benchmark one because they benefit from potential incentive gains; as this potential gain vanishes when t tends to T, the curves for \(v=0\) converge to the benchmark one. The value functions under the linear and rank-based rewards are largely the same.

4 Proofs

4.1 Proof of Theorem 2.4

Proof

According to Theorem 2.3, there exists a unique solution \((\theta , p)\) to the ODE system (2.2), which is also the unique minimal point of \(\Psi \), such that

We use \((\nu _i^{-1})'\) to denote the first-order derivative of \(\nu _i^{-1}\) for \(i = 1, 2\). From (2.7) we know \(\theta \) is bounded by \(C_{GH}\). Hence, \(\frac{\mathrm{d}}{\mathrm{d} t} \theta _{z}(t)\) is also bounded uniformly for t and z. Moreover, \(p(t) \in P(\Sigma )\) for any \(t \in [0, T]\), and hence, is also bounded. From the assumption on \(\nu _1\), \(\nu _2\), we know

This means that the image of \(\theta _{z}\) is bounded and a strict subset of the domain of \(\nu _{1}^{-1}\); the same holds for \(p_{z}\) and \(\nu _{2}^{-1}\). Combined with the continuous differentiability of \(\nu _{1}^{-1}\) and \(\nu _{2}^{-1}\), this implies that \(\nu _{1}^{-1}(\theta _{z}(t))\), \((\nu _{1}^{-1})'(\theta _{z}(t))\), \(\nu _{2}^{-1}(p_{z}(t))\) and \((\nu _{2}^{-1})'(p_{z}(t))\) are bounded by some constant C uniformly in t and z. Since \(\nu _i\) is three times continuously differentiable, \((\nu _{i})'\) and \((\nu _{i})''\) are Lipschitz continuous on \([-2 C, 2 C]\) with some coefficient L for \(i = 1, 2\). Define \( C^{N}(\nu ):= \{ \zeta : [0, T] \rightarrow {\mathbb {R}}; \quad \zeta (t) = \sum _{i = 1}^{N} \beta _{i} \nu ( \alpha _{i} t + c_{i}) \}\). According to the proof of Theorem 7.1 in Sirignano and Spiliopoulos [30], for any \(0< \varepsilon < C\), there exist \(N > 0\) and \(y_{z} \in C^{N}(\nu )\) such that

Hence, we have

On the other hand,

As \(y_{z}(t) \in [-2 C, 2 C]\), there exists a constant \(C_1\) that bounds \((\nu _{1})'(y_{z}(t))\) uniformly. Moreover, we have

Hence, we have

The first inequality above comes from the boundedness and Lipschitz continuity of \(\nu _{1}'\), as well as the boundedness of \((\nu _{1}^{-1})'(\theta _{z}(t))\). Moreover, for second-order derivatives, we have

As \(\theta _{z}(t)\) and \(\frac{\mathrm{d}}{\mathrm{d} t} \theta _{z}(t)\) are bounded and \(\nu _{1}^{-1}\) is twice continuously differentiable, \(\frac{\mathrm{d}^2}{\mathrm{d} t^2} \nu _{1}^{-1}(\theta _{z}(t))\) is bounded. To estimate the difference between the second-order derivatives of the approximating function and the true function, we have

Define

and we have

As \(y_{z}(t)\) and \(\frac{\mathrm{d}}{\mathrm{d} t} \nu _{1}^{-1}(\theta _{z}(t))\) are bounded by the previous steps, and \(\nu _1\) is three times continuously differentiable by definition, \(\nu _1''(y_{z}(t))\), \(\nu _1'(y_{z}(t))\), \((\frac{\mathrm{d}}{\mathrm{d} t} \nu _{1}^{-1}(\theta _{z}(t)))^2\) and \(\frac{\mathrm{d}^2}{\mathrm{d} t^2} \nu _{1}^{-1}(\theta _{z}(t))\) are also bounded. Moreover, \(\Vert \frac{\mathrm{d}}{\mathrm{d} t} y_{z}(t) \Vert \le \Vert \frac{\mathrm{d}}{\mathrm{d} t} \nu _{1}^{-1}(\theta _{z}(t)) \Vert + \varepsilon \), which is hence bounded. By the Lipschitz continuity of \(\nu _1'\) and \(\nu _1''\), as well as (4.1), there exists a constant \(C_2\) such that

By rescaling \(\varepsilon \) in the above proof, we know that for any \(0< \varepsilon < C\), there exist \(N > 0\) and \(y_{z} \in C^{N}(\nu )\) such that

Hence, there exist \(N > 0\) and \(y_{z} \in C^{N}(\nu )\) such that

Similarly, we know \(\Vert \frac{\mathrm{d}}{\mathrm{d} t} \nu _{2}(y_{z}(t)) \Vert \le C_{p GH} < B_{p}\). Then we get

If we define

Then, from the proof above, for any \(0< \varepsilon < C\) there exist \(N > 0\) and \({\tilde{\theta }}^{(N)} \in {\hat{\varvec{\Theta }}}^{N}(\nu _1, \nu )\) such that

On the other hand, for any function \(f_{N} \in {\hat{\varvec{\Theta }}}^{N}(\nu _1, \nu )\) there exists \(f_{K N} \in {\varvec{\Theta }}^{K N}(\nu _1, \nu )\) with \(f_{K N} = f_{N}\), obtained by setting some \(\beta _{j, i} = 0\). Hence \({\hat{\varvec{\Theta }}}^{N}(\nu _1, \nu ) \subset {\varvec{\Theta }}^{K N}(\nu _1, \nu )\) and \({\tilde{\theta }}^{(N)} \in {\varvec{\Theta }}^{K N}(\nu _1, \nu )\). A similar argument applies to p and \({\mathbf {P}}^{N}(\nu _2, \nu )\). We therefore conclude that for any \(0< \varepsilon < C\), there exist \(N > 0\) and \({\tilde{\theta }}^{(N)} \in {\varvec{\Theta }}^{N}(\nu _1, \nu )\), \({\tilde{p}}^{(N)} \in {\mathbf {P}}^{N}(\nu _2, \nu )\) such that
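The embedding argument amounts to padding a network with hidden units whose output weights vanish. Here is a minimal Python sketch. It assumes, hypothetically, that both families consist of one-hidden-layer sigmoid networks that differ only in width. The helper names are ours.

```python
import math

def sigmoid(x):
    # Numerically stable logistic function (assumed activation).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def shallow_net(t, alpha, beta, c):
    """One-hidden-layer network t -> sum_i beta_i * sigmoid(alpha_i t + c_i)."""
    return sum(b * sigmoid(a * t + ci) for a, b, ci in zip(alpha, beta, c))

def pad_to_width(alpha, beta, c, width):
    """Embed a narrow network into a wider one by appending units with zero output weight."""
    extra = width - len(beta)
    if extra < 0:
        raise ValueError("target width must not be smaller than the current width")
    # Inner parameters of padded units are arbitrary: their output weight is 0.
    return alpha + [1.0] * extra, beta + [0.0] * extra, c + [0.0] * extra
```

The padded network represents exactly the same function, which is all the inclusion of the smaller family into the larger one requires.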

Then, as in the proof of Theorem 7.1 in Sirignano and Spiliopoulos [30], there exists a uniform constant M, depending only on the bounds on \(\theta \) and \(\lambda ^{*}\) and on the Lipschitz coefficients of \(\lambda ^{*}\) and H, such that

This concludes the proof. \(\square \)

4.2 Proof of Theorem 2.5

Proof

Since the proof is the same for every \(t_{0}\), we assume \(t_{0} = 0\) for ease of notation. We first prove the first inequality in (2.12). Define

As \(\Psi < \varepsilon \), the definition of \(\Psi \) implies that \(\frac{\mathrm{d}^2 \theta _{z}(t)}{\mathrm{d} t^2}\) is uniformly bounded on [0, T]. Furthermore, H is Lipschitz continuous, and \(\theta \) has a bounded first-order derivative with respect to t. Hence, e(t, z) is a Lipschitz continuous function on [0, T]; denote its Lipschitz coefficient by L. There exists \({\hat{t}} \in [0, T]\) such that

For any \(\Delta t\) such that

From Lipschitz continuity of e, we have

It means

From (4.2), we know \( L \Delta t \le \frac{ | e({\hat{t}}, z) | }{2}\); therefore,

Combining the above two inequalities, we know for any \(t \in [{\hat{t}} - \Delta t, {\hat{t}} + \Delta t]\),

We then have the following estimate. Without loss of generality, we assume \(T - {\hat{t}} \ge \frac{T}{2}\) (otherwise we use the other side \( [{\hat{t}} - \Delta t, {\hat{t}}]\) as the range of integration in the second inequality below),

which implies that for any \(t \in [0, T]\),

There exists \(\Psi _0\) such that for all \(\Psi < \Psi _0\), \(4\,L^{\frac{1}{3}} \Psi ^{\frac{1}{3}} \ge \frac{2 \sqrt{2 T}}{T} \Psi ^{\frac{1}{2}}\). Hence, there exists constant C such that
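Solving the inequality for \(\Psi \) shows that one may take \(\Psi _0 = 8 L^{2} T^{3}\). Indeed, \(4 L^{1/3}\Psi ^{1/3} \ge \frac{2\sqrt{2T}}{T}\Psi ^{1/2}\) is equivalent to \(\Psi ^{1/6} \le \sqrt{2T}\, L^{1/3}\). The short Python check below evaluates both sides. The helper names are ours, not the paper's.

```python
import math

def psi0(L, T):
    """Explicit threshold: for Psi <= 8 L^2 T^3 the cube-root term dominates."""
    return 8.0 * L ** 2 * T ** 3

def cube_root_term_dominates(psi, L, T):
    """Check 4 L^(1/3) Psi^(1/3) >= (2 sqrt(2T) / T) Psi^(1/2)."""
    lhs = 4.0 * L ** (1.0 / 3.0) * psi ** (1.0 / 3.0)
    rhs = 2.0 * math.sqrt(2.0 * T) / T * math.sqrt(psi)
    return lhs >= rhs
```

For example, with L = 3 and T = 2 the threshold is 576. Values of \(\Psi \) below it satisfy the inequality, and sufficiently larger values do not.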

Similar arguments prove the third inequality in (2.12); the second and fourth inequalities are trivial. \(\square \)

4.3 Proof of Theorem 2.6

The general structure of the proof is similar to that in Cecchin and Pelino [9], with one key difference. In Cecchin and Pelino [9], p satisfies a non-perturbed Kolmogorov forward equation and has its initial value in \(P(\Sigma )\) with nonnegative components. Here, \({\tilde{p}}\) satisfies a perturbed Kolmogorov forward equation (2.13), and its initial value is not necessarily in \(P(\Sigma )\) and may have negative components, so some a priori estimates in Cecchin and Pelino [9] do not apply to our case. We therefore add and subtract an extra term \(M_1\) such that \({\tilde{p}}(t) + M_1\) is nonnegative, and modify every step of the proof to estimate the extra terms introduced by \(M_1\). For completeness, we give the whole proof.

Note that the solution pair \(({\tilde{\theta }}, {\tilde{p}})\) to (2.13) is determined only by the initial time \(t_{0}\) and the initial value \({\tilde{p}}(t_{0})\). By studying the linearized system (4.11), we first prove that \({\tilde{\theta }}\) is well defined (Proposition 4.5), continuous with respect to \({\tilde{p}}(t_{0})\) (Proposition 4.2), and continuously differentiable with respect to \({\tilde{p}}(t_{0})\) (Theorem 4.7). We then prove that \({\tilde{\theta }}\) satisfies a PDE similar to the master equation in Cecchin and Pelino [9] (Theorem 4.8), and that this master equation, restricted to discrete grids of \(P(\Sigma )\), can be approximated by a backward ODE with extra error terms (Proposition 4.9). Finally, we estimate the difference between \(\theta \) and \({\tilde{\theta }}\) by comparing the two backward ODE systems, which concludes the proof.

Denote by \(\Vert x \Vert := \max _{1 \le z \le K} | x_{z} | \) the norm of x in \({\mathbb {R}}^{K}\) and by \(\Vert f \Vert := \max _{t \in [0, T]} \max _{1 \le z \le K} | f_{z}(t) |\) the norm of f in \({\mathcal {C}}^{0}([0, T]; {\mathbb {R}}^{K})\). Due to the perturbation terms in the ODE system (2.13), existence and uniqueness of its solution can no longer be guaranteed for every initial value \({\tilde{p}}(t_{0})\). However, under certain conditions on (2.13), we can establish existence and an a priori bound for the solution to (2.13).

Proposition 4.1

Given a constant \(M > 0\), define \(I_{p, M}:= [-M, 1+M]^{K}\) and

If functions \(\epsilon _i, i = 2, 4\) satisfy

where \(\frac{1}{N_{0}}:= \frac{1}{3} M e^{- \Lambda (M) T}\), then for any initial time \(t_{0} \in [0, T]\) and \({\tilde{p}}(t_{0}) \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\), the ODE system (2.13) has a solution \(({\tilde{\theta }}, {\tilde{p}})\), where

Moreover, \(({\tilde{\theta }}, {\tilde{p}})\) satisfies the following bounds on \([t_{0}, T]\), uniformly in the initial time \(t_{0} \in [0, T]\) and the initial value \({\tilde{p}}(t_{0})\).

Proof

Take an a priori candidate \({\bar{p}}\) that satisfies \({\bar{p}}(t) \in [-M, 1+M]^{K}\) for all \(t \in [t_{0}, T]\) and is Lipschitz continuous with Lipschitz coefficient bounded by L(M), where

and which starts from \({\bar{p}}(t_{0}) = {\tilde{p}}(t_{0}) \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\). With this \({\bar{p}}\), we solve the backward ODE in (2.13):

By an argument similar to that of [12, Proposition 2], the function \({\tilde{\theta }}(t)\) is bounded by a constant \(C_{G}(M)\). Note that \(C_{G}(M)\) is non-decreasing in M, hence \(\Lambda (M)\) is also non-decreasing in M. Since \({\bar{p}}(t_{0}) \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\), there exists \(p_{0} \in P(\Sigma )\) such that \({\bar{p}}(t_{0}) - p_{0} = \epsilon _{4}\) with \(\Vert \epsilon _{4} \Vert \le \frac{1}{N_{0}}\). Consider two functions \({\tilde{p}}\) and p satisfying

Integrating both equations and subtracting the equation for p from that for \({\tilde{p}}\), we get

By Gronwall's inequality, we have
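As an illustration of the type of bound Gronwall's inequality delivers, the Python sketch below integrates the scalar linear ODE \(u' = b(t) u + f(t)\) by forward Euler, with \(0 \le b \le B\), \(0 \le f \le F\) and \(u(0) \ge 0\), and checks the discrete Gronwall bound \(u(t) \le (u(0) + F t) e^{B t}\). The coefficients are hypothetical stand-ins for a Lipschitz constant such as \(\Lambda (M)\) and for the perturbation terms; this is not the specific estimate above.

```python
import math

def euler_path(u0, b, f, T, n):
    """Forward-Euler path of u'(t) = b(t) u(t) + f(t) on [0, T] with n steps."""
    h = T / n
    u, path = u0, [u0]
    for k in range(n):
        t = k * h
        u = u + h * (b(t) * u + f(t))
        path.append(u)
    return path, h

def gronwall_bound(u0, B, F, t):
    """Gronwall-type bound (u0 + F t) e^{B t}, valid when 0 <= b <= B, 0 <= f <= F and u0 >= 0."""
    return (u0 + F * t) * math.exp(B * t)
```

The discrete bound holds because \((1 + hB)^{k} \le e^{B k h}\), so every Euler iterate stays below the continuous Gronwall envelope.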

As p is the solution to a Kolmogorov equation, \(p(t) \in P(\Sigma )\). Hence, the solution \({\tilde{p}}(t) \in [-M, 1+M]^{K}\) for all \(t \in [t_{0}, T]\), and \({\tilde{p}}\) is also Lipschitz continuous with Lipschitz coefficient bounded by L(M), as \(\Vert \frac{\mathrm{d} {\tilde{p}}}{\mathrm{d} t} \Vert \le L(M)\).
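The fact that the Kolmogorov forward equation keeps p in \(P(\Sigma )\) can be seen on a discretization. The Python sketch below assumes a constant rate matrix Q, with nonnegative off-diagonal entries and rows summing to zero. This simplifies the state-dependent rates \(\lambda ^{*}\) in (2.13).

If the step h satisfies \(h \max _{y} |Q_{yy}| \le 1\), each Euler step multiplies p by the stochastic matrix \(I + hQ\). Nonnegativity and total mass are therefore preserved. An initial value outside \(P(\Sigma )\) keeps its total mass but need not stay nonnegative, which is consistent with the remark that \({\tilde{p}}\) may take negative values.

```python
def kolmogorov_euler(p0, Q, T, n):
    """Forward-Euler scheme for dp_z/dt = sum_y p_y Q[y][z] on [0, T] with n steps."""
    h = T / n
    K = len(p0)
    # With h * max|Q_yy| <= 1, I + hQ has nonnegative entries and unit row sums.
    if h * max(abs(Q[y][y]) for y in range(K)) > 1.0:
        raise ValueError("step too large: I + hQ would not be a stochastic matrix")
    p = list(p0)
    for _ in range(n):
        p = [p[z] + h * sum(p[y] * Q[y][z] for y in range(K)) for z in range(K)]
    return p
```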

Let \({\mathcal {F}}([t_{0}, T])\) be the set of Lipschitz continuous functions on \([t_{0}, T]\) with Lipschitz coefficient bounded by L(M), taking values in \([-M, 1+M]^{K}\) and starting from the same initial value \({\tilde{p}}(t_{0})\) at \(t_{0}\). We define a mapping \(\xi : {\mathcal {F}}([t_{0}, T]) \rightarrow {\mathcal {F}}([t_{0}, T])\) as follows. Given \({\tilde{p}} \in {\mathcal {F}}([t_{0}, T])\), let \({\tilde{\theta }}\) be the solution of the terminal value problem in (2.13); then \({\tilde{\theta }}(t)\) is bounded by \(C_{G}(M)\). Let \(\xi ({\tilde{p}})\) be the solution to the initial value problem in (2.13); by the argument above, \(\xi ({\tilde{p}}) \in {\mathcal {F}}([t_{0}, T])\). Following the proof of [12, Proposition 4], \({\mathcal {F}}([t_{0}, T])\) is a set of uniformly bounded and equicontinuous functions, so by the Arzelà–Ascoli theorem it is relatively compact. It is also closed under uniform convergence and convex. Hence, by the Schauder fixed point theorem (the extension of Brouwer's theorem to compact convex subsets of a Banach space), \(\xi \) has a fixed point, which proves the existence of a solution to (2.13). \(\square \)

We next prove that, under certain conditions, \(({\tilde{\theta }}, {\tilde{p}})\) is unique and depends continuously on the initial condition.

Proposition 4.2

There exist positive constants \(N_0\) and C, such that if we have condition (4.3), then for any \(t_0 \in [0, T]\) and initial condition \({\tilde{p}}(t_{0}) \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\), the solution to (2.13) is unique. Moreover, let \(({\tilde{\theta }}, {\tilde{p}})\) and \(({\hat{\theta }}, {\hat{p}})\) be two solutions to ODE system (2.13) with different initial conditions \({\tilde{p}}(t_{0}), {\hat{p}}(t_{0}) \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\), then

Proof

Start with any M and the corresponding \(N_0\) defined in Proposition 4.1. Then \({\tilde{\theta }}\) and \({\hat{\theta }}\) are both uniformly bounded by \(C_{G}(M)\). Let us first assume that \({\tilde{p}}_{z}(t), {\hat{p}}_{z}(t) \ge - M_1\) uniformly; we will choose \(M_1\) later and verify this assumption. As in the proof of [9, Proposition 5], we first estimate the left-hand side of (4.7) below. Define \(\phi := {\tilde{\theta }} - {\hat{\theta }}\) and \(\pi := {\tilde{p}} - {\hat{p}}\). Then the couple \((\phi , \pi )\) solves

Integrating \(\frac{\mathrm{d}}{\mathrm{d} t} \sum _{z \in \Sigma } \phi _{z}(t) \pi _{z}(t)\) over \([t_0, T]\), using the product rule and (4.5), and noting that \(\sum _{z} \lambda ^{*}_{z}(y, \Delta ^{y} {\tilde{\theta }}(t)) \phi _{y}(t) = 0\), a short computation gives

As \(\lambda ^{*}(z, \mu ) = D_{\mu } H(z, \mu )\), by Taylor's theorem there exists a point a on the segment between \(\Delta ^{z} {\tilde{\theta }}(t)\) and \(\Delta ^{z} {\hat{\theta }}(t)\) such that

Then, from assumption (2.4) and the same argument with the roles of \({\tilde{\theta }}\) and \({\hat{\theta }}\) exchanged, we obtain the following estimates:

Similarly, we have

Since \(\sum _{z \in \Sigma } \phi _{z}(T) \pi _{z}(T) \le 0\) by (2.6), \({\tilde{p}}_{z}(t), {\hat{p}}_{z}(t) > -M_1\) by the choice of \(M_1\), using (4.6) and the inequalities above, we have

By Lipschitz continuity of \(\lambda ^{*}\), there exists C such that

Note that, unlike in the proof of [9, Proposition 5], both \({\tilde{p}}_{z}\) and \({\hat{p}}_{z}\) can be negative in our setting. If they were nonnegative, we could choose \(M_1=0\) and the technique of [9, Proposition 5] would apply directly. Here we must introduce \(M_1\), which requires several additional a priori estimates.

We next derive the bound for \(\pi \). Integrating the second equation in (4.5) over \([t_{0}, t]\), we have

As \(\lambda ^{*}\) is both bounded and Lipschitz continuous, there exists C such that

where the second line holds because \({\tilde{p}}_{z}(s) + M_1 > 0\). Moreover, we have

Applying Gronwall's inequality and using \({\tilde{p}}_{z}(s) \in [-M, 1 + M]\), there exists C such that

where C depends only on the M in Proposition 4.1.

We next derive the bound for \(\phi \). Integrating the first equation in (4.5) over \([t_{0}, t]\), from the Lipschitz continuity of G, H, there exists C such that

Applying Gronwall's inequality, there exists a constant C such that

By combining (4.8) and (4.9) and using \(A B \le \varepsilon A^2 + \frac{1}{\varepsilon } B^2\) for \(A, B > 0\) and \(\varepsilon > 0\), there exists C such that
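For completeness, both forms of this elementary inequality used in this section (here and in the proof of Proposition 4.5) follow from completing the square. For any \(\varepsilon > 0\) and real A, B:

```latex
\begin{aligned}
0 &\le \Big(\sqrt{\varepsilon}\,A - \frac{B}{2\sqrt{\varepsilon}}\Big)^{2}
   = \varepsilon A^{2} - A B + \frac{B^{2}}{4\varepsilon}, \\
\text{hence}\quad A B &\le \varepsilon A^{2} + \frac{B^{2}}{4\varepsilon}
   \le \varepsilon A^{2} + \frac{B^{2}}{\varepsilon}.
\end{aligned}
```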

Since C depends only on the bounds and Lipschitz coefficients of H, G and \(\lambda ^{*}\), and on the bounds of \(D^2_{\mu \mu } H\), \({\tilde{\theta }}\) and \({\hat{\theta }}\), all of which depend on the M in Proposition 4.1, we only need to select \(M_1\) such that

to obtain (4.4). It then remains to choose a new \(N_0\) so that \({\tilde{p}}_{z}(t), {\hat{p}}_{z}(t) > -M_1\) uniformly, as assumed. From Proposition 4.1 (applied with \(M_1\) in place of M), it suffices to have \(N_0 \ge N_1:= \frac{3 e^{\Lambda (M_1) T}}{M_1}\), so we redefine \(N_0\) as \(\max \{N_0, N_1\}\). Finally, uniqueness of the solution follows directly from (4.4). \(\square \)

By Propositions 4.1 and 4.2, for any \(t \in [t_0, T]\) and any initial value \({\bar{p}}_{0} \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\) for the ODE system (2.13) at time t, there exists a unique solution \(({\bar{\theta }}(s), {\bar{p}}(s))\) on [t, T]. Note that \({\bar{\theta }}(s)\) need not equal the solution \({\tilde{\theta }}(s)\) of (2.13) in Theorem 2.6, since \({\bar{\theta }}\) depends on the initial time t and the initial condition \({\bar{p}}_{0}\) chosen above. We can therefore define a function \({\tilde{U}}\) for \(t \in [t_{0}, T]\) and \({\tilde{p}}_{0} \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\) by the corresponding value \({\bar{\theta }}(t)\) described above.

By Propositions 4.1 and 4.2, \({\tilde{U}}\) is well defined and continuous with respect to \({\tilde{p}}_{0}\). Moreover, for the solution \(({\tilde{\theta }}, {\tilde{p}})\) to (2.13) in Theorem 2.6 on \([t_{0}, T]\), i.e., the approximate solution obtained from the DNN whose error we want to estimate, we have for all \(t \in [t_{0}, T]\):

This shows that \({\tilde{U}}\) encodes all the information in \({\tilde{\theta }}\). If we can compare \({\tilde{U}}\) with the function U defined similarly in Cecchin and Pelino [9] for the true solution to (2.2), we can estimate the error of \({\tilde{\theta }}\). To compare \({\tilde{U}}\) with U, we need to prove that \({\tilde{U}}\) also satisfies a master equation similar to that of U in Cecchin and Pelino [9]. To this end, we prove the continuous differentiability of \({\tilde{U}}\) in the following steps. We first define the derivative of \({\tilde{U}}\) with respect to the vector \({\tilde{p}}_0\) as in Cecchin and Pelino [9], via the operator \(D^{y}\) defined as follows.

Definition 4.3

For \(y \in \Sigma \) and a function \(U: {\mathbb {R}}^{K} \rightarrow {\mathbb {R}}\), define the operator \(D^{y} U: {\mathbb {R}}^{K} \rightarrow {\mathbb {R}}^K\) by

where \(D^{y} U(p) = ([D^{y} U(p)]_{1}, \ldots , [D^{y} U(p)]_{K})\), and \(\delta _{z} \in {\mathbb {R}}^{K}\) denotes the vector whose entries are all 0 except the z-th, which equals 1.

Noting that \(\mu = \sum _{z \ne 1} \mu _{z} (\delta _{z} - \delta _{1}) + (\sum _{z = 1}^{K} \mu _{z}) \delta _{1}\), if \({\tilde{U}}\) is differentiable, the linearity of the directional derivative yields the following lemma.

Lemma 4.4

Define the derivative of a function U(p) along a direction \(\mu \in {\mathbb {R}}^{K}\) as the map \(\frac{\partial }{\partial \mu } U: {\mathbb {R}}^{K} \rightarrow {\mathbb {R}}\),

It satisfies

where \(\frac{\partial }{\partial \delta _{1}} U\) is the first component of the gradient of U, and \(D U(p):= D^{1} U(p)\) for notational simplicity. When \(\sum _{z = 1}^{K} \mu _{z} = 0\), for any \(y \in \Sigma \), we have
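Lemma 4.4 can be checked numerically with central finite differences. The Python sketch below assumes the convention \([D^{y} U(p)]_{z} = \frac{\partial U}{\partial (\delta _{z} - \delta _{y})}(p)\), which is consistent with the decomposition of \(\mu \) above. It uses a hypothetical smooth test function U and 0-based indices, so index 0 plays the role of state 1.

```python
import math

def U(p):
    # Hypothetical smooth test function on R^3 (not from the paper).
    return math.sin(p[0]) * p[1] + math.exp(p[2]) - p[0] * p[2]

def delta(z, K):
    """Unit vector delta_z in R^K (0-based index z)."""
    return [1.0 if i == z else 0.0 for i in range(K)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def ddir(f, p, mu, h=1e-6):
    """Central-difference approximation of the derivative of f at p along mu."""
    plus = [pi + h * mi for pi, mi in zip(p, mu)]
    minus = [pi - h * mi for pi, mi in zip(p, mu)]
    return (f(plus) - f(minus)) / (2.0 * h)

def D(f, p, y):
    """[D^y f(p)]_z as the derivative along delta_z - delta_y (assumed convention)."""
    K = len(p)
    dy = delta(y, K)
    return [ddir(f, p, [a - b for a, b in zip(delta(z, K), dy)]) for z in range(K)]

def lemma_rhs(f, p, mu):
    """D f(p) . mu + (sum of mu) * df/d(delta_1), with D = D^1 (index 0 here)."""
    return dot(D(f, p, 0), mu) + sum(mu) * ddir(f, p, delta(0, len(p)))
```

For a general direction, `ddir(U, p, mu)` agrees with `lemma_rhs(U, p, mu)`. For a direction with zero component sum, it agrees with `dot(D(U, p, y), mu)` for every y.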

To characterize the directional derivative of \({\tilde{U}}\) with respect to \({\tilde{p}}_{0}\), given \({\tilde{\theta }}\) and \({\tilde{p}}\), we define a linear ODE system for \((u, \rho )\), similar to [9, Equation (80)], which will be used repeatedly below.

As in [9, Equation (80)], \(D_{\mu } \lambda ^{*}_{z}(y, \Delta ^{y} {\tilde{\theta }}(t))\) is the gradient of \(\lambda ^{*}_{z}\) with respect to its second variable in \({\mathbb {R}}^{K}\). The unknowns are u and \(\rho \), while b, c, \(u_{T}\), \(\rho _{0}\) are given measurable functions, with c satisfying \(\sum _{z = 1}^{K} c(t, z) = 0\). In fact, (4.11) is a generalization of [9, Equation (80)]: the terminal condition for \(u_{z}(T)\) in (4.11) involves directional derivatives along an arbitrary direction, whereas [9, Equation (80)] involves directional derivatives along specific directions only.

We first prove in Proposition 4.5 that the linear system (4.11) has a unique solution, which is bounded linearly in terms of its initial and terminal data.

Proposition 4.5

There exist positive constants \(N_0\) and C such that, if (4.3) holds and \({\tilde{p}}(t_0) \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\), then for any measurable functions b, c and any vector \(u_{T}\), the linear system (4.11) has a unique solution \((u, \rho )\). Moreover, it satisfies

Proof

We only treat the case \(t_{0} = 0\); the case of a general \(t_{0} \in [0, T]\) follows by the same argument.

We first take \(N_0\) larger than the one in Proposition 4.2. As in the proof of Proposition 4.2, to cope with the possible negativity of \({\tilde{p}}\), we first assume \({\tilde{p}}_{z}(t) \ge - M_1\) uniformly with \(M_1 \le M\); we will later choose \(M_1\) small enough and find \(N_0\) such that this holds. As \(\sum _{z \in \Sigma } \lambda ^{*}_{z}(y, \Delta ^{y} {\tilde{\theta }}(t)) = 0\), we have \(\sum _{z, y \in \Sigma } {\tilde{p}}_{y}(t) D_{\mu } \lambda ^{*}_{z}(y, \Delta ^{y} {\tilde{\theta }}(t)) \cdot \Delta ^{y} u(t) = 0\), and \(\sum _{z \in \Sigma } \frac{\mathrm{d} \rho _{z}(t)}{\mathrm{d} t} = 0\). Hence, for any \(t \in [0, T]\), we have

Define the set \(P_{\eta }(\Sigma )\) as

We define a map \(\xi \) from \({\mathcal {C}}^{0}([0, T]; P_{\eta }(\Sigma ))\) to itself as follows: for a fixed \(\rho \in {\mathcal {C}}^{0}([0, T]; P_{\eta }(\Sigma ))\), we consider the solution \(u = u(\rho )\) to the backward ODE for u in (4.11), and define \(\xi (\rho )\) to be the solution to the forward ODE for \(\rho \) in (4.11) with \(u = u(\rho )\). By (4.13), \(\xi (\rho )\) is well defined, since \(\xi (\rho )(t) \in P_{\eta }(\Sigma )\) for any t.

As in the proof of [9, Proposition 6], the solution to (4.11) is a fixed point of the mapping \(\xi \). We prove its existence by Schaefer's fixed point theorem, which asserts that a continuous and compact mapping \(\xi \) of a Banach space X into itself has a fixed point if the set \(\{\rho \in X: \rho = \omega \xi (\rho ), \omega \in [0, 1]\}\) is bounded. First, \(\xi \) is continuous because the system (4.11) is linear in u and \(\rho \). \({\mathcal {C}}^{0}([0, T]; P_{\eta }(\Sigma ))\) is a convex subset of the Banach space \({\mathcal {C}}^{0}([0, T]; {\mathbb {R}}^{K})\). Moreover, by the linearity and bounded coefficients of (4.11), \(\xi \) maps any bounded subset of \({\mathcal {C}}^{0}([0, T]; P_{\eta }(\Sigma ))\) into a set of uniformly bounded functions in \({\mathcal {C}}^{1}([0, T]; P_{\eta }(\Sigma ))\) with a uniform Lipschitz coefficient, which is relatively compact by the Arzelà–Ascoli theorem. Hence \(\xi \) is a compact map. To apply Schaefer's fixed point theorem, it therefore remains to prove that the set \(\{ \rho : \rho = \omega \xi (\rho ) \}\) is bounded uniformly in \(\omega \in [0, 1]\). We may restrict to \(\omega > 0\), since otherwise \(\rho = 0\). Fix \(\rho \) such that \(\rho = \omega \xi (\rho )\); then the couple \((u(\rho ), \xi (\rho ))\) solves (for notational simplicity we suppress the dependence on \(\rho \))

We need to prove that such solutions \((u, \xi )\), if they exist, are bounded uniformly in \(\omega \in (0, 1]\). For notational simplicity, we omit the dependence of \(\lambda ^{*}\) on the second variable. From (4.14),

The first line is 0 by exchanging z and y in the second double sum and using (2.3). Integrating over [0, T] and using the expression of \(u_{z}(T)\), we have

Reorganizing the terms, we get

From assumption on G in (2.6) and definition of directional derivative, we have

Moreover, as \(\lambda ^{*}(y) = D_{\mu } H(y)\) (we also suppress the dependence of H on the second variable),

Since \({\tilde{p}}\) can be negative, the step in [9, Proposition 6] that estimates the right-hand side above does not apply. However, as \({\tilde{p}}_{y}(t) + M_1 \ge 0\) for all \(y \in \Sigma \), we can use (2.4) to rewrite the right-hand side and obtain the following estimate instead.

Hence there exist constants C and \(C_1\) (\(C_1\) depends only on the dimension of u) such that

where \(b(t):= (b(t, 1), \ldots , b(t, K))\), and c(t) is defined similarly. As \(\lambda ^{*}\) and \(D_{\mu } \lambda ^{*}\) are bounded by a constant C, the ODE for \(\xi \) in (4.14) gives

Hence, by Gronwall's inequality, there exists a constant C such that

where there exists C such that \(\sum _{y \in \Sigma } ({\tilde{p}}_{y}(t) + M_1) \le C^2 \) and

From (4.15), with a possibly different constant C in each line, we have

Moreover, applying Gronwall's inequality to the backward ODE in (4.14) for u, there exists C such that

Then there exists C such that

As \(\Vert \xi (T) \Vert \le \Vert \xi \Vert \), using the inequality \(A B \le \varepsilon A^2 + \frac{1}{4 \varepsilon } B^2\) for \(A, B \ge 0\) and \(\varepsilon > 0\), there exists C such that

Note that the constant C depends only on the bound of \({\tilde{\theta }}\), which in turn depends on the M in Proposition 4.1. If

then we have

Hence the pair \((u, \xi )\) is bounded uniformly in \(\omega \in [0, 1]\), so \(\rho = \omega \xi (\rho )\) is uniformly bounded as well, which proves the existence of a solution to (4.11). Taking \(\omega = 1\) yields the uniform bound for the solution \((u, \rho )\) of (4.11), and its uniqueness follows directly from (4.12). Finally, if \(N_0 > \frac{3 e^{\Lambda (M_1) T}}{M_1}\), Proposition 4.1 gives \({\tilde{p}}_{y}(t) > -M_1\) uniformly, so it suffices to enlarge the previously chosen \(N_{0}\) to satisfy this inequality, which concludes the proof. \(\square \)

We can now prove the differentiability of \({\tilde{U}}\) with respect to \({\tilde{p}}_{0}\) in Proposition 4.6.

Proposition 4.6

Let \(({\tilde{\theta }}, {\tilde{p}})\) and \(({\hat{\theta }}, {\hat{p}})\) be the solutions to ODE system (2.13), respectively, starting from \((t_0, {\tilde{p}}(t_0))\) and \((t_0, {\hat{p}}(t_0))\), and \((v, \zeta )\) be the solution to (4.11) starting from \(\rho _0:= {\hat{p}}(t_0) - {\tilde{p}}(t_0)\). There exist positive constants \(N_0\) and C, such that if we have (4.3), then for any \(t_0 \in [0, T]\) and \({\tilde{p}}(t_0), {\hat{p}}(t_0) \in {\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\), we have

Proof

Without loss of generality, we assume \(t_{0} = 0\). As in the proof of [9, Theorem 7], the conclusion follows from Proposition 4.5. Define \(N_0\) as in Proposition 4.5. Then \({\tilde{p}}_{y}(t), {\hat{p}}_{y}(t) > - M_1\) uniformly in \((t, y) \in [0, T] \times \Sigma \). Define the linearized system with \(w:= {\hat{p}}(0) - {\tilde{p}}(0) \):

From the conditions in Theorem 2.6, the components of \({\tilde{p}}\) sum to 1 for all \(t \in [0, T]\). Hence \(\sum _{z \in \Sigma } \epsilon _{2}(t,z) = 0\), and we define

There exists C such that \(|S({\hat{p}}, {\tilde{p}})| \le C \Vert {\hat{p}}(T) - {\tilde{p}}(T) \Vert \). Then \(u:= {\hat{\theta }} - {\tilde{\theta }} - v\) and \(\rho := {\hat{p}} - {\tilde{p}} - \zeta \) solve (4.11) with

From (2.3), \(\sum _{z \in \Sigma } c(t, z) = 0\). The existence and uniqueness of solution to (4.16) is guaranteed by Proposition 4.5. We can simplify b and c as

Moreover, since

we have

From Proposition 4.1, \({\tilde{\theta }}\), \({\tilde{p}}\), \({\hat{\theta }}\) and \({\hat{p}}\) are bounded. By Assumption 2.1, namely the Lipschitz continuity of \(D_{\mu } H\), \(D_{\mu } \lambda ^{*}\), \(\frac{\partial G}{\partial \delta _{1}}\) and \(D^{1} G\) in their second variable, there exists a constant C such that

Applying Proposition 4.5 and then Proposition 4.2, there exists C such that

which concludes the proof. \(\square \)

Since (4.16) is a linear system, v and \(\zeta \) in (4.16) depend linearly on w. Hence, Proposition 4.6 shows that \({\tilde{U}}\) is differentiable with respect to \({\tilde{p}}_{0}\), and the directional derivative \(\frac{\partial }{\partial w} {\tilde{U}}(t, z, {\tilde{p}})\) is given by the solution to the ODE system (4.16), with \({\tilde{\theta }}_{z}(t) = {\tilde{U}}(t, z, {\tilde{p}}(t))\).

Theorem 4.7

There exist positive constants \(N_0\) and C, such that if we have (4.3), \({\tilde{U}}\) is differentiable on \(B(P(\Sigma ), \frac{1}{N_{0}})\), and for any vector w, \(\frac{\partial }{\partial w} {\tilde{U}}(t, z, {\tilde{p}}(t))\) exists and is Lipschitz continuous w.r.t \({\tilde{p}}\), uniformly in t, z. \(\frac{\partial }{\partial w} {\tilde{U}}(t, z, {\tilde{p}}(t))\) is also continuous w.r.t t.

Proof

Define \(N_0\) as the one in Proposition 4.5. Let \(({\tilde{\theta }}, {\tilde{p}})\) and \(({\hat{\theta }}, {\hat{p}})\) be two solutions to (2.13), with initial conditions \({\tilde{p}}(t_0), {\hat{p}}(t_0) \in B(P(\Sigma ), \frac{1}{N_{0}})\). Let also \(({\tilde{v}}, {\tilde{\zeta }})\) and \(({\hat{v}}, {\hat{\zeta }})\) characterize \(\frac{\partial }{\partial w} {\tilde{U}}(t_{0}, z, {\tilde{p}}(t_{0}))\) and \(\frac{\partial }{\partial w} {\tilde{U}}(t_{0}, z, {\hat{p}}(t_{0}))\), respectively. Then \(({\tilde{v}}, {\tilde{\zeta }})\) satisfies the following.

From Proposition 4.5, the uniform bounds on \({\tilde{v}}\) and \({\tilde{\zeta }}\) depend linearly on the norm of w. The same holds for \(({\hat{v}}, {\hat{\zeta }})\), with \(({\tilde{\theta }}, {\tilde{p}})\) replaced by \(({\hat{\theta }}, {\hat{p}})\). Set \(u:= {\tilde{v}} - {\hat{v}}\) and \(\rho := {\tilde{\zeta }} - {\hat{\zeta }}\). They solve the linear system (4.11) with \(\rho (t_{0}) = 0\) and

Using the Lipschitz continuity of \(\lambda ^{*}\), \(D_{\mu } \lambda ^{*}\) and directional derivatives of G, applying the bounds (4.12) to \({\hat{v}}\) and \({\hat{\zeta }}\), and the estimation on \(\Vert {\tilde{\theta }} - {\hat{\theta }} \Vert \), \(\Vert {\tilde{p}} - {\hat{p}} \Vert \) from Proposition 4.2, there exists C such that

From Proposition 4.5, we have

From Proposition 4.6, we have

Therefore, \(\frac{\partial {\tilde{U}}}{\partial w}\) is Lipschitz continuous, uniformly in t and z.

On the other hand, for another initial time \(t_1 > t_0\), we first compare \(\frac{\partial }{\partial w} {\tilde{U}}(t_{0}, z, {\tilde{p}}(t_{0}))\) and \(\frac{\partial }{\partial w} {\tilde{U}}(t_{1}, z, {\tilde{p}}(t_{1}))\), where \((t_{1}, {\tilde{p}}(t_{1}))\) lies on the path \((t, {\tilde{p}}(t))\) from \(t_{0}\) to T. Both are characterized by a system like (4.17), with \(t_0\) replaced by \(t_1\) for \(\frac{\partial }{\partial w} {\tilde{U}}(t_{1}, z, {\tilde{p}}(t_{1}))\). Let \(({\tilde{v}}, {\tilde{\zeta }})\) satisfy (4.17). Then we know

\(\frac{\partial }{\partial {\tilde{\zeta }}(t_1)} {\tilde{U}}(t_{1}, z, {\tilde{p}}(t_{1}))\) is also characterized by (4.17), except that \(t_0\) and the initial value are replaced by \(t_1\) and \({\tilde{\zeta }}(t_1)\). Consequently, \(\frac{\partial }{\partial {\tilde{\zeta }}(t_1)} {\tilde{U}}(t_{1}, z, {\tilde{p}}(t_{1})) - \frac{\partial }{\partial w} {\tilde{U}}(t_{1}, z, {\tilde{p}}(t_{1}))\) is characterized by (4.17) with \(t_0\) and the initial value replaced by \(t_1\) and \({{\tilde{\zeta }}}(t_1) - w\). From Proposition 4.5, there exists a constant C such that

As \(\lambda ^{*}\), \(D_{\mu } \lambda ^{*}\) and the directional derivatives of G are Lipschitz continuous and uniformly bounded, and \({\tilde{v}}\) and \({\tilde{\zeta }}\) are uniformly bounded, it follows that \(\frac{\mathrm{d} {\tilde{v}}_{z}(t)}{\mathrm{d} t}\) and \(\frac{\mathrm{d} {\tilde{\zeta }}_{z}(t)}{\mathrm{d} t}\) are uniformly bounded by some constant C. We have

Combining the above, there exists a constant C such that

Then by the continuity of \(\frac{\partial }{\partial w} {\tilde{U}}\) w.r.t its third argument, as well as the continuity of \({\tilde{p}}\), we can also conclude that \(\frac{\partial }{\partial w} {\tilde{U}}\) is continuous w.r.t t, its first argument. \(\square \)

From Proposition 4.6 and Theorem 4.7, \({\tilde{U}}\) is \({\mathcal {C}}^{1}\) on the compact set \({\bar{B}}(P(\Sigma ), \frac{1}{N_{0}})\). Hence, both \(D {\tilde{U}}\) and the directional derivative of \({\tilde{U}}\) along any direction are well defined, bounded and Lipschitz continuous, uniformly in \(t \in [0, T]\). Theorem 4.7 also shows that the directional derivative of \({\tilde{U}}\) along any direction is continuous in t. Thanks to these properties, we can adapt the proof of existence of a solution to the master equation in [9, Section 5.3.1] to show that \({\tilde{U}}\) also satisfies the master equation up to some extra error terms.

Theorem 4.8

Let \(({\tilde{\theta }}, {\tilde{p}})\) be the solution to the ODE system (2.13), and define \({\tilde{U}}\) as in (4.10). There exist positive constants \(N_0\) and C such that, if condition (4.3) in Theorem 2.6 holds, then \({\tilde{U}}\) satisfies the following master equation along the path \((t, {\tilde{p}}(t))\) on \([t_{0}, T]\), as long as \({\tilde{p}}(t) \in B(P(\Sigma ), \frac{1}{N_{0}})\).

where \(\Delta ^{z} {\tilde{U}}:= ( {\tilde{U}}(t, 1, {\tilde{p}}(t)) - {\tilde{U}}(t, z, {\tilde{p}}(t)), \ldots , {\tilde{U}}(t, K, {\tilde{p}}(t)) - {\tilde{U}}(t, z, {\tilde{p}}(t)) )\) and \(\Vert \epsilon \Vert < \frac{C + 1}{N}\), where \(N > N_{0}\) and C is the constant in the uniform bound of Proposition 4.2.

Proof

From the conditions in Theorem 2.6, \({\tilde{p}}(t) \in B(P(\Sigma ), \frac{1}{N_{0}})\) for every \(t \in [t_{0}, T]\), where \(B(P(\Sigma ), \frac{1}{N_{0}})\) is the open neighborhood of \(P(\Sigma )\). Hence, by Propositions 4.1, 4.2 and Theorem 4.7, \({\tilde{U}}\), \(D {\tilde{U}}\) and \(\frac{\partial }{\partial \delta _{1}} {\tilde{U}}\) are well defined along \((t, {\tilde{p}}(t))\). Taking t as the initial time and \({\tilde{p}}(t)\) as the initial value, (2.13) has a unique solution, and we can choose h small enough that this solution still lies in the neighborhood at time \(t + h\), i.e., \({\tilde{p}}(t + h) \in B(P(\Sigma ), \frac{1}{N_{0}})\). Note that, as \(\sum _{z \in \Sigma } \epsilon _{2}(t, z) = 0\) for all \(t \in [t_{0}, T]\), we have

Let us first compute the limit of the following quantity as h tends to 0.

For the first term in (4.19), we first define

By definition, the derivative of W is

Then the first term in (4.19) can be reformulated as

From Lemma 4.4 and \(c(h) = \sum _{z = 1}^{K} ({\tilde{p}}_{z}(t + h) - {\tilde{p}}_{z}(t)) = 0\), we know

Substituting the above into the first term in (4.19), we get

where \(\epsilon _{2}(t):= (\epsilon _{2}(t, 1), \ldots , \epsilon _{2}(t, K))\). From Theorem 4.7, we know that for any \(y \in \Sigma \),

As \(D {\tilde{U}}\) is uniformly bounded, the dominated convergence theorem gives

On the other hand, dividing by h and letting \(h \rightarrow 0\), we have

The last equality follows from the definition of \({\tilde{U}}\), which gives \(\Delta ^{y} {\tilde{U}} = \Delta ^{y} {\tilde{\theta }}(t)\).

For the second term in (4.19), the definition of \({\tilde{U}}\) gives

and hence,

Combining the results for the first and second terms in (4.19) and letting \(h \rightarrow 0\), we have

As \(\Vert D {\tilde{U}}(t, z, {\tilde{p}}(t)) \Vert \le C\) uniformly and \(\Vert \epsilon _2(t) \Vert \le \frac{1}{N}\), we know

Hence, defining \(\epsilon (t, z):= \epsilon _1(t, z) - D {\tilde{U}}(t, z, {\tilde{p}}(t)) \cdot \epsilon _2(t)\) concludes the proof. \(\square \)

Thus the DNN approximation \(({\tilde{\theta }}, {\tilde{p}})\) is characterized by (4.18), while the true solution \((\theta , p)\) of the MFG is characterized by a similar equation in which \(\epsilon \) and \(\epsilon _{3}\) vanish. Although both master equations are backward PDEs, it is still difficult to compare their solutions directly. We therefore approximate the two PDEs by two ODE systems on discrete grids of \(P(\Sigma )\).

Define \(P^{N}(\Sigma ) = \{ (\frac{n_{1}}{N}, \ldots , \frac{n_{K}}{N}): \quad \sum _{z = 1}^{K} n_{z} = N, \ n_{z} \in {\mathbb {Z}}, \ n_{z} \ge 0\}\). Then \(P^{N}(\Sigma )\) is a discrete grid of \(P(\Sigma )\). For any \(p^{N} \in P^{N}(\Sigma )\), define the operators
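The grid \(P^{N}(\Sigma )\) is finite, with \(\binom{N + K - 1}{K - 1}\) points by the stars-and-bars count. The Python sketch below enumerates it using exact rational coordinates. The helper is hypothetical and serves only to make the definition concrete.

```python
from fractions import Fraction
from itertools import combinations
from math import comb

def grid(N, K):
    """All points (n_1/N, ..., n_K/N) with nonnegative integers n_z summing to N."""
    pts = []
    # Stars and bars: choose K - 1 bar positions among N + K - 1 slots.
    for bars in combinations(range(N + K - 1), K - 1):
        prev = -1
        counts = []
        for b in bars:
            counts.append(b - prev - 1)  # stars between consecutive bars
            prev = b
        counts.append(N + K - 2 - prev)  # stars after the last bar
        pts.append(tuple(Fraction(n, N) for n in counts))
    return pts
```

For instance, `grid(4, 3)` returns the 15 points of \(P^{4}(\Sigma )\) for three states, each with nonnegative coordinates summing to one.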

With the discrete grid and discrete operators defined above, we next show in Proposition 4.9 that the master equation can be approximated by a backward ODE system.

Proposition 4.9

There exists \(N_0\) such that for \(N > N_{0}\), every \(p^{N} \in P^{N}(\Sigma )\) and \(z \in \Sigma \), \({\tilde{U}}\) solves

where \({\tilde{\epsilon }}^{N} \in {\mathcal {C}}^{0}([0, T] \times \Sigma \times P^{N}(\Sigma ))\), \(\Vert {\tilde{\epsilon }}^{N} \Vert \le \frac{C}{N}\).

Proof

From Theorem 4.8, there exists a constant \(N_0\) such that, when \(N > N_0\) and (4.3) holds, \({\tilde{U}}\) satisfies (4.18) when evaluated at the point \((t, z, p^{N})\).

This equation resembles (4.21), except that it involves the differential operator \(D^{y}\) where (4.21) involves the discrete operator \(\Delta ^{N, y}\). We therefore compare the two operators, following [9, Proposition 3]. We first consider the first component of \(\Delta ^{N, y} {\tilde{U}}(t, z, p^{N})\) defined in (4.20), corresponding to the direction \(\delta _{1} - \delta _{y}\),

where the last equality follows from the Lipschitz continuity of \(D^{y} {\tilde{U}}\) in \(p \in P(\Sigma )\). Since the same argument applies to every component of \(\Delta ^{N, y} {\tilde{U}}(t, z, p^{N})\), there exists \(N_0\) such that for \(N > N_{0}\),

where \(\epsilon ^{N, y} \in {\mathcal {C}}^{0}([0, T] \times \Sigma \times P^{N}(\Sigma ); {\mathbb {R}}^{K})\), \(\Vert \epsilon ^{N, y} \Vert \le \frac{C}{N^2}\).

Hence, we have

where

From the Lipschitz continuity of H and \(\lambda ^{*}\) and the boundedness of \({\tilde{U}}\), there exists a constant C such that

From Proposition 4.2, there exists a constant C such that

From Lemma 4.4 and \(\sum _{z \in \Sigma } \lambda ^{*}_{z}(y, \Delta ^{y} {\tilde{U}}) = 0\) for every \(y \in \Sigma \), we have

It follows that

From the boundedness of \(\lambda ^{*}\) and \(\epsilon \), there is a constant C such that

We can conclude the proof by defining

\(\square \)
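The operator comparison in the proof above rests on a first-order Taylor expansion: if \(D^{y} {\tilde{U}}\) is Lipschitz in p, a step of size \(\frac{1}{N}\) along \(\delta _{z} - \delta _{y}\) differs from \(\frac{1}{N}\) times the directional derivative by \(O(\frac{1}{N^{2}})\). The Python sketch below assumes the unscaled form \([\Delta ^{N, y} U(p)]_{z} = U(p + \frac{1}{N}(\delta _{z} - \delta _{y})) - U(p)\), which is consistent with the \(\frac{C}{N^{2}}\) bound on \(\epsilon ^{N, y}\), and takes \([D^{y} U(p)]_{z}\) to be the derivative of U along \(\delta _{z} - \delta _{y}\). The test function `U` is hypothetical. Doubling N should reduce the maximal error by a factor of about 4.

```python
import math


def U(p):
    # Hypothetical smooth function of p in P(Sigma), K = 3, standing in for U~(t, z, .).
    return math.exp(p[0]) * p[1] + p[2] ** 3


def grad_U(p):
    # Exact gradient of U with respect to (p_1, p_2, p_3).
    return [math.exp(p[0]) * p[1], math.exp(p[0]), 3.0 * p[2] ** 2]


def discrete_op(f, p, y, N):
    """Assumed Delta^{N,y} f(p): z-th entry f(p + (e_z - e_y)/N) - f(p)."""
    out = []
    for z in range(len(p)):
        q = list(p)
        q[z] += 1.0 / N  # move mass 1/N from state y to state z
        q[y] -= 1.0 / N
        out.append(f(q) - f(p))
    return out


def directional_op(grad, p, y):
    """D^y f(p): z-th entry is the derivative of f along e_z - e_y."""
    g = grad(p)
    return [g[z] - g[y] for z in range(len(p))]


def max_error(p, y, N):
    """max_z |[Delta^{N,y} U(p)]_z - [D^y U(p)]_z / N|."""
    d, D = discrete_op(U, p, y, N), directional_op(grad_U, p, y)
    return max(abs(a - b / N) for a, b in zip(d, D))


p, y = (0.3, 0.3, 0.4), 1
for N in (50, 100, 200, 400):
    print(N, max_error(p, y, N), N ** 2 * max_error(p, y, N))  # last column stays bounded
```

Multiplying such an \(O(\frac{1}{N^{2}})\) error by the factor N that appears in the ODE system leaves an \(O(\frac{1}{N})\) remainder, matching the order of \({\tilde{\epsilon }}^{N}\) in Proposition 4.9.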

Finally, we proceed to the proof of our main result. The main idea is to characterize both the DNN approximation \(({\tilde{\theta }}, {\tilde{p}})\) and the true solution \((\theta , p)\) by their corresponding master equations, which are further approximated by two backward ODE systems on a discrete grid. The error between the two can then be estimated directly at the grid points using the Gronwall inequality. As both \(({\tilde{\theta }}, {\tilde{p}})\) and \((\theta , p)\) are uniformly Lipschitz continuous with respect to their initial conditions, the error at points off the grid can also be estimated.

Completion of the proof of Theorem 2.6

Since the ODE system (2.2) admits a solution for any initial value \(p_{0} \in P(\Sigma )\), we can define

From Cecchin and Pelino [9], U satisfies the master equation for any \(p \in P(\Sigma )\):

Similarly to the proof of Proposition 4.9, \(U(t, z, p^{N})\) satisfies the ODE system

where \(\epsilon ^{N} \in {\mathcal {C}}^{0}([0, T] \times \Sigma \times P^{N}(\Sigma ))\), \(\Vert \epsilon ^{N} \Vert \le \frac{C}{N}\). From (4.21) and (4.22), there exists \(N_0\) such that when \(N > N_0\) and (4.3) holds, we have

From Proposition 4.1, both U and \({\tilde{U}}\) are bounded. Hence, H and \(\lambda ^{*}\) are Lipschitz continuous w.r.t. their second variable on the relevant bounded set. Define

There exists a constant C such that

As \(p^{N} \in P^{N}(\Sigma )\), there exists a constant C such that

Applying the Gronwall inequality, we obtain a constant C such that for every \(t \in [0, T]\), \(z \in \Sigma \) and \(p^{N} \in P^{N}(\Sigma )\),

For \(N > 2 N_{0}\), where \(N_{0}\) is defined in Proposition 4.9, if \({\tilde{p}} \in B(P(\Sigma ), \frac{1}{N})\), there is \(p \in P(\Sigma )\) such that \({\tilde{p}} = p + \epsilon _{4}\) with \(\Vert \epsilon _{4} \Vert < \frac{1}{N}\). Moreover, there exists \(p^{N} \in P^{N}(\Sigma )\) such that

From Proposition 4.1, \({\tilde{U}}(t, z, {\tilde{p}})\) is well defined, and from Proposition 4.2, there exists a constant C independent of N and p such that for every \(t \in [0, T]\) and \(z \in \Sigma \),

Combining the above inequalities with (4.23), we have \(| {\tilde{U}}(t, z, {\tilde{p}}) - U(t, z, p) | \le \frac{C}{N}\) for some constant C independent of N and p, which is equivalent to

Using the uniform boundedness and Lipschitz continuity of \(\lambda ^{*}\), together with the Gronwall inequality and a technique similar to the proof of Proposition 4.2, we can prove that p and \({\tilde{p}}\) are Lipschitz continuous w.r.t. \(\theta \) and \({\tilde{\theta }}\), respectively. Note also that the Lipschitz constant depends only on the uniform bound and the Lipschitz constant of \(\lambda ^{*}\), which in turn depend only on the constant M given in Proposition 4.1. Hence, there exists a constant C independent of N such that

This concludes the proof. \(\square \)
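For reference, the backward-in-time Gronwall inequality applied in the proof above can be stated in the following generic form. The constants \(a, b, L \ge 0\) are placeholders for illustration: we would expect L to come from the Lipschitz constants of H and \(\lambda ^{*}\), and a and b to be of order \(\frac{1}{N}\), as suggested by the bounds \(\Vert \epsilon ^{N} \Vert , \Vert {\tilde{\epsilon }}^{N} \Vert \le \frac{C}{N}\); this instantiation is an assumption.

```latex
\begin{aligned}
&\text{If } e : [0, T] \to [0, \infty) \text{ is continuous and }
  e(t) \le a + \int_t^T \bigl( L\, e(s) + b \bigr)\, ds \quad \text{for all } t \in [0, T],\\
&\text{set } \tau = T - t,\ f(\tau) = e(T - \tau):\quad
  f(\tau) \le (a + b\tau) + L \int_0^\tau f(r)\, dr .\\
&\text{Since } \tau \mapsto a + b\tau \text{ is nondecreasing, the classical Gronwall lemma gives}\\
&\qquad e(t) \le \bigl( a + b\,(T - t) \bigr)\, e^{L (T - t)} \le (a + bT)\, e^{LT}.\\
&\text{In particular, } a, b \le \tfrac{C}{N} \ \Longrightarrow\ \sup_{t \in [0, T]} e(t) \le \tfrac{C (1 + T)\, e^{LT}}{N}.
\end{aligned}
```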

4.4 Proof of Proposition 3.1

Proof

The proof is divided into several steps, which verify the conditions for H and G, respectively.

Step 1: proof of \(\lambda ^{*}\) satisfying Assumption 2.1.

We first write out the Hamiltonian H for \(G_{c, R}\). Define \({\mathcal {A}}\) as the set of admissible controls \(\lambda \) satisfying (3.4). Define \(\delta ^{a}:= \Lambda ^{-1}(\lambda _{\beta ^{a}(z)}(t, z))\) and \(\delta ^{b}:= \Lambda ^{-1}(\lambda _{\beta ^{b}(z)}(t, z))\); then we have

where \( g(\delta , \mu ):= \Lambda (\delta ) (\delta - c + \mu )\). From (3.1) and the proof of Lemma 3.1 in Guéant [15], \(\zeta (\mu ):= \sup _{\delta } \{ g(\delta , \mu ) \}\) is increasing w.r.t. \(\mu \). Moreover, the optimal \(\delta ^{*}\) exists, is unique, and is a continuously differentiable function of \(\mu \).
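As a numerical illustration of Step 1, the Python sketch below assumes the exponential intensity \(\Lambda (\delta ) = A e^{-k\delta }\), a common choice in the market-making literature; A, k and c are hypothetical parameter values, and admissibility of this \(\Lambda \) under (3.1) is assumed rather than checked. It maximizes \(g(\cdot , \mu )\) by golden-section search and compares the result with the closed form from the first-order condition \(1 - k(\delta - c + \mu ) = 0\), namely \(\delta ^{*}(\mu ) = c - \mu + \frac{1}{k}\) and \(\zeta (\mu ) = \frac{A}{k} e^{-1 - k(c - \mu )}\).

```python
import math

A, k, c = 1.0, 1.5, 0.1  # hypothetical intensity scale, decay rate and cost


def Lambda(delta):
    # Assumed exponential intensity, strictly decreasing in the quote delta.
    return A * math.exp(-k * delta)


def g(delta, mu):
    return Lambda(delta) * (delta - c + mu)


def golden_max(f, lo, hi, tol=1e-9):
    """Golden-section search for the maximizer of a unimodal f on [lo, hi]."""
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    x1, x2 = b - invphi * (b - a), a + invphi * (b - a)
    f1, f2 = f(x1), f(x2)
    while b - a > tol:
        if f1 > f2:  # maximizer lies in [a, x2]
            b, x2, f2 = x2, x1, f1
            x1 = b - invphi * (b - a)
            f1 = f(x1)
        else:  # maximizer lies in [x1, b]
            a, x1, f1 = x1, x2, f2
            x2 = a + invphi * (b - a)
            f2 = f(x2)
    return 0.5 * (a + b)


def optimal_quote(mu):
    # g(., mu) increases before c - mu + 1/k and decreases after, so this bracket is unimodal.
    return golden_max(lambda d: g(d, mu), c - mu - 1.0, c - mu + 1.0 + 10.0 / k)


def zeta(mu):
    return g(optimal_quote(mu), mu)


for mu in (-0.5, 0.0, 0.5):
    d_num, d_closed = optimal_quote(mu), c - mu + 1.0 / k
    print(mu, d_num, d_closed, zeta(mu), A / k * math.exp(-1.0 - k * (c - mu)))
```

In this example the maximizer is unique and smooth in \(\mu \), and \(\zeta \) increases with \(\mu \), in line with the properties quoted from Guéant [15].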

Step 2: proof of H satisfying Assumption 2.1.

We only need to prove that the second-order derivative \(\zeta ''(\mu )\) is positive. From the proof of Lemma 3.1 in Guéant [15], \(\zeta \) is \({\mathcal {C}}^{2}\), \(\zeta '(\mu ) = \Lambda (\delta ^{*})\), and \(\delta ^{*}\) is strictly decreasing w.r.t. \(\mu \). Hence, \(\Lambda (\delta ^{*})\) is strictly increasing w.r.t. \(\mu \), which implies \(\zeta ''(\mu ) > 0\). Since \(\zeta ''\) is continuous and positive, it attains a positive minimum on any bounded interval; hence there exists a constant \(C > 0\) such that \(\zeta ''(\mu ) \ge C\) when \(\mu \) is bounded.
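Under the same hypothetical exponential intensity \(\Lambda (\delta ) = A e^{-k\delta }\) (parameters assumed, not from the paper), Step 2 can be checked numerically: a central difference of \(\zeta \) should match \(\Lambda (\delta ^{*}(\mu ))\), and the second difference should stay above a positive constant on a bounded interval \([-M, M]\). Here \(\delta ^{*}\) is taken from the first-order condition, and the analytic relation \(\zeta '' = k \zeta '\) gives the expected lower bound \(k \Lambda (\delta ^{*}(-M))\).

```python
import math

A, k, c = 1.0, 1.5, 0.1  # hypothetical intensity scale, decay rate and cost
M = 2.0                  # hypothetical bound on |mu|


def Lambda(delta):
    return A * math.exp(-k * delta)


def delta_star(mu):
    # Unique root of the first-order condition 1 - k (delta - c + mu) = 0.
    return c - mu + 1.0 / k


def zeta(mu):
    d = delta_star(mu)
    return Lambda(d) * (d - c + mu)


def zeta_prime(mu, h=1e-5):
    return (zeta(mu + h) - zeta(mu - h)) / (2.0 * h)


def zeta_second(mu, h=1e-4):
    return (zeta(mu + h) - 2.0 * zeta(mu) + zeta(mu - h)) / h ** 2


mus = [-M + i * (2.0 * M) / 200 for i in range(201)]
# Envelope property: zeta'(mu) = Lambda(delta*(mu)).
envelope_gap = max(abs(zeta_prime(m) - Lambda(delta_star(m))) for m in mus)
lower_bound = min(zeta_second(m) for m in mus)  # positive constant C on [-M, M]
print(envelope_gap, lower_bound, k * Lambda(delta_star(-M)))
```

The lower bound depends on M: as \(\mu \rightarrow -\infty \) the second derivative decays to 0 in this example, which is why boundedness of \(\mu \) is needed.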

Step 3: proof of G satisfying Assumption 2.1.

From (3.5), the differentiability of G and property (2.5) follow directly. It remains to prove (2.6). Note that

which is non-positive. This concludes the proof. \(\square \)

5 Conclusions

In this paper, we have solved the finite state mean field game problem by the deep neural network method. By transforming the fully coupled FBODE system into the master equation, we have proved that the error between the true solution and the approximate solution is linear in the square root of the DNN loss function. We have also applied the DNN method to the optimal market making problem with a terminal rank-based trading volume reward, which is shown to perform better in liquidity provision and trading cost reduction than the linear trading volume reward. Many open questions remain, such as general heterogeneous interaction structures and infinite state MFGs. We leave these and other questions for future research.

Availability of data and material

Not applicable.

Code Availability

Not available

References

[1] Achdou Y, Camilli F, Capuzzo-Dolcetta I (2012) Mean field games: numerical methods for the planning problem. SIAM J Control Optim 50:77–109

[2] Achdou Y, Capuzzo-Dolcetta I (2010) Mean field games: numerical methods. SIAM J Numer Anal 48:1136–1162

[3] Cardaliaguet P, Delarue F, Lasry JM, Lions PL (2015) The master equation and the convergence problem in mean field games. arXiv:1509.02505

[4] Carmona R, Delarue F (2013) Probabilistic analysis of mean-field games. SIAM J Control Optim 51:2705–2734

[5] Carmona R, Laurière M (2021) Deep learning for mean field games and mean field control with applications to finance. arXiv:2107.04568

[6] Carmona R, Wang P (2016) Finite state mean field games with major and minor players. arXiv:1610.05408

[7] Carmona R, Wang P (2018) A probabilistic approach to extended finite state mean field games. arXiv:1808.07635

[8] Cecchin A, Fischer M (2020) Probabilistic approach to finite state mean field games. Appl Math Optim 81:253–300

[9] Cecchin A, Pelino G (2019) Convergence, fluctuations and large deviations for finite state mean field games via the master equation. Stoch Process Appl 129:4510–4555

[10] El Euch O, Mastrolia T, Rosenbaum M, Touzi N (2018) Optimal make-take fees for market making regulation. SSRN 3174933

[11] Fouque JP, Zhang Z (2020) Deep learning methods for mean field control problems with delay. Front Appl Math Stat 6:11

[12] Gomes D, Mohr J, Souza R (2013) Continuous time finite state mean field games. Appl Math Optim 68:99–143

[13] Gomes D, Saude J (2017) Monotone numerical methods for finite-state mean-field games. arXiv:1705.00174

[14] Guéant O (2009) A reference case for mean field games models. J Math Pures Appl 92:276–294

[15] Guéant O (2017) Optimal market making. Appl Math Finance 24:112–154

[16] Guéant O, Lasry JM, Lions PL (2011) Mean field games and applications. In: Paris-Princeton lectures on mathematical finance 2010. Springer, pp 205–266

[17] Han J, Jentzen A (2018) Solving high-dimensional partial differential equations using deep learning. Proc Natl Acad Sci 115:8505–8510

[18] Huang M, Malhamé R, Caines P (2006) Large population stochastic dynamic games: closed-loop McKean-Vlasov systems and the Nash certainty equivalence principle. Commun Inf Syst 6:221–252

[19] Lagaris I, Likas A, Fotiadis D (1998) Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans Neural Netw 9:987–1000

[20] Lagaris I, Likas A, Papageorgiou D (2000) Neural-network methods for boundary value problems with irregular boundaries. IEEE Trans Neural Netw 11:1041–1049

[21] Lasry JM, Lions PL (2007) Mean field games. Japan J Math 2:229–260

[22] Lasry JM, Lions PL, Guéant O (2008) Application of mean field games to growth theory. hal:00348376

[23] Laurière M (2021) Numerical methods for mean field games and mean field type control. arXiv:2106.06231

[24] Lee H, Kang I (1990) Neural algorithm for solving differential equations. J Comput Phys 91:110–131

[25] Li J, Yue J, Zhang W, Duan W (2020) The deep learning Galerkin method for the general Stokes equations. arXiv:2009.11701

[26] Li J, Zhang W, Yue J (2021) A deep learning Galerkin method for the second-order linear elliptic equations. Int J Numer Anal Model 18:427–441

[27] Malek A, Beidokhti R (2006) Numerical solution for high order differential equations using a hybrid neural network-optimization method. Appl Math Comput 183:260–271

[28] Mishra S, Molinaro R (2021) Estimates on the generalization error of physics-informed neural networks for approximating PDEs. arXiv:2006.16144

[29] Ruthotto L, Osher SJ, Li W, Nurbekyan L, Fung SW (2020) A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proc Natl Acad Sci 117:9183–9193

[30] Sirignano J, Spiliopoulos K (2018) DGM: a deep learning algorithm for solving partial differential equations. J Comput Phys 375:1339–1364

Acknowledgements

The authors are grateful to the anonymous reviewers, whose constructive comments and suggestions helped improve the previous version of the paper.

Funding

This study was supported in part by EPSRC (UK) Grant (EP/V008331/1).

Ethics declarations

Conflict of interest

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Luo, J., Zheng, H. Deep Neural Network Solution for Finite State Mean Field Game with Error Estimation. Dyn Games Appl 13, 859–896 (2023). https://doi.org/10.1007/s13235-022-00477-5
