Abstract

An integrated model of the PUNQ-S3 reservoir in the North Sea was constructed. Then, 16 parameters with the highest impact on target functions were selected for sensitivity analysis. The sensitivity analysis was based on the two-level Plackett–Burman design of experimental method. Finally, seven variables with the highest impact on the target functions were selected. Net present value, cumulative oil production, and cumulative water production were three target functions. The proxy model was constructed using the three-level Box–Behnken experimental design for each of the three target functions, taking into account the effect of the variable’s interactions on each other. Then the compliance and predictivity of the proxy model for each target function were validated according to the decision variables. In the end, multiobjective optimization was conducted with the aim of maximizing net present value and cumulative oil production and minimizing cumulative water production using a parameter called composite desirability.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Increasing the duration of a reservoir production gradually changes the dynamic characteristics of reservoir, and actual production conditions diverge from the default designing conditions considered for well completion. This leads to a lack of compliance of reservoir production potential with the potential of the well production which often could be seen in the form of wellhead or downhole flowing pressure drop leading to a reduction in production and eventually the plugging of the well (Shahsavari and Khamehchi 2018). One of the main tasks of petroleum engineers, especially production engineers, is to identify the causes of the problems of such wells and to investigate possible solutions to the problem and restore the well to the production loop (Boyun et al. 2007; Mahdiani et al. 2019). In some cases, it is also necessary to maximize the utilization of a designed operating system. The best solution to do this is to simulate the production system and check the performance of the well flow to minimize energy losses and pressure drop in the produced fluid lines. In this way, in fact, it is possible to separate the production potential capacity of each well and the reservoir through the nodal analysis concept and, by comparing them together, identify the limiting factor of production, and then propose a suitable solution for it to be eliminated (Beggs and Howard Dale 1980). Due to the existence of multiphase fluid in oil production wells and the complexity of mathematical relationships and the high computing volume, the use of a computer program is required to simulate and review diverse production optimization scenarios and demonstrate the importance of optimization in industrial projects (Naderi and Khamehchi 2016) (Hamedi and Khamehchi 2012) (Rashidi et al. 2010) (Vasant et al. 2017). Stoisits were among the first who implemented integrated optimization. In their study, the goal was to provide a method to increase the production flow rate, and variables were including the amount of injected gas allocated to each well and the allocation of any production wells to the surface equipment (Stoisits et al. 2001). In recent years, the construction of an integrated model and, consequently, the optimization of particular functions have been greatly increased by applying many constraints. At the same time, Denney optimized the production rate of each well and the amount of gas allocated to lift produced fluid in two wells using a genetic algorithm (Denney 2003).

Lake et al. (2007) used a quadratic programming method to optimize water injection as a way to increase productivity (Lake et al. 2007). Ehtesham et al. (2011) presented an article using an integrated reservoir and surface equipment model to optimize the performance of five surface separators with two optimized separators (Ehtesham et al. 2011). Codas et al. (2012) also carried out studies on the Urucu oil field in Brazil, succeeded in optimizing the production flow rate as an objective function by using variables of producing gas/oil ratio, water cut and bottom-hole pressure (Codas et al. 2012). Naderi and Khamehchi (2017) also used metaheuristic optimization methods such as genetic and bat algorithms. They chose the net present value (NPV) as the target function and investigated the effect of well location on it (Naderi and Khamehchi 2017).

Marmolejol and Rodriguez used Chambers–Mallows and Stuck algorithm for simulating alpha stable random variables characterizing demand patterns of real electrical systems. The use of Chambers–Mallows–Stuck method for simulating stable random variables provided a new way to generate test systems widely used in power systems research (Marmolejo and Rodriguez 2015).

Experimental designs have been used to the optimization of analytical methods more often than the past. Several benefits could be regarded for these methods like a reduction in the number of tests that need to be done which will lead to reduction in costs and consumption of resources. Meanwhile, these methods permit the construction of mathematical models that allow prediction of the target function value such as Box–Behnken design as well as identifying the most influential factors affecting target function using sensitivity analysis such as a two-level Plackett–Burman method.

In Box–Behnken design, each test can be considered as a combination of a two-level (full or fractional) factorial design with an incomplete block design. In each block, a certain number of factors are put through all combinations for the factorial design, while the other factors are kept at the central values (Box and Behnken 1960). Plackett–Burman designs are highly efficient designs. They use the minimum number of runs to quickly identify the factors with a significant effect on the response. The factors that are identified as important are then investigated more thoroughly in subsequent experiments (Plackett and Burman 1946a, b).

In this paper, an integrated production model was developed based on the available data for the PUNQ-S3 field. Then, using the Plackett–Burman design of experimental method, the sensitivity analysis on the net present value (NPV) target function was performed to determine the most effective factors. By selecting effective parameters and utilizing the Box–Behnken designing as one of the methods for analyzing the response surface, another design was also developed based on three target functions of the net present value, cumulative oil production, and cumulative water production. By conducting experiments and analyzing the results, a high-precision proxy model was generated and then multiobjective optimization by defining a function called composite desirability was performed.

Integrated model description

The field in this study is the PUNQ-S3 field in the North Sea basin. The data file for this field has been prepared from a reservoir study on a real field sample. Six wells are located in different parts of the reservoir, each with a production choke at surface. Figure 1 shows a schematic of an integrated design for wells and surface installations. As shown in Fig. 1, the produced fluid from each well comes together at point A (manifold) and directed toward a separator. In the following, we will study the construction of an integrated water drive reservoir, well and surface installations model.

Integrated water drive reservoir model

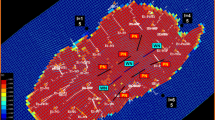

The PUNQ-S3 is known as a small-size reservoir with nineteen grid blocks along the x-axis, twenty-eight grid blocks along the y-axis, and five grid blocks along the z-axis, with a total of 1761 active grid blocks. Six wells have been drilled in different sections. The reservoir is restricted to two slightly strong aquifers from north and west and two faults from the east and south. There are no injection wells, and the geometry of the reservoir is modeled by a corner point geometry method. The initial reservoir pressure is 234 bar and produces heavy oil with density of 912 kg/m3. The produced gas/oil ratio for the reservoir oil sample at the surface is 74 m3gas/m3oil, which is expected to increase with time and further pressure drops below the bubble point (Odi 2013). The physical reservoir rock and fluid properties are given in Table 1 (Gu and Dean 2005). The parameters of the table are constant and do not change in the different design of experiments.

well model

Six production wells are located in different parts of the reservoir, named Pro1, Pro4, Pro5, Pro11, Pro12, and Pro15, with no injection well in the reservoir.

Calculation of produced fluid properties in the well

The produced reservoir fluid properties (oil + gas) should be specified along the well. For this purpose, the properties of the fluid measured in the fluid properties laboratory (PVT Lab) in various pressure steps are presented in Table 2.

In this paper, these values are adjusted to existing empirical relationships and are used to calculate fluid properties along wells and pipelines. The values of each parameter in Table 2 were subjected to PVT matching, and the values of the shift and multiplier parameters were obtained as statistical correction of empirical relationships. The correlations of Glaso (1980), Standing (1947), Lasater (1958), Vasquez-Beggs (1977), Petrosky et al. (1993), and Al-Marhoun et al. (1988) were used in order to obtain the best value for bubble point pressure, oil formation volume factor, and solution gas/oil ratio. In addition, the empirical relationships of Beal et al. (1946), Beggs et al. (1975), Petrosky et al. (1995), and Bergman–Sutton (2006) were used to match experimental data obtained from the measurement of viscosity with empirical relationships. The PVT matching results are shown in Table 3. The following three statistical parameters were compared to determine the best consistency with experimental data for each empirical relationship, which can be seen in Table 3 (1) shift, (2) multiplier, (3) standard deviation.

Shifting data is adding a constant k to each member of a data set, where k is a real number. In reality, it is lifting the entire distribution of data points and shifting a distance of k.

Multiplier (Scaling): Rescaling data is multiplying each member of a data set by a constant k. Rescaling will change the spread of our data as well as the position of data points. What remains unchanged is the shape of the distribution and the relative attributes of our curve.

Standard deviation shows the overall goodness of fit. The lower the standard deviation, the better the fit (Rietz and Carver 1924).

In order to better comparing the results and to choose the best fit between experimental data and empirical relationships for fluid properties, Fig. 2 is plotted. In Fig. 2a, b, in addition to the main measured data in the laboratory, which are characterized by solid squares, six other graphs are drawn, each of which is presented in legends. In Fig. 2c, in addition to the original data shown with solid squares, five other graphs are also observed in different colors. According to Fig. 2, the results obtained from the Beal et al. correlation for viscosity and the Glaso relationship for gas/oil ratio, oil formation volume factor, and bubble point pressure have the best fitting with the original data. The results of Table 3 also confirm this claim. The best fitting according to the statistical parameters is defined as follows:

-

A)

The closest value of the shift parameter to zero.

-

B)

The closest value of multiplier to one.

-

C)

The lowest value of standard deviation.

All of which agree with two above-mentioned relationships.

Calculation of the produced fluid pressure along the well

Each of the six wells in the PUNQ-S3 reservoir is vertical. In order to calculate the amount of pressure drop in the well, PE2 relationship was used. This relationship is quite suitable for vertical wells. In this empirical relationship, a flowchart was used to determine the flow regime presented by Gould et al. (1974). After determining the flow regime, to calculate the pressure drop in each particular flow regime separately, the empirical relationships were utilized (Griffith and Wallis 1961; Hagedorn and Brown 1965; Duns Jr and Ros 1963).

Nodal analysis for connecting the well and reservoir

The coupling of the reservoir and well was performed using the nodal analysis method (Ebrahimi and Khamehchi 2015). In each test design, for each set of well and reservoir settings, the relationship between rate and pressure in the upstream and downstream of the node (connecting grid block between reservoir and well) is written; then, from the intersection point of these two figures, flow rates and operational pressure are given. The pressure at the upstream node (reservoir) is calculated by executing the reservoir simulator and calculating the bottom-hole pressure for a specific production rate. At the downstream of the node, the vertical lift performance (VLP) curves using the empirical relationships in Sect. 2.2.2 have been generated for 20 different liquid flow rate values, 10 different water cut values, 10 different gas/oil ratio values, and 10 different surface pressure. Due to the fluid production rate conditions, water cut, gas/oil ratio, and wellhead pressure, the appropriate VLP chart is selected and intersected with the IPR chart.

Surface facilities model

Chokes are considered as the connection point of well and surface pipeline for each of wells. The well is known as upstream and surface pipe as downstream. The VLP graphs are dominant in the upstream of the choke (as nodes).

Using the various relationships provided, downstream pressure in choke can be obtained. In this paper, the utilized relationships are provided by Perkin (1993), which are known in the choke modeling software as the ELF method. The produced fluid from any well is directed to the manifold by a pipeline. The accumulated fluid in the manifold is terminated through a pipeline to the separator. Specifications for pipelines are shown in Table 4, respectively. Considering the isothermal conditions around the surface pipelines (surrounding temperature 60 °F) and the identical type of the pipes (roughness = 0.0006) and the entirely horizontal pipelines, the PE2 relationships indicated in Sect. 2.2 are used to calculate pressure over the surface pipeline.

Methodology

Sensitivity analysis and verification

In this section, the purpose is to determine the parameters with the greatest impact on the target functions in a production system. Three target functions were selected in this study, which are: (1) net present value (NPV), (2) cumulative oil production, (3) cumulative water production. Given the perspective of this issue, by selecting three functions as target functions, the problem becomes a multiobjective optimization problem. The first step in this path is to analyze the sensitivity and select the parameters with the greatest impact on the target functions in the integrated system. Regarding the complexity of the simultaneous sensitivity analysis on the three target functions, a simplistic assumption is used in this problem, so that only sensitivity is performed on the net present value function. This will not make many errors in the study because the NPV in this study is defined as follows:

where CAPEX is capital expenditure, OPEX equals operation expenses, cw is cost per barrel of water produced, cginj is gas injection cost (ignored in this study), i is interest rate, pg is gas price per thousand cubic foot, po equals oil price a barrel, Qo is the cumulative amount of oil produced in a time step, Qg is the cumulative amount of gas produced in a time step, and Wp is the cumulative amount of water produced in a time step.

In Eq. 1, it was assumed that the price per barrel of oil is equivalent to 75 dollars and every 1000 cubic feet of gas would be Sect. 3, and the production of a barrel of water would cost 5 dollars. As can be seen, the NPV itself is expressed as a function of cumulative production of water, oil, and gas, and in other words, it is affected by these three functions.

With a review of previous studies performed in this area, sixteen parameters are selected for sensitivity analysis (Artun 2011) (Avansi 2009) (Shepherd 2009) (Gao 2014). The sixteen selected parameters will include all three water-drive reservoirs, well, and surface systems. In order to perform sensitivity analysis of the net present value function to sixteen selected parameters, a two-level Plackett–Burman test is first designed in which each parameter is set to two upper and lower limit levels. The Plackett–Burman design is a factorial design in two levels and is used to study k factor or variables in two levels. In this method, if the number of experiments is equal to N, the number of factors (k) is obtained from the relation k = N − 1 (Plackett and Burman 1946a, b). Given that the number of parameters in this study is sixteen, so the minimum number of experiments will be equivalent to seventeen experiments. However, in order to enhance the statistical population and cover all aspects of the problem, the number of experiments in this study was considered to be 24 tests.

According to the Plackett–Burman method, the order and the procedure for performing the tests are determined. The values of each parameter are set in the designed integrated model, and with the model implementation, the cumulative oil, water, and gas production rate will be obtained. Moreover, using Eq. (1), the net present value will be obtained at each stage of the experiment. These are raw feed results for sensitivity analysis. The method used to perform sensitivity analysis and related parameters is summarized in Table 5. In this study, for the purpose of sensitivity analysis, the goal of fitting a model with high compliance with the NPV target function is based on the sixteen parameters; hence, we start the process with all possible variables. Then, in each step, the variable with the least impact will be eliminated from the model. The process stops when all of the P values of the variables in the model are less than or equal to the alpha to remove. Alpha is a parameter whose value is applied by the user. Asymptotic significance (P value) indicates that the relationship or difference observed in the sample is the result of a chance, and there is no such difference in the society where the sample is selected. In a simpler sense, P value provides us with information about the reality of a result. From technical point of view, the P value is a decreasing index of reliability of a result, and the larger it is, the confidence to the reality of the results reduces. P value shows the probability of an error in accepting the validity of the observed results, although noting that "credibility" means that the observed results represent a well-known society. Now, "selecting the P value level", the greater the value of which will be rejected as invalid, is contractual and subject to the condition of the problem and is known in our study as Alpha to remove (StatSoft 2006).

Now, by performing sensitivity analysis, the accuracy of the selected parameters as the most effective parameter on the net present value target function is investigated. For this purpose, the R2, which is a statistical parameter, is used. Since R2 is a parameter for assessing regression quality, it may create a question in the reader's mind to be used to validate the sensitivity analysis. In response to this question, as mentioned earlier, by analyzing the results of experiments designed using the Plackett–Burman method, the most effective parameters are selected and a mathematical model is created based on them for predicting the target function. In this section, R2 represents the degree of compliance of existing data to the fitted math model. Here, the details of the mathematical model are discarded, because the goal is only sensitivity analysis and building the proxy model in Sect. 3.2 will be discussed in detail. The R2 statistical parameter shows the status of the data around the regression diagram. Its value varies from zero to one hundred percent; 0% indicates the excessive dispersion of data around the fitted line and their significant distances from the average value. 100% indicates the data are close to the average fitted line on the data.

Therefore, the closer the R2 to 100 is, the higher the regression quality. The value of R2 in this study was calculated from Eq. 2.

where SS Error is residual sum of squares, SS Total is total sum of squares, \(y_{i}\) is dependent response for every independent input, \(\widehat{{y_{i} }}\) is value of \(y_{i}\) that line predicted, and \(\overline{{y_{i} }}\) is average value of \(y_{i}\). Adjusted R2 is the modified R2 parameter which is defined as Eq. 3:

where p is number of predictors and N is total sample size. If the optional predictor parameter is selected that has slight effect on the target function, the R2 may be affected, but the adjusted R2 value will remain constant. So it helps you determine whether you need to add a new predictor or not.

Define proxy and its validation

The response surface methodology, or RSM, is a set of statistical techniques and applied mathematics for constructing empirical models (Mahdiani et al. 2015). The goal in such schemes is to optimize the response (output variable), which is influenced by several independent variables (input variables). Theoretically, RSM was developed for modeling of empirical responses and then led to modeling numerical experiments. An important aspect of RSM is the design of experiments, commonly known as DOE. This strategy was originally developed for fitting experimental models, but can also be used for numerical experiments. The DOE's goal is to select the points where the response should be evaluated (Chatterjee et al. 2015). Selection of testing designs can have a great impact on the accuracy of the estimation and the cost of constructing the surface model (Naderi and Khamehchi 2017). In a traditional DOE, screening tests are carried out in the early stages of the process, when there are a large number of potential project variables that may have small effects or no effect on the response (Box and Draper 1987). To avoid this problem, in step 3.1, sensitivity analysis was performed to identify the variables that have a potential impact on the target function. The result was the selection of a number of affecting parameters on the net present value target function.

In order to build a proxy model, the three-level Box–Behnken design of experimental method (Ferreira 2007) and the analysis of the results are used. The Box–Behnken is a three-level experimental factorial design model and a subfield of the surface response method, which is made up of a combination of two-level factorial and incomplete block design in a special case. In this design, the selected parameters in Sect. 3.1 are set at three levels of low, average, and upper limit. By carrying out experiments in the integrated model, the volumetric flow rates of water, oil and gas are calculated, and then using Eq. 1, the net present value is calculated in each step. The proxy model is constructed by analyzing the response surface, taking into account the linear and quadratic effects of each parameter, as well as the effect of the interaction of the parameters with each other for each target function of cumulative oil production, cumulative water production, and the net present value separately.

Validation and evaluation of the proxy model for each of the target functions were done in three ways. The first method is to use the R-squared parameter, which was previously used for sensitivity analysis verification. In the second method of the proxy validation made for each of the target functions, the fitted model was analyzed and interpreted using the residual value-based curves. The issue that needs to be considered first for each regression is the underlying assumption that analysis is performed based on them. This is a very important point; unfortunately, it is often neglected in analysis and undermines the results of modeling. The underlying assumptions for a regression model are as follows:

-

1.

The error term ε has an average of zero.

-

2.

The error term ε has a constant variance.

-

3.

The error term ε is uncorrelated.

-

4.

The error term ε has a normal distribution.

If the fitting pattern is appropriate, the residues must confirm the above assumptions. It means that because we are fitting a linear model, we assume that the relationship really is linear, and that the errors, or residuals, are simply random fluctuations around the true line. We assume that the variability in the response does not increase as the value of the predictor increases. This is the assumption of equal variance. We also assume that the observations are independent of one another. Correlation between sequential observations, or auto-correlation, can be an issue with time series data that is with data with a natural time ordering.

The residue is the difference between the observed value and the fitted value by the model observed from Eq. 4.

where \({\varepsilon }_{i}\) is residual value, \({Y}_{i}\) is actual value of target function, and \({\widehat{Y}}_{i}\) is fitted value of target function. In other words, the residue is a measure of the variability of the response variable that is not expressed by the regression model. Residues can be considered a representative of pattern errors, so any deviation from the four regression assumptions about errors might be seen in the residues. A convenient way to see the regression model's efficiency value for fitting data is to plot the residual curve. Chatterjee et al. analyzed the graphs drawn for regression according to Fig. 3. Figure 3a is a favorable situation in which the variance of the errors is constant. In Fig. 3b, the points are dispersed in a funnel form and result in a nonconstant error of variance. In this case, it is not possible to conduct tests and form confidence intervals, nor to estimate the parameters in the least squares method, and it is necessary to estimate the coefficients using another method. Figure 3c, nonlinear diagram, shows that conversion is required, such as logarithmic or second power transformations, on a predictor variable, or a variable to be added to the proxy model (Chatterjee et al. 2015).

Interpretation of residual values in a regression and possible states. a Ideal mode, b nonuniformity mode, c nonlinear mode (Chatterjee et al. 2015)

In the third method of verifying the proxy model, the normal probability plot of residuals is used in terms of residual values. Since in the calculation of statistics for constructing a proxy model such as P value for regression tests and also for calculating confidence intervals, the assumption of normal-distributed errors is used, so large deviations from normal distribution can affect the accuracy and validity of the results obtained. Additionally, if the errors follow distributions with narrower or wider sequences than normal distributions, the least square fitting may be sensitive to a small change in the data. A simple way to check the assumption of normalization is to draw a normal probability plot of residuals. If we arrange εi as an ascending order and draw εi against the cumulative probability of normal residues, which is obtained from Eq. 5, the points must be placed on a straight line.

The presence of one or more large residues in this graph can be a sign of the existence of distant points, which should be examined precisely. The four different modes in these charts are predictable:

-

1.

A graph with no linear state: This behavior is unusual for the graph.

-

2.

The graph has a linear behavior but one or more points away from the diagram: data out of the range

-

3.

The graph shows the slope change: There is an undefined and unrecognized variable in the function.

-

4.

Linear and straight diagram: normal and ideal mode.

Multiobjective optimization

Obviously, the purpose of this study is to maximize the two functions of net present value and cumulative oil production and minimize cumulative water production. Water production imposes a lot of costs on our manufacturing system, and the aim is to prevent its production.

Proxy models made by analyzing the DOE results of the Box–Behnken experiment are optimized for three target functions, multiobjective optimization, and a parametric definition called desirability. For this purpose, in the first step, for each target function, individual desirability is defined according to the factors affecting them, and for a combination of several target functions, composite desirability is defined. The goal is to maximize the value of desirability. The individual desirability for each target function is defined according to Eq. 6:

The Ymini and Ymaxi correspond to the desired limits for target function i. In Eq. 6, a and b are user-defined weights which enable the user to determine tighter or wider desirability functions around a target value (Ti) for a response i. Target value for NPV has been set 5,000,000,000 $. This value differs with maximum quantity of target function (i.e., NPVmax). The desirability function method uses RSM approaches to fit polynomials for each response Yi(x), then substitute the fitted polynomial function in Eq. 6, and substitute the individual desirabilities di(Yi(x)) into Eq. 7 at the end. After that, a search-based optimization method is utilized to get x such that D(x) is maximized, because we want D(x) as close to unity as possible. So in this study, we used Hooke–Jeeves method in order to proxy model optimization.

Hence, after defining and obtaining the individual desirability values for each of the target functions proposed in this study, the composite desirability is defined as Eq. 7. The goal of multiobjective optimization is to maximize composite desirability:

As can be seen, the effect of the individual desirability (di) of each function depends on its importance (wi). In this paper, given that in the sensitivity analysis, the parameters were selected based on the net present value function, and this parameter takes the most importance (value 3). Cumulative oil production parameters and cumulative water production are also of prime importance. Parameters related to optimization are more fully presented in Table 6. The lower or upper limits are set according to the target function and the target values for each function. There is no constraint on the predictor parameters.

Results and discussion

Table 7 indicates sixteen selected parameters with each level up and down. Parameters are selected by searching the literature and from previous studies. The two-level Plackett–Burman method was designed to calculate the net present value based on the sixteen parameters studied, in which Table 8 shows the order and the way of the 24 stages of the experiment. The high level is indicated by the (+) and the lower level with the (−) sign for each parameter. Given the conditions and values stated in Table 8 for each parameter at each stage of experimental design, its value changes in the integrated production model. Then, by setting the values of all sixteen parameters in the integrated model, the model is implemented and first, the cumulative oil production, cumulative gas production, and cumulative water production are obtained in the separator. Then, using Eq. 1 discussed, the net present value (NPV) is calculated. Cumulative water production, cumulative oil production, and cumulative gas production resulting from the implementation of the test at each stage and the resulting net present value are shown in Table 9. The results of Table 9 are the raw feed for sensitivity analysis.

Sensitivity analysis was performed by analyzing the design of a two-level factorial (Plackett–Burman), and taking into account the amount of alpha to remove as 0.15, the sensitivity results were obtained according to Table 10. In this table, seven parameters with the greatest impact on the net present value (NPV) target function are observed. As noted earlier, all values of P value in this table are less than the alpha to remove value. The seven parameters that have the greatest impact on NPV include porosity, horizontal permeability, choke size, net pay thickness, separator operating pressure, reservoir-aquifer initial pressure, and aquifer thickness. Meanwhile, reservoir-aquifer initial pressure and net pay thickness had the nearest value to zero which indicates their high importance.

In the next step, the R2 statistical function is used to validate the sensitivity analysis performed by the Plackett–Burman method, the results of which are shown in Table 11. According to Table 11, the R2 value is 92.67%, which shows high compliance. But since in this study, the effect of seven independent variables on a target function is being studied, it is better to use the adjusted R2, because if another predictor is added to the model, which has little effect on the target function, then R2 changes, but the adjusted R2 will remain constant. The adjusted R2 is calculated according to Eq. 3. For sensitivity analysis, 24 samples were selected. Therefore, N in Eq. 3 will be twenty-four. Also, seven predictors were selected with the highest impact on the target function. Therefore, P is equal to seven. So, the adjusted R2 value is 89.46%, according to Table 11.

In order to construct a proxy model, the seven selected parameters in the sensitivity analysis are set at three levels: low, medium, and high, and the results are shown in Table 12. According to the seven predictors presented in this study, the Box–Behnken designs 57 experiments to build proxy model based on the predictors. By carrying out each stage of the test, cumulative oil, water, and gas production will be recorded. Then, according to Eq. 1, the net present value is calculated. The results of the target functions at each step of the experiment are presented in Table 13.

The proxy model is presented in terms of first-order and quadratic terms, and the effect of interaction of the parameters with each other in the form of Eqs. 8, 9, and 10 for each target function:

where \(\phi\) is porosity, \(k_{x}\) is horizontal permeability (md), h is perforated thickness, \(h_{{{\text{aqu}}}}\) is aquifer thickness, \(P_{{{\text{in}}}}\) is initial reservoir-aquifer pressure, \(P_{{{\text{sep}}}}\) is separator operating pressure, and \(d_{c}\) is choke size.

It should be noted that in the above equations the predictor parameters are encoded. The values of the input parameters must be numerically between the two lower and upper levels in the range [− 1, + 1]. Meanwhile, the values of h and dc are different for each well, but since the equation is encoded, when, for example, the value + 1 is assigned to each of the two parameters, it means that the upper level of that parameter in each well should be used in the equation. In addition, interaction effect of the two parameters has no significant effect on the target functions and did not appear in the proxy model. The seven parameters are therefore independent of each other and have their own separate effect on target functions.

Validation and evaluation of the above equations were done in three ways due to adapt them to the data used to construct the proxy and to check the prediction power of the testing data. The first method is to use the R-squared parameter, which was previously used for sensitivity analysis verification. Table 14 shows the results of validation using R2. These results are examined in two aspects.

Firstly, for two cumulative oil production and net present value target functions, R2 values are above 98%. Compliance between proxy model and test data is high. High adaptation is the result of the correct selection of predictive parameters. In other words, proxy results indicate the accuracy and validity of the sensitivity analysis.

Secondly, for the cumulative water production target function, the R2 ratio is less than the other two target functions (about 88%). The degree of compliance is acceptable since it should be assumed that the uncertainty about the water-drive parameters is high as the main cause of water production.

In the second verification method, the fitted model was analyzed and interpreted using the residual values-based curves. Figure 4 shows the status of the residue diagram for each of the three target functions in this study. Comparison of these diagrams with Fig. 3 makes it clear that Fig. 4a, c are corresponding graphs of net present value and cumulative oil production target function, respectively, which are symmetrical compared to the midline drawn in each graph and most similar to Fig. 3a. Figure. 4b, as seen in the R2 test, has a slight deviation in the results of the proxy model and actual values, but the difference is not significant (according to the R2 corresponding to this model). Therefore, an effective parameter on cumulative water seems to be ignored. But the model constructed for NPV and cumulative oil production is highly adapted and the residual-based graph is well distributed.

In the third method of proxy model verification, the normal residue probability chart is used in terms of residual values. Figure 5a–c shows linear behavior, so that points are scattered around the line drawn on the graph. The straight line diagram shows the normal and ideal state of the proxy model. In Fig. 5b, most points are located around the drawn line, but there are three points far from the straight line. According to the results of the Chatterjee et al. study, the presence of data out of the defined range for the proxy is deduced according to the shape of the graph.

If the results of the three validation methods are compared and summarized, it concludes that the proxy model for the two functions of cumulative oil production and net present value has a high accuracy and acceptable conformance with the data from the integrated model, and the seven parameters obtained from the sensitivity analysis have the ability to predict the values of the target functions. However, the proxy model made for the cumulative water production target function has a roughly ninety percent R-squared; according to the validation charts, it seems that by adding a parameter related to the amount of water production and aquifer physical properties to the proxy model, it can improve data compliance. In other words, the effect of a parameter is ignored.

By calculating the individual desirability values followed by the composite desirability value, a multiobjective optimization is performed. The results are in accordance with Table 15.

Net present value has the highest individual desirability among the three target functions. The main reason for this issue should be found in the proxy model for each of the functions. In the net present value mathematical model, three parameters have appeared with a second order: the initial reservoir-aquifer pressure, the separator pressure, and the perforated interval height. Meanwhile, with the change in the perforated interval height in the interval defined between the two upper and lower levels, the net present value function behaved quite descending. However, the other two parameters showed nonlinear behavior and their optimal values were calculated according to Table 15.

The net present value has a higher importance than two other target functions (w = 3). Therefore, in order to achieve the highest amount of composite desirability, it is reasonable that the individual desirability value of NPV would be set at its highest (d = 1).

Only two parameters of initial reservoir-aquifer pressure and aquifer thickness showed a nonlinear effect on composite desirability. The behavior of each of the parameters affecting the target functions in terms of the individual desirability and composite desirability is shown in Fig. 6. The behavior corresponds to the degree of parameters in the proxy model, because reservoir-aquifer pressure and aquifer thickness appeared in a second-order proxy model. However, other parameters have a linear relationship with the target functions, and their interaction with each other has no significant effect on the target function and the optimal values. By optimizing the multiobjective problem, optimal conditions were obtained for control variables. The value of the composite desirability in optimal mode, compared to the nonoptimal state of the current production of the reservoir, is 21% higher.

Lyu et al. (2019) aimed to provide an efficient method to optimize the well configuration in the fractured reservoir. They have proposed a method for the optimization of multilateral well trajectories and determination of the suitable well configuration in the fractured reservoir with the objective function being the maximum profits and minimum costs. The effectiveness and accuracy of the method have been verified through a series of benchmark tests, but they have not considered the water and gas production; meanwhile, they have not conducted the sensitivity analysis to identify the most influential parameters.

In addition, integration of well and surface facility development is crucial to guarantee the selection of the optimum configuration for oil and gas field developments. It is an important step in early planning to minimize investment and reduce the planning period. So Almedallah et al. have presented an integrated model that combines surface and subsurface infrastructure design. The model uses a Monte Carlo Markov chain method to explore the field connectivity, coupled with an optimization routine based on a detailed cost model to determine facility placement (Almedallah and Walsh 2019). But the main differences between this paper and previous works are:

-

Sensitivity analysis which examine the contribution of each parameter on target function.

-

Conduction multiobjective optimization by means of composite desirability function evaluation.

Conclusions

In this study, using Plackett–Burman method, a sensitivity analysis was performed to identify the most influential parameter on the net present value of the PUNQ-S3 oil field. Among the 16 parameters, seven effective ones were selected based on the comparison of the P value of each predictor with alpha to remove.

The mathematical model was constructed and verified to predict net present value, cumulative oil production, and cumulative water production of the field based on the seven selected parameters. The accuracy of constructed model was well over 90%, which indicates great compliance with test data.

By optimizing the multiobjective problem, optimal conditions were obtained for control variables. The value of the composite desirability in optimal mode, compared to the nonoptimal state of the current production of the reservoir, is 21% higher.

Abbreviations

- DOE:

-

Design of experiments

- IPR:

-

Inflow performance relationship

- NPV:

-

Net present value

- PVT:

-

Pressure–volume–temperature

- RSM:

-

Response surface methodology

- VLP:

-

Vertical lift performance

- \(\varepsilon_{i}\) :

-

Residual value

- \(\phi\) :

-

Porosity

- Q g :

-

Cumulative gas production

- Q o :

-

Cumulative oil production

- W p :

-

Cumulative water production

- \(d_{{\text{c}}}\) :

-

Choke size

- \(d_{{\text{i}}}\) :

-

Individual desirability

- \(D_{i}\) :

-

Composite desirability

- \(h\) :

-

Aquifer thickness

- i :

-

Interest rate

- k :

-

Number of factors

- \(k_{x}\) :

-

Horizontal permeability

- N :

-

Number of experiments

- p :

-

Number of predictors

- \(P_{i}\) :

-

Cumulative probability of normal residues

- \(P_{{{\text{in}}}}\) :

-

Initial reservoir-aquifer pressure

- \(P_{{{\text{sep}}}}\) :

-

Separator operating pressure

- SS Error:

-

Residual sum of squares

- SS Total:

-

Dependent response for every independent input

- T :

-

Target value for target function set by user

- U :

-

Limit for target function set by user

- \(w_{i}\) :

-

Importance of each factor

- \(y\) :

-

Value of target function at each step

- \(\widehat{{y_{i} }}\) :

-

Value of yi that line predicted

- \(\overline{{y_{i} }}\) :

-

Average value of yi

- \(Y_{i}\) :

-

Actual value of target function

- \(\hat{Y}_{i}\) :

-

Fitted value of target function

References

Al-Marhoun MA (1988) PVT correlations for Middle East crude oils. J Pet Technol 40(05):650–666

Almedallah MK, Walsh SD (2019) Integrated well-path and surface-facility optimization for shallow-water oil and gas field developments. J Petrol Sci Eng 174:859–871

Artun E et al (2011) Development of universal proxy models for screening and optimization of cyclic pressure pulsing in naturally fractured reservoirs. J Nat Gas Sci Eng 3(6):667–686

Avansi GD et al. (2009) Assisted procedures for definition of production strategy and economic evaluation using proxy models. In: 71st European association of geoscientists and engineers conference and exhibition

Beal C (1946) The viscosity of air, water, natural gas, crude oil and its associated gases at oil field temperatures and pressures. Trans AIME 165(01):94–115

Beggs HD (1980) Production optimization. Society of Petroleum Engineers

Beggs HD, Robinson JR (1975) Estimating the viscosity of crude oil systems. J Petrol Technol 27(09):1–140

Box GE, Behnken DW (1960) Some new three level designs for the study of quantitative variables. Technometrics 2(4):455–475

Box GEP, Draper NR (1987) Empirical model-building and response surfaces. Wiley, New York, p 424

Boyun G, William C, Ghalambor A (2007) Petroleum production engineering: a computer-assisted approach

Chatterjee S, Hadi AS (2015) Regression analysis by example. Wiley, Hoboken

Codas A, Campos S, Camponogara E, Gunnerud V, Sunjerga S (2012) Integrated production optimization of oil fields with pressure and routing constraints: the Urucu field.". Comput Chem Eng 46:178–189

Denney D (2003) Optimizing production operations. J Petrol Technol 55(03):59–60

Duns Jr. H, Ros NC (1963) Vertical flow of gas and liquid mixtures in wells. In: Proceedings of the 6th world petroleum congress, Frankfurt am Main, Germany, pp 22–106

Ehtesham MH, Alberto M, Ahmed A-S (2011) Facilities planning using coupled surface and reservoir simulation models. In: SPE/DGS Saudi Arabia section technical symposium and exhibition. Society of Petroleum Engineers

Ferreira SLC et al (2007) Box-Behnken design: an alternative for the optimization of analytical methods. Anal Chim Acta 597(2):179–186

Gao M (2014) Reservoir and surface facilities coupled through partially and fully implicit approaches. Diss

Glaso O (1980) Generalized pressure-volume-temperature correlations. J Petrol Technol 32(05):785–795

Gould TL, Rasin Tek M, Katz DL (1974) Two-phase flow through vertical, inclined, or curved pipe. J Petrol Technol 26(08):915–926

Griffith P, Wallis GB (1961) Two-phase slug flow. J Heat Transf 83:307–318

Gu Y, Oliver S, Dean. (2005) History matching of the PUNQ-S3 reservoir model using the ensemble Kalman filter. SPE J 10(02):217–224

Hagedorn AR, Brown KE (1965) Experimental study of pressure gradients occurring during continuous two-phase flow in small diameter vertical conduits. J Pet Technol 4:475–484

Hamedi H, Khamehchi E (2012) A nonlinear approach to gas lift allocation optimization with operational constraints using particle swarm optimization and a penalty function. Pet Sci Technol 30(8):775–785

Lake WL, Ximing L, Edgar TF, Al-Yousef A, Morteza S, Daniel W (2007) Optimization of oil production based on a capacitance model of production and injection rates. In: Hydrocarbon economics and evaluation symposium. Society of Petroleum Engineers

Lasater JA (1958) Bubble point pressure correlation. J Petrol Technol 10(05):65–67

Lyu Z, Song X, Geng L, Li G (2019) Optimization of multilateral well configuration in fractured reservoirs. J Pet Sci Eng 172:1153–1164

Marmolejo JA, Rodriguez R (2015) Fat tail model for simulating test systems in multiperiod unit commitment. Math Probl Eng

Naderi M, Khamehchi E (2016) Nonlinear risk optimization approach to water drive gas reservoir production optimization using DOE and artificial intelligence. J Nat Gas Sci Eng 31:575–584

Naderi M, Khamehchi E (2017) Well placement optimization using metaheuristic bat algorithm. J Petrol Sci Eng 150:348–354

Odi U (2013) Optimal process design for coupled CO2 sequestration and enhanced gas recovery in carbonate reservoirs. Diss

Perkins THOMASK (1993) Critical and subcritical flow of multiphase mixtures through chokes. SPE Drill Complet 8(04):271–276

Petrosky Jr. GE, Farshad FF (1993) Pressure-volume-temperature correlations for Gulf of Mexico crude oils. In: SPE annual technical conference and exhibition. Society of Petroleum Engineers

Petrosky Jr. GE, Farshad FF (1995) Viscosity correlations for Gulf of Mexico crude oils. In: SPE Production Operations Symposium. Society of Petroleum Engineers

Plackett RL, Burman JP (1946) The design of optimum multifactorial experiments. Biometrika 33(4):305–325

Plackett RL, Burman PJ (1946) The design of optimum multifactorial experiments. Biometrika 33(4):305–325

Rashidi F, Khamehchi E, Rasouli H (2010) Oil field optimization based on gas lift optimization. ESCAPE20 6.

Rietz HL, Carver HC (1924) Handbook of mathematical statistics, vol 3. Houghton Mifflin, Boston

Shepherd M (2009) Factors influencing recovery from oil and gas fields, pp 37–46

Standing MB (1947) A pressure-volume-temperature correlation for mixtures of California oils and gases. Drilling and Production Practice. American Petroleum Institute

StatSoft, Inc. (2006). Electronic statistics textbook. Tulsa: OK: Electronic Statistics Textbook.

Stoisits FR, Kelly DC, Donald MJ, Micheal DM (2001) Petroleum production optimization utilizing adaptive network and genetic algorithm techniques. U.S. Patent 6,236,894 issued May 22

Sutton RP, Bergman DF (2006) Undersaturated oil viscosity correlation for adverse conditions. In: SPE annual technical conference and exhibition. Society of Petroleum Engineers

Vasant P, Litvinchev I, Marmolejo-Saucedo JA (2017) Modeling, Simulation, and Optimization. Springer, New York

Vazquez M, Beggs HD (1977) Correlations for fluid physical property prediction. In: SPE annual fall technical conference and exhibition. Society of Petroleum Engineers.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rajabi-Kochi, M., Khamehchi, E. Integrated production optimization of an oil field based on constructing of a proxy model using DOE methods. J Petrol Explor Prod Technol 10, 2465–2482 (2020). https://doi.org/10.1007/s13202-020-00887-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13202-020-00887-4