Abstract

This paper develops an ensemble machine learning model for the prediction of dead oil, saturated and undersaturated viscosities. Easily acquired field data have been used as the input parameters for the machine learning process. Different functional forms for each property have been considered in the simulation. Based on statistical error analysis, the ensemble model predicts better than the commonly used correlations it is compared against. This work also gives insight into the reliability and performance of the different functional forms that have been used in the literature to formulate these viscosities. Since improved viscosity predictions are always sought, the developed ensemble support vector regression models could potentially replace the empirical correlations for viscosity prediction.

Introduction

Knowledge of oil pressure–volume–temperature (PVT) properties is of great interest to petroleum engineers as they are critical in performing most reservoir engineering studies. Viscosity is one of these PVT properties and it controls the fluid flow through the porous media. It is therefore important to be able to estimate crude oil viscosity at different stages of oil exploration. Empirical correlations, based on easily acquired field data, are usually employed to estimate dead oil, bubble-point and undersaturated viscosities. However, performance of these empirical correlations is not usually satisfactory and improved predictions are always sought.

In general, viscosity can be defined as the internal resistance to the flow of fluid. Crude oil viscosity is an important physical property that controls and influences the flow of oil through porous media and pipes (Ahmed 2010). It is also an important parameter when developing reservoir models to predict ultimate recovery, in designing enhanced oil recovery operations and when designing pipelines for effective fluid flow.

The viscosity of a liquid is related directly to the type and size of the molecules which make up the liquid (McCain Jr 1991). Crude oil viscosity can be categorised into three classes depending on the reservoir pressure, namely dead oil viscosity, saturated oil viscosity and undersaturated oil viscosity.

- Dead oil viscosity (μ od) is the viscosity of the crude oil with no free gas at atmospheric pressure and temperature.

- Saturated/bubble-point oil viscosity (μ ob) is the viscosity of the crude oil at the bubble-point pressure and the reservoir temperature.

- Undersaturated oil viscosity (μ oa) is the viscosity of the crude oil at a pressure and temperature above the bubble-point pressure and reservoir temperature.

Oil viscosity can ideally be determined by laboratory experimentation. However, this is costly and time-consuming, and it requires a high level of technical expertise. The primary alternatives are equations of state (EOS) and empirical correlations. Unfortunately, EOS require crude oil compositions, which can only be determined through laboratory analysis; thus, they do not eliminate the requirement for laboratory analysis. This has paved the way for the adoption of empirical correlations over time. Likewise, some machine learning (ML) techniques have been used to improve the prediction of oil viscosity. However, stand-alone ML techniques or their hybrid systems can become stuck in local minima, hindering the generalisation capability of such systems. This local minima problem can be addressed by ensemble systems (Dietterich 2000).

ML is the process of writing computer programs to optimise a performance criterion using example data or past experience (Alpaydin 2014). Learning involves the creation of a system which applies past experience to analogous new situations. Learning can take many forms: new knowledge acquisition, cognitive skills acquisition, effective representation of new knowledge, or new fact discovery through observation and experimentation (Carbonell et al. 1983). A hybrid ML system involves the fusion of two or more ML techniques with the aim of strengthening one another. An example is the fusion of a genetic algorithm (GA) with support vector machines (SVMs), where the GA is used to optimise the learning parameters of the SVM. However, such a hybrid system may have settled in a local minimum, since only one space of hypotheses has been searched for a given data set. On the other hand, an effectively constructed ensemble system of SVMs can overcome this problem, since it involves the fusion of systems that should have been constructed on different spaces of hypotheses searched by the learning algorithm on the training data set (Dietterich 2000).

ML techniques usually utilise input variables similar to the empirical correlations. Mostly, petroleum fluid properties which are easily measured in the field and which have direct physical relationship with the target output are used as the correlating variables (Standing 1947; Chew and Connally 1959). Also, a trial and error method can be used to eliminate any correlating variable that does not improve the performance of the correlation significantly (Chew and Connally 1959). Pruning of the correlating variables, often referred to as feature selection, can be achieved by some statistical tools to select input variables that are used in the regression analysis to develop the empirical correlations or by common feature selection techniques such as neighbourhood component analysis (NCA), sequential feature selection and LASSO. NCA is an embedded and nonparametric feature selection method. NCA mainly learns the feature weights with the aim of minimising the objective function that measures the mean leave-one-out regression or classification loss over the given training data set (Goldberger et al. 2005; Yang et al. 2012). LASSO minimises the residual sum of squares subject to the sum of the absolute value of the coefficients being less than a constant. LASSO includes a penalty term that constrains the size of the estimated coefficients to produce some coefficients that are exactly zero in order to trim the selected features for prediction (Tibshirani 1996, 2011). Sequential feature selection in its basic form involves minimisation of an objective function over all feasible feature subsets by adding or removing features from a candidate subset while evaluating the criteria (Liu and Motoda 2007; Stańczyk and Jain 2015).
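For reference, the constrained form of LASSO described above can be written as follows (Tibshirani 1996). The notation is introduced here for illustration and is not taken from this paper: N is the number of samples, p the number of candidate inputs, x ij the jth input of sample i, β 0 the intercept and t ≥ 0 the tuning constant. Decreasing t drives more of the coefficients β j to exactly zero, which is how the inputs are pruned.

$$\hat{\beta } = \mathop {\arg \min }\limits_{{\beta_{0} ,\beta }} \sum\limits_{i = 1}^{N} {\left( {y_{i} - \beta_{0} - \sum\limits_{j = 1}^{p} {x_{ij} \beta_{j} } } \right)^{2} } \quad {\text{subject to}}\quad \sum\limits_{j = 1}^{p} {\left| {\beta_{j} } \right|} \le t$$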

This paper develops an ensemble ML model based on SVM to predict dead oil, saturated and undersaturated viscosities. For each property, different functional forms were explored to determine its best correlating variables. The prediction results of the ensemble models are compared with the most commonly used correlations in the petroleum industry.

Literature review

A review of empirical correlations and ML techniques that have been developed for viscosity predictions is explored in this section.

Viscosity correlations

A brief review of the available correlations in the literature for the estimation of crude oil viscosities is presented in tabular forms. The adopted functional form(s), API range, origin of the data sets and reported statistical errors are included in the review.

Dead oil viscosity correlation

Correlation for μ od is usually developed with the API gravity (γ API) and T as the independent variables.

γ API is calculated from the specific gravity of the oil (γ o), which is the ratio of the oil density to that of water. The specific gravity used for API gravity is normally determined at 60 °F. It is thus given as:

$$\gamma_{API} = \frac{141.5}{{\gamma_{o} }} - 131.5$$

Correlations for μ od usually introduce large errors when applied to data sets which are different from the ones used to develop the original correlations. The difference in the results is related to the difference in the oil base (asphaltic, paraffinic or mixed base) (Labedi 1992).

Some other correlations have included additional correlating variables such as average temperature, critical temperature, Watson characterisation factor (K w), bubble-point pressure (P b) and bubble-point gas/oil ratio (R sb). Bergman and Sutton (2009) indicated that most of the correlations that use only γ API and T usually have large errors and are the least accurate compared to methods that use an additional correlating property. Alternative methods that could possibly give improved accuracy are EOS or correlations that use crude oil compositions, though compositions are not usually available. This motivates simple methods that utilise easily acquired properties (γ API and T).

Table 1 presents a concise review of some common correlations for μ od.

Gas-saturated viscosity correlations

Gas-saturated viscosity (μ ob) can be defined as the viscosity of the crude oil with dissolved gas, just above the μ od, up to the bubble-point pressure at the reservoir temperature. The dissolved gas in crude oils reduces the observed value of the μ od. Correlations for μ ob are usually developed as a function of μ od and gas oil ratio (R s) or P.

Some other forms of correlations based on different input variables have also evolved for μ ob. Table 2 presents some of these common correlations.

Undersaturated viscosity correlations

Beal (1946) was the first to develop a correlation for undersaturated μ o and noted that the crude oil viscosity in this region increases proportionally with the increase in pressure. He used 52 data points of crude oil from the USA to develop the correlation and reported an E a of 2.7% on the data set. Subsequently, different undersaturated viscosity correlations with different correlating variables have been presented in the literature. Table 3 presents some of these correlations.

Machine learning for viscosity predictions

Viscosity prediction has also benefitted from the machine learning (ML) modelling capability. ML techniques that have been used for viscosity modelling include radial basis function neural network (RBFNN), artificial neural network (ANN), fuzzy logic (FL), functional networks (FN), genetic algorithm (GA), SVM and group method of data handling (GMDH) (Table 4).

Overview of ensemble models

Ensemble ML is a combination of multiple base models of classifiers or regressors for classification and regression problems, respectively. Each base model covers a different part of the input space or the complete input space. Although there is no defined taxonomy for building the ensemble models, some successful approaches and methodologies have been widely adopted (Dietterich 2000; Zhou 2012; Bader-El-Den and Gaber 2012; Perry et al. 2015; Bader-El-Den et al. 2016).

After generating a set of base learners, the ensemble method will then be formed by combining the base models or a subset of them based on defined criteria or algorithm to form a generalised prediction model. Three main benefits of the combination can be attributed to statistical, computational and representational issues (Dietterich 2000; Zhou 2012).

-

Statistical Issue: there is always a large space of hypotheses for the base model to choose from. There is a chance that the base learning algorithm has not chosen the most efficient of these possible hypotheses. The combination approach tends to reduce the risk of choosing the wrong hypotheses for formulating the prediction models.

-

Computational Issue: ML algorithms usually involve searching for optimal parameters which may get stuck in local optima. Combination of different models reduces the risk of choosing a wrong local minimum.

-

Representational Issue: In many ML problems, the true unknown hypothesis cannot be truly modelled in the hypothesis space. However, combination of different hypotheses may be able to form a more accurate representative function that learns the problem.

The most common ways of combining base models in ensemble modelling are averaging and voting. Averaging is the most fundamental and common combination method for numeric output (i.e. regression problem), while voting is a common combination method for nominal output (i.e. classification problem). Averaging can either be simple or weighted.
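The two averaging rules can be illustrated with a minimal Python sketch. The function name `combine_predictions` and the toy numbers are assumptions made for this example only; they are not part of the paper's implementation.

```python
from typing import Optional, Sequence


def combine_predictions(
    predictions: Sequence[Sequence[float]],
    weights: Optional[Sequence[float]] = None,
) -> list:
    """Combine base-model predictions by simple or weighted averaging.

    predictions[i][j] is the prediction of base model i for sample j.
    """
    n_models = len(predictions)
    if n_models == 0:
        raise ValueError("at least one base model is required")
    if weights is None:
        # Simple averaging: every base model contributes equally.
        weights = [1.0] * n_models
    if len(weights) != n_models:
        raise ValueError("one weight per base model is required")
    total = float(sum(weights))
    n_samples = len(predictions[0])
    return [
        sum(w * model[j] for w, model in zip(weights, predictions)) / total
        for j in range(n_samples)
    ]


# Toy outputs of two base models for two samples (illustrative values only).
base_outputs = [[1.0, 2.0], [3.0, 4.0]]
simple = combine_predictions(base_outputs)                # [2.0, 3.0]
weighted = combine_predictions(base_outputs, [3.0, 1.0])  # [1.5, 2.5]
```

With weights of 3 and 1, the first base model dominates the combined output, which is the behaviour one would want when that model is known to be more accurate.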

There are two main ensemble paradigms: sequential ensemble methods and parallel ensemble methods (Zhou 2012). In sequential ensemble methods, the base learners are generated one after another, with boosting as a representative. In parallel ensemble methods, the base learners are generated independently of one another, with bagging as a representative.

Bagging (Breiman 1996) which is also known as bootstrap aggregation involves training multiple models with training sets of data randomly drawn with replacement from the base training data sets. The training data sets for the base models are called bootstraps. Hence, bagging involves training different models with different samples and usually predictions are obtained by averaging the results of the different base models for a regression problem.
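A minimal sketch of bagging for regression is given below, assuming a one-dimensional input and a least-squares straight line as the base learner. Both choices, and all function names, are illustrative assumptions; the paper's own base learners are SVR models.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Least-squares straight line; a deliberately simple base learner."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0.0:
        # Degenerate bootstrap (all x equal): fall back to a constant model.
        return lambda x: y_bar
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    intercept = y_bar - slope * x_bar
    return lambda x: intercept + slope * x


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train n_models base learners, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # A bootstrap: n row indices drawn with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Regression output: the simple average of the base-model outputs."""
    return mean(m(x) for m in models)


xs = [float(i) for i in range(10)]
ys = [2.0 * x + 1.0 for x in xs]  # noise-free toy data, not viscosity data
models = bagging_fit(xs, ys)
prediction = bagging_predict(models, 4.0)  # close to 2 * 4 + 1 = 9
```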

Boosting involves training and improving a weak learning algorithm into a strong one (Schapire 1990). In boosting, the training data set for each subsequent model increasingly focuses on instances wrongly predicted by the previous weaker model. ADABOOST (adaptive boosting algorithm) is one of the most used boosting algorithms which automatically adapts to the data given to it.

Proposed ensemble model

An ensemble model based on SVM regression has been developed. The steps for the algorithm will be given and discussed.

Ensemble support vector regression

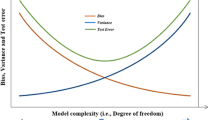

The version of SVM that is used for regression problems is known as support vector regression (SVR). SVM is a statistical machine learning method that generates input–output mapping functions from a set of training data. It uses the principle of structural risk minimisation, seeking to minimise the upper bound of the generalisation error rather than just minimising the training error. In a simple pattern recognition problem, SVM uses a linear separating hyperplane to create a classifier with a maximal margin. When the input cannot be linearly separated (e.g. complex classification problem or regression problem), SVM first nonlinearly transforms the input space into a higher-dimensional feature space. The transformation is achieved by using nonlinear mapping functions which are generally referred to as kernel functions. Typical kernel functions include RBF, Gaussian and polynomial functions. The steps for creating the SVR ensemble model are highlighted in Algorithm 1. Ensemble pruning is the determination and selection of the final base models that will form part of the ensemble model. Here, pruning has been performed using E a; similar performance is observed when root mean squared error (RMSE) is used for the pruning.

The stratification process of selecting the sample input data ensures that random rows are selected. The four main parameters that control each SVR model are C, k, λ and ε. “C”, the penalty factor, is the trade-off between achieving minimal training error and the complexity of the model. If it is too large, there is a high penalty for non-separable points which may result in overfitting. If it is too small, there may be underfitting (Alpaydin 2014). The options for the kernel, k, have been limited based on a preliminary experimentation on the data set. Based on preliminary investigation, λ assumes the value of ε in each iteration.
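As background on the roles of C and ε, the standard ε-insensitive SVR training problem is reproduced below. The paper does not write this problem out, so the notation is an assumption made here: w and b are the weight vector and bias, φ is the feature map induced by the kernel k, and ξ i, ξ i* are slack variables for the N training points. How λ enters the authors' implementation is not specified in the text and is not represented.

$$\mathop {\min }\limits_{{w,b,\xi ,\xi^{*} }} \;\frac{1}{2}\left\| w \right\|^{2} + C\sum\limits_{i = 1}^{N} {\left( {\xi_{i} + \xi_{i}^{*} } \right)}$$

$${\text{subject to}}\quad y_{i} - w^{T} \phi \left( {x_{i} } \right) - b \le \varepsilon + \xi_{i} ,\quad w^{T} \phi \left( {x_{i} } \right) + b - y_{i} \le \varepsilon + \xi_{i}^{*} ,\quad \xi_{i} ,\xi_{i}^{*} \ge 0$$

Residuals smaller than ε incur no penalty, while C scales the penalty on larger residuals relative to the flatness term, which is the trade-off between training error and model complexity described above.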

The developed ensemble SVR has n base models, which are selected from the simulated SVR models. The base SVR models are ranked by their E a values and the best-ranked ones form an ensemble SVR model which is henceforth referred to as Ensemble_SVR_APRE. For analysis and error sensitivity testing, RMSE is also used for pruning with the same algorithm to generate another model, which is called Ensemble_SVR_RMSE.

This innovative way of creating ensemble models will also give us the opportunity to compare the two error evaluation criteria, E a and RMSE, as there is no consensus on which of these two error evaluating criteria is the best (Chai and Draxler 2014).

Algorithm 1: Ensemble support vector machine regression

1. Select x data sets as 70% of the entire data sets (X) using stratification and the corresponding y from the output (Y)

2. Iterate for C = 1 to N

3. Iterate for kernel, k → {RBF, Gaussian, polynomial}

4. Iterate for \(\varepsilon \in \left\{ {10^{ - 3},10^{ - 4} ,10^{ - 5} ,10^{ - 6} ,10^{ - 7} } \right\}\)

5. Compute each SVR model F(C, k, ε)

6. Evaluate each SVR model using E a

7. Continue for the next ε

8. Continue for the next k

9. Continue until C = N

10. Give ranks to the SVR model based on E a

11. Choose the best n models based on their ranks to form the ensemble models based on E a ranking

12. Predict the testing target Y from the testing input X using the n base SVM models

13. Compute each ensemble output \(\frac{1}{n}\sum\nolimits_{i = 1}^{n} {\hat{Y}_{i} }\), where \(\hat{Y}_{i}\) is the predicted target by the ith SVR base model
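The control flow of Algorithm 1 (grid search, ranking, pruning and averaging) can be sketched in Python as follows. This is not the authors' code. The SVR training step is left to a user-supplied `fit` callable, and `toy_fit` is a stand-in that is not an SVR at all; it exists only so that the sketch runs. The sketch also assumes that E a in step 6 is evaluated on the training rows, which the algorithm listing does not state.

```python
from itertools import product
from statistics import mean


def aapre(actual, predicted):
    """Average absolute per cent relative error (E_a), in per cent."""
    return 100.0 * mean(abs((p - a) / a) for a, p in zip(actual, predicted))


def build_ensemble(fit, X, y, c_values, kernels, epsilons, n_best):
    """Steps 2-11: train one model per (C, k, eps), rank by E_a, keep n_best.

    `fit(X, y, C, kernel, epsilon)` is a user-supplied trainer returning a
    callable `model(X) -> list of predictions` (hypothetical interface).
    """
    scored = []
    for C, k, eps in product(c_values, kernels, epsilons):
        model = fit(X, y, C, k, eps)
        # Assumption: E_a is computed on the training rows.
        scored.append((aapre(y, model(X)), (C, k, eps), model))
    scored.sort(key=lambda item: item[0])  # lowest E_a ranks first
    return scored[:n_best]


def ensemble_predict(ensemble, X):
    """Steps 12-13: simple average of the n base-model predictions."""
    outputs = [model(X) for _, _, model in ensemble]
    return [mean(column) for column in zip(*outputs)]


def toy_fit(X, y, C, kernel, epsilon):
    """Stand-in trainer (NOT an SVR): a constant predictor whose error
    shrinks as C grows, so that the candidates differ in accuracy."""
    level = mean(y) + 1.0 / C
    return lambda rows: [level for _ in rows]


X_train = [[1.0], [2.0], [3.0]]
y_train = [2.0, 2.0, 2.0]
ensemble = build_ensemble(
    toy_fit, X_train, y_train,
    c_values=range(1, 6),
    kernels=["rbf", "gaussian", "polynomial"],
    epsilons=[10.0 ** -e for e in range(3, 8)],
    n_best=3,
)
y_hat = ensemble_predict(ensemble, [[4.0]])
```

Replacing `aapre` with an RMSE function in `build_ensemble` would give the RMSE-pruned variant of the same procedure.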

Implementation

This section focuses on data acquisition, feature extractions, simulation of the ensemble algorithm and statistical evaluation of the prediction results. To focus on the results of different functional forms for predicting oil viscosity, the ensemble SVR will only be compared with empirical correlations. Recently, the advantages of ensemble SVM compared to stand-alone SVM have been discussed (Oloso et al. 2016).

Data sets and input features selection

A total of 286 data points were available for the μ od and μ ob simulations. Of these, only about 250 data points were used because the remaining rows have missing values. For the μ oa simulation, 910 data points were used. A statistical summary of the data is presented in Appendix A. In each case, approximately 70% of the data was used for training the models and 30% for testing. A stratification process, which involves random selection of non-sequential rows, is used to divide the data into training and testing sets. Before the simulation exercise, some common feature extraction techniques were used to examine which inputs are likely to be most correlated with, and most influential on, each desired output. This could have reduced the dimension of the input matrix. However, the methods did not agree on a common set of inputs.
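A random 70/30 division of rows can be sketched as below. The function name, the seed and the placeholder rows are assumptions for illustration. Note that this is plain random sampling of row indices, matching the description of random non-sequential rows, rather than stratified sampling in the strict statistical sense.

```python
import random


def random_split(rows, train_fraction=0.7, seed=0):
    """Randomly partition rows into training and testing subsets."""
    rng = random.Random(seed)
    indices = list(range(len(rows)))
    rng.shuffle(indices)  # random, non-sequential row order
    n_train = round(train_fraction * len(rows))
    train_idx = sorted(indices[:n_train])
    test_idx = sorted(indices[n_train:])
    return [rows[i] for i in train_idx], [rows[i] for i in test_idx]


data = list(range(250))  # placeholder rows standing in for about 250 points
train, test = random_split(data)
```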

Experimental work

For each of the PVT properties considered in this paper, more than one functional form (that is, combination of correlating variables) has been used in the literature. Initially, some feature selection methods (such as NCA, LASSO and sequential feature selection) were investigated, but the listed techniques favoured different input variables. All the investigated functional forms have therefore been implemented for the ensemble SVM, which also allows a fair comparison with the empirical correlations.

- A. Investigated functional forms for μ od

$$\mu_{od} = f\left( {\gamma_{API} ,T} \right)$$

$$\mu_{od} = f\left( {\gamma_{API} ,T,R_{sb} } \right)$$

$$\mu_{od} = f\left( {\gamma_{API} ,T,P_{b} } \right)$$

$$\mu_{od} = f\left( {\gamma_{API} ,T,R_{sb} ,P_{b} } \right)$$

- B. Investigated functional forms for μ ob

$$\mu_{ob} = f\left( {\gamma_{g} ,R_{s} ,\gamma_{o} ,T} \right)$$

$$\mu_{ob} = f\left( {\gamma_{g} ,R_{s} ,\gamma_{API} ,T} \right)$$

$$\mu_{ob} = f\left( {\gamma_{API} ,\mu_{od} ,P_{b} } \right)$$

$$\mu_{ob} = f\left( {\mu_{od} ,R_{s} } \right)$$

- C. Investigated functional forms for μ oa

$$\mu_{oa} = f\left( {\mu_{ob} ,P_{b} ,P} \right)$$

$$\mu_{oa} = f\left( {\mu_{ob} ,\mu_{od} ,P_{b} ,P,\gamma_{API} } \right)$$

$$\mu_{oa} = f\left( {\mu_{ob} ,\mu_{od} ,P_{b} ,P} \right)$$
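In code, each functional form amounts to a choice of input columns. The sketch below encodes the eleven forms listed above as tuples of column labels and builds the input matrix for one of them. The labels (`api`, `Rsb` and so on) and the sample record are made-up placeholders, not identifiers or data from the paper.

```python
# Column labels are illustrative names, not identifiers from the paper.
FUNCTIONAL_FORMS = {
    "mu_od": [
        ("api", "T"),
        ("api", "T", "Rsb"),
        ("api", "T", "Pb"),
        ("api", "T", "Rsb", "Pb"),
    ],
    "mu_ob": [
        ("gamma_g", "Rs", "gamma_o", "T"),
        ("gamma_g", "Rs", "api", "T"),
        ("api", "mu_od", "Pb"),
        ("mu_od", "Rs"),
    ],
    "mu_oa": [
        ("mu_ob", "Pb", "P"),
        ("mu_ob", "mu_od", "Pb", "P", "api"),
        ("mu_ob", "mu_od", "Pb", "P"),
    ],
}


def select_inputs(records, form):
    """Build the input matrix for one functional form from dict records."""
    return [[record[name] for name in form] for record in records]


# A made-up record, for demonstration only.
sample = [{"api": 35.0, "T": 180.0, "Rsb": 600.0, "Pb": 2100.0}]
X = select_inputs(sample, FUNCTIONAL_FORMS["mu_od"][1])
```

Looping over `FUNCTIONAL_FORMS[target]` then lets the same training routine be run once per functional form.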

The proposed ensemble SVM model is simulated for all these functional forms and the results are compared with the available empirical correlations in the literature that utilise these functional forms.

During experimentation, it was noted that the results of the two ensemble SVR models, Ensemble_SVR_APRE and Ensemble_SVR_RMSE, are essentially the same. The ranks of the base models using RMSE and E a for ranking may not be the same, but the ordering of the samples is almost always the same. Hence, only the results of the Ensemble_SVR_APRE model are used and reported. Henceforth, this model will simply be referred to as ensemble SVR or ensemble SVM.

Three statistical error criteria are primarily used to evaluate the performance of the simulated ensemble SVR and the compared empirical correlations: RMSE, E a and maximum absolute error (E max). The best model is expected to give the lowest values for all three criteria, or for at least two of them. E max has been chosen as the third criterion to break a tie between two models when neither has the minimum of both RMSE and E a. It should also be noted that a model with a lower E max is likely to predict better across the data points than one with a higher value.
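The three criteria can be computed as in the following sketch. It assumes the usual definitions: RMSE in the units of the viscosity itself, and E a and E max as the mean and maximum of the absolute per cent relative errors, in line with the abbreviations list. The paper does not print the formulas, so these definitions, the function names and the toy numbers are assumptions.

```python
from math import sqrt


def rmse(actual, predicted):
    """Root mean squared error, in the units of the target."""
    squared = [(p - a) ** 2 for a, p in zip(actual, predicted)]
    return sqrt(sum(squared) / len(squared))


def abs_pct_errors(actual, predicted):
    """Absolute per cent relative error of each prediction."""
    return [100.0 * abs((p - a) / a) for a, p in zip(actual, predicted)]


def e_a(actual, predicted):
    """Average absolute per cent relative error (E_a)."""
    errors = abs_pct_errors(actual, predicted)
    return sum(errors) / len(errors)


def e_max(actual, predicted):
    """Maximum absolute per cent relative error (E_max)."""
    return max(abs_pct_errors(actual, predicted))


# Toy values for illustration only, not data from the paper.
measured = [1.0, 2.0, 4.0]
estimated = [1.1, 1.8, 4.0]
scores = (rmse(measured, estimated), e_a(measured, estimated), e_max(measured, estimated))
```

Because E a and E max divide by the measured value, they weight errors on low-viscosity samples more heavily than RMSE does, which is one reason the two rankings of base models need not coincide.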

Comparison with the previous ML studies for viscosity prediction

Commonly, an ML model for predicting oil viscosity assumes a particular functional form based on some empirical correlation. That is, the selected input variables in the ML model are similar to those of some correlation (Elsharkwy and Gharbi 2001; Omole et al. 2009). In contrast, this work investigates different functional forms for dead oil, saturated and undersaturated viscosities.

A novel approach was introduced by Khoukhi et al. (2011) to predict the entire viscosity curve by training the parameters of the curve and the bubble-point viscosity. However, the caveat to this method is its dependence on oil compositions, which cannot be determined easily in the field, limiting the potential adoption of such methods for industrial application. Also, other works on viscosity prediction using a stand-alone ML technique or hybrid systems have mainly adopted a single functional form (Elsharkwy and Gharbi 2001; Ghorbani et al. 2016; Hemmati-Sarapardeh et al. 2016). This paper aims to address the problem of local minima by using an ensemble model rather than a stand-alone SVM, and the problem of preferential adoption of a single functional form by using the different functional forms found in the literature for the prediction of oil viscosity.

Results and discussion

The simulation results for all the given functional forms for each of the three investigated PVT properties are presented. The developed ensemble SVR model clearly gives better performances than the compared empirical correlations in estimating the three viscosity variables.

Experimental results for μ od

The results for the ensemble SVR in modelling μ od using all the four stated functional forms are shown in Table 5. It is noticed that the functional form that gives the best result is f(γ API, T) with RMSE = 0.38784, E a = 10.31983 and E max = 29.1723. This result is followed by the functional form that incorporates R sb as the additional correlating variable. However, it is important to note that the additional variable has not essentially improved the simulation results.

Table 6 shows the performance of some common empirical correlations. Correlation of Naseri et al. (2005) gives the best results among these μ od correlations, followed by the correlation of Beal (1946). The additional correlating parameters in the correlation of Dindoruk and Christman (2004) have not improved its results compared to others.

Comparing the ensemble SVR model with the listed empirical correlations, the results of all the functional forms simulated by the ensemble SVR model are better than the results of all the empirical correlations in Table 6. Meanwhile, the same functional form f(γ API, T) gives the best result for both the ensemble model and the empirical correlation. It is noted that the correlation of Naseri et al. (2005) has lower RMSE than the ensemble simulation for f(γ API, T, R sb, P b), but the latter has both lower E a and E max. Hence, the ensemble SVR model with functional form f(γ API, T, R sb, P b) is better than the leading correlation method of Naseri et al. (2005). The results in Table 5 compared to Table 6 show that the ensemble SVR has better strength to model the uncertainties of μ od with overall best result from ensemble SVR with the functional form f(γ API, T). Figure 1 gives a graphical comparison of the ensemble SVR results with different functional forms for μ od prediction.

Experimental results for μ ob

Results of the ensemble SVR model for predicting μ ob based on the previously stated four functional forms are given in Table 7. Among all the investigated functional forms for μ ob, the best result is given by f(γ API, μ od, P b) with RMSE = 0.063275, E a = 7.036263 and E max = 23.35724. This is followed by the results of the functional form f(μ od, R s). From Table 7, poorer performances are displayed by the functional forms which include T.

Results of some empirical correlations for μ ob using the four functional forms are italicized in Table 8. The correlation of Chew and Connally (1959) which uses the functional form f(μ od, R s) gives the best performance among the empirical correlations for μ ob with RMSE = 0.090067, E a = 9.278021 and E max = 30.27401. The second best result among the empirical correlations is given by the correlation of Al-Khafaji et al. (1987) which also uses the functional form of f(μ od, R s).

Clearly, the results show that the ensemble SVR with the functional form f(γ API, μ od, P b) is the best in modelling the uncertainty in μ ob as it has given the lowest values of RMSE, E a and E max. This outperforms all the empirical correlations in Table 8. Figure 2 gives a graphical comparison of the ensemble SVR results with different functional forms for μ ob prediction.

Experimental results for μ oa

Ensemble SVR experimental results for predicting μ oa based on the considered three functional forms are shown in Table 9. The functional form f(μ ob, μ od, P b, P, γ API) has the best result with RMSE = 0.016043, E a = 1.189452 and E max = 7.945602. The second best performance is given by the functional form f(μ ob, μ od, P b, P).

Results of the investigated empirical correlations with different functional forms for modelling μ oa are shown in Table 10. The correlation of Labedi (1992) gives the best performance among the empirical correlations with RMSE = 0.022716, E a = 1.713268 and E max = 7.621104. It is noted that additional correlating variables in the μ oa modelling have improved the prediction results and that the functional form of the best ensemble SVR model is the same as that of the best empirical correlation.

As with the two previous viscosity variables, μ od and μ ob, the ensemble SVR again gives the best performance for μ oa prediction. The overall best performance is given by the ensemble SVR of functional form f(μ ob, μ od, P b, P, γ API), with the lowest values of RMSE, E a and E max among all the ensemble SVR models, and the lowest RMSE and E a among all methods. In fact, the results of the other functional forms, f(μ ob, P b, P) and f(μ ob, μ od, P b, P), in modelling μ oa are also better than all the empirical correlations since they have lower RMSE and E a. This again shows the consistency, reliability and good performance of the developed ensemble SVR in modelling crude oil viscosity. Figure 3 gives a graphical comparison of the ensemble SVR results with different functional forms for μ oa prediction.

Conclusion

The following conclusions can be drawn from this work.

(1) This paper has presented a novel ensemble SVR model which uses E a in ranking and building the final ensemble model. It was observed during experimentation that using RMSE for selecting the base models for the ensemble system also gives similar and consistent results.

(2) Different functional forms that are used for predicting μ od, μ ob and μ oa have been investigated.

(3) In all cases, the ensemble SVR model gives the best results in predicting μ od, μ ob and μ oa with the least statistical error values.

(4) For μ od modelling, the best result is given by ensemble SVR with functional form f(γ API, T). This is an indication that an additional correlating variable may not necessarily improve the performance of a model.

(5) For the μ ob prediction, the best functional form for the ensemble SVR simulation is f(γ API, μ od, P b). Among the investigated μ ob correlations, Chew and Connally (1959) gives the best performance and it is based on the functional form f(μ od, R s).

(6) For the μ oa modelling, ensemble SVR with respect to all three investigated functional forms, f(μ ob, μ od, P b, P, γ API), f(μ ob, P b, P) and f(μ ob, μ od, P b, P), gives better performance than all the compared empirical correlations. The overall best performance for μ oa modelling is given by the ensemble SVR with functional form f(μ ob, μ od, P b, P, γ API).

(7) It can be noted that the errors are very high for the μ od predictions from the empirical correlations. This has been noted in previous work (Bergman and Sutton 2009). These errors are significantly reduced in the ensemble SVR model.

(8) Finally, it can be concluded that the ensemble SVR has better ability to model the uncertainties in the prediction of dead, saturated and undersaturated oil viscosity.

Abbreviations

- APE: Average per cent error

- AAPRE/E a: Average absolute per cent error

- RMSE: Root mean squared error

- E max: Maximum absolute per cent error

- E min: Minimum absolute per cent error

- SD: Standard deviation of absolute per cent error

- P: Reservoir pressure, psia

- T: Reservoir temperature, °F

- P b: Bubble-point pressure, psia

- γ g: Dissolved gas relative density (air = 1)

- γ o: Oil gravity, stock tank oil relative density (water = 1)

- γ API: API gravity, °API

- R s: Solution gas/oil ratio, scf/STB

- R sb: Solution gas/oil ratio at bubble point, scf/STB

- μ o: Oil viscosity, cp

- μ od: Dead oil viscosity, cp

- μ ob: Saturated oil viscosity, cp

- μ oa: Undersaturated oil viscosity, cp

References

Ahmed T (2010) Reservoir Engineering Handbook. Gulf Professional Publishing, Boston

Al-Khafaji AH, Abdul-Majeed GH, Hassoon SF (1987) Viscosity correlation for dead, live and undersaturated crude oils. J Pet Res 6:1–16

Al-Marhoun MA, Nizamuddin S, Raheem AAA et al (2012) Prediction of crude oil viscosity curve using artificial intelligence techniques. J Pet Sci Eng 86:111–117

Almehaideb RA (1997) Improved PVT correlations for UAE crude oils. In: Middle east oil show and conference, SPE, Bahrain, 15–18 March 1997

Alomair O, Elsharkawy A, Alkandari H (2014) A viscosity prediction model for Kuwaiti heavy crude oils at elevated temperatures. J Pet Sci Eng 120:102–110

Alpaydin E (2014) Introduction to machine learning. MIT press, Cambridge

Ayoub MA, Raja DM, Al-Marhoun MA (2007) Evaluation of below bubble point viscosity correlations & construction of a new neural network model. In: Asia pacific oil and gas conference and exhibition, SPE, Jakarta, Indonesia, 30 Oct–1 Nov 2007

Bader-El-Den M, Gaber M (2012) Garf: towards self-optimised random forests. In: Neural information processing. Springer, pp 506–515

Bader-El-Den M, Teitei E, Adda M (2016) Hierarchical classification for dealing with the class imbalance problem. In: 2016 International joint conference on neural networks (IJCNN). IEEE, pp 3584–3591

Beal C (1946) The viscosity of air, water, natural gas, crude oil and its associated gases at oil field temperatures and pressures. Trans AIME 165:94–115

Beggs HD, Robinson JR (1975) Estimating the viscosity of crude oil systems. J Pet Technol 27:1140–1141

Bennison T (1998) Prediction of heavy oil viscosity. In: IBC heavy oil field development conference, London, 2–4 December 1998

Bergman DF, Sutton RP (2007) An update to viscosity correlations for gas-saturated crude oils. In: SPE annual technical conference and exhibition, SPE, Anaheim, California, U.S.A, 11–14 November 2007

Bergman DF, Sutton RP (2009) A consistent and accurate dead-oil-viscosity method. SPE Reserv Eval Eng 12:815–840

Boukadi FH, Bemani AS, Hashmi A (2002) PVT empirical models for saturated Omani crude oils. Pet Sci Technol 20:89–100

Breiman L (1996) Bagging predictors. Mach Learn 24:123–140

Carbonell J, Michalski R, Mitchell T (1983) An overview of machine learning. In: Michalski RS, Carbonell JG, Mitchell TM (eds) Machine learning. Springer, Berlin, pp 3–23

Chai T, Draxler RR (2014) Root mean square error (RMSE) or mean absolute error (MAE)?–Arguments against avoiding RMSE in the literature. Geosci Model Dev 7:1247–1250

Chew J, Connally CA (1959) A viscosity correlation for gas-saturated crude oils. Trans AIME 216:23–25

De Ghetto G, Villa M (1994) Reliability analysis on PVT correlations. In: European petroleum conference, SPE, London, 25–27 October 1994

De Ghetto G, Paone F, Villa M (1995) Pressure–volume–temperature correlations for heavy and extra heavy oils. In: SPE international heavy oil symposium, SPE, Calgary, Alberta, Canada, 19–21 June 1995

Dietterich TG (2000) Ensemble methods in machine learning. In: Kittler J, Roli F (eds) Multiple classifier systems. Springer, Berlin, pp 1–15

Dindoruk B, Christman PG (2004) PVT properties and viscosity correlations for Gulf of Mexico oils. SPE Reserv Eval Eng 7:427–437

Egbogah EO, Ng JT (1990) An improved temperature–viscosity correlation for crude oil systems. J Pet Sci Eng 4:197–200

El-hoshoudy AN, Farag AB, Ali OIM et al (2013) New correlations for prediction of viscosity and density of Egyptian oil reservoirs. Fuel 112:277–282

Elsharkawy AM (1998) Modeling the properties of crude oil and gas systems using RBF network. In: SPE Asia pacific oil and gas conference and exhibition, SPE, Perth, Australia, 12–14 October 1998

Elsharkawy AM, Alikhan AA (1999) Models for predicting the viscosity of middle east crude oils. Fuel 78:891–903

Elsharkawy AM, Gharbi RBC (2001) Comparing classical and neural regression techniques in modeling crude oil viscosity. Adv Eng Softw 32:215–224

Elsharkawy AM, Hassan SA, Hashim YSK, Fahim MA (2003) New compositional models for calculating the viscosity of crude oils. Ind Eng Chem Res 42:4132–4142

Ghorbani B, Ziabasharhagh M, Amidpour M (2014) A hybrid artificial neural network and genetic algorithm for predicting viscosity of Iranian crude oils. J Nat Gas Sci Eng 18:312–323

Ghorbani B, Hamedi M, Shirmohammadi R et al (2016) A novel multi-hybrid model for estimating optimal viscosity correlations of Iranian crude oil. J Pet Sci Eng 142:68–76

Glasø Ø (1980) Generalized pressure–volume–temperature correlations. J Pet Technol 32(5):785–795. doi:10.2118/8016-PA

Goldberger J, Hinton GE, Roweis ST, Salakhutdinov RR (2005) Neighbourhood components analysis. In: Saul LK, Weiss Y, Bottou L (eds) Advances in neural information processing systems 17. MIT Press, Cambridge, pp 513–520

Hajizadeh Y (2007) Intelligent prediction of reservoir fluid viscosity. In: Production and operations symposium, SPE, Oklahoma City, Oklahoma, U.S.A, 31 Mar–3 Apr 2007

Hanafy HH, Macary SM, ElNady YM et al (1997) A new approach for predicting the crude oil properties. In: SPE production operations symposium, SPE, Oklahoma City, Oklahoma, 9–11 March 1997

Hemmati-Sarapardeh A, Aminshahidy B, Pajouhandeh A et al (2016) A soft computing approach for the determination of crude oil viscosity: light and intermediate crude oil systems. J Taiwan Inst Chem Eng 59:1–10

Hossain MS, Sarica C, Zhang H-Q et al (2005) Assessment and development of heavy oil viscosity correlations. In: SPE international thermal operations and heavy oil symposium, SPE, Calgary, Alberta, Canada, 1–3 November 2005

Kartoatmodjo T, Schmidt Z (1991) New correlations for crude oil physical properties. SPE paper 23556

Kartoatmodjo T, Schmidt Z (1994) Large data bank improves crude physical property correlations. Oil Gas J 92(27):27

Khamehchi E, Rashidi F, Rasouli H, Ebrahimian A (2009) Novel empirical correlations for estimation of bubble point pressure, saturated viscosity and gas solubility of crude oils. Pet Sci 6:86–90

Khan SA, Al-Marhoun MA, Duffuaa SO, Abu-Khamsin SA (1987) Viscosity correlations for Saudi Arabian crude oils. In: Middle east oil show, SPE, Bahrain, 7–10 March 1987

Khoukhi A, Oloso M, Elshafei M et al (2011) Support vector regression and functional networks for viscosity and gas/oil ratio curves estimation. Int J Comput Intell Appl 10:269–293. doi:10.1142/S1469026811003100

Labedi R (1992) Improved correlations for predicting the viscosity of light crudes. J Pet Sci Eng 8:221–234

Liu H, Motoda H (2007) Computational methods of feature selection. CRC Press, Boca Raton

McCain WD Jr (1991) Reservoir-fluid property correlations: state of the art. SPE Reserv Eng 6:266–272

Naseri A, Nikazar M, Dehghani SAM (2005) A correlation approach for prediction of crude oil viscosities. J Pet Sci Eng 47:163–174

Ng JTH, Egbogah EO (1983) An improved temperature–viscosity correlation for crude oil systems. In: Annual technical meeting. Petroleum Society of Canada

Oloso MA, Khoukhi A, Abdulraheem A, Elshafei M (2009) Prediction of crude oil viscosity and gas/oil ratio curves using recent advances to neural networks. In: SPE/EAGE reservoir characterization and simulation conference, SPE, Abu Dhabi, UAE, 19–21 October 2009

Oloso MA, Hassan MG, Buick J, Bader-El-Den M (2016) Oil PVT characterisation using ensemble systems. In: 2016 International conference on machine learning and cybernetics (ICMLC). IEEE, pp 61–68

Omole O, Falode OA, Deng AD (2009) Prediction of Nigerian crude oil viscosity using artificial neural network. Pet Coal 151:181–188

Perry T, Bader-El-Den M, Cooper S (2015) Imbalanced classification using genetically optimized cost sensitive classifiers. In: 2015 IEEE congress on evolutionary computation (CEC). IEEE, pp 680–687

Petrosky GE Jr, Farshad FF (1995) Viscosity correlations for Gulf of Mexico crude oils. In: SPE production operations symposium, SPE, Oklahoma City, Oklahoma, 2–4 April 1995

Petrosky GE Jr, Farshad F (1998) Pressure–volume–temperature correlations for Gulf of Mexico crude oils. SPE Reserv Eval Eng 1:416–420

Schapire RE (1990) The strength of weak learnability. Mach Learn 5:197–227

Stańczyk U, Jain LC (2015) Feature selection for data and pattern recognition. Springer, Berlin

Standing MB (1947) A pressure–volume–temperature correlation for mixtures of California oils and gases. In: Drilling and production practice, American Petroleum Institute, New York

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Ser B 58:267–288

Tibshirani R (2011) Regression shrinkage and selection via the lasso: a retrospective. J R Stat Soc Ser B (Stat Methodol) 73:273–282

Twu CH (1985) Internally consistent correlation for predicting liquid viscosities of petroleum fractions. Ind Eng Chem Process Des Dev 24:1287–1293

Vazquez M, Beggs HD (1980) Correlations for fluid physical property prediction. J Pet Technol 32:968–970

Yang W, Wang K, Zuo W (2012) Neighborhood component feature selection for high-dimensional data. JCP 7:161–168

Zhou Z-H (2012) Ensemble methods: foundations and algorithms. CRC Press, Boca Raton

Acknowledgement

Oloso Munirudeen thanks the Petroleum Technology Development Fund, Nigeria, for sponsoring his PhD research at the University of Portsmouth. The authors thank GeoMark Research for supplying part of the PVT data used for this research, and the anonymous referees for their helpful comments.

Appendices

Appendix 1

Statistical descriptions of the data sets used for this study are presented in Table 11.

Appendix 2

The PVT correlations evaluated in this study are given below.

Dead oil viscosity

Beal (1946)

where

Beggs and Robinson (1975)

where

Glasø (1980)

where \(a_{1} = 54.5680543\), \(a_{2} = - 7.1795304\), \(a_{3} = - 36.447\) and \(a_{4} = 4.478879\)

Kartoatmodjo and Schmidt (1991)

where

Labedi (1992)

where

Petrosky Jr and Farshad (1995)

where

Elsharkawy and Alikhan (1999)

where

Dindoruk and Christman (2004)

where

| Coefficient | Value |
|---|---|
| \(a_{1}\) | 14.505357625 |
| \(a_{2}\) | −44.868655416 |
| \(a_{3}\) | 9.36579e+09 |
| \(a_{4}\) | −4.194017808 |
| \(a_{5}\) | −3.1461171e−09 |
| \(a_{6}\) | 1.517652716 |
| \(a_{7}\) | 0.010433654 |
| \(a_{8}\) | −0.000776880 |

Naseri et al. (2005)

where

Saturated viscosity

Chew and Connally (1959)

where

Beggs and Robinson (1975)

where

Al-Khafaji et al. (1987)

Khan et al. (1987)

where

Labedi (1992)

where

Almehaideb (1997)

Elsharkawy and Alikhan (1999)

where

Dindoruk and Christman (2004)

where

| Coefficient | Value |
|---|---|
| \(a_{1}\) | 1 |
| \(a_{2}\) | 4.740729e−04 |
| \(a_{3}\) | −1.023451e−02 |
| \(a_{4}\) | 6.600358e−01 |
| \(a_{5}\) | 1.075080e−03 |
| \(a_{6}\) | 1 |
| \(a_{7}\) | −2.191172e−05 |
| \(a_{8}\) | −1.660981e−01 |
| \(a_{9}\) | 4.233179e−01 |
| \(a_{10}\) | −2.273945e−04 |

Undersaturated viscosity

Beal (1946)

Vazquez and Beggs (1980)

where

Labedi (1992)

where

Elsharkawy and Alikhan (1999)
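The equations for the correlations listed in this appendix are not reproduced in this copy. As an illustration only of how a dead oil, a saturated and an undersaturated correlation chain together, the sketch below uses the commonly quoted textbook forms of Beggs and Robinson (1975) and Vazquez and Beggs (1980). The coefficients, the units (temperature in °F, \(R_{s}\) in scf/STB, pressure in psia) and the function names are assumptions taken from the general literature, not from this paper, and should be checked against the original publications before use.

```python
import math


def dead_oil_viscosity_br(api, temp_f):
    """Dead oil viscosity (cp), commonly quoted Beggs-Robinson form (assumed)."""
    z = 3.0324 - 0.02023 * api          # API-gravity term
    x = 10.0 ** z * temp_f ** -1.163    # temperature in deg F (assumed unit)
    return 10.0 ** x - 1.0


def saturated_viscosity_br(mu_od, rs):
    """Saturated oil viscosity (cp) from dead oil viscosity and solution GOR."""
    a = 10.715 * (rs + 100.0) ** -0.515  # rs in scf/STB (assumed unit)
    b = 5.44 * (rs + 150.0) ** -0.338
    return a * mu_od ** b


def undersaturated_viscosity_vb(mu_ob, p, p_b):
    """Undersaturated viscosity (cp), commonly quoted Vazquez-Beggs form (assumed)."""
    # Pressure-dependent exponent; p and p_b in psia (assumed unit).
    m = 2.6 * p ** 1.187 * math.exp(-11.513 - 8.98e-5 * p)
    return mu_ob * (p / p_b) ** m


# Hypothetical inputs chosen only to show the call order.
mu_od = dead_oil_viscosity_br(api=30.0, temp_f=200.0)
mu_ob = saturated_viscosity_br(mu_od, rs=500.0)
mu_oa = undersaturated_viscosity_vb(mu_ob, p=4000.0, p_b=2500.0)
print(f"mu_od={mu_od:.3f} cp, mu_ob={mu_ob:.3f} cp, mu_oa={mu_oa:.3f} cp")
```

Each stage takes the previous viscosity as an input, which is why an error in the dead oil estimate propagates into the saturated and undersaturated values.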

Appendix 3

Statistical measures for the performance analysis

Average per cent relative error

where

Average absolute per cent relative error

Maximum absolute per cent relative error

Standard deviation

where

Root mean squared error
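The formulas for these measures are not reproduced in this copy. The sketch below computes the five quantities using their usual textbook definitions, which may differ in detail from those used in the paper. It assumes that the relative error is taken as predicted minus measured, divided by measured, and that the standard deviation uses an \(n - 1\) divisor. The function name and the sample values are hypothetical.

```python
import math


def error_statistics(measured, predicted):
    """Return the five error measures for paired measured/predicted values."""
    if len(measured) != len(predicted) or len(measured) < 2:
        raise ValueError("need two or more paired values")
    n = len(measured)

    # Per cent relative error of each point (assumed sign: predicted - measured).
    rel = [100.0 * (p - m) / m for m, p in zip(measured, predicted)]

    apre = sum(rel) / n                       # average per cent relative error
    aapre = sum(abs(e) for e in rel) / n      # average absolute per cent relative error
    max_ape = max(abs(e) for e in rel)        # maximum absolute per cent relative error

    # Standard deviation of the relative errors about their mean (assumed n - 1 divisor).
    sd = math.sqrt(sum((e - apre) ** 2 for e in rel) / (n - 1))

    # Root mean squared error, in the same units as the viscosity itself.
    rmse = math.sqrt(sum((p - m) ** 2 for m, p in zip(measured, predicted)) / n)

    return {"APRE": apre, "AAPRE": aapre, "MaxAPE": max_ape, "SD": sd, "RMSE": rmse}


# Hypothetical viscosities in cp, for illustration only.
stats = error_statistics([1.0, 2.0, 4.0], [1.1, 1.8, 4.0])
for name, value in stats.items():
    print(f"{name}: {value:.4f}")
```

A signed average near zero alongside a large absolute average indicates errors that cancel, which is why the two are usually reported together.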

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Oloso, M.A., Hassan, M.G., Bader-El-Den, M.B. et al. Ensemble SVM for characterisation of crude oil viscosity. J Petrol Explor Prod Technol 8, 531–546 (2018). https://doi.org/10.1007/s13202-017-0355-x

DOI: https://doi.org/10.1007/s13202-017-0355-x