Abstract

Multi-component maintenance optimization is a well-studied area for age-based failure models but in contrast, incorporation of condition-based maintenance (CBM) is still an open area of research. Taking advantage of condition monitoring information for updating components’ health conditions demands a dynamic short-term approach when grouping multiple activities subject to CBM policy. Degradation models are commonly utilized in CBM for predicting the future condition of a given component to decide appropriate maintenance actions where inherent uncertainties exist in the degradation processes. There are a limited number of works in literature that account for degradation uncertainties where maintenance cost is a function of such uncertainty. This paper aims to develop a maintenance decision support for a multi-component system by incorporating CBM while considering the degradation uncertainties. In this paper, a two-stage stochastic programming is proposed to address such an issue and the problem is formulated for situations where maintenance opportunities are limited due to practical constraints (e.g., remote offshore maintenance operations of wind farms, unmanned platforms in oil and gas industries, etc.). The concept of marginal cost is used in developing the equation of optimality. This is a combinatorial problem and becomes intractable when the number of components is large therefore a heuristic is proposed to reduce the problem size which reduces the required computational time substantially. It is shown that significant cost savings are possible, especially, when the downtime cost and common setup cost are significant. A numerical example is provided with a system of six components achieving above \(10\%\) cost reduction when the degradation uncertainties are taken into account.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Motivation

Ongoing development of sophisticated data-collection, processing, storage, and communication technologies (e.g. Wireless Sensor Networks (WSN), cloud computing, infrared thermography, advanced signal processing techniques, Internet of Things (IoT), Big Data analytics, augmented reality, etc.) are building the pave-way for more automated on-site data collection from the critical assets across different industries (Ali et al. 2020; Alaswad and Xiang 2017; Daily and Peterson 2017; Paolanti et al. 2018; Cachada et al. 2018; Spendla et al. 2017; Hashemian 2011). From the maintenance perspective, such development is very inspiring for the transition from traditional age-based maintenance to condition-based maintenance (CBM) where the true health condition of assets in a given environmental and operational setting is of interest instead of general failure histories of similar assets. The fundamental aim is to ensure timely maintenance actions to reduce the waste of an asset’s remaining useful life (RUL) while avoiding a costly failure. However, RUL is almost always stochastic in nature due to the underlying uncertainties of the degradation process and should be incorporated into a maintenance decision system to create more robust maintenance decision models.

It is often economically beneficial if multiple assets are maintained together (sharing a common setup cost, for instance). As a practical example, maintenance of wind turbines in a wind farm requires vessels carrying a maintenance crew and essential equipment such as spare parts (Seyr and Muskulus 2019), large lifting or transport equipment such as helicopters, jack-up equipment, crane vessels, etc. where maintenance is often not easily accessible and the rental and operating costs of these pieces of equipment are substantially high (Wang et al. 2021). Each visit consists of a substantial setup cost that may be incurred from the transportation cost of vessels, finding the right crews for the jobs, ordering of spare parts, etc., and Lua et al. (2018) showed a significant cost reduction in maintenance cost applying an opportunistic grouping approach compared to the schedule-based maintenance policy. Similar application areas are foreseeable in the development of unmanned and minimum manned platforms in the North Sea such as the Oseberg H from Equinor in the Norwegian Continental Shelf (NCS). Such platforms are built on the concept of minimum visit policy with an ambition to visit the platforms only once or twice a year and thus both CBM and multi-component maintenance become relevant (Tan et al. 2020). Costs of these visits are often very high for remote operations and Nachimuthu et al. (2019) reports that a corrective maintenance trip at an offshore wind farm can be expected to cost \(\$70,000-\$130,000\). Therefore, each avoidable visit has the potential for significant maintenance cost savings.

1.2 Overview of multi-component maintenance

Multi-component maintenance models are concerned with optimal maintenance policies for a system consisting of several units of machines or many pieces of equipment, which may or may not depend on each other (Cho and Parlar 1991). Possible types of dependencies are categorized mostly into economic, stochastic, and structural dependency (Thomas 1986). In brief, economic dependency implies that the cost of individual maintenance of each component is different than when they are jointly executed. This dependency can be both negative (e.g., manpower restriction, breaking redundancy structure to cause downtime) and positive (e.g., economies of scale) (Nicolai and Dekker 2008). Stochastic dependency is also known as failure interaction when one component’s failure has an impact on the lifetime distribution of another component’s failure or is subjected to common cause failure (Van Do et al. 2013). Structural dependence implies that two or more components are structured in a way that, repairing one component would disturb the working status of other components (Dao and Zuo 2017). In addition, Keizer et al. (2017) included resource dependency as an additional type of dependence that applies when the set of spares or tools are shared by multiple components and/or the number of maintenance workers is limited.

Grouping of multiple maintenance tasks has been investigated in depth for aging components measured based on the component failure history. The basic grouping optimization is based on static grouping where the groups of components are fixed and always executed throughout the entire planning horizon. However fixed grouping approach is unable to update any strategy when there is new information available e.g., changes in failure rate estimates, unexpected advancement, or postponement of the next planned preventive maintenance, etc. In such context, a more suitable maintenance model is known as the dynamic grouping based on the rolling horizon method where planning rules can be updated according to short-term information (Wildeman et al. 1997).

1.3 Contribution of this paper

Consider a situation where maintenance opportunities are limited by practical constraints (e.g., remote location, weather conditions, etc.). In addition, tasks often require prior preparation such as a long lead time for spare parts, a repairman with specific skills, etc. In such scenarios, when a maintenance team is already dispatched for executing the tasks and they reveal a need for maintenance of a certain component that they are not prepared for may result in huge unplanned costs. We propose to include an insurance under which management can be prepared for the maintenance and later decide whether to execute the task depending on the actual health state of the component. In this paper, under the scenario mentioned above, stochastic programming (SP) is proposed for group maintenance, capable of handling associated uncertainties to help managerial decision-making. We apply the marginal cost (introduced by (Berg 1980)) concept to develop the objective function. The additional cost of deferring a preventive replacement an additional time unit is expressed by marginal cost (Dekker and Roelvink 1995). As per this concept, a replacement is premature if the marginal cost of a component is below the minimum average cost and risky (e.g. failure) if it exceeds. The scope of this paper has been limited to positive economic dependencies and the context of the problem is generalized from the remote operations in oil and gas industries and windfarm alike. Offshore maintenance has accessibility constraints and is often disturbed by the environmental conditions (Cibulka et al. 2012) and degradation of machinery may differ as Palin-Luc et al. (2010) demonstrates that fatigue life can be reduced by more than 70% when exposed to extreme corrosion and sea-water flow. On the other hand, Tyapin et al. (2011) demonstrated in an example that it is possible to have four times of expected mean duration of the same maintenance performed compared to perfect weather conditions. Such factors are very relevant to the concept of setup costs and demand more efficient maintenance strategies for reducing operational costs. The main contributions of this paper are as follows:

-

This paper addresses both CBM and the grouping of multiple components based on current condition information and future prediction uncertainties.

-

From the modeling perspective, SP is proposed in this paper which would allow decision-makers to recourse actions to minimize the losses against unexpected scenarios.

-

In this paper, degradation uncertainties are more explicitly considered, often implicit or absent from most of the studies related to multi-component maintenance optimization models.

1.4 Structure of the paper

The second section provides a literature review focused on multi-component maintenance models applying CBM policies and economic dependencies. Section 3 provides the problem formulation and associated modeling assumptions. Section 4 describes the methodology used in this paper and develops the required equations. Section 5 explains our proposed heuristic. Section 6 provides a numerical example to demonstrate the application of the proposed approach. Sections 7 and 8 discuss this paper’s merit, limitations, interpretations, and future applications.

2 Literature rview

The full scope of multi-component maintenance is extremely broad, and a detailed discussion is beyond this paper’s scope. A general classification is shown in Fig. 1 based on Kobbacy et al. (2008). Dependency classification has already been introduced in the previous section. Stationary models generally assume an infinite planning horizon where maintenance rules do not change over time. In these models, short-term information such as component degradation, unexpected opportunities, etc. can not be incorporated contrary to the dynamic models. Among the optimization method used for multi-component maintenance optimization models, exact methods emphasize on finding the real optimal solution which is often computationally too expensive and therefore impractical for large and complex problems. Heuristics in such cases are often sought to find a near-optimum solution. Another approach is to find the optimum maintenance policies for a given planning problem. The rest of the litterateur review will be mostly focused on the economic dependence and policies concerning CBM. In terms of the planning aspect and optimization method, this paper is relevant to dynamic grouping models and heuristics respectively.

General classification of Multi-component maintenance models adopted from Kobbacy et al. (2008)

There are several review papers in the area of maintenance of the multi-component systems such as Nowakowski and Werbińka (2009), Dekker et al. (1997), Nicolai and Dekker (2008), however, Wang and Chen (2016) focus on the CBM context that was lacking in the previous reviews. The multi-component maintenance policies considering economic dependencies are summarized in two categories as in Fig. 2 by (Wang and Chen 2016) by following classifications from (Wang 2002) and (Nicolai and Dekker 2008). As the name suggests, opportunistic maintenance is dependent on the arrival of planned (e.g., scheduled shutdown, maintenance, etc.) or unplanned (e.g., corrective maintenance requirement of one component may create an opportunity for preventive maintenance of another component) opportunities. Group maintenance, on the other hand, does not rely on the occurrence of an opportunity and allows more flexibility in finding the optimal maintenance group at each maintenance time point (Wang and Chen 2016).

In failure opportunity policy, it is assumed that hard failures arise unexpectedly which creates an opportunity to check other component status and derive maintenance decisions accordingly. In the delay corrective maintenance policy, opportunities are sought to delay corrective maintenance to the point to perform the next preventive maintenance on other components. This policy is more appropriate for systems with redundancy and soft failures that do not cause major downtime. In this area, (Nguyen et al. 2014) presented a predictive CBM strategy for multi-component systems considering both economic and structural dependencies. They categorized failures as critical and non-critical where the latter can be postponed until a critical failure or the next preventive maintenance opportunity. In the opportunistic threshold policy, preventive maintenance thresholds are established and at each inspection point if at least one component requires a PM, then other components are also checked for opportunities for PM. Opportunistic maintenance policies offer advantages in terms of comprehensiveness, computational efficiency, etc. but disturb the planned feature of PM which may potentially cause unexpected production demand or spare parts shortage (Wang and Chen 2016).

There are two general methods of grouping- static and dynamic. In the static grouping method, the system is assumed to operate in a stable way infinitely. Thus, one or a set of maintenance rules can be generated that do not change over time. In contrast, dynamic grouping can incorporate short-term information such as changes in operating conditions, component failure rates, etc. In the CBM context for the multicomponent systems, any established group of maintenance tasks is subject to change with the availability of new information and thus dynamic grouping policy is more relevant (Wang and Chen 2016). The earliest work, to the best of the authors’ knowledge, is found to be the dynamic grouping based on rolling horizon, proposed by Wildeman et al. (1997). Several theorems are proved in Wildeman et al. (1997) that substantially reduce the number of groups to build an optimum grouping structure. They propose adaptive planning of a long-term tentative plan when new information becomes available. The failure model of this work is based on component age rather than their actual health condition.

Bouvard et al. (2011) and Van Horenbeek and Pintelon (2013) incorporated CBM for multi-component system on the basis of Wildeman et al. (1997) and the work of Bouvard et al. (2011) is among the earliest works of incorporating CBM and grouping decisions, to the best of the authors’ knowledge. Here the early maintenance actions are penalized for reducing the component’s RUL while late maintenance is penalized for increasing the component’s failure probability. They applied the rolling horizon approach to dynamically group and optimize maintenance operations for commercial heavy vehicles. They inspect the components regularly and at each inspection point, the failure probability function is calculated with measured current degradation information which is the basis for the adaptive scheduling of upcoming maintenance operations. The components’ degradation is modeled with a homogenous gamma process assuming a known shape and scale parameters. Van Horenbeek and Pintelon (2013) propose dynamic Predictive Maintenance (PdM) policy on the basis of both (Wildeman et al. 1997) and Bouvard et al. (2011) and extend them with different levels and combinations of dependencies between the components. In this work, the degradation model is modeled by a stationary gamma process and the random threshold variable is modeled by a Weibull probability distribution. Their approach closely resembles (Bouvard et al. 2011) and the main differences are associated with the additional consideration of different factors. For instance, Van Horenbeek and Pintelon (2013) additionally considered stochastic and structural dependence, imperfect maintenance, non-zero maintenance downtime, random failure threshold, etc. Although these considerations should make the algorithm more complex, however, the underlying modeling approach did not differ a lot. Similar result as Bouvard et al. (2011) is reported with some additional findings. From their result, it can be realized that dynamic and adaptive scheduling of maintenance tasks based on component conditions increases the utilization of components thus increasing the lifetime.

On the other hand, Keizer et al. (2016) propose clustering of maintenance tasks for k-out-of-n system with economic dependency and with redundancy. Unlike Bouvard et al. (2011) and Van Horenbeek and Pintelon (2013), they did not follow any predetermined strategy structure. In this approach, component states are discretized, and the optimal maintenance group is searched for all possible combinations of component states. Although these types of policies seem to be more applicable and provide more cost-effective solutions but can become computationally expensive for more than three components. Furthermore, the requirement of discrete state-space makes it challenging to utilize continuous degradation processes such as the gamma process to model degradation. (Keizer et al. 2016) used Poisson distribution for deterioration increment in their original model to guarantee a discrete state space but also discussed the continuous degradation with exponential deterioration increments briefly.

Among the other works that combine both multi-component systems and CBM, Tian and Liao (2011) propose a proportional hazard-based CBM policy where components are economically dependent. A numerical algorithm for cost evaluation is proposed and exemplified with real-world condition monitoring data. Do et al. (2015) propose a dynamic maintenance decision rule for components connected in series while considering availability and limited repairmen constraints. Genetic algorithm and MULTIFIT are used as optimization algorithms. When a component fails, Zhou et al. (2015) uses the opportunity to preventively maintain other components. Failures are simulated using Monte Carlo simulation to calculate the cumulative maintenance cost of the system. Rasmekomen and Parlikad (2016) use simulated annealing to demonstrate that considering stochastic dependency leads to a positive impact on the CBM policy and exemplified with an industrial cold box from a petrochemical plant. Dynamic maintenance grouping is combined with routing problems for geographically dispersed production systems by Nguyen et al. (2019). They use the Local Search Genetic Algorithm (LSGA) and Branch and Bound (BAB) method for finding the optimal group and route. Shi et al. (2020) use system reliability requirement as the criteria to develop a CBM decision framework using a rolling-horizon approach. The Bayesian method is used to update the posterior distributions of the failure model parameters. A dynamic opportunistic maintenance approach is developed by Vu et al. (2020) for different types of redundant systems. Oakley et al. (2022) consider both economic and stochastic dependency to optimize replacement decisions at maintenance opportunities. Their policy uses a utility/reward function and minimizes the overall cost by minimizing the total long-term penalty. These articles mostly focus on one or more of the dependencies and use different approaches for grouping, however, associated uncertainties in decision-making are not explicitly focused.

Finally, CBM-based multi-component maintenance models are rarely supported by case studies with real-life data mainly due to the lack of it. Therefore, the accurate estimation of model parameters is often hard to achieve which contributes to the uncertainty in the estimation of the actual health condition of the component (de Jonge and Scarf 2020). In recent years, industrial data are becoming more available due to the ongoing development of sensor technologies and condition monitoring systems (Choi et al. 2018).

3 Problem formulation and assumptions

There are four basic sources of difficulties in decision making that make decisions hard- complexity, inherent uncertainty, interests in achieving multiple objectives, and different influencing perspectives of multiple decision-makers (Clemen and Reilly 2013). This paper mainly reflects on the inherent uncertainty and attempts to develop a basic structure for decision analysis to help planners taking better maintenance decisions utilizing the condition monitoring information of an asset.

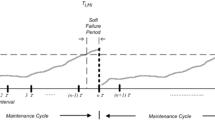

Consider several components degrading continuously following some stochastic processes. At the current time, \((t_0)\), the health condition of a component is assumed to be perfectly known and future conditions can be predicted with the knowledge of the failure threshold. Maintenance of these components can be executed in some periodic pre-scheduled points of time denoted as \(\tau _1, \tau _2,...\) as depicted in Fig. 3. This is justified for the context when the maintenance operations require visiting the site and are often associated with some setup costs and require specific resources such as spare parts that require ordering beforehand and arranging qualified crews for the task (Keizer et al. 2017). Each maintenance requires prior preparation and therefore immediate maintenance is not possible without prior planning. Preparation times are assumed to be \(\le \Delta \tau \ (= \tau _{n+1}-\tau _n)\). It implies that this time interval is long enough to have prepared for the task and any spare parts can be made available within \(\le \Delta \tau\). There is a setup cost whenever a maintenance opportunity is utilized, and multiple maintenances can share a single setup cost when conducted in the same window and the planning horizon is finite with a length of T time unit.

Now assume that, based on the current health information at \(t_0\) the optimum maintenance plan has been found that recommends maintenance of component number 1 and 4 at the opportunities \(\tau _1\) and \(\tau _3\) respectively while components 2 and 3 together at \(\tau _2\). The First Hitting Times (FHT) of each component are shown with numbers and crosses on the failure threshold \((L_j)\) in Fig. 3. At this point, the planning has been done based on the belief that the future degradation will follow the expected course regulated by the stochastic process. However, a different situation can be observed at \(t_0 + \tau\) or \(\tau _1\) which may make the previous plan not optimal anymore. For example, it could be required to maintain component 2 at \(\tau _1\) together with component 1 if it is found to be degraded much worse than anticipated. Moreover, it could be even possible to skip visit at \(\tau _2\) by postponing component 3 at \(\tau _3\). However, the maintenance team is already at \(\tau _1\) without any preparation for component 2 and therefore misses the opportunity to revise the existing plan. The objective is to optimally schedule the maintenance in the available opportunities under the degradation uncertainties of the components.

3.1 Modeling assumptions

Let J be the total number of degrading components that we need to schedule for maintenance where each individual component is presented by \(j = 1, 2,..., J\). Following properties are assumed for any j:

-

Degradation processes are assumed to be a homogeneous gamma process with shape parameter \(\alpha _j\) and intensity parameter \(\beta _j\) as it is especially suitable for deterioration models where inspections are involved (van Noortwijk 2009). Other degradation models can also be used.

-

Has a known failure threshold \(L_j^c\). Upon hitting the threshold the component is failed and downtime occurs.

-

The degradation process has inherent uncertainty. Degradation increment within the \(t_0\) to \(t_0+\Delta \tau\) can be between 0 and \(\infty\) which is regulated by gamma distribution as a function of its parameter \(\alpha \Delta t\) and \(\beta\). All the other parameters of the maintenance models are assumed to be strictly deterministic.

-

Only first three immediate opportunities \(\tau _1 = t_0 + \tau , \tau _2 = t_0 + 2\tau ,\) and \(\tau _3 = t_0 + 3\tau\) are assumed to be sufficient for maintenance planning. Note that, we can only execute any required maintenance at \(t_0 + \tau\) at the earliest. Whatever decision we make at \(t_0\) is subject to possible changes at \(t_0 + \tau\) as a function of the actual future health conditions of the component in the next inspection. If a component is currently (\(t_0\)) planned beyond \(\tau _1\) without a need for the insurance has the same significance if it were planned even later as in any case the plan will be revised in the next inspection. We are further assuming that the component health condition is not as volatile that a component would need immediate maintenance at \(t_0 + \tau\) whereas it was originally planned at \(t_0 + 4\tau\). Based on these arguments we believe that considering only the first three immediate opportunities are sufficient to relax some complexities in the calculation.

-

Any j can degrade slower or faster than our expectation. To account for this situation, it is assumed that the continuous distribution of possible degradation level at some future time can be discretized into finite number of realizations with some corresponding probabilities. Most simplistically, three scenarios are considered- slow, expected and fast degradation to be observed at \(t_0 + \tau\) which is sufficient to demonstrate the modeling structure.

-

Flexibility to decide on whether to execute maintenance at \(\tau _1\) for component j can be availed by paying a preparation cost \(c_j^{\pi }\) upfront which will function as insurance. When this preparation cost is paid at \(t_0\) for a component j, it can be executed at \(\tau _1\) if it deems more profitable given the condition of j is found to be worse than expected. Otherwise, it can be left for later execution. In this way, a component’s useful life can be extended by avoiding premature replacement. With two-stage SP we need to decide whether to buy insurances for a component j or not and for a given scenario how should we distribute the maintenance tasks.

3.2 Notations

-

\(\alpha _j:\) Shape parameter of component j

-

\(\beta _j:\) Intensity parameter of component j

-

\(t_0:\) Current time

-

\(\tau _m:\) \(m^{th}\) maintenance opportunity (\(\tau _m = m\tau\))

-

W : Total number of windows/maintenance opportunities considered and each individual window is presented by m where \(m = 1, 2,..., W\)

-

J : Total number of component and each individual component is presented with j

-

\(m_j:\) Corresponding window index considered for component j.

-

S, E, F : Representation of slow, expected and fast degradation progression respectively

-

\(\Omega :\) Set of all scenarios considered associated with degradation progression where \(\Omega \in \{S,E,F\}\)

-

\(\varvec{\zeta }:\) (note: this is bold zeta) Set of all possible combinations of scenarios associated with all component j. For example, for \(j \in \{1,2\}\) it would consist of 9 combinations such as (S, S), (S, E), ..., (F, F). Note that the combinations are presented as ordered pairs as for example, \((S,E) \ne (E,S)\).

-

\(\zeta :\) A particular combination (e.g. (S, S)) from \(\varvec{\zeta }\) and therefore \(\zeta \in \{1,2,..,\mid \varvec{\zeta }\mid \}\) where \(\mid \varvec{\zeta }\mid\) is the size or cardinality of the set \(\varvec{\zeta }\).

-

\(\zeta _j:\) Individually experienced scenario by a component j in a \(\zeta\). For example, for \(\zeta = 2\) in the above example, \(\zeta _{j = 1}=S\) and \(\zeta _{j = 2}=E\)

-

\(p(\zeta _j):\) Probability of occurring \(\zeta _j\)

-

\(p(\zeta ) = \prod _j p(\zeta _j):\) Probability of occurring a particular \(\zeta\) and it holds that, \(\sum \limits _{\zeta =1}^{\mid \varvec{\zeta }\mid }p(\zeta ) = 1\)

-

\(c_j^r:\) Universal cost of repair/replace of component j

-

\(c_j^{\pi }:\) insurance cost of component j. For this problem we assume this cost is same as the cost of preparation. If a maintenance is not executed at \(t_0 + \tau\) after paying the preparation cost, it will be lost and must pay again next time when the task will be executed

-

\(c_j^p = c_j^r + c_j^{\pi }:\) Total planned maintenance cost

-

\(c_j^b:\) Additional breakdown cost beyond \(c_{j}^p\) when a component is repaired/replaced while in a failed state

-

\(c_j^i:\) Inspection cost of component j

-

\(c_j^d:\) Downtime cost of component j per unit time

-

S : Setup cost per visit

-

\(\Phi _j^*:\) Optimum cost per unit time for component j derived from individual optimization

-

T : Total operational lifetime of the system

-

\(D_j^0:\) Current degradation level of j at \(t_0\)

-

\(D_j^{\zeta }:\) Future degradation level at \(\tau _1\) for the given scenario \(\zeta _j\)

-

\(L_j^c:\) Failure threshold of component j. Critical components are usually not allowed to reach the technical failure limit as the failure of such components may lead to additional accidents such as fire hazards, hydrocarbon leakages into the environment, etc. Therefore, this is more practical to set the level of this failure threshold on the basis of its economic life rather than its technical lifetime which is a common practice in the industries (Ahmadzadeh and Lundberg 2014). Alert limits- commonly used in CBM strategies, are usually and understandably set on the basis of the economic life of a component than its actual limit of hard failure.

-

\(L_j^p:\) Preventive maintenance threshold of component j

-

\(F_j(m_j,D_j^0,D_j^{\zeta }, L_j):\) Failure probability of a component j as a function of which window we are choosing to maintain it (\(m_j\)), current degradation level of j \((D_j^0)\), the future degradation level at \(\tau _1\) \((D_j^{\zeta })\) given the scenario \(\zeta _j\), and the corresponding failure threshold \((L_j)\)

-

\(E_j(m_j,D_j^0,D_j^{\zeta }, L_j):\) Expected length of downtime of a component j as a function of which window we are choosing to maintain it (\(m_j\)), current degradation level of j \((D_j^0)\), the future degradation level at \(\tau _1\) \((D_j^{\zeta })\) given the scenario \(\zeta _j\), and the corresponding failure threshold \((L_j)\)

4 Methodology

In a two-stage SP, decision variables are classified as first and second-stage decision variables where the first-stage decisions are made before knowing the values of the random variables, and the second-stage decisions are made after the value of random data are observed (Shapiro and Dentcheva 2021). Using similar notations of Birge and Louveaux (2011), let x and y be the first and second stage decision variables while \(\xi\) is the random vector to be observed. The second stage problem is also known as the recourse problem with an assumption that the so-called \(\xi\) is fixed. Now let \(k=1,2,...,K\) be the possible realizations of \(\xi\) with \(p_k\) be their probabilities. This deterministic equivalent problem now can be written into extensive form by assigning one set of second-stage decisions \((y_k)\) to each realization \(\xi\) to each realization of \(q_k, h_k\) and \(T_k\). The extensive form of this large-scale linear problem then can be written as Eq. (1).

4.1 First stage decision variables

In the first stage, a decision needs to be made on whether to pay for an insurance for a component j or not. If \(c_j^{\pi }\) is paid at \(t_0\), j can be maintained at \(\tau _1\) or leave it for later. Otherwise j can only be planned at \(\tau _2\) or \(\tau _3\). So following first-stage decision variables are considered:

-

\(x_{1,j}: 1\) if j is committed at \(\tau _1\) and the preparation cost is paid; 0 otherwise. Maintenance of j will be executed at \(\tau _1\) regardless of the actual condition at \(\tau _1\)

-

\(x_{2,j}: 1\) if j is planned with flexibility at \(\tau _1\) with an insurance; 0 otherwise. Maintenance of j can be done at any opportunities including \(\tau _1\). However, if not executed at \(\tau _1\) the preparation cost will have to be paid again for executing it later.

-

\(x_{3,j}: 1\) if j is planned beyond \(\tau _1\) without paying for the insurance; 0 otherwise. Maintenance of j is only possible at \(\tau _2\) or \(\tau _3\).

4.2 Second stage decision variables

In the second stage, a set of decisions need to be made regarding which windows are optimum to utilize as a function of first stage decisions for the corresponding degradation scenarios. Following variables are therefore introduced where \(y_{m_j,j}^{\zeta }\) indicates that for a given scenario combination of \(\zeta\), maintenance of component j is decided to be executed at window \(m_j \in \{1,2,3\}\):

-

\(y_{m_j=1,j}^{\zeta }: 1\) if j is decided at \(\tau _1\) for scenario combination \(\zeta\); 0 otherwise

-

\(y_{m_j=2,j}^{\zeta }: 1\) if j is decided at \(\tau _2\) for scenario combination \(\zeta\); 0 otherwise

-

\(y_{m_j=3,j}^{\zeta }: 1\) if j is decided at \(\tau _3\) for scenario combination \(\zeta\); 0 otherwise

4.3 Objective function

Any component j can be maintained at any \(m_j\tau\) during the operational lifetime of a component where \(m_j\) is an integer and according to our assumption \(m_j = 1,2,3\). Then for each j with a particular set of \(\alpha _j\) and \(\beta _j\), the probability of failure and therefore causing a downtime depend on their current health conditions \(D_j^0\), failure threshold \(L_j^c\), future degradation level at \(\tau _1\) (\(D_j^\zeta\)) and the length of \(m_j\tau\). The total expected unplanned downtime cost between \(t_0\) and \(m_j\tau\) is presented in Eq. (2) where the first part of the cost is associated with the breakdown maintenance cost itself were \(0 \le F(.) \le 1\). The second part is the associated downtime due to failure were \(0 \le E_j(.) \le m_j\tau\). If the health condition is monotonically degrading over time then \(Q(m_j,D_j^0,D_j^\zeta , L_{j})\) will keep increasing as \(m_j\) increases.

This optimization problem is about balancing the planned costs against the unplanned costs. The cost of one planned maintenance cost is the sum of the actual repair cost \(c_j^r\) and the preparation cost \(c_j^\pi\). During the total duration of the operational lifetime, the total number of required planned maintenance is not straightforward to find and we adopt an assumption from Vatn (2008) where for each component j, we find the first point of maintenance and assume that the rest of the operational period the component will be maintained at their corresponding individual due date incurring the minimum average cost. Now let \(\phi _j(\tau ,L_{j}^p)\) be the average cost per unit time for an individual component j for a given maintenance interval \(\tau\) and maintenance threshold \(D_j^p\) while \({\phi _j}^*(\tau ^*, L_j^{p^*})\) or \(\phi _j^*\) in short is the minimum average cost per unit time for the corresponding optimum values of \(\tau\) and \(D_j^p\). One major advantage of this approach is that it avoids repeated grouping of the components. Now the total cost of maintaining a component at \(t_0 + m_j\tau\) for a given scenario \(\zeta _j\) is given as in Eq. (3). It means that, the unexpected cost increases as we postpone our maintenance while the contribution of the \(\phi _j^*\) decreases and vice-versa. The quantities \(F_j(.), E_j(.)\) and \(\Phi _j^*\) and the processes for obtaining them are explained in more detail in subsequent sections.

Then the objective function of this two-stage SP minimizes the total cost of maintaining multiple component in the remaining operational lifetime \((T-t_0)\) as per Eq. (4) where \(k \in \{1,2,3\}\) present the first stage variables and in order to simplify the representation of the cost of first stage decision variables, another binary variable \(\omega _{k,j}\) is considered. \(\omega _{k,j} = 1\) when \(k = 2\) which is the case when insurance is paid and otherwise \(\omega _{k,j} = 0\).

Subject to the following constraints:

Here we use \(C_j(\zeta _j, m_j) = C_j(\zeta _j, m_j)^{'} - S\) to be able to include the contribution of the setup cost for multiple component which is not straightforward due to it’s dependency with \(y_{m_j,j}^\zeta\). Further note that the full planned cost is included in Eq. (3) and thus when the insurance is already bought and later the maintenance was actually done at \(\tau _1\) the preparation/insurance cost will be paid twice. Therefore the preparation cost is subtracted for such case to avoid double-counting of \(c_j^\pi\) and a binary variable \(\mu _j\) is used for handling such case,

Constraint 5 ensures that for a component j, not more than one first stage decision is considered. Constraint 6 ensures that only a single window is chosen for a component j for a corresponding scenario combination \(\zeta\). Constraint 7 implements the conditions between the first and second stage variables. Final constraint 8 declares the binary nature of both first and second stage variables.

4.3.1 Probability of failure

The probability that a component j will fail within the next \(t_j\) period is straightforward to obtain for degradation following a stationary gamma process (van Noortwijk 2009) for a given set of shape and scale parameters if the current degradation level \(D_j^0\) and the failure threshold \(L_j^c\) is known. The equation can be expressed as Eq. (9) where \(t_j = m_j\tau\).

Note that, Eq. (9) only computes the failure probability between current time \(t_0\) and \(m_j\tau\) only on the basis of current health information and disregard the uncertain degradation scenarios we are going to observe when we are at \(\tau _1\). In the deterministic equivalent problem presented by the Eq. (4) the different scenarios at \(\tau _1\) are known and presented by \(D_j^\zeta \in \{D_j^S,D_j^E,D_j^F\)}. If \(D_j^\zeta \ge L_j^c\), component j is already in a failed state at \(\tau _1\) and the \(F_j(.) = 1\) for all values of \(m_j\). When \(D_j^\zeta < L_j^c\) it is certainly known that j has already survived until \(\tau _1\) for this particular scenario and for the remaining part \(D_j^0\) will be replaced by \(D_j^\zeta\) in Eq. (9). For the completeness of the notation, this failure probability is presented as a function of \(D_j^\zeta\) as well.

4.3.2 Expected downtime

Finding the expected downtime between \(t_0\) and \(m_j\tau\) is more challenging and an obvious option is Monte Carlo simulation, but the calculation speed can be a major issue as it will be required to run many times for a large number of components. Therefore, the following approximation is proposed. Consider that, from our current decision point at \(t_0\) we are interested to find the expected downtime between \(t_0\) and \(t_0 + \tau\) (meaning \(m_j=1\)) and we start by splitting the period by n equally spaced interval with each interval of length \(\tau /n\). Then the probability that the system fails in a sub-interval i is given by Eq. (10) where G(.) is the CDF for the gamma distribution and \(D_j^{'} = L_j^c - D_j^0\).

Given the system fails in sub-interval i, the expected downtime can be approximated by Eq. (11).

Thus, the unconditional expected downtime is approximated by Eq. (12).

Note that, similar to Eq. (9), mean downtime is also dependent with the uncertain degradation scenarios that are known at \(\tau _1\). When \(D_j^\zeta < L_j^c\), component j is certainly in a functioning state and expected downtime within the interval \([t_0,\tau _1]\) is 0. For \([t_0,\tau _2]\) and \([t_0,\tau _3]\) we simply need to use Eq. (12) by changing the value of \(m_j\) and replacing \(D_j^0\) with \(D_j^\zeta\). When \(D_j^\zeta \ge L_j^c\), the component is known to have failed within \([t_0,\tau _1]\). This downtime can be known for a continuously monitored system or a system that self-announces the failure. However, in our case, the exact quantity is unknown and an expected length of downtime needs to be estimated. Taking advantage of using a stationary gamma process for degradation model, we assume that the degradation is linear from \(D_j^0\) and \(D_j^\zeta\) and the exact point of time \((t_j^{'})\) when degradation crosses the \(L_j^c\) can easily be found. Then the total downtime within \([t_0,m_j\tau ]\) for \(m_j = \{2,3\}\) is \(\tau _1-t_j^{'} + m_j\tau\).

4.3.3 Minimum average cost rate

From the renewal theorem mean maintenance cost per unit time can be expressed as in Eq. (13) where \(E[C_R]\) is the total expected cost in a renewal period and \(E[T_R]\) is the expected length of the renewal period. Note that each individual component can be individually optimized with respect to their maintenance thresholds and inspection intervals.

The optimal preventive maintenance threshold (\(L^{p^*}\)) and optimum inspection interval \(\tau ^*\) are obtained by minimizing Eq. (13) w.r.t. \(L^p\) and \(\tau\):

For a new component with a degradation process that follows stationary gamma process, Mean Time Between Replacement (MTBR) can be obtained as \(\frac{L^p\beta }{\alpha }\) for an interval of length \(\tau\). However, the gamma process is a jump process and it is very unlikely that the process will always hit \(L^p\) exactly and will overshoot above the threshold slightly (Van Noortwijk et al. 2005) and the mean time can be approximated by \(\frac{L^p\beta }{\alpha } + \frac{1}{2\alpha }\). In addition, we are monitoring periodically with an interval \(\tau\) and upon discovering a maintenance requirement, we need an additional \(\tau\) amount of time to execute maintenance. Therefore, expected renewal length can be approximated by,

Expected cost in a renewal period consists of several cost factors. Each inspection will incur an inspection cost regardless of maintenance, a setup cost, preventive or corrective maintenance cost, and associated downtime cost. Expected cost in a renewal period is then given by:

Equation (16) can be better understood by illustration presented in Fig. 4 directly adopted from Islam and Vatn (2020). Note that, the notations \(y_p\) and \(y_c\) in the figure are \(L^p\) and \(L^c\) according to this paper’s notation. a, b and c represent the situations when a component is already failed at \(\tau _c\) where \(\tau _c\) is the first inspection point to observe the degradation level above the preventive maintenance threshold, a component is above preventive maintenance threshold at \(\tau _c\) but survives until the maintenance arrives, and a component is above preventive maintenance threshold at \(\tau _c\) but fails before maintenance arrives respectively. Here \(p_a, p_b\) and \(p_c\) are probability that the component is in failed state at \(\tau _c\), probability that the component is in between \(L^p\) and \(L^c\), and the probability that the component will fail within the next \(\tau\) respectively. In addition, \(E(\delta _a)\) is the expected downtime in the interval \([\tau _c - \tau , \tau _c]\) and \(E(\delta _c)\) is the expected downtime in the interval \([\tau _c, \tau _c + \tau ]\). \(\Phi ^*\) is then found using Monte Carlo simulation and for the details of the process can be found in Islam and Vatn (2020).

Illustration of maintenance policy under degradation process directly adopted from Islam and Vatn (2020)

5 Computational challenge and heuristics

Grouping and scheduling of the maintenance activities are required to be conducted simultaneously in the dynamic grouping problem which is generally NP-hard (Vatn 2008). Such problems are often dealt with heuristics and meta-heuristics such as evolutionary algorithms. In this paper, we propose heuristics to solve the problem within a reasonable time frame. Equation (4) searches for the minimum solution for the decision variables \(x_{k,j}\) and \(y_{m_j,j}^\zeta\) by considering all the possible combinations and search space explodes in terms of size. As an example, consider two components \(j = \{1,2\}\) and three scenarios \(\{S,E,F\}\). Thus the total number of scenario combinations are \(\mid \varvec{\zeta }\mid = \Omega ^J = 9\) and the combination of windows that can be utilized is \(W^J = 9\). For the case when \(x_{2,1} = x_{2,2} = 1\), all first three opportunities can be used as part of candidate solutions in the second stage. Therefore for the part with the second stage variables \(y_{m_j,j}^\zeta\), we have to choose 1 window combination for each scenario combination leaving us to deal with a search space of \(9^9 \approx 387 \) million approximately. For the other combinations of x variables, the same process needs to be conducted. For more than 2 components the problem becomes intractable.

5.1 Modification of the objective function

Now consider that \(\Psi\) is a single combination of x variables of j components and \(x_{k,j}^\Psi\) is the corresponding value of x variables taken by j in that combination \(\Psi\) where \(k \in \{1,2,3\}\) and \(x_{k,j} \in \{0,1\}\). Then for each \(\Psi\), we can rewrite Eqs. (4) as (17). Note that, \(\omega _{k,j}^\Psi = 1\) if \(k = 2\) for corresponding combination \(\Psi\).

\(Z(x_{k,j},y_{m_j,j}^\zeta )\) or the minimum cost of the objective function is definitely represented by at least one of the first stage decision variable combination \(\Psi\). Therefore it holds that,

Equation (4) would check every possible combinations of \(y_{m_j,j}^\zeta\) (second stage variable) across all the possible scenario combinations \(\varvec{\zeta }\). However it is rather obvious that, the minimum cost of \(z(x_{k,j}^{\Psi },y_{m_j,j}^\zeta )\) is the summation of minimum costs under each scenario combinations. In another word, minimum cost is associated with the decisions of selecting window combinations that brings the cheapest cost for any particular scenario combination. Therefore for any particular \(\Psi\), with J number of component (\(j = \{1,2,..,J\}\)) and n corresponding scenario combinations (\(\zeta = \{1,2,..,n\}\)) the equation is elaborated as Eq. (19).

The minimum of Eq. (19) with respect to all \(\Psi\) as per Eq. (18) gives the minimum cost associated with the SP and the corresponding decision variables represent the optimum decisions. This observation dramatically reduces the required number of computations to find the optimum solution. For example, the numerical example presented in the next section would require only 81 computations for the case when insurances are bought for both components as opposed to the 387,420,489 numbers of required computations in the complete formulation.

6 Numerical results

This section presents a numerical result to verify the modified objective function. An example with two components is demonstrated against the full solution and the gain in computational time saving is discussed. All data are used with the assumption that component degradation follows a stationary gamma process with a known failure threshold and there is no uncertainty in the observation of the current health condition of the component. Component data presented in the table 1 is partially motivated by the work of Pedersen et al. (2020) where sensor data of several centrifugal pumps from an offshore oil platform located on the NCS were analyzed and the MTTF for pumps are roughly 15–20 months. The components in our study have been chosen within that range. In addition, in that study degradation related to only a single failure mode- impeller damage was considered where the degradation rate follows a constant trajectory. However other failure modes also influence the degradation rate and add uncertainty to the measure. Therefore, in our study, we selected a higher value of SD.

6.1 Grouping with two component

The mean time to failures (MTTF) of the components are respectively 20 and 18.33 time units when they are continuously monitored and replaced without delay. The initial health conditions are assumed to be degraded for this illustration. It is assumed that opportunity windows for maintenance come every 3 time unit and the maintenance threshold and average cost rate is optimized based on such assumptions. These data are summarized in Table 1. For the cost parameters, \(c_j^p = 1\), \(c_j^{\pi } = 0.5\), \(c_j^b = 2\) and \(c_j^i = 0.1\) are set for all j. In addition, downtime cost per unit time and setup cost is assumed to be \(c_j^d = 10\) and \(S = 4\) monetary unit respectively for all j. The total operational life (T) is assumed to be 1000 time units. The degradation level at \(t_0 + \tau\) or \(D_j^{\tau _1}\) is arbitrarily chosen for the convenience of demonstration as presented in Table 2.

Based on the degradation levels for corresponding scenarios and using the cost Eq. (3), Table 3 presents the individual cost of maintaining a component in the 1st, 2nd and 3rd window where the bold numbers represent the cheapest strategies for each scenario. In this particular case when they are considered individually, it can be seen that both components 1 and 2 are optimum to be maintained at 2nd window for slow and expected degradation while optimum at 1st window in the case of fast degradation.

6.1.1 Without incorporating uncertainty- deterministic solution

Recall that, buying an insurance provides the flexibility of maintaining a component immediately at \(t_0 + \tau\) even though the component was planned originally for either \(t_0 + 2\tau\) or \(t_0 + 3\tau\). In the deterministic case, buying an insurance is irrelevant and if the component is cheaper to be maintained at \(t_0 + \tau\) we would commit for the maintenance or otherwise we would simply plan it for the upcoming windows. This is represented by the Expected Value (EV) solution where the stochastic variables are replaced by their expected values for the optimization process. In this case, the result shows that the optimum strategy is to maintain both components at 2nd window with a total cost of 1928.72.

6.1.2 With incorporating uncertainty- stochastic solution

The stochastic solution is obtained by separately solving the Eqs. (4) and (19) inside the boundary of relevant constraints. Both approaches resulted in the same conclusion which is presented in Table 4. The first two columns are the chosen first-stage decision variables for components 1 and 2 respectively. Cost columns show the corresponding minimum cost of each decision combination taken in the first stage. Depending on the first-stage decisions number of combinations to evaluate in the second stage will vary. For example, when maintenance of both components is committed at \(\tau _1\), there is only a single combination to check for each of the scenario combinations. Obviously, the number of combinations is maximum for the cases when insurance is availed for both of the components resulting in maximum flexibility.

The result from the numerical example shows in Table 4 that, Eq. (4) is transformable to Eq. (19). The results are the same in both cases, however, the full calculation requires more than 2 hours compared to the modified calculation which requires only a few seconds (Elapsed time is only shown for the full calculation). The calculation is performed in a commercially available computer with moderate computing power with Intel(R) Core(TM) i7-7600U CPU @ 2.80GHz & 2.90 GHz processor and 16 GB RAM. For two components, the computational bottleneck lies in the case when both insurances are bought in the first stage which consumes about \(88\%\) of the total computational time.

Table 5 shows the result of corresponding second stage decisions in detail that generated the optimum solution. Column names denote the window number of components 1 and 2 respectively and each row represents a scenario combination. It shows that the SP solution recommends using the first window for both components when at least one component deteriorates more than expected. It also recommends the first window when both components experience the expected scenarios. For any other situations, both components should be maintained together at the second window.

6.1.3 Value of stochastic solution

In practical situations, SP is seldom used mainly due to its complexity (Birge 1982) and therefore it is only useful when the value of it over-weighs the computational cost of performing it. Value of Stochastic Solution (VSS), in this regard, is useful to measure the expected loss of using the deterministic solution or the expected profit of using SP. The goodness of the expected solution can be obtained by VSS by replacing the expected values with random values for the input variables (Escudero et al. 2007) as in Eq. (20).

where \(Z_{EEV}^*\) is the optimal solution by keeping the first-stage variables fixed as the deterministic solution which is, in this case, \(\forall x_k = 0\). \(Z_{SP}^*\) is the optimal solution of the stochastic problem. Recall the result for the case of two components where the 2nd window is optimum for both components without paying for any insurances. Therefore, maintenance can be done only either at the 2nd window or can be postponed at the 3rd window. The EEV solution for that problem is found to be 1931.398 monetary units and thus the VSS results in 2.22 monetary units. It implies that, for this example, it is beneficial to use SP instead of the deterministic solution and 0.11% cost can be saved.

6.2 Sensitivity analysis

In this Sect. 6 components are considered and With the increasing number of components (J), the number of scenarios \((\Omega )\), and the number of immediate windows to consider (W), the required number of calculations will escalate rapidly following \(\Omega ^{J\times W^J}\). Assuming three scenarios and the first three windows to consider for three components, this number already explodes to \(4.4\times 10^{38}\). With the modified approach, the computational bottleneck is regulated by \(\Omega ^J\times W^J\) and for 6 components computational bottleneck is a maximum of \(3^6 \times 3^6 = 531441\) number of computations for the case when insurance is bought for all the components. Table 6 shows the component data with their MTTF calculated for \(L_j^c = 200\).

A simple design of experiment is set up to study the different critical input parameters against the output variable- VSS. Three input parameters are chosen- downtime cost, setup cost, and insurance cost. Failure of a critical component in a remote operational environment usually contributes to high downtime costs. For example, oil industries can lose \(\$5.037\) million for only 3.65 days of unplanned downtime in a year (The impact of digital on unplanned downtime 2016). In this experiment three levels of downtime costs and setup costs along with 3 levels of insurance costs are considered and the data are summarized in Table 7 with other fixed parameters. Choosing an appropriate methods for discritization is not straightforward and we leave it to further research. A brief description of discretization process is presented in the appendix. In this study it is assumed that there is an equal probability of experiencing expected, faster or slower degradation scenarios and the degradation observed at \(t_0 + \tau\) can be \(\pm 25\) from the expected degradation level.

The result of the experiment is summarized in Table 8. Besides these input parameters, some tests are done with zero downtime cost which would eventually return VSS of 0 because the maintenance of failures can always be pushed backward and forward leaving no trade-off to optimize. With 0 downtime cost, we eliminate any impact of uncertainty and thus VSS returns 0 as expected.

The result shows the critical role of setup cost. When the setup cost is low it provides the flexibility of scheduling at any opportunity and thus tends to avoid highly expensive downtime by choosing a safer insurance of early maintenance. It reduces the uncertainty of the decisions taken and therefore there is a little benefit of SP in such cases. It is reflected in the result by returning a VSS of 0 for all the cases with setup cost of only 10, which is as low as a planned maintenance cost of one component. It can be also observed in the cases when the setup cost is 100 monetary unit. VSS increases as the downtime contribution increases from 10 to 100 monetary unit per unit time. However, it reduces for \(c_d^j=500\) as it is a very uneven competition between downtime and setup cost and thus decision uncertainty drops significantly. When the trade-off between setup cost and downtime cost is more balanced, SP can deliver significant benefit which is depicted by the cases where both S and \(c_d^j\) are 500. It is obvious that the VSS is going to be different according to the input parameters used. When the setup cost and downtime is high (e.g. offshore oil and gas operation, wind-farm maintenance, etc.), significant benefit can be possible. In this study, for example, a maximum of \(10.43\%\) cost savings has been shown.

7 Discussion

In this paper, it is assumed that the only source of uncertainty is associated with the degradation process which is aleatory in nature. There can be several other epistemic uncertainties that can play major roles in the decision-making process. In remote offshore operations, for example, weather conditions, transportation availability, etc. are some important factors that may add up to the uncertainty of having a pre-fixed schedule available. In addition, the failure thresholds are assumed to be known perfectly and fixed for each component, which is a common assumption in CBM-based research. It is often very difficult to find this quantity precisely and it is more realistic to represent the failure thresholds as a probability distribution as well.

Another assumption of this model is related to the number of maintenance tasks that are possible to execute in each visit. In this model, there is no limitation on how many maintenance tasks can be executed in each visit which is often not practical for remote operations. For example, if a helicopter is used for going to the site, then there is a limitation on crew capacities. Different tasks may require different expertise and accommodating them all on the same trip may not be possible. Alternative arrangements such as more than one trip or more specialized transportation may influence the setup cost for the visit. Such constraints are definitely of interest for incorporation in the model.

The probability distribution of uncertainty is discretized in only 3 scenarios which is a good starting point and often sufficiently facilitates decision-makers. Finer the probability distribution is divided such as 5, 7... scenarios, the higher the precision is going to be. In contrast, the finer the intervals, the higher the computational expense as the number of computations exponentially increases. Whether more scenarios are required or not should be further evaluated for each case. In addition, this two-stage SP problem is only considering the uncertainties associated with the degradation uncertainty at \(t_0 + \tau\) and the underlying assumption is that from that point the degradation trajectory will follow the expected scenarios. Therefore, the estimation of the failure probability and expected downtime between \(t_0+\tau\) and \(t_0+3\tau\) is only based on the possible component condition at \(t_0+\tau\) and the uncertainty between \(t_0+2\tau\) and \(t_0+3\tau\) is not accounted for. To encapsulate all these uncertainties will require a multi-stage SP formulation.

Discrete distribution is required to solve the SP problem given in Eq. (4). However, available computing power is usually limited to handle the cardinality of the problem together with the complexity of the decision model and thus distributions of stochastic parameters need to be approximated by discrete distributions with a limited number of outcomes (Kaut and Stein 2003). Decision analysts commonly approximate continuous probability density function with properly designed probability mass functions to represent the continuous distribution of uncertainties and this process is known as discretization (Hammond 2014). There are many ways to generate scenarios such as Monte Carlo simulation, Decision Trees, decision makers’ subjective assignment of the probabilities using their expert understanding of the system of interests, etc. Finding an appropriate method of discretization of the scenarios is a delicate process and can significantly influence the outcome of optimization problems. This is beyond the scope of this paper and therefore, one of the distribution-specific methods- Bracket Median is utilized for the purpose of the sensitivity analysis for its apparent simplicity of application. Note that, different approaches are expected to deliver different discretization results as conditional distributions are usually skewed. Distribution-specific method is very briefly described in the appendix.

The assumption associated with the degradation process following a stationary gamma process is not a strict condition and it is not required to model the degradation process with a stochastic model such as gamma, wiener, Markov process, etc. The only requirement is to be able to calculate the failure probability and expected downtime of a component given its current health condition. Any stochastic or data-driven degradation model fulfilling this condition can utilize this grouping algorithm. In addition, periodic opportunity to visit maintenance site is also not a hard constraint and the grouping algorithm is transformable with a little effort. The main challenge regarding non-periodic opportunities lies in the estimation of individual cost rate \((\Phi _j^*)\).

8 Conclusion

CBM decision-making is a two-step process wherein the first step condition monitoring information helps develop the deterioration modeling which is then followed up by the decision-making step to decide when and what to maintain (Ahmad and Kamaruddin 2012). Decision-making with CBM involves diagnostics and prognostics. Focusing on the prognostics, understandably, it is an uncertain quantity, most commonly presented in the form of a probability distribution, and thus under CBM strategy, decision-makers are further challenged to take more dynamic decisions. For profitable decision-making, intuitively, understanding the associated risks with each possible decision is of critical importance. In a complex industrial environment, decision-making is a complex problem of choice, and humans are limited in terms of making good decisions by considering all associated factors and their effects simultaneously (Saaty 1988). The main objective of this paper is to propose a maintenance optimization model to systematically deal with uncertainties in decision-making.

The main intent of this paper is to incorporate decision analysis in maintenance grouping under the CBM strategy to facilitate the decision-making process by systematically and quantitatively presenting all the decision alternatives and their associated risks under varying scenarios. This paper presents a maintenance model to serve this purpose and first of its kind, to the best of authors’ knowledge. Although there are several limitations of this model that are discussed in the previous section, there are some promising results to consider further development of this model. First, simultaneous grouping and scheduling of the maintenance tasks usually struggle with the cardinality of the problem. It is shown in this paper that the burden of computation can be dramatically reduced by the simple manipulation of the solution-search strategy. Undoubtedly incorporating more uncertainties and constraints discussed in the previous section will further complicate the problem and further study is required to develop a more practical model to use in industrial applications. Secondly, although for the given experimental design, the VSS is rather small (maximum \(0.45\%\)), they are always positive in all reasonable cases. Notice that, for a multi-million/billion-dollar project, the resulted savings are still significant and VSS can be larger than this experiment for a more volatile system. Be it large or small, a positive VSS indicates that there are opportunities for improvement and further studies should be conducted. Thirdly, discretization of the probability distribution of uncertainty is a critical factor for this model to be useful. This process is independent of the grouping algorithm and solely depends on how decision-makers describe the associated uncertainties of the system. It can be either qualitative (subjective assignment of the probabilities by domain experts) or quantitative (e.g., bracket methods, quadrature methods, etc.). However, the most critical thing is to represent the uncertainty reasonably that resembles the reality to avoid garbage in and garbage out situation. The discretization process should be meticulously selected before implementing this algorithm.

References

Abdel-Hameed M (1975) A gamma wear process. IEEE Trans Reliab 24(2):152–153

Ahmad R, Kamaruddin S (2012) A review of condition-based maintenance decision-making. Eur J Indu Eng 6(5):519–541

Ahmadzadeh F, Lundberg J (2014) Remaining useful life estimation. Int J Syst Assur Eng Manag 5(4):461–474

Alaswad S, Xiang Y (2017) A review on condition-based maintenance optimization models for stochastically deteriorating system. Reliab Eng Syst Saf 157:54–63

Ali I, Ahmedy I, Gani A, Talha M, Raza MA, Anisi MH (2020) Data collection in sensor-cloud: a systematic literature review. IEEE Access 8:184664–184687. https://doi.org/10.1109/ACCESS.2020.3029597

Berg M (1980) A marginal cost analysis for prevetive replacement policies. Eur J Oper Res 4(2):136–142

Bickel JE, Lake LW, Lehman J (2011) Discretization, simulation, and swanson’s (inaccurate) mean. SPE Econ Manag 3(03):128–140

Birge JR, Louveaux F (2011) Introduction to stochastic programming. Springer

Birge JR (1982) The value of the stochastic solution in stochastic linear programs with fixed recourse. Math Program 24(1):314–325

Bouvard K, Artus S, Bérenguer C, Cocquempot V (2011) Condition-based dynamic maintenance operations planning & grouping application to commercial heavy vehicles. Reliab Eng Syst Saf 96(6):601–610

Cachada A, Barbosa J, Leitño P, Gcraldcs CA, Deusdado L, Costa J, Teixeira C, Teixeira J, Moreira AH, Moreira PM et al. (2018) Maintenance 4.0: intelligent and predictive maintenance system architecture. In: 2018 IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA) 1:139–146

Cho DI, Parlar M (1991) A survey of maintenance models for multi-unit systems. Eur J Oper Res 51(1):1–23

Choi T-M, Wallace SW, Wang Y (2018) Big data analytics in operations management. Prod Oper Manag 27(10):1868–1883

Cibulka J, Ebbesen MK, Hovland G, Robbersmyr KG, Hansen MR (2012) A review on approaches for condition based maintenance in applications with induction machines located offshore

Clemen RT, Reilly T (2013) Making hard decisions with decision tools. Cengage Learn

Daily J, Peterson J (2017) Predictive maintenance: how big data analysis can improve maintenance. In: Supply Chain Integration Challenges in Commercial Aerospace, pp 267–278. Springer

Dao CD, Zuo MJ (2017) Selective maintenance of multi-state systems with structural dependence. Reliab Eng Syst Saf 159:184–195

de Jonge B, Scarf PA (2020) A review on maintenance optimization. Eur J Oper Res 285(3):805–824

Dekker R, Roelvink IF (1995) Marginal cost criteria for preventive replacement of a group of components. Eur J Oper Res 84(2):467–480

Dekker R, Wildeman RE, Van der Duyn Schouten FA (1997) A review of multi-component maintenance models with economic dependence. Math Methods Oper Res 45(3):411–435

Do P, Vu HC, Barros A, Bérenguer C (2015) Maintenance grouping for multi-component systems with availability constraints and limited maintenance teams. Reliab Eng Syst Saf 142:56–67

Escudero LF, Garín A, Merino M, Pérez G (2007) The value of the stochastic solution in multistage problems. TOP 15(1):48–64

GE: The impact of digital on unplanned downtime- an offshore oil and gas perspective (2016) Technical report, General Electric Company

Gulati R, Smith R (2009) Maintenance and Reliability Best Practices. Industrial Press Inc

Hammond RK (2014) Discrete approximations to continuous distributions in decision analysis. In: PhD Thesis

Hashemian H (2011) Wireless sensors for predictive maintenance of rotating equipment in research reactors. Ann Nuclear Energy 38(2–3):665–680

Islam AMAI, Vatn J (2020)Condition-based maintenance model for a single component subject to long preparation time and limited maintenance opportunities. In: 2020 European Safety and Reliability Conference (ESREL), pp 2945–2951. ESREL

Jardine AKS, Lin D, Banjevic D (2006) A review on machinery diagnostics and prognostics implementing condition-based maintenance. Mech Syst Signal Process 20(7):1483–1510. https://doi.org/10.1016/j.ymssp.2005.09.012

Kaut M, Stein W (2003) Evaluation of scenario-generation methods for stochastic programming. Humboldt-Universität zu Berlin, Mathematisch-Naturwissenschaftliche Fakultät

Keizer MCO, Teunter RH, Veldman J (2016) Clustering condition-based maintenance for systems with redundancy and economic dependencies. Eur J Oper Res 251(2):531–540

Keizer MCO, Flapper SDP, Teunter RH (2017) Condition-based maintenance policies for systems with multiple dependent components: a review. Eur J Oper Res 261(2):405–420

Kobbacy KA, Murthy DP, Nicolai RP, Dekker R (2008) Optimal maintenance of multi-component systems: a review. Springer, London, pp 263–286

Lua Y, Suna L, Kanga J, Zhang X (2018) Maintenance grouping optimization for offshore wind turbine considering opportunities based on rolling horizon approach. Pol Marit Res 25(2):123–131

Miller AC III, Rice TR (1983) Discrete approximations of probability distributions. Manage Sci 29(3):352–362

Nachimuthu S, Zuo MJ, Ding Y (2019) A decision-making model for corrective maintenance of offshore wind turbines considering uncertainties. Energies 12(8):1408

Nguyen K-A, Do P, Grall A (2014) Condition-based maintenance for multi-component systems using importance measure and predictive information. Int J Syst Sci Oper Logist 1(4):228–245

Nguyen HSH, Do P, Vu H-C, Iung B (2019) Dynamic maintenance grouping and routing for geographically dispersed production systems. Reliab Eng Syst Saf 185:392–404

Nicolai RP, Dekker R Optimal maintenance of multi-component systems: a review. In: Complex system maintenance handbook, pp 263–286 (2008)

Noortwijk Jv, Kok M, Cooke R (1997) Optimal maintenance decisions for the sea-bed protection of the eastern-scheldt barrier. In: Engineering Probabilistic Design and Maintenance for Flood Protection, pp 25–56. Springer

Nowakowski T, Werbińka S (2009) On problems of multicomponent system maintenance modelling. Int J Autom Comput 6(4):364

Oakley JL, Wilson KJ, Philipson P (2022) A condition-based maintenance policy for continuously monitored multi-component systems with economic and stochastic dependence. Reliab Eng Syst Saf 108321

Palin-Luc T, Pérez-Mora R, Bathias C, Domínguez G, Paris PC, Arana JL (2010) Fatigue crack initiation and growth on a steel in the very high cycle regime with sea water corrosion. Eng Fract Mech 77(11):1953–1962

Paolanti M, Romeo L, Felicetti A, Mancini A, Frontoni E, Loncarski J (2018) Machine learning approach for predictive maintenance in industry 4.0. In: 2018 14th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), pp 1–6. IEEE

Pedersen TI, Vatn J, Jørgensen K (2020) Degradation modelling of centrifugal pumps as input to predictive maintenance, pp 3423–3430

Rasmekomen N, Parlikad AK (2016) Condition-based maintenance of multi-component systems with degradation state-rate interactions. Reliab Eng Syst Saf 148:1–10

Saaty TL (1988) What is the analytic hierarchy process? In: Mathematical Models for Decision Support, pp 109–121. Springer

Seyr H, Muskulus M (2019) Decision support models for operations and maintenance for offshore wind farms: a review. Appl Sci 9(2):278

Shapiro A, Dentcheva D, Ruszczynski A (2021) Lectures on stochastic programming: modeling and theory. SIAM

Shi Y, Zhu W, Xiang Y, Feng Q (2020) Condition-based maintenance optimization for multi-component systems subject to a system reliability requirement. Reliab Eng Syst Saf 202:107042

Si X-S, Wang W, Hu C-H, Zhou D-H (2011) Remaining useful life estimation-a review on the statistical data driven approaches. Eur J Oper Res 213(1):1–14

Spendla L, Kebisek M, Tanuska P, Hrcka L (2017) Concept of predictive maintenance of production systems in accordance with industry 4.0. In: 2017 IEEE 15Th International Symposium on Applied Machine Intelligence and Informatics (SAMI), pp 000405–000410 . IEEE

Tan JH, Roberts B, Sundararaju P, Sintive C, Facheris L, Vanden Bosch J, Lehning V, Pegg M (2020) Transforming offshore oil and gas production platforms into smart unmanned installations. In: Offshore Technology Conference Asia. OnePetro

Thomas L (1986) A survey of maintenance and replacement models for maintainability and reliability of multi-item systems. Reliab Eng 16(4):297–309

Tian Z, Liao H (2011) Condition based maintenance optimization for multi-component systems using proportional hazards model. Reliab Eng Syst Saf 96(5):581–589

TM5-698-2 (2006) Reliability-centered maintenance (rcm) for command, control, communications, computer, intelligence, surveillance, and reconnaissance (c4isr) facilities, Tm 5-698-2 edn. Headquarters Department of the Army, Washington DC, USA

Tyapin I, Hovland G, Jorde J (2011) Comparison of markov theory and monte carlo simulations for analysis of marine operations related to installation of an offshore wind turbine. In: 24th International Congress on Condition Monitoring (COMADEM), Stavanger, Norway, pp 1071–1081

Van Do P, Barros A, Bérenguer C, Bouvard K, Brissaud F (2013) Dynamic grouping maintenance with time limited opportunities. Reliab Eng Syst Saf 120:51–59

Van Horenbeek A, Pintelon L (2013) A dynamic predictive maintenance policy for complex multi-component systems. Reliab Eng Syst Saf 120:39–50

van Noortwijk JM (2009) A survey of the application of gamma processes in maintenance. Reliab Eng Syst Saf 94(1):2–21

Van Noortwijk J, Kallen M, Pandey M (2005) Gamma processes for time-dependent reliability of structures. In: Advances in Safety and Reliability, Proceedings of ESREL, 1457–1464

Vatn J (2008) Maintenance in the rail industry. In: Complex System Maintenance Handbook, pp 509–531. Springer

Vu HC, Do P, Fouladirad M, Grall A (2020) Dynamic opportunistic maintenance planning for multi-component redundant systems with various types of opportunities. Reliab Eng Syst Saf 198:106854

Wang H (2002) A survey of maintenance policies of deteriorating systems. Eur J Oper Res 139(3):469–489

Wang R, Chen N (2016) A survey of condition-based maintenance modeling of multi-component systems. In: 2016 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), pp 1664–1668. IEEE

Wang J, Zhang X, Zeng J (2021) Optimal group maintenance decision for a wind farm based on condition-based maintenance. Wind Energy 24(12):1517–1535

Wildeman RE, Dekker R, Smit A (1997) A dynamic policy for grouping maintenance activities. Eur J Oper Res 99(3):530–551

Xu W, Wang W, (2012) An adaptive gamma process based model for residual useful life prediction. In: Proceedings of the IEEE 2012 Prognostics and System Health Management Conference (PHM-2012 Beijing), pp 1–4. IEEE

Zhou X, Huang K, Xi L, Lee J (2015) Preventive maintenance modeling for multi-component systems with considering stochastic failures and disassembly sequence. Reliab Eng Syst Saf 142:231–237

Funding

Open access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital - Trondheim University Hospital). This research is a part of the BRU21 NTNU Research and Innovation Program on Digital and Automation Solutions for the Oil and Gas Industry (www.ntnu.edu/bru21) and is financially supported by AkerBP in terms of a Ph.D. project.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Informed consent

No animals or human participants were involved in this research.

Human or animal rights

No animals or human participants were involved in this research.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A Definition

Appendix A Definition

1.1 A.1 Condition-based maintenance (CBM)

Corrective or unplanned maintenance is the earliest maintenance technique and later time-based preventive maintenance, or planned maintenance came into practice which performs maintenance at periodic intervals. Eventually, with the increasing complexity of modern development, the cost of time-based preventive maintenance has also increased for industries (Jardine et al. 2006). In such a context, CBM is a more efficient alternative for time-based preventive maintenance. Note that, both CBM and time-based scheduled maintenance are under the same umbrella of preventive maintenance- aiming to maintain a component prior to an actual failure.