Abstract

In this paper we derive a characterization of the distribution of the number of exceedances among the components of a random vector in terms of order statistics of generators of D-norms (GOD). The computation of the fragility index is an immediate consequence.

1 Introduction

Let \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) be a random vector with values in \(\mathbb {R}^{d}\). We are interested in the number of exceedances among the components \(X_{1},\dots ,X_{d}\) above high thresholds. Precisely, choose \(\boldsymbol {x} = (x_{1},\dots ,x_{d})\in \mathbb {R}^{d}\), \(k\in \left \{{1,\dots ,d}\right \}\) and put \(N_{\boldsymbol {x}}:={\sum }_{i=1}^{d} 1_{(x_{i},\infty )}(X_{i})\). In this paper we analyze the probability

\[P(N_{\boldsymbol {x}}\ge k)\]

for large \(\boldsymbol {x}\), i.e., each component \(x_{i}\) of \(\boldsymbol {x}\) is large.

Suppose the vector \(\boldsymbol {x}=(x,\dots ,x)\in \mathbb {R}^{d}\) has constant entry \(x\in \mathbb {R}\) and put \(N_{x}:=N_{(x,\dots ,x)}\). The fragility index (FI) corresponding to X is the asymptotic conditional expected number of exceedances, given that there is at least one exceedance, i.e., \(\text {FI}=\lim _{x\nearrow }E(N_{x}\mid N_{x}>0)\).

The FI was introduced by Geluk et al. (2007) to measure the stability of the stochastic system \(\left \{X_{1},\dots ,X_{d}\right \}\). The system is called stable if FI = 1, otherwise it is called fragile.

In Falk and Tichy (2012) the asymptotic conditional distribution \(p_{k}:= \lim _{x \nearrow }P(N_{x}=k\mid N_{x}>0)\) was investigated under the condition that the components \(X_{1},\dots ,X_{d}\) are identically distributed.

It turned out that this asymptotic conditional distribution of exceedance counts (ACDEC) exists if the copula \(C\), which corresponds to \(\boldsymbol {X}\) by Sklar’s theorem (cf. Sklar (1959), Sklar (1996)), is in the max-domain of attraction of a multivariate extreme value distribution (EVD) \(G\). This means that, for any \(\boldsymbol {x}=(x_{1},\dots ,x_{d})\le \boldsymbol {0}\in \mathbb {R}^{d}\),

\[C^{n}\left (\boldsymbol {1}+\frac {1}{n}\boldsymbol {x}\right )\to _{n\to \infty } G(\boldsymbol {x}), \tag{1.1}\]

where \(G\) is a non-degenerate distribution function (df) on \(\mathbb {R}^{d}\). This is quite a mild condition, satisfied by almost every textbook copula \(C\), cf. Section 3.3 in Falk (2019).

Falk and Tichy (2011) investigated the ACDEC, dropping the assumption that the margins \(X_{1},\dots ,X_{d}\) are identically distributed.

Recent results on D-norms (Falk (2019), Falk and Fuller (2021)) make it possible in the present paper to represent the distribution of \(N_{\boldsymbol {x}}\) in terms of order statistics corresponding to the generator of a D-norm, introduced below.

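Let \(Z_{1},\dots ,Z_{d}\) be random variables with the properties

\[Z_{i}\ge 0,\qquad E(Z_{i})=1,\qquad 1\le i\le d.\]

Then

\[\left \Vert \boldsymbol {x}\right \Vert _{D}:=E\left (\max _{1\le i\le d}\left (\left \vert x_{i}\right \vert Z_{i}\right )\right ),\qquad \boldsymbol {x}=(x_{1},\dots ,x_{d})\in \mathbb {R}^{d},\]

defines a norm on \(\mathbb {R}^{d}\), called D-norm, and \(\boldsymbol {Z}=(Z_{1},\dots ,Z_{d})\) is a generator of the D-norm (GOD).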

D-norms are the skeleton of multivariate extreme value theory (MEVT) as elaborated in detail in Falk (2019). Suppose, for example, that the random vector \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) follows a standard multivariate Generalized Pareto distribution (GPD). In this case there exists a D-norm \(\left \Vert \cdot \right \Vert _{D}\) on \(\mathbb {R}^{d}\) such that

\[P(\boldsymbol {X}\le \boldsymbol {x})=1-\left \Vert \boldsymbol {x}\right \Vert _{D}\]

for all \(\boldsymbol {x}\) in a left neighborhood of \(\boldsymbol {0}=(0,\dots ,0)\in \mathbb {R}^{d}\), i.e., for all \(\boldsymbol {x}\in [\varepsilon ,0]^{d}\) with some \(\varepsilon <0\). The characteristic property of a GPD is its excursion stability or exceedance stability, see Proposition 3.1.2 and Remark 3.1.3 in Falk (2019). This stability explains the crucial role of GPD in MEVT. In what follows, all operations on vectors such as \(\boldsymbol {x}\le \boldsymbol {y}\) etc. are always meant componentwise.

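According to equation (2.16) in Falk (2019), the survival function of a standard GPD is given by

\[P(\boldsymbol {X}\ge \boldsymbol {x})=\wr \!\!\wr \boldsymbol {x}\wr \!\!\wr _{D}:=E\left (\min _{1\le i\le d}\left (\left \vert x_{i}\right \vert Z_{i}\right )\right ),\qquad \boldsymbol {x}\in [\varepsilon ,0]^{d},\]

where \(\wr \!\!\wr \boldsymbol {x}\wr \!\!\wr _{D}\) is the dual D-norm function pertaining to \(\left \Vert \cdot \right \Vert _{D}\). Note that the df of \(\boldsymbol {X}\) is continuous on \([\varepsilon ,0]^{d}\) and, thus, \(P(\boldsymbol {X}\ge \boldsymbol {x})=P(\boldsymbol {X}>\boldsymbol {x})\) for \(\boldsymbol {x}\in [\varepsilon ,0]^{d}\).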

The generator \(\boldsymbol {Z}\) of \(\left \Vert \cdot \right \Vert _{D}\) is not uniquely determined. But we know from Corollary 1.6.3 in Falk (2019) that the dual D-norm function \(\wr \!\!\wr \boldsymbol {x}\wr \!\!\wr _{D}\), which corresponds to \(\left \Vert \cdot \right \Vert _{D}\), is independent of the particular generator \(\boldsymbol {Z}\) of \(\left \Vert \cdot \right \Vert _{D}\).

So far we have considered the maximum \(\max \limits _{1\le i\le d}(\left \vert x_{i}\right \vert Z_{i})\) and the minimum \(\min \limits _{1\le i\le d}(\left \vert x_{i}\right \vert Z_{i})\), leading to the D-norm \(\left \Vert \boldsymbol {x}\right \Vert _{D}\) and dual D-norm \(\wr \!\!\wr \boldsymbol {x}\wr \!\!\wr _{D}\) by taking expectations. But we can clearly order the set \(\left \{{\left \vert {x_{1}}\right \vert Z_{1},\dots ,\left \vert {x_{d}}\right \vert Z_{d}}\right \}\) completely

and put, for \(k=1,\dots ,d\),

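We call this increasing sequence \(\left \Vert \boldsymbol {x}\right \Vert _{D,(1)}\le \left \Vert \boldsymbol {x}\right \Vert _{D,(2)}\le {\dots } \le \left \Vert \boldsymbol {x}\right \Vert _{D,(d)} \) of functions ordered D-norms. In particular

\[\left \Vert \boldsymbol {x}\right \Vert _{D,(1)}=E\left (\min _{1\le i\le d}\left (\left \vert x_{i}\right \vert Z_{i}\right )\right )=\wr \!\!\wr \boldsymbol {x}\wr \!\!\wr _{D}\]

is the dual D-norm function, and

\[\left \Vert \boldsymbol {x}\right \Vert _{D,(d)}=E\left (\max _{1\le i\le d}\left (\left \vert x_{i}\right \vert Z_{i}\right )\right )=\left \Vert \boldsymbol {x}\right \Vert _{D}\]

is the D-norm. Note that only \(\left \Vert \boldsymbol {x}\right \Vert _{D,(k)}\) with \(k=d\) is actually a norm.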

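We have, for each \(k=1,\dots ,d\),

\[\left \Vert \lambda \boldsymbol {x}\right \Vert _{D,(k)}=\left \vert \lambda \right \vert \left \Vert \boldsymbol {x}\right \Vert _{D,(k)},\qquad \lambda \in \mathbb {R},\ \boldsymbol {x}\in \mathbb {R}^{d},\]

i.e., \(\left \Vert \cdot \right \Vert _{D,(k)}\) is homogeneous of order 1, and it is a continuous function on \(\mathbb {R}^{d}\), with \(\left \Vert \boldsymbol {0}\right \Vert _{D,(k)}=0\) for each \(k=1,\dots ,d\). The following result is, therefore, an immediate consequence of Theorem 3.5 in Falk and Fuller (2021).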

Lemma 1.1.

For each \(k=1,\dots ,d\), the value \(\left \Vert \boldsymbol {x}\right \Vert _{D,(k)}\) does not depend on the choice of the particular generator Z of \(\left \Vert \cdot \right \Vert _{D}\).

For a vector with constant entry \(\boldsymbol {x}=(x,\dots ,x)\in \mathbb {R}^{d}\) we obtain

\[\left \Vert \boldsymbol {x}\right \Vert _{D,(k)}=\left \vert x\right \vert E(Z_{k:d}),\qquad 1\le k\le d,\]

where \(Z_{1:d}\le \dots \le Z_{d:d}\) denote the ordered values of \(Z_{1},\dots ,Z_{d}\), i.e., the corresponding usual order statistics. This links the analysis of multivariate exceedances with the theory of univariate order statistics.

Though it has received much attention in the literature, the computation of the exact value \(E(Z_{k:d})\) of an arbitrary order statistic is by no means obvious, even if \(Z_{1},\dots ,Z_{d}\) are independent and identically distributed. The exact value is known only in a few cases. Put, for instance, \(Z_{i}:=2U_{i}\), \(1\le i\le d\), where \(U_{1},\dots ,U_{d}\) are independent random variables with common uniform distribution on (0,1). In this case we have \(E(Z_{k:d})=2k/(d+1)\), see equation (1.7.3) in Reiss (1989).

In the general case, where \(Z_{1},\dots ,Z_{d}\) are not necessarily independent and identically distributed, the exact value \(E(Z_{k:d})\) is in general inaccessible. But a Monte Carlo simulation would provide an obvious and easy-to-implement approximation.
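The Monte Carlo approximation mentioned above can be sketched as follows. This is only an illustration under stated assumptions: the function name `mc_expected_order_stats`, the sample size and the seed are hypothetical choices, and the generator \(Z_{i}=2U_{i}\) is used because its exact values \(2k/(d+1)\) are available for comparison.

```python
import random


def mc_expected_order_stats(d, n_samples=50_000, seed=0):
    """Monte Carlo estimate of E(Z_{k:d}), k = 1..d, for Z_i = 2 * U_i.

    The U_i are iid uniform on (0, 1); any other generator could be
    plugged in by changing the sampling line below.
    """
    rng = random.Random(seed)
    sums = [0.0] * d
    for _ in range(n_samples):
        # One realization of the generator, sorted to get the order statistics.
        z = sorted(2.0 * rng.random() for _ in range(d))
        for k in range(d):
            sums[k] += z[k]
    return [s / n_samples for s in sums]


d = 5
estimates = mc_expected_order_stats(d)
exact = [2 * k / (d + 1) for k in range(1, d + 1)]
for k, (est, ex) in enumerate(zip(estimates, exact), start=1):
    print(f"k={k}: estimate={est:.4f}, exact={ex:.4f}")
```

For a dependent generator only the sampling line changes; the sorting and averaging steps stay the same.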

The main result in Section 2 is Theorem 2.1, where we establish, for each \(1\le k\le d\), the equation

\[P(N_{\boldsymbol {x}}\ge k)=\left \Vert \boldsymbol {x}\right \Vert _{D,(d-k+1)}\]

for \(\boldsymbol {x}\) in a left neighborhood of \(\boldsymbol {0}\in \mathbb {R}^{d}\), if \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) follows a standard GPD. From this result we can establish in Eq. 2.1 the exact distribution of the number \(N_{\boldsymbol {x}}\) of exceedances. For \(\boldsymbol {x}=(x,\dots ,x)\) with constant entry \(x\), we obtain in Eq. 2.3 the corresponding FI.

In Section 3 we extend the results of Section 2 to a random vector \(\boldsymbol {X}\), whose copula is in a proper neighborhood of a shifted GPD, see Eq. 3.1. The main result is Theorem 3.1. It leads to the representation (3.5) of the probability that \(X_{i}>x_{i}\) for at least \(k\) components under the mild condition that the copula corresponding to the random vector \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) is in the max-domain of attraction of a multivariate max-stable df \(G\). Note that this condition does not require an absolutely continuously distributed random vector \(\boldsymbol {X}\).

2 Main Result for Standard GPD

The following result reveals the particular significance of ordered D-norms concerning multivariate exceedances of standard GPD.

Theorem 2.1.

Suppose the random vector \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) follows a standard GPD with corresponding D-norm \(\left \Vert \cdot \right \Vert _{D}\) on \(\mathbb {R}^{d}\). Then we have

\[P(N_{\boldsymbol {x}}\ge k)=\left \Vert \boldsymbol {x}\right \Vert _{D,(d-k+1)},\qquad 1\le k\le d,\]

for any \(\boldsymbol {x}=(x_{1},\dots ,x_{d})\) in a left neighborhood of \(\boldsymbol {0}\in \mathbb {R}^{d}\).

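The assertion is obvious for \(k=d\), because \(\left \Vert \cdot \right \Vert _{D,(1)}=\wr \!\!\wr \cdot \wr \!\!\wr _{D}\). For \(k=1\) we obtain

\[P(N_{\boldsymbol {x}}\ge 1)=1-P(\boldsymbol {X}\le \boldsymbol {x})=\left \Vert \boldsymbol {x}\right \Vert _{D}=\left \Vert \boldsymbol {x}\right \Vert _{D,(d)}\]

for \(\boldsymbol {x}\) in a left neighborhood of \(\boldsymbol {0}\in \mathbb {R}^{d}\).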

Proof of Theorem 2.1.

According to equation (2.14) in Falk (2019), we can suppose the representation

where the random variable \(U\) is uniformly distributed on (0,1) and independent of \(\boldsymbol {Z}\). By Theorem 1.7.1 in Falk (2019) we can also suppose that the generator \(\boldsymbol {Z}\) is bounded.

Conditioning on U = u, we obtain for \(\boldsymbol {x}\le \boldsymbol {0}\in \mathbb {R}^{d}\) close enough to 0, by the boundedness of Z,

using the general equation \(E(Y)={\int \limits }_{0}^{\infty } P(Y > u) du\) with a random variable Y ≥ 0. □
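The statement of Theorem 2.1 can be checked numerically. The following sketch is not taken from the paper; it assumes the representation \(X_{i}=-U/Z_{i}\) with \(U\) uniform on (0,1) and independent of the bounded generator \(Z_{i}=2U_{i}'\), for which \(P(\boldsymbol {X}\le \boldsymbol {x})=1-\left \Vert \boldsymbol {x}\right \Vert _{D}\) holds for \(\boldsymbol {x}\in [-1/2,0]^{d}\). The function name, the sample size and the seed are hypothetical.

```python
import random


def simulate_exceedance_tail(d, x, n_samples=50_000, seed=1):
    """Estimate P(N_x >= k), k = 1..d, for X_i = -U / Z_i with Z_i = 2 * U_i'.

    Assumption (ours): this representation yields a standard GPD near 0
    because the generator is bounded by 2, so |x| * Z_i <= 1 for |x| <= 1/2.
    """
    rng = random.Random(seed)
    counts = [0] * (d + 1)  # counts[k] = number of samples with N_x >= k
    for _ in range(n_samples):
        u = rng.random()
        # 1 - rng.random() lies in (0, 1], so every Z_i is strictly positive.
        z = [2.0 * (1.0 - rng.random()) for _ in range(d)]
        n_exceed = sum(1 for zi in z if -u / zi > x)
        for k in range(1, n_exceed + 1):
            counts[k] += 1
    return [counts[k] / n_samples for k in range(1, d + 1)]


d, x = 4, -0.3
estimated = simulate_exceedance_tail(d, x)
# Constant threshold: ||x||_{D,(d-k+1)} = |x| * E(Z_{d-k+1:d}) = |x| * 2(d-k+1)/(d+1).
predicted = [abs(x) * 2 * (d - k + 1) / (d + 1) for k in range(1, d + 1)]
for k, (est, pred) in enumerate(zip(estimated, predicted), start=1):
    print(f"k={k}: simulated={est:.4f}, predicted={pred:.4f}")
```

The simulated frequencies should agree with the predicted values up to Monte Carlo error; for \(\left \vert x\right \vert >1/2\) the agreement is expected to break down, because \(x\) then leaves the left neighborhood of zero.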

We obtain from the previous result the exact distribution of the number of exceedances for x in a left neighborhood of \(\boldsymbol {0}\in \mathbb {R}^{d}\) if \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) follows a standard GPD:

With

the numbers \(p_{\boldsymbol {x},D}(k)\), \(k=0,\dots ,d\), define the distribution of the exceedance counts \(N_{\boldsymbol {x}}\) on \(\left \{0,1,\dots ,d\right \}\).

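In case of a vector \(\boldsymbol {x}=(x,\dots ,x)\in \mathbb {R}^{d}\) with constant entries, we obtain, with \(Z_{0:d}:=0\),

\[P(N_{x}=k)=\left \vert x\right \vert E\left (Z_{d-k+1:d}-Z_{d-k:d}\right ),\qquad 1\le k\le d. \tag{2.2}\]

This reveals a crucial role of the spacings \(Z_{d-k+1:d}-Z_{d-k:d}\) in the analysis of multivariate exceedances.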

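The expectation of \(N_{x}\) is, by Eq. 2.2,

\[E(N_{x})=\sum _{k=1}^{d}k\,P(N_{x}=k)=\left \vert x\right \vert \sum _{k=1}^{d}k\,E\left (Z_{d-k+1:d}-Z_{d-k:d}\right )=\left \vert x\right \vert \sum _{k=1}^{d}E(Z_{k:d})=\left \vert x\right \vert E\left (\sum _{k=1}^{d}Z_{k}\right )=d\left \vert x\right \vert ,\]

by the fact that \(E(Z_{k})=1\), \(1\le k\le d\). The fragility index is, therefore, with \(x<0\) close enough to zero,

\[\text {FI}=E(N_{x}\mid N_{x}>0)=\frac {E(N_{x})}{P(N_{x}>0)}=\frac {d\left \vert x\right \vert }{\left \vert x\right \vert \left \Vert \boldsymbol {1}\right \Vert _{D}}=\frac {d}{\left \Vert \boldsymbol {1}\right \Vert _{D}}. \tag{2.3}\]

Note that in this case of a standard GPD, the number \(E(N_{x}\mid N_{x}>0)\) does not depend on \(x\), i.e., the FI does not require a limit. The number \(\left \Vert \boldsymbol {1}\right \Vert _{D}\) is known as the extremal coefficient, introduced by Smith (1990). It measures the tail dependence of the components \(X_{1},\dots ,X_{d}\) by just one number. If we have \(\left \Vert {\boldsymbol {1}}\right \Vert _{D}=d\), then there is tail independence, and in case \(\left \Vert {\boldsymbol {1}}\right \Vert _{D}=1\) we have complete dependence, see Falk (2019), equation (2.28).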
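The link between expected order statistics, the distribution of exceedance counts and the FI can be made concrete in a few lines. The sketch below is an illustration, not the paper's own code: it assumes that, for a constant threshold, \(P(N_{x}=k)\) is proportional to the expected spacing \(E(Z_{d-k+1:d}-Z_{d-k:d})\) with \(Z_{0:d}:=0\), and that \(\left \Vert \boldsymbol {1}\right \Vert _{D}=E(Z_{d:d})\). The helper name `exceedance_summary` is hypothetical, and the input is the generator \(Z_{i}=2U_{i}\) from Section 1 with \(E(Z_{k:d})=2k/(d+1)\).

```python
from fractions import Fraction


def exceedance_summary(expected_order_stats):
    """Conditional law of N_x given N_x > 0, extremal coefficient and FI.

    `expected_order_stats` holds E(Z_{1:d}), ..., E(Z_{d:d}) as exact numbers.
    """
    d = len(expected_order_stats)
    # m[j] = E(Z_{j:d}) with the convention m[0] = E(Z_{0:d}) = 0.
    m = [Fraction(0)] + [Fraction(v) for v in expected_order_stats]
    # P(N_x = k) / |x| equals the expected spacing E(Z_{d-k+1:d} - Z_{d-k:d}).
    spacings = {k: m[d - k + 1] - m[d - k] for k in range(1, d + 1)}
    extremal = m[d]  # ||1||_D = E(max_i Z_i) = E(Z_{d:d})
    conditional = {k: s / extremal for k, s in spacings.items()}
    fragility_index = sum(k * p for k, p in conditional.items())
    return conditional, extremal, fragility_index


d = 4
uniform_generator = [Fraction(2 * k, d + 1) for k in range(1, d + 1)]
conditional, extremal, fragility_index = exceedance_summary(uniform_generator)
print(conditional)
print(extremal, fragility_index)
```

Exact rational arithmetic is used so that the identity \(\text {FI}=d/\left \Vert \boldsymbol {1}\right \Vert _{D}\) can be verified without rounding error.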

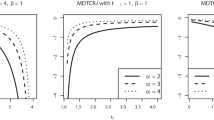

Example 2.1.

Take, for example, the Dirichlet D-norm \(\left \Vert \cdot \right \Vert _{D(\alpha )}\) with parameter \(\alpha =1\), see Example 1.7.4 in Falk (2019). Its generator is \(\boldsymbol {Z}=(Z_{1},\dots ,Z_{d})\), where \(Z_{1},\dots ,Z_{d}\) are independent and identically standard exponentially distributed random variables. We have

\[E(Z_{k:d})=\sum _{j=1}^{k}\frac {1}{d-j+1},\qquad 1\le k\le d,\]

see equation (1.7.7) in Reiss (1989) and, thus, we obtain for a vector with constant entry \(\boldsymbol {x}=(x,\dots ,x)\in \mathbb {R}^{d}\)

\[P(N_{x}=k)=\left \vert x\right \vert E\left (Z_{d-k+1:d}-Z_{d-k:d}\right )=\frac {\left \vert x\right \vert }{k},\qquad 1\le k\le d.\]

Recall that the previous considerations require that the vector \(\boldsymbol {x}\) is in a left neighborhood of \(\boldsymbol {0}\in \mathbb {R}^{d}\). Otherwise, with arbitrary \(\boldsymbol {x}\ne \boldsymbol {0}\), the preceding equation could not be true.
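For the exponential generator of this example, the expected order statistics are partial harmonic-type sums, \(E(Z_{k:d})={\sum }_{j=1}^{k}1/(d-j+1)\), a standard fact for independent standard exponential variables. A minimal sketch, with the hypothetical helper name `exponential_order_stat_means`, evaluates them exactly and derives the expected spacings and the extremal coefficient \(E(Z_{d:d})\):

```python
from fractions import Fraction


def exponential_order_stat_means(d):
    """E(Z_{k:d}), k = 1..d, for iid standard exponential Z_1, ..., Z_d."""
    means, total = [], Fraction(0)
    for j in range(1, d + 1):
        total += Fraction(1, d - j + 1)  # j-th summand of the partial sum
        means.append(total)
    return means


d = 5
means = exponential_order_stat_means(d)
padded = [Fraction(0)] + means  # padded[0] plays the role of E(Z_{0:d}) = 0
# Expected spacing E(Z_{d-k+1:d} - Z_{d-k:d}) for k = 1..d.
spacing = {k: padded[d - k + 1] - padded[d - k] for k in range(1, d + 1)}
extremal_coefficient = means[-1]  # E(Z_{d:d}) = 1 + 1/2 + ... + 1/d
fragility_index = Fraction(d) / extremal_coefficient
print(spacing)
print(extremal_coefficient, fragility_index)
```

The expected spacing for index \(k\) comes out as \(1/k\), so the exceedance probabilities decay harmonically in \(k\) under this generator.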

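The fragility index is, consequently,

\[\text {FI}=\frac {d}{\left \Vert \boldsymbol {1}\right \Vert _{D}}=\frac {d}{{\sum }_{k=1}^{d}1/k},\]

with the extremal coefficient

\[\left \Vert \boldsymbol {1}\right \Vert _{D}=E(Z_{d:d})=\sum _{k=1}^{d}\frac {1}{k}.\]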

Example 2.2.

Let \(U_{1},\dots ,U_{d}\) be independent random variables, uniformly distributed on (0,1), i.e., \(P(U_{i}\le u)=u\), \(u\in [0,1]\), \(1\le i\le d\). Then \(\boldsymbol {Z}=(Z_{1},\dots ,Z_{d}):=2(U_{1},\dots ,U_{d})\) is a generator of a D-norm \(\left \Vert \cdot \right \Vert _{D}\). From the fact that \(E(U_{k:d})=k/(d+1)\), \(1\le k\le d\), see equation (1.7.3) in Reiss (1989), we obtain \(E(Z_{k:d})=2k/(d+1)\) and, thus, by Eq. 2.2,

\[P(N_{x}=k)=\frac {2\left \vert x\right \vert }{d+1},\qquad 1\le k\le d.\]

In this case, the conditional probability that we have exactly \(k\) exceedances above \(x\) among \(X_{1},\dots ,X_{d}\), given that there is at least one, is by Eq. 2.1,

\[P(N_{x}=k\mid N_{x}>0)=\frac {1}{d},\qquad 1\le k\le d,\]

which is the uniform distribution on the set of integers \(\left \{1,\dots ,d\right \}\). The fragility index is \(\text {FI}=(d+1)/2\) and the extremal coefficient is \(\left \Vert \boldsymbol {1}\right \Vert _{D}=E(Z_{d:d})=2d/(d+1)\to _{d\to \infty }2\).

Suppose that the copula \(C\) of the random vector \(\boldsymbol {Y}=(Y_{1},\dots ,Y_{d})\) is a generalized Pareto copula (GPC), i.e., there exists a D-norm \(\left \Vert \cdot \right \Vert _{D}\) on \(\mathbb {R}^{d}\) such that

\[C(\boldsymbol {u})=1-\left \Vert \boldsymbol {1}-\boldsymbol {u}\right \Vert _{D},\qquad \boldsymbol {u}\in [\boldsymbol {u}_{0},\boldsymbol {1}],\]

for some \(\boldsymbol {u}_{0}<\boldsymbol {1}\in \mathbb {R}^{d}\), see Section 3.1 in Falk (2019). Then we have equality in distribution

\[\boldsymbol {Y}=_{\mathcal D}\left (F_{1}^{-1}(U_{1}),\dots ,F_{d}^{-1}(U_{d})\right ),\]

where \(F_{i}\) is the df of \(Y_{i}\), \(1\le i\le d\), and the random vector \(\boldsymbol {U}=(U_{1},\dots ,U_{d})\) follows the GPC \(C\). Note that the random vector \(\boldsymbol {X}:=\boldsymbol {U}-\boldsymbol {1}\) then follows a GPD with D-norm \(\left \Vert \cdot \right \Vert _{D}\).

From the general equivalence \(F^{-1}(q)>t\iff q>F(t)\), valid for \(q\in (0,1)\), \(t\in \mathbb {R}\) and an arbitrary univariate df \(F\), we obtain

for y large enough.

3 Extension to Multivariate Max-Domain of Attraction

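In the next step we extend the considerations in the previous section to a copula \(C\), which satisfies the max-domain of attraction condition (1.1). In this case there exists a D-norm \(\left \Vert \cdot \right \Vert _{D}\) on \(\mathbb {R}^{d}\) with

\[C(\boldsymbol {u})=1-\left \Vert \boldsymbol {1}-\boldsymbol {u}\right \Vert _{D}+o\left (\left \Vert \boldsymbol {1}-\boldsymbol {u}\right \Vert \right )\quad \text {as } \boldsymbol {u}\to \boldsymbol {1},\ \text {uniformly for } \boldsymbol {u}\in [0,1]^{d}, \tag{3.1}\]

and the EVD \(G\) is given by

\[G(\boldsymbol {x})=\exp \left (-\left \Vert \boldsymbol {x}\right \Vert _{D}\right ),\qquad \boldsymbol {x}\le \boldsymbol {0}\in \mathbb {R}^{d},\]

see Corollary 3.1.6 in Falk (2019).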

Example 3.1.

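Take an arbitrary Archimedean copula on \(\mathbb {R}^{d}\)

\[C_{\varphi }(\boldsymbol {u})=\varphi ^{-1}\left (\varphi (u_{1})+\dots +\varphi (u_{d})\right ),\]

where \(\varphi \) is a continuous and strictly decreasing function from (0,1] to \([0,\infty )\) with \(\varphi (1)=0\), see, for example, McNeil and Nešlehová (2009), Theorem 2.2. Suppose that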

exists in \([1,\infty ]\). Then \(C_{\varphi }\) satisfies condition (3.1) with the D-norm \(\left \Vert \cdot \right \Vert _{D}\) being the logistic one \(\left \Vert {\boldsymbol {x}}\right \Vert _{D}=\left \Vert {\boldsymbol {x}}\right \Vert _{p}=\left ({\sum }_{i=1}^{d}\left \vert {x_{i}}\right \vert ^{p}\right )^{1/p}\), with parameter \(p\in [1,\infty ]\) and the convention \(\left \Vert {\boldsymbol {x}}\right \Vert _{\infty }=\max \limits _{1\le i\le d}\left \vert {x_{i}}\right \vert \), see Corollary 3.1.15 in Falk (2019).

Theorem 3.1.

Suppose that the random vector \(\boldsymbol {U}=(U_{1},\dots ,U_{d})\) follows a copula C, which satisfies Eq. 3.1. Then we have, for each \(k=1,\dots ,d\),

as t ↓ 0, for each \(\boldsymbol {x}=(x_{1},\dots ,x_{d})\le \boldsymbol {0}\in \mathbb {R}^{d}\).

The preceding result implies for a random vector U, whose copula satisfies Eq. 3.1,

\(\boldsymbol {x}\le \boldsymbol {0}\in \mathbb {R}^{d}\), x≠0, as \(\left \Vert \boldsymbol {x}\right \Vert \to 0\), with an arbitrary norm \(\left \Vert \cdot \right \Vert \) on \(\mathbb {R}^{d}\).

By repeating the arguments in Section 2, we obtain for the FI corresponding to \(N_{tx}={\sum }_{i=1}^{d} 1_{(1+tx,1]}(U_{i})\), \(x<0\), if the copula \(C\) of \(\boldsymbol {U}=(U_{1},\dots ,U_{d})\) satisfies condition (3.1),

\[\text {FI}=\lim _{t\downarrow 0}E(N_{tx}\mid N_{tx}>0)=\frac {d}{\left \Vert \boldsymbol {1}\right \Vert _{D}}.\]

This was already observed in Falk and Tichy (2012), Theorem 4.1.

The following representation of \(\left \Vert \boldsymbol {x}\right \Vert _{D,(d-k+1)}\) will be a crucial tool in the derivation of Theorem 3.1. We briefly explain its notation. By \(\boldsymbol {e}_{i}=(0,\dots ,0,1,0,\dots ,0)\in \mathbb {R}^{d}\) we denote the i-th unit vector in \(\mathbb {R}^{d}\), \(i=1,\dots ,d\). Any \(\boldsymbol {x}=(x_{1},\dots ,x_{d})\in \mathbb {R}^{d}\) can be represented as \(\boldsymbol {x}={\sum }_{i=1}^{d} x_{i}\boldsymbol {e}_{i}\). Choose a subset \(A\subset {\left \{{1,\dots ,d}\right \}}\). Then we set

\[\boldsymbol {x}_{A}:=\sum _{i\in A}x_{i}\boldsymbol {e}_{i},\]

with the convention \(\boldsymbol {x}_{\emptyset }=\boldsymbol {0}\in \mathbb {R}^{d}\). By \(\left \vert A\right \vert \) we denote the number of elements in a set \(A\).

Lemma 3.1.

We have for any D-norm \(\left \Vert \cdot \right \Vert _{D}\) on \(\mathbb {R}^{d}\) and each \(k=1,\dots ,d\)

The preceding probabilistic result entails the following non probabilistic representation of the (d − k + 1)-th smallest value among arbitrary nonnegative numbers \(x_{1},\dots ,x_{d}\) in terms of maxima of subsets of \(\left \{{x_{1},\dots ,x_{d}}\right \}\).

Choosing the particular D-norm \(\left \Vert \cdot \right \Vert _{D}=\left \Vert \cdot \right \Vert _{\infty }\), with constant generator \(\boldsymbol {Z}=(1,\dots ,1)\), Lemma 3.1 implies, with \(\boldsymbol {x}=(x_{1},\dots ,x_{d})\ge \boldsymbol {0}\in \mathbb {R}^{d}\),

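In particular, for \(k=d\) we obtain

\[\min _{1\le i\le d}x_{i}=\sum _{\emptyset \ne T\subset \left \{1,\dots ,d\right \}}(-1)^{\left \vert T\right \vert -1}\max _{i\in T}x_{i},\]

which is a well-known representation of a minimum of nonnegative numbers in terms of maxima, cf. Lemma 1.6.1 in Falk (2019).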
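The representation of a minimum in terms of maxima is the classical inclusion-exclusion identity \(\min _{i}x_{i}={\sum }_{\emptyset \ne T}(-1)^{\left \vert T\right \vert -1}\max _{i\in T}x_{i}\). The following sketch checks it by brute force over all nonempty subsets; the function name `min_via_maxima` and the test vector are illustrative choices only.

```python
from itertools import combinations


def min_via_maxima(values):
    """Return min(values) as an alternating sum of maxima over nonempty subsets."""
    total = 0
    for size in range(1, len(values) + 1):
        sign = 1 if size % 2 == 1 else -1  # (-1)^(|T| - 1)
        # combinations() works on positions, so repeated values are handled.
        for subset in combinations(values, size):
            total += sign * max(subset)
    return total


x = [3, 1, 4, 1, 5]
print(min_via_maxima(x), min(x))
```

The loop visits \(2^{d}-1\) subsets, so it is meant as a check of the identity for small \(d\), not as a way of computing minima.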

Proof of Lemma 3.1.

We present a probabilistic proof of Lemma 3.1. Let \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) follow a standard GPD with D-norm \(\left \Vert \cdot \right \Vert _{D}\), i.e.,

\[P(\boldsymbol {X}\le \boldsymbol {x})=1-\left \Vert \boldsymbol {x}\right \Vert _{D}\]

for all \(\boldsymbol {x}\in [\varepsilon ,0]^{d}\), with some \(\varepsilon <0\). For such \(\boldsymbol {x}=(x_{1},\dots ,x_{d})\) we obtain from Theorem 2.1, with \(k\in \left \{{1,\dots ,d}\right \},\)

The Inclusion-Exclusion Principle (see Corollary 1.6.2 in Falk (2019)) implies

where the final equation is due to the fact that \({\sum }_{S\subset T}(-1)^{\left \vert S\right \vert } = 0\), see equation (1.10) in Falk (2019).

Altogether we have shown that, for \(\boldsymbol {x}\in [\varepsilon ,0]^{d}\),

The fact that \(\left \Vert t\boldsymbol {x}\right \Vert _{D,(d-k+1)}=t\left \Vert \boldsymbol {x}\right \Vert _{D,(d-k+1)}\) and \(\left \Vert t\boldsymbol {x}_{S\cup T^{\complement }}\right \Vert _{D} = t \left \Vert \boldsymbol {x}_{S\cup T^{\complement }}\right \Vert _{D}\) for t ≥ 0 implies that the above equation is true for each \(\boldsymbol {x}\in \mathbb {R}^{d}\). □

Now we can prove Theorem 3.1 in a straightforward way.

Proof of Theorem 3.1.

Let the random vector \(\boldsymbol {U}=(U_{1},\dots ,U_{d})\) follow a copula C, which satisfies Eq. 3.1. Choose \(k\in \left \{{1,\dots ,d}\right \}\) and \(\boldsymbol {x}=(x_{1},\dots ,x_{d})\le \boldsymbol {0}\in \mathbb {R}^{d}\). By repeating arguments in the proof of Lemma 3.1 we obtain

by Lemma 3.1. This completes the proof of Theorem 3.1. □

Consider next a random vector \(\boldsymbol {X}=(X_{1},\dots ,X_{d})\) that is in the max-domain of attraction of a multivariate max-stable df \(G\). This is equivalent to the condition that the copula \(C\) corresponding to \(\boldsymbol {X}\) satisfies Eq. 3.1, together with the condition that, for each \(i=1,\dots ,d\), the (univariate) df \(F_{i}\) of \(X_{i}\) is in the max-domain of attraction of a univariate max-stable df \(G_{i}\); see, e.g., Proposition 3.1.10 in Falk (2019).

Then we obtain from Eq. 3.2, with \(\boldsymbol {U}=(U_{1},\dots ,U_{d})\) following the copula C, so that \(\boldsymbol {X}=(X_{1},\dots ,X_{d})=_{\mathcal D}\left (F_{1}^{-1}(U_{1}),\dots ,F_{d}^{-1}(U_{d})\right )\)

with an arbitrary norm \(\left \Vert \cdot \right \Vert \) on \(\mathbb {R}^{d}\). Note that we actually do not have to require in Eq. 3.5 that each univariate margin \(F_{i}\) is in the max-domain of attraction of a univariate max-stable df. The preceding equation is, therefore, true if the copula \(C\) corresponding to \(\boldsymbol {X}\) satisfies condition (3.1). Note that this condition on the copula of \(\boldsymbol {X}\) does not require that \(\boldsymbol {X}\) is absolutely continuous, i.e., Eq. 3.5 is true for arbitrary univariate df \(F_{1},\dots ,F_{d}\), not necessarily continuous ones.

But, if each \(F_{i}\) is in the max-domain of attraction of a max-stable df \(G_{i}\), then there exist constants \(a_{it}>0\), \(b_{it}\in \mathbb {R}\) for \(t>0\), with

see, for example, Falk (2019), equation (2.3). As a consequence we obtain from Eq. 3.5

if \(G_{i}(y_{i})\in (0,1]\), \(1\le i\le d\).

Suppose identical distributions of the components of X, i.e., \(F_{1}=\dots =F_{d}=: F\) and identical entries of y, i.e., \(y_{1}=\dots =y_{d}=: y\). Then we can repeat the arguments in Section 2, with \(\boldsymbol {x}:=(F(y)-1,\dots ,F(y)-1)\), and obtain, with \(N_{y}:={\sum }_{i=1}^{d} 1_{(y,\infty )}(X_{i})\),

as \(y\uparrow \omega (F):= \sup \left \{t\in \mathbb {R}: F(t)<1\right \}\).

In particular we obtain for the FI

as already observed in (Falk and Tichy, 2012, Theorem 5.1).

If, for example, the underlying D-norm is a logistic one, \(\left \Vert \boldsymbol {x}\right \Vert _{D}=\left \Vert \boldsymbol {x}\right \Vert _{p}:=\left ({\sum }_{i=1}^{d}\left \vert x_{i}\right \vert ^{p}\right )^{1/p}\), with parameter \(p\in [1,\infty ]\), and the convention \(\left \Vert {\boldsymbol {x}}\right \Vert _{\infty }=\max \limits _{1\le i\le d}\left \vert {x_{i}}\right \vert \), then the FI is

\[\text {FI}=\frac {d}{\left \Vert \boldsymbol {1}\right \Vert _{p}}=\frac {d}{d^{1/p}}=d^{1-1/p}.\]

If p = 1, the components \(X_{1},\dots ,X_{d}\) are tail independent with FI = 1, i.e., the system \(\left \{X_{1},\dots ,X_{d}\right \}\) is stable. If \(p=\infty \), the components \(X_{1},\dots ,X_{d}\) are completely tail dependent and FI = d, i.e., the system is extremely fragile.
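How the FI interpolates between these two extreme cases can be tabulated directly. The sketch below assumes \(\text {FI}=d/\left \Vert \boldsymbol {1}\right \Vert _{p}=d^{1-1/p}\) for the logistic D-norm, which reproduces the boundary values \(\text {FI}=1\) for \(p=1\) and \(\text {FI}=d\) for \(p=\infty \) stated above; the function name `logistic_fragility_index` is hypothetical.

```python
import math


def logistic_fragility_index(d, p):
    """FI = d / ||1||_p = d ** (1 - 1/p) for the logistic D-norm, p in [1, inf]."""
    if p < 1:
        raise ValueError("p must lie in [1, inf]")
    if math.isinf(p):
        return float(d)  # ||1||_inf = 1, complete tail dependence
    return d ** (1.0 - 1.0 / p)


for p in (1, 2, 5, math.inf):
    print(p, logistic_fragility_index(10, p))
```

The FI increases with \(p\), so a larger logistic parameter corresponds to a more fragile system in the sense used here.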

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Falk, M. (2019). Multivariate Extreme Value Theory and D-Norms. Springer International. https://doi.org/10.1007/978-3-030-03819-9.

Falk, M. and Fuller, T. (2021). New characterizations of multivariate max-domain of attraction and D-norms. Extremes 24, 849–879. https://doi.org/10.1007/s10687-021-00416-4.

Falk, M. and Tichy, D. (2011). Asymptotic conditional distribution of exceedance counts: fragility index with different margins. Ann. Inst. Stat. Math. 64, 1071–1085. https://doi.org/10.1007/s10463-011-0348-3.

Falk, M. and Tichy, D. (2012). Asymptotic conditional distribution of exceedance counts. Adv. Appl. Probab. 44, 270–291. https://doi.org/10.1239/aap/1331216653.

Geluk, J.L., de Haan, L. and de Vries, C.G. (2007). Weak & strong financial fragility. Tinbergen Institute Discussion Paper. TI 2007-023/2.

McNeil, A.J. and Nešlehová, J. (2009). Multivariate Archimedean copulas, d-monotone functions and ℓ1-norm symmetric distributions. Ann. Statist. 37, 3059–3097. https://doi.org/10.1214/07-AOS556.

Reiss, R.-D. (1989). Approximate Distributions of Order Statistics: With Applications to Nonparametric Statistics. Springer Series in Statistics. Springer, New York. https://doi.org/10.1007/978-1-4613-9620-8.

Sklar, A. (1959). Fonctions de répartition à n dimensions et leurs marges. Pub. Inst. Stat. Univ. Paris 8, 229–231.

Sklar, A. (1996). Random variables, distribution functions, and copulas – a personal look backward and forward. In Distributions with fixed marginals and related topics. (L. Rüschendorf, B. Schweizer, and M.D. Taylor, eds), Lecture Notes – Monograph Series, vol. 28, 1–14. Institute of Mathematical Statistics, Hayward, CA. https://doi.org/10.1214/lnms/1215452606.

Smith, R.L. (1990). Max-stable processes and spatial extremes. Preprint. Univ. North Carolina, http://www.stat.unc.edu/faculty/rs/papers/RLS_Papers.html.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of Interests

The author declares that he has no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Falk, M. Exceedance Counts and GOD’s Order Statistics. Sankhya A 85, 1651–1666 (2023). https://doi.org/10.1007/s13171-023-00307-9

DOI: https://doi.org/10.1007/s13171-023-00307-9

Keywords

- Multivariate extreme value theory

- multivariate max-domain of attraction

- fragility index

- D-norm

- generator of D-norm

- multivariate exceedance

- ordered D-norms

- generalized Pareto distributions

- generalized Pareto copula.