Abstract

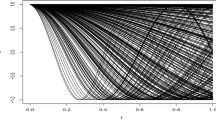

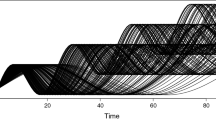

In this paper, we estimate the conditional cumulative distribution function of a randomly censored scalar response variable given a functional random variable, using the local linear approach. Under this structure, we establish the asymptotic normality of the constructed estimator, with explicit rates. Moreover, the usefulness of our results is illustrated through a simulation study.
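The estimator is defined in the body of the paper, which is not reproduced here. The code below is only a rough sketch. It assumes the complete-data local linear form of Demongeot et al. (2014), \(\widehat{F}^{x}(y)=\sum_{i,j}W_{ij}(x)J(h_J^{-1}(y-Y_j))/\sum_{i,j}W_{ij}(x)\), with \(W_{ij}(x)=\beta(X_i,x)(\beta(X_i,x)-\beta(X_j,x))K(h_K^{-1}\delta(x,X_i))K(h_K^{-1}\delta(x,X_j))\). It then replaces each smoothed indicator by the inverse-probability-of-censoring weighted term \(\delta_j J(h_J^{-1}(y-T_j))/\overline{G}(T_j)\).

Note that \(\delta_j\) is the censoring indicator, while \(\delta(\cdot,\cdot)\) is the bi-functional operator. The following are hypothetical illustrations, not the authors' choices: the concrete operators β and δ, the kernels K and J, the weight curve `theta`, and the function name.

```python
import numpy as np


def local_linear_censored_cdf(x, X, T, status, g_bar, y, h_K, h_J, dt, theta=None):
    """Hypothetical sketch of a local linear estimate of F^x(y) under right censoring.

    x      : (p,) curve at which the conditional CDF is estimated
    X      : (n, p) covariate curves discretized on a common grid of step dt
    T      : (n,) observed responses T_j = min(Y_j, C_j)
    status : (n,) censoring indicators delta_j = 1{Y_j <= C_j}
    g_bar  : (n,) estimates of G_bar(T_j) = P(C > T_j), e.g. Kaplan-Meier values
    """
    if theta is None:
        theta = np.ones(X.shape[1])                    # hypothetical weight curve used in beta
    diff = X - x
    beta = (diff @ theta) * dt                         # hypothetical beta(X_i, x) = <theta, X_i - x>
    dist = np.sqrt(np.sum(diff ** 2, axis=1) * dt)     # hypothetical |delta(x, X_i)| = L2 distance
    u = dist / h_K
    k = np.where(u <= 1.0, 1.5 * (1.0 - u ** 2), 0.0)  # quadratic kernel K supported on [0, 1]

    # sum_i W_ij with W_ij = beta_i (beta_i - beta_j) K_i K_j simplifies to K_j (s2 - s1 beta_j)
    s1 = np.sum(beta * k)
    s2 = np.sum(beta ** 2 * k)
    w = k * (s2 - s1 * beta)

    v = np.clip((y - T) / h_J, -1.0, 1.0)
    J = 0.5 + 0.75 * v - 0.25 * v ** 3                 # integrated Epanechnikov kernel (a CDF)
    # inverse-probability-of-censoring weights: censored responses contribute zero
    ipcw = np.divide(status * J, g_bar, out=np.zeros_like(J), where=g_bar > 0)

    denom = np.sum(w)
    return np.sum(w * ipcw) / denom if denom != 0 else float("nan")
```

The local linear weights `w` can be negative, so for small samples the estimate need not be monotone in `y` or lie in \([0,1]\).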


Change history

08 March 2022

A Correction to this paper has been published: https://doi.org/10.1007/s13171-022-00279-2

Notes

Let \((x_{n})_{n\in\mathbb{N}}\) be a sequence of real random variables. We say that \((x_{n})\) converges almost-completely (a.co.) toward zero if, and only if, for all \(\epsilon > 0\), \({\sum }_{n=1}^{\infty } \mathbb {P}(\mid x_{n} \mid >\epsilon ) < \infty \). Moreover, we say that the rate of the almost-complete convergence of \((x_{n})\) to zero is of order \(u_{n}\) (with \(u_{n} \to 0\)), and we write \(x_{n} = O_{a.co.}(u_{n})\), if, and only if, there exists \(\epsilon > 0\) such that \({\sum }_{n=1}^{\infty }\mathbb {P}(\mid x_{n}\mid >\epsilon u_{n}) < \infty \). This kind of convergence implies both almost-sure convergence and convergence in probability.
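As a worked illustration of this definition (a standard example, not taken from the paper), let \(x_{n} = \bar{Z}_{n} - \mu\). Here \(\bar{Z}_{n}\) is the mean of \(n\) i.i.d. variables with values in \([a,b]\) and mean \(\mu\). Take \(u_{n} = \sqrt{\log n / n}\). Hoeffding's inequality then gives, for \(\epsilon > 0\):

```latex
\mathbb{P}\left(\mid x_{n}\mid > \epsilon u_{n}\right)
  \le 2\exp\left(-\frac{2n\epsilon^{2}u_{n}^{2}}{(b-a)^{2}}\right)
  = 2\exp\left(-\frac{2\epsilon^{2}\log n}{(b-a)^{2}}\right)
  = 2\, n^{-2\epsilon^{2}/(b-a)^{2}}
```

The right-hand side is summable as soon as \(\epsilon > (b-a)/\sqrt{2}\). Hence \(x_{n} = O_{a.co.}(\sqrt{\log n / n})\). Since \(u_{n} \to 0\), this also gives \(x_{n} \to 0\) a.co.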

References

Altendji, B., Demongeot, J., Laksaci, A. and Rachdi, M. (2018). Functional data analysis: estimation of the relative error in functional regression under random left-truncation model. Journal of Nonparametric Statistics 30, 472–490.

Ayad, S., Laksaci, A., Rahmani, S. and Rouane, R. (2020). On the local linear modelization of the conditional density for functional and ergodic data. METRON 78, 237–254.

Al-Awadhi, F., Kaid, A., Laksaci, Z., Ouassou, I. and Rachdi, M. (2019). Functional data analysis: local linear estimation of the L1-conditional quantiles. Statistical Methods and Applications 28, 217–240.

Baíllo, A. (2009). Local linear regression for functional predictor and scalar response. Journal of Multivariate Analysis 100, 102–111.

Barrientos, J., Ferraty, F. and Vieu, P. (2010). Locally modelled regression and functional data. Journal of Nonparametric Statistics 22, 617–632.

Beran, R. (1981). Nonparametric Regression with Randomly Censored Survival Data. Technical report, University of California, Berkeley.

Berlinet, A., Elamine, A. and Mas, A. (2011). Local linear regression for functional data. Annals of the Institute of Statistical Mathematics 63, 1047–1075.

Bouanani, O., Laksaci, A., Rachdi, M. and Rahmani, S. (2019). Asymptotic normality of some conditional nonparametric functional parameters in high-dimensional statistics. Behaviormetrika 46, 199–233.

Demongeot, J., Laksaci, A., Madani, F. and Rachdi, M. (2013). Functional data: local linear estimation of the conditional density and its application. Statistics 47, 26–44.

Demongeot, J., Laksaci, A., Rachdi, M. and Rahmani, S. (2014). On the local linear modelization of the conditional distribution for functional data. Sankhya 76, 328–355.

Demongeot, J., Laksaci, A., Naceri, A. and Rachdi, M. (2017). Local Linear Regression Modelization When All Variables are Curves. Statistics and Probability Letters 121, 37–44.

Fan, J. and Gijbels, I. (1994). Censored regression: local linear approximations and their applications. Journal of the American Statistical Association 89, 560–570.

Ferraty, F., Laksaci, A. and Vieu, P. (2006a). Estimation of some characteristics of the conditional distribution in nonparametric functional models. Statist. Inference Stoch. Process. 9, 47–76.

Ferraty, F. and Vieu, P. (2006b). Nonparametric functional data analysis: Theory and Practice. Springer Series in Statistics, New York.

Ferraty, F., Mas, A. and Vieu, P. (2007). Nonparametric regression on functional data: inference and practical aspects. Australian & New Zealand Journal of Statistics 49, 267–286.

Helal, N. and Ould-Saïd, E. (2016). Kernel conditional quantile estimator under left truncation for functional regressors. Opuscula Mathematica 36, 25–48.

Horrigue, W. and Ould-Saïd, E. (2011). Strong uniform consistency of a nonparametric estimator of a conditional quantile for censored dependent data and functional regressors. Random Operators and Stochastic Equations 19, 131–156.

Horrigue, W. and Ould-Saïd, E. (2014). Nonparametric regression quantile estimation for dependent functional data under random censorship: Asymptotic normality. Communications in Statistics – Theory and Methods 44, 4307–4332.

Kaplan, E. L. and Meier, P. (1958). Nonparametric estimation from incomplete observations. Journal of the American Statistical Association 53, 457–481.

Laksaci, A., Rachdi, M. and Rahmani, S. (2013). Spatial modelization: local linear estimation of the conditional distribution for functional data. Spat. Statist. 6, 1–23.

Leulmi, S. (2019). Local linear estimation of the conditional quantile for censored data and functional regressors. Communications in Statistics – Theory and Methods, 1–15.

Lipsitz, S. R. and Ibrahim, J. G. (2000). Estimation with Correlated Censored Survival Data with Missing Covariates. Biostatistics 1, 315–327.

Ling, N., Liu, Y. and Vieu, P. (2015). Nonparametric regression estimation for functional stationary ergodic data with missing at random. Journal of Statistical Planning and Inference 162, 75–87.

Ling, N., Liu, Y. and Vieu, P. (2016). Conditional mode estimation for functional stationary ergodic data with responses missing at random. Statistics 50, 991–1013.

Li, W. V. and Shao, Q. M. (2001). Gaussian processes: inequalities, small ball probabilities and applications. Handbook of Statistics 19, 533–597.

Ould-Saïd, E. and Sadki, O. (2011). Asymptotic normality for a smooth kernel estimator of the conditional quantile for censored time series. South African Statistical Journal 45, 65–98.


Kohler, M., Máthé, K. and Pintér, M. (2002). Prediction from randomly right censored data. Journal of Multivariate Analysis 80, 73–100.

Ren, J. J. and Gu, M. (1997). Regression M-estimators with doubly censored data. Annals of Statistics 25, 2638–2664.

Stute, W. (1993). Consistent estimation under random censorship when covariables are present. Journal of Multivariate Analysis 45, 89–103.

Zhou, Z. Y. and Lin, Z. (2016). Asymptotic normality of locally modelled regression estimator for functional data. Journal of Nonparametric Statistics 28, 116–131.

Acknowledgements

The authors would like to thank the Editor and the two anonymous reviewers for their valuable comments and suggestions, which substantially improved the quality of an earlier version of this paper. Moreover, the authors are very grateful to the Laboratory of Stochastic Models, Statistics and Applications, University of Saida, Algeria, for its administrative and technical support.


Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest and that no funding was received to carry out this research.


Appendix


1.1 Proof of Lemma 1

The proof of this lemma closely follows that of Theorem 2.1 in Bouanani et al. (2019). Specifically, we have:

Next, by using Eq. 2.4, Eq. 3.5 can be rewritten as follows:

Let

and

Finally, the decomposition (3.5) becomes:

Hence, the asymptotic normality of the quantity in Eq. 3.5 follows from Slutsky's theorem once the following asymptotic results are established:
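For reference, a standard form of Slutsky's theorem suited to decompositions of this type reads as follows. It is stated generically: \(U_{n}\), \(V_{n}\), \(W_{n}\) are placeholders, not the paper's notation.

```latex
U_{n} \xrightarrow{d} U, \qquad V_{n} \xrightarrow{\mathbb{P}} c, \qquad W_{n} \xrightarrow{\mathbb{P}} 0
\quad \Longrightarrow \quad V_{n} U_{n} + W_{n} \xrightarrow{d} cU .
```

Thus, suppose the leading term is asymptotically normal and the remaining factors and terms converge in probability to the appropriate constants. Then the whole expression is asymptotically normal, with the limiting variance rescaled by \(c^{2}\).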

1.2 Proof of Lemma 3

Proof of (i)

Using the fact that \( \delta _{1} T_{1} \overline {G}^{-1}(T_{1}) \leq \tau _{L} \overline {G}^{-1}(\tau _{L}) \) (see Kohler et al. (2002) for more details), and by assumptions (H.2)-(i), (H.3) and (H.5), we have:

Therefore, we obtain:

Proof of (ii)

By applying assumption (H.5), we get the result below. We also use the fact that \(1\!\!1_{\{Y_{1}\leq C_{1}\}}\varphi(T_{1})=1\!\!1_{\{Y_{1}\leq C_{1}\}}\varphi(Y_{1})\) for any measurable function \(\varphi(\cdot)\). This holds since \(T_{1}=\min(Y_{1},C_{1})\) equals \(Y_{1}\) on the event \(\{Y_{1}\leq C_{1}\}\). We get:
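Identities of this kind are what make inverse-probability-of-censoring weighting work. Conditioning on \((X_{1},Y_{1})\) gives \(\mathbb{E}[1\!\!1_{\{Y_{1}\leq C_{1}\}}\varphi(T_{1})/\overline{G}(T_{1})\mid X_{1},Y_{1}]=\varphi(Y_{1})\,\mathbb{P}(C_{1}\geq Y_{1}\mid Y_{1})/\overline{G}(Y_{1})=\varphi(Y_{1})\). This holds when \(C_{1}\) is independent of \((X_{1},Y_{1})\) and \(G\) is continuous, which are standard assumptions in this setting and are assumed here.

A quick Monte Carlo check follows, using only \(T\) and \(\delta\). The exponential response, the independent exponential censoring time, and \(\varphi = 1\!\!1_{(-\infty,1]}\) are arbitrary choices for illustration.

```python
import numpy as np


def ipcw_mean(n=200_000, seed=1):
    """Monte Carlo estimate of E[delta * phi(T) / G_bar(T)] with phi = 1{. <= 1}.

    Illustrative model: Y ~ Exp(rate 1) and an independent C ~ Exp(rate 0.5),
    so the target E[phi(Y)] equals 1 - exp(-1).
    """
    rng = np.random.default_rng(seed)
    Y = rng.exponential(1.0, n)        # numpy uses scale = 1 / rate: rate 1
    C = rng.exponential(2.0, n)        # rate 0.5, so G_bar(t) = exp(-t / 2)
    T = np.minimum(Y, C)               # observed response
    status = (Y <= C).astype(float)    # delta = 1{Y <= C}
    phi_T = (T <= 1.0).astype(float)
    return float(np.mean(status * phi_T / np.exp(-0.5 * T)))


target = 1.0 - np.exp(-1.0)            # E[phi(Y)] = P(Y <= 1)
```

Dropping the division by \(\overline{G}(T)\) would bias the estimate downward, because uncensored observations are under-represented among large values of \(Y\).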

Proof of (iii)

From the previous relationship (ii) and by assumption (H.5), we have:

Proof of (iv)

This assertion follows from a straightforward application of the Glivenko-Cantelli theorem for the censored case (see Kohler et al., 2002, for more details).
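In practice, \(\overline{G}\) is replaced by the Kaplan-Meier (product-limit) estimator of the censoring survival function. In that estimator, the censored observations (\(\delta = 0\)) play the role of events.

The sketch below assumes no ties among the observed times. It pairs the estimator with a simulated check of the uniform error on \([0,\tau]\), using exponential lifetimes and censoring times chosen purely for illustration. The helper names are hypothetical.

```python
import numpy as np


def km_censoring_survival(T, status):
    """Product-limit estimate of G_bar(t) = P(C > t), evaluated at each observed T_j.

    For the censoring distribution the 'events' are censored observations,
    i.e. status = 1{Y <= C} equal to 0. Assumes no ties in T.
    """
    T = np.asarray(T, dtype=float)
    status = np.asarray(status, dtype=float)
    n = T.size
    order = np.argsort(T)
    at_risk = n - np.arange(n)                       # n, n-1, ..., 1 subjects at risk
    factors = 1.0 - (1.0 - status[order]) / at_risk  # one product-limit factor per time
    g = np.empty(n)
    g[order] = np.cumprod(factors)                   # right-continuous values at T_(1..n)
    return g


def sup_error(n, tau=2.0, seed=0):
    """Max |G_bar_n(T_j) - G_bar(T_j)| over T_j <= tau, with Y ~ Exp(1), C ~ Exp(rate 0.5)."""
    rng = np.random.default_rng(seed)
    Y = rng.exponential(1.0, n)                      # numpy's exponential takes scale = 1 / rate
    C = rng.exponential(2.0, n)                      # hence G_bar(t) = exp(-t / 2)
    T = np.minimum(Y, C)
    status = (Y <= C).astype(float)
    g_hat = km_censoring_survival(T, status)
    mask = T <= tau
    return float(np.max(np.abs(g_hat[mask] - np.exp(-0.5 * T[mask]))))
```

Comparing, for instance, `sup_error(200)` with `sup_error(20000)` should typically show the uniform error shrinking as \(n\) grows. The restriction to \(T_{j} \leq \tau\) keeps the comparison away from the upper tail, where few observations remain at risk.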

1.3 Proof of Lemma 4

By the definition of the conditional variance, we have:

For the second term on the right-hand side of Eq. 3.9, by using our technical Lemma 3, we have:

Then, an integration by parts and a change of variables, together with the notation (2.5), yield:

This result follows from assumptions (H.1)-(iii) and (H.3)-(ii).
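The integration-by-parts and change-of-variables step has the following generic form. It assumes, as is usual for this type of estimator, that \(J\) is a distribution function with derivative \(J^{\prime}\) and that \(F^{x}\) is the conditional distribution function of \(Y\) given \(X = x\). The weights appearing in the actual term are omitted.

```latex
\mathbb{E}\left[J\left(h_{J}^{-1}(y - Y)\right)\mid X = x\right]
  = \int_{\mathbb{R}} J\left(\frac{y-u}{h_{J}}\right) dF^{x}(u)
  = \int_{\mathbb{R}} \frac{1}{h_{J}}\, J^{\prime}\left(\frac{y-u}{h_{J}}\right) F^{x}(u)\, du
  = \int_{\mathbb{R}} J^{\prime}(t)\, F^{x}(y - t h_{J})\, dt .
```

The boundary terms vanish because \(J(-\infty) = 0\) and \(F^{x}(-\infty) = 0\). The last equality uses the substitution \(t = (y-u)/h_{J}\).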

On the other hand, the first term on the right-hand side of Eq. 3.9 can be written as follows:

Again, by applying the same change of variables and a first-order Taylor expansion of \(\overline {G}(y-th_{J})\), we obtain:

where \(y^{*}\) lies between \(y\) and \(y - t h_{J}\).

Now, under assumptions (H.3)-(ii) and (H.5)-(ii), we have:

Concerning the term \(w_{1}\), an integration by parts gives:

By the continuity of \(F^{x}\), and since \( {\int \limits }_{\mathbb {R}}2 J^{\prime}(t)J(t)F^{x}(y)\,dt=F^{x}(y)\) (because \(\int_{\mathbb{R}} 2J^{\prime}(t)J(t)\,dt=\left[J^{2}(t)\right]_{-\infty}^{+\infty}=1\)), we deduce that \(w_{1}=\displaystyle \frac {F^{x}(y)}{\overline {G}(y)}\).

Proof of Eq. 3.6

To apply the Lindeberg central limit theorem, we first need to evaluate the asymptotic behavior of the term \({\Theta}_{n}\). For this, we have:

Concerning the second term on the right-hand side of Eq. 3.10, we have:

Moreover, by using Eq. 3.9, we get:

Now, for the first term on the right-hand side of Eq. 3.10, we have:

Combining Lemma A.1 in Zhou and Lin (2016) and Eq. 4 with Eq. 3.11, we get:

and

To complete the proof of claim (3.6), it suffices to apply Lindeberg's central limit theorem to \(R_{jn}\), which satisfies the following condition:

where

\(\mu_{n}\) is the mean of the first term on the right-hand side.

On the other hand, we have:

We combine technical Lemma A.1 of Zhou and Lin (2016) with the fact that \( \mid J_{1} \delta _{1} [\overline {G}(Y_{1})]^{-1} -F^{x}(y)\mid \leq \overline {G}^{-1} (\tau _{L})+1\) (see Ould Saïd et al. 2011). Under assumptions (H.3)(i), (H.3)(ii) and (H.4)(iii), we obtain

Therefore, for \(n\) large enough, the set \(\left \lbrace \sqrt {n}\mid R_{1n}\mid >\epsilon \sqrt {n Var({\Theta }_{n})}\right \rbrace \) is empty. This completes the proof of claim (3.6).
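For reference, the Lindeberg condition has the following standard form for a triangular array of independent, centred variables \(Z_{n,1},\dots,Z_{n,n}\) with \(s_{n}^{2}=\sum_{i}\operatorname{Var}(Z_{n,i})\). Reading \(Z_{n,i}\) as the centred terms \(R_{in}\) is an assumption made here for illustration.

```latex
\frac{1}{s_{n}^{2}}\sum_{i=1}^{n}\mathbb{E}\left[Z_{n,i}^{2}\,1\!\!1_{\{\mid Z_{n,i}\mid > \epsilon s_{n}\}}\right]
  \xrightarrow[n\to\infty]{} 0 \quad \text{for all } \epsilon > 0
\quad \Longrightarrow \quad
\frac{1}{s_{n}}\sum_{i=1}^{n} Z_{n,i} \xrightarrow{d} \mathcal{N}(0,1).
```

Suppose every \(\mid Z_{n,i}\mid\) is bounded by a deterministic \(M_{n}\) with \(M_{n} = o(s_{n})\). Then the events \(\{\mid Z_{n,i}\mid > \epsilon s_{n}\}\) are empty for \(n\) large, so the Lindeberg sum is exactly zero. This is the mechanism behind the empty-set argument above.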

Proof of Eq. 3.7

By the Cauchy-Schwarz inequality, we obtain:

Again, by applying technical Lemma A.1 of Zhou and Lin (2016) to the first term on the right-hand side of Eq. 3.14, we get:

Concerning the second term on the right-hand side of Eq. 3.14, we have:

We use the fact that \( \mid J_{i} \delta _{i} [\overline {G}(Y_{i})]^{-1} -F^{x}(y)\mid \leq [\overline {G}(\tau _{L})]^{-1} +1\) (see Ould Saïd et al. 2011) and apply the same technical Lemma A.1 of Zhou and Lin (2016). Under assumption (H.3) and Eq. 3.11, we obtain:

In addition, by combining (3.15) and (3.16), we deduce that

Finally, to obtain the convergence in probability, it suffices to use the Bienaymé-Tchebychev inequality. Indeed, for all ε > 0, we obtain
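The Bienaymé-Tchebychev step has the following generic second-moment (Markov) form. For any sequence of random variables \(Z_{n}\) and any \(\varepsilon > 0\):

```latex
\mathbb{P}\left(\mid Z_{n}\mid > \varepsilon\right) \leq \frac{\mathbb{E}\left[Z_{n}^{2}\right]}{\varepsilon^{2}} .
```

So it is enough that \(\mathbb{E}[Z_{n}^{2}] \to 0\) for \(Z_{n} \to 0\) in probability. Presumably this is the role of the bound deduced from (3.15) and (3.16).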

Proof of Eq. 3.8

Following the same arguments as in the proof of claim (3.7), we have

In addition, by applying the Bienaymé-Tchebychev inequality, we deduce that:

1.4 Proof of Lemma 2

To prove that \(R_{n}(x, y)\) converges in probability to 0, it suffices to establish the following two results:

By the definition of \( \widehat {F}^{x}_{N}(y)\) and \(\widetilde {F}^{x}_{N}(y)\), we obtain:

On the other hand, by Lemma 3 in Leulmi (2019), we have \(\mid \widehat {F}^{x}_{D}\mid \leq \displaystyle \frac {\log(n)}{n\phi _{x}(h_{K})}+1\). Then, by our technical Lemma 3 and assumption (H.4)(ii), we get:

Proof of Eq. 3.18

By the definition of \(\widetilde { F}^{x}_{N}(y)\), we have:

where

Finally, by using Lemma 3.2 in Demongeot et al. (2014) and by assumption (H.4)(ii), we obtain

Conclusion

The theoretical part of this paper investigates the relationship between a censored scalar response and a functional covariate through a local linear estimator of the CDF. The application part studies the performance of this estimator on finite samples and shows that, in the presence of censored data, the local linear method is more efficient than the classical method. Accordingly, this paper makes three main contributions. First, it determines the leading terms, namely the bias and the asymptotic variance, under standard hypotheses that allow great flexibility in the choice of the parameters. Second, the asymptotic study can serve as a preliminary step toward several theoretical questions. These include the optimal selection of the bi-functional operators β and δ, the optimal choice of the smoothing parameters, and the treatment of different types of censoring. Third, the estimation procedure used in this study can also be employed in other conditional models. Finally, as the numerous open questions it raises show, this study contributes to both theory and practice.


About this article

Cite this article

Rahmani, S., Bouanani, O. Local linear estimation of the conditional cumulative distribution function: Censored functional data case. Sankhya A 85, 741–769 (2023). https://doi.org/10.1007/s13171-021-00276-x


Keywords

- Asymptotic normality

- Censored data

- Conditional cumulative distribution

- Functional data

- Local linear estimation