Abstract

Recently Oishi published a paper giving lower bounds for the minimum singular value of coefficient matrices of linearized Galerkin equations, which in turn arise in the computation of periodic solutions of nonlinear delay differential equations with some smooth nonlinearity. The coefficient matrix of linearized Galerkin equations may be large, so the computation of a valid lower bound of the smallest singular value may be costly. Oishi’s method is based on the inverse of a small upper left principal submatrix, and subsequent computations use a Schur complement with small computational cost. In this note some assumptions are removed and the bounds are improved. Furthermore, a technique is derived that significantly reduces the total computational cost and makes it possible to treat infinite dimensional matrices.

1 Main result

Certain periodic solutions of nonlinear delay differential equations with some smooth nonlinearity can be calculated by some Galerkin equations. Oishi’s paper [3] discusses how to obtain a lower bound for the smallest singular value of the coefficient matrix G of the linearized Galerkin equation.

Throughout this note \(\Vert \cdot \Vert \) denotes the \(\ell _2\)-norm, and we use the convention that matrices are supposed to be nonsingular when using their inverse. Dividing the matrix G into blocks

$$G = \left( \begin{array}{cc} A & B \\ C & D \end{array}\right) \qquad (1)$$

with a square upper left block A of small size, often \(\Vert G^{-1}\Vert \) is in practical applications not too far from \(\Vert A^{-1}\Vert \). In other words, the main information on \(\Vert G^{-1}\Vert \) sits in A. Moreover, the matrix G shares some kind of diagonal dominance so that \(\Vert D^{-1}\Vert \) can be expected to be not too far from \(\Vert D_d^{-1}\Vert \) for the splitting \(D = D_d+D_f\) into diagonal and off-diagonal part.

Based on that Oishi discusses in [3] how to obtain an upper bound on \(\Vert G^{-1}\Vert = \sigma _{\min }(G)^{-1}\) by estimating the influence of the remaining blocks B, C, D. Oishi proves the following theorem.

Theorem 1.1

Let n be a positive integer, and m be a non-negative integer satisfying \(m \le n\). Let \(G \in M_n(\mathbb {R})\) be as in (1) with \(A \in M_m(\mathbb {R})\), \(B \in M_{m,n-m}(\mathbb {R})\), \(C \in M_{n-m,m}(\mathbb {R})\) and \(D \in M_{n-m}(\mathbb {R})\). Let \(D_d\) and \(D_f\) be the diagonal part and the off-diagonal part of D, respectively. If

then G is invertible and

In a practical application the matrix G may be large. The advantage of Oishi’s bound is that a reasonable upper bound for \(\Vert G^{-1}\Vert \), and thus a lower bound for \(\sigma _{\min }(G)\), is obtained with small computational effort. In particular, the clever use of the Schur complement requires only a small upper left part A of G to be inverted, whereas the inverse of the large lower right part D is estimated using a Neumann expansion.

For \(p:= \min (m,n)\), denote by \(I_{m,n}\) the matrix with the \(p \times p\) identity matrix in the upper left corner. If \(m=n\), we briefly write \(I_m\). Oishi’s estimate is based on the factorization [2] of G using its Schur complement

It follows

where for \(N \in M_{m,n-m}(\mathbb {R})\)

To bound \(\Vert (D-CA^{-1}B)^{-1}\Vert \) the standard estimate

based on a Neumann expansion is used. In practical applications such as those in [3] this causes only a small overestimation, and often the maximum in the middle of (4) is equal to \(\Vert A^{-1}\Vert \).

Thus it remains to bound \(\varphi (N)\) for \(N \in \{CA^{-1},A^{-1}B\}\) which is done in [3] by \(\varphi (N) \le \displaystyle \frac{1}{1-\Vert N\Vert }\) requiring \(\Vert N\Vert < 1\). The following lemma removes that restriction and gives an explicit formula for \(\varphi (N)\).

Lemma 1.2

Let \(H \in M_n(\mathbb {R})\) have the block representation

for \(1 \le k < n\) and \(N \in M_{k,n-k}(\mathbb {R})\) with \(\Vert N\Vert \ne 0\). Abbreviate \(\mu := \Vert N\Vert \) and define

Then

Proof

Let \(x \in \mathbb {R}^k\) and \(y \in \mathbb {R}^{n-k}\). Then

with equality if, and only if, \(\Vert Ny\Vert = \Vert N\Vert \Vert y\Vert \) and x is a positive multiple of Ny. Let \(u \in \mathbb {R}^k\) and \(v \in \mathbb {R}^{n-k}\) with \(\Vert u\Vert = \Vert v\Vert = 1\) and \(Nv = \Vert N\Vert u\) and define \(y:= \alpha v\) and \(x:= \sqrt{1-\alpha ^2} \, u\). Then \(\left\| \left( \begin{array}{cc} \hspace{-4pt} x \hspace{-4pt}\\ \hspace{-4pt} y \hspace{-4pt}\\ \end{array}\right) \right\| = 1\) and

so that the maximum of \(\nu (\alpha )\) over \(\alpha \in [0,1]\) is equal to \(\Vert H\Vert ^2\). A computation yields

Setting the derivative to zero and abbreviating \(\beta := \alpha ^2\) gives

with positive solution

for nontrivial N. That proves the lemma. \(\square \)
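The statement of the lemma can be checked numerically. The following is a minimal sketch, assuming the substitution used in the proof, that is \(\nu (\alpha ) = 1 + 2\mu \alpha \sqrt{1-\alpha ^2} + \mu ^2\alpha ^2\) with maximizer \(\alpha ^2 = \beta = \frac{1}{2}\big (1+\mu /\sqrt{4+\mu ^2}\big )\). These expressions, and the equivalent closed form \(\big (\mu +\sqrt{\mu ^2+4}\big )/2\), were re-derived here and are not copied from the displayed equations. The function name `psi` and the test matrix are hypothetical.

```python
import numpy as np

def psi(mu: float) -> float:
    """Norm of [[I, N], [0, I]] when ||N||_2 = mu, via the maximiser of nu(alpha)."""
    if mu == 0.0:
        return 1.0
    beta = 0.5 * (1.0 + mu / np.sqrt(4.0 + mu * mu))   # beta = alpha^2
    alpha = np.sqrt(beta)
    nu = 1.0 + 2.0 * mu * alpha * np.sqrt(1.0 - beta) + mu * mu * beta
    return float(np.sqrt(nu))

# Random N with ||N|| far above 1, where the estimate 1/(1-||N||) is unusable.
rng = np.random.default_rng(0)
k, n = 3, 8
N = 5.0 * rng.standard_normal((k, n - k))
H = np.block([[np.eye(k), N], [np.zeros((n - k, k)), np.eye(n - k)]])

mu = float(np.linalg.norm(N, 2))
norm_H = float(np.linalg.norm(H, 2))
closed_form = 0.5 * (mu + np.sqrt(mu * mu + 4.0))      # equivalent expression
print(norm_H, psi(mu), closed_form)
```

The three printed numbers should agree up to rounding, and no restriction on \(\Vert N\Vert \) is needed.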

The lemma removes the first two assumptions in (2). In practical applications those are usually satisfied, so the advantage is that they do not have to be verified. That proves the following.

Theorem 1.3

Let n, m and \(G \in M_n(\mathbb {R})\) with the splitting as in (1) be given. With the splitting \(D = D_d+D_f\) into diagonal and off-diagonal part assume

Then G is invertible and

using \(\psi (\cdot )\) as in (5) in Lemma 1.2.

For a matrix G arising in linearized Galerkin equations the norm \(\Vert (D-CA^{-1}B)^{-1}\Vert \) of the inverse of the Schur complement is often dominated by \(\Vert A^{-1}\Vert \). That is because of the increasing diagonal elements \(D_d\) of the lower right part of G, which imply that \(\Vert D_d^{-1}\Vert \) is equal to the reciprocal of the upper left element of \(D_d\) and that \(\Vert D_d^{-1}(D_f-CA^{-1}B)\Vert \) becomes small. In that case the bound (8) reduces to \(\Vert G^{-1}\Vert \le \Vert A^{-1}\Vert \; \psi (A^{-1}B) \; \psi (CA^{-1})\). The bound can be adapted to other norms following the lines of the proof of Lemma 1.2.
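A bound of this type can be sketched in a few lines. The code below is an illustration in ordinary floating-point arithmetic, not a verified computation. It assumes the bound has the form \(\max \big (\Vert A^{-1}\Vert , \Vert D_d^{-1}\Vert /(1-\gamma )\big )\,\psi (A^{-1}B)\,\psi (CA^{-1})\) with \(\gamma = \Vert D_d^{-1}(D_f-CA^{-1}B)\Vert < 1\), as suggested by the surrounding text. The closed form used for \(\psi \) is our own evaluation of the maximum in the proof of Lemma 1.2. The helper names `schur_bound` and `example_matrix` and the parameters are hypothetical, and the test matrix has \(k^{|i-j|}\) off the diagonal and \((1,\ldots ,n)\) on the diagonal.

```python
import numpy as np

def psi(mu: float) -> float:
    # Closed form of ||[[I, N], [0, I]]||_2 for ||N||_2 = mu (re-derived, see lead-in).
    return 0.5 * (mu + np.sqrt(mu * mu + 4.0))

def schur_bound(G: np.ndarray, m: int) -> float:
    """Upper bound for ||G^{-1}||_2 using only the inverse of the m x m block A."""
    A, B = G[:m, :m], G[:m, m:]
    C, D = G[m:, :m], G[m:, m:]
    d = np.diag(D)                              # diagonal part D_d
    Df = D - np.diag(d)                         # off-diagonal part D_f
    AinvB = np.linalg.solve(A, B)               # A^{-1} B
    CAinv = np.linalg.solve(A.T, C.T).T         # C A^{-1}
    gamma = np.linalg.norm((Df - C @ AinvB) / d[:, None], 2)
    if gamma >= 1.0:
        raise ValueError("Neumann estimate not applicable: gamma >= 1")
    norm_Ainv = np.linalg.norm(np.linalg.inv(A), 2)
    norm_Ddinv = 1.0 / np.min(np.abs(d))
    middle = max(norm_Ainv, norm_Ddinv / (1.0 - gamma))
    return float(middle * psi(np.linalg.norm(AinvB, 2)) * psi(np.linalg.norm(CAinv, 2)))

def example_matrix(n: int, k: float) -> np.ndarray:
    idx = np.arange(n)
    G = k ** np.abs(idx[:, None] - idx[None, :]).astype(float)
    np.fill_diagonal(G, np.arange(1, n + 1))    # diagonal (1, ..., n)
    return G

G = example_matrix(200, 0.9)
bound = schur_bound(G, m=25)
true_norm = float(np.linalg.norm(np.linalg.inv(G), 2))
print(bound, true_norm)
```

For a rigorous result, every norm and every solve in this sketch would have to be replaced by a verified upper bound.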

2 Computational improvement and an infinite dimensional example

For both the original bound (3) and the new bound (8) the main computational effort is to estimate \(\Vert D_d^{-1}(D_f-C A^{-1} B)\Vert \) requiring \({\mathcal {O}}((n-m)^2\,m)\) operations. It often suffices to use \(\Vert N\Vert \le \sqrt{\Vert N\Vert _1\Vert N\Vert _{\infty }} =: \pi (N)\) and the crude estimate

That avoids both the product of large matrices and the computation of the \(\ell _2\)-norm of large matrices, thus reducing the total computational cost to \({\mathcal {O}}((n-m)m^2)\) operations. For instance, in Example 3 in [3] with n=10,000 and \(k=0.9\) the bound (9) is successful in the sense that \(\max \left( \Vert A^{-1}\Vert ,\frac{\Vert D_d^{-1}\Vert }{1-\gamma }\right) = \Vert A^{-1}\Vert \) for dimension \(m \ge 13\) of the upper left block A. The additional computational cost for (9) is marginal, so in any case it is worth trying. If successful, the computing time for dimension n=10,000 reduces from 8 minutes to less than 2 seconds on a standard laptop.
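A crude estimate of this kind can be sketched as follows. We assume the form \(\pi (D_d^{-1}D_f) + \pi (D_d^{-1}C)\,\pi (A^{-1}B)\), which follows from the triangle inequality, submultiplicativity and \(\Vert N\Vert \le \pi (N)\). It is one possible estimate of this type and need not coincide term by term with (9). The names `pi_norm` and `crude_gamma` are hypothetical, and the computation is in plain floating-point arithmetic.

```python
import numpy as np

def pi_norm(N: np.ndarray) -> float:
    """pi(N) = sqrt(||N||_1 ||N||_inf), an upper bound for ||N||_2."""
    return float(np.sqrt(np.linalg.norm(N, 1) * np.linalg.norm(N, np.inf)))

def crude_gamma(G: np.ndarray, m: int) -> float:
    """Upper bound for ||D_d^{-1}(D_f - C A^{-1} B)||_2 without large products."""
    A, B = G[:m, :m], G[:m, m:]
    C, D = G[m:, :m], G[m:, m:]
    d = np.diag(D)
    Df = D - np.diag(d)
    AinvB = np.linalg.solve(A, B)               # O((n-m) m^2) after factoring A
    return pi_norm(Df / d[:, None]) + pi_norm(C / d[:, None]) * pi_norm(AinvB)

# Example matrix: k^{|i-j|} off the diagonal, (1, ..., n) on the diagonal.
n, m, k = 300, 25, 0.9
idx = np.arange(n)
G = k ** np.abs(idx[:, None] - idx[None, :]).astype(float)
np.fill_diagonal(G, np.arange(1, n + 1))

gamma_crude = crude_gamma(G, m)

# Reference value using the full product and the l2-norm (expensive for large n).
A, B, C, D = G[:m, :m], G[:m, m:], G[m:, :m], G[m:, m:]
d = np.diag(D)
gamma_exact = float(np.linalg.norm((D - np.diag(d) - C @ np.linalg.solve(A, B)) / d[:, None], 2))
print(gamma_crude, gamma_exact)
```

Only 1-norms and \(\infty \)-norms of the large blocks are needed, and the only matrix product involves the small block A.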

In order to compute rigorous bounds, an upper bound for the \(\ell _2\) norm of a matrix \(C \in M_n(\mathbb {R})\) is necessary. An obvious possibility is to use \(\Vert C\Vert _2 \le \sqrt{\Vert C\Vert _1\Vert C\Vert _\infty }\). Denoting the spectral radius of a matrix by \(\varrho (\cdot )\), better bounds are obtained by \(\Vert C\Vert _2^2 \le \Vert |C|\Vert _2^2 = \varrho (|C|^T|C|)\) and Collatz’ bound [1]

which is true for any positive vector x. Then a few power iterations on x yield an accurate upper bound for \(\Vert |C|\Vert _2\) in some \({\mathcal {O}}(n^2)\) operations. More sophisticated methods for bounding \(\Vert C\Vert _2\) can be found in [6].
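The power iteration with Collatz’ bound can be sketched as follows. This is a floating-point illustration only: a rigorous bound would require directed rounding or interval arithmetic as in [5, 6]. The function name `collatz_norm_bound` and the number of iterations are hypothetical, and the sketch assumes that the iterates stay positive, which holds for example if C has no zero column.

```python
import numpy as np

def collatz_norm_bound(C: np.ndarray, iters: int = 10) -> float:
    """Approximate upper bound for || |C| ||_2 >= ||C||_2 via Collatz' inclusion.

    Uses M = |C|^T |C| implicitly, so each step costs O(n^2) operations.
    """
    absC = np.abs(C)
    x = np.ones(C.shape[1])
    for _ in range(iters):
        y = absC.T @ (absC @ x)      # y = M x
        x = y / y.max()              # rescale to avoid overflow
    y = absC.T @ (absC @ x)
    rho_upper = np.max(y / x)        # rho(M) <= max_i (M x)_i / x_i for x > 0
    return float(np.sqrt(rho_upper))

rng = np.random.default_rng(1)
C = rng.standard_normal((50, 50))
bound = collatz_norm_bound(C)
norm2 = float(np.linalg.norm(C, 2))
pi_C = float(np.sqrt(np.linalg.norm(C, 1) * np.linalg.norm(C, np.inf)))
print(norm2, bound, pi_C)
```

With the starting vector of all ones, the Collatz quotient is the maximum row sum of \(|C|^T|C|\), which is at most \(\Vert C\Vert _1\Vert C\Vert _\infty \). Since the quotient does not increase during the iteration, the result is never worse than the obvious bound, up to rounding.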

A practical consideration, in particular for so-called verification methods [4, 5], is that the true value \(\mu = \Vert N\Vert \) is usually not available. However, \(\nu (N)\) in (6) is increasing with \(\mu \) and implies

For example, \(\pi (N)\) can be used for an upper bound \(\mu \) of \(\Vert N\Vert \). Applying this method to (8) to bound \(\psi (N)\) for \(N \in \{A^{-1}B,CA^{-1}\}\) using \(\Vert N\Vert \le \pi (N)\) entirely avoids the computation of the \(\ell _2\)-norm of large matrices.

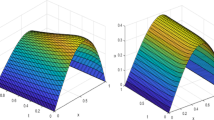

To that end we show how to compute an upper bound of \(\Vert G^{-1}\Vert \) for the third example in [3] for infinite dimension. The matrix G is parameterized by some \(k \in (0.5,1)\) with elements \(G_{ij} = k^{|i-j|}\) for \(i \ne j\) and \((1,\ldots ,n)\) on the diagonal. As before m denotes the size of the upper left block A.

We first show how to estimate \(\gamma \) in (9) for arbitrarily large n. Define

Then for every fixed \(s \in \{1,\ldots ,n-m\}\)

and

proves

That estimate is valid for any dimension \(n > m\). For \(Q = D_d^{-1} C\) we have \(Q_{ij}:= k^{|m+i-j|}/(m+i)\), so that for every fixed \(s \in \{1,\ldots ,n-m\}\)

and for every fixed \(s \in \{1,\ldots ,m\}\)

It follows

In order to estimate \(\Vert A^{-1}B\Vert \) we split \(B = [ S \;\; T]\) into blocks \(S \in M_{m,q}\) and \(T \in M_{m,n-m-q}\) and \(1 \le q < n-m\). Then \(B_{ij}:= k^{m-i+j}\) implies \(T_{ij}:= k^{q+m-i+j}\) and

so that

Summarizing and using (11), (12), and (13), (9) becomes

Note that only “small” matrices are involved in the computation of \(\delta \). For given k, we choose m and q large enough to ensure \(\max \left( \Vert A^{-1}\Vert ,\frac{\Vert D_d^{-1}\Vert }{1-\delta }\right) = \Vert A^{-1}\Vert \). Then it is true for the infinite dimensional matrix G as well. The computational effort is \({\mathcal {O}}(\max (m^2q,m^3))\).

Note that the bound is very crude with much room for improvement. Nevertheless, \(k=0.9\), \(m=25\) and \(q=35\) ensure \(\max \left( \Vert A^{-1}\Vert ,\frac{\Vert D_d^{-1}\Vert }{1-\delta }\right) = \Vert A^{-1}\Vert \) for infinite n with marginal computational effort.
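Why such estimates do not depend on n can be seen from a simple geometric series argument. The sketch below is our own illustration and not the estimate (11) of the text. Every row and every column of \(D_d^{-1}D_f\) has absolute sum at most \(2k/((1-k)(m+1))\), because the off-diagonal entries are \(k^{|i-j|}\) and each is divided by a diagonal element of at least \(m+1\). Hence \(\pi (D_d^{-1}D_f)\) is bounded by the same constant for every n. The helper names are hypothetical.

```python
import numpy as np

def pi_norm(N: np.ndarray) -> float:
    return float(np.sqrt(np.linalg.norm(N, 1) * np.linalg.norm(N, np.inf)))

def scaled_offdiag(n: int, m: int, k: float) -> np.ndarray:
    """D_d^{-1} D_f for the lower right block of the example matrix."""
    idx = np.arange(1, n - m + 1)
    Df = k ** np.abs(idx[:, None] - idx[None, :]).astype(float)
    np.fill_diagonal(Df, 0.0)
    return Df / (m + idx)[:, None]   # row s is divided by the diagonal element m+s

k, m = 0.9, 25
uniform = 2.0 * k / ((1.0 - k) * (m + 1))        # independent of n
values = [pi_norm(scaled_offdiag(n, m, k)) for n in (50, 100, 400)]
print(values, uniform)
```

The computed values stay below the constant for all tested dimensions. The constant is cruder than what a careful treatment of the sums gives, but it is already below 1 for \(k=0.9\) and \(m=25\).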

In our example, symmetry implies \(\Vert A^{-1}B\Vert = \Vert C A^{-1}\Vert \), so that inserting the above quantities proves the bounds displayed in Table 1 for arbitrarily large dimension n.

Numerical evidence suggests that the true norm does not exceed 2.39. The total computing time for infinite dimensional G with \(m=200\) and \(q=100\) is less than 0.09 seconds on a laptop.

3 Comparison of the original and new bound

In [3] the bounds on \(\Vert G^{-1}\Vert \) are tested by means of three typical application examples. The first example is a tridiagonal matrix G with all elements equal to 2 on the first subdiagonal, all elements equal to 3 on the first superdiagonal, and \((1,\ldots ,n)\) on the diagonal. Therefore, with increasing dimension \(n \ge 21\), neither \(\varphi (CA^{-1})\) nor \(\varphi (A^{-1}B)\) change. Since the maximum in (4) is equal to \(\Vert A^{-1}\Vert \), the original bound and the new bound using (4) and (5) do not change for any \(n \ge 21\), i.e., they are valid for the infinite dimensional case. Computed bounds for different sizes m of the upper left block are displayed in Table 2. Computational evidence suggests that \(\Vert G^{-1}\Vert \) does not exceed 3.12 for infinite dimension.
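The independence of the dimension can be observed directly. For a tridiagonal G the blocks B and C each contain a single nonzero element next to the corner of A, so \(A^{-1}B\) and \(CA^{-1}\) have one nonzero column and one nonzero row, respectively, whatever n is. The following sketch checks this numerically. The block size \(m=20\) is an assumption made for illustration, and the function names are hypothetical.

```python
import numpy as np

def tridiag_example(n: int) -> np.ndarray:
    """Diagonal (1, ..., n), subdiagonal 2, superdiagonal 3."""
    return (np.diag(np.arange(1.0, n + 1))
            + 2.0 * np.eye(n, k=-1)
            + 3.0 * np.eye(n, k=1))

def coupling_norms(n: int, m: int) -> tuple[float, float]:
    """Return (||A^{-1} B||_2, ||C A^{-1}||_2) for the leading m x m block A."""
    G = tridiag_example(n)
    A, B, C = G[:m, :m], G[:m, m:], G[m:, :m]
    AinvB = np.linalg.solve(A, B)
    CAinv = np.linalg.solve(A.T, C.T).T
    return float(np.linalg.norm(AinvB, 2)), float(np.linalg.norm(CAinv, 2))

small = coupling_norms(21, 20)
large = coupling_norms(300, 20)
print(small, large)
```

Both pairs agree up to rounding, so any quantity computed from \(\Vert A^{-1}B\Vert \) and \(\Vert CA^{-1}\Vert \) is the same for every \(n \ge 21\).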

In the second example in [3] the upper left \(5 \times 5\) matrix in G is replaced by some random positive matrix. Since the lower \((n-5)\times (n-5)\) block in G is still tridiagonal, again the original and new bound do not change for any dimension \(n \ge 21\). In one such instance the original bound, the new bound and \(\Vert G^{-1}\Vert \) are 1.909, 1.648 and 1.449, respectively.

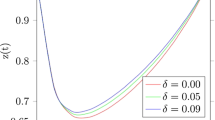

The third example in [3] was already treated in the previous section. For k close to 1 the bounds (3) and (8) become weak. For different values of k and dimension n the results are displayed in Table 3. For the true value of \(\Vert G^{-1}\Vert \) verified bounds were computed using verification methods, so that the displayed digits in the last column are correct.

The original and the new bound depend only weakly on the dimension, and \(\Vert G^{-1}\Vert \) shows practically no dependency. As k approaches the upper limit 1 the improvement by the new bound grows, but naturally both bounds also become weaker compared to \(\Vert G^{-1}\Vert \). For \(k \ge 0.97\) the original bound fails because the assumptions \(\Vert CA^{-1}\Vert < 1\) and \(\Vert A^{-1}B\Vert < 1\) are not satisfied.

Data availability

Not applicable.

References

Collatz, L.: Einschließungssatz für die charakteristischen Zahlen von Matrizen. Math. Z. 48, 221–226 (1942)

Horn, R., Johnson, C.: Matrix Analysis, 2nd edn. Cambridge University Press, Cambridge (2017)

Oishi, S.: Lower bounds for the smallest singular values of generalized asymptotic diagonal dominant matrices. Jpn. J. Ind. Appl. Math. (2023). https://doi.org/10.1007/s13160-023-00596-5

Oishi, S., Ichihara, K., Kashiwagi, M., Kimura, K., Liu, X., Masai, H., Morikura, Y., Ogita, T., Ozaki, K., Rump, S.M., Sekine, K., Takayasu, A., Yamanaka, N.: Principle of Verified Numerical Computations. Corona publisher, Tokyo (2018). (in Japanese)

Rump, S.M.: Verification methods: Rigorous results using floating-point arithmetic. Acta Numer. 19, 287–449 (2010)

Rump, S.M.: Verified bounds for singular values, in particular for the spectral norm of a matrix and its inverse. BIT Numer. Math. 51(2), 367–384 (2011)

Acknowledgements

Our dearest thanks to the anonymous referee for many helpful and constructive remarks.

Funding

Open Access funding enabled and organized by Projekt DEAL.

About this article

Cite this article

Rump, S.M., Oishi, S. A note on Oishi’s lower bound for the smallest singular value of linearized Galerkin equations. Japan J. Indust. Appl. Math. 41, 1097–1104 (2024). https://doi.org/10.1007/s13160-024-00645-7

DOI: https://doi.org/10.1007/s13160-024-00645-7

Keywords

- Bound for the norm of the inverse of a matrix

- Minimum singular value

- Galerkin’s equation

- Nonlinear delay differential equation

- Schur complement