Abstract

This study considers structural optimization under a reliability constraint, in which the input distribution is only partially known. Specifically, when it is only known that the expected value vector and the variance-covariance matrix of the input distribution belong to a given convex set, it is required that the failure probability of a structure should be no greater than a specified target value for any realization of the input distribution. We demonstrate that this distributionally-robust reliability constraint can be reduced equivalently to deterministic constraints. By using this reduction, we can handle a reliability-based design optimization problem under the distributionally-robust reliability constraint within the framework of deterministic optimization; in particular, nonlinear semidefinite programming. Two numerical examples are solved to demonstrate the relation between the optimal value and either the target reliability or the uncertainty magnitude.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Reliability-based design optimization (RBDO) is a crucial tool for structural design in the presence of uncertainty [2, 44, 56, 60]. RBDO adopts a probabilistic model of uncertainty and evaluates the probability that a structural design satisfies (or, equivalently, fails to satisfy) the performance requirements. An underlying premise is that complete knowledge on the statistical information of the uncertain parameters is available. However, it is often difficult to obtain statistical information with sufficient accuracy in practice. This has incented recent intensive studies on RBDO with incomplete statistical information [12, 13, 19, 25, 26, 28, 29, 43, 47, 48, 57, 61, 62, 64].

Another methodology that deals with uncertainty in structural design is robust design optimization [8, 24, 36]. Although several different concepts exist in robust design optimization, in this work we focus attention on the worst-case optimization methodology, which is known as robust optimization in the mathematical optimization community [6]. This methodology adopts a possibilistic model of uncertainty; that is, the set of possible values that the uncertain parameters can take is specified. We refer to this set as an uncertainty set. Subsequently, the objective value in the worst case is optimized, under the condition that the constraints are satisfied in the worst cases.

This study deals with RBDO when the input distribution is only partially known. In particular, we assume that the true expected value vector and the true variance-covariance matrix are unknown (i.e., the true values of the first two moments of the input distribution are unknown), but they are known to belong to a given closed convex set. For example, suppose that the input distribution is a normal distribution, and we only know that each component of the expected value vector and the variance-covariance matrix belongs to a given closed interval. Then, for each possible realization of a pair of the expected value vector and the variance-covariance matrix, a single corresponding normal distribution exists. The set of all such normal distributions is considered as an uncertainty set of the input distribution.Footnote 1 As another example, suppose that the distribution type of the input distribution is also unknown. In this case, the uncertainty set is the set of all probability distributions, the expected value vector and the variance-covariance matrix of which belong to a given set.Footnote 2 Among the probability distributions belonging to a specified uncertainty set defined as above, the worst-case distribution is that with which the failure probability takes the maximum value. Our methodology requires a structure to satisfy the reliability constraint that is evaluated with the worst-case distribution. In other words, for any probability distribution belonging to the uncertainty set, the failure probability should be no greater than a specified target value. Thus, the methodology guarantees robustness of the structural reliability against uncertainty in the input distribution.Footnote 3 The major contribution of this study is the demonstration, under several assumptions, that this structural requirement is equivalently converted into a form of constraints that can be handled in conventional deterministic optimization. As a result, a design optimization problem under this structural requirement can be solved using a deterministic nonlinear optimization approach.

RBDO methods with uncertainty in the input distribution have received considerable attention in recent years, because the number of available samples of random variables is often insufficient in practice. For example, Gunawan and Papalambros [19] and Youn and Wang [61] proposed Bayesian approaches to compute the confidence that a structural design satisfies a target reliability constraint when both a finite number of samples and probability distributions of uncertain parameters are available. Noh et al. [47, 48] proposed Bayesian methods to adjust an input distribution model to limited data with a given confidence level. Zaman et al. [63] and Zaman and Mahadevan [62] used a family of Johnson distributions to represent the uncertainty when the intervals of the input variables were provided as input information, Cho et al. [12] and Moon et al. [43] assumed that the input distribution types and parameters follow probability distributions. Therefore, the failure probability is a random variable, and the confidence level of a reliability constraint, i.e., the probability that the failure probability is no greater than a target value, is specified. To reduce the computational cost of this method, Jung et al. [29] proposed the so-called reliability measure approach, which was inspired by the performance measure approach [39, 41]. Subsequently, to the reduce computational cost further, Wang et al. [57] proposed the use of the second-order reliability method for computing the failure probability. Ito et al. [25] assumed that each of random variable follows a normal distribution with the mean and variance modeled as random variables, and demonstrated that RBDO with a confidence level can be converted into a conventional form of RBDO by altering the target reliability index value. Zhang et al. [64] proposed the use of the distributional probability box (the distributional p-box) [52] for RBDO with limited data of uncertain variables.Footnote 4 Kanno [34, 35] and Jekel and Haftka [27] proposed RBDO methods using order statistics. The methods in [27, 34, 35], which were based on the order statistics, did not make any assumptions regarding the statistical information of the uncertain parameters, and used random samples of the uncertain parameters directly to guarantee the confidence of the target reliability.

As per the above review, the majority of existing studies on RBDO with uncertainty in the input distribution [12, 25, 29, 43, 57] have considered probabilistic models of the input distribution parameters and/or distribution types. Accordingly, the confidence level evaluates the satisfaction of the structural reliability. In contrast, in this paper we consider a possibilistic model of the input distribution parameters. Hence, what this approach guarantees is a level of robustness [4] of the satisfaction of structural the reliability. In general, a possibilistic model may be less information-sensitive, and hence, useful when reliable statistical information of the input distribution parameters is unavailable.

From another perspective, with reference to Schöbi and Sudret [52], the uncertainty model presented in this paper can be viewed as follows: Uncertainty in a structural system is often divided into aleatory uncertainty and epistemic uncertainty [49]. Aleatory uncertainty, namely natural variability, is reflected by an (uncertain) input distribution. Epistemic uncertainty, namely state-of-knowledge uncertainty, is reflected by uncertainty in the input distribution moments. Thus, in our model, the aleatory uncertainty is probabilistic, whereas the epistemic uncertainty is possibilistic. That is, the state-of-knowledge uncertainty is represented as an uncertainty set of the input distribution moments.

We assume that only the design variables possess uncertainty, and that variation of a performance requirement can be approximated as a linear function of uncertain perturbations of the design variables. Furthermore, we do not consider an optimization problem with variations in the structural topology. We consider two concrete convex sets for the uncertainty model of moments of the input distribution. We demonstrate that the robust reliability constraint, i.e., the constraint that the structural reliability is no less than a specified value for any possible realizations of the input distribution moments, can be reduced to a system of nonlinear matrix inequalities. This reduction essentially follows the concept presented by El Ghaoui et al. [15] for computing the worst-case value-at-risk in financial engineering.Footnote 5 Nonlinear matrix inequality constraints can be dealt with within the framework of nonlinear semidefinite programming (nonlinear SDP) [59]. In this manner, an RBDO problem under uncertainty in the input distribution moments can be converted into a deterministic optimization problem. It is worth noting that several applications of linear and nonlinear SDPs, as well as eigenvalue optimization, exist for the robust design optimization of structures [7, 21,22,23, 30, 33, 38, 54, 55].

The remainder of this paper is organized as follows: In Sect. 2, we consider the reliability constraint when the input distribution is precisely known, and describe several fundamental properties. Section 3 presents the main result; we consider uncertainty in the expected value vector and the variance-covariance matrix of the input distribution, and examine the constraint that, for all possible realizations of the input distribution, the failure probability is no greater than a specified value. Several extensions of the obtained result are discussed in Sect. 4. Section 5 presents the results of the numerical experiments. The conclusions are summarized in Sect. 6.

In our notation, \({}^{\top }\) denotes the transpose of a vector or matrix. All vectors are column vectors. We use I to denote the identity matrix. For two matrices \(X=(X_{ij}) \in {\mathbb {R}}^{m \times n}\) and \(Y = (Y_{ij}) \in {\mathbb {R}}^{m \times n}\), \(X \bullet Y\) represents the inner product of X and Y, which is defined by \(X \bullet Y = \mathop {\mathrm {tr}}\nolimits (X^{\top } Y) = \sum _{i=1}^{n}\sum _{j=1}^{n}X_{ij}Y_{ij}\). For a vector \(\varvec{x} = (x_{i}) \in {\mathbb {R}}^{n}\), the notation \(\Vert \varvec{x} \Vert _{1}\), \(\Vert \varvec{x}\Vert _{2}\), and \(\Vert \varvec{x} \Vert _{\infty }\) designate its \(\ell _{1}\)-, \(\ell _{2}\)-, and \(\ell _{\infty }\)-norms, respectively, i.e.,

For a matrix \(X =(X_{ij}) \in {\mathbb {R}}^{m \times n}\), the matrix norms \(\Vert X \Vert _{1,1}\), \(\Vert X \Vert _{\mathrm {F}}\), and \(\Vert X \Vert _{\infty ,\infty }\) are defined by

Let \({\mathcal {S}}^{n}\) denote the set of \(n \times n\) symmetric matrices. We write \(Z \succeq 0\) if \(Z \in {\mathcal {S}}^{n}\) is positive semidefinite. Define \({\mathcal {S}}_{+}^{n}\) by \({\mathcal {S}}_{+}^{n} = \{ Z \in {\mathcal {S}}^{n} \mid Z \succeq 0 \}\). For a positive definite matrix \(Z \in {\mathcal {S}}^{n}\), the notation \(Z^{1/2}\) designates its symmetric square root, i.e., \(Z^{1/2} \in {\mathcal {S}}^{n}\) satisfying \(Z^{1/2} Z^{1/2} = Z\). We use \(Z^{-1/2}\) to denote the inverse matrix of \(Z^{1/2}\). We use \(\mathsf {N}(\varvec{\mu }, \varSigma )\) to denote the multivariate normal distribution with an expected value vector \(\varvec{\mu }\) and a variance-covariance matrix \(\varSigma \). The expected value and variance of a random variable \(x \in {\mathbb {R}}\) are denoted by \(\mathrm {E}[x]\) and \(\mathrm {Var}[x] = \mathrm {E}[(x - \mathrm {E}[x])^{2}]\), respectively.

2 Reliability constraint with specified moments

In this section, we assume that the expected value vector and variance-covariance matrix of the probability distribution of the design variable vector are precisely known. We first recall the reliability constraint, and subsequently derive its alternative expression that will be used in Sect. 3 to address uncertainty in the probability distribution.

Let \(\varvec{x} \in {\mathbb {R}}^{n}\) denote a design variable vector, where n is the number of design variables. Assume that the performance requirement in a design optimization problem is expressed as

where \(g : {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}\) is differentiable. For simplicity, suppose that the design optimization problem has only one constraint; the case in which more than one constraints exist is discussed in Sect. 4.

Assume that \(\varvec{x}\) is decomposed additively as

where \(\varvec{\zeta }\) is a random vector and \(\tilde{\varvec{x}}\) is a constant (i.e., non-random) vector. Therefore, in the design optimization problem considered in this study, the decision variable to be optimized is \(\tilde{\varvec{x}}\). We use \(\varvec{\mu } \in {\mathbb {R}}^{n}\) and \(\varSigma \in {\mathcal {S}}^{n}\) to denote the expected value vector and variance-covariance matrix of \(\varvec{\zeta }\), respectively, i.e.,

It should be noted that \(\varSigma \) is positive definite. Throughout the paper, we assume that, among the parameters in a structural system, only \(\varvec{\zeta }\) possesses uncertainty. Furthermore, we restrict our discussion to optimization without changes in the structural topology; i.e., we do not consider topology optimization.Footnote 6

For simplicity and clarity of the discussion, we assume \(\varvec{\zeta } \sim \mathsf {N}(\varvec{\mu }, \varSigma )\) in Sects. 2 and 3. In fact, the results that are established in these sections can be extended to the case in which the probability distribution type is unknown; in this case we require that the reliability constraint should be satisfied for any probability distribution with moments belonging to a specified set. We defer this case until Sect. 4.

As \(\varvec{x}\) is a random vector, \(g(\varvec{x})\) is a random variable. Therefore, constraint (1) should be considered in a probabilistic sense, which yields the reliability constraint

Here, the left side denotes the probability that \(g(\varvec{x}) = g(\tilde{\varvec{x}}+\varvec{\zeta })\) is no greater than 0 when \(\varvec{\zeta }\) follows \(\mathsf {N}(\varvec{\mu },\varSigma )\), and \(\epsilon \in ]0,1[\) on the right-hand side is the specified upper bound for the failure probability. Let \(g^{\mathrm {lin}}(\varvec{x})\) denote the first-order approximation of \(g(\varvec{x})\) centered at \(\varvec{x}=\tilde{\varvec{x}}\), i.e.,

Throughout the paper, we consider an approximation of constraint (2)

i.e.,

Therefore, the corresponding RBDO problem has the following form:

where \(f : {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}\) is the objective function, \(X \subseteq {\mathbb {R}}^{n}\) is a given closed set, and the constraint \(\tilde{\varvec{x}} \in X\) corresponds to, e.g., the side constraints on the design variables.

According to the basic property of the normal distribution, we can readily obtain the following reformulation of the reliability constraint.

Theorem 1

Define \(\kappa \) by

where \(\varPhi \) is the (cumulative) distribution function of the standard normal distribution \(\mathsf {N}(0,1)\). Then, \(\tilde{\varvec{x}} \in X\) satisfies (4) if and only if it satisfies

Proof

As \(g^{\mathrm {lin}}(\varvec{x})\) follows the normal distribution, it is standardized by

By using this relation, we can eliminate \(g^{\mathrm {lin}}(\varvec{x})\) from (3) (i.e., (4)) as follows:

This inequality is equivalently rewritten by using the distribution function \(\varPhi \):

Through direct calculations, we can observe that the expected value of \(g^{\mathrm {lin}}(\varvec{x})\) is

Moreover, the variance is

where the definition of the variance-covariance matrix of \(\varvec{\zeta }\) is used for the final equality. \(\square \)

In Sect. 3, we deal with the case in which \(\varvec{\mu }\) and \(\varSigma \) are known imprecisely. For this purpose, we reformulate \(\kappa \Vert \varSigma ^{1/2} \nabla g(\tilde{\varvec{x}}) \Vert _{2}\) in (6) into a form that is suitable for analysis. The following theorem is obtained in the same manner as in El Ghaoui et al. [15, Theorem 1].

Theorem 2

For \(\kappa > 0\), \(\varSigma \in {\mathcal {S}}_{+}^{n}\), and \(\nabla g(\tilde{\varvec{x}}) \in {\mathbb {R}}^{n}\), we obtain

Proof

We first demonstrate that the left side of the equation can be reduced to

For this observation, we apply the Lagrange multiplier method to the equality constrained maximization problem on the right side of (7). That is, the Lagrangian \(L_{1}: {\mathbb {R}}^{n} \times {\mathbb {R}}\rightarrow {\mathbb {R}}\) is defined by

where \(\mu \in {\mathbb {R}}\) is the Lagrange multiplier. The stationarity condition of \(L_{1}\) is

By solving this stationarity condition, we can observe that

are optimal. Hence, the optimal value is

which is reduced to the left side of (7).

Subsequently, it can be observed that the right side of (7) is further reduced to

Here, the first equality follows from the fact that the maximization of a linear function in an ellipsoid results in an optimal solution that lies on the boundary of the ellipsoid, whereas the final equality follows from the fact that the positive semidefinite constraint is equivalent to the nonnegative constraint on the Schur complement of \(\varSigma \) in the corresponding matrix, i.e., \(\kappa ^{2} - \varvec{\zeta }^{\top } \varSigma ^{-1} \varvec{\zeta } \ge 0\); see [9, Appendix A.5.5]. It is worth noting that the final expression in (8) is an SDP problem.

Finally, we demonstrate that the right side of the proposition in this theorem corresponds to the dual problem of the SDP problem in (8). As this dual problem is strictly feasible, the proposition follows from the strong duality of the SDP [10, Sect. 11.3]. We can derive the dual problem of (8) as follows. The Lagrangian is defined by

where \(z \in {\mathbb {R}}\), \(\varvec{\lambda } \in {\mathbb {R}}^{n}\), and \(\varLambda \in {\mathcal {S}}^{n}\) are the Lagrange multipliers. Indeed, because the positive semidefinite cone satisfies [31, Fact 1.3.17]

we can confirm that the SDP problem in (8) is equivalent to

The dual problem is defined by

As (9) can be rewritten as

we obtain

Therefore, the dual problem in (11) corresponds to the right side of the proposition of the theorem. \(\square \)

3 Worst-case reliability under uncertainty in moments

In this section, we consider the case in which the moments (in this study, the expected value vector and variance-covariance matrix) of the design variable vector are uncertain, or not perfectly known. Specifically, they are only known to belong to a given set, which is referred to as the uncertainty set. We require the structure to satisfy the reliability constraint for any moments in the uncertainty set. In other words, we require that the failure probability in the worst case is not larger than a specified value. We demonstrate that this requirement can be converted into a form of conventional constraints in deterministic optimization.

3.1 Convex uncertainty model of moments

Let \(U_{\varvec{\mu }} \subset {\mathbb {R}}^{n}\) and \(U_{\varSigma } \subset {\mathcal {S}}_{+}^{n}\) denote the uncertainty sets, i.e., the sets of all possible realizations, of \(\varvec{\mu }\) and \(\varSigma \), respectively. Namely, we only know that \(\varvec{\mu }\) and \(\varSigma \) satisfy

Assume that \(U_{\varvec{\mu }}\) and \(U_{\varSigma }\) are compact convex sets. For notational simplicity, we write \((\varvec{\mu },\varSigma ) \in U\) if \(\varvec{\mu } \in U_{\varvec{\mu }}\) and \(\varSigma \in U_{\varSigma }\) hold.

Recall that we consider the reliability constraint in (4) with a linearly approximated constraint function. The robust counterpart of (4) against uncertainty in \(\varvec{\mu }\) and \(\varSigma \) is formulated as

Thus, we require that the reliability constraint should be satisfied for any normal distribution corresponding to possible realizations of \(\varvec{\mu }\) and \(\varSigma \). This requirement is equivalently rewritten as

That is, the reliability constraint should be satisfied in the worst case.

With the aid of Theorems 1 and 2, the following theorem presents an equivalent reformulation of (13).

Theorem 3

\(\tilde{\varvec{x}} \in X\) satisfies (13) if and only if there exists a pair of \(z \in {\mathbb {R}}\) and \(\varLambda \in {\mathcal {S}}^{n}\) satisfying

Proof

It follows from Theorem 1 that (13) is equivalent to

Furthermore, application of Theorem 2 yields

In the above expression, we observe that U is compact and convex, and the feasible set for the minimization is convex. Moreover, the objective function is linear in \(\varvec{\mu }\) and \(\varSigma \) for fixed z and \(\varLambda \), and it is linear in z and \(\varLambda \) for fixed \(\varvec{\mu }\) and \(\varSigma \). Therefore, the minimax theorem [10, Theorem 8.8] asserts that (17) is equivalent to

This inequality holds if and only if there exists a feasible pair of \(z\in {\mathbb {R}}\) and \(\varLambda \in {\mathcal {S}}^{n}\) satisfying

which concludes the proof. \(\square \)

The conclusion of Theorem 3 is quite abstract in the sense that the concrete forms of \(U_{\varvec{\mu }}\) and \(U_{\varSigma }\) are not specified. To use this result into design optimization in practice, \(\max \{ \nabla g(\tilde{\varvec{x}})^{\top } \varvec{\mu } \mid \varvec{\mu } \in U_{\varvec{\mu }} \}\) and \(\max \{ \varSigma \bullet \varLambda \mid \varSigma \in U_{\varSigma } \}\) in (14) need to be reduced to tractable forms. A description of this process is provided in Sects. 3.2 and 3.3, where we consider two specific models of \(U_{\varvec{\mu }}\) and \(U_{\varSigma }\).

3.2 Uncertainty model with \(\ell _{\infty }\)-norm

Let \(\tilde{\varvec{\mu }} \in {\mathbb {R}}^{n}\) and \({\tilde{\varSigma }} \in {\mathcal {S}}^{n}\) denote the best estimates of \(\varvec{\mu }\) and \(\varSigma \), respectively, where \({\tilde{\varSigma }}\) is positive definite. In this section, we specialize the results of Sect. 3.1 to the case in which the uncertainty sets are provided as follows:

In the above, \(\varvec{z}_{1} \in {\mathbb {R}}^{m}\) and \(Z_{2} \in {\mathcal {S}}^{k}\) are unknown vector and matrix reflecting the uncertainty in \(\varvec{\mu }\) and \(\varSigma \), respectively, \(A \in {\mathbb {R}}^{n \times m}\) and \(B \in {\mathbb {R}}^{n \times k}\) are constant matrices, and \(\alpha \) and \(\beta \) are nonnegative parameters representing the magnitudes of the uncertainties.

Example 1

A simple example of the uncertainty set in (18) is a box-constrained model. For example, if we set \(\tilde{\varvec{\mu }}=\varvec{0}\) and \(A=I\) with \(m=n\), (18) is reduced to

This means that the expected value vector \(\varvec{\mu }\) belongs to a hypercube that is centered at the origin, with edges that are parallel to the axes and with an edge length of \(2\alpha \). In other words, each component \(\mu _{j}\) of \(\varvec{\mu }\) can take any value in \([-\alpha ,\alpha ]\). Similarly, a simple example of the uncertainty set in (19) is that with \(B=I\) and \(k=n\), i.e.,

This means that, roughly speaking, the variance-covariance matrix \(\varSigma \) has component-wise uncertainty. More precisely, for each i, \(j=1,\dots ,n\) we have

and \(\varSigma \) should be positive semidefinite. It should be noted that, even if \({\tilde{\varSigma }}\) and \(\beta \) satisfy \({\tilde{\varSigma }} - \beta \varvec{1}\varvec{1}^{\top } \succ 0\) and \({\tilde{\varSigma }} + \beta \varvec{1}\varvec{1}^{\top } \succ 0\) (where \(\varvec{1}\) denotes an all-ones column vector), (20) does not necessarily imply \(\varSigma \succ 0\). Indeed, as for the example with \(n=2\), consider

Then we have

and, for example, we observe that

satisfies (20); however, \(\varSigma \not \succ 0\). \(\square \)

Two technical lemmas are required to derive the main result in this section stated in Theorem 4. Lemma 1 explicitly computes the value of \(\max \{ \nabla g(\tilde{\varvec{x}})^{\top } \varvec{\mu } \mid \varvec{\mu } \in U_{\varvec{\mu }} \}\) in (14). Lemma 2 converts \(\max \{ \varSigma \bullet \varLambda \mid \varSigma \in U_{\varSigma }\}\) in (14) into a tractable form.

Lemma 1

For \(U_{\varvec{\mu }}\) defined by (18), we obtain

Proof

The substitution of (18) into the left side yields

It is known that the dual norm of the \(\ell _{\infty }\)-norm is the \(\ell _{1}\)-norm [9, Appendix A.1.6], i.e.,

Therefore, we obtain

which concludes the proof. \(\square \)

Lemma 2

For \(U _{\varSigma }\) defined by (19), we obtain

Proof

We demonstrate that the right side corresponds to the dual problem of the SDP problem on the left side. Therefore, this proposition follows from the strong duality of SDP [10, Sect. 11.3], because the dual problem is strictly feasible.

As preliminaries, for a convex cone defined by \(K = \{ (s,S) \in {\mathbb {R}}\times {\mathcal {S}}^{k} \mid \Vert S \Vert _{1,1} \le s \}\), it can be observed that its dual cone is expressed by [9, Example 2.25]

from which we obtain

Using definition (19) of \(U _{\varSigma }\), the left side of the proposition of this theorem can be reduced to

The Lagrangian of this optimization problem is defined by

where \(v \in {\mathbb {R}}\), \(V \in {\mathcal {S}}^{k}\), and \(\varOmega \in {\mathcal {S}}^{n}\) are the Lagrange multipliers. Indeed, by using (10) and (21), we can confirm that problem (22) is equivalent to

Accordingly, the dual problem is defined by

As (23) can be rewritten as

we obtain

Therefore, the dual problem in (24) is explicitly expressed as follows:

Constraint \(\Vert B^{\top } (\varLambda + \varOmega ) B \Vert _{1,1} \le v\) becomes active at an optimal solution, which concludes the proof. \(\square \)

We are now in position to state the main result of this section. We obtain the following fact using Theorem 3, Lemmas 1, and 2.

Theorem 4

Let \(U_{\varvec{\mu }}\) and \(U _{\varSigma }\) be the sets defined by (18) and (19), respectively. Then, \(\tilde{\varvec{x}} \in X\) satisfies (13) if and only if there exists a pair of \(z \in {\mathbb {R}}\) and \(W \in {\mathcal {S}}^{n}\) satisfying

Proof

It follows from Lemmas 1 and 2 that (14) and (15) in Theorem 3 can be equivalently rewritten as

We use \(W = \varLambda + \varOmega \) to observe that this is reduced to

which is straightforwardly equivalent to (25) and (26). \(\square \)

It should be emphasized that Theorem 4 converts the set of infinitely many reliability constraints in (12) into two deterministic constraints, i.e., (25) and (26). The latter constraints can be handled within the framework of conventional (deterministic) optimization.

Remark 1

For non-probabilistic modelings of uncertainty, it is commonly assumed that each uncertainty parameter can take any value belonging to a specified close interval. Such an uncertainty model is used in the worst-case structural analysis known as interval analysis [11, 20, 40, 42, 45], based on interval linear algebra [46], and worst-case robust optimization of structures [32, 37]. Constraint (12) that is considered in this study can be linked to the worst-case approaches as follows. Consider an extreme case that the variances and covariances of \(\zeta _{1},\dots ,\zeta _{n}\) are sufficiently small, i.e., \(\varSigma \) satisfies \({\hat{\epsilon }} I \succeq \varSigma \) with sufficiently small \({\hat{\epsilon }} > 0\). Then, roughly speaking, the reliability constraint in (4) can be approximated as

Moreover, we use \(\beta =0\) in (19), i.e., \(U_{\varSigma }=\{ {\tilde{\varSigma }} \}\) and \(\varSigma = {\tilde{\varSigma }}\). In accordance with the approximation in (27), the distributionally-robust reliability constraint in (12) can be approximated as

It follows from (18) that this constraint can be explicitly rewritten as

In this case, each component of \(\varvec{z}_{1}\) belongs to closed interval \([-\alpha ,\alpha ]\), and the constraint is imposed for all possible realizations of such \(\varvec{z}_{1}\). Thus, we can observe that (28) has a form similar to that considered in the worst-case approaches with an interval uncertainty model. It should be noted that the latter approaches usually do not resort to linear approximation of the constraint function, unlike (28). \(\square \)

3.3 Uncertainty model with \(\ell _{2}\)-norm

In this section, we consider the uncertainty sets that are defined by

Example 2

As a simple example, we use \(\tilde{\varvec{\mu }}=\varvec{0}\) and \(A=I\) with \(m=n\) to obtain

This means that the expected value vector \(\varvec{\mu }\) belongs to a hypersphere that is centered at the origin with radius \(\alpha \). Similarly, using \(B=I\) and \(k=n\), we obtain

This means that the variance-covariance matrix \(\varSigma \) satisfies

and is symmetric positive semidefinite. \(\square \)

In a manner parallel to the proofs of Lemmas 1 and 2, we can obtain

In the above, the facts

and

have been used.

Accordingly, analogous to Theorem 4, we can obtain the following conclusion: \(\tilde{\varvec{x}} \in X\) satisfies (13) if and only if there exists a pair of \(z \in {\mathbb {R}}\) and \(W \in {\mathcal {S}}^{n}\) satisfying

Remark 2

The definitions of uncertainty sets \(U_{\varvec{\mu }}\) and \(U_{\varSigma }\) that are adopted in this section are motivated by the ellipsoidal uncertainty sets that are widely used in robust structural optimization [7, 36, 38, 54, 55] as well as the so-called convex model approach to uncertainty analysis [3, 5, 50]. \(\square \)

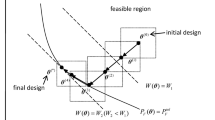

3.4 Truss optimization under compliance constraint

In this section, we present the manner in which the results established in the preceding sections can be employed for a specific RBDO problem. As a simple example, we consider a reliability constraint on the compliance under a static external load. We assume linear elasticity and small deformation.

For ease of comprehension, consider the design optimization of a truss. A truss is an assemblage of straight bars (referred to as members) connected by pin-joints (referred to as nodes) that do not transfer moment. In truss optimization, \(x_{j}\) denotes the cross-sectional area of member j \((j=1,\dots ,n)\), where n denotes the number of members. We attempt to minimize the structural volume of the truss \(\varvec{c}^{\top }\varvec{x}\) under the compliance constraint, where \(\varvec{x}\) is a design variable to be optimized and \(c_{j}\) denotes the undeformed length of member j. Figure 1 presents an example of a planar truss, where the bars and circles correspond to members and nodes, respectively. The locations of the bottom two nodes and the top left node are fixed. In this example, \(n=3\), \(c_{1}=1,\mathrm {m}\), \(c_{2}=\sqrt{2}\,\mathrm {m}\), and \(c_{3}=1\,\mathrm {m}\). Let \(\varvec{u} \in {\mathbb {R}}^{d}\) denote the nodal displacement vector, where d is the number of degrees of freedom of the nodal displacements. In the example depicted in Fig. 1, we have \(d=2\).

Let \(\varvec{p} \in {\mathbb {R}}^{d}\) denote the static external load vector. For a truss design \(\varvec{x}\), the compliance of the truss is defined by

where \(K(\varvec{x}) \in {\mathcal {S}}^{d}\) is the stiffness matrix of the truss. The compliance is a typical measure of the flexibility of structures: lower compliance indicates higher the structural stiffness. The first-order approximation of the compliance constraint is given by

where \({\bar{\pi }}\) \((>0)\) is a specified upper bound for the compliance. Accordingly, the design optimization problem to be solved is formulated as follows:

In the above, the specified lower bound for the member cross-sectional area, which is denoted by \({\bar{x}}_{j}\) \((j=1,\dots ,n)\), is positive, because in this paper we restrict our discussion to optimization problems without variations in the structural topology.

Regarding the uncertainty sets of the moments, consider, for example, \(U_{\varvec{\mu }}\) and \(U_{\varSigma }\) studied in Sect. 3.3. For simplicity, use \(A=B=I\) to obtain

From the result in Sect. 3.3, we see that problem (29) can be equivalently rewritten as follows:

In the above, \(\tilde{\varvec{x}} \in {\mathbb {R}}^{n}\), \(z \in {\mathbb {R}}\), and \(W \in {\mathcal {S}}^{n}\) are variables to be optimized. It should be noted that problem (30) is a nonlinear SDP problem.

The remainder of this section is devoted to presenting a method for solving problem (30) that will be used for the numerical experiments in Sect. 5.

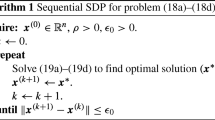

The method sequentially solves SDP problems that approximate problem (30), in a manner similar to sequential SDP methods for nonlinear SDP problems [38, 59]. Let \(\tilde{\varvec{x}}^{k}\) denote the incumbent solution that is obtained at iteration \(k-1\). Define \(\varvec{h}_{k} \in {\mathbb {R}}^{n}\) by

At iteration k, we replace \(\nabla \pi (\tilde{\varvec{x}})\) in (30c) and (30d) with \(\varvec{h}_{k}\). Moreover, to deal with \(\pi (\tilde{\varvec{x}})\) in (30c), we use the fact that \(s \in {\mathbb {R}}\) satisfies

if and only if

is satisfied [31, Sect. 3.1]. It is worth noting that, for trusses, \(K(\tilde{\varvec{x}})\) is linear in \(\tilde{\varvec{x}}\). Therefore, (31) is a linear matrix inequality with respect to \(\tilde{\varvec{x}}\) and s, and hence can be handled within the framework of (linear) SDP. In this manner, we obtain the following subproblem that is solved at iteration k for updating \(\tilde{\varvec{x}}^{k}\) to \(\tilde{\varvec{x}}^{k+1}\):

As this is a linear SDP problem, it can be solved efficiently with a primal-dual interior-point method [1].

4 Extensions

Several extensions of the results obtained in Sect. 3 are discussed in this section.

4.1 Robustness against uncertainty in distribution type

An important extension is that the obtained results can be applied to the case in which the moments as well as the probability distribution type are unknown. In this case, we consider any combination of all types of probability distributions and all possible moments (expected value vectors and variance-covariance matrices) in the uncertainty set, and the failure probability is required to be no greater than a specified value. This robustness against uncertainty in the distribution type is important as the input distribution is not necessarily known to be a normal distribution in practice.

Recall that, in Sects. 2 and 3, we assumed that the design variables, \(\varvec{x}\), follows a normal distribution. Then we consider the robust reliability constraint in (13). For the sake of clarity, we restate this problem setting in a slightly different manner. We have assumed that the random vector \(\varvec{\zeta }\) can possibly follow any normal distribution satisfying \(\varvec{\mu } \in U_{\varvec{\mu }}\) and \(\varSigma \in U_{\varSigma }\). We use \({\mathcal {P}}_{\mathsf {N}}\) to denote the set of such normal distributions, i.e.,

In other words, \({\mathcal {P}}_{\mathsf {N}}\) is the set of all possible realizations of the input distribution. We write \(p \in {\mathcal {P}}_{\mathsf {N}}\) if p is one of such realizations. With this new notation, (13) can be equivalently rewritten as

For \(U_{\varvec{\mu }}\) and \(U_{\varSigma }\) defined in Sect. 3.2, Theorem 4 demonstrates that (34) is equivalent to (25) and (26).

We are now in position to consider any type of probability distribution. Only what we assume is that the input distribution satisfies \(\varvec{\mu } \in U_{\varvec{\mu }}\) and \(\varSigma \in U_{\varSigma }\), where, for a while, we consider \(U_{\varvec{\mu }}\) and \(U_{\varSigma }\) defined in Sect. 3.2. We use \({\mathcal {P}}\) to denote the set of such distributions, i.e.,

Then, instead of (34), we consider the following constraint:

That is, we require the reliability constraint to be satisfied for any input distribution p satisfying \(p \in {\mathcal {P}}\). A main assertion of this section is that by simply setting

instead of \(\kappa =-\varPhi ^{-1}(\epsilon )\), constraint (36) is equivalent to (25) and (26) in Theorem 4. We can show this fact in the following manner. Let \({\mathcal {P}}(\varvec{\mu },\varSigma )\) denote the set of probability distributions, the expected value vector and the variance-covariance matrix of which are \(\varvec{\mu }\) and \(\varSigma \), respectively. Observe that, with \({\mathcal {P}}(\varvec{\mu },\varSigma )\), (36) can be equivalently rewritten as

With relation to the inner supremum, consider the condition

El Ghaoui et al. [15, Theorem 1] proved that (39) holds if and only if (6) of Theorem 1 holds with \(\kappa \) defined by (37). Therefore, all of the subsequent results established in Sects. 2 and 3 hold by simply replacing the value of \(\kappa \) with that in (37). Thus, the robust reliability constraint with an unknown distribution type is also reduced to the form in (25) and (26) of Theorem 4.

The results in Sect. 3.3, which were established for the \(\ell _{2}\)-norm uncertainty model, are also extended to the case of an unknown distribution type by replacing \(\kappa \) with the value in (37).

4.2 Multiple constraints

In Sects. 2 and 3, we have restricted our discussion to the case in which the design optimization problem has a single performance requirement, (1). In this section, we discuss the treatment of multiple constraints.

Suppose that the performance requirement is written as

The first-order approximation yields

where \(g_{i}^{\mathrm {lin}}(\varvec{x}) = g_{i}(\tilde{\varvec{x}}) + \nabla g_{i}(\tilde{\varvec{x}})^{\top }\varvec{\zeta }\) \((i=1,\dots ,m)\). Suppose that we impose a distributionally-robust reliability constraint for each \(i=1,\dots ,m\) independently, i.e.,

In the above, \({\mathcal {P}}\) is the set of possible realizations of the input distribution (i.e., \({\mathcal {P}}\) is either \({\mathcal {P}}_{\mathsf {N}}\) in (33) or \({\mathcal {P}}\) in (35)). It is worth noting that in (40) the worst case distributions are considered independently for each \(i=1,\dots ,m\). Constraint (40) can be straightforwardly handled in the same manner as in Sect. 3.

In contrast, suppose that we consider a single (i.e., common) worst-case distribution for all \(i=1,\dots ,m\). Then the distributionally-robust reliability constraint is written as

Treatment of this constraint remains to be studied as future work. It is worth noting that constraint (40) is conservative compared with constraint (41).

5 Numerical examples

In Sect. 3.4 we observed that the optimization problem of trusses under the compliance constraint can be reduced to problem (30). In this section, we solve this optimization problem numerically.

The algorithm presented in Sect. 3.4 was implemented in Matlab ver. 9.8.0.Footnote 7 The SDP problem in (32) was solved using CVX ver. 2.2 [16, 17] with SeDuMi ver. 1.3.4 [51, 53]. The computation was carried out on a 2.6 GHz Intel Core i7-9750H processor with 32 GB RAM.

Example of Monte Carlo simulation for a single sample of \(\varvec{\mu }\) and \(\varSigma \) (example (I) with the \(\ell _{\infty }\)-norm uncertainty model; the probability distributions are assumed to be normal distributions). a Samples of \(x_{1}={\tilde{x}}_{1}+\zeta _{1}\), b samples of \(x_{2}={\tilde{x}}_{2}+\zeta _{2}\), and c computed values of \(g(\tilde{\varvec{x}}) + \nabla g(\tilde{\varvec{x}})^{\top }\varvec{\zeta }\)

Results of double-loop Monte Carlo simulation (example (I) with the \(\ell _{\infty }\)-norm uncertainty model; the probability distributions are assumed to be normal distributions). a Failure probability of linearly approximated constraint \(g(\tilde{\varvec{x}}) + \nabla g(\tilde{\varvec{x}})^{\top }\varvec{\zeta } \le 0\), and b failure probability of constraint \(g(\tilde{\varvec{x}}+\varvec{\zeta }) \le 0\) without approximation

Results of double-loop Monte Carlo simulation (example (I) with the \(\ell _{2}\)-norm uncertainty model; the probability distributions are assumed to be normal distributions). Failure probability of linearly approximated constraint \(g(\tilde{\varvec{x}}) + \nabla g(\tilde{\varvec{x}})^{\top }\varvec{\zeta } \le 0\)

Optimal value (example (I); no restriction on distribution type is assumed) versus a failure probability (with the \(\ell _{\infty }\)-norm uncertainty model), b magnitude of uncertainty (\(\ell _{\infty }\)-norm), c failure probability (with the \(\ell _{2}\)-norm uncertainty model), and d magnitude of uncertainty (\(\ell _{2}\)-norm)

5.1 Example (I): two-bar truss

Consider the plane truss depicted in Fig. 2. The truss has \(n=2\) members and \(d=2\) degrees of freedom of the nodal displacements. The elastic modulus of the members is \(20\,\mathrm {GPa}\). A vertical external force of \(100\,\mathrm {kN}\) is applied to the free node. The upper bound for the compliance is \({\bar{\pi }}=100\,\mathrm {J}\).

We first consider the uncertainty model with the \(\ell _{\infty }\)-norm, which was studied in Sect. 3.2. In the uncertainty model in (18) and (19), we use \(A=B=I\) with \(m=k=n\), as considered in Example 1. The best estimates, or nominal values, of \(\varvec{\mu }\) and \(\varSigma \) are set to

The uncertainty magnitudes are \(\alpha =0.2\) and \(\beta =0.01\). The specified upper bound for the failure probability is \(\epsilon =0.01\). The optimal solution obtained by the proposed method is indicated in the row “\(\ell _{\infty }\)-norm unc.” of Table 1, where “obj. val.” means the objective value at the obtained solution. For comparison, the optimal solution of the nominal optimization problem (i.e., the conventional structural volume minimization under the compliance constraint without considering uncertainty) is also listed.

The optimization result is verified as follows. We randomly generate \(\varvec{\mu } \in U_{\varvec{\mu }}\) and \(\varSigma \in U_{\varSigma }\), and subsequently generate \(10^{6}\) samples drawn from \(\varvec{\zeta } \sim \mathsf {N}(\varvec{\mu },\varSigma )\). Figure 3a and b depict the samples of \(\varvec{x}=\tilde{\varvec{x}}+\varvec{\zeta }\) that are generated in this manner. Figure 3c presents the values of the linearly approximated constraint function,

for these samples. Therefore, the ratio of the number of samples for which these function values are positive to the number of all samples (i.e., \(10^{6}\)) should be no greater than \(\epsilon \) \((=0.01)\). We computed this ratio for each of the \(10^{4}\) randomly generated samples of \(\varvec{\mu } \in U_{\varvec{\mu }}\) and \(\varSigma \in U_{\varSigma }\), where the continuous uniform distribution is used to generate the samples of the components of \(\varvec{\mu }\) and \(\varSigma \). Figure 4a presents a histogram of the values of this ratio computed in this manner, i.e., it shows the distribution of the failure probability estimated by the double-loop Monte Carlo simulation. It can be observed from Fig. 4a that, for each of the \(10^{4}\) probability distribution samples, the failure probability is no greater than \(\epsilon \). Thus, it is verified that the obtained solution satisfies the distributionally-robust reliability constraint in (13). Indeed, among these samples of the failure probability, the maximum value is 0.009054 \((<\epsilon )\). For reference, Fig. 4b presents a histogram of the failure probabilities computed for the constraint function values without applying the linear approximation, i.e., \(g(\varvec{x}) = \pi (\varvec{x}) - {\bar{\pi }}\). It can be observed from Fig. 4b that the failure probability exceeds the target value \(\epsilon \) \((=0.01)\) only in rare cases. Figure 5a shows the variations in the optimal value with respect to the upper bound for the failure probability \(\epsilon \), where \(\alpha =0.2\) and \(\beta =0.01\) are fixed. As \(\epsilon \) decreases, the optimal value increases. In contrast, Fig. 5b shows the variations in the optimal value with respect to \(\alpha \) and \(\beta \), where \(\epsilon =0.01\) is fixed. Although only the values of \(\alpha \) are provided in Fig. 5b, the values of \(\beta \in [0,0.02]\) also vary in a manner proportional to \(\alpha \). The optimal value increases as the magnitude of the uncertainty increases.

Subsequently, we consider the uncertainty model with the \(\ell _{2}\)-norm, which was studied in Sect. 3.3. The uncertainty set is defined with A, B, \(\tilde{\varvec{\mu }}\), \({\tilde{\varSigma }}\), \(\alpha \), and \(\beta \) used above. The specified upper bound for the failure probability is \(\epsilon =0.01\). The obtained optimal solution is indicated in the row “\(\ell _{2}\)-norm unc.” of Table 1. It can be observed that the objective value is small compared to the solution with the \(\ell _{\infty }\)-norm uncertainty model. This is natural because, with the common values of \(\alpha \) and \(\beta \), the uncertainty set with the \(\ell _{2}\)-norm is included in the uncertainty set with the \(\ell _{\infty }\)-norm. The optimization result is verified in the same manner as above. Figure 6 presents \(10^{4}\) samples of the failure probability, each of which is computed with \(10^{6}\) samples of \(\varvec{\zeta }\). Figure 7a and b depict the variations in the optimal value with respect to the failure probability, \(\epsilon \), and the uncertainty magnitudes \(\alpha \) and \(\beta \), respectively. These variations exhibit trends that are similar to those with the \(\ell _{\infty }\)-norm uncertainty model in Fig. 5a and b.

Finally, as discussed in Sect. 4.1, we consider, not only the normal distributions, but all of the probability distributions with \(\varvec{\mu }\) and \(\varSigma \) belonging to the uncertainty set. That is, the set of possible realizations of probability distributions is given by (35). Figure 8 displays the variations in the optimal value with respect to the failure probability, \(\epsilon \), and the uncertainty magnitudes \(\alpha \) and \(\beta \) (in the same manner as above, the values of \(\beta \in [0,0.02]\) are varied in a manner proportional to \(\alpha \)). As expected, compared to the results for the normal distributions in Figs. 5 and 7, the optimal value in Fig. 8 is large. Moreover, as \(\epsilon \) decreases, the optimal value in Fig. 8a and c increases drastically compared to the cases in Figs. 5a and 7a.

5.2 Example (II): 29-bar truss

Obtained designs of example (II). Optimal solutions a without considering uncertainty, b with the \(\ell _{\infty }\)-norm uncertainty model, and c with the \(\ell _{2}\)-norm uncertainty model. In b and c, no restriction on distribution type is assumed, \(\epsilon =0.005\), \(\alpha =0.2\), and \(\beta =0.01\)

Consider the plane truss depicted in Fig. 9, where \(n=29\) and \(d=20\). The elastic modulus of the members is \(20\,\mathrm {GPa}\). Vertical external forces of \(100\,\mathrm {kN}\) are applied to two nodes, as illustrated in Fig. 9. The upper bound for the compliance is \({\bar{\pi }}=1000\,\mathrm {J}\). The lower bounds for the member cross-sectional areas are \(\bar{x}_{j} = 200\,{\text{mm}}^{{\text{2}}} \) \((j=1,\dots ,n)\).

For the uncertainty model, we consider both the model with the \(\ell _{2}\)-norm, using \(A=B=I\) with \(m=k=n\). The best estimates of \(\varvec{\mu }\) and \(\varSigma \) are

where \(\varvec{1} \in {\mathbb {R}}^{n}\) is an all-ones column vector. The uncertainty magnitudes are \(\alpha =0.2\) and \(\beta =0.01\). The specified upper bound for the failure probability is \(\epsilon =0.01\).

The optimization results obtained by the proposed method are listed in Table 2. Figure 10a and b present the variations in the optimal value with respect to the failure probability and the uncertainty magnitude, respectively.

As in Sect. 4.1, we require the reliability constraint to be satisfied for all the probability distributions satisfying \(\varvec{\mu } \in U_{\varvec{\mu }}\) and \(\varSigma \in U_{\varSigma }\), i.e., for any probability distribution belonging to \({\mathcal {P}}\) in (35). For the \(\ell _{2}\)-norm uncertainty, Fig. 11a and b depict the variations in the optimal value with respect to the failure probability and the uncertainty magnitude, respectively. Figure 12 presents the optimal solutions of the optimization problem without uncertainty, as well as the distributionally-robust RBDO problems with the two uncertainty models. The width of each member in these figures is proportional to its cross-sectional area.

6 Conclusions

This paper has dealt with the reliability-based design optimization (RBDO) of structures, in which the knowledge of the input distribution that is followed by the design variables is imprecise. Specifically, it is only known that the expected value vector and the variance-covariance matrix of the input distribution belong to a specified convex set, and their true values are not known. Then we attempted to optimize a structure, under the constraint that, even for the worst-case input distribution, the failure probability of the structure is no greater than the specified value. This constraint, which we refer to as the distributionally-robust reliability constraint, is equivalent to infinitely many reliability constraints corresponding to all possible realizations of the input distribution. Provided that the change in a constraint function value is well approximated as a linear function of uncertain perturbations of the design variables, a tractable reformulation of the distributionally-robust reliability constraint has been presented.

The concept of distributionally-robust RBDO has been established and fundamental results have been provided. However, various aspects remain to be studied. For example, we have considered uncertainty only in the design variables. Other sources of uncertainty in structural optimization can be explored. Furthermore, as discussed in Sect. 4.2, multiple performance requirements in the form of (41) remain to be studied. Extensions to topology optimization are of great interest, as topology optimization has greater design flexibility than the size optimization handled in this paper. Moreover, we have relied on the assumption that the quantity of interest is approximated, with sufficient accuracy, as a linear function of the uncertainty perturbations of the design variables. Extensions to nonlinear cases can be attempted. Finally, the development of a more efficient algorithm for solving the optimization problem presented in this paper can be studied.

Notes

This uncertainty set is discussed in Sect. 3.

This uncertainty set is discussed in Sect. 4.1.

More precisely, the uncertainty discussed here means the uncertainty in the expected value vector and the variance-covariance matrix of the input distribution.

The distributional p-box specifies the distribution type, and the upper and lower bounds of the value of the (cumulative) distribution function at each value of a random variable. In contrast, in this study, we specify the sets of realizations of the expected value vector and variance-covariance matrix.

In topology optimization, it would be appropriate to consider the design variables of the removed structural elements as non-random variables. We do not discuss this issue in this paper.

The source code for solving the optimization problems presented in Sect. 5 is available on-line at https://github.com/ykanno22/moment_worst/.

References

Anjos, M.F., Lasserre, J.B.: Handbook on semidefinite, conic and polynomial optimization. Springer, New York (2012)

Aoues, Y., Chateauneuf, A.: Benchmark study of numerical methods for reliability-based design optimization. Struct. Multidiscip. Optim. 41, 277–294 (2010)

Au, F.T.K., Cheng, Y.S., Tham, L.G., Zheng, G.W.: Robust design of structures using convex models. Comput. Struct. 81, 2611–2619 (2003)

Ben-Haim, Y.: Info-gap decision theory: decisions under severe uncertainty, 2nd edn. Academic Press, London (2006)

Ben-Haim, Y., Elishakoff, I.: Convex models of uncertainty in applied mechanics. Elsevier, New York (1990)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust optimization. Princeton University Press, Princeton (2009)

Ben-Tal, A., Nemirovski, A.: Robust truss topology optimization via semidefinite programming. SIAM J. Optim. 7, 991–1016 (1997)

Beyer, H.-G., Sendhoff, B.: Robust optimization—a comprehensive survey. Comput. Methods Appl. Mech. Eng. 196, 3190–3218 (2007)

Boyd, S., Vandenberghe, L.: Convex optimization. Cambridge University Press, Cambridge (2004)

Calafiore, G.C., El Ghaoui, L.: Optimization models. Cambridge University Press, Cambridge (2014)

Chen, S., Lian, H., Yang, X.: Interval static displacement analysis for structures with interval parameters. Int. J. Numer. Methods Eng. 53, 393–407 (2002)

Cho, H., Choi, K.K., Gaul, N.J., Lee, I., Lamb, D., Gorsich, D.: Conservative reliability-based design optimization method with insufficient input data. Struct. Multidiscip. Optim. 54, 1609–1630 (2016)

Choi, J., An, D., Won, J.: Bayesian approach for structural reliability analysis and optimization using the Kriging dimension reduction method. J. Mech. Des. 132, 051003 (2010)

Delage, E., Ye, Y.: Distributionally robust optimization under moment uncertainty with application to data-driven problems. Oper. Res. 58, 595–612 (2010)

El Ghaoui, L., Oks, M., Oustry, F.: Worst-case value-at-risk and robust portfolio optimization: a conic programming approach. Oper. Res. 51, 543–556 (2003)

Grant, M., Boyd, S.: Graph implementations for nonsmooth convex programs. In: Blondel, V., Boyd, S., Kimura, H. (eds.) Recent advances in learning and control (a tribute to M. Vidyasagar), pp. 95–110. Springer, New York (2008)

Grant, M., Boyd, S.: CVX: Matlab software for disciplined convex programming. http://cvxr.com/cvx/ Accessed Apr 2021

Goh, J., Sim, M.: Distributionally robust optimization and its tractable approximations. Oper. Res. 58, 902–917 (2010)

Gunawan, S., Papalambros, P.Y.: A Bayesian approach to reliability-based optimization with incomplete information. J. Mech. Des. 128, 909–918 (2006)

Guo, X., Bai, W., Zhang, W.: Extreme structural response analysis of truss structures under material uncertainty via linear mixed 0–1 programming. Int. J. Numer. Methods Eng. 76, 253–277 (2008)

Guo, X., Bai, W., Zhang, W., Gao, X.: Confidence structural robust design and optimization under stiffness and load uncertainties. Comput. Methods Appl. Mech. Eng. 198, 3378–3399 (2009)

Guo, X., Du, J., Gao, X.: Confidence structural robust optimization by non-linear semidefinite programming-based single-level formulation. Int. J. Numer. Methods Eng. 86, 953–974 (2011)

Holmberg, E., Thore, C.-J., Klarbring, A.: Worst-case topology optimization of self-weight loaded structures using semi-definite programming. Struct. Multidiscip. Optim. 52, 915–928 (2015)

Huan, Z., Zhenghong, G., Fang, X., Yidian, Z.: Review of robust aerodynamic design optimization for air vehicles. Arch. Comput. Methods Eng. 26, 685–732 (2019)

Ito, M., Kim, N.H., Kogiso, N.: Conservative reliability index for epistemic uncertainty in reliability-based design optimization. Struct. Multidiscip. Optim. 57, 1919–1935 (2018)

Ito, M., Kogiso, N.: Information uncertainty evaluated by parameter estimation and its effect on reliability-based multiobjective optimization. J. Adv. Mech. Des. Syst. Manuf. 10, 16–00331 (2016)

Jekel, C.F., Haftka, R.T.: Risk allocation for design optimization with unidentified statistical distributions. AIAA Scitech 2020 Forum, Orlando (2020)

Jiang, Z., Chen, W., Fu, Y., Yang, R.-J.: Reliability-based design optimization with model bias and data uncertainty. SAE Int. J. Mater. Manuf. 6, 502–516 (2013)

Jung, Y., Cho, H., Lee, I.: Reliability measure approach for confidence-based design optimization under insufficient input data. Struct. Multidiscip. Optim. 60, 1967–1982 (2019)

Kang, Z., Zhang, W.: Construction and application of an ellipsoidal convex model using a semi-definite programming formulation from measured data. Comput. Methods Appl. Mech. Eng. 300, 461–489 (2016)

Kanno, Y.: Nonsmooth mechanics and convex optimization. CRC Press, Boca Raton (2011)

Kanno, Y.: An implicit formulation of mathematical program with complementarity constraints for application to robust structural optimization. J. Oper. Res. Soc. Japan 54, 65–85 (2011)

Kanno, Y.: Robust truss topology optimization via semidefinite programming with complementarity constraints: a difference-of-convex programming approach. Comput. Optim. Appl. 71, 403–433 (2018)

Kanno, Y.: A data-driven approach to non-parametric reliability-based design optimization of structures with uncertain load. Struct. Multidiscip. Optim. 60, 83–97 (2019)

Kanno, Y.: Dimensionality reduction enhances data-driven reliability-based design optimizer. J. Adv. Mech. Des. Syst. Manuf. 14, 19–00200 (2020a)

Kanno, Y.: On three concepts in robust design optimization: absolute robustness, relative robustness, and less variance. Struct. Multidiscip. Optim. 62, 979–1000 (2020b)

Kanno, Y., Guo, X.: A mixed integer programming for robust truss topology optimization with stress constraints. Int. J. Numer. Methods Eng. 83, 1675–1699 (2010)

Kanno, Y., Takewaki, I.: Sequential semidefinite program for robust truss optimization based on robustness functions associated with stress constraints. J. Optim. Theory Appl. 130, 265–287 (2006)

Keshtegar, B., Lee, I.: Relaxed performance measure approach for reliability-based design optimization. Struct. Multidiscip. Optim. 54, 1439–1454 (2016)

Köylüoǧlu, H.U., Çakmak, A.Ş, Nielsen, S.R.K.: Interval algebra to deal with pattern loading and structural uncertainties. J. Eng. Mech. 121, 1149–1157 (1995)

Lee, I., Choi, K.K., Gorsich, D.: Sensitivity analyses of FORM-based and DRM-based performance measure approach (PMA) for reliability-based design optimization (RBDO). Int. J. Numer. Methods Eng. 82, 26–46 (2010)

McWilliam, S.: Anti-optimization of uncertain structures using interval analysis. Comput. Struct. 79, 421–430 (2001)

Moon, M.-Y., Cho, H., Choi, K.K., Gaul, N., Lamb, D., Gorsich, D.: Confidence-based reliability assessment considering limited numbers of both input and output test data. Struct. Multidiscip. Optim. 57, 2027–2043 (2018)

Moustapha, M., Sudret, B.: Surrogate-assisted reliability-based design optimization: a survey and a unified modular framework. Struct. Multidiscip. Optim. 60, 2157–2176 (2019)

Muhanna, R.L., Mullen, R.L.: Uncertainty in mechanics problems—interval-based approach. J. Eng. Mech. 127, 557–566 (2001)

Neumaier, A.: Interval methods for systems of equations. Cambridge University Press, Cambridge (1990)

Noh, Y., Choi, K.K., Lee, I., Gorsich, D., Lamb, D.: Reliability-based design optimization with confidence level under input model uncertainty due to limited test data. Struct. Multidiscip. Optim. 43, 443–458 (2011)

Noh, Y., Choi, K.K., Lee, I., Gorsich, D., Lamb, D.: Reliability-based design optimization with confidence level for non-Gaussian distributions using bootstrap method. J. Mech. Des. 133, 091001 (2011)

Oberkampf, W.L., Helton, J.C., Joslyn, C.A., Wojtkiewicz, S.F., Ferson, S.: Challenge problems: uncertainty in system response given uncertain parameters. Reliab. Eng. Syst. Saf. 85, 11–19 (2004)

Pantelides, C.P., Ganzerli, S.: Design of trusses under uncertain loads using convex models. J. Struct. Eng. 124, 318–329 (1989)

Pólik, I.: Addendum to the SeDuMi User Guide: Version 1.1. Technical Report, Advanced Optimization Laboratory. McMaster University, Hamilton (2005) http://sedumi.ie.lehigh.edu/sedumi/ Accessed Apr 2021

Schöbi, R., Sudret, B.: Structural reliability analysis for p-boxes using multi-level meta-models. Probab. Eng. Mech. 48, 27–38 (2017)

Sturm, J.F.: Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optim. Methods Softw. 11–12, 625–653 (1999)

Takezawa, A., Nii, S., Kitamura, M., Kogiso, N.: Topology optimization for worst load conditions based on the eigenvalue analysis of an aggregated linear system. Comput. Methods Appl. Mech. Eng. 200, 2268–2281 (2011)

Thore, C.-J., Holmberg, E., Klarbring, A.: A general framework for robust topology optimization under load-uncertainty including stress constraints. Comput. Methods Appl. Mech. Eng. 319, 1–18 (2017)

Valdebenito, M.A., Schuëller, G.I.: A survey on approaches for reliability-based optimization. Struct. Multidiscip. Optim. 42, 645–663 (2010)

Wang, Y., Hao, P., Yang, H., Wang, B., Gao, Q.: A confidence-based reliability optimization with single loop strategy and second-order reliability method. Comput. Methods Appl. Mech. Eng. 372, 113436 (2020)

Wiesemann, W., Kuhn, D., Sim, M.: Distributionally robust convex optimization. Oper. Res. 62, 1358–1376 (2014)

Yamashita, H., Yabe, H.: A survey of numerical methods for nonlinear semidefinite programming. J. Oper. Res. Soc Japan 58, 24–60 (2015)

Yao, W., Chen, X., Luo, W., van Tooren, M., Guo, J.: Review of uncertainty-based multidisciplinary design optimization methods for aerospace vehicles. Progress Aerosp. Sci. 47, 450–479 (2011)

Youn, B.D., Wang, P.: Bayesian reliability-based design optimization using eigenvector dimension reduction (EDR) method. Struct. Multidiscip. Optim. 36, 107–123 (2008)

Zaman, K., Mahadevan, S.: Reliability-based design optimization of multidisciplinary system under aleatory and epistemic uncertainty. Struct. Multidiscip. Optim. 55, 681–699 (2017)

Zaman, K., Rangavajhala, S., McDonald, M.P., Mahadevan, S.: A probabilistic approach for representation of interval uncertainty. Reliab. Eng. Syst. Saf. 96, 117–130 (2011)

Zhang, J., Gao, L., Xiao, M., Lee, S., Eshghi, A.T.: An active learning Kriging-assisted method for reliability-based design optimization under distributional probability-box model. Struct. Multidiscip. Optim. 62, 2341–2356 (2020)

Acknowledgements

This work is supported by a Research Grant from the Maeda Engineering Foundation and JSPS KAKENHI (17K06633, 21K04351).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

This article is published under an open access license. Please check the 'Copyright Information' section either on this page or in the PDF for details of this license and what re-use is permitted. If your intended use exceeds what is permitted by the license or if you are unable to locate the licence and re-use information, please contact the Rights and Permissions team.

About this article

Cite this article

Kanno, Y. Structural reliability under uncertainty in moments: distributionally-robust reliability-based design optimization. Japan J. Indust. Appl. Math. 39, 195–226 (2022). https://doi.org/10.1007/s13160-021-00483-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13160-021-00483-x

Keywords

- Reliability-based design optimization

- Uncertain input distribution

- Worst-case reliability

- Robust optimization

- Semidefinite programming

- Duality