Abstract

The periodic behavior of real data can be manifested in the time series or in its characteristics. One of the characteristics that often manifests the periodic behavior is the sample autocovariance function. In this case, the periodically correlated (PC) behavior is considered. One of the main models that exhibits PC property is the periodic autoregressive (PARMA) model that is considered as the generalization of the classical autoregressive moving average (ARMA) process. However, when one considers the real data, practically the observed trajectory corresponds to the “pure” model with the additional noise which is a result of the noise of the measurement device or other external forces. Thus, in this paper we consider the model that is a sum of the periodic autoregressive (PAR) time series and the additive noise with finite-variance distribution. We present the main properties of the considered model indicating its PC property. One of the main goals of this paper is to introduce the new estimation method for the considered model’s parameters. The novel algorithm takes under consideration the additive noise in the model and can be considered as the modification of the classical Yule–Walker algorithm that utilizes the autocovariance function. Here, we propose two versions of the new method, namely the classical and the robust ones. The effectiveness of the proposed methodology is verified by Monte Carlo simulations. The comparison with the classical Yule–Walker method is presented. The approach proposed in this paper is universal and can be applied to any finite-variance models with the additive noise.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many real data exhibit periodic behavior. The periodicity can be manifested in the time series or in its characteristics. One of the characteristics that often manifests the periodic behavior is the sample autocovariance function. In this case, we can say about periodically correlated behavior and the corresponding theoretical process is called periodically correlated (PC) or second-order cyclostationary. The idea of periodically correlated processes was initiated in [1, 2] and then extensively extended by many authors [3,4,5]. One of the most known PC models is the periodic autoregressive moving average (PARMA) time series, [6, 7]. In the last years, the PARMA models were discussed in different directions [8,9,10,11,12,13,14,15]. They are treated as the generalization of the classical autoregressive moving average (ARMA) time series [16]. However, in the PARMA models the corresponding parameters are periodic in contrast to the ARMA time series, where they are constant. The PARMA models are also considered as a special case of the time-dependent coefficients ARMA time series [17,18,19]. The classical definition of the PARMA models assumes that innovations are finite-variance distributed, e.g., Gaussian distributed.

The PC models (and PARMA time series) were applied in various real problems, including mechanical systems [20, 21], hydrology [6, 22], climatology and meteorology [23, 24], economics [25, 26], medicine and biology [27, 28] and many others.

In the literature, there are many known modifications of classical PARMA models that are more adequate for the specific behavior of the data. One of the most known is the replacement of the finite-variance innovations (mostly Gaussian distributed) by the innovations with non-Gaussian distribution. In general, one can consider the PARMA models with infinite-variance distribution (e.g., heavy-tailed). Such models were considered in different applications, e.g., finance [29], physics [30], electricity market [31], technical diagnostics [32,33,34], geophysical science [35, 36] and many others. See also the bibliography positions, where the PARMA models with heavy-tailed behavior were considered from the theoretical point of view [37,38,39].

When one analyzes the real measurement data with some specific behavior (like PC behavior) adequate for given theoretical model, we may assume it is always disturbed by measurement noise. Thus, in practice we do not observe the trajectory of the “pure” model. Therefore, one may assume that the model under consideration is disturbed by the additional noise that may be related to the noise of the measurement device or to other sources influencing the observations. This problem was discussed for instance in [40], where the authors considered the “pure” fractional Brownian motion with the additive noise. See also interesting bibliography positions where the similar problem was considered for various models [41,42,43,44,45].

In this paper, we consider the model described by Eq. (1) that is the classical PAR (periodic autoregressive) time series disturbed by the additive noise with finite-variance distribution. The assumption that the real data correspond to this model influences that the classical estimation methods for the PARMA models’ parameters may not be effective. The situation is simpler, when the additive noise has smaller variance. In that case, the known algorithms can be accepted. However, when the level of the additive noise is noticeable, the classical algorithms need to be modified in order to include the additional disturbances. Moreover, as it is shown in our simulation study, the distribution of the additive noise has also influence on the final results. The different behavior we observe in case of the Gaussian additive noise and completely different in case when the additional noise is considered as the additive outlier, i.e., large observations (in absolute value) appearing in the data with given probability. The considered problem in the context of the PARMA models was discussed in [46] where the authors proposed to use the robust algorithms for PARMA models’ parameters estimation without changing the estimation procedures. See also [47,48,49,50,51,52,53,54].

In this paper, we go step forward and propose to modify the classical algorithm of the PARMA models’ parameters estimation taking under consideration the additive noise. The classical estimation method useful for the PARMA models is based on the so-called Yule–Walker approach [4]. It utilizes the autocovariance function of the time series, and at the final step, the classical measure of dependence is replaced by its empirical counterpart. The classical Yule–Walker algorithm is efficient for “pure” PARMA models. Moreover, it does not assume any specific distribution of the data; thus, in this sense it is universal. However, when the model under consideration is described by the process given in Eq. (1), the classical Yule–Walker algorithm seems to be not effective, especially for the additional noise with large variance.

The main goal of this paper is to introduce the general model that is a sum of the “pure” PAR time series and the additive noise and demonstrate its main properties. We have shown that the considered model is still PC; however, it does not satisfy the PARMA equation. The second goal is to propose the simple modification of the classical estimation algorithm and demonstrate its effectiveness for the considered model. Moreover, we introduce the classical and robust version of the modified Yule–Walker method and show their efficiency with respect to the distribution of the additive noise. The estimation results for the new algorithms are compared with the classical Yule–Walker approach with classical and robust estimator of the autocovariance function for various distributions of the additive noise.

The rest of the paper is organized as follows. In Sect. 2, we introduce the considered model and present its main properties. Next, in Sect. 3 we propose a novel estimation algorithm for the considered model’s parameters and demonstrate its classical and robust version. In Sect. 4, using the Monte Carlo simulations, we demonstrate the effectiveness of the proposed methodology and present the comparative study, where the results for the modified and classical Yule–Walker algorithms are discussed. The last section concludes the paper and presents the future study.

2 Model description

The model under consideration is defined as follows:

where \(\{X_t,~t\in \mathbb {Z}\}\) is the periodic autoregressive time series of order 1 (PAR(1)) with period T and finite-variance innovations (called later the finite-variance PAR(1) model), while \(\{Z_t,~t\in \mathbb {Z}\}\) is the sequence of independent random variables (called later the additive noise) with zero mean and constant variance \(\sigma _Z^2\). We assume that the time series \(\{X_t\}\) and \(\{Z_t\}\) are independent. In the further parts of the paper, model defined by Eq. (1) will be called the finite-variance PAR(1) time series with additive noise.

In the following part, we remind the definition of finite-variance PAR(1) model and the properties that guarantee the existence of its unique bounded solution. The PAR(1) time series is a special case of periodic autoregressive moving average (PARMA) model that is a periodic extension of the well-known ARMA (autoregressive moving average) system [16]. The finite-variance (called also second-order) PARMA(\(p,\,q\)) time series is defined as follows.

Definition 1

[55] The sequence \(\{X_t,~t\in \mathbb {Z}\}\) is a second-order PARMA(p,q) (\(p,q\in \mathbb {N}\)) model with period \(T\in \mathbb {N}\) when it satisfies the following equation:

In Eq. (2), the \(\{\xi _t,~t\in \mathbb {Z}\}\) sequence constitutes a sample of uncorrelated random variables with mean equal to zero. We assume that the variance for each \(\xi _t\) is \(\sigma _{\xi }^2(t)\). Moreover, the scalar sequences \(\{\phi _i(t),~i=1,\ldots ,p\}\), \(\{\theta _j(t),~j=1,\ldots ,q\}\) and \(\sigma _{\xi }^2(t)\) are periodic in t with the same period T.

Usually, it is assumed that \(\{\xi _t\}\) is a sequence of Gaussian distributed random variables. And this is the case considered in this paper. However, all presented properties hold also for any finite-variance distribution. For \(T=1\), the finite-variance PARMA time series reduces to the classical finite-variance ARMA model [16].

When \(p=1\) and \(q=0\), the PARMA model given in Definition 1 is called the PAR(1) time series and is given by the following equation:

In that case, the unique bounded (in the sense of \(l_2\) norm) solution of Eq. (3) exists if and only if:

and is given by [17]:

where \(\varPhi _{k}^n=\prod _{r=k}^n\phi (r)\) with the convention \(\varPhi _k^n=1\) when \(k>n\), see also [39]. One of the main features of finite-variance PARMA time series (and thus its special case finite-variance PAR(1) model) is that it exhibits the second-order cyclostationarity, called periodically correlated (PC) property. We remind that the finite-variance time series \(\{X_t,~t\in \mathbb {Z}\}\) is periodically correlated with period \(T\in \mathbb {N}\) if its mean and autocovariance functions are periodic in t with the period T, i.e., when the following conditions hold for any \(s,t\in \mathbb {Z}\) [4]:

where \(\mathrm{cov}(X_s,X_t)=\mathbb {E}(X_sX_t)-\mathbb {E}(X_s)\mathbb {E}(X_t)\) is the autocovariance function of the time series \(\{X_t\}\). Indeed, under the condition given in Eq. (4), using Eq. (5), the fact that the sequence \(\{\xi _t\}\) constitutes a sample of uncorrelated random variables and the fact that, for each \(t\in \mathbb {Z}\), \(\mathbb {E}\xi _t=0\), \(\mathrm{Var}(\xi _t)=\sigma ^2_{\xi }(t)\), we obtain the following:

The interesting properties of finite-variance PAR(1) time series in the time and frequency domains one can find for instance in [56].

Using the properties of the finite-variance PAR(1) model presented above, one can discuss the similar properties of the model defined in Eq. (1). One can easily show that the time series \(\{Y_t\}\) has mean equal to zero and autocovariance function given by:

where \(\mathbb {I}_A\) is the indicator of the set A. Thus, from Eq. (8), one can conclude that the sequence \(\{Y_t\}\) also exhibits the PC property. However, it does not satisfy the PAR(1) model given in Eq. (3). In the following remark, we confirm this statement.

Remark 1

Let the time series \(\{Y_t,~t\in \mathbb {Z}\}\) be defined as in Eq. (1), where the time series \(\{X_t\}\) is finite-variance PAR(1) model with period T defined in Eq. (3) and \(\{Z_t\}\) is the sequence of independent random variables with zero mean and finite variance \(\sigma ^2_Z\). Moreover, the time series \(\{X_t\}\) and \(\{Z_t\}\) are independent. Then, the time series \(\{Y_t\}\) satisfies the following equation:

Proof

The proof of Eq. (9) follows directly from the definition of the time series \(\{Y_t\}\) given in Eq. (1) and the definition of the finite-variance PAR(1) time series \(\{X_t\}\) given in Eq. (3). Indeed, we have the following:

And thus, we obtain:

which corresponds to the thesis. \(\square\)

Using the form of the bounded solution for PAR(1) time series given in Eq. (5), one can show that when the sequences \(\{\xi _t\}\) and \(\{Z_t\}\) are Gaussian distributed, the time series \(\{Y_t\}\) is also Gaussian distributed.

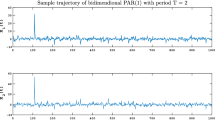

In Fig. 1a, we present the sample trajectory of the PAR(1) time series with period \(T=10\) and exemplary values of the parameters (i.e., \(\phi (1),\ldots ,\phi (10)\)) with Gaussian distributed innovation sequence \(\{\xi _t\}\) with zero mean and unit variance for each t. One can clearly see that the periodic behavior is observable on the level of the time series. In Fig. 1b–d, we present the sample trajectories of the model defined in Eq. (1) with three different \(\{Z_t\}\) sequences added to the trajectory presented in panel (a). In panel (b), we assume that \(\{Z_t\}\) constitutes a sequence of independent random variables from Gaussian distribution with zero mean and \(\sigma _Z=0.8\), in panel (c) we assume that for each t \(Z_t\) has Student’s t distribution with \(\nu =3\) degrees of freedom \(t(\nu )\). The definition of Student’s t distribution is presented in “Appendix.” In panel (d), we demonstrate the sample trajectory of the model (1) when \(\{Z_t\}\) is the sequence of additive outliers (AO) with parameters \(a=10\) and \(p=0.02\) (AO(a, p)). The definition of AO is also presented in “Appendix.” One can see that the main difference between the trajectories presented in panels (a)-(d) in Fig. 1 is related to the scale (amplitude) of the data.

The sample trajectory of PAR(1) time series with Gaussian innovations (a) and the corresponding sample trajectories of the model (1) with three different distributions of \(\{Z_t\}\) sequences: b Gaussian, c Student’s t and d AO

For comparison, we present the sample variances (i.e., \(\mathrm{cov}(Y_t,Y_t))\)) calculated for \(M=\) 10,000 simulated trajectories of the model (1), see Fig. 2. Similarly as previously, we assume that \(\{X_t\}\) is a PAR(1) time series with \(T=10\) with Gaussian distributed innovation series with zero mean and unit variance. We took the same three different distributions (with the same parameters) of the sequence \(\{Z_t\}\) as discussed above, i.e., in panel (b) we present the Gaussian distribution case, in panel (c)—the Student’s t distribution case, while in panel (d)—AO case. One can see, for the pure PAR(1) time series, the periodic behavior is clearly seen. The same situation we have for the Gaussian distributed case (panel (b)). For Student’s t distribution and AO cases, the periodic behavior is disturbed by the variance of the \(\{Z_t\}\) sequence. Moreover, the scales in panels (c) and (d) differ from those presented in panels (a) and (b).

The sample variances for \(M=\) 10,000 trajectories of PAR(1) time series with Gaussian innovations (a) and the corresponding sample variances for trajectories of model (1) with three different distributions of \(\{Z_t\}\) sequences: b Gaussian, c Student’s t and d AO

3 The estimation methods for finite-variance PAR(1) time series with additive noise

In this section, we present the algorithms that can be used for estimation of the analyzed model parameters. First, we remind the classical method of estimation of finite-variance PAR(1) model’s parameters, namely, the classical Yule–Walker algorithm [4]. This approach can be acceptable when the \(\sigma ^2_Z\) parameter in model (1) is relatively small. Then, we propose a modification of the classical Yule–Walker algorithm that takes under consideration the additive noise \(\{Z_t\}\) in the model (1). Finally, we discuss the robust version of the classical Yule–Walker algorithm and the modified Yule–Walker method introduced in this paper that utilizes the robust estimator of the autocovariance function [57]. It is worth mentioning that in all considered algorithms we do not assume the specific distribution of the innovations of the PAR(1) model nor the \(\{Z_t\}\) sequence in the model (1). The only assumption needed here is the finite variance of both sequences.

In the classical Yule–Walker approach dedicated to finite-variance PAR(1) time series with period T, we proceed as follows. Let us note that each \(t\in \mathbb {Z}\) can be represented as \(t=nT+v\), where \(n\in \mathbb {Z}\), \(v=1,2\ldots ,T\). Thus, Eq. (3) can be written in the following form:

Because \(\{X_t\}\) is a PC sequence, we take the notation for its autocovariance function:

Now, taking under consideration that the sequence \(\{\xi _t\}\) has zero mean and assuming that \(|P|<1\), we multiply the above equation by \(X_{nT+v}\) and take the expected value of both sides. Thus, we obtain the following:

We repeat the above step by multiplying Eq. (10) by \(X_{nT+v-1}\) and taking the expected value of both sides:

Thus, we obtain the following formulas for \(\phi (v)\) and \(\sigma ^2_{\xi }(v)\) parameters.

Remark 2

If \(\{X_t,~t\in \mathbb {Z}\}\) is the finite-variance PAR(1) time series with period T given in Eq. (3), then the parameters of the model satisfy the following system of equations:

In the classical Yule–Walker approach, the theoretical autocovariance functions \(\gamma (\cdot ,\cdot )\) in Eq. (14) are replaced by their empirical counterparts. In this paper, we consider two estimators of the autocovariance function.

If \(X_1,X_2,\ldots ,X_N\) is a sample realization of the finite-variance PAR(1) model with period T given in Eq. (3), then the classical estimator for \(\gamma _X(v,k)\) for \(v=1,2,\ldots ,T\) and \(k\in \mathbb {Z}\) is given by:

where

to ensure that both indices \(nT+v\) and \(nT+v-k\) are in the range \(1,2,\ldots ,N\) for each n.

Besides the classical estimator of the sample autocovariance function for a given random sample, one can also consider its robust version. One of the examples is the estimator presented in [57]. It is based on the following scale-based formula for autocovariance:

The robustness of the described algorithm comes from the application of the robust scale estimator, proposed in [58]. For a sample \(V = (V_1,\ldots ,V_N)\), it is the following order statistic:

where c is a constant for consistency purpose. For Gaussian distribution, we set \(c=2.2191\). From Eqs. (17) and (18), we can obtain the following robust autocovariance estimator for a sample \(X_1,\ldots ,X_N\):

where

with l and r defined as in Eq. (16). Let us note that the time complexity of Q(V) calculation from Eq. (18) is \(O(N^2)\). However, one can compute it in \(O(N \log N)\) time using the algorithm introduced in [59]. In the simulation study, its implementation in the MATLAB library LIBRA [60] was used.

In this paper, we consider two classical Yule–Walker algorithms: the classical Yule–Walker method (YW) and the robust classical Yule–Walker method (robust YW). The latter was introduced in [46].

In the second approach proposed in this paper, we introduce the modified Yule–Walker algorithm that is strictly dedicated to the model defined in Eq. (1) and takes into consideration the additive noise. Similarly as for the pure PAR(1) time series, Eq. (9) can be written in the equivalent form:

for \(n\in \mathbb {Z}, \,v=1,2\ldots ,T\). Because \(\{Y_t\}\) time series is PC, we take the notation:

In the next step, we proceed analogously as in the classical Yule–Walker algorithm. First, we multiply Eq. (21) by \(Y_{nT+v}\) and take the expected value of both sides of the obtained equation:

In the second step, we multiply Eq. (21) by \(Y_{nT+v-1}\) and take the expected value of both sides of the obtained equation:

In the final step, we multiply Eq. (21) by \(Y_{nT+v-2}\) and take the expected value of both sides of the obtained equation:

Taking into consideration Eqs. (23), (24) and (25), we obtain the following remark.

Remark 3

Let the time series \(\{Y_t,~t\in \mathbb {Z}\}\) be defined as in Eq. (1), where the time series \(\{X_t\}\) is finite-variance PAR(1) model with period T defined in Eq. (3) and \(\{Z_t\}\) is the additive noise that constitutes sequence of uncorrelated random variables with zero mean and finite variance \(\sigma ^2_Z\). Moreover, the time series \(\{X_t\}\) and \(\{Z_t\}\) are independent. Then, the corresponding parameters satisfy the following system of equations:

Similarly as previously, in the estimation algorithm, we replace the theoretical autocovariance functions in Eq. (26) by the empirical counterparts. Thus, in this paper, we consider two modified Yule–Walker algorithms: the modified Yule–Walker method (MYW) and the robust modified Yule–Walker method (robust MYW). Let us note \(\sigma _Z^2\) is not dependent on v parameter; thus, in the estimation algorithm \(\hat{\sigma }^2_Z\) is calculated as the mean for all \(v=1,2,\ldots ,T\) from the values \(\gamma _Y(v-1,0) - \frac{\gamma _Y(v,1)\gamma _Y(v-1,1)}{\gamma _Y(v,2)}\).

4 Simulation study

In this section, the methods presented in the previous section are compared using Monte Carlo simulations of PAR(1) model with additive noise (see Eq. (1)). We focus on the estimation of \(\phi (t)\) coefficients. First, we analyze the case when \(\{Z_t\}\) is Gaussian distributed. After that, the other mentioned types of additive noise (i.e., Student’s t and AO) are considered. The simulations are performed for the two following set-ups assuming \(T=2\):

-

Model 1: \(\phi (1) = 0.6\), \(\phi (2) = 0.8\),

-

Model 2: \(\phi (1) = 0.1\), \(\phi (2) = 0.8\).

Throughout the whole study, we set the constant variance of innovations \(\sigma ^2_{\xi }(t)=1\).

4.1 Gaussian additive noise

In our study, we simulate \(M=1000\) trajectories of length N of Model 1 with additive noise from Gaussian distribution with \(\sigma _Z = 0.8\). The boxplots of the estimated values for all considered methods for the case of \(N=1000\) are presented in Fig. 3. One can see the clear difference between both classical and both modified versions of Yule–Walker estimator. The results show that the former are significantly biased, unlike the latter which take the presence of additive noise into account. The boxplots for longer samples with \(N=\) 10,000, presented in Fig. 4, confirm these observations—although the variance of all methods is lower than in the previous case, the estimated values for both classical estimators (i.e., YW and robust YW) are still significantly different than the true ones.

To quantify the effectiveness of the analyzed estimators, let us calculate the parameter-wise mean squared errors:

Their values for both \(\phi (t)\) parameters and both considered lengths N are presented in Table 1. The results confirm the advantage of the introduced modified approaches. In particular, the MYW estimator seems to be the most effective. As mentioned before, it is caused by the significant bias of the classical Yule–Walker estimators and its lack in the modified algorithms, which can be seen in the bias results presented in Table 2. The bias is calculated as the difference between the median of the estimated values and the corresponding true value:

Let us note that the performance of classical estimators is visibly worse for a larger value of \(\phi (2)=0.8\) than for \(\phi (1) = 0.6\).

Now, let us consider Model 2. Here, the only change in comparison with the previous case is that we set \(\phi (1) = 0.1\), which is a value closer to zero than in Model 1. We perform the same experiment as before. The boxplots of the estimated values for \(N=1000\) are presented in Fig. 5. For the case of \(\phi (1)\), the observed behavior is similar to the ones seen for Model 1—in particular, the YW and robust YW methods are visibly biased. However, in this case the bias is not that significant as before, as the true value is close to zero. On the other hand, both MYW and robust MYW methods once again seem to be unbiased, although with larger variance than the classical estimators.

However, the results for \(\phi (2) = 0.8\) show that the introduced methods may significantly fail, regardless of the autocovariance estimator used. To explain this behavior, let us recall the form of the proposed estimator with additive noise consideration: \(\phi (2) = \frac{\gamma _Y(2,2)}{\gamma _Y(1,1)}\). It turns out that when \(\phi (1)\) is close to zero, the denominator of the expression for \(\phi (2)\) is too. Hence, even small errors of denominator estimation may result in large errors of \(\phi (2)\) estimation. The results for \(N=\) 10,000, illustrated by the boxplots in Fig. 6, show that for longer samples this drawback of the modified Yule–Walker methods is mitigated and, because of their unbiasedness, they prevail also in this case. The parameter-wise mean squared errors and biases for this set of simulations are presented in Tables 3 and 4, respectively. In particular, let us note that even for \(\phi (2)\) and \(N=1000\), where the introduced methods are prone to large errors, they have much less significant bias than the classical Yule–Walker algorithms.

Now, let us analyze the performance of all methods for different values of the additive noise standard deviation \(\sigma _Z\). We simulate \(M=1000\) trajectories of a given model (Model 1 or Model 2) of length \(N=1000\) with additive noise from Gaussian distribution with standard deviation \(\sigma _Z\) and perform the estimation. This experiment is done for \(\sigma _Z = 0,\,0.1,\ldots ,1\). For each case, we compute the mean squared error (MSE), which is the average of parameter-wise mean squared errors (see Eq. (27)):

Moreover, we calculate the mean absolute bias (MAB) which is the average of the absolute values of biases for both estimated parameters (see Eq. (28)):

The results for Model 1 are presented in Fig. 7. In the left panel, one can see that for low values of \(\sigma _Z\), when the additive noise can be considered as not significantly relevant, the YW and robust YW algorithms yield slightly better results than the modified versions. However, for larger \(\sigma _Z\), the advantage of the latter increases rapidly and is clearly visible for \(\sigma _Z \geqslant 0.5\). As before, it is caused by the bias of classical methods. Their MAB values, as one can see in the right panel of Fig. 7, are growing for higher scales of additive noise—whereas for the modified estimators they seem to be at a constant, low level. Such observation is consistent with the fact that the proposed methods take into account the additive noise in the model. Among these two estimators, the MYW performs better in this case.

In Fig. 8, the results for Model 2 are illustrated. As it was shown in the boxplots described before, for this set of \(\phi (t)\) parameters, the introduced modified methods may yield large errors, which is confirmed by the plot of MSE values in the left panel of Fig. 8. Nevertheless, for larger \(\sigma _Z\), their MAB values are still much closer to zero than the ones for classical estimators, as one can see in the right panel of Fig. 8.

4.2 Other types of additive noise

In this part, we discuss the effectiveness of the considered algorithms for other than Gaussian distributed additive noise. Here, we perform simulations only for Model 1. First, let us consider the case when \(\{Z_t\}\) has the Student’s t distribution with \(\nu =3\) degrees of freedom. Similarly as before, we simulate \(M=1000\) trajectories of length \(N=1000\) of Model 1 with such additive noise and perform the estimations. The boxplots of the estimated values are presented in Fig. 9. Once again, one can see the significant advantage of the modified Yule–Walker methods. Even though the variance of the YW and robust YW estimators is relatively low, they are still biased—even stronger than in the corresponding Gaussian additive noise case. Let us note that in this case the robust MYW method is better than its counterpart to the classical autocovariance estimator, as it copes better with the outliers resulting from the heavy-tailed Student’s t distribution of the additive noise. The MSE and MAB results for this case are presented in Table 5.

Let us now turn to another type of additive noise. We perform the same set of simulations as the latest one, but for \(\{Z_t\}\) being additive outliers with \(a=10\) and \(p=0.02\). The boxplots of the estimated values in this case are presented in Fig. 10. The MSE and MAB results can be found in Table 5. First of all, one can see that now the robust YW method (which was introduced specifically for the additive outliers presence, see [46]) has a very good performance. Although it is still slightly biased, it is able to achieve lower MSE than for the MYW method because of the much lower variance. However, once again, the best results are obtained for the robust MYW method. Let us mention that this estimator connects the traits of both previously mentioned methods—it takes into account the presence of additive noise in its derivation (hence, it has low bias) and is able to handle the outliers (thus, it has lower variance than the plain MYW).

5 Conclusions

In this paper, the PAR(1) time series with additive noise was considered. The analyzed model is more practical than the “pure” model as it takes under consideration the possible noise of the measurement device that influences the real observations. The considered model shares important properties of the PAR(1) time series; namely, it is still finite-variance distributed and exhibits periodically correlated behavior. However, it does not satisfy the PAR(1) equation. The additive noise included in the model makes that the classical estimation methods for PAR model’s parameters are not effective in the considered case. Thus, we proposed a new estimation algorithm that is a simple modification of the classical Yule–Walker technique. We have shown its effectiveness for various distributions of additive noise, including the Gaussian, Student’s t and noise which is the additive outliers sequence. The results were compared with the classical algorithm.

The future study will be related to a more extensive discussion about the new estimator properties, like its limiting distribution. Moreover, the obtained theoretical results will be extended to the general PARMA model. The comparison with the other existing methods will be performed taking into account the robust versions of the known algorithms. Moreover, other distributions need to be discussed. The new area of interest is also related to the analysis of the model with additive noise that is also described by some time series (e.g., ARMA model). This aspect has great practical potential as such behavior is observed for many real trajectories. The last point that needs to be mentioned is the consideration of the models with infinite-variance distributions.

Code availability

Code is available upon request.

References

Guzdenko, L.: The small fluctuation in essentially nonlinear autooscillation system. Dokl. Akad. Nauk USSR 125(1), 62–65 (1959)

Gladyshev, E.G.: Periodically correlated random sequences. Sov. Math. 2, 385–388 (1961)

Hurd, H.L.: An investigation of periodically correlated stochastic processes. PhD Dissertation, Duke University, Department of Electrical Engineering (1969)

Hurd, H.L., Miamee, A.: Periodically correlated random sequences: spectral theory and practice, vol. 355. Wiley (2007)

Napolitano, A.: Cyclostationarity: new trends and applications. Signal Process. 120, 385–408 (2016)

Jones, R., Brelsford, W.: Time series with periodic structure. Biometrika 54(3–4), 403–408 (1967)

Troutman, B.: Some results in periodic autoregression. Biometrika 66(2), 219–228 (1979)

Hipel, K.W., McLeod, A.I.: Time Series Modelling of Water Resources and Environmental Systems, Series Developments in Water Science. Elsevier, vol. 45 (1994)

Adams, G.J., Goodwin, G.C.: Parameter estimation for periodic ARMA models. J. Time Ser. Anal. 16(2), 127–145 (1995)

Lund, R., Basawa, I.V.: Recursive prediction and likelihood evaluation for periodic ARMA models. J. Time Ser. Anal. 21(1), 75–93 (2000)

Basawa, I.V., Lund, R.: Large sample properties of parameter estimates for periodic ARMA models. J. Time Ser. Anal. 22(6), 651–663 (2001)

Shao, Q., Lund, R.: Computation and characterization of autocorrelations and partial autocorrelations in periodic ARMA models. J. Time Ser. Anal. 25(3), 359–372 (2004)

Anderson, P.L., Meerschaert, M.M.: Parameter estimation for periodically stationary time series. J. Time Ser. Anal. 26(4), 489–518 (2005)

Ursu, E., Turkman, K.F.: Periodic autoregressive model identification using genetic algorithms. J. Time Ser. Anal. 33(3), 398–405 (2012)

Anderson, P.L., Meerschaert, M.M., Zhang, K.: Forecasting with prediction intervals for periodic autoregressive moving average models. J. Time Ser. Anal. 34(2), 187–193 (2013)

Brockwell, P.J., Davis, R.A.: Introduction to Time Series and Forecasting. Springer, New York (2002)

Makagon, A., Weron, A., Wyłomańska, A.: Bounded solutions for ARMA model with varying coefficients. Appl. Math. (Warsaw) 31(3), 273–285 (2004)

Jachan, M., Matz, G., Hlawatsch, F.: Time-frequency ARMA models and parameter estimators for underspread nonstationary random processes. IEEE Trans. Signal Process. 55(9), 4366–4381 (2007)

Zielinski, J., Bouaynaya, N., Schonfeld, D., O’Neill, W.: Time-dependent ARMA modeling of genomic sequences. BMC Bioinform. 9(Suppl 9), S14 (2008)

Antoni, J.: Cyclostationarity by examples. Mech. Syst. Signal Process. 23(4), 987–1036 (2009)

Antoni, J., Bonnardot, F., Raad, A., El Badaoui, M.: Cyclostationary modelling of rotating machine vibration signals. Mech. Syst. Signal Process. 18(6), 1285–1314 (2004)

Bukofzer, D.C.: Optimum and suboptimum detector performance for signals in cyclostationary noise. J. Ocean. Eng. 12(1), 97–115 (1987)

Bloomfield, P., Hurd, H.L., Lund, R.B.: Periodic correlation in stratospheric ozone time series. J. Time Ser. Anal. 15(2), 127–150 (1994)

Dargaville, R.J., Doney, S.C., Fung, I.Y.: Inter-annual variability in the interhemispheric atmospheric CO\(_2\) gradient. Tellus B 15(2), 711–722 (2003)

Broszkiewicz-Suwaj, E., Makagon, A., Weron, R., Wyłomańska, A.: On detecting and modeling periodic correlation in financial data. Physica A 336(1–2), 196–205 (2004)

Franses, P.H.: Periodicity and Stochastic Trends in Economic Time Series. Oxford University Press, Oxford (1996)

Donohue, K.D., Bressler, J.M., Varghese, T., Bilgutay, N.: Spectral correlation in ultrasonic pulse-echo signal processing. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 40(3), 330–337 (1993)

Fellingham, L., Sommer, F.: Ultrasonic characterization of tissue structure in the in vivo human liver and spleen. IEEE Trans. Sonics Ultrason. 31(4), 418–428 (1984)

Mittnik, S., Rachev, S.T.: Stable Paretian Models in Finance. Wiley, New York (2000)

Takayasu, H.: Stable distribution and Lévy process in fractal turbulence. Prog. Theor. Phys. 72(3), 471–479 (1984)

Nowicka-Zagrajek, J., Weron, R.: Modeling electricity loads in California: ARMA models with hyperbolic noise. Signal Process. 82(12), 1903–1915 (2002)

Żak, G., Wyłomańska, A., Zimroz, R.: Periodically impulsive behaviour detection in noisy observation based on generalised fractional order dependency map. Appl. Acoust. 144, 31–39 (2019)

Żak, G., Wyłomańska, A., Zimroz, R.: Data driven iterative vibration signal enhancement strategy using alpha-stable distribution. Shock. Vib. Article ID 3698370 (2017)

Chen, Z., Ding, S.X., Peng, T., Yang, C., Gui, W.: Fault detection for non-Gaussian processes using generalized canonical correlation analysis and randomized algorithms. IEEE Trans. Ind. Electron. 65(2), 1559–1567 (2018)

Palacios, M.B., Steel, M.F.J.: Non-Gaussian Bayesian geostatistical modeling. J. Am. Stat. Assoc. 101(474), 604–618 (2006)

Gosoniu, L., Vounatsou, P., Sogoba, N., Smith, T.: Bayesian modelling of geostatistical malaria risk data. Geospat. Health 1(1), 127–139 (2006)

Kruczek, P., Zimroz, R., Wyłomańska, A.: How to detect the cyclostationarity in heavy-tailed distributed signals. Signal Process. 172, 107514 (2020)

Kruczek, P., Wyłomańska, A., Teuerle, M., Gajda, J.: The modified Yule–Walker method for alpha-stable time series models. Physica A 469, 588–603 (2017)

Nowicka, J., Wyłomańska, A.: The dependence structure for PARMA models with alpha-stable innovations. Acta Phys. Pol. B 37(11), 3071–3081 (2006)

Lanoiselée, Y., Sikora, G., Grzesiek, A., Grebenkov, D.S., Wyłomaáńska, A.: Optimal parameters for anomalous-diffusion-exponent estimation from noisy data. Phys. Rev. E 98(6), 062139 (2018)

Parida, P.K., Marwala, T., Chakraverty, S.: A multivariate additive noise model for complete causal discovery. Neural Netw. 103, 44–54 (2018)

Peters, J., Janzing, D., Scholkopf, B.: Causal inference on discrete data using additive noise models. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2436–2450 (2011)

Peters, J., Janzing, D., Schölkopf, B.: Identifying cause and effect on discrete data using additive noise models. In: Teh, Y.W., Titterington, M. (eds.) Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Series Proceedings of Machine Learning Research, vol. 9, pp. 597–604 (2010)

Surrel, Y.: Additive noise effect in digital phase detection. Appl. Opt. 36(1), 271–276 (1997)

Zaikin, A.A., Schimansky-Geier, L.: Spatial patterns induced by additive noise. Phys. Rev. E 58(4), 4355–4360 (1998)

Sarnaglia, A.J.Q., Reisen, V.A., Lévy-Leduc, C.: Robust estimation of periodic autoregressive processes in the presence of additive outliers. J. Multivar. Anal. 101(9), 2168–2183 (2010)

Sarnaglia, A.J.Q., Reisen, V.A., Bondou, P., Lévy-Leduc, C.: A robust estimation approach for fitting a PARMA model to real data. In: 2015 IEEE Statistical Signal Processing Workshop (SSP), pp. 1–5 (2016)

Samadi, A.A., Al-Quraam, A.M.: Estimation of the seasonal ACF of PAR(1) model in the presence of additive outliers. J. Appl. Stat. Sci. 19(2), 169–182 (2011)

Sarnaglia, A.J.Q., Reisen, V.A., Bondon, P., Lévy-Leduc, C.: M-regression spectral estimator for periodic ARMA models. An empirical investigation. Stoch. Environ. Res. Risk Assess. 35(3), 653–664 (2021)

Shao, Q.: Robust estimation for periodic autoregressive time series. J. Time Ser. Anal. 29(2), 251–263 (2008)

Reisen, V.A., Lévy-Leduc, C., Cotta, H.H.A., Bondon, P., Ispany, M., Filho, P.R.P.: An overview of robust spectral estimators. In: Chaari, F., Leskow, J., Zimroz, R., Wyłomańska, A., Dudek, A. (eds.) Cyclostationarity: Theory and Methods—IV. Springer, pp. 204–224 (2020)

Battaglia, F., Cucina, D., Rizzo, M.: Detection and estimation of additive outliers in seasonal time series. Comput. Stat. 35, 1393–1409 (2020)

Bellini, T.: The forward search interactive outlier detection in cointegrated VAR analysis. Adv. Data Anal. Classif. 10, 351–373 (2016)

Cotta, H., Reisen, V., Bondon, P., Stummer, W.: Robust estimation of covariance and correlation functions of a stationary multivariate process. In: 25th European Signal Processing Conference (EUSIPCO 2017), (2017)

Vecchia, A.V.: Periodic autoregressive-moving average (PARMA) modeling with applications to water resources. J. Am. Water Resour. Assoc. 21(5), 721–730 (1985)

Wyłomańska, A.: Spectral measures of PARMA sequences. J. Time Ser. Anal. 29(1), 1–13 (2008)

Ma, Y., Genton, M.G.: Highly robust estimation of the autocovariance function. J. Time Ser. Anal. 21(6), 663–684 (2000)

Rousseeuw, P.J., Croux, C.: Alternatives to the median absolute deviation. J. Am. Stat. Assoc. 88, 1273–1283 (1993)

Croux, C., Rousseeuw, P.J.: Time-efficient algorithms for two highly robust estimators of scale. Comput. Stat. 1, 411–428 (1992)

Verboven, S., Hubert, M.: LIBRA: a MATLAB library for robust analysis. Chemom. Intell. Lab. Syst. 75, 127–136 (2005)

Hazewinkel, M.: Student Distribution, Encyclopedia of Mathematics. Springer (1994)

Solci, C.C., Reisen, V.A., Sarnaglia, A.J.Q., Bondon, P.: Empirical study of robust estimation methods for PAR models with application to the air quality area. Commun. Stat. Theory Methods 49(1), 152–168 (2020)

Funding

The work of A.W. was supported by National Center of Science under Opus Grant 2020/37/B/HS4/00120 “Market risk model identification and validation using novel statistical, probabilistic, and machine learning tools.”

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The author declares no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 Student’s t-distribution

The Student’s t-distribution is defined through its probability density function (PDF) given by the formula [61]:

where the parameter \(\nu >0\) is called the number of degrees of freedom. In this paper, the distribution defined by PDF in Eq. (31) we denote as \(t(\nu )\). In the above definition, \(\varGamma (\cdot )\) is the gamma function. The variance of the Student’s t distributed random variable Z is defined only for \(\nu >2\) and takes the form:

1.2 Additive outlier

In this paper, the random variable Z is called the additive outlier (AO) with parameters \(a \in \mathbb {R}\) and \(p\in [0,1]\) if it has the following distribution [62]:

We denote the random variable Z defined as in Eq. (33) as AO(a, p). The variance of Z is defined for all \(a,\,p\) parameters and is given by:

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Żuławiński, W., Wyłomańska, A. New estimation method for periodic autoregressive time series of order 1 with additive noise. Int J Adv Eng Sci Appl Math 13, 163–176 (2021). https://doi.org/10.1007/s12572-021-00302-z

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12572-021-00302-z

Keywords

- PAR model

- Finite-variance distribution

- Additive noise

- Estimation

- Robust estimator

- Yule–Walker equations

- Monte Carlo simulations