Abstract

Food security measurement is of paramount importance because it guides governance, policy formulation, the targeting of intervention projects, and monitoring and evaluation. Measuring food insecurity has proven difficult owing to the multidimensionality of the construct, and different measures have been developed to capture different dimensions of food insecurity. However, it is difficult to combine these measures into a holistic assessment of food security because their classifications might not agree. The current study proposes a composite food insecurity indicator that aggregates items from the Household Dietary Diversity Score (HDDS), the Household Food Insecurity Access Scale (HFIAS) and the Months of Inadequate Household Food Provision (MIHFP) for Windhoek household data into a single measure using the Rasch Testlet Response Model. The composite measure was internally validated against the HDDS, HFIAS and MIHFP and externally validated against household income and the Lived Poverty Index (LPI) using Spearman's Rank Correlation and Cohen's Kappa. The validation results suggest that the metric could be a promising measure of aggregate food insecurity worth further investigation and discussion. However, more research is needed to establish cut-points for categorizing households into food insecurity statuses. In addition, the metric has only been computed and tested on a single urban sample in the Global South, so it may not generalize to other settings. Researchers who wish to use the index can estimate and validate it in different scenarios and suggest ways in which it can be improved.


1 Introduction

Poverty reduction, food insecurity and undernutrition remain high on the development agenda (HLPE, 2017; Leroy et al., 2015), while targeting initiatives continue to be an important concern and have become more relevant in this era of climate change hazards and ever-shrinking economies and aid budgets. To be successful, targeting systems and policy formulation must use indicators that are valid and reliable for identifying at-risk beneficiaries and for progress monitoring and evaluation, and that remain straightforward, simple and inexpensive to use (Chung, 1997). Historically, food availability (United Nations, 1975) has been the main aspect of food security measurement (Bertelli, 2020; Haysom & Tawodzera, 2018). However, the conceptualization of food security has developed significantly to incorporate individual access to food, implying that food can be inadequate or inaccessible despite being readily available in markets (Webb et al., 2006). The construct was re-defined at the World Food Summit of 1996 as “a situation that exists when all people at all times have physical, social and economic access to sufficient, safe and nutritious food that meets their dietary needs and preferences for an active healthy life” (FAO, 1996). In short, food insecurity is measured as a household-level concept that refers to uncertain, insufficient or unacceptable availability, access or utilization of food (Wunderlich & Norwood, 2006). FAO (1996) defined four pillars of food security: (1) food availability, (2) food access, (3) food utilization and (4) food stability. However, the multidimensionality of the construct has posed challenges for its measurement.

Food security measurement is of paramount importance as it allows comparisons between individuals, households and groups. It guides governance (Coates, 2013), policy formulation, project interventions and the targeting of safety-net programmes (Chung, 1997), and it highlights changes and progress towards set targets, since measurement drives diagnosis and response (Barrett, 2010). Given the top prioritization of food security by many governments and global development communities and its conceptualization as a challenge (McCordic et al., 2022), there is a need to improve the understanding of food security measurement (Leroy et al., 2015; Bertelli, 2020; Jones et al., 2013; Deitchler et al., 2010). However, there is a rigorous international debate on how best to quantify levels of food insecurity (Coates, 2013; Haysom & Tawodzera, 2018; Headey et al., 2013; Leroy et al., 2015). Methodological concerns in the measurement of food insecurity have been highlighted (Abuelhaj, 2007; Haysom & Tawodzera, 2018), and measuring the trait has proven difficult owing to its multidimensionality (Barrett, 2010). As a result, different measures of food insecurity are used interchangeably, and at times the classifications produced by different indices do not agree. Analysts have therefore resorted to measuring distinct facets of food security rather than measuring the attribute holistically. However, measurement tools designed for one context can overlook important parameters in a different context.

Several measures have been developed to measure household food access. These include the Household Food Insecurity Access Scale (HFIAS), the Household Dietary Diversity Score (HDDS) and the Months of Inadequate Household Food Provisioning (MIHFP), developed and utilized by the United States Agency for International Development (USAID) Food and Nutrition Technical Assistance (FANTA) Project. The HFIAS is a nine-item experience-based scale for measuring the prevalence and severity of household food insecurity in developing countries (Abuelhaj, 2007; ENN, 2007), based on universal experiences: the anxieties and uncertainties households may face when their access to food is limited. The nine HFIAS questionnaire items, arranged in order of the severity of food insecurity they measure, have response codes 1 “never” if the household has not experienced the condition in the past month, 2 “rarely” for a household that experienced the condition fewer than three times, 3 “sometimes” for a household that had between three and 10 experiences and 4 “often” for a household with more than 10 experiences of the condition. The scale, ranging between 0 and 27, is a summation of the responses for a particular household. The HDDS is a proxy for the quality and quantity of food (Abuelhaj, 2007; Leroy et al., 2015), which assesses the variety of the diet by adding up the number of food groups eaten by household members or individuals in the 24 h before data collection. The MIHFP is a food security measure based on the total number of months in which households had inadequate food provision (Bilinsky & Swindale, 2010). The survey items for measuring the HFIAS, the HDDS and the MIHFP are shown in Table 1.
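The three scores described above reduce to simple sums and counts. The following Python sketch illustrates this under stated assumptions: the function names are hypothetical, and HFIAS responses coded 1 to 4 are assumed to be rescored to 0 to 3 before summation so that the total falls in the stated range of 0 to 27.

```python
def hfias_score(responses):
    """Sum nine HFIAS responses coded 1 (never) to 4 (often).

    Assumption: each response is rescored to 0-3 before summing,
    which yields a total between 0 and 27.
    """
    if len(responses) != 9 or any(r not in (1, 2, 3, 4) for r in responses):
        raise ValueError("expected nine responses coded 1 to 4")
    return sum(r - 1 for r in responses)


def hdds_score(food_groups):
    """Count the food groups eaten in the previous 24 h (12 flags, 0 or 1)."""
    if len(food_groups) != 12 or any(g not in (0, 1) for g in food_groups):
        raise ValueError("expected twelve 0/1 flags")
    return sum(food_groups)


def mihfp_score(months):
    """Count the months with inadequate food provision (12 flags, 0 or 1)."""
    if len(months) != 12 or any(m not in (0, 1) for m in months):
        raise ValueError("expected twelve 0/1 flags")
    return sum(months)


# Hypothetical household: mostly "rarely" on the HFIAS, 5 food groups, 2 lean months.
example_hfias = hfias_score([2, 2, 2, 1, 1, 2, 1, 1, 1])
example_hdds = hdds_score([1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0])
example_mihfp = mihfp_score([1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0])
```

Higher HFIAS and MIHFP totals indicate more food insecurity, whereas a higher HDDS indicates a more varied diet, so the three scores do not share a direction.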

In addition to computing an HFIAS score by adding up household responses to the nine items in the scale, households can be further categorized into the Household Food Insecurity Access Prevalence (HFIAP) with four food insecurity classes: (1) Food Secure, (2) Mildly Food Insecure, (3) Moderately Food Insecure and (4) Severely Food Insecure. There is no standard method for categorizing the HDDS and MIHFP into food insecurity classes. However, some scholars have attempted to categorize the HDDS and MIHFP, as shown in Table 2.

Although the HFIAS, the HDDS and the MIHFP are intended to measure the same dimension, food access, they might not be congruent in classifying households. It might therefore be challenging to incorporate all three measures in safety-net targeting, progress monitoring and evaluation, and policy formulation. It is argued that the HFIAS does not capture important elements of household food security such as dietary diversity and coping strategies (Coates et al., 2006; Kirkland et al., 2013) and thus should be used in conjunction with other primary indicators of dietary diversity and coping strategies (Kirkland et al., 2013) to adequately locate vulnerable households across geographical and cultural settings. Similarly, the National Research Council (2005) criticized a similar scale, the Household Food Security Survey Module (HFSSM) used in the Current Population Survey, highlighting that it captures little information on the frequency and duration of food insecurity. In addition, these measures were originally designed for rural settings and might not be suited to urban areas, where households do not rely directly on agriculture, months of instability might be difficult to detect, diets might be influenced more by ethnicity than by food security status, and safety-net interventions are lacking. Haysom and Tawodzera (2018) urged a renewed focus on building food security metrics that apply to the unique characteristics of urban food systems. An international workshop on simple tools for measuring household food access and dietary diversity was held in Nairobi in 2007, and one of its objectives was to explore how tools can be applied and “integrated” to improve food security information systems (ENN, 2007).

This study attempted to come up with a single measure of household food access levels based on the responses to items for measuring all three scales by considering the sections as subtests, defined by Wang and Wilson (2005) as testlets, within one test intended to measure the food access dimension. The three measures were selected for this study because (1) they are normally collected in one questionnaire by institutions that adopt the FANTA measurement scales for food (in)security measurement and thus were readily available in the secondary data that was available for analysis (2) the proposed metric incorporates the (chronic) duration of food insecurity (MIHFP) and acute food insecurity through incorporation of the HFIAS and HDDS which capture access to dietary variety and perceptions about insufficient food quality and quantity into one measure. Although the proposed model might violate the comprehensibility-simplicity trade-off, that is, as much as it might portray the food insecurity phenomenon, it might be too complicated, it is computed from household instead of individual-level data, which requires less time and fewer resources to collect than individual-level data (Jones et al., 2013). In addition, the data is usually collected in the same survey. The household level metric is appropriate for targeting food aid, screening at-risk households and monitoring changes in food security over time.

1.1 The testlet item response models

Item response theory (IRT) models are generalized linear mixed effects models (Johnson, 2004) and a special case of latent variable models (Wunderlich & Norwood, 2006), concerned with the measurement of inherently unobservable attributes such as food insecurity. They are stochastic mathematical models represented by an item response function (IRF), which relates the probability of observing a particular response to a categorically scored manifest variable (questionnaire item) to the respondent's proficiency (\(\theta\)), the item discriminant parameter (\(a\)) and the item difficulty parameter (\(b\)). The proficiency \(\theta\), assumed to follow a Gaussian distribution with mean 0, gives the position of the respondent on the latent construct being measured (in this case food insecurity). The latent variable is regarded as fixed for a given respondent (household), and the item responses for that household are regarded as independent given \(\theta\) and represent different aspects of food security. The connection between \(\theta\) and the \(X\)’s is assumed to be probabilistic, so that households with the same proficiency (food insecurity) level can still have different observed \(X\) values. The parameter \(a\) indicates how strongly the questionnaire item is related to \(\theta\): large values imply low measurement error and a stronger relationship between \(\theta\) and the item.

Standard IRT models such as the Rasch model, the two-parameter logistic (2PL) model, and the Generalized Partial Credit Model (GPCM) for polytomous responses assume that the latent variable \((\theta )\) being measured is unidimensional and one person’s responses to different items are independent (local item independence) and responses of different persons to the same item are independent (local person independence). In addition, IRT models assume that the probability of endorsing a response option is monotonically non-decreasing, that is, respondents with higher levels of the latent continuum have higher chances of endorsing more severe items and item categories.
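As a minimal illustration of the monotonicity assumption, the sketch below evaluates the standard 2PL item response function, in which the probability of affirming a dichotomous item is a logistic function of \(a(\theta - b)\). The function name and the item parameter values are hypothetical and chosen only for illustration.

```python
import math


def irf_2pl(theta, a, b):
    """Probability of affirming a dichotomous item under the 2PL model."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


# Hypothetical item: discriminant parameter 1.2, difficulty 0.5.
thetas = [-2.0, -1.0, 0.0, 1.0, 2.0]
probabilities = [irf_2pl(t, a=1.2, b=0.5) for t in thetas]

# With a > 0 the curve never decreases as theta increases.
is_monotone = all(p1 <= p2 for p1, p2 in zip(probabilities, probabilities[1:]))
```

Setting \(a = 1\) for every item gives the Rasch form, and a household whose \(\theta\) equals the item difficulty \(b\) affirms the item with probability 0.5.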

1.2 Application of IRT in food security access measurement

The application of IRT modelling to food security measurement is not new. The Food and Agriculture Organization (FAO) Voices of the Hungry (VoH) project uses the Rasch IRT model for estimating the Food Insecurity Experience Scale. Nord (2014) illustrated the use of the one-parameter logistic (Rasch) model in food security modelling. The United States Department of Agriculture (USDA) uses the Rasch IRT model to measure household food security, with originally polytomous responses dichotomized before estimation (Wunderlich & Norwood, 2006). A panel of experts reviewed the use of the Rasch model in food insecurity measurement with dichotomized, originally polytomous responses and suggested modifying the IRT model to handle the polytomous nature of the data collected. Johnson (2004) illustrated the applicability of IRT models in food security measurement, explaining various estimation methods, the appropriateness of IRT models for food security measures and the determination of cut-off points for classifying individuals into food insecurity categories. He concluded that the model assumptions need to be met before the model can be used for inference and that there is a need to validate whether the propensity measured by the IRT model is related to the true food security score. Some scholars have applied the Rasch model to validate the Household Dietary Diversity Score (Vellema et al., 2016; Charamba et al., 2019), while others have applied polytomous IRT models to validate the HFIAS (Charamba et al., 2019; Deitchler et al., 2010; Bertelli, 2020). IRT methods have the advantage that they provide mechanisms for evaluating the contribution of an individual item to the latent measure being estimated, through the item parameter estimates and item fit statistics (see Charamba et al., 2019), so it is not necessary to perform a sensitivity analysis to assess individual item contributions to the model.

1.3 The rationale for an aggregate food security access measure

Some scholars suggested disaggregated suites of indicators to capture the complexity and diversity of the food insecurity construct (Coates, 2013). However, the use of different measures to quantify the food access dimension makes it difficult to compare results from different studies within the same spatial and temporal domains as different measures yield different estimates of food insecurity (Haysom & Tawodzera, 2018) and different metrics may classify the same household differently. For example, Mutea et al. (2019) noted that the HDDS and the Food Consumption Scale (FCS) revealed less food insecurity than the MAHFP, Coping Strategy Index (CSI) and HFIAS. This might pose a challenge in household food insecurity classification for reporting, beneficiary targeting in programme intervention and policy formulation, especially since food security has been conceptualized as a development challenge (HLPE, 2017; Leroy et al., 2015; McCordic et al., 2022). As a result, there is a pressing need to develop decision-support mechanisms to support food security policy and research (Mock et al., 2013) and interventions. Several authors have suggested a re-think of food security measurement (e.g. Headey & Ecker, 2013) with arguments that existing measures are too varied. Maxwell et al. (2013), considered the possibility of combining food security metrics or using them in a complementary way to yield a more multidimensional picture of household food insecurity, arguing that the “holy grail” of food security measurement would be a single measure that is valid, reliable and comparable over time and space, capturing different elements of insecurity. However, no single measure meets these criteria (Coates & Maxwell, 2012). McCordic et al. (2022) combined the HDDS, HFIAS and MAHFP into a new index by using an adjusted geometric mean and observed a correlation between food insecurity and inconsistent resource access. 
They reiterated that how scores are aggregated can significantly affect index stability and that such an index should provide a means of normalization that ensures equal priority for the measures being aggregated. It is against this background that the current study proposed an alternative aggregate food access measure that combines the HFIAS, HDDS and MIHFP using the IRT modelling technique. These indicators were selected because they have a low respondent burden (Leroy et al., 2015) and are suitable for large surveys, as they exclude questions on household income and expenditure, quantities consumed, quantities produced and distance to markets, which might be difficult to obtain. In addition, they are usually combined in one survey by many institutions measuring food insecurity based on the FANTA metrics.

1.4 The goodness of fit tests and construct validity

To be useful, food security indicators should be valid, well-constructed, reliable, accurate and suitable for providing analytical measurement for a given purpose (Johnson, 2004; Leroy et al., 2015). An important step in the validation of food security measures is to assess the extent to which the data are consistent with the assumptions of the measurement model (Nord, 2014; Bertelli, 2020; Deitchler et al., 2010; Johnson, 2004). Charamba et al. (2019) conducted a validity study on the applicability of the HDDS and HFIAS scales for measuring the food insecurity levels of Windhoek urban households (the data used in the current study). The Rasch IRT model and the area under the receiver operating characteristic (ROC) curve (AUC) were applied to validate the HDDS, which has binary items, while the Partial Credit Model (PCM) was used to validate the HFIAS, which has polytomous items. Principal Components Analysis (PCA) and Mokken analysis were used to assess dimensionality and monotonicity. Monotonicity means that items and item response categories are ordered by severity, so that a household with a higher food insecurity level has a higher probability of endorsing higher-order items or item categories. Their study concluded that the HFIAS is unidimensional and that its items are monotonic, with Loevinger coefficients > 0.5 implying that they are monotonically arranged (see Charamba et al., 2019). However, all items in the HDDS had Loevinger coefficients < 0.3, implying that they are not Guttman items, that is, they are not monotonically arranged according to the level of food insecurity they measure. In addition, items 2 and 5 of the HFIAS were not monotonically placed among the other items. The results from the ROC curve showed that the HDDS is effective in separating Windhoek households according to their dietary quality and quantity. Vellema et al. (2016) validated the HDDS and HFIAS against household income and the Progress out of Poverty Index, among other variables, and Nickanor et al. (2017) compared household food security levels across households' socio-economic characteristics and observed a relationship between food insecurity and household type, location and LPI, with food insecurity levels increasing with poverty levels. McCordic et al. (2022) observed a negative correlation between their aggregate index and inconsistent access to water, electricity, cooking fuel and cash income, with different correlation magnitudes observed across cities in the Global South. The contribution of the current study to the food security measurement literature is that it is probably the first initiative to bring together the experience-based, coping strategy and dietary diversity indicators into one measure of food security using IRT methods.

2 Material and methods

2.1 Research objectives

The objectives of the study are to:

1. Create an aggregate metric for assessing food access, utilization and stability by aggregating the HDDS, HFIAS and MIHFP score items using the Rasch testlet item response theory model.

2. Internally and externally validate the proposed metric against the indices whose items were aggregated and against common determinants and consequences of food insecurity.

To address the stated objectives, the investigation first created an aggregate index for Windhoek households’ food access, utilization and stability by aggregating the HDDS, MIHFP and HFIAS items using the testlet item response model and validated the model against the Lived Poverty Index (LPI) and household income levels. In addition, the metric was correlated against the HDDS, HFIAP and MIHFP categories (Mutea et al., 2019; Bilinsky & Swindale, 2010) to assess the individual contribution of the HDDS, HFIAS and MIHFP to the new measure.

2.2 The data

The current study is based on a secondary analysis of data collected through a face-to-face survey in Windhoek, the capital city of Namibia. The data were collected from urban households under a study funded by the Open Society Foundation for South Africa and conducted by the Department of Statistics and Population Studies of the University of Namibia (UNAM) in partnership with the African Food Security Urban Network (AFSUN), the Hungry Cities Partnership (HCP) and the Balsillie School of International Affairs, aimed at assessing the food security status of urban households in the Global South. A total of 875 households were selected through a two-stage random sampling procedure. The first stage involved the random selection of 35 Primary Sampling Units (PSUs) demarcated for the 2011 Population and Housing Census (Namibia Statistics Agency, 2013), using probability proportional to size (PPS) sampling from all 10 Windhoek constituencies, where the number of PSUs selected per constituency was determined by the number of PSUs, the number of households and the population in the constituency. Only one PSU was selected from the Windhoek Rural constituency, on account of its proximity to urban Windhoek, as the original study was mainly concerned with measuring the food security of urban households. Table 3 gives details on the distribution of PSUs, households and population per constituency. The second stage involved the systematic selection of 25 households from each of the 35 PSUs. Data on several demographic characteristics and socio-economic attributes, including housing, income levels, access to water, cooking energy, electricity, access to medical facilities, and food access and consumption patterns, were collected from household heads or their proxies using the AFSUN-HCP Household Food Security Baseline Survey questionnaire. The food access and consumption data collected include the HFIAS, HDDS and MIHFP. The data were captured electronically on tablets.
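The two-stage design can be sketched in Python as follows. This is an illustration under stated assumptions rather than the survey's actual procedure: the text does not say which PPS algorithm was used, so systematic PPS selection is assumed here, and the PSU sizes, household lists and function names are all hypothetical.

```python
import random


def pps_systematic(sizes, n, rng):
    """Select n unit indices with probability proportional to size.

    Assumption: systematic PPS with a random start. A unit larger than
    the sampling interval could be selected more than once.
    """
    interval = sum(sizes) / n
    start = rng.uniform(0, interval)
    chosen, cumulative, idx = [], 0.0, 0
    for k in range(n):
        target = start + k * interval
        # Advance until the cumulative size range of unit idx covers the target.
        while idx < len(sizes) - 1 and cumulative + sizes[idx] <= target:
            cumulative += sizes[idx]
            idx += 1
        chosen.append(idx)
    return chosen


def systematic_sample(units, n, rng):
    """Select n units at a fixed interval after a random start."""
    step = len(units) / n
    start = rng.uniform(0, step)
    return [units[min(int(start + k * step), len(units) - 1)] for k in range(n)]


rng = random.Random(2024)

# Hypothetical frame: 100 PSUs with household counts between 50 and 110.
psu_sizes = [50 + (i % 7) * 10 for i in range(100)]
selected_psus = pps_systematic(psu_sizes, 35, rng)

# Second stage: 25 households from each selected PSU.
sample = {
    psu: systematic_sample(list(range(psu_sizes[psu])), 25, rng)
    for psu in selected_psus
}
total_households = sum(len(h) for h in sample.values())
```

With 35 PSUs and 25 households per PSU, the sketch reproduces the stated sample size of 875.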

2.3 Estimation of the aggregate food access index

A testlet is a bundle of items that share a common “stimulus” (Wang & Wilson, 2005), that is, items that are related to a common sub-component of the latent variable being measured. Testlet models were developed to handle tests in which items can be grouped into subtests, each measuring a sub-category of the construct. Items within one subtest may be related, which can violate the local item independence assumption. Testlet models therefore include random parameters that account for the dependence among items within the same bundle. The Rasch Testlet Model (Wang & Wilson, 2005) is a model for both dichotomous and polytomous items. Suppose \({\varvec{X}}\) is the manifest variable that codes the response to a food security question as \(k, k = 1\dots K\). The model used in the current study, generalized to allow items to have different discrimination abilities and adjusted to cater for polytomous items, has an IRF that relates the probability of endorsing a specific response category to a given proficiency level, as in Eq. (1).

where \({P}_{jik}\) represents the probability of person \(j\) with ability \({\theta }_{j}\) getting a score \(k\) on item \(i\) in testlet \(d\) with step difficulty \({b}_{ik}\), \({\theta }_{j}\) is the food insecurity level for household \(j\) and \({a}_{i}\) is the discriminant parameter for item \(i\). \({\gamma }_{jd(i)}\) is the random effect of testlet \(d\), which contains item \(i\), for respondent \(j\). The model exhibits a monotonic non-decreasing relationship between the latent variable and the probability of affirming an item response category. As \(\theta\) increases, the probability of affirming or endorsing a higher response category increases. As \({b}_{ik}\) increases, the item category becomes “harder” and the probability of affirming that response category decreases for a household with a specific value of \(\theta\). The discriminant parameter \(a\) must be at least 0 and indicates the strength of the connection between the latent variable \(\theta\) and the manifest questionnaire item \({X}_{i}\). When \(a\) is near 0 the connection is weak, and when \(a\) is 0 there is no connection. When \(a\) is positive and large, the item is highly discriminating and there is a strong connection between the item and the latent measure (food insecurity), implying less measurement error and a high ability to separate households according to their food insecurity classes. The model parameters and hyper-parameters have the following prior distributions:

where N, LN and IG denote the Normal, Lognormal and Inverse Gamma distributions respectively. The Lognormal distribution was selected for the discriminant parameter (\(a\)) to obtain non-negative values near 1 and to make the item-characteristic curve monotonically non-decreasing. The testlet model was chosen for the current study ahead of standard IRT models for polytomous data on the assumption that items in the same testlet (HFIAS, HDDS and MIHFP) might be correlated. All 9 items of the HFIAS scale (testlet 1), all 12 items of the HDDS (testlet 2) and all 12 items of the MIHFP (testlet 3) were used in the current testlet model. Local item independence was not tested for the current model; however, a testlet variance of at least 0.25 implies significant testlet effects (Wang & Wilson, 2005).
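To make the structure of the IRF concrete, the sketch below computes category probabilities for one item under an assumed parametrization: a generalized partial credit form in which each step from one category to the next contributes \(a_i(\theta_j + \gamma_{jd(i)} - b_{ik})\) to the log-odds, following the adjacent-category logit of Wang and Wilson (2005). This form is an assumption that may differ in detail from Eq. (1), and the function name and parameter values are hypothetical.

```python
import math


def category_probabilities(theta, gamma, a, steps):
    """Category probabilities for one item with K = len(steps) + 1 categories.

    theta: household food insecurity level
    gamma: testlet effect for this household and the item's testlet
    a:     item discriminant parameter (assumed >= 0)
    steps: step difficulties, one per transition to the next category
    """
    # Exponent of the lowest category is 0; each higher category adds one step term.
    exponents = [0.0]
    for b in steps:
        exponents.append(exponents[-1] + a * (theta + gamma - b))
    # Subtract the maximum before exponentiating for numerical stability.
    largest = max(exponents)
    weights = [math.exp(e - largest) for e in exponents]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical four-category HFIAS-style item.
steps = [-1.0, 0.0, 1.0]
low = category_probabilities(theta=-3.0, gamma=0.0, a=1.0, steps=steps)
high = category_probabilities(theta=3.0, gamma=0.0, a=1.0, steps=steps)
flat = category_probabilities(theta=3.0, gamma=0.0, a=0.0, steps=steps)
```

A household low on the continuum is most likely to give the lowest response, a household high on the continuum the highest, and an item with \(a = 0\) carries no information because every category is equally likely whatever the value of \(\theta\).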

The model parameters were estimated by Bayesian estimation with Markov Chain Monte Carlo (MCMC) using Gibbs sampling in WinBUGS 1.4.3 (Lunn et al., 2000). Convergence was checked by inspecting the trace and history plots of the four chains, which were initialized with different starting values, and by confirming that the Brooks-Gelman-Rubin (BGR; Gelman et al., 1995) statistic was close to 1. The category characteristic curves (CCC) were assessed to check whether the combined function is monotonically non-decreasing.
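The idea behind the convergence statistic can be illustrated with the classic variance-based potential scale reduction factor, which compares between-chain and within-chain variability. This is a sketch only: WinBUGS computes its own version of the diagnostic, so the values below are not expected to match its output exactly, and the function name and chain values are hypothetical.

```python
from statistics import mean, variance


def potential_scale_reduction(chains):
    """Variance-based Gelman-Rubin statistic for equal-length chains."""
    n = len(chains[0])
    if any(len(c) != n for c in chains):
        raise ValueError("all chains must have the same length")
    chain_means = [mean(c) for c in chains]
    within = mean([variance(c) for c in chains])   # average within-chain variance
    if within == 0:
        raise ValueError("within-chain variance is zero")
    between = n * variance(chain_means)            # between-chain variance
    pooled = (n - 1) / n * within + between / n    # pooled variance estimate
    return (pooled / within) ** 0.5


# Hypothetical draws for one parameter from four chains.
base = [0.0, 1.0] * 50
mixed = [base, base, base, base]                            # chains agree
stuck = [[x + 5.0 * c for x in base] for c in range(4)]     # chains disagree

r_mixed = potential_scale_reduction(mixed)
r_stuck = potential_scale_reduction(stuck)
```

Values close to 1 suggest that the chains are sampling from the same distribution, whereas values well above 1 suggest that they have not yet converged.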

2.4 Categorization of the IRT food security measure

Households were categorized into food security levels using a method similar to one of the expert judgement methods proposed by Johnson (2004) for categorizing households under the HFSSM into food secure, mildly food insecure, moderately food insecure and severely food insecure categories. The method assumes that a household belonging to a particular food insecurity class has a fixed minimum probability of affirming a specific questionnaire item. For example, experts may want to find a cut-point \({\tau }_{2}\) so that \(\mathrm{Pr}({X}_{i7}=1|hunger)=0.95\) (or some other large probability), where item 7 is the item that asks the individual if they were ever hungry but could not eat over the past year, and then solve the equation \({Pr}_{7}\left({\tau }_{2}\right)=0.95\). In the current study, the specific items demarcating households according to food security categories were determined according to Coates et al.'s (2007) categorization of households into HFIAP classes. The current procedure is based on the existence of a measure \({Y}_{k}\), \(k=1, 2, 3, 4\), denoting the realization that a household is food secure, mildly food insecure, moderately food insecure or severely food insecure respectively. A perfect monotone relationship is assumed between the food insecurity category and the propensity \(\theta\) measured from the IRT model, such that there exist cut-points \({\tau }_{1}\), \({\tau }_{2}\), and \({\tau }_{3}\) such that:

and these points were used to categorize households into four food security categories. The relationship between \({Y}_{k}\) and \(\theta\) is assumed to be probabilistic, where \(\theta\) depends on the food security category \({Y}_{k}\) of the household. Let \(F(\theta \mid Y=y)\) denote the distribution of the propensity given the household food security status \(Y=y\). The distributions are assumed to be stochastically ordered such that:

for all values \(t\). The propensities are assumed to be normally distributed within each food security category with means \({\mu }_{1}\le {\mu }_{2}\le {\mu }_{3}\le {\mu }_{4}\) and \({\sigma }_{1}={\sigma }_{2}={\sigma }_{3}={\sigma }_{4}.\)
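The cut-point rule and the stochastic ordering described in this subsection can be sketched in LaTeX as follows. This is an illustrative form derived from the surrounding definitions, not necessarily the authors' exact notation. Two points are assumptions: each boundary value \(\tau\) is assigned to the lower category, and \(F(t \mid Y=y)\) is read as a cumulative distribution function, so that more food-insecure categories have smaller values at every \(t\).

```latex
Y =
\begin{cases}
1 & \text{if } \theta \le \tau_1,\\
2 & \text{if } \tau_1 < \theta \le \tau_2,\\
3 & \text{if } \tau_2 < \theta \le \tau_3,\\
4 & \text{if } \theta > \tau_3,
\end{cases}
\qquad
F(t \mid Y=1) \;\ge\; F(t \mid Y=2) \;\ge\; F(t \mid Y=3) \;\ge\; F(t \mid Y=4)
\quad \text{for all } t.
```

With normal distributions of equal variance, this ordering holds exactly when the category means are ordered \(\mu_1 \le \mu_2 \le \mu_3 \le \mu_4\).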

According to the computation of the Household Food Insecurity Access Prevalence (HFIAP) categories (Coates et al., 2007), a household that responded with a 1 (never) or 2 (rarely) to item 1 of the HFIAS is assumed to be food secure, while households responding to the item with 3 (sometimes) or 4 (often) are considered food insecure. This can be mathematically written as:

and implicitly

However, Eq. (2) implies that all items are Guttman items and that all severely food insecure households have infinitely large propensities. This assumption was relaxed based on the findings of Charamba et al. (2019), where items 2 and 5 of the HFIAS scale were not monotonically placed among the other items of the scale and none of the HDDS items were Guttman items, as the measure was not designed to depict monotonicity and the food groups in the testlet are not weighted. It was assumed that households in each category affirm a given discriminating item with a probability of 0.7 (expert judgement). As a result, a response of 3 (sometimes) to item 1 “Worry that the household would not have enough food”, a response of 3 to item 3 “Eat a limited variety of foods” and a response of 3 to item 6 “Eat fewer meals in a day” were set as boundaries for calculating the cut-off points, such that \({Pr}_{13}\left({\tau }_{1}\right)=0.7\), \({Pr}_{33}\left({\tau }_{2}\right)=0.7\) and \({Pr}_{63}\left({\tau }_{3}\right)=0.7\) were used to determine \({\tau }_{1}\), \({\tau }_{2}\) and \({\tau }_{3}\).
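Solving an equation of the form \({Pr}_{ik}(\tau) = 0.7\) has no closed form for a polytomous item, but it can be done numerically. The sketch below uses bisection under several assumptions that are not stated in the text: \({Pr}_{ik}(\tau)\) is read as the probability of responding in category \(k\) or higher, the item follows a generalized partial credit form, the testlet effect is set to 0, and the item parameters are hypothetical.

```python
import math


def category_probabilities(theta, a, steps):
    """Category probabilities for categories 1..K, with K = len(steps) + 1."""
    exponents = [0.0]
    for b in steps:
        exponents.append(exponents[-1] + a * (theta - b))
    largest = max(exponents)
    weights = [math.exp(e - largest) for e in exponents]
    total = sum(weights)
    return [w / total for w in weights]


def prob_at_or_above(theta, a, steps, category):
    """Probability of responding in `category` or higher (categories coded 1..K)."""
    return sum(category_probabilities(theta, a, steps)[category - 1:])


def solve_cut_point(a, steps, category, target=0.7, lo=-10.0, hi=10.0, tol=1e-9):
    """Bisection for the theta at which prob_at_or_above equals `target`."""
    p_lo = prob_at_or_above(lo, a, steps, category)
    p_hi = prob_at_or_above(hi, a, steps, category)
    if not p_lo < target < p_hi:
        raise ValueError("target probability is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if prob_at_or_above(mid, a, steps, category) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


# Hypothetical four-category item; cut-point for a response of 3 or higher.
tau = solve_cut_point(a=1.0, steps=[-1.0, 0.0, 1.0], category=3)
```

Bisection is adequate here because, for \(a > 0\), the probability of responding at or above a given category increases with \(\theta\), so the target is crossed exactly once.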

2.5 Internal and external validation of the composite IRT food insecurity measure

The IRT food insecurity measure was checked for internal consistency by assessing the strength of its association with the subscales that were combined to make up the aggregate measure, i.e., the HFIAS, the HDDS and the MIHFP, using Pearson’s correlation coefficient (following McCordic et al., 2022). A negative correlation means that an increase in the subscale corresponds to a decrease in the aggregate index, while a low correlation means that the subscale did not make a major contribution (lower discrimination ability). The HFIAP was computed according to Coates et al. (2007). As there is no standard way of categorizing the HDDS and MIHFP, the HDDS categorization was tailor-made for the current study following Oldewage-Theron and Kruder (2008), Swindale and Bilinsky (2006) and Huluka and Wondimagegnhu (2019): 1–3 food groups were categorized as low, 4–6 as medium, 7–9 as high and 10–12 as very high dietary quality. The months of inadequate household food supply were categorized by reversing the Mutea et al. (2019) categories: households that had inadequate supply for 9–12 months were considered severely food insecure, 6–8 months moderately food insecure, 3–5 months mildly food insecure and 0–2 months food secure.
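The two categorization rules above translate directly into threshold functions. In this Python sketch the function names are hypothetical. An HDDS of 0 is not covered by the stated ranges, so it is rejected here as an assumption rather than assigned to a class.

```python
def hdds_category(food_groups):
    """Dietary quality class from the number of food groups eaten (1-12)."""
    if not 1 <= food_groups <= 12:
        raise ValueError("HDDS outside the 1-12 range covered by the categories")
    if food_groups <= 3:
        return "low"
    if food_groups <= 6:
        return "medium"
    if food_groups <= 9:
        return "high"
    return "very high"


def mihfp_category(months_inadequate):
    """Food security class from the number of months of inadequate provision (0-12)."""
    if not 0 <= months_inadequate <= 12:
        raise ValueError("months must be between 0 and 12")
    if months_inadequate <= 2:
        return "food secure"
    if months_inadequate <= 5:
        return "mildly food insecure"
    if months_inadequate <= 8:
        return "moderately food insecure"
    return "severely food insecure"


# Hypothetical household: 5 food groups in the last 24 h, 7 months of shortage.
example = (hdds_category(5), mihfp_category(7))
```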

For external validation, the IRT aggregate metric was correlated with household income and the Lived Poverty Index (LPI). As the surveyed households are urban households, they purchase most of the food they consume, so household income is a determinant of food insecurity and a relationship between urban food access and household income is expected. The LPI is a barometer of quality of life that measures the subjective experience of poverty (Mattes et al., 2016) and indicates how often a household goes without basic commodities, including food. A household that lacks basic commodities (food included) is therefore likely to be food insecure, making the LPI both a determinant and a consequence of food insecurity. The new IRT index was thus externally validated against household income and the LPI, which captures most of the variables against which McCordic et al. (2022) validated their index.

Income quartiles were computed by first ranking the households from poorest to wealthiest based on household income and then grouping them into four quartiles, each containing approximately 25% of the households, with 1 assigned to the wealthiest and 4 to the poorest quartile. The LPI was computed on a 5-point scale (from 0, absence of poverty, to 4, constant absence of basic necessities) and then categorized into four classes according to the quartiles (< 1, 1–2, 2–3, 3–4), which were considered to have a one-to-one correspondence with the food security classes. The agreement between the household food insecurity levels measured by the testlet model and those from the other measurement methods was assessed using Spearman’s rank correlation coefficient (\(r\)) and Cohen's Kappa (\(\kappa\)).
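
The steps in this paragraph can be sketched in a few self-contained functions. This is an illustration rather than the code used in the study: the function names are hypothetical, ties in income are broken by input order, and the handling of LPI values that fall exactly on a class boundary (assigned to the upper class) is an assumption, as the text does not specify it.

```python
from collections import Counter


def income_quartiles(incomes):
    """Rank households poorest to wealthiest; label quartiles 4 (poorest) to 1."""
    n = len(incomes)
    order = sorted(range(n), key=lambda i: incomes[i])  # poorest first
    labels = [0] * n
    for rank, idx in enumerate(order):
        labels[idx] = 4 - (rank * 4 // n)  # first quarter of ranks -> 4
    return labels


def lpi_class(lpi):
    """Map an LPI score in [0, 4] to classes 1-4 (boundary rule is an assumption)."""
    if not 0 <= lpi <= 4:
        raise ValueError("LPI must lie between 0 and 4")
    return 1 if lpi < 1 else 2 if lpi < 2 else 3 if lpi < 3 else 4


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


def cohen_kappa(a, b):
    """Cohen's kappa for two equally long lists of category labels."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    ca, cb = Counter(a), Counter(b)
    expected = sum(ca[k] * cb[k] for k in ca.keys() | cb.keys()) / n ** 2
    if expected == 1:  # both classifications use one identical category
        return 1.0
    return (observed - expected) / (1 - expected)
```

With real data, `spearman` would be applied to the continuous scores and `cohen_kappa` to the four-level classes, for example the IRT classes against the income quartile labels.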

3 Results

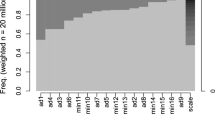

From the category characteristic curve (CCC) in Fig. 1, the probability of endorsing an item category for the combined testlet data is monotonically non-decreasing. For example, households with lower ability parameters (food insecurity level) are more likely to endorse the first category (never) in the HFIAS testlet and “no” in the HDDS and MIHFP testlets and less likely to endorse category 4 (often). On the other hand, households high up on the food insecurity continuum are more likely to endorse category 4. However, category 2 is less likely to be endorsed by households across all food security classes as its distribution is flatter.

Figure 2 shows the ability (IRT food insecurity) estimates for all the households in the study. The estimates range roughly between \(-3\) and \(3\) across all households, and there is no obvious pattern in the distribution of food insecurity levels according to the household observation order.

Figure 3 gives the distribution of the difficulty parameter estimates across items. The first 9 items are from the HFIAS scale, items 10 to 21 are from the HDDS section and items 22 to 33 are from the MIHFP scale. Items in the HFIAS testlet are relatively difficult. Item 10, which asked about the consumption of starch products such as pasta, rice and maize, millet and sorghum flour meals, was the easiest item (consumed by many), while item 16 on the consumption of fish and item 17 on the consumption of beans, nuts and lentils were the most difficult (less consumed). Item 14, which collected data on meat consumption, also showed a downward peak (low difficulty), implying that Windhoek households might have high meat consumption.

The discrimination parameter (\({a}_{i}\)) estimates for the items are given in Fig. 4. The results show that the items in the HDDS are less discriminating, that is, they contribute less to the aggregate household food insecurity levels than items in the HFIAS and MIHFP testlets. For example, item 12 on vegetable consumption and item 16 on fish and seafood consumption were less discriminating.

From the results in Table 4, households are distributed according to their food insecurity levels from -3.54 (less food insecure) to 3.03 (higher levels of food insecurity), with an average of about 0. The first and second thresholds were disordered, as the first threshold was higher than the second, implying that the transition from Category 2 to Category 3 was easier than the transition from Category 1 to Category 2. However, the third threshold was higher, implying that the last category of the HFIAS testlet was endorsed by households higher up on the food insecurity continuum. In addition, items in the MIHFP testlet were more difficult than items in the HDDS testlet, implying that there were months when some Windhoek urban households had inadequate food supply.

3.1 Calculation of cut-off points for categorization of households into food insecurity classes

The values that were input into Eq. (1) to calculate the θ values for the cut-off points \({\tau }_{1}\), \({\tau }_{2}\) and \({\tau }_{3}\) are shown in Table 5. The values were then used to categorize households according to their estimated food insecurity levels, with the resulting classes shown in Table 6.

3.2 Comparison with other food security/insecurity measures

The results are shown in Table 6, together with the categorisation from the Household Food Insecurity Access Prevalence (HFIAP: see Nickanor et al., 2017; Charamba et al., 2019).

The results in Table 6 show that there is little difference in the proportions of respondents categorized as food insecure by the different indices, and this is supported by the high p-values from the Wilcoxon paired ranks test (p > 0.05). However, the IRT index classified more households as food secure than the other indices.

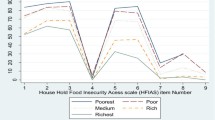

Table 7 gives the correlation coefficients (upper triangle) and Cohen’s Kappa (\(\kappa\)) values (lower triangle), which measure the correlation between the food security measures and the agreement between their categories, respectively, for the different measurement methods. The results show that the IRT food insecurity measure had the highest correlations and the highest Cohen’s Kappa values with the individual food insecurity scores that were aggregated in its formation; thus, the index is internally consistent. The IRT categories had a substantial agreement (\(\kappa\) = 0.644) with the HFIAP categories and a fair agreement (\(\kappa\) = 0.24) with the HDDS food insecurity classes. Food insecurity decreased with increasing income, with a moderate correlation according to Mukaka (2012) (\(r=-0.533\)), and increased with the LPI, with a moderate positive correlation (\(r=0.679\)); that is, low-income households in extreme poverty had higher food insecurity levels. The IRT index behaved as expected against the LPI and income levels, which makes the index externally valid.
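
The verbal labels used above ("substantial", "fair", "moderate") can be reproduced with a small lookup. The sketch below assumes the widely used Landis and Koch bands for \(\kappa\), which the text does not cite explicitly, and the rule-of-thumb bands for correlation size associated with Mukaka (2012); both sets of boundaries and the function names are assumptions made for illustration.

```python
def kappa_label(kappa):
    """Verbal label for Cohen's kappa (assumed Landis and Koch bands)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


def correlation_label(r):
    """Verbal label for the size of a correlation (assumed rule-of-thumb bands)."""
    size = abs(r)  # the sign gives the direction, not the strength
    if size < 0.30:
        return "negligible"
    if size < 0.50:
        return "low"
    if size < 0.70:
        return "moderate"
    if size < 0.90:
        return "high"
    return "very high"
```

Applied to the values reported in Table 7, `kappa_label(0.644)` gives "substantial", `kappa_label(0.24)` gives "fair", and both `correlation_label(-0.533)` and `correlation_label(0.679)` give "moderate".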

4 Discussion

Poverty reduction, food insecurity and undernutrition remain high on the development agenda. The need for good food insecurity measurements for project targeting and governance cannot be overemphasized. Targeting based on poor indicators can be costlier than not targeting at all. In practice, it is difficult to determine which measure is best for which situation (Maxwell et al., 2013), and oftentimes the classifications of households by different indices do not agree. As such, having a single measure that captures all the information presented by different indicators might be the best alternative in food security measurement. The objective of the current study was to develop an aggregate food insecurity indicator for improved targeting of safety nets, policy intervention and governance by incorporating household experiential perceptions of food insecurity (HFIAS), a dietary quality measure (HDDS) and the duration of food insecurity (MIHFP) into one food access measure. The metric can be constructed from evidence-based data that can be collected timeously and at relatively low cost, as the component indicators can be, and usually are, collected in one questionnaire, thus limiting the time and financial implications. In addition, the application of the testlet model requires limited time and resources as free software and programmes are readily available.

The category characteristic curves (CCC) for the combined data showed monotonicity, that is, lower-order response categories were more likely to be endorsed by food-secure households while higher-order response categories were more likely to be endorsed by households that were more food insecure. Similar findings were observed by Charamba et al. (2019) in their study to validate the use of the HFIAS for the measurement of Windhoek households' food security levels. The curve for the second category was flat, implying that it was chosen by fewer respondents. This could imply that, due to income disparities in Windhoek, there is a higher proportion of food secure and severely food insecure households than of mildly and moderately food insecure households. It could also imply that the second category option was not necessary for the Windhoek households. The difficulty parameter estimates for the HDDS testlet were lower, probably because households endorsed similar food groups. However, the higher difficulty parameter for the MIHFP might mean that the households had several months of inadequate food provisioning.

The IRT food insecurity measure recorded the highest correlation and level of agreement with the FANTA indicators that were incorporated in its computation, suggesting that the index is internally consistent and that the single components measured by these indicators were a proper representation of the individual metrics being combined. In addition, the metric behaved as expected: it increased with poverty levels and MIHFP and decreased with increased dietary quality and income levels, suggesting that poorer households had higher food insecurity levels. This is an indication that the IRT measure can be further developed into a food security measurement that combines access to dietary variety, the months of inadequate household food provision, and anxiety and uncertainty, insufficient quality and quantity of food intake and their physical consequences into one measure. The correlation coefficients and Cohen's Kappa (κ) agreement measurements between the IRT food security measure and the FANTA measurements were the lowest for the HDDS. This is probably because the testlet model considered the HDDS items to be less discriminating than the HFIAS and MIHFP items, which could stem from a similar diet across households.

The current index was found to be internally consistent and externally valid, with moderate correlations between food insecurity status and both LPI and income levels. This concurs with McCordic et al. (2022), who observed significant moderate correlations between inconsistent access to water, medical facilities, electricity and cash income and a food insecurity index computed by combining the HFIAS, HDDS and MAHFP via min-max normalization and geometric-mean aggregation. The lower Kappa values for the HDDS and MIHFP (despite relatively high correlations) may be due to the cut-off points used for categorizing the IRT, HDDS and MIHFP measures, which are non-standard and might be worth further investigation. Alternatively, the lower Kappa values might mean that the indices classify the same households differently. The prevalence estimates that each indicator provides are functions of the cut-off points used for categorizing households into different food security classes. Since there are no universally accepted cut-offs for most of these measures, they may be subject to further research and debate. The cut-offs used in this paper are not intended for application in other contexts without location-specific considerations. They are just meant to trigger investigation and debate on the possibility of combining several metrics of food insecurity into one measure. Further studies are required on algorithms for deriving cut-off points for different metrics, including the IRT metric itself. In addition, the food insecurity classes were compared with income and LPI quartiles. Food insecurity classes in Windhoek do not necessarily have equal representation, and this might have posed a challenge in the external validation process used.

The current study was conducted on data collected from urban households. However, the applicability of the HFIAS, HDDS and MIHFP to urban households has been questioned (Haysom & Tawodzera, 2018), as they were originally designed for application in rural communities. It is worth investigating the applicability of the current model to data collected from rural households, for which these tools were specifically designed. Considering the income disparity and dietary diversity among households in Windhoek, it might also be worth incorporating a variable that accounts for subgroups of respondents that might exist within a population (see Wunderlich & Norwood, 2006).

The validity of the IRT propensity as a measure of food security comes down to whether the IRT latent construct is associated with true food security (Johnson, 2004). Johnson (2004) added that if true food security is not related to the IRT construct, it does not matter how well the item responses and the construct adhere to the specific IRT model, and that this relationship can only be ascertained through validity studies. A validity study suggested by Johnson (2004) requires monitoring several individuals (households) over 12 months to determine their food security status, then administering a food security tool, and then conducting an analysis to determine the relationship between the IRT propensity and the actual food security levels. Further studies incorporating such a validity study might be worth undertaking to assess the validity of using the combined measure in different contexts. The proposed measure does not cover all aspects of food access, as those go beyond what the HFIAS, HDDS and MIHFP measure, but rather provides a proxy for its measurement.

McCordic et al. (2022) suggested normalizing the HFIAS, HDDS and MAHFP before aggregating them so that they contribute equally, arguing that other methods would only support household ranking rather than scoring. However, normalizing is not necessary for the current model, nor is equal contribution assumed. The IRT model is advantageous as an estimation method in that it provides mechanisms for assessing the contribution of each item to each measure based on the item discrimination and item goodness-of-fit parameters (see Charamba et al., 2019). In addition, it supports scoring as the latent variable is measured on a continuum. However, it is difficult to perform sensitivity analysis and construct confusion matrices with the current model, because food insecurity is measured on a continuum and classification, which is done later, is not part of the model algorithm.

Although all the items in the HFIAS, HDDS and MIHFP were used to calculate the current metric, the items on vegetable and on fish and seafood consumption had lower discrimination ability, that is, they play a less significant role in separating households according to their food insecurity levels and could have been dropped from the analysis. Charamba et al. (2019) found these two items to have low (0.21) and negative (-0.41) discrimination ability, respectively, and to have the highest inlier-sensitive fit (infit) and outlier-sensitive fit (outfit) statistics, outside the acceptable range (Na et al., 2015; Nord, 2014), implying that they do not fit well in the scale for this data. Infit and outfit statistics, normally expressed as mean squares (MNSQ) of standardized residuals, indicate the goodness of fit of a test item to the model and the index being measured. Although the items made a minimal contribution to the scale, they were not dropped from the analysis because the IRT scale was meant to be an aggregate of the HFIAS, HDDS and MIHFP in which no item was dropped, and it was to be compared against these individual scores for internal consistency checks. However, for estimation purposes, one might consider dropping items with minimal contribution to the latent measure. Item performance may not be universal and need not be generalized to all data sets. For example, items that performed poorly in the Windhoek households' data set might behave differently in a different set-up with different food systems. The lack of statistical differences between the food security categories determined from the IRT index and the other indices, observed in Table 6, could be because the statistical power was compromised by a small sample size (only four categories). However, the use of a non-parametric test for the comparison could have addressed the problem of a small sample size.
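
To make the infit and outfit statistics mentioned above concrete, the following sketch computes both mean squares for a single item. It assumes a plain dichotomous Rasch item rather than the testlet model used in the study, and the function names and example values are hypothetical. Outfit is the unweighted mean of the squared standardized residuals, while infit weights the squared residuals by their model variances.

```python
import math


def rasch_prob(theta, b):
    """Probability of endorsing a dichotomous Rasch item with difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_fit(responses, thetas, b):
    """Return (infit, outfit) mean squares for one dichotomous item.

    responses: 0/1 answers, one per household
    thetas:    latent levels of the same households
    b:         item difficulty
    """
    sq_resid, variances, z_sq = [], [], []
    for x, theta in zip(responses, thetas):
        p = rasch_prob(theta, b)
        w = p * (1 - p)                 # model variance of the response
        sq_resid.append((x - p) ** 2)   # squared raw residual
        variances.append(w)
        z_sq.append((x - p) ** 2 / w)   # squared standardized residual
    outfit = sum(z_sq) / len(z_sq)           # sensitive to outlying responses
    infit = sum(sq_resid) / sum(variances)   # information-weighted
    return infit, outfit


# Hypothetical example: one unexpected non-endorsement by a household far
# above the item difficulty inflates outfit more than infit.
infit, outfit = item_fit([0, 1, 1], [3.0, 0.0, 0.0], b=0.0)
```

Values near 1 indicate that responses are about as variable as the model expects; the acceptable range applied in practice is a convention and is not encoded in this sketch.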

5 Conclusion and recommendations

Much of the demand for indicators of food insecurity is to measure the impact of programmes and policies and to determine household groups that require interventions. The conceptual and measurement frameworks proposed in the current study present opportunities for a more holistic measure of food insecurity, as the proposed measure has been externally validated against other measures of food security and household socio-economic status. In addition, since most food security measurements were originally designed for rural settings, the combined measure can provide an alternative for food insecurity measurement in urban areas, where food systems are different, income disparity is high (especially in Windhoek), diets can be defined more by ethnicity than by food security levels, and formal safety nets are lacking. The study can help in the translation of research into policy as it avoids the use of single measures which might not be congruent. However, the measure has only been computed using a single urban sample in the Global South and hence is not generalisable unless it is tested in several different set-ups with different food systems, possibly in both rural and urban settings. It is difficult to establish cut-off points for classifying households into different levels of food insecurity. The empirical results in the current study are not intended to provide definitive thresholds or cut-offs for any of the indicators discussed, but only to trigger further research and debate on a composite food insecurity metric. Further research and analysis over a much broader range of contexts will be required to empirically assess the applicability of the proposed model in measuring household food insecurity, to substantiate the current findings and to document whether the proposed food insecurity construct applies to different contexts.

Considering that data similar to those of common metrics such as the HFSSM and the FCS have been incorporated into the scale, further research may consider incorporating other metrics such as the CSI into the computation of the food insecurity construct using the testlet model. For the IRT measure, determining cut-off points will require following the suggestions of Johnson (2004).

Data availability

The data is available from the authors upon request.

References

Abuelhaj, T. (2007). Methodological concerns in the measurement of undernourishment, dietary diversity and household food insecurity. Retrieved July 23, 2018, from www.ipcinfo.org

Barrett, C. B. (2010). Measuring food insecurity. Science, 327(5967), 825–828.

Bertelli, O. (2020). Food security measures in sub-Saharan Africa. A validation of the LSMS-ISA scale. Journal of African Economies, 29(1), 90–120.

Bilinsky, P., & Swindale, A. (2010). Months of adequate household food provisioning (MAHFP) for measurement of household food access: Indicator guide. Washington, DC: FANTA. Version 4.

Charamba, V., Nickanor, N., & Kazembe, L. N. (2019). HCP discussion paper no. 37: Validation of the HCP survey tool for measuring urban food insecurity: An item response theory approach.

Coates, J. (2013). Build it back better: Deconstructing food security for improved measurement and action. Global Food Security, 2(3), 188–194.

Coates, J., & Maxwell, D. (2012). Reaching for the stars?: Universal measures of household food security. UNFAO international scientific symposium on food security and nutrition security information: From valid measurement to effective decision making. Rome.

Coates, J., Swindale, A., & Bilinsky, P. (2007). Household food insecurity access scale (HFIAS) for measurement of food access: Indicator guide. Washington, DC: Food and Nutrition Technical Assistance Project, Academy for Educational Development. Version 3.

Coates, J., Frongillo, E. A., Rogers, B. L., Webb, P., Wilde, P. E., & Houser, R. (2006). Commonalities in the experience of household food insecurity across cultures: What are measures missing? The Journal of Nutrition, 136(5), 1438S–1448S.

Chung, K. (Ed.). (1997). Identifying the food insecure: The application of mixed-method approaches in India. The International Food Policy Research Institute.

Deitchler, M., Ballard, T., Swindale, A., & Coates, J. (2010). Validation of a measure of household hunger for cross-cultural use. Washington, DC: FANTA.

ENN. (2007). Simple tools for measuring household food access and dietary diversity. Field Exchange, 31, 14. Retrieved April 2, 2022, from www.ennonline.net/fex/31/simpletools

FAO. (1996). Rome Declaration on world food security. World Food Summit. Rome: Food and Agriculture Organization.

Gelman, A., Carlin, J. B., Stern, H. S., & Rubin, D. B. (1995). Bayesian data analysis. Chapman and Hall/CRC.

Haysom, G., & Tawodzera, G. (2018). Measurement drives diagnosis and response: Gaps in transferring food security assessment to the urban scale. Food Policy, 74, 117–125.

Headey, D., & Ecker, O. (2013). Rethinking the measurement of food security: From first principles to best practice. Food Security, 5(3), 327–343.

HLPE. (2017). Nutrition and food systems. High-level panel of experts on food security and nutrition. Rome: Committee on World Food Security.

Huluka, A. T., & Wondimagegnhu, B. A. (2019). Determinants of household dietary diversity in the Yayo biosphere reserve of Ethiopia: An empirical analysis using sustainable livelihood framework. Cogent Food & Agriculture, 5(1), 1690829.

Johnson, M. S. (2004). Item response models and their use in measuring food insecurity and hunger. Washington, DC: NAS Committee on National Statistics Workshop on the Measurement of Food Insecurity and Hunger.

Jones, A., Ngure, F., Pelto, G., & Young, S. (2013). What are we assessing when we measure food security? A compendium and review of current metrics. Advances in Nutrition, 4, 481–506.

Kirkland, T. M., Kemp, R. J., Hunter, L. M., & Twine, W. M. (2013). Toward an improved understanding of food security: A methodological examination based in rural South Africa. Food, Culture & Society, 16(1), 65–84.

Leroy, J., Ruel, M., Frongillo, A., Harris, J., & Ballard, T. (2015). Measuring the food access dimension of food security: A critical review and mapping of indicators. Food and Nutrition Bulletin, 36, 167–195.

Lunn, D. J., Thomas, A., Best, N., & Spiegelhalter, D. (2000). WinBUGS — a Bayesian modelling framework: Concepts, structure, and extensibility. Statistics and Computing, 10, 325–337.

Maxwell, D., Coates, J., & Vaitla, B. (2013). How do different indicators of household food security compare? Empirical evidence from Tigray (pp. 1–19). Feinstein International Center.

Mattes, R., Dulani, B., & Gyimah-Boadi, E. (2016). Africa’s growth dividend? Lived poverty drops across much of the continent. Afrobarometer.

McCordic, C., Frayne, B., Sunu, N., & Williamson, C. (2022). The household food security implications of disrupted access to basic services in five cities in the global south. Land, 11(5), 654.

Mock, N., Morrow, N., & Papendieck, A. (2013). From complexity to food security decision-support: Novel methods of assessment and their role in enhancing the timeliness and relevance of food and nutrition security information. Global Food Security, 2(1), 41–49.

Mukaka, M. M. (2012). A guide to appropriate use of correlation coefficient in medical research. Malawi Medical Journal, 24(3), 69–71.

Mutea, E., Bottazzi, P., Jacobi, J., Kiteme, B., Speranza, C. I., & Rist, S. (2019). Livelihoods and food security among rural households in the north-western Mount Kenya region. Frontiers in Sustainable Food Systems, 3, 98.

Na, M., Gross, A. L., & West, K. P. (2015). Validation of the food access survey tool to assess household food insecurity in rural Bangladesh. BMC Public Health, 15, 1–10.

Namibia Statistics Agency. (2013). Namibia 2011 population and housing census: Main report. Windhoek, Namibia: Namibia Statistics Agency.

National Research Council. (2005). Measuring food insecurity and hunger: Phase 1 report. National Academies Press.

Nickanor, N., Kazembe, L., Crush, J., & Wagner, J. (2017). The supermarket revolution and food security in Namibia (rep., pp. i-87). Kingston, ON and Cape Town: African Food Security Urban Network. Urban Food Security Series No. 26.

Nord, M. (2014). Introduction to item response theory applied to food security measurement: Basic concepts, parameters, and statistics. Retrieved June 30, 2018, from http://www.fao.org/economic/ess/ess-fs/voices/en

Oldewage-Theron, W. H., & Kruger, R. (2008). Food variety and dietary diversity as indicators of the dietary adequacy and health status of an elderly population in Sharpeville, South Africa. Journal of Nutrition for the Elderly, 27(1–2), 101–133.

Swindale, A., & Bilinsky, P. (2006). Household dietary diversity score (HDDS) for measurement of household food access: Indicator guide. Food and Nutrition Technical Assistance Project, Academy for Educational Development.

United Nations. (1975). Report of the World Food Conference. United Nations.

Vellema, W., Desiere, S., & D’Haese, M. (2016). Verifying validity of the household dietary diversity score: An application of Rasch modelling. Food and Nutrition Bulletin, 37, 27–41.

Wang, W. C., & Wilson, M. (2005). Exploring local item dependence using a random effects facet model. Applied Psychological Measurement, 29(4), 296–318.

Webb, P., Coates, J., Frongillo, A., Rogers, B., Swindale, A., & Bilinsky, P. (2006). Measuring household food insecurity: Why it’s so important and yet so difficult to do. Journal of Nutrition, 136, 1404S–1408S.

Wunderlich, G. S., & Norwood, J. L. (2006). Food insecurity and hunger in the United States. National Academies Press.

Acknowledgements

The researchers acknowledge the Open Society Foundation for South Africa, the former Department of Statistics and Population Studies (University of Namibia), the African Food Security Urban Network (AFSUN), the Hungry Cities Partnership (HCP) and the Balsillie School of International Affairs (BSIA) for the secondary data that was used in this research.

Funding

Open access funding provided by University of Namibia.

Ethics declarations

Conflict of interests

The authors declare no conflicts of interest regarding the publication of this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Charamba, V., Kazembe, L.N. & Nickanor, N. Application of item response theory modelling to measure an aggregate food security access score. Food Sec. 15, 1383–1398 (2023). https://doi.org/10.1007/s12571-023-01388-y

DOI: https://doi.org/10.1007/s12571-023-01388-y