Abstract

We survey efforts that track food security in Africa using phone surveys during the COVID-19 pandemic. Phone surveys are concentrated in a few countries and mostly focus on a narrow theme. Only a few allow heterogeneous analyses across socioeconomic, spatial, and intertemporal dimensions across countries, leaving important issues inadequately enumerated. We recommend that the scientific community focus on countries (and regions and groups within countries) where the evidence base is thin, and that policymakers in less researched areas attract more research by improving their statistical capacity, openness, and governance.

1 Introduction

The COVID-19 pandemic and its countermeasures have shaped lives and livelihoods around the world, causing economic contractions (IFPRI, 2020), worsening poverty (Laborde et al., 2021) and food insecurity (Dasgupta & Robinson, 2022; Egger et al., 2021; Jaacks et al., 2021). Given their weak economic and health care systems and largely immunocompromised populations, African countries carry a particularly heavy burden in terms of COVID-19 induced welfare losses (Djankov & Panizza, 2020; IFPRI et al., 2020). At the same time, COVID-19 risks reinforcing pre-existing socioeconomic disparities within and across countries in the region (Nechifor et al., 2021; Poudel & Gopinath, 2021).

Empirical evidence on the scale and the nature of the impacts of the pandemic and its countermeasures, while growing, is quite limited, partly due to the lack of suitable, comparable, and timely micro-level data (Delius et al., 2020; Gourlay et al., 2021). This lack of data also stems from the way COVID-19 is transmitted person-to-person, which inhibits face-to-face survey data collection. To overcome this challenge, high-income countries have relied on real-time economic data as well as web-based surveys. In low-income countries in Africa, these options were not widely available, and data availability may even have worsened as the National Statistical Offices (NSOs) in these countries were hit particularly hard by the pandemic (UNDESA and World Bank, 2020)Footnote 1. Fortunately, the nascent expansion of mobile phone subscriptions as well as the learning experience from the 2014 Ebola outbreak in West Africa and the 2017 drought- and conflict-related food insecurity crisis in West and East Africa helped to deploy phone surveys quickly at the beginning of the ongoing pandemic (Gourlay et al., 2021; Hoogeveen & Pape, 2020).

The application of phone surveys involves several challenges. First, phone surveys impose constraints on the type and size of questions that can be included in the interviews. In order to limit respondent fatigue, interview questions need to be kept short and simple and answer choices limited (e.g., yes or no). Furthermore, interlinked and complex questions such as consumption modules are difficult to include in phone surveys (Hirvonen et al., 2021). While such a concern about respondent fatigue is not uncommon in surveys in general (Ambler et al., 2021), it is more pronounced in phone surveys (Abate et al., 2021). Second, contrary to face-to-face surveys, phone surveys do not allow enumerators to observe visual non-verbal cues from respondents. While rigorous training of enumerators and certain lead-in scripts and probes could help identify and reduce the problem, they do not fully address it (Dillon, 2012). Relatedly, the absence of in-person communication during phone interviews might make it difficult to build trust with the respondents, which may lead to deliberate misreporting, especially if the questions are sensitive (Dabalen et al., 2016).

There are also certain limitations that are more relevant to conducting phone surveys in Africa and in low-income settings in general. First, sampling bias is a concern since the survey can only be administered to respondents with working phones, and phone ownership varies systematically across and within countries based on sociodemographic characteristics (e.g. age, education and wealth status) and place of residence (rural vs. urban areas) (Dabalen et al., 2016; Dillon, 2012; Kühne et al., 2020). While the use of representative baseline survey data could reduce the bias, it does not fully eliminate it (Ambel et al., 2021). Another limitation of phone surveys in Africa relates to the availability of infrastructure, particularly electricity and mobile signal, and its systematic variation across locations, which is widespread on the continent (World Bank, 2009). Such a disparity among locations effectively creates a sampling problem by introducing bias, since the availability and quality of infrastructure are likely to be correlated with other important characteristics, such as urban proximity, availability and quality of public goods (e.g. road, health centers, water supply) and average wealth (Dillon, 2012).

Notwithstanding their shortcomings, phone surveys have proven useful and cost-effective in collecting data in remote and conflict areas and in circumstances where face-to-face data collection appears to be risky to the safety of the enumerators and the survey respondents (Dabalen et al., 2016; Delius et al., 2020; Hoogeveen & Pape, 2020; Sturges & Hanrahan, 2004). Due to this and following the onset of the ongoing pandemic, a large number of phone-based interviews are being conducted throughout the continent. However, we currently lack an overview of efforts to trace food security in Africa using phone surveys, risking duplication or omission of data collection efforts. We address this knowledge gap by reviewing all phone surveys tracking food security in Africa since the beginning of the pandemic, including our own phone survey called Life with Corona-Africa (LwC-Africa). We concentrate our review on five key issues, namely the topical, temporal, and geographic dimensions as well as geospatial coding and open access of the data.

It is true that phone surveys came to prominence in Africa due to the COVID-19 pandemic. However, their use might continue into the future as a standalone data collection model or in combination with face-to-face interviewing (Gourlay et al., 2021). The experience during the pandemic highlights that data such as those obtained through phone surveys have the potential to strengthen and modernize core data collection programs and be a key component of the national data systems (UNDESA and World Bank, 2020). Therefore, our review is helpful to highlight the broader picture of the size, the content, and the spatial and temporal distribution of the phone surveys as well as identify evidence gaps to inform future designs.

The rest of the paper is organized as follows. The next section first presents the data sources used in the paper and then describes the timeline, distribution, and contents of the phone surveys. Section 3 discusses the implications of the results. Section 4 concludes.

2 Data and results

2.1 Data sources

For our review, we searched for phone-based surveys on COVID-19 and food security in Africa since the beginning of the pandemic in four stepsFootnote 2. First, we searched international repositories for registered COVID-19 and food security-related surveys and projects on Africa: the central registry of the American Economic Association; the Economics Observatory (ECO) of the European Economic Association (EEA); and the RECOVR research hub of Innovations for Poverty Action (IPA). Second, we searched for mentions of phone surveys in blogs, news articles, policy briefs, and academic literature on the websites of Google Scholar, IPA, and ReliefWeb, combining the terms (“COVID 19” OR “COVID-19” OR CORONA OR coronavirus), “food security”, (“phone survey” OR “telephone survey”) for Africa, for the sub-regions, and for the individual countries. Third, and building on the findings of step two, we searched the websites of African national statistical offices and several international organizations (World Bank, FAO, WHO, and WFP) using the same search terms. Finally, we evaluated all identified phone surveys to compile our final census of phone-based surveys on COVID-19 and food security in Africa since the beginning of the pandemic.
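
As an illustration, the keyword combination used in the second and third steps can be written out as a boolean query string. The sketch below is only indicative: the function name `build_query`, the use of AND between term groups, and the example place names are assumptions made for this illustration, and the exact syntax accepted by each website's search box may differ.

```python
# Term groups taken from the search description above.
COVID_TERMS = ['"COVID 19"', '"COVID-19"', "CORONA", "coronavirus"]
SURVEY_TERMS = ['"phone survey"', '"telephone survey"']


def build_query(place: str) -> str:
    """Join alternatives within a group with OR and the groups with AND.

    Joining the groups with AND is an assumption of this sketch.
    """
    covid = "(" + " OR ".join(COVID_TERMS) + ")"
    survey = "(" + " OR ".join(SURVEY_TERMS) + ")"
    return " AND ".join([covid, '"food security"', survey, f'"{place}"'])


# One query per geographic level; the place names are examples only.
queries = [build_query(p) for p in ("Africa", "West Africa", "Kenya")]
```

Generating the query per place name keeps the continent-level, sub-regional, and country-level searches consistent with one another.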

To examine the correlates of phone survey intensity, we used several indicators extracted from multiple data sources, including the World Development Indicators (WDI) at https://data.worldbank.org/indicator, the Fragile States Index (FSI) generated and made available by the Fund for Peace (FFP) at https://fragilestatesindex.org/, and COVID-19 caseloads and deaths from https://ourworldindata.org.

2.2 Results

2.2.1 Description of phone surveys

Our search yielded 234 completed or ongoing phone surveys on COVID-19 in Africa as of November 15, 2021 (Table 1)Footnote 3. A large share of these (90, or 39%) are rapid surveillance surveys aimed at assessing knowledge and perceptions of coronavirus. Typically, these are cross-sectional and individual-level opinion surveys conducted at the onset of the pandemic across multiple countries in or including Africa. The leading organizations behind such surveys include Partnership for Evidence-based COVID-19 Response (PERC), 60 Decibels, GeoPoll, and FinMark Trust (Table 5 in the Appendix). The World Bank and WFP are two prominent organizations that have been collecting near real-time phone survey data across most of the countries in the continent. The World Bank capitalized on its pre-pandemic cooperation with national statistical offices (NSOs) to collect High Frequency Phone Surveys (HFPS) or Household Monitoring Surveys (HMS) in a large number of countries, including most countries in Africa, to inform a wide range of knowledge products (Gourlay et al., 2021)Footnote 4. To supplement inputs used in its global hunger monitoring system, the World Food Programme (WFP) conducts continuous phone-based food security monitoring through call centers. At the end of 2021, the system was already set up in several developing countries, including 26 African countries, to collect data on a rolling basis over a three-month periodFootnote 5. Other surveys include rural household surveys implemented by CGIAR Research Centers (e.g. IFPRI), surveys conducted by academic institutions (e.g., the University of Oxford and ETH Zürich), and others (e.g., IGZ/ISDC, the hosts of our study, LwC-Africa). Table 9 in the Appendix presents the complete list of all the phone surveys, including start time, sample size, number of survey rounds, and internet links.

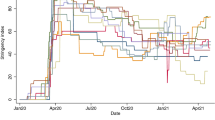

Panel A of Table 2 presents the main descriptive characteristics of the phone surveys. Typically, the surveys we identified are medium-sized (~ 1,000 respondents), cover both rural and urban areas (71%), run for 2 or 3 rounds, collect data for a short period of time (six months or less), and focus on a narrow theme. For a little more than half of the surveys, the unit of analysis is the individual (57%) and the same respondents were contacted repeatedly over time (i.e. panel) (53%). In the review, we included our own study, Life with Corona-Africa (LwC-Africa), which collects phone survey data in four African countries: Uganda, Tanzania, Sierra Leone, and Mozambique (see Table 6 in the Appendix for a brief description of the survey). About three-quarters of the surveys were started during the first four months of the pandemic, between March and June 2020. Over the subsequent periods, the number of ongoing surveys tailed off as existing surveys were phased out and few new surveys were started (Fig. 1).

Panel B of Table 2 presents a description of baseline data used for COVID-19 related phone surveys in Africa. Three major types of baseline survey data are distinguishable. The first type is one-off specialized surveys, typically undertaken before the pandemic for other studies (e.g. rural household surveys conducted by IFPRI) and later adapted to assess the impact of the pandemic. The second type is long-term specialized panel surveys. These are long-running panel surveys specialized by their thematic area or spatial focus (e.g. the Feed the Future (FtF); the Productive Safety Net Program (PSNP); the Young Lives surveys; Integrated Household Budget Survey). The third type is general-themed, long-running, and integrated household panel surveys. The Living Standards Measurement Study (LSMS), Household Integrated Panel Surveys (HIPS), Life Panel Surveys, and the National Income Dynamics Study (NIDS) are the most popular in this category.

When relevant baseline data are not available, two other types of sampling frames are commonly used for phone surveys in lower-income settings. The first is the use of lists of phone numbers, for example from a mobile network operator or contact details of beneficiaries of a program. Another option is to use phone numbers created through random digit dialing (RDD). These two methods jointly account for about 60% of the sampling frames used in phone surveys. Between the two, RDD is slightly more used (Table 2, Panel B).
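
To make the RDD idea concrete, the following is a minimal sketch of how candidate phone numbers might be generated: a known operator prefix is combined with randomly drawn subscriber digits. The function name `random_digit_dial`, the prefixes, and the number of digits are hypothetical placeholders; real numbering plans differ by country, and the reviewed surveys may have implemented RDD differently.

```python
import random


def random_digit_dial(prefixes, n_numbers, subscriber_digits=6, seed=None):
    """Draw n_numbers distinct numbers: a known prefix plus random digits.

    Prefixes and digit count are placeholders, not a real numbering plan.
    """
    rng = random.Random(seed)
    max_unique = len(set(prefixes)) * 10 ** subscriber_digits
    if n_numbers > max_unique:
        raise ValueError("more numbers requested than the number space allows")
    numbers = set()
    while len(numbers) < n_numbers:
        prefix = rng.choice(prefixes)
        suffix = "".join(str(rng.randrange(10)) for _ in range(subscriber_digits))
        numbers.add(prefix + suffix)  # the set silently drops duplicates
    return sorted(numbers)


# Hypothetical operator prefixes, for illustration only.
sample = random_digit_dial(["070", "071", "072"], n_numbers=5, seed=1)
```

Many generated numbers will be unassigned or inactive in practice, so a sketch like this would only be the first step before screening calls.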

2.2.2 Review of phone surveys

In this part, we concentrate our review on five key dimensions, namely the topical, temporal, and geographic dimensions as well as geospatial coding and open access of the data. These are dimensions that a survey should accommodate to adequately inform the pattern in the evolution and the socio-economic impacts of and responses to the pandemic (Gourlay et al., 2021; Kühne et al., 2020; Stojetz et al., 2022).

(A) Topical dimension

The identified phone surveys vary widely in terms of the topical areas covered. Table 3 shows that survey modules related to COVID-19 exposure and food (in)security are the two most common ones. COVID-19 exposure is typically assessed based on simple yes/no answers to such questions as “whether the respondents think they or somebody they know had COVID-19”. Another related module common among the phone surveys is on adherence to public health and social measures (PHSMs). Many of these elicit information on how closely respondents followed hygiene and social distancing measures such as hand washing, avoiding large gatherings, and wearing face masks.

Of the 234 phone surveys included in the review, 231 (98.7%) include some indicators of food security, such as changes in income or access to the food market due to the pandemic.Footnote 6 While most of these questions provide useful insight, not all of them reflect real changes in food security (Cafiero et al., 2018). Table 3 shows that only 66% of all surveys contain standardized modules on food security such as the Food Insecurity Experience Scale (FIES), Food Consumption Score (FCS), or the number of months of food shortage (food gap). Access to food, or the lack thereof, is the most commonly used food security module (97%), followed by food gap (60%). FCS and FIES are less frequently used, perhaps because they require adding a relatively large number of questions (cf. FCS) or they involve questions that are less straightforward or require extensive enumerator training or monitoring (cf. FIES). The share of surveys that include multiple food security measures is even lower (34%).

Other commonly surveyed welfare measures include changes in employment status (66%), income changes (79%), access to services such as drinking water and health services (59%) and coping mechanisms (74%). Less than a quarter of surveys included mental health questions. This is despite the significant increase in mental health issues since the onset of the pandemic (Abreu et al., 2021; Brülhart et al., 2021). Furthermore, Table 3 shows that the surveys are limited in terms of comprehensiveness. While a clearer understanding of the pandemic requires survey data that cover multiple welfare and behavioral dimensions, more than half of the phone surveys we reviewed mostly focus on a narrow theme (e.g. only one dimension of food security or only the health impact of COVID-19 exposure).

(B) Temporal dimension

Given its comprehensive nature, the full impact of the pandemic might not be apparent in the short term based on one-shot surveys (IFPRI, 2020). To be more useful for research, phone surveys need to be collected throughout the pandemic, covering periods of lockdowns and infection peaks and allowing comparison before, during, and after. However, Table 2 indicates that about half of the phone surveys are cross-sectional and hence are less useful for assessing the evolution of, the responses to, and the socioeconomic impacts of the pandemic over time. Even when the surveys are repeated, they typically do not last more than 3 rounds. The average number of survey rounds is 2.5. For about 63% of the surveys, the duration of the survey (the number of months between its start and end) is less than 6 months. Only 12% of the surveys cover more than a year (Table 2).
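
The duration measure used here (the number of months between the start and end of a survey) can be sketched as follows. The survey names and dates are hypothetical, counting whole calendar months is an assumption about how partial months are handled, and the middle band "6 to 12 months" is our own label for the cases between the two thresholds reported above.

```python
from datetime import date


def duration_months(start: date, end: date) -> int:
    """Whole calendar months between the start and end of a survey."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def duration_band(months: int) -> str:
    """Group durations using the two thresholds mentioned in the text."""
    if months < 6:
        return "less than 6 months"
    if months <= 12:
        return "6 to 12 months"  # label assumed for this sketch
    return "more than a year"


# Hypothetical survey dates, for illustration only.
surveys = {
    "survey_a": (date(2020, 4, 1), date(2020, 7, 1)),
    "survey_b": (date(2020, 5, 1), date(2021, 8, 1)),
}
bands = {
    name: duration_band(duration_months(start, end))
    for name, (start, end) in surveys.items()
}
```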

Furthermore, Panel A of Fig. 2 shows that, after an initial rise, the number of ongoing surveys has declined steadily. Between March and June 2020, the number of surveys was growing, and in June 2020, 120 different surveys were in progress on the continent. Since June 2020, the number has declined persistently, reaching about 30 by the end of the year. Panel B further shows that the number of ongoing surveys has declined regardless of the progression of the pandemic.

(C) Geographic dimension

The COVID-19 pandemic and the subsequent lockdowns and social distancing measures have largely halted in-person surveys. As a result, following the onset of the pandemic, phone-based surveys became the main, often the only, alternative source of data in most countries in Africa (Gourlay et al., 2021). Given the uncertainty that accompanied the pandemic, phone surveys appeared critical to fully understand, manage and mitigate the human, social and economic effects of the shock. However, the distribution of the phone surveys is highly uneven. Kenya is the most surveyed country in the continent accounting for 11.5% of all phone surveys, amounting to more than 15.3% of all interviews (Table 4). The top five surveyed countries — Kenya, Ghana, Uganda, Ethiopia, and South Africa — account for more than 35% of all surveys and more than 40% of interviews, while accounting for only about 20% of the continent’s population. Other frequently surveyed countries include Nigeria, Malawi, Zambia, and Tanzania. These nine countries account for more than half of the phone surveys related to COVID-19 and food security. The picture remains the same regardless of the measure of survey intensity used – number of surveys, survey rounds, number of respondents, or number of interviews conducted (Table 4).

What may explain the uneven distribution of phone surveys across African countries? To answer this, we investigated the simple bivariate correlations between survey intensity and factors that are broad indicators of the perceived costs or ease of conducting research. These factors include population size, statistical capacity score (SCS), official development assistance (ODA), Fragile States Index (FSI), COVID-19 caseloads and deaths, and the use of English as an official language. We identified these factors from previous literature that looked at the distribution of research across African countries and beyond (Das et al., 2013; Porteous, 2020; Robinson et al., 2006).

We derived data on these indicators from multiple sources. Data on population size, SCS, and ODA are extracted from the World Development Indicators at https://data.worldbank.org/indicator. SCS is a composite score on a scale of 0-100 assessing the capacity of a country’s statistical system on methodology, data sources, and periodicity and timelinessFootnote 7. ODA consists of disbursements of loans made on concessional terms and grants by official agencies of the members of the Development Assistance Committee (DAC), multilateral institutions, and non-DAC countries. FSI is generated and made available by the Fund for Peace (FFP). It summarizes the economic and political instability of countries based on 12 conflict risk indicatorsFootnote 8. COVID-19 caseloads and deaths refer to the number of COVID-related cases/deaths corresponding to the first three months of the pandemic, and are extracted from: https://ourworldindata.org.

Generally, one might expect differences in resources or COVID cases and deaths to drive survey locations. However, the pairwise correlation results presented in Table 8 in the Appendix indicate that this is not the case (also see Fig. 3, top left panel)Footnote 9. Instead, survey location choices are related to the size of the country (population size) and the availability of pre-existing data or indeed statistical capacity (SCS) (bottom left panel). Figure 3 also shows that phone surveys are less (more) correlated with the fragility index (net official development assistance, ODA) (top right panel). Finally, the bottom right panel shows that phone surveys are more prevalent in countries with English as an official language, which is consistent with other studies (Porteous, 2020).

Fig. 3 Correlates of phone survey intensity in Africa.

Notes: The fit line is from a linear regression of the number of interviews (log) on population (log), statistical capacity score (SCS), net official development assistance (ODA) (log), fragile state index (FSI), and number of COVID related cases/deaths.
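
For readers who wish to reproduce this kind of bivariate analysis, the sketch below computes a Pearson correlation and an ordinary least squares fit line between log interviews and log population. The country-level values are made up for illustration and are not taken from the reviewed surveys; the helper names `pearson` and `fit_line` are our own, and treating each panel as a separate bivariate regression is an assumption.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def fit_line(x, y):
    """OLS slope and intercept for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    slope = cov / var_x
    return slope, mean_y - slope * mean_x


# Made-up country-level values, NOT data from the review.
interviews = [1_200, 3_500, 20_000, 45_000]
population = [2e6, 5e6, 30e6, 50e6]

log_interviews = [math.log(v) for v in interviews]
log_population = [math.log(v) for v in population]

r = pearson(log_population, log_interviews)
slope, intercept = fit_line(log_population, log_interviews)
```

With both variables in logs, the slope can be read as an elasticity: the percentage change in interviews associated with a one percent change in population.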

The use of representative and up-to-date pre-pandemic baseline data as a sampling frame in phone surveys is vital to correct the biases associated with the sample selection process (Ambel et al., 2021). In line with this, 39% of all phone surveys and 73% of panel surveys in our review used pre-pandemic face-to-face survey datasets as a baseline. When used, the preferred baseline data are large-scale, representative, long-running, and integrated panel surveys (Panel B, Table 2). However, since the pre-pandemic distribution of large-scale datasets across African countries is highly uneven (Porteous, 2020), this has led to a significant disparity in data collected during the pandemic as shown above.

(D) Geospatial coding

One of the downsides of using a phone survey, compared to alternative ways of data collection, is that it allows only a limited set of questions to be included. Fortunately, a large amount of useful, open-access information (e.g. disease statistics, government measures, public goods, price trends, conflicts, and weather data) can be extracted and spatially and temporally matched with survey data. To take advantage of this, some phone surveys either include location information or use baseline data that already collected GPS information. However, this is not widespread. In our review, only 39% of the surveys include such information (Table 3).
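
A minimal sketch of such spatial and temporal matching is given below, assuming that each survey record carries a region identifier and an interview month and that the external source is keyed in the same way. All field names, region codes, and values are hypothetical; surveys with GPS coordinates would need a proper spatial join instead of this simple key lookup.

```python
# Hypothetical survey records with a region code and an interview month.
responses = [
    {"respondent": 1, "region": "R1", "month": "2021-03", "food_insecure": True},
    {"respondent": 2, "region": "R2", "month": "2021-03", "food_insecure": False},
    {"respondent": 3, "region": "R1", "month": "2021-04", "food_insecure": False},
]

# Hypothetical external data keyed by (region, month), e.g. rainfall in mm.
rainfall_mm = {("R1", "2021-03"): 80.0, ("R2", "2021-03"): 12.5}


def attach_external(records, external, name):
    """Return copies of records with the external value for (region, month).

    Records without a match get None, so unmatched cases stay visible.
    """
    merged = []
    for rec in records:
        key = (rec["region"], rec["month"])
        merged.append({**rec, name: external.get(key)})
    return merged


matched = attach_external(responses, rainfall_mm, "rainfall_mm")
```

Keeping unmatched records with a missing value, rather than dropping them, makes it easier to report how much of the sample could actually be linked.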

(E) Open access of the data

Another desirable, yet largely missing, quality of phone surveys is the availability of the resulting data as open access for public use. Open access enables widespread use of the data and also allows pooling across surveys in cross-country analyses. Nevertheless, only 24% of the phone surveys are currently available for public use (Table 2). Eight other surveys (~ 3.42%) are not yet open access but indicated that the corresponding data will become open access in the future. For the remaining surveys, we were unable to find information to determine whether they will become open access. The most popular open access data are from the World Bank data portal, based on which the Bank creates harmonized indicators and disseminates them through its High-Frequency Monitoring Dashboard.Footnote 10

To summarize and further elaborate on the above five dimensions, we generated an index representing the pooling potential of the reviewed phone surveys based on 14 selected survey and questionnaire features, each of which is coded as a binary variable that takes a value of 1 if desirable, 0 otherwise. The index, thus, ranges from zero to 14. The selected 14 survey and questionnaire features are: (i) survey is panel; (ii) survey involves continuous data collection; (iii) survey data available for public use; (iv) survey sample is large (greater than 1000 respondents); (v) questionnaire includes standardized food security questions; (vi) questionnaire includes change in employment; (vii) questionnaire includes change in income; (viii) questionnaire includes access to services; (ix) questionnaire includes mental health questions; (x) questionnaire includes coping mechanisms; (xi) survey allows merging with external data; (xii) survey uses pre-crisis baseline data; (xiii) pre-crisis baseline is representative; and (xiv) survey covers both urban and rural areas.
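
The construction of the index can be sketched as a simple sum of the 14 binary indicators. The short feature keys and the example survey below are labels chosen for this illustration only; treating a missing feature as 0 is likewise an assumption.

```python
# The 14 binary features listed above; key names are chosen for this sketch.
FEATURES = [
    "panel", "continuous_collection", "public_data", "large_sample",
    "standardized_food_security", "employment_change", "income_change",
    "access_to_services", "mental_health", "coping_mechanisms",
    "mergeable_with_external_data", "pre_crisis_baseline",
    "representative_baseline", "urban_and_rural",
]


def pooling_index(survey: dict) -> int:
    """Sum of the 14 binary indicators; a missing feature counts as 0."""
    return sum(1 for feature in FEATURES if survey.get(feature, False))


# Hypothetical survey, for illustration only.
example = {
    "panel": True,
    "large_sample": True,
    "income_change": True,
    "urban_and_rural": True,
}
score = pooling_index(example)
```

In this hypothetical case the survey scores 4 out of 14, because only four of the desirable features are present.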

In our study, this index takes an average value of 6.8 (out of a possible 14 points), suggesting that many studies do not fulfill the requirements that would allow pooling across surveys (Fig. 4). Furthermore, the right-hand graph of Fig. 4 indicates that this quality of phone surveys is positively correlated with the number of interviews, suggesting that less studied countries are disadvantaged not only in terms of survey intensity but also in terms of pooling potential to study cross-regional and cross-country issues.

3 Discussion

Our review of phone surveys in Africa during the COVID-19 pandemic provides several interesting insights. First, we find that the distribution of phone surveys in the continent is highly uneven, and systematically so. The variation across countries is strongly related to factors that are broad indicators of the perceived costs or ease of research (population size, inflow of aid, statistical capacity, and fragility of economies) rather than the potential benefits to the communities (e.g. Coronavirus incidence). Since the distribution of data was already uneven before the pandemic, and since the design of quality phone surveys benefits from the availability of representative and up-to-date pre-pandemic data (Ambel et al., 2021), COVID-19 may have perpetuated (or even exacerbated) the existing uneven distribution of data between countries in Africa. Since the uneven distribution of data implies an uneven distribution of research (Brück et al., 2014), which in turn translates into an uneven evidence base for policy-makers (Porteous, 2020), we call on the scientific community to focus further research on locations where the evidence base is thin. Policymakers in less researched areas could also help attract more research by improving their statistical capacity, openness, and governance.

Our review indicates that the current intensity of data collection is strongly influenced by past scores of statistical capacity. Indeed, countries that were able to rapidly launch phone surveys at the beginning of the pandemic were those with long-term and representative pre-pandemic data that serve as a sampling frame, as well as with up-to-date information and communication technology (ICT) infrastructure for the implementation of longitudinal household surveys (Gourlay et al., 2021; UNDESA and World Bank, 2020). This suggests that countries should invest in ICT infrastructure, particularly for National Statistical Offices (NSOs), to provide them with reliable internet access and computer hardware and software for data collection, storage, and processing.

Second, our results demonstrate that the existing surveys mostly focus on a narrow theme and only some of them allow for heterogeneous analysis across socioeconomic, spatial, and intertemporal dimensions. Most surveys focus on food security (even if not measured consistently), employment, and income losses. Non-economic aspects such as the interaction with or the impact of the pandemic on mental health, social capital, trust, governance, and intra-household relationships are not fully accommodated. As these are important correlates of household welfare and are significantly affected by the pandemic (Brooks et al., 2020; Ravens-Sieberer et al., 2021), their exclusion from surveys could lead to an underestimation of the impact of the pandemic.

Third, most of the surveys were designed as short-term projects. While it has so far been natural to focus on the pandemic’s short-term impact, it is also critical to monitor how the pandemic unfolds and assess its implications for medium- and long-term food security to inform policy decisions. For instance, the large-scale countermeasures implemented in most countries have changed patterns in education attendance, consumption, and household labor allocation (IFPRI, 2020). While the short-term effects of these changes are profound, they are also likely to determine the speed of recovery and the long-term growth trajectory of affected households and countries. Furthermore, it is not yet clear whether and how these COVID-shaped trajectories may interact with existing vulnerabilities such as old age, household size, income sources, or poverty.

Fourth, only 24% of the phone surveys are available for public use. This constrains widespread distribution and use of the data to support research-based policy solutions. Even when the data are available, the idiosyncratic nature of many surveys prevents meaningful pooling of surveys across Africa, closing an avenue of learning open to standardized surveys like DHS, LSMS, or MICS. Furthermore, it is relatively uncommon for researchers and statistical offices in Africa to register their surveys and projects in international registries. This reduces the potential synergies among different projects from harmonization of survey instruments. A widespread registry of surveys would also help to identify and draw attention to relatively understudied areas and topics.

Finally, due to a lack of geospatial information or alternative location information, most surveys are not suitable for matching with secondary sources of information on, inter alia, disease statistics, government measures, price trends, conflicts, or weather data, reducing the scope for multidisciplinary research around the pandemic.

To address some of the shortcomings in the extant surveys, we designed the Life with Corona-Africa (LwC-Africa) survey. LwC-Africa is based in four African countries (Uganda, Tanzania, Sierra Leone, and Mozambique) and builds on and complements the global LwC online survey (https://lifewithcorona.org/). The survey is based on country-representative samples and allows statistically meaningful and valid comparisons between and within countries across different socio-demographic groups (e.g., age, gender, and place of residence). The survey follows a stratified random sampling method and interviewed 500 respondents per month per country over 12 months in 2021. The questionnaire covers COVID exposure and experiences on a wide range of topics, including economic, health, social, psychological, and political issues. Specifically, the questionnaire contains the following six modules: (i) household demographic characteristics; (ii) Coronavirus exposure; (iii) economic well-being, financial insecurity, coping mechanisms, and external support; (iv) social capital; (v) food and nutrition security; and (vi) mental health and wellbeing. It also allows geospatial matching with secondary data sources. We will make the data available for research and public use upon publication of this article.

4 Conclusion

The COVID-19 pandemic is a global crisis with multiple interlinked dimensions, including health, economic, social, and political consequences. Yet, the effects differ significantly across and within countries, over time, and among individuals based on sociodemographic characteristics and place of residence. Therefore, in order to clearly understand the evolution and the socio-economic impacts of and responses to the pandemic, surveys would benefit from collecting data across multiple countries and multiple topics, continuously throughout the pandemic, and from allowing matching with external datasets, such as disease statistics or information on countermeasures.

However, our review indicates that phone surveys in Africa are concentrated in a few countries, that most focus on a narrow theme and a single country, and that only a few allow heterogeneous analyses across socioeconomic, spatial, and intertemporal dimensions. We therefore highlight the importance for the scientific community of focusing its research much more on countries (and regions and groups within countries), as well as topics, where the evidence base is thin. Longer-term studies with more continuous data collection would help to understand the complex effects that COVID-19 will have on food security specifically and on societies in general in Africa. More geo-coding and more standardized study protocols would create synergies between surveys, akin to large-scale data programs like DHS, LSMS, and MICS. Policymakers can also attract more research on food security in less researched areas by improving their statistical capacity, openness, and governance.

Notes

The UNDESA report indicates that in May 2020, 97% of NSOs in sub-Saharan African countries were not able to meet international reporting requirements due to the pandemic, as opposed to 38.5% of NSOs in high-income countries.

Phone surveys generally take three forms. The first is Computer Assisted Telephone Interviews (CATI), where interview responses are recorded on a computer based on an interactive survey questionnaire. The second is Interactive Voice Response (IVR), where data are collected using automated, prerecorded questions. The third is text message-based surveys (SMS), where questions are sent and responses are collected using text messages. Most COVID-19 monitoring surveys in Africa used the CATI mode, which is more expensive than the IVR and SMS modes but allows greater depth and breadth of data gathering (Glazerman et al., 2020).

Our review includes only household- and individual-level surveys. A major excluded category is firm- or company-level surveys. All African countries are included in the search. However, survey descriptions from eight countries (Burundi, Cabo Verde, Comoros, Eritrea, Eswatini (formerly named Swaziland), Equatorial Guinea, Guinea Bissau, and Lesotho) are not included in the paper because we did not find any phone survey information from these countries.

The World Bank maintains a “COVID-19 High-Frequency Monitoring Dashboard” to trace and update surveys and to generate and regularly disseminate harmonized indicators for public consumption. The dashboard is accessible at http://bit.ly/wbcovid19dashboard.

Details on the methodology, including the list of surveyed countries and daily and weekly COVID-19 and hunger snapshots, are accessible at https://hungermap.wfp.org/.

The other three surveys relate to learning loss during the pandemic, countries’ preparedness and response capacities, and the collective action of school leaders during the pandemic.

Table 7 in the Appendix presents descriptive statistics of key demographic, economic, and COVID-19 related indicators across the countries in the continent. The results show notable differences among the included countries. However, these differences do not seem to drive the choice of survey locations (see Table 8 in the Appendix).

The dashboard is accessible through: http://bit.ly/wbcovid19dashboard.

References

Abate, G. T., de Brauw, A., Hirvonen, K., & Wolle, A. (2021). Measuring Consumption over the Phone: Evidence from a Survey Experiment in Urban Ethiopia (IFPRI Discussion Paper 02087, Issue December).

Abreu, L., Koebach, A., Díaz, O., Carleial, S., Hoeffler, A., Stojetz, W., Freudenreich, H., Justino, P., & Brück, T. (2021). Life With Corona: Increased Gender Differences in Aggression and Depression Symptoms Due to the COVID-19 Pandemic Burden in Germany. https://doi.org/10.3389/fpsyg.2021.689396

Ambel, A., McGee, K., & Tsegay, A. (2021). Reducing Bias in Phone Survey Samples Effectiveness of Reweighting Techniques Using Face-to-Face Surveys as Frames in Four African Countries (Policy Research Working Paper 9676; Issue May).

Ambler, K., Herskowitz, S., & Maredia, M. K. (2021). Are we done yet? Response fatigue and rural livelihoods. Journal of Development Economics, 153(February), 102736. https://doi.org/10.1016/j.jdeveco.2021.102736

Brooks, S. K., Webster, R. K., Smith, L. E., Woodland, L., Wessely, S., Greenberg, N., & Rubin, G. J. (2020). The psychological impact of quarantine and how to reduce it: rapid review of the evidence. The Lancet, 395(10227), 912–920. https://doi.org/10.1016/S0140-6736(20)30460-8

Brück, T., Esenaliev, D., Kroeger, A., Kudebayeva, A., Mirkasimov, B., & Steiner, S. (2014). Household survey data for research on well-being and behavior in Central Asia. Journal of Comparative Economics, 42(3), 819–835. https://doi.org/10.1016/j.jce.2013.02.003

Brülhart, M., Klotzbücher, V., Lalive, R., & Reich, S. K. (2021). Mental health concerns during the COVID-19 pandemic as revealed by helpline calls. Nature, 600, 121. https://doi.org/10.1038/s41586-021-04099-6

Cafiero, C., Viviani, S., & Nord, M. (2018). Food security measurement in a global context: The food insecurity experience scale. Measurement, 116(October), 146–152. https://doi.org/10.1016/j.measurement.2017.10.065

Dabalen, A., Etang, A., Hoogeveen, J., Mushi, E., Schipper, Y., & von Engelhardt, J. (2016). Mobile Phone Panel Surveys in Developing Countries: A Practical Guide for Microdata Collection. The World Bank. https://doi.org/10.1596/978-1-4648-0904-0

Das, J., Do, Q. T., Shaines, K., & Srikant, S. (2013). U.S. and them: The geography of academic research. Journal of Development Economics, 105(1), 112–130. https://doi.org/10.1016/j.jdeveco.2013.07.010

Dasgupta, S., & Robinson, E. J. Z. (2022). Impact of COVID-19 on food insecurity using multiple waves of high frequency household surveys. Scientific Reports, 12(1), 1–15. https://doi.org/10.1038/s41598-022-05664-3

Delius, A., Himelein, K., & Pape, U. J. (2020). Conducting Rapid Response Phone Surveys (RRPS) to Fill Data Gaps. Poverty and Equity Notes. https://openknowledge.worldbank.org/handle/10986/34300

Dillon, B. (2012). Using mobile phones to collect panel data in developing countries. Journal of International Development, 24, 518–527. https://doi.org/10.1002/jid

Djankov, S., & Panizza, U. (Eds.). (2020). COVID-19 in Developing Economies. Centre for Economic Policy Research.

Egger, D., Miguel, E., Warren, S. S., Shenoy, A., Collins, E., Karlan, D., Parkerson, D., Mobarak, A. M., Fink, G., Udry, C., Walker, M., Haushofer, J., Larreboure, M., Lopez-Pena, S. A., Benhachmi, P., Humphreys, S., Lowe, M., Meriggi, L., Wabwire, N. F., Davis, A., & Vernot, C. A. (2021). Falling Living Standards during the COVID-19 Crisis: Quantitative Evidence from Nine Developing Countries. Science Advances, April.

Glazerman, S., Rosenbaum, M., Sandino, R., & Shaughnessy, L. (2020). Remote Surveying in a Pandemic: Handbook Executive Summary. https://www.poverty-action.org/publication/remote-surveying-pandemic-handbook

Gourlay, S., Kilic, T., Martuscelli, A., Wollburg, P., & Zezza, A. (2021). Viewpoint: High-frequency phone surveys on COVID-19: Good practices, open questions. Food Policy, 105, 102153. https://doi.org/10.1016/j.foodpol.2021.102153

Hirvonen, K., de Brauw, A., & Abate, G. T. (2021). Food Consumption and Food Security during the COVID-19 Pandemic in Addis Ababa. American Journal of Agricultural Economics, 103(3), 772–789. https://doi.org/10.1111/ajae.12206

Hoogeveen, J., & Pape, U. (Eds.). (2020). Data collection in fragile states: Innovations from Africa and beyond. Palgrave Macmillan. https://doi.org/10.1007/978-3-030-25120-8

IFPRI. (2020). In J. Swinnen, & J. McDermott (Eds.), Covid19 & Global Food Security. International Food Policy Research Institute (IFPRI).

Jaacks, L. M., Veluguri, D., Serupally, R., Roy, A., Prabhakaran, P., & Ramanjaneyulu, G. (2021). Impact of the COVID-19 pandemic on agricultural production, livelihoods, and food security in India: baseline results of a phone survey. Food Security, 13(5), 1323–1339. https://doi.org/10.1007/s12571-021-01164-w

Kühne, S., Kroh, M., Liebig, S., & Zinn, S. (2020). The Need for Household Panel Surveys in Times of Crisis: The Case of SOEP-CoV. Survey Research Methods.

Laborde, D., Martin, W., & Vos, R. (2021). Impacts of COVID-19 on global poverty, food security, and diets: Insights from global model scenario analysis. Agricultural Economics. https://doi.org/10.1111/agec.12624

Nechifor, V., Ramos, M. P., Ferrari, E., Laichena, J., Kihiu, E., Omanyo, D., Musamali, R., & Kiriga, B. (2021). Food security and welfare changes under COVID-19 in Sub-Saharan Africa: Impacts and responses in Kenya. Global Food Security, 28(January), 100514. https://doi.org/10.1016/j.gfs.2021.100514

Porteous, O. (2020). Research Deserts and Oases: Evidence from 27 Thousand Economics Journal Articles on Africa (Working Paper).

Poudel, D., & Gopinath, M. (2021). Exploring the disparity in global food security indicators. Global Food Security, 29, 100549. https://doi.org/10.1016/j.gfs.2021.100549

Ravens-Sieberer, U., Kaman, A., Erhart, M., Devine, J., Schlack, R., & Otto, C. (2021). Impact of the COVID-19 pandemic on quality of life and mental health in children and adolescents in Germany. European Child & Adolescent Psychiatry, 1, 3. https://doi.org/10.1007/s00787-021-01726-5

Robinson, M. D., Hartley, J. E., & Schneider, P. H. (2006). Which countries are studied most by economists? An examination of the regional distribution of economic research. Kyklos, 59(4), 611–626. https://doi.org/10.1111/j.1467-6435.2006.00352.x

Stojetz, W., Ferguson, N. T. N., Baliki, G., Botía, O. D., Elfes, J., Esenaliev, D., Freudenreich, H., Koebach, A., de Lopes, L., Peitz, L., Todua, A., Schreiner, M., Hoeffler, A., Justino, P., & Brück, T. (2022). The life with corona survey. Social Science & Medicine, 306(May), 115109. https://doi.org/10.1016/j.socscimed.2022.115109

Sturges, J. E., & Hanrahan, K. J. (2004). Comparing telephone and face-to-face qualitative interviewing: a research note. Qualitative Research, 2, 991–994.

UNDESA and World Bank. (2020). Monitoring the state of statistical operations under the COVID-19 Pandemic: Highlights from a global COVID-19 survey of National Statistical Offices (Issue August).

World Bank (2009). World Development Report: Reshaping economic geography. The World Bank.

World Bank (2022). World development indicators. https://databank.worldbank.org/source/world-development-indicators

Acknowledgements

We are grateful to Ghassan Baliki, the Editors, and two anonymous reviewers for their constructive comments and suggestions that have improved the manuscript. All errors are the sole responsibility of the authors.

Funding

Open Access funding enabled and organized by Projekt DEAL. The Life with Corona Africa (LwC-A) project received financial support from the German Federal Ministry of Education and Research (BMBF) - grant number: 01KI20533A.

Ethics declarations

Conflict of interest

The authors declared that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Highlights

• Following the onset of COVID-19, phone surveys have become popular.

• Most surveys inadequately track the full effects of COVID-19 on food security.

• Surveys need to be broader in topical, geographical and intertemporal dimensions.

• Life with Corona - Africa, our survey, addresses the highlighted gaps.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Brück, T., Regassa, M.D. Usefulness and misrepresentation of phone surveys on COVID-19 and food security in Africa. Food Sec. 15, 423–453 (2023). https://doi.org/10.1007/s12571-022-01330-8

DOI: https://doi.org/10.1007/s12571-022-01330-8