Abstract

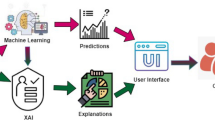

Artificial intelligence (AI) systems are increasingly used in healthcare applications, although some challenges must still be overcome to make them fully trustworthy and compliant with modern regulations and societal needs. First of all, sensitive health data, essential to train AI systems, are typically stored and managed in several separate medical centers and cannot be shared due to privacy constraints, thus hindering the use of all available information in learning models. Further, transparency and explainability of such systems are becoming increasingly urgent, especially at a time when “opaque” or “black-box” models are commonly used. Recently, technological and algorithmic solutions to these challenges have been investigated: on the one hand, federated learning (FL) has been proposed as a paradigm for collaborative model training among multiple parties without any disclosure of private raw data; on the other hand, research on eXplainable AI (XAI) aims to enhance the explainability of AI systems, either through interpretable by-design approaches or post-hoc explanation techniques. In this paper, we focus on a healthcare case study, namely predicting the progression of Parkinson’s disease, and assume that raw data originate from different medical centers and that data collection for centralized training is precluded due to privacy limitations. We aim to investigate how FL of XAI models can achieve a good level of both accuracy and trustworthiness. Cognitive and biologically inspired approaches are adopted in our analysis: FL of an interpretable by-design fuzzy rule-based system and FL of a neural network explained using a federated version of the SHAP post-hoc explanation technique. We analyze the accuracy, interpretability, and explainability of the two approaches, also varying the degree of heterogeneity across several data distribution scenarios.
Although the neural network is generally more accurate, the results show that the fuzzy rule-based system achieves competitive performance in the federated setting and presents desirable properties in terms of interpretability and transparency.


Introduction and Motivations

The extensive reliance on artificial intelligence (AI) and machine learning (ML) tools in the healthcare sector poses significant challenges, especially concerning trust. Any AI system must meet the requirements of robustness, fairness, and transparency throughout its whole life cycle. Furthermore, sensitive health-related data hold an intrinsic value and are a lucrative target for cyber attacks.

The concept of trustworthy AI has recently been considered by government entities as well. The European Union, for example, is at the forefront of AI regulation, as witnessed by the proposal of the “AI Act” (2021; Footnote 1). The AI Act is often referred to as the first law on AI and is conceived to introduce a common regulatory and legal framework for AI. The European Commission had previously promoted the definition of the “Ethics guidelines for trustworthy AI” [1], which identify lawfulness, ethics, and robustness as key pillars of trustworthiness and describe the requirements for an AI system to be deemed trustworthy. The ethical aspects are pivotal in the healthcare domain given the sensitive nature of patient data, whose disclosure poses serious risks. For example, discrimination based on such data can occur in the insurance field: insurance companies could decide to charge different fees depending on an individual's health status. Likewise, ensuring non-discrimination in financial services is nowadays perceived as an important matter, as witnessed, for example, by the regulations on the “right to be forgotten” for cancer survivors [2]. Finally, special attention should be paid to specific domains (e.g., mental health), due to the stigma attached to some types of illness.

In the pursuit of trustworthiness, data privacy and transparency emerge as pivotal enablers, especially in the healthcare domain. Data privacy is considered an invaluable right. However, it clashes to some extent with the creation of accurate ML models, which, to date, require large amounts of data in their training phase. In a common scenario, many different entities (be they individuals, medical centers, or hospitals) each hold limited amounts of data and are often reluctant to share their assets and sensitive information with other parties. The processes of data mining and knowledge extraction are therefore hampered by the infeasibility of collecting data for centralized processing. The requirement of transparency encompasses two aspects: the traceability of the learning process, starting from the data gathering phase, and the ability to comprehend the structure and the functioning of the ML model itself. The latter challenge is the central focus of a branch of AI named Explainable AI (XAI) [3,4,5,6]. The right to explanation is explicitly mentioned both in the “Ethics guidelines for trustworthy AI” [1], “[...] AI systems and their decisions should be explained in a manner adapted to the stakeholder concerned”, and in Recital 71 of the General Data Protection Regulation (GDPR) [2], “[...] the processing should be subject to suitable safeguards, which should include specific information to the data subject and the right to obtain human intervention, to express his or her point of view, to obtain an explanation of the decision reached after such assessment and to challenge the decision.” Indeed, the ability to understand the inner working of an AI system represents a cornerstone of trust and holds particular significance in high-stakes applications in the healthcare domain.

In this work, we embrace the challenge of enhancing the trustworthiness of AI systems in medicine by investigating technical enablers for the requirements of data privacy and explainability.

Data access limitations have prompted the development of new paradigms for training ML models, including federated learning (FL) [7, 8]. These limitations are driven by privacy requirements and by the need to prevent ethical risks associated with the disclosure of sensitive data. FL enables multiple parties to collaboratively train an ML model without any disclosure of private raw data. Essentially, a shared global model is learned through proper aggregation of locally computed updates from remote data owners, thus removing the need to centralize data for training purposes.

The requirement of explainability is typically addressed through two main categories of approaches [3, 4]: the exploitation of interpretable by-design models and the adoption of post-hoc explainability techniques. Interpretability and transparency are inherent properties of a model. Interpretability refers to the ability to understand how decisions are taken, while transparency refers to the ability to understand the structure of the model itself. Post-hoc explanation techniques, instead, typically aim to explain why a model provides a given decision. It follows that an interpretable model is also explainable. The property of interpretability is generally attributed to models such as decision trees (DTs) and rule-based systems (RBSs): in fact, they consist of (or can be traced back to) collections of “IF antecedent THEN consequent” rules. As a consequence, their inference process is highly understandable. Understandability can be defined as “the characteristic of a model to make a human understand its function (that is, how the model works) without any need for explaining its internal structure or the algorithmic means by which the model processes data internally” [3]. It is worth emphasizing that the concept of understandability is strongly related to the targeted audience in terms of their a-priori knowledge and cognitive skills. For example, a rule-based inference requires familiarity with logic. It may also require adequate training, depending on the specific implementation adopted in the antecedent and consequent parts of the rules.

Post-hoc techniques are applied to models referred to as opaque or “black boxes,” such as Neural Networks (NNs) and ensemble models, to explain their outcomes. The vast majority of existing post-hoc methods can be roughly ascribed to the following categories, which emulate different nuances of human reasoning:

- feature importance explanations;
- rule-based explanations;
- prototype explanations;
- contrastive/counterfactual explanations;
- textual or visual explanations [3, 9].

Notably, since the field of research is constantly evolving, the list should not be considered exhaustive. In addition, post-hoc strategies can be specifically tailored to different kinds of data (e.g., images or text) and to the specific models involved [9]. In the context of XAI, a further distinction is made between local and global explanations. Local explanations refer to the inference process and focus on how/why the decision is taken for any single input instance. Global explanations refer to structural properties of the models (thus pertaining to the concept of transparency) or to aggregated information computed over the entire dataset.

The awareness of the importance of explainability and privacy preservation has greatly increased in recent years. FL inherently tackles the challenge of preserving data privacy in decentralized ML, but it typically lacks integrated solutions to the issue of explainability [10]. In fact, FL was originally conceived for models optimized through stochastic gradient descent (SGD) (e.g., Deep Neural Networks (DNNs)), and applying post-hoc techniques in the federated setting is not straightforward.

This work lies at the intersection between FL and XAI and contributes to a research area named Fed-XAI (acronym for federated learning of XAI models) [11,12,13]. We explore the adoption of Fed-XAI approaches within the healthcare domain for predicting the progression of Parkinson’s disease (PD), formulated as a regression task. We consider a plausible scenario where sensitive raw data originate from different medical centers, making centralized learning unfeasible. In particular, the task is to predict one of the most commonly used indicators for the severity of PD symptoms, namely the Unified PD Rating Scale (UPDRS, first introduced in 1987 [14]), by exploiting real-world voice recordings. The analysis extends a recent work [15] and encompasses two approaches for Fed-XAI in order to explore the trade-off between accuracy and trustworthiness. The first approach adopts an interpretable by-design model, learned in a federated fashion. The second one employs an opaque model, where both training and post-hoc explanation are compliant with the federated setting. On the one hand, we assess the generalization capability of the models on the regression problem by exploiting a real, publicly available dataset. On the other hand, we assess trustworthiness. Here, trustworthiness means the concurrent attainment of two goals: explainability in all its nuances, from both local and global perspectives, and privacy preservation through the adoption of FL.

As for the interpretable by-design model, we employ the Takagi-Sugeno-Kang Fuzzy Rule-Based System (TSK-FRBS) [16], which is considered a transparent and interpretable model: its inference method mimics a cognitive process typical of human reasoning, in the form of if-then rules. The partitioning of numerical variables into fuzzy sets is one of the defining aspects of TSK-FRBS. It has proven to enable competitive levels of performance for classification and regression tasks [17], and it has a twofold implication. First, fuzziness in rule-based systems enhances semantic interpretability through linguistic representation of numerical variables. Second, a fuzzy set can be interpreted as a formal representation of an information granule, intended as a generic and conceptually meaningful entity [18, 19]. In this context, a fuzzy set allows any number in the real unit interval to represent the membership degree of a feature value to the information granule. As a consequence, the adopted TSK-FRBS fits into the paradigm of granular computing and makes use of information granules in the explainable decision-making pipeline.

As for the opaque model, we employ a well-known biologically inspired model, namely the Multilayer Perceptron Neural Network (MLP-NN). FL is performed by exploiting the popular federated averaging (FedAvg) aggregation strategy [7]. Furthermore, we use the SHAP explainer [20] to explain the output of the MLP-NN by attributing the contribution (i.e., importance) of each feature to each prediction. SHAP is purposely adapted to comply with the federated setting, based on a recently proposed approach [21].

The main contributions of this work can be summarized as follows:

- We simulate a scenario in which several medical institutions cooperate in creating a PD progression prediction model while pursuing the requirements of explainability and privacy preservation;
- To achieve this goal, we implement and exploit two Fed-XAI approaches, based on TSK-FRBS and on MLP-NN plus SHAP. These represent state-of-the-art techniques for by-design interpretability and post-hoc explainability, respectively, in the federated setting;
- We discuss the accuracy of the approaches under several data distribution scenarios, considering the independent and identically distributed (i.i.d.) case and three different non-i.i.d. cases;
- For each scenario, we compare the FL scheme with two baselines, namely centralized learning and local learning, to verify the suitability of the federated approach;
- We discuss the explainability of MLP-NN and the interpretability of TSK-FRBS, from both a local and a global perspective;
- We discuss the consistency of the explanations provided by the two approaches in the federated setting. Here, consistency is achieved when different participants in the FL process obtain the same explanation given the same input information.

The rest of the paper is organized as follows. In Section 2, we provide a brief overview of recent works that adopt XAI and FL tools in healthcare and, more specifically, in the context of PD. We also describe recent advances in the field of Fed-XAI. Section 3 describes the background related to FL, detailing the approaches for FL of TSK-FRBS and FL of MLP-NN. It also introduces SHAP as a post-hoc explainability technique and presents a recent approach for exploiting SHAP in the federated setting. Section 4 describes the PD progression prediction case study and provides details about the experimental setup: we outline the different data distribution scenarios, the evaluation strategies, and the configuration of the two approaches. In Section 5, we report and discuss the experimental results. The considerations regarding interpretability and explainability are given in Section 6. Finally, in Section 7, we draw some conclusions.

Related Works

The adoption of AI techniques in healthcare has been widely investigated. In this section, we first discuss the most relevant works concerning the adoption of FL and XAI in this application domain. Then, we discuss existing works related to XAI in Parkinson’s disease studies. Finally, we discuss recent algorithmic efforts for combining FL paradigm and XAI approaches.

Federated Learning and XAI in Healthcare Scenarios

The opportunities and the practical utility of FL in the healthcare domain have been recently acknowledged in the specialized literature [22,23,24], with applications mainly in the fields of medical imaging [25] and precision medicine [26]. FL is presented as a solution to protect sensitive data for privacy concerns and ethical constraints [27] and also in relation to cyber attacks [28]. At the same time, the interest in XAI is increasingly widespread, especially in the attempt to “open” the so-called black-boxes [29, 30], which have enabled unprecedented performance in the field of deep learning (DL) in medicine. The surveys on XAI for healthcare applications usually delve into the problem of how to present the AI results and their explanations to physicians, medical staff, patients, and caregivers: the explanations should be a tool to understand the outcomes of an AI system, but also a way to allow interaction and enhance stakeholders’ trust in AI (human-centered AI). The XAI goal is usually achieved through the adoption of post-hoc methods for opaque models, often concerning image data analysis (e.g., X-rays and CT scans).

Authors of a recently published survey [10] review clinical cases where post-hoc methods and interpretable by-design models are applied to more than 20 different medical case studies. These span from COVID-19 diagnosis and early detection to breast cancer diagnosis. Different data types are exploited, depending on the application: images (e.g., MRI) and signals (e.g., EEG) are often involved, and SHAP is among the most used post-hoc methods. Example case studies include the following:

- the prediction of depressive symptoms from texts, with the adoption of a post-hoc method for the estimation of word importance [31];
- Alzheimer's disease classification using Random Forest and SHAP [32].

In the same survey [10], the practical utility of FL in healthcare applications is discussed, especially considering DL approaches, horizontal data partitioning, and the FedAvg optimization strategy. Example case studies include the detection of COVID-19 from decentralized medical data, with Convolutional Neural Networks applied to anterior and posterior chest X-rays [33]. Authors in [34] exploit tabular electronic health records (demographics, past medical history, vital signs, lab test results) from five hospitals to predict mortality in patients diagnosed with COVID-19 within a week of hospital admission. However, it is worth noting that the applications of FL and XAI are treated separately. This highlights the substantial lack of works that simultaneously address the requirements of privacy through FL and transparency through XAI in the healthcare domain.

XAI in Parkinson’s Disease Studies

PD is diagnosed in about 10 million people worldwide [35]. After Alzheimer's disease, it is one of the most prevalent neurodegenerative diseases. Given its socioeconomic relevance, several AI methods have been proposed for supporting diagnosis and monitoring [35, 36]. The most commonly used data types exploited for PD studies include images and speech signals [37, 38].

A few works discuss the topic of explainability in the context of PD studies supported by AI techniques. For instance, authors in [39] apply the LIME [40] post-hoc method to a DNN used to distinguish healthy from unhealthy subjects using SPECT images. Authors in [41] provide explanations for the outcomes of different ML models on a multiclass classification task. They use three post-hoc methods, namely LIME, SHAP, and SHAPASH (a tool for making ML models more understandable and interpretable for a general audience). Since data privacy is highly relevant in this context, a few recent works elaborate upon the exploitation of the FL paradigm for PD-related applications [42,43,44].

In this work, we consider the Parkinson Telemonitoring dataset, which has been analyzed in several recent works for both classification [45, 46] and regression [47,48,49] tasks. None of the works mentioned above, however, considers the aspects of privacy and explainability simultaneously.

The primary goal of our analysis is to understand the potential of the Fed-XAI paradigm in a PD-related application. In the following, we provide a brief overview of the most relevant approaches for Fed-XAI proposed in the literature, relaxing the constraint on the application domain.

Federated Learning of XAI Models

Explainability in FL has been pursued either using post-hoc [20, 50,51,52,53,54,55] or ex-ante [13, 56,57,58,59] approaches. A thorough review of such approaches has been provided in several recent works [11, 12, 60]. Here, we describe the most recent advances on the topic.

Bogdanova et al. [60] have proposed a novel approach (named DC-SHAP) for consistent explainability within the Data Collaboration (DC) paradigm. DC-SHAP handles both horizontally partitioned data (different instances, same features) and vertically partitioned data (different features, same instances). The DC paradigm consists of two stages. First, participants obtain intermediate representations of their data through irreversible transformations and transmit them to a central server (unlike FL, which typically shares models rather than data). Then, the server combines such intermediate representations into a single dataset, trains an ML model, and distributes it back to the participants. Unlike other ex-ante [58] and post-hoc [50, 61] explainability approaches tailored for the decentralized setting, DC-SHAP ensures consistency of explanations. In this context, the property of consistency is met if the explanations of the same data instance for a global model are the same for different participants. As underlined by the authors in [60], model-agnostic post-hoc explainability methods are prone to misalignment of client-side explanations. The reason is that these methods probe the global model with various inputs generated from the local data distribution (typically referred to as the background or reference dataset). In their proposal for horizontally partitioned data, the authors share a set of auxiliary synthetic data among the participants to solve the issue of different background datasets. They show how this mitigates feature attribution discrepancies among the participants. The approach proposed in [51] is conceived to obtain a consistent global feature attribution score for horizontal FL. It adopts a model-specific post-hoc explainability method, namely Integrated Gradients (IG) [62], for computing feature relevance. The integrated gradients are averaged and thus unified among the clients; however, local explainability is not addressed.

The issue of Fed-XAI for PD has been recently discussed in [63], with the aim of identifying digital biomarkers for the progression of the disease. The authors consider a scenario with multiple hospitals, each with its own patients, and their privacy model rests on three assumptions: (i) input records and corresponding labels are isolated; (ii) the raw inputs are isolated between patients; (iii) the target labels are isolated between hospitals.

A hierarchical framework is adopted to build the FL model. Local FL processes allow patients in the same hospital to collaboratively train a model, whereas a global FL process aggregates the models from each hospital to generate the complete model. An adaptation of SHAP is then adopted as a post-hoc method for feature importance explanation. To address the issue of misalignment of client-side explanations, background datasets are generated by sampling from a Gaussian distribution. The parameters of this Gaussian distribution (mean and variance) are estimated for each feature in a hierarchical way, by combining the parameters estimated within and across hospitals. The average feature importance computed in the federated fashion turns out to be qualitatively similar to, but quantitatively different from, that obtained in the centralized fashion. In the centralized case, the union of the participants’ training sets can be used as the background dataset. Although the proposed method theoretically allows for it, the aspect of local explainability/interpretability is, however, not discussed.

Authors in [21] have proposed an approach for obtaining SHAP explanations [20] in horizontal FL. Specifically, the explanation of an instance prediction made by the federated ML model is obtained by aggregating the explanations computed by the participants. Such an approach ensures consistency of explanations and is shown to be a faithful approximation of the SHAP explanations obtained in a centralized setting. However, the approach requires that test instances be available to all participants. This may be undesirable or unfeasible in real-world applications where privacy must be guaranteed also at inference time.
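Exact Shapley values make it easiest to see why aggregating client-side explanations can reproduce a centralized SHAP explanation. The sketch below assumes the interventional value function v(S). This function is the mean model output over a background dataset when the features in S are fixed to the instance values. Under that assumption, v, and hence every Shapley value, is linear in the background data. A size-weighted average of explanations computed on local backgrounds therefore equals the explanation computed on their union.

The names `exact_shap` and `federated_shap` are hypothetical, and brute-force enumeration is only feasible for a handful of features. This illustrates the principle and is not claimed to be the exact procedure of [21]. As in the text, every participant must see the instance `x`.

```python
import itertools
import math

import numpy as np


def exact_shap(f, x, background):
    """Exact Shapley values of model f at instance x (brute force over 2^M coalitions).

    Interventional value function: v(S) is the mean of f over the background rows,
    with the features in S set to the values of x.
    """
    M = len(x)

    def v(S):
        Z = background.copy()
        if S:
            Z[:, list(S)] = x[list(S)]
        return f(Z).mean()

    phi = np.zeros(M)
    for j in range(M):
        others = [k for k in range(M) if k != j]
        for size in range(M):
            for S in itertools.combinations(others, size):
                weight = math.factorial(size) * math.factorial(M - size - 1) / math.factorial(M)
                phi[j] += weight * (v(S + (j,)) - v(S))
    return v(()), phi


def federated_shap(f, x, local_backgrounds):
    """Each participant explains x with its own background data; the server averages
    baselines and attributions, weighting each participant by its background size."""
    sizes = np.array([len(b) for b in local_backgrounds], dtype=float)
    results = [exact_shap(f, x, b) for b in local_backgrounds]
    phi0 = np.average([r[0] for r in results], weights=sizes)
    phi = np.average([r[1] for r in results], axis=0, weights=sizes)
    return phi0, phi


# Toy example: a nonlinear "global model" and three participants with shifted local data.
rng = np.random.default_rng(42)
f = lambda Z: np.tanh(Z[:, 0]) * Z[:, 1] + Z[:, 2] ** 2
backgrounds = [rng.normal(loc=m, size=(n, 3)) for m, n in [(0.0, 20), (0.5, 35), (-0.3, 10)]]
x = np.array([0.8, -1.2, 0.4])

fed_phi0, fed_phi = federated_shap(f, x, backgrounds)
cen_phi0, cen_phi = exact_shap(f, x, np.vstack(backgrounds))
print(fed_phi, cen_phi)  # equal up to floating-point error
```

With sampling-based approximations instead of exact enumeration, the equality above becomes approximate.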

In the framework of FL of interpretable-by-design models, TSK-FRBSs [13, 57, 59] and DTs [56, 58] have been considered as XAI models to be learnt in a federated fashion. Approaches proposed in [57, 59] for federated TSK-FRBS rely on a clustering procedure for the structure identification stage and on a federated adaptation of classical gradient-based learning schemes for adjusting the parameters of the consequent part of the rules. In this work, we consider the approach introduced in [13], which leads to more interpretable TSK-FRBSs compared to the ones considered in [57] and [59]. Additional details on such an approach are reported in Section 3.1.

As for DTs, the IBM FL framework [56] supports, among others, a federated adaptation of the ID3 algorithm for horizontally partitioned data. Specifically, an orchestrating server grows a single decision tree in an iterative, round-based procedure, exploiting client contributions computed on their local data. Similarly, the approach proposed in [58] allows multiple clients to collaborate in the generation of a global DT by transmitting encrypted statistics, but it refers to the vertical data partitioning scenario. Finally, Polato et al. [64] have proposed a federated version of the AdaBoost algorithm that enables a federation of DTs. It poses minimal constraints on the learning settings of the clients and does not rely on gradient-based methods.

Background

The categorization of FL approaches is typically based on the data partitioning scheme and the scale of federation. Data partitioning can be broadly categorized into horizontal and vertical settings. In the horizontal setting, training instances from different participants refer to the same set of features, whereas in the vertical setting, the feature set itself is partitioned among participants. The scale of federation refers to the number of participants and is typically classified into cross-silo FL, involving a low number of participants with ample data and computational power, and cross-device FL, where a large number of participants, often represented by smartphones or personal equipment, may feature a relatively small amount of data and computational power.
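The two partitioning schemes can be visualized on a toy data matrix. In the minimal NumPy sketch below, the data are random and the variable names are purely illustrative. Horizontal participants keep all columns (features) of a subset of rows (instances), whereas vertical participants keep all rows of a subset of columns.

```python
import numpy as np

# Toy dataset: 6 instances (rows) described by 4 features (columns), plus a target.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))
y = rng.normal(size=6)

# Horizontal partitioning: participants hold different instances with the same features.
horizontal = [(X[:3], y[:3]), (X[3:], y[3:])]

# Vertical partitioning: participants hold different features of the same instances.
vertical = [X[:, :2], X[:, 2:]]

for X_k, _ in horizontal:
    print("horizontal participant:", X_k.shape)  # (3, 4): all features, subset of rows
for X_k in vertical:
    print("vertical participant:", X_k.shape)    # (6, 2): all rows, subset of features
```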

The PD progression prediction case study discussed in this work pertains to a cross-silo horizontal FL setting. This section reports background information for the two approaches adopted to address this task, both of which belong to the Fed-XAI research field: federated TSK-FRBS and federated MLP-NN with post-hoc explainability.

Federated Learning of TSK-FRBS

Let \(\textbf{X}=\left\{ X_{1}, X_{2}, \dots , X_{M}\right\} \) and Y be the set of M input variables and the output variable, respectively. A generic input instance is in the form \(\textbf{x}_{i} = [x_{i,1}, x_{i,2}, \dots , x_{i,M}]^T\) and has an associated output value \(y_i\). Let \(U_{j}\) be the universe of discourse of variable \(X_j\) and \(P_{j} =\left\{ A_{j,1}, A_{j,2}, \dots , A_{j, T_{j}} \right\} \) be a fuzzy partition over \(U_j\) with \(T_{j}\) fuzzy sets, each labeled with a linguistic term. The term \(A_{j,t}\) indicates the \(t^{th}\) fuzzy set of the fuzzy partition over the \(j^{th}\) input variable \(X_j\). A TSK-FRBS consists of a collection of fuzzy if-then rules. The antecedent part of each rule is a conjunction of fuzzy propositions, and the consequent part implements a regression model. In the case of the commonly used first-order regression model, the generic \(r^{th}\) rule is expressed as follows:

\[
R_r:\ \text{IF } X_1 \text{ is } A_{1,t_{r,1}} \text{ AND } \dots \text{ AND } X_M \text{ is } A_{M,t_{r,M}} \text{ THEN } y_r = \gamma_{r,0} + \sum_{j=1}^{M} \gamma_{r,j}\, x_{i,j}
\]

where \(\gamma _{r,j}\) (with \(j=0,\dots ,M\)) are the coefficients of the linear model that evaluates the output prediction \(y_r\).

The parameters of the rules are determined through a data-driven approach. The antecedent (IF part) is generated using either grid partitioning or fuzzy clustering over the input space. Once the antecedents are determined, the consequents (THEN part) are estimated. These are the coefficients of the local linear models, obtained, for instance, through the least squares method.

At inference time, TSK-FRBS exploits the rule base as follows. Given an input instance, first the strength of activation of each rule is computed as

\[
w_r(\textbf{x}_i) = \prod_{j=1}^{M} \mu_{j, t_{r,j}}(x_{i,j})
\]

where \(\mu _{j, t_{r,j}}(x_{i,j})\) is the membership degree of \(x_{i,j}\) to the fuzzy set \(A_{j,t_{r,j}}\). Then, the final output can be evaluated with either weighted average or maximum matching policy. In the former case, the TSK-FRBS output is computed as the average of the outputs of all the activated rules weighted by their strengths of activation. In the latter case, the output corresponds to the output of the rule with the maximum strength of activation.

The maximum matching policy enhances the interpretability of TSK-FRBS, since a single rule explains a predicted output for an input instance. Furthermore, the fuzzy linguistic representation of numerical variables fosters the semantic interpretability of the model itself, whose operation, based on the evaluation of rules, turns out to be highly intuitive.
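The rule form and the two output policies can be made concrete with a minimal NumPy sketch of first-order TSK inference. The sketch rests on several assumptions. Inputs are normalized to [0, 1]. Every variable uses a strong uniform triangular partition with the same number of fuzzy sets. The strength of activation uses the product as the conjunction operator.

Rules are represented as hypothetical `(antecedent, gamma)` pairs. `antecedent[j]` is the index \(t_{r,j}\) of the fuzzy set used for \(X_j\), and `gamma` holds \(\gamma_{r,0},\dots,\gamma_{r,M}\). The Low/Medium/High labels in the example are illustrative.

```python
import numpy as np


def membership(x_j, t, n_sets):
    """Degree of x_j in [0, 1] to the t-th triangular set of a strong uniform partition."""
    step = 1.0 / (n_sets - 1)
    return max(0.0, 1.0 - abs(x_j - t * step) / step)


def tsk_predict(x, rules, n_sets, policy="weighted"):
    """First-order TSK inference with weighted-average or maximum-matching output."""
    strengths, outputs = [], []
    for antecedent, gamma in rules:
        # Strength of activation: product of the membership degrees in the antecedent.
        w = np.prod([membership(x_j, t, n_sets) for x_j, t in zip(x, antecedent)])
        strengths.append(w)
        # Rule output: y_r = gamma_0 + sum_j gamma_j * x_j.
        outputs.append(gamma[0] + np.dot(gamma[1:], x))
    strengths, outputs = np.array(strengths), np.array(outputs)
    if strengths.sum() == 0:
        raise ValueError("no rule is activated by this input")
    if policy == "max":
        # Maximum matching: the single most activated rule gives (and explains) the output.
        return outputs[np.argmax(strengths)]
    # Weighted average of the rule outputs, weighted by their strengths of activation.
    return np.dot(strengths, outputs) / strengths.sum()


# Two inputs, three fuzzy sets each (0 = Low, 1 = Medium, 2 = High), two rules.
rules = [
    ((0, 0), np.array([1.0, 0.0, 0.0])),  # IF X1 is Low AND X2 is Low THEN y = 1
    ((1, 0), np.array([2.0, 0.0, 0.0])),  # IF X1 is Medium AND X2 is Low THEN y = 2
]
x = np.array([0.2, 0.0])
print(tsk_predict(x, rules, n_sets=3))                # weighted average: about 1.4
print(tsk_predict(x, rules, n_sets=3, policy="max"))  # maximum matching: 1.0
```

For this input, the two rules fire with strengths 0.6 and 0.4. The weighted average blends their outputs, whereas maximum matching reports only the first rule, which is what makes its output explainable by a single rule.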

From an algorithmic perspective, FL of TSK-FRBS, as well as of other families of highly interpretable models, requires ad-hoc strategies. In this work, we rely on the approach for building TSK-FRBSs in a federated fashion recently proposed in [13]. We consider horizontally partitioned data: every participant produces a local TSK-FRBS and sends it to the server. Subsequently, the server consolidates the received rule bases by juxtaposing the rules received from the participants and by resolving potential conflicts. A conflict occurs when rules from different local TSK-FRBSs have the same antecedent, thus identifying the same specific region of the input space, but different consequents. In this case, the federated TSK-FRBS summarizes the conflicting rules in a single rule. This rule has the same antecedent as the conflicting rules, and its consequent is the weighted average of the regression model coefficients in their consequents. The average takes into account the weight associated with each rule. Each rule's weight is estimated on the local training set as the harmonic mean of its support (how many instances activate the rule) and its confidence (average quality of the rule's predictions).
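The server-side consolidation can be sketched as follows. This is a simplified illustration under stated assumptions, not the reference implementation of [13]. Each local rule is a hypothetical dictionary holding three things: its antecedent (a tuple of fuzzy-set indices), its consequent coefficients, and its support and confidence. Support and confidence are assumed to be already normalized to [0, 1] on the local training set. Rules are grouped by antecedent, and conflicting consequents are merged by a weighted average, using the harmonic mean of support and confidence as the weight.

```python
from collections import defaultdict

import numpy as np


def rule_weight(support, confidence):
    """Harmonic mean of support and confidence (0 if both are 0)."""
    if support + confidence == 0:
        return 0.0
    return 2.0 * support * confidence / (support + confidence)


def aggregate_rule_bases(local_rule_bases):
    """Juxtapose local rule bases and resolve conflicts among rules with equal antecedents."""
    grouped = defaultdict(list)
    for rule_base in local_rule_bases:
        for rule in rule_base:
            grouped[rule["antecedent"]].append(rule)

    global_rules = {}
    for antecedent, rules in grouped.items():
        gammas = np.array([r["gamma"] for r in rules])
        weights = np.array([rule_weight(r["support"], r["confidence"]) for r in rules])
        if weights.sum() > 0:
            # Conflict resolution: weighted average of the consequent coefficients.
            global_rules[antecedent] = weights @ gammas / weights.sum()
        else:
            # Fallback (an assumption): plain average when all weights are zero.
            global_rules[antecedent] = gammas.mean(axis=0)
    return global_rules


# Participants A and B share one antecedent (a conflict); B also has a unique rule.
rules_a = [{"antecedent": (0, 1), "gamma": [1.0, 2.0, 0.0], "support": 0.5, "confidence": 0.5}]
rules_b = [
    {"antecedent": (0, 1), "gamma": [3.0, 4.0, 0.0], "support": 1.0, "confidence": 1.0},
    {"antecedent": (2, 2), "gamma": [0.0, 1.0, 1.0], "support": 0.2, "confidence": 0.8},
]
global_rb = aggregate_rule_bases([rules_a, rules_b])
print(global_rb[(0, 1)])  # weights 0.5 and 1.0: (0.5*[1, 2, 0] + 1.0*[3, 4, 0]) / 1.5
```

Non-conflicting rules pass through unchanged, so the global rule base is the union of the local ones with one rule per antecedent.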

To ensure the consistency of the rules among participants and to increase the system interpretability, the input variables are partitioned using a strong uniform fuzzy partition with triangular fuzzy sets. An example of a strong uniform fuzzy partition with five triangular fuzzy sets is shown in Fig. 1. Here, each fuzzy set is associated with a meaningful label that is used to express the rules linguistically.
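A strong uniform partition with triangular fuzzy sets has a compact computational form. Each set peaks at its own core and vanishes at the neighbouring cores, so the membership degrees of any value sum to 1 and at most two sets are active at once. The minimal NumPy sketch below exploits this by linearly interpolating one-hot vectors over the cores. The five labels are hypothetical placeholders, not necessarily those of Fig. 1.

```python
import numpy as np

LABELS = ["VeryLow", "Low", "Medium", "High", "VeryHigh"]  # hypothetical labels


def uniform_cores(lo, hi, n_sets):
    """Cores (peaks) of n_sets triangular fuzzy sets evenly spaced over [lo, hi]."""
    return np.linspace(lo, hi, n_sets)


def memberships(x, cores):
    """Membership degrees of scalar x to every set of the strong uniform partition.

    Each triangle peaks at its own core and reaches 0 at the neighbouring cores,
    which equals linear interpolation of a one-hot vector over the cores. Outside
    [lo, hi], np.interp clamps to the end values, so the extreme sets act as shoulders.
    """
    eye = np.eye(len(cores))
    return np.array([np.interp(x, cores, eye[t]) for t in range(len(cores))])


def linguistic_label(x, cores, labels=LABELS):
    """Label of the fuzzy set to which x belongs with the highest degree."""
    return labels[int(np.argmax(memberships(x, cores)))]


cores = uniform_cores(0.0, 1.0, 5)  # cores at 0, 0.25, 0.5, 0.75, 1
mu = memberships(0.3, cores)        # [0, 0.8, 0.2, 0, 0] up to rounding
print(mu, mu.sum(), linguistic_label(0.3, cores))
```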

It should be noted that building a fuzzy system requires careful design, especially regarding the choice of its hyperparameters (e.g., number, shape, and position of fuzzy sets for each partition), also considering their impact on interpretability [65]. Uniform fuzzy partitions with triangular fuzzy sets are generally deemed highly interpretable since they satisfy the criteria of coverage, completeness, distinguishability, and complementarity [66]. However, in practical applications, a meaningful partition should be agreed upon with the human users who are expected to interact with the AI system and to interpret the given rules. An interesting future development of this work would be to examine the choice of the uniform partitioning together with domain experts (e.g., physicians).

Federated Learning of MLP-NN

Models optimized through Stochastic Gradient Descent (SGD), such as NNs, can be learned in a federated fashion by exploiting an aggregation strategy based on, or derived from, the popular federated averaging (FedAvg) procedure. FedAvg is an iterative, round-based procedure, in which each round encompasses the following steps: the server sends the global model to the participants; each participant locally updates the model through SGD on its local training set and sends the updated model to the server; the server obtains an updated global model by computing the weighted average of the locally updated models, where weights are based on the local training set cardinality. Several extensions of FedAvg have been proposed in the literature, mostly aimed at addressing FL in heterogeneous settings [67, 68]. In this paper, we focus on classical FedAvg and deal in more detail with the issue of explainability of MLP-NN learned in a federated fashion. To this end, in the following, we first describe a popular post-hoc method for explainability, namely SHAP (SHapley Additive exPlanations) method [20], and then an approach for the adoption of SHAP in the federated setting.
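The FedAvg round described above can be illustrated with a minimal NumPy sketch. The sketch uses a linear model trained by mini-batch SGD on squared error as a stand-in for the MLP-NN, to keep the example short. The function names, learning rate, number of local epochs, and batch size are hypothetical choices, not the configuration used in this work.

```python
import numpy as np


def local_update(w, X, y, lr=0.05, epochs=5, batch_size=16, rng=None):
    """Participant side: a few epochs of mini-batch SGD on squared error, y ~ X @ w."""
    if rng is None:
        rng = np.random.default_rng()
    w = w.copy()
    n = len(y)
    for _ in range(epochs):
        idx = rng.permutation(n)
        for start in range(0, n, batch_size):
            b = idx[start:start + batch_size]
            grad = 2.0 * X[b].T @ (X[b] @ w - y[b]) / len(b)
            w -= lr * grad
    return w


def fedavg(clients, n_features, rounds=20, seed=0):
    """Server side: clients is a list of (X_k, y_k); returns the global weight vector."""
    rng = np.random.default_rng(seed)
    w_global = np.zeros(n_features)
    sizes = np.array([len(y_k) for _, y_k in clients], dtype=float)
    for _ in range(rounds):
        # 1) broadcast w_global; 2) each participant updates it on its local data
        local_ws = [local_update(w_global, X_k, y_k, rng=rng) for X_k, y_k in clients]
        # 3) weighted average of local models, weights = local training-set cardinality
        w_global = np.average(local_ws, axis=0, weights=sizes)
    return w_global


# Three participants with different amounts of (i.i.d.) data from the same linear model.
rng = np.random.default_rng(1)
w_true = np.array([1.5, -2.0, 0.5])
clients = []
for n in (50, 100, 200):
    X_k = rng.normal(size=(n, 3))
    clients.append((X_k, X_k @ w_true + 0.1 * rng.normal(size=n)))

w_fed = fedavg(clients, n_features=3)
print(np.round(w_fed, 2))  # close to w_true
```

Only model parameters travel between participants and server; the local `(X_k, y_k)` never leave the participants.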

Post-hoc Explainability: The SHAP Method

One of the most popular post-hoc strategies used to explain a model prediction is to assess the importance of each feature in producing the output. In general, given a model f, an input instance \(\textbf{x}_{i}\), and a predicted output \(\hat{y_i} =f(\textbf{x}_{i})\), the explainer assigns to each input component \(x_{i,j}\) a value that reflects how important that particular feature is for the prediction. These values are interpreted in terms of sign and magnitude: if the sign is positive (negative), the feature contributes positively (negatively) to the predicted output; as for the magnitude, the larger it is, the higher the impact of the corresponding feature on the output.

In this work, we adopt the SHAP method [20], one of the most widely used approaches for assessing feature importance in both regression and classification tasks. SHAP provides local explainability, that is, it explains individual predictions. Global explainability insights can be obtained by aggregating the individual explanations over a set of data.

SHAP computes the importance of the individual features using the Shapley values, introduced in game theory by L. Shapley in 1953 [69]. In SHAP, the connection between game theory and explainability is that a prediction for an individual instance \(\textbf{x}_{i}\) can be explained by regarding the features \(X_i\) as the “players” of a “game” whose “payout” is the prediction \(\hat{y_i}\). Intuitively, the M players of the game (features) receive different rewards, called Shapley values \(\phi _j\), depending on their contribution to the total prediction, i.e., \(\hat{y_i} = \phi _0 + \sum _{j=1}^M \phi _j\), where \(\phi _0\) is a reference value (baseline) computed as the average of the model output values over a reference dataset. In this game-explanation analogy, the player with the largest contribution \(|\phi _j|\) to the total prediction is the most important feature in the explanation.
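To make the additive decomposition concrete, the following sketch computes exact Shapley values for a toy three-feature model by enumerating every coalition, using the average model output over a small background set as the baseline \(\phi_0\). The helper names and the toy model are hypothetical, and brute-force enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List, Sequence, Tuple

Model = Callable[[List[float]], float]


def coalition_value(f: Model, x: Sequence[float], background: Sequence[Sequence[float]],
                    coalition: FrozenSet[int]) -> float:
    """v(S): features in S keep their value from x, the others are taken from each
    background row; the model output is averaged over the background rows."""
    outputs = [f([x[j] if j in coalition else b[j] for j in range(len(x))]) for b in background]
    return sum(outputs) / len(outputs)


def exact_shapley(f: Model, x: Sequence[float],
                  background: Sequence[Sequence[float]]) -> Tuple[float, List[float]]:
    """Exact Shapley values by enumerating every coalition (cost grows as 2^M)."""
    m = len(x)
    phi0 = coalition_value(f, x, background, frozenset())  # baseline: average output
    phis = []
    for j in range(m):
        others = [k for k in range(m) if k != j]
        phi_j = 0.0
        for size in range(m):
            # Shapley weight |S|! (M - |S| - 1)! / M! for coalitions S not containing j
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi_j += weight * (coalition_value(f, x, background, s | {j})
                                   - coalition_value(f, x, background, s))
        phis.append(phi_j)
    return phi0, phis


def toy_model(z: List[float]) -> float:
    return z[0] * z[1] + 2 * z[2]          # toy model with an interaction term


x_example = [1.0, 2.0, 3.0]
background_example = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
phi0, phis = exact_shapley(toy_model, x_example, background_example)
print(phi0, phis, phi0 + sum(phis), toy_model(x_example))
```

The final print checks the additivity property: \(\phi_0 + \sum_j \phi_j\) reproduces the model output for the explained instance.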

Since computing the Shapley values involves evaluating all possible combinations of the features (coalitions of players, in game-theoretic terms) by perturbing the instance \(\textbf{x}_i\), the computation time grows exponentially with the number of features [70]. Thus, various approaches, including SHAP, have been proposed to estimate them efficiently. Several SHAP variants exist, corresponding to different ways of approximating the Shapley values. In this work, we consider the widely adopted KernelExplainer variant of SHAP (KernelSHAP), as it is model-agnostic [21]. Other methods, such as TreeExplainer, are more efficient but model-specific. Algorithm 1 describes the KernelSHAP procedure.

KernelSHAP algorithm, from [70].
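As an illustration of the weighted-regression idea behind KernelSHAP, the sketch below fits the additive model \(\phi_0 + \sum_j z_j \phi_j\) to the coalition values using the Shapley kernel weights \((M-1)/\left(\binom{M}{|z|}\,|z|\,(M-|z|)\right)\), and enforces \(\phi_0 = v(\emptyset)\) and \(\sum_j \phi_j = f(\textbf{x}_i) - \phi_0\) by eliminating the last coefficient. It is a simplified sketch under stated assumptions, not a reproduction of Algorithm 1 or of the shap library: it enumerates all \(2^M - 2\) proper coalitions instead of sampling them (in which case it recovers the exact Shapley values), and all names are hypothetical.

```python
from itertools import combinations
from math import comb
from typing import Callable, List, Sequence, Tuple

Model = Callable[[List[float]], float]


def shapley_kernel(m: int, s: int) -> float:
    """Shapley kernel weight of a coalition with s of m features (0 < s < m)."""
    return (m - 1) / (comb(m, s) * s * (m - s))


def coalition_value(f, x, background, coalition):
    """v(S): features outside S are replaced by background values, outputs averaged."""
    outputs = [f([x[j] if j in coalition else b[j] for j in range(len(x))]) for b in background]
    return sum(outputs) / len(outputs)


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense linear system."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        sol[r] = (aug[r][n] - sum(aug[r][c] * sol[c] for c in range(r + 1, n))) / aug[r][r]
    return sol


def kernel_shap(f: Model, x: Sequence[float], background) -> Tuple[float, List[float]]:
    """Weighted least squares over coalitions with the Shapley kernel (full enumeration)."""
    m = len(x)
    phi0 = coalition_value(f, x, background, frozenset())
    delta = f(list(x)) - phi0          # efficiency: the phi_j must sum to f(x) - phi0
    k = m - 1                          # phi_{M-1} is eliminated through that constraint
    ata = [[0.0] * k for _ in range(k)]
    aty = [0.0] * k
    for size in range(1, m):
        w = shapley_kernel(m, size)
        for subset in combinations(range(m), size):
            z = [1.0 if j in subset else 0.0 for j in range(m)]
            target = coalition_value(f, x, background, frozenset(subset)) - phi0 - z[-1] * delta
            row = [z[j] - z[-1] for j in range(k)]
            for p in range(k):                 # accumulate the normal equations
                aty[p] += w * row[p] * target
                for q in range(k):
                    ata[p][q] += w * row[p] * row[q]
    phis = solve(ata, aty) if k > 0 else []
    phis.append(delta - sum(phis))
    return phi0, phis


def toy_model(z: List[float]) -> float:
    return z[0] * z[1] + 2 * z[2]


phi0, phis = kernel_shap(toy_model, [1.0, 2.0, 3.0], [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]])
print(phi0, phis)
```

Practical KernelSHAP replaces the full enumeration with a sample of coalitions, which is what makes it usable beyond a few features, at the price of an approximate result.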

Notably, KernelSHAP requires a background dataset that serves as a reference: whenever a feature is excluded from a coalition, its value is replaced using an instance randomly sampled from this dataset. The choice of a representative background dataset is crucial for obtaining accurate estimates of the Shapley values. For this reason, the training set is typically adopted for this purpose. However, it is not the only possible choice: a different, generally smaller, dataset can be used, provided that it is representative of the data distribution of the training set. In the literature, representative objects such as medoids or centroids of clusters, obtained by applying a clustering algorithm to the training set, have enabled faster estimation of the Shapley values. Additional details on SHAP and Shapley values can be found in [70].
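A minimal sketch of background summarization with cluster centroids: plain k-means (Lloyd's algorithm) condenses the background set into k centroids, each weighted by the share of points in its cluster, and the coalition value then averages the model output with those weights. The naive initialization (first k points), the fixed iteration count, and all function names are assumptions made for brevity.

```python
from typing import Callable, List, Sequence, Tuple

Point = List[float]


def kmeans_background(data: Sequence[Sequence[float]], k: int,
                      iters: int = 20) -> Tuple[List[Point], List[float]]:
    """Summarize a background dataset with k centroids (plain Lloyd's algorithm).
    Returns the centroids and their weights (share of the data in each cluster)."""
    centroids = [list(p) for p in data[:k]]    # naive initialization (assumption)
    clusters: List[List[Sequence[float]]] = []
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in data:                          # assignment step
            nearest = min(range(k),
                          key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):  # update step (empty clusters keep their centroid)
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    weights = [len(members) / len(data) for members in clusters]
    return centroids, weights


def weighted_coalition_value(f: Callable[[List[float]], float], x: Sequence[float],
                             centroids: Sequence[Point], weights: Sequence[float],
                             coalition: set) -> float:
    """v(S) with a weighted summary: excluded features take centroid values,
    and outputs are averaged with the cluster weights instead of uniformly."""
    return sum(w * f([x[j] if j in coalition else c[j] for j in range(len(x))])
               for c, w in zip(centroids, weights))


data = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]   # toy background set
centroids, weights = kmeans_background(data, k=2)
print(centroids, weights)
```

For a model that is linear in its inputs, the weighted centroid summary yields exactly the same coalition values as the full background; for non-linear models, it is an approximation whose quality depends on k.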

Federated SHAP

Let H be the number of clients involved in the federation, \(\textbf{x}_{i}\) an input instance, and \(f(\textbf{x}_{i})\) the prediction to be explained. In this work, the model \(f(\cdot )\) is an MLP-NN learnt in a federated fashion. Following the setup proposed in [21], the goal is to achieve a federated explanation for \(f(\textbf{x}_{i})\), considering the input instance simultaneously available to all the clients. It is worth underlining that this may be undesirable or unfeasible if the instance \(\textbf{x}_{i}\) is subject to privacy constraints. Notably, in some particular scenarios, for example, if patients require multiple medical consultations, the instance \(\textbf{x}_{i}\) could, under specific agreements, be shared with the other clients. Another scenario is represented by the presence of a benchmark dataset available to research entities, with the objective of comparing the quality of the explanations produced by different methods.

As discussed in Section 3.2.1, the application of the SHAP method requires a background dataset. Typically, this is the dataset used to train the prediction model. However, in the federated setting, local training sets belong to different entities and cannot be shared due to privacy issues. Therefore, the server has no reference data to be used as background. Federated SHAP proposed in [21] represents a possible strategy to overcome this issue and to achieve federated explanations by exploiting the additive property of the Shapley values.

In the federated SHAP procedure, each client first estimates the Shapley values using its local training set as background dataset; then, the values are transmitted to the server, which computes their average. In this way, data privacy is preserved, since the raw data are never shared. Furthermore, it has been shown that this average is a good approximation of the Shapley values that would be calculated if the union of the local training sets were available to the server.

Schematically, the overall procedure for FL with a post-hoc explainability technique entails the following steps: (i) each participant \(h\) (with \(h=1, \dots , H\)) contributes to the creation of an FL model; at the end of the federated procedure, the federated model is made available to each participant; (ii) given an unseen instance \(\textbf{x}_{i}\), each participant \(h\) computes the Shapley values \(\phi ^{(i,h)}_j\), with \(j = 1, \dots , M\), by running KernelSHAP locally with its private training set as background dataset; (iii) the Shapley values \(\phi ^{(i,h)}_j\) estimated at participant level are transmitted to the server, which performs simple averaging, \(\phi ^{(i)}_j = \frac{1}{H}\sum _{h=1}^{H}\phi ^{(i,h)}_j\), to obtain the federated estimate of the Shapley values explaining the prediction \(f(\textbf{x}_i)\).
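The three steps can be sketched as follows, assuming exact Shapley values as a stand-in for each client's local KernelSHAP estimate and a toy model in place of the federated MLP-NN; `federated_shap` and the other helpers are hypothetical names. With the simple average of step (iii), every hospital counts equally; the optional `size_weighted` flag is an illustrative variant that weights hospital h by \(N_h/N\).

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[List[float]], float]
Rows = Sequence[Sequence[float]]


def coalition_value(f: Model, x: Sequence[float], background: Rows, coalition: set) -> float:
    outputs = [f([x[j] if j in coalition else b[j] for j in range(len(x))]) for b in background]
    return sum(outputs) / len(outputs)


def shapley_values(f: Model, x: Sequence[float], background: Rows) -> List[float]:
    """Exact Shapley values; stands in for the KernelSHAP estimate computed at a client."""
    m = len(x)
    phis = []
    for j in range(m):
        others = [k for k in range(m) if k != j]
        phi_j = 0.0
        for size in range(m):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = set(subset)
                phi_j += weight * (coalition_value(f, x, background, s | {j})
                                   - coalition_value(f, x, background, s))
        phis.append(phi_j)
    return phis


def federated_shap(f: Model, x: Sequence[float], local_backgrounds: Sequence[Rows],
                   size_weighted: bool = False) -> List[float]:
    """Steps (ii)-(iii): each client explains x with its private training set as background,
    then the server averages the per-client Shapley values (simple average by default)."""
    local_phis = [shapley_values(f, x, bg) for bg in local_backgrounds]  # computed at the clients
    if size_weighted:
        total = sum(len(bg) for bg in local_backgrounds)
        coeffs = [len(bg) / total for bg in local_backgrounds]
    else:
        coeffs = [1.0 / len(local_phis)] * len(local_phis)
    return [sum(c * phis[j] for c, phis in zip(coeffs, local_phis)) for j in range(len(x))]


def toy_model(z: List[float]) -> float:
    return z[0] * z[1] + 2 * z[2]


x_i = [1.0, 2.0, 3.0]
hospital_a = [[0.0, 0.0, 0.0]]                        # private backgrounds (toy)
hospital_b = [[1.0, 1.0, 1.0], [0.5, 0.5, 0.5]]
print(federated_shap(toy_model, x_i, [hospital_a, hospital_b]))
```

Under the value function used in this sketch (model outputs averaged over the background rows), the coalition values for the union of the local training sets are the \(N_h/N\)-weighted mixture of the local coalition values, and Shapley values are linear in the coalition values. Hence the size-weighted average reproduces exactly the Shapley values computed with the pooled background, and the simple average does so whenever the local training sets have equal size; with sampled KernelSHAP estimates, these equalities hold only approximately.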

Case Study: Federated Learning for Parkinson’s Disease Progress Prediction

In this section, we describe the case study for the evaluation of the Fed-XAI approaches. First, details about the PD telemonitoring dataset are provided. Then, we describe the experimental setup in terms of data partitioning scenarios and evaluation strategies. Finally, we give the configurations of the ML models adopted in the two Fed-XAI approaches based on MLP-NN and TSK-FRBS, respectively.

The Parkinson Telemonitoring Dataset

The Parkinson Telemonitoring dataset is a well-known regression dataset available in the UCI Machine Learning Repository [71]. The dataset consists of 5875 instances of biomedical voice measurements from 42 patients with early-stage PD, acquired remotely during a 6-month trial. Each instance corresponds to one voice recording, characterized by the 22 features reported in Table 1. The regression task consists of predicting the total Unified PD Rating Scale score (total_UPDRS) associated with a given voice recording. Unlike motor_UPDRS, which relates only to motor symptoms, total_UPDRS relates to the overall set of symptoms.

Federated Learning Scenarios

In this paper, extending the preliminary analysis performed in [15], we consider the challenging setting in which the raw dataset is not available on a single node for centralized processing, as in traditional ML, but it is instead scattered over multiple physical locations, e.g., hospitals or healthcare institutions. Specifically, we simulate several scenarios featuring 10 hospitals (cross-silo FL setting), in order to evaluate the performance of two Fed-XAI approaches under different horizontal data partitioning schemes that could be encountered in real-world situations.

In the following, we formally define the four scenarios considered in our experimental analysis. Let \(P_h(\textbf{x},y)\) be the local distribution of input data \(\textbf{x}\) and associated target values \(y\) (total_UPDRS) for the hospital \(h\), and \(P(\textbf{x},y)\) the overall data distribution.

-

Scenario IID. It is a simple independent and identically distributed (i.i.d.) setting; formally,

$$\begin{aligned} P_h(\textbf{x},y) \sim P(\textbf{x},y) \; \; \; \forall h \in \{1,\dots ,10\} \end{aligned} \tag{5}$$

The training data of the ten hospitals follow the same distribution, with about 500 instances each.

-

Scenario NIID-Q (acronym for non-i.i.d. quantity skew). It is a non-i.i.d. setting with quantity skew [72]: different hospitals can hold different amounts of training data, which follow the same overall distribution.

-

Scenario NIID-F (acronym for non-i.i.d. feature skew). It is a non-i.i.d. setting with feature distribution skew [72] based on the \(age\) feature; formally,

$$\begin{aligned} P_g(\textbf{x},y) \ne P_h(\textbf{x},y) \; \; \; \forall g,h \in \{1,\dots ,10\}, \; g\ne h \end{aligned} \tag{6}$$

Each hospital contains training data from only a specific range of ages (e.g., 56 to 57, 58 to 59, \(\dots \), more than 75 years old). In this scenario, we aim for training sets of roughly equal size.

-

Scenario NIID-FQ (acronym for non-i.i.d. feature and quantity skew). It is a non-i.i.d. setting with both quantity skew and feature distribution skew based on the \(age\) feature. Each hospital contains training data from only a specific range of ages; furthermore, different hospitals can hold different amounts of data.
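The four partitioning schemes can be sketched as follows. This is an illustrative reconstruction, not the exact procedure used to build the partitions summarized in Table 2: it assumes that feature skew is obtained by sorting the samples by age and cutting contiguous blocks, and that quantity skew is obtained by drawing the hospital shares from a Dirichlet distribution with a hypothetical concentration `alpha = 0.5`; the function names and the synthetic ages are also hypothetical.

```python
import random
from typing import List, Sequence


def _cut(order: List[int], shares: Sequence[float]) -> List[List[int]]:
    """Cut an ordered list of sample indices into consecutive blocks with the given shares."""
    bounds, acc = [0], 0.0
    for share in shares[:-1]:
        acc += share
        bounds.append(round(acc * len(order)))
    bounds.append(len(order))
    return [order[bounds[h]:bounds[h + 1]] for h in range(len(shares))]


def dirichlet_shares(n: int, alpha: float, rng: random.Random) -> List[float]:
    """Random proportions summing to 1 (Dirichlet via normalized Gamma draws)."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(n)]
    total = sum(draws)
    return [d / total for d in draws]


def partition(ages: Sequence[float], n_clients: int, scenario: str,
              rng: random.Random, alpha: float = 0.5) -> List[List[int]]:
    """Return, for each hospital, the indices of its training samples."""
    idx = list(range(len(ages)))
    if scenario in ("NIID-F", "NIID-FQ"):
        idx.sort(key=lambda i: ages[i])   # feature skew: contiguous age ranges per hospital
    else:
        rng.shuffle(idx)                   # every hospital sees the overall distribution
    if scenario in ("NIID-Q", "NIID-FQ"):
        shares = dirichlet_shares(n_clients, alpha, rng)   # quantity skew
    else:
        shares = [1.0 / n_clients] * n_clients              # (roughly) equal sizes
    return _cut(idx, shares)


rng = random.Random(0)
ages = [50 + (i % 30) for i in range(300)]   # synthetic ages, for illustration only
parts = {s: partition(ages, 10, s, rng) for s in ("IID", "NIID-Q", "NIID-F", "NIID-FQ")}
print({s: [len(p) for p in ps] for s, ps in parts.items()})
```

Sorting before cutting is what confines each hospital to a narrow age range in NIID-F and NIID-FQ; note that, with this simple cut, patients of the same age may end up split across two neighboring hospitals, and a small Dirichlet concentration can leave some hospitals with very few samples.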

The four scenarios concern different partitioning schemes for training data. As for the testing data, we consider the case of an external publicly available test set, valid for all the scenarios. The test set follows the overall data distribution (i.e., representative of all age groups) and has 588 instances. The distribution of the training data in the four scenarios is summarized in Table 2 and in Fig. 2.

As for the NIID-F and NIID-FQ scenarios, it is worth underlining that features other than age may also be affected by bias or skewness. However, this contingency still meets the definition of feature distribution skew. Taken together, the four scenarios enable a thorough evaluation of the performance of the two Fed-XAI approaches, based on MLP-NN and TSK-FRBS, respectively.

Evaluation Settings

Typically, the performance evaluation of a model in the federated setting is performed not only in absolute terms, but also comparatively against two baseline settings [15, 73, 74]: local learning and centralized learning. Figure 3 provides a schematic overview of the three learning settings.

Federated learning (FL), local learning (LL), and centralized learning (CL) can be summarized for the dataset under consideration as follows:

-

FL: the hospitals collaborate in obtaining a single federated model without sharing their raw data. The privacy of sensitive data is preserved.

-

LL: each hospital locally learns a model from its private training data. As a consequence, the privacy of sensitive data is preserved, as in the FL case, but there is no collaboration among different hospitals.

-

CL: training data from all hospitals are collected in a single central repository in the server and exploited to learn a global model. CL indeed implies maximum collaboration among hospitals but violates privacy, as private sensitive data are moved from their owners to the server.

A model learned in the FL setting is expected to be more accurate than the ones learned in the LL setting. On the other hand, a model learned in the CL setting can outperform the other models (both LL and FL), in terms of accuracy, because it can rely on the union of the training datasets. The CL approach, however, is not viable in real applications where privacy protection is a mandatory constraint.

Regression Problem and Fed-XAI Models

The PD progress prediction is formulated as a regression problem where the target variable is the total_UPDRS score. In our experiments, we replicated the preprocessing steps adopted in [15], namely, (i) a robust scaling based on the 0.025 and 0.975 quantiles is applied to the input features to remove outliers and clip their distribution to the range [0,1], and (ii) the output variable is normalized to the range [0,1].
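One plausible reading of this preprocessing is sketched below: the 0.025 and 0.975 quantiles of each training feature are mapped to 0 and 1, values outside that range are clipped, and the output is min-max normalized. The exact scaler of [15] is not specified here, so this mapping, the choice of fitting the bounds on training data only, and the helper names are assumptions.

```python
from typing import List, Sequence, Tuple


def quantile(values: Sequence[float], q: float) -> float:
    """Quantile with linear interpolation between order statistics (NumPy's default rule)."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])


def fit_scaler(train_column: Sequence[float], q_low: float, q_high: float) -> Tuple[float, float]:
    """Learn the scaling bounds from training data only."""
    return quantile(train_column, q_low), quantile(train_column, q_high)


def transform(column: Sequence[float], bounds: Tuple[float, float]) -> List[float]:
    """Map [lo, hi] linearly onto [0, 1] and clip values outside that range."""
    lo, hi = bounds
    span = (hi - lo) or 1.0            # guard against constant columns
    return [min(1.0, max(0.0, (v - lo) / span)) for v in column]


train_feature = [float(v) for v in range(101)]             # toy feature column
feature_bounds = fit_scaler(train_feature, 0.025, 0.975)   # robust bounds for an input
print(transform([0.0, 50.0, 100.0], feature_bounds))

train_target = [7.0, 20.0, 33.0, 54.0]                     # toy total_UPDRS values
target_bounds = fit_scaler(train_target, 0.0, 1.0)         # plain min-max for the output
print(transform(train_target, target_bounds))
```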

Unlike in [15], the univariate feature selection procedure is carried out independently for the three learning settings, for a fair comparison of the entire regression pipelines. We select the \(G=4\) best features in terms of Mutual Information (MI) [75] with the target variable. The MI estimation and the subsequent feature selection are performed individually by each participant in the LL setting, based on its local training set, and globally in the CL setting, based on the union of the training sets. The federated feature selection procedure for the FL setting is schematized in Fig. 4, considering the example of the IID scenario. Each participant computes the MI score for all the features and transmits these scores to the server. The server computes the average MI score for each feature and communicates the \(G\) best features to each participant. Thereafter, the FL process starts, considering only the selected subset of features. In the example of Fig. 4 concerning the IID setting, the federated feature selection procedure selects the following features: age, test_time, DFA, and HNR. Note that the feature importance scores of each participant may change depending on the data distribution scenario: thus, the selected features may vary and may generally differ from those of the CL setting.
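The server-side part of the federated feature selection can be sketched as follows. The per-participant MI scores would be estimated locally (for instance with scikit-learn's `mutual_info_regression`, although the estimator used in the study is not stated here); the scores in the example are made up for illustration, and `select_features_federated` is a hypothetical name.

```python
from typing import Dict, List, Sequence


def select_features_federated(local_mi: Sequence[Dict[str, float]], g: int) -> List[str]:
    """Server side: average the MI scores received from the participants and
    return the names of the G features with the highest average score."""
    features = list(local_mi[0])
    avg = {name: sum(scores[name] for scores in local_mi) / len(local_mi) for name in features}
    return sorted(features, key=lambda name: avg[name], reverse=True)[:g]


# Hypothetical MI scores from three participants (illustrative numbers, not from the study).
local_mi = [
    {"age": 0.9, "test_time": 0.20, "DFA": 0.4, "HNR": 0.35, "Jitter(%)": 0.10},
    {"age": 0.8, "test_time": 0.30, "DFA": 0.5, "HNR": 0.30, "Jitter(%)": 0.20},
    {"age": 0.7, "test_time": 0.25, "DFA": 0.3, "HNR": 0.40, "Jitter(%)": 0.15},
]
print(select_features_federated(local_mi, g=4))
```

Only one score per feature leaves each participant, and no raw data are exchanged.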

The choice of the \(G\) value is guided by the following considerations: a reduced number of features generally improves the explainability task, both for post-hoc and interpretable by-design approaches. In addition, TSK-FRBSs struggle to handle high dimensional datasets [76]: the set of candidate rules grows exponentially with the number of features, thus jeopardizing the accuracy and the interpretability of the system. We have verified that \(G=4\) ensures a good generalization capability for both models and an increase in the number of features does not lead to a significant improvement in performance.
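As a worked illustration of this growth, assume five fuzzy sets per feature (the configuration adopted for the TSK-FRBS) and one fuzzy set per feature in each rule antecedent. The number of possible antecedents is then \(5^G\), which for the four selected features and for all 22 features of the dataset gives:

$$\begin{aligned} 5^{4} = 625, \qquad 5^{22} = 2\,384\,185\,791\,015\,625 \approx 2.4 \times 10^{15} \end{aligned}$$

The centralized rule base of 433 rules reported in the experimental results fits within the 625 candidate cells, whereas the full feature set would make the candidate space intractable.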

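In our experiments, for each data distribution scenario, we trained a TSK-FRBS and an MLP-NN according to the FL, LL, and CL settings. The experimental analysis is approached from a twofold perspective: model accuracy and model explainability. As in [15], we assess the accuracy of the predictions obtained by the regression models using two popular metrics, namely the Root Mean Squared Error (RMSE) and the Pearson correlation coefficient (\(r\)). They are defined as follows:

$$\begin{aligned} \text{RMSE} = \sqrt{\frac{1}{N}\sum _{i=1}^{N}\left( y_i - \hat{y}_i\right) ^2} \end{aligned} \tag{7}$$

$$\begin{aligned} r = \frac{\sum _{i=1}^{N}\left( y_i - \bar{y}\right) \left( \hat{y}_i - \hat{\bar{y}}\right) }{\sqrt{\sum _{i=1}^{N}\left( y_i - \bar{y}\right) ^2}\,\sqrt{\sum _{i=1}^{N}\left( \hat{y}_i - \hat{\bar{y}}\right) ^2}} \end{aligned} \tag{8}$$

where \(N\) is the number of samples considered for the evaluation, and \(y_i\) and \(\hat{y}_i\) are the ground truth value and the predicted value associated with the \(i\)-th instance, respectively. Finally, \(\bar{y}\) is the mean of the ground truth values, and \(\hat{\bar{y}}\) is the mean of the predicted values. The goal is to minimize RMSE and maximize \(r\).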
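The two metrics translate directly into code; the sketch below is a plain-Python version with illustrative toy values, and the function names are hypothetical.

```python
from math import sqrt
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error over the N evaluated samples."""
    n = len(y_true)
    return sqrt(sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / n)


def pearson_r(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Pearson correlation between ground truth and predictions."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    mean_pred = sum(y_pred) / n
    cov = sum((y - mean_true) * (p - mean_pred) for y, p in zip(y_true, y_pred))
    sd_true = sqrt(sum((y - mean_true) ** 2 for y in y_true))
    sd_pred = sqrt(sum((p - mean_pred) ** 2 for p in y_pred))
    return cov / (sd_true * sd_pred)


y_true = [20.0, 25.0, 30.0, 35.0]     # toy total_UPDRS values
y_pred = [22.0, 24.0, 29.0, 37.0]
print(rmse(y_true, y_pred), pearson_r(y_true, y_pred))
```

The two metrics can disagree, as in the NIID-F results discussed below: RMSE penalizes the size of the errors, while \(r\) only rewards predictions that vary together with the ground truth, regardless of offset and scale.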

It is worth underlining that the evaluation of an FL system typically covers aspects other than accuracy, such as computation and communication efficiency. These aspects, however, often represent crucial requirements or potential bottlenecks in cross-device FL, which involves many devices with limited computational resources [77]. In a cross-silo FL scenario, such as the one considered in this work, they are generally deemed less critical.

Interpretable By-design Fed-XAI: TSK-FRBS Configuration

As in [15], we employ a first-order TSK-FRBS model (described in Section 3.1). We adopt a strong uniform fuzzy partition on each feature with five triangular fuzzy sets, as shown in Fig. 1. The choice of five fuzzy sets is driven by indications from the specialized literature and by the pursuit of a reasonable trade-off between model complexity and generalization capability. The number of linguistic terms associated with a linguistic variable should be below the limit of \(7\pm 2\) [78]: this has been shown to represent a threshold for human information processing capability, and exceeding it undermines the interpretability of the system [79]. To describe a given rule linguistically, the five fuzzy sets can be labeled with the following linguistic terms: VeryLow, Low, Medium, High, and VeryHigh. Furthermore, it should be noted that different features may be partitioned using a different number of fuzzy sets, e.g., by exploiting domain knowledge to enhance understandability. Although this represents an interesting future development, we did not conduct extensive hyperparameter optimization. Rather, we verified in the CL setting that going beyond 5 fuzzy sets leads to a substantial increase in model complexity without significant improvement in terms of performance metrics. The choice of 5 fuzzy sets therefore ensures high linguistic interpretability while keeping the model complexity under control.
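The strong uniform partition with five triangular fuzzy sets can be sketched as follows, assuming features already scaled to [0, 1]; the helper names are hypothetical. For any input, at most two adjacent sets are active and their membership degrees sum to one, which is what makes the partition strong.

```python
from typing import List

LABELS = ["VeryLow", "Low", "Medium", "High", "VeryHigh"]


def uniform_cores(n_sets: int = 5, lo: float = 0.0, hi: float = 1.0) -> List[float]:
    """Peaks of the triangular fuzzy sets of a uniform partition of [lo, hi] (n_sets >= 2)."""
    step = (hi - lo) / (n_sets - 1)
    return [lo + k * step for k in range(n_sets)]


def memberships(x: float, cores: List[float]) -> List[float]:
    """Degree of x in each triangle; each triangle rises from the previous core
    and falls to the next one, so adjacent degrees sum to 1."""
    step = cores[1] - cores[0]
    return [max(0.0, 1.0 - abs(x - c) / step) for c in cores]


cores = uniform_cores()
degrees = memberships(0.6, cores)
print({label: round(d, 3) for label, d in zip(LABELS, degrees) if d > 0})  # Medium and High only
```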

Post-hoc Explainable Fed-XAI: MLP-NN Configuration

The MLP-NN consists of two hidden layers of 128 neurons each, with the ReLU activation function. The Mean Squared Error (MSE) is adopted as loss function and Adam as optimizer. The minibatch size is set to 64. The overall number of epochs is set to 100 in the CL and LL settings. In the FL setting, we set the number of local epochs and the number of rounds to 5 and 20, respectively. We have not performed a thorough hyperparameter optimization for each learning setting and each data distribution scenario individually; however, we have empirically observed that further increasing the capacity of the models in terms of number of layers, number of neurons, and training epochs does not lead to a significant increase in the generalization capability of the MLP-NN.

Analysis of the Experimental Results

Table 3 presents the RMSEs and \(r\) coefficients obtained by the TSK-FRBS and MLP-NN models for all the learning settings and data distribution scenarios. For the LL setting, we report the average values \(\pm \) standard deviation obtained by the models learned locally in each participant. In the table, we have highlighted in bold the best result in each row, comparing TSK-FRBS and MLP-NN. Notably, in certain cases, the result is obtained from a distribution of values and is expressed in terms of mean and standard deviation: this occurs in the LL setting, when ten local models are evaluated on the test set, and, regardless of the learning setting, when performance metrics are evaluated on the ten training sets of the hospitals. In such cases, we highlighted in bold the best result only if the difference in metric values between TSK-FRBS and MLP-NN is statistically significant. Statistical significance has been assessed through a pairwise Wilcoxon signed-rank test [80] at significance level \(\alpha =0.05\). In general, federated models outperform their local counterparts, both in terms of RMSE and \(r\). The benefit of FL over the LL setting is particularly evident for the TSK-FRBS, especially in the non-i.i.d. settings.
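For paired samples such as the per-hospital metric values of TSK-FRBS and MLP-NN, the exact signed-rank test can be sketched by enumerating all \(2^n\) sign assignments, which is cheap for \(n = 10\) hospitals. The sketch assumes no zero or tied differences and is only illustrative; in practice, a library routine such as `scipy.stats.wilcoxon` would typically be used. The function name and the paired values are hypothetical.

```python
from itertools import product
from typing import Sequence, Tuple


def wilcoxon_exact(x: Sequence[float], y: Sequence[float]) -> Tuple[int, float]:
    """Two-sided exact Wilcoxon signed-rank test on paired samples.
    Simplifying assumptions: no zero differences and no ties among |differences|."""
    d = [a - b for a, b in zip(x, y)]
    if any(v == 0 for v in d) or len({abs(v) for v in d}) < len(d):
        raise ValueError("zero or tied differences are not handled in this sketch")
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0] * n
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    total = n * (n + 1) // 2
    stat = min(w_plus, total - w_plus)
    # Under H0 each rank is positive or negative with probability 1/2, independently:
    # count the sign patterns at least as extreme as the observed one.
    extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(rank for rank, s in zip(range(1, n + 1), signs) if s)
        if min(w, total - w) <= stat:
            extreme += 1
    return stat, extreme / 2 ** n


# Toy paired values for ten hospitals (illustrative only).
stat, p_value = wilcoxon_exact([i + 0.5 for i in range(10)], [0.0] * 10)
print(stat, p_value, p_value < 0.05)
```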

Federated TSK-FRBS and federated MLP-NN achieve comparable performance. The most noticeable difference occurs in the NIID-F setting, in which the two metrics provide different insights: on the one hand, RMSE indicates that the deviation of predictions from true values is lower for the MLP-NN (10.268) than for the TSK-FRBS (16.848); on the other hand, predictions and true values are more correlated for the TSK-FRBS (\(r=0.461\)) than for the MLP-NN (\(r=0.205\)).

The non-i.i.d. setting with quantity skew (NIID-Q) does not particularly harm the performance of the models: RMSE and \(r\) values are comparable to those of the IID setting, for both TSK-FRBS and MLP-NN. In the case of TSK-FRBS, the simple average of the performance measured on the training sets is poor for the LL setting, where some models likely suffer from low data availability. The aggregation strategy based on the rule weight (defined as a combination of confidence and support) mitigates this unfavorable situation in the FL setting.

Scenarios with feature distribution skew (NIID-F and NIID-FQ) turn out to be the most challenging for both models. The generalization capability of models built in the LL setting is rather poor, since each of them is exposed to data from a limited age range during training: both TSK-FRBS and MLP-NN fit the training data closely (resulting in low RMSE values and an \(r\) coefficient around 0.9 on the training sets) but fail to properly predict the total_UPDRS score of the test instances (resulting in high RMSE and low \(r\)). The discrepancy in RMSE and \(r\) values between training and test sets is noticeable in the LL setting, whereas it is limited or negligible in the FL setting.

It is worth highlighting that the performance in the FL setting is always worse than that obtained in the CL setting, both for TSK-FRBS and MLP-NN. In general, the centralized MLP-NN achieves the best performance, with a slight improvement in terms of RMSE and \(r\) over the centralized TSK-FRBS. The superior performance of the centralized models can be attributed to the use of all data for conventional training. However, centralized training is deemed unfeasible when privacy preservation represents a critical requirement.

The results in Table 3 provide an aggregate view of the LL setting. A better understanding of the outcomes can be gained by analyzing the specific performance obtained in each hospital: such detailed results are illustrated in Figs. 5 and 6 through the empirical cumulative distribution function (ECDF) for the RMSE metric.

For both models, the ECDF is computed on the per-hospital differences between the RMSE achieved in the FL setting and the 10 RMSE values obtained by the locally learned models in the LL setting: each plot, therefore, consists of 10 points. The plot can be interpreted as follows: if a point lies in the negative half-plane (negative RMSE difference), the RMSE of the FL model is lower (and therefore the FL model is better) than that of the corresponding LL model. The fine-grained analysis shown in Figs. 5 and 6 confirms that the FL setting generally outperforms the LL setting.
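The quantities plotted in Figs. 5 and 6 can be sketched as follows: the per-hospital differences RMSE(FL) minus RMSE(LL) and their empirical CDF. The RMSE values in the example are hypothetical, and so are the function names.

```python
from typing import List, Sequence, Tuple


def ecdf(values: Sequence[float]) -> List[Tuple[float, float]]:
    """Empirical CDF step points: the i-th smallest value paired with i / n."""
    xs = sorted(values)
    n = len(xs)
    return [(v, (i + 1) / n) for i, v in enumerate(xs)]


def rmse_differences(rmse_fl: Sequence[float], rmse_ll: Sequence[float]) -> List[float]:
    """Per-hospital RMSE(FL) - RMSE(LL); negative entries mean the FL model is better."""
    return [fl - ll for fl, ll in zip(rmse_fl, rmse_ll)]


# Hypothetical RMSE values for four hospitals (illustrative only).
diffs = rmse_differences([0.20, 0.20, 0.20, 0.20], [0.25, 0.18, 0.40, 0.30])
points = ecdf(diffs)
share_fl_better = sum(d < 0 for d in diffs) / len(diffs)
print(points, share_fl_better)
```

The ECDF value at zero equals the share of hospitals for which the federated model is more accurate than the local one.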

Finally, we report on the overall complexity of the models, which will be further discussed in Section 6. In the case of the MLP-NN, the network architecture is fixed: the complexity, intended as the number of parameters, does not change with the learning setting. In the case of TSK-FRBS, the complexity can be assessed in terms of the number of rules in the rule base. Figure 7 shows the complexity for each data distribution scenario and each learning setting.

The number of rules of the federated TSK-FRBS never exceeds, by construction, that of the centralized TSK-FRBS, which in any case is limited (433 rules). As expected, the federated TSK-FRBS is more complex than locally learned ones. In the IID and NIID-Q scenarios (in which each hospital has data representative of the entire distribution), the 10 local models have approximately half the number of rules of the federated ones, indicating that common antecedents are often found in the rule aggregation phase. The complexity of locally learned TSK-FRBSs in the presence of feature distribution skew (NIID-F and NIID-FQ) is significantly lower than the one in IID setting, and the gain in accuracy provided by the federated models comes at a cost in terms of number of rules, which is approximately five times higher.

Explainability Analysis

The extensive adoption of AI systems in healthcare depends not only on achieving adequate levels of accuracy, but also on how trustworthy they are perceived to be. In particular, the ability to explain how the outcomes have been produced by the models is increasingly required and represents the main driver of XAI. This section discusses the transparency of AI systems, focusing on how it is defined for the two Fed-XAI approaches analyzed in this paper. First, we analyze the explainability of the MLP-NN model, for which SHAP is used as a post-hoc method. Then, we discuss the interpretability by design of the TSK-FRBS. We consider models built in a federated fashion according to the IID data partitioning scheme: the discussion of the outcomes is limited to this case, but the pipeline for explainability analysis can easily be replicated for any data distribution scenario. Furthermore, we recall that all the input variables and the output variable are normalized to the unit interval \([0,1]\): the considerations in this section refer to the predicted values before the inverse transformation.

Post-hoc Explainability of MLP-NN

In this section, we discuss the explainability of the MLP-NN after the application of the model-agnostic post-hoc method SHAP. We recall that, given an input instance, the Shapley value associated with each feature represents the contribution of that feature to the predicted value. In this sense, for each prediction, SHAP explains why the model produces a particular output.

We adopt the Federated SHAP approach proposed in [21] and introduced in Section 3.2.2. KernelSHAP is employed in each hospital to estimate the Shapley values considering the full local training set as background dataset.

It is worth underlining a first crucial aspect concerning the explainability of the MLP-NN: unlike interpretable-by-design Fed-XAI approaches, the post-hoc method affects the overall efficiency of the system, from both a computation and a communication point of view. As for the former, estimating the Shapley values with KernelSHAP is time-consuming, and the runtime increases with the number of features and the size of the adopted background dataset [70]. As for the latter, Federated SHAP introduces a communication overhead, as the Shapley values need to be transmitted by the participants for central aggregation. Conversely, the interpretable-by-design TSK-FRBS has no computation or communication overhead for generating the explanations.

MLP-NN: Global Insights

Globally, an MLP-NN is generally considered “opaque,” due to the presence of several layers of non-linear information processing. In our case, the structure consists of two hidden layers with 128 neurons each, resulting in 17,281 trainable parameters. The high number of parameters and the relations among them make it very hard to provide a global explanation of the model. Thus, indirect methods based, for instance, on feature importance are typically used to provide global explainability information [3].
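The figure of 17,281 parameters can be checked by counting weights and biases layer by layer, assuming \(G=4\) input features and a single output neuron: \(4 \cdot 128 + 128 = 640\), \(128 \cdot 128 + 128 = 16{,}512\), and \(128 \cdot 1 + 1 = 129\). A small helper (hypothetical name) makes the count explicit:

```python
from typing import Sequence


def mlp_param_count(layer_sizes: Sequence[int]) -> int:
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Assumed layout: G = 4 selected inputs, two hidden layers of 128 units, one output.
print(mlp_param_count([4, 128, 128, 1]))  # 17281
```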

As Shapley values represent additive feature importance scores for each particular prediction, the overall feature importance can be assessed by computing the average of the absolute Shapley values across the data [70]: the larger the average absolute contribution of a feature, the greater its importance. The assessment of the feature importance of a model is typically independent of the test data. In the case of the MLP-NN, it can be estimated as follows. First, each hospital \(h\) evaluates the importance \(I_j^{(h)}\) of the feature \(j\) on its training data:

$$\begin{aligned} I_j^{(h)} = \frac{1}{N_h}\sum _{i=1}^{N_h}\left| \phi _j^{(i,h)}\right| \end{aligned} \tag{9}$$

where \(\phi _j^{(i,h)}\) is the Shapley value for a feature \(j\) and a training instance \(i\) at hospital \(h\), and \(N_h\) is the size of the training set at hospital \(h\). Then, each client can transmit the locally computed feature importance to the server, and the overall feature importance for the federated model can be computed by the server as follows:

$$\begin{aligned} I_j = \sum _{h=1}^{H}\frac{N_h}{N}\, I_j^{(h)} \end{aligned} \tag{10}$$

where \(H\) is the number of hospitals and \(N = \sum _{h=1}^{H}N_h\).
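The two steps can be sketched as follows, assuming that the server weights each hospital by \(N_h/N\) (consistent with the definition \(N = \sum_h N_h\) in the text); the function names and the toy Shapley values are hypothetical. With this weighting, the server obtains the same result as averaging \(|\phi|\) over the union of the training sets, without receiving the individual Shapley values.

```python
from typing import List, Sequence


def local_importance(local_phis: Sequence[Sequence[float]]) -> List[float]:
    """Hospital side: mean absolute Shapley value of each feature over the N_h local
    training instances (local_phis[i][j] = Shapley value of feature j for instance i)."""
    n_h = len(local_phis)
    return [sum(abs(row[j]) for row in local_phis) / n_h for j in range(len(local_phis[0]))]


def federated_importance(local_imps: Sequence[Sequence[float]],
                         local_sizes: Sequence[int]) -> List[float]:
    """Server side: average of the local importances weighted by N_h / N."""
    n = sum(local_sizes)
    return [sum(n_h * imp[j] for imp, n_h in zip(local_imps, local_sizes)) / n
            for j in range(len(local_imps[0]))]


# Toy Shapley values (two features) at two hospitals of different size.
phis_a = [[1.0, -2.0], [3.0, 0.0]]
phis_b = [[-1.0, 4.0]]
imps = [local_importance(phis_a), local_importance(phis_b)]
print(federated_importance(imps, [len(phis_a), len(phis_b)]))
```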

Figure 8 shows the global feature importance scores for the MLP-NN, as per (10): in the IID setting, the most relevant feature is age, while test_time seems to be less relevant than vocal features, namely DFA and HNR, which in turn are of similar relevance.

MLP-NN: Local Insights

Figure 9 reports the SHAP values for two instances of the test set; they correspond to two cases where both models (MLP-NN and TSK-FRBS) obtain high and low errors, respectively. The absolute error (AE) made by the MLP-NN is around 0.55 for instance #2496 and around 0 for instance #2323.

The Shapley values reveal, a posteriori, the relevance of the corresponding features in the prediction performed by the model: as expected, they differ across instances. In the former case (Fig. 9a), age has a small negative influence, while the other features have a large and positive impact on the output value. In the latter case (Fig. 9b), the most influential feature is test_time, which has a negative impact on the output. Notably, for both instances, the SHAP values of the individual features are evaluated with respect to the same baseline value \(\phi _0 = 0.46\).

Interpretability By-design of TSK-FRBS

TSK-FRBSs are often considered “light gray box” models [81]: their operation is highly interpretable, since they consist of a collection of linguistic, fuzzy, if-then rules. However, in the first-order TSK-FRBSs used in this paper, the adoption of a linear model in the consequent part, which certainly improves accuracy with respect to the zero-order TSK-FRBS, makes the interpretation of a single rule less intuitive than in the zero-order counterpart.

A substantial difference with respect to the MLP-NN model analyzed in Section 6.1 is that interpretability information comes without additional overhead in terms of computation and communication, unlike the calculation of Shapley values for the MLP-NN model.

The following analysis aims to characterize both global and local interpretability of TSK-FRBS learnt in a federated fashion.

TSK-FRBS: Global Insights

The global interpretability of TSK-FRBSs can be quantitatively assessed by measuring the complexity of the system in terms of the number of rules and/or parameters. Less complex models, i.e., those with fewer rules, can be generally considered more interpretable [66]. Figure 7 reports the number of rules for each learning setting and each data partitioning scenario. As underlined in Section 5 for the IID case, the number of rules in the FL setting is rather limited (i.e., 397) and just double that in the LL case (despite the presence of 10 participants).

The model is therefore comprehensively described by the rule base, which can be represented in the intelligible form reported in the following.

With four input variables, the number of parameters of a rule in the TSK-FRBS is the sum of four parameters for the antecedent part (one for each input variable, identifying a fuzzy set of the a priori partition) and five parameters for the linear model of the consequent part. Overall, the considered TSK-FRBS has 3573 parameters.
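The overall count can be reproduced from the 397 rules of the federated TSK-FRBS in the IID scenario, assuming that the five consequent parameters are the four input coefficients plus an intercept:

$$\begin{aligned} \underbrace{4}_{\text{antecedent}} + \underbrace{5}_{\text{consequent}} = 9 \ \text{parameters per rule}, \qquad 397 \times 9 = 3573 \end{aligned}$$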

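In the case of TSK-FRBS, a measure of feature importance can be obtained by averaging the absolute values of the coefficients of the linear models in the rule base. Formally, the importance \(I_j\) of the feature \(j\) is evaluated as follows:

$$\begin{aligned} I_j = \frac{1}{R}\sum _{r=1}^{R}\left| \gamma _{r,j}\right| \end{aligned} \tag{11}$$

where \(\gamma _{r,j}\) is the coefficient for the variable \(j\) in the rule \(r\), and \(R\) is the number of rules in the rule base.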
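The TSK-FRBS importance measure amounts to a column-wise mean of absolute coefficients over the rule base. In the sketch below, the coefficient layout (intercept first, then one coefficient per input feature) and the function name are assumptions, and the two rules are toy examples.

```python
from typing import List, Sequence


def tsk_feature_importance(gammas: Sequence[Sequence[float]]) -> List[float]:
    """Mean absolute consequent coefficient of each input feature over the R rules.
    Assumed layout: gammas[r] = [gamma_r0 (intercept), gamma_r1, ..., gamma_rM];
    the intercept carries no feature information and is skipped."""
    n_rules = len(gammas)
    n_features = len(gammas[0]) - 1
    return [sum(abs(rule[j]) for rule in gammas) / n_rules for j in range(1, n_features + 1)]


# Two toy rules over the features (age, test_time): coefficients are illustrative.
rule_coefficients = [[0.1, 1.0, -2.0], [0.5, -3.0, 0.0]]
print(tsk_feature_importance(rule_coefficients))  # [2.0, 1.0]
```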

Figure 10 suggests that feature importance computed for the TSK-FRBS model is consistent with the one computed for the MLP-NN by the SHAP method in the IID setting. Age and test_time are identified as the most and the least relevant features, respectively. Furthermore, the importance of HNR and DFA is similar, consistently with what is observed for the MLP-NN.

A direct comparison of the importance values between Figs. 8 (MLP-NN) and 10 (TSK-FRBS) is not meaningful. Indeed, for the MLP-NN, Fig. 8 shows the average absolute contribution of each feature with respect to the baseline value, whereas for the TSK-FRBS, Fig. 10 shows the average absolute value of the coefficients of the linear models in the rule base.

It is worth underlining that the model-agnostic nature of SHAP can be exploited to compute post-hoc explanations for the TSK-FRBS model as well. We can thus directly compare two measures of feature importance for the TSK-FRBS. The first averages the absolute values of the coefficients of the linear models in the rule base (Fig. 10). The second averages the absolute Shapley values across the data, as in Eqs. (9) and (10). The latter approach results in the scores reported in Fig. 11.
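Because SHAP is model-agnostic, the same computation applies to any predictor, including a TSK-FRBS. With only a handful of features, Shapley values can even be computed exactly by enumerating all coalitions, rather than approximated as SHAP implementations typically do.

The brute-force sketch below uses the interventional definition: features outside a coalition keep their values from a background dataset. It then averages absolute values over instances to obtain a global score. The function names are hypothetical, and this is an illustration of the technique, not the paper's implementation. For a linear model \(f(\mathbf{x}) = \mathbf{w}^\top \mathbf{x} + b\), the result reduces to \(w_j (x_j - \bar{x}_j)\), where \(\bar{x}_j\) is the background mean. This gives a convenient sanity check.

```python
import itertools
import math

import numpy as np


def exact_shapley(f, x, background):
    """Exact interventional Shapley values of model f at instance x.

    f: callable mapping an (n, F) array to n predictions.
    background: (m, F) reference samples; features in coalition S are set
    to x, the others keep their background values, and f is averaged.
    """
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    n_features = x.shape[0]

    def value(coalition):
        z = background.copy()
        idx = list(coalition)
        if idx:
            z[:, idx] = x[idx]
        return float(np.mean(f(z)))

    phi = np.zeros(n_features)
    for j in range(n_features):
        others = [k for k in range(n_features) if k != j]
        for size in range(n_features):
            # Shapley weight |S|! (F - |S| - 1)! / F!
            weight = (math.factorial(size) * math.factorial(n_features - size - 1)
                      / math.factorial(n_features))
            for coalition in itertools.combinations(others, size):
                phi[j] += weight * (value(coalition + (j,)) - value(coalition))
    return phi


def mean_abs_shap(f, X, background):
    """Global importance: mean absolute Shapley value per feature over X."""
    return np.mean(np.abs([exact_shapley(f, x, background) for x in X]), axis=0)
```

The number of model evaluations grows exponentially with the number of features. This is why practical SHAP tools rely on sampling-based or model-specific approximations.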

It is interesting to note that age and test_time are still identified as the most and least important features. This indicates a general agreement among the results obtained with different importance attribution methods in the IID scenario. On the other hand, there is a discrepancy between the relative values of DFA and HNR, possibly because the two approaches estimate importance according to different criteria. Furthermore, the feature importance scores computed for TSK-FRBS using SHAP are consistent with those computed for MLP-NN and shown in Fig. 8, also in terms of range of values.

The identification of age as the most important feature, regardless of the model and the attribution method adopted, is not surprising. First, in the IID scenario, each hospital holds data covering all age ranges, and therefore the feature exhibits high variability. Second, this finding is consistent with the specialized literature, which indicates that age is the best predictor of the progression of Parkinson’s disease and the most important risk factor for the development of the disease [82].

TSK-FRBS: Local Insights

The interpretability of the TSK-FRBS derives from its structure and the type of inference strategy we use. Indeed, for any given input instance, the predicted output depends on a single rule: the antecedent part isolates a region (a hypercube) of the input space, where its consequent part defines a local linear model. The coefficients of this model indicate how the input features contribute to form the TSK-FRBS output in that region: a positive (negative) coefficient for a given feature indicates that the output increases (decreases) with that feature. Notably, all instances belonging to that region will refer to the same linear model.

Given an instance \(\textbf{x}_{i}\) and the rule \(r\) with the maximum activation strength for \(\textbf{x}_{i}\), the actual contribution of feature \(j\) to the prediction \(\hat{y}_{i}\) is obtained as \(\gamma_{r,j}\cdot x_{i,j}\). In other words, contributions are obtained as the element-wise product between the coefficients of the linear model and the feature values of an instance. Figure 12 reports both the feature contributions and the coefficients of the linear model of the TSK-FRBS for the same two instances of the test set analyzed in the case of the MLP-NN (instances #2496 and #2323).
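The local inference and explanation step can be sketched as follows. The sketch makes three assumptions that are not specified in this section:

- features are normalized to [0, 1];
- each feature is partitioned a priori into three uniform triangular fuzzy sets;
- the activation strength of a rule is the product of its membership degrees (product t-norm).

The paper's actual partitions and t-norm may differ, and all names are hypothetical. Given the rule with maximum activation, the prediction is its linear model, and the contributions are \(\gamma_{r,j}\cdot x_{i,j}\).

```python
import numpy as np


def triangular_memberships(v, n_sets=3):
    """Membership degrees of scalar v in [0, 1] to a uniform triangular
    partition with n_sets fuzzy sets (assumed a-priori partitioning)."""
    centers = np.linspace(0.0, 1.0, n_sets)
    width = centers[1] - centers[0]
    return np.clip(1.0 - np.abs(v - centers) / width, 0.0, 1.0)


def explain_instance(x, antecedents, gamma0, gamma, n_sets=3):
    """Return (winning rule, prediction, per-feature contributions).

    antecedents: (R, F) ints, fuzzy-set index used by rule r for feature j.
    gamma0: (R,) intercepts; gamma: (R, F) consequent coefficients.
    """
    mu = np.array([triangular_memberships(v, n_sets) for v in x])  # (F, n_sets)
    # mu[j, antecedents[r, j]] for every rule r and feature j -> (R, F)
    degrees = mu[np.arange(len(x)), antecedents]
    strengths = np.prod(degrees, axis=1)  # product t-norm (assumption)
    r = int(np.argmax(strengths))         # rule with maximum activation
    contributions = gamma[r] * x          # element-wise gamma_{r,j} * x_{i,j}
    y_hat = gamma0[r] + contributions.sum()
    return r, y_hat, contributions
```

Because every \(x_{i,j}\) lies in [0, 1], each contribution is bounded in magnitude by the corresponding coefficient. This is the effect visible in Fig. 12, where contributions are smaller than the coefficients.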

TSK-FRBS local explainability: coefficients of the linear model and actual feature contributions for two instances in the test set. The absolute error for each instance is reported above the relevant plot along with the ID of the rule considered for the prediction and the term \(\gamma _0\) of the linear model

The two instances activate different rules; as a consequence, their contributions are significantly different. Furthermore, the contributions are generally smaller in magnitude than the coefficients. This is because each feature is normalized to the unit interval, so \(|\gamma_{r,j}\cdot x_{i,j}| \le |\gamma_{r,j}|\).

Local Explanations: Comparison Between TSK-FRBS and MLP-NN

A relevant observation emerges from comparing the bar plots of Figs. 9 and 12. Although the absolute errors are similar (we have verified that the predicted values are similar as well), the two models “reason” differently and assign different, sometimes diametrically opposed, contributions to the features. This is partly because the term \(\gamma _0\) differs from the baseline value of SHAP.
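The role of \(\gamma_0\) versus the SHAP baseline can be made explicit by writing both explanations as additive decompositions of a prediction. For the rule \(r\) activated by \(\mathbf{x}_i\), the TSK-FRBS output splits as in the left equation. SHAP's local accuracy property gives the right equation, where \(\phi_0\) is the SHAP baseline (the expected model output over the background data) and \(\phi_{i,j}\) is the Shapley value of feature \(j\). The symbol \(\phi\) is our notation.

\[
\hat{y}_i^{\,\text{TSK}} = \gamma_{r,0} + \sum_{j=1}^{F} \gamma_{r,j}\, x_{i,j},
\qquad
\hat{y}_i^{\,\text{MLP}} = \phi_0 + \sum_{j=1}^{F} \phi_{i,j}
\]

Even when \(\hat{y}_i^{\,\text{TSK}} \approx \hat{y}_i^{\,\text{MLP}}\), in general \(\gamma_{r,0} \neq \phi_0\). The amount left to be distributed among the features, and hence the individual contributions, can therefore differ substantially.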

To better examine this aspect, we focus on a set of instances and analyze the explanations provided by the two models. Specifically, we consider the instance resulting in a high AE for both models (ID #2496) and all the instances of the test set that activate the same rule (namely, \(R_{223} \)) of the TSK-FRBS. In this way, we isolate four instances (ID #827, ID #2266, ID #2496, ID #5146) which are inevitably close to each other in the input space.

The local interpretability of the TSK-FRBS is straightforward: predictions are obtained by applying the following rule:

In the case of MLP-NN, the prediction is explained through the Shapley values: Fig. 13 shows the contributions for the MLP-NN considering the four instances of the test set that activate rule \(R_{233} \) of the TSK-FRBS system.

The Shapley values for the MLP-NN clearly vary greatly even though the instances are fairly close in the input space: for example, age has a negative contribution for ID #827 and a positive one for ID #5146. Consequently, no correspondence can be found between the explanations offered by SHAP for the MLP-NN and the interpretation of the linear model of the TSK-FRBS. For instance, SHAP always assigns a positive contribution to DFA, whereas the corresponding coefficient in the TSK-FRBS rule is negative.

As noted above, the divergence in explanations between TSK-FRBS and MLP-NN in the IID scenario does not correspond to a divergence in output values. We have verified that the predicted outputs are similar (and indeed the reported AE values are similar): different models that achieve similar results lead to different explanations from a local point of view. This should not be considered odd, since our analysis involves both different feature importance methods (inherent and post-hoc) and different models (TSK-FRBS and MLP-NN, respectively). Indeed, it has been empirically shown that feature importance scores may suffer from three problems [83]:

- numerical instability, when model, instance and attribution method are the same;
- solution diversity, when different models are considered on the same instance with the same attribution method;
- the disagreement problem, when different attribution methods are considered on the same instance and the same model.

These scenarios can be traced back to the so-called Rashomon effect [84], whereby for a given dataset there may exist many models with equally good performance but different solution strategies.
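The Rashomon effect can be reproduced in a deliberately extreme toy setting. When two features are perfectly correlated, different linear models produce identical predictions on the data but attribute them to the features differently. The data and weights below are invented for illustration and are unrelated to the Parkinson's dataset.

```python
import numpy as np

# Toy data (invented): feature 1 is an exact copy of feature 0.
X = np.array([[0.1, 0.1], [0.4, 0.4], [0.7, 0.7]])

# Two linear models that both reproduce y = 2 * x0 on these data.
w_a = np.array([2.0, 0.0])  # relies entirely on feature 0
w_b = np.array([1.0, 1.0])  # splits the effect across both features

pred_a = X @ w_a
pred_b = X @ w_b

# Coefficient-based local contributions for the second instance.
contrib_a = w_a * X[1]
contrib_b = w_b * X[1]

print(np.allclose(pred_a, pred_b))  # True: identical predictions
print(contrib_a, contrib_b)         # yet different explanations
```

Real data rarely contain exact duplicates, but strongly correlated clinical and acoustic features produce the same ambiguity in a milder form.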

Consistency of Explanations

As mentioned in Section 2.3, the property of consistency in the FL setting, introduced in [60], is met if different participants receive the same explanation of an output obtained with the federated model given the same data instance.

Evidently, for all the different operative scenarios discussed in this paper, the explanations for the federated TSK-FRBS are consistent: any local explanation obtained in a given hospital depends only on the input instance and on the activated rule of the federated model.

Conversely, the approach proposed in [21], adopted in this work as a post-hoc technique for MLP-NN explainability, ensures the consistency of the explanations only when the test instances can be shared with all the clients. The local explanation obtained in a given hospital, in fact, depends not only on the input instance and the federated MLP-NN, but also on the background dataset used for estimating the Shapley values. Since each hospital has its own private dataset, the Shapley values for the same input instance may, in general, differ from one hospital to another. The Federated SHAP approach obtains a single explanation for each test instance by averaging the local explanations from different hospitals. On the one hand, this ensures that a unique and unambiguous explanation is obtained. On the other hand, it requires every test instance to be shared with the other hospitals at inference time, which may be problematic due to privacy and/or latency concerns.
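The dependence on the background set, and the averaging step of Federated SHAP, can be illustrated with a linear model. For such a model, interventional Shapley values have the closed form \(\phi_j = w_j (x_j - \bar{b}_j)\), with \(\bar{b}_j\) the background mean of feature \(j\). This is a simplified stand-in for the MLP-NN. The plain unweighted mean across hospitals and all names are assumptions, not necessarily the exact aggregation used in [21].

```python
import numpy as np


def linear_shap(w, x, background):
    """Exact interventional Shapley values of f(z) = w @ z + b at x,
    using `background` (n, F) as the reference distribution."""
    return w * (x - background.mean(axis=0))


def federated_shap(w, x, backgrounds):
    """Each hospital explains x against its own private background; only
    the resulting Shapley vectors are averaged (unweighted mean, assumed)."""
    local = np.array([linear_shap(w, x, bg) for bg in backgrounds])
    return local, local.mean(axis=0)


# Two hypothetical hospitals whose backgrounds differ on feature 0.
w = np.array([1.0, -2.0])
x = np.array([0.5, 0.5])
backgrounds = [np.array([[0.2, 0.4], [0.4, 0.6]]),
               np.array([[0.6, 0.5], [0.8, 0.5]])]
local, averaged = federated_shap(w, x, backgrounds)
print(local)     # feature 0 has opposite signs at the two hospitals
print(averaged)
```

The example shows both sides of the trade-off discussed above. Each hospital's local explanation depends on its private background, to the point of a sign flip. The averaged explanation is unique, but it can only be computed if every hospital evaluates the same instance.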

In the following, we quantitatively assess the misalignment of client-side explanations obtained with SHAP for the MLP-NN, before applying the averaging operation that characterizes Federated SHAP. We consider an input test instance (ID #2496, also discussed in the previous examples) and suppose that it is available at every hospital. Specifically, we evaluate how the prediction for this instance would be explained at different clients if sharing Shapley values for averaging were precluded for privacy reasons.

Figure 14 reports the Shapley values for each hospital and each feature in the IID scenario.

The bar plot suggests that the explanations are consistent in the IID scenario, albeit with some slight variability, which is reasonable since the background datasets are identically distributed. Indeed, the explanations are in line with the average pattern reported in Fig. 14c.

The variability among client-side explanations turns out to be substantial in non-i.i.d. scenarios. Figure 15 shows the Shapley values for the same instance for each hospital and each feature when considering the NIID-FQ scenario. We recall that such scenario entails both a quantity skew and a feature skew on the age feature. Furthermore, it is worth mentioning that the feature selection process is part of the FL pipeline. As a consequence, the set of selected features depends on the data distribution scenario: this explains the presence of different features compared to the IID case, with Jitter(Abs) replacing HNR.

Figure 15 suggests that the misalignment of explanations is severe, especially for the contribution assigned to the age feature by different hospitals: the corresponding Shapley value goes from negative in hospitals with younger patients to positive in hospitals with older patients. Probing the global model with background data from heterogeneous distributions also leads to differences in the importance assigned to the DFA feature. In summary, the same instance, analyzed with the same model, is explained in very different ways at different hospitals. Thus, the property of consistency among explanations is not achieved.
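One simple way to quantify such misalignment starts from the matrix of Shapley values of one instance computed at each hospital. For each feature, we can measure the spread across hospitals and check whether the sign of the contribution flips. The metric below is a hypothetical illustration, not the measure used in the paper, and any numbers passed to it in a check would be invented.

```python
import numpy as np


def explanation_misalignment(phi, feature_names):
    """Per-feature dispersion of client-side explanations.

    phi: (H, F) Shapley values of one instance computed at H hospitals.
    """
    report = {}
    for j, name in enumerate(feature_names):
        col = phi[:, j]
        report[name] = {
            "spread": float(col.max() - col.min()),
            "std": float(col.std()),
            # True if some hospitals see a positive and others a negative effect
            "sign_flip": bool((col > 0).any() and (col < 0).any()),
        }
    return report


def is_consistent(phi, atol=1e-8):
    """Consistency in the sense of [60]: every hospital gives the same explanation."""
    return bool(np.allclose(phi, phi[0], atol=atol))
```

A sign flip is arguably the most damaging form of misalignment for a clinician, since it reverses the direction of the stated effect rather than just its magnitude.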

The consistency of SHAP explanations in the FL setting can be achieved by avoiding the use of private training data as background. Chen et al. [63], for example, propose to use synthetic background datasets generated by sampling from a Gaussian distribution whose parameters are estimated on the server side based on the contributions of all participants. However, such explanations may differ from those obtained using actual training data. Ensuring both consistency and accuracy of explanations, the latter intended as agreement with the centralized case, is one of the interesting future developments of this work.
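A background set in the spirit of Chen et al. [63] can be sketched as follows. Each hospital shares only aggregate statistics (sample count, per-feature sum and sum of squares). The server derives a Gaussian from them, draws a synthetic background, and can then distribute the same set to all hospitals, so every client explains instances against an identical reference. For simplicity, the sketch assumes a diagonal Gaussian (independent features); the cited work may estimate a full covariance. The function names are hypothetical.

```python
import numpy as np


def client_stats(X):
    """Aggregate statistics a hospital shares instead of raw records."""
    return X.shape[0], X.sum(axis=0), (X ** 2).sum(axis=0)


def server_gaussian(stats):
    """Pooled per-feature mean and (population) variance from client stats."""
    n = sum(s[0] for s in stats)
    s1 = sum(s[1] for s in stats)
    s2 = sum(s[2] for s in stats)
    mean = s1 / n
    var = np.maximum(s2 / n - mean ** 2, 0.0)  # clip tiny negative round-off
    return mean, var


def synthetic_background(mean, var, m, seed=0):
    """Draw m synthetic background samples from the diagonal Gaussian."""
    rng = np.random.default_rng(seed)
    return rng.normal(mean, np.sqrt(var), size=(m, mean.shape[0]))
```

The pooled statistics match those of the concatenated data, so the synthetic background approximates the global feature distribution without any hospital revealing individual records. Dependencies between features are lost under the diagonal assumption, which is one reason such explanations may differ from those computed on real training data.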

Conclusions

In this paper, we have addressed the problem of developing a trustworthy AI system for a healthcare application, with a specific focus on a Parkinson’s disease (PD) progression prediction task. For this purpose, we designed two approaches that simultaneously meet the requirements of data privacy preservation and explainability, which are usually deemed crucial for enabling trustworthiness. The first approach adopts a Takagi-Sugeno-Kang Fuzzy Rule-Based System (TSK-FRBS), which is interpretable by design. TSK-FRBSs make use of fuzzy sets as information granules, thus guaranteeing high semantic interpretability. The second approach employs a Multi-Layer Perceptron Neural Network (MLP-NN), which, as a “black-box” model, requires the adoption of a post-hoc technique for explainability purposes. For this purpose, we have adopted SHAP, which is considered a state-of-the-art feature importance method for explainability.

For both approaches, the federated learning (FL) paradigm has been exploited as it inherently enables privacy preservation during global model training procedures in decentralized settings. In detail, we devised an experimental setting assuming that sensitive data originate from ten hospitals and cannot be shared for privacy reasons. In order to cover several real-world situations, four (one i.i.d. and three non-i.i.d.) scenarios with different degrees of heterogeneity are simulated.

The critical analysis of the two approaches has concerned the following aspects:

- (i) the accuracy of the models, in terms of Root Mean Squared Error (RMSE) and Pearson correlation coefficient \(r\), depending on the learning setting (federated learning, local learning, centralized learning) and the four data distribution scenarios;
- (ii) the explainability of the models at global and local levels.

The key findings can be summarized as follows. From the perspective of performance metrics, the federated models outperform the ones learned using only local data, both in terms of RMSE and \(r\) values, thus confirming the benefits of the federation. This is particularly evident in the non-i.i.d. settings. Also, results suggest that federated TSK-FRBS and federated MLP-NN achieve comparable performance within the context of the considered case study.

As regards explainability, we have presented the results of the post-hoc explainability of MLP-NN and of the by-design interpretability of TSK-FRBS, also providing a comparative analysis of the two approaches. It turns out that the two approaches can lead to different local explanations, even if the underlying models achieve similar results in terms of regression metrics. A first major difference between the two approaches lies in their nature: the TSK-FRBS model provides insight into “how” an outcome is obtained, whereas the post-hoc method provides insight into “why” an outcome is provided. Consequently, a qualitative comparison is more reasonable than a quantitative one. We must take into account, however, that a model able to provide a glimpse of how an outcome has been obtained is implicitly also making manifest why that outcome has been computed from the inputs.