Abstract

Robots destined to tasks like teaching or caregiving have to build a long-lasting social rapport with their human partners. This requires, from the robot side, to be capable of assessing whether the partner is trustworthy. To this aim a robot should be able to assess whether someone is lying or not, while preserving the pleasantness of the social interaction. We present an approach to promptly detect lies based on the pupil dilation, as intrinsic marker of the lie-associated cognitive load that can be applied in an ecological human–robot interaction, autonomously led by a robot. We demonstrated the validity of the approach with an experiment, in which the iCub humanoid robot engages the human partner by playing the role of a magician in a card game and detects in real-time the partner deceptive behavior. On top of that, we show how the robot can leverage on the gained knowledge about the deceptive behavior of each human partner, to better detect subsequent lies of that individual. Also, we explore whether machine learning models could improve lie detection performances for both known individuals (within-participants) over multiple interaction with the same partner, and with novel partners (between-participant). The proposed setup, interaction and models enable iCub to understand when its partners are lying, which is a fundamental skill for evaluating their trustworthiness and hence improving social human–robot interaction.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Trust is a fundamental component of social interaction. For an individual, it is crucial to gain the partners’ trust and, at the same time, to assess their trustworthiness. One of the main elements normally adopted to evaluate whether someone should be trusted or not is the veridicality of their claims; since, the occurrence of lies naturally undermines the trust given to a partner [1, 2]. Being able to recognize when someone is lying to us plays an important role in shaping our trust toward them and the entire social rapport.

If robots are meant to become autonomous agents active in our society, they should consider the relevance of mutual trust with their human partners. Recently, researchers and social media raised public awareness on how much artificial intelligence and robots can be trusted [3]. On the other hand, it will be necessary also for the social robot to evaluate how much the human partner is trustworthy and consistently adapt its behavior. Several Human–Robot Interaction (HRI) studies explored the factors that influence humans’ trust toward robots. For example, robots’ shape and performances can affect trust and its development [4,5,6]. Additionally, robot’s transparency [7, 8], behavior explanation [9] and perceived reliability [10,11,12,13] have been shown to affect trust. To measure trust in human–robot collaboration different scale metrics have been developed [14,15,16]. However, little research has focused on the opposite scenario: how a robot should assess human partner’s trustworthiness. Vinanzi et al. [17] and Patacchiola et al. [18] worked on a developmental cognitive architecture based on the Theory of Mind. Their architecture exploits episodic memory to feed a Bayesian model of trust, making the iCub and Pepper humanoid robots able to decide whether to trust or not the human partners. Importantly, in these models, trust is assessed based on whether the human has provided a veridical or a false indication to the robot, but this information is not dynamically updated in further interactions. Hence, the ability to detect lies represents for a robot a crucial skill to evaluate whether its partner should be trusted. Indeed, detecting lies has been proved to be an effective way to evaluate partner’s trustworthiness in a social interaction [1]. In the context of human robot interaction, a robot capable of detecting lies, could use it as a quantitative measure to understand and predict the human partners’ behaviors.

Lie detection has been well explored in the literature. De Paulo et al. [19] and Honts et al. [20] showed how lying can be related to an increment of cognitive load with respect to truth telling. This cognitive effort is due to the creation and maintenance of a credible and coherent story [21]. Therefore, traditional methods of lie detection involve the monitoring of physiological metrics like skin conductance, respiration rate, heartbeat, or blood pressure, all reflecting variations of cognitive load and stress. The polygraph, one of the most used lie detection devices, relies on the aforementioned metrics reaching an accuracy between 81 and 91% [22] (but see [20] about the possibility of bypassing the measure). Other lie detection methods rely on fMRI images [23], skin temperature variations [24], micro-expressions [25], photoplethysmography [26] or acoustic prosody [27]. Most of these methods (i) are invasive or require cumbersome devices, not easily portable to everyday life scenarios; (ii) are expensive; (iii) or require experts to evaluate the measures. These characteristics make these approaches not suitable for porting them to robotic platforms.

Recent findings [28,29,30,31,32,33] proved how pupillometry measurements [34] and, in particular, Task Evoked Pupillary Responses (TEPRs) [35], can be used to evaluate the task-evoked cognitive load. Beatty et al. [35] identified mean pupil dilation, peak dilation and latency to peak as useful task-evoked pupillary responses. Dionisio et al. [36] studied the task-evoked pupil dilation related to lie telling. They asked students to lie or tell the truth, answering questions about episodic memory. They reported a significant greater pupil dilation during lie production with respect to truth telling. Gonzalez-Billandon et al. [37] and Aroyo et al. [38] found that participants had a higher mean pupil dilation when lying with respect to telling the truth both in human–human and human–robot interaction. Both, mobile head mounted [39, 40], and remote eye tracker [41, 42] devices have been used as minimally invasive methods to measure pupillometric features, more appropriate for real-world scenarios. Recent research showed the possibility to measure TEPRs from RGB cameras, suitable for robotic platforms, making pupillometry a promising candidate to detect lies in real-life human–robot interactions [43,44,45,46].

Beyond minimizing the invasiveness of the sensors used, the social robot should perform this evaluation while preserving the pleasantness of the interaction. This is particularly important for humanoid robots that aim to act as teachers, caregivers, or just friendly companions. Conversely, state-of-the-art setups and scenarios for lie detection are long, strict, and interrogatory-like [26, 27, 37].

In this paper, we propose a method to detect lies in real-time via pupillometry-driven cognitive load assessment, by learning how each individual partners’ pupil dilation changes while lying. From a technical point of view, we show how specific pupil dilation patters can be related to an increase in cognitive load due to lying, and how a robot can exploit them to work out if the human partner is lying. We validate the approach in a quick and entertaining interaction autonomously led by the iCub humanoid robot. The iCub asked participants to describe 12 gaming cards and to lie about a few of them. iCub autonomously processed in real-time participants’ pupil dilation to detect the deceptive card description based on our proposed method. During a first phase of the game (Calibration Phase) participants had to lie about one predefined card among six. Afterwards, participants could freely decide whether to lie or not for each of the next 6 cards in the game (Testing Phase). In this second phase, iCub exploited the knowledge about pupil dilation acquired in the Calibration phase to detect the player’s lies, without knowing in advance the number of true or false descriptions. The robot obtained an average accuracy of 70.8%, during the game, among the two phases, where the number of lies was either fixed (1 over 6 cards, Calibration) or it was arbitrarily chosen by each participant (Testing). To improve the robustness of the approach, we designed novel classification methods to adapt iCub’s knowledge over multiple interactions with the same individual. Last, we propose an attempt to train a generic machine learning model, able to detect lies without any previous information about the specific human partner.

In the following sections we will first describe the experimental procedure and the setup used to run the validation experiment (Sect. 2), the collected measures (Sect. 3) and the architecture enabling the robot to conduct the game and detect lies (Sect. 4). Then, we describe the data preparation procedures and the datasets built with the collected data (Sect. 5). Last, we will report the results of the experimental validation with naïve subjects and the results of machine learning methods aimed at improving within-subject detection and lie detection in presence of novel partners (Sect. 6). Results suggest that with the proposed interaction and lie detection models iCub could reliably assess when the human partners were lying.

2 Methods

To prove the effectiveness of our lie detection method, we performed an HRI experiment. The setup and a subset of the procedure have been previously described in [47].

2.1 Setup and Materials

The room was arranged to replicate an informal interaction scenario between a human and a robot (Fig. 1). The participants sat in front of the iCub humanoid robot separated by a table covered with a black cloth. On the table, the experimenter placed: six green marks (95 × 70 mm); a deck of 84 cards from Dixit Journey card game with the back painted in blue; a keyboard; and a Tobii Pro Glasses 2 eye-tracker. On participants’ left there was a little drawer (deployment area); while on the right, a black curtain hid the experimenter from participants’ sight. Behind iCub, a 47 inches television showed iCub's speech during the interaction (to prevent any speech misunderstanding). A Logitech Brio 4k webcam, fixed on the television, recorded the scene from iCub's point of view at a resolution of 1080p (Fig. 1, left).

The Dixit Journey card deck is composed by 84 cards (80 × 120 mm) with different toon-styled drawings meant to stimulate creative thinking [48] (Fig. 2, right). Designing the card game, we tried to avoid any cue—other than the wearable eye-tracker—for the participants about the method used by iCub to detect their false card descriptions; in this sense, we avoided any machine-readable mark (i.e., QR codes on cards’ back) that iCub could use to recognize the cards. The Tobii Pro Glasses 2 eye tracker recorded participants’ pupillometric features at a frequency of 100 Hz and streamed in real-time the participants’ pupil dilations at a frequency of 10 Hz (Fig. 2, center). The window blinders were closed, and the room was lit with artificial light to ensure a stable light condition for all the participants.

(Left) Participant describing a card to iCub, while wearing the Tobii Pro Glasses 2 eye tracker (Logitech Brio 4 k webcam point of view); (Center) Point of view of the participant during the interaction collected through the Tobii glasses; (Right) Examples of Dixit Journey gaming cards (authored by Jean-Louis Roubira, designed by Xavier Collette and published by Libellud). (Color figure online)

The iCub humanoid robotic platform [49] played the role of a magician. iCub autonomously led the whole interaction thanks to the autonomous end-to-end (E2E) architecture in Fig. 3 (see Sect. 4). The experimenter monitored the scene through the iCub’s left eye ensuring the safety of the participants and the correct execution of the experiment.

2.2 Procedure

At least a day before the experimental session, the participants filled in a set of questionnaires meant to assess their personality (see Sect. 3). After signing the informed consent, the experimenter led the participants to the experimental room. They were asked to sit on the chair in front of iCub and informed that the robot would have played a game with them. Then, the experimenter hid himself behind the black curtain and started the experiment.

The human–robot interaction was composed of two phases, Calibration Phase and Testing Phase, both led autonomously by iCub.

2.2.1 Calibration Phase

As the game started, iCub asked the participants to shuffle the cards deck, extract six cards without looking at them and put the deck on the deployment area. Then, iCub asked them to draw out one of the cards (referred as secret card) and memorize it. Afterwards, iCub instructed the participants to look at all the cards, one by one, shuffle them and put them facing down on the six green marks on the table. iCub explained that it was going to point each card one by one and they had to take the pointed card, look at it, describe it and then put it back facing down on the table. Then, iCub explained the game rules: “The trick is this: if the card you take is your secret card, you should describe it in a deceitful and creative way. Otherwise, describe just what you see”. Finally, iCub asked the participants to wear the Tobii Pro Glasses 2 eye tracker, take a deep breath and relax.

iCub randomly pointed to each of the six cards, while listening to participants’ description, and acknowledging it with a short greeting sentence (e.g., “ok”, “I see”, etc.). After the last description, iCub guessed the participants’ secret card and asked them to put the six cards aside to validate the detection or show to iCub the real secret card to reject it. Participants’ confirmation is meant to select the correct secret card in case iCub fails to detect it. Before the beginning of the Testing Phase, the experimenter could manually override the detected secret card with the one presented by the participants, in case the robot failed the guess. Finally, iCub asked them to remove the six cards to start a new game.

2.2.2 Testing Phase

As soon as the participants removed the six cards from the table, iCub asked to take the deck again and draw out six new cards. iCub told the participants to look at all the cards, one by one, then shuffle them and place them on the six green marks. Afterward, iCub instructed the participants that it was going to point to all the cards from right to left (with respect to participants’ point of view) and instructed them to handle the pointed card as in the first game. However, it added: “This time you can choose, for each card, whether to describe it in a creative and deceitful way, or to describe just what you see”. While the robot was explaining the rules, the participants kept wearing the Tobii Pro Glasses 2.

For each card, iCub (i) pointed it, (ii) listened to participants’ description, (iii) acknowledged it with a short sentence, (iv) tried to classify the description as truthful or false and, (v) asked for a confirmation. The participants had to show the card they just described to reject iCub’s classification or do nothing to validate it.

2.2.3 General Remarks

During the rule explanation of the two phases, iCub instructed the participants to press a button on the keyboard to move to the next task (i.e., after shuffling the cards deck, or after memorizing the secret card). No time limit was given to shuffle the card, to look at them, to memorize the secret card nor to describe them. iCub’s pointing has been designed to replicate a human-like gesture: first moving the gaze toward the target, then the arm, fingers, and torso with a biological inspired velocity profile.

After the second game, the experimenter led the participants to the initial room and asked them to fill in a questionnaire meant to evaluate their task load and self-report their performance during the game (see Sect. 3). Finally, the experimenter deeply debriefed the participants and let them have the chance to ask questions about the experiment before receiving their monetary compensation.

2.3 Participants

39 participants (25 females, 14 males), with an average age of 28 years (SD = 8) and a broad educational background took part in the experiment. They signed an informed consent form approved by the ethical committee of the Regione Liguria (Italy) where it was stated that cameras and microphones could record their performance and agreed on the use of their data for scientific purposes. After the experiment, they received a monetary compensation of 10€. Although all participants completed the game, 5 were excluded from further analysis: 2 for technical issues, 2 because they did not follow the rules of the game. The last one was considered an outlier, as she concluded the game in 38 min (a duration longer than 3SD plus the average game duration, which lasted 17 min). Hence, the final sample includes N = 34 participants (22 females, 12 males).

3 Measurements

3.1 Pre-Questionnaires

Before the experiment, the participants filled in the following questionnaires: The Big Five personality traits (extroversion, agreeableness, conscientiousness, neuroticism, openness) [50]; the Brief Histrionic Personality Disorder (BHPD) [51]; and the Short Dark Triad (SD3, machiavellianism, narcissism, and psychopathy) [52].

3.2 Post-Questionnaires

After the experiment, the participants filled in the NASA-TLX [53] and a set of questions regarding: (i) the experienced fun, (ii) creative effort, (iii) strategies adopted in fabricating a deceitful and creative description during the game, (iv) previous experience about the Dixit Journey card game, (v) previous experience about improvisation and acting, and, (vi) habits on playing deception-related games.

3.3 Gaze Measurements

From the full set of pupillometric features measured by the Tobii Pro Glasses 2 eye tracker, we collected and used only the pupil dilation, in millimeters, for right and left eyes. To avoid any impact on the informality of the social interaction, we avoided the eye tracker calibration phase; indeed, the calibration does not affect the pupil dilation measurement [54]. Pupil dilation data points are synchronized over the YARP robotic platform with the annotation events.

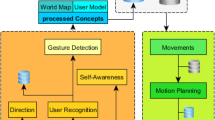

4 Robot Architecture

iCub autonomously leads the human–robot interaction thanks to the end-to-end architecture in Fig. 3. An initial version of the architecture, designed to handle the Calibration Phase only, is described in [47]. With the Turn Detector iCub detects the beginning and end of each card description by tracking the number of green (marks) and blue (cards) blobs visible in the scene. This is also used to understand participants’ confirmations. The Tobii Streamer reads participants’ pupillometric features from the Tobii Pro Glasses 2 eye-tracker and streams and logs them in real-time over the YARP robotic platform [55]. The Game Controller implements the main game engine: (i) it controls iCub’s movements and speech; and (ii) it segments the start and end of each pointing, card description and phases, logging annotation events. The logged annotation events and pupil data points are synchronized over the YARP robotic platform [55], providing an autonomous annotation for future analysis.

Finally, the Secret Card Detector and the Card Classifier enable iCub to identify participants’ lies during the game. iCub detects (Calibration Phase) and classifies (Testing Phase) players’ lies thanks to a specific Task Evoked Pupillary Response: the fabrication of a credible and consistent deceptive card description triggers an increase in players’ cognitive load [56, 57]; this increment reflects on a higher pupil dilation with respect to a truthful card description [19, 21, 58]. iCub aggregates participants’ eye pupil dilation data points, computing the mean pupil dilation during each card description and use them to detect players’ lies. We focused on right eye’s pupil dilation since both Tobii documentation [54] and previous results indicate that pupil dilation is not different between right and left eye [37]. The components implement two heuristic methods:

Calibration Heuristic (Fig. 4, left) During the Calibration Phase, iCub detects as secret card the one related to the highest mean pupil dilation among the six card descriptions. This approach has been described in [47].

Testing Heuristic (Fig. 4, right) At the end of the Calibration Phase, iCub knows 6 mean pupil dilation data points: 1 related to the secret card, and 5 related to truthful cards. With them, it computes two reference scores: the true reference score is the average of the 5 mean pupil dilations of truthful cards; the false reference score is just the secret card mean pupil dilation. For each Testing Phase card description, the mean pupil dilation was computed and compared to the two reference scores. By taking the minimum absolute difference iCub could label the current description as fake or as true.

5 Data Preparation

From the pupil dilation data points collected in real-time we built two datasets following the computational workflow in Fig. 5.

5.1 Card Segmentation (Fig. 5, Top)

The card trial annotation is autonomously performed by the Game Controller (Fig. 4) by rising annotation events on the YARP robotic platform for the beginning and end of each pointing and card description. We segmented the pupil dilation time series into 3 temporal intervals for each card trial: (i) robot's turn: iCub’s pointing gesture, from the moment iCub starts the pointing gesture till the participant takes the pointed card from the green mark; (ii) player's turn: a card description, from the moment the participant takes the card from the green mark, till they put it back on it; (iii) card trial: the whole interaction for a single card, from the moment iCub starts the pointing gesture till the participant puts the card back on the green mark.

5.2 Data Preprocessing (Fig. 5, Center)

We fitted and resampled the time series at 10 Hz to make it consistent with the real-time processing, then applied a median filter to remove the outliers and a rolling window mean filter to smooth the time series and infer any eventual missing data points. We then corrected each time series subtracting a baseline value for each participant [59]. In this reference system, a positive value represents a dilation, while a negative value represents a contraction with respect to the baseline. We corrected the time series with respect to two different baselines: (i) In the Single Baseline Correction, the baseline is computed as the average pupil dilation during the 5 seconds before the first pointing of the Calibration Phase and applied to all the cards of both phases; (ii) in the Per-card Baseline Correction, a specific baseline is computed for each card as the average pupil dilation during the 5 seconds before each pointing.

5.3 Feature Extraction (Fig. 5, Bottom)

Finally, we aggregated the time series of each temporal interval, and computed several features. For each player's turn, robot's turn, and card trial we computed the maximum, minimum, mean and standard deviation of the pupil dilation in millimeters, and the duration in seconds. Moreover, on the whole card trial we computed a set of 26 specific time series features using the python module Time Series Feature Extraction Library (TSFEL) [60]. In particular, the TSFEL features are: (i) Statistical Features: median, median absolute deviation, mean absolute deviation, kurtosis, skewness and variance; (ii) Temporal Features: absolute energy, area under the curve, autocorrelation, centroid, entropy, mean absolute difference, mean difference, median absolute difference, median difference, peak to peak distance, slope, total energy; (iii) Spectral Features: fundamental frequency, maximum frequency, median frequency, spectral centroid, spectral entropy, spectral kurtosis, spectral skewness, spectral slope. We considered the features for both eyes as separate data points to augment the datasets and hence included a feature to discriminate from the right and left eye. The resulting feature set is composed of 42 features; based on them, we defined two different datasets:

5.3.1 Single Baseline Dataset

This dataset includes the data points of both phases, replicating the data structure used in real-time. It is meant to explore an incremental learning over multiple interactions with the same individual.

5.3.2 Per-card Baseline Dataset

This dataset, instead, includes only data from the Testing Phase; it is meant to train a generic machine learning model, independent from the specific interacting partner.

Shapiro–Wilk and D’Agostino K-squared normality tests showed that some of the features of the datasets were not normally distributed. Therefore, we opted to use non-parametric tests for all the following statistical analyses. Additionally, we decided to focus on data points from participants’ right eye (unless otherwise specified), since there is no difference between right and left eye pupillary features [54].

6 Results

In this section we report the in-game and questionnaires results, along with the post-hoc analysis on the collected pupillometric data. In the post-hoc analysis, we mainly focus on the learning from the Calibration to the Testing Phase and on the second phase per-se; for a deeper analysis of the Calibration Phase see [47].

6.1 In-Game Results

The interaction lasted on average 17 min (SD = 5) from the beginning of iCub explaining the Calibration Phase’s rules till the final greeting of the Testing Phase.

The Calibration Phase lasted on average 8 min (SD = 3), during which, iCub successfully detected the players’ secret card with an accuracy of 88.2% (against a chance level of 16.6%, N = 34). The Testing Phase lasted on average 8 min (SD = 2). The participants were free to choose whether to lie or not, producing on average 2.73 (SD = 0.94, 45%) false descriptions among 6 cards. ICub successfully classified each card description as true or false with accuracy = 70.8%, precision = 73.6%, recall = 57% and F1 score = 64.2% (N = 34).

Considering the questionnaires, Table 1 summarizes the results of the Big Five personality traits [50], Brief Histrionic Personality Disorder [51] and Short Dark Triad [52] questionnaires, performed before the experiment. Average scores for the Big Five were Agreeableness: M = 0.659, SD = 0.113; Conscientiousness: M = 0.481, SD = 0.072; Neuroticism: M = 0.387, SD = 0.16; Openness to experiences: M = 0.476, SD = 0.07 and Extraversion: M = 0.486, SD = 0.061. Considering the Dark Triad, the scores were Psychopathy: M = 0.191, SD = 0.113; Machiavellianism: M = 0.438, SD = 0.129 and Narcissism: M = 0.396, SD = 0.15. For the Brief Histrionic Personality Disorder, the average score was M = 0.481, SD = 0.26.

After the experiment, participants filled in the NASA-TLX questionnaire, rating on a 10-points Likert scale their effort on performing the task. On average, they reported a low task load (M = 3.717, SD = 1.041). Among the components, Mental Effort (M = 5.41, SD = 1.78), Fatigue (M = 5.07, SD = 2.14) and Performance (M = 5.35, SD = 2.32) are slightly higher than Temporal Effort (M = 2.59, SD = 1.72), Frustration (M = 2.72, SD = 1.83) and Physical Effort (M = 1.21, SD = 0.49). This is consistent with the requirements of the task. Also, we asked participants to self-report, on a 5-points Likert scale the effort put on fabricating creative and deceptive descriptions (Lie Effort: M = 4.17, SD = 0.71) and the experienced fun (Fun: M = 4.59, SD = 0.57).

Then, we explored whether pupil dilation features were dependent on participants’ personality traits. We considered the Testing Phase data from the Per-card Baseline Dataset, to minimize the impact of card presentation order on pupil features, normalizing each card for its own baseline. We fit two linear regression models with the personality traits from the pre-questionnaire as independent variables and, as dependent variables the difference between mean pupil dilation for false and true cards or the mean pupil dilation baseline. Results show that only Neuroticism correlates significantly with the mean pupil dilation baseline (t = 2.492, p = 0.021, Adj. R2 = 0.115). We also tested whether pupil features correlated with the average description duration, Fun, Lie Effort, task load or Mental Effort, but we did not find any significant correlation.

6.2 Learning from a Brief Interaction

To investigate in more detail the relationship between pupil dilation and lying observed during the game, we started analyzing the Single Baseline Dataset which resembles the data structure used in real-time.

The Single Baseline Dataset presents a multilevel structure (multiple phases for the same participant, nested in card trials, nested in turns) with unbalanced card classes (one secret card among six—about 16.6%—in the Calibration Phase and on average 45% of false cards in the Testing Phase). Since the real-time game was based on participants’ mean pupil dilation during the player's turn, we decided to focus on such temporal intervals.

We fitted a mixed effects model for the player turns with mean pupil dilation as the outcome variable. As fixed effects we entered “card label” (two levels: true, false), “phase” (two levels: calibration, testing) and their interaction into the model. As random effect we had intercept for participants. We set the reference level on the Testing Phase and false card label. Results show a highly significant effect of card label (B = −0.223, t = −8.885, p < 0.0001) revealing a higher mean pupil dilation for the false card descriptions with respect to the truthful ones. We also found a significant effect of phase (B = 0.104, t = 2.428, p = 0.016), with a significantly lower mean pupil dilation in the Testing Phase, and no significance of the interaction between the two factors (B = −0.052, t = −1.023, p = 0.307) (Fig. 6).

As an exploratory analysis, we fit another mixed effects model on the robot's turn, with the same abovementioned structure. Results show no effect on the card label (B = −0.035, t = −1.373, p = 0.171), but a highly significant effect on the phase (B = 0.124, t = 3.490, p = 0.0005) confirming a lower mean pupil dilation in the Testing Phase with respect to the Calibration one also for this turn. Finally, we found no effect of the interaction of card label and phase factors (B = −0.014, t = −0.331, p = 0.741).

6.2.1 Incremental Testing Heuristic

Even if the Testing Heuristic demonstrated a quite good accuracy—humans perform near chance on detecting lies [61]—it has a low recall score (recall = 57%, accuracy = 70.8%, precision = 73.6%, N = 34), that is it recognizes only a relatively low proportion of the false statements made by the participants.

Figure 7 provides two examples of correct (left graph) and wrong (right graph) classifications. The two panels show the mean pupil dilations of participant A (left graph) and participant B (right graph) as processed by the Testing Heuristic. In each graph, the two data points on the left represent the two reference scores: the red square is the mean pupil dilation for the secret card, while the green circle is the average of the mean pupil dilations for the truthful cards. On the right there are the mean pupil dilation data points for each card of the Testing Phase. For participant A, pupil dilations for false and true descriptions remain consistent with the average values measured during the previous phase and the classification is always successful. Conversely, all the Testing Phase mean pupil dilations of participant B (right graph) fall in the range of the true reference score. Hence all the false card descriptions have been misclassified as false positives (red circles).

The observed errors are determined by two assumption on which the heuristic is based: (i) the difference in pupil dilation between false and true sentences remains almost the same between the two phases; and (ii) participants’ pupil dilation remains almost stable between the two phases. The first assumption is confirmed by the non-significant difference in the interaction of “phase” and “card labels” in both turns. However, the statistical analysis showed that participants’ pupil dilation is on average lower during the Testing Phase.

To compensate for this effect and increase the robustness of the heuristic, we explored the possibility to incrementally adapt the reference scores for truthful and false card description. After each card classification, the new card value is aggregated with the reference scores. This way iCub incrementally learns how the human partner lies and tells the truth, improving the classification performances trial by trial. We simulated the Testing Heuristic based on mean pupil dilation during the player's turn, as in the real-time game, but including the incremental learning. For each Testing Phase card trial, both the reference scores are updated computing the mean between each score and the novel mean pupil dilation data point. The heuristic performance increases to accuracy = 76.7%, precision = 76.1%, recall = 73.7% and F1 score = 75.6%.

Then, we simulated the Testing Heuristic performing a grid search on several parameters: (i) all the possible combinations of the available features (limited to a maximum of 3 features considered at the same time, see Sect. 5.3 for the full list); (ii) methods to compute the true reference score (mean, median, minimum); (iii) methods to update the reference scores (mean, difference, quadratic error); (iv) whether to update both scores or just the one of the correct class; (v) whether to update the reference scores only if the card trial is misclassified. Since we assume that for a lie detection system it is preferable to detect a greater amount of true negative (i.e., spot a larger amount of lies) even at the expenses of having a few false positives, we prioritized the recall score. The best heuristic has an accuracy = 78.7%, precision = 76%, recall = 80% and F1 score = 77.9%. It is based on both the mean and minimum pupil dilation during player's turn, which are compared by a 2D Euclidean distance with the reference scores; the true reference score is computed as the minimum among mean pupil dilations for the truthful cards descriptions during the Calibration Phase; both the reference scores are updated in any case, averaging each score with the new value.

6.2.2 Random Forest Classifier

Even if the new heuristic method performs better than the one exploited in real-time, it is still not generic and robust enough to describe the variability of participants’ pupil dilation between the two phases. Indeed, the Testing Heuristic is meant to adapt to each specific individual. We supposed that, by relaxing this constraint, it would be possible to compensate for the variability between the two phases. We trained a machine learning model able to learn from the Calibration Phase on the whole participants sample, and to exploit the gained knowledge on the Testing Phase. The classification problem is a binary classification defined by a couple [X, Y] where: X (42 × 1) is the vector of input features and Y ∈ [0: true; 1: false] is the vector of desired outputs. We included all the features from player’s turn, robot’s turn, and the whole card trial intervals, along with the TSFEL features on the latter one (for an exhaustive list of the features see Sec 5.3). We defined a within-participant split, considering the Calibration Phase data as training set and the Testing Phase data as validation and test (with a splitting ratio of 50:50). Calibration Phase data points have two main issues: (i) they are unbalanced (1 secret card among 6 cards); and (ii) the set is relatively small (6 cards, for 34 participants, 2 eyes for participants, for a total of 408 data points). We considered features from both eyes to augment the dataset. Due to these limitations we selected a Random Forests algorithm [62]. This kind of model should not overfit when increasing the number of trees, even with relatively small datasets. Also, we tackled the unbalancing problem by oversampling the Calibration Phase data points with the synthetic minority oversampling technique (SMOTE) [63]. We did not oversample the Testing Phase data points validating and testing on realistic data. Even if not strictly required by the Random Forest algorithm, we applied a min max normalization [64] to all the features within the data points of each participant in both phases. The idea is that a value that is relevant for a participant could be not relevant for another. We performed a grid search validation, with fixed validation set, searching the best hyper-parameters and feature set for the random forest classifier. Due to the unbalanced dataset, we rely on the F1 score, precision, recall and AUROC score. The best random forest classifier trained on the full features set achieved an F1 score of 56.5%, a precision of 57.1%, a recall of55.9% and AUCROC score of 59.6%.

6.2.3 Lying as an anomaly: One-Class Support Vector Machine

Given the low performance of the random forest classifier we changed approach and we considered the lie detection task as an anomaly detection problem. In this frame, the model knows just the values associated to true descriptions and learns to consider as a lie what is not truthful. We trained a one-class support vector machine (SVM) anomaly detector on the Calibration Phase data points, validating and testing it on the Testing Phase data points. We considered as training set the truthful card description of the Calibration Phase and we carefully balanced Testing Phase data points, preserving the ratio between true and false card descriptions in the validation and test sets. We performed a grid search validation, with fixed validation set, searching the best hyper-parameters and feature set for the one-class SVM model. Due to the nature of the anomaly detection problem, we evaluate it based on precision, recall and F1 score. The best one-class SVM model achieved a F1 score of 67.7%, a precision of 60% and a recall of 77.8%. It is based on features from both the player’s turn (minimum, maximum and mean pupil dilation); and the whole card trial (minimum, maximum, mean, and median pupil dilation; total energy, absolute energy, and autocorrelation).

6.3 Detecting Lies from Novel Human Partners

After having analyzed how previous knowledge gained during an interaction, can be used to improve lie detection in a subsequent task, we explored the possibility of building a pupil-dilation based lie detector able to classify false card descriptions from novel human partners. This could be the first step toward a minimally invasive and ecological lie detector able to classify a generic sentence as true or false, without any previous interaction with the specific partner. In this sense, it is important to consider the card descriptions as independent as possible from the specific participant and the description order. Hence, we focused on the Per-card Baseline Dataset which includes only Testing Phase data points. In the Per-card Baseline Dataset, the baseline is computed as the average of the pupil dilation, for each eye separately, during the 5 s before each card trial. This baseline is subtracted to the pupil dilation time series of the relative card description (see Sect. 5.2). We considered only the data from the Testing Phase since the nature of the task—“This time, you can choose, for each card, if describe it in a deceitful and creative way, or describe what you see” makes each card description more similar to a generic and standalone lie.

First, we analyzed whether the use of a Per-card baseline determined substantial differences with respect to the descriptive and statistical analysis conducted with the single baseline. We fitted a mixed effects model with mean pupil dilation as the outcome variable. We considered “card label” (two levels: true, false) and “turn” (two levels: robot, player) and their interaction as fixed factors, and we had as random effect the intercept for participants. We set the reference level on the player's turn and false card label. Results show a highly significant effect on the card label (B = −0.234, t = −6.58, p < 0.001), the turn (B = −0.321, t = −6.39, p < 0.001) and their interaction (B = 0.255, t = 5.205, p < 0.001). This pattern of results (Fig. 8) is similar to that observed for the Testing phase in the analysis with the “same” baseline (cf. Fig. 6).

We also analyzed whether the other features differed significantly between the true and false card descriptions. We computed the average of each feature for true and false cards and performed Wilcoxon signed-rank tests. Results show that also the minimum pupil dilation (Z = 570.0, p < 0.001) and the maximum pupil dilation (Z = 530.0, p < 0.001) during player’s turn were significantly different. Regarding the whole card trial, the mean pupil dilation (Z = 555.0, p < 0.001), the median pupil dilation (Z = 561.0, p < 0.001), the minimum pupil dilation (Z = 542.0, p < 0.001), the maximum pupil dilation (Z = 500.0, p < 0.001) and the slope (Z = 550.0, p < 0.001) were significantly different. Also, the total energy (Z = 477.0, p = 0.001), the absolute energy (Z = 457.0, p = 0.003), the autocorrelation (Z = 458.0, p = 0.003), and the area under the curve (Z = 442.0, p = 0.007) on the whole card trial were significantly different. Finally, we found no significance on robot’s turn features.

6.3.1 Random Forest Classifier

To design a lie detector that could classify a card description as true or false with no prior knowledge of the participants, we started from the statistical findings: we selected a subset of the available features, excluding the five features (max, min, mean, standard deviation of the pupil dilation and duration) related to the robot’s turn. The classification problem is a binary classification defined by a couple [X, Y] where: X (37 × 1) is the vector of input features and Y ∈ [0: true; 1: false] is the vector of desired outputs. Considering data points from both participants’ eyes, we split Testing Phase data between-participants. We considered 25 randomly selected participants (75%) as training and validation set and the remaining as test set. We did not apply any within-participant normalization of the features. We ran a 4-fold grid search cross validation looking for the best values of the hyper-parameters for the classifier. Even if Testing Phase data points are more balanced (47% of false card description, against 16.6% during the Calibration Phase), we still embedded the SMOTE algorithm [63] in the cross validation. This way it is possible to oversample just the training set, avoiding any synthetized sample in the validation set. The best model achieves a precision, recall and F1 score of 71.1% and AUCROC score of 73.3%.

7 Discussion

In this study we endowed iCub with the capability to detect lies in the context of a natural game-like interaction, using pupil responses to detect cognitive load associated to lying. Games are known to provide ecological assessments, preserving the relationship between the interacting partners [6, 65,66,67]. Also in the context of HRI, games have been successfully exploited to perform diverse types of measurements, even related to cognitive load assessment [40, 68,69,70]. In the current work, the game is a perfect scenario to demonstrate that our lie detection method based on a heuristic function is quick, interactive and does not depend on invasive measures. The game results also provide evidence of the feasibility of our approach, with an overall accuracy of 70.8% (F1 score of 64.2%) during the Testing Phase, when basing the lie detection on mean pupil dilation alone. We also show that such accuracy can increase up to 78.7% (F1 score of 77.9%) by enabling an iterative adaptation to each individual partner and by leveraging on a combination of different pupil-related features. The effect on which the lie detection heuristic was based, i.e., the difference in pupil dilation during false or true card descriptions, was relatively robust and did not depend on participants’ personality traits, nor on the characteristics of the game (e.g., the experienced fun or the description duration),

Moreover, we explored the possibility to extend the lie detection (i) over multiple interactions with the same individual and (ii) with novel partners. First, we trained a random forest classifier splitting within-subject over the two phases. However, the model did not perform better than the heuristic (F1 score = 56.5%). We assume that this depends on the unimodality of the features, the small number of data points and the strong reliance on synthesized data on the training set. We expect that a machine learning model trained on more real data would be more robust and generic with respect to a real-world human–robot interaction. We tried to overcome these issues by tackling the problem as an anomaly detection: we trained a one-class SVM anomaly detector on the truthful examples of the Calibration Phase and tested on the whole Testing Phase (F1 score = 67.7%). Needing only truthful examples makes the models independent from collecting lying examples. This could facilitate the learning, considering, for instance, a humanoid robot that wants to improve the lie detection model online in a supervised way. Finally, thinking about a generic lie detection system, we trained a random forest classifier (F1 score = 71.7%) between-subject to classify false card descriptions from novel individuals. The main difference between the heuristic methods and the machine learning models is in that, the heuristics’ knowledge is limited to a single individual. Hence, even if the machine models’ performances are worse than the heuristic methods’ ones, the formers should be more robust against unexpected behaviors from the participants. Additionally, they offer features that ease their portability on a real-world human–robot interaction i.e., the need of truthful examples only for the one-class SVM model or the ability to classify lies without any previous interaction for the last random forest classifier. Even if both the heuristic and the models can detect human partners’ lies online, they both evaluate partners’ behavior after their turn. This is acceptable in an informal human–robot interaction, but it could be an issue for future applications like interrogatories or security checks (i.e. at airports). In the future, it will be necessary to consider the temporal evolution of the pupil dilation timeseries during the interaction; for instance, by segmenting players’ turn into smaller windows and applying machine learning models (i.e., LSTM) which consider windows’ temporal evolution [71,72,73]. Moreover, it will be necessary to train such models on a bigger dataset, involving both a higher number of individuals and longer interactions with each of them. The proposed models are light and independent from any network connections; this makes them suitable to be implemented with extreme simplicity in the context of HRI and avoiding untreatable computation demand. The other advantage of the presented contribution is that the robot can autonomously address all the stages of the interaction keeping the human partner engaged and assessing deceptive behavior in real-time. At the current development stage, the only potential intervention is required if the robot fails to detect the secret card at the end of the Calibration Phase. However, also this intervention could be made autonomously by the robot: after iCub’s detection, the participants have to show the correct secret card in order to reject it; iCub could detect the correct card position, thanks to the HSV (Hue, Saturation, Value) color threshold of cards and marks, and hence self-learn the correct false reference score.

The current implementation relies on the players’ pupil dilation measured with a head mounted eye-tracker, such as the Tobii Pro Glasses 2. This device tends to be dependent on the environmental light condition and could impact the naturalness of the human–robot interaction. We tried to limit the latter factor by removing the calibration step (not needed to measure participants’ pupil dilation). However, skipping the calibration, we could not use the other features from the eye-tracker (e.g., gaze orientation). The ideal solution would be to measure a full set of pupillometric features from the RGB cameras embedded on the robotic platform. Recent findings suggest that this approach could be feasible [43,44,45,46, 74]; hence, we look forward to removing this limitation, making the system completely non-intrusive. Other than due to cognitive load changes, pupil dilation tends to be affected by other factors like excitement, stress, and environmental light conditions. Looking toward a real-world application, it would be necessary to compensate for pupil dilation limitations. For instance, a multimodal solution adding both the audio and video of the scene could help the robot understanding the context of the interaction and explain unexpected pupillary responses of the human partner.

The analysis of pupil dilation revealed that 38% of the participants (N = 13 out of 34) presented a lower pupil dilation, during the second phase with respect to the first one. We speculate that this is associated to a reduction in cognitive load and that this effect depends on several factors that contribute to making the Testing Phase less stressful. First, in this phase participants do not need to remember the secret card and can freely choose how to play the game. As a result, there is no need to prepare in advance the deceptive and creative card description. Moreover, participants are also more used to play the game, even if there are small differences, and they are more aware of their role and iCub’s behavior and capabilities. Additionally, iCub provides a feedback after each card description, eliminating the need to wait for the phase completion to know if the lie had been discovered or not. All these factors could have contributed to decrease of participants' cognitive load. However overall, the interaction has been judged as entertaining and not too cognitively demanding in the questionnaires, suggesting that also the Calibration phase was not too challenging for the participants.

We designed the human–robot interaction to validate our lie detection method in an informal interactive scenario. Since the game is based on 84 different cards, with complex and diverse drawings, we speculate that the results we obtain cannot be explained by artifacts on pupil dilation based on the nature of the cards (e.g., different colors, or emotions in the cards’ pictures). Hence, we think our approach is modular and generic enough to be ported to different application fields. For instance, in an elderly caregiving scenario, the cards could be pills bottles a patient has to take; the robot could ask the patient if he took the medication, detecting a lie from the patient. Also, the modularity of the end-to-end architecture makes it easy to replace iCub with other robotic platforms, developing a consistent way to present the items based on the application context.

By detecting lies a humanoid robot could evaluate whether the interacting partner is trustworthy or not. Furthermore, the robot could adapt its social behavior over multiple interactions based on this evaluation. However, the system is not perfectly accurate; hence, how the robot should perform its judgment and adaptation should be carefully managed to minimize the impact on the partners’ trust toward the humanoid. For instance, in the abovementioned elderly caregiving case, if a caregiver robot detected patient’s lies several times it might need to report the patient’s behavior to the doctor along with its confidence about the performed measure, rather than accusing the patient to be a liar. In the future, it would be necessary to explore the impact of a misclassification of both truthful and false sentences, on the interacting partners, along with the effect on their trust toward the robot.

Besides the practical applications of detecting lies to assess trustworthiness, the proposed setup, interaction, and methods are based on measuring the task-evoked cognitive load related to creativity. The evaluation is performed in real-time, providing entertainment [47]. This is novel with respect to the long, strictly controlled, and tedious cognitive-load measurement tasks from the literature [37, 38, 41]. For instance, the system could be used to assess creative thinking abilities, before and after a creativity training session [75]. Also, one could use the system to monitor patients’ cognitive load during a training task in order to provide a correct support [76], adapt task difficulty [70], evaluate their progress [77] or schedule proper resting sessions [78].

8 Conclusion

In the current manuscript we proposed novel methods to enable robots to detect whether the human partner is lying in a quick and entertaining interaction. We have shown that the detection works and that it is possible to improve it if the model can adapt to each partner during the interaction (F1 score = 78%). The approach, however, could still succeed in a first encounter with a new participant (F1 score = 71%). The ability to autonomously detect lies could be relevant for robots as a basis to build a model of its human partners’ trustworthiness. The naturalness of the approach proposed here would allow to do so without impacting on the sociality of the human–robot interaction. Mutual trust is important to ensure healthy and stable social interactions and this should hold also for HRI. Hence, we believe that novel methods to understand human deceptive behavior will be more and more important in pursuing effective human–robot cooperation.

Availability of data and material

The collected dataset will be made available on a public repository (i.e., Zenodo) upon manuscript publication.

Code availability

The code of both the robot architecture and the post-hoc analysis will be made available on a public repository (i.e., GitHub) upon manuscript publication.

References

Mccornack SA, Parks MR (1986) Deception detection and relationship development: the other side of trust. Ann Int Commun Assoc 9(1):377–389. https://doi.org/10.1080/23808985.1986.11678616

G. Lucas, S. Lieblich, and J. Gratch, “Trust Me : Multimodal Signals of Trustworthiness,” In: ICMI ’16: Proceedings of the 18th ACM International Conference on Multimodal Interaction, 2016, pp. 5–12, https://doi.org/10.1145/2993148.2993178.

M. Rueben, A. M. Aroyo, C. Lutz, J. Schmolz, P. Cleynenbreugel, A. Corti, S. Agrawal, and W. Smart, “Themes and Research Directions in Privacy-Sensitive Robotics,” EEE Work. onAdvanced Robot. its Soc. Impacts, 2018.

Hancock PA, Billings DR, Schaefer KE, Chen JYC, De Visser EJ, Parasuraman R (2011) A meta-analysis of factors affecting trust in human-robot interaction. Hum Factors 53(5):517–527. https://doi.org/10.1177/0018720811417254

A. Freedy, D. Ph, G. Weltman, D. Ph, and U. S. A. Rdecom-sttc, “Measurement of Trust in Human-Robot Collaboration,” In: International Symposium on Collaborative Technologies and Systems, 2007, pp. 106–114, https://doi.org/10.1109/CTS.2007.4621745.

Aroyo AM, Rea F, Sandini G, Sciutti A (2018) Trust and social engineering in human robot interaction: will a robot make you disclose sensitive information, conform to its Recommendations or Gamble? IEEE Robot Autom Lett 3(4):3701–3708. https://doi.org/10.1109/LRA.2018.2856272

Dzindolet MT, Peterson SA, Pomranky RA, Pierce LG, Beck HP (2003) The role of trust in automation reliance. Int J Hum Comput Stud 58:697–718. https://doi.org/10.1016/S1071-5819(03)00038-7

S. Ososky, T. Sanders, F. Jentsch, P. Hancock, and J. Y. C. Chen, “Determinants of system transparency and its influence on trust in and reliance on unmanned robotic systems,” In: PIE—The International Society for Optical Engineering, 2014, no. February 2015, p. 90840E, doi: https://doi.org/10.1117/12.2050622.

N. Wang, D. V Pynadath, S. G. Hill, N. Wang, and D. V Pynadath, “Building Trust in a Human-Robot Team with Automatically Generated Explanations Building Trust in a Human-Robot Team with Automatically Generated Explanations, In: Proc. Interservice/Industry Training, Simul. Educ. Conf., no. 15315, pp. 1–12, 2015.

S. Agrawal and H. Yanco, “Feedback Methods in HRI: Studying their effect on Real-Time Trust and Operator Workload,” In: HRI’12—Proc. 7th Annu. ACM/IEEE Int. Conf. Human-Robot Interact., pp. 73–80, 2012, https://doi.org/10.1145/2157689.2157702.

Muir BM (1987) Trust between humans and machines, and the design of decision aids. Int J Man Mach Stud 27(5–6):527–539. https://doi.org/10.1016/S0020-7373(87)80013-5

Aroyo AM, Pasquali D, Koting A, Rea F, Sandini G, Sciutti A (2020) Perceived differences between on-line and real robotic failures, in RO-MAN 2020 - Trust, Acceptance and Social Cues in Human-Robot Interaction - SCRITA, 2020.

M. Desai, M. Medvedev, M. Vázquez, S. McSheehy, S. Gadea-Omelchenko, C. Bruggeman, A. Steinfeld, and H. Yanco, “Effects of changing reliability on trust of robot systems,” Proc. seventh Annu. ACM/IEEE Int. Conf. Human-Robot Interact—HRI ’12, p. 73, 2012, doi: https://doi.org/10.1145/2157689.2157702.

K. E. Schaefer, “The Perception and Measurement of Human-Robot Trust”, Ph.D. Diss. University of Central Florida, 2013. http://purl.fcla.edu/fcla/etd/CFE0004931.

Charalambous G, Fletcher S, Webb P (2016) The Development of a Scale to Evaluate Trust in Industrial Human-robot Collaboration. Int J Soc Robot 8(2):193–209. https://doi.org/10.1007/s12369-015-0333-8

Yagoda RE, Gillan DJ (2012) You Want Me to Trust a ROBOT? The Development of a Human-Robot Interaction Trust Scale. Int J Soc Robot. https://doi.org/10.1007/s12369-012-0144-0

Vinanzi S, Patacchiola M, Chella A, Cangelosi A (2019) Would a robot trust you? Developmental robotics model of trust and theory of mind. CEUR Workshop Proc 2418:74. https://doi.org/10.1098/rstb.2018.0032

Patacchiola M, Cangelosi A (2020) A Developmental Cognitive Architecture for Trust and Theory of Mind in Humanoid Robots. Cybern, IEEE Trans. https://doi.org/10.1109/TCYB.2020.3002892

DePaulo BM, Lindsay JJ, Malone BE, Muhlenbruck L, Charlton K, Cooper H (2003) Cues to deception. Psychol Bull 129(1):74–118. https://doi.org/10.1037/0033-2909.129.1.74

C. R. Honts, D. C. Raskin, and J. C. Kircher, “Mental and physical countermeasures reduce the accuracy of polygraph tests.,” J. Appl. Psychol., vol. 79, no. 2, pp. 252–9, Apr. 1994, Accessed: Jul. 07, 2019. [Online]. Available: http://www.ncbi.nlm.nih.gov/pubmed/8206815.

Kassin SM (2005) On the psychology of confessions: does innocence put innocents at Risk? Am Psychol 60(3):215–228. https://doi.org/10.1037/0003-066X.60.3.215

Gaggioli A (2018) “Beyond the Truth Machine: Emerging Technologies for Lie Detection”, Cyberpsychology. Behav Soc Netw 21(2):144–144. https://doi.org/10.1089/cyber.2018.29102.csi

M. Gamer, “Detecting of deception and concealed information using neuroimaging techniques. In: HRI’20 Human-Robot Interaction, 2011, pp. 90–113, https://doi.org/10.1017/CBO9780511975196.006.

Rajoub BA, Zwiggelaar R (2014) Thermal facial analysis for deception detection. IEEE Trans Inf Forensics Secur 9(6):1015–1023. https://doi.org/10.1109/TIFS.2014.2317309

C.-Y. Ma, M.-H. Chen, Z. Kira, and G. AlRegib, “TS-LSTM and Temporal-Inception: Exploiting Spatiotemporal Dynamics for Activity Recognition,” Mar. 2017, Accessed: Jul. 07, 2019. [Online]. Available: http://arxiv.org/abs/1703.10667.

V. Karpova, V. Lyashenko, and O. Perepelkina, “‘ Was It You Who Stole 500 Rubles ?’ — The Multimodal Deception Detection,” in ICMI ’20 Companion: Companion Publication of the 2020 International Conference on Multimodal Interaction, 2020, pp. 112–119https://doi.org/10.1145/3395035.3425638

(Leslie) Chen X, Ita Levitan S, Levine M, Mandic M, Hirschberg J (2020) Acoustic-prosodic and lexical cues to deception and trust: deciphering how people detect lies. Trans Assoc Comput Linguist 8:99–214. https://doi.org/10.1162/tacl_a_00311

May JG, Kennedy RS, Williams MC, Dunlap WP, Brannan JR (1990) Eye movement indices of mental workload. Acta Psychol (Amst) 75(1):75–89. https://doi.org/10.1016/0001-6918(90)90067-P

M. Nakayama and Y. Shimizu, “Frequency analysis of task evoked pupillary response and eye-movement. In: Proceedings of the Eye tracking research & applications symposium on Eye tracking research & applications—ETRA’2004, 2004, pp. 71–76. https://doi.org/10.1145/968363.968381.

Van Orden KF, Limbert W, Makeig S, Jung TP (2001) Eye activity correlates of workload during a visuospatial memory task. Hum Factors 43(1):111–121. https://doi.org/10.1518/001872001775992570

J. A. Stern, L. C. Walrath, and R. Goldstein, “The endogenous eyeblink.,” Psychophysiology, vol. 21, no. 1, pp. 22–33, Jan. 1984, Accessed: Jul. 07, 2019. [Online]. Available: http://www.ncbi.nlm.nih.gov/pubmed/6701241.

Goldwater BC (1972) Psychological significance of pupillary movements. Psychol Bull 77(5):340–355. https://doi.org/10.1037/h0032456

Andreassi JL (2010) Psychophysiology: human behavior and physological response. Psychology Press

Mathôt S (2018) Pupillometry: psychology, physiology, and function. J Cogn. https://doi.org/10.5334/joc.18

J. Beatty and B. Lucero-Wagoner 2020 The pupillary system. Handb. Psychophysiol. 2, 2000.

D. P. Dionisio, E. Granholm, W. A. Hillix, and W. F. Perrine, “Differentiation of deception using pupillary responses as an index of cognitive processing.,” Psychophysiology, vol. 38, no. 2, pp. 205–11, Mar. 2001, Accessed: Jul. 07, 2019. [Online]. Available: http://www.ncbi.nlm.nih.gov/pubmed/11347866.

Gonzalez-Billandon J, Aroyo AM, Tonelli A, Pasquali D, Sciutti A, Gori M, Sandini G, Rea F (2019) Can a robot catch you lying? a machine learning system to detect lies during interactions. Front Robot AI. https://doi.org/10.3389/frobt.2019.00064

A. M. Aroyo, J. Gonzalez-Billandon, A. Tonelli, A. Sciutti, M. Gori, G. Sandini, and F. Rea, “Can a Humanoid Robot Spot a Liar?. In: IEEE-RAS 18th Int. Conf. Humanoid Robot., 2018.

Szulewski A, Roth N, Howes D (2015) The use of task-evoked pupillary response as an objective measure of cognitive load in novices and trained physicians: a new tool for the assessment of expertise. Acad Med 90(7):981–987. https://doi.org/10.1097/ACM.0000000000000677

Ahmad MI, Bernotat J, Lohan K, Eyssel F (2019) Trust and Cognitive Load During Human-Robot Interaction, In AAAI Symposium on Artificial Intelligence for Human-Robot Interaction. https://arxiv.org/abs/1909.05160v1

J. Klingner, “Measuring Cognitive Load During Visual Task by Combining Pupillometry and Eye Tracking,” Ph.D. Diss., no. May, 2010, http://purl.stanford.edu/mv271zd7591.

G. Hossain and M. Yeasin, “Understanding effects of cognitive load from pupillary responses using hilbert analytic phase,” In: IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. Work., pp. 381–386, 2014 https://doi.org/10.1109/CVPRW.2014.62.

Wangwiwattana C, Ding X, Larson EC (2018) Pupilnet, measuring task evoked pupillary response using commodity RGB tablet cameras. Proc ACM Interactive, Mobile, Wearable Ubiquitous Technol 1(4):1–26. https://doi.org/10.1145/3161164

S. Rafiqi, E. Fernandez, C. Wangwiwattana, S. Nair, J. Kim, and E. C. Larson, “PupilWare: Towards pervasive cognitive load measurement using commodity devices. In: 8th ACM Int. Conf. PErvasive Technol. Relat. to Assist. Environ. PETRA 2015—Proc., no. August, 2015, doi: https://doi.org/10.1145/2769493.2769506.

Eivazi S, Santini T, Keshavarzi A, Kübler T, Mazzei A (2019) Improving real-time CNN-based pupil detection through domain-specific data augmentation. Eye Track Res Appl Symp. https://doi.org/10.1145/3314111.3319914

R. Mazziotti et al., “MEYE: Web-app for translational and real-time pupillometry,” bioRxiv, p. 2021.03.09.434438, 2021, [Online]. Available: https://doi.org/10.1101/2021.03.09.434438.

D. Pasquali, J. Gonzalez-Billandon, F. Rea, G. Sandini, and A. Sciutti 2021 Magic iCub: a humanoid robot autonomously catching your lies in a card game. https://doi.org/10.1145/3434073.3444682.

“Dixit 3: Journey | Board Game | BoardGameGeek.” https://boardgamegeek.com/boardgame/119657/dixit-3-journey (accessed Sep. 27, 2020).

G. Metta, G. Sandini, D. Vernon, L. Natale, and F. Nori, “The iCub humanoid robot. In: Proceedings of the 8th Workshop on Performance Metrics for Intelligent Systems - PerMIS ’08, 2008, p. 50, https://doi.org/10.1145/1774674.1774683.

Flebus GB (2015) Versione Italiana dei Big Five Markers di Goldberg.

Ferguson CJ, Negy C (2014) Development of a brief screening questionnaire for histrionic personality symptoms. Pers Individ Dif 66:124–127. https://doi.org/10.1016/j.paid.2014.02.029

Jones DN, Paulhus DL (2014) Introducing the Short Dark Triad (SD3): a brief measure of dark personality traits. Assessment 21(1):28–41. https://doi.org/10.1177/1073191113514105

F. Bracco and C. Chiorri, “Versione Italiana del NASA-TLX.”

Tobii Pro, “Quick Tech Webinar - Secrets of the Pupil.” https://www.youtube.com/watch?v=I3T9Ak2F2bc&feature=emb_title.

Fitzpatrick P, Metta G, Natale L (2008) Towards long-lived robot genes. Rob Auton Syst 56(1):29–45. https://doi.org/10.1016/j.robot.2007.09.014

Sweller J, Ayres P, Kalyuga S (2011) Cognitive load theory. psychology of learning and motivation, vol 55. Elsevier, NY

Leppink J (2017) Cognitive load theory: practical implications and an important challenge. J Taibah Univ Med Sci 12(5):385–391. https://doi.org/10.1016/j.jtumed.2017.05.003

Webb AK, Honts CR, Kircher JC, Bernhardt P, Cook AE (2009) Effectiveness of pupil diameter in a probable-lie comparison question test for deception. Leg Criminol Psychol 14(2):279–292. https://doi.org/10.1348/135532508X398602

Mathôt S, Fabius J, Van Heusden E, Van der Stigchel S (2018) Safe and sensible preprocessing and baseline correction of pupil-size data. Behav Res Methods 50(1):94–106. https://doi.org/10.3758/s13428-017-1007-2

Barandas M, Folgado D, Fernandes L, Santos S, Abreu M, Bota P, Liu H, Schultz T, Gamboa H (2020) TSFEL: time series feature extraction library. SoftwareX. https://doi.org/10.1016/j.softx.2020.100456

Bond CF, DePaulo BM (2006) Accuracy of deception judgments. Personal Soc Psychol Rev 10(3):214–234. https://doi.org/10.1207/s15327957pspr1003_2

Breiman L (2001) Random forests. Mach Learn. https://doi.org/10.1023/A:1010933404324

Chawla NV, Bowyer KW, Hall LO (2006) SMOTE: synthetic minority over-sampling technique nitesh. J Artif Intell Res. https://doi.org/10.1613/jair.953

Patro SGK, Sahu KK (2015) Normalization: a preprocessing stage. Iarjset. https://doi.org/10.17148/iarjset.2015.2305

Ahn HS, Sa IK, Lee DW, Choi D (2011) A playmate robot system for playing the rock-paper-scissors game with humans. Artif Life Robot 16(2):142–146. https://doi.org/10.1007/s10015-011-0895-y

I. Gori, S. R. Fanello, G. Metta, and F. Odone, “All gestures you can: A memory game against a humanoid robot. In: IEEE-RAS Int. Conf. Humanoid Robot., pp. 330–336, 2012, https://doi.org/10.1109/HUMANOIDS.2012.6651540.

I. Leite, M. McCoy, D. Ullman, N. Salomons, and B. Scassellati, “Comparing Models of Disengagement in Individual and Group Interactions,” In: ACM/IEEE Int. Conf. Human-Robot Interact., vol. 2015-March, no. March, pp. 99–105. https://doi.org/10.1145/2696454.2696466.

M. Owayjan, A. Kashour, N. Al Haddad, M. Fadel, and G. Al Souki, “The design and development of a Lie Detection System using facial micro-expressions. In: 2012 2nd International Conference on Advances in Computational Tools for Engineering Applications (ACTEA), 2012, pp. 33–38. https://doi.org/10.1109/ICTEA.2012.6462897.

K. Kobayashi and S. Yamada, “Human-Robot interaction design for low cognitive load in cooperative work,” In:Proc.—IEEE Int. Work. Robot Hum. Interact. Commun., no. April, pp. 569–574, 2004 https://doi.org/10.1109/ROMAN.2004.1374823.

S. M. Al Mahi, M. Atkins, and C. Crick, “Learning to assess the cognitive capacity of human partners,” In: ACM/IEEE Int. Conf. Human-Robot Interact., pp. 63–64, 2017. https://doi.org/10.1145/3029798.3038430.

Karim F, Majumdar S, Darabi H, Chen S (2017) LSTM fully convolutional networks for time series classification. IEEE Access 6:1662–1669. https://doi.org/10.1109/ACCESS.2017.2779939

Avola D, Cinque L, De Marsico M, Fagioli A, Foresti GL (2020) LieToMe: Preliminary study on hand gestures for deception detection via Fisher-LSTM. Pattern Recognit Lett 138:455–461. https://doi.org/10.1016/j.patrec.2020.08.014

Zhou Y, Shang L (2020) Time sequence features extraction algorithm of lying speech based on sparse CNN and LSTM, vol 12463. Springer International Publishing, LNCS

T. Fischer, H. J. Chang,Y. Demiris (2018) “RT-GENE : Real-Time Eye Gaze Estimation in Natural Environments,” in European Conference on Computer Vision, 2018, pp. 339–357. https://doi.org/10.1007/978-3-030-01249-6_21

Redifer JL, Bae CL, Debusk-lane M (2019) Implicit theories, working memory, and cognitive load : impacts on creative. Thinking. https://doi.org/10.1177/2158244019835919

G. Belgiovine, F. Rea, J. Zenzeri, A. Sciutti (2020) “A Humanoid Social Agent Embodying Physical Assistance Enhances Motor Training Experience,” in 29th IEEE International Conference on Robot and Human Interactive Communication, RO-MAN 2020, 2020, no. ii, pp. 553–560. https://doi.org/10.1109/RO-MAN47096.2020.9223335

Koenig A, Novak D, Omlin X, Pulfer M, Perreault E, Zimmerli L, Mihelj M, Riener R (2011) Real-time closed-loop control of cognitive load in neurological patients during robot-assisted gait training. IEEE Trans Neural Syst Rehabil Eng 19(4):453–464. https://doi.org/10.1109/TNSRE.2011.2160460

Westbrook A, Braver TS (2015) Cognitive effort: a neuroeconomic approach cognitive, affective and behavioral neuroscience, vol 15. Springer, New York, pp 395–415

Funding

Open access funding provided by Istituto Italiano di Tecnologia within the CRUI-CARE Agreement. This work has been supported by a Starting Grant from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme. G.A. No 804388, wHiSPER. Researchers of the SIRRL lab at University of Waterloo are supported, in part, thanks to funding from the Canada 150 Research Chairs Program.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pasquali, D., Gonzalez-Billandon, J., Aroyo, A.M. et al. Detecting Lies is a Child (Robot)’s Play: Gaze-Based Lie Detection in HRI. Int J of Soc Robotics 15, 583–598 (2023). https://doi.org/10.1007/s12369-021-00822-5

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12369-021-00822-5