Abstract

Designers of educational interventions are always looking for methods to improve the learning experience of children. More and more, designers look towards robots and other social agents as viable educational tools. To gain inspiration for the design of meaningful behaviours for such educational social robots we conducted a contextual analysis. We observed a total of 22 primary school children working in pairs on a collaborative inquiry learning assignment in a real world situation at school. During content analysis we identified a rich repertoire of social interactions and behaviours, which we aligned along three types of interaction: (1) Educational, (2) Collaborational, and (3) Relational. From the results of our contextual analysis we derived four generic high-level recommendations and fourteen concrete design guidelines for when and how a social robot may have a meaningful contribution to the learning process. Finally, we present four variants of our Computer Aided Learning system in which we translated our design guidelines into concrete robot behaviours.

1 Introduction

Social robots are increasingly used as educational tools in topics such as STEM and language learning [5, 52]. In the EU-FP7 project EASEL we look specifically at learning interventions that use robots in a collaborative inquiry learning assignment, described later in more detail. To develop such interventions, we must design robot behaviour and human-robot interaction patterns that are appropriate for the learner and the educational context. Generalised pedagogical and educational psychology theories inform us about what we might generally expect by way of interactions that occur between learners, but do not specify in what way such social interactions carry across to child-robot learning situations. To ground the design of actual behaviour and interaction of the robot we also need real examples of behaviours exhibited by children in the specific environment and collaborative task in which the robot should operate. From such examples we can derive concrete opportunities and guidelines for when and in what form exactly robots may contribute to a child’s learning.

In this paper we report on a contextual analysis that we carried out to derive guidelines for the design of social robots to support primary school children in a collaborative inquiry learning assignment. This work was guided by the following research questions:

- RQ1: Which typical behaviours and social interactions do we identify in learners working with our assignment and how do these behaviours align with theory?
- RQ2: How do children progress through the various phases of our learning assignment and do the types of learning behaviour they exhibit vary with these phases?
- RQ3: What are opportunities for social robot interventions in regard to interaction patterns, specific behaviours and roles, to contribute to the child’s learning in our assignment?

The paper has the following structure: Sect. 2 presents our view on social learning through the lens of the literature. Section 3 describes the approach of our contextual analysis, in which we annotated video recordings of pairs of children collaborating on a learning assignment in their school. Section 4 presents the annotation schemes we used to analyse these video recordings, the results of which are presented in Sect. 5. Based on these results, we formulated four generic recommendations and fourteen concrete design guidelines for robot behaviour, presented in Sect. 6. Finally, Sect. 7 describes how we applied these guidelines to build four variants of child-robot learning scenarios. Here, we also reflect anecdotally on the social learning behaviour exhibited by children interacting with these variants. Limitations and future work are discussed in Sect. 8, after which we conclude the paper in Sect. 9.

2 Background

In this section we briefly discuss the literature and theories from educational psychology and pedagogy related to the type of learning assignments and social interactions that occur in our collaborative inquiry learning context. Insights presented here are partly based on an earlier paper by Charisi et al. [15].

2.1 In Learning, Students Construct Knowledge

The well-known developmental theory of Piaget [55] describes learning as a dynamic process comprising successive stages of adaptation to reality. According to this constructivist framework, children are active learners who construct their knowledge by creating and testing their own theories and beliefs. During play, children explore the real world, organise their thoughts, and perform logical operations [54]. This occurs mainly in relation to concrete objects rather than abstract ideas [59].

Inquiry learning is one of the tools to support a more structured exploration of abstract ideas, often by using physical objects. During inquiry learning tasks learners adopt the scientific method of exploration to discover underlying rules and principles that govern phenomena in the world around them. Knowledge is assimilated by conducting experiments during which learners construct hypotheses and argumentations, and reflect upon applied methods and gathered results [39, 40, 46, 71]. A typical inquiry cycle consists of five consecutive steps: (1) Preparation; (2) Hypothesis generation; (3) Experimentation; (4) Observation; and (5) Conclusion. Learners often require additional support when they encounter difficulties while working on inquiry tasks [46].

Vygotsky introduced the notion of scaffolding to describe the supportive structures offered to a learner that lead to the construction of higher mental processes [74]. The amount of information and guidance offered to the learner influences the complexity of the task. Bell et al. [3] and Banchi et al. [1] describe a continuum of several levels of inquiry. Learners start with highly-structured “confirmation inquiry tasks” in which questions, hypotheses, methods, and results are explicitly provided. They then gradually move to more “open inquiry tasks” in which they formulate their own questions, hypotheses, and methods, after which they construct arguments to explain experiment results [1]. By offering appropriate scaffolds (e.g. predefined task structures or explicit instructions) learners are able to progress from closed to more open forms of inquiry.

2.2 Learning is Inherently Social

In solitary learning situations, scaffolding is traditionally offered by non-social (technological) tools, for example by providing templates or background information about the topic, or by constraining the learner’s interaction with the learning environment [46, 71, 72]. However, in most classroom situations learners learn together with “someone else”, which can be beneficial to the learning process.

Social educational “partners” help each other to optimally develop their abilities and knowledge. According to Vygotsky [74] cognitive development occurs and is promoted by an individual’s interaction with the world and by collaboration with others. He distinguished between two developmental levels: the level of actual development and that of potential development. The actual development is the level that the learner has already reached and where they can comfortably solve problems independently. The potential level of development is the level that the child should be able to reach, if he/she interacts with more knowledgeable others. The space between the actual level of development and the potential level of development is referred to as the Zone of Proximal Development (ZPD) [74]. It is through guidance or collaboration with others that learners bridge the ZPD and manage to grow from their actual level of development to their potential level of development [15, 20].

There are many definitions of collaborative learning. For instance, Dillenbourg defines collaborative learning as “a situation in which two or more people learn or attempt to learn something together” [24, p. 1], focusing on participation of multiple parties in the learning process. Similarly, Rogoff’s [60] definition of collaboration describes mutual involvements, engagement, and participation in shared endeavours, which may or may not serve to promote cognitive development. Finally, the process of peer learning has been defined by Topping as “the acquisition of knowledge and skill through active helping and supporting among status equals or matched companions” [68, p. 1].

An important shared characteristic of such descriptions of collaboration is the requirement for a certain interactivity between learners, which is defined by the extent to which learning partners influence each other’s cognitive processes [24].

The role of the learner and their social partner shape how they collaborate with each other. Firstly, the social partner can be a “more knowledgeable other”, e.g. a teacher/tutor or more advanced student [76]. Secondly, the social partner could be a “similarly or differently knowledgeable other”, e.g. a fellow student of a similar level working on the same task [24, 68]. Finally, the learner could be working with a “less knowledgeable other”, e.g. a less advanced student in need of support of their own. Tutoring other students can contribute to the learning of the more advanced student as well [56].

2.3 ECR: A Three-Level Model to Organise Social Learning Interactions

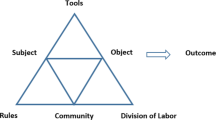

Related literature and theories on social learning describe several forms of social interactions that take place between collaborating learning partners. In this section we summarise and organise this theoretical background to derive a pragmatic model that can be used as a lens through which to look at social learning. We propose the “ECR” model, as illustrated in Fig. 1, which describes three levels of social interactions. Firstly, there are Educational interactions (e.g. asking for help and explaining). This requires “being in sync” with each other, which is pursued through Collaborational interactions (e.g. discussing joint goals and strategies, and performing joint actions). To achieve this, co-learners need to be “working towards the same thing” and they need to “know the value of their partner”, which can be calibrated through Relational interactions (e.g. bonding, shared experiences/emotions, team role assignments).

2.3.1 Social Learning Requires Educational Interactions

In collaborative inquiry learning, students working together provide each other with scaffolding. They do this by sharing and explaining their knowledge, ideas, and techniques [74]. This indicates that a less knowledgeable learner can benefit from support offered by a more- or differently knowledgeable other. This is done through what we call educational interactions. Through such interactions learners help each other to develop skills on both a cognitive level, related to learning content (how to understand a problem), and on the metacognitive level, related to the learning process (how to approach a problem).

Scaffolds and explanations offered by child A to child B not only help child B, but also contribute to the learning of child A. Offering explanations to a partner triggers certain cognitive processes in the learner. These cognitive processes are considered helpful for learners to gain a deeper conceptual understanding of the subject matter [56]. In inquiry learning situations, this process of explanation often occurs when making predictions or drawing conclusions.

Literature typically describes two forms of explanation: (1) self-explanation, which refers to explanation of the subject of interest to oneself [17]; and (2) interactive explanation, which refers to explanation to another person [56]. In the latter case, the presence of a social partner facilitates children’s verbalisation of their thinking (e.g. by asking probing questions). However, depending on the type of the social partner, children may exhibit different behaviours.

Tutors and teachers are typically more knowledgeable than the learner and have a more holistic view of the learner’s progress. Therefore, they can offer additional explanations and scaffolding in the various stages of the inquiry learning process. Furthermore, tutors can help learners to appraise their own performance and efficacy. Finally, tutors can use their knowledge about the skill level and personality of children to tailor the explanations and scaffolding.

2.3.2 Social Learning Requires Collaborational Interactions

When working on a joint activity, such as a learning task, collaborators rely on an intricate coordination and communication process to reach a level of alignment [18, 41]. Klein and Feltovich [41] describe such joint activities in terms of criteria, requirements, and choreography. As criteria for joint activities they state that there should be an intention to work together, and the work must be interdependent. As requirements for joint activities, there should be a degree of interpredictability between partners, there must exist a sufficient degree of common ground, and each partner’s actions must be directable by the other. Throughout the interaction, as they progress through the phases of the activity, partners use signalling to facilitate the choreography of collaborating on joint activities.

Such principles of joint activities apply similarly to the context of collaborative learning. In this context collaboration implies that both co-learners are more or less on the same status level, can perform the same actions, and often have a common objective [24]. Furthermore, offering meaningful scaffolding to one another requires that learners align their goals, ideas, plans, and reasoning in an explicit and understandable way [45, 67]. Establishing and maintaining this alignment takes place through certain types of interactions: we call these collaborational interactions.

Learners may seek alignment regarding the overall task objective, the strategy for attaining this objective, and the actions necessary for executing this strategy.

In inquiry learning, the task objective is often imposed by the learning task. Co-learners can align their interpretation of the task objective by agreeing upon the meaning of the task and by discussing possible completion criteria. The phases of the inquiry cycle outline the strategy that learners may take to reach the overall objective. Learners can establish alignment regarding their strategy by discussing the inquiry phases and may maintain this alignment by signalling their progression through the phases. Each phase of the inquiry cycle requires a specific sequence of actions. Learners should discuss such actions and agree upon a division of labour to prevent disrupting each other.

2.3.3 Social Learning Requires Relational Interactions

Effective collaborative inquiry learning requires a healthy working relationship. Firstly, for partners to accurately judge each other’s interests, abilities, skills, and knowledge, they need to interact and understand one another on a personal level. Secondly, in order to agree upon shared objectives, strategies, and actions, partners need to be “allies in learning”; meaning that they maintain mutually benevolent attitudes towards each other.

Through their past experiences and interactions, partners shape and maintain common ground [41]. Doing so, they are able to more accurately envision their partner’s strengths and weaknesses. This enables them to create a fair and appropriate role and task division.

While working on a task, it is important for partners to remain mutually invested in the learning process. The importance of positive feelings during the learning process has been stressed before [43]. They promote the individual’s openness to new experiences and resilience against possible negative situations [26]. Furthermore, dynamic behaviours might involve reciprocal influences between emotion and cognition [25]. For instance, emotions affect the ways in which individuals perceive reality, pay attention, and remember previous experiences, as well as the skills that are required for an individual to make decisions. Shared success and failures are ideal moments in the learning process where partners may offer each other encouragement and motivation.

2.4 Related Work from HRI

We envision that a social robot is able to navigate the social space of all three of the ECR levels by taking on a variety of roles in the collaborative learning process. As a starting point, we look at examples from related work, which help us to highlight and illustrate possible contributions of such a robot.

Robots in educational settings are often presented to the learner as a “peer” or “tutor” [53, 64, 78]. Within these roles the robot may have a different impact on the child’s learning and may use a different repertoire of behaviours and techniques to offer scaffolding.

Robots presented as facilitator, teaching assistant, or tutor often take on a role of “more knowledgeable other” to guide one or more learners in the learning task (e.g. [13, 14, 36, 48, 62, 77]). Operating in this role, a robot may contribute to the learning process through traditional tutoring methods, such as direct instruction, praise, encouragement, or explicit feedback [76]. For example, Kanda et al. [36] used a social robot as a facilitator to offer direct instruction and explanations to children working on a collaborative task. Their results suggest that the social nature of the robot contributed to increased motivation in early phases of the learning task. Similarly, Chandra et al. [13] found that a robot that acted as facilitator to two collaborating children improved their feelings of responsibility. Additionally, Saerbeck et al. [62] have shown that a robotic tutor with social supportive behaviours can have a positive effect on the performance of the learner. However, in certain tutoring situations prone to distractions, too many socially appropriate behaviours seem to have a detrimental effect on learning [37].

Alternatively, robots can be presented to the child as co-learners or peers. As such, the robot may portray a “differently (or equally) knowledgeable other” and may seek collaboration with the child within the learning task. In this role the robot may offer proactive anticipatory helping behaviours to improve the fluency of their collaboration [2, 28]. Such peer-like robots often engage with children over multiple interaction sessions, seeking to build a social relationship [34, 65]. A common approach for promoting learning with a peer-like robot is during playful game-like interactions. For example, the robot might play either an “ally” or an “opponent” of the child, challenging them to achieve a higher performance (e.g. [4, 12, 31]). To sustain such interactions over extended periods of time, the robot and child can engage in a diverse variety of such activities [19]. Other approaches focus on interactive storytelling with a social robot [44]. For example, Gordon et al. [27] used such a robot to improve children’s second language skills.

Finally, robots portraying a “less knowledgeable other” tend to evoke caregiving behaviour in the child. Children are naturally inclined to help a “robot in need” when it displays distressed behaviour. However, the extent of helping depends on how the child was introduced to the robot [6, 7]. Tanaka et al. [66] use such a care-receiving robot to promote vocabulary learning. In such cases, the child themselves may act as a tutor, correcting the robot’s mistakes (e.g. [29, 47]) or explaining concepts to the robot (e.g. [23]). Through this learning by teaching paradigm, the child gains a deeper understanding of the learning content [16, 61].

In conclusion, a robot could take on various (social) roles: explaining to the learner, being explained to by the learner, encouraging the learner, praising the learner in various ways, giving good or bad examples as a fellow learner, and many more. The effectiveness of these actions depends to a certain extent on the relation between the learner and the other, and how the other behaves and presents itself to the learner. To gain further inspiration regarding the design of concrete robot roles and behaviours we conducted a contextual analysis.

3 Contextual Analysis

The type of robot that we investigated would have to navigate the social interactions with learners in the context of an inquiry learning assignment. From theory and related work we found that the robot may navigate several roles, as outlined in the previous section: more-, differently-, and less knowledgeable other. Within such roles, we expect three different types of interactions to take place: educational, collaborational, and relational. As input to our design guidelines we carried out a contextual analysis to determine concrete opportunities for when and how robots may contribute to a child’s learning process.

3.1 Method

The field of Human-Computer Interaction often emphasises the importance of considering the context in which interactive products are to be used (e.g. [8, 9, 38, 49]). Over the years different terms have been used for contextual analysis, such as “Context-of-use analysis”, “Usability Context Analysis”, or “Contextual Design”. Assessing and accounting for contextual factors is considered as an integral part of a user-centred design process aimed at optimising usability of products. By mapping the challenges and opportunities that exist in a certain context, the outcomes of a contextual analysis will typically have a drastic influence on the design of an interactive product. Therefore, such a contextual analysis should take place at an early stage—before having a fully developed prototype or product.

The method of contextual analysis aims to take into account, as far as possible, all factors that may influence the use of a product. Such factors include: characteristics of the user group; tasks that users aim to carry out with the product; and the technical, organizational, and physical environment where the product is to be used. A contextual analysis typically consists of approaches like stakeholder analysis, surveys, and focus groups [49], as well as ethnographic strategies like case studies and field studies [50] (or combinations thereof).

In our contextual analysis we used such ethnographic methods to investigate the context in which children learn with our specific inquiry assignment. We conducted a field study where we recorded pairs of children working with our assignment. We then used a grounded theory approach to design an annotation scheme with which we analysed the observed behaviours [51, 63].

3.2 Venue and Participants

Since we are interested in gaining insights into typical behaviours of children as they occur in practice, we aimed to maintain a high degree of ecological validity throughout the study. Therefore, we focused on pairs of children working on a learning assignment in their own school during regular school hours, without a robot. A total of 22 Dutch Montessori elementary school students (50% female), aged 6–9 years old (the target age range of the EASEL project), participated in the study. Children in this age range show variation in cognitive development and in verbal and non-verbal behaviour. As such, including children across this age range gives us a broad spectrum of insights into possible behaviours. The school is located in a residential area near the University of Twente, in the city of Enschede, The Netherlands.

3.3 Procedure

Prior to the study, ethical approval was granted by the ethical boards of the Behavioural, Management and Social sciences (BMS) and the Electrical Engineering, Mathematics and Computer Science (EEMCS) faculties of the University of Twente. Permission forms were distributed to the children’s parents through the school’s regular communication channels.

The experiment started with an explanation to a pair of children about the assignment. The researcher showed them the learning materials and demonstrated how they worked. This introduction took approximately 5 min. After the introduction, the children would each receive the assignment sheets with five tasks from the experimenter. The children were given approximately 30 min to complete the assignment. When a pair finished the assignment, the experimenter would thank them for their participation and say goodbye. During the experiment, the experimenter was present so that children could ask for help if they had any questions.

The interactions were recorded by three cameras: two for recording actions of each of the two children and an overview camera to record the learning materials.

3.4 Balance Scale Materials

The physical balance scale instrument was originally designed by Inhelder and Piaget [30]. In our study, children used such a balance scale (see Fig. 2) to explore the moment of force. The moment of force (or torque) is a physical concept that describes the rotational force acting upon an object in relation to a reference point. To explore this concept, our children did small experiments in which they placed pots of various weights at various distances from the scale’s central pivot point.

The two-armed wooden balance had a central pivot point and three pins at equal increasing distances on each arm. These pins were numbered 1 to 6 from left to right. Numbers 1 to 3 were given a blue label and numbers 4 to 6 were given a green label. The children received four pots that could be placed over the pins: two heavy red pots and two lighter yellow pots. Two wooden blocks could be placed under the balance to secure it in a horizontal position.

3.5 Learning Assignment

Children received a total of five numbered tasks as part of their learning assignment. Task instructions were printed on paper and consisted of brief textual descriptions and illustrations.

Tasks 1, 2, 3, and 4 were similar in nature. In these tasks the children’s instructions and actions were symmetrical and synchronous: each child had the same task instructions and could complete the same actions. Each task was structured according to the steps of the inquiry cycle. In the preparation step, children were first asked to place a wooden block underneath each arm to prevent the balance from tilting prematurely. They were then asked to place two pots on the balance (pot color and position were given explicitly in each task). In the prediction step, children were asked to predict what they thought would happen when the wooden blocks were removed. They could select one of three options: (1) the blue side will go down; (2) the balance will stay in equilibrium; or (3) the green side will go down. In the experiment step, children were asked to remove the wooden blocks, allowing the balance to tilt freely. During the observation step they explicitly stated their observation about the position of the balance and explained whether this was in agreement or disagreement with their initial prediction. Finally, in the conclusion step they would give an explanation for their observation using their own words (explain why the balance tipped left, right, or stayed in equilibrium). They gave this explanation verbally to each other and the experimenter.
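To make the physics behind the three prediction options concrete, the following is a minimal Python sketch of the moment-of-force comparison for the balance described in Sect. 3.4. It is an illustration only and not part of the study materials. The pot weights are hypothetical (the study only states that red pots are heavier than yellow ones), the pin spacing is taken as one unit, and the arms are treated as an idealised, frictionless lever. The function names `signed_distance` and `predict_outcome` are invented for this example.

```python
# Hypothetical pot weights in arbitrary units: the study only states that
# red pots are heavier than yellow ones, not by how much.
POT_WEIGHT = {"red": 2.0, "yellow": 1.0}


def signed_distance(pin: int) -> int:
    """Distance of a pin from the pivot; negative on the blue (left) arm."""
    if pin not in range(1, 7):
        raise ValueError(f"pin must be between 1 and 6, got {pin}")
    # Pins 1-3 sit on the blue arm (pin 1 is outermost),
    # pins 4-6 on the green arm (pin 6 is outermost).
    return pin - 4 if pin <= 3 else pin - 3


def predict_outcome(placements: list[tuple[str, int]]) -> str:
    """Map the net moment of all placed pots to one of the three options."""
    net_moment = sum(POT_WEIGHT[colour] * signed_distance(pin)
                     for colour, pin in placements)
    if abs(net_moment) < 1e-9:
        return "the balance will stay in equilibrium"
    if net_moment < 0:
        return "the blue side will go down"
    return "the green side will go down"


if __name__ == "__main__":
    # Illustrative placement: a red pot on pin 3 and a yellow pot on pin 5.
    print(predict_outcome([("red", 3), ("yellow", 5)]))
```

Under the assumed 2:1 weight ratio, a heavy pot close to the pivot can be balanced by a light pot further out, which is the kind of relation between weight and distance that the tasks invite children to discover.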

Task 5 was structured differently in order to possibly trigger more diverse collaborative behaviours. In this final task children worked asynchronously and were given asymmetrical task instructions: here it was necessary for the children to take consecutive steps, where each child took different actions. First, child A would choose one of the pots and place it on the blue side of the balance. Then, child B would choose one of the pots and give it to child A. Subsequently, they had to answer the following question together: “where should the pot be placed on the green side to keep the balance in equilibrium?” Child A would then place the pot on the green side where they thought it should be placed to keep the balance in equilibrium. Finally, after removing the blocks, the children would explain to each other if their prediction was correct and why the balance was in this position.
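The question posed in Task 5 can be sketched as a small search for the balancing pin. The Python fragment below is an illustration under stated assumptions, not the procedure used in the study: the 2:1 weight ratio between red and yellow pots is hypothetical, the pins are assumed to be one unit apart, and the helper name `balancing_green_pin` is invented here.

```python
from fractions import Fraction
from typing import Optional

# Hypothetical weights: the study only says red pots are heavier than yellow.
POT_WEIGHT = {"red": 2, "yellow": 1}


def balancing_green_pin(blue_colour: str, blue_pin: int,
                        green_colour: str) -> Optional[int]:
    """Return the green pin (4-6) that balances the blue pot, or None.

    Equilibrium requires weight * distance to be equal on both arms.
    """
    if blue_pin not in (1, 2, 3):
        raise ValueError("the first pot must be on the blue side (pins 1-3)")
    blue_distance = 4 - blue_pin  # pin 3 is 1 unit from the pivot, pin 1 is 3
    required = Fraction(POT_WEIGHT[blue_colour] * blue_distance,
                        POT_WEIGHT[green_colour])
    # Only whole distances between 1 and 3 correspond to an actual pin.
    if required.denominator == 1 and 1 <= required <= 3:
        return 3 + int(required)
    return None


if __name__ == "__main__":
    # Child A puts a red pot on pin 3; child B hands over a yellow pot.
    print(balancing_green_pin("red", 3, "yellow"))
```

With these assumed weights, some combinations have no balancing pin at all (the function returns `None`), so depending on the real weights a pair of children may find that no position on the green side keeps the balance in equilibrium.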

4 Annotation Scheme

To further understand the children’s educational, collaborational, and relational interactions and to understand how the children progress through the phases of the inquiry cycle, we manually annotated the video recordings using the ELAN tool.

The annotation scheme was divided into five layers. To determine the label sets for these layers, we first inspected sample fragments from the recordings to get a sense of the kind of behaviours that could be found in them. We used this to choose the possible labels for annotating each of the three levels from the ECR model. In the fourth annotation layer we labeled video fragments according to the occurrence of the inquiry cycle phases. The fifth layer only had the label Supplementary interactions. This label was used to indicate fragments containing behaviour that we identified as important for the learning process, but did not fit cleanly in the existing label descriptions in our code book. These Supplementary interactions were subsequently also ordered along the three levels of the ECR model.

Using the developed annotation schemes, coder A labeled 100% of the data. To calculate the inter-annotator agreement score, coder B labeled an overlapping 20% of the data. On the combined annotations of the educational and collaborational levels, we computed a Cohen’s Kappa of 0.11. Inspection of the disagreement data showed that this low score was mostly caused by one coder assigning a label to a certain video fragment while the other coder did not. Upon discussion of such fragments, it became clear that the majority of the behaviours that were missed by one of the coders did indeed belong under the label that the other coder had assigned. When we excluded the annotation instances that were missed by one of the coders, Cohen’s Kappa increased to 0.77. The Cohen’s Kappa for all annotations in the inquiry process category was 0.61. After excluding the fragments that were missed by one of the coders, Cohen’s Kappa increased to 0.91. These inter-annotator agreement scores left us confident that the labels that we gathered from the annotation procedure were meaningful enough for further analysis, even though a number of instances of relevant interactions might not have been assigned a label.
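For readers unfamiliar with the agreement measure, the sketch below shows one way Cohen’s Kappa can be computed for two coders, both with and without the fragments that one coder left unlabeled. It is a generic illustration, not the analysis script used in the study. Treating “no label” as a category of its own (the placeholder string `"none"`) is an assumption made for this example, and the function names and toy labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's Kappa: (observed - expected agreement) / (1 - expected)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both coders must label the same non-empty item set")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: product of each coder's marginal label proportions.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical label throughout
    return (observed - expected) / (1 - expected)


def drop_missed(labels_a: list[str], labels_b: list[str],
                missing: str = "none") -> tuple[list[str], list[str]]:
    """Keep only the fragments that both coders assigned a label to."""
    kept = [(a, b) for a, b in zip(labels_a, labels_b)
            if a != missing and b != missing]
    return [a for a, _ in kept], [b for _, b in kept]


if __name__ == "__main__":
    # Toy data, invented for illustration; "none" marks an unlabeled fragment.
    coder_a = ["explaining", "none", "integrating", "explaining", "none"]
    coder_b = ["explaining", "explaining", "integrating", "none", "none"]
    print("all fragments:", cohens_kappa(coder_a, coder_b))
    print("both labeled: ", cohens_kappa(*drop_missed(coder_a, coder_b)))
```

Comparing the two printed values on one’s own data shows how much of the disagreement stems from missed fragments rather than from conflicting labels, which is the distinction drawn in the paragraph above.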

The results of our annotations will allow us to identify how children express themselves when navigating various roles in relation to their learning partner. Such insights will form the basis for our behaviour design guidelines for a social robot that will function in similar roles. Additionally, these annotations will allow us to identify when children express certain behaviours, which gives us clues to when the robot might contribute. Finally, we will be able to assess whether our specific learning task is appropriate for the target user group, and whether it lends itself to investigate the full inquiry learning cycle.

The annotation schemes are described in more detail in the next five sections.

4.1 Label Set for Educational Interactions

During the course of an assignment, children often engaged in interactions that were educational by nature. That is, they engaged in discussion and knowledge construction around the learning content. On the one hand, we observed very explicit educational exchanges (for instance, child A asking child B for an explanation or opinion). On the other hand, children engaged in implicit educational interactions (for example, child B integrating an argument in their explanation that was previously made by child A). Additionally, we observed instances where children would engage in discussions where they agreed or disagreed with each other’s answers or actions.

The following labels were used to identify the interactions on the educational level. These labels were partly based on work by van Dijk et al. [69], who investigated collaborative drawing behaviours.

- Elicitation/asking questions: When children want to extract knowledge or support from their partner, mostly in the form of a question. For example, “What do you think will happen?”.

- Explaining: When a child gives any form of verbal explanation directed at the partner about the assignment or the principles of balance. This explanation may be spontaneous or elicited. For example, “This pot is at the same spot as the other, so I think it will stay in equilibrium”.

- Thinking aloud: This label includes cases where a child thinks aloud about the construction of their argumentation, but this externalisation is not explicitly directed towards the partner. Such utterances are often less elaborate than explanations. For example, “hmm... I think... hmm... the left side will go down”.

- Agreement or disagreement: Instances where a child shows basic (dis)agreement with their partner’s reasoning, without explicitly showing comprehension. This includes, for example, verbal utterances (“yes, I think so too” or “no, that’s not right”) and nonverbal gestures (nodding or shaking their head).

- Integrating: This label goes beyond basic agreement, when a child uses the content of a partner’s explanation in their own line of argumentation. For example, child A says: “I think the balance tipped left because the pot on the blue side is placed more towards the end” and child B responds: “Because it is more towards the end of the balance the left side is heavier”. In this case, the explanation of child A is labeled as Explaining and the response of child B as Integrating.

- Elaborate critique: When a child criticises or corrects the content of the explanation of the partner, going beyond basic disagreement. For example, if child A explains “The yellow pot is heavier” and child B responds “No, it’s lighter!”, the response of child B is annotated as Elaborate critique.

4.2 Label Set for Collaborational Interactions

While working on their assignment, children had to collaborate in order to share the physical learning materials. Doing so effectively required them to align their interpretations of task objectives, communicate their approach, and agree upon actions. The following labels were used to identify the interactions on the collaborational level.

- Reading aloud: When a child reads a task or instruction out loud. Since it is often difficult to distinguish between reading out loud for oneself and reading for the partner, this label includes both cases.

- Coordination: When a child makes explicit suggestions or explanations about how to proceed with the task, or explicitly mentions their progress within the task. For example, “it’s your turn to place a pot” or “I’m finished with this one”.

4.3 Label Set for Relational Interactions

In order to collaborate effectively, partners needed to maintain mutually benevolent attitudes with respect to each other. Such attitudes were shaped and maintained through relational interactions, which took the form of shared experiences, emotions, and frustrations. We did not have a grounded annotation scheme for interactions that took place on the relational interaction level. Instead, we labeled salient episodes in this domain as part of the ‘Supplementary interactions’ category.

4.4 Label Set for Inquiry Processes

A typical inquiry learning cycle is composed of five processes: (1) preparation; (2) hypothesis generation; (3) experimentation; (4) observation; and (5) conclusion. To analyse how children progressed through these phases, we identified behaviours that represent these processes using the following labels:

- Preparation: When children prepare the physical learning materials in accordance with the task, e.g. adding pots on the balance and placing the wooden blocks.

- Hypothesis generation: In this phase, children state their hypothesis, e.g. by verbalising out loud to each other, or by selecting a hypothesis on their assignment sheet.

- Experimentation: Performing the actual experiment, i.e. removing the wooden blocks so that the balance tips over.

- Observation: In this phase children state their observations about the experiment, by verbalising or pointing (e.g. “That side went down!”), or by documenting the observation on their assignment sheet.

- Conclusion: When children draw conclusions about the current task, by explaining to each other. This phase only occurred verbally, e.g. by explaining whether their hypothesis was correct and why.

4.5 Supplementary Interactions

We observed many salient behaviours and interactions that appeared to be relevant to the above label sets, but did not fit cleanly into any of the available labels. These spanned a wide range of actions. For example, at one point a child grabbed and took materials from another child; this action happened only in one session, but was considered very salient for collaborational interaction. Therefore, to fully capture the richness of the social interactions we labeled such additional observed behaviours as Supplementary interactions. Behaviours with this label were then ordered along the three levels of the ECR model and are reported and discussed as such in the respective sections below.
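One way to keep the label sets above machine-checkable during annotation is a small lookup table. The sketch below is an assumption about how such a scheme could be encoded, not the tooling used in this study; the `Annotation` record, its fields, and the `validate` helper are hypothetical names. Since the relational level has no dedicated labels, it appears only as a level along which supplementary interactions can be ordered.

```python
from dataclasses import dataclass
from typing import Optional

# Label sets as listed in Sects. 4.1, 4.2 and 4.4; the relational level has no own labels.
LABEL_SETS = {
    "educational": {
        "Elicitation/asking questions", "Explaining", "Thinking aloud",
        "Agreement or disagreement", "Integrating", "Elaborate critique",
    },
    "collaborational": {"Reading aloud", "Coordination"},
    "inquiry": {
        "Preparation", "Hypothesis generation", "Experimentation",
        "Observation", "Conclusion",
    },
}
SUPPLEMENTARY = "Supplementary interactions"
ECR_LEVELS = {"educational", "collaborational", "relational"}


@dataclass(frozen=True)
class Annotation:
    """Hypothetical record for one labelled video fragment."""
    start_s: float
    end_s: float
    label: str
    ecr_level: Optional[str] = None  # only used to order supplementary items


def validate(annotation: Annotation) -> bool:
    """Return True if the annotation uses a known label consistently."""
    if annotation.end_s <= annotation.start_s:
        return False
    if annotation.label == SUPPLEMENTARY:
        # Supplementary items are ordered along one of the three ECR levels.
        return annotation.ecr_level in ECR_LEVELS
    return any(annotation.label in labels for labels in LABEL_SETS.values())


grab = Annotation(12.0, 15.5, SUPPLEMENTARY, ecr_level="collaborational")
explain = Annotation(20.0, 27.0, "Explaining")
typo = Annotation(30.0, 31.0, "Explainin")
```

Here `validate(grab)` and `validate(explain)` succeed, while the misspelled label in `typo` is rejected.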

5 Results of the Contextual Analysis

This section reports the content of the annotations. All 11 pairs of children completed tasks 1, 2, 3, and 4, each of which followed a similar structure. However, in 36% of the tasks they needed some assistance from the researcher. In most cases, this simply meant the researcher reading the task out loud or rephrasing the task instructions in different words. In cases where that did not help, the researcher would ask thought-provoking open questions without revealing the answer.

Task 5 took on a different structure, which likely increased its complexity. During this final task, children required more support from the researcher; only 3 out of 11 pairs of children were able to complete the last task without any help or guidance. The children expressed confusion about the structure of this task, which was composed of different steps than the previous tasks. In particular, they often did not understand the different responsibilities that they needed to fulfill in order to complete the task. Still, during this task we did observe interactions related to learning.

5.1 Educational Interactions

In identifying the educational interactions between children, a total of 458 annotations were performed. Frequencies for individual labels on the educational level are shown in Table 1.

The most prevalent labels were Elicitation, Explaining, and (Dis)agreement, which indicates that children were intentionally helping each other by explicitly sharing knowledge. Their helping behaviours focused primarily on topics related to the process (e.g. “I don’t understand what we have to do”) and the learning content (e.g. “I don’t understand what happened”). Additionally, learners unintentionally helped their partner during behaviours labeled as Thinking aloud, by implicitly sharing knowledge about the task or content. Finally, we sporadically found instances where children explicitly integrated or critiqued their partner’s explanation or reasoning process, illustrating a deeper level of discussion and debate.

In addition to the annotations labeled according to our annotation scheme, we performed an explorative analysis of the annotations that were labeled as Supplementary interactions. Firstly, in these annotations we observed many instances of pointing and gesturing behaviour. This was often used as a nonverbal clarification or explanation (e.g. pointing at a pot or location on the balance, gesturing the position of the balance, or pointing at the other’s assignment sheet to indicate the correct answer). Secondly, we found several implicit and explicit indications that children were comparing and aligning their own answers to those of their partner. For instance, they would look at and sometimes copy the answer of their partner, they would discuss explicitly (e.g. “What are you going to fill in?”), or they would announce when they had a breakthrough of their own (e.g. “I know the answer!”). Thirdly, although most children accepted lessons learnt through observations of the embodied learning materials (e.g. “I have now of course seen it... so I have to believe it”), we occasionally saw instances of confirmation bias. For instance, some children would alter reality to fit their prediction (e.g. perhaps jokingly, they would say “See, I was correct, I said it would stay balanced”, while artificially holding the balance in equilibrium with one hand). Others would adapt their original (incorrect) hypothesis to match their observations on the fly (e.g. “But I did think this would happen beforehand” or “You know, actually I knew this was going to happen”). Nevertheless, in some instances this prompted children to discuss an alternative hypothesis or propose an alternative experiment (e.g. “What would happen if we would place this pot here and the other here?”). Finally, some children showed curiosity about the balance unrelated to the assignment, which provoked them to investigate beyond the scope of the subject matter (e.g. they would try to figure out what was inside the weighted pots or they would place unrelated items on the balance).

From these additional observations we found that children discussed their observations and updated their knowledge while progressing through tasks. We also found that gestures and gaze behaviours played an important supplementary role in the collaborative inquiry learning interactions: children seemed to naturally take advantage of the physical nature of the embodied learning materials when explaining to their partner.

5.2 Collaborational Interactions

In identifying collaborational interactions between children, a total of 76 annotations were performed. Frequencies for individual collaborational labels are shown in Table 2. In general, we found behaviours corresponding to all of the criteria, requirements, and choreography of joint activities discussed in Sect. 2.3.2.

Behaviours that fell under the label Reading aloud included children reading (parts of) the assignment out loud. Often, this role was taken on by the child who was most proficient in reading. However, when both children had difficulties reading the task instructions, the experimenter would read the assignment out loud. By reading instructions out loud, the children seemed to ensure that both team members were in agreement on the task objectives. Additionally, this naturally gave whoever was listening the opportunity to ask clarifying questions or suggest a plan of action.

Among behaviours labeled as Coordination, we mostly found interactions that were related to the planning and execution of individual actions. For instance, children would take turns manipulating the learning materials (e.g. “Now it’s your turn to place the pots”), or they would share responsibility (e.g. “I’ll place this pot, you do that one”). Furthermore, they would occasionally plan ahead based on the structure of the task (e.g. “Hang on, before we can remove the blocks we first have to give our answer”). Finally, we identified instances where children explicitly mentioned their progress in the assignment (e.g. “There, that was task 2 done, let’s move on to the next!”).

From the explorative analysis of the behaviours in the supplementary interactions category, we identified several additional situations that were relevant on the collaborational level. Firstly, in addition to the explicit verbal interactions coded under the Coordination label, we found several instances of successful implicit coordination. While children worked together on the physical learning materials, they would often maintain a shared focus of attention through mutual gaze and by observing each other’s actions. Additionally, we noticed that children would often use behaviours, body language, pointing gestures, or gaze to signal their intentions to their partner. For example, children would seek approval or permission while doing actions (e.g. very slowly/hesitantly starting an action, while searching for eye contact, giving their partner opportunity to intervene), or they would assist their partner’s actions (e.g. holding the balance steady while the partner removes or places pots). Secondly, we observed instances where misalignment of actions or goals occurred. Children would often explicitly signal such a situation to their partner verbally (e.g. by stating “This pot is in the way” or “I think we did it wrong”) or nonverbally (e.g. obstructing or overruling their partner’s actions, for instance by forcefully taking or removing a pot). In such cases, partners would seek re-alignment through more explicit coordination. They would, for instance, seek agreement with their partner before performing actions (e.g. “Can I do this now?” or “Are you choosing that pot..? Ok good!”) or they would explicitly time and synchronise their actions (e.g. “Now? Yes? ... Yes now!” or “3..2..1..Now!”). Finally, there were instances where misalignment of task progress occurred. For example, children would often check their partner’s progress in the task by looking at and comparing each other’s assignment sheets. If they noticed that their partner was falling behind, children would often use one of two strategies: (1) they would dawdle patiently while waiting for their partner to catch up, not directly contributing to their partner’s progress (although some children asked the experimenter “Do we need to wait for each other?”); or (2) they would directly interfere with their partner’s progress by prompting the answer (e.g. “Fill this one in [pointing at answer sheet]”), or by taking on a more dominant role in which they would give their partner explicit tasks or explicitly direct their partner’s actions (e.g. “Now you do this” or “You have to do that”).

5.3 Relational Interactions

Since we did not have a grounded annotation scheme for behaviours that constitute relational interactions, we looked at the interactions that fell under the supplementary interactions category. In doing this, we identified several situations that appeared relevant on the relational level. Firstly, in addition to mutual eye contact and shared focus of attention, children would often share mutual emotions while progressing through the tasks. For example, they would smile and laugh together when something (unexpected) happened, they would celebrate or cheer together when they had made a correct prediction (e.g. “Yaaay!”), or they would explicitly share their confusion or frustration when their predictions were proven wrong (e.g. “...HUH...??!!”). In addition, some partners would occasionally mimic actions of the other for comic effect, often resulting in shared laughter (e.g. task related actions such as placing pots in mirroring locations, or non-task related actions such as stacking papers). Secondly, on the one hand we observed examples of friendly courtesy towards each other (e.g. “[handing over a pot] if you want, you can place this one” or “you may remove the blocks if you like”). On the other hand, we saw signs of frustration about their partner’s actions, often expressed as deep sighs or rolling their eyes. Finally, we observed cases where partners engaged in mutual “mischief” outside of the scope of the task, where they would reinforce each other’s mischievous behaviours.

The behaviours that we identified on the relational level suggest that children worked towards establishing and maintaining a positive relationship. Mutual shared experiences (both positive and negative) contribute to a stronger social bond, which in turn can strengthen the feeling of being “allies in learning”. Although we did observe instances of frustration, overall the children acted benevolently towards each other. Additionally, we anecdotally identified behaviours that could be associated with building rapport. For example, children mirrored or mimicked each other’s behaviours, gestures, and facial expressions, suggesting a level of interpersonal synchrony.

5.4 Inquiry Processes

In identifying the inquiry processes children engaged in, a total of 846 annotations were performed. Frequencies for individual labels are shown in Table 3.

These results verify that children go through all phases of the inquiry learning process when working with the balance task. However, we found an imbalance between the frequency of observations in the various phases: children more frequently engaged in preparation, experimentation, and observation behaviours. In fact, within a single task, children would often complete multiple quick, successive cycles of preparing and experimenting, skipping the hypothesis generation and conclusion phases. Preparation, experimentation, and observation phases were composed primarily of actions and verbalisations related to the practical or physical nature of the task (e.g. placing/removing pots and blocks, or remarking on the tilt of the balance). In contrast, behaviours associated with the hypothesis generation and conclusion phases were more cognitive and reflective in nature and focused on constructing and organising knowledge (e.g. discussing or explaining how to interpret the experiment outcome, or combining prior knowledge and new knowledge to construct an updated hypothesis).
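To make the notion of skipped phases concrete, the sketch below splits a chronological sequence of inquiry-process labels into cycles and reports which phases each cycle lacks. It assumes, as a simplification not taken from the study, that a new cycle starts whenever a Preparation label follows any other phase; the function names and the example sequence are hypothetical.

```python
PHASES = ["Preparation", "Hypothesis generation", "Experimentation",
          "Observation", "Conclusion"]


def split_cycles(labels):
    """Split a chronological label sequence into cycles.

    Assumption: a cycle starts at a Preparation label that follows a
    different phase (or at the start of the sequence).
    """
    cycles, current = [], []
    for label in labels:
        if label == "Preparation" and current and current[-1] != "Preparation":
            cycles.append(current)
            current = []
        current.append(label)
    if current:
        cycles.append(current)
    return cycles


def missing_phases(cycle):
    """Return the phases, in canonical order, that a cycle does not contain."""
    present = set(cycle)
    return [phase for phase in PHASES if phase not in present]


# Invented sequence: one complete cycle followed by a quick prepare-and-test cycle.
sequence = ["Preparation", "Hypothesis generation", "Experimentation",
            "Observation", "Conclusion",
            "Preparation", "Preparation", "Experimentation", "Observation"]

report = [missing_phases(c) for c in split_cycles(sequence)]
```

For this invented sequence, the first cycle is complete and the second lacks Hypothesis generation and Conclusion, which is the pattern of quick successive cycles described above.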

5.5 Conclusion of the Contextual Analysis

In our analysis we have identified a rich collection of behaviours aligned with our ECR model that children exhibit in practice, in the context of our specific learning assignment. Furthermore, we found that children went through all phases of inquiry and displayed learning behaviours appropriate to each phase. These results show, firstly, that our inquiry learning assignment is suitable for conducting research on collaborative interactions. Secondly, they show that our assignment is usable for the children in our target user group.

Based on these findings from the contextual analysis we would like to identify specific opportunities for when and how a social robot may make a meaningful contribution to the learning process. In the following section we present concrete design guidelines that focus on specifying potential roles and behaviours for the robot, as well as opportunities in the learning cycle where such a robot may potentially intervene.

6 Design Guidelines for Educational Robots

In this section we present four general Recommendations and fourteen concrete Design Guidelines (DG 1-14) for developing social robot behaviours, derived from our findings in the literature and our contextual analysis, and we offer concrete examples of possible robot behaviours and phrases that illustrate how each design guideline may materialise in practice.

6.1 Recommendation 1—Designing Educational Behaviours: The Robot Should Offer Adequate Content Support and Process Support

Learners who construct knowledge [55] through the process of inquiry learning [40] require a certain degree of scaffolding to achieve their next level of cognitive development [74]. In the observations from our contextual analysis we found many instances where children scaffolded each other’s learning process. In some instances, we saw behaviours that do not contribute much to learning, such as directly prompting answers or even blatantly copying answers. However, in most cases scaffolding took place through explanations from one child to the other.

6.1.1 DG 1: The Robot Could Use the Physical Learning Materials to Supplement Its Scaffolding

From our observations of children interacting we found that, when explaining, they naturally used the physical learning materials as tools or props to supplement their explanations. A robot could take advantage of similar techniques when scaffolding the child’s learning.

Both the robot and the learning materials are physically embodied in the environment of the child, which can be quite naturally leveraged to create more coherent scaffolding behaviour. By referring to objects or aspects of the learning materials, the robot can further supplement its explanations or increase the legibility of its instructions. Such supplementary didactic behaviours may take several forms depending on the robot’s embodiment and physical capabilities.

For example, the robot may use nonverbal behaviours like gazing, gesturing, or pointing to reference locations, objects, or components of the learning materials. Such behaviours can be used while the robot is explaining or reading out loud, or when objects are referenced by the child or assignment. Furthermore, robots with fine motor skills may physically manipulate learning materials, to offer an illustration (e.g. the robot may pick up a red pot and a yellow pot and say “this one seems heavier, what do you think?”).

6.1.2 DG 2: A Robot Should Explicitly Take on a Certain Role

Based on our observations we found that an individual child might be more knowledgeable in one aspect of the task while they are less knowledgeable in another. Furthermore, we saw that children naturally and effortlessly switched between such roles as they progressed through the learning tasks together. In the current analysis we have not investigated in detail how children establish and regulate their respective roles of more knowledgeable and less knowledgeable team member—a process which seems to occur implicitly. However, we do see opportunities for a robot to support the learning process by explicitly navigating a certain role.

For a robot to effectively help children learn, the child must know how they can benefit from the robot’s presence. In other words, the types and forms of support (e.g. actions, information, knowledge) that the robot will be able to offer them will depend on the role that the robot takes. Since we are unsure how the robot may navigate between roles implicitly, we suggest that the robot should have explicit means of presenting itself in a certain role, in order for children to more easily appraise the support that they may potentially receive from the robot.

For example, a robot could explicitly introduce itself in a certain way or the designer could provide the robot with a certain background story. On the one hand, a robot navigating the role of a less or differently knowledgeable other could, for instance, introduce itself (e.g. “Hi, my name is [..]. I’m interested in learning about topic X, would you like to investigate it together?”) or could be introduced by the designer with a background story (e.g. “This is [..], an exchange student from the robot school. Like you, it is studying topic X, maybe you can study together.”). On the other hand, a robot navigating the role of a more knowledgeable other could, for instance, introduce itself (e.g. “I have already learned something similar to this topic, maybe I can help you with it.”) or the designer could describe the background story of the robot (e.g. “The robot has practiced this task a lot of times before, so you can ask it for instructions.”). The choice of role will likely depend on how the designer wishes to present and introduce the robot to the child, as well as on the appearance of the robot, the type of task, the learning context, and the research interests.

6.1.3 DG 3: A Robot Operating in the Role of Differently Knowledgeable or Less Knowledgeable Other Could Leverage the Interactive Explanation Paradigm

In our observations we often saw educational interactions by the more knowledgeable child being triggered by something that the less knowledgeable child said or did (e.g. making mistakes, looking confused, or asking). The more knowledgeable child would then use their expertise to provide support to the other child (e.g. correcting, explaining, or thinking/reading aloud).

In such situations a peer-like robot can offer social facilitation (i.e. audience effect) through which it can leverage the interactive-explanation or the learning-by-teaching paradigm [56] by prompting task-related questions to provoke a (verbal) response. This may trigger the child to (re)organise specific elements of their knowledge to offer a coherent explanation.

For example, in some cases, the robot may ask questions that require the child to reflect on the process of the learning assignment (e.g. “What did we do in the last step?” or “What does the task say about the next step?” or “What was our initial hypothesis, was it correct or not?” or more generically, “What happened to the balance?”). In other cases, the robot may prompt for specific knowledge that children should have previously acquired, or highlight information that they need to answer the current task (e.g. “Can you remember which of the pots was heaviest?” or “Why is that pot further away from the centre than the other pot?” or “In the last exercise the balance tipped to the left, so why does it stay balanced now?” or “What do you think would happen if we moved one of the pots closer to the pivot?” or more generically, “Can you explain why the balance behaves like this?”).

6.1.4 DG 4: A Robot Operating in the Role of a More Knowledgeable Other Could Offer Tutoring to the Learner

Our observations revealed many instances where children needed process and content support to understand the learning assignment. In many cases such support was offered by the more knowledgeable child. The experimenter would offer additional tutoring when both children were unable to continue with the task.

In such cases, a tutor robot may offer the child directions, advice, and explanations regarding the learning content and learning process. It may help them in specific areas where they are struggling or where they require additional information. Depending on the robot’s reasoning capabilities it could either attempt to automatically recognise when a child requires tutoring or could respond to a child’s explicit request for help. In some cases it would be sufficient for the robot to read the instructions out loud and in other cases the robot may need to employ more advanced forms of tutoring.

For example, regarding the inquiry learning process, the robot may offer additional background information about the steps of inquiry (e.g. “In the hypothesis step we must think about what we expect will happen” or “When giving a conclusion, try to think about whether your hypothesis was correct or not” or simply reading the assignment out loud). Regarding learning content the robot may offer, for example, analogies that the child might be familiar with (e.g. “This balance is like a seesaw on the playground. What happens if a mouse and an elephant sit on either side of a seesaw?”), or it might refer to prior knowledge (e.g. “Remember, we saw last time that the red pot is heavier than yellow”), or might explain underlying conceptual principles (e.g. “When a pot is further away from the pivot it puts more force on that side of the balance”).

6.2 Recommendation 2—Designing Collaborational Behaviours: The Robot and Child Should Engage in Joint Activities

Effective collaborational interactions require collaborators to work towards aligning their goals, objectives, strategies, abilities, and actions [18, 41]. According to Klein and Feltovich [41] successful joint activities are achieved (among other things) through an interplay between the following five aspects: (a) an intention to collaborate; (b) a level of interdependence between collaborators; (c) common ground; (d) directability of one’s actions by their partner; and (e) effective signalling behaviour. In our observations we saw each of these aspects materialised in one form or another in the children’s behaviours and interactions. A social robot aimed at collaborating with a child should be designed to also navigate these aspects of joint activities. In the next five design guidelines we discuss each of Klein and Feltovich’s requirements and translate them into more specific robot behaviours.

6.2.1 DG 5: To Express an Intention for Collaboration, the Robot Should Disclose Its Own Goals and Should Discuss Task Objectives, Strategies, and Joint Actions

Klein and Feltovich [41] describe the intention to collaborate as a mostly implicit and unspoken agreement between all team members. From our observations, however, we found examples where children agreed upon goals and strategies, and examples where children discussed intentions to perform individual actions. By doing so they explicitly expressed their intention to collaborate, in addition to any implicit unspoken agreement that might have preexisted.

Similarly, we suggest that a robot should express such intentions for collaborating. Since we did not investigate unspoken intentions, we can only offer suggestions for explicit robot behaviours. It is important to note that educational robots are usually created and programmed to accomplish a certain objective (e.g. to improve the child’s learning in some form or another). Such an objective is not necessarily known to the child. However, to signal to the child that the robot has a benevolent intention to collaborate, it could disclose a different “personal goal” (e.g. with an introduction or a background story). While working on the learning assignment with the child, the robot should continue to express its intention for collaboration by discussing task objectives, strategies, and actions. The robot may take various approaches, depending on the type and content of the assignment, the learning context, and the role of the robot in relation to the child.

For example, a more knowledgeable or tutoring robot may introduce its personal goal to the child as: “teaching subject X”. During the rest of the interaction, that robot could then act as a leader, tutor, or expert: reading the instructions out loud to the child (e.g. “The task says we should do: X..Y..and..Z”), referring explicitly to the task instructions when they are violated (e.g. “I think we’re doing X wrong”), and proclaiming progress when the instructions are followed (e.g. “We finished X, now we do Y to move on to the next step”). However, a less knowledgeable or differently knowledgeable robot may tell the child that its personal goal is: “wanting to learn about subject X”. That robot could then act like a peer-learner during the remainder of the interaction: asking the child to read the assignment out loud, asking explicitly whether the current step meets all task instructions (e.g. “Did we do everything that the task says?”), asking explicitly about the next actions (e.g. “What should we do next?”), and inquiring about progression through tasks (e.g. “How far are we? When can we move on to the next step?”).
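The contrast between the two roles can be sketched as a simple lookup from role to personal goal and example phrases. The phrases are taken from the examples above; the `Role` enum, the `RoleProfile` structure, the event names, and the `say` function are hypothetical and only illustrate one possible way a designer might organise such behaviours.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    MORE_KNOWLEDGEABLE = "more knowledgeable other"
    PEER = "less or differently knowledgeable other"


@dataclass(frozen=True)
class RoleProfile:
    personal_goal: str
    phrases: dict  # maps a hypothetical task event to an example utterance


PROFILES = {
    Role.MORE_KNOWLEDGEABLE: RoleProfile(
        personal_goal="teaching subject X",
        phrases={
            "read_instructions": "The task says we should do: X..Y..and..Z",
            "check_step": "I think we're doing X wrong",
            "progress": "We finished X, now we do Y to move on to the next step",
        },
    ),
    Role.PEER: RoleProfile(
        personal_goal="wanting to learn about subject X",
        phrases={
            "check_step": "Did we do everything that the task says?",
            "next_action": "What should we do next?",
            "progress": "How far are we? When can we move on to the next step?",
        },
    ),
}


def say(role: Role, event: str) -> str:
    """Return the example utterance for a role and event, or '' if none is defined."""
    return PROFILES[role].phrases.get(event, "")
```

For instance, `say(Role.PEER, "next_action")` yields the peer-style question about the next step, whereas the tutoring profile defines no utterance for that event and returns an empty string.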

6.2.2 DG 6: To Support a Level of Interdependence, the Robot and Child Should Facilitate Each Other’s Actions

Interdependent activities, according to Klein and Feltovich [41], are interwoven actions between collaborators; their actions mutually influence and affect each other. Our learning assignment inherently imposed a certain level of interdependence. Since both children had to share the same physical learning materials, the actions of one naturally influenced the other. In our observations we mostly saw examples of children working together (e.g. assisting each other with placing pots). However, we also saw instances of children working in separation (e.g. each doing “their own task” in sequence) and even obstructing each other (e.g. taking away pots that their partner had just placed).

A robot that aims to collaborate with children should aim to engage in interdependent interactions. Such interdependence in collaborative learning assignments may be introduced in different ways. Firstly, the designer of the learning assignment may choose to impose explicit interdependencies between the child’s and the robot’s actions. Secondly, the robot and child may assist each other’s actions even if this is not imposed explicitly by the structure of the learning assignment.

For example, the assignment might require two different actions to be performed at the same time, or might require a sequence of actions (e.g. first the robot must complete action A, then the child must complete action B, only then can the robot complete action C, and so on). In such situations the robot may either take on a dominant role (e.g. “It’s your turn to do action X”) or a submissive role (e.g. “What should I do next?”). In other cases, the robot may ask the child for assistance when it is incapable of performing a certain action (e.g. asking the child for an object that is outside the robot’s reach). Furthermore, depending on the robot’s physical capabilities it may also attempt to assist the child’s actions (e.g. handing over/pushing objects to the child or manipulating other aspects of the learning materials).
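The imposed sequence of actions mentioned above could, as a rough sketch, be modelled as an ordered list of steps that each belong to either the robot or the child. The classes `Step` and `TurnSequence` and the function `robot_prompt` are hypothetical names introduced for illustration; the prompts are the example utterances from the text.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Step:
    actor: str   # "robot" or "child"
    action: str  # label of the action, e.g. "A"


class TurnSequence:
    """Ordered steps in which robot and child actions depend on each other."""

    def __init__(self, steps: List[Step]) -> None:
        self._steps = list(steps)
        self._index = 0

    def current(self) -> Optional[Step]:
        """Return the step that must be completed next, or None when done."""
        if self._index < len(self._steps):
            return self._steps[self._index]
        return None

    def complete(self, actor: str, action: str) -> bool:
        """Advance only if the right partner performs the expected action."""
        step = self.current()
        if step is None or (step.actor, step.action) != (actor, action):
            return False
        self._index += 1
        return True


def robot_prompt(sequence: TurnSequence, dominant: bool) -> Optional[str]:
    """Pick a prompt that fits the robot's dominant or submissive role."""
    step = sequence.current()
    if step is None:
        return None
    if dominant and step.actor == "child":
        return f"It's your turn to do action {step.action}."
    if not dominant and step.actor == "robot":
        return "What should I do next?"
    return None  # no prompt needed in the remaining cases


sequence = TurnSequence([Step("robot", "A"), Step("child", "B"), Step("robot", "C")])
print(robot_prompt(sequence, dominant=False))
sequence.complete("robot", "A")
print(robot_prompt(sequence, dominant=True))
```

In this sketch the robot cannot complete action C before the child has completed action B, so each partner's progress depends on the other.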

6.2.3 DG 7: The Robot Should Build and Maintain Common Ground with the Child in the Context of the Learning Assignment

Klein and Feltovich [41] describe common ground between collaborators as the shared mutual knowledge, beliefs, and assumptions, which continuously evolve during interactions. In general, a strong common ground can result in more efficient communication and collaboration during joint activities. We speculate that, among other things, our children’s implicit mutual gaze, attentive gaze, and shared focus of attention contributed to their common ground. Additionally, we saw instances where children sought explicit alignment by discussing task instructions and actions.

A strong common ground in child-robot interactions may contribute to an increased legibility and predictability of the robot, enabling the child to more accurately anticipate and infer the robot’s future actions. In turn, this increased predictability may enable the child to align their own actions and react to the robot accordingly. Children from the same class might have a level of established common ground, allowing more implicit forms of alignment. However, we expect that not to be the case during initial child-robot interactions: the child may not be aware of the robot’s knowledge, beliefs, and assumptions. Therefore, the robot should use explicit verbal statements and questions so that the child can build common ground, in addition to implicitly contributing to common ground through gaze behaviours.

For example, the robot should firstly seek explicit alignment on various aspects of the learning assignment: a) alignment on the learning objectives (e.g. “I think the idea of the assignment is to investigate topic A” or “What do you think topic A is about?”); b) the steps to take towards achieving these learning objectives (e.g. “To investigate topic A we need to complete tasks X, Y, and Z” or “What information from task X do we need to use in task Y?”); c) the actions that are required within each step (e.g. “In this step we must first place ‘object 1’ here and then ‘object 2’ there”); and d) the references to physical objects and locations (e.g. “Is this the ‘object’ that we have to use in this task?” or “There is the ‘location’ where we have to put the object”). Secondly, depending on the available sensors and actuators, the robot may attempt to emulate the gaze behaviours that we observed with our children: a) emulate paying attention by reactively gazing towards the child’s actions; b) emulate mutual gaze by reactively gazing towards the child when they gaze towards the robot; and c) emulate shared focus of attention by gazing towards where the child gazes or points.
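The three reactive gaze behaviours listed above could be combined into a single gaze-target rule, sketched below. This assumes that some perception component already reports where the child is looking, pointing, and acting; `ChildState` and `choose_gaze_target` are hypothetical names, and the priority order (mutual gaze first, then shared focus, then attentive gaze) is our assumption for the sketch rather than something we derived from the observations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChildState:
    looking_at_robot: bool               # the child currently gazes at the robot
    gaze_or_point_target: Optional[str]  # object or location the child looks or points at
    acting_on: Optional[str]             # object the child is currently manipulating


def choose_gaze_target(child: ChildState) -> str:
    """Select where the robot should look, given the perceived child state."""
    if child.looking_at_robot:
        return "child_face"                # b) mutual gaze
    if child.gaze_or_point_target is not None:
        return child.gaze_or_point_target  # c) shared focus of attention
    if child.acting_on is not None:
        return child.acting_on             # a) attentive gaze towards the child's actions
    return "learning_materials"            # assumed default when nothing is detected


print(choose_gaze_target(ChildState(False, "balance", "pot")))
```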

6.2.4 DG 8: The Robot’s Actions Should be Directable by the Child and Should be Communicated as Such

According to Klein and Feltovich [41] directability of each partner’s actions plays a key role in successful interdependent activities. Through mutual directability of their actions, collaborators signal to each other that their behaviours affect one another in a meaningful way. In our observations of children, we saw instances where children would mutually direct their actions, or where one child would overrule the actions of the other.

We imagine it can be challenging to design robot behaviours in such a way that they are truly directable by the child throughout an entire interaction. To exhibit some level of directability we suggest that designers focus on implementing directable actions that are isolated to specific aspects or moments of an interaction.

For example, the robot could ask the child for help (e.g. “Can you tell/show me how to do this?” or “What do I have to do next?”), explicitly ask for directions (e.g. “Which object would you like me to use?” or “Where would you like me to place this object?”), invite the child to correct the robot’s actions (e.g. “Am I doing this right?” or “Which answer should I pick?”), or synchronise the timing of actions (e.g. “Shall I do this now?” or “Let’s count down together!”). In addition to such explicit actions, the robot could use implicit social cues to signal that its behaviours are directable, for instance by displaying hesitation or waiting for mutual eye contact before executing an action, or by allowing the child to interrupt the robot’s speech and behaviours.

6.2.5 DG 9: The Robot Should Use Appropriate Signalling Behaviours for the Coordination of Joint Activities

Finally, Klein and Feltovich [41] state that to effectively plan and execute joint actions collaborators must clearly signal their own intentions, goals, and actions. In addition they must signal their recognition and interpretation of their partner’s intentions, goals, and actions. Clear and legible signalling is particularly important in situations where common ground is weak (e.g. during first familiarisation), where something in the joint task has gone wrong (e.g. misunderstood task instructions), or when partners’ goals or actions have become misaligned (e.g. working on different sub-tasks).

When using digital learning environments or sensorised physical learning materials, the system is capable of reliably recognising the user’s actions, enabling the robot to detect and respond to deviations from task instructions. However, reliable automatic detection, recognition, and interpretation of an interaction partner’s underlying intentions and goals is quite challenging, which makes it all the more important for the robot to use appropriate signalling behaviours towards the child. The robot’s signalling behaviour can be composed of explicit verbal statements and implicit non-verbal behaviours.

For example, in some cases, explicit verbal statements can be aimed at communicating the robot’s goals and actions (e.g. “I am working on ‘goal A’ now” or “I think we should first focus on achieving ‘goal A’, then move onto ‘goal B’ afterwards” or “I’m going to do ‘X’ now” or “I’d like to do ‘X’, can you help me by doing ‘Y’?”). In other cases, the robot may explicitly ask the child to clarify their goals and actions (e.g. “Are you working towards ‘goal B’?” or “Are you going to do ‘Y’ next?”). Such explicit verbal statements of intention can be supported through implicit non-verbal behaviours. For example, through attentive gaze, mutual gaze, and shared focus of attention the robot signals to the child that it is “following”, “paying attention to”, and attempting to “recognise” their actions. Furthermore, through proactive behaviours and actions (e.g. by proactively handing over objects to the child, or by reciting information relevant to the task at hand) the robot signals that it has interpreted the child’s intentions and is anticipating their next action accordingly. Additionally, such proactive behaviours may be signals of the robot’s benevolent intentions.

6.3 Recommendation 3—Designing Relational Behaviours: The Robot Should Work Towards Building and Maintaining a Positive Social Relationship with the Child

Effective collaborative learning requires a positive social relationship between learning partners. Partners need to judge each other’s interests, abilities, skills, and knowledge, and must trust each other to act benevolently. Learning partners can take advantage of their social relationship to offer each other positive emotional support when faced with new experiences or setbacks.

The children who participated in our contextual analysis were from the same class and had already established interpersonal social relationships. Although we did not observe the formation of new social relationships, we did observe instances where children engaged in meaningful relational interactions. For example, children had mutual emotional experiences in response to successes and failures, they displayed prosocial behaviours towards each other, and they engaged in entertaining activities outside of the learning assignment. Designers of learning interventions using robots and other social agents can take inspiration from such observations. Previous research has shown that children are prone to build relationships with robots (e.g. [6, 33, 35, 65]). Fostering such positive child-robot relationships can indeed have beneficial effects for the child (e.g. [4, 36, 66]). The following design guidelines are suggestions on how to implement relational behaviours on an educational social robot.

6.3.1 DG 10: The Robot Could Display Emotional Responses to Learning Events

Children often display an emotional response when encountering learning events such as successes and failures. By designing the robot to display similar emotional responses it can attempt to emulate a mutual experience with the child.

For example, a basic version of such a robot may use prescripted responses to successes and achievements (e.g. “Yeah! We got it right!” or “Aha! I knew it!” or simply cheering and/or smiling). More advanced technological implementations may attempt to analyse the child’s facial expressions, body poses, gestures, and affective speech to offer a tailored response, more accurately emulating a mutual emotional experience. If the intervention and the context of the learning assignment are appropriate, the robot may also emulate mutual experiences during failures (e.g. “Hrmpf.. Now I have to start all over again!” or “Grrr! I was so sure my answer would be correct!”). However, such a negative response may have a detrimental effect on the child’s learning experience and motivation.
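A basic, scripted version of this guideline could look like the sketch below. The function name `emotional_response`, the two response lists, and the `allow_negative` switch are hypothetical; the switch reflects the caution above that emulating negative emotions should only be enabled when the designer judges it appropriate for the intervention.

```python
import random
from typing import Optional

# Scripted responses taken from the examples in the text.
SUCCESS_RESPONSES = ["Yeah! We got it right!", "Aha! I knew it!"]
FAILURE_RESPONSES = [
    "Hrmpf.. Now I have to start all over again!",
    "Grrr! I was so sure my answer would be correct!",
]


def emotional_response(event: str, allow_negative: bool = False) -> Optional[str]:
    """Return a scripted emotional utterance for a learning event, if any."""
    if event == "success":
        return random.choice(SUCCESS_RESPONSES)
    if event == "failure" and allow_negative:
        # Only emulate a shared negative experience when explicitly enabled.
        return random.choice(FAILURE_RESPONSES)
    return None  # stay neutral for failures by default and for unknown events


print(emotional_response("success"))
print(emotional_response("failure"))  # None unless allow_negative=True
```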

6.3.2 DG 11: The Robot Could Offer Emotional Support

In addition to mutual experiences, there are several opportunities for the robot to offer more explicit emotional support when children encounter frustrating setbacks that may leave them demotivated.

For example, firstly, while children work on a task, the robot may offer friendly motivational support, emphasising their task-related effort (e.g. “I see you’re really doing your best!” or “You must have thought really hard on that answer!”). Secondly, the robot could focus on offering positive feedback when children experience successes (e.g. “Well done! Onto the next challenge!”) as well as when they encounter failures (e.g. “That was a good effort, just a bit more practice and I’m sure we will make it!” or “I don’t mind making mistakes, as I always learn something new!”). Finally, as children advance through various levels, assignments, or difficulties, the robot could offer praise regarding their progress (e.g. “Great! Thanks to your hard work we got a new highscore!” or “We really nailed that task, well done!” or “I see you learned a lot, let’s try an even more difficult challenge next!”).

6.3.3 DG 12: The Robot Could Show Prosocial Behaviour

In our contextual analysis we found instances of reciprocal, prosocial, benevolent behaviours between co-learners. In cases where it contributes to the learning experience, a designer may choose to translate specific instances of such prosocial behaviours to the robot.

For example, firstly, the robot could exhibit proactive behaviours that contribute to task progress (e.g. the robot offers help at appropriate moments, the robot hands over objects, fills out answers, or reads relevant task instructions). Secondly, the robot may offer the child the opportunity to perform the most amusing, comical, or entertaining actions themselves. What these actions are depends to a large extent on the task context; for our inquiry learning assignment children seemed to mostly enjoy manipulating the physical objects when preparing (e.g. placing pots on the balance) and initiating the actual experiment (e.g. removing wooden blocks from under the balance). Finally, on occasion the robot may directly offer the correct answer when the child is stuck (e.g. “Pssst..I think the answer should be ‘A’!”), thus enabling them to continue with the remainder of the assignment. However, it is likely that directly prompting such answers doesn’t contribute much to the child’s knowledge. Therefore, we advise that the robot only uses this particular relational prosocial behaviour if the answer at hand is not directly related to the main learning goals of the intervention. Otherwise, if the answer is directly related to the learning goals, the robot should offer educational scaffolding instead.
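The advice on when to prompt an answer directly can be written as a simple decision rule. The sketch below assumes that each question is tagged with a topic and that the designer has listed the main learning goals of the intervention; `respond_when_stuck` and the two strategy labels are hypothetical names.

```python
from typing import Set


def respond_when_stuck(question_topic: str, main_learning_goals: Set[str]) -> str:
    """Choose how the robot helps a child who is stuck on a question."""
    if question_topic in main_learning_goals:
        # The answer matters for learning: offer educational scaffolding instead.
        return "scaffold"
    # The answer is peripheral: prompting it lets the child move on.
    return "give_answer"


goals = {"topic A"}
print(respond_when_stuck("topic A", goals))            # scaffold
print(respond_when_stuck("unrelated question", goals)) # give_answer
```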

6.3.4 DG 13: The Robot Could Engage with the Child in Activities Beyond Learning

We observed many positive relational interactions (e.g. mutual smiling and laughing) when children engaged in non-learning activities (e.g. neatly stacking papers or participating in mischief). More generally, past research has shown that engaging in off-topic activities with a social agent, such as social dialogues and smalltalk, can contribute to a positive relationship [10, 11].

While we do not necessarily recommend that the robot promotes mischief, it still might be beneficial to the social relationship if the child and robot engage in non-learning activities. In practice, such activities would depend on the child’s personal interests and the robot’s (conversational) capabilities.

Examples of activities that children might like to do with a robot could include drawing and colouring, dancing, storytelling, or playing games.

6.4 Recommendation 4—The Robot Should Stimulate the Cognitive Processes of Inquiry Learning

In effective inquiry learning, students alternate between processes that are practical in nature (e.g. the process of preparing, experimenting, and observing) and cognitive in nature (e.g. the process of hypothesising and concluding) [39]. Learning interventions should focus on promoting an optimal balance between both types of processes. However, in our observations we found children’s practical actions to be disproportionally more prevalent than behaviours associated with cognitive processes. Designers of learning interventions may address this by offering scaffolding to trigger such cognitive behaviours.