Abstract

Recent disruptive events, such as COVID-19 and the Russia–Ukraine conflict, have had a significant impact on global supply chains. Digital supply chain twins have been proposed to provide decision-makers with an effective and efficient tool for mitigating disruption impact. This paper introduces a hybrid deep learning approach for disruption detection within a cognitive digital supply chain twin framework to enhance supply chain resilience. The proposed disruption detection module combines a deep autoencoder neural network with a one-class support vector machine algorithm. In addition, long short-term memory neural network models are developed to identify the disrupted echelon and predict the time-to-recovery from the disruption effect. The information obtained from the proposed approach helps decision-makers and supply chain practitioners make appropriate decisions aimed at minimising the negative impact of disruptive events based on real-time disruption detection data. The results demonstrate the trade-off between disruption detection model sensitivity, the delay in disruption detection, and false alarms. This approach has seldom been used in the recent literature addressing this issue.



1 Introduction

Local and global crises severely impact global supply chains. Hurricane Katrina in 2005, the Japanese tsunami in 2011, COVID-19 in late 2019, and the Suez Canal blockage in 2021 disrupted the flow of goods and materials in global supply chains. Recent power outages and industrial shutdowns in China have affected many supply chains, causing limited supply and long delays (Feng 2021). Furthermore, climate change risks may evolve and disrupt global supply chains through natural disasters, resulting in plant shutdowns and disruptions to mining operations and logistics (Ghadge et al. 2019). Finally, the Russia–Ukraine conflict is expected to adversely impact many supply chains and global logistics worldwide (Eshkenazi 2022).

In 2021, 68% of supply chain executives reported having faced disruptive events constantly since 2019 (Gartner 2022). Proper disruption management is therefore vital to minimise negative disruption impacts and avoid supply chain collapse. Supply chain disruption management refers to the approaches and policies adopted to recover from unexpected disruptive events that are characterised by a low occurrence frequency but a highly adverse impact on supply chain performance (Ivanov 2021). Some disruptive events, such as supplier unavailability, can have a prolonged impact during the post-disruption period due to delayed orders and backlogs. Supply Chain Resilience (SCR) refers to the supply chain's ability to withstand, adapt to, and recover from disruptions so as to fulfil customer demand and maintain target performance (Hosseini et al. 2019). For dynamic systems, SCR is a goal-directed, performance-controlled systemic property. In other words, disruption absorption allows the intended performance to be maintained in the event of a disruption. At the same time, the feedback control embodied in recovery control policies makes SCR self-adaptable (Ivanov 2021).

SCR accounts for disturbances in the supply chain, such as supplier unavailability, and for their impact on supply chain performance. Moreover, SCR seeks to restore normal operations by adopting recovery policies. As a result, SCR helps secure the firm's survival after severe adverse events. Resilience may be realised through (1) redundancies, such as subcontracting capabilities and risk mitigation stocks, (2) recovery flexibility to restore regular performance, and (3) end-to-end supply chain visibility (Ivanov 2021).

With the evolution of Industry 4.0, many businesses were encouraged to carry out the transition towards digitalisation. Gartner (2018) predicted that by 2023, at least half of the world’s largest corporations would be employing Artificial Intelligence (AI), advanced analytics, and the Internet of Things (IoT) in supply chain operations. Big Data Analytics (BDA) advancements and real-time data availability offered by IoT technologies resulted in the emergence of Digital Twins (DTs). A DT is a digital representation of a real-world physical system (Qamsane et al. 2019).

A Digital Supply Chain Twin (DSCT), as defined by Ivanov et al. (2019), is “a computerised model of the physical system representing the network state for any given moment in real-time”. The DSCT imitates the supply chain, including any vulnerability, in real-time. This real-time representation helps improve SCR through an extensive end-to-end supply chain visibility based upon logistics, inventory, capacity, and demand data (Ivanov and Dolgui 2020).

DSCTs can improve SCR, minimise risks, optimise operations, and boost performance (Pernici et al. 2020). DTs provide up-to-date, real-time data that reflect the most recent supply chain state. Real-time data allow for the early detection of supply chain disruptions and a rapid response through recovery plans. Moreover, integrating optimisation engines with DTs enables the most cost-effective operational decisions to be made (Frazzon et al. 2020).

The concept of Cognitive Digital Twins (CDTs), which refers to DTs that possess additional capabilities such as communication, analytics, and cognition, has emerged during the past few years (Zheng et al. 2021a). CDTs were first introduced in the industrial sector in 2016, followed by several attempts to provide a formal definition of CDTs (Zheng et al. 2021a). For instance, Lu (2020) defined CDTs as "DTs with augmented semantic capabilities for identifying the dynamics of virtual model evolution, promoting the understanding of inter-relationships between virtual models and enhancing the decision-making". CDTs that utilise machine learning can sense and detect complex and unpredictable behaviours. Therefore, a Cognitive Digital Supply Chain Twin (CDSCT) permits disruption detection in the supply chain and quick deployment of recovery plans in real-time upon disruption detection.

Motivated by recent global supply chain disruptions, digital transformation efforts, and the absence in the literature of operational frameworks that utilise CDSCTs for disruption detection and time-to-recovery prediction, this paper introduces a framework that helps enhance SCR through decision support by adopting DSCTs, building upon the conceptual framework introduced by Ivanov and Dolgui (2020). Additionally, the adoption of data-driven AI models in DSCTs enables monitoring of the supply chain state, which helps detect supply chain disruptions in real-time and optimise the recovery policies used to recover from them. Real-time disruption detection enables decision-makers to respond quickly to disruptions through early and efficient deployment of recovery policies. AI models play an important role in discovering abnormal patterns in data. Accordingly, this paper introduces a hybrid deep learning approach for disruption detection in a make-to-order three-echelon supply chain. The proposed approach is presented within a CDSCT framework to improve SCR through real-time disruption detection. Upon disruption detection, the approach allows decision-makers to identify the disrupted echelon and obtain an estimate of the Time-To-Recovery (TTR) from the disruptive event.

The remainder of this paper is organised as follows. Section 2 reviews the relevant literature. Section 3 then describes the problem at hand. Section 4 presents the pertinent machine learning concepts, and Sect. 5 describes the implementation steps. The results are shown in Sect. 6, followed by the managerial implications in Sect. 7. Finally, Sect. 8 provides concluding remarks, current research limitations, and directions for future work.

2 Review of literature

2.1 Supply chain resilience

Several signal-based approaches have been proposed to evaluate SCR (Falasca et al. 2008; Chen and Miller-Hooks 2012; Spiegler et al. 2012; Melnyk et al. 2013; Torabi et al. 2015). These approaches range from simple models, such as aggregation models, to sophisticated ones, such as deep learning. An aggregation-based approach was introduced to evaluate operational SCR (Munoz and Dunbar 2015), in which a single evaluation metric across multiple tiers of a multi-echelon supply chain was developed by aggregating several transient response measures. The transient response represents the change in supply chain performance due to a disruptive event, and its measures evaluate supply chain performance along four dimensions: (1) TTR, (2) disruption impact on performance, (3) performance loss due to disruption, and (4) a weighted-sum metric capturing the speed and shape of the transient response. The aggregated metric could explain the performance response to supply chain disruptions better than the individual dimensions of resilience at the single-firm level.
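To make the first three transient response dimensions concrete, the following Python sketch computes them from a sampled performance series. It is an illustrative assumption, not the exact formulation of Munoz and Dunbar (2015): TTR is taken as the time from the first sample below a constant baseline to the sample after the last one below it, impact as the maximum relative drop, and performance loss as the area between the baseline and the performance curve. The weighted-sum metric is omitted because its weights are not given here, and the function name `transient_response` is hypothetical.

```python
def transient_response(performance, baseline, dt=1.0):
    """Illustrative transient-response measures for one disruption episode.

    performance -- performance samples taken every `dt` time units
    baseline    -- target (pre-disruption) performance level, assumed constant
    """
    below = [k for k, p in enumerate(performance) if p < baseline]
    if not below:  # performance never dropped: no disruption episode
        return {"ttr": 0.0, "impact": 0.0, "loss": 0.0}
    start = below[0]
    recovered = below[-1] + 1  # first sample after the last one below baseline
    # If the series ends while still disrupted, this TTR is right-censored.
    ttr = (recovered - start) * dt
    impact = (baseline - min(performance)) / baseline  # maximum relative drop
    loss = sum(baseline - p for p in performance if p < baseline) * dt  # area below baseline
    return {"ttr": ttr, "impact": impact, "loss": loss}


print(transient_response([10, 10, 6, 7, 9, 10, 10], baseline=10))
```

For the series `[10, 10, 6, 7, 9, 10, 10]` with a baseline of 10, the sketch gives a TTR of three sampling periods, a 40% impact, and a loss of eight performance-unit periods. Treating every sample below the baseline as one episode is a simplification; a series with several separate drops would need to be segmented first.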

A system dynamics-based approach was proposed to quantify SCR at a grocery retailer (Spiegler et al. 2015). SCR was evaluated based on the supply chain response to the dynamic behaviour of stock and shipment in a distribution centre replenishment system. Because the inherent non-linear system behaviour is considered directly, no preliminary analysis of non-linearity effects is required, which helps in simulating complex supply chains (Ivanov et al. 2018).

A hierarchical Markov model was introduced to integrate advance supply signals with procurement and selling decisions (Gao et al. 2017). The proposed model captured essential features of advance supply signals for dynamic risk management and could also be used to make a signal-based dynamic forecast. The strategic relationship between signal-based forecasts, multi-sourcing, and discretionary selling was revealed. However, future supply volatility and variability are expected to affect the future supply forecast. The findings revealed a counter-intuitive insight: a model that disregards both the volatility and the variability of the uncertain future supply might outperform one that considers its variability. Finally, a signal-based dynamic supply forecast was recommended under considerable supply uncertainty and a moderate supply–demand ratio.

Deep learning models for enhancing SCR can outperform classical models. A deep learning approach based on Artificial Neural Networks (ANNs) was introduced to identify disruptions related to temperature anomalies in the cold supply chain during transport (Radosavljević et al. 2021). The ANN-based model was compared with another approach based on BDA and mathematical modelling. Based on a simulation model and a real-world case, the ANN-based model outperformed the model based on BDA and mathematical modelling.

Moreover, hybrid deep learning models can outperform pure deep learning models for anomaly detection. A hybrid deep learning approach was presented to detect anomalies in a fashion retail supply chain (Nguyen et al. 2021). The hybrid model combined a deep Long Short-Term Memory (LSTM) autoencoder with classic machine learning to extract meaningful information from the data. Then, semi-supervised machine learning, in the form of a One-Class Support Vector Machine (OCSVM) algorithm, was applied to detect sales anomalies. Based on a real case of a company in France, the results showed that hybrid approaches could perform better than deep learning-based approaches.

2.2 Digital supply chain twins for enhancing supply chain resilience

Several studies have extended the application of DSCTs to support decision-making and enhance SCR. A machine learning approach was introduced to improve SCR through resilient supplier selection in a DT-enabled supply chain (Cavalcante et al. 2019). The introduced approach could analyse supplier performance risk profiles under uncertainty through data-driven simulation of a virtual two-echelon supply chain. The results revealed that combining machine learning-based methods with a DSCT could enhance SCR, especially when redesigning the supply network.

A notion of DSCT to support decision-making and improve SCR was explained by Ivanov et al. (2019). The interrelationships between supply chain digital technology and disruption risk effects in the supply chain were investigated, and a framework for supply chain risk management was then introduced. The results indicated that future decision support systems would utilise DSCTs and digital technologies, such as IoT and BDA. As a result, the available real-time data could provide information on the scope and impact of disruptions, and the feedback from DSCTs could be used to restore pre-disruption performance by testing different policies. The integration of BDA and a DT for an automotive supply chain was introduced to support decision-making and adaptation to new scenarios in real-time (Vieira et al. 2019).

Another framework, based on real-time disruption detection, was presented to support decision-making with a DSCT for disruption risk management (Ivanov and Dolgui 2020). This framework would enable efficient deployment of recovery policies, the creation of reliable disruption scenarios for supply chain risk analysis, and the revelation of connections between risk data, disruption modelling, and performance evaluation.

Golan et al. (2021) highlighted the weaknesses in SCR modelling in the face of foreseeable disruptions. Their findings showed that DSCTs allow decision-makers to better evaluate efficiency/resilience trade-offs. Furthermore, DTs can help optimise system performance during the post-disruption phase.

In response to the COVID-19 impact on global supply chains, DSCTs were used to examine the effect of a real-life pandemic disruption scenario on SCR for a food retail supply chain (Burgos and Ivanov 2021). The results uncovered the underlying factors that affect supply chain performance, such as pandemic intensity and customer behaviour. The findings confirmed the importance of DSCTs for building resilient supply chains.

2.3 Cognitive digital twins

Many scholars introduced different architectures and implementations for CDTs in various fields, such as condition monitoring of assets, real-time monitoring of finished products for operational efficiency, and supporting demand forecasting and production planning (Zheng et al. 2021b). In the field of manufacturing and supply chains, introduced architectures focused on detecting anomalous behaviour in manufacturing systems, improving operations, and minimizing cost across the supply chain (Qamsane et al. 2019; Răileanu et al. 2019). A CDT architecture was proposed for real-time monitoring and evaluation for a manufacturing flow-shop system (Qamsane et al. 2019). The CDT platform could forecast and identify abnormalities using the available data from interconnected cyber and physical spaces. In addition, another architecture was introduced for a shop floor transportation system to predict and identify anomalous pallet transportation times between workstations (Răileanu et al. 2019). Based on two different showcases, both architectures showed that CDTs could improve operations through optimal scheduling in real-time and enhanced resource allocation.

A CDT framework was introduced for logistics in a modular construction supply chain (Lee and Lee 2021). The proposed CDT could predict logistics-related risks and arrival times to reduce costs using IoT and Building Information Modeling (BIM). Furthermore, an approach for a CDT in agile and resilient supply chains was proposed (Kalaboukas et al. 2021). The CDT could predict trends in dynamic environments to guarantee optimal operational performance. This approach was elaborated through a connected and agile supply chain. The deployed model considers collaboration among different actors as an enabler of information exchange, processing, and actuation.

In addition, a deep learning-based approach has been introduced to predict TTR in a three-echelon supply chain (Ashraf et al. 2022). The introduced approach was presented within a theoretically proposed CDSCT framework to enhance SCR. The results showed that the predicted TTR values tend to be lower than the actual values at early disruption stages and then improve as the disruption effect progresses through the supply chain network.

The literature shows that many recent contributions have been directed towards SCR in response to the impact of the COVID-19 pandemic on global supply chains. Many scholars have been concerned with quantifying SCR and deploying DSCT frameworks. On the one hand, deep learning-based models have outperformed classical ones for enhancing SCR. On the other hand, few contributions have addressed enhancing SCR through deep learning-based techniques in a CDSCT environment. In addition, although the literature emphasises the role of CDSCTs in supply chain disruption management, few contributions on the implementation of the different CDSCT modules for disruption detection were observed. Therefore, this paper contributes to the literature by developing the CDSCT enabling modules for disruption detection.

This paper extends the framework proposed by Ashraf et al. (2022) by incorporating an additional layer for disrupted echelon identification. Furthermore, it extends their work by introducing (1) a hybrid deep learning approach for disruption detection and (2) a deep learning-based model for disrupted echelon identification. The introduced approaches are presented as sub-modules of a CDSCT for a make-to-order virtual supply chain. In addition, this paper reconsiders the inputs to the TTR prediction modules with the aim of obtaining better TTR estimates.

This study addresses two research questions. The main research question is "Is there a way to exploit the benefits of cognitive digital twins in the field of supply chain disruption management?" The second research question is "How can the introduced framework for incorporating cognitive digital twins into supply chain disruption management be validated?" The first research question is addressed by introducing a CDSCT framework that allows early disruption detection in a CDT-enabled make-to-order virtual supply chain. Early disruption detection is enabled through a hybrid deep learning-based approach using a deep autoencoder neural network and the OCSVM algorithm. In addition to early disruption detection, the CDSCT permits disrupted echelon identification and TTR prediction. The second research question is addressed through the system implementation.

3 Problem statement

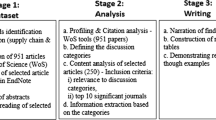

This paper introduces a hybrid deep learning approach for disruption detection within a CDSCT framework to enhance SCR. This approach involves (1) a training phase and (2) an operational phase. The training phase involves training the disruption detection module and the models for disrupted echelon identification and TTR prediction. After the training phase, the CDSCT can detect supply chain disruptions, identify disrupted echelons, and predict TTR from disruptions. Figure 1a shows the CDSCT during the operational phase. Supply chain disruptions are detected from a real-time data stream from an existing supply chain. According to the literature, real-time data enabled by IoT can be collected by multiple means, such as sensors and RFID tags (Ivanov and Dolgui 2020). Upon disruption detection, the disrupted echelon is identified and TTR estimates are obtained. In addition, future supply chain states under the disruption impact can be forecast.

The supply chain data needed to train the anomaly (disruption) detection module and the TTR prediction model can be obtained from multiple sources, including historical records, real-time data from an IoT-enabled supply chain, or a simulation model depicting a real or a virtual system. Figure 1b shows the framework during the training phase, in which training is based on a historical data feed representing supply chain performance in normal and disrupted states. The disrupted echelon is identified upon disruption detection. Then, a TTR estimate is obtained after feeding the labelled training data to the CDT. In practice, sufficient historical records of disruptions for training purposes may be unavailable due to the unpredictability and low occurrence frequency of disruptive events. In such cases, simulation modelling becomes the most convenient tool for augmenting the training data required to develop machine learning models. This paper uses simulation modelling to simulate different disruption scenarios. The developed simulation model is also used to generate the data required to train the disruption detection module for a make-to-order virtual three-echelon supply chain.

4 Methodology

4.1 Deep autoencoders

An autoencoder is a special type of feedforward neural network trained to copy its input to its output by representing its input as a coding (Goodfellow et al. 2016). Apart from the input and output, an autoencoder consists of three main components: (1) an encoder, (2) a coding, and (3) a decoder, Fig. 2. Because the encoder and decoder compress and decompress the input data, respectively, autoencoders are well suited to applications involving dimensionality reduction and feature extraction. The coding, z, is the compressed representation of the input vector x and contains the most representative information of the input.

An autoencoder with three or more hidden layers in the encoder or the decoder network is considered deep (Subasi 2020). The autoencoder is trained to minimise the reconstruction error between the input x and the output \({\hat{x}}\). An autoencoder trained on normal (non-disrupted) data is expected to produce a high reconstruction error when given anomalous (disrupted) data (Malhotra et al. 2016). Therefore, an autoencoder neural network is used for disruption detection in the problem at hand.
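In symbols, and assuming a mean-squared-error training loss (the text specifies only "reconstruction error"), the encoder \(f_{\theta}\) and decoder \(g_{\phi}\), notation introduced here for illustration, act as shown below. Training uses only the N normal observations \(x_{n}\); the element-wise absolute error \(e\) is the quantity whose first principal component is passed to the OCSVM.

```latex
\begin{aligned}
z &= f_{\theta}(x), \qquad \hat{x} = g_{\phi}(z) = g_{\phi}\big(f_{\theta}(x)\big),\\
\mathcal{L}(\theta, \phi) &= \frac{1}{N} \sum_{n=1}^{N} \big\lVert x_{n} - g_{\phi}\big(f_{\theta}(x_{n})\big) \big\rVert_{2}^{2},\\
e &= \lvert x - \hat{x} \rvert \quad \text{(element-wise)}.
\end{aligned}
```

Because \(\mathcal{L}\) is minimised on normal data only, disrupted inputs fall outside what the encoder-decoder pair has learned to reproduce, which is why a large \(e\) signals a possible disruption.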

4.2 The one-class support vector machine algorithm

OCSVM is a machine learning algorithm used for binary classification and anomaly detection. Anomaly detection refers to discovering outliers or abnormalities embedded in a large amount of normal data (Ma and Perkins 2003). When applied to anomaly detection, OCSVM works in a semi-supervised manner: during training, it learns a boundary that separates observations under normal conditions from abnormal observations. Schölkopf et al. (1999) and Ma and Perkins (2003) describe the inherent mechanism of OCSVM for anomaly detection in more detail. OCSVM is usually trained only on normal points, representing the positive class, because considering all disruption scenarios is practically impossible. During operation, the OCSVM then checks whether new data points belong to the normal class. A data point that lies outside the boundary is considered anomalous and is assigned to the other class, usually referred to as the negative (anomalous) class.
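For reference, the \(\nu\)-formulation of Schölkopf et al. separates the mapped training points \(\Phi(x_{i})\), \(i = 1, \ldots, n\), from the origin with maximum margin, where \(\nu \in (0, 1]\) is an upper bound on the fraction of training points treated as outliers and a lower bound on the fraction of support vectors. The radial basis function kernel shown is a common choice and is an assumption here, since this section does not state the kernel.

```latex
\begin{aligned}
&\min_{w,\,\xi,\,\rho}\ \frac{1}{2}\lVert w \rVert^{2} + \frac{1}{\nu n}\sum_{i=1}^{n} \xi_{i} - \rho
\quad \text{s.t.} \quad \langle w, \Phi(x_{i}) \rangle \ge \rho - \xi_{i},\ \ \xi_{i} \ge 0,\\
&f(x) = \operatorname{sgn}\Big(\sum_{i=1}^{n} \alpha_{i}\, k(x_{i}, x) - \rho\Big), \qquad
k(x, y) = \exp\big(-\gamma \lVert x - y \rVert^{2}\big),
\end{aligned}
```

where the dual coefficients satisfy \(0 \le \alpha_{i} \le 1/(\nu n)\) and \(\sum_{i} \alpha_{i} = 1\). A point with \(f(x) = +1\) lies inside the learned boundary (normal), and one with \(f(x) = -1\) lies outside it (anomalous).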

The OCSVM algorithm is applied for automatic disruption (anomaly) detection in the three-echelon supply chain. OCSVM was chosen because it enables disruption detection without a prohibitively extensive study of all potential disruption scenarios. The first principal component of the reconstruction error obtained from the autoencoder is used as the input to the OCSVM algorithm. The OCSVM algorithm eliminates the need for statistical analyses to set a threshold above which a data point is considered anomalous. In addition, it does not require specific assumptions about the data, such as a normally distributed reconstruction error (Nguyen et al. 2021).
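Reducing the reconstruction-error vectors to their first principal component can be sketched with NumPy's singular value decomposition. This is a minimal sketch, assuming one absolute-error vector per row and that the principal axis is fitted on normal (\(S_{0}\)) errors only and then reused for new data; the function names are hypothetical. scikit-learn's `PCA(n_components=1)` yields the same scores up to sign.

```python
import numpy as np


def fit_first_pc(errors):
    """Mean and first principal axis of an (observations, dims) error matrix."""
    mean = errors.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(errors - mean, full_matrices=False)
    return mean, vt[0]


def first_pc_scores(errors, mean, axis):
    """Project error vectors onto the first principal axis: one score per observation."""
    return (errors - mean) @ axis
```

The resulting one-dimensional scores are what the OCSVM receives, so the detector works on a single summary feature rather than on the full error vector.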

4.3 Long-short term memory neural networks

LSTM neural networks are an important class of Recurrent Neural Networks (RNNs) and were proposed by Hochreiter and Schmidhuber (1997). They provide memory that retains long-term dependencies in the input data while mitigating the vanishing gradient problem (Li et al. 2019). LSTM networks are therefore suitable for representing sequential data, such as time series. A simple LSTM neural network of one neuron, Fig. 3a, receives an input \(x_{i}\), produces an output \(y_{i}\), and sends that output back to itself. When the LSTM neural network is unfolded through time, it takes the form of a chain of repeated modules, Fig. 3b. At each time step (frame) \(i, i \in \left\{ 1, 2,\ldots , t \right\} \), the recurrent neuron receives the input \(x_{i}\) and its output from the previous time step, \(h_{i-1}\), to produce an output \(y_{i}\).
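The unfolded recurrence can be illustrated with a minimal NumPy forward pass. It uses the widely adopted LSTM variant with a forget gate (a later addition to the 1997 design), and the stacked gate layout of the hypothetical weight arrays `W`, `U`, and `b` is an assumption of this sketch, not the convention of any particular library. Each loop iteration is one frame \(i\): the cell combines \(x_{i}\) with the previous output \(h_{i-1}\) and emits \(h_{i}\), which plays the role of \(y_{i}\) in Fig. 3b.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(x_seq, W, U, b):
    """Run one LSTM layer over a sequence.

    x_seq: (t, d) inputs x_1..x_t; W: (4h, d); U: (4h, h); b: (4h,)
    Gate blocks are stacked as [forget, input, candidate, output] (assumed layout).
    Returns the hidden states h_1..h_t with shape (t, h).
    """
    n_hidden = U.shape[1]
    h = np.zeros(n_hidden)  # h_0
    c = np.zeros(n_hidden)  # cell state c_0
    outputs = []
    for x_i in x_seq:  # one frame per step of the unfolded chain
        z = W @ x_i + U @ h + b
        f_gate = sigmoid(z[:n_hidden])
        i_gate = sigmoid(z[n_hidden:2 * n_hidden])
        g = np.tanh(z[2 * n_hidden:3 * n_hidden])  # candidate cell content
        o_gate = sigmoid(z[3 * n_hidden:])
        c = f_gate * c + i_gate * g  # additive update lets information persist over many steps
        h = o_gate * np.tanh(c)  # output y_i = h_i, fed back at step i + 1
        outputs.append(h)
    return np.stack(outputs)
```

The additive cell update is the design choice that helps gradients survive across many frames, which is what makes LSTMs preferable to plain RNNs for the 14-step windows used later.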

5 System implementation

This section lays out the implementation steps for developing the proposed approach on a desktop computer with a 2.9 GHz Intel Core i7 processor and 8 GB RAM. A virtual supply chain is modelled as a discrete event simulation model using AnyLogic 8.7 simulation software. Machine learning models are developed using Python 3.8, Scikit-learn 0.24, and Keras 2.6. The training time for different models ranged between two and five hours.

5.1 The virtual supply chain structure

The three-stage flow line model with limited buffer capacity introduced by Buzacott et al. (1993) is used to develop a make-to-order virtual three-echelon supply chain. It is assumed that there is a single product under consideration, and alternatives to any echelon are not available. Hence, the service protocol permits backlogging. Fig. 4 shows the main components of the virtual supply chain with potential sources of disruption.

A single supplier, manufacturer, and distributor constitute the three-echelon virtual supply chain. An additional component, demand, corresponds to the initiated customer order quantity. After a customer order is generated, it enters a First-Come-First-Served (FCFS) queue to wait for fulfilment. Customer orders are generated according to a Poisson process, i.e., the number of orders generated per unit time follows a Poisson distribution with mean \(\lambda \).

The supplier provides the required raw material at a mean rate \(\mu _{1}\) and is assumed to have unlimited buffer capacity. In contrast, the remaining two echelons are assumed to have a limited buffer capacity of ten units. After the raw material is prepared and delivered to the manufacturer, the products are manufactured at a processing rate \(\mu _{2}\). The customer order is then fulfilled through the distributor after being processed at a processing rate \(\mu _{3}\). The processing times at the supplier, manufacturer, and distributor are assumed to be exponentially distributed.

Different scenarios are considered to account for supply chain performance under normal and disrupted circumstances. The normal scenario is denoted by \(S_{0}\), while potential disruption scenarios include unexpected failures at any single echelon i and are denoted by \(S_{i}, i \in \{ 1, 2, 3 \}\), where echelons 1, 2, and 3 correspond to the supplier, manufacturer, and distributor, respectively. In addition, a surge-in-demand scenario is considered and denoted by \(S_{4}\). The assumed values of the simulation model parameters are shown in Table 1.

Several parameters and metrics reflecting the supply chain state and performance are monitored. The parameters include (1) the interarrival time, \(T_{a}\), and (2) the processing time at echelon i, \(T_{pi}, i \in \{ 1, 2, 3 \}\). The monitored metrics include (1) the work in process WIP (units in the system), (2) the queue length at echelon i, \(L_{qi}, i \in \{ 1, 2, 3 \}\), (3) the lead time LT, (4) the flow time FT, and (5) the daily output K in units. Lead time refers to the total time between customer order generation and fulfilment, whereas flow time refers to the time elapsed from the start of order processing at the supplier until fulfilment. Recorded values are averaged over each day to give daily records. WIP and \(L_{qi}\) are recorded hourly, while the remaining parameters and metrics are recorded upon order fulfilment.
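The flow line described above can be approximated with a short max-plus recursion instead of a full discrete-event model. This Python sketch is an illustration under stated assumptions, not the AnyLogic model used in the paper: a buffer of ten units is read as ten waiting places plus the unit in process, a finished unit that finds the next echelon full stays on its server (blocking after service), and an echelon failure is modelled as an outage window during which no new processing starts. The function name and parameters are hypothetical, and the rates in the usage line are placeholders rather than the Table 1 values.

```python
import random


def simulate_flow_line(n_orders, lam, mu, buffers, seed=0, downtime=None):
    """Hypothetical three-echelon make-to-order flow line (supplier, manufacturer, distributor).

    lam      -- order arrival rate; Poisson arrivals give exponential interarrival times
    mu       -- processing rate per echelon; processing times are exponential
    buffers  -- waiting places in front of each echelon (None = unlimited)
    downtime -- optional (echelon_index, start, end): no processing may start at that
                echelon inside [start, end); units already in process are not interrupted
    Returns (lead_times, flow_times), one entry per order, in arrival order.
    """
    rng = random.Random(seed)
    m = len(mu)
    # Draw every random number first so that runs with the same seed share samples.
    gaps = [rng.expovariate(lam) for _ in range(n_orders)]
    service = [[rng.expovariate(rate) for rate in mu] for _ in range(n_orders)]

    arrival, clock = [], 0.0
    for gap in gaps:
        clock += gap
        arrival.append(clock)

    depart = [[0.0] * m for _ in range(n_orders)]  # time order n leaves echelon j
    first_start = [0.0] * n_orders
    for n in range(n_orders):
        ready = arrival[n]  # FCFS: the order joins the supplier queue on arrival
        for j in range(m):
            server_free = depart[n - 1][j] if n > 0 else 0.0
            start = max(ready, server_free)
            if downtime is not None and downtime[0] == j and downtime[1] <= start < downtime[2]:
                start = downtime[2]  # echelon j has failed: wait until it is restored
            if j == 0:
                first_start[n] = start
            leave = start + service[n][j]
            if j + 1 < m and buffers[j + 1] is not None:
                # Blocking after service: order n may enter echelon j+1 only once the
                # order buffers[j+1] + 1 places ahead of it has left that echelon.
                ahead = n - buffers[j + 1] - 1
                if ahead >= 0:
                    leave = max(leave, depart[ahead][j + 1])
            depart[n][j] = leave
            ready = leave
    lead_times = [depart[n][-1] - arrival[n] for n in range(n_orders)]
    flow_times = [depart[n][-1] - first_start[n] for n in range(n_orders)]
    return lead_times, flow_times


lead, flow = simulate_flow_line(2000, lam=0.9, mu=(1.2, 1.1, 1.3), buffers=(None, 10, 10))
```

Because all random draws are made before the recursion, running the normal scenario and a failure scenario with the same seed compares them on identical demand and processing-time samples, so any change in lead time is attributable to the outage alone.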

5.1.1 Simulation model validation

The simulation model is validated using the closed-form model given by Buzacott et al. (1993) for a particular system configuration. That configuration assumes an infinite number of orders in front of the supplier. In addition, buffer capacity is not allowed at either the manufacturer or the distributor. The calculated rate at which orders leave the system (output rate) for that configuration, using the closed-form model, is compared to the estimated rate from the simulation model.

The simulation model is validated before the data sets required to verify the introduced approach are generated. A total of 916 single-day replications are used for validation. The output rate calculated from the closed-form model is 10.69 units per day, whereas the output rate estimated from the simulation model is \(10.48 \pm 0.221\) units per day at a \(99\%\) confidence level. Moreover, a Z-test comparison between the calculated and estimated rates shows no significant difference at the 0.01 significance level.
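The reported validation figures are internally consistent, as a quick calculation shows. Assuming the ±0.221 is a normal-approximation half-width (critical value times standard error), which the text does not state explicitly, the standard error is about 0.221/2.576 ≈ 0.086, so the 0.21 units per day difference gives |z| ≈ 2.45, below the two-sided critical value of 2.576 at the 0.01 level. Equivalently, 10.69 lies inside the interval 10.48 ± 0.221. The function name is hypothetical.

```python
from statistics import NormalDist


def z_test_from_interval(estimate, half_width, reference, confidence=0.99):
    """Two-sided Z-test of a simulation estimate against an analytical value.

    Assumes half_width = z_crit * standard_error (normal-approximation interval).
    Returns (z statistic, two-sided p-value).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - (1 - confidence) / 2)
    std_error = half_width / z_crit
    z = (estimate - reference) / std_error
    p_value = 2 * (1 - std_normal.cdf(abs(z)))
    return z, p_value


z, p = z_test_from_interval(estimate=10.48, half_width=0.221, reference=10.69)
print(f"z = {z:.2f}, p = {p:.3f}")
```

The p-value comes out just above 0.01, so the conclusion of no significant difference holds, although with little margin.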

5.1.2 Data sets generation

Five data sets are generated to represent the five scenarios \(S_{i}, i \in \left\{ 0, 1, 2, 3, 4 \right\} \), covering both normal and disrupted circumstances. The data set generated for each scenario is a multivariate time series consisting of 916 time records per replication. Each time step includes thirteen parameters (features): (1) interarrival time, (2) supplier processing time, (3) manufacturer processing time, (4) distributor processing time, (5) supplier queue length, (6) manufacturer queue length, (7) distributor queue length, (8) work in process, (9) lead time, (10) flow time, (11) waiting time, (12) processing time, and (13) daily output.

Each disruptive event has a direct impact on some input features in the generated data sets. The surge in demand is represented by a decrease in feature (1), which consequently increases features (5), (9), and (11). The second type of disruptive event, capacity loss at any echelon, disrupts the whole system and affects features (2–13). For example, under capacity loss at the supplier, some of the affected features are impacted directly, such as feature (2), and others are impacted indirectly, such as feature (6), because of the discontinuity of the incoming material flow from the supplier caused by the disruptive event.

5.2 The disruption detection module

A semi-supervised hybrid deep learning approach is adopted to detect disruptions in the above-mentioned virtual supply chain, as depicted in Fig. 5. The monitored supply chain parameters and performance metrics produce a multivariate time series with multiple time-dependent variables. Consequently, each variable may depend on other variables in addition to time, which makes building an accurate model for disruption detection and TTR prediction a complex task. A hybrid deep learning-based approach is therefore adopted to tackle this challenge through automatic feature extraction and learning of the underlying patterns in the input data.

5.2.1 Data preprocessing

The input time series data are split into training, validation, and test sets using a split ratio of 60%, 20%, and 20%, respectively, for all scenarios. Because the input variables are measured on different scales, the inputs are normalised using a min–max scaler, Eq. (1):

\[ x_{norm}^{i} = \frac{x^{i} - x_{min}^{i}}{x_{max}^{i} - x_{min}^{i}}, \quad i = 1, 2, \ldots , k \tag{1} \]

where \(x_{norm}^{i}\) denotes the normalised vector of the i-th time-varying variable, \(x_{min}^{i}\) and \(x_{max}^{i}\) are the minimum and maximum values of vector \(x^{i}\), and k is the number of variables in the multivariate time series. Because the time series are relatively long, a sliding window of size 14 is applied as a further preprocessing step. Afterwards, the deep autoencoder and the OCSVM algorithm detect disruptions based on the first principal component of the reconstruction error. Moreover, two LSTM neural networks are used to identify the disrupted echelon and predict TTR.
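The preprocessing steps can be sketched as follows. This is a minimal NumPy illustration under assumptions that the text does not state: the split is chronological, the min–max statistics are computed on the training portion only (so that no test information leaks into the scaler), constant features are mapped to zero instead of causing a division by zero, and windows advance with a stride of one. All function names are hypothetical.

```python
import numpy as np


def chronological_split(series, ratios=(0.6, 0.2, 0.2)):
    """Split a (time, features) array into train/validation/test blocks without shuffling."""
    n = len(series)
    n_train = int(n * ratios[0])
    n_val = int(n * ratios[1])
    return series[:n_train], series[n_train:n_train + n_val], series[n_train + n_val:]


def fit_min_max(train):
    """Per-feature minima and maxima, estimated on the training block only."""
    return train.min(axis=0), train.max(axis=0)


def apply_min_max(x, x_min, x_max):
    """Eq. (1) applied column-wise; constant features are mapped to zero."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return (x - x_min) / span


def sliding_windows(x, window=14):
    """Stride-1 windows: (time, features) -> (time - window + 1, window, features)."""
    return np.stack([x[t:t + window] for t in range(len(x) - window + 1)])
```

With 916 records of 13 features, the split yields 549, 183, and 184 records, and the training block becomes 536 windows of shape 14 × 13. Validation and test values scaled with training statistics may fall slightly outside [0, 1], which is expected and harmless for anomaly scoring.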

5.2.2 Disruption detection

A deep autoencoder with three encoder-decoder layer pairs and a central coding layer is developed to reconstruct the inputs. The hidden layers have 256, 128, and 64 units, and the coding layer has 32 units. The learning rate and batch size are set to \(10^{-4}\) and 128, respectively. The autoencoder is trained for 1000 epochs on input data representing normal circumstances, generated from scenario \(S_{0}\). An epoch is one complete pass of the model over the training data set.
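The sketch below lists the layer widths and counts the trainable parameters, which serves as a quick check on the architecture. It assumes fully connected (dense) layers with biases, a decoder that mirrors the encoder, and a 182-element input (the flattened 14 × 13 window described in Section 6.2). The text gives only the layer widths, so these layout details are assumptions. The helper names are hypothetical.

```python
def layer_sizes(input_dim=182, hidden=(256, 128, 64), coding=32):
    """Mirrored widths: input -> hidden layers -> coding layer -> reversed hidden -> input."""
    encoder = [input_dim, *hidden, coding]
    decoder = [*reversed(hidden), input_dim]
    return encoder + decoder

def dense_param_count(sizes):
    """Weights plus biases of a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

sizes = layer_sizes()
print(sizes)                     # [182, 256, 128, 64, 32, 64, 128, 256, 182]
print(dense_param_count(sizes))  # 180310
```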

First, the OCSVM algorithm is trained on the first principal component of the absolute error vectors obtained for the test set under normal circumstances only (scenario \(S_{0}\)). Then, the OCSVM algorithm is tested on the test sets under disrupted circumstances, i.e. scenarios \(S_{i}\) \(\forall i \in \left\{ 1, 2, 3, 4 \right\} \). The model hyperparameters \(\nu \) and \(\gamma \) are set to 0.025 and 100, respectively. The first hyperparameter, \(\nu \), controls the model's sensitivity. It is an upper bound on the fraction of training observations treated as outliers and a lower bound on the fraction of support vectors. The second hyperparameter, \(\gamma \), is the kernel coefficient that controls the shape of the decision boundary. High values of \(\nu \) lead to a more sensitive model, while high values of \(\gamma \) lead to overfitting the training data. Balancing model sensitivity against the other performance metrics is discussed at the end of this section.
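The following sketch shows the detection step with scikit-learn's `OneClassSVM`, using the reported \(\nu = 0.025\) and \(\gamma = 100\). The RBF kernel (the scikit-learn default) is an assumption. The first-principal-component values are synthetic placeholders for illustration only and are not the paper's data.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(42)
# Hypothetical first-principal-component scores under normal circumstances (S0).
pc_normal_train = rng.normal(loc=-0.5, scale=0.05, size=(500, 1))
pc_normal_test = rng.normal(loc=-0.5, scale=0.05, size=(200, 1))
# Hypothetical scores under disruption: markedly higher values.
pc_disrupted = rng.normal(loc=2.0, scale=0.3, size=(50, 1))

# nu and gamma as reported; the RBF kernel is an assumption.
detector = OneClassSVM(kernel="rbf", nu=0.025, gamma=100)
detector.fit(pc_normal_train)          # trained on normal data only

# predict() returns +1 for inliers (normal) and -1 for outliers (disruption).
normal_flags = detector.predict(pc_normal_test)
disrupted_flags = detector.predict(pc_disrupted)
print("false alarm share on normal data:", np.mean(normal_flags == -1))
print("share of disrupted points flagged:", np.mean(disrupted_flags == -1))
```

Because the model sees only normal data during training, it needs no labelled examples of each disruption type. Any observation that falls far from the learned support is flagged.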

The OCSVM model outputs are mapped to a labelled data set for further performance evaluation. The selected performance metrics for the disruption detection model are (1) accuracy, (2) precision, (3) recall, and (4) F1-score. The accuracy, Eq. (2), describes overall model performance as the ratio of correctly identified observations to the total number of observations. The precision, Eq. (3), is the ratio of correctly identified normal observations to the total number of observations identified as normal. The recall, Eq. (4), defines the model sensitivity as the ratio of correctly identified normal observations to the total number of normal observations. Finally, the F1-score, Eq. (5), is the harmonic mean of precision and recall.

\[ \text{Accuracy} = \frac{\text{TP} + \text{TN}}{\text{TP} + \text{FP} + \text{FN} + \text{TN}} \qquad (2) \]

\[ \text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}} \qquad (3) \]

\[ \text{Recall} = \frac{\text{TP}}{\text{TP} + \text{FN}} \qquad (4) \]

\[ \text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (5) \]

where \(\text {TP}\), \(\text {FP}\), \(\text {FN}\), and \(\text {TN}\) are the numbers of true positives, false positives, false negatives, and true negatives, with normal observations as the positive class. True positives are normal observations correctly identified as normal. False positives are abnormal observations incorrectly identified as normal. False negatives are normal observations incorrectly identified as abnormal. True negatives are abnormal observations correctly identified as abnormal.
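The metric definitions in Eqs. (2)–(5) can be checked with a few lines of plain Python. The sketch follows the labelling convention in the text, where normal observations form the positive class. It assumes OCSVM-style labels: +1 for normal and -1 for abnormal.

```python
def detection_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 with 'normal' (= positive) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # normal -> normal
    fp = sum(t != positive and p == positive for t, p in pairs)  # abnormal -> normal
    fn = sum(t == positive and p != positive for t, p in pairs)  # normal -> abnormal
    tn = sum(t != positive and p != positive for t, p in pairs)  # abnormal -> abnormal
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: +1 = normal, -1 = abnormal.
y_true = [1, 1, 1, 1, -1, -1]
y_pred = [1, 1, 1, -1, 1, -1]
print(detection_metrics(y_true, y_pred))
```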

To give the decision-maker more relevant measures, two additional performance measures are introduced: (1) lag and (2) false-positive percentage. The lag is the delay encountered in detecting a disruption. The false-positive percentage is the share of false alarms, i.e. observations before the disruption occurs that are incorrectly classified as disrupted. These measures clarify how changing the model hyperparameters affects model performance.
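The two decision-oriented measures can be computed per replication as sketched below. The exact definitions are assumptions inferred from the description:

- there is one observation per day and the disruption onset index is known;
- lag runs from the onset to the first observation flagged as abnormal (-1);
- the false-positive percentage is computed over pre-onset observations only.

The function names are hypothetical.

```python
def detection_lag(flags, onset):
    """Days from disruption onset to the first observation flagged as abnormal (-1).

    Returns None if the disruption is never detected in this replication."""
    for t in range(onset, len(flags)):
        if flags[t] == -1:
            return t - onset
    return None

def false_positive_percentage(flags, onset):
    """Percentage of pre-disruption observations incorrectly flagged as abnormal."""
    before = flags[:onset]
    if not before:
        return 0.0
    return 100.0 * sum(f == -1 for f in before) / len(before)

# Hypothetical daily detector output for one replication; disruption starts on day 5.
flags = [1, 1, -1, 1, 1, 1, 1, -1, -1, 1]
print(detection_lag(flags, onset=5))              # 2
print(false_positive_percentage(flags, onset=5))  # 20.0
```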

The hyperparameters of the OCSVM-based disruption detection model are selected by grid search. The main objective is to find the combination of hyperparameter values that performs best across the different performance measures. Figure 6 summarises the grid search results for the effect of \(\nu \) and \(\gamma \) on each performance measure. The x-axis shows \(\nu \) on a linear scale, and the y-axis shows \(\gamma \) on a log scale. Good model performance corresponds to high accuracy and F1-score combined with a low false alarm percentage. Better performance is obtained for \(\nu \) below 0.1 and moderate values of \(\gamma \) between 0.1 and 100.

Further analysis examines the individual effect of each hyperparameter on the mean lag and false alarms, holding the other hyperparameter fixed, within the range where good model performance was observed. Figure 7a, b show the effect of changing \(\nu \) for several fixed values of \(\gamma \). The x-axis represents \(\nu \), and the y-axis represents the performance measure value. The graphs indicate that \(\gamma \) barely affects the performance measures, whereas \(\nu \) significantly affects the model's performance for \(\nu \le 0.1\).

Figure 7c, d examine the effect of changing \(\gamma \) for several fixed values of \(\nu \le 0.1\). The x-axis represents \(\gamma \) on a log scale, and the y-axis represents the performance measure value. For \(\gamma \in \left[ 0.01, 1000 \right] \), \(\gamma \) has no significant effect on the performance measures compared with \(\nu \), which significantly affects the model's performance. Increasing \(\nu \) markedly shortens the mean lag but produces more false alarms. Therefore, \(\nu \) and \(\gamma \) are selected to achieve the shortest possible lag with the fewest false alarms.
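The grid search and the lag versus false alarm trade-off can be reproduced in miniature on synthetic data. This sketch uses scikit-learn's `OneClassSVM` together with a hypothetical selection rule: choose the shortest lag among settings with at most 5% false alarms. The grid values, the synthetic series, and the 5% limit are illustrative assumptions, not values from the paper.

```python
import itertools
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
# Hypothetical first-principal-component scores under normal circumstances.
train = rng.normal(-0.5, 0.05, size=(400, 1))
# One hypothetical test replication: 60 normal days, then a gradual 30-day disruption.
onset = 60
test = np.concatenate([
    rng.normal(-0.5, 0.05, size=onset),
    np.linspace(-0.5, 2.0, 30) + rng.normal(0.0, 0.05, size=30),
]).reshape(-1, 1)

def evaluate(nu, gamma):
    """Return (false-positive percentage before onset, lag in days or None)."""
    flags = OneClassSVM(kernel="rbf", nu=nu, gamma=gamma).fit(train).predict(test)
    fp_pct = 100.0 * np.mean(flags[:onset] == -1)
    detected = np.flatnonzero(flags[onset:] == -1)
    lag = int(detected[0]) if detected.size else None
    return fp_pct, lag

results = {
    (nu, gamma): evaluate(nu, gamma)
    for nu, gamma in itertools.product([0.01, 0.025, 0.05, 0.1], [0.1, 1, 10, 100])
}

# Hypothetical rule: among settings with <= 5 % false alarms, take the shortest lag.
acceptable = {k: v for k, v in results.items() if v[1] is not None and v[0] <= 5.0}
best = min(acceptable, key=lambda k: (acceptable[k][1], acceptable[k][0])) if acceptable else None
print("selected (nu, gamma):", best, "->", results.get(best))
```

In practice, the synthetic series would be replaced by the first-principal-component vectors of every replication. The lag would also be averaged across replications, as the text does with the mean lag.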

5.2.3 Disrupted echelon identification

An LSTM neural network classifier is developed to identify the disrupted echelon upon disruption detection. The LSTM classifier is trained in a fully supervised manner. Therefore, the input class sequence is converted from a class vector to a binary class matrix using one-hot encoding. Then, the train and validation sequences are used to train the classifier with a learning rate of \(10^{-4}\) and a batch size of 32 for 20 epochs. Finally, the classifier is tested using the test set. The LSTM neural network classification model consists of two LSTM layers. Each layer has 16 units and a dropout rate of 0.1.
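Keras provides the conversion from a class vector to a binary class matrix as `to_categorical`. A dependency-free NumPy equivalent is sketched below. The integer coding of classes (0 = normal, 1 = recovery, 2 and above = disrupted echelons) is a hypothetical example.

```python
import numpy as np

def one_hot(class_vector, num_classes=None):
    """Convert integer class labels to a binary class matrix (one row per observation)."""
    class_vector = np.asarray(class_vector, dtype=int)
    if num_classes is None:
        num_classes = int(class_vector.max()) + 1
    matrix = np.zeros((class_vector.size, num_classes), dtype=np.float32)
    matrix[np.arange(class_vector.size), class_vector] = 1.0  # set the true class column
    return matrix

# Hypothetical labels: 0 = normal, 1 = recovery, 2.. = disrupted echelons.
labels = [0, 0, 2, 2, 1, 0]
print(one_hot(labels, num_classes=5))
```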

5.2.4 Time-to-recovery prediction

An LSTM neural network-based model is developed to predict TTR using the incoming signal from the simulation model for different parameters and metrics. Different hyperparameter values are tested, and the best-performing set is chosen to predict the TTR based on the minimum validation loss. These hyperparameters are used to develop four TTR prediction models by considering a single disruption scenario at a time. The four TTR prediction models correspond to the four potential disruption scenarios \(S_{i}, i \in \left\{ 1, 2, 3, 4 \right\} \).

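Each model has two LSTM layers with 64 LSTM units each. The learning rate is set to \(10^{-4}\), and the dropout rate is 0.1 for each layer. L1 regularisation is applied to the first layer with a regularisation factor of \(10^{-3}\). Each model is trained with a batch size of 16 for 20 epochs. Each model is evaluated using (1) Mean Absolute Error (MAE), (2) Mean Squared Error (MSE), (3) Root Mean Squared Error (RMSE), and (4) Mean Absolute Percentage Error (MAPE), given by Eqs. (6), (7), (8), and (9), respectively.

\[ \text{MAE} = \frac{1}{N} \sum_{i=1}^{N} \left| y_{i} - {\hat{y}}_{i} \right| \qquad (6) \]

\[ \text{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left( y_{i} - {\hat{y}}_{i} \right)^{2} \qquad (7) \]

\[ \text{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( y_{i} - {\hat{y}}_{i} \right)^{2}} \qquad (8) \]

\[ \text{MAPE} = \frac{100\%}{N} \sum_{i=1}^{N} \left| \frac{y_{i} - {\hat{y}}_{i}}{y_{i}} \right| \qquad (9) \]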

where N is the number of TTR observations, while y and \({\hat{y}}\) represent the actual and predicted TTR vectors.
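Eqs. (6)–(9) translate directly into NumPy. One practical assumption is made here: observations whose actual TTR is zero (for example, after recovery) are excluded from MAPE, because the relative error is undefined there. The text does not say how such observations are handled.

```python
import numpy as np

def ttr_errors(y_true, y_pred):
    """MAE, MSE, RMSE and MAPE (%) between actual and predicted TTR vectors."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mse = np.mean(err ** 2)
    nonzero = y_true != 0  # assumption: skip zero actual TTR, where MAPE is undefined
    return {
        "MAE": np.mean(np.abs(err)),
        "MSE": mse,
        "RMSE": np.sqrt(mse),
        "MAPE": 100.0 * np.mean(np.abs(err[nonzero] / y_true[nonzero])),
    }

# Hypothetical actual vs predicted TTR values in days.
print(ttr_errors([10, 20, 40], [8, 22, 40]))
```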

6 Results

The data generated for the virtual supply chain are used to verify the proposed approach. This section evaluates the performance of the three modules, namely disruption detection, disrupted echelon identification, and time-to-recovery prediction.

6.1 Simulation-generated data sets

After the simulation model was validated, a single data set was generated for each scenario. Each data set was then labelled, normalised, and split into train, validation, and test sets. The train and validation sets of scenario \(S_{0}\) were used to train the deep autoencoder model. The \(S_{0}\) test set was used to test the deep autoencoder model and the OCSVM algorithm. The test sets of scenarios \(S_{i}\) \(\forall i \in \left\{ 1, 2, 3, 4 \right\} \) were used to test the deep autoencoder model, evaluate the OCSVM algorithm, and test the disrupted echelon classification model and the TTR prediction models. The disrupted echelon classification model and the TTR prediction models were trained and validated on the train and validation sets of scenarios \(S_{i}\) \(\forall i \in \left\{ 1, 2, 3, 4 \right\} \).

6.2 Disruption detection using deep autoencoders and one-class support vector machine algorithm

The deep autoencoder is trained on sequences of \(14\ \text {timesteps} \times 13\ \text {features}\). These sequences are generated by a sliding window of size 14, which corresponds to a biweekly stream of data. This window captures changes due to disruptive events in a reasonably timely manner compared with the 116 days used in Ashraf et al. (2022). Each input sequence is flattened into a one-dimensional vector of \(14 \times 13 = 182\) elements, because the autoencoder accepts only one-dimensional inputs. The MAE function is used as the autoencoder loss and measures the difference between the actual values and the model's reconstructions. The learning curve in Fig. 8 compares the model loss on the training and validation data sets. The small gap between the two curves indicates a good fit of the autoencoder model. The loss decreases sharply during the first 100 epochs and then gradually, stabilising at around epoch 900.
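The following sketch illustrates the windowing and flattening step. It assumes a stride of one day between consecutive windows, since the stride is not stated. Row-major flattening places the 13 features of the first day in the first 13 elements, followed by the second day, and so on. The function name is hypothetical.

```python
import numpy as np

def sliding_windows(series, window=14, step=1):
    """Stack overlapping windows of shape (window, n_features) from a (time, n_features) array."""
    starts = range(0, series.shape[0] - window + 1, step)
    return np.stack([series[s:s + window] for s in starts])

series = np.random.default_rng(1).random((100, 13))  # hypothetical 100 days x 13 features
windows = sliding_windows(series)                     # shape (87, 14, 13)
flat = windows.reshape(len(windows), -1)              # shape (87, 182): one input per row
print(windows.shape, flat.shape)
```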

After training, the autoencoder model is used to compute the absolute reconstruction error on the test sets under normal and disrupted circumstances. The absolute reconstruction error \(e_{t}^{i}\) for feature i at time t is given by Eq. (10).

\[ e_{t}^{i} = \left| x_{t}^{i} - {\hat{x}}_{t}^{i} \right| \qquad (10) \]

where \(x_{t}^{i}\) and \({\hat{x}}_{t}^{i}\) are the actual and reconstructed values of feature i at time t in the test set, respectively. The first principal component of the reconstruction error differs markedly between normal and abnormal circumstances. Under normal circumstances, the vast majority of its values fall below \(-0.2\). These values are markedly lower than those under disruption and recovery, which range between \(-0.5\) and 3.5.

The OCSVM algorithm is then trained on the first principal component vector of the reconstruction error under normal circumstances, which defines the positive class. The first principal component explains \(92.39\%\) of the overall variability in the absolute reconstruction error across the input features. Finally, the trained OCSVM model is applied to the first principal component vector under disrupted circumstances to detect disruptions. Table 2 shows the performance evaluation results for the disruption detection model.
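The sketch below computes the absolute reconstruction error of Eq. (10) and extracts its first principal component with a singular value decomposition. The reconstructions are synthetic stand-ins for autoencoder outputs. Fitting the principal axis on errors under normal circumstances and reusing it for disrupted data is an assumption about the pipeline. The helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(7)
n_obs, n_inputs = 200, 182          # 182 = flattened 14 x 13 window
x = rng.random((n_obs, n_inputs))   # hypothetical actual inputs
# Hypothetical reconstructions whose errors share one common factor plus small noise.
common = rng.random((n_obs, 1)) @ rng.random((1, n_inputs))
x_hat = x - common - 0.01 * rng.random((n_obs, n_inputs))

errors = np.abs(x - x_hat)          # Eq. (10): element-wise absolute reconstruction error

def fit_first_component(err):
    """Column means, first principal axis, and share of variance it explains."""
    mean = err.mean(axis=0)
    _, s, vt = np.linalg.svd(err - mean, full_matrices=False)
    return mean, vt[0], s[0] ** 2 / np.sum(s ** 2)

def project(err, mean, axis):
    """Scores on the first principal axis (the sign of the axis is arbitrary)."""
    return (err - mean) @ axis

mean, axis, explained = fit_first_component(errors)   # fit on normal-circumstance errors
scores_normal = project(errors, mean, axis)           # 1-D input for the OCSVM
print(f"variance explained by the first component: {explained:.2%}")
```

Because the sign of a principal axis is arbitrary, disrupted observations may appear as large negative rather than large positive scores. The one-class detector is unaffected, since it only measures distance from the normal region.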

The model performs considerably differently on the two data sets (normal and disrupted circumstances). Nevertheless, the disruption detection model achieves good performance under disrupted circumstances. The recall value of \(97.6\%\) means that \(97.6\%\) of all normal observations are correctly identified as normal.

Observations under normal circumstances are misclassified because of the model's sensitivity to outliers in the training data. The reconstruction error is affected by noise representing instantaneous disruptions (operational variability). This variability produces extremely low or high values of the first principal component of the reconstruction error under normal circumstances, which degrades the OCSVM algorithm's performance. The model's sensitivity to such variability therefore requires further investigation.

The false-positive percentage, i.e. the percentage of false alarms prior to disruption, is \(2.5\%\). The false alarm count is 1530, which corresponds to roughly seven incorrect observations per replication. The average delay in disruption detection (lag) is 7.1 days. Figure 9 shows the lag distribution. The maximum and median lag values are 23 and 4 days, respectively.

Despite the apparently good model performance, the realised lag may be a concern, depending on how quickly disruptions must be detected. The trade-off between shorter delays and fewer false alarms depends on the model sensitivity, which is controlled by the hyperparameter \(\nu \). Small values of \(\nu \) yield few false alarms, but they make the disruption detection model less sensitive to disruptions (anomalies). As a result, the maximum lag (delay) increases significantly. Large values of \(\nu \) minimise the detection delay. However, the model then becomes too sensitive to operational variability, leading to many false alarms and poor accuracy, precision, recall, and F1-score. The decision-maker should therefore agree on acceptable limits for these performance measures and choose \(\nu \) accordingly. One suggested solution is to favour shorter delays and to handle the resulting false alarms with the proposed LSTM neural network classification model.

Figure 10 plots the first principal component of the absolute reconstruction error against time for a single replication under different scenarios. The left y-axis represents the first principal component, while the right y-axis represents the corresponding metric or performance measure for each scenario, in days. The first principal component is notably higher under all disrupted scenarios than under normal circumstances. Red dots mark anomalous points. Some points before the estimated recovery are classified as normal. Because the data are labelled using a predefined threshold, these points lower the model's performance measures.

6.3 Disrupted echelon identification using long-short term memory neural network model

The LSTM model for disrupted echelon identification is trained to learn the patterns in the multivariate time series, using the train and validation data sets of scenarios \(S_{i}\) \(\forall i \in \left\{ 1, 2, 3, 4 \right\} \). The model predicts the most likely class to which a given sequence belongs. Input data are labelled by disrupted echelon, recovery phase, and normal circumstances (the pre-disruption and post-recovery phases). The categorical cross-entropy function J, Eq. (11), is used as the training loss (Géron 2019).

\[ J = -\sum_{k=1}^{N} y_{i, k} \log \left( p_{i, k} \right) \qquad (11) \]

where N is the number of classes, \(y_{i, k} \in \left\{ 0, 1 \right\} \) is a binary indicator of whether class label k is the correct classification for observation i, and \(p_{i, k} \in \left[ 0, 1 \right] \) is the predicted probability that observation i belongs to class k. Lower cross-entropy values indicate that the predicted class probabilities are closer to the actual labels. The learning curve in Fig. 11 shows a significant loss decrease after a few epochs.
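The following NumPy function provides a small check of the categorical cross-entropy in Eq. (11). Averaging the per-observation loss over a batch is an assumption, although most deep learning frameworks do so by default for this loss. The probabilities are clipped away from zero to keep the logarithm finite.

```python
import numpy as np

def categorical_cross_entropy(y_onehot, probs, eps=1e-12):
    """Mean over observations of -sum_k y_{i,k} * log(p_{i,k})."""
    probs = np.clip(probs, eps, 1.0)                   # avoid log(0)
    per_observation = -np.sum(y_onehot * np.log(probs), axis=1)
    return float(np.mean(per_observation))

# Hypothetical one-hot labels and predicted class probabilities (3 classes).
y = np.array([[1, 0, 0], [0, 1, 0]])
p = np.array([[0.8, 0.1, 0.1], [0.25, 0.5, 0.25]])
print(categorical_cross_entropy(y, p))   # about 0.458
```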

Once trained, the LSTM neural network model for disrupted echelon identification is tested on the test data and evaluated using precision, recall, and F1-score. Overall, the model performs well except in identifying the recovery phase (Table 3). The precision for the recovery class is strongly reduced by observations from the normal class that are misclassified as recovery, as the confusion matrix in Fig. 12 shows. The confusion matrix summarises the LSTM model's classification results as counts for each class.

6.4 Time-to-recovery prediction using long-short term memory neural network models

The TTR is predicted with an LSTM neural network prediction model. The model is trained to predict TTR from multivariate inputs, considering a single disruption scenario at a time. Therefore, four prediction models are developed, one for each disruption scenario \(S_{i}, i \in \left\{ 1, 2, 3, 4 \right\} \). The training and validation data sets are used to train the proposed models, and the MAE function is used as the loss for each model. All four models show a rapid loss decrease within the first few epochs and stabilise after the eighth epoch (Fig. 13).

The TTR prediction models are tested on the test sets of the different disruption scenarios \(S_{i}, i \in \left\{ 1, 2, 3, 4 \right\} \). The performance evaluation results in Table 4 show that the proposed models perform much better than those of Ashraf et al. (2022) for all disruption scenarios. Reducing the number of input features significantly improved the performance of the TTR prediction models.

After testing, the actual and predicted TTR values are compared across replications. Figure 14 shows the TTR values at a randomly selected time step, t. The predicted TTR values tend to be slightly lower than the actual ones, although in many cases the differences are minor. The TTR prediction error is the difference between the actual and predicted TTR values. Figure 15 shows the prediction error for the data in Fig. 14. Large positive deviations occur at the early disruption stages.

The progression of predicted TTR values is further examined for a single replication considering different disruption scenarios, Fig. 16. A short delay in TTR prediction is observed at early disruption stages. That delay is followed by a higher TTR prediction than the actual. By the end of the disruption, the predicted TTR values tend to be close to the actual ones.

7 Managerial implications

Data-driven digital supply chain twins (DSCTs) hold significant potential for boosting the overall performance and resilience of supply chain operations. The enhanced end-to-end supply chain visibility enabled by DSCTs helps decision-makers navigate the complexities of supply chains under disruptive events. DSCTs can monitor and determine the supply chain state in real time and provide timely, accurate information that supports effective decision-making. Integrating the models proposed in this paper into a cognitive digital supply chain twin further amplifies this potential, so that decision-makers can base their decisions on real-time disruption detection data.

Early disruption detection is a key advantage of incorporating the introduced model in DSCTs. Early detection allows recovery policies to be deployed early, which minimises the negative impact of the disruption on supply chain performance. This leads to faster supply chain recovery and improved supply chain resilience. In addition, identifying the disrupted echelon at early disruption stages allows decision-makers to explore alternative strategies that can effectively mitigate the disruption impact. Furthermore, obtaining the predicted time-to-recovery (TTR) at early stages provides an estimate of the required duration of contractual agreements, where applicable, when different recovery options are considered.

Finally, data-driven DSCTs would not only improve supply chain visibility and decision-making capabilities but also enable a supply chain that is more resilient and adaptive to unforeseen disruptive events. This is increasingly important given the global disruptions that have taken place since 2020. Examples include COVID-19, the Suez Canal blockage, global supply chain crises, and the current wars in Europe, the Middle East and Africa. This proactive approach to supply chain disruption management can reduce downtime and delays and foster supply chain recovery. As organizations aim to respond efficiently to uncertainties, DSCTs emerge as a means to maintain a competitive edge in digital supply chains.

8 Conclusion

This paper introduced a new hybrid deep learning-based approach for disruption detection within a data-driven cognitive digital supply chain twin framework. The first research question asked: “Is there a way to exploit the benefit of cognitive digital twins in the field of supply chain disruption management?” In answer, the presented approach contributes to supply chain disruption management by offering better end-to-end supply chain visibility. This visibility enhances supply chain resilience by enabling real-time disruption detection, disrupted echelon identification, and time-to-recovery prediction. The developed modules allow the CDSCT to detect disruptions by combining a deep autoencoder neural network with a one-class support vector machine classification algorithm. If a disruption is detected, long-short term memory neural network models then identify the disrupted echelon and predict the time-to-recovery from the disruption. The second research question asked: “How to validate the introduced framework for incorporating cognitive digital twins into supply chain disruption management?” In answer, the presented framework is validated under several potential disruption scenarios in a virtual three-echelon supply chain. The disruption scenarios covered a surge in demand and unexpected failures at any echelon.

The results indicated a trade-off between disruption detection model sensitivity, the delay until disruption detection, and the false alarm count. Given the excellent performance of the proposed disrupted echelon identification model, it could replace the former disruption detection approach based on the deep autoencoder and the one-class support vector machine algorithm. However, the OCSVM-based anomaly detection model remains indispensable because it does not require an exhaustive definition of all possible disruption scenarios. The developed time-to-recovery prediction models revealed that predicted time-to-recovery values tend to be lower than the actual ones at early disruption stages. These predictions then improve as the disruption progresses, with slight variation.

Current research limitations include (1) the difficulty of accurately identifying the transition of the system from a disrupted state to a fully recovered one, (2) the consideration of a single type of disruption at a time, (3) the fact that, as a first initiative, the introduced approach has only been tested on simulation-generated data sets, (4) scalability to more than three echelons, and (5) responding to supply chain structural changes. Future work may include (1) investigating the concurrent occurrence of more than one disruption type, (2) developing a dynamic forecast model to forecast possible supply chain states upon disruption detection, (3) integrating the cognitive digital supply chain twin with an optimization engine to optimize operational decisions and enhance supply chain resilience, (4) examining the performance of other machine learning algorithms, such as transformers and attention-based neural networks, for anomaly detection, (5) applying the introduced framework to a real-world case, (6) exploring federated learning to enable scalability across many echelons without reinventing the wheel for already existing echelons, and (7) exploring reinforcement learning to better accommodate supply chain structural dynamics and goals that change over time.

Availability of data and materials

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Ashraf M, Eltawil A, Ali I (2022) Time-to-recovery prediction in a disrupted three-echelon supply chain using LSTM. IFAC-PapersOnLine 55(10):1319–1324. https://doi.org/10.1016/j.ifacol.2022.09.573

Burgos D, Ivanov D (2021) Food retail supply chain resilience and the COVID-19 pandemic: a digital twin-based impact analysis and improvement directions. Transport Res Part E: Logist Transport Rev 152:102412. https://doi.org/10.1016/j.tre.2021.102412

Buzacott JA, Shanthikumar JG (1993) Stochastic models of manufacturing systems. Prentice-Hall International, London

Cavalcante IM, Frazzon EM, Forcellini FA, Ivanov D (2019) A supervised machine learning approach to data-driven simulation of resilient supplier selection in digital manufacturing. Int J Inf Manage 49:86–97. https://doi.org/10.1016/j.ijinfomgt.2019.03.004

Chen L, Miller-Hooks E (2012) Resilience: an indicator of recovery capability in intermodal freight transport. Transport Sci 46(1):109–123. https://doi.org/10.1287/trsc.1110.0376

Eshkenazi A (2022) Russia’s invasion of Ukraine: the supply chain implications. Association for Supply Chain Management. https://www.ascm.org/ascm-insights/scm-now-impact/russias-invasion-of-ukraine-the-supply-chain-implications/

Falasca M, Zobel C, Cook D (2008) A decision support framework to assess supply chain resilience. In: Proceedings of ISCRAM 2008, 5th international conference on information systems for crisis response and management, 596–605. https://www.scopus.com/inward/record.uri?eid=2-s2.0-84893678362&partnerID=40&md5=29f8388ca656f4441f3b8a096098ca0f

Feng E (2021) Why China has to ration electricity and how that could affect everyone. NPR. https://www.npr.org/2021/10/01/1042209223/why-covid-is-affecting-chinas-power-rations

Frazzon EM, Freitag M, Ivanov D (2020) Intelligent methods and systems for decision-making support: toward digital supply chain twins. Int J Inf Manage 57:102281. https://doi.org/10.1016/j.ijinfomgt.2020.102281

Gao L, Yang N, Zhang R, Luo T (2017) Dynamic supply risk management with signal-based forecast, multi-sourcing, and discretionary selling. Prod Oper Manag 26(7):1399–1415. https://doi.org/10.1111/poms.12695

Gartner (2018) Gartner predicts 2019 for supply chain operations. gartner.com/smarterwithgartner/gartner-predicts-2019-for-supply-chain-operations

Gartner (2022) 5 Strategic supply chain predictions for 2022. https://www.gartner.com/en/articles/the-rise-of-the-ecosystem-and-4-more-supply-chain-predictions

Ghadge A, Wurtmann H, Seuring S (2019) Managing climate change risks in global supply chains: a review and research agenda. Int J Prod Res 58(1):1–21. https://doi.org/10.1080/00207543.2019.1629670

Golan MS, Trump BD, Cegan JC, Linkov I (2021) Supply chain resilience for vaccines: review of modeling approaches in the context of the COVID-19 pandemic. Ind Manag Data Syst 121(7):1723–1748. https://doi.org/10.1108/imds-01-2021-0022

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press. http://www.deeplearningbook.org

Géron A (2019) Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow (2nd ed). O’Reilly Media, Inc, Canada

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735

Hosseini S, Ivanov D, Dolgui A (2019) Review of quantitative methods for supply chain resilience analysis. Transport Res Part E: Logist Transport Rev 125:285–307. https://doi.org/10.1016/j.tre.2019.03.001

Ivanov D (2021) Introduction to Supply chain resilience, management, modelling, technology (1st ed). Springer, Cham. https://doi.org/10.1007/978-3-030-70490-2

Ivanov D, Dolgui A (2020) A digital supply chain twin for managing the disruption risks and resilience in the era of Industry 4.0. Prod Plan Control 32(9):1–14. https://doi.org/10.1080/09537287.2020.1768450

Ivanov D, Dolgui A, Das A, Sokolov B (2019) Digital supply chain twins: managing the ripple effect, resilience, and disruption risks by data-driven optimization, simulation, and visibility. In: Handbook of ripple effects in the supply chain (pp 309–332). Springer, Cham. https://doi.org/10.1007/978-3-030-14302-2_15

Ivanov D, Sethi S, Dolgui A, Sokolov B (2018) A survey on control theory applications to operational systems, supply chain management, and Industry 4.0. Annu Rev Control 46:134–147. https://doi.org/10.1016/j.arcontrol.2018.10.014

Kalaboukas K, Rožanec J, Košmerlj A, Kiritsis D, Arampatzis G (2021) Implementation of cognitive digital twins in connected and agile supply networks-an operational model. Appl Sci 11(9):4103. https://doi.org/10.3390/app11094103

Lee D, Lee S (2021) Digital twin for supply chain coordination in modular construction. Appl Sci 11(13):5909. https://doi.org/10.3390/app11135909

Li Z, Li J, Wang Y, Wang K (2019) A deep learning approach for anomaly detection based on SAE and LSTM in mechanical equipment. Int J Adv Manuf Technol 103(1–4):499–510. https://doi.org/10.1007/s00170-019-03557-w

Lu JZ (2020) Cognitive twins for supporting decision-makings of internet of things systems. Lecture notes in mechanical engineering, 105–115. https://doi.org/10.1007/978-3-030-46212-3_7

Ma J, Perkins S (2003) Time-series novelty detection using one-class support vector machines. Proc Int Joint Conf Neural Netw 2003(3):1741–1745. https://doi.org/10.1109/ijcnn.2003.1223670

Malhotra P, Ramakrishnan A, Anand G, Vig L, Agarwal P, Shroff G (2016) LSTM-based encoder–decoder for multi-sensor anomaly detection. arXiv:1607.00148

Melnyk SA, Zobel CW, Macdonald JR, Griffis SE (2013) Making sense of transient responses in simulation studies. Int J Prod Res 52(3):617–632. https://doi.org/10.1080/00207543.2013.803626

Munoz A, Dunbar M (2015) On the quantification of operational supply chain resilience. Int J Prod Res 53(22):6736–6751. https://doi.org/10.1080/00207543.2015.1057296

Nguyen H, Tran K, Thomassey S, Hamad M (2021) Forecasting and anomaly detection approaches using LSTM and LSTM autoencoder techniques with the applications in supply chain management. Int J Inf Manage 57:102282. https://doi.org/10.1016/j.ijinfomgt.2020.102282

Pernici B, Plebani P, Mecella M, Leotta F, Mandreoli F, Martoglia R, Cabri G (2020) Agilechains: agile supply chains through smart digital twins. In: 30th european safety and reliability conference, esrel 2020 and 15th probabilistic safety assessment and management conference, psam15 2020 (pp 2678–2684). https://doi.org/10.3850/978-981-14-8593-0_3697-cd

Qamsane Y, Chen CY, Balta EC, Kao BC, Mohan S, Moyne J, Barton K (2019) A unified digital twin framework for real-time monitoring and evaluation of smart manufacturing systems. In: 2019 IEEE 15th international conference on automation science and engineering (CASE) 00, 1394–1401. https://doi.org/10.1109/coase.2019.8843269

Radosavljević AR, Lučanin VJ, Rüger B, Golubović SD (2021) Big data analytics and anomaly prediction in the cold chain to supply chain resilience. FME Trans 49(2):315–326. https://doi.org/10.5937/fme2102315l

Răileanu S, Borangiu T, Ivănescu N, Morariu O, Anton F (2019) Integrating the digital twin of a shop floor conveyor in the manufacturing control system. Service oriented, holonic and multi-agent manufacturing systems for industry of the future (pp 134–145). Springer. https://doi.org/10.1007/978-3-030-27477-1_10

Schölkopf B, Williamson RC, Smola A, Shawe-Taylor J, Platt JC (1999) Support vector method for novelty detection. Advances in neural information processing systems (vol. 12, pp 582–588)

Spiegler V, Potter A, Naim M, Towill D (2015) The value of nonlinear control theory in investigating the underlying dynamics and resilience of a grocery supply chain. Int J Prod Res 54(1):265–286. https://doi.org/10.1080/00207543.2015.1076945

Spiegler VLM, Naim MM, Wikner J (2012) A control engineering approach to the assessment of supply chain resilience. Int J Prod Res 50(21):6162–6187. https://doi.org/10.1080/00207543.2012.710764

Subasi A (2020) Chapter 3: machine learning techniques. A. Subasi (Ed.), Practical machine learning for data analysis using python (pp 91–202). Academic Press. https://www.sciencedirect.com/science/article/pii/B9780128213797000035. https://doi.org/10.1016/B978-0-12-821379-7.00003-5

Torabi S, Baghersad M, Mansouri S (2015) Resilient supplier selection and order allocation under operational and disruption risks. Transport Res Part E: Logist Transport Rev 79:22–48. https://doi.org/10.1016/j.tre.2015.03.005

Vieira AA, Dias LM, Santos MY, Pereira GA, Oliveira JA (2019) Simulation of an automotive supply chain using big data. Comput Ind Eng 137:106033. https://doi.org/10.1016/j.cie.2019.106033

Zheng X, Lu J, Kiritsis D (2021) The emergence of cognitive digital twin: vision, challenges and opportunities. Int J Prod Res, 1–23. https://doi.org/10.1080/00207543.2021.2014591

Acknowledgements

Thanks to Prof. Amin Shoukry (Department of Computer Science Engineering, Egypt-Japan University of Science and Technology, New Borg El-Arab City, Alexandria, Egypt) for his valuable guidance.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB). This work was supported by the Egyptian Ministry of Higher Education (Crossref Funder ID 10.13039/501100004532) and the Japanese International Cooperation Agency (Crossref Funder ID 10.13039/501100002385).

Author information

Contributions

All authors contributed to the study conception and design. The first draft of the manuscript was written by Mahmoud Ashraf, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest relevant to the content of this article to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ashraf, M., Eltawil, A. & Ali, I. Disruption detection for a cognitive digital supply chain twin using hybrid deep learning. Oper Res Int J 24, 23 (2024). https://doi.org/10.1007/s12351-024-00831-y

DOI: https://doi.org/10.1007/s12351-024-00831-y