Abstract

Driven by the ongoing migration towards Industry 4.0, the increasing adoption of artificial intelligence (AI) has empowered smart manufacturing and digital transformation. AI supports this migration through data-driven decision-making: by analyzing real-time data, it can optimize processes such as production planning, predictive maintenance, and quality control, with the aim of reducing costs and improving precision, efficiency, and accuracy. This paper explores how AI-driven smart manufacturing is reshaping traditional approaches and opening new possibilities across the major phases of the industrial equipment lifecycle: design, manufacturing, maintenance, and recycling/retrofitting. Through a comprehensive review, we examine a wide range of AI techniques used to tackle challenges such as optimizing process control and machining parameters, supporting decision-making, and improving maintenance strategies within these phases. As reported in the 2022 McKinsey Global Survey (https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2022-and-a-half-decade-in-review), AI adoption has more than doubled since 2017, which has contributed to an increase in AI research over the last six years. From a systematic search of relevant electronic databases, and following specific inclusion and exclusion criteria, we selected and synthesized 42 articles published between 1 January 2017 and 20 May 2023 to review the most recent research and highlight the latest trends and the AI techniques most popular among researchers. These include Convolutional Neural Networks (CNN), Generative Adversarial Networks (GAN), Bayesian Networks, and Support Vector Machines (SVM), among others, which are discussed extensively in this paper.
Additionally, we provide insights into the advantages (e.g., enhanced decision-making) and challenges (e.g., integrating AI with legacy systems, given their technical complexity and compatibility issues) of integrating AI across the major stages of industrial equipment operations. Strategically implementing AI techniques in each phase enables industries to achieve higher productivity, improved product quality, cost-effectiveness, and sustainability. By exploring the potential of AI in smart manufacturing, this review aims to support agile and resilient processes that keep industries at the forefront of technological advancement and make full use of AI-driven solutions to improve manufacturing processes and products.

1 Introduction

The present era of manufacturing is characterized by intense market competition, intricate and dynamic production processes, uncertain conditions, and a volatile market. To thrive in this globally competitive landscape, manufacturing companies must offer customized products while reducing costs, shortening time-to-market, maintaining product quality, and ensuring customer satisfaction [1, 2]. To achieve these objectives, many countries are actively promoting advanced technologies through national manufacturing strategies and initiatives, such as China's Made in China 2025, Germany's Industry 4.0, the UK's Industry 2050, the USA's Industrial Internet, South Korea's Manufacturing Innovation 3.0, and Japan's Society 5.0 [3]. At the heart of these strategies lies the concept of intelligent manufacturing, which emphasizes the seamless integration of artificial intelligence (AI) and advanced manufacturing techniques [4].

In an era of smart manufacturing strategies and advanced sensor technologies, manufacturing operations generate an immense amount of data, around 1000 exabytes per year. These data contain valuable information about many aspects of the manufacturing process, from critical events and alerts to production line activities [5]. However, many manufacturing companies do not yet fully exploit this resource, which holds untapped potential for insights into machinery, processes, and systems. Applying advanced data processing and analysis techniques to it can substantially improve how manufacturing methods are understood and optimized.

The advent of Industry 4.0 introduces a transformative era powered by groundbreaking technologies such as Blockchain (BC), Big Data Analysis (BDA), Artificial Intelligence (AI), Digital Twin (DT), Digital Twin Triplet (DT3), Internet of Things (IoT), Additive Manufacturing (AM), and Cyber-Physical Systems (CPS) [6,7,8,9].

Throughout the lifecycle of a product or piece of industrial equipment, which spans design, manufacturing, maintenance, and recycling, reuse, and retrofitting, a diverse array of data is generated that together captures the entire lifecycle's footprint. In the context of Industry 4.0, Product Lifecycle Management (PLM) has gained prominence as the practice of managing a product from its inception through maturity to its retirement [10]. Within Industry 4.0, technologies such as AI, IoT, Big Data (BD), and cloud computing are transforming advanced manufacturing; their convergence yields new insights, higher efficiency, and a redefinition of how products are made and managed throughout their lives [11]. The main contribution of this comprehensive literature review is to provide insights into the extensive applications of AI techniques throughout the lifecycle of industrial equipment.

Figure 1 visually depicts the paper's organizational framework. The paper's structure is as follows: Sect. 2 outlines the research methodology, including the literature review protocol and search query strings. Section 3 provides concise insights into modern production and the product lifecycle. Section 4 offers a brief overview of AI. Section 5 delves into popular AI techniques and their applications across industrial equipment lifecycles. Section 6 lists the benefits and challenges of integrating AI in industrial equipment lifecycles. Finally, Sect. 7 presents the research conclusion.

It is important to clarify our understanding of generic products and industrial equipment/components before commencing the literature review. Industrial equipment is a specific kind of generic product, customized for industrial applications. A generic product encompasses a wide range of items serving a function without industry-specific tailoring. These products are versatile and commonly mass-produced for various uses, like chairs providing seating in homes, offices, and restaurants. In contrast, industrial equipment includes machinery, tools, and systems created for industrial settings like factories and construction sites. Industrial equipment can be used standalone as a self-contained component or as part of other devices or machinery for use in industrial settings. These items are optimized for efficiency, durability, and performance in demanding environments. While both serve a function, industrial equipment is specialized for industrial needs, forming a focused subset of generic products designed for industry requirements.

The commonly used terms in this paper, such as AI (artificial intelligence), AM (additive manufacturing), PdM (predictive maintenance), etc. are abbreviated in Table 1, which furnishes a complete list of abbreviations and their meanings.

2 Research methodology

The objective of this literature review is to provide a thorough examination of the utilization of AI techniques throughout the various phases of the product/industrial equipment lifecycle. The review aims to identify the predominant AI techniques employed to address production challenges at each phase and determine their popularity. Additionally, it aims to explore how the application of AI at different lifecycle stages enhances collaboration along the manufacturing chain and the overall product lifecycle. Each publication included in this review has been meticulously analyzed and compiled to offer a comprehensive overview of the current state of the art and its potential for future advancements.

2.1 Review protocol

A protocol was established to guide the article selection process for this review. It covers the identification of appropriate literature sources, the formulation of search queries, and the definition of inclusion and exclusion criteria for the chosen publications. The details of this protocol are outlined in the following subsections.

2.2 Selection of search sources

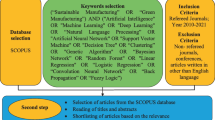

Various databases and search engines, such as Scopus, Web of Science, and Google Scholar, offer extensive collections of publications for researchers to explore. For this review, the Web of Science and Scopus databases were chosen based on several factors. Firstly, these databases enjoy popularity within the scientific community. Secondly, researchers and students have free access to Scopus and Web of Science through institutional agreements. Lastly, both databases provide comprehensive and reliable search results that can be utilized consistently.

2.3 Search query

The selection of appropriate query strings is a crucial aspect of this review and plays a key role in achieving its objectives. It is essential to use relevant and popular keywords that resonate with the research community to retrieve high-quality literature from scientific databases. This subsection analyzes the keywords employed in this review. Instead of using a single complex query string to capture articles related to all phases of industrial equipment, separate query strings were formulated for the design, manufacturing, maintenance, and reuse-recycle-retrofit phases. Table 2 presents the query strings used for each phase to find publications in the selected literature databases.

Each query string comprises two parts, each containing specific keywords. The first part focuses on newer and trending keywords in the literature that are relevant to the core subject of this paper, such as "machine learning," "deep learning," "industrial intelligence," "artificial intelligence," and "big data," amongst others. The second part consists of keywords that are specific to each phase of the product life cycle. For example, for the design phase, keywords like "generative design," "design optimization," "computer aided design," and "sustainable product design" are used.
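The two-part structure described above can be sketched as a small Python helper. This is a minimal illustration, assuming that the keywords within each part are joined with OR and the two parts are combined with AND; the keyword lists contain only the examples named in the text (the full strings are in Table 2), and database-specific field codes such as Scopus's TITLE-ABS-KEY are deliberately left out.

```python
def or_group(keywords):
    """Quote each keyword phrase and join the phrases with OR inside parentheses."""
    return "(" + " OR ".join(f'"{kw}"' for kw in keywords) + ")"


def build_query(ai_keywords, phase_keywords):
    """Combine the AI-related part and the phase-specific part with AND."""
    return f"{or_group(ai_keywords)} AND {or_group(phase_keywords)}"


# Example keywords named in the text; the complete strings are listed in Table 2.
AI_KEYWORDS = [
    "machine learning", "deep learning", "industrial intelligence",
    "artificial intelligence", "big data",
]
DESIGN_KEYWORDS = [
    "generative design", "design optimization",
    "computer aided design", "sustainable product design",
]

if __name__ == "__main__":
    print(build_query(AI_KEYWORDS, DESIGN_KEYWORDS))
```

The same AI group would be paired with a different second list for the manufacturing, maintenance, and reuse-recycle-retrofit phases, which keeps the first part identical across phases and makes the per-phase results comparable.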

It is important to note that terms like "Data Analytics", "Data Mining", or "Stochastic Learning" are sometimes used interchangeably with "Machine Learning" and "Deep Learning", as they all relate to extracting knowledge from data in the field of data science. However, using these terms in the query string could introduce a bias and shift the focus of this study. Moreover, keywords naming specific Machine Learning (ML) or Deep Learning (DL) techniques, such as "convolutional neural network (CNN)", "generative adversarial networks (GAN)", "Random Forest (RF)", or "k-means", are not included as search keywords, to avoid artificially boosting the results for these techniques when addressing the second research question regarding the most used AI techniques throughout the product lifecycle.

To evaluate the selected keywords for the query strings, a brief bibliometric analysis was conducted using VOSviewer (Footnote 1), a widely used software tool developed by researchers at the Centre for Science and Technology Studies at Leiden University for the bibliometric analysis and visualization of scientific literature. VOSviewer enables users to analyze and visualize patterns, networks, and relationships among publications based on bibliographic data such as keywords, authors, journals, and citations, providing insights into research trends, collaborations, and the structure of scientific knowledge domains. The purpose of the bibliometric analysis was to gain a preliminary understanding of how different keywords and keyword combinations affected the query results across all phases of the product lifecycle. The queries were executed on the selected literature databases on 20 May 2023.

After conducting the database searches for each phase of the product life cycle, the publications obtained were subjected to bibliometric analysis. The analysis focused on the keywords specified by the authors in these papers. The VOSviewer network visualization tool was used to present the analysis results. In the network, nodes represented by circles or rectangles correspond to keywords or items, with the size of each node indicating its importance based on the frequency of occurrence. The connections between nodes represent their co-occurrence, and the spatial distance between keywords reflects their relationship. For better visibility, a filter was applied to display a maximum of 45 items per graph. The "overlay visualization" feature was utilized to show the average publication year for each keyword through a color scale. The queried keywords within the first parenthesis were highlighted with a red rectangle for easier identification. The resulting networks are presented in Fig. 2.
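The counting behind such a co-occurrence map can be approximated as follows. This is a simplified sketch, not VOSviewer's implementation: it assumes each record is a (year, author keywords) pair, counts how many papers mention each keyword and each keyword pair, computes the average publication year used by an overlay visualization, and keeps only the most frequent items (45 by default, matching the filter described above). VOSviewer additionally normalizes link strengths and computes the layout and clusters, which are not reproduced here.

```python
from collections import Counter
from itertools import combinations


def keyword_network(records, max_items=45):
    """Build keyword occurrence and co-occurrence counts.

    records: iterable of (publication_year, [author keywords]) pairs.
    Returns (nodes, edges):
      nodes: keyword -> (number of papers, average publication year)
      edges: sorted (keyword_a, keyword_b) pair -> number of papers listing both
    Only the `max_items` most frequent keywords are kept.
    """
    # Normalize case so "Deep learning" and "deep learning" are one item.
    records = [(year, {k.strip().lower() for k in kws}) for year, kws in records]

    occurrences = Counter()
    year_sum = Counter()
    for year, keywords in records:
        for kw in keywords:  # a set, so each keyword counts once per paper
            occurrences[kw] += 1
            year_sum[kw] += year

    kept = {kw for kw, _ in occurrences.most_common(max_items)}
    nodes = {kw: (occurrences[kw], year_sum[kw] / occurrences[kw]) for kw in kept}

    edges = Counter()
    for _, keywords in records:
        for pair in combinations(sorted(keywords & kept), 2):
            edges[pair] += 1
    return nodes, edges


if __name__ == "__main__":
    # Three hypothetical records, for illustration only.
    sample = [
        (2019, ["Deep learning", "Predictive maintenance"]),
        (2021, ["deep learning", "Digital twin"]),
        (2022, ["Predictive maintenance", "Deep learning", "Digital twin"]),
    ]
    nodes, edges = keyword_network(sample)
    for kw, (count, avg_year) in sorted(nodes.items()):
        print(f"{kw}: {count} papers, average year {avg_year:.1f}")
    for (a, b), weight in sorted(edges.items()):
        print(f"{a} -- {b}: {weight}")
```

Node size in the map corresponds to the occurrence count, link thickness to the pair count, and node color in the overlay view to the average year.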

2.4 Literature collection

Querying the scientific databases with the chosen keywords returned a large number of results covering the period from 1961 to 20 May 2023. Fig. 3 shows the distribution of publications by year for each phase of the product lifecycle; the bars highlighted in green indicate the most recent publications included in this study (from 1 January 2017 to 20 May 2023). The acceptance and rejection criteria are explained in Sect. 2.5.

2.5 Acceptance and rejection rules

This study investigates the application of AI techniques, including machine learning, deep learning, reinforcement learning, and natural language processing (NLP), to optimize industrial processes across the stages of a product's lifecycle in the context of smart manufacturing. The review focuses on recent English-language publications from 1 January 2017 to 20 May 2023. To ensure that the most relevant literature was selected for evaluation, specific acceptance and rejection criteria were established; they are summarized in Table 3 and further explained in the next paragraph.
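The two criteria stated explicitly here, the publication window and the English-language requirement, can be applied mechanically, as in the sketch below. The record fields (`title`, `published`, `language`) are hypothetical, and the remaining topical criteria in Table 3 require reading each paper, so they are not modeled.

```python
from collections import Counter
from datetime import date

# Publication window stated in the review protocol (inclusive on both ends).
WINDOW_START = date(2017, 1, 1)
WINDOW_END = date(2023, 5, 20)


def screen(records, language="English"):
    """Keep records inside the publication window and in the required language.

    Each record is a dict with the hypothetical keys 'title', 'published'
    (a datetime.date) and 'language'. Returns (included, per_year_counts).
    """
    included = [
        r for r in records
        if WINDOW_START <= r["published"] <= WINDOW_END and r["language"] == language
    ]
    per_year = Counter(r["published"].year for r in included)
    return included, per_year


if __name__ == "__main__":
    hits = [
        {"title": "A", "published": date(2016, 12, 31), "language": "English"},
        {"title": "B", "published": date(2017, 1, 1), "language": "English"},
        {"title": "C", "published": date(2023, 5, 20), "language": "German"},
        {"title": "D", "published": date(2023, 5, 20), "language": "English"},
    ]
    kept, per_year = screen(hits)
    print([r["title"] for r in kept], dict(per_year))
```

The per-year counts returned alongside the included records correspond to the kind of year-by-year distribution plotted for each lifecycle phase.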

2.6 Literature collection summary

The literature search process is summarized in Fig. 4, outlining the comprehensive approach followed to identify relevant publications. After an extensive search, articles that met the predetermined acceptance criteria were selected. A total of 43 articles were deemed suitable for further analysis.

3 Modern production and product lifecycle

The integration of artificial intelligence into the manufacturing industry has become increasingly important for optimizing complex production processes. In pursuit of greater efficiency and sustainability, research efforts have focused on leveraging Industry 4.0 technologies such as AI, IoT, big data, and cloud computing, among others, to enhance the resilience and sustainability of production systems [12]. Smart factories, a prime example of this integration, use context-aware applications and self-regulating mechanisms to optimize production processes [13].

The growing importance of innovation and digitalization in products, services, and processes has underscored the need to adopt advanced manufacturing technologies such as AI and ML. These algorithms have emerged as crucial tools for addressing complex problems and handling the vast amounts of data inherent in supply chain networks. Grounded in computer science and engineering, AI and ML offer numerous advantages across industrial sectors, including enhanced innovation, process optimization, better resource utilization, and improved quality [14]. These benefits extend across the product lifecycle, enabling optimization of processes, resource utilization, and product and process quality at every stage, from design and manufacturing to removal and disposal. They also reach all levels of supply chain stakeholders, facilitating collaboration and efficiency throughout the supply chain.

A generic product’s lifespan does not begin at the factory where it is made and end at the store where it is sold. Instead, a product’s life progresses from its conception as an idea through design, testing, manufacture, and usage to its eventual retirement and disposal [15]. The product lifecycle encompasses the phases a product passes through from its creation to its ultimate disposal. Generally, it can be divided into three primary phases: the beginning of life (BOL), the middle of life (MOL), and the end of life (EOL), as presented in Fig. 5. During the BOL phase, the product concept is developed, designed, and physically realized through production. The MOL phase covers the distribution, use, and maintenance of the product by distributors and customers. Finally, in the EOL phase, manufacturers recycle or dispose of products or components that are no longer repairable or reusable [15, 16].

The phases of the lifecycle are connected in a loop (Fig. 5) to show the interconnections and relationships between them. For instance, the EOL and BOL periods are connected because products are remanufactured, reused, retrofitted, or recycled. Depending on the condition of the product or its components, however, it may instead be disposed of in landfill.

From the manufacturer's perspective, a product lifecycle encompasses the entire process starting from the conceptual design stage to the acquisition of raw materials, production, distribution, utilization, after-sale service, and ultimately, recycling and disposal. This holistic view of the product lifecycle takes into account every step involved in the journey of a product, ensuring a comprehensive understanding of its progression from creation to its eventual end [15].

Every stage of the product lifecycle entails distinct activities, involves specific personnel and departments, and generates substantial amounts of data. Understanding the objectives and requirements of each stage is crucial for all supply chain partners to manage the product effectively and achieve their respective goals. By understanding the characteristics and demands of each stage, stakeholders can collaborate to ensure efficient product management throughout the lifecycle [13]. Figure 5, adapted from [17] and modified by the authors, illustrates the stages of a product's lifecycle: from initial conceptualization or idea generation, through design and manufacturing, to the supply chain, where various actors and stakeholders contribute to distribution and usage. At the end of its lifecycle, the product is either reclaimed by recycling companies or disassemblers, or discarded in landfill as waste.

As illustrated in Fig. 6, a substantial amount of high-dimensional, heterogeneous data is generated throughout a product's lifecycle. These data may be numerical, originating from sensors, cameras, or other vision devices, or textual, arising from customer service records, market analysis reports, and end-user reviews. For instance, the BOL period encompasses the design and manufacturing stages. The design phase takes as input data such as customer demands, product functions, and product quality requirements derived from market analysis, and produces product design specifications as output. These include computer-aided design (CAD) files, program code, and configuration parameters such as tolerance and location parameters.

Similarly, the production stage covers procurement, production planning, maintenance, and logistics. Here, real-time sensor data are collected from plant processes alongside historical data from databases on machinery and its components. Information on component or machine failure frequency and failure rate, supplier details, and outsourcing companies is also captured. The production stage in turn provides key outputs such as production schedules and specifications, production plans, instructions for operators and assemblers, and inventory plans and status updates. As a product evolves through these stages, it generates a wealth of numerical and textual data, all of which can be used to optimize and streamline the product lifecycle.

During the MOL period, a product goes through the maintenance, logistics, and utilization stages. At this point the product has reached its final form, and logistics, onsite maintenance, and services become increasingly significant and require careful attention. The MOL phase involves business activities such as maintenance, repair, and operational work carried out by the product's various end users. After production, products are delivered to distributors and points of sale according to customer and market demand, and users receive delivery services after purchase. Logistics planning therefore requires efficient strategies, optimized using inventory, order, and location data, among others, to ensure accurate and timely delivery of products [16, 18, 19].

During the EOL period, the maintenance history, product status information, and working-environment data gathered in the MOL period are used to estimate the product's health and remaining useful life. The product's status is crucial for making informed decisions about the best EOL recovery option, such as recycling, reuse, remanufacturing, disassembly, or disposal; choosing the most suitable option maximizes the value recovered from EOL products. Advanced analytics techniques play a significant role in obtaining a well-optimized schedule that determines when, how, where, and what to recycle, ensuring efficient and effective EOL management [16,17,18].
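To make the decision step concrete, the following is a toy rule-based sketch. Everything in it is hypothetical: the `ProductStatus` fields, the threshold values, and the assumed order of preference (reuse, then remanufacture, then recycle, then dispose). In practice the health index and remaining useful life would be estimated from MOL data, and the reviewed studies may use optimization or learned models rather than fixed rules.

```python
from dataclasses import dataclass


@dataclass
class ProductStatus:
    """Hypothetical summary of a product's condition, estimated from MOL data."""
    health_index: float           # 0.0 = failed, 1.0 = as new
    remaining_useful_life: float  # estimated RUL, in years
    repairable: bool
    recyclable_material: bool


def choose_eol_option(status, min_reuse_health=0.8, min_reuse_rul=2.0,
                      min_reman_health=0.4):
    """Pick a recovery option with fixed, hypothetical threshold rules.

    Options are tried in an assumed order of decreasing retained value:
    reuse, remanufacture, recycle, and finally dispose.
    """
    if (status.health_index >= min_reuse_health
            and status.remaining_useful_life >= min_reuse_rul):
        return "reuse"
    if status.repairable and status.health_index >= min_reman_health:
        return "remanufacture"
    if status.recyclable_material:
        return "recycle"
    return "dispose"


if __name__ == "__main__":
    worn_pump = ProductStatus(health_index=0.5, remaining_useful_life=0.5,
                              repairable=True, recyclable_material=True)
    print(choose_eol_option(worn_pump))  # → remanufacture
```

Keeping the thresholds as parameters makes the point that the rules, not the code, encode the business decision; an analytics model would typically supply the status estimates and could also tune these thresholds.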

4 AI overview, methods, and techniques

In this section, we offer a concise overview of AI and highlight the subtle but significant difference between AI methods and AI techniques. The terms “AI technique” and “AI method” are often used interchangeably, which has led to confusion in some studies [19]. Distinguishing between them clarifies the diverse approaches employed in AI and gives a more accurate picture of the methodologies used throughout a product's life cycle. For a broader treatment of AI, we recommend the review by Mukhamediev et al. [20], which provides insights into AI technologies, their adoption in industry and society, and the advantages, challenges, and concerns surrounding their implementation.

4.1 An introductory overview of AI

AI is a transformative field of computer science that seeks to replicate human-like intelligence in machines. It encompasses a vast array of techniques and algorithms that enable computers to perform tasks that typically require human intelligence, such as learning, reasoning, problem-solving, and decision-making. AI has evolved rapidly, driven by advances in computing power, the availability of large datasets, and continuous algorithmic innovation [21,22,23].

A fundamental distinction within AI is the division between weak AI and strong AI. Weak AI, often referred to as narrow AI, refers to AI systems designed for specific tasks and applications. These systems excel in their designated domains and can outperform humans in their specialized functions. Examples of weak AI include virtual assistants such as Siri (Footnote 2) and Alexa (Footnote 3), language translation tools, recommendation systems, and facial recognition software. Despite their impressive performance in their respective areas, these systems lack the broader capabilities associated with human general intelligence. Conversely, strong AI, also known as Artificial General Intelligence (AGI), represents a higher aspiration in AI research: AI systems with general intelligence comparable to that of humans, able to understand, learn, and adapt across diverse domains. Strong AI remains an ambitious goal that has yet to be realized. Developing AGI requires an in-depth understanding of human cognition and consciousness, and the ability to handle complex, unstructured tasks [24, 25].

AI is an interdisciplinary field that draws upon various scientific areas and domains such as computer science, deep learning, machine learning, evolutionary biology (evolutionary algorithms), expert systems, natural language processing, computer vision, robotics, and planning. As AI continues to advance, its impact spans across numerous industries, including healthcare, finance, manufacturing, transportation, and entertainment. It has led to remarkable applications such as autonomous vehicles, medical image analysis, financial fraud detection, and natural language processing. The potential of AI is vast, and it has the capability to revolutionize various aspects of society and improve human lives significantly. However, with its widespread adoption, AI also raises critical questions about ethics, bias, data privacy, and the responsible deployment of AI technologies. Addressing these challenges will be crucial in harnessing the full potential of AI for the betterment of humanity.

4.2 AI methods and techniques

The terms “AI technique” and “AI method” are often used interchangeably, but there is a subtle difference between them, which the following subsections clarify.

4.2.1 AI methods

AI methods represent the fundamental approaches and paradigms that guide the development of intelligent systems. These overarching methodologies serve as blueprints for problem-solving and knowledge acquisition. Some prominent AI methods include:

-

1.

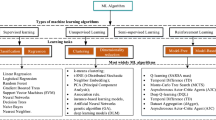

Machine learning (ML): ML is a core method in AI that empowers machines to learn from data without being explicitly programmed. It involves creating algorithms and models that can automatically identify patterns and relationships within the data and make predictions or decisions based on those patterns. ML can be broadly categorized into three main branches: Supervised Learning (SL), Unsupervised Learning (UL), and Reinforcement Learning (RL).

-

2.

Deep learning (DL): DL is a specialized subset of machine learning that utilizes artificial neural networks, inspired by the structure and functioning of the human brain. These deep neural networks consist of multiple layers of interconnected nodes (neurons) that process data hierarchically. DL has shown remarkable success in tasks such as image recognition, natural language processing, and speech synthesis. Its ability to process vast amounts of unstructured data, like images and texts, makes it particularly effective in complex and high-dimensional problem domains.

-

3.

Natural language processing (NLP): NLP is an AI method that enables machines to understand, interpret, and generate human language. NLP algorithms analyze and process text and speech data, transforming it into a format that machines can work with. This technology has revolutionized applications like language translation, sentiment analysis, chatbots, and voice assistants. By bridging the gap between human language and machine understanding, NLP has facilitated more natural and intuitive human–machine well-suited.

-

4.

Expert systems: Expert systems are AI programs designed to mimic the decision-making capabilities of human experts in specific domains. These systems rely on a rule-based system and knowledge representation to provide recommendations and solutions based on their domain-specific expertise. Expert systems excel in areas where human expertise is crucial, such as medical diagnosis, financial analysis, and troubleshooting complex technical issues.

-

5.

Evolutionary algorithms: Inspired by the principles of Darwinian evolution, Evolutionary Algorithms optimize solutions through an iterative process of candidate solution evolution. By using selection, crossover, and mutation operators, evolution algorithms explore and refine potential solutions to complex optimization problems. These algorithms have proven useful in various applications, including optimization, engineering design, and financial modeling.

-

6.

Reinforcement learning (RL): Reinforcement Learning introduces the concept of agents interacting with an environment and learning through trial and error. Agents take actions in the environment and receive feedback in the form of rewards or penalties, guiding their learning process. RL is well-suited for tasks where there is no readily available labeled data, and the agent must learn from its actions to achieve long-term goals. It has been successfully applied in areas such as game-playing, robotics, and autonomous systems.

-

7.

Probabilistic graphical models: Combining probability theory and graph theory, probabilistic graphical models offer a structured framework for reasoning under uncertainty and analyzing complex dependencies.

-

8.

Transfer learning: By leveraging knowledge from one task to improve performance on related tasks, transfer learning addresses data scarcity and facilitates efficient learning in diverse domains.

-

9.

Adversarial learning: Adversarial learning encompasses both defending AI models against adversarial attacks and crafting adversarial examples to evaluate model robustness.

-

10.

Swarm intelligence: Inspired by collective behavior in nature, swarm intelligence algorithms enable decentralized, self-organizing systems to collaboratively solve problems.
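The selection, crossover, and mutation loop described under evolutionary algorithms can be illustrated with a small genetic algorithm (a technique within this method, introduced in the next subsection). This is a minimal sketch under stated assumptions: the objective is the toy OneMax problem (maximize the number of 1s in a bitstring), and tournament selection, one-point crossover, bit-flip mutation, and single-individual elitism are common textbook choices rather than the only ones. A real application would replace `one_max` with a problem-specific fitness function, for example a model of machining cost.

```python
import random


def one_max(bits):
    """Toy fitness: number of 1s in the bitstring (maximum = len(bits))."""
    return sum(bits)


def tournament(population, fitness, rng, k=3):
    """Selection: return the fittest of k randomly drawn individuals."""
    return max(rng.sample(population, k), key=fitness)


def crossover(a, b, rng):
    """One-point crossover producing a single child."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(bits, rng, rate):
    """Flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]


def genetic_algorithm(n_bits=20, pop_size=30, generations=60, seed=0):
    rng = random.Random(seed)  # seeded for reproducible runs
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        best = max(population, key=one_max)
        history.append(one_max(best))
        # Elitism: carry the best individual over unchanged, so the best
        # fitness never decreases from one generation to the next.
        next_gen = [best]
        while len(next_gen) < pop_size:
            parent_a = tournament(population, one_max, rng)
            parent_b = tournament(population, one_max, rng)
            child = crossover(parent_a, parent_b, rng)
            next_gen.append(mutate(child, rng, rate=1.0 / n_bits))
        population = next_gen
    best = max(population, key=one_max)
    return best, history


if __name__ == "__main__":
    best, history = genetic_algorithm()
    print("best fitness per generation:", history)
    print("final best:", one_max(best), "of", len(best))
```

The mutation rate of one expected flip per individual (1 / n_bits) is a conventional default; raising it increases exploration at the cost of disrupting good solutions more often.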

4.2.2 AI techniques

Complementing these overarching AI methods, various AI techniques serve as specialized tools and algorithms employed within the broader methodologies. These techniques play pivotal roles in addressing specific challenges and achieving superior performance in focused tasks. Some key AI techniques include:

1. Convolutional neural networks (CNN): A CNN is a deep learning technique designed primarily for image and video analysis. Its convolutional layers automatically extract meaningful patterns and features from images, enabling accurate object detection, image recognition, and image segmentation.

2. Generative adversarial networks (GAN): A GAN is a deep learning technique consisting of two neural networks, a generator and a discriminator, that are trained adversarially. The generator produces synthetic data, while the discriminator tries to distinguish real data from synthetic data. This competition drives the generator toward realistic synthetic data, benefiting applications such as image synthesis and data augmentation.

3. Language modeling: Language modeling is an NLP technique that estimates the probability of a sequence of words. It plays a crucial role in language understanding and generation, helping machines produce coherent and contextually relevant text in tasks such as machine translation and text generation.

4. Rule-based systems: Rule-based systems are techniques within Expert Systems that rely on a set of if–then rules to make decisions. These rules encode human expertise and domain knowledge, allowing machines to provide recommendations and solutions in specific domains such as medical diagnosis and fault troubleshooting.

5. Genetic algorithms: Genetic algorithms are techniques under Evolutionary Algorithms that draw inspiration from biological evolution. By iteratively evolving and refining a population of candidate solutions through selection, crossover, and mutation, they search for good solutions to complex optimization problems in engineering and finance.

6. Q-learning: Q-learning is a Reinforcement Learning technique for training agents to make decisions in dynamic environments. The agent learns by trial and error, using the rewards it receives to update Q-values (estimates of the expected long-term reward of taking an action in a given state) and choosing the actions that maximize long-term reward. This makes it suitable for tasks such as game-playing and autonomous navigation.

7. First-order logic: First-order logic is a technique under Knowledge Representation and Reasoning that formalizes logical relationships between entities and facts. It provides a logical framework for representing knowledge and reasoning, which is essential for applications requiring complex logical inference and decision-making.

8. Bayesian networks: A Bayesian network is a technique under Probabilistic Graphical Models that represents variables as nodes of a directed acyclic graph and encodes their probabilistic dependencies as conditional probability distributions, supporting inference under uncertainty.

9. Pretrained models: Pretrained models are techniques under Transfer Learning that reuse models trained on large datasets to initialize, and then fine-tune, models for specific tasks. This saves time and computational resources and often improves performance on the target task, which is especially beneficial when training data are limited.

10. Ant colony optimization: A technique under Swarm Intelligence that solves optimization problems by mimicking how foraging ants deposit and follow pheromone trails to find good paths.

11. Support vector machines (SVM): Support vector machines are powerful supervised learning techniques for classification and regression. An SVM finds the hyperplane that separates the classes with the maximum margin; through kernel functions it can implicitly map data into a high-dimensional feature space, which allows nonlinear decision boundaries. SVMs have been widely applied in domains including image recognition, text classification, and bioinformatics.

12. Random forest (RF): Random forest is an ensemble learning technique that trains many decision trees, each on a bootstrap sample of the data with random subsets of features, and combines their outputs (by majority vote or averaging) into a more robust and accurate prediction. It handles high-dimensional data well and mitigates the overfitting of individual trees. It is commonly used for classification, regression, and feature selection.
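As an illustration of the Q-learning update described in item 6, the sketch below trains a tabular agent on a hypothetical five-state corridor in which the only reward is reaching the rightmost state. The environment, learning rate, discount factor, and exploration rate are all illustrative assumptions.

```python
import random

N_STATES = 5        # states 0..4; state 4 is the terminal goal (hypothetical toy task)
ACTIONS = (0, 1)    # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic corridor: reward 1.0 only when the goal state is reached."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy selection with random tie-breaking.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action)
            # Q-learning target bootstraps from the best action in the next state.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q = q_learning()
policy = [0 if q[s][0] > q[s][1] else 1 for s in range(N_STATES - 1)]
print(policy)  # greedy action for each non-terminal state
```

The reward is only observed at the goal, yet the discounted bootstrapped target propagates its value backwards, so the learned greedy policy moves right from every state.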

In summary, an AI technique refers to a specific algorithm or approach used to address a particular AI problem, while an AI method represents a more comprehensive set of techniques and strategies used to solve broader classes of AI challenges. Classifying AI techniques into specific AI methods is not always straightforward, because many real-world applications, such as vision, speech, planning, and robotics, combine multiple AI methods and techniques to achieve the desired outcomes; the distinction between methods and techniques can therefore be blurred, and authors may use different terminology in the literature. The selection of AI methods depends on the nature of the problem, the availability of labeled data, and the complexity of the tasks involved. Nonetheless, understanding the methods and techniques available in AI is crucial for a comprehensive review of the applications of AI techniques throughout the lifecycle of industrial equipment.

5 Application of AI techniques through lifecycle of industrial equipment

Creating effective solutions for establishing collaborative networks across different phases of a product’s lifecycle presents a significant challenge. These solutions need to assess, analyze, and make informed decisions regarding how the product design impacts every stage of the lifecycle. This requires engineers involved in various phases to have a comprehensive understanding of the entire process and relevant information or data. As a result, managing substantial amounts of reliable information becomes crucial, necessitating the utilization of diverse technological solutions. By integrating artificial intelligence tools in a well-planned manner, synergies can be achieved across all factory functions, resulting in improved productivity, quality, cost-effectiveness, sustainability, and more. To fully realize these benefits, it is important to carefully select suitable artificial intelligence techniques and technologies for each specific stage of the product lifecycle.

To classify the methods and objectives of AI techniques at different stages of the product lifecycle, this paper divides the lifecycle into four phases: the product design phase, product manufacturing phase, product maintenance phase, and recycle/re-use/retrofit phase. Collaboration between these phases is crucial for exchanging information among smart production units, smart logistics, smart products, smart organizational and engineering units, and individuals, so as to achieve agile and resilient processes [10, 19, 26].

5.1 AI at design phase

The human design process plays a crucial role in creating technologies and environments that affect many aspects of our lives, including food, household products, and machinery. Product design engineering involves iterative processes and decision-making, starting with requirement identification and concluding with a detailed product description. Conventional product and engineering design processes have traditionally been human-centric, relying on the expert knowledge of individuals applying scientific, intuitive, experiential, and creative methods [19]. With the adoption of AI at different stages of the product and engineering design process, these conventional approaches are changing dramatically. AI-supported design techniques streamline complex design operations, enabling designers to concentrate on innovative and creative aspects while AI handles repetitive tasks such as design comparison, evaluation, and parameter estimation. This division of labor reduces design time, delivers accurate results, and lowers overall design costs. Moreover, AI's high computational power, big data processing capabilities, and objective decision-making make it better suited than humans to executing these tasks [26,27,28].

The design process can be divided into three major phases: product design specification, conceptual design, and detailed design (design synthesis and optimization) [29]. Conceptual design is a critical stage in which decisions can greatly affect the complexity of later operations. It involves analyzing customer and design requirements, identifying the primary function, and seeking principles for solving the fundamental design problem; evaluating and selecting a feasible concept is also crucial [30, 31]. Embodiment design, often treated as an intermediate step between conceptual and detailed design, focuses on clarifying, confirming, or optimizing the details of the primary design functions, considering aspects such as form, material, manufacturing process, assembly, and cost [32, 33]. Detailed design determines the specifications, overall cost, and key factors in detail, with the aim of finalizing a manufacturable solution for production [33, 34].

The design engineering spectrum encompasses a wide variety of applications, ranging from machines and components such as electric motors, lock nuts, thrust washers, and spur gears, to aircraft models and ship propellers, to small-scale metamaterials [35]. The following subsections review the AI techniques used at different phases of product design.

5.1.1 Unleashing creativity: AI's role in design inspiration and concept generation

Idea generation is a crucial stage in product design. However, designers often struggle to generate innovative ideas because of psychological inertia, commonly known as design fixation [36]. This cognitive barrier hampers creative thinking and obstructs the exploration of new design concepts during the conceptual design phase, making it harder to reach a design that satisfies user requirements, cost, visual appeal, user comfort, operational capability, manufacturability, and sustainability. Because the development of popular and novel products depends on it, the conceptual design phase is regarded as a critical part of the overall product design process. To overcome design fixation and foster creativity, thorough market research, customer acceptance, domain expertise, intuition, intellectual acuity, and creative skills must be integrated into the conceptual design process [31, 37, 38]. Furthermore, decisions made at this stage have a profound impact on a product's cost, performance, reliability, safety, and environmental impact, even though the design requirements and constraints at this early phase are often imprecise, approximate, and sometimes impractical [16]. Delays in this phase can lead to increased production costs and decreased market share [29].

During inspiration and concept generation, market analysis and customer feedback provide a wealth of insights that guide the creation of innovative, user-centric products. Patent databases, in turn, hold a large body of technical documents rich in cutting-edge technology and design information, covering technical functions, material compositions, working principles, and conceptual aspects that can fuel design and product development [39].

However, analyzing data obtained from market and patent databases is challenging because the data are inherently unstructured, may contain biases, and are susceptible to human error. Together, these factors complicate data analysis and demand careful attention and robust methodologies to derive meaningful insights [40]. AI has therefore emerged as a valuable tool for analyzing such data and for overcoming design fixation by providing verbal, written, and visual inspiration, stimulating innovative thinking, and facilitating the generation of fresh design ideas [38, 41].

During the concept generation stage, researchers have used evolutionary algorithms as well as ML and DL methods to explore the design space. In [42], a genetic algorithm (GA) was used to explore configurations of a powertrain system comprising an engine, transmission, and drive shaft. Searching a space of 3000 possible configurations, the algorithm outperformed human experts, indicating its potential for automated product design. In [43], an Artificial Neural Network (ANN) was used for product configuration and proved effective in determining optimal design configurations based on customer preferences; [43] also used an ANN to determine the ideal form of a perfume bottle by generating combinations of product forms. Similarly, in [44], researchers employed ANN and crowdsourcing to identify new design concepts, using datasets that included spoken language. This approach enabled the quick scanning of large datasets, such as surveys, competitions, and patents, to identify innovative ideas. In [44], a combination of ANN and GA was also used to formulate design concepts for aesthetic products: the ANN uses survey data to determine product form features, which the GA then uses to generate alternative design concepts for washing machines, coffee makers, and mixers with aesthetic appeal.
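The GA-based configuration search in [42] can be illustrated with a minimal sketch. The option counts per subsystem and the fitness function below are hypothetical stand-ins (a real study would score configurations by simulation or expert models); the code only shows the selection, crossover, mutation, and elitism loop.

```python
import random

# Hypothetical design space: number of catalogue options per subsystem.
OPTIONS = {"engine": 10, "transmission": 10, "drive_shaft": 30}
GENES = list(OPTIONS)


def fitness(design):
    """Hypothetical score (higher is better); stands in for a real evaluation."""
    target = {"engine": 7, "transmission": 3, "drive_shaft": 21}
    return -sum((design[g] - target[g]) ** 2 for g in GENES)


def random_design(rng):
    return {g: rng.randrange(n) for g, n in OPTIONS.items()}


def tournament(pop, rng, k=3):
    """Selection: the fittest of k randomly drawn designs."""
    return max(rng.sample(pop, k), key=fitness)


def crossover(a, b, rng):
    """Uniform crossover: each gene is inherited from either parent."""
    return {g: (a[g] if rng.random() < 0.5 else b[g]) for g in GENES}


def mutate(design, rng, rate=0.1):
    """Resample each gene with a small probability."""
    return {g: (rng.randrange(OPTIONS[g]) if rng.random() < rate else v)
            for g, v in design.items()}


def genetic_search(pop_size=30, generations=40, seed=1):
    rng = random.Random(seed)
    pop = [random_design(rng) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        elite = max(pop, key=fitness)  # elitism: the best design always survives
        history.append(fitness(elite))
        children = [mutate(crossover(tournament(pop, rng), tournament(pop, rng), rng), rng)
                    for _ in range(pop_size - 1)]
        pop = [elite] + children
    return max(pop, key=fitness), history


best, history = genetic_search()
print(best, fitness(best))
```

Because the elite is copied into each new generation, the best fitness never decreases; the GA is not guaranteed to find the global optimum, but it evaluates far fewer designs than exhaustive enumeration.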

Other machine learning and data mining techniques have been applied successfully to idea generation, particularly to extract both explicit and hidden ideas from textual and visual sources. Extensive datasets, including patent databases, academic journals, web pages, survey data, and speech data, can be systematically searched and analyzed to extract and categorize ideas, making the wealth of information in these sources available for innovative idea generation [45, 46]. For example, in [40], the authors combined supervised and unsupervised machine learning algorithms to analyze customer needs from a large dataset of customer reviews, complaints, and online surveys. The supervised fastText classifier was used to extract relevant data, while VADER (Valence Aware Dictionary and Sentiment Reasoner), a lexicon- and rule-based sentiment analyzer that requires no labeled training data, was used to identify and classify customer needs. In [47], a Decision Tree (DT) algorithm was employed to generate innovative product design ideas by identifying customer needs, with the aims of reducing cost, enhancing product quality, accelerating new product development, and improving competitiveness.
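A decision tree such as the one used in [47] is grown by repeatedly choosing the split that makes the child nodes as pure as possible. The minimal sketch below shows that core step with Gini impurity on a single numeric feature; the feature (say, a count of noise-related complaints) and the labels are hypothetical.

```python
def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(xs, ys):
    """Return (threshold, weighted Gini) of the best binary split x <= threshold."""
    best = (None, float("inf"))
    for t in sorted(set(xs))[:-1]:  # exclude the maximum so the right child is non-empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best[1]:
            best = (t, score)
    return best


# Hypothetical data: complaints mentioning noise vs. whether a "quieter product" need is flagged.
complaints = [1, 2, 3, 4, 5, 6]
need_flagged = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(complaints, need_flagged))  # (3, 0.0)
```

A full tree applies this search recursively to each child node (and over all features) until the nodes are pure or a depth limit is reached.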

Computer vision coupled with DL algorithms offers the potential to generate novel product options. DL techniques such as image recognition and analysis can interpret and analyze visual data in the form of photos and videos, opening opportunities to generate innovative product alternatives [19] through Generative Adversarial Networks (GANs) [48] and Variational Autoencoders (VAEs) [49]. In [50, 51], the authors employed GANs and Conditional Variational Autoencoders (cVAEs) to generate a wide range of design options for mechanical structures, such as airfoils and wheels, even with limited input design data. The researchers in [52] proposed Performance Augmented Diverse GANs (PaDGAN), a modified GAN designed for industrial design applications; its modified loss function aims to enhance performance and enable the generation of high-quality designs beyond the boundaries of the training data. In [53], a Convolutional Neural Network (CNN) and a Deep Convolutional Generative Adversarial Network (DCGAN) were combined to generate unique 3D shape concepts for mechanical components such as springs. The authors trained the DCGAN on 2D perspective views of 130 3D spring models to generate new 2D spring images, and then used the CNN to estimate the geometric and structural properties of the corresponding 3D models from these images, yielding distinct 3D spring models. Achieving such results with a relatively small dataset is notable, and further improvements could be made by adding the generated models to the training set.
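The adversarial training that GAN-based design generators share can be summarized by the two losses computed from the discriminator's outputs. The sketch below uses the standard binary cross-entropy discriminator loss and the common non-saturating generator loss; the input probabilities are hypothetical, and variants such as PaDGAN add further terms to this objective.

```python
import math


def discriminator_loss(d_real, d_fake):
    """BCE: push D(x) toward 1 for real designs and D(G(z)) toward 0 for generated ones."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# At the theoretical equilibrium the discriminator outputs 0.5 for everything.
print(discriminator_loss([0.5], [0.5]), generator_loss([0.5]))  # 2 ln 2 and ln 2
```

The generator's loss falls as its samples fool the discriminator, while the discriminator's loss falls as it separates the two distributions; alternating gradient steps on these two quantities is what "trained adversarially" means in practice.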

Researchers have also sought to integrate human input into AI-assisted design generation frameworks. In [54], electroencephalography (EEG) was used to record the brain's electrical activity while subjects were exposed to physical products or images, producing a dataset of the voltage fluctuations resulting from neuronal activity. A Long Short-Term Memory (LSTM) network, a type of Recurrent Neural Network (RNN), was then trained to correlate these signals with the desirability of specific design features. A GAN conditioned on these design features was subsequently trained, allowing product concept images to be generated solely from EEG signals. This approach is significant because it enables AI methods to learn and interpret design requirements that may be difficult to express verbally.

5.1.2 Revolutionizing design synthesis through artificial intelligence techniques

Design synthesis has always been a crucial aspect of engineering, as it involves creating innovative and efficient solutions to complex design problems with critical geometric considerations, such as automobile and aerospace design. In the past, design synthesis relied heavily on manual iteration and human expertise, which could be time-consuming and limited the exploration of the design space. The integration of AI techniques has transformed this process, particularly shape synthesis. One area of focus is 2D and 3D shape synthesis in the aerospace domain, which aims to generate optimal shapes that meet specific design requirements and constraints [19].

In aerospace engineering, researchers have devoted considerable attention to the design of airfoils, the cross-sectional shapes of wings, which is a 2D shape synthesis problem. Airfoils play a pivotal role in numerous engineering applications, from propeller and rotor design to turbine blade development. Because airfoil performance parameters are so important, much research focuses on the conditional generation of airfoils tailored to specific performance criteria [26]. In [55], researchers employed conditional GANs (CGAN) and Deep Convolutional Neural Networks (DCNN) to generate new airfoil shapes with a desired performance. The DCNN structures address the shape-parametrization challenges of traditional methods by detecting and exploiting patterns at a lower level of abstraction. The framework trains the generator and discriminator networks on a database of airfoil shapes together with conditional information; once trained, the CGAN generator can produce customized airfoil shapes for specified stall conditions or drag polar information. Some researchers have also employed deep generative models to learn shape parameters defined through spline interpolation, and the study in [56] proposed a Reinforcement Learning (RL) approach in which the agent learns optimal policies for setting the coefficients of the shape equation.
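To show what "shape parameters" look like for a curve-based airfoil representation, the sketch below evaluates a Bézier curve with de Casteljau's algorithm. The Bézier form and the control points are assumptions chosen for illustration; the specific spline parametrization used in the cited studies is not reproduced here. In such a setup, a generator or an RL agent would output the control-point coordinates.

```python
def de_casteljau(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] by repeated linear interpolation."""
    pts = [tuple(p) for p in control_points]
    while len(pts) > 1:
        pts = [((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
               for (x0, y0), (x1, y1) in zip(pts, pts[1:])]
    return pts[0]


# Hypothetical control points for the upper surface of an airfoil-like curve,
# running from the leading edge (0, 0) to the trailing edge (1, 0).
UPPER = [(0.0, 0.0), (0.0, 0.08), (0.4, 0.12), (1.0, 0.0)]

surface = [de_casteljau(UPPER, i / 10) for i in range(11)]
print(surface[5])  # point at t = 0.5
```

Moving a single control point reshapes the whole surface smoothly, which is why a handful of such coefficients can serve as a compact, learnable description of the shape.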

The generation of 3D objects through DL has attracted significant attention in computer science, with researchers actively exploring methods to generate realistic shapes and objects in three-dimensional space. Voxels, point clouds, and meshes are common representations of 3D shapes and objects. Advances in 3D shape synthesis have relied heavily on GANs and AEs, as well as on RNNs, Transformers, and graph neural networks (GNNs). In engineering design, most research on 3D shape synthesis emphasizes the inclusion of design performance considerations. In [57], researchers proposed a hybrid approach to data-driven 3D shape synthesis. It uses a VAE, an unsupervised DL technique, to extract a compact latent design representation from a corpus of 3D designs. This latent representation captures important design information and allows new designs to be generated by sampling, interpolation, and extrapolation in the latent space. A simple latent-space crossover technique then enables a genetic optimizer to produce a diverse set of new 3D aircraft designs through stochastic interpolation and extrapolation of latent vectors.
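A latent-space crossover of the kind described for [57] can be sketched as drawing a child on the line through two parent latent vectors. The sampling range for the mixing coefficient below is a hypothetical choice: values inside [0, 1] interpolate between the parents, and values outside it extrapolate beyond them. Decoding the child back to a 3D shape would require the trained VAE decoder, which is not shown.

```python
import random


def latent_crossover(z_a, z_b, rng, low=-0.25, high=1.25):
    """Child latent vector z_a + alpha * (z_b - z_a) with a random alpha.

    alpha in [0, 1] interpolates; alpha < 0 or alpha > 1 extrapolates.
    The [low, high] range is an illustrative assumption.
    """
    alpha = rng.uniform(low, high)
    child = [a + alpha * (b - a) for a, b in zip(z_a, z_b)]
    return child, alpha


rng = random.Random(42)
z_a, z_b = [0.0, 1.0, -0.5], [1.0, -1.0, 0.5]  # hypothetical latent codes of two parent designs
children = [latent_crossover(z_a, z_b, rng) for _ in range(4)]
```

Allowing mild extrapolation lets the optimizer reach designs slightly outside the convex hull of the training corpus while staying near regions the decoder has learned.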

In [58], the authors proposed an architecture consisting of AEs and a CGAN for conditionally generating 3D objects represented as point clouds. The AE maps 3D objects to a latent vector space, a CGAN is trained on this latent space, and the trained CGAN then creates new objects in the latent space. However, to make the architecture suitable for industrial applications, the precision of the dimension specifications addressed in that study needs to be improved. To improve the quality of generated data, the researchers in [59] employed an iterative retraining strategy in which a GAN is retrained on high-performance models identified through computational fluid dynamics (CFD) evaluation. The method is applied to point-cloud aircraft models sourced from ShapeNet, with aerodynamic drag minimization as the performance objective. The authors use a conventional GAN loss and adopt a discriminator architecture from [60]. To address sparse conditioning in data-driven inverse design tasks, the researchers in [61] proposed Range-GAN, a range-constrained deep generative model that uses a label-aware self-augmentation technique during GAN training for automatic design synthesis under range constraints. They also incorporate a "range loss" that enforces design constraints associated with parameter limits, and apply the method to the generation of 3D aircraft models.
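The idea behind a range loss can be illustrated with a simple hinge penalty that is zero while a predicted design parameter stays inside its requested interval and grows linearly outside it. This is a hypothetical sketch of the concept only, not the exact formulation used in Range-GAN [61].

```python
def range_penalty(values, low, high):
    """Mean hinge penalty: zero inside [low, high], linear in the violation outside it."""
    return sum(max(0.0, low - v) + max(0.0, v - high) for v in values) / len(values)


# Hypothetical predicted parameter values for a batch of generated designs, target range [0, 1].
print(range_penalty([0.2, 0.7], 0.0, 1.0))   # all inside the range: no penalty
print(range_penalty([1.5, -0.5], 0.0, 1.0))  # both violate the range by 0.5
```

Added to a generator's adversarial loss, such a term steers samples toward the requested parameter range while leaving designs already inside the range unaffected.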

5.1.3 Advancing Topology optimization through artificial intelligence techniques

In the field of engineering design, topology optimization plays a crucial role in determining the ideal product configuration, improving its features, maximizing functionality, minimizing costs, and achieving lightweight components, amongst other objectives. The goal is to generate or identify the most favorable design while considering a multitude of constraints. By utilizing design optimization techniques, companies can achieve significant improvements in product performance, efficiency, and overall competitiveness. Over the years, various techniques have been developed to tackle topology optimization problems, ranging from classical mathematical programming approaches to more recent data-driven methods. In recent years, the emergence of deep generative models has brought about a paradigm shift in topology optimization. Deep generative models, such as generative adversarial networks (GANs) [48] and variational autoencoders (VAEs) [49], have shown tremendous potential in capturing complex patterns and generating novel designs. By leveraging the power of Deep Learning (DL), these models enable engineers to explore and optimize design spaces in ways that were previously unattainable.
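Several of the studies below train generative models on datasets produced by classical topology optimization (TO). For reference, a standard textbook form of density-based TO (minimum compliance with the modified SIMP material interpolation) is sketched here; it is not necessarily the exact formulation used in the cited works. Here $\rho_e$ is the density of finite element $e$, $\mathbf{U}$ the displacement vector, $\mathbf{K}$ the global stiffness matrix, $\mathbf{F}$ the load vector, $v_e$ the element volume, $V^{*}$ the allowed material volume, $E_0$ the Young's modulus of solid material, $E_{\min}$ a small stiffness that keeps $\mathbf{K}$ nonsingular, and $p$ a penalization exponent (commonly 3).

```latex
\begin{aligned}
\min_{\boldsymbol{\rho}} \quad & c(\boldsymbol{\rho}) = \mathbf{U}^{\top}\mathbf{K}(\boldsymbol{\rho})\,\mathbf{U} \\
\text{s.t.} \quad & \mathbf{K}(\boldsymbol{\rho})\,\mathbf{U} = \mathbf{F}, \\
& \sum_{e=1}^{N} \rho_e v_e \le V^{*}, \qquad 0 \le \rho_e \le 1, \\
& E_e(\rho_e) = E_{\min} + \rho_e^{\,p}\,\left(E_0 - E_{\min}\right).
\end{aligned}
```

The penalization $p > 1$ makes intermediate densities structurally inefficient, pushing the solution toward near-binary solid/void layouts; these density maps are the "topologies" that the generative models learn to reproduce.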

Deep generative models offer significant advantages in topology optimization compared to traditional approaches. They provide a flexible framework for representing complex design spaces, allowing the exploration of unconventional solutions, and they facilitate the incorporation of design constraints, helping to ensure that generated designs are feasible and manufacturable. Furthermore, deep generative models enable engineers to explore design alternatives and trade-offs among multiple conflicting objectives, which supports informed decisions and yields valuable insights into the design space. These models also allow domain knowledge and expert guidance to be integrated, combining data-driven learning with engineering expertise. The use of deep generative models in topology optimization is still evolving, and ongoing research aims to address challenges such as model interpretability, computational efficiency, and the handling of high-dimensional design spaces. Despite these challenges, deep generative models have considerable potential to transform topology optimization, offering the possibility of more efficient, functional, and sustainable product designs.

The authors in [62] trained a Wasserstein Generative Adversarial Network (WGAN) on a dataset generated through topology optimization (TO), together with an auxiliary network that predicts performance metrics of the generated designs. They demonstrated that automobile wheel designs can be generated automatically without human intervention, and they report that the resulting designs are both aesthetically superior and of significant technical value. The authors in [63] trained VAEs on a dataset generated through TO for heat transfer, adding a loss based on style transfer [64], an image-processing technique, to enhance the quality and style of the generated designs. They also proposed iterative strategies that leverage the latent space of the VAEs for targeted design optimization.
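What distinguishes a WGAN, as used in [62], from a standard GAN is its loss: the critic outputs unbounded scores rather than probabilities, and the losses are differences of mean scores that approximate the Wasserstein distance. The minimal sketch below computes these losses from hypothetical critic scores and shows the weight clipping of the original WGAN, which keeps the critic approximately Lipschitz.

```python
def critic_loss(scores_real, scores_fake):
    """WGAN critic minimises E[D(G(z))] - E[D(x)]; scores are unbounded real numbers."""
    return sum(scores_fake) / len(scores_fake) - sum(scores_real) / len(scores_real)


def wgan_generator_loss(scores_fake):
    """WGAN generator minimises -E[D(G(z))]."""
    return -sum(scores_fake) / len(scores_fake)


def clip_weights(weights, c=0.01):
    """Original WGAN weight clipping: restrict every critic weight to [-c, c]."""
    return [max(-c, min(c, w)) for w in weights]


print(critic_loss([2.0, 4.0], [1.0]))      # 1.0 - 3.0 = -2.0
print(clip_weights([0.5, -0.5, 0.001]))    # [0.01, -0.01, 0.001]
```

Unlike the saturating cross-entropy loss, this difference of means keeps providing useful gradients even when the critic separates real and generated designs well.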

In [51, 65], the authors presented an iterative generative-network-fitting method that incorporates TO and the filtering of similar designs. They employ a modified Boundary Equilibrium Generative Adversarial Network (BEGAN), which trains an autoencoder-based discriminator with a loss derived from the Wasserstein distance, on pre-existing TO-generated design topologies. Unlike previous generative-network-fitting approaches [62, 63, 66], this method includes retraining and re-optimization steps, enabling the exploration of new design regions. The application focuses on wheel design, aiming to balance aesthetics and structural performance, and the authors demonstrate the effectiveness of their approach empirically in this context.
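BEGAN balances the generator and the autoencoder discriminator with an equilibrium variable $k$ that is updated from the autoencoder reconstruction losses of real and generated samples. The sketch below shows one bookkeeping step of the original BEGAN update with its commonly cited defaults; it is a simplification (the original uses separate noise samples for the discriminator and generator terms) and does not reproduce the modifications made in [51, 65].

```python
def began_step(k, recon_real, recon_fake, gamma=0.5, lambda_k=0.001):
    """One BEGAN update from reconstruction losses L(x) and L(G(z)).

    gamma is the diversity ratio; lambda_k is the learning rate of k.
    """
    loss_d = recon_real - k * recon_fake   # discriminator (autoencoder) loss
    loss_g = recon_fake                    # generator loss
    balance = gamma * recon_real - recon_fake
    k_next = min(1.0, max(0.0, k + lambda_k * balance))  # k is kept in [0, 1]
    convergence = recon_real + abs(balance)              # global convergence measure
    return loss_d, loss_g, k_next, convergence


print(began_step(0.0, 0.2, 0.05))
```

When generated samples are reconstructed too easily relative to real ones, $k$ grows and the discriminator penalizes them more, which keeps the two networks near the target ratio $\gamma$.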

The study in [67] tackles the problem of gaps, or unexplored regions, within the design space of topologies using Variational Deep Embedding (VaDE) [68]. First, a dataset is generated using TO. VaDE is then used to identify voids in the design space; designs are decoded from these voids, optimized using TO, and added to the training dataset. Repeating this process fills gaps in the design space and expands the range of feasible designs. These works focus on automated retraining methods for topology design generation, but human input can also be incorporated into the training phase. The researchers in [69] introduce a framework for topology design generation that combines human input with a Conditional Generative Adversarial Network (CGAN): the designer iteratively selects design clusters, steering the process toward preferred designs. This collaborative approach merges the designer's expertise and preferences with the generative capabilities of the CGAN, harnessing the synergy of human creativity and machine generative power to achieve optimal topology designs.
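The notion of a "void" in a learned latent space can be made concrete with a simple heuristic: sample candidate latent points and keep those farthest from every encoded training design. This distance-based search is a hypothetical stand-in for illustration; VaDE itself models the latent space with a Gaussian-mixture prior, which is not reproduced here.

```python
import math
import random


def find_voids(encoded, n_candidates=500, n_voids=3, seed=0, low=-3.0, high=3.0):
    """Hypothetical void search: return the candidate latent points whose nearest
    encoded training design is farthest away (largest nearest-neighbour gap)."""
    rng = random.Random(seed)
    dim = len(encoded[0])
    candidates = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(n_candidates)]

    def gap(point):
        return min(math.dist(point, e) for e in encoded)

    return sorted(candidates, key=gap, reverse=True)[:n_voids]


# Hypothetical 2D latent codes of existing designs, clustered near the origin.
encoded_designs = [[0.0, 0.0], [0.3, -0.2], [-0.1, 0.4]]
voids = find_voids(encoded_designs)
```

In the workflow of [67], designs decoded at such points would then be refined with TO and added to the training set before the next round.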

Several studies have improved the performance of deep generative models (DGMs) in topology generation over baseline results by incorporating physical properties of the design domain. The researchers in [70] generated synthetic topologies with the CGAN architecture known as "Pix2Pix". In their method, spatial fields of physical quantities such as von Mises stress, displacement, and strain energy density are used as input to the generator, and topologies produced by TO serve as the ground truth for training. The authors also introduce a generator architecture that combines squeeze-and-excitation ResNet blocks with a U-Net, aiming to generate more accurate and realistic topologies. Similarly, in [71], researchers use a Neural Network (NN) to generate optimized topologies for given loading conditions. They propose an iterative approach that evaluates and reduces the deviation of proposed solutions from the optimality conditions of the problem. During training, they gradually expand the dataset by recomputing optimal solutions with TO for the proposed solutions that most severely violate the optimality conditions. Adding these challenging cases as training examples improves the performance of the NN.
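The Pix2Pix generator used in [70] is trained on a combination of an adversarial term and an L1 reconstruction term against the ground-truth image, weighted by $\lambda$ (100 in the original Pix2Pix paper). The sketch below computes that objective for a flattened, hypothetical density map; the discriminator outputs and pixel values are illustrative only.

```python
import math


def pix2pix_generator_loss(d_fake, prediction, target, lam=100.0):
    """Adversarial term (non-saturating BCE on D's outputs for generated images)
    plus lam times the mean absolute (L1) error to the ground-truth topology."""
    adversarial = -sum(math.log(p) for p in d_fake) / len(d_fake)
    l1 = sum(abs(a - b) for a, b in zip(prediction, target)) / len(target)
    return adversarial + lam * l1


# Hypothetical flattened density maps (1 = material, 0 = void).
target = [0.0, 0.0]
prediction = [0.0, 1.0]
print(pix2pix_generator_loss([0.5], prediction, target))  # ln 2 + 100 * 0.5
```

The large L1 weight keeps generated topologies close to the TO ground truth pixel by pixel, while the adversarial term discourages the blurry outputs that an L1 loss alone tends to produce.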

Electric machine design, such as motor design, also benefits from AI techniques for optimizing design topologies. Optimizing the design of electric machines is a multi-objective, nonlinear problem [72]. For example, designing a Switched Reluctance Motor (SRM) involves numerous geometric parameters, including the number of stator and rotor poles, bore diameter, pole arc angles, taper angles, and air gap length, which must be selected carefully to meet the requirements of the application efficiently. To improve the torque profile of a 3-phase 12/8 SRM, the researchers in [73] employed a Generalized Regression Neural Network (GRNN) to determine the optimal stator and rotor pole arc angles. They used Finite Element Analysis (FEA) to obtain the static torque characteristics of the motor, and the FEA results were used to train the neural network to approximate the objective function. Another study [74] introduced an enhanced GRNN variant to model a 4-kW 12/8 SRM, using the Fruit Fly Optimization Algorithm (FFOA) to optimize the spread parameter of the GRNN. Their model effectively captured the nonlinear relationship between torque ripple, operational efficiency, and three geometric variables: stator pole arc angle, rotor pole arc angle, and rotor yoke thickness.
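A GRNN is essentially Gaussian-kernel regression: its prediction is a weighted average of the training targets, with weights set by the distance to each training sample and a single spread parameter $\sigma$. The minimal sketch below implements this and selects $\sigma$ by leave-one-out error over a small grid; the grid search is a hypothetical stand-in for the metaheuristic (FFOA) used in [74], and the normalized data are illustrative, not FEA results.

```python
import math


def grnn_predict(x, train_x, train_y, sigma):
    """GRNN (Specht) prediction: Gaussian-weighted average of the training targets."""
    weights = [math.exp(-math.dist(x, xi) ** 2 / (2 * sigma ** 2)) for xi in train_x]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("spread too small: all kernel weights underflowed")
    return sum(w * yi for w, yi in zip(weights, train_y)) / total


def loo_error(train_x, train_y, sigma):
    """Mean squared leave-one-out error for a given spread."""
    err = 0.0
    for i in range(len(train_x)):
        xs, ys = train_x[:i] + train_x[i + 1:], train_y[:i] + train_y[i + 1:]
        err += (grnn_predict(train_x[i], xs, ys, sigma) - train_y[i]) ** 2
    return err / len(train_x)


# Hypothetical normalised design variable (e.g. a pole arc angle) vs. a performance metric.
X = [(0.0,), (0.25,), (0.5,), (0.75,), (1.0,)]
Y = [0.0, 0.3, 0.45, 0.5, 0.48]
GRID = [0.05, 0.1, 0.2, 0.5, 1.0]
best_sigma = min(GRID, key=lambda s: loo_error(X, Y, s))
print(best_sigma, grnn_predict((0.6,), X, Y, best_sigma))
```

A small spread makes the GRNN reproduce individual samples, while a large spread smooths toward the global mean, which is why tuning this single parameter matters so much for surrogate accuracy.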

Beyond SRMs, ML algorithms have been employed to design other types of electric motors. For example, [75] proposes Extreme Learning Machines (ELM) for the design optimization of Permanent Magnet Synchronous Motors (PMSMs), with the goal of high average thrust, low thrust ripple, and low total harmonic distortion at various operating speeds. The ELM maps the complex relationship between input factors and motor performance using data obtained from FEA, and a Gray Wolf Optimizer Algorithm (GWOA) then iteratively optimizes the multi-objective function to identify the optimal performance and structural parameters. In [77], researchers adopted Support Vector Regression (SVR) for the design optimization of a 3-kW, 6-phase, concentrated-winding, direct-drive PMSM to meet electric vehicle performance requirements. SVR was used primarily to expand the range of design solutions within the design space, and a Pareto front method was then applied to obtain the optimal designs with maximum torque density and minimum torque ripple.
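The Pareto-front step in [77] keeps only designs that no other design beats on both objectives at once. The sketch below filters a list of hypothetical candidate designs for maximum torque density and minimum torque ripple; the names and values are illustrative, not results from the study.

```python
def pareto_front(designs):
    """Non-dominated designs for (maximise torque density, minimise torque ripple).

    Each design is a tuple (name, torque_density, torque_ripple).
    """
    def dominates(a, b):
        no_worse = a[1] >= b[1] and a[2] <= b[2]
        strictly_better = a[1] > b[1] or a[2] < b[2]
        return no_worse and strictly_better

    return [d for d in designs if not any(dominates(o, d) for o in designs)]


# Hypothetical candidates: (name, torque density, torque ripple).
candidates = [("A", 10.0, 0.20), ("B", 12.0, 0.25), ("C", 9.0, 0.30),
              ("D", 12.0, 0.18), ("E", 14.0, 0.35)]
print(pareto_front(candidates))  # [('D', 12.0, 0.18), ('E', 14.0, 0.35)]
```

D and E both survive because neither beats the other on both objectives; choosing between them is the designer's trade-off decision, which is exactly what the Pareto front exposes.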

5.1.4 Summary

In concluding this section, note that the tables and figures that follow distinguish "Standard GAN" from "Modified GAN" techniques: Modified GAN denotes variants that upgrade or adjust the loss function of the standard GAN to enhance its performance and capabilities. Our review of AI techniques in the product design phase covers three major stages: inspiration and concept generation, shape synthesis, and topology optimization. Across these stages, our analysis identified 18 different AI techniques, with Generative Adversarial Networks (GAN) and Deep Learning (DL) being the most prevalent.

Table 4 compiles the AI techniques applied across the design stages. In the "Inspiration and concept generation" stage, Artificial Neural Networks (ANN) are the most prevalent technique, owing to their ability to learn and approximate complex functions. They are followed by Genetic Algorithms (GA) and by both modified and standard Generative Adversarial Networks (GAN), which are used equally often in this stage. Autoencoders (AE) and their variants, Decision Trees (DT), and Convolutional Neural Networks (CNN) are used less frequently in this stage.

In shape synthesis, Autoencoders (AE/VAE), Deep Convolutional Networks (DCN), and both modified and standard GAN techniques are used equally often, offering diverse ways of generating new designs. In Topology optimization, research concentrates on the GAN family, particularly the modified GAN variants. Autoencoders (AE) and style transfer techniques are also used, while ANN and SVR are the least used techniques for Topology optimization tasks.

The bar chart in Fig. 7 shows how often AI techniques are used across the stages of the product design process: Inspiration and concept generation, Shape synthesis, and Topology optimization. The vertical axis lists the AI techniques, and the horizontal axis gives the number of publications associated with each technique. The chart highlights the strong influence of the Generative Adversarial Network (GAN) family in this field, with the modified GAN variants emerging as a widely favored option among researchers. Autoencoders (AE), valued for learning latent-space representations, are also prominent, as are Artificial Neural Networks (ANN) and standard GAN methods. Taken together, the chart shows product design research being driven by a combination of specialized GAN variants, autoencoders, and ANN techniques.

The pie chart in Fig. 8 shows how the reviewed AI applications are distributed across the stages of product design. Topology optimization (TO) accounts for the largest share, at 39%, reflecting the central role of AI in refining designs toward their most efficient forms. Inspiration and concept generation follows closely at 37%, indicating that AI's creative potential for generating novel ideas and concepts is widely recognized. Shape synthesis accounts for the remaining 24%. Although this share is smaller than those of the other two stages, it does not diminish the importance of this stage, in which AI guides the evolution of design shapes toward a product's final form.

In short, Fig. 8 shows that AI techniques are used throughout product design, with the strongest emphasis on TO, substantial use in Inspiration and concept generation, and a smaller but meaningful role in Shape synthesis. Figure 9, a pie-of-pie chart, gives an overview of the popularity of individual AI techniques in the design phase. The major pie chart, which accounts for 79% of the total, covers all techniques other than the modified GAN variants.

Within the major pie chart, Genetic Algorithms (GA) and style transfer techniques account for 8% and 5% of the design-phase applications, respectively. Various other techniques, namely Decision Trees (DT), Deep Convolutional Networks (DCN), FOA-GRNN, FastText, VADER, Gated Recurrent Neural Networks (GRNN), GWOA-ELM, Long Short-Term Memory (LSTM), ResNet with U-Net, and Reinforcement Learning (RL), each account for 3%.

The minor pie chart, which expands a 21% arc of the major pie chart, shows the techniques from the Generative Adversarial Network (GAN) family whose loss functions modify that of the standard GAN. These techniques, grouped in this paper under the term "Modified GAN", play a significant role in the design phase. CGANs account for 8% of overall usage, the largest share among them, while PaDGAN, DCGAN, BEGAN, WGAN, and Range-GAN each account for 3%.

Overall, the pie-of-pie chart shows how AI techniques are distributed across the product design phase. AI plays a role in every stage: from generating ideas during inspiration and concept generation, through shape synthesis, to topology optimization, AI techniques use data analysis, generative models, and optimization algorithms to improve creativity, efficiency, and design quality. Incorporating AI into these stages gives designers new opportunities for innovation, faster iteration cycles, and finely tuned product designs, combining human ingenuity with the computational capabilities of AI.

5.2 AI at manufacturing phase

The manufacturing phase in the product lifecycle encompasses all the activities involved in transforming raw materials or components into finished products. This phase involves various processes and tasks that contribute to the production and assembly of the product. Some key aspects and activities typically included in the manufacturing phase are:

1. Production planning: This involves determining the production requirements, creating production schedules, and establishing the necessary resources and facilities for manufacturing.

2. Procurement: The procurement process involves sourcing and acquiring the required raw materials, components, and equipment needed for production.

3. Production operations: This includes the actual manufacturing processes, such as machining, assembly, welding, molding, or any other specific operations involved in converting raw materials into finished products.

4. Quality control: Quality control activities ensure that the manufacturing processes meet the required quality standards. This includes inspections, testing, and monitoring of the production processes and the finished products to ensure they meet the specified criteria.

5. Inventory management: Managing inventory levels and ensuring the availability of materials and components throughout the manufacturing process is crucial to avoiding delays or disruptions.

6. Equipment maintenance: Regular maintenance and servicing of manufacturing equipment are essential to ensure optimal performance, minimize downtime, and extend equipment lifespan.

7. Supply chain management: Coordinating with suppliers, managing logistics, and overseeing the flow of materials and components from suppliers to the manufacturing facility is critical for a smooth manufacturing process.

8. Process optimization: Continuously improving manufacturing processes, identifying inefficiencies, and implementing lean manufacturing principles to enhance productivity and reduce waste.

9. Environmental and safety compliance: Adhering to environmental regulations and ensuring a safe working environment for employees during the manufacturing process is essential.

Overall, the manufacturing phase aims to produce the required quantity of products at the required quality while meeting cost and time objectives. The manufacturing industry is undergoing a major shift as artificial intelligence (AI) and machine learning (ML) technologies are integrated into various stages of the production process, enabling intelligent decision-making, process optimization, and improvements in overall process efficiency and product quality [76].

One of the major advances enabled by AI in manufacturing is the intelligent integration of subtractive manufacturing (SM) and additive manufacturing (AM) processes. By combining traditional subtractive techniques, such as machining, with additive methods such as 3D printing, manufacturers can benefit from the strengths of both approaches. AI algorithms analyze design requirements, material properties, and production constraints to determine the best combination of processes, which can improve product quality, reduce waste, and increase manufacturing speed [77, 78, 79].

AI also finds applications in procurement, supply chain management, supplier selection, and warehousing and logistics management. AI-powered systems can help manufacturers streamline procurement, optimize inventory management, and select suppliers based on factors such as price, quality, delivery time, and reliability. AI algorithms can analyze supply chain data, forecast demand, and optimize distribution and logistics networks to ensure efficient material flow and timely delivery, improving operational efficiency and reducing costs. ML (a subset of AI) and DL (a subset of ML) have also been applied to quality assessment [20, 24], and DL-based quality assessment is one of the most prominent AI applications in the manufacturing phase. DL algorithms can analyze large volumes of data, including sensor readings, images, and process parameters, to assess product quality in real time. By training DL models on historical data and known quality standards, manufacturers can detect anomalies, classify defects, and maintain consistent, high-quality production. AI-driven quality assessment reduces the need for manual inspection, improves defect detection rates, and enhances overall product reliability [76, 79, 80].
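A common deep learning approach to the anomaly detection mentioned above is an autoencoder trained only on in-spec data, which then flags samples it cannot reconstruct well. The sketch below keeps the same train-on-normal, threshold-on-reconstruction-error logic but, to stay dependency-free, substitutes principal component analysis (PCA), whose subspace coincides with that learned by an optimal linear autoencoder under squared-error loss. A production system would replace it with a deep (for example, convolutional) autoencoder. The sensor model, drift size, latent dimension, and 99th-percentile threshold are hypothetical choices made for illustration only.

```python
import numpy as np


class ReconstructionDetector:
    """Flags samples whose reconstruction error exceeds a threshold learned
    from in-spec data. PCA plays the role of a linear autoencoder here."""

    def __init__(self, n_components=2, quantile=0.99):
        self.n_components = n_components
        self.quantile = quantile

    def fit(self, X_normal):
        self.mean_ = X_normal.mean(axis=0)
        _, _, Vt = np.linalg.svd(X_normal - self.mean_, full_matrices=False)
        self.components_ = Vt[: self.n_components]  # shared encoder/decoder weights
        # Threshold: high quantile of the errors seen on normal production data
        self.threshold_ = np.quantile(self.score(X_normal), self.quantile)
        return self

    def score(self, X):
        Xc = X - self.mean_
        codes = Xc @ self.components_.T   # encode to the latent space
        recon = codes @ self.components_  # decode back to sensor space
        return np.sum((Xc - recon) ** 2, axis=1)

    def predict(self, X):
        return self.score(X) > self.threshold_  # True = suspected defect


rng = np.random.default_rng(42)
A = rng.normal(size=(2, 5))  # hypothetical: 2 process factors drive 5 sensors


def simulate(n):
    """Hypothetical in-spec sensor readings with small measurement noise."""
    return rng.normal(size=(n, 2)) @ A + 0.05 * rng.normal(size=(n, 5))


det = ReconstructionDetector().fit(simulate(1000))  # historical good parts
X_ok = simulate(500)
X_faulty = simulate(50)
X_faulty[:, 3] += 2.0                               # hypothetical drift on sensor 3
print("false-alarm rate:", det.predict(X_ok).mean())
print("detection rate:", det.predict(X_faulty).mean())
```

Training only on in-spec data is the key design choice: it needs no labelled defect examples, which are usually scarce on a production line, at the cost of flagging any unfamiliar pattern rather than classifying the specific defect type.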

In the next sections, we will explore the various AI techniques utilized in the manufacturing (AM and SM) phase. We will discuss real-world examples, case studies, and emerging trends that highlight the transformative potential of AI in intelligent integration, process optimization, supply chain management, human–robot collaboration, and quality assessment. By embracing AI in the manufacturing phase, organizations can achieve enhanced productivity, improved quality control, optimized supply chain operations, and a competitive edge in the rapidly evolving manufacturing landscape.

5.2.1 AI application in additive manufacturing

In recent years, additive manufacturing (AM), commonly referred to as 3D printing, has advanced significantly and gained prominence across various industries [81]. AM fabricates products or components layer by layer according to design specifications derived from a 3D model. It offers numerous advantages over traditional manufacturing methods, such as rapid prototyping and fast design iteration, highly customized products, components with intricate geometries, and tailored material properties, all while minimizing material waste [82]. The ASTM F42 (Footnote 9) standards classify AM processes into seven categories, several of which can produce metallic parts for sectors such as automotive and aerospace [80, 83].
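The layer-by-layer principle can be illustrated by slicing, the process-planning step that converts a 3D model into layers: a triangle mesh of the part is intersected with horizontal planes spaced one layer thickness apart, and the resulting contour segments define what is deposited in each layer. The minimal Python sketch that follows assumes a unit-cube mesh and a layer thickness of 0.25 (both hypothetical), slices at mid-layer heights, and omits contour chaining, infill, and toolpath generation, so it is an illustration rather than a usable slicer.

```python
import numpy as np


def slice_triangle(tri, z):
    """Segment where the plane Z = z cuts triangle `tri` (3x3 array of
    vertices), or None. Vertices lying exactly on the plane are not
    handled in this sketch."""
    points = []
    for a, b in ((0, 1), (1, 2), (2, 0)):
        za, zb = tri[a, 2], tri[b, 2]
        if (za - z) * (zb - z) < 0:  # edge strictly crosses the plane
            t = (z - za) / (zb - za)
            points.append(tri[a] + t * (tri[b] - tri[a]))
    return (points[0], points[1]) if len(points) == 2 else None


def slice_mesh(triangles, layer_height):
    """Cut a triangle mesh (N x 3 x 3) into layers; returns [(z, segments)]."""
    z_min, z_max = triangles[..., 2].min(), triangles[..., 2].max()
    n_layers = int(np.ceil((z_max - z_min) / layer_height))
    layers = []
    for i in range(n_layers):
        z = z_min + (i + 0.5) * layer_height  # slice at mid-layer height
        segments = []
        for tri in triangles:
            seg = slice_triangle(tri, z)
            if seg is not None:
                segments.append(seg)
        layers.append((z, segments))
    return layers


# Unit cube as 12 triangles, a stand-in for a part exported from CAD as a mesh.
# Vertex index = 4x + 2y + z for corners (x, y, z) in {0, 1}^3.
V = np.array([[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float)
quads = [(0, 1, 3, 2), (4, 5, 7, 6), (0, 1, 5, 4), (2, 3, 7, 6), (0, 2, 6, 4), (1, 3, 7, 5)]
faces = [tri for a, b, c, d in quads for tri in ((a, b, c), (a, c, d))]
cube = V[np.array(faces)]  # shape (12, 3, 3)

for z, segments in slice_mesh(cube, layer_height=0.25):
    perimeter = sum(np.linalg.norm(p - q) for p, q in segments)
    print(f"layer at z={z:.3f}: {len(segments)} segments, contour length {perimeter:.3f}")
```

For the unit cube, each of the four layers yields eight segments whose lengths sum to 4, the perimeter of the square cross-section; the top and bottom faces never cross a mid-layer plane and contribute nothing.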

However, despite its potential, the industrial adoption of AM faces challenges related to production speed, surface quality and dimensional accuracy [84], and microstructural deviations that can degrade the mechanical properties and overall quality of the product [85]. Moreover, AM is typically unsuitable for large products because of limited build volumes, and the range of materials that can be printed remains constrained [86, 87]. The primary cause of these shortcomings is that the shape and the material properties of a part are formed simultaneously during the AM process. The production of an AM part entails intricate interactions among design, material, and process elements throughout a multi-stage process comprising five key steps: design, process planning, building, postprocessing, and testing and validation [88]. Successful fabrication of a qualified part relies on the careful and precise execution of each of these steps.