Abstract

In this paper, we study a model of a market with asymmetric information and sticky prices—the dynamic Stackelberg model with a myopic follower and infinite time horizon of Fujiwara ("Economics Bulletin" 12(12), 1–9 (2006)). We perform a comprehensive analysis of the equilibria instead of concentrating on the steady state only. We study both the equilibria for open loop and feedback information structure, which turn out to coincide, and we compare the results with the results for Cournot-Nash equilibria.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Price stickiness is a way to model non-instantaneous price adjustment. This market imperfection is an important topic in macroeconomics, with many papers proving it from various types of data (e.g. Anderson et al. 2015; Lünnemann and Wintr 2011; Gorodnichenko and Weber 2016), but it is also worth considering in microeconomic problems like the market equilibrium in an oligopoly. Such a market can be modelled as a dynamic game—a differential game. The first such formulation of a model with sticky prices has been introduced by Simaan and Takayama (1978). The theoretical model has been further exploited e.g. by Fershtman and Kamien (1987, 1990), Tsutsui and Mino (1990), Piga (2000), Dockner and Gaunersdorfer (2001), Cellini and Lambertini (2004, 2007), Benchekroun et al. (2006), Colombo and Labrecciosa (2021), Hoof (2021), Wiszniewska-Matyszkiel et al. (2015), Wang and Huang (2015, 2018, 2019), Valentini and Vitale (2021) and Liu et al. (2017). There are also extensions of such models, considering additionally also advertising (e.g. Lu et al. 2018; Raoufinia et al. 2019) or applications of such a model adjusted to suit refineries Tominac and Mahalec (2018). For more exhaustive reviews of the subject see e.g. Dockner (2000) and Colombo and Labrecciosa (2017).

However, all of the models mentioned above focus on the market structure of Cournot oligopoly, in which firms produce a homogeneous product and they have entirely symmetric information. Analogous model with information asymmetry in which one of the firms (the leader) takes into account how the other firm (the follower) reacts to its strategy is called the Stackelberg model.

In static games, both the idea and solution of the Stackelberg problem are relatively simple and the informational advantage can be easily interpreted as a generalized first mover advantage: the sequence of moves with the leader as the first mover, or binding declaration of the leader about his choice of strategy before the choice of the follower (then the actual sequence of moves does not matter). Moreover, as a sequential optimization, the problem has a solution under only upper semicontinuity and compactness assumptions, unlike the Nash equilibrium problem requiring also the existence of a fixed point. In differential games, and, more generally, dynamic games, this generalized first mover advantage may be either required at each stage of the game, but it may also concern declaration of the leader’s strategy in the entire game before the first move and, depending on the information structure of the game, additional assumptions may be required. The situation is simple for the open loop information structure, when the strategies of the players are functions of time only, because then the standard definition applies. Conversely, a problem appears for the feedback information structure, with strategies (called feedback or Markov perfect) dependent on the current state. In the latter case, there are two extensions of the Stackelberg equilibrium. One of those concepts corresponds to the leader being the first mover at each stage. The other one, called global Stackelberg equilibrium, describes the situation in which the leader declares his feedback strategy before the game and the follower best responds to it. This approach is either equivalent to using threat strategies in order to enforce the global maximum of the leader’s payoff, or it requires imposing additional assumptions on the leader’s strategy. The reason is that calculating the best response of the follower to every possible leader’s strategy and then optimizing leader’s payoff with the resulting follower’s best response is ill-posed, since the best response to discontinuous strategies ceases to exist even in nice problems. Therefore, there are some a priori constraints of the class of the leader’s strategies: it is usually assumed that the leader’s strategy is linear. Nevertheless, it is enough to consider a class of functions defined by several real parameters. The resulting problem of the leader, however, is not a standard optimal control any more, but it becomes usual finite-dimensional optimization and the resulting strategy may be suboptimal if the leader’s optimal control after declaration does not belong to the assumed class of functions, so the solution may be not time-consistent. For deeper insight, see e.g. Başar and Olsder (1998) or Haurie et al. (2012) for general theory, while Martín-Herrán and Rubio (2021) for rare cases of coincidence of those two classes of Stackelberg equilibria with state-dependent information structure.

Similarly, the open loop Stackelberg equilibria, which are simpler to derive, are usually not subgame-perfect and it often turns out that the leader has incentives to change the declared strategy after the follower chooses his strategy being the best response to it.

Generally, solving the feedback Stackelberg problem is analytically very complicated and restriction to time-consistent, subgame-perfect solution makes it substantially more complicated, especially if the strategy sets are constrained, which even in linear quadratic problems with linear constraints leads to only piecewise-linear solutions. It can be expected that the best response of the follower to the leader’s strategy that is piecewise-linear with \(k\) pieces may result in the best response of the follower being piecewise linear with more than \(k\) pieces. This makes the leader’s optimization problem piecewise-linear-quadratic with more than \(k\) pieces.

The class of linear quadratic problems with linear constraints has been extensively studied for resource extraction problems for common or interrelated renewable resources sold at a common market, known also as productive asset oligopolies. Inherent constraints, like nonnegativity of the state variable and control or the constraint by admissibility of resource, in linear quadratic problems, may lead to numerous problems for Nash equilibria. Examples of such problems are as follows: the value function is piecewise quadratic with infinitely pieces for some parameters (Singh and Wiszniewska-Matyszkiel 2018), the problem is intractable in the standard way and the solution not even piecewise-linear (Singh et al. 2020), all the symmetric Nash equilibria are discontinuous (Singh and Wiszniewska-Matyszkiel 2019). Some difficulties may appear even in such optimal control problems, like e.g., in Singh and Wiszniewska-Matyszkiel (2020), where the solution is piecewise-linear with infinitely many pieces and the standard undetermined coefficient method returns a control far from the unique optimum. Nevertheless, such complications does not have to happen always in this kind of problems: there is a sequence of works, with piecewise linear dynamics in which this problem does not appear at a Nash equilibrium: Benchekroun (2008), Benchekroun et al. (2020), Vardar and Zaccour (2020), or at a Stackelberg equilibrium: Colombo and Labrecciosa (2019).

It is worth emphasizing that, as it has been proven in Wiszniewska-Matyszkiel et al. (2015), in the Cournot oligopoly with sticky prices, the strategies of the players in a feedback equilibrium are only piecewise linear with two pieces and the same applies to best responses to linear feedback strategies. So, what can be expected, a typical way of defining the global feedback Stackelberg equilibrium, in which calculating the best response of the follower is restricted to linear strategies of the leader only and the leader’s equilibrium strategy is indeed linear, cannot lead to a time-consistent global feedback Stackelberg equilibrium. Moreover, assuming a two-piece linear strategies of the leader results in only piecewise linear dynamics in the follower’s problem. Thus, a three-pieces linear best response can be expected, and it cannot be a priori excluded that the best response of the leader has more than two pieces. So, the global Stackelberg problem becomes extremely complicated.

Therefore, various simplifications of the dynamic Stackelberg equilibrium are considered. One of them is a model in which the leader’s informational advantage is increased by the fact that the less informed follower is also myopic.

Various models with myopia of at least one of two players, usually Stackelberg follower, are examined in marketing channels models, e.g. Taboubi and Zaccour (2002), Benchekroun et al. (2009), Liu et al. (2016), Martín-Herrán et al. (2012) and Wang et al. (2019), and in environmental problems, e.g. Hämäläinen et al. (1986) or Crabbé and Van Long (1993).

The first work with an attempt to capture the sticky price dynamics in a market with asymmetric information of this type is Fujiwara (2006), proposing a Stackelberg duopoly model with a myopic follower who expects immediate price adjustment. To the best of our knowledge, the subject has not been continued in the published literature. In Fujiwara (2006), the calculations are restricted only to finding the steady state of the open loop equilibrium (i.e. the information structure in which the strategies are functions of time only, not price) and the results are not fully proven. So, a natural step is to complete that analysis.

In this paper, we perform a complete analysis of both open loop and feedback form of the leader’s strategies in the model proposed by Fujiwara and we obtain interesting phenomena.

We compare our results with those for analogous market with Cournot duopoly structure derived in Wiszniewska-Matyszkiel et al. (2015).

2 Formulation of the model

We consider a differential game with 2 players, producers of the same good. Products of both producers are perceived by consumers as identical. Each of the firms has the same quadratic cost functions

where \(c\) is some positive constant and \(q_i \ge 0\) denotes the production of \(i\)-th player.

The market is described by the inverse demand function

However, the price does not adjust immediately, but its behaviour is defined by a differential equation

where \(s > 0\) measures the speed of adjustment and \(A\) is some positive constant substantially greater that \(c\), which can be interpreted as the market capacity.

So, it is natural to consider the resulting problem as a differential game with players maximizing \(\varPi _i(q_1, q_2) = \int _0^{\infty } {{\,\mathrm{e}\,}}^{-rt} \big ( p(t) q_i(t) - C(q_i(t)) \big ) dt\), where \(q_i(t)\) is the decision at time \(t\). The above problem has been extensively studied in the literature (see the introduction). In this paper, we want to consider a serious asymmetry between the players. Firstly, only the leader (player 1) is far-sighted, he knows the dynamics of price and his aim is to maximize

where \(r > 0\) is a discount factor, while \(p\) is defined by (2).

The follower (player 2) is assumed to be myopic and at each time instant he behaves like in the static Stackelberg duopoly. Hence, given a decision of the leader \(q_1(t)\), he chooses \(q_2(t)\) maximizing

as in Fujiwara (2006).

There may be several reasons of myopia of the less sophisticated player. Two most obvious ones are related to stronger position of the leader. The first one is when the leader is an established firm at the market and there are unrelated follower entrants at separate time instants, each of the entrants existing for one time instant. The same applies if there is only one firm but not sure whether it is going to exist in the future. This encompasses, among many other cases, the asymmetry between a fashion firm and a counterfeiter or, in a slightly different approach, a company with fishing rights and a poacher. The other obvious explanation assumes that the leader is the one who dictates prices and the follower just does not know the pricing rules of the leader—so there is partly a problem with distorted information as in Wiszniewska-Matyszkiel (2016) and Wiszniewska-Matyszkiel (2017).

We return to those interpretations in Sect. 5 after stating the results.

There is also one more explanation, already examined in the literature: being myopic may be a behavioural choice as in e.g. Benchekroun et al. (2009), which has already been studied in papers on sticky prices Liu et al. (2016) and Liu et al. (2017).

We end the formulation of the problem by recalling that the leader knows the way the follower behaves.

We would like to mention that although we write \(q_1(t)\) and \(q_2(t)\) while defining \(\varPi _1\) and \(\pi _2\), we just do it in order to have a concise notation at this stage, while in the sequel, we consider not only open loop strategies of the leader, but also feedback strategies (dependent on current price only) and strategies of the follower at each stage being a function of the current decision of the leader.

2.1 The behaviour of the follower, the implications for the leader and the static model

Let us consider a time instant \(t\). If we solve the optimization problem of the follower given the decision of the leader \(q_1(t)\), we get the best response of the follower

whenever it is positive, which, as we shall see, holds for all reasonable levels of the leader’s production.

This best response is knowns to the leader and therefore, taken as an input into his optimization problem. So, the optimization problem of the leader reduces to the maximization of

given by (3) with \(p\) is defined by

We also need the static Stackelberg model with immediate adjustment of prices for comparison to the results of our dynamic game. In the static Stackelberg model, the leader also maximizes \(\pi _1\) defined analogously to Eq. (4), and the only difference is in information – the leader knows that the strategy of the follower is the best response to \(q_1\), given by (5). So, the leader’s optimization problem is to maximize \({\pi _1(q_1, q_2(q_1)): = (A - q_1 - q_2(q_1))q_1 - C(q_1)}\), where \(q_2(q_1)\) is the best response of the follower. This results in static Stackelberg equilibrium

For comparison, the results for static Cournot-Nash equilibrium are

3 The myopic-follower Stackelberg equilibrium for open loop strategies of the leader

We start the analysis from the open loop strategies of the leader, i.e., the strategies of the leader being measurable functions \(q_1 :{\mathbb {R}}_+ \rightarrow {\mathbb {R}}_+\), directly dependent on time, without any dependence on price. The set of such strategies is denoted by \({\mathbb {Q}}_{OL}\). In the case when discontinuity appears, the price adjustment Eq. (7) is required to hold almost everywhere. The reaction of the follower is given by Eq. (5).

We apply the necessary conditions given by Theorem 11.

Lemma 1

For the current value Hamiltonian

the following properties hold.

If \(q_1^* \in \mathop {{\mathrm{Argmax}}} _{q_1\in {\mathbb {Q}}_{OL}} J(q_1)\) and \(p\) is the corresponding trajectory of price, then there exists an absolutely continuous costate trajectory \(\lambda :{\mathbb {R}}_+ \rightarrow {\mathbb {R}}\) such that for a.e. \(t\)

and

With the derived transversality condition (12), the costate trajectory is calculated backwards, as usually in the reasoning based on the Pontryagin maximum principle. In the sequel, in Theorem 4, we transform the conditions (12) and (13) to an initial condition, which is unique given \(p_0\), analogously to the technique of proof used in Wiszniewska-Matyszkiel et al. (2015).

Proof

The assumptions of Theorem 11 are fulfilled (see appendix A.3). Applying relations of Theorem 11 yields formulae (10) and (11). By the terminal condition given in Theorem 11, \(I(t) = \int _t^{\infty } {{\,\mathrm{e}\,}}^{-r w} {{\,\mathrm{e}\,}}^{-sw} q_1^*(w) dw\) converges absolutely and that \(\lambda\) fulfils

As proven in Appendix A.3, the set of control parameters that can appear in the optimal control is bounded, so

and

Thus, \(\lambda (t) {{\,\mathrm{e}\,}}^{-r t} \rightarrow 0\) as \(t \rightarrow \infty\) and it is nonnegative.

Suppose that \(\lambda ({\hat{t}}) = 0\) for some \({\hat{t}} > 0\). Since the integral of a nonnegative function can be zero only if the function is 0 almost everywhere, without loss of generality, the optimal control fulfils \(q_1(w) = 0\) for all \(w \ge {\hat{t}}\).

First, we check the case when \(p(w) > c\) for some \(w \ge {\hat{t}}\). Then, by continuity of trajectories, there exist \(\epsilon ,\delta >0\) such that increasing \(q_1\) to \(\epsilon\) on some small interval \([w, w + \delta ]\) (on which the corresponding strategy, \(p_{\epsilon ,\delta }\) fulfils \(p_{\epsilon ,\delta }(t)>c\)) would increase payoff. Indeed,

This leads to a contradiction with optimality of the leader’s strategy.

Next, we assume that \(p(w)>c\) does not hold for any \(w\ge {{\hat{t}}}\). So, \(p(w) \le c\) for all \(w \ge {\hat{t}}\).

We recall that the optimal control fulfils \(q_i(w)=0\) for all \(w\ge {{\hat{t}}}\) and note that, by the fact that \(A>c\), Eq. (7) with \(q_1(w)=0\) for \(w \ge {\hat{t}}\) implies \({\dot{p}}(w) > 0\) and the unique steady state of Eq. (7) for such \(q_1\) is greater than \(c\). So, the the price corresponding to such a \(q_1\) grows to this steady state and the trajectory exceeds \(c\) at some finite time, which leads to a contradiction.

Therefore \(\lambda (t) > 0\) for all \(t\). \(\square\)

To maximize the present value Hamiltonian with respect to \(q_1\), we calculate its zero derivative point and we obtain \(q_{1}(t)= p(t) - c - \frac{2}{3}s\lambda (t).\) Taking into account the nonnegativity constraints, this implies

where

and

Applying Lemma 1 to our problem yields

Substituting the follower’s best response (5) yields

Therefore, the optimality of the leader’s strategy implies that the state and the costate variables must fulfil the following system of ODEs.

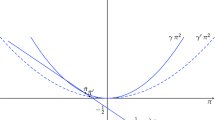

Again, \(\lambda\) has the terminal condition given by Eq. (12), while \(p\) the initial condition given as in Eq. (7). So, we have a backward-forward ODE with a mixed terminal-initial condition, which we are going to transform to a joint initial condition. As the first step to do this, we formulate the following Theorem (Fig. 1).

The phase diagram of Eq. (20). The green line with horizontal bars denotes the \(p\)-null-cline, the red line with vertical bars denotes the \(\lambda\)-null-cline. The blue dashed line splits the costate-state space into the sets \(\varOmega _1\) and \(\varOmega _2\). The black solid line represents the stable manifold of the steady state, while the light brown line the unstable manifold

Theorem 2

Let \((\lambda (t),p(t))\) be a solution to Eq. (20) with an initial value \((\lambda _0,p_0)\). Then \(\lambda (t) {{\,\mathrm{e}\,}}^{-r t}>0\) and it converges to 0 as \(t\rightarrow \infty\) if and only if \((\lambda _0,p_0)\in \Gamma\) , where \(\Gamma\) is the stable manifold of the steady state \((\lambda ^*, \mathbf{p }^*_{OL})\) of Eq. (20).

The point \((\lambda ^*, \mathbf{p }^*_{OL})\in \varOmega _2\) (for \(\varOmega _1, \varOmega _2\) defined in (16) and (17)) and

The corresponding steady state production of the leader is

Moreover, the curve \(\Gamma\) intersects with the line \(p = \left( \frac{2}{3}s + r\right) \lambda +c\) at the point \(({{\bar{\lambda }}}, {{\bar{p}}})\) with

The stable manifold \(\Gamma\) consists of the steady state \(\{ (\lambda ^*, \mathbf{p }^*_{OL}) \}\) and

Proof

First, we analyse the phase portrait, presented in Fig. 1, to determine the existence of solutions. We can see that for each variable the null-clines are as follows:

As the \(p\)-null-cline has the slope smaller than the line dividing the \((p, \lambda )\) space into \(\varOmega _1\) and \(\varOmega _2\), there exists exactly one solution in the positive quadrant and it corresponds to positive leader’s production. It is easy to calculate (21) and then (22) by substituting to (15).

In \(\varOmega _{1}\), the solution of (20) has the form

In \(\varOmega _{1}\), to the right from the stable manifold, for \(t\rightarrow \infty\), \(\lambda (t)\) asymptotically behaves as \(c_2 {{\,\mathrm{e}\,}}^{(r+s)t}\) and \(p(t)\) as \(c_1 {{\,\mathrm{e}\,}}^{-st}\). Therefore, \(\lim _{t\rightarrow \infty }{{\,\mathrm{e}\,}}^{-rt}\lambda (t) \ne 0\).

We can see from the phase diagram that each solution with the initial condition right to the stable manifold eventually enters \(\varOmega _1\), so the above reasoning applies also to other trajectories right to the stable manifold.

We can also see that for every trajectory with the initial condition left to the stable manifold, \(\lambda (t) \le 0\) from some time instant on.

In \(\varOmega _2\), Eq. (20) reduces to:

where

The determinant \(\det B = -s \big ( \frac{5r+7s}{3} \big ) < 0\), which confirms that the steady state is a saddle point. The eigenvalues of \(B\) are

while the corresponding eigenvectors are

This implies the equation describing the part of \(\Gamma\) in \(\varOmega _2\) is \(\Gamma _2\) and \(\Gamma _3\).

Analogously, to get \(\Gamma _1\), we solve Eq. (20) for \((\lambda , p)\) in \(\varOmega _1\) with the condition that for some \(t\), (\(\lambda (t),p(t))= ({{\bar{\lambda }}},{{\bar{p}}})\). \(\square\)

Lemma 3

The leader’s optimal strategy in the open loop form \(\mathbf{q }_{1,OL}\) fulfils for a.e. \(t\)

where \({{\bar{p}}}\) is given by (23).

Proof

Immediate by Lemma 1 and Theorem 2. \(\square\)

Theorem 4

There is a unique leader’s optimal strategy in the open loop form.

The equilibrium production is given by

where

\(\mu _2\) is given by (26), \({\bar{p}}\) and \({\bar{\lambda }}\) are given by (23) and (24) and \(\lambda ^*\) , \(\mathbf{p }^*_{OL}\) and \(\mathbf{q }^*_{1,OL}\) by (21) and (22).

The equilibrium price level is given by

The steady state \((\mathbf{p }^*_{OL},\mathbf{q }^*_{1,OL})\) is stable with respect to changes of \(p_0\).

Proof

We use Theorem 2 and Lemma 3 and solve the set of equations (20) along the stable manifold of the steady state \(\Gamma\).

\(\lambda _0\) corresponding to \(p_0\) is uniquely defined by the terminal condition and the condition \(\lambda (t)>0\), and it is such that \((\lambda _0,p_0)\) belongs to the stable manifold of the steady state. \(\square\)

We would like to emphasize that, although the steady state \((\lambda ^*,\mathbf{p }^*_{OL})\) is a saddle point, \(\mathbf{p }^*_{OL}\) and \(\mathbf{q }^*_{1,OL}\) are stable with respect to changes of the initial condition \(p_0\). This holds because the terminal condition for \(\lambda\) at infinity together with the positivity condition imply unique initial condition \(\lambda _0\) corresponding to \(p_0\) such that the trajectory is in the stable manifold of the steady state. Besides, the costate variable \(\lambda\) is only an auxiliary variable that has to exist and it shouldn’t be treated in the same way as the actual state variable \(p\).

4 Feedback strategies

The next problem we want to solve is the optimization of the leader for the feedback information structure, i.e. the problem in which the set of controls of the leader is the set of functions \(q_1:{\mathbb {R}} \rightarrow {\mathbb {R}}_+\), with price as the argument, such that Eq. (7) with \(q_1(t)\) replaced by \(q_1(p(t))\) has a unique absolutely continuous solution. In the feedback approach, the problem is solved more generally for arbitrary values of the initial condition.

We recall that for the Cournot duopoly considering the feedback information structure leads to results which are not equivalent to results of considering the open loop information structure and even the steady states are not equivalent (see Cellini and Lambertini 2004; Fershtman and Kamien 1987; Wiszniewska-Matyszkiel et al. 2015).

To calculate the myopic-follower Stackelberg equilibrium assuming the feedback form of leader’s strategies, we use the standard sufficient condition using Bellman or Hamilton-Jacobi-Bellman (HJB) equation (see e.g. Dockner 2000; Fleming and Soner 2006; Zabczyk 2009) which returns the auxiliary value function, i.e., a function \(W:{\mathbb {R}}_+\rightarrow {\mathbb {R}}\) such that for every \(p\), \(W(p)\) is the optimal payoff of the leader if the initial price is \(p\).

In our case, the sufficient condition for a continuously differentiable function \(W\) to be the value function is the Bellman equation for every price \(p\in {\mathbb {R}}_+\)

with the terminal condition \(\limsup \limits _{t\rightarrow \infty }{{\,\mathrm{e}\,}}^{-rt}W(p(t)) = 0\) for every admissible price trajectory \(p\).

If \(W\) is the value function, then every \(\mathbf{q }_{1,F}\) that maximizes the rhs. of the Bellman equation, i.e., that for every price \(p\in {\mathbb {R}}_+\), fulfils

is an optimal control.

Theorem 5

The value function of this optimization problem is defined by

where

and it is nonnegative, increasing, continuous and continuously differentiable.

The feedback optimal solution is defined by

strictly increasing whenever positive. The corresponding price trajectory is defined by

where

The price \({{\tilde{p}}}\) is the price at which the leader starts production, while the time instant \({{\tilde{t}}}\) is the time instant at which the optimal price trajectory originating from \(p_0\) attains the level \({{\tilde{p}}}\).

Proof

The methodology of finding the value function is analogous to Wiszniewska-Matyszkiel et al. (2015). First, we solve the analogous dynamic optimization without constraint on \(q_1\). We assume that

for some \(\alpha\), \(\beta\), \(\gamma\). We substitute the leader’s production maximizing the right hand size of (31)

and we obtain

This leads to solving the following equation

Simple calculations yield (34) and another solution differing by plus sign before the square root in \(\alpha\). We choose \(\alpha\) with minus sign, because the other solution results in \(q_1\) decreasing in price and negative above \({{\tilde{p}}}\), so we cannot treat it as a good candidate for the optimal strategy.

Next, we return to the initial problem. By that moment, we haven’t considered the constraint \(q_1 \ge 0\). We calculate the price \({\tilde{p}}\) at which \(q_1\) given by (39) is equal to zero.

We replace \(q_1(p)\) by 0 for \(p \le {\tilde{p}}\) and we proceed to prove that the modified strategy is optimal.

The resulting trajectory of price for \(p_0 < {\tilde{p}}\) as long as \(p\) stays in this set, is as follows.

Let \({\tilde{t}}\) be the time needed for the price described by equation (42) to reach the level \({\tilde{p}}\). Such \({\tilde{t}}\) exists, because for \(p<{\tilde{p}}\), the price is increasing, since \({\dot{p}}(t)>0\) and immediate transformation of (42) proves that it is as in (37).

If the modified \(q_1\) given by (35) is the optimal control and the value function is as in (38) for \(p \ge {\tilde{p}}\), then the value function for \(p<{\tilde{p}}\) is \(W({\tilde{p}})\) discounted from the time instant \({\tilde{t}}\) to time instant 0.

Substitution of \({{\tilde{t}}}\) yields \(W^-(p)=\Big ( \frac{2A+c}{3} - p \Big )^{-\frac{r}{s}} \Big ( \frac{2A+c}{3} - {\tilde{p}} \Big )^{\frac{r}{s}} \Big ( \frac{\alpha }{2}{\tilde{p}}^{2} + \beta {\tilde{p}} + \gamma \Big ) .\)

In order to check sufficiency, besides checking the Bellman equation and the terminal condition, we have to prove that \(W(p)= {\left\{ \begin{array}{ll} W^+(p) &{} \text {for } p \ge {\tilde{p}}, \\ W^-(p) &{} \text {otherwise}, \end{array}\right. }\) is continuously differentiable.

Continuity of \(W\) is straightforward as \(W^+({\tilde{p}}) = W^-({\tilde{p}})\). We focus on proving the continuity of the derivative, which is not obvious at \({\tilde{p}}\).

Since the Bellman equation is fulfilled for \({\tilde{p}}\) and \(W\) is continuous, we have

To prove monotonicity and nonnegativity, we first prove that \((W^+)'\) is positive for \(p\ge {{\tilde{p}}}\). Since \(\alpha >0\), \((W^+)'\) is strictly increasing, so, it is enough to check it at \({{\tilde{p}}}\). \(W^-\) is positive since it is a discounted value of \(W^+({{\tilde{p}}})\), which is positive, since it is equal to its derivative at this point multiplied by a positive constant \(\frac{r}{s} \cdot \frac{3}{2A+c-3{{\tilde{p}}}}\). Analogously, \((W^-)({{\tilde{p}}})'\) is positive, since it is equal to \(W^-({{\tilde{p}}})\) multiplied by a positive constant \(\frac{s}{r} \cdot \frac{2A+c-3{{\tilde{p}}}}{3}\).

Consider an arbitrary control \(q_1\) and the corresponding trajectory \(p\). Nonnegativity of \(W\) implies that \(\limsup \limits _{t\rightarrow \infty } W(p(t)){{\,\mathrm{e}\,}}^{-rt}\ge 0\). Noting that if \(p(t)>A\), then \(p'(t)<0\) until \(p(t)=A\) implies that \(\limsup \limits _{t\rightarrow \infty } W(p(t)){{\,\mathrm{e}\,}}^{-rt}\le 0\), which completes the proof that \(W\) is the value function.

Since \({\mathbf {q}}_{1,F}\) is defined as the zero-derivative point of the maximized function in the rhs. of the Bellman equation whenever it is nonnegative, zero otherwise, and the maximized function is strictly concave in \(q_1\), it is the optimal control.

Next, we calculate the trajectory corresponding to the optimal control.

The equation defining it whenever \(p(t)\ge {\tilde{p}}\) is

So, if \(p_{0}>{\tilde{p}}\), then the whole trajectory is given by

while if we consider a trajectory with \(p_0 <{{\tilde{p}}}\) which reaches \({{\tilde{p}}}\) at time \({{\tilde{t}}}\), then for \(t\ge {{\tilde{t}}}\)

For \(p_{0} \le {\tilde{p}}\) and \(t < {{\tilde{t}}}\)

which implies

\(\square\)

5 Open loop and feedback myopic-follower equilibria, limitations of the model and self-verification of the follower’s false beliefs in the game

After solving both problems, we present the solutions graphically.

In Fig. 2, we present productions of both firms, compared to their static Stackelberg equilibrium strategies and the static Cournot-Nash equilibrium production level, while in Fig. 3, the equilibrium price compared to static Stackelberg and Cournot-Nash price.

Production trajectory for the model parameters \(A=10\), \(c=1\), \(r=0.15\), \(s=0.5\) and \(p_0=1.1\). The red solid line corresponds to the leader, while the blue dashed line to the follower. The dashed horizontal lines correspond to static equilibria productions—from the bottom: the Stackelberg follower, a Cournot competitor, the Stackelberg leader. The dashed vertical line corresponds to the moment at which the leader starts production, \({{\bar{t}}}={{\tilde{t}}}\)

Price trajectory for the model parameters \(A=10\), \(c=1\), \(r=0.15\), \(s=0.5\) and \(p_0=1.1\)—the solid line. The horizontal lines correspond to the following levels of price, from the bottom: \({{\bar{p}}}={{\tilde{p}}}\), static Stackelberg equilibrium, static Cournot equilibrium. The dashed vertical line corresponds to the moment at which the leader starts production, \({{\bar{t}}}={{\tilde{t}}}\)

As we can see, the open loop and feedback solutions coincide. It is not only a property for a specific set of data, but a general principle. This is different from the situation observed for the analogous dynamic Cournot-Nash equilibrium, in which feedback strategies at the corresponding trajectory are larger than the open loop strategies, with the opposite inequality for prices, as it has been proven in Wiszniewska-Matyszkiel et al. (2015). This coincidence in our model is a result of myopia of the follower. We can formally write the following theorem.

Theorem 6

The open loop and feedback myopic-follower Stackelberg equilibrium trajectories coincide, and the open loop optimal strategy of the leader coincides with his optimal feedback strategy along this trajectory, and the same applies to the follower’s best response and

Proof

By substitution of the constants from Eqs (34) and (37) to \({\mathbf {p}}_{F}\) from Eq. (36) and meticulous simplifying and then by substitution of those constants and \({\mathbf {p}}_{F}\) to \({\mathbf {q}}_{1,F}\) and simplifying.

The last inequality in (46) simplifies to \(A>c\), the first one has already been proven in Theorem 2. \(\square\)

As we can see from Fig. 2, for small prices, until \({{\bar{t}}}\), the leader does not produce, waiting for the price to grow. At the same time interval, the follower has maximal production. Afterwards, the leader’s production continuously increases, while the follower’s production decreases. They intersect and they converge to their steady states, with the steady state of the leader above his static Stackelberg equilibrium level, and the steady state of the follower below his static Stackelberg equilibrium level. When prices are considered, the steady state of the dynamic equilibrium price is below the static Stackelberg equilibrium price, which can be also confirmed by analytic calculations.

Another interesting thing that can be seen from Fig. 2 is that the model does not behave as everybody can expect for \(p\) close to and below \(c\) (to make it visible, we started from \(p_0\) close to the minimal marginal cost \(c\)). While the leader does not produce, the follower observing the leader’s production, has a large constant level of production.

This leads us to the concept of self-verification. The fact that instead of the total payoff in the dynamic game, the follower maximizes only expected current payoff, may be caused by two different reasons.

-

1.

The less realistic explanation is that the leader has already been at the market, while there are multiple unrelated follower firms—entrants—who do not know the leader’s pricing strategy, i.e., sticky prices with speed of price adjustment \(s\). Each of those follower firms exists only one time instant, at most one at each time instant. After obtaining the profit lower then expected, each follower firm resigns. And the profit is lower then expected, because if the leader offers a lower price, then the entrant has to decrease his price, too.

-

2.

The follower is not conscious that his current choice influences future price. In this case, we have a game with distorted information, in which players have some beliefs on how their current decision influences future aggregates and values of the state variable, non-necessarily consistent with reality. Depending whether the beliefs are deterministic (realizations regarded as possible versus those impossible) or stochastic (a probability distribution on future realizations), there are two corresponding concepts of belief distorted Nash equilibrium, introduced in Wiszniewska-Matyszkiel (2016) and Wiszniewska-Matyszkiel (2017), respectively. A part of those concepts is self-verification of the equilibrium profiles which, briefly speaking, means that the beliefs influence the behaviour of the players such that the beliefs cannot be falsified by subsequent play. Moreover, the correct current value of the state variable and opponent’s behaviour is a part of both equilibrium concepts.

For steady-state initial prices, the follower’s belief of no influence on future prices is self-verifying and the current leader’s behaviour and price is guessed correctly. For lower prices, they do not have this property, which is especially visible for initial prices close to \(c\). This suggests that the analysis of a dynamic optimization model with sticky prices cannot be restricted to the steady-state only and it suggests that further studies are required to derive a model that behaves as expected also for small values of the initial price.

Moreover, we would like to emphasize some limitation of the sticky price models usually not emphasized and therefore, not perceived, since the focus is usually on their nice mathematical behaviour. The economic justification of introducing first sticky prices models was by the fact that prices below the static equilibrium level are often observed at real world markets and it is obtained by gradual increase of prices. Such a situation in a model can happen only if the initial market price does not exceed the steady state price (which in our case is slightly below the static Stackelberg equilibrium).

This assumption is not needed in any of the mathematical results and their proofs, which hold for arbitrary positive initial price.

Nevertheless, in economics, the reverse situation is unrealistic. As we can see from the dynamics of price for the equilibrium strategies in various sticky prices models, above the steady state price, there is a permanent excess supply. Sticky prices approach is related to behaviour of producer facing excess demand but constrained by e.g. menu costs. In reality, permanent excess supply and the resulting need to dispose the excess amount of product would cause qualitative change of behaviour of the producers, e.g. immediate reduction of price in order to sell the excess product.

So, if the initial price is above the steady state, which can happen if e.g. an entrant suddenly appears at a previously monopolistic market, an immediate reduction of price by the ex-monopolist can be expected. So, after the reduction, there will be no excess supply and the new initial price to the sticky prices dynamics will not exceed the steady state price.

6 Dependence on the speed of price adjustment

An interesting question is how the equilibria depend on the speed of price adjustment \(s\). In Fig. 4, we compare production levels for two different \(s\). We can see that increasing \(s\) results in faster switching on production of the leader, and faster growth of production at the beginning, but later convergence to a lower steady state. The opposite inequalities apply to the production of the follower. Analogous comparison of the price for various \(s\) in Fig. 5 reveals that this anomaly of production trajectories is not strong enough to affect anomalies in prices—the price at each time instant is a strictly increasing function of \(s\).

Dependence of productions on \(s\) for the model parameters \(A=10\), \(c=1\), \(r=0.15\) and \(p_0=1.1\). From left: \(s=0.25\), \(s=1\). The red solid line corresponds to the leader, while the blue dashed line to the follower. The dashed horizontal lines correspond to static Stackelberg equilibrium productions—from the bottom: the follower, the leader

7 The asymptotic values of the equilibria

In many previous works, e.g. Fershtman and Kamien (1987), Cellini and Lambertini (2004) and Wiszniewska-Matyszkiel et al. (2015), it has been proven that the feedback Cournot-Nash equilibrium does not converge to the static Cournot-Nash equilibrium when \(s\rightarrow \infty\), which corresponds to immediate price adjustment. So, an interesting question is what happens in our model as \(s\) tends to its limits, especially when \(s\rightarrow \infty\).

First, we recall the form of the steady states for the feedback equilibrium.

Next, their limits as \(s\rightarrow 0\)

Finally, their limits as \(s\rightarrow \infty\).

For \(s<\infty\) \(\mathbf{p }^{*}_{OL}=\mathbf{p }^{*}_{F} < p^{SB}\), \(\mathbf{q }^{*}_{1,OL}=\mathbf{q }^{*}_{1,F}>q_{1}^{SB}\) and \(\mathbf{q }^{*}_{2,OL}=\mathbf{q }^{*}_{2,F}<q_{2}^{SB}\).

As we can see, all the values converge to their static Stackelberg analogues as the speed of adjustment tends to infinity, i.e. the immediate adjustment.

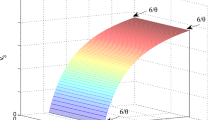

In Fig. 6, we present the steady state of productions of both firms, while in Fig. 7, the steady state of price. As we can see, the steady state production of the leader is decreasing in \(s\) and it converges to the static Stackelberg leader production from above, with the opposite inequalities for the follower, and the steady state price is increasing in \(s\) and it converges to the static Stackelberg price from below.

Asymptotic production levels as a function of the speed of price adjustment \(s\rightarrow \infty\) for the model parameters \(A=10\), \(c=1\), and \(r=0.15\). The red solid line corresponds to the leader, while the blue dashed line to the follower. The dashed horizontal lines correspond to static equilibria productions—from the bottom: the follower, a Cournot competitor, the leader

8 Comparison to the Cournot model

Last, but not least, we want to compare our results with the results of the Cournot oligopoly case. The complete results for the Cournot model with sticky prices has been derived in Wiszniewska-Matyszkiel et al. (2015). We do not cite the exact values of constants, we only present the comparison graphically in Figs. 8 and 9, with a zoomed view of an initial time interval for better readability.

The myopic-follower Stackelberg leader optimal production compared to open loop and feedback equilibrium production of each player in the Cournot doupoly with the parameters \(A=10\), \(c=1\), \(r=0.15\), \(s=0.5\) and \(p_0=1.01\). The red solid line corresponds to the leader, the blue dashed line to the open loop Cournot case, the blue dotted line to the feedback Cournot case. A zoomed view at the right

The myopic-follower Stackelberg equilibrium price compared to the open loop and feedback equilibrium Cournot doupoly price with the parameters \(A=10\), \(c=1\), \(r=0.15\), \(s=0.5\) and \(p_0=1.01\). The red solid line corresponds to the myopic-follower Stackelberg case, the blue dashed line to the open loop Cournot case, the blue dotted line to the feedback Cournot case. A zoomed view at the right

As we can see, at the myopic-follower Stackelberg equilibrium, the leader starts the production later than the Cournot competitors in the feedback case and slightly before the Cournot competitors in the open loop case. Afterwards, his production first grows slower than that in both Cournot cases, then faster and, after intersecting the open loop equilibrium strategy twice and feedback equilibrium strategy once, it converges to a larger steady state. The myopic-follower Stackelberg price first grows slower, but afterwards it intersects the feedback Cournot price trajectory and converges to a steady state between the steady states of the feedback and open loop Cournot equilibrium price.

9 Conclusions

In this paper, we have extensively studied the model of a dynamic Stackelberg type duopoly at a market with price stickiness in which the follower is myopic, first proposed and partially studied by Fujiwara (2006), called myopic-follower Stackelberg model. We have analysed it both with open loop and feedback information structure of the leader. In this model, we have obtained convergence to a stable steady state with the price and follower’s production below while the leader’s production above their static Stackelberg levels. However, an interesting result can be observed for low initial prices, when the leader’s production is below the myopic follower’s production, and, if the initial price is low enough, the leader initially waits in order to increase it, while the follower produces maximally. This waiting time is for a longer time interval than for the feedback Cournot equilibrium. Interesting anomalies can be observed as the speed of adjustment changes, but the limits as it converges to infinity are equal to their static Stackelberg counterparts. Besides, unlike in the Cournot model, open loop and feedback solutions coincide.

The results of this paper concerning the behaviour of the follower for small prices show that the analysis of a dynamic game model with sticky prices cannot be restricted to the steady state only and it suggests that further studies are required to derive a model that behaves as expected also for small values of the initial price.

Therefore, an analogous analysis with a different model of the follower’s behaviour, observing rather the price than the leader’s behaviour is an obvious future continuation of this paper. In such a case, we can introduce more myopic followers, being price takers, which results in ”a cartel and a fringe” models (see e.g. Groot et al. 2003 or Benchekroun and Withagen 2012 for applications of such differential game models).

Notes

In fact, Aseev and Veliov in Aseev and Veliov (2012, 2014, 2015) used the concept of locally weakly overtaking optimal solution, which is one of possible extensions of the concept of optimality when infinite payoffs are not excluded. In the problem of our paper, it is equivalent to local optimality.

References

Anderson E, Jaimovich N, Simester D (2015) Price stickiness: Empirical evidence of the menu cost channel. Rev Econ Stat 97(4): 813–826

Aseev S, Veliov V (2014) Needle variations in infinite-horizon optimal control. Var Optim Control Probl Unbounded Domains 619: 1–17

Aseev SM, Veliov VM (2012) Maximum principle for infinite-horizon optimal control problems with dominating discount. Dyn Contin Discret Impuls Syst 19: 43–63

Aseev SM, Veliov VM (2015) Maximum principle for infinite-horizon optimal control problems under weak regularity assumptions. Proc Steklov Inst Math 291(1): 22–39

Balder E (1983) An existence result for optimal economic growth problems. J Math Anal Appl 95(1): 195–213

Başar T, Olsder GJ (1998) Dynamic noncooperative game theory. SIAM

Benchekroun H (2008) Comparative dynamics in a productive asset oligopoly. J Econ Theory 138(1): 237–261

Benchekroun H, Chaudhuri AR, Tasneem D (2020) On the impact of trade in a common property renewable resource oligopoly. J Environ Econ Manag 101: 102304

Benchekroun H, Martín-Herrán G, Taboubi S (2009) Could myopic pricing be a strategic choice in marketing channels? A game theoretic analysis. J Econ Dyn Control 33(9): 1699–1718

Benchekroun H, Withagen C (2012) On price taking behavior in a nonrenewable resource cartel-fringe game. Games Econ Behav 76(2): 355–374

Benchekroun H, Xue L (2006) Cartel stability in a dynamic oligopoly with sticky prices. Tech Rep. https://core.ac.uk/download/pdf/7130746.pdf

Cellini R, Lambertini L (2004) Dynamic oligopoly with sticky prices: closed-loop, feedback, and open-loop solutions. J Dyn Control Syst 10(3): 303–314

Cellini R, Lambertini L (2007) A differential oligopoly game with differentiated goods and sticky prices. Eur J Op Res 176(2): 1131–1144

Colombo L, Labrecciosa P (2018) Differential games in industrial organization. In: Handbook of Dynamic Game Theory, pp 779–825. Springer

Colombo L, Labrecciosa P (2019) Stackelberg versus Cournot: a differential game approach. J Econ Dyn Control 101: 239–26

Colombo L, Labrecciosa P (2021) A stochastic differential game of duopolistic competition with sticky prices. J Econ Dyn Control 122:104030

Crabbé P, Van Long N (1993) Entry deterrence and overexploitation of the fishery. J Econ Dyn Control 17(4): 679–704

Dockner E (2000) Differential games in economics and management science. Cambridge University Press, Cambridge

Dockner EJ, Gaunersdorfer A (2001) On the profitability of horizontal mergers in industries with dynamic competition. Jpn World Econ 13(3): 195–216

Fershtman C, Kamien MI (1987) Dynamic duopolistic competition with sticky prices. Econometrica 55(5): 1151–1164

Fershtman C, Kamien MI (1990) Turnpike properties in a finite-horizon differential game: dynamic duopoly with sticky prices. Int Econ Rev 31(1):49–60

Fleming WH, Soner HM (2006) Controlled Markov processes and viscosity solutions, vol. 25. Springer, Berlin

Fujiwara K (2006) A Stackelberg game model of dynamic duopolistic competition with sticky prices. Econ Bull 12(12): 1–9

Gorodnichenko Y, Weber M (2016) Are sticky prices costly? Evidence from the stock market. Am Econ Rev 106(1):165–199

Groot F, Withagen C, De Zeeuw A (2003) Strong time-consistency in the cartel-versus-fringe model. J Econ Dyn Control 28(2): 287–306

Hämäläinen RP, Ruusunen J, Kaitala V (1986) Myopic Stackelberg equilibria and social coordination in a share contract fishery. Mar Res Econ 3(3): 209–235

Haurie A, Krawczyk JB, Zaccour G (2012) Games and dynamic games. World Scientific Publishing Co. Pte. Ltd., UK

Hoof S (2021) Dynamic monopolistic competition. J Optim Theory Appl 189(2):560-577

Liu G, Sethi SP, Zhang J (2016) Myopic far-sighted behaviours in a revenue-sharing supply chain with reference quality effects. Int J Prod Res 54(5): 1334–1357

Liu Y, Zhang J, Zhang S, Liu G (2017) Prisoner’s dilemma on behavioral choices in the presence of sticky prices: Farsightedness vs. myopia. Int J Prod Econ 191:128–142

Lu F, Tang W, Liu G, Zhang J (2018) Cooperative advertising: A way escaping from the prisoner’s dilemma in a supply chain with sticky price. Omega 86:87–106

Lünnemann P, Wintr L (2011) Price stickiness in the US and Europe revisited: evidence from internet prices. Oxford Bull Econ Stat 73(5): 593–621

Martín-Herrán G, Rubio SJ (2021) On coincidence of feedback and global Stackelberg equilibria in a class of differential games. Eur J Op Res 293(2): 761–772

Martín-Herrán G, Taboubi S, Zaccour G (2012) Dual role of price and myopia in a marketing channel. Eur J Op Res 219(2): 284–295

Piga CA (2000) Competition in a duopoly with sticky price and advertising. Int J Ind Organ 18(4): 595–614

Raoufinia M, Baradaran V, Shahrjerdi R (2019) A dynamic differential oligopoly game with sticky price and advertising: Open-loop and closed-loop solutions. Kybernetes 48(3): 586–611

Simaan M, Takayama T (1978) Game theory applied to dynamic duopoly problems with production constraints. Automatica 14(2): 161–166

Singh R, Dwivedi AD, Srivastava G, Wiszniewska-Matyszkiel A, Cheng X (2020) A game theoretic analysis of resource mining in blockchain. Clust Comput 23(3): 2035–2046

Singh R, Wiszniewska-Matyszkiel A (2018) Linear quadratic game of exploitation of common renewable resources with inherent constraints. Topol Methods Nonlinear Anal 51: 23–54 https://doi.org/10.12775/TMNA.2017.057

Singh R, Wiszniewska-Matyszkiel A. (2019) Discontinuous Nash equilibria in a two stage linear-quadratic dynamic game with linear constraints. IEEE Trans Autom Control 62: 3074–3079. https://doi.org/10.1109/TAC.2018.2882160

Singh R, Wiszniewska-Matyszkiel A (2020) A class of linear quadratic dynamic optimization problems with state dependent constraints. Math Method Op Res 91: 325–355

Taboubi S, Zaccour G (2002) Impact of retailer’s myopia on channel’s strategies. In Optimal Control and Differential Games. Springer, pp 179–192

Tominac P, Mahalec V (2018) A dynamic game theoretic framework for process plant competitive upgrade and production planning. AIChE J 64(3): 916–925

Tsutsui S, Mino K (1990) Nonlinear strategies in dynamic duopolistic competition with sticky prices. J Econ Theory 52(1): 136–161

Valentini E, Vitale P (2021) A dynamic oligopoly with price stickiness and risk-averse agents. Italian Econ J 1–22

Vardar NB, Zaccour G (2020) Exploitation of a productive asset in the presence of strategic behavior and pollution externalities. Math 8(10): 1682

Wang B, Huang M (2015) Dynamic production output adjustment with sticky prices: A mean field game approach. In Decision and Control (CDC), 2015 IEEE 54th Annual Conference on, IEEE, pp 4438–4443

Wang B, Huang M (2018) Mean field games for production output adjustment with noisy sticky prices. In 2018 Chinese Control And Decision Conference (CCDC). IEEE, pp 3185–3190

Wang B, Huang M (2019) Mean field production output control with sticky prices: Nash and social solutions. Automatica 100: 90–98 https://doi.org/10.1016/j.automatica.2018.11.006

Wang J, Cheng X, Wang X, Yang H, Zhang S (2019) Myopic versus farsighted behaviors in a low-carbon supply chain with reference emission effects. Complexity

Wiszniewska-Matyszkiel A (2016) Belief distorted Nash equilibria: introduction of a new kind of equilibrium in dynamic games with distorted information. Annals Op Res 243(1–2): 147–177

Wiszniewska-Matyszkiel A (2017) Redefinition of belief distorted Nash equilibria for the environment of dynamic games with probabilistic beliefs. J Optim Theory Appl 172(3): 984–1007

Wiszniewska-Matyszkiel A, Bodnar M, Mirota F (2015) Dynamic oligopoly with sticky prices: Off-steady-state analysis. Dyn Games Appl 5(4): 568–598

Zabczyk J (2009) Mathematical control theory: an introduction. Springer, Berlin

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The research was financed by grant 2016/21/B/HS4/00695 of National Science Centre, Poland. All data generated or analysed during this study are included in this published article.

Appendix A: Open loop – existence of optimal solution and appropriate necessary conditions for infinite horizon optimal control problem

Appendix A: Open loop – existence of optimal solution and appropriate necessary conditions for infinite horizon optimal control problem

In this section, we formulate the necessary condition, analogous to the core relations of the Pontryagin maximum principle for finite time horizon, in the case of the infinite time horizon.

We consider an optimal control problem with the state space being an open convex set \({\mathbb {X}} \subseteq {\mathbb {R}}^n\), the set of control parameters \({\mathbb {U}} \subseteq {\mathbb {R}}^m\) and the open loop information structure, so, consequently, the set of open loop control functions \({\mathscr {U}}^{OL} = \{u : {\mathbb {R}}_+ \rightarrow {\mathbb {U}} \text { measurable}\}\). As the objective we consider maximisation of

where the trajectory \(x\) is the trajectory corresponding to \(u\) and it is defined by

the discount rate is \(r > 0\), and the integration denotes integration with respect to the Lebesgue measure.

Obviously, the set \({\mathbb {X}}\) is assumed to be invariant set of equation (48) for every control function \(u\).

We assume a priori that the functions \(g\) and \(f\) are such that the objective function is finite for every \(u \in {\mathscr {U}}^{OL}\) and the corresponding trajectory \(x\).

An absolutely continuous function \({x :\mathbb {R}_+ \rightarrow {\mathbb {X}}}\) being a solution to the system (48) with \(u \in {\mathscr {U}}^{OL}\) is called the (admissible) trajectory corresponding to \(u\).

We denote this dynamic optimization problem by (P).

In all further results we assume that both sets \({\mathbb {U}}\) and \({\mathbb {X}}\) are nonempty, \({\mathbb {U}}\) is compact, and the functions \({f :\mathbb {R}_+ \times {\mathbb {X}} \times {\mathbb {U}} \rightarrow \mathbb {R}^{n}}\), and \({g :\mathbb {R}_+ \times {\mathbb {X}} \times {\mathbb {U}} \rightarrow \mathbb {R}}\) are measurable.

Any pair \((u, x)\), where \(u\) is a control and \(x\) is an admissible trajectory corresponding to it, is called an admissible solution.

A pair \((u^{*}, x^{*})\) is called an optimal solution of the problem (P) if it is an admissible solution, and the value of \(J_{0, x_0}(u^*)\) is maximal, that is \(J_{0, x_0}(u) \le J_{0, x_0}(u^*)\) for every admissible solution \((u, x)\).

1.1 A.1 Aseev and Veliov extension of the Pontryagin maximum principle

Here we cite the maximum Pontryagin principle for the problem (P), which is infinite horizon, non-autonomous, discounted dynamic optimization problem. As it has been mentioned before (see Section 3), the maximum principle, especially the terminal condition \(\lim _{t \rightarrow \infty } \lambda (t) {{\,\mathrm{e}\,}}^{-r t} = 0\), is not necessary in such a problem.

Results that can be applicable in this paper has been proved by Aseev and Veliov (2012). First, we formulate three suitable assumptions. Consider the dynamic optimization problem (P) and let \((u^*, x^*)\) be an optimal solution to it.

-

(A1)

For almost all \(t \ge 0\) and every \((x, u)\in {\mathbb {X}} \times {\mathbb {U}}\), partial derivatives \(f_x(t, x, u)\) and \(g_x(t, x, u)\) exist. The functions \(f\) and \(g\) and their partial derivatives with respect to \(x\) are Lebesgue-Borel measurable in \((t, u)\) for every \(x\), continuous in \(x\) for almost every \(t \ge 0\) and every fixed \(u \in {\mathbb {U}}\), uniformly bounded as functions of \(t\) over every bounded set of \((x, u)\).

-

(A2)

There exist a continuous function \(\gamma :[0, \infty ) \rightarrow [0, \infty )\) and a locally integrable function \({\phi :[0, \infty ) \rightarrow {\mathbb {R}}}\) such that \(\{x : \Vert x - x^*(t) \Vert \le \gamma (t)\} \subseteq {\mathbb {X}}\) for all \(t \ge 0\), and for almost all \(t \ge 0\) we have

$$\begin{aligned} \max _{x : \Vert x - x^*(t) \Vert \le \gamma (t)} \left\{ \Vert f_x(t, x, u^{*}(t)) \Vert + \Vert g_x(t, x, u^{*}(t)) \Vert {{\,\mathrm{e}\,}}^{-r t} \right\} \le \phi (t). \end{aligned}$$ -

(A3)

There exist a number \(\beta > 0\) and a nonnegative integrable function \(\mu :[0, \infty ) \rightarrow {\mathbb {R}}\) such that for every \(\zeta \in {\mathbb {X}}\) with \(\Vert \zeta - x_0 \Vert < \beta\), Eq. (48) with \(u = u^{*}\) and the initial condition replaced by \(x(0) = \zeta\), has a solution on \([0, \infty )\), denoted by \(x^{\zeta }\), and it fulfils for a.e. \(t\),

$$\begin{aligned} \max _{x \in {{\,\mathrm{Conv}\,}}(x^{\zeta }(t), x^{*}(t))} \left| {{\,\mathrm{e}\,}}^{-r t} \left\langle g_x(t, x, u), x^{\zeta }(t) - x^{*}(t) \right\rangle \right| \le \Vert \zeta - x_0 \Vert \mu (t). \end{aligned}$$

The formulation of necessary conditions uses a Hamiltonian function \(H\) and an adjoint variable \(\psi\).

Definition 7

The Hamiltonian is a function \(H :{\mathbb {R}}_+ \times {\mathbb {X}} \times {\mathbb {U}} \times {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) such that

where \(\langle \cdot , \cdot \rangle\) denotes the inner product in \({\mathbb {R}}^n\).

Definition 8

For an admissible solution \((u^*, x^*)\) an absolutely continuous function \(\psi :{\mathbb {R}}_+\rightarrow {\mathbb {R}}^n\) is called an adjoint (or costate) variable corresponding to \((x^{*}, u^{*})\), if it is a solution to the following system

Definition 9

We say that an admissible pair \((x^{*}, u^{*})\) together with an adjoint variable \(\psi ^*\) corresponding to \((x^{*}, u^{*})\), satisfies the core relations of the normal-form Pontryagin maximum principle for the problem (P), if the following maximum condition holds on \([0, \infty )\)

Let’s turn to the main part of this section – the definition of the Pontryagin maximum principle for the infinite time horizon:

Theorem 10

(Aseev-Veliov maximum principle) Suppose that the conditions (A1)–(A3) are satisfied and \((x^{*},u^{*})\) is an optimal solutionFootnote 1for problem (P). Then there exists an adjoint variable \(\psi ^*\) corresponding to \((x^{*}, u^{*})\) such that

-

(i)

\((x^{*}, u^{*})\) , together with \(\psi ^*\) satisfy the core relations of the normal-form Pontryagin maximum principle,

-

(ii)

for every \(t\ge 0\) the integral

$$\begin{aligned} I^{*}(t) = \int \limits _{t}^{\infty } {{\,\mathrm{e}\,}}^{-r w} \left[ Z_{(x^{*}, u^{*})}(w) \right] ^{-1} \frac{\partial g(w, x^{*}(w), u^{*}(w))}{\partial x} dw, \end{aligned}$$where \(Z_{(x^{*}, u^{*})}(t)\) is the normalised fundamental matrix of the following linear system

$$\begin{aligned} {\dot{z}}(t) = - \left[ \frac{\partial f(t, x^{*}(t), u^{*}(t))}{\partial x}\right] ^T z(t), \end{aligned}$$converges absolutely and the ”transversality condition” is

-

(iii)

\(\psi ^*(t) = Z_{(x^{*}, u^{*})}(t) I^{*}(t)\).

For problems with discounting considering the formulation as in Theorem 10 may result in non-autonomous problems to solve, even if the functions \(f\) and \(g\) are independent of time. We can avoid this problem and simplify calculations by considering current value Hamiltonian and a rescaled costate variable. Consider

for \(\lambda ^*(t) = {{\,\mathrm{e}\,}}^{r t} \psi ^*(t)\).

Theorem 11

Suppose that the conditions (A1)–(A3) are satisfied and \((x^{*},u^{*})\) is an optimal solution. The two core-relation equations in the new notation reduce to \(u^*(t) \in \mathop {{\mathrm{Argmax}}}\limits _{u \in {\mathbb {U}}} H^{\text {CV}}(t, x^{*}(t), u, \lambda ^*(t)) \text { for a.e. } t\) and \(\dot{\lambda ^*}(t) = r \lambda ^*(t) - \left[ \frac{\partial f(t, x^{*}(t), u^{*}(t))}{\partial x} \right] ^{T} \lambda ^*(t) - {{\,\mathrm{e}\,}}^{-r t} \frac{\partial g(t, x^{*}(t), u^{*}(t))}{\partial x}, \quad \text { for a.e. } \;\; t \ge 0,\) while the ”transversality condition” to \(\lambda ^*(t) = {{\,\mathrm{e}\,}}^{rt}Z_{(x^{*}, u^{*})}(t) I^{*}(t)\).

1.2 A.2 Existence of the optimal solution

We use the existence theorem of Balder (1983, Theorem 3.6), which we cite in a simplified form, previously used in Wiszniewska-Matyszkiel et al. (2015).

-

(B1)

For all \(t \ge 0\), \(f(t, \cdot , \cdot )\) is continuous, \(g(t, \cdot , \cdot )\) is upper semicontinuous with respect to \((x, u)\), and the sets \({\mathbb {X}}\) and \({\mathbb {U}}\) are closed.

-

(B2)

For all \(x \in {\mathbb {X}}\), and \(t \in {\mathbb {R}}_+\), the set

$$\begin{aligned} Q(t, x) = \{(z_{0}, z) \in {\mathbb {R}}^{n+1} : z_{0} \le g(t, x, u) {{\,\mathrm{e}\,}}^{-r t}, \quad z = f(t, x, u), \quad u \in {\mathbb {U}}\} \end{aligned}$$-

(a)

is convex, and

-

(b)

\(\displaystyle Q(t, x) = \bigcap _{\delta > 0} \mathrm {cl} \biggl ( \bigcup _{|x-y| \le \delta } Q(t,y) \biggr )\), where \(\mathrm {cl}(Q)\) is a closure of the set \(Q\).

-

(a)

-

(B3)

There exists a constant \(\alpha \in {\mathbb {R}}\) such that the set of admissible pairs

$$\begin{aligned} \varOmega _{\alpha } = \left\{ (x, u) \text { admissible pairs } : J_{0, x_0}(u) \ge \alpha \right\} \end{aligned}$$is nonempty, \(\displaystyle \{ f(\cdot , x(\cdot )), u(\cdot )|_{[0,T]} : (x, u) \in \varOmega _{\alpha } \}\) is uniformly integrable for each \(T \ge 0\), and

$$\begin{aligned} G = \{ g^+(\cdot , x(\cdot ), u(\cdot )) {{\,\mathrm{e}\,}}^{-r \cdot } : (x, u) \in \varOmega _{\alpha } \} \end{aligned}$$(where \(g^+\) denotes \(\max \{0, g\}\)), is strongly uniformly integrable, that is for every \(\epsilon > 0\) there exists \(h \in L_1^+({\mathbb {R}}_+)\) such that

$$\begin{aligned} \sup _{\zeta \in G} \int _{\{t : |\zeta (t)| \ge h\}} |\zeta (t)| dt \le \epsilon . \end{aligned}$$

Theorem 12

(Balder) If conditions (B1)–(B3) are fulfilled, then there exists an optimal pair \((x^{*}, u^{*})\) for the problem (P).

1.3 A.3 Checking the assumptions of Theorem 10

First, we are going to restrict the state and control sets in a way that does not change the optimal control for realistic initial conditions. If the initial price \(p_0\) is greater than \(\frac{2A + c}{3}\), then every admissible trajectory is contained in \((-\infty , p_0]\). Note that whenever the initial price \(p_0 < A\), what we assume in our paper, then we can restrict the set of state variables (prices) to \((-\infty , A]\). Next, suppose that at some time \(t\) the price is below \(c\). Denote by \({\hat{t}}\) the time instant when the price reaches \(c\) and consider the time interval \([{\hat{t}}, t]\) such that the price does not exceed \(c\). Consequently, the leader’s instantaneous payoff is nonpositive. Thus, his optimal strategy is \(q_1 = 0\) a.e. on \([{\hat{t}}, t]\). It follows that \({\dot{p}}(t) > 0\) on the considered time interval and so values below \(c\) cannot be reached if the initial price is at least \(c\). This implies that if the initial price is in \([c,A]\), then the whole optimal trajectory of price remains in \([c, A]\). So, adding the constraint \(p\in [c,A]\) on possible prices does not change the optimal control if the initial price is in this interval.

Next, let us note that the set of control variables can also be constrained. Considering the leader’s instantaneous payoff \(\pi _1 = (p - c) q_1 - \frac{1}{2} q_1^2\), with \(p\in [c,A]\), we can see that if the leader’s production exceeds some sufficiently large \(q_{\max }\), then his current payoff becomes negative. Therefore, the optimal control of our problem is equal to the optimal control for the problem with an additional constraint \(q_1 \in [0, q_{\max }]\).

Suppose that \((p^*, q_1^*)\) is an optimal solution to the dynamic optimization problem (P) with \(g(t, p, q_1) = (p - c) q_1 - \frac{1}{2} q_1^2\) and \(f(t, p, q_1) = \frac{1}{3}s(2A + c - 3p - 2q_1)\).

-

(A1)

The functions \(f\), \(g\) and their partial derivatives \(f_p\), \(g_p\) are Lebesgue-Borel measurable in \((t, p, q_1)\) for every \(p\), continuous in \(p\) and uniformly bounded as functions of \(t\) hence they are independent of \(t\).

-

(A2)

For \(\gamma (t) = \min \{ q^*(t) - c, \; A - q^*(t) \}\), \(\{p : \Vert p - p^*(t) \Vert \le \gamma (t)\} \subseteq {\mathbb {X}}\) for all \(t \ge 0\) and we have

$$\begin{aligned} \max _{p : \Vert p - p^*(t) \Vert \le \gamma (t)} \left\{ s + q_1^*(t) {{\,\mathrm{e}\,}}^{-r t} \right\} = s + q_1^*(t) {{\,\mathrm{e}\,}}^{-r t} \le s + q_{\max } {{\,\mathrm{e}\,}}^{-r t}. \end{aligned}$$ -

(A3)

There exist a number \(\beta > 0\) and a nonnegative integrable function \(\mu :[0, \infty ) \rightarrow {\mathbb {R}}\) such that for every \(\zeta \in {\mathbb {X}}\) with \(\Vert \zeta - x_0 \Vert < \beta\), Eq. (48) with \(u = u^*\) and initial condition replaced by \(x(0) = \zeta\), has a solution on \([0, \infty )\), denoted by \(x^{\zeta }\), and for a.e. \(t\), We have

$$\begin{aligned} \begin{aligned}&\max _{p \in {{\,\mathrm{Conv}\,}}(p^{\zeta }(t), p^*(t))} \left| {{\,\mathrm{e}\,}}^{-r t} q_1 (p^{\zeta }(t) - p^*(t)) \right| \\&\quad = {{\,\mathrm{e}\,}}^{-r t} q_1 \left| p^{\zeta }(t) - p^*(t) \right| \\&\quad = {{\,\mathrm{e}\,}}^{-r t} q_1 \left| \zeta {{\,\mathrm{e}\,}}^{-st} + {{\,\mathrm{e}\,}}^{-st} \int _{0}^{t} {{\,\mathrm{e}\,}}^{sw}q_1^*(w)dw - \bigg ( p_{0} {{\,\mathrm{e}\,}}^{-st} + {{\,\mathrm{e}\,}}^{-st} \int _{0}^{t} {{\,\mathrm{e}\,}}^{sw}q_1^*(w)dw \bigg ) \right| \\&\quad \le \Vert \zeta - x_0 \Vert q_{\max } {{\,\mathrm{e}\,}}^{-(r + s) t}. \end{aligned} \end{aligned}$$Thus, it is sufficient to take \(\mu (t) = q_{\max } {{\,\mathrm{e}\,}}^{-(r + s) t}\) and \(\beta > 0\) arbitrary.

1.4 A.4 Checking the assumptions of Theorem 12

-

(B1)

For all \(t \ge 0\), the functions \(f(t, \cdot , \cdot )\) and \(g(t, \cdot , \cdot )\) are continuous in \((p, q_1)\) and sets \({\mathbb {X}} = [c, A]\) and \({\mathbb {U}} = [0, q_{\max }]\) are closed.

-

(B2)

The set

$$\begin{aligned} Q(t, p) = \left\{ (z_{0}, z) \in {\mathbb {R}}^2\ :\ z_{0} \le q_1 \bigg ( p - c - \frac{1}{2} q_1 \bigg ) {{\,\mathrm{e}\,}}^{-rt} ,\ z = \frac{1}{3} s (2A + c - 3p - 2q_1) \bigg ),\ q_1 \in [0, q_{\max }] \right\} \end{aligned}$$-

(a)

is convex,

-

(b)

\(Q(t, p) = \bigcap \limits _{\delta > 0} {\text {cl}} \Bigg ( \bigcup \limits _{|p-y| \le 0} Q(t, y) \Bigg )\).

-

(a)

-

(B3)

For \(\alpha = 0\), the set \(\varOmega _0 = \{ (p, q_1) \text { corresponding to } J_{0, p_0}(q_1) \ge 0 \}\) is nonempty as it contains \(q_1 \equiv 0\). Since for every admissible trajectory \({\dot{p}}(t) \in \big [ \frac{1}{3} s (2A + c - 3p - 2q_{\max }), \frac{1}{3} s (2A + c - 3p) \big ]\), there exist some constants \(d_1\), \(d_2\) for which \(p(t) \in \big [ p_0 + d_1 {{\,\mathrm{e}\,}}^{-st}, p_0 + d_2 {{\,\mathrm{e}\,}}^{-st} \big ]\). Hence, \(q_1 \in [0, q_{\max }]\) and both \(p\) and \(q_1\) are measurable, it follows that \(f(\cdot , p(\cdot ), q_1(\cdot ))\) for all \((p, q_1)\) are uniformly integrable on every finite interval. The constraint \(g^{+}(t, p(t), q_1(t)) {{\,\mathrm{e}\,}}^{-rt} \in [0, p q_{\max } {{\,\mathrm{e}\,}}^{-rt}]\) together with measurability of the functions \(p\), \(q_1\) and \({{\,\mathrm{e}\,}}^{-rt}\) yield strong uniform integrability of \({G = \{ g^{+}(\cdot , p(\cdot ), q_1(\cdot )) {{\,\mathrm{e}\,}}^{-r \cdot } : (p, q_1) \in \varOmega _{0} \}}\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kańska, K., Wiszniewska-Matyszkiel, A. Dynamic Stackelberg duopoly with sticky prices and a myopic follower. Oper Res Int J 22, 4221–4252 (2022). https://doi.org/10.1007/s12351-021-00665-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12351-021-00665-y

Keywords

- Differential games

- Dynamic market model

- Myopic-follower Stackelberg equilibrium

- Cournot-Nash equilibrium

- Sticky prices