Abstract

Health care systems worldwide have faced a problem of resources scarcity that, in turn, should be allocated to the health care providers according to the corresponding population needs. Such an allocation should be as much as effective and efficient as possible to guarantee the sustainability of those systems. One alternative to reach that goal is through (prospective) payments due to the providers for their clinical procedures. The way that such payments are computed is frequently unknown and arguably far from being optimal. For instance, in Portugal, public hospitals are clustered based on criteria related to size, consumed resources, and volume of medical acts, and payments associated with the inpatient services are equal to the smallest unitary cost within each cluster. First, there is no reason to impose a single benchmark for each inefficient hospital. Second, this approach disregards dimensions like quality (and access) and the environment, which are paramount for fair comparisons and benchmarking exercises. This paper proposes an innovative tool to achieve best-practices tariff. This tool merges both quality and financial sustainability concepts, attributing a hospital-specific tariff that can be different from hospital to hospital. That payment results from the combination of costs related to a set of potential benchmarks, keeping quality as high as possible and higher than a user-predefined threshold, and being able to generate considerable cost savings. To obtain those coefficients we propose and detail a log-linear piecewise frontier function as well as a dual–primal approach for unique solutions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In several countries worldwide, health care providers are paid by the services that they provide to the citizens. These providers include physicians, nurses, and mostly primary care centres and hospitals. Usually these payments depend on dimensions included in contracts celebrated between the payer (e.g. the State) and the payee (the provider). Several schemes are available: (a) block budget, featured by a periodic prospective payment associated with the activity, (b) capitation, based on the number of enrolled patients, multiplied by a unitary price, (c) case-based payments, such that health care providers are paid a prospective/retrospective lump sum per episode of care, and (d) fee for service, characterized by the payment of a price per medical act in a retrospective way. Advantages and disadvantages of these schemes can be found in the relevant literature (e.g. Marshall et al. 2014; Friesner and Rosenman 2009; Street et al. 2011).

It is important to stress that resources, namely the financial ones, are scarcer each passing day, motivating an optimization exercise to better allocate them. To the best of our knowledge, this is the first attempt to optimize payments to the health care providers. A common feature of all payment schemes is the paid price or tariff, which is usually set as the average cost per patient/medical act/episode of care. We remark that health care is a public interest service and, as such, resources allocation and payments should account not only for the efficiency of providers but also for their quality and access (Ferreira and Marques 2018b). Despite some contracts contain a number of quality and access parameters to be fulfilled, in most of the cases penalties are not sufficient to induce good practices. Instead of fixing these parameters (quality, access) and hoping that providers do not adopt misconducts, we should find out a reference set of providers for each health care provider. Such a reference set should contain only those entities whose quality and access observations are above a pre-defined threshold that indicates a minimum acceptable level of social performance. This intends to avoid that poor quality providers can be potential benchmarks. Additionally, optimal allocation of resources requires fair comparisons in terms of internal and external operational environment (Karagiannis and Velentzas 2012; Cordero et al. 2018). Efficiency of health care providers is dependent on epidemiology and demographics as well as on their specialization degree (Ferreira et al. 2017), hence disregarding these dimensions from the payment optimization is likely to produce biased results. Hence, we restrict even more the aforementioned reference set using operational environment variables such that the reference set contains the entities with good quality and access levels and, simultaneously, operate under similar conditions as the provider whose payment we want to optimize. The construction of this reference set constitutes a bridge between operational research and health economics/management, which appears to be innovative in the field.

Once the reference set has been constructed, we should use a benchmarking tool to derive an efficiency frontier, where benchmarks are placed. It is usual to construct a common frontier by using the entire set of health care providers. But since we want neither unfair comparisons nor potential benchmarks with poor quality, we use the reference set to construct such a frontier. Because each provider has its own comparability set, it is difficult to parametrically define a frontier shape equal for all providers and expect good outcomes, including fitting parameters. Therefore, the frontier should be empirically constructed, i.e. based only on inputs, outputs, and the reference set that is fixed as the overall comparability set for each provider. Data Envelopment Analysis (DEA) (Charnes et al. 1979; Banker et al. 1984) is probably the most common method to reach this goal. Because of the frontier convexity, DEA-based efficiency levels can be sometimes underestimated, meaning that inputs (outputs) should be decreased (increased) beyond what they would if there was not that bias. Indeed, nonconvexity is usually a more natural assumption for the frontier. The extreme case corresponds to (FDH) (Deprins et al. 1984; Daraio and Simar 2007), although it assumes that each provider can have one and only one benchmark. Nevertheless, we believe that such an assumption is too restrictive but still we should keep nonconvexity. In view of that, we extend the work of Banker and Maindiratta (1986) and construct a log-linear piecewise frontier with a directional nature. Properties of this model are studied, including the existence of multiple solutions. By consequence, we propose an extension of that model that results from the work of Sueyoshi and Sekitani (2009) and mitigates the aforementioned problem. Moreover, in some circumstances, the log-linear program may become infeasible; thus, we propose a slightly change on the model to cope with this problem. Based on the optimized parameters resulting from the log-linear programming tool, we can derive optimal payments as well as potential cost savings related to the achieved tariffs. These are innovations in operational research.

The structure of the manuscript is as follows. Section 2 presents some useful concepts and definitions. Section 3 details and explores the new best practice tariff-based tool (the core of this study). Section 4 related efficiency, optimal payments, and cost savings for the commissioner. Section 5 applies the new tool to the Portuguese public hospitals. Section 6 provides some concluding remarks and explains the economic impact of this tool to the Portuguese National Health Service.

2 Useful concepts and definitions

2.1 Overview

Defining optimal payments due to the health care providers depends on a number of distinct features.Footnote 1 The first one is the level of care, service, or Diagnostic Related Groups (DRG) under analysis. Each country or health care service pays for clinical acts on different levels, e.g. directly to the physician or to the nurse, per service (inpatients, outpatients, surgeries, \(\ldots\)), per speciality, per diagnosis group, and per severity level of each diagnosis group. The concept of DRG, for instance, has long been accepted by health care management researchers as a way of accounting for the patient-mix, which is to say a measure of heterogeneity among the patients admitted to the hospital services. Researchers have analysed the efficiency and productivity of hospitals based on such a concept. For instance, recently Johannessen et al. (2017) considered some DRG scores resulting from hospitalization, day-care, and outpatient consultations, so as to investigate the productivity improvement following a Norwegian hospital reform. Some references cited therein also considered DRG to homogenize the hospital activity.

The second feature is the set of variables used to establish such a payment (or tariff) and to avoid that it results from unfair comparisons and/or from benchmarks that disregarded important dimensions for citizens, such as quality and access. Hence, we need inputs (traditionally defined as the operational expenses required to treat patients), outputs (the quantity of treated patients), operational environment (which can be internal and/or external to the hospital), and quality (which also includes access). It is important to point out that, in our framework, quality is assumed to positively contribute to the hospital overall performance. In other words, from two quality observations, the largest one presents a higher utility to the hospital. However, in some cases, quality is measured through undesirable dimensions (e.g. avoidable mortality in low severity levels), demanding for an appropriate rescaling. It is not the goal of our paper, although an example is given in the next section.

Although DEA and models alike have been extensively utilized with DRG altogether, to the best of our knowledge, no other study has previously employed them to optimize payments in the health sector, particularly in DRG-based financing systems. This study can, then, be seen as a first step in order to optimize those payments, making them fairer and more sustainable, with adjustments for quality and operational environment.

The idea underlying the use of quality and operational environment-based dimensions is to construct comparability sets for each health care provider. Such sets should contain only those observations that are close to the provider whose tariff (payment) we want to optimize. Closeness (or proximity) is fixed by the so-called bandwidth. At this point, the comparability set associated with quality dimensions are formulated in a distinct way because, differently from size and environment, the higher the quality of potential benchmarks (best practices), the better. In fact, given a certain health care provider, if there is, at least, another entity delivering better health care with fewer resources per patient, then it should be a potential benchmark for the former one. Health care is a public interest service with several stakeholders, including citizens, staff, and the Government (either central or not). They usually have a minimum level of quality that is acceptable to a provider be considered a good performer. Because in our framework poor performers cannot be benchmarks, we impose a threshold that is defined as that minimum acceptable level per quality dimension.

2.2 Notation

In this paper, we have adopted the following notation:

-

\(\varOmega =\{1,\ldots ,j,\ldots ,J\}\) is the list of indexes denoting each hospital from the sample, with \(j,J\in {\mathbb {N}}\);

-

\(\varPsi =\{1,\ldots ,\ell ,\ldots ,L\}\) is the list of indexes denoting each service or DRG under analysis, for which the optimal tariff is being assessed, with \(\ell ,L\in {\mathbb {N}}\);

-

\(\varGamma ^{(\ell )} = \{1,\ldots ,r^{(\ell )},\ldots ,R^{(\ell )} \},~\ell \in \varPsi ,\) is the list of indexes denoting each quality dimension associated with the \(\ell\)th service/DRG, with \(r^{(\ell )},R^{(\ell )}\in {\mathbb {N}}\);

-

\(\varPhi ^{(\ell )} = \{1,\ldots ,c^{(\ell )},\ldots ,C^{(\ell )} \},~\ell \in \varPsi ,\) is the list of indexes of environment-related dimensions, with \(c^{(\ell )},C^{(\ell )}\in {\mathbb {N}}\);

-

\(x^{j(\ell )}\) denotes the total operational expenses related to the hospital \(j\in \varOmega\) and the service/DRG \(\ell \in \varPsi\), being \(x^{j(\ell )}>0\);

-

\(y^{j(\ell )}\) denotes the total number of in/outpatients associated with the hospital \(j\in \varOmega\) and the service/DRG \(\ell \in \varPsi\), being \(y^{j(\ell )}>0\);

-

\(q_{r^{(\ell )}}^{j(\ell )}\) is the \(r^{(\ell )}\)th quality observation related to the hospital \(j\in \varOmega\) and the service/DRG \(\ell \in \varPsi\);

-

\(z_{c^{(\ell )}}^{j(\ell )},\ell \in \varPsi ~,\) is the observation associated with the \(c^{(\ell )}\)th environment dimension, the hospital \(j\in \varOmega\), and the service/DRG \(\ell \in \varPsi\);

-

\(\mathbf {X}^{(\ell )} = (x^{1(\ell )},\ldots ,x^{j(\ell )},\ldots ,x^{J(\ell )})^\top ,~\ell \in \varPsi ,\) is the column vector of total operational expenses for the J hospitals in dataset \(\varOmega\);

-

\(\mathbf {Y}^{(\ell )} = (y^{1(\ell )},\ldots ,y^{j(\ell )},\ldots ,y^{J(\ell )})^\top ,~\ell \in \varPsi ,\) is the column vector of total in/outpatients handled by the J hospitals in dataset \(\varOmega\);

-

\(\mathbf {Q}^{(\ell )}\) is the matrix of J observations related to the \(R^{(\ell )}\) quality dimensions, where

$$\begin{aligned} \mathbf {Q}^{(\ell )}=\begin{bmatrix} q_{1}^{1(\ell )}&\cdots&q_{1}^{j(\ell )}&\cdots&q_{1}^{J(\ell )} \\ \vdots&\ddots&\vdots&\ddots&\vdots \\ q_{r^{(\ell )}}^{1(\ell )}&\cdots&q_{r^{(\ell )}}^{j(\ell )}&\cdots&q_{r^{(\ell )}}^{J(\ell )} \\ \vdots&\ddots&\vdots&\ddots&\vdots \\ q_{R^{(\ell )}}^{1(\ell )}&\cdots&q_{R^{(\ell )}}^{j(\ell )}&\cdots&q_{R^{(\ell )}}^{J(\ell )} \\ \end{bmatrix},~\ell \in \varPsi ; \end{aligned}$$ -

\(\mathbf {Z}^{(\ell )}\) is the matrix of J observations associated with the \(C^{(\ell )}\) environment dimensions, where

$$\begin{aligned} \mathbf {Z}^{(\ell )}=\begin{bmatrix} z_{1}^{1(\ell )}&\cdots&z_{1}^{j(\ell )}&\cdots&z_{1}^{J(\ell )} \\ \vdots&\ddots&\vdots&\ddots&\vdots \\ z_{c^{(\ell )}}^{1(\ell )}&\cdots&z_{c^{(\ell )}}^{j(\ell )}&\cdots&z_{c^{(\ell )}}^{J(\ell )} \\ \vdots&\ddots&\vdots&\ddots&\vdots \\ z_{C^{(\ell )}}^{1(\ell )}&\cdots&z_{C^{(\ell )}}^{j(\ell )}&\cdots&z_{C^{(\ell )}}^{J(\ell )} \\ \end{bmatrix},~\ell \in \varPsi ; \end{aligned}$$ -

\(b_{y}^{j(\ell )}\) is a strictly positive bandwidth related to the jth hospital, the \(\ell\)th service/DRG, and the total number of in/outpatients treated in that hospital, \(y^{j(\ell )}\);

-

\(b_{q_r}^{j(\ell )}\) is a strictly positive bandwidth associated with the jth hospital, the \(\ell\)th service/DRG, and the \(r^{(\ell )}\)th quality dimension;

-

\(b_{z_c}^{j(\ell )}\) is a strictly positive bandwidth related to the jth hospital, the \(\ell\)th service/DRG, and the \(c^{(\ell )}\)th environment dimension;

-

\(t_{q_r}^{(\ell )}\) is a strictly positive user-defined threshold associated with the \(r^{(\ell )}\)th quality dimension and the \(\ell\)th service/DRG—thresholds do not depend on \(j\in \varOmega\).

2.3 Technology

A production technology that transforms the total operational expenses, \(\mathbf {X}^{(\ell )}\), into in/outpatients, \(\mathbf {Y}^{(\ell )}\), can be featured by the technology set \({\mathcal {T}}^{(\ell )}\subset {\mathbb {R}}_+\times {\mathbb {R}}_+\):

The technology set \({\mathcal {T}}^{(\ell )}\) follows some axioms.

Axiom 1

(Closeness) \({\mathcal {T}}^{(\ell )}\) is a closed set. Accordingly, the input requirement set, \({\mathcal {C}}(\mathbf {y}^{(\ell )})\), and the output correspondence set, \({\mathcal {P}}(\mathbf {x}^{(\ell )})\), are also closed, being \({\mathcal {C}}(\mathbf {y}^{(\ell )})=\{\mathbf {x}^{(\ell )}\in {\mathbb {R}}_+^J~|~ (\mathbf {x}^{(\ell )},\mathbf {y}^{(\ell )})\in {\mathcal {T}}^{(\ell )} \}\) and \({\mathcal {P}}(\mathbf {x}^{(\ell )}) = \{\mathbf {y}^{(\ell )}\in {\mathbb {R}}_+^J~|~ (\mathbf {x}^{(\ell )},\mathbf {y}^{(\ell )})\in {\mathcal {T}}^{(\ell )} \}\).

Axiom 2

(No free lunch) Should \(x^{j(\ell )}=0\) and \(y^{j(\ell )}>0\) for \(j\in \varOmega\), and \((x^{j(\ell )},y^{j(\ell )})\notin {\mathcal {T}}^{(\ell )}\).

Axiom 3

(Free disposability) If \((x^{j(\ell )},y^{j(\ell )})\in {\mathcal {T}}^{(\ell )}\), \({\tilde{x}}^{j(\ell )} \geqslant x^{j(\ell )}\), and \({\tilde{y}}^{j(\ell )} \leqslant y^{j(\ell )}\), then \(({\tilde{x}}^{j(\ell )},y^{j(\ell )})\in {\mathcal {T}}^{(\ell )}\), \((x^{j(\ell )},{\tilde{y}}^{j(\ell )})\in {\mathcal {T}}^{(\ell )}\), and \(({\tilde{x}}^{j(\ell )},{\tilde{y}}^{j(\ell )})\in {\mathcal {T}}^{(\ell )}\), (p. 21, Daraio and Simar 2007).

Axiom 4

(Boundedness) The set \({\mathcal {A}}^{(\ell )}(x^{(\ell )}) = \{ ({\tilde{x}}^{(\ell )},y^{(\ell )})\in {\mathcal {T}}^{(\ell )}~|~ {\tilde{x}}^{(\ell )} \leqslant x^{(\ell )} \}\) is bounded for each \(x^{(\ell )}\in {\mathbb {R}}_+^J\) (Mehdiloozad et al. 2014).

Axiom 5

(Normal convexity of \(\log {\mathcal {T}}^{(\ell )}\)) Let \(\log {\mathcal {T}}^{(\ell )} \leftarrow \left\{ (\log \mathbf {X}^{(\ell )},\log \mathbf {Y}^{(\ell )})\in {\mathbb {R}}^J\times {\mathbb {R}}^J~|~ (\mathbf {X}^{(\ell )},\mathbf {Y}^{(\ell )})\in {\mathcal {T}}^{(\ell )} \right\}\). Let \((\log {\tilde{x}}^{j(\ell )},\log {\tilde{y}}^{j(\ell )})\in \log {\mathcal {T}}^{(\ell )}\) and \((\log {\tilde{x}}^{k(\ell )},\log {\tilde{y}}^{k(\ell )})\in \log {\mathcal {T}}^{(\ell )}\) for \(j,k\in \varOmega\). The set \(\log {\mathcal {T}}^{(\ell )}\) is (normal) convex if and only if \((\zeta \log {\tilde{x}}^{j(\ell )} + (1-\zeta ) \log {\tilde{x}}^{k(\ell )}, \zeta \log {\tilde{y}}^{j(\ell )} + (1-\zeta ) \log {\tilde{y}}^{k(\ell )} )\in \log {\mathcal {T}}^{(\ell )}\), for any \(\zeta \in [0,1]\).

Axiom 6

(Geometric convexity) \({\mathcal {T}}^{(\ell )}\) is (geometric or log-) convex if and only if \(\log {\mathcal {T}}^{(\ell )}\) is (normal convex). It is to say that, for any \(({\tilde{x}}^{j(\ell )},{\tilde{y}}^{j(\ell )})\in {\mathcal {T}}^{(\ell )}\) and \(({\tilde{x}}^{k(\ell )},{\tilde{y}}^{k(\ell )})\in {\mathcal {T}}^{(\ell )}\) with \(j,k\in \varOmega\), then

2.4 Concepts and definitions

This subsection provides the mathematical formulations of (1) bandwidths; (2) comparability sets used to constraint the original sample to those hospitals similar to the one whose optimal payment we want to assess and also exhibiting quality levels above a user-defined threshold; and (3) best practice tariff, also called optimal payment (or optimal price), based on a set of coefficients to be optimized and the values observed for the entities belonging to the overall comparability set.

2.4.1 Bandwidths

In general and because hospitals should be comparable, some measures of proximity (or closeness) among them are desirable. Such measures are the so-called bandwidths. The larger the bandwidth, the broader the set of hospitals accepted as comparable with the hospital k, whose optimal payment we want to assess. In view of that, optimal bandwidths are paramount.

The choice of optimal bandwidths, \(b_y^{k(\ell )},~b_{q_r}^{k(\ell )}\), and \(b_{z_c}^{k(\ell )}\), can be debatable since there is a number of ways to compute a bandwidth. All of those ways present advantages but also shortcomings. Particularly, a bandwidth can be either global or local. A bandwidth is global if it is the same across the whole sample of hospitals; otherwise, it is local.

On the one hand, global bandwidths can easily be computed by using, for instance, the Silverman’s rule of thumb. Let f denote a probability density function. We usually plug-in f by a kernel with order \(\gamma >0\). Hence, the global bandwidth related to a variable V, whose observations in \(\varOmega\) have standard deviation \({\hat{\sigma }}_V\), is (Silverman 1986):

According to Ferreira et al. (2017), we should use kernel functions with compact support, i.e., \(f(u)>0\) if \(|u|\leqslant 1\) and \(f(u)=0\) otherwise, and symmetric around k, i.e. \(\gamma\) should be equal to 2 to avoid negative parts on f. In that case, the bandwidth simplifies to \(b_V = 2{\hat{\sigma }}_V \left( \frac{\sqrt{\pi } \int _{-\infty }^{+\infty } f^2(u)du}{ 6 J \left( \int _{-\infty }^{+\infty } u^2f(u)du\right) ^2} \right) ^{1/5}\). For example, if the triweight kernel is used, we have \(b_V \approx 3.62 {\hat{\sigma }}_V J^{-1/5}\). The smaller and the more heterogeneous the sample, the larger the bandwidth.

On the other hand, local bandwidths can be obtained e.g. through the so-called k-Nearest Neighbor method (Daraio and Simar 2007). First, one defines a grid of N units, say \(N\in [\epsilon _1 J, \epsilon _2 J]\), with \(0<\epsilon _1\leqslant \epsilon _2\leqslant 1\). Then, one finds the value of N within such range that minimises the score function CV(N). For the case of bandwidth \(b_{q_r}^{k(\ell )}\), the CV function is as follows:

where f denotes a univariate kernel function. Therefore, \(b_{q_r}^{k(\ell )}\) is the local bandwidth associated with hospital k chosen such that there are N points verifying \(|q_{r^{(\ell )}}^{j(\ell )} - q_{r^{(\ell )}}^{i(\ell )} |\leqslant b_{q_r}^{k(\ell )},~r^{(\ell )}\in \varGamma ^{(\ell )}\). Naturally, we can specify similar local bandwidths for the case of the output and the operational environment data. There are other alternatives for the local bandwidths’ formulation, including the (data-driven) least squares cross validation procedure to minimise the integrated squared error (Bǎdin et al. 2010; Hall et al. 2004; Li and Racine 2007).

2.4.2 Comparability sets

The idea underlying our approach is to restrict the original set of hospitals \(\varOmega\) based on constraints related to size (because of potential economies of scale), quality, access, and environment. To simplify the exposition, we will assume that access dimensions can be included into the group of quality variables and handled like them. Therefore, we may introduce three comparability sets that are subsets of \(\varOmega\).

The first comparability set presented regards the size of the health care provider. It is important to guarantee that best practices related to hospital k have similar operations’ scale as this one. For that reason, we constrain the set of admissible best practices for k by using the concept of bandwidth and centring the observations associated with that comparability set on \(y^{k(\ell )}\), which is assumed to be the proxy for the size of k.

Definition 1

(Size-related comparability set, \(\varOmega ^{k(\ell )}_y\)) Given a service or DRG \(\ell \in \varPsi\), the size-related comparability set for hospital \(k\in \varOmega\) is defined as the set of hospitals whose sizes, as measured by the output level \(\mathbf {Y}^{(\ell )}\), are close to the dimension of hospital k, i.e., \(y^{k(\ell )}\). Let the bandwidth \(b_y^{k(\ell )}\) be the closeness measure. Hence, \(\varOmega ^{k(\ell )}_y\) is as follows:

In the following definition, we introduce the so-called quality-related comparability set, which, as before, requires a bandwidth. However, it also needs a user predefined threshold per quality measure so as to ensure that no unit with low levels of quality (including access to health care services) can be addressed as a potential best practice for the hospital k whose optimal payment is being computed. In fact, we could achieve lower levels of resources consumption at the cost of reducing the quality of supplied services, endangering the societal mission of health care providers. In practice, we would be interested on best practices that would outperform k in terms of both quality and access, i.e. verifying \(q^{j(\ell )}_{r^{(\ell )}}\geqslant q^{k(\ell )}_{r^{(\ell )}}\) for any \(r^{(\ell )}=1,\ldots ,R^{(\ell )}\). Nonetheless, we note that hospitals with very high quality levels may sometimes be technically inefficient on resources consumption for the quantity of services delivered. It means that, if hospital k reaches the maximum quality within the dataset, then, according to our previous two conditions, no other hospital could be a benchmark for k even if it would be more technically efficient than the latter. This constitutes a problem because the optimal payment for k would not be Pareto-efficient, jeopardising the health system sustainability. In practice, usually one can reduce a little the quality levels of k by using bandwidths, if it results on meaningful cost savings and as long as the best practices found for k would present quality levels at least above the user-defined threshold.

Definition 2

(Quality-related comparability set, \(\varOmega ^{k(\ell )}_q\)) Given a service or DRG \(\ell \in \varPsi\), the quality-related comparability set for hospital \(k\in \varOmega\) is defined as the set of hospitals whose quality dimensions \(\mathbf {Q}^{(\ell )}\) are close to the quality observed for hospital k (or even above it), \(q_{r^{(\ell )}}^{k(\ell )}\), and simultaneously larger than the user-defined threshold \(t^{(\ell )}_{q_r}\) for all \(r^{(\ell )}\in \varGamma ^{(\ell )},~\ell \in \varPsi\). Let the bandwidth \(b_{q_r}^{k(\ell )}\) be the closeness measure. Hence, \(\varOmega ^{k(\ell )}_q\) is as follows:

The definition of an environment-related comparability set is similar to the one associated with size (vide supra).

Definition 3

(Environment-related comparability set, \(\varOmega ^{k(\ell )}_z\)) Given a service or DRG \(\ell \in \varPsi\), the environment-related comparability set for hospital \(k\in \varOmega\) is defined as the set of hospitals whose environment \(\mathbf {Z}^{(\ell )}\) are close to the conditions observed for hospital k, \(z_{c^{(\ell )}}^{k(\ell )}\), for all \(c^{(\ell )}\in \varPhi ^{(\ell )},~\ell \in \varPsi\). Let the bandwidth \(b_{z_c}^{k(\ell )}\) be the closeness measure. Hence, \(\varOmega ^{k(\ell )}_z\) is as follows:

Using these three definitions, we can construct the overall comparability set, \(\varOmega ^{k(\ell )}\), which is a subset of \(\varOmega\).

Definition 4

(Overall comparability set, \(\varOmega ^{k(\ell )}\)) The overall comparability set related to service/DRG \(\ell \in \varPsi\) and the hospital \(k\in \varOmega\), results from the intersection of the three previously defined comparability sets:

Figure 1 exemplifies the achievement of the overall comparability set associated with the hospital \(k=4\) as well as the corresponding comparability sets. In this example, there are fifteen hospital in the dataset; they could be potential best practices for k if features like size, quality, and environment would be disregarded. However, we have considered one quality and another environment dimensions. Let the blue and the green areas (left) define the the size- and the environment-related comparability sets for \(k=4\), respectively. The intersection between them will result on a subset composed of the following hospitals: \(\varOmega ^{k(\ell )}_y \cap \varOmega ^{k(\ell )}_z = \{3, 4, 7, 14, 15 \}\). The red line (right) identified the user-defined threshold. Arrows explain that the quality of potential best practices should be larger than or equal to 30. However, the quality observed for hospital k is equal to 70 and the bandwidth is 22, meaning that the quality of those best practices for hospital k should, in fact, be larger than 48. Accordingly, we have \(\varOmega ^{k(\ell )}_y \cap \varOmega ^{k(\ell )}_q = \{ 1, 4, 7, 15 \}\) and \(\varOmega ^{k(\ell )} = \{ 4, 7, 15 \}\). Therefore, the set of potential best practices related to k is composed of itself and of two more hospitals: 7 and 15. In the next subsection we will explain how the best practice tariff of k can be obtained from \(\varOmega ^{k(\ell )}\). To do so, we have to introduce the following notation:

-

\(J^k\) is the length of the list \(\varOmega ^{k(\ell )}\);

-

\(\mathbf {X}^{k(\ell )},~\ell \in \varPsi ,\) is the vector of total operational expenses associated with the \(J^k\) hospitals in \(\varOmega ^{k(\ell )}\);

-

\(\mathbf {Y}^{k(\ell )},~\ell \in \varPsi ,\) is the vector of total in/outpatients handled by the \(J^k\) hospitals in \(\varOmega ^{k(\ell )}\).

In the previous example, \(J^k=3\), \(\mathbf {X}^{k(\ell )}=(x^{4(\ell )} , x^{7(\ell )}, x^{15(\ell )})^\top\), and \(\mathbf {Y}^{k(\ell )}=(y^{4(\ell )} , y^{7(\ell )}, y^{15(\ell )})^\top\).

2.4.3 Best practice tariffs

The best practice tariff of hospital \(k\in \varOmega\) and service/DRG \(\ell \in \varPsi\) is, by definition, the paid price per medical act which is assessed using the information of benchmarks (best practices), which, in turn, are at least as good performer as hospital k in the provision of service/DRG \(\ell\). Because hospitals present distinct technologies and face heterogeneous environments, they must be comparable using data related to the inn- (size, complexity of inpatients) and the out-operational environment (demographics, epidemiology). Likewise, poor quality hospitals should not be considered potential best practices for k. For that reason, we have created the concept of comparability sets associated with this hospital. Using it we can formulate the optimal (best practice based) tariff paid to hospital k.

Definition 5

(\({\mathcal {O}}\)-order best practice tariff paid to hospital k and service/DRG \(\ell\), \({\mathscr {P}}^{k(\ell ,{\mathcal {O}})}\)) Let \(k\in \varOmega\) be a hospital, \(\ell \in \varPsi\) a service or a DRG, and \(\varOmega ^{k(\ell )}\) the overall comparability related to the former two. The weighted Hölder (or power/generalized) mean with order \({\mathcal {O}}\) of a vector \(\mathbf {V}=(V_1,\ldots ,V_i,\ldots ,V_n)^\top\) and weights \({\varvec{\mu }}=(\mu _1,\ldots ,\mu _i,\ldots ,\mu _n)^\top\), verifying \(\sum _{i=1}^{n} \mu _i = 1\), is

where \(\langle \cdot ,\cdot \rangle\) denotes the inner-product of two vectors. The \({\mathcal {O}}\)-order best practice tariff that should be paid to hospital k is the relationship between the optimal costs and the number of in/outpatients for that hospital. We assume that optimal expenses can be modelled as weighted Hölder averages with order \({\mathcal {O}}\) and weights \({\varvec{\mu }}^k\):

In this case, we assume that the number of in/outpatients is out of control by the hospital managers. If this is not the case, we simply have to replace the denominator of Eq. (8) by \({\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,{\mathcal {O}})\).

The \({\mathcal {O}}\)-order best practice tariff paid to hospital k for the service/DRG \(\ell\) in the previous example is, according to Eq. (8):

The problem associated with Eq. (8) is that, in general, we do not know the weights \({\varvec{\mu }}^k\) to use. Therefore, instead of imposing quantities with little or even no empirical support, these weights should be optimized, for instance, using linear programming tools. In the next subsections, we discuss how cost savings can arise.

3 A new best practice tariff-based tool

In the last section, we have defined the overall comparability set and explained how the best practice tariffs can be expressed in terms of some coefficients that should be optimized using benchmarking tools. In our case, we develop and explore a model similar to DEA that constructs a log-linear piecewise frontier and which is more flexible than DEA itself. This flexible model is named multiplicative (or log-) DEA. Those coefficients \({\varvec{\mu }}^k\) can be optimized using log-linear programming model, as detailed in Sect. 3.3. This one extends the work of Banker and Maindiratta (1986). Some properties of the new model are studied in Sect. 3.4. The reader should be aware of the problem of multiple/degenerate solutions associated with those coefficients, which can be critical to our optimal payments. Therefore, we extend our DEA model to solve the problem of multiple solutions (Sect. 3.5). In some circumstances, this model may become infeasible; hence, we propose a strategy to overcome this problem, vide Sect. 3.6. Finally, Sect. 3.7 presents a simple step-by-step procedure to simplify the exposition and to sum up our approach to optimize payments.

3.1 Past research on multiplicative DEA

The proposed way of optimizing tariffs imposes the achievement of Pareto-optimal weights \({\varvec{\mu }}^k\). DEA can be very useful in such a situation. Since its foundation, it seems to be the widest employed model for efficiency assessment, especially in health care (Hollingsworth 2008). Because of the underlying convexity, the DEA formulation proposed by Banker et al. (1984) requires non-increasing marginal products. However, according to the classical production theory, there are three main stages characterizing the consumption of resources and the associated quantity of produced outputs (vide Fig. 2). For input quantities smaller than a, the fixed input is being utilized more effectively as the variable input increases (Kao 2017), which is to say that the marginal product increases accordingly (Henderson and Quandt 1980). Before b, the production function (red line) is non-concave and the production possibility set cannot be convex (Banker and Maindiratta 1986). If the standard DEA model is used to estimate this frontier (dashed line [Ob]), then some efficient observations exploiting gains from increasing specialization with larger scale sizes (i.e., close to a) would be rated as inefficient.

An immediate conclusion is that the convexity assumption must be smoothed to account for the case of increasing marginal products. An alternative is the so-called FDH (Deprins et al. 1984), which constructs a frontier with a staircase nature that, in turn, is discontinuous in some points and not differentiable (note that we do not require that the production function must be differentiable). Situations including non-variable returns to scale and imposition of restrictions over multipliers through FDH (Ferreira and Marques 2017) require solving a mixed-integer linear program (Agrell and Tind 2001), which can be difficult to implement in some solvers. Another alternative is the multiplicative DEA, also named log-DEA or DEA-Cobb–Douglas (whose mathematical details will be detailed soon).

Back nearly 40 years ago, Banker et al. (1984) constructed a log-linear model in which outputs are modelled following a Cobb–Douglas function. Under such a framework, outputs do not compete for the inputs, although they do in most of the empirical scenarios, including hospitals. Therefore, this model is not applicable in these situations. Charnes et al. (1982) suggested a multiplicative efficiency measure, but which is not consistent with the postulated underlying production of Banker and Maindiratta (1986). One year later, the same authors modified their model to account for non-radial inefficiency sources. Nonetheless, they did not examine the characteristics of the production technology, ignoring concepts like returns to scale, optimal scale, and non-competing outputs. Later on, Banker and Maindiratta (1986) proposed a radial log-DEA that account for competing outputs. These authors have verified that their model outperforms the piecewise linear DEA model of Banker et al. (1984) in terms of rates of substitution of inputs, and especially whenever the production technology frontier exhibits non-concavity in some regions. In the present work, we follow the model of Banker and Maindiratta, which has not seen further meaningful developments and enhancements, despite its advantages (vide infra).

Chang and Guh (1995) criticize the remedy of Suayoshi and Chang (1989) to transform the efficiency score associated with the multiplicative model of Charnes et al. (1982) into unit invariant. They propose another model, which according to Seiford and Zhu (1998) reduces itself to the proposal of Charnes et al. This is because the model of Chang and Guh is for the case of constant output and linearly homogeneous production technology. The authors fail to recognize that the constraint associated with the convexity of the DEA model of Banker et al. is the basis for units invariance. Therefore, according to Seiford and Zhu, the distance efficiency measure of Chang and Guh is of no value.

Marginal products are usually related to returns to scale (Ferreira and Marques 2018a). Since the standard DEA fails to identify the production regions of increasing marginal products, multiplicative DEA models have been used to analyse returns to scale and most productive scale size; vide the works of Zarepisheh et al. (2010) as well as Davoodi et al. (2015).

Only a few applications of the multiplicative DEA model have been published. We point out three: Emrouznejad and Cabanda (2010), Tofallis (2014) and Valadkhani et al. (2016). The former authors integrated the so-called Benefit of Doubt (Cherchye et al. 2011) and the log-DEA to construct a composite indicator associated with six financial ratios and twenty-seven UK industries. They confirm that the multiplicative model is more robust than the standard DEA model. In the same vein of Emrouznejad and Cabanda, Tofallis also constructed composite indicators with multiplicative aggregation and remarked that the log-DEA avoids the zero weight problem of the standard DEA. Meanwhile, Valadkhani et al. proposed a multiplicative extension of environmental DEA models, accounting for weakly disposable undesirable outputs, and used it to measure efficiency changes in the world’s major polluters. It is interesting to note that the adopted model is very close to the one proposed by Mehdiloozad et al. (2014). These authors developed a generalized multiplicative directional distance function as a comprehensive measure of technical efficiency, accounting for all types of slacks and satisfying several desirable properties.

Previous studies have considered that every observation is enveloped by the log-linear frontier and, for that reason, no infeasibility may arise. If this is not the case, projecting super-efficient observations into the frontier can be infeasible, mostly because of the undertaken path (model direction) (Chen et al. 2013). Infeasibility may, then, occur if the hospital whose tariff we are optimizing is not enveloped by the frontier. However, should it belong to the reference set used to construct such a frontier, and multiple solutions may be achieved, meaning that the optimal tariff could be non-unique. Neither of these two problems has been properly addressed in the literature with respect to either the multiplicative or log-DEA or the payments optimization.

3.2 Advantages of using log-DEA instead of the standard DEA

The advantages of log-DEA can be summarized as follows:

-

log-DEA is more flexible than DEA, allowing for increasing marginal products (Kao 2017);

-

log-DEA allows for outputs competing for inputs, as happens in most of empirical situations, including health care, (Banker and Maindiratta 1986);

-

log-DEA outperforms DEA in terms of rates of substitution of inputs (Banker and Maindiratta 1986);

-

log-DEA outperforms DEA when the (unknown) production technology frontier exhibits non-concavity regions, (Emrouznejad and Cabanda 2010);

-

log-DEA avoids the zero weight problem of the standard DEA (Tofallis 2014);

-

log-DEA keeps the production technology globally and geometrically convex (or non-convex under the arithmetic definition), which is a more natural solution. Indeed, there is no reason to believe that the production technology must be (piecewise) linear and the associated set must be convex. Frontiers related to non-convex sets are always consistent, even if the technology is convex (Daraio and Simar 2007). Because of this property, log-DEA can be used to estimate economies of scope (vide a comprehensive discussion in Ferreira et al. 2017);

-

log-DEA mitigates the inefficiency overestimation resulting from infeasible regions constructed by two or more very distant efficient observations (Tiedemann et al. 2011);

-

log-DEA classifies all non-dominated observations as efficient, unlikely DEA.

3.3 Performance assessment through nonparametric log-linear benchmarking tools

A very important feature on defining the optimal (best practice based) tariff is the vector \({\varvec{\mu }}^k=(\mu _1^k,\ldots ,\mu _i^k,\ldots ,\mu _{J^k}^k)^\top\). As previously mentioned, the components of this vector should be optimized in the Pareto sense. This subsection explains how this can be done for those components. We will describe the construction of a log-linear piecewise frontier function from the \(J^k\) hospitals composing \(\varOmega ^{k(\ell )}\). This frontier contains the potential benchmarks related to hospital k and the service/DRG \(\ell\).

First of all, we have to define the concepts of target and target setting.

Definition 6

(Targets of hospital k) Targets are the optimal values associated with hospital k for inputs and outputs, \({\tilde{x}}^{k(\ell )}\) and \({\tilde{y}}^{k(\ell )}\) respectively. Targets must verify the conditions:

Definition 7

(Target setting for hospital k) Targets are mathematically defined by the \({\mathcal {O}}\)-order Hölder average:

In the case of \({\mathcal {O}}=0\) (Banker and Maindiratta 1986), Eq. (10) reduces to:

Because the logarithmic function is monotonically increasing, Eq. (9) can be rewritten as:

and, by Eq. (11), we have:

Inequalities in (12) can be transformed into equations by using (nonnegative) slacks. These slacks can be decomposed into controllable (vector \(\mathbf {D}^k=(d_1^k,d_2^k)\) with \(d_1^k,d_2^k\geqslant 0\)) and uncontrollable quantities (coefficients \(\beta ^k,~s_1^k,\) and \(s_2^k\)). Hence,

where \(\mathbf {1}/\mathbf {Y}^{k(\ell )}\) is the Hadamard’s componentwise division between two vectors, \(\mathbf {1}=(1,\ldots ,1)\) and \(\mathbf {Y}^{k(\ell )}\), and the logarithmic function applies to each component of vectors, i.e., \(\log \mathbf {V} = (\log V_1,\ldots ,\log V_{J^k})^\top\).

These constraints create a log-linear piecewise frontier function assuming that \(\langle \sqrt{{\varvec{\mu }}^k},\sqrt{{\varvec{\mu }}^k} \rangle = 1\) and all components of \({\varvec{\mu }}^k\) are nonnegative. Let us find the maximum value of the uncontrollable components using a linear program. In this case, we first optimize \(\beta ^k\) and, once its value has been achieved, we maximize both \(s_1^k\) and \(s_2^k\), which justifies the adoption of a non-Archimedean, \(\varepsilon\). The linear program is as follows:

Note that \(\beta ^k\) is not an efficiency score per se. In fact, the efficiency of hospital k in service/DRG \(\ell\) can be defined as the relationship between optimal targets and observations for both inputs and outputs.

Definition 8

(Efficiency score, \(\theta ^{k(\ell )}\)) Using the concept of targets, we define the efficiency score of hospital k in service/DRG \(\ell\) as Portela and Thanassoulis (2006):

Because of Definition 7 and the assumption \({\mathcal {O}}=0\), which returns a log-linear piecewise frontier, Eq. (15) becomes:

where \({\varvec{\mu }}^k\) is obtained from Model (14). Using Eq. (16) and after some easy algebraic manipulations, we can associate \(\theta ^{k(\ell )}\) with \(\beta ^k\):

This relationship allows us to conclude: (a) if \(\beta ^k=s_1^k=s_2^k=0\), then \(1/\theta ^{k(\ell )}=1\Leftrightarrow \theta ^{k(\ell )}=1\), i.e., hospital k is efficient; (b) if \(\beta ^k \ne 0 \vee s_1^k + s_2^k > 0\), then k is not efficient because \(\theta ^{k(\ell )} < 1\). Of course, k can outperform the frontier constructed by \(\varOmega ^{k(\ell )}\), which means that \(\beta ^k < 0\) and \(\theta ^{k(\ell )} > 1\), indicating super-efficiency.

3.4 Some properties of the developed model

Model (14) exhibits several important properties: efficiency requirement, scale invariance, nonconvexity, strict monotonicity, and directional nature. Notwithstanding, it is not translation invariant and it may exhibit multiple optima solutions. Because of the latter, the optima of log-DEA primal are not sufficient to compute the optimal paid price, \({\mathscr {P}}^{k(\ell ,{\mathcal {O}})},~{\mathcal {O}}=0,\) through the optimized weights \({\varvec{\mu }}^k\). We will explore an alternative to provide the unique solution for those weights—the so-called primal–dual approach for multiple optima.

Proposition 1

(Efficiency requirement) If either \(k\in \varOmega ^{k(\ell )}\) or \(\exists j\in \varOmega ^{k(\ell )}:~(x^{j(\ell )},y^{j(\ell )}) \succ (x^{k(\ell )},y^{k(\ell )})\),Footnote 2then \(\theta ^{k(\ell )}\) is bounded by a non-Archimedean, \(\varepsilon \sim 0\), and by 1, i.e., the log-DEA primal model verifies the efficiency requirement property.

Proof

Under the condition \(k\in \varOmega ^{k(\ell )}\) or the condition \(\exists j\in \varOmega ^{k(\ell )}:~(x^{j(\ell )},y^{j(\ell )}) \succ (x^{k(\ell )},y^{k(\ell )})\), all slacks (\(d^k\beta ^k + s^k\)) are nonnegative, and the optimal objective is nonnegative as well. Hence, \(\log \frac{1}{\theta ^{k(\ell )}} \geqslant 0 \Leftrightarrow \frac{1}{\theta ^{k(\ell )}} \geqslant \exp 0 = 1 \Leftrightarrow \theta ^{k(\ell )} \leqslant 1\). \(\square\)

Proposition 2

(Maximum value of \(\beta ^k\)) Let \({\mathscr {X}}^{k(\ell )} = \displaystyle \frac{1}{d_1^k}\log \frac{ x^{k(\ell )} }{\displaystyle \min \nolimits _{j\in \varOmega ^{k(\ell )}} x^{j(\ell )} }\) and \({\mathscr {Y}}^{k(\ell )} = \displaystyle \frac{1}{d_2^k}\log \frac{ 1 }{y^{k(\ell )} \displaystyle \min \nolimits _{j\in \varOmega ^{k(\ell )}} \frac{1}{y^{j(\ell )}} }\). Therefore, \(\beta ^k \leqslant \min \{ {\mathscr {X}}^{k(\ell )}, {\mathscr {Y}}^{k(\ell )} \}\).

Proof

From the definition of the log-DEA primal model, we known that \(\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \rangle \leqslant \log x^{k(\ell )} - d_1^k \beta ^k\) and \(\langle {\varvec{\mu }}^k, \log 1/\mathbf {Y}^{k(\ell )} \rangle \leqslant \log 1/y^{k(\ell )} - d_2^k \beta ^k\). Moreover, from the Hölder mean it is obvious that \(\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \rangle \geqslant \min _{j\in \varOmega ^{k(\ell )}} \log x^{j(\ell )} = \log \min _{j\in \varOmega ^{k(\ell )}} x^{j(\ell )}\) and \(\langle {\varvec{\mu }}^k, \log 1/\mathbf {Y}^{k(\ell )} \rangle \geqslant \min _{j\in \varOmega ^{k(\ell )}} \log 1/y^{j(\ell )} = \log \min _{j\in \varOmega ^{k(\ell )}} 1/y^{j(\ell )}\). Therefore,

\(\square\)

Because of Proposition 2 and the maximization of \(\beta ^k\) in the objective function, we know that \(\beta ^k = \min \{ {\mathscr {X}}^{k(\ell )}, {\mathscr {Y}}^{k(\ell )} \}\). Hence, the log-DEA primal can be simplified as follows:

Proposition 3

(Scale invariance) If \(\mathbf {D}^k\) does not depend on data, then the log-DEA primal model is scale invariant.

Proof

Let us assume that \(\mathbf {D}^k\) does not depend on data. Consider the transformation of \(x^{k(\ell )}\) using a scalar \(\xi \in {\mathbb {R}}\): \(x^{k(\ell )}\longmapsto \xi x^{k(\ell )}\). The first constraint of log-DEA primal becomes:

where \(\left\langle {\varvec{\mu }}^k, \log \xi \mathbf {X}^{k(\ell )} \right\rangle = \left\langle {\varvec{\mu }}^k, \log \xi \right\rangle + \left\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \right\rangle\) results from the distributive property of the inner product and \(\left\langle {\varvec{\mu }}^k, \log \xi \right\rangle = \log \xi\) results from the condition \(\langle \sqrt{{\varvec{\mu }}^k}, \sqrt{{\varvec{\mu }}^k} \rangle = 1\). Thus, the first constraint of log-DEA primal is recovered. However, this is only true if \(\mathbf {D}^k\) does not depend on data; otherwise, the equivalences above do not necessarily hold. Since the same does apply for the output \(y^{k(\ell )}\), we conclude this proof. \(\square\)

Proposition 4

(Convexity) The frontier constructed by log-DEA primal model is not convex in the original space of variables. This is because \(\log {\mathcal {T}}^{(\ell )}\) is (normal) convex, meaning that \({\mathcal {T}}^{(\ell )}\) is log-convex.

Proof

It is not difficult to conclude that, in the log-space, the frontier is convex. Now, let us consider a linear facet of the frontier in the log-space. Hence, we have \(\log y = a\log x + b\) or, equivalently, \(y=x^a \exp b\), which is not linear. That is, in the original space of variables, the constructed frontier is not convex. \(\square\)

Proposition 5

(Strict monotonicity) Log-DEA is strictly monotone. \(\theta ^{k(\ell )}\) strictly decreases with the increase of slacks.

Proof

The objective function of log-DEA strictly increases with slacks, and so does \(\log (1/\theta ^{k(\ell )})\). Because of the monotonicity property of logarithmic functions, \(1/\theta ^{k(\ell )}\) also increases with slacks, which means that \(\theta ^{k(\ell )}\) decreases with the increase of slacks. In fact, we have \(\theta ^{k(\ell )} = \exp ( - \beta ^k (d_1^k + d_2^k) - s_1^k - s_2^k)\). This is in line with the efficiency requirement property. \(\square\)

Proposition 6

(Directional nature) Log-DEA is a radial directional model.

Proof

Log-DEA is a radial model because of the parameter \(\beta ^k\) that imposes the projection of hospital k in the frontier constructed using \(\varOmega ^{k(\ell )}\). It is directional because of the vector \(\mathbf {D}^k\), which controls for the path used to project k (Fukuyama and Weber 2017). \(\square\)

Proposition 7

(Translation invariance) Log-DEA is not translation invariant.

Proof

This proposition results from the fact that the logarithmic function does not verify the distributive property, i.e., \(\log (a+b) \ne \log a + \log b,~\forall~a,b\in\mathbb{C}\). \(\square\)

Proposition 8

(Log-translation invariance) Log-DEA is log-translation invariant.

Proof

This is because the log-DEA model is scale invariant. Indeed, scale invariance or log-translation invariance are tantamount. \(\square\)

To introduce the next proposition, we need the dual version of log-DEA primal, which results from the duality in linear programming. We first note that \(\beta ^k\) is an unrestricted variable of log-DEA, which means that it can be transformed into two nonnegative variables: \(\beta ^k = {\overline{\beta }}^k - {\underline{\beta }}^k\). Additionally, each constraint of the primal model (except for the nonnegativity of some variables) is modelled by an equation, meaning that two nonnegative variables are required to model each constraint: \(u^+,u^-\) for inputs, \(v^+,v^-\) for outputs, and \(w^+,w^-\) for the convexity constraint. Hence, we get the following set of dual constraints:

The objective function is, obviously,

From constraints (18d) and (18e), we conclude that \(d_1^k (u^+ - u^-) + d_2^k (v^+ - v^-) = 1\) or, equivalently, \(u^+ - u^- = \frac{1 - d_2^k (v^+ - v^-)}{d_1^k}\), which allows us to simplify the dual model. Since \(u^+ - u^- \geqslant \varepsilon\), we have \(v^+ - v^- \leqslant \frac{1 - \varepsilon d_1^k}{d_2^k}\). From constraint (18c), we conclude that:

Furthermore, the first constraint can be rewritten as:

Now, we observe that \(v^+ - v^-=v\) and \(w^+ - w^-=w\), where v and w are both unrestricted by definition. Let

This means that the log-DEA dual model becomes:

Proposition 9

(Multiple solutions) If \(k\in \varOmega ^{k(\ell )}\), then the log-DEA dual model violates the desired property of solutions uniqueness.

Proof

To prove that the log-DEA dual model provides multiple solutions it is sufficient to observe that the hyperplane created by the constraint (21b) and the one constructed by the objective function are parallel if \(k\in \varOmega ^{k(\ell )}\). Indeed, when \(j=k\), we have \(\eta ^j v - w =\eta ^k v - w\): the latter is, precisely, the objective function. \(\square\)

3.5 The case of multiple optima

Should the dual have multiple optima (Proposition 9), then every optimal basic solution to the primal is degenerate. This causes problems in the definition of optimal paid prices. In the absence of degeneracy, two different simplex tableaus in canonical form give two different solutions. Otherwise, it is possible that there are two different sets of basic variables (coefficients \({\varvec{\mu }}^k\)) giving the same solution (efficiency score). Since we need unique \({\varvec{\mu }}^k\), we must develop a linear program that returns these coefficients verifying such a property.

Based on proposition 9, if one desires/needs only the efficiency score, then there is no need for further model improvements. In general, restrictions over dual variables are adopted to include managerial/policy preferences (cf. Shimshak et al. (2009), Podinovski (2016) and Podinovski and Bouzdine-Chameeva (2015, 2016) for a further discussion), but they tend to influence the efficient frontier shape. That is, efficiency scores change due to the inclusion of multiplier constraints. Intrinsically, these restrictions also minimize (but do not avoid) the multiple solutions problem (Cooper et al. 2007), at the cost of both efficiency scores changing and their meaning potential loss. To avoid this serious problem, we follow the approach of Sueyoshi and Sekitani (2009) to develop a multiplicative log-DEA model, which does not violate the desired property of solutions uniqueness. The next two definitions help us on this goal.

Definition 9

(Complementary slackness condition) The well-known complementary slackness theorem of linear programming can be applied to both log-DEA primal and log-DEA dual. The following conditions must be obeyed for every optima of both log-DEA models, should \(u=u^+ - u^-\), \(v=v^+ - v^-\), \(w=w^+ - w^-\) are three free in sign variables such that u and v are related via \(u=(1-d_2^k v)/d_1^k\). Let \(\eta ^j = \frac{1}{d_1^k}\log \left( (y^{j(\ell )})^{d_1^k} ~(x^{j(\ell )})^{d_2^k} \right)\) for \(j\in \varOmega\).

These are the strong complementary slackness conditions associated with the log-DEA model.

Definition 10

(Unique Solution-based log-DEA) The following linear program provides solutions for log-DEA model that obey to the desirable uniqueness property.

The US-log-DEA merges the dual, the primal, and the strong complementary slackness conditions (9), and forces that the objectives of both dual and primal log-DEA models are equal (23e). If a variable x verifies \(x > a\), where \(a\in {\mathbb {R}}\), then there is a nonnegative quantity \(\alpha\) such that \(x-\alpha \geqslant a\). The objective of US-log-DEA is to maximize the parameter \(\alpha\) that transforms the strong complementary slackness conditions into feasible linear conditions (23h–23j). This means that the optimal solution of US-log-DEA, \(({\varvec{\mu }}^{k*},\beta ^{k*}, s_1^{k*}, s_2^{k*}, v^{*}, w^{*}, \alpha ^{*})\), verifies the strong complementary slackness conditions (9). US-log-DEA solves the problem of multiple projections in log-DEA dual as it restricts multipliers so that the projection becomes unique (Sueyoshi and Sekitani 2009). That is, restricting multipliers v and w does not solve the problem of multiple solutions. Furthermore, it results from the linear programming theory and from the duality theorem that optima of US-log-DEA are also optima of both log-DEA dual and primal. Therefore, the efficiency score \(\theta ^{k(\ell )}\) remains unchanged. But since we need coefficients \({\varvec{\mu }}^k\) to define optimal prices, we must save the outcomes of US-log-DEA for such a purpose. It is needless to say that, by proposition 9, if k does not belong to \(\varOmega ^{k(\ell )}\), then it is not necessary the use of US-log-DEA as the problem of multiple solutions vanishes in that case.

3.6 The problem of infeasibility in linear programming models

We have developed a log-DEA model to assess the coefficients related to the optimal price to be paid to hospital k. In some circumstances, the log-DEA primal can be infeasible because of the choice of the directional vector \(\mathbf {D}^k\). If \(k\in \varOmega ^{k(\ell )}\), then the linear model cannot be infeasible. This is because k is enveloped by the frontier constructed using data associated with that subset of \(\varOmega\). However, k may not belong to \(\varOmega ^{k(\ell )}\) because of its quality levels. Two scenarios are then suitable: k is enveloped by the frontier related to \(\varOmega ^{k(\ell )}\)—and, in such a case, there is not an infeasibility problem—or k is super-efficient regarding such a frontier. Only in the latter scenario infeasibility may occur. However, being super-efficient is not a sufficient condition to generate infeasibility, vide Proposition 10. We thus have to look at the log-DEA primal and search for a vector \(\mathbf {D}^k\) linear transformation to ensure that the model becomes feasible. Naturally, if k belongs to \(\varOmega ^{k(\ell )}\), then a multiple solutions problem arise and the US-log-DEA is compulsory. Nevertheless, in such a case, the linear problem cannot be infeasible. If \(k\notin \varOmega ^{k(\ell )}\), then the multiple solutions problem vanishes. Hence, it is enough to consider the log-DEA primal.

Proposition 10

(Infeasibility of log-DEA primal) Let \(\mathbf {D}^k = (\log x^{k(\ell )}, \log y^{k(\ell )})\) (Chambers et al. 1996, 1998). If \(y^{k(\ell )} > 1\), \(y^{j(\ell )} > 1\) for all \(j\in \varOmega ^{k(\ell )},\) and \(\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \rangle > 2\log x^{k(\ell )},\) then log-DEA primal is infeasible.

Proof

This proof follows Ray (2008). Under the assumption of \(\mathbf {D}^k = (\log x^{k(\ell )}, \log y^{k(\ell )})\) (Chambers et al. 1996, 1998), we obtain \(\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \rangle \leqslant (1-\beta ^k) \log x^{k(\ell )}\). If \(\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \rangle > 2\log x^{k(\ell )}\), then \((1-\beta ^k) \log x^{k(\ell )}> 2\log x^{k(\ell )} \Leftrightarrow 1-\beta ^k > 2 \Leftrightarrow \beta ^k < -1\). Likewise, we have \(\langle {\varvec{\mu }}^k, \log \mathbf {Y}^{k(\ell )} \rangle \geqslant (1+\beta ^k) \log y^{k(\ell )}\). Now, we know that \(\beta ^k < -1\) or \(1+\beta ^k < 0\). If \(y^{j(\ell )} > 1\) for all \(j\in \varOmega ^{k(\ell )}\), then \(\langle {\varvec{\mu }}^k, \log \mathbf {Y}^{k(\ell )} \rangle\) must be positive. However, given that \(y^{k(\ell )}\) is also larger than 1, the right hand side of the inequality associated with \(\mathbf {Y}^{k(\ell )}\) becomes negative because \(1+\beta ^k < 0\). Therein lies the problem: there is no combination of (nonnegative coefficients) \(\mu _j^k\) for \(j\in \varOmega ^{k(\ell )}\) obeying to \(\langle \sqrt{{\varvec{\mu }}^k},\sqrt{{\varvec{\mu }}^k} \rangle = 1\) and simultaneously satisfying \(\mathbf {Y}^{k(\ell )}<0\). Hence, the log-DEA primal model becomes infeasible. \(\square\)

Proposition 11

(Affine transformation of \(\mathbf {D}^k\) to remove infeasibility) Let \({\mathscr {D}}^{k(1)} = \delta _0^{k(1)} + \delta _1^{k(1)}d_1^k\) and \({\mathscr {D}}^{k(2)} = \delta _0^{k(2)} + \delta _1^{k(2)}d_2^k\) be the affine transformation of the components of vector \(\mathbf {D}^k=(d_1^k,d_2^k),\) with \(\delta _0^{k(1)},\delta _1^{k(1)},\delta _0^{k(2)},\delta _1^{k(2)}\geqslant 0\). Let \(m_Y=\min \mathbf {Y}^{k(\ell )}\) and \(\nabla ^k = \max \mathbf {X}^{k(\ell )} - \min \mathbf {X}^{k(\ell )} + \epsilon\), with \(\epsilon >0\). If the following condition is met by the coefficients of the affine transformation of \(\mathbf {D}^k\), then the infeasibility problem vanishes.

We can select any values for the coefficients of the affine transformation, as long as they obey to the relationship in (24).

Proof

(Inspired on Chen et al. 2013) The log-DEA primal is feasible if and only if all of its constraints are obeyed. With the affine transformation of \(\mathbf {D}^k\), those constraints should be verified. Let us ignore slacks \(s_1^k\) and \(s_2^k\) and start by the constraint over the input:

From the constraint over the output, we have:

The output target is, of course, \({\tilde{y}}^{k(\ell )} = \langle {\varvec{\mu }}^k, \log \mathbf {Y}^{k(\ell )}\rangle\), and this should be nonnegative, i.e.

Hence, the following is obvious:

Unfortunately, we do not know the value of \(\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \rangle\), but it is clear that \(\langle {\varvec{\mu }}^k, \log \mathbf {X}^{k(\ell )} \rangle - \log x^{k(\ell )} \leqslant \nabla ^k\). Therefore, it is sufficient to impose:

which concludes this proof. \(\square\)

3.7 On optimizing the best practice tariffs: a step-by-step procedure

Figure 3 presents the flowchart of the procedure adopted to optimize the paid price to hospitals by their services. Steps are as followsFootnote 3:

-

Step 1 Define data.

-

Step 2 Define \(k\in \varOmega\) as the hospital whose payment we want to optimize.

-

Step 3 Define bandwidths, as suggested in Sect. 2.4.1.

-

Step 4 Construct the comparability sets, according to Definitions 1–3.

-

Step 5 Construct the overall comparability set, \(\varOmega ^{k(\ell )}\), by intersecting the sets achieved in Step 4—vide Definition 4.

-

Step 6 Using the entries of \(\varOmega ^{k(\ell )}\), construct \(\mathbf {X}^{k(\ell )}\) and \(\mathbf {Y}^{k(\ell )}\)—details at the end of Subsection 2.4.2.

-

Step 7 Check if k belongs to \(\varOmega ^{k(\ell )}\).

-

Step 7.1 If \(k\in \varOmega ^{k(\ell )}\), use the US-log-DEA model (23) and obtain \({\varvec{\mu }}^k\)—its coefficients are the unique solutions associated with the benchmarks of k.

-

Step 7.2 If \(k\notin \varOmega ^{k(\ell )}\), use the log-DEA primal model (14). Check if the log-DEA primal is infeasible.

-

Step 7.2.1 If the model is infeasible, transform \(\mathbf {D}^k\) using an affine transformation obeying to Proposition 11. The infeasibility problem vanishes. Obtain \({\varvec{\mu }}^k\) whose components are unique for k.

-

Step 7.2.2 If the model is not infeasible, just obtain \({\varvec{\mu }}^k\).

-

Step 8 Using \({\varvec{\mu }}^k,~\mathbf {X}^{k(\ell )},~y^{k(\ell )}\), and Eq. 8, obtain the optimal payment \({\mathscr {P}}^{k(\ell ,0)}\) associated with k.

4 Efficiency, optimal payments, and cost savings

The definition of optimal tariffs to be paid foresees some cost savings to the commissioner. This section explains how optimal payments are related to the technical efficiency, as previously detailed, and how cost savings can be generated.

4.1 Efficiency and optimal payments

We can easily relate the efficiency score with the optimal payment due to k.

Proposition 12

The optimal payment due to \(k\,\,({\mathcal {O}}=0)\) can be written as follows:

where \(\hat{{\mathscr {P}}}^{k(\ell )}=x^{k(\ell )}/y^{k(\ell )}\) is the current unitary cost of k in service/DRG \(\ell \in \varPsi\).

Proof

This proposition is obvious after merging Eqs. (8) and (16). \(\square\)

According to Eq. (25), the optimal payment \({\mathscr {P}}^{k(\ell ,0)}\) depends on coefficients \({\varvec{\mu }}^k\) of the log-linear combination; hence, it can be non-unique unless the US-log-DEA model is used. However, \(\theta ^{k(\ell )}\) is unique and can be achieved simply by using the log-DEA primal and the relationship between the efficiency score and \(\beta ^k\).

Proposition 13

If hospital k is efficient regarding \(\varOmega ^{k(\ell )}\) in the Pareto sense (absence of slacks), then \({\mathscr {P}}^{k(\ell ,0)} = \hat{{\mathscr {P}}}^{k(\ell )}\).

Proof

If hospital k is efficient regarding \(\varOmega ^{k(\ell )}\) in the Pareto sense (absence of slacks), then \(\theta ^{k(\ell )}=1\) and \({\varvec{\mu }}^k\) contains one and only one entry that is different from zero, i.e., \({\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,0) = y^{k(\ell )}\), which implies \({\mathscr {P}}^{k(\ell ,0)} = \hat{{\mathscr {P}}}^{k(\ell )}\) from Eq. (25). \(\square\)

Proposition 14

\({\mathscr {P}}^{k(\ell ,0)}< \hat{{\mathscr {P}}}^{k(\ell )} \Longleftrightarrow \theta ^{k(\ell )} < y^{k(\ell )} / {\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,0)\).

Proof

The proposition results directly from (25). Let hospital k be inefficient. Thus, there may exist an inefficiency source in the side of outputs, say \({\tilde{s}}_2^k\). Should it be zero, we have \({\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,0) = y^{k(\ell )}\). Yet, because of inputs, k is inefficient by hypothesis, and it is clear that \(\theta ^{k(\ell )} < 1\) and \({\mathscr {P}}^{k(\ell ,0)} < \hat{{\mathscr {P}}}^{k(\ell )}\). That is, the optimal payment is smaller than the current unitary cost incurred by k. Should \({\tilde{s}}_2^k\) be larger than zero, we have \({\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,0) > y^{k(\ell )}\). Therefore, we get \({\mathscr {P}}^{k(\ell ,0)} < \hat{{\mathscr {P}}}^{k(\ell )}\) if and only if \(\theta ^{k(\ell )} < y^{k(\ell )} / {\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,0)\). \(\square\)

4.2 Cost savings

Based on both current and optimal costs, we can easily define the cost savings for hospital \(k\in \varOmega\) and service/DRG \(\ell \in \varPsi\).

Definition 11

(Cost savings for hospital k and service/DRG \(\ell\), \(\varDelta ^{k(\ell )}\)) Cost savings are defined as the (positive) difference between the current and the optimal operational expenditures of hospital k for the service/DRG \(\ell\):

As claimed in Definition 11, there are cost savings if and only if \(\varDelta ^{k(\ell )}\) is strictly positive, i.e., \(x^{k(\ell )} > {\mathscr {H}}(\mathbf {X}^{k(\ell )},{\varvec{\mu }}^k,{\mathcal {O}})\).

Proposition 15

Cost savings associated with \(k \,\,({\mathcal {O}}=0)\) can be written as follows:

Proof

It is straightforward after inserting (25) into (26). \(\square\)

Proposition 16

If k is technically efficient regarding \(\varOmega ^{k(\ell )}\) and in the Pareto sense, then \(\varDelta ^{k(\ell ,{\mathcal {O}})} = 0\).

Proof

If k is efficient in the Pareto sense, then \({\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,{\mathcal {O}})=y^{k(\ell )} \wedge {\mathscr {H}}(\mathbf {X}^{k(\ell )},{\varvec{\mu }}^k,{\mathcal {O}})=x^{k(\ell )}\Longleftrightarrow \theta ^{k(\ell )} = 1 \Longrightarrow \varDelta ^{k(\ell ,{\mathcal {O}})} = 0\). The reciprocal is not necessarily true. \(\square\)

Proposition 17

\(\varDelta ^{k(\ell ,0)} = 0 \Longleftrightarrow {\mathscr {P}}^{k(\ell ,0)} = \hat{{\mathscr {P}}}^{k(\ell )}\).

Proof

This proposition results from both propositions 13 and 16. \(\square\)

Proposition 18

\(\varDelta ^{k(\ell ,0)} > 0 \Longleftrightarrow {\mathscr {P}}^{k(\ell ,0)} < \hat{{\mathscr {P}}}^{k(\ell )}\).

Proof

\(\varDelta ^{k(\ell ,0)}> 0 \Longleftrightarrow 1 - \frac{1}{y^{k(\ell )}} \theta ^{k(\ell )} {\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,0) > 0 \Longleftrightarrow \theta ^{k(\ell )}< y^{k(\ell )}/{\mathscr {H}}(\mathbf {Y}^{k(\ell )},{\varvec{\mu }}^k,0) \underbrace{\Longleftrightarrow }_{\text {Prop. }14} {\mathscr {P}}^{k(\ell ,0)} < \hat{{\mathscr {P}}}^{k(\ell )}\). \(\square\)

Proposition 19

Let \({\hat{t}}_{q_r}^{(\ell )}\) and \({\tilde{t}}_{q_r}^{(\ell )}\) be two thresholds related to the \(r^{(\ell )}{} { th}\) quality dimension. Cost savings associated with them are \({\hat{\varDelta }}^{k(\ell ,0)}\) and \({\tilde{\varDelta }}^{k(\ell ,0)},\) respectively. If \({\hat{t}}_{q_r}^{(\ell )} < {\tilde{t}}_{q_r}^{(\ell )}\) (ceteris paribus), then \({\hat{\varDelta }}^{k(\ell ,0)} \geqslant {\tilde{\varDelta }}^{k(\ell ,0)}\).

Proof

Thresholds are defined as the minimum acceptable quality levels. Let us decrease a threshold related to a given quality dimension, keeping all remaining parameters unchanged, i.e. from \({\tilde{t}}_{q_r}^{(\ell )}\) to \({\hat{t}}_{q_r}^{(\ell )}\). Let \(\# A\) be the cardinality of a set A. It is clear that \(\#{\hat{\varOmega }}_q^{k(\ell )} \geqslant \#{\tilde{\varOmega }}_q^{k(\ell )}\), \(\#{\hat{\varOmega }}_y^{k(\ell )} \geqslant \#{\tilde{\varOmega }}_y^{k(\ell )}\), and \(\#{\hat{\varOmega }}_z^{k(\ell )} \geqslant \#{\tilde{\varOmega }}_z^{k(\ell )}\). By construction, \(\#{\hat{\varOmega }}^{k(\ell )} \geqslant \#{\tilde{\varOmega }}^{k(\ell )}\). Note that we have to impose \(\geqslant\) instead of > because a decrease on thresholds does not necessarily increase the cardinality of a set. The probability of k become dominated by the new comparability set increases as a consequence of the broadening of \(\varOmega ^{k(\ell )}\).Footnote 4 Therefore, the efficiency of hospital k does not increase, and, by Proposition 15, cost savings do not decrease. \(\square\)

Proposition 20

Let \({\hat{b}}_{q_r}^{k(\ell )}\) and \({\tilde{b}}_{q_r}^{k(\ell )}\) be two bandwidths associated with the \(r^{(\ell )}{} { th}\) quality dimension. Cost savings related to them are \({\hat{\varDelta }}^{k(\ell ,0)}\) and \({\tilde{\varDelta }}^{k(\ell ,0)},\) respectively. If \({\hat{b}}_{q_r}^{k(\ell )} > {\tilde{b}}_{q_r}^{k(\ell )}\) (ceteris paribus), then \({\hat{\varDelta }}^{k(\ell ,0)} \geqslant {\tilde{\varDelta }}^{k(\ell ,0)}\).

Proof

As in previous proposition. \(\square\)

Based on previous propositions, and given the user-defined thresholds \(t^{(\ell )}=\{t_{1}^{(\ell )},\ldots ,t_{q_r}^{(\ell )},\ldots ,t_{q_R}^{(\ell )} \}\), we can identify several scenarios:

-

Hospital k belongs to \(\varOmega ^{k(\ell )}\) and exhibits the smallest unitary cost; hence, \({\mathscr {P}}^{k(\ell ,0)} = x^{k(\ell )}/y^{k(\ell )}\) and \(\varDelta ^{k(\ell ,0)}=0\).

-

Hospitals k and j both belong to \(\varOmega ^{k(\ell )}\) but \(x^{k(\ell )}/y^{k(\ell )} > x^{j(\ell )}/y^{j(\ell )}\); hence, \({\mathscr {P}}^{k(\ell ,0)} < x^{k(\ell )}/y^{k(\ell )}\) and \(\varDelta ^{k(\ell ,0)}>0\).

-

Hospital k does not belong to \(\varOmega ^{k(\ell )}\) because of its poor quality levels (\(q_{r^(\ell )}^{k(\ell )} < t_{q_r}^{(\ell )}\)):

-

There is, at least, a hospital j in \(\varOmega ^{k(\ell )}\) such that \(x^{k(\ell )}/y^{k(\ell )} > x^{j(\ell )}/y^{j(\ell )}\); hence, \(\varDelta ^{k(\ell ,0)} > 0\).

-

All hospitals in \(\varOmega ^{k(\ell )}\) have unitary costs larger than \(x^{k(\ell )}/y^{k(\ell )}\); hence, \(\varDelta ^{k(\ell ,0)} < 0\).

-

In the last case, the user (or group of stakeholders) should decide whether quality threshold(s) is(are) too large and must be decreased to raise cost savings, or threshold(s) must be kept and low-quality hospitals must be financed by \({\mathscr {P}}^{k(\ell ,0)} > x^{k(\ell )}/y^{k(\ell )}\) and the difference \(|\varDelta ^{k(\ell ,0)}|\) must be solely devoted to quality improvements.

5 The application of the new tool to the Portuguese public hospitals

5.1 An overview about the Portuguese health care system

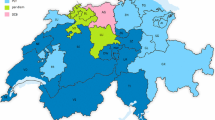

Contracting in health care aims to establish a mechanism for resources allocation in line with each health care service provision and the corresponding population needs. These ones include to ensure the quality, efficiency, and effectiveness of health care (Shimshak et al. 2009). The contracting process in Portuguese health care services arises from the relationship between different stakeholders, including (1) public funding sources (the Portuguese State, through taxes), (2) the regulator (an independent Regulatory Agency for Health and the Ministry of Health), and (3) the health care providers (hospitals, either public or private, primary health care centres, and tertiary health care centres) (Sakellarides 2010). Figure 4 shows the financial flow in the Portuguese (NHS). The analysis of this section is centred on public hospitals. Most of their financing volume comes from taxes, collected from citizens and companies. Health care providers are public though autonomous entities, implying that there must be a monitoring model holding them responsible for their weak performance and inducing the so-called transparency for the population those hospitals serve.

Financial flow in the Portuguese NHS; Source: Barros et al. (2011). RHA regional health administration, ACSS Portuguese Central Administration of Health Systems, OOP out-of-pocket payment

Contracting mechanisms try to allocate resources to health care providers in an efficient and effective way, through a clear and well-designed strategy. However, in most European countries there is nothing like a set of indicators allowing the evaluation of that strategy impact on the population health status which is a quite difficult task (Figueras et al. 2005; Lu et al. 2003). Accordingly, such a strategy requires a previous fieldwork (negotiation phase) that should be fulfilled by a contracting-specific team from the Ministry of Health. This strategy must be in line with some directions defined by the Portuguese Central Administration of Health Systems. A robust knowledge on demographics, health policies, and health care services production is compulsory to identify those population and service’s needs. Still, predictions of needs and allocated resources are usually constrained by the limited State budget (Mckee and Healy 2002; Galizzi and Miraldo 2011). This negotiation phase should neither be merely symbolic nor bureaucratic, but it must have a relevant role on the budgetary allocation of health facilities, to avoid their indebtedness. This means that the process shall be managed with responsibility and accountability, and be followed/ monitored over time, which is not a common practice in Portugal, though.

Budgetary constraints and some Government resistance to make some funds available must neither overlap nor limit the health care provision ability/ capacity. Otherwise, one may observe the opposite effect, i.e., underfunding generates overindebtedness, given the arrears accumulation related to new acquisitions that health facilities had to do, to carry out their activity. This effect was observed in Portugal in the past few years, leading to capital injections with money from the 2011 Memorandum of Understanding between the Portuguese Government and the European Commission (on behalf of the Eurogroup), the European Central Bank and the International Monetary Fund (Nunes and Ferreira 2019a).

In Portugal, contracts between the (central) Government and each health care provider encompass a set of dimensions including the provisions type, volume and duration, referencing networks, human resources and facilities, monitoring schemes, performance prizes, and prices. Worth to mention, contracts should also contain quality-related terms, including penalties for poor quality. That is, contracting health care services is paramount to promote a user’s focused action and to improve quality and efficiency, not only in Portugal but also in any other country.

Prices are perhaps one of the most important variables within a contract for health care provision. As a matter of fact, statutory payments to hospitals regard their production in terms of DRG and services (emergencies, medical appointments, inpatient services, \(\ldots\)). These prices are usually defined according to the smallest unitary costs (in the previous year) among providers. Some adjustments regarding the average complexity of treated patients can also be included. Nonetheless, quality is clearly neglected in such a framework. We remark that health care services, like any other service (either public or not), may exhibit considerable levels of inefficiency, which means those unitary costs necessarily include an inefficiency parcel. Furthermore, providers also exhibit heterogeneous technologies (e.g., assets). In a period featured by resources scarcity, financing hospitals through an inefficiency-based mechanism that disregards their underlying technologies is totally nonsense, requiring a considerable reformulation, justifying, in theory, the adoption of our approach.

5.2 Contracting in the Portuguese NHS

Several New Public Management-based health policies have been extensively applied to the Portuguese public hospitals. Particularly, their management model (legal status) has observed a few changes since the beginning of 2003, moving from the Administrative Public Sector (under the public/administrative law) to the State Business Sector (under the commercial/private law) in 2005 (Ferreira and Marques 2015). This was the so-called corporatisation reform. Currently, most of Portuguese hospitals belong to the State Business Sector. One of the most relevant issues underlying this reform regards the hospitals financing model. While in the past such a financing was based on a retrospective model and prices (or tariffs) derived from each hospital history, nowadays this financing stems from the negotiation phase and the resulting contract. There is a hospital-specific budget that is negotiated between each hospital’s Administrative Council and the Ministry of Health. Budget is negotiated in terms of production and quality, in line with some other European countries like Finland, Ireland, France, and England. In Portugal, paid unitary price (tariff) results from the national average of DRGs or services’ costs. Patients are assigned to a specific DRG and/or to a specific service. In any case, tariffs are tabled, which means they are the same for all hospitals, regardless their performance. In other words, the current mechanism is basically a case-based payment, which encompasses several disadvantages: first, it may prompt the supplier-induced demand that, in turn, includes unwanted activity; second, more complex cases can be disregarded or treated negligently, which reduces the overall quality of care; third, providers are encouraged to up-code classification of patients into a more highly reimbursed group; fourth, the introduction of quality-raising (though cost-increasing) technologies is totally discouraged (Marshall et al. 2014).