Abstract

Objectives

To propose a machine learning model that predicts readers’ performance, as measured by the area under the receiver operating characteristic curve (AUC) and lesion sensitivity, from the readers’ characteristics.

Methods

Data were collected from 905 radiologists and breast physicians who completed at least one case set of 60 mammographic cases, comprising 40 normal and 20 biopsy-proven cancer cases. Nine different case sets were available. Using a questionnaire, we collected the readers’ demographic details, such as reading volume and years of experience. These characteristics, along with a case set difficulty measure, were fed into two ensembles of regression trees to predict the readers’ AUCs and lesion sensitivities. We calculated the Pearson correlation coefficient between the values predicted by the models and the actual AUC and lesion sensitivity. The usefulness of the models for categorizing readers as low and high performers based on different criteria was also evaluated. The performance of the models was evaluated using leave-one-out cross-validation.

Results

The Pearson correlation coefficient between the predicted and actual AUC was 0.60 (p < 0.001). The model’s performance for differentiating the readers in the first and fourth quartiles based on the AUC values was 0.86 (95% CI 0.83–0.89). The model reached an AUC of 0.91 (95% CI 0.88–0.93) for distinguishing the readers in the first quartile from those in the fourth quartile based on lesion sensitivity.

Conclusion

A machine learning model can be used to categorize readers as high- or low-performing. Such a model could help screening programs design targeted quality assurance and optimize double reading practice.

Introduction

To improve the quality of screening mammography programs, guidelines have proposed certification criteria, based on reader characteristics, for undertaking independent mammography interpretation [1, 2]. Although these guidelines mostly use annual mammographic reading volume as the criterion for certification, the required volume differs between countries. For example, whilst the USA requires 960 reads every two years, European countries and Australia require at least 5000 and 2000 reads per year, respectively. Moreover, the reported relationship between mammography reading volume and sensitivity is inconsistent, with studies showing no association [3,4,5,6,7,8,9,10], a positive association [11,12,13,14,15], and a quadratic association [16]. Similarly, mixed findings have been reported for the relationship between specificity and mammographic reading volume: non-significant [3, 6, 8, 9, 16], positive [4, 14, 15, 17, 18], quadratic [11], and negative associations [5].

A better understanding of the relationship between readers’ characteristics and mammography interpretation performance could inform targeted quality assurance and surveillance measures for readers, particularly those at high risk of under-performing. Such measures might improve the performance of the screening program [19].

Previous studies [3,4,5,6, 8, 9, 11, 14,15,16,17,18] often involved low numbers of observers, and most used images produced by non-digital mammography units. In the current study, we aim to use data from a very large cohort of readers (905 radiologists and breast physicians) and digitally acquired images to assess the relationship between reader characteristics and mammography image interpretation performance. We also propose a machine learning model to predict readers’ performance from their characteristics.

Materials and methods

Image case sets and participants

Data were collected using the Breast Screen Reader Assessment Strategy (BREAST; http://sydney.edu.au/health-sciences/breastaustralia/) platform. The case selection process has been described elsewhere [20]. All cases were selected by a senior breast radiologist based on radiological and pathological reports, and each case contained four digital mammograms (both sides, two views). Each case set included 40 normal cases (based on a two-year follow-up) and 20 biopsy-proven cancer cases. Cancer cases contained lesions with different mammographic features: malignant masses, calcifications, asymmetries, or architectural distortions. The senior radiologists who selected the cases in the various test sets also ensured that only good quality images were included. The quality assessment process considered image criteria related to proper breast positioning, exposure parameters, contrast, and artifacts. Please see the Supplementary Materials for a summary of the criteria.

Table 1 summarises the characteristics of the test sets. It shows the average size of the cancers and the distribution of cases across the BI-RADS density categories and cancer types. All cases were retrieved from the screening archive. Information about the presence of benign lesions was not available, as benign findings are not routinely collected in the screening archive. Images were acquired using mammography machines from the different manufacturers in use in the screening facilities. Three of the nine image sets included a small (< 5%) to moderate (20%) fraction of computed radiography (CR) cases, but the vast majority of images were from full-field digital mammography (FFDM) systems.

Data were collected from 905 certified radiologists and breast physicians who completed at least one of the test sets. Using a questionnaire, we collected the readers’ demographic details. Participants were recruited in nine different workshops. One test set was allocated to each workshop and made available to the participants. All interested radiologists and breast physicians who attended a workshop were allowed to complete its test set. Table 2 shows the readers’ responses.

Experimental protocol

Readings were conducted between January 2012 and January 2019, either at conference venues in rooms carefully designed to match radiologic reporting environments or in the reporting rooms of the radiologists’ practicing facilities. Half of the assessments (50.28%) were conducted in a conference venue. The radiologists viewed images using Sectra Breast Imaging PACS (Sectra, Linköping, Sweden; Hologic, Bedford, Mass). Options for zooming, panning, and windowing were available to readers. Ambient lighting was below 10 lx in the room. The workstations were linked to either two MFGD 5621 monitors (Barco, Kortrijk, Belgium) or two RadiForce G51 monitors (Eizo, Ishikawa, Japan). All monitors were calibrated to the Digital Imaging and Communications in Medicine gray-scale standard display function, had a contrast ratio of 365:1, and displayed a maximum luminance within 5% of 475 cd·m−2 and a minimum luminance of 1.3 cd·m−2.

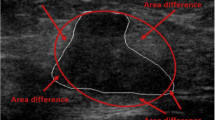

Each reader reported their findings per case using the Royal Australian and New Zealand College of Radiologists (RANZCR) rating system, which rates mammographic findings into the following five categories: (1) No significant abnormality, (2) Benign, (3) Equivocal, (4) Suspicious, and (5) Malignant. The RANZCR rating system is similar to the Tabár five-tier grading system [21]. Although it differs from the Breast Imaging Reporting and Data System (BI-RADS) classification [22], the two systems are translatable. Grades 1, 2, and 5 in the RANZCR system are identical to BI-RADS 1, 2, and 5, respectively. Cases graded as BI-RADS 3 or 4A correspond to RANZCR grade 3, while cases graded as BI-RADS 4B or 4C correspond to RANZCR grade 4. The readers had no prior knowledge of the prevalence and types of cancer included in the case sets. They were also asked to annotate the location of each cancer. From the readers’ ratings, a receiver operating characteristic (ROC) curve was generated and the area under the ROC curve (AUC) was calculated. All markings rated 3 or higher were considered positive when computing sensitivity, specificity, and lesion sensitivity.
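To make the scoring rule concrete, the following minimal Python sketch encodes the BI-RADS-to-RANZCR correspondence described above and computes case-level sensitivity, specificity, and an empirical AUC for one reader. The ratings are hypothetical, and the rank-based (Mann–Whitney) AUC is an assumption for illustration; the text does not state which ROC estimation method was used. Lesion sensitivity is omitted because it also requires the annotated lesion locations.

```python
# Correspondence between BI-RADS categories and RANZCR grades, as described in the text.
BIRADS_TO_RANZCR = {"1": 1, "2": 2, "3": 3, "4A": 3, "4B": 4, "4C": 4, "5": 5}

POSITIVE_THRESHOLD = 3  # ratings of 3 or more count as positive


def sensitivity_specificity(ratings, truth, threshold=POSITIVE_THRESHOLD):
    """Case-level sensitivity and specificity for one reader.

    ratings: RANZCR rating (1-5) per case; truth: True for cancer cases.
    """
    tp = sum(1 for r, t in zip(ratings, truth) if t and r >= threshold)
    tn = sum(1 for r, t in zip(ratings, truth) if not t and r < threshold)
    n_pos = sum(1 for t in truth if t)
    n_neg = len(truth) - n_pos
    return tp / n_pos, tn / n_neg


def empirical_auc(ratings, truth):
    """Area under the empirical ROC curve (Mann-Whitney form; ties count 0.5)."""
    pos = [r for r, t in zip(ratings, truth) if t]
    neg = [r for r, t in zip(ratings, truth) if not t]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical reader: four cancer cases followed by six normal cases.
ratings = [5, 4, 2, 3, 1, 1, 2, 3, 1, 2]
truth = [True, True, True, True, False, False, False, False, False, False]

sens, spec = sensitivity_specificity(ratings, truth)
auc = empirical_auc(ratings, truth)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} AUC={auc:.3f}")
```

In this toy example one cancer rated 2 is missed and one normal case rated 3 is recalled, which is why both sensitivity and specificity fall below 1.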

Proposed machine learning model

To predict the readers’ AUC values and lesion sensitivities, the readers’ characteristics were fed into two ensembles of regression trees (each with 250 trees, combined using the bagging method, with surrogate splits to handle missing data). Among all collected characteristics, participants’ gender was excluded because a considerable number of participants did not answer this question. We selected the ensemble-of-trees method because feature selection is embedded within the model and it can handle missing data. The AUC value provides a measure of overall accuracy and of the trade-off between case-level sensitivity and specificity, while lesion sensitivity indicates how well radiologists perform in annotating the cancer location (lesion-level analysis).
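The bagging idea can be sketched in a few lines of plain Python. This is a deliberately simplified illustration, not the MATLAB implementation used in the study: each tree is a depth-1 regression tree (a stump), missing values and surrogate splits are not handled, and the feature layout and AUC values are hypothetical.

```python
import random
from statistics import mean


def fit_stump(X, y):
    """Fit a depth-1 regression tree: pick the split with the lowest squared error."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            left = [t for row, t in zip(X, y) if row[j] <= thr]
            right = [t for row, t in zip(X, y) if row[j] > thr]
            if not left or not right:
                continue
            ml, mr = mean(left), mean(right)
            sse = sum((t - ml) ** 2 for t in left) + sum((t - mr) ** 2 for t in right)
            if best is None or sse < best[0]:
                best = (sse, j, thr, ml, mr)
    if best is None:  # no valid split (all sampled rows identical): predict the mean
        m = mean(y)
        return (0, float("inf"), m, m)
    return best[1:]


def predict_stump(stump, row):
    j, thr, left_mean, right_mean = stump
    return left_mean if row[j] <= thr else right_mean


def fit_bagged(X, y, n_trees=250, seed=0):
    """Train each tree on a bootstrap resample of the readers (bagging)."""
    rng = random.Random(seed)
    n = len(X)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        trees.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return trees


def predict_bagged(trees, row):
    """The ensemble prediction is the average of the individual tree predictions."""
    return mean(predict_stump(t, row) for t in trees)


# Hypothetical readers: [years reading mammograms, cases-per-week level, case set difficulty rank]
X = [[2, 1, 9], [4, 2, 3], [6, 3, 5], [10, 4, 1], [15, 5, 7], [20, 6, 2], [25, 4, 8], [30, 6, 4]]
y = [0.70, 0.74, 0.78, 0.85, 0.84, 0.90, 0.83, 0.91]  # hypothetical reader AUCs

trees = fit_bagged(X, y, n_trees=250, seed=0)
pred = predict_bagged(trees, [12, 4, 5])
print(f"predicted AUC for the hypothetical reader: {pred:.3f}")
```

Averaging over bootstrap resamples reduces the variance of the individual trees, which is the main reason bagged ensembles tend to generalise better than a single tree.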

We also included a case set difficulty measure. For each case set, we used the jackknife free-response receiver operating characteristic (JAFROC) figure of merit (FOM) [23], which measures the trade-off between lesion sensitivity and specificity. We ranked the case sets from 1 to 9 and used this rank as one of the regression model’s inputs (ordinal variable).

The ranking was based on the average JAFROC FOM from five radiologists, who used a similar platform to read the images. For six sets, readings from all five radiologists were available, while for three sets, readings from four of the five were available. All of these radiologists read 101–150 mammograms per week, had 10–16 years of experience in reading mammograms, were screen readers, and devoted 10–20 h of their practice to reading breast images.
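The ranking step can be illustrated with a short Python sketch. The set names and FOM values below are hypothetical, and the direction of the ranking (rank 1 assigned to the set with the highest mean FOM, i.e. the easiest set) is an assumption, since the text does not state it.

```python
from statistics import mean


def difficulty_ranks(fom_by_set):
    """Map each case set to an ordinal rank based on its mean JAFROC FOM.

    Assumption: rank 1 is the set with the highest mean FOM (easiest set).
    Ties are not handled specially in this sketch.
    """
    means = {name: mean(values) for name, values in fom_by_set.items()}
    ordered = sorted(means, key=means.get, reverse=True)
    return {name: rank for rank, name in enumerate(ordered, start=1)}


# Hypothetical FOM values from the reference radiologists (not study data).
fom_by_set = {
    "set_A": [0.80, 0.78, 0.82, 0.79, 0.81],
    "set_B": [0.70, 0.72, 0.69, 0.71],  # only four readings available
    "set_C": [0.75, 0.77, 0.74, 0.76, 0.73],
}

ranks = difficulty_ranks(fom_by_set)
print(ranks)
```

Averaging before ranking lets sets with four and five readings be compared on the same scale.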

Statistical analysis and validation

The mean AUC, sensitivity, specificity, and lesion sensitivity were calculated for the readers in each group of every categorical variable. Using the Kruskal–Wallis test, we investigated whether the performance metrics differed across the values of each variable (Table 2). For participants’ age, number of years reading mammograms, and number of years certified as a screening reader, we calculated the range of each quartile (presented in Table 2) and used the Kruskal–Wallis test to explore whether the performance metrics differed significantly between quartiles. When analysing a variable, readers who did not provide a response for that variable were omitted from the Kruskal–Wallis test. To adjust for confounding effects, we also calculated the adjusted odds ratio (OR) of various factors for having an AUC (and lesion sensitivity) higher than the median value. In all statistical tests, a p value of < 0.05 was considered statistically significant.

We performed the analysis twice. First, we treated the workload variables (hours and number of cases per week), number of years reading mammograms, and number of years certified as a screening reader as ordinal variables. Second, we categorised number of years reading mammograms and number of years certified as a screening reader into four categories (representing the four quartiles) and treated all variables as categorical. The remaining variables were treated as categorical in both analyses. We conducted the same process for lesion sensitivity. These statistical analyses were conducted in the R environment (version 3.6.0).

The performance of the models for predicting the AUC and lesion sensitivity was evaluated using leave-one-out cross-validation (LOOCV). We calculated the Pearson correlation coefficient between the values predicted by the models and the actual AUC and lesion sensitivity. The model’s output can also be thresholded and used to categorise readers as high-performers and low-performers. We grouped readers into two categories in five different ways based on their performance:

(1) Those in the lowest quartile (low-performers) versus those in the second, third, and highest quartiles (high-performers).

(2) Those in the lowest and second quartiles (low-performers) versus those in the third and highest quartiles (high-performers).

(3) Those in the lowest, second, and third quartiles (low-performers) versus those in the highest quartile (high-performers).

(4) Those in the lowest quartile (low-performers) versus those in the highest quartile (high-performers).

(5) Lowest one-third of the readers (low-performers) versus highest one-third of the readers (high-performers).
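The five groupings listed above can be expressed as a small labelling function. The sketch below is illustrative only: it uses rank-based quantile groups (an assumption about how ties and quartile boundaries are handled) and hypothetical AUC values.

```python
def quantile_groups(values, n_groups):
    """Assign each value to a rank-based group 0..n_groups-1 (0 = lowest)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    groups = [0] * len(values)
    for rank, i in enumerate(order):
        groups[i] = rank * n_groups // len(values)
    return groups


def scenario_labels(values, scenario):
    """Label readers for one scenario: 0 = low-performer, 1 = high-performer, None = excluded."""
    if scenario == 5:  # lowest third versus highest third
        thirds = quantile_groups(values, 3)
        return [0 if k == 0 else 1 if k == 2 else None for k in thirds]
    quartiles = quantile_groups(values, 4)
    if scenario == 4:  # lowest quartile versus highest quartile
        return [0 if k == 0 else 1 if k == 3 else None for k in quartiles]
    first_high_quartile = {1: 1, 2: 2, 3: 3}[scenario]
    return [1 if k >= first_high_quartile else 0 for k in quartiles]


# Hypothetical reader AUCs, already sorted to make the output easy to read.
aucs = [0.62, 0.65, 0.68, 0.70, 0.72, 0.75, 0.78, 0.80, 0.83, 0.85, 0.88, 0.91]

for s in range(1, 6):
    print(s, scenario_labels(aucs, s))
```

Scenarios 4 and 5 drop the readers in the middle of the distribution, so the classification task in those scenarios is evaluated on fewer readers than in scenarios 1 to 3.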

In each of these scenarios, we evaluated the model’s overall accuracy by generating an ROC curve, obtained by applying different cut-off values to the performance metric predicted by the model. The models were built and cross-validated in MATLAB 2018b (MathWorks, MA, US) on a desktop computer (Dell Precision 5820 Tower). The cross-validation processes for the AUC and lesion sensitivity models completed in 784 and 622 s, respectively.
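The validation procedure (leave-one-out prediction followed by a Pearson correlation between predicted and actual values) can be sketched as follows. To keep the example short, a one-variable least-squares line stands in for the ensemble of regression trees; that substitution, the function names, and the data are assumptions made for illustration.

```python
from math import sqrt
from statistics import mean


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def fit_line(xs, ys):
    """Least-squares line; a simple stand-in for the ensemble of regression trees."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def loocv_predictions(xs, ys):
    """Predict each reader's value from a model trained on all other readers."""
    preds = []
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        slope, intercept = fit_line(train_x, train_y)
        preds.append(slope * xs[i] + intercept)
    return preds


# Hypothetical data: years reading mammograms and reader AUC.
years = [1, 3, 5, 8, 12, 15, 20, 25]
aucs = [0.70, 0.74, 0.73, 0.80, 0.82, 0.85, 0.84, 0.90]

preds = loocv_predictions(years, aucs)
r = pearson_r(preds, aucs)
print(f"Pearson r between LOOCV predictions and actual AUC: {r:.2f}")
```

Because every prediction comes from a model that never saw the corresponding reader, the resulting correlation is an out-of-sample estimate rather than a goodness-of-fit measure.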

Currently, in most countries, interpretive volume serves as the main eligibility criterion for qualifying as a screen reader [2]. Therefore, for all five scenarios, a model relying only on reading volume (number of cases per week) and case set difficulty served as the comparison baseline. To build the baseline model, a linear regression model and an ensemble of regression trees were tested. Although the ensemble performed slightly better, the difference was not significant. For consistency in the comparison, the ensemble of regression trees was used as the baseline. It should be noted that this baseline is slightly more accurate, and more complicated, than simple thresholding of the reading volume.

Results

Table 2 presents the performance metrics. As shown, the p values for all performance metrics were significant for number of cases per week, number of hours per week, being a screen reader, number of years reading mammograms (# Years 1 in Table 2), and number of years certified as a screening reader (# Years 2 in Table 2). Although most of the p values for the last three variables in Table 2 were significant, adjustments for confounding factors and case set difficulty were required. The last three variables presented in Table 2 were significantly correlated with each other. Therefore, we only included number of years reading mammograms, which resulted in the highest non-adjusted OR for the AUC and lesion sensitivity. Table 3 shows the characteristics that led to an adjusted OR significantly greater than or less than 1. As stated, number of hours, reading volume, and years of experience were treated once as ordinal and once as categorical variables. Based on the first analysis presented in Table 3, a one-year increase in years of experience increases the odds of having an AUC above the median by 3% (CI 1%–5%; OR of 1.03). Also, a one-unit increase in the two ordinal variables representing the seven levels of interpretive hours and volume increases the odds of having an AUC above the median by 28% (CI 16%–40%; OR of 1.28) and 38% (CI 23%–55%; OR of 1.38), respectively.
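As a worked illustration of how these odds ratios are read, the short Python sketch below converts an OR into a percentage change in odds and compounds it over several units. The compounding assumes a constant OR per unit (the usual log-linear logistic-regression interpretation), which is an assumption about the underlying model; the function names are hypothetical.

```python
def percent_change_in_odds(odds_ratio):
    """Percentage change in odds for a one-unit increase."""
    return (odds_ratio - 1.0) * 100.0


def odds_multiplier(odds_ratio, units):
    """Multiplicative change in odds after `units` one-unit increases.

    Assumes the odds ratio per unit is constant (log-linear effect).
    """
    return odds_ratio ** units


# OR of 1.03 per additional year of experience (from the first analysis in Table 3).
print(round(percent_change_in_odds(1.03), 1))  # 3.0
print(round(odds_multiplier(1.03, 10), 3))     # 1.344

# OR of 1.28 per level of interpretive hours, compounded over two levels.
print(round(odds_multiplier(1.28, 2), 3))      # 1.638
```

Under this assumption, ten additional years of experience correspond to roughly 34% higher odds of an above-median AUC, not 30%, because the per-year effects multiply rather than add.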

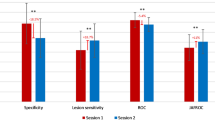

The ROC curves of the proposed method and the baseline comparison method for categorising readers (the second way of categorisation in the Statistical Analysis and Validation section and the second column in Table 4) are shown in Fig. 1. The baseline model represents how well one can categorise these two groups of readers if only the number of cases per week (a measure of reading volume) and case set difficulty are used. As indicated in the figure and Table 4, the proposed model outperformed the baseline model for categorising readers.

Fig. 1 The receiver operating characteristic (ROC) curves and their confidence intervals (dashed and dotted lines) for categorising readers as high- and low-performers using the median AUC value (the second way of categorisation in the Statistical Analysis and Validation section). The grey ROC curve represents the proposed ensemble of regression trees, and the black ROC curve represents how well one can categorise these two groups of readers if only the number of cases per week (a measure of reading volume) and case set difficulty are used.

The Pearson correlation coefficients between the values predicted by the ensemble models and the actual values were 0.59 (p < 0.001) for the AUC and 0.58 (p < 0.001) for lesion sensitivity. According to MATLAB’s predictorImportance function, the three most important features in the AUC model were number of years since certification, position, and number of cases per week, while the three most important features in the lesion sensitivity model were number of cases per week, number of hours per week, and number of years reading mammograms.

Table 4 shows the AUC values of the model for classifying the readers as high- or low-performers. The column numbers represent the five different ways of categorizing readers as low- and high-performers, as presented in the Statistical Analysis and Validation section. As screening guidelines mostly use reading volume as their main criterion for selecting screen readers, we also calculated the AUC obtained by using reading volume (as measured by number of cases per week) to classify readers. As explained earlier, test set difficulty was also fed into this baseline model.

The histogram of the model’s absolute error, i.e., the absolute difference between the actual and the predicted AUC, is shown in Fig. 2. As shown, the absolute error for most (91%) of the readers is less than 0.1. The relative error (absolute error divided by the actual value) was less than 10% for nearly 87% of readers.
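The two summary figures quoted here (the share of readers with an absolute error below 0.1 and the share with a relative error below 10%) can be computed as in the sketch below. The values are hypothetical, and dividing by the actual AUC to obtain the relative error is an assumption about how the error rate was defined.

```python
def error_summary(actual, predicted, abs_tol=0.1, rel_tol=0.10):
    """Fractions of readers whose absolute and relative errors fall below the tolerances."""
    abs_err = [abs(a - p) for a, p in zip(actual, predicted)]
    rel_err = [e / a for e, a in zip(abs_err, actual)]  # assumes actual values are non-zero
    n = len(actual)
    frac_abs = sum(e < abs_tol for e in abs_err) / n
    frac_rel = sum(r < rel_tol for r in rel_err) / n
    return frac_abs, frac_rel


# Hypothetical actual and predicted AUCs for four readers.
actual = [0.80, 0.90, 0.70, 0.50]
predicted = [0.82, 0.85, 0.85, 0.57]

frac_abs, frac_rel = error_summary(actual, predicted)
print(frac_abs, frac_rel)
```

The fourth reader shows why the two fractions can differ: an absolute error of 0.07 is below 0.1, but relative to an AUC of 0.50 it amounts to 14%.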

The proposed machine learning model can be used to analyze how sensitive a reader’s performance is to each variable. Examples of such analyses are shown in Fig. 3a-d. In Fig. 3a, b, we analysed the model’s output when sweeping the entire grid placed on a two-dimensional feature space including all possible pairs of years of experience in mammography and cases per week. As mentioned earlier, we included a variable to represent the case set difficulty. We simulated the results for the most difficult (a) and the easiest (b) case sets, for a screen reader without fellowship training. These figures indicate how the proposed model can be used to understand readers’ performance in case sets with different levels of difficulty. For example, as shown by the orange arrows in Fig. 3a, for the easiest case set, based on the model’s prediction, readers’ performance increased with a steeper slope from “ < 20” to “21–50” cases per week than from “None” to “ < 20”. On the other hand, for the most difficult case set, the model suggests that the reader’s performance steadily increases from “None” to “21–50” cases per week (orange arrow). From 51 cases per week onwards (dashed black arrows), the trends differ only slightly between the two test sets, and a peak is evident at 101–150 cases per week only for the most difficult test set.

Fig. 3 The output of the proposed machine learning model simulated for various values of number of cases per week and years of experience: a the simulation results for the most difficult case set; and b the simulation results for the easiest case set. The output of the proposed machine learning model simulated for all possible pairs of hours per week and cases per week: c the simulation results for the easiest case set; and d the simulation results for the most difficult case set.

As another example, we analysed the model’s output when sweeping the entire grid placed on a two-dimensional feature space including all possible pairs of hours per week and cases per week for a screen reader with fellowship training. The model was simulated for a highly experienced reader (24 years of experience). We assigned this reader the average age and years since certification of the readers with 20 to 25 years of experience in the original dataset. The simulated results are shown in Fig. 3 for the most difficult (c) and the easiest (d) case sets. As shown, the sensitivity of the predicted performance to changes in hours per week and cases per week depends on how difficult the case set is. For the easiest case set, the performance reaches a plateau for high-volume readers (interpretive volume ≥ 51) or those spending > 20 h reading mammograms. On the other hand, for the most difficult case set, the model exhibits an increasing trend.
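The grid-sweep analysis described above amounts to evaluating the trained model on every combination of two features while holding the remaining features fixed. The Python sketch below shows the mechanics only: `toy_predict` is a hypothetical stand-in with made-up coefficients, not the study’s model, and the feature names and grid values are assumptions.

```python
from itertools import product


def sweep_grid(predict, fixed, name_a, values_a, name_b, values_b):
    """Evaluate `predict` on every (a, b) pair while holding `fixed` features constant."""
    surface = {}
    for a, b in product(values_a, values_b):
        features = dict(fixed)  # copy, so the fixed profile is never modified
        features[name_a] = a
        features[name_b] = b
        surface[(a, b)] = predict(features)
    return surface


def toy_predict(features):
    """Hypothetical stand-in for the trained ensemble; coefficients are invented."""
    return (0.6
            + 0.005 * features["years_reading"]
            + 0.02 * features["volume_level"]
            - 0.01 * features["difficulty_rank"])


# Fixed reader profile (hypothetical): screen reader without fellowship training,
# evaluated on the case set with difficulty rank 9.
fixed = {"difficulty_rank": 9, "screen_reader": True, "fellowship": False}

surface = sweep_grid(
    toy_predict, fixed,
    "years_reading", range(0, 41, 10),  # 0, 10, ..., 40 years
    "volume_level", range(7),           # seven ordinal volume levels
)
print(len(surface), round(surface[(0, 0)], 2), round(surface[(40, 6)], 2))
```

Repeating the sweep with a different `difficulty_rank` in the fixed profile yields one surface per case set, which is how the panels for the easiest and most difficult sets can be compared.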

Discussion

This paper investigated how the readers' characteristics affect the performance of readers, using a very large dataset collected from 905 radiologists and breast physicians. Only a few previous studies considered the effect of radiologists’ characteristics on the overall accuracy [7,8,9,10, 12,13,14,15, 24, 25]. A study in the US [11] found no evidence of a relationship between overall accuracy and larger reading volume while in [4], in concordance with this study, a positive relationship between these two was noted.

We also proposed a model for predicting a reader’s AUC and lesion sensitivity based on the reader’s characteristics. The Pearson correlation values suggest a moderate level of correlation between the model’s predictions and the actual performance of the readers. To investigate the usefulness of the model, a baseline model for comparison was created based on case set difficulty and reading volume, as most screening program guidelines use the number of cases as their main criterion for selecting screening readers. The proposed machine learning model outperformed the baseline model in all scenarios for categorising high- and low-performing readers.

With a reliable tool to identify readers at an elevated risk of low performance, screening programs can establish more frequent and targeted quality assurance schemes for these readers and structure training programs for less experienced radiologists or trainees. Moreover, in settings with limited resources for training or quality assurance, the resources could be allocated more effectively to improve global diagnostic abilities. Feedback about radiologists’ decisions [7] and knowledge sharing [26] could improve readers’ performance. Identifying readers at risk of performing below the median and making feedback and knowledge sharing available to them could improve the overall screening program.

Moreover, our results showed that the proposed machine learning model outperformed the baseline model, which relied on mammographic reading volume. Currently, guidelines mostly use annual mammographic reading volume as a criterion for certification. The promising results obtained by the machine learning model suggest that it could be used for the certification of readers. It should be noted, however, that a criterion based on annual reading volume is easily understood by the workforce, clinic managers, and policymakers, whereas a more complex machine learning model is difficult to interpret. In particular, when someone is classified as unqualified, proper explanations should be provided. Therefore, before the model is used, efforts should be made to improve its explainability. Moreover, like any other machine learning model, the proposed model might misclassify individuals. The implications of such errors for the sensitivity and specificity of a screening program should be investigated. Finally, the feasibility of using this model should be investigated in the context of each screening program, considering the availability of the workforce.

Secondly, such a model can be used for pairing low- and high-performing readers in double reading practice. Recent studies showed that more benefits of double reading can be accrued by optimising the pairing [20, 27]. Such strategic matching would lower the chances of pairing two low-performing readers and thereby reducing the efficacy of double reading. However, a pre-requisite for translating this into practice is conducting detailed efficacy studies that consider the possible net change in the number of false positives and false negatives when pairing low- and high-performing readers, compared with random pairing. Another important point to consider when routinely pairing low- and high-performing readers in double reading practice is the radiology workforce shortage. A shortage of radiologists specialising in breast imaging is a recognized worldwide challenge [28,29,30], and the limited number of radiologists in a practice might hinder efforts to pair readers optimally. To some extent, wider utilization of teleradiology might help in adopting this pairing strategy, especially where there are significant workforce shortages. Therefore, besides cost-efficacy analysis, the available radiology workforce and the infrastructure for teleradiology should be taken into account.

This study had a few limitations. Firstly, the prevalence of cancer cases in the case sets was different from that in clinical practice. Mixed evidence about the prevalence effect has been reported [31], with studies showing both measurable performance decreases [32,33,34] and no change in performance [35]. A laboratory effect could also be a limitation [36]. Although it certainly affects our data, a significant positive correlation between laboratory and real practice performance was reported in a previous study [37]. As our model aims at classifying readers into high- and low-performers, the presence of such a correlation implies that our model could predict the performance of readers in real clinical practice to some extent. Moreover, approximately half of the assessments were conducted in the participants’ workplaces, and we were not able to ensure that the ambient light and monitor parameters matched those of the assessments conducted at the conferences. Also, although no previous study included such a large cohort of radiologists, the number of readers in some categories was limited.

As stated, to adjust for case difficulty, we added a variable ranging from 1 to 9 to represent the difficulty of the cases in a set. As we analysed the data retrospectively, we could not match the test sets based on their difficulty level. This is another limitation of our study. The difficulty measure was calculated based on assessments from five radiologists, who were included in the analysis. This could cause bias in the performance of the model. However, as these five readers comprised less than 0.56% of our sample, it is reasonable to assume that considerable bias was not introduced into the results.

Currently, among all input variables, only the test set difficulty describes the characteristics of the cases in our test sets. The quality of images produced by CR and FFDM units differs, and these differences could lead to a change in a reader’s performance [37]. Thus, in future work, variables describing the mammography unit manufacturer and technology could also be fed into the performance prediction model to improve its accuracy. In the current study, although images were acquired from various mammography units, for all test sets except one, the proportion of CR images was small or non-existent. Hence, our dataset did not provide sufficient power to include mammography technology as one of the inputs. Moreover, as stated in the Materials and Methods section, the senior radiologists who selected the cases ensured that only good quality images were included in the test sets. As all images had acceptable quality, a possible effect of different technologies and image processing methods on the visibility of cancer was potentially mitigated in our dataset. To explore the effect of adding variables describing the above-mentioned case characteristics on the accuracy of the model, a more diverse set of images should be used to create test sets in the future.

A more comprehensive set of reader characteristics could also be collected and fed into the model to improve its accuracy. For example, whether a reader works full-time or part-time [11] or is affiliated with an academic centre [38] could affect performance. The current study is a proof-of-concept study, and although the proposed machine learning model outperformed the baseline model for categorising high- and low-performing readers, the added benefit of variables describing additional case and reader characteristics can be explored in future work.

Abbreviations

- BREAST: Breast screen reader assessment strategy

- ROC: Receiver operating characteristics

- AUC: Area under ROC curve

- OR: Adjusted odds ratio

- LOOCV: Leave-one-out cross validation

- JAFROC: Jackknifing free response operating characteristic curve

- FOM: Figure of merit

References

Ekpo EU, Alakhras M, Brennan P. Errors in mammography cannot be solved through technology alone. Asian Pac J Cancer Prev: APJCP. 2018;19(2):291.

Hofvind S, Bennett R, Brisson J, Lee W, Pelletier E, Flugelman A, Geller B. Audit feedback on reading performance of screening mammograms: an international comparison. J Med Screen. 2016;23(3):150–9.

Elmore JG, Jackson SL, Abraham L, Miglioretti DL, Carney PA, Geller BM, Yankaskas BC, Kerlikowske K, Onega T, Rosenberg RD, Sickles EA, Buist DSM. Variability in interpretive performance at screening mammography and radiologists’ characteristics associated with accuracy. Radiology. 2009;253(3):641–51.

Theberge I, Chang SL, Vandal N, Daigle JM, Guertin MH, Pelletier E, Brisson J. Radiologist interpretive volume and breast cancer screening accuracy in a Canadian organized screening program. J Natl Cancer Inst. 2014. https://doi.org/10.1093/jnci/djt461.

Buist DSM, Anderson ML, Haneuse SJPA, Sickles EA, Smith RA, Carney PA, Taplin SH, Rosenberg RD, Geller BM, Onega TL, Monsees BS, Bassett LW, Yankaskas BC, Elmore JG, Kerlikowske K, Miglioretti DL. Influence of annual interpretive volume on screening mammography performance in the United States. Radiology. 2011;259(1):72–84.

Miglioretti DL, Smith-Bindman R, Abraham L, Brenner RJ, Carney PA, Bowles EJA, Buist DSM, Elmore JG. Radiologist characteristics associated with interpretive performance of diagnostic mammography. J Natl Cancer Inst. 2007;99(24):1854–63.

Elmore JG, Wells CK, Howard DH. Does diagnostic accuracy in mammography depend on radiologists’ experience? J Womens Health. 1998;7(4):443–9.

Molins E, Macià F, Ferrer F, Maristany MT, Castells X. Association between radiologists’ experience and accuracy in interpreting screening mammograms. BMC Health Serv Res. 2008. https://doi.org/10.1186/1472-6963-8-91.

Kim SH, Lee EH, Jun JK, Kim YM, Chang YW, Lee JH, Kim HW, Choi EJ, K. Alliance for Breast Cancer Screening. Interpretive performance and inter-observer agreement on digital mammography test sets. Korean J Radiol. 2019;20(2):218–24.

Timmers JMH, Verbeek ALM, Pijnappel RM, Broeders MJM, Den Heeten GJ. Experiences with a self-test for Dutch breast screening radiologists: lessons learnt. Eur Radiol. 2014;24(2):294–304.

Barlow WE, Chi C, Carney PA, Taplin SH, D’Orsi C, Cutter G, Hendrick RE, Elmore JG. Accuracy of screening mammography interpretation by characteristics of radiologists. J Natl Cancer Inst. 2004;96(24):1840–50.

Ciatto S, Ambrogetti D, Catarzi S, Morrone D, Rosselli Del Turco M. Proficiency test for screening mammography: results for 117 volunteer Italian radiologists. J Med Screen. 1999;6(3):149–51.

Reed WM, Lee WB, Cawson JN, Brennan PC. Malignancy detection in digital mammograms. Important reader characteristics and required case numbers. Acad Radiol. 2010;17(11):1409–13.

Rawashdeh MA, Lee WB, Bourne RM, Ryan EA, Pietrzyk MW, Reed WM, Heard RC, Black DA, Brennan PC. Markers of good performance in mammography depend on number of annual readings. Radiology. 2013;269(1):61–7.

Suleiman WI, Lewis SJ, Georgian-Smith D, Evanoff MG, McEntee MF. Number of mammography cases read per year is a strong predictor of sensitivity. J Med Imaging. 2014;1(1):015503.

Haneuse S, Buist DSM, Miglioretti DL, Anderson ML, Carney PA, Onega T, Geller BM, Kerlikowske K, Rosenberg RD, Yankaskas BC, Elmore JG, Taplin SH, Smith RA, Sickles EA. Mammographic interpretive volume and diagnostic mammogram interpretation performance in community practice. Radiology. 2012;262(1):69–79.

Hoff SR, Myklebust TÅ, Lee CI, Hofvind S. Influence of mammography volume on radiologists’ performance: results from BreastScreen Norway. Radiology. 2019;292(2):289–96.

Théberge I, Hébert-Croteau N, Langlois A, Major D, Brisson J. Volume of screening mammography and performance in the Quebec population-based breast cancer screening program. CMAJ. 2005;172(2):195–9.

Martinez AG, Martinez RO, Villalba V, Martinez MG, Lancis CV. Quality assurance in breast cancer screening: identifying radiologist’s under performance, ECR 2017. Insights Imaging. 2017;8:1–583. https://doi.org/10.1007/s13244-017-0546-5.

Brennan PC, Ganesan A, Eckstein MP, Ekpo EU, Tapia K, Mello-Thoms C, Lewis S, Juni MZ. Benefits of independent double reading in digital mammography: a theoretical evaluation of all possible pairing methodologies. Acad Radiol. 2019;26(6):717–23.

Tabár L, Dean P. Teaching atlas of mammography. New York, NY: Thieme-Stratton; 1983. p. 88–136.

American College of Radiology. ACR BI-RADS atlas: breast imaging reporting and data system, vol. 2014. Reston, VA: American College of Radiology; 2013. p. 37–78.

Chakraborty DP. Analysis of location specific observer performance data: validated extensions of the jackknife free-response (JAFROC) method. Acad Radiol. 2006;13(10):1187–93.

Beam CA, Conant EF, Sickles EA. Association of volume-independent factors with accuracy in screening mammogram interpretation. J Natl Cancer Inst. 2003;95(4):282–90.

Burnside ES, Lin Y, Munoz Del Rio A, Pickhardt PJ, Wu Y, Strigel RM, Elezaby MA, Kerr EA, Miglioretti DL. Addressing the challenge of assessing physician-level screening performance: mammography as an example. PLoS ONE. 2014. https://doi.org/10.1371/journal.pone.0089418.

Taba ST, Hossain L, Heard R, Brennan P, Lee W, Lewis S. Personal and network dynamics in performance of knowledge workers: a study of Australian breast radiologists. PLoS ONE. 2016. https://doi.org/10.1371/journal.pone.0150186.

Gandomkar Z, Tay K, Brennan PC, Kozuch E, Mello-Thoms C. Can eye-tracking metrics be used to better pair radiologists in a mammogram reading task? Med Phys. 2018;45(11):4844–56.

European Society of Radiology (ESR), American College of Radiology (ACR). European Society of Radiology (ESR) and American College of Radiology (ACR) report of the 2015 global summit on radiological quality and safety. Insights Imaging. 2016;7:481–4. https://doi.org/10.1007/s13244-016-0493-6.

Wing P, Langelier MH. Workforce shortages in breast imaging: impact on mammography utilization. Am J Roentgenol. 2009;192(2):370–8.

Bassett LW, Monsees BS, Smith RA, Wang L, Hooshi P, Farria DM, Sayre JW, Feig SA, Jackson VP. Survey of radiology residents: breast imaging training and attitudes. Radiology. 2003;227(3):862–9.

Horowitz TS. Prevalence in visual search: from the clinic to the lab and back again. Jpn Psychol Res. 2017;59(2):65–108.

Wolfe JM. Use-inspired basic research in medical image perception. Cogn Res Princ Implic. 2016;1(1):17.

Ethell SC, Manning D. Effects of prevalence on visual search and decision making in fracture detection. International Society for Optics and Photonics; 2001. p. 249–57.

Egglin TK, Feinstein AR. Context bias: a problem in diagnostic radiology. JAMA. 1996;276(21):1752–5.

Gur D, Bandos AI, Fuhrman CR, Klym AH, King JL, Rockette HE. The prevalence effect in a laboratory environment: changing the confidence ratings. Acad Radiol. 2007;14(1):49–53.

Gur D, Bandos AI, Cohen CS, Hakim CM, Hardesty LA, Ganott MA, Perrin RL, Poller WR, Shah R, Sumkin JH. The “laboratory” effect: comparing radiologists’ performance and variability during prospective clinical and laboratory mammography interpretations. Radiology. 2008;249(1):47–53.

Scott HJ, Evans A, Gale AG, Murphy A, Reed J. The relationship between real life breast screening and an annual self assessment scheme. International Society for Optics and Photonics; 2009. p. 72631E.

Jackson SL, Abraham L, Miglioretti DL, Buist DSM, Kerlikowske K, Onega T, Carney PA, Sickles EA, Elmore JG. Patient and radiologist characteristics associated with accuracy of two types of diagnostic mammograms. Am J Roentgenol. 2015;205(2):456–63.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This study was funded by National Health and Medical Research Council (grant number APP1162872) and National Breast Cancer Foundation (IIRS-18–089).

Ethics declarations

Conflict of interest

ZG declares that she has no conflict of interest. TL declares that she has no conflict of interest. EE declares that he has no conflict of interest. SJL declares that she has no conflict of interest. PCB declares that he has no conflict of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. All participants provided informed consent to use their de-identified data in research studies.

About this article

Cite this article

Gandomkar, Z., Lewis, S.J., Li, T. et al. A machine learning model based on readers’ characteristics to predict their performances in reading screening mammograms. Breast Cancer 29, 589–598 (2022). https://doi.org/10.1007/s12282-022-01335-3

DOI: https://doi.org/10.1007/s12282-022-01335-3