Abstract

Purpose

To evaluate a self-test for Dutch breast screening radiologists introduced as part of the national quality assurance programme.

Methods and materials

A total of 144 radiologists were invited to complete a test set of 60 screening mammograms, 20 of which contained a malignancy. Participants recorded their findings for each case, including lesion location, lesion type and BI-RADS category. We determined the area under the receiver operating characteristic (ROC) curve (AUC), case and lesion sensitivity, case specificity, interobserver agreement (kappa) and the correlation between reader characteristics and case sensitivity (Spearman correlation coefficients).
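As an illustration of two of the measures named above, the following minimal Python sketch computes case sensitivity, case specificity and Cohen's kappa for recall decisions. It is not the authors' analysis: the two readers and their decisions are hypothetical, only the 60-case, 20-malignancy layout is taken from the text, and the pairwise form of kappa is an assumption, since the abstract does not state how agreement was aggregated over readers.

```python
from collections import Counter


def sensitivity_specificity(truth, calls):
    """Return (sensitivity, specificity) for boolean lists.

    truth[i] is True if case i is malignant; calls[i] is True if recalled.
    """
    true_pos = sum(t and c for t, c in zip(truth, calls))
    true_neg = sum((not t) and (not c) for t, c in zip(truth, calls))
    n_pos = sum(truth)
    n_neg = len(truth) - n_pos
    return true_pos / n_pos, true_neg / n_neg


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e)."""
    n = len(ratings_a)
    # Observed agreement: fraction of cases rated identically.
    p_o = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement from each rater's marginal category frequencies.
    count_a, count_b = Counter(ratings_a), Counter(ratings_b)
    categories = count_a.keys() | count_b.keys()
    p_e = sum(count_a[k] * count_b[k] for k in categories) / (n * n)
    return (p_o - p_e) / (1 - p_e)


# Hypothetical data: 60 cases, the first 20 malignant (layout from the text).
truth = [True] * 20 + [False] * 40
# Hypothetical recall decisions of two readers (not from the study).
reader_a = [True] * 18 + [False] * 2 + [True] * 2 + [False] * 38
reader_b = [True] * 17 + [False] * 3 + [True] * 4 + [False] * 36

sens_a, spec_a = sensitivity_specificity(truth, reader_a)
kappa_ab = cohen_kappa(reader_a, reader_b)
print(f"reader A: sensitivity={sens_a:.2f}, specificity={spec_a:.2f}")
print(f"recall agreement A vs B: kappa={kappa_ab:.2f}")
```

Kappa corrects the raw agreement for the agreement expected by chance, which is why it is lower than the simple proportion of cases on which two readers agree.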

Results

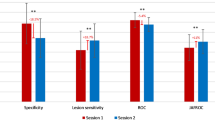

A total of 110 of the 144 invited radiologists (76 %) completed the test. Participants read a median of 10,000 screening mammograms per year. The median AUC was 0.93, case and lesion sensitivity were 91 % and case specificity was 94 %. We found substantial agreement for recall (κ = 0.77) and laterality (κ = 0.80), moderate agreement for lesion type (κ = 0.57) and BI-RADS (κ = 0.45), and no correlation between case sensitivity and reader characteristics.

Conclusion

Areas under the ROC curve, case sensitivity and lesion sensitivity were satisfactory and recall agreement was substantial. However, agreement in lesion type and BI-RADS could be improved; further education might be aimed at reducing interobserver variation in interpretation and description of abnormalities. We offered individual feedback on interpretive performance and overall feedback at group level. Future research will determine whether performance has improved.

Key Points

• We introduced and evaluated a self-test for Dutch breast screening radiologists.

• ROC curves, case and lesion sensitivity and recall agreement were all satisfactory.

• Agreement in BI-RADS interpretation and description of abnormalities could be improved.

• These are areas that should be targeted with further education and training.

• We offered individual feedback on interpretive performance and overall group feedback.

Acknowledgements

The authors would especially like to acknowledge the careful work of Paul van de Looi in organising and developing the test set. We also thank the expert panel for their time in composing the test set and the participating radiologists for providing the data for this work.

Cite this article

Timmers, J.M.H., Verbeek, A.L.M., Pijnappel, R.M. et al. Experiences with a self-test for Dutch breast screening radiologists: lessons learnt. Eur Radiol 24, 294–304 (2014). https://doi.org/10.1007/s00330-013-3018-4

DOI: https://doi.org/10.1007/s00330-013-3018-4