Abstract

Purpose

Although pharmaceutical high-speed filling lines are fully automated, interventions are still performed by hand via glove ports. Bringing robots into the high-speed production lines for the handling of interventions could increase both the throughput and the sterility of the system. The work presented in this manuscript proposes a semi-autonomous intervention strategy for the removal of erroneous plungers.

Methods

A YOLOv8-based computer vision algorithm continuously monitors the state of the plunger feed. Autonomous motion planning is used for large, general movements, while human-operated teleoperation with system guidance allows for small, precise motions. The combination of both creates an intuitive, gloveless intervention method.

Results

A user test with a group of 22 volunteers shows that an untrained operator can perform the teleoperated intervention via this system in an average of roughly \(30~{\text {s}}\) and \(21~{\text {s}}\) when the plungers are static and dynamic respectively. Via a Likert scale-based questionnaire, it was found that the test group experienced the haptic system as very intuitive. Additionally, the system is capable of running completely remotely via a two-laptop setup, which has been tested at distances up to \(100~{\text {k}\text {m}}\).

Conclusion

An intuitive, haptic semi-autonomous teleoperation system is created for the execution of a plunger removal intervention. Both in a static and dynamic scenario, an improvement of the operating time is observed compared to the current glove-based method (2 to 3 min). The system can run fully remotely and was found to be very intuitive by the user group.

Introduction

Pharmaceutical production differs from more traditional industrial manufacturing processes in many ways, the most obvious being the demand for outstanding product quality when it comes to the aseptic nature of the process, as this could mean the difference between life and death of a patient. This implies that significant amounts of time are spent in ensuring that the system is sufficiently sterile. Because of this, any reduction of the time needed to reach the required level of sterility is a beneficial addition to the manufacturing process. With reduced downtime, the line can be operational for longer in the same amount of time, increasing its throughput and yield, which in turn results in a financial benefit.

Although the current state-of-the-art large-scale batch production isolator filling line principle has stood the test of time, one major remaining bottleneck is the use of rubber gloves on the side for performing interventions. As they have non-rigid forms with overlapping sections, the gloves are difficult to thoroughly clean and sterilise/decontaminate, meaning their cleaning process consumes a lot of time. An even more pressing drawback is that small punctures in a glove could cause a jet of lower-grade outside air to enter the isolator when an operator puts their hand in the glove, due to the generated over-pressure. For these reasons, all gloves need to be checked periodically to verify and ensure their integrity. These checks are mainly performed visually after an intervention has been performed and can reliably detect defects in the glove of 400 to \(500~{\upmu \text {m}}\) [1]. Professional glove leak testing equipment can detect holes in the range of \(100~{\upmu \text {m}}\) [1]. One important aspect that should be mentioned here is that the air surrounding the isolator setup is not regular outside air, but air of grade C quality, which is already highly sterile [2]. It goes without saying that pharmaceutical companies are fully aware of these glove drawbacks, and it cannot be stressed enough that every necessary measure is taken to ensure the safety and quality of the product. The presence of gloves does not bring an inherent risk to the aseptic production process, but eliminating their drawbacks via appropriate measures comes at a large cost in time. A significant and costly effort is made towards the validation of the gloves in the system. Additionally, these measures can also impede the execution of the interventions themselves, causing them to require more operating time and possibly a full line interruption if the intervention is not compliant with the validated glove port procedures, both of which cause significant downtime for the production process.

This manuscript proposes a proof-of-concept approach for performing a common intervention within the isolator filling line, namely the removal of erroneous plungers or stoppers. This showcases the capabilities and potential of robots for interventions. The goal of this is to pave the way for full robotic integration in both a fully autonomous and (guided) teleoperative way within future isolator setups, and ultimately the complete removal of gloves.

Robotisation within pharmaceutical production systems is not a new concept. Examples of gloveless isolator filling can be found in [3,4,5,6,7]. However, these systems opt to overhaul the production principle by robotising the filling process itself. These methods are designed with drug research in mind, aiming at low-scale, highly customisable manufacturing. They have proven difficult or impossible to scale to high-speed 600 vial-per-minute batch production lines and are therefore not suitable. For this reason, in this work, the robotisation takes place alongside the filling process, by (semi)automating the currently manual interventions instead of the already automated process itself. Employing robots for interventions offers multiple benefits compared to human glove-based interventions, even in a teleoperative setup. Assuming the robot itself is validated to work inside the aseptic environment, it is part of the entire isolated filling machine, meaning it can be cleaned and maintained as any other part inside the isolator (an industrial robot requires preventative maintenance every 12 months or 10,000 running hours on average). As a robot does not require any breaks, a long 10-day production batch could theoretically run continuously.

This approach is a direct continuation of our work proposed in [8], where we created a haptic teleoperation system using both sensor-based and virtual environment-based force feedback to improve the safety during control and the sense of spatial awareness of the operator. The continuous state monitor would calculate and anticipate the future movements of the robot based on the operator’s input, and alter them as required to prevent the robot from colliding with the environment or entering singular robot configurations. Our tests with a group of 14 volunteers with no prior knowledge of the system showed promising results in terms of intuitiveness, with an overall improvement of \(22.8~\%\) of the measured required operating times. The inter-quartile range of these times also reduced significantly by \(82.7~\%\). Lastly, by evaluation via a four-point Likert-scale, \(92.0~\%\) of all replies fell in the positive or very positive category, indicating a pleasant control experience. Whereas this control method was designed to be a general control strategy to freely and safely manipulate the robot within the known environment, the presented work expands this by specialising the architecture towards one intervention. Additionally, [8] assumes the operator is sitting next to the robot setup, granting them direct visual feedback of the system. In contrast, for this work, the operator is performing the intervention completely remotely from a control station separate from the physical robot setup. He or she utilises a haptic control interface to control the manipulator in real-time with camera-based visual feedback. Because of this, it is imperative that the system is user-friendly under the constraints of video stream-based feedback, as this is the only visual feedback available to the operator during the intervention. Lastly, the presented work is fully developed in ROS2 (Robot Operating System), while the previous work was made in ROS1.
ROS1 was primarily designed as a research-oriented robot tool, and was therefore never intended to be used in industrial applications or commercial use cases. For this reason, elements surrounding safety, security, stability, and network topology were never a priority, and are not feasible in the ROS1 architecture. ROS2 addresses these flaws and focuses on industrial applications and integration, with some notable benefits over its predecessor being:

- The possibility to use multiple machines together directly via Wi-Fi thanks to the use of the Data Distribution Service (DDS) protocol [9];
- Guaranteed security measures thanks to the use of the established and tested DDS protocol, allowing for safe communication over insecure networks (such as the internet);
- Customisable communication preference via the Quality of Service (QoS) parameters offered via DDS;
- True real-time computation and communication capability for high runtime efficiency.

Materials and Methods

The presented work is built and tested on a testbed setup which is dimensionally accurate to a real-life isolator filling line. It is designed to be modular in nature, so different interventions can easily be copied, constructed and moved around. The Stäubli TX2-60L SteriClean 6-DoF robot was selected for this use case as it is compliant with the \(H_{2}O_{2}\)-based Vaporised Hydrogen Peroxide (VHP) decontamination process, making it viable for use according to the Current Good Manufacturing Practice (CGMP) guidelines [10]. At the time of writing, the same robot is also available in an even more compliant grade (SteriClean+). This robot has a usable range of \(920~{\text {m}\text {m}}\), meaning 4 robots would be required to cover the entire length of the production line. However, a more interesting approach would be to mount the robot on an external linear axis. With this, one robot would be sufficient as it could move alongside the production line.

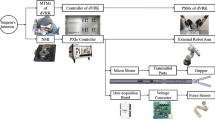

The robot is controlled by the operator using a Geomagic Touch haptic interface [11], as was also used in [8]. It is constructed as a 6-DoF link system with a pen-shaped end-effector for the operator to handle. A one-to-one replica of the stainless steel plunger feed rail used in production was made to accurately model the behaviour of the rail under different lighting conditions, making the setup as authentic as possible for the computer vision-based detection algorithm. For accurate simulation of the movement of the plungers, an additional rail is added which consists of a transport belt design driven by a NEMA-17 stepper motor. A picture of the test setup can be found in Fig. 1.

Fig. 1 Real-world test setup, equipped with a one-to-one recreation of the stainless steel plunger rail, a dynamic belt-driven plunger rail for movement simulation and a computer vision system with two polarised light sources and an industrial UEye camera with polarisation filter. The robot is fitted with a vacuum tool with an integrated force-torque sensor

Computer Vision System and Anomaly Detection

The setup is equipped with a computer vision system, running an IDS-imaging UEye UI-5240-CP-C-HQ industrial camera, combined with a polarisation filter and light sources emitting polarised light. As both the plungers and the stainless steel rail are in a similar colour space, the detection algorithm experiences difficulties in consistent and robust detection of the objects when using natural lighting. For this reason, polarised light is utilised to generate a better contrast between the rubber plungers and the stainless steel rail when compared to natural light. As explained in [12], polarised light reacts differently to the different materials due to the reduction of specular reflection. This effect is shown in Fig. 2.

From Fig. 2, the difference between the lighting setups becomes apparent. When using a bright field illumination technique, the polarised light creates the desired contrast between the plungers and the rail. However, the difference between correct and incorrect plungers is hard to notice. A dark field illumination strategy casts a shadow in the hole of the incorrect plungers, creating a noticeable visual distinction between the correct and the incorrect plungers, combined with the added contrast between the two materials under polarised light. This drastically improves the detectability of the incorrect plungers for the vision algorithm. A second light source is fitted, which shines over the plungers and eliminates the undesirable shadows cast by the robot itself. It also illuminates the ArUco marker on the robot tool [13], used for a close-up camera view for the operator’s convenience when executing fine movements with the robot. This can be viewed in Fig. 5c. A schematic of this setup can be found in Fig. 3.

Fig. 3 Schematic of the lighting system used in the demo setup. One polarised light source illuminates the plungers via dark field illumination, which also casts shadows in the incorrect plungers for better object detection. The other source eliminates shadows created by the robot and illuminates the ArUco marker on the robot tool which is used to create a zoomed-in view to aid the operator during execution of the intervention

Object and anomaly detection is realised using the YOLOv8 (You Only Look Once) computer vision algorithm [14,15,16]. As stated by [16], the fundamental principles behind the algorithm stay mostly the same across versions. However, each version of YOLO builds upon its predecessor by implementing new features and reforming the architecture for enhanced learning capabilities and better hardware utilisation. For this reason, it was decided to use the latest version of the algorithm which was available at the time, being YOLOv8. The algorithm is capable of detecting everyday objects via the COCO dataset [17]. To facilitate the detection of our custom plunger objects, transfer learning is applied by training YOLOv8 with roughly 150 custom, labeled images of the plungers. Three detection categories are created: (1) upside down, (2) sideways and (3) correct orientation. Upside down and sideways plungers are labeled individually, instead of sharing one ‘incorrect’ tag, because these cases are resolved in different ways during the intervention. The plungers have ridges on the side which cause them to interlock when they are either correctly oriented or upside down. When an upside down plunger is removed by simply lifting it up, the surrounding plungers are therefore lifted as well, creating a harmonica-effect which has a high chance of causing new issues. For this reason, these cases are resolved by scooping the plunger out in order to clear the locked ridges. When the plunger is on its side, this effect does not occur, and the plunger can be lifted out without any issue. The correct plungers are monitored to gauge the state of the system. If there is an anomalous fill rate in one of the lanes without observing an incorrect plunger, this could indicate an obstruction outside of the view of the camera, which should still be signaled.
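The post-detection logic described above can be sketched in a few lines. This is only an illustration under stated assumptions: the class names, the `Detection` layout, and the `min_correct` threshold used to decide that a fill rate is anomalous are hypothetical and are not taken from the actual implementation.

```python
from collections import Counter
from dataclasses import dataclass

# Class names mirror the three categories in the text (hypothetical identifiers).
UPSIDE_DOWN, SIDEWAYS, CORRECT = "upside_down", "sideways", "correct"

# Removal strategy per anomaly class: interlocked plungers are scooped,
# sideways plungers can simply be lifted.
STRATEGY = {UPSIDE_DOWN: "scoop", SIDEWAYS: "lift"}


@dataclass
class Detection:
    lane: int    # index of the plunger lane (hypothetical field)
    label: str   # one of the three class names above


def removal_strategy(label):
    """Return 'scoop', 'lift', or None for a correctly oriented plunger."""
    return STRATEGY.get(label)


def lanes_to_signal(detections, n_lanes, min_correct):
    """Lanes with fewer than `min_correct` correct plungers and no visible
    incorrect plunger, which may point to an obstruction outside the view."""
    correct = Counter(d.lane for d in detections if d.label == CORRECT)
    anomalous = {d.lane for d in detections if d.label != CORRECT}
    return [lane for lane in range(n_lanes)
            if correct[lane] < min_correct and lane not in anomalous]
```

In this sketch a lane that already shows an incorrect plunger is not reported a second time, since the intervention itself addresses that lane.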

Robot Control

The robot is controlled in both a fully automated and a (guided) teleoperative way. This combines the best of both worlds as large, repetitive motions can be generated and executed automatically and collision-free without human help, while fine motions are aided by a human operator taking control. As the working environment is fully known a priori, a simplified model is used for collision prevention and virtual haptic feedback generation. The planning algorithm that is employed will utilise the geometric information of the environment to automatically create collision-free robot trajectories. The virtual robot environment is displayed in Fig. 4.

After detection of an incorrect plunger, the robot can move to the lane of the plunger autonomously using the linear planning capabilities of the Pilz motion planning library via the MoveIt!2 planning interface [18]. After this, the operator is given control of the robot to grasp the anomaly, after which the robot will automatically remove the plunger and move back to its safe home position, where it will dispose of the plunger in the waste area accordingly. As is customary for the current real-life manual intervention, the plunger is disposed of by dropping it to the bottom plate of the setup. For a demonstration of the system, please visit https://www.youtube.com/watch?v=Fj9pDML6jlo.

Once the operator takes control, the input has to be translated to a format which can be understood by the robot. This is the so-called twist format, which consists of a linear and an angular velocity vector, each containing the three Cartesian axes. The rotational axes are locked, as these are complicated for the user to control appropriately in a full six degree of freedom system. For the linear velocities, a quadratic velocity scaling function is used to allow large movements when the pen of the interface is moved quickly, and precise, fine movements when the pen is moved slowly. These fine movements are hard to accomplish with a linear scaling. The quadratic scaling offers a more natural feeling ramp-up in the low-velocity region, which is also why quadratic input scaling is commonly used in video game applications. The scaling is achieved by comparing the latest pose of the interface pen to the previous one, taking the elapsed time between the two measurements into account. The quadratic formulations are denoted in Eqs. 1, 2 and 3.

With \(T_x\) being the linear x-axis velocity of the twist message, \(P_x\) and \(P^{\prime }_x\) being the Cartesian x-coordinate of the interface in the current and previous time step respectively, \(\Delta t\) the elapsed time between both time steps, and \(\alpha\) the scaling factor. The same naming convention applies to the y and z axis calculations.
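A minimal sketch of such a quadratic scaling is given here, assuming a sign-preserving square of the finite-difference pen velocity multiplied by \(\alpha\). The exact form of Eqs. 1 to 3 may differ; the function names are illustrative only.

```python
import math


def quadratic_twist_component(p_now, p_prev, dt, alpha):
    """Scale one linear axis: alpha * sign(v) * v**2, with v = (P - P') / dt.

    Assumed form, not necessarily the one used in the original system.
    """
    if dt <= 0.0:
        raise ValueError("dt must be positive")
    v = (p_now - p_prev) / dt                 # raw pen velocity on this axis
    return alpha * math.copysign(v * v, v)    # squared magnitude, same direction


def linear_twist(pose_now, pose_prev, dt, alpha):
    """Apply the same scaling to the x, y and z coordinates of the pen."""
    return tuple(quadratic_twist_component(a, b, dt, alpha)
                 for a, b in zip(pose_now, pose_prev))
```

With this form, a pen velocity below one unit per second is attenuated while a faster motion is amplified, which matches the intent of fine control at low speed and large movements at high speed.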

As stated above, to facilitate easier control, the rotational axes of the robot are locked. Additionally, the robot tool is only allowed to move within the 2D plane of the plunger lane, perpendicular to the stainless steel rail. As the camera is set up directly facing the rail head-on, this reduces the original 3D movement problem of the intervention to a 2D scenario, increasing the intuitiveness for the operator and simplifying the controls significantly when operating from a 2D video stream.
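One way to express this constraint is to project the commanded linear velocity onto the lane plane and zero the angular part. The sketch below assumes the plane is described by a unit normal vector; this representation and the function names are assumptions made for illustration.

```python
def lock_to_plane(linear, normal):
    """Remove the component of `linear` along the unit `normal` of the lane plane."""
    dot = sum(v * n for v, n in zip(linear, normal))
    return tuple(v - dot * n for v, n in zip(linear, normal))


def constrained_twist(linear, normal):
    """Return (linear, angular) with in-plane translation and locked rotation."""
    return lock_to_plane(linear, normal), (0.0, 0.0, 0.0)
```

For a plane with normal along the y axis, a command of (1, 2, 3) is reduced to (1, 0, 3), so any input pushing the tool out of the lane is discarded.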

Haptic Feedback

Haptic feedback is generated to give the operator a better sense of awareness inside the operating environment, as well as to prevent undesired, dangerous situations. Haptics are induced in both a virtual and a physical manner.

Virtual haptic feedback implies feedback that is generated based on mathematical equations derived from the virtual environment and the state of the robot. The first form of virtual feedback is implemented for safety of the environment and the robot. It occurs when the operator would control the robot in close proximity to the environment similar to the implementation in our previous work [8]. In this case, haptics are generated to push the operator back to prevent them from sending the robot into a collision state. This is achieved by measuring the closest distance between the robot and the virtual environment continuously, and calculating the feedback force according to this distance value using Eq. 4.

With \(F_{feedback}\) being the generated feedback force, and x the measured distance between the robot and the environment in cm. This function was designed to give a pleasant scaling of feedback as the robot approaches the collision geometry, with a maximum of \(3~{\text {N}}\) of force applied when the distance nears zero, as this is the maximum force that can be applied by the haptic interface.

A second form of virtual haptic feedback is applied to simulate a realistic feel to the operator while handling the robot. Once he or she takes control via the haptic interface, the generated feedback will force the pen of the interface to move inside the plane of the plunger lane, similar to the robot itself. If the operator moves the pen outside of the active working plane, feedback pulls or pushes the pen back to the active plane according to Eqs. 5 and 6. This improves intuitiveness by stimulating the operator to move inside the active movement plane, creating the feeling of a guided movement inside the rail. At this point, it is not possible to manually alter the selected lane. This could be an issue if an error in the detection algorithm sends the robot to the incorrect lane of the rail. Resolving this is left as future work.

where x is expressed in m. The further the operator moves the pen from the active plane, the more force is applied to the pen of the interface. When the pen is moved \(15~{\text {m}\text {m}}\) or more from the plane to either side, the maximum force of \(3~{\text {N}}\) is applied.
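The behaviour described for the plane guidance can be sketched as a saturating spring. The linear ramp between zero and the \(15~{\text {m}\text {m}}\) saturation point is an assumption; only the \(3~{\text {N}}\) limit and the \(15~{\text {m}\text {m}}\) threshold come from the text, and Eqs. 5 and 6 may use a different profile.

```python
MAX_FORCE_N = 3.0          # maximum force of the haptic interface (from the text)
SATURATION_DIST_M = 0.015  # offset at which the maximum force is reached (from the text)


def plane_guidance_force(x_m):
    """Restoring force in N for a signed pen offset `x_m` (m) from the active plane.

    Assumed linear ramp, clamped to +/- MAX_FORCE_N. The sign opposes the
    offset so the pen is pushed or pulled back towards the plane.
    """
    stiffness = MAX_FORCE_N / SATURATION_DIST_M   # 200 N/m under this assumption
    force = -stiffness * x_m
    return max(-MAX_FORCE_N, min(MAX_FORCE_N, force))
```

Under the linear assumption the equivalent stiffness is 200 N/m, so an offset of \(7.5~{\text {m}\text {m}}\) yields half of the maximum force.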

(Remote) Intervention

The teleoperation system can be used completely remotely from the physical robot setup. All the operator requires at the remote location is the control interface and a laptop, reading in the commands and supplying them to the main compute unit, which runs locally at the test setup. The main compute unit is responsible for all the ‘heavy lifting’ of the task, to keep the compute cost at the remote side as minimal as possible. This way, the required data transfer between the two units is kept low, minimising the required bandwidth. Remote communication is established by utilising the wireless, DDS-based same-network communication features of ROS2, combined with TCP-based socket connections used for video streaming. To be able to use these communication features, it is required that both computers are connected to the same network, or at least believe that they are. This is realised via the open source ZeroTier service [19]. When both computers are connected to each other via ZeroTier, they are placed on the same virtual network, allowing them to communicate as if they were connected locally. When running the system remotely, a sufficient internet connection is essential. It was found that the network must be able to provide a stable, symmetrical connection, with a bandwidth of at least roughly 300 Mbit/s for both upload and download. A wired connection is preferred as it provides a more stable connection. The remote test was executed with a distance of roughly \(100~{\text {k}\text {m}}\) between the main compute unit and the remote laptop, running continuously for 8 hours without interruptions or errors. Having this remote capability would allow the construction of one centralised control room in the production facility, from which multiple isolator systems could be monitored and interventions could be executed, without the operators having to be present at the physical isolator setup.

The operator is supplied with three windows to perform the intervention, which are displayed in Fig. 5. The first screen (Fig. 5a) is the heads-up display (HUD), showing the result of the object detection algorithm, combined with the fill rate of each individual plunger rail. This way, the operator can quickly gauge the state of the system. The second screen (Fig. 5b) is the command window which (1) guides the operator through the steps of the intervention by telling them what to do, and (2) sends commands to the robot when the operator has performed the indicated step. Lastly, the third screen (Fig. 5c) is a close-up camera view of the robot tool, making it easier for the operator to make fine adjustments when controlling the robot. The close-up view is generated by tracking the ArUco marker that is attached to the vacuum tool.

The most pressing plunger is always indicated as the first to remove. Intuitively, this is the plunger that is located the closest to the output of the rail, as this would be the first plunger to cause an obstruction further down in the process. As experiments proved it hard for the operators to accurately observe which plunger needed to be removed from which rail, both the plunger and the rail it is located in are highlighted additionally to eliminate the ambiguity created due to the lack of depth information from the 2D camera, as can be seen in Fig. 5c.
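The prioritisation rule can be sketched as a simple minimum search. The dictionary layout and the `distance_to_output` field are hypothetical; how that distance is derived from the image is not covered here.

```python
def most_pressing(anomalies):
    """Return the anomaly closest to the rail output, or None if there is none.

    Each anomaly is a dict with hypothetical keys 'lane' and
    'distance_to_output' (any consistent unit, e.g. pixels).
    """
    if not anomalies:
        return None
    return min(anomalies, key=lambda a: a["distance_to_output"])


def highlight_target(anomalies):
    """Return (lane, anomaly) to highlight in the operator view, or None."""
    target = most_pressing(anomalies)
    return None if target is None else (target["lane"], target)
```

Returning the lane together with the plunger reflects the point made above: both are highlighted because the 2D view alone does not show which rail a plunger sits in.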

In contrast to the current situation with manual interventions, where the production line has to be put on hold for operator safety, a robotic arm would allow the filling process to continue while the robot is executing its task. Therefore, to simulate the movement of the plungers, an additional, eleventh plunger rail was added. This rail consists of a transport belt driven by a NEMA 17 stepper motor. With it, the movement of the plungers can be simulated accurately and affordably, without the need to reconstruct the entire feed system. The system automatically synchronises the tool of the robot with the movement of the plunger rail based on the detection of the computer vision algorithm. Although this rail does not represent the actual feed system visually, it is only used to verify the ability of the system and the operator to grasp a moving plunger. Once the plunger has been grasped, it can be considered stationary from that point on. For this reason, the validation of the system is twofold. The computer vision detection system and plunger removal are tested on the replica rail, as this models the real-life situation as accurately as possible when it comes to visual aspects, drag between the objects and lighting. The dynamic movement and approach to the plunger are tested on the dynamic rail. If both tests are successful, it can be assumed that the system would function accordingly with moving plungers in the real stainless steel rail. When an anomaly is detected in the dynamic rail, the robot automatically synchronises itself to the movement of the plungers. This movement is superimposed on top of the input of the operator, so the robot automatically follows the plungers, and the operator can focus purely on the removal of the anomaly.

Automatic plunger following is achieved by detecting movement of the plungers between subsequent frames of the camera input. First, the system is calibrated to the movement of the plungers. At each camera frame, the pixel positions of the plunger detections and of the ArUco marker are compared to those of the previous frame. Movement of the plunger or the ArUco marker is detected by calculating the difference between these pixel positions. When the pixel difference of the plunger is sufficiently large, movement of the plunger is indicated. Subsequently, a move trigger is sent to the robot, which makes the robot move along with the movement of the plunger. However, there will always be a delay between the movement of the plunger and the corresponding movement of the robot tool, due to the time required for image processing, sending the movement trigger, etc. Because of this, the robot will always lag behind the movement of the plungers. To combat this, during calibration, the time difference between the movement detection of the plunger and the corresponding detected movement of the ArUco marker is calculated. The average value at the end of the calibration step is then used during regular operation to compensate the robot movement for the communication delay, by anticipating the next plunger movement and calculating the required time stamp for the robot movement trigger. This results in the compensation method in Eq. 7, which is also visually explained in Fig. 6.

Fig. 6 Visualisation of the robot movement delay calibration method. The plungers move at a set interval of \(1~{\text {s}}\) (\(\Delta m_p\)). The movement of the plungers is detected via the object detection algorithm at \(d_p\). Without any correction, the robot will move at \(m_r\), lagging behind the movement of the plunger. The time between the detected movement of the plunger and the detected movement of the robot is denoted as \(\Delta d_{r/p}\). The calibration shifts the first movement so that it coincides with the first following plunger movement

With \(t_r\) being the timestamp of the robot movement trigger, \(d_p\) the timestamp of the plunger movement as detected by the computer vision system, \(\Delta FPS\) the time delay induced by the camera and detection algorithm, \(\Delta m_p\) the time interval between subsequent movements of the plungers, and \(\Delta d_{r/p}\) the time between the detected movement of the robot and the detected movement of the plunger during the calibration step. The plungers in the real system are removed from the rail with a swivel arm, which operates at a frequency of \(1~{\text {Hz}}\). Hence, \(\Delta m_p\) is equal to \(1~{\text {s}}\). As the detection algorithm runs in real-time relative to the camera feed, the \(\Delta FPS\) delay is equal to the delay induced by the camera’s frame rate. Assuming a camera frame rate of 30 frames per second, the delay would be \(33.3~{\text {m}\text {s}}\), which can then be accounted for. If the synchronisation is still incorrect, the operator can manually fine-tune the trigger offset via the keyboard of the computer.
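The timing compensation can be sketched from the symbol definitions above. The combination used here, \(t_r = d_p + \Delta m_p - \Delta d_{r/p} - \Delta FPS\), is an assumption derived from those definitions (trigger early enough that the robot moves together with the next plunger step) and may not match Eq. 7 exactly. The function names and the `manual_offset` parameter are illustrative.

```python
from statistics import mean


def frame_delay(fps):
    """Delay in seconds induced by one camera frame at `fps` frames per second."""
    return 1.0 / fps


def calibrate_latency(plunger_detect_times, robot_detect_times):
    """Average time between detected plunger motion and detected robot motion."""
    return mean(r - p for p, r in zip(plunger_detect_times, robot_detect_times))


def trigger_time(d_p, delta_m_p, delta_d_rp, delta_fps, manual_offset=0.0):
    """Timestamp at which to send the next robot movement trigger.

    d_p:        timestamp of the last detected plunger movement
    delta_m_p:  interval between plunger movements (1 s for a 1 Hz swivel arm)
    delta_d_rp: calibrated robot latency
    delta_fps:  camera/detection delay
    manual_offset: operator fine-tuning term (hypothetical parameter)
    """
    return d_p + delta_m_p - delta_d_rp - delta_fps + manual_offset
```

For a 30 frames-per-second camera, `frame_delay(30)` gives the \(33.3~{\text {m}\text {s}}\) quoted above. The trigger time only lies in the future if the combined latency is shorter than the plunger interval.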

Practical Tests

To test the intuitiveness, reliability and stability of the system, it was deployed both locally and remotely, utilising a test group of volunteers without prior knowledge of the system. The volunteers varied in gender, educational background, profession and age to prevent biases as much as possible. The group consisted of 22 people, with 6 being female and 16 male. Other gender options were offered but not selected by the participants. 16 people were in the age range of 18 to 30 years old while the other 6 were in the range of 30 to 60 years old. This results in an age range which roughly corresponds to the working age range of operators. Each participant’s operation time was recorded to analyse the potential difference between their first and second attempt. They were also asked to fill out a questionnaire to evaluate their experience when using the system based on the four-point Likert scale method [20, 21].

Operation Time Results

User group testing is used to evaluate how intuitive the system is perceived to be by unfamiliar users. Additionally, it allows a comparison between their operating times and those of real-world, trained operators within a pharmaceutical production line, performing the same task manually. Figure 7 shows the times of each volunteer for both the static and dynamic version of the task for both their first and second attempt.

Looking at the time results of the static version in Fig. 7a, it can be observed that there is only a slight improvement of the average operation time between the first and the second attempt, as it decreases from \(31.9~{\text {s}}\) to \(29.6~{\text {s}}\). To investigate whether or not this decrease can be deemed significant, a paired t-test is applied. Assuming a significance level of 0.05 and a hypothesised mean difference of zero, it can be noticed that there is no significant difference between the mean values of both attempts. The boxplot of the static task in Fig. 8a also highlights that the variation between different participants stays relatively consistent between the first and second attempt, with an inter-quartile range (IQR) of \(8.0~{\text {s}}\) for the first attempt, and \(6.3~{\text {s}}\) for the second. This could indicate that there is no learning effect for the static scenario, suggesting its intuitiveness.
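For reference, the two statistics used in this analysis can be computed with the Python standard library as sketched here. The helper names are illustrative, and the quartile convention (inclusive interpolation) is an assumption, as the convention behind the reported IQR values is not stated.

```python
import math
from statistics import mean, quantiles, stdev


def paired_t(first, second):
    """Return (t statistic, degrees of freedom) for a paired t-test
    with a hypothesised mean difference of zero."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(first, second)]
    sd = stdev(diffs)                      # sample standard deviation
    if sd == 0.0:
        raise ValueError("all paired differences are identical")
    n = len(diffs)
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


def iqr(values):
    """Inter-quartile range using inclusive quartile interpolation."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return q3 - q1
```

With 22 participants the test has 21 degrees of freedom, for which the two-sided critical value at a significance level of 0.05 is approximately 2.08; a difference is deemed significant when the absolute t statistic exceeds it.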

Applying the same strategy to the dynamic variant (Fig. 7b), it becomes apparent that there is a larger improvement of the average time required when going from the first attempt to the second, going from \(26.3~{\text {s}}\) to \(21.2~{\text {s}}\). This is backed up by the same paired t-test method, which shows that the mean difference of the dynamic version is statistically significant. The boxplot representation in Fig. 8b also shows that the results of the different users become much more consistent, with the IQR decreasing from \(8.4~{\text {s}}\) to \(4.4~{\text {s}}\). This ‘learning curve’ effect can be explained by comparing the dynamic variant to the static one. In the dynamic variant, the operators ‘learn’ to utilise the movement of the plungers to their advantage to further reduce their overall operation time. Although the dynamic scenario appears more complex at first sight, these results indicate that thanks to this learning effect of the plunger movement, the operating time can be reduced drastically from \(31.9~{\text {s}}\) to \(21.2~{\text {s}}\) in this case. This results in a total improvement of \(33.4~\%\) of the dynamic version compared to the static one. When comparing both methods to the current manual approach, both can be considered a significant improvement, as trained operators have indicated that they perform this intervention in roughly 2 to 3 minutes.

Before testing, it was assumed that younger operators (sub-30) would have an easier time using the system than the older candidates. Nevertheless, when performing paired t-tests on the results of the different age groups, no significant difference could be found. This suggests that the system is equally intuitive for all tested ages. The same conclusion can be drawn when looking at different operator genders; there is no significant difference between the results of male and female participants (as stated earlier, other gender identification options were given, but none were indicated by the users).

Likert Scale Results

Via the Likert scale-based questionnaire, the intuitiveness of the system is assessed further in a more subjective manner. After completing all the tests, the users were asked to indicate their experience with the system by answering the following questions with either very negative, negative, positive, or very positive:

1. Do you find the static scenario to be sufficiently intuitive without prior knowledge?
2. Do you find the dynamic scenario to be sufficiently intuitive?
3. Do you think that the second attempt of the static scenario went better than the first?
4. Do you think that the second attempt of the dynamic scenario went better than the first?
5. Do you find it useful that the robot is locked into the plane of the plunger lane when you take control via teleoperation?
6. Is the haptic feedback sufficiently noticeable?
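Responses on such a four-level scale can be summarised per question as the share of participants choosing each level. The following is a minimal sketch of that tally; the function name and the example answers are hypothetical and do not reproduce the study responses.

```python
from collections import Counter

# The four answer levels offered in the questionnaire, from worst to best.
LEVELS = ("very negative", "negative", "positive", "very positive")


def tally(responses):
    """Return the share (in percent) of each answer level for one question."""
    if not responses:
        raise ValueError("no responses given")
    unknown = set(responses) - set(LEVELS)
    if unknown:
        raise ValueError(f"unexpected answer(s): {sorted(unknown)}")
    counts = Counter(responses)
    return {level: 100.0 * counts[level] / len(responses) for level in LEVELS}


# Made-up answers of four participants to a single question (NOT the study data).
answers = ["very positive", "positive", "very positive", "very positive"]
for level, share in tally(answers).items():
    print(f"{level:>13}: {share:5.1f} %")
```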

The results of the questionnaire can be observed in Fig. 9. From this, it can be concluded that the system is seen as very intuitive by the users, especially the lane locking of the teleoperated robot motion, which all participants rated as very positive. Remarkably, roughly \(90~\%\) of the participants felt that the second attempt of the static intervention went better than the first, even though the measured times do not indicate a significant difference between the two attempts. The most prominent feedback given was that, due to the lack of depth perception, it was sometimes unclear which plunger needed to be removed. To improve this, lane and plunger highlighting was added, which can also be seen in Fig. 5c. Originally, the questionnaire contained an additional, seventh question: "Is the haptic feedback when touching the plunger to indicate a grasp sufficiently noticeable/useful?" Unfortunately, after testing, it was discovered that the force-torque sensor experiences drift in its measurements after extended run times. Because of this, the haptic feedback generated from its measurements behaved vastly differently for the final participants compared to the initial participants. For this reason, the responses to this question were deemed unusable. The issue with the sensor has since been resolved by using a relative force measurement approach instead of the absolute value. At the start of each test attempt, a virtual zero value is now updated to the state of the force-torque sensor at that time. This way, the drift on the absolute values of the measuring device is mitigated.
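The relative measurement approach can be sketched as a thin wrapper that stores a virtual zero at the start of an attempt and subtracts it from every later reading. This is an illustrative sketch only: the class names, the three-axis force tuple, and the simulated drifting sensor are assumptions and do not represent the authors' implementation or any specific sensor driver.

```python
class RelativeForceSensor:
    """Reports forces relative to a virtual zero captured at the start of an attempt."""

    def __init__(self, read_raw):
        # read_raw: hypothetical callable returning the absolute (fx, fy, fz) in newtons.
        self._read_raw = read_raw
        self._zero = (0.0, 0.0, 0.0)

    def tare(self):
        """Update the virtual zero to the current sensor state."""
        self._zero = tuple(self._read_raw())

    def read(self):
        """Return the current reading minus the stored virtual zero."""
        raw = self._read_raw()
        return tuple(r - z for r, z in zip(raw, self._zero))


class DriftingSensor:
    """Stand-in for real hardware: accumulated drift plus the true contact force."""

    def __init__(self, drift):
        self.drift = drift
        self.contact = (0.0, 0.0, 0.0)

    def __call__(self):
        return tuple(d + c for d, c in zip(self.drift, self.contact))


raw = DriftingSensor(drift=(0.5, -0.25, 1.0))  # made-up drift after a long run
sensor = RelativeForceSensor(raw)
sensor.tare()                   # called at the start of each test attempt
raw.contact = (0.0, 0.0, 2.0)   # the tool now touches a plunger
print(sensor.read())            # drift is removed, only the contact force remains
```

Note that this only removes the drift accumulated before the attempt starts. Drift that builds up during a single attempt is not compensated, which is acceptable when attempts are short compared to the drift time scale.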

Aseptic Risk Assessment and Validation

As the ultimate goal of this system is to implement robots within high-speed filling lines, the potential for validation within those systems is of high importance. As stated in [22]: "It is useful to assume that the operator is always contaminated while operating in the aseptic area. If the procedures are viewed from this perspective, those practices which are exposing the product to contamination are more easily identified." This further emphasises the potential aseptic gain that can be made by removing the human operator from the isolator equation entirely. When it comes to the robot itself, it should not pose an issue from a mechanical point of view, as the SteriClean(+) versions are compliant with the environment’s demands [10]. The first air principle dictates that the flow of sterile air may not be interrupted before it reaches any of the critical components of the system (stoppers, open vials, etc.). Using the virtual collision environment, these regions can be marked to ensure that they are fully taken into account during the trajectory generation phase. One way to achieve this is by modelling the first air zones as collision objects for the robot. As the system will then see these regions as physical barriers, they become impossible for the robot to move through. Additionally, unlike a human operator’s actions, the robot’s movements are fully traceable and repeatable. This means that every generated trajectory can be validated against the CGMP norm. In the event that a mistake does occur, the traceability ensures that every action can be checked to see where the error arose, in order to prevent it in the future.
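The idea of treating first air zones as physical barriers can be illustrated with a simple keep-out check on a planned trajectory. The sketch below is deliberately simplified and rests on several assumptions: zones are axis-aligned boxes, only the tool position at each waypoint is tested, and all names and coordinates are hypothetical. A real motion planner would instead register the zones as collision objects and check the full robot geometry continuously along the path.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeepOutBox:
    """Axis-aligned box (metres) standing in for a first air zone."""
    lo: tuple  # (x, y, z) minimum corner
    hi: tuple  # (x, y, z) maximum corner

    def contains(self, point):
        return all(l <= c <= h for l, c, h in zip(self.lo, point, self.hi))


def first_violation(waypoints, zones):
    """Return the index of the first waypoint inside any zone, or None if the path is clear."""
    for index, point in enumerate(waypoints):
        if any(zone.contains(point) for zone in zones):
            return index
    return None


# Hypothetical zone above an open-vial area and two candidate tool paths.
zones = [KeepOutBox(lo=(0.2, 0.0, 0.1), hi=(0.4, 0.3, 0.6))]
path_through = [(0.0, 0.1, 0.3), (0.3, 0.1, 0.3), (0.6, 0.1, 0.3)]
path_around = [(0.0, 0.1, 0.3), (0.3, 0.5, 0.3), (0.6, 0.1, 0.3)]

print(first_violation(path_through, zones))  # second waypoint lies inside the zone
print(first_violation(path_around, zones))   # no waypoint lies inside the zone
```

Because every waypoint is checked against explicit geometry, the result of such a check can be logged alongside the trajectory, which fits the traceability argument made above.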

Regarding the camera system, the authors would suggest designing a sterilisable housing unit for both the camera and the light sources. During testing, placement of the camera and light sources was taken into account to ensure none were directly above the critical components, as this would violate the first air principle of the laminar airflow. As the camera (a) only films the production process, not people, and (b) does not store any of its video footage anywhere, there should not be any issues regarding GDPR regulations of employees. Lastly, as the robot tool uses vacuum to grasp the plungers, it only sucks out high-grade air to a lower grade zone. Additionally, it has no moving parts and is therefore compliant with the demands, assuming the design of the tool itself allows for proper sterilisation. It should be noted however that the vacuum used should be created outside of the isolator system to eliminate any air blow-out on the tool itself.

When looking at the validation of the interventions performed via the robot, these could be validated according to the same principles applied to manual glove-based interventions. First, the worst-case scenario for the intervention should be determined (e.g. removing the most distant plunger, as this would be the most difficult one to resolve and could cause the most air disturbance), followed by a risk assessment, smoke studies and a media fill procedure.

Lastly, when it comes to the integration with other systems of the isolator filling line, the robot should receive all process parameters from the Supervisory Control and Data Acquisition (SCADA) system of the isolator line, informing it on filling parameters, batch size, etc. Likewise, all process and/or fault data from the robot system should be signalled back to the SCADA system. This feedback could, for instance, take the form of a graphical interface showing which part of the intervention the robot is currently executing.

Conclusion

In this manuscript, a novel system for (remote) robotic interventions within high-speed isolator filling lines is proposed. It serves as a proof-of-concept method for gloveless high-speed isolator filling lines, with the goal of decreasing the downtime of current state-of-the-art isolator filling lines. It is designed to combine both fully automated and teleoperative control of the robot, using a haptic 6-DoF control interface. Anomalies are detected automatically via a YOLOv8-based computer vision system equipped with polarised lighting for better object detection. When the operator takes control of the robot, the available movement options are reduced to a 2-dimensional space to make the intervention as intuitive for the operator as possible. Haptic feedback is applied to give the operator a better sense of awareness while performing the intervention.

The system is tested with a user group of 22 untrained volunteers with varying age, background, and gender. It was tested remotely, at a location \(100~{\text {k}\text {m}}\) away from the physical robot setup, in both a static and a dynamic scenario. Time measurements of the user attempts indicate that there is no significant improvement for subsequent attempts when the plungers are static. When the plungers are dynamic, however, the second attempt was significantly faster than the first. Additionally, the required times for the intervention when the plungers were moving were \(\pm ~30~\%\) faster than when they remained static (\(\pm ~30~{\text {s}}\) on average for the static scenario compared to \(\pm ~21~{\text {s}}\) for the dynamic version). This indicates that the operators can learn to use the movement of the plunger to their advantage to perform the intervention more efficiently. Both the static and the dynamic setup proved a vast improvement over the current manual setup in terms of time, as operators need 2 to 3 minutes to perform the intervention manually. A Likert scale-based questionnaire was utilised to evaluate the perceived intuitiveness of the system. Here, the overall reception of the system was found to be very positive.

Future work includes the extension of the working principles towards different types of interventions, to make the first step towards future implementation of similar robotic systems in real isolator setups. As stated earlier in “Haptic Feedback”, it is currently not possible to manually switch the lane that the robot is locked into. If, for any reason, the system sends the robot to the incorrect lane, the intervention cannot be completed successfully. For this reason, a lane hopping feature will be added in the future. It should be stated, however, that incorrect lane allocation did not occur during our testing period. Nevertheless, the option should be available in case it does occur. Lastly, the authors would like to further optimise the data streaming during the remote variant of the intervention, to reduce the latency of the communication between the remote laptop and the local compute unit as much as possible.

References

Maier C, Drinkwater J. Managing contamination risks in glove holes in barrier separation technology. Euro Pharm Rev. 2016. https://www.europeanpharmaceuticalreview.com/article/45014/managing-contamination-risks-glove-holes-barrier-separation-technology/.

A Breakdown of Cleanroom Grades and Standards. Neslo. 2020. https://www.neslo.com/blog/breaking-down-cleanroom-grades.

Gloveless robotic system for filling and closing RTU syringes, vials and cartridges. Groninger-group. https://www.groninger-group.com/en/pharma/syringe-filling-machines/robocell/. Accessed 5 June 2024.

Robotic Aseptic Filling. Wuxi Biologics. https://www.wuxibiologics.com/technologies/robotic-fill/. Accessed 5 June 2024.

Hollis T, Schleyer U. New Tech Disrupts Traditional Aseptic Processing. Parenteral Drug Association. 2018. https://www.pda.org/pda-letter-portal/home/full-article/new-tech-disrupts-traditional-aseptic-processing.

The future is gloveless for biopharma aseptic filling. Cleanroom Technology. 2018. https://www.cleanroomtechnology.com/news/article_page/The_future_is_gloveless_for_biopharma_aseptic_filling/147408.

Bullinger T. Small, flexible filling and packaging systems - using robots could mean great benefits. A3P. 2021. https://www.a3p.org/en/robots-benefits/.

Dekker I, Kellens K, Demeester E. Design and evaluation of an intuitive haptic teleoperation control system for 6-dof industrial manipulators. Robotics. 2023;12(2). https://doi.org/10.3390/robotics12020054.

Krinkin K, Filatov A, Filatov A, Kurishev O, Lyanguzov A. Data distribution services performance evaluation framework. In: 2018 22nd Conference of Open Innovations Association (FRUCT). 2018;94–100. https://doi.org/10.23919/FRUCT.2018.8468297.

Current Good Manufacturing Practice (CGMP) Regulations. Food and Drug Administration. 2023. https://www.fda.gov/drugs/pharmaceutical-quality-resources/current-good-manufacturing-practice-cgmp-regulations.

The Geomagic Touch. 3D Systems. https://www.3dsystems.com/haptics-devices/touch. Accessed 5 June 2024.

Steger C, Ulrich M, Wiedemann C. Machine Vision Algorithms and Applications. 2008;11–2.

Garrido S, Panov A. Detection of ArUco Markers. OpenCV. https://docs.opencv.org/4.x/d5/dae/tutorial_aruco_detection.html. Accessed 5 June 2024.

Jocher G, Chaurasia A, Qiu J. Ultralytics YOLO. 2023. https://github.com/ultralytics/ultralytics.

Terven J, Cordova-Esparza D-M, Romero-Gonzalez J-A. A comprehensive review of YOLO architectures in computer vision: From YOLOv1 to YOLOv8 and YOLO_NAS. Mach Learn Knowl Extr. 2023;5(4):1680–716. https://doi.org/10.3390/make5040083.

Hussain M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines (Basel). 2023;11(7):677.

Lin T-Y, Maire M, Belongie S, Hays J, Perona P, Ramanan D, Dollar P, Zitnick C-L. Microsoft COCO: common objects in context. In: Fleet D, Pajdla T, Schiele B, Tuytelaars T, editors. Computer Vision - ECCV 2014. Cham: Springer; 2014. p. 740–55.

Pilz Industrial Motion Planner. MoveIt Picknik. https://moveit.picknik.ai/main/doc/how_to_guides/pilz_industrial_motion_planner/pilz_industrial_motion_planner.html. Accessed 5 June 2024.

ZeroTier Documentation. ZeroTier. https://docs.zerotier.com/. Accessed 5 June 2024.

Joshi A, Kale S, Chandel S, Pal D. Likert scale: Explored and explained. Br J Appl Sci Technol. 2015;7:396–403. https://doi.org/10.9734/BJAST/2015/14975.

Wu H, Leung SO. Can Likert scales be treated as interval scales? A simulation study. J Soc Serv Res. 2017;1–6. https://doi.org/10.1080/01488376.2017.1329775.

Avallone H. Current regulatory issues regarding parenteral inspections. PDA J Pharm Sci Technol. 1989;43(1):3–7. https://journal.pda.org/content/43/1/3.full.pdf.

Acknowledgements

The authors would like to thank all researchers from research group ACRO Diepenbeek KU Leuven. Additionally, they would like to thank the Flanders’ government for Ivo Dekker’s Flanders Innovation & Entrepreneurship Baekeland grant number HBC.2020.2884, facilitating this research.

Funding

This research was funded by Flanders Innovation & Entrepreneurship Baekeland grant number HBC.2020.2884.

Author information

Contributions

Ivo Dekker: conceptual design, construction of the experiment, data analysis, supervision, writing of the manuscript; Wido Tancer & Pieter Waelbers: conceptual design, construction of the experiment, data acquisition, data analysis; Eric Demeester & Karel Kellens: supervision, editing of the final manuscript.

Ethics declarations

Consent to Participate

All participants were informed on the anonymous use of their questionnaire responses and have signed a consent form before their participation.

Conflict of Interest

The authors declare that they have no known conflict of interest which could have altered the work presented in the manuscript.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dekker, I., Waelbers, P., Tancer, W. et al. Robots for Interventions: Proof-of-Concept Method for High-Speed Filling Line Interventions Towards the Removal of Gloves. J Pharm Innov 19, 36 (2024). https://doi.org/10.1007/s12247-024-09845-7

DOI: https://doi.org/10.1007/s12247-024-09845-7