Abstract

We prove upper and lower bounds on the minimal spherical dispersion, improving upon previous estimates obtained by Rote and Tichy in (Anz Österreich Akad Wiss Math Nat Kl 132:3–10, 1995). In particular, we see that the inverse \(N(\varepsilon ,d)\) of the minimal spherical dispersion is, for fixed \(\varepsilon >0\), linear in the dimension d of the ambient space. We also derive upper and lower bounds on the expected dispersion for points chosen independently and uniformly at random from the Euclidean unit sphere. In terms of the corresponding inverse \(\widetilde{N}(\varepsilon ,d)\), our bounds are optimal with respect to the dependence on \(\varepsilon \).

1 Introduction and Main Results

In this paper we study the minimal spherical dispersion of point sets on the Euclidean unit sphere \(\mathbb {S}^{d}:= \big \{ x\in \mathbb {R}^{d+1}\,:\, \Vert x \Vert _2 = 1 \big \}\) with a focus on obtaining bounds that depend simultaneously on the dimension \(d+1\) of the ambient space \(\mathbb R^{d+1}\) and the number n of points. The study of this quantity, which we shall define (in a slightly modified form) in a moment, was initiated by Rote and Tichy [1], extending a concept previously introduced and investigated by Hlawka [2] and Niederreiter [3]. The motivation comes from typical problems arising in robotics, where one is interested in approximating general curves by simple ones (we refer to [1] for more information). There is also a significant body of research on the dispersion of the d-dimensional cube and torus, and so we just refer the reader to the recent work [4] and the references cited therein.

The Euclidean sphere \({\mathbb S}^{d}\) comes with a natural Borel probability measure \(\pi _d\) given by the normalized Hausdorff measure. This measure is commonly known as the normalized spherical measure or normalized surface measure and corresponds to the uniform distribution on the sphere. A spherical cap \(C(x,t)\subseteq \mathbb {S}^d\) with center \(x\in \mathbb {S}^d\) and \(t\in [-1,1]\) is given by

\[C(x,t):= \big \{ y\in \mathbb {S}^d\,:\, \langle x,y \rangle > t \big \},\]

so it is simply an open half-space intersected with the sphere. The collection of spherical slices in \(\mathbb R^{d+1}\), which we shall denote by \(\mathcal {S}_d\), consists of all sets obtained by intersecting two open half-spaces with the sphere. This can be expressed in terms of spherical cap intersections, more precisely,

As already mentioned, we are interested in the minimal spherical dispersion, i.e., the minimal dispersion with respect to the test set of spherical slices. To define this quantity, we first introduce the spherical dispersion of an n-element point set \(\mathcal P_n:=\{x_1,\dots ,x_n\}\subseteq \mathbb {S}^d\), which is given by

This is the largest (in the sense of the normalized surface measure) spherical slice not containing any point of \({\mathcal {P}}_n\). For \(d,n\in \mathbb N\), the minimal spherical dispersion is defined as

i.e., the infimum of the dispersion over all possible point sets on \({\mathbb S}^d\) with cardinality n.
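The definitions above can be explored numerically. The following is a minimal sketch, not the authors' method: it assumes that a cap has the form \(\{y : \langle x,y \rangle > t\}\) and it only searches slices of the special form \(C(x,0)\cap C(y,0)\), whose normalized measure is \((\pi - \angle (x,y))/(2\pi )\). All function names are hypothetical. Random search over empty slices only produces a lower estimate of the dispersion of a given point set, never the supremum itself.

```python
import math
import random


def random_unit_vector(dim, rng):
    """Draw a point uniformly from the unit sphere in R^dim (normalized Gaussian)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 0.0:
            return [c / norm for c in v]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def in_cap(y, x, t=0.0):
    """Membership in the open cap {y : <x, y> > t} (assumed form of C(x, t))."""
    return dot(x, y) > t


def slice_measure(x, y):
    """Normalized surface measure of the slice C(x, 0) intersected with C(y, 0).

    Only the directions of x and y inside their common plane matter, so the
    measure equals (pi - angle(x, y)) / (2 * pi) in every dimension.
    """
    c = max(-1.0, min(1.0, dot(x, y)))
    return (math.pi - math.acos(c)) / (2.0 * math.pi)


def dispersion_lower_estimate(points, dim, trials, rng):
    """Largest measure found among randomly drawn empty slices (lower estimate)."""
    best = 0.0
    for _ in range(trials):
        x = random_unit_vector(dim, rng)
        y = random_unit_vector(dim, rng)
        if not any(in_cap(p, x) and in_cap(p, y) for p in points):
            best = max(best, slice_measure(x, y))
    return best


rng = random.Random(0)
d = 2  # the sphere S^2 in R^3
pts = [random_unit_vector(d + 1, rng) for _ in range(5)]
estimate = dispersion_lower_estimate(pts, d + 1, 20000, rng)
```

Because the search is randomized and restricted to hyperplanes through the origin, `estimate` can fall well below the true dispersion of `pts`; it is meant only to make the objects in the definition concrete.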

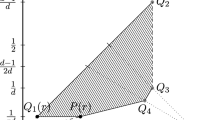

The work of Rote and Tichy already contains both lower and upper bounds on the spherical dispersion. They work with a slightly different class of test sets, which consists of intersections of half-spheres only. Moreover, the focus of their paper is on the dependence on the number n of points, while we are aiming for simultaneous control in the number of points and the dimension of the ambient space; their upper bound is of the form \(\mathcal {O}(d^3/n)\) and their lower bound of the form \(\mathcal {O}(n^{-1})\). We derive lower bounds depending not just on the number of points n, but also on the dimension d, and upper bounds improving upon the dimensional dependence \(\mathcal {O}(d^3/n)\); clearly our upper bound also holds in the setting considered in [1]. Even though we work with a richer family of test sets, due to our construction in the proof of the lower bound, it also carries over to their framework. We shall prove the following results (Fig. 1).

Theorem A

(Lower bounds) Let \(d\in \mathbb N\). Then, for any \(n \in \mathbb {N}\),

As will become clear from our proof, this bound is sharp when \(n=d+1\) and \(n=2d+1\). It may be improved in other cases. However, as the following remark together with our upper bound in Theorem B shows, the dependence on d cannot be improved (cf. Remark 3).

Fig. 1 Visualization of the lower bounds in Theorem A depending on the relation between n and d

Remark 1

It is instructive to reformulate Theorem A in terms of the inverse of the minimal spherical dispersion. This quantity is defined as

We obtain from Theorem A that, for any \(\varepsilon \in (0,1/4)\),

It shows that for fixed \(\varepsilon \in (0,1/4)\) the inverse minimal spherical dispersion grows linearly with respect to the dimension d.

The proofs rely on the following ideas. The lower bound is based on a test set expansion procedure and a suitable choice of hyperplanes that yield “good” spherical slices. For deriving our upper bound, we use the fact that the minimal dispersion is never larger than the expected dispersion, in formulas,

where \({\mathcal {P}}_n:= \{X_1,\dots ,X_n \}\) and \(X_1,\dots ,X_n\) is an iid sequence of uniformly distributed random variables on the sphere \({\mathbb S}^d\). This approach is related to the recent work [5] (see comments after Theorem B), in which the authors study the expected dispersion of random point sets whose elements are uniformly distributed on the cube. Eventually, any upper bound on the expected dispersion leads to an upper bound of the minimal dispersion. The following theorem provides such an upper bound together with a lower bound and it is interesting to mention that the dependence on n cannot be improved since there is a corresponding lower bound. Our result reads as follows.

Theorem B

Let \(n,d\in \mathbb N\) with \(n\ge 32d\). Assume that \(X_1,\dots ,X_n\in {\mathbb S}^d\) are independent random vectors chosen with respect to \(\pi _d\). Then

where \({\mathcal {P}}_n:= \{X_1,\dots ,X_n \}\).

In contrast to the result in [5], where the upper bound on the expected dispersion is deduced from a \(\delta \)-cover approach (exploiting [6, Lemma 1, Theorem 1]), our upper bound on the expected spherical dispersion is in terms of the VC-dimension of the set \(\mathcal {S}_d\) and uses a result of Blumer, Ehrenfeucht, Haussler, and Warmuth [7]. The lower bound in Theorem B follows from similar arguments as in [5] adapted to our spherical framework.

Remark 2

We shall reformulate the bounds on the expected spherical dispersion from Theorem B in terms of its inverse, which is defined for all \(\varepsilon \in (0,1)\) and \(d\in \mathbb N\) as

From (1) we know that \(N(\varepsilon ,d)\le \widetilde{N}(\varepsilon ,d)\) such that, together with Remark 1, we obtain for all \(\varepsilon \in (0,\frac{1}{9e})\),

i.e., the dependence on the parameter \(\varepsilon \) and on d is (individually) optimal.

Remark 3

Exploiting again the fact that \(N(\varepsilon ,d)\le \widetilde{N}(\varepsilon ,d)\) yields an upper bound on \(N(\varepsilon ,d)\). We obtain from Remark 1 and Remark 2 that

for \(\varepsilon \in (0,\frac{1}{9\textrm{e}})\). It shows that the inverse minimal spherical dispersion grows linearly with respect to d for fixed \(\varepsilon \) and almost linearly in \(\varepsilon ^{-1}\) (up to the \(\varepsilon \)-dependent logarithmic factor in the upper bound) for fixed dimension d.

The rest of the paper is organized as follows. Section 2 is dedicated to the proofs and split into several parts, dealing first with the expected spherical dispersion as presented in Theorem B and then with the lower bound on the minimal spherical dispersion presented in Theorem A.

2 Proofs of the Main Results

We shall now present the proofs of our main results and start with the bounds presented in Theorem B. After that we go over to arguing for the lower bounds on the minimal dispersion presented in Theorem A.

2.1 The Expected Spherical Dispersion

In [5], Hinrichs, Krieg, Kunsch, and Rudolf studied the expected dispersion for iid random points in the d-dimensional cube \([0,1]^d\). We consider a generalized setting: assume that there is a probability space \((B,\Sigma ,\mu )\), where B is equipped with a metric and \(\Sigma \) is the corresponding \(\sigma \)-algebra of Borel sets. Let \(\mathcal {B}\subseteq \Sigma \) be a family of subsets of B which we call the set of test sets. Then, for \(\mathcal {P}=\{x_1,\dots ,x_n\} \subseteq B\) define the \((\mathcal {B},\mu )\)-dispersion of \(\mathcal {P}\) as

\[\textrm{disp}_\mu (\mathcal {P};\mathcal {B}):= \sup _{T\in \mathcal {B},\, T\cap \mathcal {P}=\emptyset } \mu (T).\]

We restrict ourselves to scenarios where \(\mathcal {B}\) is countable, such that the supremum within the dispersion is taken over a countable set, which leads to the measurability of the mapping \(x_1,\dots ,x_n \mapsto \textrm{disp}_\mu (x_1,\dots ,x_n;\mathcal {B})\). Given a probability space \((\Omega ,\mathcal {A},\mathbb {P})\), for \(n\in \mathbb {N}\) let \(X_1,\dots ,X_n\) be an iid sequence of random variables, with \(X_i :\Omega \rightarrow B\), where each \(X_i\) is distributed according to \(\mu \). With this we define \(\mathcal {P}_n:= \{X_1,\dots ,X_n\}\) and the expected dispersion as

In contrast to the \(\delta \)-cover approach of [5], our results on the upper bound of the expected dispersion are based on the VC-dimension. Moreover, we adapt the statement of the lower bound of the expected dispersion from [5] to our generalized situation. The proof follows as in [5], but for the convenience of the reader we provide it. Eventually applying the former estimates leads to the upper and lower bound on the expected spherical dispersion for iid random points on the sphere \({\mathbb S}^{d}\). Finally, we provide the justification for the bounds of the inverse of the expected dispersion.

2.1.1 The Upper Bound of the Expected Dispersion, Proof of the Upper Bound of Theorem B

We start with defining the VC-dimension \(d_\mathcal {B}\) of \(\mathcal {B}\). It is the cardinality of the largest subset P of B such that the set system \(\{P\cap T:T\in \mathcal {B} \}\) contains all subsets of P. Having this we are able to state an auxiliary result of Blumer, Ehrenfeucht, Haussler, and Warmuth. It follows by virtue of [7, Lemma A2.1, Lemma A2.2 and Proposition A2.1(iii)].

Lemma 1

For any \(t>0\) and any \(n\ge d_{\mathcal {B}}\), we have

With that estimate we provide the arguments for our upper bound of the expected dispersion.

Proposition 2

Let \(n\in \mathbb {N}\) with \(n\ge d_{\mathcal {B}}\). Then

Proof

Set \(\gamma := \gamma _{d_\mathcal {B},n} = \min \left\{ 1, \frac{2d_{\mathcal {B}}}{n} \log _2 \left( \frac{en}{d_{\mathcal {B}}}\right) \right\} \). With this we have

\(\square \)
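The threshold \(\gamma _{d_\mathcal {B},n}\) fixed at the start of the proof is easy to evaluate numerically. The sketch below, with hypothetical function names, computes it and checks on a grid of integers that the unclipped expression \(x\mapsto \frac{2x}{n}\log _2(en/x)\) is non-decreasing on \([1,n]\). Its derivative is \(\frac{2}{n}\log _2(n/x)\ge 0\) there, which is why a larger VC-dimension can only enlarge the threshold.

```python
import math


def gamma(d_vc, n):
    """Threshold gamma_{d_B, n} = min{1, (2 d_B / n) * log2(e n / d_B)}."""
    return min(1.0, (2.0 * d_vc / n) * math.log2(math.e * n / d_vc))


def unclipped(x, n):
    """The expression inside the minimum, viewed as a function of x in [1, n]."""
    return (2.0 * x / n) * math.log2(math.e * n / x)


n = 10_000
values = [unclipped(x, n) for x in range(1, n + 1)]
# True if the sampled values never decrease as x grows from 1 to n.
is_increasing = all(a <= b for a, b in zip(values, values[1:]))
```

For instance, `gamma(10, 1000)` is roughly 0.16, while `gamma(10, 10)` is clipped to 1.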

Observing that the mapping

is increasing for \(x\in [1,n]\) leads to the fact that an upper bound of the VC-dimension gives an upper bound of the expected dispersion via Proposition 2. Therefore, we state a further tool for the application of the former proposition to the spherical dispersion. For a proof of the next result we refer to [7, Lemma 3.2.3].

Lemma 3

Given a family of test sets \(\mathcal {B}\) with VC-dimension \(d_{\mathcal {B}}\) we have that the VC-dimension of the new set of test sets

satisfies \(d_{\widetilde{\mathcal {B}}} \le 4d_{\mathcal {B}} \log _2 6\).

In the setting of the spherical dispersion, we have \(B=\mathbb {S}^d\), \(\mu =\pi _d\), and \(\mathcal {B}=\mathcal {S}_d\). Note that the test set of spherical slices \(\mathcal {S}_d\) consists of intersections of spherical caps of \(\mathbb {S}^d\). Therefore, by Lemma 3, for obtaining an upper bound of \(d_{\mathcal {S}_d}\), it is sufficient to provide the VC-dimension of the set of test sets of spherical caps, which for \(d\in \mathbb {N}\) is denoted by

In the works [8, Proposition 5.12] and [9, Proposition 42] it is shown that \(d_{\mathcal {C}_d} = d+2\). Here recall that d is the “classical dimension”, that is, \(\mathbb {S}^d\subseteq \mathbb {R}^{d+1}\). Now we have all auxiliary results to state and prove our upper bound on the expected spherical dispersion.

Proposition 4

Let \(n,d \in \mathbb {N}\) with \(n\ge 32 d\). Assume that \(X_1,\dots ,X_n\) are independent random points on \(\mathbb {S}^d\), each distributed according to \(\pi _d\). Then

Proof

The proof follows essentially as already indicated. Since \(d_{\mathcal {C}_d} = d+2\le 3d\), Lemma 3 gives \(d_{\mathcal {S}_d} \le 4(d+2)\log _2 6 \le 12d\log _2 6 \le 32 d\). Using the monotonicity from (3) and considering only \(n\ge 32 d\), Proposition 2 yields the claimed upper bound of the expected spherical dispersion. \(\square \)
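The arithmetic behind the bound \(d_{\mathcal {S}_d}\le 32d\) can be checked directly. The following sketch, with a hypothetical function name, combines \(d_{\mathcal {C}_d}=d+2\) with the factor \(4\log _2 6\) from Lemma 3 and verifies the inequality for a range of dimensions.

```python
import math


def slice_vc_upper_bound(d):
    """Lemma 3 applied to caps: 4 * (d + 2) * log2(6), using d_{C_d} = d + 2."""
    return 4 * (d + 2) * math.log2(6)


# For each dimension d, record whether the bound stays below 32 * d.
checks = {d: slice_vc_upper_bound(d) <= 32 * d for d in range(1, 1001)}
```

The case \(d=1\) is the tightest one: the bound evaluates to about 31.02, just below 32.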

2.1.2 The Lower Bound of the Expected Dispersion

The proof of this result follows as in [5] and is based on the coupon collector’s problem. We elaborate on some of the details for the sake of completeness and convenience. All random variables shall be defined on the common probability space \((\Omega ,{\mathcal {A}},\mathbb P)\). Let us start by recalling an elementary result from [5, Lemma 2.3], which follows from Chebychev’s inequality.

Lemma 5

Let \(\ell \in \mathbb N\) and \((Y_i)_{i\in \mathbb N}\) be a sequence of independent random variables uniformly distributed on the set \(\{1,\dots ,\ell \}\). If we set

then, for any natural number \(n\le \big (\sum _{j=1}^\ell j^{-1}-2\big )\ell \), we have

From this bound on the upper tail of \(\tau _\ell \), we can deduce under a ‘decomposition condition’ a lower bound on the expected \((\mathcal {B},\mu )\)-dispersion for independent \(\mu \)-distributed points on B.
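To make the coupon collector's problem behind Lemma 5 concrete, here is a small simulation. It assumes that \(\tau _\ell \) denotes the first time at which all values in \(\{1,\dots ,\ell \}\) have appeared among \(Y_1,Y_2,\dots \) (the displayed definition is not reproduced here), and it compares the empirical mean with the classical identity \(\mathbb E[\tau _\ell ]=\ell \sum _{j=1}^{\ell } j^{-1}\). Function names are hypothetical.

```python
import random


def coupon_collector_time(ell, rng):
    """Number of uniform draws from {1, ..., ell} until every value has appeared."""
    seen = set()
    draws = 0
    while len(seen) < ell:
        seen.add(rng.randint(1, ell))
        draws += 1
    return draws


def harmonic(ell):
    """Harmonic number H_ell = sum_{j=1}^{ell} 1/j."""
    return sum(1.0 / j for j in range(1, ell + 1))


rng = random.Random(1)
ell = 50
samples = [coupon_collector_time(ell, rng) for _ in range(2000)]
empirical_mean = sum(samples) / len(samples)
exact_mean = ell * harmonic(ell)  # classical identity E[tau_ell] = ell * H_ell
```

Every sample is at least \(\ell \), since fewer than \(\ell \) draws cannot cover \(\ell \) distinct values; this is the mechanism that leaves some test set empty when n is small compared with \(\ell \log \ell \).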

Proposition 6

Let \(n,d\in \mathbb N\) and \(\ell := \lceil \frac{(1+e)n}{\log (n)} \rceil \), where \(\lceil \cdot \rceil \) denotes the ceiling function, which maps \(x\in \mathbb R\) to the least integer greater than or equal to x. Assume that there are pairwise disjoint test sets \(S_1,\dots ,S_{\ell } \in \mathcal {B}\) such that \(B=\bigcup _{i=1}^{\ell } S_i\) and \(\mu (S_j)=1/\ell \) for any \(j=1,\dots ,\ell \). Let \(X_1,\dots ,X_n\) be independent random points on B, where each \(X_i\) is \(\mu \)-distributed. Then

where \({\mathcal {P}}_n:= \{X_1,\dots ,X_n \}\).

Proof

We follow verbatim the proof in [5], but include the details for the reader's convenience. For \(i\in \{1,\dots ,n\}\), we define random variables \(Y_i:\Omega \rightarrow \{1,\dots ,\ell \}\) so that \(Y_i(\omega )=j\in \{1,\dots ,\ell \}\) if and only if \(X_i(\omega )\in S_j\), i.e.,

This means that the \(Y_i\)’s are independent and uniformly distributed in \(\{1,\dots ,\ell \}\), and that the value of \(Y_i\) indicates the test set from \(S_1,\dots ,S_\ell \) which the point \(X_i\) falls in. Note that for any \(\omega \in \Omega \) such that

(which means the left-hand side must be strictly contained in the right-hand side), there must exist at least one \(r\in \{1,\dots ,\ell \}\) such that \(\{X_1(\omega ),\dots ,X_n(\omega ) \} \cap S_r = \emptyset \). For those \(\omega \in \Omega \) satisfying (4), we thus have

where \({\mathcal {P}}_n(\omega ):=\{X_1(\omega ),\dots ,X_n(\omega ) \}\). Therefore, on average, we obtain

where \(\tau _\ell \) is defined as in Lemma 5. We now use that \(\ell = \lceil \frac{(1+e)n}{\log (n)} \rceil \) and obtain

where it was used that \(\sum _{j=1}^\ell j^{-1}>\log (\ell +1)\) and that for any \(x>1\),

(where equality holds for \(x=\exp (1+1/e)\)). Therefore, \(n\le \big (\sum _{j=1}^\ell j^{-1}-2\big )\ell \), and so we can apply Lemma 5, obtaining together with (5) that

Altogether, this leads us to the estimate

which completes the proof. \(\square \)
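The condition \(n\le \big (\sum _{j=1}^\ell j^{-1}-2\big )\ell \) used in the proof can be sanity-checked numerically for the choice \(\ell = \lceil \frac{(1+e)n}{\log (n)} \rceil \). The sketch below, with hypothetical function names, does this for \(2\le n\le 2000\); the case \(n=1\) is excluded because \(\log (1)=0\). It is a finite check, not a substitute for the argument in the proof.

```python
import math


def ell_of(n):
    """ell = ceil((1 + e) * n / log(n)), as in Proposition 6 (requires n >= 2)."""
    return math.ceil((1.0 + math.e) * n / math.log(n))


def harmonic(ell):
    """Harmonic number H_ell = sum_{j=1}^{ell} 1/j."""
    return sum(1.0 / j for j in range(1, ell + 1))


def lemma5_condition_holds(n):
    """Check n <= (H_ell - 2) * ell for ell = ell_of(n)."""
    ell = ell_of(n)
    return n <= (harmonic(ell) - 2.0) * ell


all_ok = all(lemma5_condition_holds(n) for n in range(2, 2001))
```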

2.1.3 The Inverse of the Expected Spherical Dispersion—Proof of Remark 2

Let us consider the inverse of the expected spherical dispersion, which is defined, for every \(\varepsilon \in (0,1)\) and \(d\in \mathbb N\), as

Theorem B together with a simple computation (see [5]) show that whenever \(\varepsilon \in (0,\frac{1}{9e})\),

For the convenience of the reader we prove the following lemma, which serves as a tool to obtain the upper bound on the inverse of the expected spherical dispersion.

Lemma 7

Let \(\varepsilon \in (0,1)\), \(d\in \mathbb N\), and \(c_1,c_2\in [1,\infty )\) be absolute constants. Define a differentiable function \(f:(0,\infty )\rightarrow \mathbb R\) via \(x\mapsto c_1 \frac{d}{x}\log \Big (c_2\frac{x}{d}\Big )\). Then, for any real number \(x\ge d\frac{a}{\varepsilon }\log \Big (\frac{a}{\varepsilon }\Big )\) with \(a:=c_1(1+c_2/e)\), we have

Proof

First, we show that f is decreasing for all \(x>\frac{e d}{c_2}\) and, in particular, for any \(x\ge d\frac{a}{\varepsilon }\log \Big (\frac{a}{\varepsilon }\Big )\). To see this, we first note that f is differentiable and that, for all \(x\in (0,\infty )\),

The latter is obviously less than zero if and only if \(1< \log \Big (c_2\frac{x}{d}\Big )\), which is equivalent to \(x>\frac{ed}{c_2}\).

For some \(\beta \in (0,\infty )\), we define \(g_\beta :(0,\infty )\rightarrow \mathbb R\) via \(x\mapsto \frac{\log (\beta x)}{x}\). This function is again differentiable with \(g_\beta '(x) = \frac{1}{x^2}(1-\log (\beta x))\) and satisfies

In particular, \(g_\beta (x_{\max })=\frac{\beta }{e}\). Hence, for each \(x\in (0,\infty )\), \(g_\beta (x) \le g_\beta (x_{\max }) = \frac{\beta }{e}\).

After this preparation, we shall now prove the desired estimate. For every \(x\ge d\frac{a}{\varepsilon }\log \Big (\frac{a}{\varepsilon }\Big )\), due to the monotonicity of f,

with the choice \(\beta :=c_2\) above. Using the established bound on g, we obtain, for all \(x\ge d\frac{a}{\varepsilon }\log \Big (\frac{a}{\varepsilon }\Big )\),

where we used \(a:=c_1(1+c_2/e)\) in the last estimate. \(\square \)

Using the upper bound of the expected dispersion from Theorem B and eventually applying the previous lemma with \(c_1=64/\log 2\), \(c_2 = e/32\) leads to

with \(a=\frac{64}{\log 2}(1+1/32)\le 96\).
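The constants used here can be checked numerically. The sketch below assumes that the conclusion of Lemma 7 is the inequality \(f(x)\le \varepsilon \) for all x beyond the stated threshold (the displayed conclusion is not reproduced here) and evaluates f at and beyond that threshold for \(c_1=64/\log 2\) and \(c_2=e/32\). Function names are hypothetical.

```python
import math


def f(x, d, c1, c2):
    """f(x) = c1 * (d / x) * log(c2 * x / d) from Lemma 7."""
    return c1 * (d / x) * math.log(c2 * x / d)


def threshold(eps, d, c1, c2):
    """x_0 = d * (a / eps) * log(a / eps) with a = c1 * (1 + c2 / e)."""
    a = c1 * (1.0 + c2 / math.e)
    return d * (a / eps) * math.log(a / eps)


c1 = 64.0 / math.log(2.0)
c2 = math.e / 32.0
a = c1 * (1.0 + c2 / math.e)  # approximately 95.2

results = []
for d in (1, 5, 50):
    for eps in (0.04, 0.01, 0.001):  # all below 1 / (9e), roughly 0.0409
        x0 = threshold(eps, d, c1, c2)
        # Evaluate f at the threshold and at two larger arguments.
        results.append(all(f(s * x0, d, c1, c2) <= eps for s in (1.0, 1.5, 10.0)))
```

The value of `a` confirms the estimate \(a\le 96\), and every entry of `results` is `True` for the sampled parameters.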

2.2 Lower Bound of the Minimal Spherical Dispersion

The general idea behind the lower bound exploits the following observation. Assume we are given a point set \(\{ x_1,\dots , x_{d}\} \subseteq \mathbb {S}^d\). Then there exists a hyperplane H through the origin \(0\in \mathbb R^{d+1}\) which contains all points, i.e., \(\{x_1,\dots ,x_{d}\}\subseteq H\). Intersecting an open half-space bounded by this hyperplane with the sphere \(\mathbb {S}^d\) leads to a spherical slice (even a spherical cap) that contains none of the points and has \(\pi _d\)-volume of at least 1/2.

2.2.1 Proof of Theorem A

Proof

Let \(n\in \mathbb N\) and \({\mathcal {P}}_n:=\{x_1,\dots ,x_n\}\subseteq {\mathbb S}^d\). For \(n'\ge n\), consider the set \({\mathcal {P}}':=\mathcal P_n \cup \{x_{n+1},\dots ,x_{n'-1},x_{n'}\} \) with \(x_{n+1},\dots ,x_{n'}\in {\mathbb S}^d\). Then

because every test set that avoids the enlarged point set \({\mathcal {P}}'\) also avoids \({\mathcal {P}}_n\), so that adding points cannot increase the dispersion.

We now distinguish four regimes:

1st case: \(n\le d+1\): Since \(n\le d+1\), we may consider an expanded point set \({\mathcal {P}}':= {\mathcal {P}}_n\cup \{x_{n+1},\dots ,x_{d+1} \}\), where \(\{x_{n+1},\dots , x_{d+1} \}\subseteq {\mathbb S}^d\). Then there exists a vector \(\theta \in {\mathbb S}^d\) and a corresponding hyperplane \(H_\theta := \{y\in \mathbb R^{d+1}\,:\, \langle \theta ,y \rangle =0 \}\) perpendicular to \(\theta \) such that \(\{x_1,\dots ,x_d,0\} \subseteq H_\theta \). The half-space \(H_\theta ^{+}:=\big \{y\in \mathbb R^{d+1}\,:\, \langle \theta , y\rangle >0 \big \}\) and the half-space \(H_\theta ^{-}:=\big \{y\in \mathbb R^{d+1}\,:\, \langle \theta , y\rangle <0 \big \}\) do not contain any points of \(\{x_1,\dots ,x_d\}\) and the same holds for the corresponding spherical caps \(S^+:= H_\theta ^{+} \cap {\mathbb S}^{d}\) and \(S^-:= H_\theta ^{-} \cap {\mathbb S}^{d}\). Moreover, at most one of \(S^+\) and \(S^-\) contains the point \(x_{d+1}\), so that we may choose \(S_0\in \{S^+,S^-\}\) to be a spherical cap which does not contain \(x_{d+1}\). Finally, by the continuity of measure, \(\pi _d(S_0)=\frac{1}{2}\), so that the definition of the spherical dispersion yields

Using this together with (6), we obtain

2nd case: \(n\ge d+1\): We proceed similarly as in the previous case. Namely, we use the hyperplane spanned by the points \(\{x_1,\dots ,x_d,0\}\). In other words, there is a vector \(\theta \) such that the aforementioned hyperplane is given by \(H_\theta := \{y\in \mathbb {R}^{d+1}:\langle \theta ,y \rangle =0\}\). As in the previous case we have the two half-spaces \(H_\theta ^+\) and \(H_\theta ^-\) as well as the corresponding spherical caps \(S^+\) and \(S^-\) that do not contain any point of \(\{x_1,\dots ,x_d\}\). Recall that \(\pi _d(S^+)=\pi _d(S^-)=1/2\). Now by the pigeonhole principle, we can choose \(S\in \{S^+,S^-\}\), such that the spherical cap S contains at most \(\lfloor (n-d)/2 \rfloor \) points from \(\{ x_{d+1},\dots ,x_n \}\). Further, we decompose S into \(\lfloor (n-d)/2 \rfloor +1\) spherical slices (given as intersections of two spherical caps) of equal \(\pi _d\)-measure. Formally, we just choose \(\theta ^\perp \in {\mathbb S}^{d}\) with \(\langle \theta ,\theta ^{\perp } \rangle = 0\) (i.e., \(\theta ^{\perp }\in {\mathbb S}^{d}\cap H_\theta \)) and now define a rotation in the plane spanned by \(\theta \) and \(\theta ^{\perp }\); such a rotation by an angle \(\alpha \) is given by the orthogonal matrix \(A:={{\,\textrm{id}\,}}_{(d+1)\times (d+1)} + (\cos (\alpha )-1)[\theta \otimes \theta + \theta ^{\perp }\otimes \theta ^{\perp }] + \sin (\alpha )[\theta ^{\perp }\otimes \theta - \theta \otimes \theta ^{\perp }]\), where \({{\,\textrm{id}\,}}_{(d+1)\times (d+1)}\) denotes the \((d+1)\times (d+1)\) identity matrix. Then we take \(\alpha := \pi /\big (\lfloor \frac{n-d}{2}\rfloor +1\big )\) and perform successive rotations of \(H_\theta \) by this angle, considering spherical slices obtained from the intersection of the spherical cap S with the spherical cap obtained from intersecting \({\mathbb S}^{d}\) with the rotation of \(H_\theta \). Then, again by the pigeonhole principle, we find at least one of the slices, say \(S_0\in \mathcal {S}_d\), with \(S_0\cap \mathcal {P}_n = \emptyset \), such that

Thus, by the definition of the spherical dispersion we have

3rd case: \(d+1<n\le 2d+1\): As in the 1st case we create an expanded point set \({\mathcal {P}}'\) by adding points until we have exactly \(2d+1\) points and then we take (6) into account. Without loss of generality let us assume that the first 2d points do not lie on a single hyperplane through the origin, because this would lead us eventually back to the 1st case. Let \(H_1\) be the hyperplane in \(\mathbb R^{d+1}\) determined by the points \(\{0, x_1,\dots ,x_{d} \}\) and let \(H_2\) be the hyperplane determined by \(\{0,x_{d+1},\dots ,x_{2d}\}\). Assume that \(\theta _1,\theta _2\in {\mathbb S}^d\) are the respective normals to these hyperplanes and choose those vectors such that \(\langle \theta _1,\theta _2 \rangle \ge 0\); in particular we know that \(|\langle \theta _1,\theta _2 \rangle | \ne 1\). Let us look at the corresponding upper and lower open half-spaces, i.e., for \(i=1,2\) define

Define

One can easily see that the spherical slices \(S^{+,+},S^{+,-},S^{-,+},S^{-,-}\) are pairwise disjoint and that for any \(S\in \{S^{+,+},S^{+,-},S^{-,+},S^{-,-}\}\) we have \(S\cap \{x_1,\dots ,x_{2d}\} = \emptyset \). Moreover

such that

- either \(\pi _d(S^{+,-}) \ge 1/4\) or \(\pi _d(S^{+,+}) \ge 1/4\); and

- either \(\pi _d(S^{-,-}) \ge 1/4\) or \(\pi _d(S^{-,+}) \ge 1/4\).

Hence, there is an \(S_1\in \{S^{+,-},S^{+,+} \}\) and an \(S_2\in \{S^{-,-},S^{-,+}\}\) with \(\pi _d\)-measure greater than or equal to 1/4. Since \(S_1\) and \(S_2\) are disjoint, we can choose \(S_0\in \{S_1,S_2\}\) in such a way that it does not contain \(x_{2d+1}\), i.e., \(S_0\cap \mathcal {P}'=\emptyset \).

Therefore

which together with (6) yields

4th case: \(n>2d\): Let \(H_1\) and \(H_2\) be as in the 3rd case. This gives rise to four different spherical slices that do not contain any of the points \(\{x_1,\dots ,x_{2d}\}\), where two disjoint slices, say \(S_1,S_2\in \mathcal {S}_d\), have \(\pi _d\)-measure of at least 1/4. Thus, by the pigeonhole principle we find an \(S\in \{S_1,S_2\}\) that contains at most \(\lfloor (n-2d)/2 \rfloor \) points of \(\{x_{2d+1},\dots ,x_n\}\). Now we decompose S into \(\lfloor (n-2d)/2 \rfloor +1 \) spherical slices of equal \(\pi _d\)-measure (given as intersections of two spherical caps) and get by the pigeonhole principle that there must exist a test set \(S_{0}\in \mathcal {S}_d\) with \(S_{0}\cap \mathcal {P}_n=\emptyset \) such that

Therefore, we have

Eventually taking the maximum over the lower bounds derived in the previous four cases, depending on the range where those are satisfied, yields the claimed estimate. \(\square \)
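The four cases can be collected into a small function. The sketch below is read off the case analysis in the proof rather than copied from the displayed statement of Theorem A: the 1st and 3rd cases give 1/2 and 1/4, and in the 2nd and 4th cases a set of measure at least 1/2 or 1/4 is split into \(\lfloor (n-d)/2 \rfloor +1\) or \(\lfloor (n-2d)/2 \rfloor +1\) equal parts. The function name is hypothetical, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction


def lower_bound_from_cases(n, d):
    """Best lower bound on the minimal spherical dispersion among applicable cases."""
    candidates = []
    if n <= d + 1:
        # 1st case: an empty half-sphere exists.
        candidates.append(Fraction(1, 2))
    if n >= d + 1:
        # 2nd case: half-sphere split into floor((n - d) / 2) + 1 equal slices.
        candidates.append(Fraction(1, 2) / ((n - d) // 2 + 1))
    if d + 1 < n <= 2 * d + 1:
        # 3rd case: an empty slice of measure at least 1/4 exists.
        candidates.append(Fraction(1, 4))
    if n > 2 * d:
        # 4th case: such a slice split into floor((n - 2d) / 2) + 1 equal parts.
        candidates.append(Fraction(1, 4) / ((n - 2 * d) // 2 + 1))
    return max(candidates)
```

For example, with \(d=10\) and \(n=25\) the 4th case gives 1/12, which beats the value 1/16 from the 2nd case, whereas for \(d=3\) and \(n=10\) the 2nd case gives the larger value 1/8.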

References

1. Rote, G., Tichy, R.F.: Spherical dispersion with an application to polygonal approximation of curves. Anz. Österreich. Akad. Wiss. Math.-Natur. Kl. 132, 3–10 (1995)

2. Hlawka, E.: Abschätzung von trigonometrischen Summen mittels diophantischer Approximationen. Österreich. Akad. Wiss. Math.-Naturwiss. Kl. S.-B. II 185(1-3), 43–50 (1976). Collection in honor of Hans Hornich

3. Niederreiter, H.: A quasi-Monte Carlo method for the approximate computation of the extreme values of a function. In: Erdős, P., Alpár, L., Halász, G., Sárközy, A. (eds.), pp. 523–529. Birkhäuser Basel, Basel (1983)

4. Litvak, A., Livshyts, G.: New bounds on the minimal dispersion. J. Complex. 72, 101648 (2022)

5. Hinrichs, A., Krieg, D., Kunsch, R.J., Rudolf, D.: Expected dispersion of uniformly distributed points. J. Complex. 61, 101483 (2020)

6. Rudolf, D.: An upper bound of the minimal dispersion via delta covers. In: Contemporary Computational Mathematics - A Celebration of the 80th Birthday of Ian Sloan, pp. 1099–1108 (2018)

7. Blumer, A., Ehrenfeucht, A., Haussler, D., Warmuth, M.: Learnability and the Vapnik-Chervonenkis dimension. J. ACM 36(4), 929–965 (1989)

8. Bilyk, D., Lacey, M.: Random tessellations, restricted isometric embeddings, and one bit sensing. arXiv preprint arXiv:1512.06697 (2015)

9. Sonnleitner, M.: Discrepancy and numerical integration on spheres. Master thesis. Universität Linz (2019)

Acknowledgements

JP is supported by the Austrian Science Fund (FWF) Projects P32405 “Asymptotic geometric analysis and applications” and Project F5508-N26, which is part of the Special Research Program “Quasi-Monte Carlo Methods: Theory and Applications”. DR is supported by DFG project 522337282. We thank Simon Breneis for comments on a previous version of this paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Prochno, J., Rudolf, D. The Minimal Spherical Dispersion. J Geom Anal 34, 85 (2024). https://doi.org/10.1007/s12220-023-01527-z
