Abstract

Let \(\gamma :[0,1]\rightarrow \mathbb S^{2}\) be a non-degenerate curve in \(\mathbb R^3\), that is to say, \(\det \big (\gamma (\theta ),\gamma '(\theta ),\gamma ''(\theta )\big )\ne 0\). For each \(\theta \in [0,1]\), let \(l_\theta =\text {span}(\gamma (\theta ))\) and \(\rho _\theta :\mathbb R^3\rightarrow l_\theta \) be the orthogonal projections. We prove an exceptional set estimate. For any Borel set \(A\subset \mathbb R^3\) and \(0\le s\le 1\), define \(E_s(A):=\{\theta \in [0,1]: \dim (\rho _\theta (A))<s\}\). We have \(\dim (E_s(A))\le \max \{0,1+\frac{s-\dim (A)}{2}\}\).


1 Introduction

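If \(\gamma :[0,1]\rightarrow \mathbb S^2\) is a smooth map that satisfies the non-degenerate condition

\[\det \big (\gamma (\theta ),\gamma '(\theta ),\gamma ''(\theta )\big )\ne 0\quad \text{for all } \theta \in [0,1],\]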

then we call the image of \(\gamma \) a non-degenerate curve, or simply call \(\gamma \) a non-degenerate curve. A model example for the non-degenerate curve is \(\gamma _\circ :\theta \mapsto (\frac{\cos \theta }{\sqrt{2}},\frac{\sin \theta }{\sqrt{2}},\frac{1}{\sqrt{2}})\) \((\theta \in [0,1])\).
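
As a quick check that the model example satisfies the non-degenerate condition \(\det (\gamma ,\gamma ',\gamma '')\ne 0\), one can compute the determinant for \(\gamma _\circ \) directly. This is a worked computation added for illustration; it uses nothing beyond the formula for \(\gamma _\circ \) above.

\[\gamma _\circ '(\theta )=\tfrac{1}{\sqrt{2}}(-\sin \theta ,\cos \theta ,0),\qquad \gamma _\circ ''(\theta )=\tfrac{1}{\sqrt{2}}(-\cos \theta ,-\sin \theta ,0),\]

\[\det \big (\gamma _\circ ,\gamma _\circ ',\gamma _\circ ''\big )=2^{-3/2}\det \begin{pmatrix}\cos \theta &\sin \theta &1\\ -\sin \theta &\cos \theta &0\\ -\cos \theta &-\sin \theta &0\end{pmatrix}=2^{-3/2}(\sin ^2\theta +\cos ^2\theta )=2^{-3/2}.\]

The determinant is a nonzero constant, so \(\gamma _\circ \) is non-degenerate on all of \([0,1]\). Note also that \(|\gamma _\circ (\theta )|^2=\frac{1}{2}(\cos ^2\theta +\sin ^2\theta +1)=1\), so the curve does lie on \(\mathbb S^2\).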

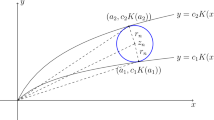

In this paper, we study the projections in \(\mathbb R^3\) whose directions are determined by \(\gamma \). For each \(\theta \in [0,1]\), let \(V_\theta \subset \mathbb R^3\) be the 2-dimensional subspace that is orthogonal to \(\gamma (\theta )\) and let \(l_\theta \subset \mathbb R^3\) be the 1-dimensional subspace spanned by \(\gamma (\theta )\). We also define \(\pi _\theta :\mathbb R^3\rightarrow V_\theta \) to be the orthogonal projection onto \(V_\theta \), and define \(\rho _\theta :\mathbb R^3\rightarrow l_\theta \) to be the orthogonal projection onto \(l_\theta \). We use \(\dim X\) to denote the Hausdorff dimension of a set \(X\). Let us state our main results.

Theorem 1

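Suppose \(A\subset \mathbb R^3\) is a Borel set of Hausdorff dimension \(\alpha \). For \(0\le s\le 1\), define the exceptional set

\[E_s=E_s(A):=\{\theta \in [0,1]: \dim (\rho _\theta (A))<s\}. \tag{1}\]

Then we have

\[\dim (E_s)\le \max \Big \{0,1+\frac{s-\alpha }{2}\Big \}. \tag{2}\]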

As a corollary, we have

Corollary 1

Suppose \(A\subset \mathbb R^3\) is a Borel set of Hausdorff dimension \(\alpha \). Then we have

\[\dim (\rho _\theta (A))=\min \{1,\alpha \}\quad \text{for a.e. } \theta \in [0,1].\]

Remark 1

The proof of Theorem 1 relies on small cap decoupling for the general cone. We also remark that, for the set of directions determined by the model curve \(\gamma _\circ \), Käenmäki, Orponen and Venieri proved an exceptional set estimate with upper bound \(\dim (E_s)\le \frac{\alpha +s}{2\alpha }\) when \(\alpha \le 1\) (see [1] Theorem 1.3). The novelty of our paper is that we prove a Falconer-type exceptional set estimate for general non-degenerate curves, which yields Corollary 1.
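
The passage from the exceptional set estimate to Corollary 1 can be sketched as follows. The sketch assumes Corollary 1 is read as the almost-everywhere statement \(\dim (\rho _\theta (A))=\min \{1,\alpha \}\) and that \(\alpha >0\); the sequence \(s_n\) is notation introduced only for this illustration. Since \(\rho _\theta \) is Lipschitz and takes values in a line, \(\dim (\rho _\theta (A))\le \min \{1,\alpha \}\) for every \(\theta \). For the lower bound, choose \(0\le s_n<\min \{1,\alpha \}\) with \(s_n\rightarrow \min \{1,\alpha \}\). Then

\[s_n<\alpha \ \Longrightarrow \ \max \Big \{0,1+\frac{s_n-\alpha }{2}\Big \}<1\ \Longrightarrow \ \dim (E_{s_n}(A))<1,\]

\[\{\theta \in [0,1]: \dim (\rho _\theta (A))<\min \{1,\alpha \}\}=\bigcup _{n}E_{s_n}(A).\]

Each \(E_{s_n}(A)\) has Hausdorff dimension less than 1 and hence Lebesgue measure zero, so the countable union on the right is also a null set.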

Remark 2

Pramanik et al. [2] have also recently proved Corollary 1 with an exceptional set estimate of the form \(\dim (E_s)\le s\), compared to (2). Their proof is based on some incidence estimates for curves in the spirit of Wolff’s circular maximal function estimate. The estimates in [2] hold for curves that are only \(C^2\), which requires a very different proof from earlier work of Wolff and others on these problems.

Remark 3

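It is also an interesting question to ask for the estimate of the set

\[E:=\{\theta \in [0,1]: \mathcal {H}^1(\rho _\theta (A))=0\},\]

which consists of directions to which the projection of A has zero measure. We notice that recently Harris [3] proved that

\[\dim (E)\le \frac{4-\dim A}{3}. \tag{3}\]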

Intuitively, one may think of E as \(E_1\) (\(E_1\) is defined in (1)). The main result of this paper (2) yields \(\dim (E_1)\le \frac{3-\dim A}{2}\) which is better than the bound \(\frac{4-\dim A}{3}\). This shows that (3) cannot imply (2).
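
The comparison of the two bounds is a one-line computation. Writing \(\alpha =\dim A\) (a worked check added for illustration, taking the two bounds exactly as quoted in the remark):

\[\frac{4-\alpha }{3}-\frac{3-\alpha }{2}=\frac{2(4-\alpha )-3(3-\alpha )}{6}=\frac{\alpha -1}{6}.\]

So the bounds coincide at \(\alpha =1\), where both equal 1 and are trivial, and \(\frac{3-\alpha }{2}\) is strictly smaller for every \(\alpha >1\). For instance, \(\alpha =2\) gives \(\frac{1}{2}\) versus \(\frac{2}{3}\). For \(\alpha <1\) both expressions exceed 1 and carry no information, since the sets in question are subsets of \([0,1]\).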

Now we briefly discuss the history of projection theory. Projection theory dates back to Marstrand [4], who showed that if A is a Borel set in \(\mathbb R^2\), then the projection of A onto almost every line through the origin has Hausdorff dimension \(\min \{1,\dim A\}\). This was generalized to higher dimensions by Mattila [5], who showed that if A is a Borel set in \(\mathbb R^n\), then the projection of A onto almost every k-plane through the origin has Hausdorff dimension \(\min \{k,\dim A\}\). More recently, Fässler and Orponen [6] started to consider projection problems in which the direction set is restricted to some submanifold of the Grassmannian. Such problems are known as restricted projection problems. Fässler and Orponen made conjectures about restricted projections to lines and planes in \(\mathbb R^3\) (see Conjecture 1.6 in [6]). In this paper, we give an answer to the conjecture about the projections to lines.

2 Projection to One Dimensional Family of Lines

In this section, we prove Theorem 1. Theorem 1 will be a result of an incidence estimate that we are going to state later. Recall that \(\gamma :[0,1]\rightarrow \mathbb S^2\) is a non-degenerate curve.

Definition 1

For a number \(\delta >0\) and any set X, we use \(|X|_\delta \) to denote the maximal number of \(\delta \)-separated points in X.

Definition 2

(\((\delta ,s)\)-set) Let \(P\subset \mathbb R^n\) be a bounded set. Let \(\delta >0\) be a dyadic number, \(0\le s\le n\) and \(C>1\). We say that P is a \((\delta ,s,C)\)-set if

\[\#(P\cap B_r)\le C\Big (\frac{r}{\delta }\Big )^s\]

for any \(B_r\) being a ball of radius r with \(\delta \le r\le 1\).

For the purpose of this paper, C is always a fixed large number, say \(10^{10}\). For simplicity, we omit C and write the spacing condition as

\[\#(P\cap B_r)\lesssim \Big (\frac{r}{\delta }\Big )^s\]

and call such P a \((\delta ,s)\)-set.
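
A simple example may help fix the definition. It is read here as the counting condition \(\#(P\cap B_r)\le C(r/\delta )^s\) for \(\delta \le r\le 1\), and the set below is a hypothetical illustration, not one used in the paper. Take \(n=1\), \(0<s\le 1\), \(0<\delta <1\), and let \(P:=\delta ^s\mathbb Z\cap [0,1]\), so that \(\#P\sim \delta ^{-s}\). An interval \(B_r\) of length 2r contains at most \(2r\delta ^{-s}+1\) points of P, hence

\[\#(P\cap B_r)\le 2r\delta ^{-s}+1\le \begin{cases}3\le 3\big (\frac{r}{\delta }\big )^s, & \delta \le r\le \delta ^s,\\ 3r\delta ^{-s}\le 3\big (\frac{r}{\delta }\big )^s, & \delta ^s\le r\le 1.\end{cases}\]

The last inequality uses \(r\le r^s\), which holds because \(r\le 1\) and \(s\le 1\). Under these assumptions P is a \((\delta ,s,3)\)-set of cardinality comparable to \(\delta ^{-s}\), the largest cardinality the condition allows up to constants.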

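Let \(\mathcal {H}^t_\infty \) denote the t-dimensional Hausdorff content which is defined as

\[\mathcal {H}^t_\infty (B):=\inf \Big \{\sum _i r(B_i)^t: B\subset \bigcup _i B_i\Big \},\]

where the infimum is taken over countable coverings of B by balls \(B_i\) of radius \(r(B_i)\).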

We recall the following result (see [6] Lemma 3.13).

Lemma 1

Let \(\delta ,s>0\), and \(B\subset \mathbb R^n\) with \(\mathcal {H}_\infty ^s(B):=\kappa >0\). Then there exists a \((\delta ,s)\)-set \(P\subset B\) with cardinality \(\#P\gtrsim \kappa \delta ^{-s}\).

Next, we state a useful lemma whose proof can be found in [7, Lemma 2]. We remark that this type of argument was previously used by Katz and Tao (see [8, Lemma 7.5]). The lemma roughly says that given a set X of Hausdorff dimension less than s, then we can find a covering of X by squares of dyadic lengths which satisfy a certain s-dimensional condition. Let us use \(\mathcal {D}_{2^{-k}}\) to denote the lattice squares of length \(2^{-k}\) in \([0,1]^2\).

Lemma 2

Suppose \(X\subset [0,1]^2\) with \(\dim X< s\). Then for any \(\varepsilon >0\), there exist dyadic squares \(\mathcal {C}_{2^{-k}}\subset \mathcal {D}_{2^{-k}}\) \((k>0)\) so that

1. \(X\subset \bigcup _{k>0} \bigcup _{D\in \mathcal {C}_{2^{-k}}}D, \)
2. \(\sum _{k>0}\sum _{D\in \mathcal {C}_{2^{-k}}}r(D)^s\le \varepsilon \),
3. \(\mathcal {C}_{2^{-k}}\) satisfies the s-dimensional condition: For \(l<k\) and any \(D\in \mathcal {D}_{2^{-l}}\), we have \(\#\{D'\in \mathcal {C}_{2^{-k}}: D'\subset D\}\le 2^{(k-l)s}\).
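
One immediate consequence of the s-dimensional condition is worth recording as a small worked step. It assumes that \(l=0\) is admissible, so that \(D=[0,1]^2\) is the single square in \(\mathcal {D}_{2^{0}}\):

\[\#\mathcal {C}_{2^{-k}}=\#\{D'\in \mathcal {C}_{2^{-k}}: D'\subset [0,1]^2\}\le 2^{ks}=(2^{-k})^{-s}.\]

In other words, at scale \(\delta =2^{-k}\) the covering uses at most \(\delta ^{-s}\) squares, which is the kind of cardinality bound imposed on the slab families in Theorem 2 below.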

Remark 4

Besides \([0,1]^2\), this lemma holds for other compact metric spaces, for example \([0,1]^n\) or \(\mathbb S^2\). The proof is exactly the same.

Our main effort will be devoted to the proof of the following theorem.

Theorem 2

Fix \(0<s<1\), and let \(C_1>1\) be a constant. For each \(\varepsilon >0\), there exists \(C_{s,\varepsilon ,C_1}\) depending on \(s,\varepsilon \) and \(C_1\), so that the following holds. Let \(\delta >0\). Let \(H\subset B^3(0,1)\) be a union of disjoint \(\delta \)-balls and we use \(\#H\) to denote the number of \(\delta \)-balls in H. Let \(\Theta \) be a \(\delta \)-separated subset of [0, 1] such that \(\Theta \) is a \((\delta ,t)\)-set and \(\#\Theta \ge C_1^{-1} (\log \delta ^{-1})^{-2}\delta ^{-t}\) for some \(t>0\). Assume for each \(\theta \in \Theta \), we have a collection of \(\delta \times 1\times 1\)-slabs \(\mathbb S_\theta \) with normal direction \(\gamma (\theta )\). \(\mathbb S_\theta \) satisfies the s-dimensional condition:

1. \(\#\mathbb S_\theta \le C_1 \delta ^{-s}\),
2. \(\#\{S\in \mathbb S_\theta :S\cap B_r\ne \emptyset \}\le C_1 (\frac{r}{\delta })^{s}\), for any \(B_r\) being a ball of radius r \((\delta \le r\le 1)\).

We also assume that each \(\delta \)-ball contained in H intersects \(\ge C_1^{-1} |\log \delta ^{-1}|^{-2}\#\Theta \) many slabs from \(\cup _\theta \mathbb S_\theta \). Then

2.1 \(\delta \)-Discretization of the Projection Problem

We show Theorem 2 implies Theorem 1 in this subsection.

Proof of Theorem 1 assuming Theorem 2

Suppose \(A\subset \mathbb R^3\) is a Borel set of Hausdorff dimension \(\alpha \). We may assume \(A\subset B^3(0,1)\). Recall the definition of the exceptional set

\[E_s=\{\theta \in [0,1]: \dim (\rho _\theta (A))<s\}.\]

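If \(\dim (E_s)=0\), then there is nothing to prove. Therefore, we assume \(\dim (E_s)>0\). Recall that the t-dimensional Hausdorff content is given by

\[\mathcal {H}^t_\infty (B):=\inf \Big \{\sum _i r(B_i)^t: B\subset \bigcup _i B_i\Big \}.\]

A property of the Hausdorff dimension is that

\[\dim (B)=\sup \{t: \mathcal {H}^t_\infty (B)>0\}.\]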

We choose \(a<\dim (A),t<\dim (E_s)\). Then \(\mathcal {H}^t_\infty (E_s)>0\), and by Frostman’s lemma there exists a probability measure \(\nu _A\) supported on A satisfying \(\nu _A(B_r)\lesssim r^a\) for any \(B_r\) being a ball of radius r. We only need to prove

\[a\le 2+s-2t,\]

since then we can send \(a\rightarrow \dim (A)\) and \(t\rightarrow \dim (E_s)\). As a and t are fixed, we may assume \(\mathcal {H}^t_\infty (E_s)\sim 1\) is a constant.

Fix a \(\theta \in E_s\). By definition we have \(\dim \rho _\theta (A)<s\). We also fix a small number \(\epsilon _{\circ }\) which we will later send to 0. By Lemma 2, we can find a covering of \(\rho _\theta (A)\) by intervals \(\mathbb I_\theta =\{I\}\), each of which has length \(2^{-j}\) for some integer \(j>|\log _2\epsilon _{\circ }|\). We define \(\mathbb I_{\theta ,j}:=\{I\in \mathbb I_\theta : r(I)=2^{-j}\}\) (Here r(I) denotes the length of I). Lemma 2 yields the following properties:

For each j and r-interval \(I_r\subset l_\theta \), we have

For each \(\theta \in E_s\), we can find such a \(\mathbb I_\theta \). We also define the slab sets \(\mathbb S_{\theta ,j}:=\{\rho ^{-1}_\theta (I): I\in \mathbb I_{\theta ,j}\}\cap B^3(0,1)\), \(\mathbb S_{\theta }:=\bigcup _j\mathbb S_{\theta ,j}\). Each slab in \(\mathbb S_{\theta ,j}\) has dimensions \(2^{-j}\times 1\times 1\) and normal direction \(\gamma (\theta )\). One easily sees that \(A\subset \bigcup _{S\in \mathbb S_\theta }S\). By pigeonholing, there exists \(j(\theta )\) such that

For each \(j>|\log _2\epsilon _{\circ }|\), define \(E_{s,j}:=\{\theta \in E_s: j(\theta )=j\}\). Then we obtain a partition of \(E_s\):

By pigeonholing again, there exists j such that

In the rest of the proof, we fix this j. We also set \(\delta =2^{-j}\). By Lemma 1, there exists a \((\delta ,t)\)-set \(\Theta \subset E_{s,j}\) with cardinality \(\#\Theta \gtrsim (\log \delta ^{-1})^{-2}\delta ^{-t}\).
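
The factor \((\log \delta ^{-1})^{-2}\) is the usual cost of pigeonholing over dyadic scales. The mechanism can be sketched as follows, assuming thresholds of the form \(\frac{1}{10j^2}\); the probability measure \(\nu \) and the sets \(A_j\) are generic notation for this illustration and the exact constants are not taken from the text. Since

\[\sum _{j\ge 1}\frac{1}{10j^2}=\frac{\pi ^2}{60}<1,\]

if \(\nu (A)=1\) and \(A\subset \bigcup _j A_j\), then some j must satisfy \(\nu (A_j)\ge \frac{1}{10j^2}\); otherwise \(1=\nu (A)\le \sum _j\nu (A_j)<\frac{\pi ^2}{60}\), a contradiction. With \(\delta =2^{-j}\) one has \(j=\log _2\delta ^{-1}\), so the loss \(j^{-2}\) is exactly \((\log _2\delta ^{-1})^{-2}\).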

Next, we consider the set \(U:=\{(x,\theta )\in A\times \Theta : x\in \cup _{S\in \mathbb S_{\theta ,j}}S \}\). We also use \(\mu \) to denote the counting measure on \(\Theta \) (note that \(\Theta \) is a finite set). Define the section of U:

By (6) and Fubini, we have

This implies

since

By (9), we have

We are ready to apply Theorem 2. Recall \(\delta =2^{-j}\) and \(\#\Theta \gtrsim (\log \delta ^{-1})^{-2}\delta ^{-t}\). By (11) and noting that \(\nu _A(B_\delta )\lesssim \delta ^{a}\), we can find a \(\delta \)-separated subset of \(\{x\in A: \# U_x\ge \frac{1}{20j^2}\#\Theta \}\) with cardinality \(\gtrsim (\log \delta ^{-1})^{-2}\delta ^{-a}\). We denote the \(\delta \)-neighborhood of this set by H, which is a union of \(\delta \)-balls. For each \(\delta \)-ball \(B_\delta \) contained in H, we see that there are \(\gtrsim (\log \delta ^{-1})^{-2}\#\Theta \) many slabs from \(\cup _{\theta \in \Theta }\mathbb S_{\theta ,j}\) that intersect \(B_\delta \). We can now apply Theorem 2 to obtain

Letting \(\epsilon _{\circ }\rightarrow 0\) (and hence \(\delta \rightarrow 0\)) and then \(\varepsilon \rightarrow 0\), we obtain \(a\le 2+s-2t\). \(\square \)
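
For completeness, the final inequality translates into the bound of Theorem 1 by elementary rearrangement (a worked step added for illustration):

\[a\le 2+s-2t\quad \Longleftrightarrow \quad t\le 1+\frac{s-a}{2}.\]

Sending \(a\rightarrow \dim (A)=\alpha \) and \(t\rightarrow \dim (E_s)\) gives \(\dim (E_s)\le 1+\frac{s-\alpha }{2}\) whenever \(\dim (E_s)>0\). Combined with the trivial case \(\dim (E_s)=0\), this is the bound \(\dim (E_s)\le \max \{0,1+\frac{s-\alpha }{2}\}\).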

2.2 Proof of Theorem 2

For convenience, we will prove the following version of Theorem 2 after rescaling \(x\mapsto \delta ^{-1}x\).
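
The effect of the rescaling \(x\mapsto \delta ^{-1}x\) on the objects in Theorem 2 can be summarized in a short dictionary (added for illustration; \(r'\) is notation introduced here for the rescaled radius):

\[\begin{array}{lcl}\delta \text{-ball} & \mapsto & \text{unit ball},\\ B^3(0,1) & \mapsto & B^3(0,\delta ^{-1}),\\ \delta \times 1\times 1\text{-slab} & \mapsto & 1\times \delta ^{-1}\times \delta ^{-1}\text{-slab},\\ B_r\ (\delta \le r\le 1) & \mapsto & B_{r'}\ (r'=r/\delta ,\ 1\le r'\le \delta ^{-1}),\\ C_1(r/\delta )^s & \mapsto & C_1(r')^s.\end{array}\]

A dilation does not change directions, so the normal direction \(\gamma (\theta )\) of each slab and the set \(\Theta \) are unaffected.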

Theorem 3

Fix \(0<s<1\), and let \(C_1>1\) be a constant. For each \(\varepsilon >0\), there exists \(C_{s,\varepsilon ,C_1}\) depending on \(s,\varepsilon \) and \(C_1\), so that the following holds. Let \(\delta >0\). Let \(H\subset B^3(0,\delta ^{-1})\) be a union of \(\delta ^{-a}\) many disjoint unit balls. Let \(\Theta \) be a \(\delta \)-separated subset of [0, 1] so that \(\Theta \) is a \((\delta ,t)\)-set and \(\#\Theta \ge C_1^{-1}(\log \delta ^{-1})^{-2}\delta ^{-t}\). Assume for each \(\theta \in \Theta \), we have a collection of \(1\times \delta ^{-1}\times \delta ^{-1}\)-slabs \(\mathbb S_\theta \) with normal direction \(\gamma (\theta )\). \(\mathbb S_\theta \) satisfies the s-dimensional condition:

1. \(\#\mathbb S_\theta \le C_1 \delta ^{-s}\),
2. \(\#\{S\in \mathbb S_\theta :S\cap B_r\ne \emptyset \}\le C_1 r^{s}\), for any \(B_r\) being a ball of radius r \((1\le r\le \delta ^{-1})\).

We also assume that each unit ball contained in H intersects \(\ge C_1^{-1} |\log \delta ^{-1}|^{-2}\#\Theta \) many slabs from \(\cup _\theta \mathbb S_\theta \). Then

We define the cone

For any large scale R, there is a standard partition of \(N_{R^{-1}}\Gamma \) into planks \(\sigma _{R^{-1/2}}\) of dimensions \(R^{-1}\times R^{-1/2}\times 1\):

\[N_{R^{-1}}\Gamma =\bigsqcup \sigma _{R^{-1/2}}.\]

Here, the subscript of \(\sigma _{R^{-1/2}}\) denotes its angular size. For any function f and plank \(\sigma =\sigma _{R^{-1/2}}\), we define \(f_\sigma :=(1_\sigma \widehat{f})^\vee \) as usual. The main tool we need is the following fractal small cap decoupling for the cone \(\Gamma \).

Theorem 4

(fractal small cap decoupling) Suppose \(N_\delta (\Gamma )=\bigcup \gamma \), where each \(\gamma \) is a \(\delta \times \delta \times 1\)-cap. Given a function g, we say g is t-spacing if \(\textrm{supp}\widehat{g}\subset \cup _{\gamma \in \Gamma _g}\gamma \), where \(\Gamma _g\) is a set of \(\delta \times \delta \times 1\)-caps from the partition of \(N_\delta (\Gamma )\) and satisfies:

If g is t-spacing, then we have

Small cap decoupling for the cone was studied by the second and third authors in [9], where they proved amplitude-dependent versions of the wave envelope estimates (Theorem 1.3) of [10]. Wave envelope estimates are a more refined version of square function estimates, and sharp small cap decoupling is a straightforward corollary. For certain choices of conical small caps, the critical \(L^{p_c}\) exponent is \(p_c=4\) (as is the case in our Theorem 4). When \(p_c=4\), the sharp small cap decoupling inequalities follow already from the wave envelope estimates of [10]. A version of this was first observed in Theorem 3.6 of [11] and was later thoroughly explained in §10 of [9]. To prove Theorem 4 above, we repeat the derivation of small cap decoupling from the wave envelope estimates of [10] but incorporate the extra ingredient of t-spacing.

Remark 5

We will actually apply Theorem 4 to a slightly different cone

Compared with \(\Gamma \), we see that \(\Gamma _{K^{-1}}\) is at distance \(K^{-1}\) from the origin, but we still have a similar fractal small cap decoupling for \(\Gamma _{K^{-1}}\). Instead of (14), we have

The idea is to partition \(\Gamma _{K^{-1}}\) into \(\sim K\) many parts, each of which is roughly a cone that we can apply Theorem 4 to. By triangle inequality, it gives (16) with an additional factor \(K^{O(1)}\). It turns out that this factor is not harmful, since we will set \(K\sim (\log \delta ^{-1})^{O(1)}\) which can be absorbed into \(\delta ^{-\varepsilon }\).
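
The claim that a power of \(\log \delta ^{-1}\) can be absorbed into \(\delta ^{-\varepsilon }\) follows from an elementary inequality. In the sketch below, \(M>0\) stands for the unspecified exponent hidden in \(O(1)\) and \(\eta \) is an auxiliary parameter; both are notation introduced for this illustration. For \(x\ge 1\) and \(\eta >0\) we have \(\eta \log x=\log (x^\eta )<x^\eta \), so

\[\log x<\frac{x^{\eta }}{\eta }\quad \Longrightarrow \quad (\log \delta ^{-1})^{M}\le \Big (\frac{M}{\varepsilon }\Big )^{M}\delta ^{-\varepsilon }\qquad (0<\delta \le 1),\]

where the second inequality is the first applied with \(x=\delta ^{-1}\) and \(\eta =\varepsilon /M\). The constant \((M/\varepsilon )^M\) depends only on M and \(\varepsilon \), not on \(\delta \).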

We postpone the proof of Theorem 4 to the next subsection, and first show how it implies Theorem 3.

Proof of Theorem 3 assuming Theorem 4

Since the \(C_1\) in Theorem 3 is a constant, we just absorb it into the notation \(\sim \) or \(\lesssim \) for simplicity. We consider the dual of each \(S_\theta \in \mathbb S_\theta \) in the frequency space. For each \(\theta \in \Theta \), we define \(\tau _\theta \) to be a tube centered at the origin that has dimensions \(\delta \times \delta \times 1\), and its direction is \(\gamma (\theta )\). We see that \(\tau _\theta \) is the dual of each \(S_\theta \in \mathbb S_\theta \). Now, for each \(S_\theta \in \mathbb S_\theta \), we choose a bump function \(\psi _{S_\theta }\) satisfying the following properties: \(\psi _{S_\theta }\ge 1\) on \(S_\theta \), \(\psi _{S_\theta }\) decays rapidly outside \(S_\theta \), and \(\textrm{supp}\widehat{\psi }_{S_\theta }\subset \tau _\theta \).

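Define functions

\[f_\theta :=\sum _{S_\theta \in \mathbb S_\theta }\psi _{S_\theta },\qquad f:=\sum _{\theta \in \Theta }f_\theta .\]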

From our definitions, we see that for any \(x\in H\), we have \(f(x)\gtrsim (\log \delta ^{-1})^{-2}\#\Theta \). Therefore, we obtain

where “\(\lessapprox \)" means “\(\lesssim (\log \delta ^{-1})^{O(1)}\)".

Next, we will do a high-low decomposition for each \(\tau _\theta \).

Definition 3

Let K be a large number which we will choose later. Define the high part of \(\tau _\theta \) as

Define the low part of \(\tau _\theta \) as

We choose a smooth partition of unity adapted to the covering \(\tau _\theta =\tau _{\theta ,high}\bigcup \tau _{\theta ,low}\) which we denote by \(\eta _{\theta ,high}, \eta _{\theta ,low}\), so that

\[\eta _{\theta ,high}+\eta _{\theta ,low}=1\]

on \(\tau _{\theta }\). The key observation is that \(\{\textrm{supp}\widehat{\eta }_{\theta ,high}\}_\theta \) are at most O(K)-overlapping and form a canonical covering of \(N_\delta (\Gamma _{K^{-1}})\). (See the definition of \(\Gamma _{K^{-1}}\) in (15)).

Since \(\textrm{supp}\widehat{f}_\theta \subset \tau _\theta \), we also obtain a decomposition of \(f_\theta \)

\[f_\theta =f_{\theta ,high}+f_{\theta ,low},\]

where \(\widehat{f}_{\theta ,high}=\eta _{\theta ,high} \widehat{f}_\theta , \widehat{f}_{\theta ,low}=\eta _{\theta ,low}\widehat{f}_{\theta }.\) Similarly, we have a decomposition of f

\[f=f_{high}+f_{low},\]

where \(f_{high}=\sum _{\theta }f_{\theta ,high}, f_{low}=\sum _\theta f_{\theta ,low}.\)

Recall that for \(x\in H\), we have

\[f(x)\gtrsim (\log \delta ^{-1})^{-2}\#\Theta .\]

We will show that by properly choosing K, we have

Recall that \(f_{low}=\sum _\theta f_\theta *\eta ^\vee _{\theta ,low}\). Since \(\eta _{\theta ,low}\) is a bump function at \(\tau _{\theta ,low}\), we see that \(\eta _{\theta ,low}^\vee \) is an \(L^1\)-normalized bump function essentially supported in the dual of \(\tau _{\theta ,low}\). Denote the dual of \(\tau _{\theta ,low}\) by \(S_{\theta ,K}\) which is a \(\delta ^{-1}\times \delta ^{-1}\times K\)-slab whose normal direction is \(\gamma (\theta )\). One actually has

Here, \(\psi _{S_{\theta ,K}}\) is a bump function that equals 1 on \(S_{\theta ,K}\) and decays rapidly outside \(S_{\theta ,K}\). Ignoring the rapidly decaying tails, we have

Recalling the condition (2) in Theorem 3, we have

This implies

Since \(s<1\), by choosing \(K\sim (\log \delta ^{-1})^{\frac{2}{1-s}}\), we obtain (20). This shows that for \(x\in H\), we have

We define \(g=f_{high}\). By Remark 5, we actually see that \(\{\tau _{\theta ,high}\}\) form a K-overlapping covering of \(N_\delta (\Gamma _{K^{-1}})\), and we have the decoupling inequality (16). By (17), we have

By (16), it is further bounded by

Since the slabs in \(\mathbb S_\theta \) are essentially disjoint, the above expression is bounded by

This implies \((\#\Theta )^4\# H\lesssim _\varepsilon \delta ^{2t-s-1-\varepsilon }\). \(\square \)

2.3 Proof of Theorem 4

The proof of Theorem 4 is based on an inequality of Guth, Wang and Zhang. Let us first introduce some notation from their paper [10]. Let \(\Gamma _{\circ }\) denote the standard cone in \(\mathbb R^3\):

We can partition the \(\delta \)-neighborhood of \(\Gamma _{\circ }\) into \(\delta \times \delta ^{1/2}\times 1\)-planks \(\Sigma =\{\sigma \}\):

\[N_\delta (\Gamma _{\circ })=\bigsqcup _{\sigma \in \Sigma }\sigma .\]

More generally, for any dyadic s in the range \(\delta ^{1/2}\le s\le 1\), we can partition the \(s^2\)-neighborhood of \(\Gamma _{\circ }\) into \(s^2\times s\times 1\)-planks \(\mathcal T_s=\{\tau _s\}\):

\[N_{s^2}(\Gamma _{\circ })=\bigsqcup _{\tau _s\in \mathcal T_s}\tau _s.\]

For each s and frequency plank \(\tau _s\in \mathcal T_s\), we define the box \(U_{\tau _s}\) in the physical space to be a rectangle centered at the origin of dimensions \(\delta ^{-1}\times \delta ^{-1}s\times \delta ^{-1}s^2\) whose edge of length \(\delta ^{-1}\) (respectively \(\delta ^{-1}s\), \(\delta ^{-1}s^2\)) is parallel to the edge of \(\tau _s\) with length \(s^2\) (respectively s, 1). Note that for any \(\sigma \in \Sigma \), \(U_\sigma \) is just the dual rectangle of \(\sigma \). Also, \(U_{\tau _s}\) is the convex hull of \(\cup _{\sigma \subset \tau _s}U_{\sigma }\).
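
The dimensions of \(U_{\tau _s}\) can be checked at the two extreme scales (a worked check added for illustration). The box dual to an \(s^2\times s\times 1\)-plank has dimensions \(s^{-2}\times s^{-1}\times 1\), and dilating it by \(\delta ^{-1}s^2\) gives

\[\delta ^{-1}s^2\cdot \big (s^{-2},\ s^{-1},\ 1\big )=\big (\delta ^{-1},\ \delta ^{-1}s,\ \delta ^{-1}s^2\big ).\]

At \(s=\delta ^{1/2}\) this is \(\delta ^{-1}\times \delta ^{-1/2}\times 1\), whose side lengths are the reciprocals of those of a \(\delta \times \delta ^{1/2}\times 1\)-plank \(\sigma \), consistent with \(U_\sigma \) being the dual rectangle of \(\sigma \). At \(s=1\) it is a cube of side length \(\delta ^{-1}\).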

If U is a translated copy of \(U_{\tau _s}\), then we define \(S_U f\) by

\[S_Uf:=\Big (\sum _{\sigma \subset \tau _s}|f_\sigma |^2\Big )^{1/2}\varvec{1}_U.\]

We can think of \(S_U f\) as the wave envelope of f localized in U in the physical space and localized in \(\tau _s\) in the frequency space. We have the following inequality of Guth, Wang and Zhang (see [10] Theorem 1.5):

Theorem 5

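(Wave envelope estimate) Suppose \(\textrm{supp}\widehat{f}\subset N_{\delta }(\Gamma _{\circ })\). Then

\[\Vert f\Vert _4^4\le C_\varepsilon \delta ^{-\varepsilon }\sum _{\delta ^{1/2}\le s\le 1}\ \sum _{\tau _s\in \mathcal T_s}\ \sum _{U\parallel U_{\tau _s}}|U|^{-1}\Vert S_Uf\Vert _2^4,\]

where the first sum is over dyadic s.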

Although the theorem above is stated for the standard cone \(\Gamma _\circ \), it is also true for a general cone \(\Gamma \) (see (12)). The Appendix of [9] shows how to adapt the inductive proof of Guth-Wang-Zhang for \(\Gamma _\circ \) to the case of a general cone \(\Gamma \).

As we did for \(\Gamma _\circ \), we can also define the \(\delta \times \delta ^{1/2}\times 1\)-planks \(\Sigma =\{\sigma \}\) and \(s^2\times s\times 1\)-planks \(\mathcal T_s=\{\tau _s\}\), which form a partition of a certain neighborhood of \(\Gamma \). We can similarly define the wave envelope \(S_U f\) for \(\textrm{supp}\widehat{f}\subset N_{\delta }(\Gamma )\). We have the following estimate for a general cone.

Theorem 6

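(Wave envelope estimate for general cone) Suppose \(\textrm{supp}\widehat{f}\subset N_{\delta }(\Gamma )\). Then

\[\Vert f\Vert _4^4\le C_\varepsilon \delta ^{-\varepsilon }\sum _{\delta ^{1/2}\le s\le 1}\ \sum _{\tau _s\in \mathcal T_s}\ \sum _{U\parallel U_{\tau _s}}|U|^{-1}\Vert S_Uf\Vert _2^4.\]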

We are ready to prove Theorem 4.

Proof of Theorem 4

By pigeonholing, we can assume all the wave packets of \(g_\gamma \) have amplitude \(\sim 1\), so we have

Applying Theorem 6 to g, we have

For fixed \(s, \tau _s, U\parallel U_{\tau _s}\), let us analyze the quantity \(\Vert S_Ug\Vert _2^2\) on the right hand side. By definition,

Note that U has dimensions \(\delta ^{-1}\times \delta ^{-1}s\times \delta ^{-1}s^2\), so its dual \(U^*\) has dimensions \(\delta \times \delta s^{-1}\times \delta s^{-2}\). We will apply local orthogonality to each \(g_\sigma \) on U. Let \(\{\beta \}\) be a set of \((\delta s^{-1})^2\times \delta s^{-1}\times 1\)-planks that form a finitely overlapping covering of \(N_{\delta s^{-1}}(\Gamma )\). We see that \(U^*\) and each \(\beta \) have the same angular size \(\delta s^{-1}\). For the reader’s convenience, we recall that we have defined three families of planks: \(\{\gamma :\gamma \in \Gamma _g\}\) of dimensions \(\delta \times \delta \times 1\); \(\{\beta \}\) of dimensions \((\delta s^{-1})^2\times \delta s^{-1}\times 1\); \(\{\sigma \}\) of dimensions \(\delta \times \delta ^{1/2}\times 1\).

Since \(\delta ^{1/2}\le s\le 1\), we have the nested property for these planks: each \(\gamma (\in \Gamma _g)\) is contained in 100-dilation of some \(\beta \) and each \(\beta \) is contained in 100-dilation of some \(\sigma \). We simply denote this relationship by \(\gamma \subset \beta , \beta \subset \sigma \). We can write

Choose a smooth bump function \(\psi _U\) at U satisfying: \(|\psi _U|\gtrsim \varvec{1}_U\), \(\psi _U\) decays rapidly outside U, and \(\widehat{\psi }_U\) is supported in \(U^*\). We have

Since \(\textrm{supp}(\psi _U g_\beta )^\wedge \subset U^*+\beta \) and by a geometric observation that \(\{U^*+\beta \}_{\beta \subset \sigma }\) are finitely overlapping, we have

Summing over \(\sigma \subset \tau _s\), we get

Therefore, we have

The last inequality is by the \(L^2\) orthogonality.

Noting (24), we have

\(\square \)

Data availability

Data sharing is not applicable to this article as no datasets were generated or analysed in this article.

References

[1] Käenmäki, A., Orponen, T., Venieri, L.: A Marstrand-type restricted projection theorem in \(\mathbb{R}^{3}\). arXiv preprint arXiv:1708.04859 (2017)

[2] Pramanik, M., Yang, T., Zahl, J.: A Furstenberg-type problem for circles, and a Kaufman-type restricted projection theorem in \(\mathbb{R}^{3}\). arXiv preprint arXiv:2207.02259 (2022)

[3] Harris, T.L.: Length of sets under restricted families of projections onto lines. arXiv preprint arXiv:2208.06896 (2022)

[4] Marstrand, J.M.: Some fundamental geometrical properties of plane sets of fractional dimensions. Proc. Lond. Math. Soc. 3(1), 257–302 (1954)

[5] Mattila, P.: Hausdorff dimension, orthogonal projections and intersections with planes. Ann. Acad. Sci. Fenn. Ser. AI Math. 1(2), 227–244 (1975)

[6] Fässler, K., Orponen, T.: On restricted families of projections in \(\mathbb{R}^{3}\). Proc. Lond. Math. Soc. 109(2), 353–381 (2014)

[7] Gan, S., Guo, S., Guth, L., Harris, T.L., Maldague, D., Wang, H.: On restricted projections to planes in \(\mathbb{R}^{3}\). arXiv preprint arXiv:2207.13844 (2022)

[8] Katz, N.H., Tao, T.: Some connections between Falconer’s distance set conjecture and sets of Furstenburg type. N. Y. J. Math. 7, 149–187 (2001)

[9] Maldague, D., Guth, L.: Amplitude dependent wave envelope estimates for the cone in \(\mathbb{R}^{3}\) (2022)

[10] Guth, L., Wang, H., Zhang, R.: A sharp square function estimate for the cone in \(\mathbb{R}^{3}\). Ann. Math. 192(2), 551–581 (2020)

[11] Demeter, C., Guth, L., Wang, H.: Small cap decouplings. Geom. Funct. Anal. 30(4), 989–1062 (2020)

Funding

Open Access funding provided by the MIT Libraries.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gan, S., Guth, L. & Maldague, D. An Exceptional Set Estimate for Restricted Projections to Lines in \(\mathbb R^3\). J Geom Anal 34, 15 (2024). https://doi.org/10.1007/s12220-023-01456-x
