Abstract

In this article, we consider the length functional defined on the space of immersed planar curves. The \(L^2(ds^\gamma )\) Riemannian metric gives rise to the curve shortening flow as the gradient flow of the length functional. Motivated by the vanishing of the \(L^2(ds^\gamma )\) Riemannian distance, we consider the gradient flow of the length functional with respect to the \(H^1(ds^\gamma )\)-metric. Circles with radius \(r_0\) shrink with \(r(t) = \sqrt{W(e^{c-2t})}\) under the flow, where W is the Lambert W function and \(c = r_0^2 + \log r_0^2\). We conduct a thorough study of this flow, giving existence of eternal solutions and convergence for general initial data, preservation of regularity in various spaces, qualitative properties of the flow after an appropriate rescaling, and numerical simulations.

1 Introduction

Consider the length functional

$$\begin{aligned} \mathcal{L}(\gamma) := \int_{\mathbb{S}^1} |\gamma'(u)|\,du \end{aligned} \tag{1}$$

defined on \(\text {Imm}^1\), the space of (once) continuously differentiable curves \(\gamma :\mathbb {S}^1\rightarrow \mathbb {R}^2\) with \(|\gamma '|\ne 0\). The definition of a gradient of \(\mathcal {L}\) requires a notion of direction on \(\text {Imm}^1\), that is, an inner product or more generally a Riemannian metric \({\langle \cdot , \cdot \rangle }\). The gradient is then characterised by \( d\mathcal {L}={\langle {{\,\textrm{grad}\,}}\mathcal {L}, \cdot \rangle }\).

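To calculate the (Gateaux) derivative \(d{\mathcal {L}}\) take a variation \(\gamma :(-\varepsilon ,\varepsilon )\times \mathbb {S}^1 \rightarrow \mathbb {R}^2\), \({\left. \partial _\varepsilon \gamma \right| }_{\varepsilon =0}=V\) and calculate

$$\begin{aligned} d\mathcal{L}_\gamma V = \left. \frac{d}{d\varepsilon } \int_{\mathbb{S}^1} |\partial_u\gamma|\,du \right|_{\varepsilon =0} = \int_{\mathbb{S}^1} \langle T, V_s\rangle \,ds^\gamma = -\int_{\mathbb{S}^1} \langle kN, V\rangle \,ds^\gamma . \end{aligned} \tag{2}$$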

Here the inner product is the Euclidean one, u is the given parameter along \(\gamma =(x,y)\), s is the Euclidean arc-length parameter with associated arc-length derivative \(\partial _s = |\partial _u\gamma |^{-1}\partial _u\) and measure \(ds^\gamma = |\partial _u\gamma |\,du\), \(T=(x_s,y_s)\) is the unit tangent vector, k the curvature scalar, and \(N = (-y_s,x_s)\) is the normal vector.
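The first-variation formula can be checked numerically on a closed polygon, where the discrete analogue of \(\int \langle T, V_s\rangle \,ds^\gamma \) is a sum over edges. The following is a minimal sketch, not part of the original argument; the helper names (`closed_polygon`, `first_variation`, `finite_difference`) and the test curve and variation field are hypothetical choices made for illustration.

```python
import math

def closed_polygon(n, f):
    """Sample a closed curve f: [0,1) -> R^2 at n evenly spaced parameters."""
    return [f(i / n) for i in range(n)]

def length(pts):
    """Length of the closed polygon through pts."""
    n = len(pts)
    return sum(math.dist(pts[i], pts[(i + 1) % n]) for i in range(n))

def first_variation(pts, vec):
    """Discrete analogue of the integral of <T, V_s> ds:
    sum over edges of <unit edge vector, V_{i+1} - V_i>."""
    n = len(pts)
    total = 0.0
    for i in range(n):
        j = (i + 1) % n
        ex, ey = pts[j][0] - pts[i][0], pts[j][1] - pts[i][1]
        dvx, dvy = vec[j][0] - vec[i][0], vec[j][1] - vec[i][1]
        total += (ex * dvx + ey * dvy) / math.hypot(ex, ey)
    return total

def finite_difference(pts, vec, eps=1e-6):
    """Central difference of length along the variation pts + eps * vec."""
    plus = [(p[0] + eps * v[0], p[1] + eps * v[1]) for p, v in zip(pts, vec)]
    minus = [(p[0] - eps * v[0], p[1] - eps * v[1]) for p, v in zip(pts, vec)]
    return (length(plus) - length(minus)) / (2 * eps)

# Hypothetical test data: an ellipse and an arbitrary smooth variation field.
two_pi = 2 * math.pi
gamma = closed_polygon(400, lambda u: (2 * math.cos(two_pi * u), math.sin(two_pi * u)))
V = closed_polygon(400, lambda u: (math.sin(2 * two_pi * u), math.cos(two_pi * u) ** 2))

formula = first_variation(gamma, V)
numeric = finite_difference(gamma, V)
print(formula, numeric)
```

For polygons the edge sum is the exact derivative of the discrete length, so the two printed values should agree up to finite-difference and rounding error.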

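As for the inner product or Riemannian metric in \(\text {Imm}^1\), there are many possibilities. For instance, we might choose either of

$$\begin{aligned} \langle v, w\rangle_{L^2} := \int_{\mathbb{S}^1} \langle v, w\rangle \,du \qquad \text{or} \qquad \langle v, w\rangle_{L^2(ds^\gamma )} := \int_{\mathbb{S}^1} \langle v, w\rangle \,ds^\gamma \end{aligned}$$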

for v, w vector fields along \(\gamma \). The former is simpler, but from the point of view of geometric analysis (and in particular geometric flows) the latter is preferable because it is invariant under reparametrisation of \(\gamma \), and this invariance carries through to the corresponding gradient flow (see Sect. 3). Both of the metrics above are Riemannian, with the flat \(L^2\)-metric being independent of the base point whereas the \(L^2(ds^\gamma )\) metric depends on the base point \(\gamma \) through the measure \(ds^\gamma \). We use the superscript \(\gamma \) to highlight this dependence.

1.1 The Gradient Flow for Length in \((\text {Imm}^1,L^2(ds^\gamma ))\)

The calculation (2) shows that the gradient flow of length in the \(L^2(ds^\gamma )\) metric is the famous curve shortening flow proposed by Gage–Hamilton [13]:

$$\begin{aligned} \partial_t X = \partial_s^2 X = kN \end{aligned} \tag{3}$$

where \(X:\mathbb {S}^1\times (0,T)\rightarrow \mathbb {R}^2\) is a one-parameter family of immersed regular closed curves, \(X(u,t) = (x(u,t),y(u,t))\) and s, T, k, N are as above.

The curve shortening flow moves each point along a curve in the direction of the curvature vector at that point. Concerning local and global behaviour of the flow, we have:

Theorem 1.1

(Angenent [4], Grayson [15], Gage-Hamilton [13], Ecker-Huisken [10]) Consider a locally Lipschitz embedded curve \(X_0\). There exists a curve shortening flow \(X:\mathbb {S}^1\times (0,T)\rightarrow \mathbb {R}^2\) such that \(X(\cdot ,t)\rightarrow X_0\) as \(t\searrow 0\) in the \(C^{1/2}\)-topology. The maximal time of smooth existence for the flow is finite, and as \(t\nearrow T\), \(X(\cdot ,t)\) shrinks to a point \(\{p\}\). The normalised flow with length or area fixed exists for all time. It becomes eventually convex, and converges exponentially fast to a standard round circle in the smooth topology.

Remark 1.2

In the theorem above, we make the following attributions. Angenent [4] showed that the curve shortening flow exists with locally Lipschitz data where convergence as \(t\searrow 0\) is in the continuous topology. Ecker-Huisken’s interior estimates in [10] extend this to the \(C^{1/2}\)-topology. Gage-Hamilton [13] showed that a convex curve contracts to a round point, whereas Grayson [15] proved that any embedded curve becomes eventually convex. There are a number of ways that this can be proved; for instance we also mention Huisken’s distance comparison [17] and the novel optimal curvature estimate method in [1].

The curve shortening flow has been extensively studied and found many applications. We refer the interested reader to the recent book [2].

1.2 Vanishing Riemannian Distance in \((\text {Imm}^1,L^2(ds^\gamma ))\)

Every Riemannian metric induces a distance function defined as the infimum of lengths of paths joining two points. For finite dimensional manifolds the resulting path-metric space has the same topology as the manifold, but for infinite-dimensional manifolds it is possible that the path-metric space topology is weaker (so-called weak Riemannian metrics) or even trivial. The first explicit example of this (the \(L^2\)-Hofer-metric on the symplectomorphism group) is given in [11].

This was also demonstrated by Michor-Mumford in [22]. The example given by Michor-Mumford is the space \((\mathcal {Q},L^2(ds^\gamma ))\), where \(\mathcal {Q}= \text {Imm}^1 / \text {Diff}(\mathbb {S}^1)\).Footnote 1 While \(\text {Imm}^1\) is an open subset of \(C^1(\mathbb {S}^1,\mathbb {R}^2)\) and so \((\text {Imm}^1,L^2(ds^\gamma ))\) is a Riemannian manifold, the action of \(\text {Diff}(\mathbb {S}^1)\) is not free (see [22, Sections 2.4 and 2.5]), and so the quotient \(\mathcal {Q}\) is not a manifold (it is an orbifold).

Theorem 2.1

(Michor-Mumford [22]) The Riemannian distance in \((\mathcal {Q}, L^2(ds^\gamma ))\) is trivial.

This surprising fact is shown by an explicit construction in [22] of a path between orbits with arbitrarily small \(L^2(ds^\gamma )\)-length, which for the benefit of the reader we briefly recall in Sect. 2.1. A natural question arising from Michor-Mumford’s work is if the induced metric topology on the Riemannian manifold \((\text {Imm}^1,L^2(ds^\gamma ))\) is also trivial. This was confirmed in [5] as a special case of a more general result.

Theorem 2.2

The Riemannian distance in \((\text {Imm}^1, L^2(ds^\gamma ))\) is trivial.

Here we give a different proof of Theorem 2.2 using a detour through small curves. The setup and proof are given in detail in Sect. 2.1.

We can see from (2) that the curve shortening flow (3) is indeed the \(L^2(ds^\gamma )\)-gradient flow of the length functional in \(\text {Imm}^1\), not the quotient \(\mathcal {Q}\). Theorem 2.2 yields that the underlying metric space that the curve shortening flow is defined upon is trivial, and therefore this background metric space structure is useless in the analysis of the flow.

While it could conceivably be true that the triviality of the Riemannian metric topology on \((\text {Imm}^1, L^2(ds^\gamma ))\) is important for the validity of Theorem 1.1 and the other nice properties that the curve shortening flow enjoys, one naturally wonders if this is in fact the case. What do gradient flows of length look like on \(\text {Imm}^1\), with other choices of Riemannian metric? For instance, we can ask whether these flows avoid the finite-time singularities of the classical curve shortening flow, whether they have unique asymptotic profiles for more general data, whether they reduce the isoperimetric deficit, and so on.

1.3 The Gradient Flow for Length in \((\text {Imm}^1,H^1(ds^\gamma ))\)

We wish to choose a metric that yields a non-trivial Riemannian distance. One way of doing this (similar to that described by Michor–Mumford [22]) is to view the \(L^2(ds^\gamma )\) Riemannian metric as an element on the Sobolev scale of metrics (as the \(H^0(ds^\gamma )\) metric). The next simplest choice is therefore the \(H^1(ds^\gamma )\) metric:

$$\begin{aligned} \langle v, w\rangle_{H^1(ds^\gamma )} := \int_{\mathbb{S}^1} \langle v, w\rangle + \langle v_s, w_s\rangle \,ds^\gamma . \end{aligned} \tag{4}$$

Note that we have set the parameter A from [22, Section 3.2, Equation (5)] to 1 and we are considering the full space, not the quotient. In contrast to the \((\mathcal {Q},L^2(ds^\gamma ))\) case, it is shown in [22] that the Riemannian distance on \((\mathcal {Q}, H^1(ds^\gamma ))\) is non-trivial. Therefore the Riemannian distance also does not vanish on the larger space \((\text {Imm}^1,H^1(ds^\gamma ))\).Footnote 2 The \(H^1(ds^\gamma )\)-gradient flow for length is our primary object of study in this paper.

Remark 1.3

We remark that, as will be discussed in Sect. 3, the \(H^1(ds^\gamma )\) metric is not scale invariant or even homogeneous. Homogeneous metrics can be constructed by introducing appropriate factors of \({\mathcal {L}}(\gamma )\) into (4). At least at a first glance, the presence of such factors appears to complicate things. Therefore, we defer investigation of the gradient flows of length with respect to such metrics to a future paper.

Remark 1.4

There is an expanding literature on the multitude of alternative metrics proposed for quantitative comparison of shapes in imaging applications (see for example [6, 7, 12, 18, 20, 22, 24, 28, 29, 30, 31, 32, 33]). It might be interesting to compare the dynamical properties of the gradient flows of length on \(\text {Imm}^1\) with respect to other Riemannian metrics. Although our paper is the first study of the analytical properties of the \(H^1(ds^\gamma )\)-gradient flow on \(\text {Imm}^1\), the study of Sobolev type gradients is far from new. We mention the comprehensive book on the topic by Neuberger [25], and the flow studied in [29] for applications to active contours is closely related to the one we study here. A recurring theme seems to be better numerical stability for the Sobolev gradient compared to its \(L^2\) counterpart.

The steepest descent \(H^1(ds^\gamma )\)-gradient flow for length (called the \(H^1(ds^\gamma )\) curve shortening flow) on maps in \(\text {Imm}^1\) is a one-parameter family of maps \(X:\mathbb {S}^1\times I\rightarrow \mathbb {R}^2\) (I an interval containing zero) where for each t, \(X(\cdot ,t)\in \text {Imm}^1\) and

where \(d{\tilde{s}}^X = |\partial _uX|({\tilde{u}},t) d{\tilde{u}}\) and G is given by

Note that above X is often used to denote \(X(\cdot ,t)\). Our derivation of this is contained in Sect. 4.1.

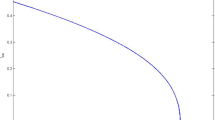

An instructive example of the flow’s behavior is exhibited by taking any standard round circle as initial data. The circle will shrink self-similarly to a point under the flow, taking infinite time to do so. Circle solutions can be extended uniquely and indefinitely in negative time as well, that is, they are eternal solutions (see also Sect. 4.1).
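The closed form for shrinking circles quoted in the abstract, \(r(t) = \sqrt{W(e^{c-2t})}\) with \(c = r_0^2 + \log r_0^2\), is easy to evaluate. The sketch below is illustrative only: `lambert_w` is a hand-rolled Newton iteration for the principal branch (an assumption made to keep the example free of external libraries), and `circle_radius` is a hypothetical helper name.

```python
import math

def lambert_w(x, tol=1e-14, max_iter=100):
    """Principal branch W(x) for x > 0, via Newton's method on w * exp(w) = x."""
    w = math.log1p(x)  # starting guess; satisfies w * exp(w) >= x for x > 0
    for _ in range(max_iter):
        ew = math.exp(w)
        step = (w * ew - x) / (ew * (w + 1.0))
        w -= step
        if abs(step) <= tol * abs(w):
            break
    return w

def circle_radius(t, r0):
    """Radius at time t of the circle solution with radius r0 at t = 0."""
    c = r0 ** 2 + math.log(r0 ** 2)
    return math.sqrt(lambert_w(math.exp(c - 2.0 * t)))

r0 = 2.0
for t in (-1.0, 0.0, 1.0, 2.0, 5.0):
    print(t, circle_radius(t, r0))
```

Since \(W(x)e^{W(x)} = x\), the computed radius satisfies \(r^2 + \log r^2 = c - 2t\). In particular it is positive for every real t, and it tends to zero only as \(t\rightarrow \infty \), in line with the eternal behaviour described above. For very negative t the exponential overflows in floating point, so the sketch is meant for moderate times only.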

The flow (5) makes sense on the larger space \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) where \(\mathcal {C}\) is the space of constant maps. It cannot be characterised as the gradient flow of length with respect to the \(H^1(ds^\gamma )\) metric on this larger space, because the metric is not well-defined on curves that are not immersed. However, this does not cause an issue for the existence and uniqueness of the flow: we are able to obtain eternal solutions for any initial data \(X_0\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\). This is Theorem 4.14, which is the main result of Sect. 4.2:

Theorem 4.14

For each \(X_0\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) there exists a unique eternal \(H^1(ds^\gamma )\) curve shortening flow \(X:\mathbb {S}^1\times \mathbb {R}\rightarrow \mathbb {R}^2\) in \(C^1(\mathbb {R}; H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C})\) such that \(X(\cdot ,0) = X_0\).

There is a similar statement for the flow on immersions (with \(X_0\in {{\,\mathrm{\text {Imm}}\,}}^1\)), see Corollary 4.15.

In Sect. 4.3 we study convergence for the flow, showing that the flow is asymptotic to a constant map in \(\mathcal {C}\).

Theorem 4.21

Let X be an \(H^1(ds^\gamma )\) curve shortening flow. Then X converges as \(t\rightarrow \infty \) in \(H^1(\mathbb {S}^1,\mathbb {R}^2)\) to a constant map \(X_\infty \in H^1(\mathbb {S}^1,\mathbb {R}^2)\).

Numerical simulations of the flow show fascinating qualitative behaviour for solutions. Figures 1 and 2 exhibit three important properties. First, there is no smoothing effect: it appears to be possible for corners to persist throughout the flow. Second, the evolution of a given initial curve is highly dependent upon its size, to the extent that a simple rescaling dramatically alters the amount of re-shaping along the flow. Third, the numerical simulations in Figs. 1 and 2 indicate that the flow does not uniformly move curves closer to circles. The scale-invariant isoperimetric ratio \(\mathcal {I}\) is plotted alongside the evolutions and for an embedded barbell it is not monotone.Footnote 3 We have given some comments on our numerical scheme in Sect. 5.2.

Despite the lack of a generic smoothing effect, what we might hope is that a generic preservation effect holds. In Sect. 5.4 we consider this question in the \(C^k\) regularity spaces (here \(k\in \mathbb {N}\)), and show that this regularity is indeed preserved by the flow. We consider the question of embeddedness in Sect. 5.7, with the main result there showing that curves with small length relative to their chord-arc length ratio will remain embedded.

We make three brief remarks. First, the initial condition (6) implies that the initial curve is embedded. Second, the resultant estimate on the chord-arc length ratio is uniform in time, and so does not deteriorate as t increases. Finally, since the chord-arc length ratio is scale-invariant but length is not, the initial condition (6) can always be satisfied by rescaling the initial data.

The theorem is as follows.

Theorem 5.10

Let \(k\in \mathbb {N}\). For each \(X_0\in {{\,\mathrm{\text {Imm}}\,}}^k\) there exists a unique eternal \(H^1(ds^\gamma )\) curve shortening flow \(X:\mathbb {S}^1\times \mathbb {R}\rightarrow \mathbb {R}^2\) in \(C^1(\mathbb {R}; {{\,\mathrm{\text {Imm}}\,}}^k)\) such that \(X(\cdot ,0) = X_0\).

Furthermore, suppose \(X_0\) satisfies

where

and \(s^{X(\cdot ,t)}(u_i) = \int _0^{u_i} |\partial _u X|(u,t)\,du\).

Then there exists a \(C = C(X_0) > 0\) such that

for all t.

In particular X (as well as its asymptotic profile and limit \(Y_\infty \), see below) is a family of embeddings.

Although the \(H^1(ds^\gamma )\) curve shortening flow disappears in infinite time (that is, as \(t\rightarrow \infty \) the flow converges to a constant map, a point, as guaranteed by Theorem 4.21), we are interested in identifying if it asymptotically approaches any particular shape. In order to do this, we define the asymptotic profile of a given \(H^1(ds^\gamma )\) curve shortening flow \(Y:\mathbb {S}^1\times \mathbb {R}\rightarrow \mathbb {R}^2\) by

Because of the exponential rescaling, bounds for Y and its gradient are more difficult to obtain than for X. On the other hand, scale invariant estimates for X such as the chord-arc ratio and isoperimetric ratio carry through directly to estimates on Y. Furthermore, for \(H^1(ds^\gamma )\) curve shortening flows on the \(C^2\) space the curvature scalar k is well defined, and we can ask meaningfully if curvature remains controlled along Y (on X, it will always blow up).

By considering the asymptotic profile we hope to be able to identify limiting profiles \(Y_\infty \) for the flow. For the flow X, the limit is always a constant map. In stark contrast to this, the possible limits for Y are many and varied. We are able to show that the asymptotic profile Y does converge to a unique limit \(Y_\infty \) depending on the initial data \(X_0\), but it seems difficult to classify precisely what these \(Y_\infty \) look like. For the curvature, we show in Sect. 5.5 that it is uniformly bounded for \(C^2\) initial data, and that the profile limit \(Y_\infty \) is immersed with well-defined curvature (Theorem 5.7). On embeddedness, the same result as for X applies due to scale-invariance.

The isoperimetric deficit \(\mathcal {D}_Y:= {\mathcal {L}}(Y)^2-4\pi A(Y)\) on Y is studied in Sect. 5.6. The deficit is not monotone, and need not improve along the flow, but we can show that the eventual deficit of the asymptotic profile limit \(Y_\infty \) is bounded by a constant times the deficit of the initial data \(X_0\). This is sharp.

We summarise these results in the following theorem.

Theorem 5.8

Let \(k\in \mathbb {N}_0\) be a non-negative integer. Set \(\mathcal {B}\) to \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) for \(k=0\) and otherwise set \(\mathcal {B}\) to \(C^k(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\). For each \(X_0\in \mathcal {B}\) there exists a non-trivial \(Y_\infty \in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) such that the asymptotic profile \(Y(t)\rightarrow Y_\infty \) in \(C^0(\mathbb {S}^1,\mathbb {R}^2)\) as \(t\rightarrow \infty \).

Furthermore:

- \(Y_\infty \) is embedded if at any \(t\in (0,\infty )\) the condition (6) was satisfied for X(t).

- If \(k\ge 2\), and X(0) is immersed, then \(Y_\infty \) is immersed with bounded curvature.

- There is a constant \(c = c(\Vert {X(0)}\Vert _\infty )\) such that the isoperimetric deficit of \(Y_\infty \) satisfies

$$\begin{aligned} \mathcal {D}_{Y_\infty } \le c\mathcal {D}_{X(0)}. \end{aligned}$$

2 Metrics on Spaces of Immersed Curves

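Let \(C^k(\mathbb {S}^1,\mathbb {R}^2)\) be the usual Banach space of maps with continuous derivatives up to order k. Our convention is that \(|\mathbb {S}^1| = |[0,1]| = 1\). For \( 1\le k \le \infty \) we define

$$\begin{aligned} \text{Imm}^k := \left\{ \gamma \in C^k(\mathbb{S}^1,\mathbb{R}^2) \,:\, |\gamma'(u)| \ne 0 \text{ for all } u\in \mathbb{S}^1 \right\} . \end{aligned}$$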

Note that \({{\,\mathrm{\text {Imm}}\,}}^k\) is an open subset of \(C^k(\mathbb {S}^1,\mathbb {R}^2)\).

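The tangent space \(T_{\gamma }{{\,\mathrm{\text {Imm}}\,}}^k\cong C^{k}(\mathbb {S}^1,\mathbb {R}^2)\) consists of vector fields along \(\gamma \). We define the following Riemannian metrics on \({{\,\mathrm{\text {Imm}}\,}}^1\) for \(v,w\in T_\gamma {{\,\mathrm{\text {Imm}}\,}}^1\):

$$\begin{aligned} \langle v, w\rangle_{L^2}&:= \int_{\mathbb{S}^1} \langle v, w\rangle \,du, \\ \langle v, w\rangle_{H^1}&:= \langle v, w\rangle_{L^2} + \langle v', w'\rangle_{L^2}, \\ \langle v, w\rangle_{L^2(ds^\gamma )}&:= \int_{\mathbb{S}^1} \langle v, w\rangle \,ds^\gamma , \\ \langle v, w\rangle_{H^1(ds^\gamma )}&:= \langle v, w\rangle_{L^2(ds^\gamma )} + \langle v_s, w_s\rangle_{L^2(ds^\gamma )}. \end{aligned} \tag{7}$$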

The length function (1) is of course well-defined on the larger Sobolev space \(H^1(\mathbb {S}^1,\mathbb {R}^2)\), as are the \(L^2, H^1\) and \(L^2(ds^\gamma )\) products above. However, the \(H^1(ds^\gamma )\) product is not well-defined because of the arc-length derivatives, even if one restricts to curves which are almost everywhere immersed.

We remark that each of the metrics above is an example of a weak Riemannian metric. That is, the topology induced by the Riemannian metric is weaker than the manifold topology. This is a purely infinite-dimensional phenomenon. In fact, even for a strong Riemannian metric, geodesic and metric completeness are not equivalent (as guaranteed by the Hopf-Rinow theorem in finite dimensions) and it is not always the case that points can be joined by minimising geodesics (for an overview of these and related facts see [9]).

2.1 Vanishing Riemannian Distance in \((\text {Imm}^1,L^2(ds^\gamma ))\)

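Consider curves \(\gamma _1, \gamma _2 \in \text {Imm}^1\) and a smooth path \(\alpha :[0,1]\rightarrow \text {Imm}^1\) with \(\alpha (0) = \gamma _1\) and \(\alpha (1) = \gamma _2\). The \(L^2(ds^\gamma )\)-length of this smooth path is well-defined and given by

$$\begin{aligned} \mathcal{L}_{L^2(ds^\gamma )}(\alpha ) := \int_0^1 \Vert \alpha_t(t)\Vert_{L^2(ds^{\alpha (t)})} \,dt = \int_0^1 \left( \int_{\mathbb{S}^1} |\alpha_t(t,u)|^2\, |\alpha_u(t,u)| \,du \right)^{1/2} dt . \end{aligned} \tag{8}$$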

As usual, one defines a distance function associated with the Riemannian metric by

Since the \(L^2(ds^\gamma )\) metric is invariant under the action of \(\text {Diff}( \mathbb {S}^1)\) it induces a Riemannian metric on the quotient space \(\mathcal {Q}\) (except at the singularities) as follows. Let \(\pi :\text {Imm}^1\rightarrow \mathcal {Q}\) be the projection. Given \(v,w\in T_{[\gamma ]}\mathcal {Q}\) choose any \(V,W\in T_\gamma \text {Imm}^1\) such that \(\pi (\gamma )=[\gamma ],\) \(T_\gamma \pi (V)=v, T_\gamma \pi (W)=w\). Then the quotient metric is given by

where \(V^\perp \) and \(W^\perp \) are projections onto the subspace of \(T_\gamma \text {Imm}^1\) consisting of vectors which are orthogonal to the orbits. This is just the space of vector fields along \(\gamma \) in the direction of the normal N to \(\gamma \), and so \(V^\perp ={\left\langle {V,N} \right\rangle }N\). The length of a path \(\pi (\alpha )\) in \(\mathcal {Q}\) according to the quotient metric is then

and the distance is

This is the distance function that Michor and Mumford have shown to be identically zero (Theorem 2.1). They also point out (cf. [22, Section 2.5]) that for any smooth path \(\alpha \) between curves \(\gamma _1\), \(\gamma _2\), there exists a smooth t-dependent family of reparametrisations \(\phi :[0,1]\rightarrow \text {Diff}(\mathbb {S}^1)\) such that the reparametrised pathFootnote 4\({\tilde{\alpha }}(t,u):=\alpha (t,\phi (t,u))\) has path derivative \({\tilde{\alpha }}_t(t)\) which is normal to \({\tilde{\alpha }}(t)\). Thus an equivalent definition is

Theorem 2.1

(Michor–Mumford [22]) For any \(\varepsilon >0\) and \([\gamma _1], [\gamma _2]\) in the same path component of \(\mathcal {Q}\) there is a path \(\alpha :[0,1]\rightarrow {{\,\mathrm{\text {Imm}}\,}}^1\) satisfying \(\alpha (0)\in [\gamma _1], \alpha (1)\in [\gamma _2]\) and having length \({\mathcal {L}}_{L^2(ds^\gamma )}(\alpha )<\varepsilon \).

Since it is quite a surprising result and an elegant construction, we include a description of the proof. The idea is to show that we may deform any path \(\alpha \) in \({{\,\mathrm{\text {Imm}}\,}}^1\) to a new path \(\alpha _n\) that remains smooth but has small normal projection, and whose endpoint changes only by reparametrisation.

So, let us consider a smooth path \(\alpha :[0,1]\rightarrow {{\,\mathrm{\text {Imm}}\,}}^1\) such that \(\alpha (0) = \gamma _1\) and \(\alpha (1) = \gamma _2\). We choose evenly-spaced points \(\theta _0,\ldots ,\theta _n\) in \(\mathbb {S}^1\) and move \(\gamma _1(\theta _i)\), via \(\alpha (2t)\), to their eventual destination \(\gamma _2(\theta _i)\) twice as fast. The in-between points \(\psi _i = (\theta _{i-1} + \theta _i)/2\) should remain stationary while this occurs. Once half of the time has passed, and all points \(\theta _i\) are at their destination, the points \(\gamma _1(\psi _i)\) may begin to move via \(\alpha \). They should also move twice as fast as before. A graphical representation of this is given in Fig. 3.

The resultant path \(\alpha _n\) has small normal projection (depending on n) but also longer length (again depending on n). The key estimate in [22, Section 3.10] shows that the length of the path \(\alpha _n\) increases proportional to n and the normal projection decreases proportional to \(\frac{1}{n}\). Since the normal projection is squared, this means that the length of \(\alpha _n\) is proportional to the length of \(\alpha \) times \(\frac{1}{n}\).

In other words, for any \(\varepsilon >0\) we can connect \(\gamma _1\) to a reparametrisation of \(\gamma _2\) by a path with \(L^2(ds^\gamma )\)-length less than \(\varepsilon \), and so the distance between them is zero.Footnote 5

Note that the corresponding result does not immediately follow for the full space \(\text {Imm}^1\) because in the full space the tangential component of the path derivative is also measured. Indeed, we could apply the Michor-Mumford construction to obtain a path \(\alpha _n\) whose derivative has small normal component, and then introduce a time-dependent reparametrisation to set the tangential component to zero, but of course the reparametrisation changes the endpoint to a reparametrisation of the original endpoint. However, as we show in the following theorem, it is still possible to get the desired result by diverting through a sufficiently small curve.

Theorem 2.2

The \(L^2(ds^\gamma )\)-distance between any two curves in the same path component of \(\text {Imm}^1\) vanishes.

Proof

Let \(\gamma _0,\gamma _1 \in C^\infty (\mathbb {S}^1,\mathbb {R}^2)\) be smooth immersions in the same path component, and let \(\eta _0\) be another curve in the same component with \(\Vert {\eta _0'}\Vert _{L^\infty }<\left( \frac{\varepsilon }{4}\right) ^{2/3}\) (for example, \(\eta _0\) could be a sufficiently small scalar multiple of \(\gamma _0\)). By Theorem 2.1 there exists a path \(\alpha _0\) from \(\gamma _0\) to \(\eta _1\), where \(\eta _1\) is a reparametrization of \(\eta _0\), with \(\mathcal {L}_{L^2(ds^\gamma )}(\alpha _0)<\frac{\varepsilon }{4}\). Now let \(\theta \in \text {Diff}( \mathbb {S}^1)\) such that \(\eta _1(u)=\eta _0(\theta (u))\) and define a path \(\alpha _1\) from \(\eta _0\) to \(\eta _1\) by

Then

and by (8) the \(L^2(ds^\gamma )\)-length of \(\alpha _1\) is

Now we concatenate \(\alpha _0\) with \(\alpha _1(-t)\) to form a path p from \(\gamma _0\) to \(\eta _0\) with \(\mathcal {L}_{L^2(ds^\gamma )}(p)<\frac{\varepsilon }{2}\). By the same method we construct a path q from \(\gamma _1\) to \(\eta _0\) with \(\mathcal {L}_{L^2(ds^\gamma )}(q)<\frac{\varepsilon }{2}\) and then the concatenation of p with \(q(-t)\) is a path from \(\gamma _0\) to \(\gamma _1\) with arbitrarily small \(L^2(ds^\gamma )\)-length. We assumed \(\gamma _0,\gamma _1\) were smooth, but since the smooth curves are dense in \(C^1\) and \(\Vert {\alpha _t}\Vert _{L^2(ds^\gamma )}\le c\Vert {\alpha _t}\Vert _{C^1}\Vert {\alpha _u}\Vert _{L^2} \) for any path \(\alpha \), we can also join any pair of curves in \({{\,\mathrm{\text {Imm}}\,}}^1\) by a path with arbitrarily small \(L^2(ds^\gamma )\)-length. \(\square \)
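The displayed formulas for \(\alpha _1\) and its length are not reproduced above, so the following is only a sketch of one choice that is consistent with the threshold \(\Vert \eta _0'\Vert _{L^\infty } < (\varepsilon /4)^{2/3}\). It assumes that \(\theta \) is orientation preserving and is identified with a lift satisfying \(|\theta (u) - u| \le 1\); the linear interpolation used for \(\alpha _1\) is an assumption made for illustration.

```latex
% One possible path from \eta_0 to \eta_1 = \eta_0 \circ \theta (assumed form):
\alpha_1(t,u) := \eta_0\big( (1-t)u + t\theta(u) \big), \qquad t \in [0,1].
% Its derivatives are
\partial_t \alpha_1 = \eta_0'(\cdot)\,\big(\theta(u) - u\big), \qquad
\partial_u \alpha_1 = \eta_0'(\cdot)\,\big( (1-t) + t\theta'(u) \big),
% so, using |\theta(u) - u| \le 1, \theta' > 0 and \int_0^1 \theta'(u)\,du = 1,
\Vert \partial_t\alpha_1 \Vert_{L^2(ds^{\alpha_1(t)})}^2
  = \int_0^1 |\partial_t\alpha_1|^2\, |\partial_u\alpha_1| \,du
  \le \Vert \eta_0' \Vert_{L^\infty}^3 \int_0^1 \big( (1-t) + t\theta'(u) \big)\,du
  = \Vert \eta_0' \Vert_{L^\infty}^3 .
% Integrating the square root over t \in [0,1] gives
\mathcal{L}_{L^2(ds^\gamma)}(\alpha_1) \le \Vert \eta_0' \Vert_{L^\infty}^{3/2} < \frac{\varepsilon}{4}.
```

Under these assumptions each \(\alpha _1(t)\) is a reparametrisation of \(\eta _0\), so the path stays in \(\text {Imm}^1\), and the exponent 2/3 in the smallness condition is exactly what makes the bound come out as \(\varepsilon /4\).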

Remark 2.3

As mentioned in the introduction, an alternative proof of a more general result is outlined in [5]. The proof relies on another theorem of Michor and Mumford [21] (extended in e.g. [8]) showing that the right invariant \(L^2\) metric on diffeomorphism groups gives vanishing distance.

3 Symmetries of Metrics and Gradient Flows

The standard curve shortening flow (3) enjoys several important symmetries:

- Isometry of the plane: if \(A:\mathbb {R}^2\rightarrow \mathbb {R}^2\) is an isometry and \(X:\mathbb {S}^1\times [0,T)\rightarrow \mathbb {R}^2\) is a solution to curve shortening flow then \(A\circ X\) is also a solution.

- Reparametrisation: if \(\phi \in \text {Diff}(\mathbb {S}^1)\) and X(u, t) is a solution to curve shortening flow then \(X(\phi (u),t)\) is also a solution.

- Scaling spacetime: if X(u, t) is a solution to curve shortening flow then so is \(\lambda X(u,t/\lambda ^2)\), with \(\lambda >0\).

It is interesting to note that these symmetries can be observed directly from symmetries of the length functional \({\mathcal {L}}\) and the \(H^{0}(ds^\gamma )\) Riemannian inner product without actually calculating the gradient.
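As a quick sanity check of the space-time scaling symmetry, consider the standard shrinking circle under curve shortening flow. This worked example is an illustration added here, using only the fact that a circle of radius r has curvature of magnitude 1/r.

```latex
% A round circle of radius r(t) evolving by X_t = kN satisfies r' = -1/r, hence
r(t) = \sqrt{r_0^2 - 2t}, \qquad 0 \le t < r_0^2/2 .
% The rescaled family \tilde{X}(u,t) = \lambda X(u, t/\lambda^2) is a circle of radius
\tilde{r}(t) = \lambda\, r(t/\lambda^2) = \sqrt{(\lambda r_0)^2 - 2t},
% which is again a shrinking-circle solution, now with initial radius \lambda r_0
% and extinction time \lambda^2 r_0^2 / 2.
```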

Lemma 3.1

Suppose there is a free group action of G on (M, g) which is an isometry of the Riemannian metric g and which leaves \(E:M\rightarrow \mathbb {R}\) invariant. Then the gradient flow of E with respect to g is invariant under the action.

Proof

Since \(E(p)=E(\lambda p)\) for all \(\lambda \in G\), \(p\in M\), we have

then equating

shows that \(d\lambda _p{{\,\textrm{grad}\,}}E_p={{\,\textrm{grad}\,}}E_{\lambda p}\) (\(d\lambda _p\) has full rank because the action is free). Therefore if X is a solution to \(X_t=-{{\,\textrm{grad}\,}}E_{X}\) then

so \(\lambda X\) is also a solution. \(\square \)

To demonstrate we observe the following symmetries of the \(H^1(ds^\gamma )\) gradient flow.

Isometry. An isometry \(A:\mathbb {R}^2\rightarrow \mathbb {R}^2\) induces \(A:{{\,\mathrm{\text {Imm}}\,}}^1(\mathbb {S}^1,\mathbb {R}^2)\rightarrow {{\,\mathrm{\text {Imm}}\,}}^1(\mathbb {S}^1,\mathbb {R}^2)\) by \(A\gamma =A\circ \gamma \). Since an isometry is length preserving

and similarly for the arc-length functions \(s_{A\gamma }=s_\gamma \implies ds^{A\gamma } = ds^\gamma \). Hence, in the \(H^1(ds^\gamma )\) metric:

That is, the induced map \(A:{{\,\mathrm{\text {Imm}}\,}}^1(\mathbb {S}^1,\mathbb {R}^2)\rightarrow {{\,\mathrm{\text {Imm}}\,}}^1(\mathbb {S}^1,\mathbb {R}^2)\) is an \(H^1(ds^\gamma )\) isometry. Now by Lemma 3.1 if X is a solution of the \(H^1(ds^\gamma )\) gradient flow of \({\mathcal {L}}\) then so is AX.

Reparametrisation. Given \(\phi \in {{\,\textrm{Diff}\,}}(\mathbb {S}^1)\) we have \({\mathcal {L}}(\gamma )={\mathcal {L}}(\gamma \circ \phi )\) and the map \(\Phi (\gamma )=\gamma \circ \phi \) is linear on \({{\,\mathrm{\text {Imm}}\,}}^k(\mathbb {S}^1,\mathbb {R}^2)\) so \(d{\mathcal {L}}_\gamma =d{\mathcal {L}}_{\Phi \gamma }\Phi \). Assuming w.l.o.g. that \(\phi '>0\) we have

so \(\Phi \) is also an \(H^1(ds^\gamma )\) isometry and again by Lemma 3.1 the gradient flow is invariant under reparametrisation.

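Scaling space-time. For a dilation of \(\mathbb {R}^2\) by \(\lambda >0\) the length function scales like \({\mathcal {L}}(\lambda x)=\lambda {\mathcal {L}}(x)\). To obtain a space-time scaling symmetry we need the metric to also be homogeneous. However the \(H^1(ds^\gamma )\) metric is not homogeneous: since \(ds^{\lambda \gamma } = \lambda \,ds^\gamma \) and the arc-length derivative along \(\lambda \gamma \) is \(\lambda ^{-1}\partial _s\),

$$\begin{aligned} \langle \xi , \eta \rangle_{H^1(ds^{\lambda \gamma })} = \lambda \langle \xi , \eta \rangle_{L^2(ds^\gamma )} + \lambda ^{-1} \langle \xi_s, \eta_s\rangle_{L^2(ds^\gamma )} . \end{aligned}$$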
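The failure of homogeneity can be seen on a discretised curve: under a dilation by \(\lambda \) the zeroth-order part of the \(H^1(ds^\gamma )\) product scales by \(\lambda \) while the derivative part scales by \(1/\lambda \). The sketch below uses a simple polygonal discretisation, which is an assumption made for illustration and not the numerical scheme of the paper; `sample` and `h1_ds_parts` are hypothetical helper names.

```python
import math

def sample(n, f):
    """Sample f: [0,1) -> R^2 at n evenly spaced parameters."""
    return [f(i / n) for i in range(n)]

def h1_ds_parts(gamma, v, w):
    """Return the two parts of a discrete H^1(ds) product along the closed
    polygon gamma: (sum of <v,w> ds, sum of <v_s,w_s> ds)."""
    n = len(gamma)
    edges = [math.dist(gamma[i], gamma[(i + 1) % n]) for i in range(n)]
    zeroth, first = 0.0, 0.0
    for i in range(n):
        j = (i + 1) % n
        # Vertex weight: half of each adjacent edge (edges[-1] wraps around).
        weight = 0.5 * (edges[i - 1] + edges[i])
        zeroth += (v[i][0] * w[i][0] + v[i][1] * w[i][1]) * weight
        dv = (v[j][0] - v[i][0], v[j][1] - v[i][1])
        dw = (w[j][0] - w[i][0], w[j][1] - w[i][1])
        # <dv/edge, dw/edge> * edge = <dv, dw> / edge
        first += (dv[0] * dw[0] + dv[1] * dw[1]) / edges[i]
    return zeroth, first

two_pi = 2 * math.pi
lam = 3.0
gamma = sample(200, lambda u: (2 * math.cos(two_pi * u), math.sin(two_pi * u)))
scaled = [(lam * x, lam * y) for x, y in gamma]
xi = sample(200, lambda u: (math.sin(two_pi * u), 1.0 + math.cos(two_pi * u)))
eta = sample(200, lambda u: (1.0, math.sin(two_pi * u)))

z, f = h1_ds_parts(gamma, xi, eta)
z_lam, f_lam = h1_ds_parts(scaled, xi, eta)
print(z_lam / z, f_lam / f)
```

The two printed ratios should be \(\lambda \) and \(1/\lambda \) respectively, so no single power of \(\lambda \) factors out of the full product.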

Let us give an example of a homogeneous metric that allows us to map trajectories of the gradient flow of length in that metric to other trajectories via the space-time scaling \(X(u,t)\mapsto \lambda X(u,t/\lambda )\). Consider the metric

where

This metric, used in [29], satisfies \({\left\langle { \xi , \eta } \right\rangle }_{H^1(d{\bar{s}}^{\lambda \gamma })}={\left\langle {\xi ,\eta } \right\rangle }_{H^1(d\bar{s}^{\gamma })}\) and therefore

With this metric, the Riemannian distance between \(\lambda \gamma _1\) and \(\lambda \gamma _2\) is \(\lambda \) times the Riemannian distance between \(\gamma _1\) and \(\gamma _2\), which seems natural. Then

Since \({\mathcal {L}}(\lambda \gamma )=\lambda {\mathcal {L}}(\gamma )\) we have

that is, \(d{\mathcal {L}}_{\lambda \gamma } = d{\mathcal {L}}_\gamma \) and so from

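we find \({{\,\textrm{grad}\,}}{\mathcal {L}}_{\lambda \gamma } = {{\,\textrm{grad}\,}}{\mathcal {L}}_{\gamma }\). Now if X(u, t) is a solution to \(X_t=-{{\,\textrm{grad}\,}}{\mathcal {L}}_X\) then defining \({\tilde{X}}(u,t):= \lambda X(u,t/\lambda )\) we have

$$\begin{aligned} {\tilde{X}}_t(u,t) = X_t(u,t/\lambda ) = -{{\,\textrm{grad}\,}}{\mathcal {L}}_{X(\cdot ,t/\lambda )} = -{{\,\textrm{grad}\,}}{\mathcal {L}}_{\lambda X(\cdot ,t/\lambda )} = -{{\,\textrm{grad}\,}}{\mathcal {L}}_{{\tilde{X}}(\cdot ,t)}, \end{aligned}$$

so that \({\tilde{X}}\) is again a solution.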

4 The Gradient Flow for Length with Respect to the \(H^1(ds^\gamma )\) Riemannian Metric

4.1 Derivation, Stationary Solutions and Circles

The \(H^1(ds^\gamma )\) gradient of length is defined by

Comparing with (2) the gradient of length with respect to the \(H^1(ds^\gamma )\) metric must satisfy distributionally

where we have used the notation \(T = \gamma _s\).

We solve this ODE in the arc-length parametrisation using the Green’s function method. Considering

with \(C^1\)-periodic boundary conditions and the required discontinuity we find the Green’s function

(cf. [29] eqn. (12) for the metric (10) above.) Then the solution to (11) is

We can integrate by parts twice in (14) to obtain

and we observe that it is not necessary for \(\gamma \) to have a second derivative. Indeed, using integration by parts and (12) we find

Definition

Consider a family of curves \(X:\mathbb {S}^1\times (a,b)\rightarrow \mathbb {R}^2\) where for each \(t\in (a,b)\subset \mathbb {R}\), \(X(\cdot ,t)\in {{\,\mathrm{\text {Imm}}\,}}^1\). We term X an \(H^1(ds^\gamma )\) curve shortening flow if

where G is given by

Here we write \(G(X;s,\tilde{s})\) to emphasize the dependence on the curve \(X(\cdot ,t)\). Similarly, we have written \(ds^X\) and \(d{\tilde{s}}^X\) to remind the reader that s and \({\tilde{s}}\) are arc-length parameters for X. Henceforth we will omit these unless it is needed to avoid ambiguity.

Remark 4.1

The \(H^1(ds^\gamma )\) curve shortening flow is an ODE. One way to see this is to note that the convolution \(G * \phi \) is equal to \((1-\partial _s^2)^{-1}\phi \) for maps \(\phi \), then

The above expression could additionally be helpful in obtaining the local well-posedness of the flow. We thank the anonymous referee for pointing this out to us.
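For round circles the ODE structure can be made completely explicit. The following sketch assumes that the gradient \(g = {{\,\textrm{grad}\,}}{\mathcal {L}}_\gamma \) solves \(g - g_{ss} = -\gamma _{ss}\), which is what the \(H^1(ds^\gamma )\) product and the first variation \(\int \langle T, V_s\rangle \,ds^\gamma \) give after one integration by parts. The ansatz coefficient a is introduced here purely for illustration.

```latex
% Circle of radius r in arc-length parametrisation:
\gamma(s) = r\big(\cos(s/r), \sin(s/r)\big), \qquad \gamma_{ss} = -\frac{1}{r^2}\,\gamma .
% Ansatz g = a\gamma in g - g_{ss} = -\gamma_{ss}:
a\Big(1 + \frac{1}{r^2}\Big)\gamma = \frac{1}{r^2}\,\gamma
\quad\Longrightarrow\quad a = \frac{1}{1+r^2} .
% The flow X_t = -g therefore reduces to a scalar ODE for the radius:
r'(t) = -\frac{r}{1+r^2}
\quad\Longrightarrow\quad \frac{d}{dt}\big( r^2 + \log r^2 \big) = -2 .
% With c = r_0^2 + \log r_0^2 this integrates to
r^2 e^{r^2} = e^{c-2t}
\quad\Longrightarrow\quad r(t) = \sqrt{W\big(e^{c-2t}\big)} ,
% where W is the Lambert W function.
```

This recovers the formula stated in the abstract, and shows why the circle solutions exist for all \(t\in \mathbb {R}\): the right-hand side of the radius equation is smooth and bounded by 1/2 in absolute value for \(r>0\).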

Remark 4.2

Note that (15) makes sense on the larger space \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) where

is the space of constant maps, provided we do not use the arc-length parametrisation. That is, we consider

where F is defined by

The constant maps (for which length vanishes) are potentially problematic: viewing G as a map from \(H^1(\mathbb {S}^1,\mathbb {R}^2)\times \mathbb {S}^1\times \mathbb {S}^1 \rightarrow \mathbb {R}\) we see that taking a sequence in the first variable toward the space of constant maps results in \(-\infty \). Then in (18), the integral involving G along such a sequence may not be well-defined. If we consider the case of circles, say \(X^r(u) = r(\cos u, \sin u)\), then \(X^r\) converges to a constant map as \(r\searrow 0\), yet \(F(X^r;u)\rightarrow 0\) despite \(G(X^r;u,{\tilde{u}})\rightarrow -\infty \) (all limits are as \(r\searrow 0\)). For general \(H^1\) maps the limiting behavior may be more complicated; however, a posteriori our results here indicate that this behavior is generically valid.

Most of the results that follow will be proved for this larger space \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\). However, the interpretation of this flow as the \(H^1(ds^\gamma )\) gradient flow of length requires that we use the space \({{\,\mathrm{\text {Imm}}\,}}^1\). This is so that \(H^1(ds^\gamma )\) is a Riemannian metric: the product (7) is not positive definite at curves which are not immersed, and in fact is not necessarily well-defined because of the arc length derivatives. Moreover, \({\mathcal {L}}\) is not differentiable outside of \({{\,\mathrm{\text {Imm}}\,}}^1\). Nevertheless we proceed to study the flow mostly in the space \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\), but bear in mind that on this space it is at best a ‘pseudo-gradient’.

We begin our study of the flow by considering stationary solutions and observing the evolution of circles.

Lemma 4.3

There are no stationary solutions to the \(H^1(ds^\gamma )\) curve shortening flow.

Proof

From (15), a map \(X\in H^1(\mathbb {S}^1,\mathbb {R}^2)\) is stationary if

The arc-length function is in \(H^1(\mathbb {S}^1,\mathbb {R})\) and so G (see (16)) is in turn in \(H^1(\mathbb {S}^1,\mathbb {R})\).

Differentiating, we find

and so the first derivative of X exists classically. Iterating this with integration by parts shows that in fact all derivatives of X exist and it is a smooth map.

Furthermore, examining the case of the second derivative in detail, we find (applying (12))

Since X is periodic, this implies that X must be the constant map. As explained in Remark 4.2, G is singular at constant maps, so a constant map cannot be a solution. \(\square \)

Let us now consider the case of a circle. Here, we see a stark difference to the case of the classical \(L^2(ds^\gamma )\) curve shortening flow.

Lemma 4.4

Under the \(H^1(ds^\gamma )\) curve shortening flow, an initial circle in \(H^1(\mathbb {S}^1,\mathbb {R}^2)\) with any radius and any center will

(i) exist for all time; and

(ii) shrink homothetically to a point as \(t\rightarrow \infty \).

Proof

We can immediately conclude from the symmetry of the length functional and the symmetry of the circle that the flow must evolve homothetically (see Lemma 3.1). We must calculate the evolution of the radius of the circle.

So, suppose that X is an \(H^1(ds^\gamma )\) curve shortening flow of the form

\[ X(u,t) = r(t)(\cos u, \sin u) \qquad (20) \]

with \(r(0)>0\). Here u is the arbitrary parameter and not the arc length variable. Then \(X(s,t)=r(t)(\cos (\frac{s}{r}),\sin (\frac{s}{r}))\) and \(X_{ss}=-\frac{1}{r^2}X\). Therefore

Applying (12) and integrating by parts gives

Differentiating (20) gives \(X_t=\frac{\dot{r}}{r}X\) and then substituting into the above leads to the ODE for r(t):

\[ \dot{r} = -\frac{r}{1+r^2}. \]

Using separation of variables yields \( r^2e^{r^2}=e^{-2t+c}\) (here \(c = \log (r^2(0)e^{r^2(0)})\)) which has solutions

\[ r(t) = \sqrt{W(e^{c-2t})}, \]

where W is the Lambert W function (the inverse(s) of \(xe^x\)). Since \(t\mapsto \sqrt{W(e^{c-2t})}\) is a monotonically decreasing function converging to zero as \(t\rightarrow \infty \), this finishes the proof. \(\square \)
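The radius of the shrinking circle is easy to evaluate numerically. The sketch below is a hypothetical helper (not from the paper) that avoids evaluating \(e^{c-2t}\) directly: writing \(y = \log r^2\), the relation \(r^2e^{r^2}=e^{-2t+c}\) becomes \(e^y + y = c - 2t\), which is solved by Newton's method. It assumes the radius ODE \(\dot{r} = -r/(1+r^2)\), which is the one consistent with that relation, and compares it with a finite difference.

```python
import math

def radius(t, r0, iterations=60):
    """Radius r(t) of the shrinking circle with r(0) = r0 > 0.

    Solves e^y + y = c - 2t for y = log(r^2), c = r0^2 + log(r0^2),
    by Newton's method started to the right of the root, where the
    iteration decreases monotonically because e^y + y is convex.
    """
    c = r0 * r0 + math.log(r0 * r0)
    target = c - 2.0 * t
    y = math.log(target) if target > 1.0 else target
    for _ in range(iterations):
        ey = math.exp(y)
        y -= (ey + y - target) / (ey + 1.0)
    return math.exp(0.5 * y)

r0, t, h = 1.5, 0.8, 1e-5
r = radius(t, r0)
finite_difference = (radius(t + h, r0) - radius(t - h, r0)) / (2.0 * h)
print(r, finite_difference, -r / (1.0 + r * r))
```

The same values could be obtained from a library implementation of the principal branch of the Lambert W function; the Newton iteration is used here only to keep the example free of dependencies.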

Remark 4.5

If we consider the steepest descent \(H^1(d{\bar{s}}^\gamma )\) gradient flow for length (this is the 2-homogeneous metric, see equation (10)), the behavior of the flow on circles changes from long-time existence to finite-time extinction. The length of the solution in this case decreases linearly with time.

4.2 Existence and Uniqueness

Now we turn to establishing existence and uniqueness for the \(H^1(ds^\gamma )\) curve shortening flow.

For the initial data, we take it to be on the largest possible (Hilbert) space for which (15) makes sense. As explained in Remark 4.2, this is the space of maps \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) where

is the space of constant maps. We note that \(\mathcal {C}\) is generated by the action of translations in \(\mathbb {R}^2\) applied to the orbit of the diffeomorphism group at any particular constant map in \(H^1(\mathbb {S}^1,\mathbb {R}^2)\). Since the orbit of the diffeomorphism group applied to a constant map is trivial, the space \(\mathcal {C}\) turns out to be two-dimensional only.

The main result of this section is the following.

Theorem 4.14

For each \(X_0\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) there exists a unique eternal \(H^1(ds^\gamma )\) curve shortening flow \(X:\mathbb {S}^1\times \mathbb {R}\rightarrow \mathbb {R}^2\) in \(C^1(\mathbb {R}; H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C})\) such that \(X(\cdot ,0) = X_0\).

This is proven in two parts.

4.2.1 Local Existence

We begin with a local existence theorem.

Theorem 4.6

For each \(X_0\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) there exists a \(T_0>0\) and unique \(H^1(ds^\gamma )\) curve shortening flow \(X:\mathbb {S}^1\times [-T_0,T_0]\rightarrow \mathbb {R}^2\) in \(C^1([-T_0,T_0]; H^1(\mathbb {S}^1,\mathbb {R}^2){\setminus }\mathcal {C})\) such that \(X(\cdot ,0) = X_0\).

The flow (15) is essentially a first-order ODE and so we will be able to establish this result by applying the Picard-Lindelöf theorem in \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) (see [34, Theorem 3.A]). Note that this means we should not expect any kind of smoothing effect or other phenomena associated with diffusion-type equations such as the \(L^2(ds^\gamma )\) curve shortening flow. Of course, we will need to show that the flow a priori remains away from the problematic set \(\mathcal {C}\).

Recalling (18), we observe the following regularity for F in our setting.

Lemma 4.7

For any \(X\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) consider F and G as defined in (18). Then \(F(X) \in H^1(\mathbb {S}^1,\mathbb {R}^2)\).

Proof

The weak form of equation (12) implies continuity and symmetry of the Green’s function G, as well as

Note that there is a discontinuity in the first derivative of G with respect to either variable. Since G is strictly negative we have \(\int _0^{\mathcal {L}}|G(s,{\tilde{s}})| d{\tilde{s}}=1\). Now

therefore

and we have

For the derivative with respect to u, using \(G_s=-G_{{\tilde{s}}}\) and integration by parts we find

This implies

Since our convention is that \(|\mathbb {S}^1| = 1\), we have \({\mathcal {L}}(X)\le \Vert {X_u}\Vert _{L^2}\), and so the inequalities (22) and (24) together show that \(F(X)\in H^1(\mathbb {S}^1,\mathbb {R}^2)\). \(\square \)

The \(H^1\) regularity of F from Lemma 4.7 is locally uniform (for given initial data), with a Lipschitz estimate in \(H^1\), as the following lemma shows.

Lemma 4.8

Given \(X_0\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) let

where \(b>0\) is fixed. Then there exist constants \(L\ge 0\) and \(K>0\) depending on \(\Vert {X_0}\Vert _{H^1}\) such that

Proof

To obtain the estimates and remain away from the problematic set \(\mathcal {C}\) it is necessary that the length of each \(X\in Q_b\) is bounded away from zero. This is the reason for the upper bound on b. Indeed if \(X\in Q_b\) then (note that \(|\mathbb {S}^1| = 1\) in our convention)

hence

and \({\mathcal {L}}(X_0)-b>0\) by assumption. It follows that \(G(X;u,{\tilde{u}})\) exists on \(Q_b\) and since \(s^X(u)\le {\mathcal {L}}(X)\) we deduce

We will also need the derivative

which obeys the estimate

Since G is well-defined on \(Q_b\), we may use (22) and (24) from the proof of Lemma 4.7 to obtain

for a constant \(c>0\) and all \(X\in Q_b\).

As for the Lipschitz estimate, we will begin by studying the Lipschitz property for G. First note that the arc length function is Lipschitz as a function on \(H^1(\mathbb {S}^1,\mathbb {R}^2)\):

and setting \(u=1\) we also have

For the numerator of G, note that \(\cosh \) is smooth and its domain here is bounded via (25), and so there is a \(c_1>0\) such that

A similar argument applies to the denominator \(\sinh (-{\mathcal {L}}(X)/2)\) and moreover inequality (25) ensures that \(\sinh (-{\mathcal {L}}(X)/2)\) is bounded away from zero. Since the quotient of two Lipschitz functions is itself Lipschitz provided the denominator is bounded away from zero, we have that G is Lipschitz, i.e. there is a constant \(c_2>0\) such that

Now we have

By (25) and (26) there exists \(c_0\) such that \(|G(X;u,{\tilde{u}})|\le c_0\) for all \(X\in Q_b\). Then using the Lipschitz condition for G we find

Therefore, recalling that \(X,Y\in Q_b\) satisfy \(\Vert {X}\Vert ,\Vert {Y}\Vert \le \Vert {X_0}\Vert _1+b\), integrating the above gives

We need a similar result for

Comparing (27), define

so that

Then as in (28) we have \(|A(X)(u,{\tilde{u}})|\le \frac{1}{2}\) and arguing as for G above we also have that A is Lipschitz:

Now

Using this estimate in (31), together with (28) gives

and then

Combining (30) and (32) gives the required estimate: there exists L such that

for all \(X,Y \in Q_b\). \(\square \)

Proof of Theorem 4.6

According to the generalised (to Banach space) Picard-Lindelöf theorem in [34] (Theorem 3.A), the estimates in Lemma 4.8 guarantee existence and uniqueness of a solution on the interval \([-T_0,T_0]\) provided \(KT_0 < b\). \(\square \)

4.2.2 Global Existence

We may extend the existence interval by repeated applications of the Picard-Lindelöf theorem from [34].

There are two issues to be resolved for this. First, the constants K and L from Lemma 4.8 depend on the \(H^1\)-norm of \(X_0\). When we attempt to continue the solution, we must show that in the forward and backward time directions this norm does not explode in finite time to \(+\infty \).

Second, the flow must remain within \(Q_b\) for some b; as the evolution continues forward, length is decreasing, and so the amount of time that we can extend depends not only on the \(H^1\)-norm of the solution but also the length bound from below. In the backward time direction length is in fact increasing, so this second issue does not arise there.

First, we study the \(L^\infty \)-norm of the solution.

Lemma 4.9

Let X be an \(H^1(ds^\gamma )\) curve shortening flow defined on some interval \((-T,T)\). Then \(\Vert {X(t)}\Vert _\infty \) is non-increasing on \((-T,T)\). Furthermore, we have the estimate

Proof

In the forward time direction, we proceed as follows for the uniform bound. For any \(t_0\in (-T,T)\) there exists \(u_0\) such that \(\Vert {X(t_0)}\Vert _\infty =|X(t_0,u_0)| \) and then

Now let \(t_1 =\sup \{ t\ge t_0: \Vert {X(t)}\Vert _{L^\infty }={\left| {X(t,u_0)}\right| } \}\). By the inequality above \(\Vert {X(t)}\Vert _\infty \) is non-increasing for all \(t\in [t_0,t_1)\), and by the continuity of X in t, \(\lim _{t\rightarrow t_1} \Vert {X(t)}\Vert _{L^\infty }=\Vert {X(t_1)}\Vert _{L^\infty }\). Since \(t_0\) was arbitrary, it follows that \(\Vert {X(t)}\Vert _{\infty }\) cannot increase at any t.

In the backward time direction, we need an estimate from below. Let us calculate

so (\(u_0\) as before)

Hence

and integrating from t to 0 (assuming \(t<0\), and \(u_0\) changing as necessary) this translates to

This is the claimed estimate in the statement of the lemma. \(\square \)

Lemma 4.10

Let X be an \(H^1(ds^\gamma )\) curve shortening flow defined on some interval \((-T,T)\). Then \(\Vert {X_u(t)}\Vert _{L^2}\) is non-increasing on \((-T,T)\). Furthermore, we have the estimate

Proof

As in (23)

and therefore, recalling that \(\int _0^{\mathcal {L}}|G(s,{\tilde{s}})| d{\tilde{s}}=1\),

This settles the forward time estimate. As before, for the backward time estimate we need a lower bound. We calculate

The same integration as in the backward time estimate for Lemma 4.9 yields the claimed backward in time estimate. \(\square \)

The estimates of Lemmata 4.9, 4.10 yield the following control on the \(H^1\)-norm of the solution.

Corollary 4.11

Let X be an \(H^1(ds^\gamma )\) curve shortening flow defined on some interval \((-T,T)\). Then

and

A similar technique allows us to show also that if the initial data for the flow is an immersion, it remains an immersion.

Lemma 4.12

Let X be an \(H^1(ds^\gamma )\) curve shortening flow defined on some interval \((-T,T)\) with \(X(0)\in {{\,\mathrm{\text {Imm}}\,}}^1\). Then \(X(t)\in \text {Imm}^1\) for all \(t\in (-T,T)\).

Proof

Using (23) we have

Now since \(\int _0^{\mathcal {L}}X_s \,ds^\gamma =0\) we have

so using \(|G_s|\le \frac{1}{2}\) (cf. (28)) we find

Using this estimate with (33) yields

From \(\frac{d{\mathcal {L}}}{dt}=-\Vert {{{\,\textrm{grad}\,}}{\mathcal {L}}_X}\Vert ^2_{H^1(ds^\gamma )}\) we know that \({\mathcal {L}}\) is non-increasing and so rearranging the inequality on the left and multiplying by an exponential factor gives

Now integrating from 0 to \(t>0\) gives

Then since X is initially an immersion, that is \(|X_u|>0\) at \(t=0\), it remains so for \(t>0\). The estimate backward in time is analogous but we instead use the second inequality in (35) and integrate from \(t<0\) to 0. The statement is

for \(t<0\). \(\square \)

Corollary 4.13

Let X be an \(H^1(ds^\gamma )\) curve shortening flow defined on some interval \((-T,T)\) with \(T<\infty \). Then there exists \(\varepsilon >0\) such that \({\mathcal {L}}(X(t))>\varepsilon \) for all \(t\in (-T,T)\).

Proof

For \(t\ge 0\), taking the square root in (36) and then integrating over u gives

Since \({\mathcal {L}}(t)\) is non-increasing the result follows. \(\square \)

Theorem 4.14

Any \(H^1(ds^\gamma )\) curve shortening flow defined on some interval \((-T,T)\) with \(T<\infty \) may be extended to all \(t\in (-\infty ,\infty )\).

Proof

Take \(\overline{T}\) to be the maximal time such that the flow X can be extended forward: \(t\in (-T, \overline{T})\). In view of Lemma 4.8 and Theorem 4.6, if \(\overline{T} <\infty \), then one or more of the following have occurred:

- \({\mathcal {L}}(X(t))\searrow 0\) as \(t\nearrow \overline{T}\);

- \(\Vert {X(t)}\Vert _{H^1}\rightarrow \infty \) as \(t\nearrow \overline{T}\).

The first possibility is excluded by Corollary 4.13, and the second is excluded by Corollary 4.11 (we use \(\overline{T}<\infty \) here). This is a contradiction, so we must have that \(\overline{T} = \infty \).

The argument in the backward time direction is completely analogous: suppose \(\overline{T}\) is the maximal time such that the flow X can be extended backward: \(t\in (\overline{T}, T)\). If \(\overline{T} > -\infty \), then one or more of the following have occurred:

- \({\mathcal {L}}(X(t))\rightarrow 0\) as \(t\searrow \overline{T}\);

- \(\Vert {X(t)}\Vert _{H^1}\rightarrow \infty \) as \(t\searrow \overline{T}\).

The first possibility is excluded because length is non-increasing along the flow, so it cannot tend to zero backward in time. The second is excluded by Corollary 4.11 (we again use \(\overline{T}>-\infty \) here). This is a contradiction, so we must have that \(\overline{T} = -\infty \). \(\square \)

Due to Lemma 4.12, Theorem 4.14 implies a similar statement for the \(H^1(ds^\gamma )\) curve shortening flow on \({{\,\mathrm{\text {Imm}}\,}}^1\), on which space it is a legitimate gradient flow:

Corollary 4.15

For each \(X_0\in {{\,\mathrm{\text {Imm}}\,}}^1\) there exists a unique eternal \(H^1(ds^\gamma )\) curve shortening flow \(X:\mathbb {S}^1\times \mathbb {R}\rightarrow \mathbb {R}^2\) in \(C^1(\mathbb {R}; {{\,\mathrm{\text {Imm}}\,}}^1)\) such that \(X(\cdot ,0) = X_0\).

4.3 Convergence

In this subsection, we examine the forward in time limit for the flow. The backward limit is not expected to have nice properties. One way to see this is in the \(H^1(ds^\gamma )\) length of the tail of an \(H^1(ds^\gamma )\) curve shortening flow. (We will see in Lemma 4.20 that the \(H^1(ds^\gamma )\) length of any forward trajectory is finite.) For instance, a circle evolving under the flow has radius \(r(t) = \sqrt{W(e^{c-2t})}\). The \(H^1(ds^\gamma )\) length is larger than the \(L^2(ds^\gamma )\) length, and this grows (as \(t\rightarrow -\infty \)) linearly in \(W(e^{c-2t})\). This is not bounded, and so in particular the \(H^1(ds^\gamma )\)-length of any negative tail is unbounded. The \(H^1(d{\bar{s}}^\gamma )\)-length of the negative time tail for the gradient flow of length with respect to the 2-homogeneous metric (see Eq. (10) and Remark 4.5) is similarly unbounded.

Throughout we let \(X:(-\infty ,\infty )\rightarrow H^1(\mathbb {S}^1,\mathbb {R}^2)\) be a solution to the \(H^1(ds^\gamma )\) curve shortening flow (15). We will prove \(\lim _{t\rightarrow \infty } X(t)\) exists and is equal to a constant map. In order to use the \(H^1(ds^\gamma )\) gradient (see Remark 4.2) we present the proof for the case where X(t) is immersed, but the results can be extended to the flow in \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) as described in Remark 4.23 below.

It will be convenient to define K by

so that \(G=-K(|s-{\tilde{s}}|)\), \(K(s)>0\) and \(\int _0^{\mathcal {L}}K(s)\,ds = 1\). We take the periodic extension of K to all of \(\mathbb {R}\), which we still denote by K, and then

Define, for any \(\gamma \in H^1(\mathbb {S}^1,\mathbb {R}^2)\),

This is a so-called ‘nonlinear’ (in \(\gamma \)) convolution. We have the following version of Young’s convolution inequality.

Lemma 4.16

For any \(\gamma \in H^1(\mathbb {S}^1,\mathbb {R}^2)\) and K and \(*\) as above, we have

Proof

Write

then by the Hölder inequality and (38)

Hence

\(\square \)

The convolution inequality implies the following a-priori estimate in \(L^2\).

Lemma 4.17

Let X be an \(H^1(ds^\gamma )\) curve shortening flow. Then \(\Vert {X(t)}\Vert _{L^2(ds^\gamma )} \) is non-increasing as a function of t.

Proof

First note that since \(G_s=-G_{\tilde{s}}\) we have

Then (using \(\frac{d}{dt} ds^X={\left\langle {X_{ts}, X_s} \right\rangle }\, ds^X\)) we find

Hölder’s inequality and the convolution inequality (39) now yield

\(\square \)

Now we give a fundamental estimate for the \(H^1(ds^\gamma )\)-gradient of length along the flow.

Lemma 4.18

Let X be an \(H^1(ds^\gamma )\) curve shortening flow. There exists a constant \(C>0\) depending on X(0) such that

for all \(t\in [0,\infty )\).

Proof

From (2) and (14), if X is a solution of (15) then

Integration by parts with (12) gives

Since \(\frac{d{\mathcal {L}}}{dt}=-\Vert {{\,\textrm{grad}\,}}_{H^1(ds^\gamma )}{\mathcal {L}}_{X}\Vert _{H^1(ds^\gamma )}^2\), we have

The inequality \(2ab\le \varepsilon a^2 +\frac{1}{\varepsilon }b^2\) for all \(\varepsilon >0\) implies

Now Lemma 4.9 yields

and choosing \(\varepsilon \) sufficiently small gives (40). \(\square \)

The gradient inequality immediately implies exponential decay of length.

Lemma 4.19

Let X be an \(H^1(ds^\gamma )\) curve shortening flow. The length \({\mathcal {L}}(X(t))\) converges to zero exponentially fast as \(t\rightarrow \infty \).

Proof

Using the gradient inequality (40) we have

Integrating gives

as required. \(\square \)
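For circles the decay rate can be made explicit by hand. The display below is a sketch that assumes the radius ODE \(\dot{r} = -r/(1+r^2)\), which is the one consistent with the relation \(r^2e^{r^2}=e^{-2t+c}\) in the proof of Lemma 4.4; the constant \(1/(1+r_0^2)\) is specific to circles of initial radius \(r_0\) and is not claimed for general data.

```latex
\frac{d}{dt}\log r = -\frac{1}{1+r^2} \le -\frac{1}{1+r_0^2}
\quad (t\ge 0,\ \text{since } r(t)\le r_0)
\qquad\Longrightarrow\qquad
\mathcal{L}(X(t)) = 2\pi r(t) \le 2\pi r_0\, e^{-t/(1+r_0^2)}
= \mathcal{L}(X(0))\, e^{-t/(1+r_0^2)} .
```

In particular the guaranteed rate degrades as \(r_0\) grows, which reflects the dependence of the constant on the initial data.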

Another consequence of the gradient inequality is boundedness of the \(H^1(ds^\gamma )\) length of the positive trajectory X.

Lemma 4.20

Let X be an \(H^1(ds^\gamma )\) curve shortening flow. The \(H^1(ds^\gamma )\)-length of \(\{X(\cdot ,t)\,:\,t\in (0,\infty )\}\subset H^1(\mathbb {S}^1,\mathbb {R}^2)\) is finite.

Proof

From the gradient inequality (40)

i.e.

and therefore

Taking the limit \(t\rightarrow \infty \) in the above inequality, the left hand side is the length of the trajectory \(X:[0,\infty )\rightarrow H^1(\mathbb {S}^1,\mathbb {R}^2)\) measured in the \(H^1(ds^\gamma )\) metric. \(\square \)

Now we conclude convergence to a point.

Theorem 4.21

Let X be an \(H^1(ds^\gamma )\) curve shortening flow. Then X converges as \(t\rightarrow \infty \) in \(H^1\) to a constant map \(X_\infty \in H^1(\mathbb {S}^1,\mathbb {R}^2)\).

Proof

Recalling (21) we have \({\left| {X_t}\right| } \le {\mathcal {L}}\) and therefore

Using \(G_s=-G_{\tilde{s}}\) we have

and then from (34)

Hence \({\left| {X_{tu}}\right| }\le {\left| {X_u}\right| }(1+{\mathcal {L}}^2/2)\). Recalling (35) and then (42)

Using the second inequality, multiply by the integrating factor \(e^{p(t)}\) where

and integrate with respect to t to find

for some constant \(c_3\). For future reference, we note that the same procedure can be applied to the lower bound in (46) and then

We therefore have

and then referring back to (44):

Using the gradient inequality (40) and monotonicity of \({\mathcal {L}}\) we obtain

By integrating \(\frac{d{\mathcal {L}}}{dt}=-\Vert {X_t}\Vert _{H^1(ds^\gamma )}^2\) with respect to t we have

Hence for all \(\varepsilon >0\) there exists \(t_\varepsilon \) such that \(\int _t^\infty \Vert {X_t}\Vert _{H^1} dt <\varepsilon \) for all \(t\ge t_\varepsilon \), and since

it follows that \(X(t)\) converges in \(H^1\) to some \(X_\infty \). By (42) the length of \(X_\infty \) is zero, i.e. it is a constant map. \(\square \)

Remark 4.22

If \(({{\,\mathrm{\text {Imm}}\,}}^1,H^1(ds^\gamma ))\) were a complete metric space then Lemma 4.20 would be enough to conclude convergence of the flow. However it is shown in [23] section 6.1 that the \(H^1(ds^\gamma )\) geodesic of concentric circles can shrink to a point in finite time, so the space is not even geodesically complete. Indeed, Theorem 4.21 demonstrates convergence of the flow with finite path length to a point outside \(\text {Imm}^1\), proving again that \(({{\,\mathrm{\text {Imm}}\,}}^1, H^1(ds^\gamma ))\) is not metrically complete.

Remark 4.23

To see that the convergence result above holds for initial data \(X(0)\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\), the main point is to establish equation (41). To do this we can approximate by \(C^2\) immersions, as it follows from e.g. Theorem 2.12 in [16] that these are dense in \(H^1(\mathbb {S}^1,\mathbb {R}^2)\). Given \(X(t_0)\in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) we let \(X_\varepsilon (t_0)\) be an immersion such that \(\Vert {X(t)-X_\varepsilon (t)}\Vert _{H^1}\le \varepsilon \) for t in a neighborhood of \(t_0\). Then following (41) we have

The limit exists because all the terms are bounded by \(\Vert {X_\varepsilon }\Vert _{H^1}\) (for \((X_\varepsilon )_t\) this follows from (29)). Similarly \(\frac{d{\mathcal {L}}(X)}{dt}=-\Vert {F(X)}\Vert ^2_{H^1}\) and we proceed with the rest of the proofs by writing F(X) or \(X_t\) in place of \(-{{\,\textrm{grad}\,}}_{H^1(ds^\gamma )}{\mathcal {L}}_X\).

5 Shape Evolution and Asymptotics

5.1 Generic Qualitative Behavior of the Flow

Computational experiments indicate that the flow tends to reshape the initial data, gradually rounding out corners and improving regularity. However the scale dependence of the flow introduces an interesting effect: when the length becomes small, the ‘reshaping power’ seems to run out and curves shrink approximately self-similarly, preserving regions of low regularity. This means that corners of small polygons persist whereas corners of large polygons round off under the flow (cf. Figure 1).

Heuristically, this is because of the behavior of G as \({\mathcal {L}}\rightarrow 0\). If we Taylor expand

since \({\left| {s-{\tilde{s}}}\right| }\le {\mathcal {L}}\), the constant term dominates when \({\mathcal {L}}\) is small. Then

and \(\lim _{{\mathcal {L}}\rightarrow 0}\frac{{\mathcal {L}}}{2\sinh ({\mathcal {L}}/2)}=1\) so each point on the curve moves toward its center.
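The flattening of G for short curves can be seen numerically. The sketch below assumes the closed form \(G = -\cosh (|s-{\tilde{s}}|-{\mathcal {L}}/2)/(2\sinh ({\mathcal {L}}/2))\) (an inference from the surrounding text, not a quotation) and tabulates \({\mathcal {L}}\,G\) at its two extremes, the diagonal \(s={\tilde{s}}\) and the antipodal point \(|s-{\tilde{s}}|={\mathcal {L}}/2\); the helper name `green_range` is hypothetical.

```python
import math

def green_range(length):
    """Return (L*G on the diagonal, L*G at distance L/2) for the assumed form of G."""
    denom = 2.0 * math.sinh(0.5 * length)
    at_diagonal = -length * math.cosh(0.5 * length) / denom
    at_antipode = -length / denom
    return at_diagonal, at_antipode

for length in (4.0, 1.0, 0.1, 0.01):
    low, high = green_range(length)
    print(f"L = {length:5.2f}:  L*G ranges over [{low:.6f}, {high:.6f}]")
```

As \({\mathcal {L}}\) decreases both ends of the range approach \(-1\), so \(-\int G X\,d{\tilde{s}}\) approaches the plain arc-length average of X and the velocity points from each X(s) toward that average.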

5.2 Remarks on the Numerical Simulations

The numerical simulations were carried out in Julia using a basic forward Euler method. Curves are approximated by polygons. For initial data we take an ordered list of vertices \(X_i\) in \(\mathbb {R}^2\) of a polygon and the length of X is of course just the perimeter of the polygon. The arc length \(s_i\) at \(X_i\) is the sum of distances between vertices up to \(X_i\) and for the arc-length element \(ds_i\) we use the average of the distance to the previous vertex and the distance to the next vertex. The Green’s function is then calculated at each pair of vertices:

and the flow velocity \(V_i\) at \(X_i\) is

The new position \({\tilde{X}}_i\) of the vertex \(X_i\) is calculated by forward-Euler with timestep h: \({\tilde{X}}_i=X_i+h V_i\). No efforts were made to quantify errors or test accuracy, but the results appear reasonable and stable provided time steps are not too large and there are sufficiently many vertices. A Jupyter notebook containing the code is available online [27].
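The scheme just described can be sketched as follows. This is a hypothetical Python transcription for illustration only, not the authors' Julia notebook [27]: the function names are invented here, and both the closed form used for \(G_{ij}\) and the velocity \(V_i = -X_i - \sum _j G_{ij}X_j\,ds_j\) are assumptions inferred from the flow equation (15) and the description of G elsewhere in the text.

```python
import math

def arc_length_data(X):
    """Arc length s_i at each vertex, averaged element ds_i, and perimeter L."""
    n = len(X)
    # edge[i] is the distance from X[i] to X[i+1] (indices taken mod n).
    edge = [math.hypot(X[(i + 1) % n][0] - X[i][0], X[(i + 1) % n][1] - X[i][1])
            for i in range(n)]
    s = [0.0] * n
    for i in range(1, n):
        s[i] = s[i - 1] + edge[i - 1]
    # edge[i - 1] with i = 0 is edge[-1], the closing edge of the polygon.
    ds = [0.5 * (edge[i - 1] + edge[i]) for i in range(n)]
    return s, ds, sum(edge)

def green(si, sj, length):
    """Assumed closed form of the Green's function at a pair of vertices."""
    return -math.cosh(abs(si - sj) - 0.5 * length) / (2.0 * math.sinh(0.5 * length))

def velocity(X):
    """Assumed discrete velocity V_i = -X_i - sum_j G_ij X_j ds_j."""
    s, ds, length = arc_length_data(X)
    V = []
    for i in range(len(X)):
        vx, vy = -X[i][0], -X[i][1]
        for j in range(len(X)):
            w = green(s[i], s[j], length) * ds[j]
            vx -= w * X[j][0]
            vy -= w * X[j][1]
        V.append((vx, vy))
    return V

def euler_step(X, h):
    """One forward-Euler step with timestep h."""
    return [(x + h * vx, y + h * vy) for (x, y), (vx, vy) in zip(X, velocity(X))]

# Usage: a 64-gon inscribed in the unit circle.
n = 64
polygon = [(math.cos(2 * math.pi * i / n), math.sin(2 * math.pi * i / n)) for i in range(n)]
print(velocity(polygon)[0])                       # roughly radial, pointing inward
print(arc_length_data(euler_step(polygon, 0.1))[2])  # perimeter after one step
```

For the unit circle the continuous flow has velocity \(-X/(1+r^2) = -X/2\), which gives a simple consistency check for the discretisation. The double loop costs \(O(n^2)\) per step, which is adequate for a few hundred vertices.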

5.3 Evolution and Convergence of an Exponential Rescaling

Definition

Let \(X:[0,\infty )\rightarrow H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) be a solution to the \(H^1(ds^\gamma )\) curve shortening flow (15). We define the asymptotic profile Y of X as

We anchor the asymptotic profile so that \(Y(t,0) = 0\) for all t. This is not only for convenience; if the final point that the flow converges to is not the origin, then an unanchored profile \({\tilde{Y}} = e^t X\) would simply disappear at infinity and not converge to anything.
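For circles the limit of the asymptotic profile can be computed by hand. The display below is a sketch which assumes that the anchored profile has the form \(Y(t,u) = e^t\big (X(t,u)-X(t,0)\big )\) (inferred from the condition \(Y(t,0)=0\) and the unanchored profile \(e^tX\)), so that for a circle of radius r(t) the profile is a circle of radius \(e^t r(t)\) through the origin. It uses only the relation \(r^2e^{r^2}=e^{c-2t}\) from the proof of Lemma 4.4.

```latex
r(t)^2 e^{r(t)^2} = e^{c-2t}
\quad\Longrightarrow\quad
e^{t}\, r(t) = e^{c/2}\, e^{-r(t)^2/2}
\;\longrightarrow\; e^{c/2} = r_0\, e^{r_0^2/2}
\qquad (t\to\infty),
```

since \(r(t)\rightarrow 0\). The limiting radius is not linear in \(r_0\), which is a simple instance of the scale dependence of the flow.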

The aim in this section is to prove that the asymptotic profile converges. Simulations indicate that there are a variety of possible shapes for the limit (once we know it exists); numerically, even a simple rescaling of the given initial data may alter the asymptotic profile. As in the previous section we present the results under the assumption that X is a flow of immersed curves, but they can be extended to \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) by the method described in Remark 4.23.

We will need the following refinement of the gradient inequality.

Lemma 5.1

Let X be an \(H^1(ds^\gamma )\) curve shortening flow. For any \(\alpha \in (0,1)\) there exists \(t_\alpha \) such that

for all \(t\ge t_\alpha \).

Proof

We abbreviate the gradient to \({{\,\textrm{grad}\,}}{\mathcal {L}}_X\) in order to lighten the notation. Equation (21) implies

and therefore from (41) and \(\frac{d{\mathcal {L}}}{dt}=-\Vert {{\,\textrm{grad}\,}}{\mathcal {L}}(X)\Vert _{H^1(ds^\gamma )}^2\):

where we have also used Lemma 4.9. Now using (42)

If \(\alpha \ge 1-\Vert {X(0)}\Vert _\infty {\mathcal {L}}(0) \) we can find the required \(t_\alpha \) by solving \(\alpha =1- \Vert {X(0)}\Vert _\infty {\mathcal {L}}(0) e^{-Ct_\alpha }\), otherwise \(t_\alpha =0\). \(\square \)

We also need an upper bound for the gradient in terms of length.

Lemma 5.2

For \(X\in H^1(\mathbb {S}^1,\mathbb {R}^2)\),

Proof

From (21) we have

Then (45) implies

and the result follows. \(\square \)

We now prove convergence of the asymptotic profile along a subsequence of times – sometimes this is called subconvergence.

Theorem 5.3

Let X be an \(H^1(ds^\gamma )\) curve shortening flow and Y its asymptotic profile. There is a non-trivial \(Y_\infty \in C^0(\mathbb {S}^1,\mathbb {R}^2)\) such that Y(t) has a convergent subsequence \(Y(t_i)\rightarrow Y_\infty \) in \(C^0\) as \(i\rightarrow \infty \).

Proof

We will show that Y(t) is eventually uniformly bounded in \(H^1\). First we claim that there exist constants \(c_0,c_1>0\) and \(t_0<\infty \) such that

For the upper bound, from \({\mathcal {L}}(Y)=e^t{\mathcal {L}}(X)\), (41) and (48)

From Lemma 5.1, for any \(\alpha \in (0,1)\) there exists \(t_\alpha \) such that

hence \( {\mathcal {L}}(X(t))\le {\mathcal {L}}(X(t_\alpha ))e^{-\alpha t}\) for \(t>t_\alpha \). Using this in (51) with e.g. \(\alpha =\frac{3}{4}\),

where c is a constant depending on X(0) and \({\mathcal {L}}(X(t_{3/4}))\). Integrating from \(t_{3/4}\) to t gives

which gives an upper bound for \({\mathcal {L}}(Y(t))\) for \(t\ge t_{3/4}\). For the lower bound the estimate (49) gives

Let \(t_\beta \) be such that \({\mathcal {L}}(X(t))<1\) for all \(t>t_\beta \). (From (42) we can find \(t_\beta \) by solving \(1 = {\mathcal {L}}(0)e^{-Ct_\beta }\).)

Then also using the gradient inequality (40) there is a constant c such that

Recalling (49), this implies (\(t>\max \{t_\beta ,t_{3/4}\}\))

Integrating with respect to t, there is a constant \(c_0\) such that

and therefore \({\mathcal {L}}(Y)\ge c_0\) for all \(t>t_\beta \). Choosing \(t_0\) to be the greater of \(t_{3/4}, t_\beta \), we have established the claim (50). We claim also that

To see this, note that by the Fundamental Theorem of Calculus followed by the Hölder inequality applied to each component of Y:

and so (50) gives \(\Vert {Y}\Vert _{L^2}\le c_1 \).

Multiplying (47) by \(e^{2t}\) gives

We therefore have a uniform bound on \(\Vert {Y_u}\Vert _{L^p}\) for \(1\le p \le \infty \) in terms of \(\Vert {X_u(0)}\Vert _{L^p}\). In particular, if \(X(0)\in H^1\) we have a uniform \(H^1\) bound for Y and then by the Arzelà-Ascoli theorem there is a sequence \((t_i)\) and a \(Y_\infty \in W^{1,\infty }\) such that \(Y(t_i)\rightarrow Y_\infty \) in \(C^0\) (cf. [19] Theorems 7.28, 5.37 and the proof of 5.38). \(\square \)

This result can be quickly upgraded to full convergence using a powerful decay estimate.

Theorem 5.4

Let X be an \(H^1(ds^\gamma )\) curve shortening flow and Y its asymptotic profile. There is a non-trivial \(Y_\infty \in H^1(\mathbb {S}^1,\mathbb {R}^2)\) such that \(Y(t)\rightarrow Y_\infty \) in \(C^0\) as \(t\rightarrow \infty \).

Proof

For the evolution of Y we calculate

The \(\frac{1}{2}\)-Lipschitz property for G (from (28)) implies that

by the estimate (52) in Theorem 5.3. The estimates in the proof of Theorem 5.3 include \(||Y||_\infty \le c\). Using these we find

Exponential decay of the velocity implies full convergence by a standard argument (a straightforward modification to \(C^0\) of the \(C^\infty \) argument in [3, Appendix A] for instance). \(\square \)

The convergence result (Theorem 5.4) applies in great generality. If the initial data \(X_0\) is better than a generic map in \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\), for instance if it is an immersion, has well-defined curvature, or further regularity, then this is preserved by the flow. That claim is proved in the next section (see Theorem 5.6). In these cases, we expect the asymptotic profile also enjoys these additional properties. This is established in the \(C^2\) space in the section following that (see Theorem 5.7).

Remark 5.5

The asymptotic shape is very difficult to determine; in particular, it is not clear whether there is a closed-form equation that it must satisfy. As mentioned earlier, we see this in the numerics. We can also see this in the decay of the flow velocity \(Y_t\). It decays not because the shape has been optimised to a certain point, but simply because sufficient time has passed so that the exponential decay terms take over. The asymptotic profile of the flow is effectively constrained to a tubular neighborhood of Y(0).

5.4 The \(H^1(ds^\gamma )\)-Flow in \({{\,\mathrm{\text {Imm}}\,}}^k\) Spaces

Observe from (21) and (23) that if \(\gamma \in C^1\) then \({{\,\textrm{grad}\,}}{\mathcal {L}}_\gamma \) is also \(C^1\). We might therefore consider the flow with \({{\,\mathrm{\text {Imm}}\,}}^1\) initial data as an ODE on \({{\,\mathrm{\text {Imm}}\,}}^1\) (instead of \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\)). In fact the same is true for \({{\,\mathrm{\text {Imm}}\,}}^2\) (and moreover \({{\,\mathrm{\text {Imm}}\,}}^k\)) as we now demonstrate.

Assume that \(X\in C^2\) is an immersion. Then

and using \(G_{ss}=G_{{\tilde{s}}{\tilde{s}}}\) as well as integrating by parts we obtain

Now from

we have that \(\Vert {X_{ss}}\Vert _\infty \) is bounded provided \(|X_u|\) is bounded away from zero for all u. Assuming this is the case we have furthermore from (56) that \(|({{\,\textrm{grad}\,}}{\mathcal {L}}_X)_{uu}|\) is bounded and \({{\,\textrm{grad}\,}}{\mathcal {L}}_X \in C^2\). We may therefore consider the flow as an ODE in \({{\,\mathrm{\text {Imm}}\,}}^2\). Short time existence requires a \(C^2\) Lipschitz estimate. One can estimate \(\Vert {{{\,\textrm{grad}\,}}{\mathcal {L}}_X-{{\,\textrm{grad}\,}}{\mathcal {L}}_Y}\Vert _{C^1} \) much the same as in Lemma 4.8. From (56) and product expansions as in Lemma 4.8:

The result now follows from the Lipschitz estimate for G, together with the estimates \(|X_s-Y_s|\le c|X_u-Y_u|\) and \(|X_{ss}-Y_{ss}|\le c|X_{uu}-Y_{uu}|\), which also follow from product expansions using e.g. (57).
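The relation between arc-length and parameter derivatives behind these estimates can be checked by direct computation. The following sketch is derived only from \(\partial _s = |X_u|^{-1}\partial _u\); that it coincides with (57) is an assumption.

$$\begin{aligned} X_s = \frac{X_u}{|X_u|},\qquad X_{ss} = \frac{1}{|X_u|}\,\partial _u\left( \frac{X_u}{|X_u|}\right) = \frac{X_{uu}}{|X_u|^2} - \frac{\langle X_{uu},X_u\rangle \,X_u}{|X_u|^4}. \end{aligned}$$

Each term on the right is bounded in norm by \(|X_{uu}|\,|X_u|^{-2}\), so \(\Vert {X_{ss}}\Vert _\infty \) is controlled by \(\Vert {X_{uu}}\Vert _\infty \) whenever \(|X_u|\) is bounded away from zero.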

It follows from (47) that if X(0) is \(C^1\), then X(t) is \(C^1\) for all \(t<\infty \), and moreover \(|X_u(t)|\) is bounded away from zero for all \(t<\infty \), so we have global existence for the \(C^1\) flow.

Suppose X(t) is \(C^2\) for a short time, then from (56)

From (57) notice \(|X_{ss}|\le 2|X_{uu}|\,|X_u|^{-2}\) and therefore

where \(c=\Vert {X_u}\Vert _\infty \sup _u |X_u|^{-1}\). Supposing that at time \(t_0\), \(\Vert {X_{uu}}\Vert _\infty \) is attained at \(u_0\), it follows that

and therefore \(\Vert {X_{uu}(u_0,t)}\Vert \le e^{ct}\). By the short time existence \(\Vert {X_{uu}}\Vert _\infty \) is continuous in t, so in fact

and we have global existence for the \(C^2\) flow.

For the \({{\,\mathrm{\text {Imm}}\,}}^k\) case there is little that is novel and much that is tedious. Claim:

where each \(P_i\) is polynomial in the derivatives of X up to order \(k-1\). From (55) this is true for \(k=2.\) Assuming it is true for k we have

where each \({\tilde{P}}_i\) is polynomial in the derivatives of X up to order k. From (58) and \(\partial _s^kG=-\partial _{{\tilde{s}}}^kG \) we can calculate \(\partial _u^k ({{\,\textrm{grad}\,}}{\mathcal {L}}_X)\) and observe that if X is in \({{\,\mathrm{\text {Imm}}\,}}^k\) then so is \({{\,\textrm{grad}\,}}{\mathcal {L}}_X\). We may therefore consider the gradient flow as an ODE in \({{\,\mathrm{\text {Imm}}\,}}^k\). Short time existence requires a \(C^k\) Lipschitz estimate. We claim that such an estimate can be proved inductively using \(\partial _u^k({{\,\textrm{grad}\,}}\mathcal {L}_X)\) by methods similar to those used above for the \(C^2\) case, except with longer product expansions. As the technique is the same and only the proof is longer, we omit it.

In summary, we have:

Theorem 5.6

Let \(k\in \mathbb {N}\) be a natural number. For each \(X_0\in {{\,\mathrm{\text {Imm}}\,}}^k\) there exists a unique eternal \(H^1(ds^\gamma )\) curve shortening flow \(X:\mathbb {S}^1\times \mathbb {R}\rightarrow \mathbb {R}^2\) in \(C^1(\mathbb {R}; {{\,\mathrm{\text {Imm}}\,}}^k)\) such that \(X(\cdot ,0) = X_0\).

5.5 Curvature Bound for the Rescaled Flow

In this subsection, we study the \(H^1(ds^\gamma )\) curve shortening flow in the space of \(C^2\) immersions, so that the flow has a well-defined notion of scalar curvature. Note that while the arguments in the previous section show that the \(C^2\)-norm of X is bounded for all t, they do not show that this bound persists through to the limit \(Y_\infty \) of the asymptotic profile. For that, the estimates need to be much stronger: not only uniform in t, but also compatible with the rescaling factor applied to X.

The main result in this section (Theorem 5.7) states that this is possible, and that the limit \(Y_\infty \) of the asymptotic profile in the \(C^2\)-space enjoys \(C^2\) regularity, being an immersion with bounded curvature.

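We start with the commutator of \(\partial _s\) and \(\partial _t\) along the flow X(u, t). Given a differentiable function f(u, t), differentiating \(\partial _s = |X_u|^{-1}\partial _u\) with respect to t gives

$$\begin{aligned} f_{st} = f_{ts} - \langle X_{ts},X_s\rangle f_s. \end{aligned}$$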

From \(X_{ss}=kN\) we have \(X_{sst}=kN_t+k_tN\) and then using \(\langle N_t,N\rangle =0\),

Applying (59) twice

and then

where \(({{\,\textrm{grad}\,}}{\mathcal {L}}_X)_{ss}-{{\,\textrm{grad}\,}}{\mathcal {L}}_X=kN\) (from (11)) and \({{\,\textrm{grad}\,}}{\mathcal {L}}_s=T+T*G\) have been used. Therefore

Now letting

using (21) and (34) to estimate (60), we find

Note that in the second inequality we used \(a \le 1 + a^2/4\), which holds for any \(a\in \mathbb {R}\) because \(1 - a + a^2/4 = (1-a/2)^2 \ge 0\). Integration gives

and so by the Bellman inequality ([26, Thm. 1.2.2])

Since \({\mathcal {L}}(X)\) decays exponentially (42), we have that \(\varphi \) is uniformly bounded.
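For orientation, one common form of the Bellman (Grönwall) inequality reads as follows. The names \(w\), \(a\), \(b\) are placeholders introduced here, and the exact statement in [26] may differ. If \(w,b\ge 0\) are continuous on \([0,\infty )\), \(a\ge 0\) is a constant, and \(w(t)\le a+\int _0^t b(\tau )w(\tau )\,d\tau \) for all \(t\ge 0\), then

$$\begin{aligned} w(t)\le a\exp \left( \int _0^t b(\tau )\,d\tau \right) . \end{aligned}$$

In particular, w is uniformly bounded whenever b is integrable on \([0,\infty )\), which is the case for an exponentially decaying coefficient.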

This gives stronger convergence for Y in the case of \(C^2\) data, and we conclude the following. (Note that the fact that \(Y_\infty \) is an immersion follows already from (54).)

Theorem 5.7

Let X be an \(H^1(ds^\gamma )\) curve shortening flow with \(X(0)\in {{\,\mathrm{\text {Imm}}\,}}^2 \), and Y its asymptotic profile. There is a non-trivial \(Y_\infty \in {{\,\mathrm{\text {Imm}}\,}}^2\) such that \(Y(t)\rightarrow Y_\infty \) in \(C^0\) as \(t\rightarrow \infty \). That is, the asymptotic profile converges to a unique limit that is immersed with well-defined curvature.

5.6 Isoperimetric Deficit

The goal of the remaining sections of the paper is to prove the following.

Theorem 5.8

Let \(k\in \mathbb {N}_0\) be a non-negative integer. Set \(\mathcal {B}\) to \(H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) for \(k=0\) and otherwise set \(\mathcal {B}\) to \(C^k(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\). For each \(X_0\in \mathcal {B}\) there exists a non-trivial \(Y_\infty \in H^1(\mathbb {S}^1,\mathbb {R}^2)\setminus \mathcal {C}\) such that the asymptotic profile \(Y(t)\rightarrow Y_\infty \) in \(C^0\) as \(t\rightarrow \infty \).

Furthermore:

- \(Y_\infty \) is embedded if condition (6) is satisfied by X(t) at some time \(t\in (0,\infty )\)

- If \(k\ge 2\), and X(0) is immersed, then \(Y_\infty \) is immersed with bounded curvature

- There is a constant \(c = c(\Vert {X(0)}\Vert _\infty )\) such that the isoperimetric deficit of \(Y_\infty \) satisfies

$$\begin{aligned} \mathcal {D}_{Y_\infty } \le c\mathcal {D}_{X(0)}. \end{aligned}$$

In this section, we show that the isoperimetric deficit of the limit \(Y_\infty \) of the asymptotic profile is bounded in terms of the isoperimetric deficit of \(X_0\). This is in a sense optimal: because of the great variety of limits for the rescaled flow, it is not reasonable to expect that the deficit always improves. Indeed, numerical evidence suggests that the deficit is not monotone under the flow. Nevertheless, it is reasonable to hope that the flow does not move the isoperimetric deficit too far from that of the initial curve, and that is what the main result of this section confirms.
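To make the quantities concrete, the following minimal sketch approximates length, signed area and deficit for a closed parametrised curve. It assumes the standard normalisation \(\mathcal {D} = {\mathcal {L}}^2 - 4\pi A\) for the deficit (an assumption here; the paper's own definition is the one given in Sect. 5.6.2) and uses the area formula \(A=-\frac{1}{2}\int \langle X,N\rangle ds^X\) with \(N=(-y_s,x_s)\). The function name, the grid size `n` and the finite-difference scheme are illustrative choices, not part of the paper.

```python
import math

def length_area_deficit(curve, n=4000):
    """Approximate length L, signed area A and L^2 - 4*pi*A for a closed curve.

    `curve` maps a parameter u in [0, 2*pi) to a point (x, y).
    The deficit normalisation L^2 - 4*pi*A is an assumption of this sketch.
    """
    h = 2.0 * math.pi / n
    pts = [curve(i * h) for i in range(n)]
    length = 0.0
    area = 0.0
    for i in range(n):
        x, y = pts[i]
        x_prev, y_prev = pts[i - 1]          # index -1 wraps around (periodic)
        x_next, y_next = pts[(i + 1) % n]
        # Central differences for X_u on the periodic grid.
        xu = (x_next - x_prev) / (2.0 * h)
        yu = (y_next - y_prev) / (2.0 * h)
        # ds = |X_u| du
        length += math.hypot(xu, yu) * h
        # <X, N> ds = (-x*y_u + y*x_u) du, and A = -1/2 * integral of <X, N> ds
        area += -0.5 * (-x * yu + y * xu) * h
    return length, area, length * length - 4.0 * math.pi * area

# Illustrative curves: a circle (deficit close to zero) and an ellipse.
circle = lambda u: (1.5 * math.cos(u), 1.5 * math.sin(u))
ellipse = lambda u: (2.0 * math.cos(u), math.sin(u))
print(length_area_deficit(circle))
print(length_area_deficit(ellipse))
```

With this sign convention a counter-clockwise curve has positive area. The computed deficit of the circle vanishes only up to discretisation error.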

5.6.1 Area

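We start by deriving the evolution of the signed enclosed area. Using (59) we find

$$\begin{aligned} T_t = X_{st} = X_{ts} - \langle X_{ts},T\rangle T = \langle X_{ts},N\rangle N. \end{aligned}$$

Differentiating \(\langle N,T \rangle =0\) and \(\langle N,N\rangle =1\) with respect to t yields

$$\begin{aligned} \langle N_t,T\rangle = -\langle N,T_t\rangle = -\langle X_{ts},N\rangle ,\qquad \langle N_t,N\rangle = 0. \end{aligned}$$

Therefore

$$\begin{aligned} N_t = -\langle X_{ts},N\rangle T. \end{aligned}$$

Using the area formula \(A=-\frac{1}{2}\int _0^{\mathcal {L}}\langle X, N\rangle ds^X\), and the fact that \(ds^X={\left| {X_u}\right| }du\) implies \(\frac{d}{dt}ds^X=\langle X_{ts},X_s \rangle ds^X\), we calculate the time evolution of area as

$$\begin{aligned} \frac{dA}{dt} = -\frac{1}{2}\int \langle X_t,N\rangle \,ds^X - \frac{1}{2}\int \left\langle X_{ts},\langle X,N\rangle T-\langle X,T\rangle N\right\rangle ds^X. \end{aligned}$$

Now since \(\partial _s \left( \langle X,N\rangle T-\langle X,T\rangle N\right) =-N\), integration by parts gives

$$\begin{aligned} \frac{dA}{dt} = -\int \langle X_t,N\rangle \,ds^X. \end{aligned}$$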

5.6.2 Estimate for the Deficit

Consider the isoperimetric deficit

From \(\frac{d}{dt} {\mathcal {L}}=\int \langle kN,{{\,\textrm{grad}\,}}{\mathcal {L}}_X\rangle ds\) and (62) we find

With the gradient in the form

we use the second order Taylor approximation

Note that \(\int \left( X({\tilde{s}})-X(s)\right) d{\tilde{s}}={\mathcal {L}}({\bar{X}}-X(s))\) and moreover

where the \({\bar{X}}\) term vanishes because kN and N are both derivatives and \({\mathcal {L}}{\bar{X}}\) is independent of s. Hence

For the terms involving k we have, for example,

and therefore we estimate

Because \(\frac{{\mathcal {L}}}{2\sinh ({\mathcal {L}}/2)}\le 1\) and \(\mathcal {D}\ge 0\), we have

For the isoperimetric deficit \(\mathcal {D}_Y\) of the asymptotic profile Y, we have

hence

From Lemma 5.1 we can choose \(t_{3/4}\) such that \(o({\mathcal {L}}^4) e^{2t}\) decays like \(e^{-t}\) for \(t>t_{3/4}\). If \(\frac{3}{4} \ge 1-\Vert {X(0)}\Vert _\infty {\mathcal {L}}(0) \), we can find the required \(t_{3/4}\) by solving \(\frac{3}{4} = 1- \Vert {X(0)}\Vert _\infty {\mathcal {L}}(0)e^{-Ct_{3/4}}\); otherwise we take \(t_{3/4}=0\). The constant C is from the gradient inequality and also depends on \(\Vert {X(0)}\Vert _\infty \). Therefore the estimate for the integral of the extra terms depends only on X(0).
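The choice of \(t_{3/4}\) described above amounts to solving a single exponential equation, which gives \(t_{3/4} = C^{-1}\log \left( 4\Vert {X(0)}\Vert _\infty {\mathcal {L}}(0)\right) \) in the first case. A minimal sketch follows; the function name and the arguments `sup_norm_x0`, `length0` and `C` are hypothetical stand-ins for \(\Vert {X(0)}\Vert _\infty \), \({\mathcal {L}}(0)\) and the gradient-inequality constant, whose value is not computed here.

```python
import math

def t_three_quarters(sup_norm_x0, length0, C):
    """Return t_{3/4} as described in the text.

    Solves 3/4 = 1 - sup_norm_x0 * length0 * exp(-C * t) when
    3/4 >= 1 - sup_norm_x0 * length0, and returns 0 otherwise.
    C > 0 is assumed to be supplied by the caller.
    """
    if C <= 0:
        raise ValueError("C must be positive")
    product = sup_norm_x0 * length0
    if 0.75 >= 1.0 - product:
        # exp(-C t) = 1 / (4 * product)  =>  t = log(4 * product) / C
        return math.log(4.0 * product) / C
    return 0.0

# Illustrative values only.
print(t_three_quarters(1.0, 1.0, 2.0))
print(t_three_quarters(0.1, 1.0, 2.0))
```

The first branch is taken exactly when \(4\Vert {X(0)}\Vert _\infty {\mathcal {L}}(0)\ge 1\), so the logarithm is non-negative and the returned time is never negative.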

Integrating with respect to t gives

The Taylor expansion \(x/(2\sinh (x/2)) = 1 - x^2/24 + O(x^4)\) as \(x\rightarrow 0\) yields

Now using again the exponential decay of \({\mathcal {L}}\) we find

Summarising, we have:

Proposition 5.9

Let X be an \(H^1(ds^\gamma )\) curve shortening flow and Y its asymptotic profile. There is a non-trivial \(Y_\infty \in H^1(\mathbb {S}^1,\mathbb {R}^2)\) such that \(Y(t)\rightarrow Y_\infty \) in \(C^0\) as \(t\rightarrow \infty \). Furthermore, there is a constant \(c = c(\Vert {X(0)}\Vert _\infty )\) such that the isoperimetric deficit of \(Y_\infty \) satisfies

5.7 A Chord-Length Estimate and Embeddedness

The purpose of this section is to finish the proof of the following:

Theorem 5.10

Let \(k\in \mathbb {N}\). For each \(X_0\in {{\,\mathrm{\text {Imm}}\,}}^k\) there exists a unique eternal \(H^1(ds^\gamma )\) curve shortening flow \(X:\mathbb {S}^1\times \mathbb {R}\rightarrow \mathbb {R}^2\) in \(C^1(\mathbb {R}; {{\,\mathrm{\text {Imm}}\,}}^k)\) such that \(X(\cdot ,0) = X_0\).

Furthermore, suppose \(X_0\) satisfies

where

Then there exists a \(C = C(X_0) > 0\) such that

for all t.

In particular X (as well as its asymptotic profile and limit \(Y_\infty \)) is a family of embeddings.

What remains is to prove that if the flow is sufficiently embedded (relative to total length) at any time, it must remain embedded for all future times. Note that this holds also for the asymptotic profile (the chord-arc ratio is scale-invariant).

We achieve this via a study of the squared chord-arc ratio:

Let us fix \(u_1\), \(u_2\) (with \(u_1 < u_2\)) in what follows, where we will often write simply \(\mathcal {C}\!h\) and \(\mathcal {S}\). Note for later that \(\mathcal {S}= \int _{u_1}^{u_2} |\partial _u X|\,du = \int _{s_1}^{s_2}\,ds^X\). Recalling that

we find

and define

so that

Since we have \(\mathcal {S}=\int _{s_1}^{s_2} ds^X\) and \(\frac{d}{dt}ds^X=\langle X_{ts},X_s \rangle ds^X\) we obtain

Now let

and then

Therefore the time evolution of the squared chord-arc ratio is given by

Using the estimates (that follow via Poincaré and (34))

and recalling the length decay estimate (42) we see that

Therefore

Lemma 4.19, together with an appropriate choice of \(\varepsilon \) in the proof of Lemma 4.18, implies that

where \(\beta (X_0) = 1/\sqrt{2+\Vert {X_0}\Vert _\infty ^2}\).

Now let us impose the following hypothesis on \(X_0\):

We calculate

Integration gives

By hypothesis (63) the RHS is positive, and so the function \(\sqrt{\phi }\) can never vanish. In fact, as \({\mathcal {L}}\) decays exponentially, \(\phi \) is uniformly bounded from below by a constant depending on \(X_0\).

Since the chord-arc length ratio is scale-invariant, the same is true for the asymptotic profile Y. Moreover, the hypothesis (63) may be satisfied simply by scaling any embedded initial data (again, \(\phi \) is scale-invariant, but the RHS of (63) is not).
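The scale invariance invoked here can be illustrated numerically. The sketch below assumes the squared chord-arc ratio has the form \(\mathcal {C}\!h^2/\mathcal {S}^2\), with \(\mathcal {C}\!h = |X(u_1)-X(u_2)|\) and \(\mathcal {S}\) the arc length between \(u_1\) and \(u_2\). The function name and the polygonal approximation of \(\mathcal {S}\) are illustrative choices, not taken from the paper.

```python
import math

def squared_chord_arc_ratio(curve, u1, u2, n=2000):
    """Approximate (chord / arc)^2 for a curve between parameters u1 < u2.

    The arc length is approximated by the length of an inscribed polygon
    with n segments; the form chord^2 / arc^2 is an assumption of this sketch.
    """
    h = (u2 - u1) / n
    pts = [curve(u1 + i * h) for i in range(n + 1)]
    arc = sum(math.dist(pts[i], pts[i + 1]) for i in range(n))
    chord = math.dist(pts[0], pts[-1])
    return (chord / arc) ** 2

def circle(r):
    """Circle of radius r, parametrised by angle."""
    return lambda u: (r * math.cos(u), r * math.sin(u))

theta = math.pi / 2
# The ratio is the same for radius 1 and radius 5.
print(squared_chord_arc_ratio(circle(1.0), 0.0, theta))
print(squared_chord_arc_ratio(circle(5.0), 0.0, theta))
# Closed form on a circle, for comparison: (2 sin(theta/2) / theta)^2.
print((2.0 * math.sin(theta / 2) / theta) ** 2)
```

Rescaling the curve multiplies both chord and arc by the same factor, so the ratio is unchanged, whereas a condition that involves the total length, such as (63), does change under scaling.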

Thus (keeping in mind Theorem 5.6) we conclude Theorem 5.10. Moreover Theorem 5.7, Proposition 5.9 and Theorem 5.10 complete the proof of Theorem 5.8.

Notes

\(\text {Diff}(\mathbb {S}^1)\) is the regular Lie group of all diffeomorphisms \(\phi :\mathbb {S}^1\rightarrow \mathbb {S}^1\) with connected components \(\text {Diff}^+(\mathbb {S}^1)\), \(\text {Diff}^-(\mathbb {S}^1)\) given by orientation preserving and orientation reversing diffeomorphisms respectively.

Note that this does not guarantee that the metric is non-degenerate; there may still exist distinct immersions with zero geodesic distance between them.

This is also the case for the classical curve shortening flow, as explained in [14].

Note that as paths in the full space \(\text {Imm}^1\), \({\tilde{\alpha }}\) is different to \(\alpha \), but they project to the same path in \(\mathcal {Q}\).

We remark that this phenomenon of triviality of the metric topology induced by the Riemannian \(L^2(ds^\gamma )\) metric on the quotient space is also established in higher dimensions, see [21].

References

Andrews, B., Bryan, P.: Curvature bound for curve shortening flow via distance comparison and a direct proof of Grayson’s theorem. J. Reine Angew. Math. 2011(653), 179–187 (2011)

Andrews, B., Chow, B., Guenther, C.M., Langford, M.: Extrinsic Geometric Flows. American Mathematical Society, Providence (2020)

Andrews, B., McCoy, J., Wheeler, G., Wheeler, V.-M.: Closed ideal planar curves. Geom. Topol. 24(2), 1019–1049 (2020)