Abstract

This paper analyzes the statistical properties of the profitability of Private Equity from a fractional integration viewpoint. Using quarterly data from 1981q2 to 2021q3, the results support the hypothesis of stationarity and mean reversion in all cases; however, we observe differences in the degree of persistence across regions, with Europe being the closest to short memory and the US showing the highest degree of long range dependence and thus the longest-lasting effects of shocks. Some policy recommendations based on the results are included at the end of the manuscript.

1 Introduction

The aim of this paper is to study the evolution of the profitability of private equity over time and across regions and, more specifically, whether shocks to the series have transitory or permanent effects, looking at both aggregated and disaggregated data by region. We focus on four specific areas (US, Europe, Asia/Pacific and Rest of the World), along with the "Total" data. For this purpose, we use methodologies based on the concept of fractional integration, employing up-to-date time series techniques.

Assessment of the type of shock is normally performed by unit root tests (Dickey and Fuller 1979; Phillips and Perron 1988, and others). If the process has no unit root, it is assumed to be stationary and hence to exhibit mean reversion (the lagged, pre-shock level drives the reversion to the mean). However, if it has a unit root, the process does not revert to the mean and the shock has a permanent effect on the series. In this article, we depart from these classical methods by using fractional integration, which is more flexible and general in the sense that it allows fractional degrees of differencing, and mean reversion takes place as long as the differencing parameter is significantly smaller than 1. As explained later in the manuscript, this allows us to consider flexible cases including, for example, nonstationary but mean-reverting processes when the differencing parameter lies in the range [0.5, 1). Thus, the main objective of the paper is to determine whether shocks in the profitability of private equity have transitory or permanent effects, which we do by estimating the degree of differencing of the series from a fractional viewpoint.

The structure of the paper is as follows: Sect. 2 reviews the literature on the profitability of private equity. Section 3 describes the methodology employed in the paper. Section 4 presents the dataset and the main empirical results, while Sect. 5 concludes.

2 Literature review

Private Equity (PE) has mainly been studied from four perspectives: a) profitability (i.e., performance against a benchmark), b) the key factors in selecting companies to invest in, c) the valuation of PE funds, and d) the interaction with limited partners (LPs).

Research on profitability has yielded contradictory results, ranging from −6% (Phalippou and Gottschalg 2009) to +32% (Cochrane 2005). Regarding benchmarks, Steger (2017) advocates an index excluding large capitalization companies, such as the Russell 2000 Index, given that a substantial share of private equity funds invest in small or mid-sized companies. Furthermore, several measures have been employed to track returns, namely IRR (Internal Rate of Return),Footnote 1 TVPI (Total Value to Paid-In, or "Money Multiple") and PME (Public Market Equivalent). Phalippou and Gottschalg (2009) define TVPI as the sum of all cash distributions plus the latest Net Asset Value (NAV) (which serves as a proxy for future cash flows), divided by the sum of all drawdowns. The PMEFootnote 2 approach, documented in Kaplan and Schoar (2005), is calculated as the sum of all discounted cash outflows from the fund (distributions to investors) over the sum of all discounted cash inflows to the fund (capital drawdowns), using the total return of the S&P 500 Index as the discount rate.
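To make the three measures concrete, the following Python sketch computes an IRR, a TVPI and a Kaplan–Schoar-style PME for a single fund. The cash flows and the index levels (standing in for the S&P 500 total return index) are hypothetical, the helper names are our own, and the final NAV is treated as if it were a last distribution, which is the first NAV convention described by Phalippou and Gottschalg (2009).

```python
"""Sketch: IRR, TVPI and Kaplan-Schoar PME for one hypothetical fund.

All numbers are illustrative; index_levels stands in for a public
total-return index such as the S&P 500 (an assumption, not CA data).
"""


def npv(rate, flows):
    """Net present value of flows[t] occurring at the end of period t."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(flows))


def irr(flows, lo=-0.99, hi=10.0, tol=1e-12):
    """Rate that sets NPV to zero, found by bisection on [lo, hi].

    Assumes NPV changes sign exactly once on the bracket.
    """
    f_lo = npv(lo, flows)
    if f_lo * npv(hi, flows) > 0:
        raise ValueError("IRR is not bracketed by [lo, hi]")
    for _ in range(200):
        mid = (lo + hi) / 2.0
        f_mid = npv(mid, flows)
        if f_mid == 0.0 or hi - lo < tol:
            return mid
        if f_lo * f_mid < 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return (lo + hi) / 2.0


def tvpi(contributions, distributions, final_nav):
    """(Distributions to date + latest NAV) / capital paid in."""
    return (sum(distributions) + final_nav) / sum(contributions)


def ks_pme(contributions, distributions, final_nav, index_levels):
    """Index-discounted distributions (final NAV counted as a last
    distribution) divided by index-discounted contributions."""
    last = len(index_levels) - 1
    pv_out = sum(d / index_levels[t] for t, d in enumerate(distributions))
    pv_out += final_nav / index_levels[last]
    pv_in = sum(c / index_levels[t] for t, c in enumerate(contributions))
    return pv_out / pv_in


# Hypothetical annual data for one fund (amounts in $ millions).
contributions = [100.0, 50.0, 0.0, 0.0, 0.0]
distributions = [0.0, 0.0, 40.0, 60.0, 80.0]
final_nav = 30.0
index_levels = [1.0, 1.1, 1.2, 1.3, 1.4]

# Net LP cash flows: negative when paying in, final NAV added at the end.
net_flows = [d - c for c, d in zip(contributions, distributions)]
net_flows[-1] += final_nav

fund_irr = irr(net_flows)
fund_tvpi = tvpi(contributions, distributions, final_nav)
fund_pme = ks_pme(contributions, distributions, final_nav, index_levels)
print(f"IRR={fund_irr:.4f}  TVPI={fund_tvpi:.2f}  PME={fund_pme:.3f}")
```

A PME above 1 means the fund beat the index on a cash-flow-matched basis, whereas TVPI, unlike IRR and PME, ignores the timing of the flows.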

Gompers and Lerner (2000) and Gompers et al. (2005) studied the organizational structure and performance of various venture capital funds. They found a strong positive relationship between the degree of specialization of individual venture capitalists at a firm and the firm's success. They also concluded that experienced funds outperform inexperienced ones, and that small and inexperienced funds are the main drivers of low performance in private equity.

Phalippou and Gottschalg (2009) describe a typical fund as having a life of ten years, which can be extended to thirteen, and as reporting each quarter a Net Asset Value (NAV) that reflects the value of its ongoing investments, which are essentially non-tradable. They also note that two different assumptions have been made concerning the treatment of final NAVs. The first and most frequent one treats the final NAV as a cash inflow of the same amount at the end of the sample period; that is, NAVs are assumed to be an unbiased assessment of the market value of a fund (e.g., Kaplan and Schoar 2005, and industry benchmarks). The second one only computes cash flows (e.g., Ljungqvist and Richardson 2003), which is applicable to "mature" funds and to following up "ongoing" funds (the median IRR takes 8 years to turn positive).

As stated by Brown et al. (2016), the adoption of SFAS 157Footnote 3 has not prevented PE firms from manipulating NAVs in two directions: some inflate NAVs during periods when fundraising is likely to occur,Footnote 4 whereas top-performing funds under-report returns, as a way to insure against future bad luck that could make them appear to be NAV manipulators.

As for the behavior of PE profits over time, research has focused on the phenomenon of persistence rather than on static models explaining their evolution.

Kaplan and Schoar (2005) find persistence not only between two consecutive funds, but also between the current fund and the second previous fund (unlike mutual funds, where persistence, when it exists, is driven by underperformance rather than outperformance). Moreover, their results suggest statistically and economically strong persistence in private equity, particularly for venture capital funds. However, persistence seems to have been declining according to Brown et al. (2016) and Korteweg and Sorensen (2017).

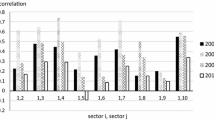

Ang et al. (2018) note that the limited structure and nature of the data make it particularly difficult to evaluate the time series properties of PE returns. To assess these returns, they construct an index for separate sub-asset classes and show that their cycles are not highly correlated, which suggests that a diversified strategy across sub-asset classes of PE may be beneficial. Moreover, the authors' index exhibits negligible serial dependence, in contrast to industry indices. The serial dependence of the latter is consistent with the smoothing induced by a conservative appraisal process or by a delayed and partial adjustment to market prices, which often arises in illiquid asset markets (see, e.g., Geltner 1991, and Ross and Zisler 1991).

More recently, Harris et al. (2020), using ex post or most recent fund performance (as of June 2019), confirm the findings on persistence overall as well as for pre-2001 and post-2000 funds.

3 Methodology

As mentioned earlier, the main objective of this paper is to determine whether shocks in the series have permanent or transitory effects. The standard approach for this purpose relies on unit root procedures, widely employed to determine whether the series of interest is stationary I(0) (and thus with temporary shocks) or nonstationary I(1) (in which case shocks have a permanent nature). Within this methodology, the ADF test (Dickey and Fuller 1979) is the most widely used procedure, though other more robust methods were later developed, including Phillips and Perron (1988), Kwiatkowski et al. (1992), Elliott et al. (1996) and Ng and Perron (2001). Nevertheless, all these methods share the drawback that they consider only two potential scenarios, I(0) and I(1), and do not take into account fractional degrees of differencing. This is important because many authors have shown that the above procedures have extremely low power if the true data generating process is fractionally integrated. Classical references here are Diebold and Rudebusch (1991), Hassler and Wolters (1994) and Lee and Schmidt (1996). Thus, in this paper we use an I(d) modelling framework of the following form:

\[(1-B)^{d} x_t = u_t, \quad t = 0, \pm 1, \ldots \qquad (1)\]

where B denotes the backshift operator (i.e., \(Bx_t = x_{t-1}\)) and \(u_t\) is an integrated of order 0, or I(0), process, defined as a covariance (second-order) stationary process whose infinite sum of autocovariances is finite. Thus, d = 0 yields an I(0) process and d = 1 a unit root, while any real value in between is allowed. The term \(u_t\) may be a white noise process, but it may also display a weakly autocorrelated (e.g., ARMA) structure.
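Expanding the operator in (1) binomially gives \((1-B)^{d} = \sum_{k\ge 0}\pi_k B^k\) with \(\pi_0 = 1\) and \(\pi_k = \pi_{k-1}(k-1-d)/k\). The Python sketch below computes these weights and applies them to a finite sample, under the assumption (ours, for illustration) that observations before the start of the sample are zero; the function names are hypothetical. For d = 1 it reproduces ordinary first differences, while for 0 < d < 1 the weights decay hyperbolically instead of stopping after one lag.

```python
def frac_diff_weights(d, n):
    """First n coefficients pi_k of (1 - B)^d = sum_k pi_k B^k."""
    weights = [1.0]
    for k in range(1, n):
        weights.append(weights[-1] * (k - 1 - d) / k)
    return weights


def frac_diff(x, d):
    """Apply (1 - B)^d to the sample x, treating x_t = 0 for t < 0
    (a truncated expansion, assumed here for illustration)."""
    w = frac_diff_weights(d, len(x))
    return [sum(w[k] * x[t - k] for k in range(t + 1)) for t in range(len(x))]


print(frac_diff_weights(0.4, 4))               # slowly decaying weights
print(frac_diff([1.0, 3.0, 6.0, 10.0], 1.0))   # [1.0, 2.0, 3.0, 4.0]
```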

The estimation of d is conducted via the Whittle function in the frequency domain and is implemented through a test statistic derived in Robinson (1994), which is the most efficient in the Pitman (1936) sense against local departures from the null. We use a simple version of his method based on the following model,

\[y_t = \beta^{T} z_t + x_t, \quad (1-B)^{d} x_t = u_t, \quad t = 1, 2, \ldots \qquad (2)\]

where \(y_t\) is the observed series, \(z_t\) is a vector of deterministic terms that may include a constant and a linear trend among other terms, and \(u_t\) is assumed to be I(0). Based on this set-up, Robinson (1994) proposed testing the null hypothesis

\[H_o: d = d_o\]

for any real value \(d_o\), thus including values in the stationary range (\(d_o < 0.5\)) as well as nonstationary ones (\(d_o \geq 0.5\)). A further advantage of this approach is that the test statistic has a standard normal limit distribution, and this holds regardless of the regressors used in \(z_t\), the value of \(d_o\), and the specific structure of the I(0) error term \(u_t\).
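For intuition only, the following Python sketch computes a simplified version of the statistic, assuming that \(u_t\) is white noise and that \(z_t\) is empty (no deterministic terms). In that special case, with \(\hat u_t = (1-B)^{d_o} y_t\), its periodogram \(I(\lambda_j)\) at the Fourier frequencies \(\lambda_j = 2\pi j/T\), and \(\psi(\lambda) = \log|2\sin(\lambda/2)|\), the statistic reads \(\hat r = (T^{1/2}/\hat\sigma^2)\hat A^{-1/2}\hat a\), with \(\hat a = -(2\pi/T)\sum_j \psi(\lambda_j) I(\lambda_j)\), \(\hat\sigma^2 = (2\pi/T)\sum_j I(\lambda_j)\) and \(\hat A = (2/T)\sum_j \psi(\lambda_j)^2\). This is our reading of the white-noise case only; the version used in this paper also handles deterministic terms and autocorrelated errors, and the function names are hypothetical. Large positive values point towards \(d > d_o\), large negative values towards \(d < d_o\).

```python
import cmath
import math
import random


def frac_diff(x, d):
    """Apply (1 - B)^d with the expansion truncated at the sample start."""
    w = [1.0]
    for k in range(1, len(x)):
        w.append(w[-1] * (k - 1 - d) / k)
    return [sum(w[k] * x[t - k] for k in range(t + 1)) for t in range(len(x))]


def periodogram(u):
    """(lambda_j, I(lambda_j)) for j = 1, ..., T - 1."""
    T = len(u)
    out = []
    for j in range(1, T):
        lam = 2.0 * math.pi * j / T
        s = sum(u_t * cmath.exp(1j * lam * t) for t, u_t in enumerate(u))
        out.append((lam, abs(s) ** 2 / (2.0 * math.pi * T)))
    return out


def robinson_stat(y, d0):
    """Simplified Robinson (1994) statistic for H0: d = d0, assuming
    white-noise u_t and no deterministic terms. Approx. N(0, 1) under H0."""
    T = len(y)
    pg = periodogram(frac_diff(y, d0))
    psi = [math.log(abs(2.0 * math.sin(lam / 2.0))) for lam, _ in pg]
    sigma2 = 2.0 * math.pi / T * sum(I for _, I in pg)
    a_hat = -2.0 * math.pi / T * sum(p * I for p, (_, I) in zip(psi, pg))
    A_hat = 2.0 / T * sum(p * p for p in psi)
    return math.sqrt(T) / sigma2 * a_hat / math.sqrt(A_hat)


# Illustration on simulated data (not the CA series).
random.seed(0)
eps = [random.gauss(0.0, 1.0) for _ in range(256)]
y = frac_diff(eps, -0.4)  # (1 - B)^(-0.4) eps: a truncated I(0.4) series
print(f"H0 d=0 on white noise: {robinson_stat(eps, 0.0):.2f}")
print(f"H0 d=0 on I(0.4):      {robinson_stat(y, 0.0):.2f}")
print(f"H0 d=0.4 on I(0.4):    {robinson_stat(y, 0.4):.2f}")
```

In practice the test is run over a grid of \(d_o\) values, and the non-rejection region gives the confidence interval for d.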

Employing alternative parametric methods (e.g., Sowell 1992) or even semiparametric ones (Shimotsu and Phillips 2005, 2006), we obtained results qualitatively very similar to those reported in this paper.

4 Data and empirical results

Quarterly data (available up to Q3-21) were retrieved from Cambridge Associates LLC (CA), hosted in the Eikon-Reuters database, selecting first the whole world and all asset classes, and then breaking the data down into four geographical regions (United States, Europe, Asia/Pacific and Rest of World), in addition to the All the World aggregate. Together they produce a table with 744 records, of which 713 were retained after missing values were excluded.

The chosen metric was "Pooled IRR", rather than other IRRs (average or weighted) or TVPI.Footnote 5 Since we worked with the entire CA database, the start periods of the analysis match those of the database, which vary depending on the geographical area and class of investment.
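As we understand the usual definition, a pooled IRR aggregates the cash flows (and ending NAVs) of all funds in a group as if they were one portfolio and then solves a single IRR on the combined stream, so larger funds carry more weight than in an average IRR. The Python sketch below illustrates that idea with hypothetical, calendar-aligned net cash flows; it is not CA's implementation, and the helper names are our own.

```python
def npv(rate, flows):
    """Net present value of flows[t] occurring at the end of period t."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(flows))


def irr(flows, lo=-0.99, hi=10.0, tol=1e-12):
    """Bisection IRR; assumes one sign change of NPV on [lo, hi]."""
    f_lo = npv(lo, flows)
    if f_lo * npv(hi, flows) > 0:
        raise ValueError("IRR is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        f_mid = npv(mid, flows)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return (lo + hi) / 2.0


def pooled_irr(funds):
    """Sum the funds' net cash flows period by period, then solve one IRR.

    Each fund is a list of net LP cash flows on a common calendar, with its
    ending NAV already added to the last period (a simplifying assumption).
    """
    horizon = max(len(f) for f in funds)
    pooled = [0.0] * horizon
    for fund in funds:
        for t, cf in enumerate(fund):
            pooled[t] += cf
    return irr(pooled)


fund_a = [-100.0, 20.0, 130.0]   # hypothetical fund flows per period
fund_b = [0.0, -50.0, 60.0]      # a later-starting, smaller fund
print(f"IRR A={irr(fund_a):.4f}  IRR B={irr(fund_b[1:]):.4f}  "
      f"pooled={pooled_irr([fund_a, fund_b]):.4f}")
```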

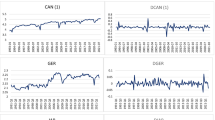

Table 1 gathers the starting dates and number of observations for each region, along with the maximum and minimum IRR values. Descriptive statistics are reported in Table 2. The median quarterly IRR for the overall PE industry was 3.15% over the period from 1981Q2 to 2021Q3Footnote 6 (3.22% in the United States, 3.78% in Europe and 1.79% in Asia/Pacific). The time series plots and their corresponding histograms are displayed in the Appendix. Positive (right) skewness is present in almost all the areas (including the world total), with the exception of the Rest of World, while the four regions (and the world total) all show leptokurtic distributions (kurtosis > 3, indicating a sharper peak and heavier tails than the normal distribution). Shapiro–Wilk tests reject the hypothesis that the pooled IRR comes from a normal distribution (histograms overlaid with the normal density are shown in Appendix: Histograms), and, regarding randomness, runs tests fail to reject randomness only for the returns from Europe.
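The descriptive checks above can be sketched in standard-library Python: moment-based skewness, Pearson (non-excess) kurtosis, so that the normal benchmark is 3 as in the text, and a Wald–Wolfowitz runs test above and below the median with its normal approximation. The toy series are hypothetical, and the exact conventions used in the paper (small-sample corrections, treatment of ties with the median) may differ. A Shapiro–Wilk test is available as `scipy.stats.shapiro` and is omitted here to keep the sketch dependency-free.

```python
import math
import statistics


def _central_moment(x, order):
    m = statistics.fmean(x)
    return sum((v - m) ** order for v in x) / len(x)


def skewness(x):
    """Moment-based skewness; positive values mean a longer right tail."""
    return _central_moment(x, 3) / _central_moment(x, 2) ** 1.5


def kurtosis(x):
    """Pearson kurtosis (not excess): about 3 for normal data, > 3 leptokurtic."""
    return _central_moment(x, 4) / _central_moment(x, 2) ** 2


def runs_test(x):
    """Wald-Wolfowitz runs test around the median; returns (runs, z).

    Observations equal to the median are dropped. |z| > 1.96 rejects
    randomness at the 5% level (normal approximation).
    """
    med = statistics.median(x)
    above = [v > med for v in x if v != med]
    n1 = sum(above)
    n2 = len(above) - n1
    runs = 1 + sum(1 for a, b in zip(above, above[1:]) if a != b)
    n = n1 + n2
    mean_runs = 2.0 * n1 * n2 / n + 1.0
    var_runs = 2.0 * n1 * n2 * (2.0 * n1 * n2 - n) / (n ** 2 * (n - 1))
    return runs, (runs - mean_runs) / math.sqrt(var_runs)


trending = [1, 2, 3, 4, 5, 6, 7, 8]          # too few runs
alternating = [1, 10, 1, 10, 1, 10, 1, 10]   # too many runs
print(skewness(trending), kurtosis(trending))
print(runs_test(trending), runs_test(alternating))
```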

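In the empirical application, we consider that \(x_t\) in (1) can be the errors in a regression model incorporating an intercept and a linear time trend,

\[y_t = \beta_0 + \beta_1 t + x_t, \quad t = 1, 2, \ldots\]

where \(\beta_0\) and \(\beta_1\) denote the unknown coefficients of these deterministic terms. In other words, the estimated model is:

\[y_t = \beta_0 + \beta_1 t + x_t, \quad (1-B)^{d} x_t = u_t, \quad t = 1, 2, \ldots \qquad (3)\]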
and we report the estimates of the differencing parameter d under three different scenarios: i) first, we consider the case with no deterministic components, i.e., \(\beta_0\) and \(\beta_1\) are both set equal to 0 a priori in Eq. (3); ii) then, we include only a constant, so \(\beta_1 = 0\); and iii) finally, both coefficients \(\beta_0\) and \(\beta_1\) are estimated freely from the data along with d. In addition, we make different assumptions about the error term \(u_t\) in (3). In Table 3, we assume \(u_t\) is a white noise process; in Table 4, \(u_t\) is allowed to be autocorrelated, but instead of imposing a given parametric model we use the exponential spectral approach of Bloomfield (1973), which is non-parametric in the sense that no functional form is imposed on \(u_t\); only its spectral density function is specified, and (in logs) it closely resembles the one produced by AR structures. Finally, in Table 5, and given the quarterly structure of the data, a seasonal AR(1) process is adopted.
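To see why Bloomfield's model can stand in for AR structures, recall that it specifies the spectral density directly as \(f(\lambda;\tau) = \frac{\sigma^2}{2\pi}\exp\big(2\sum_{r=1}^{m}\tau_r\cos(\lambda r)\big)\), so its logarithm is linear in the \(\tau_r\). For an AR(1) with coefficient \(\phi\), \(-\log|1-\phi e^{i\lambda}|^2 = 2\sum_{r\ge 1}(\phi^r/r)\cos(\lambda r)\), so choosing \(\tau_r = \phi^r/r\) reproduces the AR(1) log-spectrum as m grows. The Python sketch below checks this numerically and also evaluates the spectrum of a quarterly seasonal AR(1), \(u_t = \phi u_{t-4} + \varepsilon_t\), which is how we read the seasonal specification; the parameter values and function names are illustrative assumptions.

```python
import cmath
import math


def bloomfield_log_spectrum(lam, tau, sigma2=1.0):
    """log f(lam) = log(sigma2 / 2pi) + 2 * sum_{r=1}^{m} tau_r cos(r lam)."""
    return math.log(sigma2 / (2.0 * math.pi)) + 2.0 * sum(
        t_r * math.cos(r * lam) for r, t_r in enumerate(tau, start=1)
    )


def ar_log_spectrum(lam, phi, sigma2=1.0, lag=1):
    """log spectrum of u_t = phi * u_{t-lag} + eps_t.

    lag=1 gives an AR(1); lag=4 a seasonal AR(1) for quarterly data.
    """
    transfer = abs(1.0 - phi * cmath.exp(1j * lag * lam)) ** 2
    return math.log(sigma2 / (2.0 * math.pi)) - math.log(transfer)


phi, m = 0.5, 60
tau = [phi ** r / r for r in range(1, m + 1)]   # tau_r = phi^r / r
grid = [math.pi * k / 100 for k in range(1, 101)]
max_gap = max(abs(bloomfield_log_spectrum(lam, tau) - ar_log_spectrum(lam, phi))
              for lam in grid)
print(f"max |log f_Bloomfield - log f_AR(1)| = {max_gap:.2e}")

# A seasonal AR(1) with phi > 0 puts extra mass at the seasonal frequency pi/2.
print(ar_log_spectrum(math.pi / 2, phi, lag=4) > ar_log_spectrum(math.pi / 4, phi, lag=4))
```

In estimation, a small m (one or two terms) is typically enough to mimic low-order AR behaviour without committing to a specific ARMA form.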

Starting with the results based on white noise errors in Table 3, the first thing we observe is that the time trend is not required in any case, and the intercept is significant only for Europe. More importantly, focusing on the degree of integration, the estimates of d range from −0.08 in Europe to 0.43 in the USA. The null hypothesis of short memory, or I(0) behavior, cannot be rejected for Europe, but it is rejected in the remaining cases in favor of long memory (d > 0) or fractional integration, with estimates of 0.26 for Asia–Pacific, 0.35 for Rest of the World and 0.41 for Total, the latter clearly influenced by the large value obtained for the USA. Note that only for Europe and Asia/Pacific are the estimates of d within the stationary region, since the upper bounds of their confidence intervals are below 0.5. For the remaining three series (United States, Rest of the World and Total), the confidence bands include values both below and above 0.5.
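The reading of the confidence intervals used in this paragraph, and in the ranges discussed in the Introduction, can be written as a small rule: I(0) is not rejected if the interval contains 0, the series is stationary if the whole interval lies below 0.5, and mean reversion holds if the upper bound is below 1. The Python sketch below encodes that rule; the intervals in the example are hypothetical, not those reported in Table 3, and the function name is our own.

```python
def read_interval(lower, upper):
    """Classify a confidence interval [lower, upper] for d."""
    if lower <= 0.0 <= upper:
        memory = "I(0) not rejected"
    elif lower > 0.0:
        memory = "long memory (d > 0)"
    else:
        memory = "anti-persistence (d < 0)"

    if upper < 0.5:
        stationarity = "stationary"
    elif lower >= 0.5:
        stationarity = "nonstationary"
    else:
        stationarity = "interval straddles 0.5"

    reverting = upper < 1.0   # mean reversion: d significantly below 1
    return memory, stationarity, reverting


# Hypothetical intervals, for illustration only.
examples = {"A": (-0.15, 0.02), "B": (0.18, 0.36), "C": (0.33, 0.55)}
for name, (lo, hi) in examples.items():
    print(name, read_interval(lo, hi))
```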

If we allow for autocorrelation, first using the exponential spectral model of Bloomfield (1973) (Table 4), we notice that the time trend coefficient is now statistically significant for Europe and Asia–Pacific, negative in the former case and positive in the latter (see the lower part of the table). With respect to the order of integration, the estimate is negative for Europe and Asia–Pacific, where the I(0) hypothesis cannot be rejected, as is also the case for the Rest of the World (d = 0.03). However, for Total and the USA, the estimate of d is significantly positive, supporting once more the hypothesis of long memory (d = 0.28 for Total and 0.30 for the USA). Note that for the United States and Total, the confidence intervals are very wide, including values of d outside the stationary region (d ≥ 0.5). Finally, if seasonal autoregressions are permitted (Table 5), the results are very similar to those based on white noise errors (Table 3): there is no evidence of time trends, I(0) behavior is found for Europe, and long memory (d > 0) is found in all the other cases, especially for the US data.

As a robustness check, we also use two widespread semiparametric estimation methods, the log-periodogram estimator (Geweke and Porter-Hudak 1983) and the local Whittle estimator of Künsch (1987) (Table 6). In both cases a bandwidth parameter, an exponent between 0 and 1 that determines the number of Fourier frequencies used, must be specified; for this we follow Weijie et al. (2021), who propose the interval (0.58, 0.67) for the GPH estimator for a sequence length of 100, and (0.59, 0.68) when the length is 300. The results shown in Table 6 are consistent with those reported in Tables 3, 4 and 5, with evidence of long memory in all cases except Europe. Using a parametric approach based on Haslett and Raftery (1989), the results are once more consistent with the previous ones, and long memory is found in all cases except Europe (see Table 7).
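Both semiparametric estimators use only the first \(m = \lfloor T^{\alpha}\rfloor\) Fourier frequencies, where α is the bandwidth exponent discussed above. The Python sketch below implements textbook forms under our own assumptions: GPH as the OLS slope of \(\log I(\lambda_j)\) on \(-\log(4\sin^2(\lambda_j/2))\), and the local Whittle estimate as the grid minimiser of \(R(d) = \log\big(\frac{1}{m}\sum_j \lambda_j^{2d} I(\lambda_j)\big) - \frac{2d}{m}\sum_j \log\lambda_j\). It is applied to simulated data rather than the CA series; the default α = 0.6, the search grid and the function names are illustrative choices.

```python
import cmath
import math
import random


def periodogram(x, m):
    """(lambda_j, I(lambda_j)) for the first m Fourier frequencies."""
    T = len(x)
    mean = sum(x) / T
    out = []
    for j in range(1, m + 1):
        lam = 2.0 * math.pi * j / T
        s = sum((v - mean) * cmath.exp(1j * lam * t) for t, v in enumerate(x))
        out.append((lam, abs(s) ** 2 / (2.0 * math.pi * T)))
    return out


def gph(x, alpha=0.6):
    """Log-periodogram (GPH) estimate of d with bandwidth m = T**alpha."""
    pg = periodogram(x, int(len(x) ** alpha))
    xs = [-math.log(4.0 * math.sin(lam / 2.0) ** 2) for lam, _ in pg]
    ys = [math.log(I) for _, I in pg]
    x_bar, y_bar = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((a - x_bar) * (b - y_bar) for a, b in zip(xs, ys))
    return num / sum((a - x_bar) ** 2 for a in xs)


def local_whittle(x, alpha=0.6, grid_step=0.001):
    """Local Whittle estimate of d: grid minimiser of R(d) on [-0.49, 0.99]."""
    pg = periodogram(x, int(len(x) ** alpha))
    m = len(pg)
    mean_log_lam = sum(math.log(lam) for lam, _ in pg) / m

    def objective(d):
        g = sum(lam ** (2.0 * d) * I for lam, I in pg) / m
        return math.log(g) - 2.0 * d * mean_log_lam

    grid = [-0.49 + grid_step * k for k in range(int(1.48 / grid_step) + 1)]
    return min(grid, key=objective)


def frac_integrate(eps, d):
    """(1 - B)^(-d) eps with the expansion truncated at the sample start."""
    w = [1.0]
    for k in range(1, len(eps)):
        w.append(w[-1] * (k - 1 + d) / k)
    return [sum(w[k] * eps[t - k] for k in range(t + 1)) for t in range(len(eps))]


random.seed(1)
noise = [random.gauss(0.0, 1.0) for _ in range(512)]
long_mem = frac_integrate(noise, 0.4)
print(f"white noise: GPH={gph(noise):.2f}  LW={local_whittle(noise):.2f}")
print(f"I(0.4):      GPH={gph(long_mem):.2f}  LW={local_whittle(long_mem):.2f}")
```

A larger α uses more frequencies and lowers variance, at the cost of more contamination from short-run dynamics, which is why the bandwidth choice matters.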

Impulse responses for each region cannot be properly calculated, since there is no explicit model in the case of autocorrelated errors (or when the estimates are obtained semiparametrically). Nevertheless, as an approximation, we have computed the half-lifeFootnote 7 of shocks under the assumption of an AR(1) structure. Results are displayed in Table 8. They are consistent with our previous results, since half-lives are smaller in the geographical areas with a lower degree of persistence (0.99 quarters for the US versus 0.17 for Europe).
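Under the AR(1) approximation, a shock's effect after h periods is \(\rho^h\), so the half-life solves \(\rho^h = 1/2\), i.e. \(h = \ln(0.5)/\ln\rho\). For contrast, the impulse responses of a pure I(d) process, \(\psi_k = \psi_{k-1}(k-1+d)/k\), decay hyperbolically rather than geometrically, which is why the AR(1) half-life is only an approximation for fractionally integrated series. The Python sketch below computes both; the ρ and d values are illustrative, not the Table 8 estimates, and the function names are our own.

```python
import math


def ar1_half_life(rho):
    """Periods h solving rho**h = 0.5 for an AR(1) with 0 < rho < 1."""
    if not 0.0 < rho < 1.0:
        raise ValueError("half-life requires 0 < rho < 1")
    return math.log(0.5) / math.log(rho)


def fi_impulse_response(d, horizon):
    """psi_0..psi_horizon of x_t = (1 - B)^(-d) u_t with white-noise u_t."""
    psi = [1.0]
    for k in range(1, horizon + 1):
        psi.append(psi[-1] * (k - 1 + d) / k)
    return psi


print(f"AR(1) half-life, rho=0.5: {ar1_half_life(0.5):.2f} periods")
irf = fi_impulse_response(0.4, 40)
print(f"I(0.4) response after 1, 10, 40 periods: "
      f"{irf[1]:.3f}, {irf[10]:.3f}, {irf[40]:.3f}")
```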

5 Conclusions

Our results based on fractional integration confirm stationarity in the PE returns measured by the "Pooled IRR" series, while showing evidence of long memory in all series except Europe. The USA displays the highest degree of persistence, followed by the Rest of the World and Asia, while the order of integration for Europe is close to 0 under all the methods employed.

The main finding of this paper supports the idea that shocks will have long-lasting impacts in all regions except Europe. This is most remarkable in the case of the US, where it may be a competitive advantage when shocks are ignited by innovation or, on the other hand, entail lingering adverse economic effects in the face of supply/demand issues. To dig into the causes, the same analysis should be carried out by kind of asset, which could reveal a different composition of investment by geographical area. Furthermore, a long memory process casts doubt on the independence of returns and would support the idea of GPs smoothing the reported profits, yet it might also be the aftermath of better performance, as mentioned by Kaplan and Schoar (2005). Also, the short memory of PE returns in Europe (in the sense of a lack of strong persistence) raises the question of benchmarking its performance against the United States (despite the average IRR being somewhat higher in Europe, its standard deviation is also higher, which leads to a lower mean-to-standard-deviation ratio).

In this regard, industry practices should evolve towards greater transparency, without ruling out making some of them legally binding. Firstly, this industry lags behind some other financial sectors in IT. Greater adoption of digitization would enable better statistical handling of data, including what-if analyses. Secondly, the attempts by supervisory bodies (such as the SECFootnote 8) to broaden disclosure rules should be adopted. This twofold objective could build on the reporting already devoted to Environmental, Social, and Governance (ESG) standards.

On the other hand, this study could gain granularity if applied to the individual factors shaping the excess returns of PE (namely, the illiquidity premium, management by the GP, leverage, and risk adjustment). Moreover, the fractional integration methods employed to analyze the performance of public stock markets can be extended to private equity indexes, particularly when these are homogenized in terms of "public market equivalent" (Long and Nickels 1996). From a methodological viewpoint, the model can be extended to allow for non-linear structures including, for example, Chebyshev polynomials in time (Cuestas and Gil-Alana 2016), Fourier functions (Gil-Alana and Yaya 2021) or even neural networks (Yaya et al. 2021), all within the context of fractional integration. These lines of research will be developed in future papers.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

The internal rate of return on an investment or project is the "annualized effective compounded return rate" or rate of return that sets the net present value of all cash flows (both positive and negative) from the investment equal to zero. Its formula is: \(0=NPV=\sum_{t=1}^{T}\frac{{C}_{t}}{{(1+IRR)}^{t}}-{C}_{0}\)

According to PitchBook, a venture capital, private equity and mergers & acquisitions database, "Public Market Equivalent" is a metric designed to compare private capital fund performance with public indices. Essentially, the metric adapts public market returns into an IRR-like metric that accounts for irregular and fluctuating cash flows.

In September of 2006, the U.S. Financial Accounting Standards Board adopted Statement of Financial Accounting Standards 157 (SFAS 157) which effectively changed the NAV reporting standard for PE funds. Fair value was defined as “the price that would be received to sell an asset or paid to transfer a liability in an orderly transaction between market participants at the measurement date.” This Statement requires consideration of the exit price paid (if liability) or received (if asset) in a hypothetical transaction in an orderly market (i.e., not a forced liquidation or sold under duress). Furthermore, the Statement also introduces two more concepts to the definition of fair value – the Principal (or Most Advantageous) Market and Highest and Best Use.

However, those managers are unlikely to raise a subsequent fund, suggesting that investors see through the manipulation.

Another extensively used metric is TVPI (Total Value to Paid In): the ratio of the current value of the remaining investments within a fund plus the total value of all distributions to date, relative to the total amount of capital paid into the fund to date. Hence, a value above 1.0 means the investment has gained value. It is a fairly good performance indicator while the fund's life has not yet ended; however, it ignores the time value of money.

Over the same period, the capitalization according to the data gathered by CA soared from 178 million dollars to 6.5 trillion dollars, representing a compound annual growth rate of 29.8%.

The half-life is defined as the number of periods required for the response of a time series to be halved after a shock.

The U.S. Securities and Exchange Commission (SEC) is an independent agency of the United States federal government. The primary purpose of the SEC is to enforce the law against market manipulation. To achieve its mandate, the SEC enforces the statutory requirement that public companies and other regulated companies submit quarterly and annual reports, as well as other periodic reports. In addition, company executives must provide the so-called "Management's Discussion and Analysis" (MD&A), which reviews the previous year of operations and outlines the upcoming year.

References

Ang A, Chen B, Goetzmann W, Phalippou L (2018) Estimating private equity returns from limited partner cash flows. J Financ 73(4):1751–1783. https://doi.org/10.1111/jofi.12688

Bloomfield P (1973) An exponential model for the spectrum of a scalar time series. Biometrika 60(2):217–226. https://doi.org/10.2307/2334533

Brown GW, Gredil O, Kaplan SN (2016) Do private equity funds game returns? Fama-Miller working paper. University of North Carolina, Tulane University, and University of Chicago. https://doi.org/10.2139/ssrn.2271690

Cochrane J (2005) The risk and return of venture capital. J Financ Econ 75:3–52. https://doi.org/10.1016/j.jfineco.2004.03.006

Cuestas JC, Gil-Alana LA (2016) A nonlinear approach with long range dependence based on Chebyshev polynomials. Stud Nonlinear Dyn Econom 23:445–468

Dickey DA, Fuller WA (1979) Distribution of the estimators for autoregressive time series with a unit root. J Am Stat Assoc 74(366):427–431. https://doi.org/10.2307/2286348

Diebold FX, Rudebusch GD (1991) On the power of Dickey-Fuller tests against fractional alternatives. Econ Lett 35:155–160. https://doi.org/10.1016/0165-1765(91)90163-F

Elliott G, Rothenberg TJ, Stock JH (1996) Efficient tests for an autoregressive unit root. Econometrica 64(4):813–836. https://doi.org/10.2307/2171846

Geltner DM (1991) Smoothing in appraisal-based returns. J Real Estate Financ Econ 4(3):327–345. https://doi.org/10.1007/BF00161933

Geweke J, Porter-Hudak S (1983) The estimation and application of long memory time series models. J Time Ser Anal 4:221–238. https://doi.org/10.1111/j.1467-9892.1983.tb00371.x

Gil-Alana LA, Yaya O (2021) Testing fractional unit roots with non-linear smooth break approximations using Fourier functions. J Appl Stat 48(13–15):2542–2559. https://doi.org/10.1080/02664763.2020.1757047

Gompers P, Lerner J (2000) Money chasing deals? The impact of fund inflows on private equity valuations. J Financ Econ 55(2):281–325. https://doi.org/10.1016/S0304-405X(99)00052-5

Gompers PA, Kovner A, Lerner J, Scharfstein D (2005) Venture capital investment cycles: the impact of public markets. NBER Working Paper No. 11385. http://www.nber.org/papers/w11385

Harris RS, Jenkinson T, Kaplan SN, Stucke R (2020) Has persistence persisted in private equity? Evidence from buyout and venture capital funds. NBER Working Paper No. w28109. National Bureau of Economic Research

Haslett J, Raftery AE (1989) Space-time modelling with long-memory dependence: assessing Ireland’s wind power resource. J R Stat Soc Ser C (Appl Stat) 38(1):1–50. https://doi.org/10.2307/2347679

Hassler U, Wolters J (1994) On the power of unit root tests against fractional alternatives. Econ Lett 45(1):1–5. https://doi.org/10.1016/0165-1765(94)90049-3

Kaplan SN, Schoar A (2005) Private equity performance: Returns, persistence, and capital flows. J Financ 60:1791–1823. https://doi.org/10.1111/j.1540-6261.2005.00780.x

Korteweg A, Sorensen M (2017) Skill and luck in private equity performance. J Financ Econ 124(3):535–562. https://doi.org/10.1016/j.jfineco.2017.03.006

Künsch HR (1987) Statistical aspects of self-similar processes. Paper presented at First World Congress of the Bernoulli Society, Tashkent, Uzbekistan, September 8–14, pp 67–74

Kwiatkowski D, Phillips PCB, Schmidt P, Shin Y (1992) Testing the null hypothesis of stationarity against the alternative of a unit root: How sure are we that economic time series have a unit root? J Econom 54:159–178. https://doi.org/10.1016/0304-4076(92)90104-Y

Lee D, Schmidt P (1996) On the power of the KPSS test of stationarity against fractionally-integrated alternatives. J Econom 73(1):285–302. https://doi.org/10.1016/0304-4076(95)01741-0

Ljungqvist A, Richardson M (2003) The cash flow, return and risk characteristics of private equity. SSRN eLibrary 9454. https://doi.org/10.2139/ssrn.369600

Long AM III, Nickels CJ (1996) A private investment benchmark. The University of Texas System, AIMR Conference on Venture Capital Investing

Ng S, Perron P (2001) Lag length selection and the construction of unit root tests with good size and power. Econometrica 69:1519–1554. http://www.jstor.org/stable/2692266

Phalippou L, Gottschalg O (2009) The performance of private equity funds. Rev Financ Stud 22(4):1747–1776. https://doi.org/10.1093/rfs/hhn014

Phillips PCB, Perron P (1988) Testing for a unit root in time series regression. Biometrika 75(2):335–346. https://doi.org/10.2307/2336182

Pitman E (1936) Sufficient statistics and intrinsic accuracy. Math Proc Cambridge Philos Soc 32(4):567–579. https://doi.org/10.1017/S0305004100019307

Robinson PM (1994) Efficient tests of nonstationary hypotheses. J Am Stat Assoc 89(428):1420–1437. https://doi.org/10.2307/2291004

Ross SA, Zisler RC (1991) Risk and return in real estate. J Real Estate Financ Econ 4(2):175–190. https://doi.org/10.1007/BF00173123

Shimotsu K, Phillips PCB (2005) Exact local Whittle estimation of fractional integration. Ann Stat 33:1890–1933. http://www.jstor.org/stable/40664474

Shimotsu K, Phillips PCB (2006) Local Whittle estimation of fractional integration and some of its variants. J Econom 130:209–233. https://doi.org/10.1016/j.jeconom.2004.09.014

Sowell F (1992) Maximum likelihood estimation of stationary univariate fractionally integrated time series models. J Econom 53:165–188. https://doi.org/10.1016/0304-4076(92)90084-5

Steger D (2017) The returns of private equity funds: a Swiss perspective. J Private Equity 20(2):15–27. https://doi.org/10.3905/jpe.2017.20.2.015

Weijie Z, Huihui T, Feifei W, Weiqiang P (2021) The optimal bandwidth parameter selection in GPH estimation. J Math 2021. https://doi.org/10.1155/2021/2876000

Yaya OS, Ogbonna AE, Furuoka F, Gil-Alana LA (2021) A new unit root test for unemployment hysteresis based on the autoregressive neural network. Oxford Bull Econ Stat 83(4):960–981. https://doi.org/10.1111/obes.12422

Acknowledgements

Prof. Luis A. Gil-Alana gratefully acknowledges financial support from the MINEIC-AEI-FEDER PID2020-113691RB-I00 project from ‘Ministerio de Economía, Industria y Competitividad’ (MINEIC), ‘Agencia Estatal de Investigación’ (AEI) Spain and ‘Fondo Europeo de Desarrollo Regional’ (FEDER), and also from Internal Projects of the Universidad Francisco de Vitoria.

Comments from the Editor and three anonymous reviewers are gratefully acknowledged.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Ethics declarations

Conflict of interest

Authors state there is no conflict of interest with the publication of the present manuscript.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

1.1 Quarterly weighted IRRs by geographical area

Time Series

Histograms

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gil-Alana, L.A., Puertolas-Montanes, F. Profitability of private equity: mean reversion and transitory shocks. J Econ Finan 47, 458–471 (2023). https://doi.org/10.1007/s12197-022-09606-7
