Abstract

This work focuses on the analytical and computational study of integro-differential equations, with a particular application to modeling avoidance learning processes. First, we establish the existence of a unique solution of the model using methods from functional analysis and fixed-point theory. We then compute numerical solutions by Picard iteration and examine the stability of solutions under small perturbations. In addition, we illustrate the practical application of these techniques with two examples that analyze behavioral responses through numerical approximations. Finally, we examine the efficacy of the proposed ordinary differential equations (ODEs) for studying the avoidance learning behavior of animals, and we investigate their convergence and error behavior using multiple numerical techniques. This combination of theoretical and practical analysis contributes to applied mathematics and provides useful insights for behavioral science research.

1 Introduction and preliminary concepts

Differential equations lie at the intersection of mathematics, the natural sciences, and engineering, and they provide a robust framework for describing complex phenomena and systems. They are instrumental in modeling dynamic processes ranging from the motion of celestial bodies [1] to the intricate dynamics of biochemical reactions [2, 3]. They also play an essential role in predicting the future behavior of such systems [4, 5], which advances theoretical inquiry and supports the practical design and improvement of engineering solutions [6,7,8]. This capacity to model and simulate complex dynamics across diverse domains makes differential equations a cornerstone of scientific knowledge and engineering innovation (see [9,10,11]), and their predictive power underlines their value for interdisciplinary research and development.

Brady and Marmasse introduced a learning model based on “shock” terminology [12], in which a fisher experiences a “shock” from poor catch rates. Learning then proceeds through aversion and information gain and is described by the cumulative distribution of shocks \(\hbar (t)\) over time t. The model does not presume a specific form for \(\hbar (t)\) but derives it from the rate of shocks \(\vartheta (t)\), with learning reducing \(\vartheta (t)\). The total number of shocks \(\mathcal {N}(t)\) up to time t combines the shocks attributable to initial knowledge, \(\mathcal {N} \left( t_0 \right) \), and those acquired through learning, \(\int _{t_0}^t \vartheta (\eta ) \textrm{d} \eta \). This is formalized as

$$\begin{aligned} \mathcal {N}(t) = \mathcal {N} \left( t_0 \right) + \int _{t_0}^t \vartheta (\eta ) \, \textrm{d} \eta . \end{aligned}$$(1.1)

Building on this foundational work, which uses shock terminology to model learning through the rate of shocks \(\vartheta (t)\), many researchers have extended the discussion by using systems of differential equations (DEs) to explore the spatiotemporal dynamics of predator–prey relationships in simulated aquatic ecosystems (for details, see [13,14,15,16]). These advances clarify predator-avoidance strategies within prey schools that resemble behaviors observed in natural aquatic ecosystems, and they thereby support the accuracy and applicability of such models.
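The decomposition (1.1) of the total shock count can be evaluated numerically once a rate \(\vartheta \) is available on a time grid. Below is a minimal NumPy sketch, assuming a sampled rate and the composite trapezoidal rule. The function `cumulative_shocks` and the decaying rate used in the demo are hypothetical illustrations, not taken from [12].

```python
import numpy as np

def cumulative_shocks(t, rate, n0):
    """Approximate N(t_i) = N(t_0) + int_{t_0}^{t_i} rate(eta) d(eta) on a grid.

    t    : increasing grid; t[0] plays the role of t_0
    rate : vectorized callable giving the shock rate at the grid points
    n0   : N(t_0), the shocks attributable to initial knowledge
    """
    t = np.asarray(t, dtype=float)
    # Broadcast so that a constant-valued rate also works.
    r = np.broadcast_to(np.asarray(rate(t), dtype=float), t.shape)
    # Composite trapezoidal rule, accumulated cell by cell.
    increments = 0.5 * (r[1:] + r[:-1]) * np.diff(t)
    return n0 + np.concatenate(([0.0], np.cumsum(increments)))

# Hypothetical rate that decays as learning proceeds (illustration only).
t = np.linspace(0.0, 10.0, 1001)
N = cumulative_shocks(t, lambda s: 2.0 * np.exp(-0.5 * s), n0=5.0)
```

For this illustrative rate the exact value is \(\mathcal {N}(10) = 5 + 4(1 - e^{-5})\). The trapezoidal estimate agrees with it to within about \(10^{-5}\) on this grid.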

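This work examines avoidance behavior, a crucial strategy evident in both natural and artificial systems, through mathematical modeling and numerical computation. To explore this phenomenon, we consider the following differential equation:

$$\begin{aligned} \vartheta '(t) = \psi \left( t, \vartheta (t), \int _0^t \phi (t, \eta , \vartheta (\eta )) \, \textrm{d} \eta \right) , \end{aligned}$$(1.2)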

where \(t \in [0, \mathcal {T}]\) for some \( \mathcal {T} >0\), \(\vartheta :[0, \mathcal {T}] \rightarrow \mathfrak {R}\), \(\psi : [0, \mathcal {T}] \times \mathfrak {R} \times \mathfrak {R} \rightarrow \mathfrak {R}\) and \(\phi : [0, \mathcal {T}] \times [0, \mathcal {T}] \times \mathfrak {R} \rightarrow \mathfrak {R}\).

The manuscript is organized as follows. We first study the differential equation (1.2), whose integral term captures memory effects in the dynamics. We then develop a theoretical framework for such equations, combining analytical and computational methods to establish the existence, uniqueness, stability, and continuous dependence of solutions under diverse conditions. Building on this framework, we apply our methods to model avoidance behavior and demonstrate their efficacy through computational simulations. The manuscript concludes with a discussion of the broader implications of our findings for understanding avoidance dynamics in biological and artificial systems, and it proposes directions for future research.

In the sequel, the following well-known results will be required.

Definition 1.1

([17]) Let \((\mathbb {Y},d)\) be a metric space. An operator \(\mathcal {A}: \mathbb {Y} \rightarrow \mathbb {Y}\) is called:

1. a \(k\)-Lipschitzian mapping if there exists \(k \ge 0\) such that

$$\begin{aligned} d ( \mathcal {A} a_{1}, \mathcal {A} a_{2} ) \le k \, d ( a_{1}, a_{2} ) \quad \text {for all } a_{1}, a_{2} \in \mathbb {Y}; \end{aligned}$$(1.3)

2. a \(\tilde{k}\)-contraction mapping if \(\mathcal {A}\) is \(\tilde{k}\)-Lipschitzian with \(\tilde{k} \in [0,1)\);

3. a nonexpansive mapping if \(\mathcal {A}\) is 1-Lipschitzian;

4. a contractive mapping if

$$\begin{aligned} d ( \mathcal {A} a_{1}, \mathcal {A} a_{2} ) < d( a_{1}, a_{2} ) \quad \text {for all } a_{1}, a_{2} \in \mathbb {Y} \text { with } a_{1} \ne a_{2}. \end{aligned}$$(1.4)
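The difference between conditions 2 and 4 of Definition 1.1 matters for the fixed-point theorem below. The following sketch samples the ratio \(d(\mathcal {A}x, \mathcal {A}y)/d(x,y)\) for three illustrative maps on \(\mathbb {Y} = [1, \infty )\) with the usual metric. The maps are standard textbook examples chosen for this illustration, not taken from the paper. The helper `sampled_ratios` is hypothetical, and a sampled maximum is only a lower bound for the Lipschitz constant, not a proof.

```python
import numpy as np

def sampled_ratios(A, points):
    """Ratios |A(x) - A(y)| / |x - y| over all pairs of distinct sample points.

    The maximum ratio is only a lower bound for the Lipschitz constant of A.
    """
    x, y = np.meshgrid(points, points)
    mask = x != y
    return np.abs(A(x[mask]) - A(y[mask])) / np.abs(x[mask] - y[mask])

# Samples from Y = [1, inf) with the metric d(a, b) = |a - b|.
pts = np.linspace(1.0, 200.0, 400)

contraction = lambda x: 0.5 * x + 1.0    # 1/2-contraction, fixed point 2
nonexpansive = lambda x: x + 1.0         # 1-Lipschitz (an isometry), no fixed point
contractive = lambda x: x + 1.0 / x      # contractive, but not a contraction

for name, A in [("contraction", contraction),
                ("nonexpansive", nonexpansive),
                ("contractive", contractive)]:
    print(name, sampled_ratios(A, pts).max())
```

For \(\mathcal {A}x = x + 1/x\), the ratio equals \(1 - 1/(xy)\). This is below 1 for every pair, but it approaches 1 as \(x, y\) grow, so no single \(\tilde{k} < 1\) works. Moreover, \(\mathcal {A}x - x = 1/x > 0\), so the map has no fixed point. This is why a theorem of Banach type needs condition 2 rather than condition 4 on a non-compact space.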

With these definitions in place, we recall the key result from fixed-point theory on which our existence and convergence analysis relies.

Theorem 1.1

([18]) Let \((\mathbb {Y},d )\) be a complete metric space, and let \(\mathcal {A}: \mathbb {Y} \rightarrow \mathbb {Y}\) satisfy condition (2) of Definition 1.1, i.e., let \(\mathcal {A}\) be a \(\tilde{k}\)-contraction. Then \(\mathcal {A}\) has a unique fixed point \(\tilde{y}\), and for every \(y\in \mathbb {Y}\) the iterates of \(\mathcal {A}\) converge to \(\tilde{y}\), i.e.,

$$\begin{aligned} \lim _{n \rightarrow \infty } \mathcal {A}^{n} y = \tilde{y}. \end{aligned}$$(1.5)

2 Theoretical foundations and analytical investigations

Consider the space \(\mathcal {X} = \mathcal {C}^1([0, \mathcal {T}], \mathfrak {R})\) of functions that are continuously differentiable on \([0, \mathcal {T}]\) with values in \(\mathfrak {R}\), endowed with the norm

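Define an operator \(\mathcal {Z}: \mathcal {X} \rightarrow \mathcal {X}\) that reflects the structure of the ODE (1.2). For a given function \(\vartheta \in \mathcal {X}\), let

$$\begin{aligned} (\mathcal {Z} \vartheta )(t) = \vartheta _{0} + \int _0^t \psi \left( s, \vartheta (s), \int _0^s \phi (s, \eta , \vartheta (\eta )) \, \textrm{d} \eta \right) \textrm{d}s, \quad t \in [0, \mathcal {T}], \end{aligned}$$(2.2)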

where \(\vartheta _{0} = \vartheta (0)\) is the initial condition.
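To show how \(\mathcal {Z}\) acts in practice, here is a minimal NumPy sketch of the operator on a uniform grid, together with the Picard iteration \(\vartheta _{n+1} = \mathcal {Z}\vartheta _n\). It assumes that (1.2) has the form \(\vartheta '(t) = \psi (t, \vartheta (t), \int _0^t \phi (t, \eta , \vartheta (\eta ))\,\textrm{d}\eta )\). Both integrals are replaced by the trapezoidal rule, which is our choice of quadrature. The functions `apply_Z` and `picard` and the test problem are hypothetical illustrations, not the authors' code.

```python
import numpy as np

def trapezoid(y, x):
    """Composite trapezoidal rule for samples y on grid x (0 for a single point)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

def apply_Z(theta, t, theta0, psi, phi):
    """One application of the operator Z to grid values theta on the grid t.

    Assumes (1.2) reads theta'(t) = psi(t, theta(t), int_0^t phi(t, eta, theta(eta)) d eta).
    """
    # Inner (memory) integral int_0^{t_i} phi(t_i, eta, theta(eta)) d eta at each t_i.
    memory = np.array([trapezoid(phi(ti, t[: i + 1], theta[: i + 1]), t[: i + 1])
                       for i, ti in enumerate(t)])
    rate = psi(t, theta, memory)
    # Outer integral from 0 to t_i, accumulated cell by cell.
    outer = np.concatenate(([0.0], np.cumsum(0.5 * (rate[1:] + rate[:-1]) * np.diff(t))))
    return theta0 + outer

def picard(t, theta0, psi, phi, tol=1e-10, max_iter=100):
    """Iterate theta_{n+1} = Z theta_n from the constant guess theta_0(t) = theta0.

    Stops when the sup-norm step ||theta_{n+1} - theta_n|| drops below tol.
    """
    theta = np.full(t.shape, float(theta0))
    for n in range(1, max_iter + 1):
        new = apply_Z(theta, t, theta0, psi, phi)
        step = np.max(np.abs(new - theta))
        theta = new
        if step < tol:
            break
    return theta, n

# Hypothetical test: theta' = int_0^t theta, theta(0) = 1, whose solution is cosh(t).
t = np.linspace(0.0, 1.0, 201)
theta, n_iter = picard(t, 1.0,
                       psi=lambda s, th, mem: mem,
                       phi=lambda s, eta, xi: xi)
```

The test equation \(\vartheta '(t) = \int _0^t \vartheta (\eta )\,\textrm{d}\eta \) with \(\vartheta (0) = 1\) is equivalent to \(\vartheta '' = \vartheta \), \(\vartheta '(0) = 0\). Its exact solution \(\cosh t\) therefore provides a reference for error analysis of the iterates.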

In the sequel, we need the following assumptions:

- \((\mathcal {A}_{1})\): Assume that \(\vartheta (t): [0, \mathcal {T}] \rightarrow \mathfrak {R}\) and its derivative \(\vartheta '(t)\) are bounded on \([0, \mathcal {T}]\), i.e., there exist constants \(\tau _{1}, \tau _{2} > 0\) such that \(|\vartheta (t)| \le \tau _{1}\) and \(|\vartheta '(t)| \le \tau _{2}\) for all \(t \in [0, \mathcal {T}]\).

- \((\mathcal {A}_{2})\): Assume that \(\psi (t, \mu , \chi )\) is Lipschitz continuous in \(\mu \) and \(\chi \), uniformly in t, i.e., there exists a constant \(\ell _\psi > 0\) such that for all \(t \in [0, \mathcal {T}]\) and \(\mu _{1}, \mu _{2}, \chi _1, \chi _2 \in \mathfrak {R}\),

$$\begin{aligned} \left| \psi \left( t, \mu _{1}, \chi _1\right) -\psi \left( t, \mu _{2}, \chi _2\right) \right| \le \ell _\psi |\mu _{1}-\mu _{2}|+\ell _\psi \left| \chi _1-\chi _2\right| . \end{aligned}$$(2.3)

- \((\mathcal {A}_{3})\): Assume that, for each fixed \(\nu \in \mathfrak {R}\) and each \(\xi \in \mathcal {X}\), the integral \(\int _0^t \phi (t, \nu , \xi (s)) \, \textrm{d}s\) is bounded on \([0, \mathcal {T}]\). Specifically, there exists a constant \(\tau _{3} > 0\) such that

$$\begin{aligned} \left| \int _0^t \phi (t, \nu , \xi (s)) \, \textrm{d}s \right| \le \tau _{3} \end{aligned}$$(2.4)

for all \(t \in [0, \mathcal {T}]\) and all \(\nu \in \mathfrak {R}\).

- \((\mathcal {A}_{4})\): Assume that \(\phi (t, \nu , \xi )\) is Lipschitz continuous in \(\xi \), i.e., there exists a constant \(\ell _\phi > 0\) such that for all \(t \in [0, \mathcal {T}]\), all \(\nu \), and all \(\xi _{1}, \xi _{2} \in \mathfrak {R}\),

$$\begin{aligned} \left| \phi \left( t, \nu , \xi _1 \right) - \phi \left( t, \nu , \xi _2 \right) \right| \le \ell _\phi |\xi _{1}-\xi _{2}| . \end{aligned}$$(2.5)

We shall begin with the following key result.

Theorem 2.1

Let \(\mathcal {T} > 0\) and consider the interval \([0, \mathcal {T}]\). Suppose that \(\psi \) and \(\phi \) are continuous functions, that \((\mathcal {A}_{1})\)-\((\mathcal {A}_{4})\) hold, and that \(k:= \sup _{t \in [0, \mathcal {T}]} \left( \ell _{\psi } t + \frac{1}{2} \ell _{\psi } \ell _{\phi } t^2 \right) < 1\). Then, for any initial condition \(\vartheta (0) = \vartheta _{0}\), there exists a unique solution \(\vartheta \in \mathcal {X}\) to the differential equation

$$\begin{aligned} \vartheta '(t) = \psi \left( t, \vartheta (t), \int _0^t \phi (t, \eta , \vartheta (\eta )) \, \textrm{d} \eta \right) , \quad \vartheta (0) = \vartheta _{0}, \end{aligned}$$(2.6)

on the interval \([0, \mathcal {T}]\).

Proof

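Define an operator \(\mathcal {Z}: \mathcal {X} \rightarrow \mathcal {X}\) that reflects the structure of the ODE (2.6). For a given function \(\vartheta \in \mathcal {X}\), let

$$\begin{aligned} (\mathcal {Z} \vartheta )(t) = \vartheta _{0} + \int _0^t \psi \left( s, \vartheta (s), \int _0^s \phi (s, \eta , \vartheta (\eta )) \, \textrm{d} \eta \right) \textrm{d}s, \quad t \in [0, \mathcal {T}]. \end{aligned}$$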

We first note that \(\mathcal {Z}\) is well defined. Since \(\psi \) and \(\phi \) are continuous, the integrand in the definition of \(\mathcal {Z} \vartheta \) is continuous. Hence \(\mathcal {Z} \vartheta \) is continuously differentiable, and \(\mathcal {Z}\) maps \(\mathcal {X}\) into itself. Moreover, if \(\vartheta \in \mathcal {X}\) is a fixed point of \(\mathcal {Z}\), then \(\vartheta \) is a solution of (2.6) with \(\vartheta (0) = \vartheta _{0}\).

To show that \(\mathcal {Z}\) is a contraction, we must find a constant \(0 \le k<1\) such that, for any two functions \(\mu , \nu \in \mathcal {X}\),

$$\begin{aligned} \Vert \mathcal {Z} \mu - \mathcal {Z} \nu \Vert \le k \Vert \mu - \nu \Vert . \end{aligned}$$

For this, let \(\mu , \nu \in \mathcal {X}\) and estimate

where \(k:= \sup _{t \in [0, \mathcal {T}]} \left( \ell _{\psi } t + \frac{1}{2} \ell _{\psi } \ell _{\phi } t^2 \right) \).

Hence, whenever \(k < 1\), \(\mathcal {Z}\) is a contraction mapping. By Theorem 1.1, \(\mathcal {Z}\) then has a unique fixed point, which is the unique solution of (2.6). \(\square \)
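For completeness, the estimate behind k can be sketched as follows. This sketch assumes that \(\mathcal {X}\) carries the supremum norm \(\Vert \vartheta \Vert _\infty = \max _{t \in [0, \mathcal {T}]} |\vartheta (t)|\) and that \(\mathcal {Z}\) has the integral form used above. Only \((\mathcal {A}_{2})\) and \((\mathcal {A}_{4})\) are needed:

$$\begin{aligned} |(\mathcal {Z}\mu )(t) - (\mathcal {Z}\nu )(t)| &\le \int _0^t \left| \psi \left( s, \mu (s), \int _0^s \phi (s, \eta , \mu (\eta )) \, \textrm{d}\eta \right) - \psi \left( s, \nu (s), \int _0^s \phi (s, \eta , \nu (\eta )) \, \textrm{d}\eta \right) \right| \textrm{d}s \\ &\le \int _0^t \left( \ell _\psi |\mu (s) - \nu (s)| + \ell _\psi \int _0^s \ell _\phi |\mu (\eta ) - \nu (\eta )| \, \textrm{d}\eta \right) \textrm{d}s \\ &\le \left( \ell _\psi t + \frac{1}{2} \ell _\psi \ell _\phi t^2 \right) \Vert \mu - \nu \Vert _\infty . \end{aligned}$$

Since the bracket is increasing in t, taking the supremum over \([0, \mathcal {T}]\) gives \(k = \ell _\psi \mathcal {T} + \frac{1}{2} \ell _\psi \ell _\phi \mathcal {T}^2\). For instance, \(\ell _\psi = \ell _\phi = 1\) and \(\mathcal {T} = 1/2\) give \(k = 0.5 + 0.125 = 0.625 < 1\).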

Theorem 2.2

Under the assumptions of Theorem 2.1, the unique solution \(\vartheta (t)\) of (1.2) is bounded on the interval \([0, \mathcal {T}]\). Specifically, there exists a constant \(\tau _{3} > 0\) such that \(|\vartheta (t)| \le \tau _{3}\) for all \(t \in [0, \mathcal {T}]\).

Proof

Given that \(\vartheta (t)\) is a solution to the differential equation (1.2), and considering the boundedness conditions specified in assumptions \((\mathcal {A}_{1})\) through \((\mathcal {A}_{4})\), we aim to establish a bound for \(|\vartheta (t)|\) for all \(t \in [0, \mathcal {T}]\).

From assumption \((\mathcal {A}_{1})\), we know \(\vartheta (t)\) and its derivative are bounded. Assumptions \((\mathcal {A}_{2})\) and \((\mathcal {A}_{4})\) ensure that \(\psi \) and \(\phi \) satisfy Lipschitz conditions, which, combined with the continuity of these functions, implies the existence of a maximum rate of change of \(\vartheta (t)\) over \([0, \mathcal {T}]\).

To establish a bound for \(|\vartheta (t)|\), consider the initial condition \(\vartheta (0) = \vartheta _0\) and the maximum Lipschitz constants for \(\psi \) and \(\phi \), denoted as \(\ell _\psi \) and \(\ell _\phi \), respectively. The solution \(\vartheta (t)\) is continuous and differentiable by its definition, and the rate of change is bounded by the Lipschitz conditions. Therefore, we can define

which ensures that

This bound is derived considering the initial condition and the maximum possible change in \(\vartheta (t)\) over the interval \([0, \mathcal {T}]\), taking into account the effects of both \(\psi \) and \(\phi \). \(\square \)
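An explicit admissible constant can also be obtained directly from the contraction property. The following is a sketch that assumes the supremum norm on \(\mathcal {X}\) and the condition \(k < 1\) of Theorem 2.1; \(0\) denotes the zero function. Using the fixed-point identity \(\vartheta = \mathcal {Z}\vartheta \):

$$\begin{aligned} \Vert \vartheta \Vert _\infty = \Vert \mathcal {Z}\vartheta \Vert _\infty &\le \Vert \mathcal {Z}\vartheta - \mathcal {Z}0 \Vert _\infty + \Vert \mathcal {Z}0 \Vert _\infty \le k \Vert \vartheta \Vert _\infty + |\vartheta _0| + \mathcal {T} M_0, \\ M_0 &:= \max _{s \in [0, \mathcal {T}]} \left| \psi \left( s, 0, \int _0^s \phi (s, \eta , 0) \, \textrm{d}\eta \right) \right| , \end{aligned}$$

Hence \(\Vert \vartheta \Vert _\infty \le (|\vartheta _0| + \mathcal {T} M_0)/(1-k)\). Here \(M_0\) is finite because \(\psi \) and \(\phi \) are continuous on compact sets.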

Corollary 2.1

Under the assumptions of Theorem 2.1, the unique solution \(\vartheta (t; \vartheta _{0})\) of (1.2) depends continuously on the initial condition \(\vartheta (0) = \vartheta _{0}\). Specifically, let \(\vartheta (t; \vartheta _{0})\) denote the solution corresponding to \(\vartheta (0) = \vartheta _{0}\). Then, for any sequence \(\{\vartheta _{0,n}\}\) converging to \(\vartheta _{0}\) in \(\mathfrak {R}\), the corresponding sequence of solutions \(\{\vartheta (t; \vartheta _{0,n})\}\) converges uniformly to \(\vartheta (t; \vartheta _{0})\) on \([0, \mathcal {T}]\).
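In the supremum-norm setting with \(k < 1\), the corollary can be made quantitative. Write \(\vartheta _a\) and \(\vartheta _b\) for the solutions with \(\vartheta (0) = a\) and \(\vartheta (0) = b\). Let \(\mathcal {G}(\vartheta ) := \mathcal {Z}\vartheta - \vartheta _0\) denote the integral part of the operator; \(\mathcal {G}\) is a hypothetical auxiliary name, and it does not depend on the initial value. Then \(\vartheta _a = a + \mathcal {G}(\vartheta _a)\), \(\vartheta _b = b + \mathcal {G}(\vartheta _b)\), and

$$\begin{aligned} \Vert \vartheta _a - \vartheta _b \Vert _\infty \le |a-b| + \Vert \mathcal {G}(\vartheta _a) - \mathcal {G}(\vartheta _b)\Vert _\infty \le |a-b| + k \Vert \vartheta _a - \vartheta _b \Vert _\infty , \quad \text {so} \quad \Vert \vartheta _a - \vartheta _b \Vert _\infty \le \frac{|a-b|}{1-k}. \end{aligned}$$

In particular, \(\vartheta _{0,n} \rightarrow \vartheta _0\) implies uniform convergence of the corresponding solutions, with a Lipschitz rate.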

Corollary 2.2

Suppose that \(\psi \) and \(\phi \) depend continuously on a parameter \(\varrho \in \mathfrak {R}\) and satisfy the Lipschitz conditions with respect to their second and third arguments uniformly in \(\varrho \). Then, for each fixed \(t \in [0, \mathcal {T}]\), the unique solution \(\vartheta (t; \varrho )\) of (1.2) is continuous with respect to \(\varrho \).

Theorem 2.3

Assume that, in addition to the conditions of Theorem 2.1, the functions \(\psi \) and \(\phi \) are differentiable with respect to their last two arguments, with bounded derivatives. Then the solution \(\vartheta (t)\) of (1.2) is stable under small perturbations of \(\psi \) and \(\phi \). Specifically, if \(\tilde{\psi } \) and \(\tilde{\phi } \) are close to \(\psi \) and \(\phi \), respectively, in the supremum norm, then the solution \(\tilde{\vartheta }(t)\) corresponding to \(\tilde{\psi }\) and \(\tilde{\phi }\) is uniformly close to \(\vartheta (t)\) on \([0, \mathcal {T}]\).

Proof

Let \(\tilde{\psi }\) and \(\tilde{\phi }\) denote the perturbed versions of \(\psi \) and \(\phi \), respectively, with the perturbations being small in the supremum norm. Let \(\tilde{\vartheta }(t)\) be the solution corresponding to these perturbed functions. We assume that the supremum norm differences \(\Vert \tilde{\psi } - \psi \Vert _{\infty }\) and \(\Vert \tilde{\phi } - \phi \Vert _{\infty }\) are sufficiently small.

Given the differentiability and boundedness of the derivatives of \(\psi \) and \(\phi \), the difference between the solutions, \(\varpi (t) = \tilde{\vartheta }(t) - \vartheta (t)\), satisfies a differential equation that can be analyzed to show the stability of \(\vartheta (t)\) under perturbations. Specifically, the Lipschitz continuity of \(\psi \) and \(\phi \), along with the boundedness of their derivatives, allows us to establish an inequality that bounds the growth of \(\varpi (t)\).

By integrating this inequality and applying Gronwall’s inequality [19], we can show that \(\Vert \varpi (t)\Vert \) remains small for all \(t \in [0, \mathcal {T}]\) if the initial perturbations are small. This demonstrates that \(\tilde{\vartheta }(t)\) is uniformly close to \(\vartheta (t)\) on the interval \([0, \mathcal {T}]\), confirming the stability of the solution under small perturbations of \(\psi \) and \(\phi \). \(\square \)
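A quantitative version of this argument is sketched below. The sketch assumes that (1.2) has the integral form used for \(\mathcal {Z}\), that both problems share the initial value \(\vartheta _0\), and that the perturbed solution exists on \([0, \mathcal {T}]\). Write \(\delta _\psi := \Vert \tilde{\psi } - \psi \Vert _\infty \) and \(\delta _\phi := \Vert \tilde{\phi } - \phi \Vert _\infty \). Let \(I(s)\) and \(\tilde{I}(s)\) be the memory integrals of \(\vartheta \) and \(\tilde{\vartheta }\). Split \(\tilde{\psi }(s, \tilde{\vartheta }, \tilde{I}) - \psi (s, \vartheta , I) = [\tilde{\psi } - \psi ](s, \tilde{\vartheta }, \tilde{I}) + [\psi (s, \tilde{\vartheta }, \tilde{I}) - \psi (s, \vartheta , I)]\), split \(\phi \) in the same way, and apply \((\mathcal {A}_{2})\) and \((\mathcal {A}_{4})\). This gives

$$\begin{aligned} |\varpi (t)| &\le \int _0^t \left( \delta _\psi + \ell _\psi |\varpi (s)| + \ell _\psi \int _0^s \big ( \delta _\phi + \ell _\phi |\varpi (\eta )| \big ) \, \textrm{d}\eta \right) \textrm{d}s \\ &\le \mathcal {T} \delta _\psi + \frac{1}{2} \ell _\psi \mathcal {T}^2 \delta _\phi + \ell _\psi \left( 1 + \ell _\phi \mathcal {T} \right) \int _0^t |\varpi (s)| \, \textrm{d}s . \end{aligned}$$

The integral form of Gronwall's inequality (\(u(t) \le \alpha + \beta \int _0^t u\) implies \(u(t) \le \alpha e^{\beta t}\)) then yields

$$\begin{aligned} \sup _{t \in [0, \mathcal {T}]} |\tilde{\vartheta }(t) - \vartheta (t)| \le \left( \mathcal {T} \delta _\psi + \frac{1}{2} \ell _\psi \mathcal {T}^2 \delta _\phi \right) e^{\ell _\psi (1 + \ell _\phi \mathcal {T}) \mathcal {T}} , \end{aligned}$$

which tends to zero as \(\delta _\psi , \delta _\phi \rightarrow 0\).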

3 Numerical examples

Example 3.1

Consider the following ODE in which the rate of change of \(\vartheta (t)\) depends on its current state and an exponentially weighted integral of its past states, representing some form of “memory effect”:

where \(a_{1}\), \(a_{2}\), and \(a_{3}\) are positive constants.

If we define \(\psi \) and \(\phi \) by

where \(I\) is the integral term, and

then (3.1) is a special case of the general equation (1.2).

We define an operator \(\mathcal {Z}: \mathcal {X} \rightarrow \mathcal {X}\) by

where \(\vartheta _{0} \in \mathfrak {R}\).

To prove \(\mathcal {Z}\) is a contraction mapping, we let \(\mu , \nu \in \mathcal {X}\) and estimate

where the constant \(k_{1}: = \sup _{t \in [0, \mathcal {T}]} \left( a_{1} + a_{2} t e^{a_{3} t} \right) \) reflects the maximum rate of change influenced by \(a_{1}\), \(a_{2}\), and the exponential decay factor \(a_{3}\). If \(k_{1} < 1\), then \(\mathcal {Z}\) is a contraction mapping on \(\mathcal {X}\). Consequently, from Theorem 2.1, the model (3.1) has a unique solution.
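The contraction constant \(k_1\) can be checked for the parameter values used for Fig. 1 (\(a_1 = 0.4\), \(a_2 = 0.04\), \(a_3 = 0.01\), \(T = 10\)). The sketch below also uses the standard a priori estimate for iterates of a k-contraction, \(d(\vartheta _n, \tilde{\vartheta }) \le k^n (1-k)^{-1} d(\vartheta _1, \vartheta _0)\), to bound the number of Picard iterations needed for a given tolerance. The helper names and the first-step size \(d(\vartheta _1, \vartheta _0) = 1\) are hypothetical.

```python
import math
import numpy as np

def k1_constant(a1, a2, a3, T, n=10001):
    """k1 = sup_{t in [0, T]} (a1 + a2 * t * exp(a3 * t)), evaluated on a fine grid."""
    t = np.linspace(0.0, T, n)
    return float(np.max(a1 + a2 * t * np.exp(a3 * t)))

def iterations_needed(k, first_step, tol):
    """Smallest n with k**n / (1 - k) * first_step <= tol (Banach a priori estimate)."""
    if not 0.0 < k < 1.0:
        raise ValueError("the a priori estimate needs 0 < k < 1")
    n = math.log(tol * (1.0 - k) / first_step) / math.log(k)
    return max(0, math.ceil(n))

k1 = k1_constant(0.4, 0.04, 0.01, T=10.0)            # parameters quoted for Fig. 1
n = iterations_needed(k1, first_step=1.0, tol=1e-6)  # first_step is hypothetical
```

Here \(k_1 = 0.4 + 0.4 e^{0.1} \approx 0.842 < 1\), so the contraction condition holds. With a unit first step, the a priori bound guarantees a sup-norm error below \(10^{-6}\) after 92 iterations. This count is conservative, since it relies only on the worst-case factor \(k_1\).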

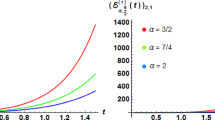

To compute the numerical solution of (3.1), we employ the Picard approximation method with parameters \(a_{1} = 0.4\), \(a_{2} = 0.04\), \(a_{3} = 0.01\), over the time span \(T = 10\) with \(N = 100\) discretization points. Starting from an initial guess, the method refines the solution iteratively, which makes it particularly well suited to ODEs with integral terms. We examine the method's performance under various initial conditions to understand its behavior across different scenarios (see Fig. 1). In addition, we carry out an error analysis by comparing the Picard approximations with a reference solution. We also examine the rate of convergence, i.e., how quickly the iterates approach the true solution as the number of iterations increases (see Figs. 2 and 3).

Example 3.2

Consider the following integro-differential equation with periodic coefficients

where \(\lambda _{1}\) and \(\lambda _{2}\) are constants representing the amplitude of external forcing and the damping factor, respectively, and \(\omega \) is the angular frequency of the oscillation.

If we define \(\psi \) and \(\phi \) by

where \(I\) is the integral term, and

then (3.3) is a special case of the general equation (1.2) with these choices of \(\psi \) and \(\phi \).

We define an operator \(\mathcal {Z}: \mathcal {X} \rightarrow \mathcal {X}\) by

where \(\vartheta _{0} \in \mathfrak {R}\).

To prove \(\mathcal {Z}\) is a contraction mapping, we let \(\mu , \nu \in \mathcal {X}\) and estimate

where the constant \(k_{2}:= \lambda _{2} \sup _{t \in [0, \mathcal {T}]} \left( \frac{t^{2}}{2} \right) \). If \(k_{2} < 1\), then \(\mathcal {Z}\) is a contraction mapping on \(\mathcal {X}\). Consequently, from Theorem 2.1, the model (3.3) has a unique solution.

To solve (3.3), we again use the Picard approximation technique, with parameters \(\lambda _{1} = 0.09\), \(\lambda _{2} = 0.03\), and \(\omega = 2\), on a time interval of length \(T = 5\) discretized with \(N = 100\) points. Starting from an initial approximation, the method systematically refines the solution, which makes it especially suitable for ODEs with integral components. Its performance under a range of initial conditions is illustrated in Fig. 4. Furthermore, an error analysis compares the Picard approximations with a benchmark solution. The convergence rate is also analyzed to assess how efficiently the iterates approach the exact solution as the number of iterations increases, as shown in Figs. 5 and 6.
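An empirical convergence-rate analysis can be performed by monitoring the ratios of successive sup-norm steps \(\Vert \vartheta _{n+1} - \vartheta _n\Vert / \Vert \vartheta _n - \vartheta _{n-1}\Vert \) and comparing them with the theoretical bound. The sketch below does not implement (3.3). Instead, it uses a hypothetical damped test equation, \(\vartheta '(t) = -\lambda _2 \int _0^t \vartheta (\eta )\,\textrm{d}\eta \) with \(\vartheta (0) = 1\). Its Picard operator satisfies exactly the bound \(\lambda _2 t^2/2\), so \(k = \lambda _2 T^2/2\) coincides numerically with \(k_2 = 0.375\) for \(\lambda _2 = 0.03\) and \(T = 5\). Its exact solution is \(\cos (\sqrt{\lambda _2}\, t)\). The function names are illustrative.

```python
import numpy as np

def cumtrapz0(y, t):
    """Cumulative trapezoidal integral of y over t, starting from 0 at t[0]."""
    return np.concatenate(([0.0], np.cumsum(0.5 * (y[1:] + y[:-1]) * np.diff(t))))

def picard_steps(lam2, T, N, n_iter):
    """Picard iterates for the test problem theta' = -lam2 * int_0^t theta, theta(0) = 1.

    Returns the grid, the last iterate, the sup-norm step sizes
    ||theta_{n+1} - theta_n||, and the ratios of consecutive steps.
    """
    t = np.linspace(0.0, T, N + 1)
    theta = np.ones_like(t)                       # initial guess theta_0(t) = 1
    steps = []
    for _ in range(n_iter):
        memory = cumtrapz0(theta, t)              # int_0^s theta(eta) d eta
        new = 1.0 + cumtrapz0(-lam2 * memory, t)  # (Z theta)(t)
        steps.append(np.max(np.abs(new - theta)))
        theta = new
    steps = np.array(steps)
    return t, theta, steps, steps[1:] / steps[:-1]

lam2, T = 0.03, 5.0
k2 = lam2 * T**2 / 2                              # = 0.375
t, theta, steps, ratios = picard_steps(lam2, T, N=100, n_iter=6)
```

For the constant initial guess, the first step equals \(\lambda _2 T^2/2 = k_2\). The subsequent ratios fall well below \(k_2\), so the contraction constant is an upper bound on the observed rate rather than a prediction of it. The iteration count is kept small so that the steps stay above the floating-point noise floor.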

4 An application in modeling avoidance behavior

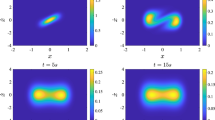

In this section, we consider a controlled experiment conducted in a specially designed enclosure, in which naive male albino rats were placed in an environment simulating a learning scenario for avoidance behavior (see [12]). The setup delivered shocks in response to the rats' movements, so the animals had to learn to avoid certain areas to minimize discomfort. The experiment revealed a complex interplay between learning to evade shocks and a subsequent “forgetting” phenomenon. This interplay is evidenced by a progressive reduction in shock encounters over 350 h together with distinct oscillations in behavior, as shown in Fig. 7. In contrast, control rats exhibited more exploratory behavior without oscillatory patterns (Fig. 8), which highlights the learning and forgetting dynamics in the experimental group.

The dynamic behavior of avoidance can be mathematically represented through the following ODE that includes integral components:

In the above model, \(\vartheta (t)\) denotes the rate of shock-producing behavior at time t and captures the organism's tendency toward actions that have previously led to an aversive stimulus. The remaining quantities in the equation are interpreted as follows:

- the variable t denotes the present moment, so the evolution of behavior can be analyzed over time;
- the integration variable \(\eta \) denotes past moments up to the current time t;
- the positive constant \(\kappa \) measures the strength of the learning (memory) effect, i.e., the impact of prior experiences on present behavior;
- the decay constant \(\lambda \) describes the rate at which memories of past shocks fade: smaller values of \(\lambda \) correspond to rapid forgetting, whereas larger values indicate prolonged memory retention;
- the integral \(\int _{0}^{t}e^{-\frac{t-\eta }{\lambda }} \vartheta (\eta ) \, \textrm{d} \eta \) represents the aggregated influence of past shocks on the current rate of shock-producing behavior, weighted by an exponential decay. This term reflects the psychological principle of “forgetting”, i.e., the diminishing effect of earlier shocks over time.
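Two elementary consequences of the exponential kernel help interpret \(\lambda \). First, differentiating the memory term under the integral sign gives a local evolution law. Second, the weight assigned to a past shock halves every \(\lambda \ln 2\) time units, and the total weight of the kernel is \(\lambda \):

$$\begin{aligned} \mathcal {I}(t) &= \int _{0}^{t} e^{-\frac{t-\eta }{\lambda }} \vartheta (\eta ) \, \textrm{d} \eta , \qquad \mathcal {I}'(t) = \vartheta (t) - \frac{1}{\lambda } \mathcal {I}(t), \qquad \mathcal {I}(0) = 0, \\ e^{-\frac{t-\eta }{\lambda }} &= \frac{1}{2} \iff t - \eta = \lambda \ln 2, \qquad \int _{0}^{\infty } e^{-\frac{u}{\lambda }} \, \textrm{d}u = \lambda . \end{aligned}$$

For \(\lambda = 1.3\), the value used in the numerical computations of this section, the half-life of a memory is \(\lambda \ln 2 \approx 0.90\) time units.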

NOTE: To connect (4.1) with the general framework (1.2), we define \(\psi \) and \(\phi \) as follows:

where \(\mathcal {I}\) denotes the integral term, and

Theorem 4.1

Let \(\mathcal {X}\) be the space of continuously differentiable functions on the interval \([0, \mathcal {T}]\) with values in \(\mathfrak {R}\), equipped with the norm

If \(\kappa \lambda \mathcal {T} < 1\), then the proposed model (4.1) has a unique solution in \(\mathcal {X}\).

Proof

Define an operator \(\mathcal {Z}: \mathcal {X} \rightarrow \mathcal {X}\) by

where \(\vartheta _{0} \in \mathfrak {R}\), \(\kappa > 0\), and \(\lambda > 0\) are constants.

To show that \(\mathcal {Z}\) is a contraction mapping, let \(\mu , \nu \in \mathcal {X}\). The difference in the application of \(\mathcal {Z}\) to \(\mu \) and \(\nu \) can be bounded by

where \(\tilde{k}: = \sup _{t \in [0, \mathcal {T}]} \left( \kappa \lambda t \right) = \kappa \lambda \mathcal {T}\). For \(\mathcal {Z}\) to be a contraction mapping, we require \(\tilde{k} < 1\). Under this condition, Theorem 2.1 yields a unique solution of the model (4.1). \(\square \)
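The bound behind \(\tilde{k}\) can be sketched under two assumptions: the supremum norm on \(\mathcal {X}\), and a right-hand side of (4.1) that depends on \(\vartheta \) only through \(\kappa \) times the memory integral, with any additional terms independent of \(\vartheta \):

$$\begin{aligned} |(\mathcal {Z}\mu )(t) - (\mathcal {Z}\nu )(t)| &\le \kappa \int _0^t \int _0^s e^{-\frac{s-\eta }{\lambda }} |\mu (\eta ) - \nu (\eta )| \, \textrm{d}\eta \, \textrm{d}s \\ &\le \kappa \Vert \mu - \nu \Vert _\infty \int _0^t \lambda \left( 1 - e^{-\frac{s}{\lambda }} \right) \textrm{d}s \le \kappa \lambda t \, \Vert \mu - \nu \Vert _\infty . \end{aligned}$$

The condition \(\tilde{k} < 1\) therefore amounts to \(\mathcal {T} < 1/(\kappa \lambda )\). For \(\kappa = 0.3\) and \(\lambda = 1.3\), the values used in the simulations of this section, \(\kappa \lambda = 0.39\), so the condition holds for \(\mathcal {T} < 1/0.39 \approx 2.56\). On \([0, 50]\), however, \(\kappa \lambda \mathcal {T} = 19.5\), so this sufficient criterion does not by itself cover the full simulation horizon.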

A 3D plot of the solution of (4.1) is shown in Fig. 9. For the numerical computation of (4.1), we employ the Euler and Runge–Kutta methods with \(T = 50\), \(N = 100\), \(\vartheta _{0} = 0\), \(\kappa = 0.3\), and \(\lambda = 1.3\). These methods approximate the solution and allow us to investigate the system dynamics over time (see Fig. 10). Because they differ in accuracy and computational efficiency, we compare their errors and convergence rates (see Figs. 11 and 12). This comparison highlights the trade-off between simplicity and precision in numerical simulation.
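An Euler versus Runge–Kutta comparison can be run without storing the whole history. Because the kernel is exponential, the memory term satisfies \(\mathcal {I}' = \vartheta - \mathcal {I}/\lambda \), \(\mathcal {I}(0) = 0\), so it can be carried as an extra state variable. This auxiliary-variable reformulation is our choice of technique; the paper's own treatment of the integral is not specified here. The sketch assumes that the right-hand side of (4.1) depends on \(\vartheta \) only through \(\vartheta (t)\) and \(\mathcal {I}(t)\), and it uses the hypothetical closure \(\psi = -\kappa \mathcal {I}\). It takes \(\vartheta (0) = 1\) and a horizon of 5, so that a closed-form reference exists; \(\kappa = 0.3\) and \(\lambda = 1.3\) come from the text. All function names are illustrative.

```python
import numpy as np

def augmented_rhs(psi, lam):
    """Right-hand side for y = (theta, I), I(t) = int_0^t exp(-(t - eta)/lam) theta(eta) d eta.

    Differentiating under the integral sign gives I'(t) = theta(t) - I(t)/lam, I(0) = 0.
    """
    def f(t, y):
        theta, I = y
        return np.array([psi(t, theta, I), theta - I / lam])
    return f

def euler(f, y0, T, N):
    """Explicit Euler with N uniform steps; returns the state at t = T."""
    h, y = T / N, np.array(y0, dtype=float)
    for n in range(N):
        y = y + h * f(n * h, y)
    return y

def rk4(f, y0, T, N):
    """Classical fourth-order Runge-Kutta with N uniform steps; returns the state at t = T."""
    h, y = T / N, np.array(y0, dtype=float)
    for n in range(N):
        t = n * h
        s1 = f(t, y)
        s2 = f(t + h / 2, y + h / 2 * s1)
        s3 = f(t + h / 2, y + h / 2 * s2)
        s4 = f(t + h, y + h * s3)
        y = y + h / 6 * (s1 + 2 * s2 + 2 * s3 + s4)
    return y

# Hypothetical closure psi = -kappa * I (an assumption, not necessarily the form of (4.1)).
kappa, lam, T = 0.3, 1.3, 5.0
f = augmented_rhs(lambda t, theta, I: -kappa * I, lam)
y0 = [1.0, 0.0]                       # theta(0) = 1 (hypothetical), I(0) = 0

# Exact state at t = T for this linear test system y' = A y, via eigendecomposition.
A = np.array([[0.0, -kappa], [1.0, -1.0 / lam]])
w, V = np.linalg.eig(A)
exact = (V @ (np.exp(w * T) * np.linalg.solve(V, y0))).real

def observed_orders(method, Ns=(50, 100, 200)):
    """Errors at t = T and observed orders log2(e_N / e_2N) for successive doublings of N."""
    errs = [np.max(np.abs(method(f, y0, T, N) - exact)) for N in Ns]
    return errs, [np.log2(errs[i] / errs[i + 1]) for i in range(len(errs) - 1)]

euler_errs, euler_p = observed_orders(euler)
rk4_errs, rk4_p = observed_orders(rk4)
```

The observed orders come out close to 1 for Euler and close to 4 for RK4, at roughly four right-hand-side evaluations per RK4 step versus one for Euler. This is the accuracy-versus-cost trade-off referred to above. The system matrix has complex eigenvalues for these parameter values, so the test solution oscillates while decaying.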

5 Conclusion

This study addressed the solution of general ODEs with integral terms by combining tools from functional analysis with fixed-point results. The theoretical framework established for the existence of a unique solution paves the way for further exploration of the intrinsic properties of such equations, including their stability. The application of the Picard iteration method to two examples demonstrates the effectiveness of the proposed techniques for computing numerical solutions. Moreover, applying our findings to model avoidance behavior illustrates the potential of these mathematical constructs for understanding complex phenomena. The comparison of different numerical schemes, accompanied by an error analysis and an evaluation of convergence rates, supports the robustness and reliability of the methods employed. This work adds to the existing knowledge on ODEs and opens avenues for future research in applied mathematics, particularly in areas where modeling and numerical simulation play a crucial role.

Data Availability

Data availability does not apply to this article, as this study did not involve the creation or analysis of any new data.

References

Chelnokov, Y.N.: Quaternion methods and regular models of celestial mechanics and space flight mechanics: local regularization of the singularities of the equations of the perturbed spatial restricted three-body problem generated by gravitational forces. Mech. Solids 58(5), 1458–1482 (2023)

Whitby, M., Cardelli, L., Kwiatkowska, M., Laurenti, L., Tribastone, M., Tschaikowski, M.: PID control of biochemical reaction networks. IEEE Trans. Automat. Control 67(2), 1023–1030 (2021)

Fröhlich, F., Sorger, P.K.: Fides: Reliable trust-region optimization for parameter estimation of ordinary differential equation models. PLoS Comput. Biol. 18(7), e1010322 (2022)

Liu, L., Liu, S., Wu, L., Zhu, J., Shang, G.: Forecasting the development trend of new energy vehicles in China by an optimized fractional discrete grey power model. J. Clean. Product. 372, 133708 (2022)

Linot, A.J., Burby, J.W., Tang, Q., Balaprakash, P., Graham, M.D., Maulik, R.: Stabilized neural ordinary differential equations for long-time forecasting of dynamical systems. J. Comput. Phys. 474, 111838 (2023)

Zúñiga-Aguilar, C.J., Gómez-Aguilar, J.F., Romero-Ugalde, H.M., Escobar-Jiménez, R.F., Fernández-Anaya, G., Alsaadi, F.E.: Numerical solution of fractal-fractional Mittag–Leffler differential equations with variable-order using artificial neural networks. Eng. Comput. 38(3), 2669–2682 (2022)

Liu, Y., Kutz, J.N., Brunton, S.L.: Hierarchical deep learning of multiscale differential equation time-steppers. Philos. Trans. Royal Soc. A 380(2229), 20210200 (2022)

Zhao, X., Gong, Z., Zhang, Y., Yao, W., Chen, X.: Physics-informed convolutional neural networks for temperature field prediction of heat source layout without labeled data. Eng. Appl. Artif. Int. 117, 105516 (2023)

Turab, A., Sintunavarat, W.: A unique solution of the iterative boundary value problem for a second-order differential equation approached by fixed point results. Alexandria Eng. J. 60(6), 5797–5802 (2021)

Yu, F., Kong, X., Mokbel, A.A.M., Yao, W., Cai, S.: Complex dynamics, hardware implementation and image encryption application of multiscroll memeristive Hopfield neural network with a novel local active memeristor. IEEE Trans. Circ. Syst. II: Exp. Briefs 70(1), 326–330 (2022)

Kumar, S., Wang, X., Strachan, J.P., Yang, Y., Lu, W.D.: Dynamical memristors for higher-complexity neuromorphic computing. Nat. Rev. Mater. 7(7), 575–591 (2022)

Brady, J.P., Marmasse, C.: Analysis of a simple avoidance situation: I. Exp. Paradigm. Psychol. Record 12(4), 361 (1962)

Hartono, A.D., Nguyen, L.T.H., Ta, T.V.: A stochastic differential equation model for predator-avoidance fish schooling. Math. Biosci. 367, 109112 (2024)

Townsend, J.T., Busemeyer, J.R.: Approach-avoidance: return to dynamic decision behavior. In: Current Issues in Cognitive Processes, pp. 107–133. Psychology Press (2014)

Burger, J., van der Veen, D.C., Robinaugh, D.J., Quax, R., Riese, H., Schoevers, R.A., Epskamp, S.: Bridging the gap between complexity science and clinical practice by formalizing idiographic theories: a computational model of functional analysis. BMC Med. 18, 1–18 (2020)

Ta, T.V., Nguyen, L.T.H.: A stochastic differential equation model for the foraging behavior of fish schools. Phys. Biol. 15(3), 036007 (2018)

Berinde, V., Takens, F.: Iterative Approximation of Fixed Points. Lecture Notes in Mathematics, vol. 1912, xvi+322 pp. Springer, Berlin (2007)

Banach, S.: Sur les opérations dans les ensembles abstraits et leur application aux équations intégrales. Fund. Math. 3(1), 133–181 (1922)

Wang, X.: Several inequalities of Gronwall and their proofs. Insight-Inf. 4(2), 58–63 (2022)

Acknowledgements

This research is supported by the University of Alicante, Spain, the Spanish Ministry of Science and Innovation, the Generalitat Valenciana, Spain, and the European Regional Development Fund (ERDF) through the following funding: At the national level, the following projects were granted: TRIVIAL (PID2021-122263OB-C22); and CORTEX (PID2021-123956OB-I00), funded by MCIN/AEI/10.13039/501100011033 and, as appropriate, by “ERDF A way of making Europe”, by the “European Union” or by the “European Union NextGenerationEU/PRTR”. At regional level, the Generalitat Valenciana (Conselleria d’Educacio, Investigacio, Cultura i Esport), Spain, granted funding for NL4DISMIS (CIPROM/2021/21).

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Contributions

Each author contributed equally to this work.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Turab, A., Montoyo, A. & Nescolarde-Selva, JA. Computational and analytical analysis of integral-differential equations for modeling avoidance learning behavior. J. Appl. Math. Comput. (2024). https://doi.org/10.1007/s12190-024-02130-3

DOI: https://doi.org/10.1007/s12190-024-02130-3