Abstract

Italy has a cultural heritage of considerable value that needs to be protected. Natural deterioration linked to the passage of time affects ancient artifacts and buildings and can compromise the functionality of cultural assets, pushing them toward decay. In this scenario, intervening effectively on every critical point seems impossible because of the wide variability of the factors involved and the wide range of possible treatments. However, the spread of low-cost technologies has made it possible to deploy devices and sensors that communicate and interact with each other and with humans: the Internet of Things (IoT). The IoT paradigm makes it possible to map reality by defining a coherent virtual representation (Digital Twin), which can help preserve Cultural Heritage. This work introduces an IoT-based system that combines monitoring, predictive maintenance, and decision-making on the interventions that can be implemented to protect cultural heritage buildings. For this purpose, deep learning and machine learning techniques are used to detect and classify damage on specific materials. The experimental campaign consists of two phases: the first evaluates the accuracy of the proposed architecture, and the second exploits a prototype capable of interacting with expert users. The results of the experimental campaign are promising.



1 Introduction

The advent of Smart Cities and their paradigms in the design field has brought different disciplines together in a common area. As a result, ICT innovations have been effectively integrated into territorial and environmental monitoring [1, 2]. This approach also extends to other construction areas, such as the predictive maintenance of civil and cultural heritage (CH) buildings [3]. The integration of ICT in territorial and environmental monitoring therefore encourages further developments toward greater awareness in artistic and CH monitoring, improving planned interventions both in quality and in timing, so that the required type of intervention can be carried out precisely and promptly [4, 5]. Conservation of CH is a coherent combination of planning, coordination, study, research, prevention, maintenance, and restoration. Among these, prevention plays a fundamental role in limiting possible risk situations, since maintenance stands between the prevention phase and the restoration phase, which is usually more critical and sometimes invasive [6]. Maintenance can therefore be defined as a series of interventions aimed at keeping the conditions of a given asset intact without affecting the functionality and identity of the whole or of the parts subject to maintenance. If prevention and maintenance fail to guarantee the conservation of an element, the restoration phase comes into play [7]. Restoration is the last act a conservator can perform to limit the damage, intervening directly on the asset through a complex series of operations to protect it and fully transmit the cultural values it represents [8, 9]. In general, all assets undergo different types of deterioration over time, depending on their environmental exposure, which can compromise their functionality and lead them to a state of decay.
The types of maintenance and prevention vary with the factors acting on the asset of cultural interest, because several factors can contribute to the deterioration of a given element: climatic or microclimatic conditions, the physical properties or chemical composition of the material, or, worse, several of these factors acting at the same time [10,11,12]. Given this complexity, intervening precisely on an object to conserve a cultural asset is difficult because of the number and variability of the elements to be evaluated. The continuous evolution of ICT has improved various approaches to the management and recovery of cultural heritage assets. This wider diffusion stems from the falling cost of these technologies, which has increased the number of interconnected sensors and devices and improved knowledge of the asset under study. These factors drove the diffusion of the IoT paradigm, which allows physical elements to be monitored through a sensor system connected to a central unit that manages data collection and the user interface, constituting a coherent virtual representation, i.e., a Digital Twin (DT) [13, 14]. Several IoT-based sensors can be used to monitor CH, depending on the target information. The sensor network can acquire data [15] according to the type of degradation to monitor or prevent, such as material alteration, physical and structural problems, and the climatic and environmental conditions of the asset. The choice of sensors also depends on the material of the asset: each material reacts in its own way to certain conditions, so this information significantly influences the choice of one sensor over another. In this way, the DT can be used to carry out and simulate the maintenance actions applicable to the monitored asset [16].
This approach therefore supports experts in the field in evaluating strategies to recover historical elements. Furthermore, machine learning (ML) techniques have improved the concept of predictive maintenance (PM): starting from the actual description of the element [17] and evaluating all of its known parameters, it is possible to make predictions about its behavior [18]. In summary, PM can be defined as preventive maintenance based on estimating, from measured and processed values and according to well-defined laws, the time remaining before an event damaging the element occurs. The substantial difference between preventive maintenance and PM is that the latter is performed only when measured values indicate the need for intervention [12, 19]. Thus, an IoT-based framework would allow the early identification of the deterioration of one or more elements from data detected in real time, making interventions more effective by prioritizing the most urgent cases and discarding unnecessary interventions.

In addition, a key aspect is the choice of intervention methods for CH real estate. This study aims to identify possible intervention scenarios to optimize the final result, that is, to recognize the most appropriate intervention among scenarios such as restoration, recovery, and valorization [20]. Choosing the type of evaluation to propose is also complex; for this reason, the literature introduces multi-criteria analysis for the rational examination of alternative scenarios characterized by information overload [21]. In this context, exploiting the advantages of the IoT, it is worth creating a workflow that combines the aspects described above: real-time monitoring of sensor values, predictive maintenance based on the acquired information, and the selection of intervention actions that ensure adequate conservation of the cultural heritage [22]. For the decision support phase, this work proposes an ML model that identifies structural anomalies of the monitored cultural assets [10, 23, 24]. The model is an autoencoder (AE), a type of artificial neural network trained to reproduce its input at its output. AEs are commonly used for both anomaly detection (AD) [25] and dimensionality reduction [26, 27], and consist of two parts: an encoder, which encodes the input, and a decoder, which reconstructs the initial input with a given approximation. In particular, this work exploits the AE's ability to approximate its inputs to identify a threshold on the reconstruction error that distinguishes regular data from data related to damage. In the CH field, AD techniques therefore enable the identification of risky conditions in historical structures or artifacts, supporting experts and optimizing the timing of interventions on historical artifacts or structures.
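The reconstruction-error threshold described above can be sketched without any deep learning library. The paper does not give its network architecture, so the following assumes a linear autoencoder with two inputs and a single hidden unit, trained by full-batch gradient descent on hypothetical regular readings (two normalized values that normally move together). The threshold rule (mean plus k standard deviations of the training reconstruction errors, with k = 3 and a small floor) and all function names are illustrative assumptions, not the authors' method.

```python
import math

def train_linear_autoencoder(samples, epochs=500, lr=0.1):
    """Train a 2-1-2 linear autoencoder with full-batch gradient descent.

    Encoder: z = we . x (one hidden unit); decoder: x_hat = wd * z.
    The loss is the mean squared reconstruction error over the samples.
    """
    we = [0.5, 0.1]  # fixed initial weights keep the sketch deterministic
    wd = [0.3, 0.2]
    n = len(samples)
    for _ in range(epochs):
        g_we = [0.0, 0.0]
        g_wd = [0.0, 0.0]
        for x in samples:
            z = we[0] * x[0] + we[1] * x[1]            # encode
            r = [x[j] - wd[j] * z for j in range(2)]   # residual x - x_hat
            dz = -2.0 * (r[0] * wd[0] + r[1] * wd[1])  # dLoss/dz
            for j in range(2):
                g_wd[j] += -2.0 * r[j] * z
                g_we[j] += dz * x[j]
        for j in range(2):
            we[j] -= lr * g_we[j] / n
            wd[j] -= lr * g_wd[j] / n
    return we, wd

def reconstruction_error(x, we, wd):
    """Squared error between an input and its reconstruction."""
    z = we[0] * x[0] + we[1] * x[1]
    return sum((x[j] - wd[j] * z) ** 2 for j in range(2))

def fit_threshold(errors, k=3.0, floor=1e-6):
    """Threshold = mean + k * std of the errors observed on regular data.

    The floor (an assumption) avoids a zero threshold when regular data
    are reconstructed almost perfectly.
    """
    mean = sum(errors) / len(errors)
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / len(errors))
    return max(mean + k * std, floor)

# Hypothetical regular data: two normalized readings that normally move together.
regular = [(t / 5, t / 5) for t in range(-5, 6)]
we, wd = train_linear_autoencoder(regular)
threshold = fit_threshold([reconstruction_error(x, we, wd) for x in regular])

def is_anomaly(x):
    """Flag a reading whose reconstruction error exceeds the learned threshold."""
    return reconstruction_error(x, we, wd) > threshold

print(is_anomaly((0.5, 0.5)), is_anomaly((1.0, -1.0)))
```

A reading that breaks the learned correlation, such as (1.0, -1.0), cannot be reconstructed through the single hidden unit and is flagged, while readings that follow the regular pattern stay below the threshold. In the architecture described in the text, a dedicated (and deeper) AE would be trained for each type of monitoring.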
Once an anomaly is identified, ML techniques also allow a specific damage assessment and support experts in the field in choosing potential conservative measures [28].

Thus, this article aims to introduce an architecture for monitoring and predictive maintenance of architectural structures to support decision-making by experts in the field.

In the context of cultural heritage conservation, this study integrates two modules, the Predictive Maintenance Module and the Decision Support Module, to achieve predictive maintenance and decision support. The former uses Artificial Neural Networks (ANN), specifically autoencoders (AE), for Anomaly Detection (AD). Each type of monitoring applied to the facility is associated with a specifically trained AE model designed to identify deviations from expected behavior accurately, thus facilitating early interventions. The Decision Support Module, in turn, exploits the anomalies detected by the Predictive Maintenance Module. It uses the k-Nearest Neighbors (kNN) classification algorithm to classify the decay of the specific material analyzed and, on that basis, to propose potential corrective actions for the facility. This approach not only categorizes the observed damage but also gives experts in the field a data-informed basis to prioritize and carry out preservation efforts effectively. By employing kNN, the module characterizes the damage, enabling the targeted interventions that are crucial to maintaining and preserving cultural property. Overall, the proposed approach leverages machine learning to improve the preservation of historic buildings by detecting and classifying possible damage to the structure, so that experts can intervene early and prevent the damage from reaching an unrecoverable state.
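A minimal sketch of the kNN step of the Decision Support Module, assuming two normalized features per observation and three decay classes borrowed from damage types mentioned in the text (salt efflorescence, mold, cracks). The training examples, the meaning of the features, k = 3, and the ACTIONS table are hypothetical placeholders; the actual catalogue of interventions would be defined by experts and by the applicable regulations.

```python
import math
from collections import Counter

# Hypothetical labelled examples: (feature vector, decay class).
# Features are assumed normalized to [0, 1]; both features and labels are
# illustrative, not taken from the paper's dataset.
TRAINING_SET = [
    ((0.90, 0.80), "salt efflorescence"),
    ((0.85, 0.90), "salt efflorescence"),
    ((0.80, 0.85), "salt efflorescence"),
    ((0.20, 0.90), "mold"),
    ((0.25, 0.80), "mold"),
    ((0.15, 0.85), "mold"),
    ((0.90, 0.10), "crack"),
    ((0.80, 0.20), "crack"),
    ((0.85, 0.15), "crack"),
]

# Placeholder mapping from decay class to the action proposed to experts.
ACTIONS = {
    "salt efflorescence": "<intervention for salt efflorescence, defined by experts>",
    "mold": "<intervention for mold, defined by experts>",
    "crack": "<intervention for cracks, defined by experts>",
}

def knn_classify(query, training_set, k=3):
    """Majority vote among the k nearest examples (Euclidean distance)."""
    neighbours = sorted(training_set, key=lambda item: math.dist(query, item[0]))[:k]
    votes = Counter(label for _, label in neighbours)
    top = max(votes.values())
    # Tie-break: among the top-voted labels, the one with the closest example wins.
    for _, label in neighbours:
        if votes[label] == top:
            return label

def propose_action(query, k=3):
    """Classify the decay and look up the corresponding (placeholder) action."""
    label = knn_classify(query, TRAINING_SET, k)
    return label, ACTIONS[label]

print(propose_action((0.88, 0.82)))
```

With three classes and k = 3 a three-way tie is possible, which is why the closest neighbor decides among equally voted labels.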

Therefore, this work describes an architecture for conserving cultural structures that exploits IoT devices and machine learning approaches. Given the application field, studying the sector is a crucial step toward combining novel technologies for preserving structures with compliance with the current regulations on cultural structures, which govern both the types of damage and the possible methodologies for restoration work.

The structure of the paper is as follows: The next chapter reports and analyses some significant works that have contributed to this line of research. The third chapter introduces the IoT-based architecture for monitoring, PM, and decision-making applied to preserve cultural heritage buildings. A description of a real case study follows, integrated with the results related to the proposed architecture validation. Finally, conclusions will follow, and possible approaches and future developments will be proposed.

2 Related works

In the scientific field, there have been many efforts to protect CH. For example, [29] presents and discusses three years of environmental monitoring carried out at The Cloisters, a branch of the Metropolitan Museum of Art in New York, using a wireless sensor platform. This sensor network, comprising more than 200 devices distributed across 5 art rooms, evaluates factors such as temperature, humidity, air quality, dew point, and number of visitors. The collected data form the basis for studying the impact of air conditioning and visitors on the long-term preservation of art objects. The work in [30] describes a three-level IoT architecture as a first step towards a monitoring environment for the Church of the Holy Archangels Michael and Gabriel in Sarajevo, proposing a solution based on ML algorithms such as Decision Tree, Support Vector Machine, or SVM Lite to support appropriate real-time decisions. In [31], structural health monitoring (SHM) techniques are applied to assess the safety and structural stability of the Verona Arena, with the aim of developing an advanced SHM data process that quantifies uncertainty and reduces the static and dynamic monitoring parameters; the main objective is to understand the structural behavior of historic buildings. In [32], the authors emphasize the importance of monitoring the health of historical buildings with sensors and advanced information technology to predict and prevent further damage, using a predictive approach based on statistical techniques such as data mining, predictive modeling, and machine learning.

In [33], a museum monitoring system is described to improve visitor management within the museum space. The use of Bluetooth beacons, statistical analysis, and multilayer perceptron neural networks enables the precise reconstruction of visitor trajectories, which are essential for stochastic simulation and optimization of museum operations.

In [33], the authors describe a room monitoring system for preserving paintings on wooden supports, which measures the indoor microclimate (temperature and humidity) as well as vibration. In [34], instead, intelligent IoT devices called Smart Tags are installed near monuments and connected through a middleware; the system uses low-energy sensors, increasing its overall efficiency.

The authors of [35] propose an evaluation model to identify the Highest and Best Use (HBU) of historic buildings, considering their social, cultural, and financial identity while preserving their integrity and original image. Choosing the HBU requires analyzing multiple factors, such as the financial performance of the restoration and enhancement project, the effects on the territory and community, employment opportunities, and cultural growth. To this end, the research employs the Analytic Hierarchy Process (AHP), a multi-criteria technique that breaks down a complex decision-making problem into simpler elements organized into criteria and sub-criteria. This technique allows multiple aspects to be considered when assessing the HBU of historic buildings, supporting an informed decision.
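The AHP step can be illustrated with a short sketch: priorities are taken as the principal eigenvector of a reciprocal pairwise-comparison matrix (computed here by power iteration), and Saaty's consistency ratio checks the coherence of the judgments. The judgment values below are hypothetical, and the four criteria simply reuse the factors listed above; this is not the model of [35].

```python
# Saaty's random consistency index (RI) for matrices of order n.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def ahp_priorities(matrix, iterations=100):
    """Return (weights, consistency_ratio) for a reciprocal comparison matrix.

    Weights are the principal eigenvector (power iteration), normalized to sum to 1.
    """
    n = len(matrix)
    if n not in RANDOM_INDEX:
        raise ValueError("matrix order must be between 3 and 9")
    w = [1.0 / n] * n
    for _ in range(iterations):
        mw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(mw)
        w = [v / total for v in mw]
    mw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(mw[i] / w[i] for i in range(n)) / n  # principal eigenvalue estimate
    ci = (lambda_max - n) / (n - 1)                       # consistency index
    return w, ci / RANDOM_INDEX[n]                        # consistency ratio

# Hypothetical judgments on Saaty's 1-9 scale (row criterion vs. column criterion).
criteria = ["financial performance", "territory and community", "employment", "cultural growth"]
judgments = [
    [1,     3,     5, 1],
    [1 / 3, 1,     3, 1 / 3],
    [1 / 5, 1 / 3, 1, 1 / 5],
    [1,     3,     5, 1],
]
weights, cr = ahp_priorities(judgments)
for name, weight in zip(criteria, weights):
    print(f"{name}: {weight:.3f}")
print(f"CR = {cr:.3f}")
```

A consistency ratio below 0.10 is the threshold conventionally used to accept a set of judgments; above it, the pairwise comparisons are usually revised.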

Decision trees, which can be used for both classification and regression, are another machine learning model used in the literature. In a museum monitoring application, a decision tree identifies the cultural assets most at risk of damage or deterioration due to environmental conditions, such as humidity and temperature, and supports decisions on how to protect them. In an archaeological site, decision trees identify the finds requiring greater attention and protection based on their state of conservation and historical importance. Finally, in the conservation of historical heritage, they identify the cultural assets that need restoration or protection based on age, fragility, and popularity [36].

Among the techniques for identifying defects or damage on artifacts, AEs are widely used in the AD field. In particular, [37] exploits a convolutional AE on images obtained from a thermal camera to monitor possible damage to contemporary artworks, with an experimental phase carried out on an artisanal reproduction of Picasso's “La Bouteille de Suze”. In [38] and [39], instead, AD is exploited to identify keywords that improve the usability of ancient manuscripts by simplifying access to archives. Moreover, [40] proposes an AeKNN model that combines an autoencoder, which extracts latent features from the meta-features of datasets, with a kNN that recommends optimal learning pipelines based on the distances between feature vectors, outperforming classical kNN and other traditional meta-models. Finally, in [24, 41], AEs are exploited to protect structures through image processing and crowdsourcing. These works confirm the effectiveness of AEs in identifying anomalies, making it possible to act promptly to preserve cultural assets.

In this scenario, the proposed AeKNN model represents a significant innovation compared to previous systems. While many studies have focused on IoT technologies for environmental monitoring and preventive conservation, our model integrates latent feature extraction via an autoencoder with kNN classification. This combination enables both the monitoring of environmental and physical parameters and a more accurate interpretation of data dynamics, offering data-driven recommendations for conservation interventions. AeKNN allows a detailed reconstruction of deterioration trends and the early identification of damage, improving on traditional models in accuracy and in adaptability to different materials and environmental conditions. This innovation is expected to improve decision-making in cultural heritage conservation by providing a more robust and informed platform for preventive interventions and predictive maintenance. As such, the model is expected to improve the quality and timeliness of conservation interventions and to serve as a reference for future developments in the field.

3 The problem

The approach under study has several distinctive features, such as supporting non-expert users in cultural heritage monitoring and management, predicting degradation phenomena and consequently drawing up a maintenance program of targeted interventions, and designing the best intervention action to implement. This chapter discusses the method applied for monitoring, predictive maintenance, and decision-making on interventions to protect CH assets. To apply the PM concepts, a prototype system was designed and developed, and its test results are discussed.

The presented framework is an evolution of the methodology previously proposed in [42], based on an IoT system applied to cultural heritage buildings, which combines monitoring, predictive maintenance, and decision-making on conservation tools. In that methodology, sensor data on environmental and micro-environmental conditions, including alterations of materials and structure, feed an inference model that uses learning models to predict possible variations in the preservation level of buildings, and hence future deterioration conditions, so that conservation interventions can be planned. A prototype was developed and tested on a historic building with encouraging results: it integrates several sensors that monitor critical environmental and structural parameters, such as humidity, temperature, vibration, and other elements that can affect the preservation of the building. The work was therefore continued by incorporating additional knowledge and upgrading the inference model with neural networks dedicated to the analysis of the monitored parameters, in order to improve the reliability of the system while preserving the versatility of the architecture and its ability to account for the peculiarities of each cultural heritage asset.

To verify the proposed process, the architecture was applied to a building of cultural significance, namely the scientific library of the University of Salerno. Designed by the Roman architect Nicola Pagliara, it stands within the Fisciano campus and covers more than 2,000 square meters over eight levels: five visible from the outside (including the access floor) and three underground. It has a total capacity of approximately 350,000 bibliographic units and brings together the collections formerly held by the libraries of the Faculties of Mathematical, Physical and Natural Sciences, Engineering, and Pharmacy. The building recalls an industrial design, as if it stood for the industry of knowledge. Its facade is characterized by its materials: stone and marble elements combined with gray bricks give the structure a sense of movement. The building was chosen because it is a relatively young structure that shows the first signs of decay, which makes it suitable for analyzing how a given decay evolves over time, from the first signs to more substantial damage. In addition, information on the individual materials used and on their installation methods was easy to find. Together, this information forms a significant knowledge base on which a viable model can be built over time.

To implement the experimental part of the architecture, an initial prototype was designed and assembled based on the IoT concept, integrating low-cost, open-source technologies based on open standards. Table 1 lists the network of sensors deployed for monitoring. The different characteristics of the chosen sensors allow the most comprehensive analysis of the distinct conditions to which the asset may be subjected.

The choice of sensor types is crucial, since it makes it possible to analyze both the architectural and the structural aspects of a building and to overcome the sectoriality of some approaches already adopted in the literature. The environmental conditions of the area and the microenvironmental conditions of the element under study are recorded through a wide range of devices: sensors for air temperature and humidity, gases, particulate matter and fine dust, and air quality, as well as thermal imaging cameras, cameras, and weather stations. From some of these values, namely temperature and relative humidity, the dew point can be calculated, which makes it possible to monitor condensation on particularly exposed architectural elements. Thermal imaging records the temperature of the analyzed elements by measuring the intensity of the infrared radiation they emit, which increases with their temperature (the total emitted power grows with the fourth power of the absolute temperature, according to the Stefan–Boltzmann law). Thermal imaging cameras can thus detect the thermal dispersion of a building envelope (infill walls, attics, roofs, windows, etc.), highlight material discontinuities and assess their possible risks for the asset, analyze the impermeability of surfaces and moisture-related degradation or, more generally, possible water infiltration, and inspect elements that are not directly visible because they lie within the building envelope, such as pipes inside a wall. Changes in structural and material conditions, instead, are monitored through cameras and video cameras, which capture information on deformations and kinematics, such as the presence of cracks.
For material analysis, cameras can capture information on both physical and chemical corrosion phenomena, which can be intrinsic to the construction (congenital in the material itself, related to the location of the site, or due to design defects) or extrinsic, related to the surrounding environment and context, and almost always permanent (weathering, pollutants, biodeteriogens). Computer vision techniques based on Deep Learning can extract valid data, suitable for automatic processing, directly from camera and video camera images. Several applications already analyze possible structural damage by comparing pictures of cracks and detect material alterations by evaluating colors. The monitoring system uses a Raspberry Pi 4 board connected to a network of actuators that autonomously regulates the conditioning system of the monitored rooms. Data management and visualization rely on the IoT management platform ThingsBoard, which handles device coordination and the collection, processing, and visualization of the collected information. Data are visualized and monitored through dedicated dashboards that are accessible from both web and smartphone apps, thus widening the user audience. The collected data then feed PM analyses based on inference engines powered by ML algorithms. The testing phase verified the system's ability to detect, in a preventive manner, damage already present on the asset under study.
Specifically, the system monitored defined parameters: indoor and outdoor air temperature and humidity, dew point, surface temperature of the infill elements (walls and fixtures), wind direction and speed, and precipitation level (and thus the general weather conditions), together with images from cameras and video cameras. On this basis, the system identified on the facade, at different times of the year, risk conditions for alterations due to surface condensation, and the experimental phase focused mostly on this phenomenon.
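The text does not state which ThingsBoard transport the Raspberry Pi 4 uses. Assuming the ThingsBoard HTTP device API (a POST of JSON telemetry to /api/v1/<access token>/telemetry), a gateway script could push the monitored parameters as sketched below. The host, the access token, and the telemetry key names are hypothetical, and only Python's standard library is used.

```python
import json
import time
import urllib.request

def build_telemetry_request(host, access_token, readings, ts_ms=None):
    """Build (without sending) a POST to the ThingsBoard HTTP device API.

    readings: dict of telemetry keys to values, e.g. {"surface_temperature": 11.8}.
    """
    if ts_ms is None:
        ts_ms = int(time.time() * 1000)  # ThingsBoard timestamps are in milliseconds
    payload = {"ts": ts_ms, "values": readings}
    url = f"{host.rstrip('/')}/api/v1/{access_token}/telemetry"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def send(request, timeout=10):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status

# Hypothetical host, token, and telemetry keys.
request = build_telemetry_request(
    "http://thingsboard.local:8080",
    "YOUR_DEVICE_ACCESS_TOKEN",
    {"indoor_temperature": 19.4, "indoor_humidity": 62.0, "surface_temperature": 11.8},
)
# send(request)  # run on a device that can reach the ThingsBoard server
```

Keeping request construction separate from sending makes the payload easy to check offline; on the device, a periodic loop would read the sensors, build the request, and call send.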

The selection of sensors is justified precisely by the evaluation of condensation, which occurs under specific temperature and humidity conditions: condensation appears when the surface temperature of the element under study is lower than the dew point temperature of the indoor air. The dew point, or dew temperature, is the temperature at which, at constant pressure, the water vapor in the air condenses into droplets. The name denotes the point at which the air becomes saturated, and it can be identified through the psychrometric diagram. This diagram represents the states of moist air: each point corresponds to a definite state and describes its properties, so the diagram covers an unlimited number of thermodynamic conditions of the air. To find the dew point, one starts from the point given by the air temperature and relative humidity and moves at constant humidity ratio until reaching the saturation curve; the temperature read there is the dew point. The thermal camera, in turn, provides the surface temperature. In short, both the microclimatic values of the building and the climatic conditions of the surroundings significantly influence the dew point and the surface temperature. In addition, thermal images can detect material changes and thus highlight the early effects of moisture-related condensation, such as swelling, stains or mold, salt efflorescence, and chalking. Because water carries salts and soluble substances, it is the primary natural cause of material degradation, acting in several forms: rising damp, seepage, stagnant moisture, and water vapor (condensation).
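The paper reads the dew point from the psychrometric diagram. As a numerical alternative, the sketch below uses the Magnus approximation over water, with γ = ln(RH/100) + bT/(c + T) and T_d = cγ/(b − γ), taking b = 17.62 and c = 243.12 °C, and then applies the condensation criterion stated above: there is a risk when the surface temperature measured by the thermal camera is below the dew point of the air. The formula choice and the optional safety margin are assumptions, not part of the described system.

```python
import math

# Magnus coefficients over liquid water (roughly valid from -45 to 60 degC).
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degC

def dew_point(air_temp_c, relative_humidity_pct):
    """Approximate dew point (degC) from air temperature and relative humidity."""
    if not 0 < relative_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = (math.log(relative_humidity_pct / 100.0)
             + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c))
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)

def condensation_risk(surface_temp_c, air_temp_c, relative_humidity_pct, margin_c=0.0):
    """True when the surface is colder than the dew point (plus an optional margin)."""
    return surface_temp_c < dew_point(air_temp_c, relative_humidity_pct) + margin_c

# Example: indoor air at 19.4 degC and 62 % RH, wall surface at 11.8 degC.
print(round(dew_point(19.4, 62.0), 1), condensation_risk(11.8, 19.4, 62.0))
```

At 100 % relative humidity the formula returns the air temperature itself, which is a quick sanity check; a positive margin_c flags surfaces that are approaching the dew point before condensation actually forms.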
Classical deterioration analysis follows a logical path: causes, deterioration mechanisms, effects, and interventions.

Degradation processes can be physical, chemical, and biological. Physical degradation depends on sunlight, wind, and low temperatures, which cause the crystallization of salts on the surface of materials and the formation of freeze/thaw cycles. Chemical degradation is caused by the deposition of dust, gases, and suspended substances in the atmosphere that react with the surface layers of materials. Biological degradation depends on bacteria, parasites, and microorganisms colonizing the surfaces of materials. Crystallization of salts occurs in very porous materials due to temperature excursions. The pores of the material absorb water that contains soluble salts. The water evaporates, and the salts settle inside the pores, causing a progressive increase in volume. Diagnosis, understood as the identification of degradation and disruption, is a knowledge process related to the state of preservation of artifacts. There are two basic levels of material diagnosis: the first is direct observation, macroscopic analysis, and the second consists of more in-depth observation with precision instruments, microscopic analysis, which is done either directly on the material (in situ) or by sampling and analysis in the laboratory.

Codes are used to identify the types of degradation of materials and to express the pathologies in uniform scientific terms, starting from the description of the phenomenon as it appears to visual (macroscopic) analysis. Among the classifications of the state of conservation of artifacts are the RILEM, UNI, and NorMal codes. In Italy, NorMal, created by the Central Institute for Restoration, has been one of the most widely used codes. NorMal is an acronym for “Normativa Manufatti Lapidei”. The code deals with natural stone materials used in architecture and with artificial stone materials made from natural raw materials, such as bricks, ceramic products, mortars, plasters, and stucco. The NorMal document on the types of degradation of stone (and similar) materials was NorMal 1/88, which has since been replaced by the UNI 11182:2006 standard. This standard makes it possible to survey the state of conservation of stone surfaces, listing the principal forms of alteration in alphabetical order, each with a photographic description and a definition of its causes.

The UNI 11182:2006 standard defines alteration as a modification of a material that does not necessarily imply a deterioration of its characteristics from a conservation point of view, and degradation as a modification of a material that does involve such a deterioration. Some of the degradations most commonly encountered in the conservation of cultural property are defined in the table.

3.1 BIM and ThingsBoard integration: digital twin

In the advanced planning and management of a building, integrating a Building Information Modeling (BIM) model with data from external sources is of great value. This section describes in detail how data can be acquired from the ThingsBoard platform into Revit through Dynamo, and addresses the so-called DT, a real-time replica of the physical object described in the BIM model.

The primary purpose is to connect ThingsBoard data with the Revit BIM model, providing a real-time, detailed view of the physical object being represented in order to support informed choices and optimal maintenance of the building structure.

The first stage of the integration process is the configuration of the ThingsBoard API, which enables the exchange of data values between the platform and the Revit BIM model. The data to be monitored and integrated into the BIM model must therefore be identified accurately: this step involves reviewing the information available in ThingsBoard and selecting only the values that contribute to the DT in the Revit BIM model.

The next step uses Dynamo, the visual programming tool integrated with Revit, to develop custom scripts that link the ThingsBoard platform and the BIM model. These scripts automate data extraction, processing, and transfer. Dynamo extracts the data identified in the previous step from ThingsBoard, transforms them into a format compatible with the Revit BIM model, and finally loads the converted data into the model, fully integrating the relevant information.

The precise mapping of values between ThingsBoard and the BIM model is decisive for a consistent flow of information. During this procedure, exact correlations are defined between the data obtained from ThingsBoard and the corresponding components of the BIM model in Revit. This ensures that the data are represented completely and accurately in the DT, improving the consistency and reliability of the adopted model. Exploiting the collected data to create a digital replica of the physical object in the BIM model is thus the crucial outcome of this integration process.
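The extraction and mapping steps can be illustrated with a Python 3 sketch of the kind that could run in a Dynamo Python Script node (for example, with a CPython 3 engine). It assumes the ThingsBoard REST endpoint for the latest time-series values of a device (GET /api/plugins/telemetry/DEVICE/<deviceId>/values/timeseries, authenticated with a JWT obtained from POST /api/auth/login) and a hypothetical mapping from telemetry keys to Revit element ids and parameter names. The resulting (element id, parameter, value) triples could then be written to the model, for instance with Dynamo's Element.SetParameterByName node; the Revit-side write is not shown.

```python
import json
import urllib.parse
import urllib.request

def latest_telemetry_request(host, jwt_token, device_id, keys):
    """Build a GET for the latest values of the given telemetry keys of a device."""
    query = urllib.parse.urlencode({"keys": ",".join(keys)})
    url = (f"{host.rstrip('/')}/api/plugins/telemetry/DEVICE/"
           f"{device_id}/values/timeseries?{query}")
    return urllib.request.Request(url, headers={"X-Authorization": f"Bearer {jwt_token}"})

def fetch_json(request, timeout=10):
    """Send the request and decode the JSON body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))

# Hypothetical mapping: telemetry key -> (Revit element id, parameter name).
KEY_TO_BIM = {
    "surface_temperature": (123456, "TB_SurfaceTemperature"),
    "dew_point": (123456, "TB_DewPoint"),
}

def to_bim_updates(timeseries, mapping):
    """Turn a {key: [{"ts": ..., "value": ...}, ...]} response into
    (element_id, parameter_name, value) triples for the mapped keys only."""
    updates = []
    for key, samples in timeseries.items():
        if key not in mapping or not samples:
            continue  # unmapped keys are not part of the DT
        latest = max(samples, key=lambda s: s["ts"])  # most recent sample
        element_id, parameter = mapping[key]
        # Values may arrive as strings, so convert them explicitly.
        updates.append((element_id, parameter, float(latest["value"])))
    return updates
```

Keeping the mapping in a single table makes the correlations between telemetry keys and BIM components explicit and easy to review, which is the consistency requirement stated above.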

Regular updates are essential to keep the DT aligned with the physical structure, so that the information in the digital model matches the current condition of the physical object. This requires a continuous flow of updated data from ThingsBoard to the BIM model, so that the DT accurately represents the actual object.

A continuous monitoring system is therefore needed to keep the data in the BIM model and the DT accurately up to date. This monitoring ensures that the information reflects the current state of the physical object, maintaining the link between the digital and physical worlds. Handling faults and gaps in the data stream is a necessary part of ongoing monitoring and maintenance. The monitoring system is designed to detect anomalies, unexpected changes, and missing data, and to trigger a prompt corrective procedure. Resolving such issues quickly is essential for the long-term reliability of the integrated model and for the validity and accuracy of the data provided by the DT.
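Detecting gaps in the incoming data stream can be as simple as comparing consecutive timestamps with the expected sampling interval. The following is a minimal sketch. The 1.5× tolerance and the 10-minute interval in the example are assumptions, not values taken from the text.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, expected_interval: timedelta, tolerance: float = 1.5):
    """Return (before, after) pairs of consecutive samples farther apart than tolerance * expected_interval."""
    ordered = sorted(timestamps)
    limit = expected_interval * tolerance
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > limit]


def is_stale(last_sample: datetime, now: datetime, expected_interval: timedelta,
             tolerance: float = 1.5) -> bool:
    """True if no sample has arrived for longer than tolerance * expected_interval."""
    return now - last_sample > expected_interval * tolerance


# Usage: a sensor expected every 10 minutes misses two readings between 08:20 and 08:50.
t0 = datetime(2022, 5, 1, 8, 0)
received = [t0 + timedelta(minutes=m) for m in (0, 10, 20, 50, 60)]
gaps = find_gaps(received, timedelta(minutes=10))
print(gaps)
```

`find_gaps` locates holes in data that have already been stored. `is_stale` covers a device that has stopped reporting altogether, which is the case that should trigger the corrective procedure promptly.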

4 The proposed approach

As discussed above, none of the reviewed works on the protection of CH integrates all the relevant aspects. This section therefore proposes an architecture that combines the three crucial facets of maintaining CH buildings: monitoring, PM, and decision-making on the interventions to implement. As shown in Fig. 1, the proposed architecture is organized into four functional layers: Sensor Layer, Knowledge-Base Layer, Inference Engine Layer, and Application Layer.

The Sensor Layer comprises all the IoT devices that capture the environmental parameters of the object under observation. It includes sensors that collect information on the environmental and micro-environmental conditions of the area, on material alterations, and on structural deficits. It also includes devices that can act on the environmental conditions, such as actuators. From these data, the system can derive additional information that enriches it, such as degradation mechanisms and the related interventions. This information is integrated with established services that describe the condition of the Cultural Heritage asset (see Figs. 2 and 3).

Sensor data are acquired through the MQTT communication protocol. MQTT follows a publish-subscribe approach with three actors:

- the publisher, which publishes data on a given topic;
- the subscriber, which receives the data published on the topics it has subscribed to;
- the broker, which routes messages among the various topics.

The proposed architecture manages data acquisition through the ThingsBoard IoT platform. Among its services, ThingsBoard manages the data acquired from the sensors through REST APIs, which support the acquisition of both structured and unstructured data from the deployed sensors (see Figs. 4 and 5).
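To make the three MQTT roles concrete, here is a minimal in-process sketch of topic routing.

- It is not an MQTT implementation: there is no network, QoS, or retained messages.
- Topic filters follow MQTT's wildcard rules: `+` matches exactly one level, and `#` matches all remaining levels.
- The topic tree `library/...` is hypothetical.

In a real ThingsBoard deployment, devices publish JSON telemetry to the platform's broker on the topic `v1/devices/me/telemetry`, authenticating with the device access token as the MQTT username.

```python
from typing import Callable

Handler = Callable[[str, dict], None]


def topic_matches(pattern: str, topic: str) -> bool:
    """MQTT-style filter matching: '+' matches one level, '#' (last level only) matches the rest."""
    pattern_levels = pattern.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(pattern_levels):
        if level == "#":
            return True  # multi-level wildcard also matches the parent level itself
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(pattern_levels) == len(topic_levels)


class ToyBroker:
    """Routes each published message to every subscriber whose filter matches the topic."""

    def __init__(self) -> None:
        self._subscriptions: list[tuple[str, Handler]] = []

    def subscribe(self, pattern: str, handler: Handler) -> None:
        self._subscriptions.append((pattern, handler))

    def publish(self, topic: str, payload: dict) -> int:
        delivered = 0
        for pattern, handler in self._subscriptions:
            if topic_matches(pattern, topic):
                handler(topic, payload)
                delivered += 1
        return delivered


# Usage: the Knowledge-Base side subscribes to every indoor reading.
store: list[tuple[str, dict]] = []
broker = ToyBroker()
broker.subscribe("library/indoor/#", lambda t, p: store.append((t, p)))
broker.publish("library/indoor/temperature", {"value": 21.8})   # delivered
broker.publish("library/outdoor/temperature", {"value": 14.2})  # no matching subscriber
```

Decoupling publishers from subscribers through the broker is what lets new sensors be added without changing the components that consume their data.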

The core of the proposed architecture is the Knowledge-Base Layer. This layer acquires data through the REST services of the ThingsBoard IoT platform and stores them as time series, preparing them for processing in the Inference Engine Layer. The collected information is also preprocessed and validated in this layer, so that the data are homogeneous before being stored for later use. Preprocessing is fundamental for extracting meaningful information for heritage preservation and for growing the dataset over time. The resulting data then feed the Inference Engine Layer.
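The following is a minimal sketch of the validation and homogenization steps. The text does not specify the actual preprocessing operations, so the sketch makes four hypothetical assumptions:

- each reading is a (timestamp in ms, value) pair;
- physically implausible values are discarded (here, relative humidity outside 0–100 %);
- sensors are aligned on a common 5-minute grid by averaging;
- values are scaled to [0, 1] with fixed bounds.

```python
import math
from collections import defaultdict


def validate(samples, lo, hi):
    """Keep (ts, value) pairs whose value is a finite number inside the plausible range [lo, hi]."""
    return [(ts, v) for ts, v in samples
            if isinstance(v, (int, float)) and math.isfinite(v) and lo <= v <= hi]


def resample_mean(samples, step_ms):
    """Average samples into fixed buckets of step_ms so that different sensors share one time grid."""
    buckets = defaultdict(list)
    for ts, v in samples:
        buckets[ts - ts % step_ms].append(v)
    return [(t, sum(vs) / len(vs)) for t, vs in sorted(buckets.items())]


def min_max_scale(values, lo, hi):
    """Scale to [0, 1] with fixed bounds, so training and online data share the same scaling."""
    span = hi - lo
    return [(v - lo) / span for v in values]


# Usage: relative-humidity readings with a missing value and an out-of-range spike.
raw = [(0, 55.0), (60_000, None), (120_000, 180.0), (400_000, 57.0), (500_000, 59.0)]
clean = validate(raw, 0.0, 100.0)
grid = resample_mean(clean, 300_000)
scaled = min_max_scale([v for _, v in grid], 0.0, 100.0)
print(clean, grid, scaled)
```

Fixed scaling bounds, rather than bounds recomputed on each batch, keep stored data and live data comparable when they are later fed to the same trained model.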

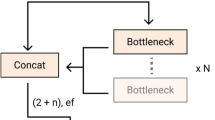

The Inference Engine Layer implements learning models that describe the current conditions of the asset and estimate the relevant parameters from the collected information. This module is crucial to the success of the whole process. It produces all the inferences on which the choices about monitoring, predictive maintenance, and type of intervention are based. The Inference Engine Layer consists of two sub-modules: the Predictive Maintenance Module and the Decision Support Module.

- The Predictive Maintenance Module exploits a particular class of Artificial Neural Networks, AEs, which enable AD, as discussed in the literature analysis. A dedicated AE model is trained for each type of monitoring applied to the structure.
- The Decision Support Module uses the anomalies identified by the Predictive Maintenance Module to indicate the potential interventions to perform on the structure.

The Predictive Maintenance Module processes the data through the trained AE model associated with the damage under analysis. When an anomaly is detected, the system uses kNN to classify the type of damage associated with it. In particular, the module exploits the ability of an AE to approximate the identity function. From the training data, it computes the reconstruction error between inputs and outputs and derives a threshold that separates regular data from data related to damage. Data whose reconstruction error exceeds the threshold are flagged as damage, which the kNN algorithm then classifies.
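The following NumPy sketch shows the reconstruction-error principle. It rests on several assumptions:

- The autoencoder has a single small tanh hidden layer trained by full-batch gradient descent. The paper's AEs are deeper and use ReLU activations (Fig. 6).
- The data are synthetic, with four correlated features standing in for sensor readings.
- The threshold is set at the 99th percentile of the training reconstruction error. The text does not state how the actual thresholds were derived.

```python
import numpy as np


class TinyAutoencoder:
    """One-hidden-layer autoencoder: tanh encoder, linear decoder, full-batch gradient descent on MSE."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.5, (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.5, (n_hidden, n_in))
        self.b2 = np.zeros(n_in)

    def _forward(self, X):
        H = np.tanh(X @ self.W1 + self.b1)  # bottleneck code
        return H, H @ self.W2 + self.b2      # reconstruction of the input

    def fit(self, X, epochs: int = 3000, lr: float = 0.05):
        n = X.shape[0]
        for _ in range(epochs):
            H, Y = self._forward(X)
            dY = 2.0 * (Y - X) / n                  # gradient of sum((Y - X)**2) / n w.r.t. Y
            dZ = (dY @ self.W2.T) * (1.0 - H ** 2)  # back-propagate through tanh (uses the old W2)
            self.W2 -= lr * (H.T @ dY)
            self.b2 -= lr * dY.sum(axis=0)
            self.W1 -= lr * (X.T @ dZ)
            self.b1 -= lr * dZ.sum(axis=0)
        return self

    def reconstruction_error(self, X):
        _, Y = self._forward(X)
        return np.sum((Y - X) ** 2, axis=1)


# Hypothetical regular data: 4 sensor features driven by 2 latent factors plus small noise.
rng = np.random.default_rng(42)
mixing = np.array([[1.0, 0.8, 0.0, 0.5], [0.0, 0.6, 1.0, -0.5]])


def sample_regular(n):
    return rng.normal(size=(n, 2)) @ mixing + 0.05 * rng.normal(size=(n, 4))


X_raw = sample_regular(500)
mu, sigma = X_raw.mean(axis=0), X_raw.std(axis=0)
X_train = (X_raw - mu) / sigma

ae = TinyAutoencoder(n_in=4, n_hidden=2).fit(X_train)
# Assumed rule: the threshold is the 99th percentile of the training reconstruction error.
threshold = float(np.percentile(ae.reconstruction_error(X_train), 99))

X_regular = (sample_regular(200) - mu) / sigma
X_damaged = X_regular[:100] + rng.normal(0.0, 4.0, size=(100, 4))  # synthetic off-pattern readings
flags_regular = ae.reconstruction_error(X_regular) > threshold
flags_damaged = ae.reconstruction_error(X_damaged) > threshold
print(threshold, flags_regular.mean(), flags_damaged.mean())
```

The AE only learns to reproduce the correlations present in regular data. Readings that break those correlations are reconstructed poorly, and their error crosses the threshold.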

The Decision Support Module communicates with the Predictive Maintenance Module and receives the results produced by the AutoEncoders and the kNN: the AEs detect damage, and the kNN classifies it. The Decision Support Module then links the identified damage to the possible restoration works provided for by the regulations.
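The hand-off from detection to classification to suggested interventions can be sketched as below.

- The detector and the classifier are passed in as functions, for example an AE threshold test and a kNN classifier.
- The intervention catalogue is a hypothetical nested dictionary. Its real entries would come from the regulations summarized in Table 4; the entry used here is only a placeholder.

```python
from typing import Callable, Optional, Sequence

Catalogue = dict[str, dict[str, list[str]]]


def suggest_interventions(material: str, features: Sequence[float],
                          is_anomalous: Callable[[Sequence[float]], bool],
                          classify_damage: Callable[[Sequence[float]], str],
                          catalogue: Catalogue) -> Optional[dict]:
    """Detection, then classification, then lookup of candidate interventions for one observation."""
    if not is_anomalous(features):
        return None  # regular condition: nothing to suggest
    damage = classify_damage(features)
    interventions = catalogue.get(material, {}).get(damage, [])
    return {"material": material, "damage": damage, "interventions": interventions}


# Placeholder catalogue; real entries would come from the regulations in Table 4.
catalogue: Catalogue = {"plaster": {"erosion": ["<erosion intervention from Table 4>"]}}
detect = lambda f: max(f) > 0.8   # hypothetical stand-in for the AE threshold test
classify = lambda f: "erosion"    # hypothetical stand-in for the kNN classifier
print(suggest_interventions("plaster", [0.2, 0.9], detect, classify, catalogue))
```

Because the catalogue is plain data, it can be updated when regulations change without retraining the detection or classification models.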

The Inference Engine Layer operates in two phases. In an offline phase, the machine-learning models are trained on the collected data. In an online phase, the trained models perform inference on incoming data. The system also periodically retrains the models on newly collected data.

Through the Application Layer, the information and knowledge obtained are made available to a downstream module. This module supports monitoring and performs the analyses needed for PM and for choosing the most suitable interventions. The collected information is visualized through ThingsBoard, an open-source IoT platform that enables remote management, processing, and visualization of the data. The module dedicated to predictive maintenance and decision-making is based on algorithmic logic that takes into account the patterns identified by the inference engine.

This type of application targets expert users. It provides information on the trend of conditions at the monitored site and supports autonomous system decisions, such as temperature and humidity regulation, air quality assessment, and energy consumption optimization. Through dedicated dashboards, expert users can use real-time data for asset management or review the actions that the model performs autonomously. A further application uses monitoring data to determine when and how the asset should be maintained, so that interventions can be planned appropriately.

5 Experimental results

This section presents the experimental results for the case study introduced earlier. The proposed architecture, based on the IoT and Digital Twin paradigms, combines different functions aimed at preserving buildings that belong to Cultural Heritage. In particular, it aims to support expert users in monitoring, PM, and decision-making on the interventions to implement. An experimental phase was therefore conducted on the case study of the scientific library of the University of Salerno to test the fundamental aspects of the proposed methodology.

The experiments tested two abilities of the system:

- supporting expert users in management and maintenance through suggestions and autonomous choices;
- presenting the acquired information so that users have an overall picture of what is happening.

To this end, a prototype was developed with a server component and client components reachable from the Web and through applications. The server part hosting the inference engines was implemented in Python using the Django REST framework.

The developed visualization application supports expert users in managing routine building-related activities. Through dashboards, it shows an overview of all monitored key parameters and lets users choose the actions to be performed by the system.

The prototype is fed with data from two boards, one inside and one outside the building. Each board acts as a concentrator to which the parameters measured by various sensors are routed, as listed in Table 1. Weather parameters were also monitored with a weather station connected to the outdoor concentrator to supplement the collected data.

The monitored parameters made it possible to build a dataset of indoor and outdoor environmental parameters, such as weather conditions, humidity, indoor temperature, air quality, and presence of people. Through rules based on the inferences produced by the architecture, this information allows the system to:

- predict when the heaters need to be switched on automatically;
- control the lighting system;
- ventilate the building when necessary.

For convenience, the experimental campaign was divided into two phases. The first phase assessed the reliability of the inference engines that predict potential damage situations in the case study. The second phase evaluated the suggestions provided by the architecture and the graphical interfaces through a questionnaire administered to expert users.
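The rule-based control described above could take a form like the following sketch.

- The set-points (18 °C, 65 % RH, 1000 ppm CO2, 500 lx of daylight) and the input fields are hypothetical, chosen only for illustration.
- CO2 is used here as an assumed air-quality proxy.
- In the architecture, the inputs could come either from live readings or from the inference engine's predictions.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    occupied: bool
    daylight_lux: float
    indoor_temp_c: float
    indoor_rh_pct: float
    co2_ppm: float


# Hypothetical set-points; the prototype's actual rules are not given in the text.
MIN_TEMP_C = 18.0
MAX_RH_PCT = 65.0
MAX_CO2_PPM = 1000.0
SUFFICIENT_DAYLIGHT_LUX = 500.0


def decide_actions(s: Snapshot) -> dict[str, bool]:
    """Map one snapshot of (observed or predicted) conditions to actuator commands."""
    energy_saving = (not s.occupied) or s.daylight_lux >= SUFFICIENT_DAYLIGHT_LUX
    return {
        "lights_dimmed": energy_saving,
        "heating_on": s.indoor_temp_c < MIN_TEMP_C,
        "ventilation_on": s.indoor_rh_pct > MAX_RH_PCT or s.co2_ppm > MAX_CO2_PPM,
    }


# Usage: an empty, cold, humid reading room.
print(decide_actions(Snapshot(occupied=False, daylight_lux=100.0, indoor_temp_c=17.0,
                              indoor_rh_pct=70.0, co2_ppm=600.0)))
```

Keeping set-points as named constants lets expert users review and adjust them from the dashboard side without touching the decision logic.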

The rest of this section is organized as follows. The first subsection describes the data collected to evaluate the accuracy of the machine-learning techniques used in the Inference Engine Layer. The second subsection presents the accuracy results and the questionnaire results.

5.1 Data and experimental settings

For the accuracy evaluation of the proposed approach, a total of 559,358 instances were collected from indoor and outdoor monitoring. They cover both regular conditions and the damage conditions that the structure begins to exhibit.

The data were collected by the system over about six months, from May to November 2022.

Specifically, the dataset comprises 432,012 regular instances and 127,346 damage instances. The per-material counts below include both regular and damage instances, and they sum to the 559,358 total:

- plastering with air lime mortar: 145,205 instances, of which 33,228 are damage instances (12,159 disintegration, 10,326 fracturing, 10,743 erosion);
- brick: 142,493 instances, of which 33,246 are damage instances;
- stone: 137,817 instances, of which 29,126 are damage instances;
- iron: 133,843 instances, of which 31,746 are damage instances.

Four independent AutoEncoders were therefore developed in the inference engine, one per material, as shown in Fig. 6, using the data of interest described in Table 1. The inputs of each AE are listed in Table 2. They were chosen to comply with current regulations and to align with the types of interventions required by law.

In addition, the k-Nearest Neighbor algorithm classifies the anomalies detected in the instances associated with air lime mortar plaster. Based on the input data of the specific damage instances, it distinguishes between disintegration, fracturing, and erosion. The kNN uses the same features given as input to the AutoEncoders and measures the similarity between samples with the Euclidean distance (see Fig. 7).
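The kNN step described above amounts to a majority vote among the k training samples closest to the query in Euclidean distance. The following is a minimal NumPy sketch. The two-dimensional clusters are hypothetical stand-ins for the real damage features, and k = 13 is the value reported in Sect. 5.2.1.

```python
import numpy as np


def knn_predict(X_train, y_train, X_query, k: int = 13):
    """Classify each query row by majority vote among its k nearest training rows (Euclidean)."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    X_query = np.asarray(X_query, dtype=float)
    # Euclidean distance between every query row and every training row: shape (n_query, n_train).
    dist = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]
    predictions = []
    for idx in nearest:
        labels, counts = np.unique(y_train[idx], return_counts=True)
        # np.unique sorts the labels, so a tie goes to the alphabetically first label.
        predictions.append(labels[np.argmax(counts)])
    return np.array(predictions)


# Hypothetical 2-D feature clusters standing in for the three plaster damage classes.
rng = np.random.default_rng(0)
centres = {"disintegration": [0.0, 0.0], "fracturing": [5.0, 0.0], "erosion": [0.0, 5.0]}
X = np.vstack([rng.normal(c, 0.5, size=(50, 2)) for c in centres.values()])
y = np.repeat(list(centres), 50)
print(knn_predict(X, y, [[0.2, -0.1], [4.8, 0.3], [0.1, 5.2]]))
```

Since kNN has no training step beyond storing the samples, it reuses the AE input features directly. It does, however, compute a distance to every stored sample at query time.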

Structure of the autoencoders based on the ReLU activation function for plaster (a), stone (b), brick (c), and iron (d). The images were generated with Net2Vis [43]

Setting a reconstruction-error tolerance specific to each AutoEncoder yields the confusion matrices shown in Fig. 8. Fig. 9 reports the resulting Precision \(P\), Recall \(R\), \({F}_{1}\) score, and Accuracy \(A\). These are computed from the numbers of True Positives (\(TP\), i.e., correctly detected anomalies), False Positives (\(FP\)), True Negatives (\(TN\)), and False Negatives (\(FN\)).
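For reference, the four metrics follow directly from the confusion-matrix counts, with anomalies as the positive class. The counts in the example are illustrative only. They are not the values behind Fig. 9.

```python
def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Precision, recall, F1 and accuracy, with anomalies as the positive class."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # P = TP / (TP + FP)
    recall = tp / (tp + fn) if tp + fn else 0.0     # R = TP / (TP + FN)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + tn + fn)      # A = (TP + TN) / all samples
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Illustrative counts only.
print(binary_metrics(tp=90, fp=10, tn=880, fn=20))
```

Accuracy alone can look high when regular samples dominate. Precision and recall show how many flagged anomalies are real and how many real ones are caught.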

On the basis of these metrics, each AE was given the deepest structure that the data available for it could support; the input data for each AE are listed in Table 2. The value of k for the kNN algorithm was selected on the basis of the results shown in the figure below.
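One common way to choose k is to score a set of candidate values on held-out validation data and keep the best one. The sketch below is self-contained and rests on these assumptions:

- The candidate range (odd values from 1 to 21), the train/validation split, and the synthetic data are hypothetical.
- Ties are broken in favour of the smaller k.
- The text reports only that k = 13 was selected, not the exact procedure.

```python
import numpy as np


def select_k(X_train, y_train, X_val, y_val, candidates=range(1, 22, 2)):
    """Return the k with the highest validation accuracy (smaller k wins ties) and all scores."""
    X_train, X_val = np.asarray(X_train, float), np.asarray(X_val, float)
    y_train, y_val = np.asarray(y_train), np.asarray(y_val)
    dist = np.linalg.norm(X_val[:, None, :] - X_train[None, :, :], axis=2)
    order = np.argsort(dist, axis=1)  # training indices sorted by distance, per validation row
    scores = {}
    for k in candidates:
        correct = 0
        for i, idx in enumerate(order[:, :k]):
            labels, counts = np.unique(y_train[idx], return_counts=True)
            correct += int(labels[np.argmax(counts)] == y_val[i])
        scores[k] = correct / len(y_val)
    best = max(scores, key=lambda k: (scores[k], -k))
    return best, scores


# Usage on overlapping synthetic classes, so that different k values actually score differently.
rng = np.random.default_rng(1)
centres = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])


def sample(n_per_class):
    X = np.vstack([rng.normal(c, 1.5, size=(n_per_class, 2)) for c in centres])
    return X, np.repeat([0, 1, 2], n_per_class)


X_tr, y_tr = sample(100)
X_va, y_va = sample(40)
best_k, scores = select_k(X_tr, y_tr, X_va, y_va)
print(best_k, scores[best_k])
```

Odd candidates avoid tied votes in two-class problems; with three classes, ties can still occur and are resolved by label order.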

Confusion matrices obtained from AutoEncoders for damage analysis related to the materials described in Table 1

5.2 Numerical results

This section presents the results of the two experiments described above. The first part reports the accuracy of the Inference Engine Layer, and the second part describes the questionnaire and its results.

5.2.1 Step 1: analysis of the accuracy of the inference engine layer

This subsection presents the results obtained on the data described in Sect. 5.1, according to the accuracy metrics introduced above.

Figure 9 shows that both Precision and Recall of the anomaly detectors for the four materials exceed 90%, and that accuracy exceeds 95% in all four cases.

In addition to the four inference engines, damage to air lime mortar plaster must be further classified into three types:

- Disintegration;
- Fracturing;
- Erosion.

For this purpose, an engine based on the kNN algorithm was trained to classify the three classes. The optimal number of neighbors is k = 13. Figure 10 shows the obtained confusion matrix, and Fig. 11 shows Precision, Recall, and \({F}_{1}\)-score. The accuracy achieved by the system is 92.21%. Again, Precision and Recall exceed 90% for all three classes under analysis.
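The three-class confusion matrix and the per-class Precision, Recall, and \({F}_{1}\)-score reported in Figs. 10 and 11 can be computed as in the following sketch. The short label sequences in the example are invented for illustration and are not the study's data.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes, both in the order of `labels`."""
    position = {label: i for i, label in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[position[t], position[p]] += 1
    return cm


def per_class_scores(cm):
    """Per-class precision, recall and F1, plus overall accuracy, from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    precision = tp / np.maximum(cm.sum(axis=0), 1)  # column sum = times the class was predicted
    recall = tp / np.maximum(cm.sum(axis=1), 1)     # row sum = true members of the class
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(denom), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy


labels = ["disintegration", "fracturing", "erosion"]
y_true = ["disintegration"] * 3 + ["fracturing"] * 2 + ["erosion"] * 2
y_pred = ["disintegration", "disintegration", "fracturing", "fracturing", "fracturing",
          "erosion", "disintegration"]
cm = confusion_matrix(y_true, y_pred, labels)
precision, recall, f1, accuracy = per_class_scores(cm)
print(cm, precision, recall, f1, accuracy, sep="\n")
```

Reporting per-class scores, rather than accuracy alone, shows whether one damage type is systematically confused with another.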

5.2.2 Step 2: real-time parameter visualization and intervention suggestions

The Inference Engine passes the results of the Predictive Maintenance Module and the Decision Support Module to the Application Layer. Specifically, the Predictive Maintenance Module detects and classifies damage from the data, and the Decision Support Module suggests the restoration works prescribed by the regulations, as listed in Table 4. When anomalies are present, the system proposes the appropriate interventions associated with each type of damage according to Table 1. Possible interventions include:

1. the management of energy-saving functions;
2. ventilation of the building to keep the environment healthy and prevent the formation of mold;
3. use of the heating system to control temperature and humidity levels.

The first function helps optimize resource use: activating Energy Saving dims the lighting when the indoor space is empty or when natural light makes artificial lighting unnecessary. The second and third functions concern, respectively, ventilation and thermoregulation. Ventilation is performed with extractor fans that air and sanitize the environment, and thermoregulation is performed through the heating system. Besides improving environmental comfort, prediction and control aim to prevent condensation. Condensation forms when surfaces cool to the dew point and could cause deterioration of the building.
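The condensation risk mentioned above can be quantified by estimating the dew point from air temperature and relative humidity. The following sketch uses the Magnus approximation with the commonly used coefficients a = 17.62 and b = 243.12 °C. The surface-temperature input and the 2 °C safety margin are assumptions, since the text does not state how the prototype evaluates this risk.

```python
import math

# Magnus-approximation coefficients commonly used for saturation over water.
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # °C


def dew_point_c(temp_c: float, rh_pct: float) -> float:
    """Dew point (°C) from air temperature (°C) and relative humidity (%) via the Magnus formula."""
    gamma = math.log(rh_pct / 100.0) + MAGNUS_A * temp_c / (MAGNUS_B + temp_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def condensation_risk(surface_temp_c: float, air_temp_c: float, rh_pct: float,
                      margin_c: float = 2.0) -> bool:
    """True when the surface is within margin_c of the air's dew point (hypothetical margin)."""
    return surface_temp_c - dew_point_c(air_temp_c, rh_pct) < margin_c


# Usage: at 20 °C and 70 % RH the dew point is about 14.4 °C, so a 15 °C wall is at risk.
print(round(dew_point_c(20.0, 70.0), 1), condensation_risk(15.0, 20.0, 70.0))
```

A rule of this kind can start ventilation or heating before the margin is crossed, rather than reacting after condensation has formed.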

The second step of the experimental phase therefore concerned the system's ability to display the information acquired and processed in real time through appropriate dashboards (Fig. 5). The dashboard also reports information on the decision-making process, i.e., on the interventions to implement to preserve the building. In particular, the system provides information on ordinary and extraordinary maintenance interventions based on the condition of the building, and it tailors the suggested interventions to that condition.

For this purpose, 18 expert users were involved in the experimental phase and were presented with the developed prototype. The dashboard contains all the information processed by the system on indoor and outdoor environmental conditions. It also shows the actions to be taken, such as control of the lighting, heating, and ventilation systems. Users can also explore information on routine and extraordinary maintenance actions related to the condition of the building.

After the interaction, users completed a questionnaire to evaluate the proposed system. The questionnaire consists of four sections, and each question admits five possible answers on a Likert scale: "I totally disagree" (TD), "I disagree" (D), "Undecided" (U), "I agree" (A), and "I totally agree" (TA).

The questionnaire is structured as follows:

- Section A: Presentation.
  - Q1. Data and services are presented appropriately.
  - Q2. The information provided is comprehensive.
- Section B: Elaboration.
  - Q1. The system was able to provide the type of interventions needed.
  - Q2. The system provided helpful support for the maintenance of the facility.
- Section C: Interaction.
  - Q1. Interaction with the dashboard is natural.
  - Q2. The dashboard can communicate effectively without complications.
- Section D: Usability.
  - Q1. The interface is user-friendly.
  - Q2. Response times are adequate.
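Responses collected on this five-level scale can be summarized per question. A summary might report the distribution of answers, the share of agreeing answers (A or TA), and the median level. The following is a minimal sketch: the question identifiers follow the structure above, but the answers in the example are invented placeholders, not the study's data.

```python
from collections import Counter

SCALE = ["TD", "D", "U", "A", "TA"]  # ordered from total disagreement to total agreement


def summarize(responses: dict[str, list[str]]) -> dict[str, dict]:
    """Per-question answer distribution, share of A/TA answers, and lower median level."""
    summary = {}
    for question, answers in responses.items():
        counts = Counter(answers)
        ordered = sorted(answers, key=SCALE.index)
        summary[question] = {
            "distribution": {level: counts[level] for level in SCALE},
            "agreement": (counts["A"] + counts["TA"]) / len(answers),
            "median": ordered[(len(ordered) - 1) // 2],  # lower median on the ordinal scale
        }
    return summary


# Invented placeholder answers, only to show the output shape.
example = {"A.Q1": ["A", "TA", "TA", "U"], "D.Q2": ["A", "A", "D"]}
print(summarize(example))
```

Medians and agreement shares respect the ordinal nature of Likert data better than averaging the levels as if they were numbers.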

Figure 12 shows the results. Across all answers, user satisfaction is high. Besides interaction and usability, the questionnaire tested the system's ability to present data. This provided useful feedback on how well the system makes expert users aware of the management of the building under study. Although still at a preliminary stage, the system also showed a promising level of user acceptance, especially for the recommendation of interventions, supporting operators in taking the right actions at the right time. Sections A and B in particular obtained markedly positive ratings. They reflect, respectively, the system's ability to provide appropriate information to users and its ability to suggest interventions.

6 Conclusion

This study introduces an IoT-based architecture for protecting buildings that belong to Cultural Heritage. The novelty of the proposed system lies in the combination of three aspects: asset monitoring, predictive maintenance, and decision-making on the interventions to implement. The central element of the system is the use of IoT technologies. Asset monitoring data provide the starting point for managing and conserving Cultural Heritage, as well as valuable feedback on the management and effectiveness of applicable interventions. The system exploits Machine Learning techniques, supported by data management tools, to increase context awareness.

The proposed architecture was evaluated through the development of a prototype and a validation involving expert users. The experimental phases yielded promising results. The system effectively supports users in monitoring data, in scheduling maintenance interventions efficiently, and in choosing a suitable combination of conservation interventions. Future developments include expanding the dataset, which could improve the system, especially in decision-making. Introducing new control parameters and actuators could also lead to more comprehensive management of the monitored asset.

Data availability

The data supporting the findings of this study are available upon request from the corresponding author. Specific datasets are not publicly available due to confidentiality or proprietary considerations.

Abbreviations

- IoT: Internet of Things
- ICT: Information and Communication Technologies
- CH: Cultural Heritage
- DT: Digital Twin
- ML: Machine Learning
- PM: Predictive Maintenance
- AE: AutoEncoder
- AD: Anomaly Detection
- SHM: Structural Health Monitoring
- kNN: k-Nearest Neighbor

References

Basu A et al (2023) Digital Restoration of Cultural Heritage With Data-Driven Computing: a Survey. IEEE Access 11:53939–53977. https://doi.org/10.1109/ACCESS.2023.3280639

Attanasio A, Maravalle M, Muccini H, Rossi F, Scatena G, Tarquini F (2022) Visitors flow management at Uffizi Gallery in Florence, Italy. Inf Technol Tour 24(3):409–434. https://doi.org/10.1007/s40558-022-00231-y

Casillo M, Guida CG, Lombardi M, Lorusso A, Marongiu F, Santaniello D (2022) Predictive preservation of historic buildings through IoT-based system. In: IEEE 21st Mediterranean Electrotechnical Conference (MELECON). IEEE, pp 1194–1198. https://doi.org/10.1109/MELECON53508.2022.9842965

Liang X, Liu F, Wang L, Zheng B, Sun Y (2023) Internet of Cultural things: current research, challenges and opportunities. Computers Mater Continua 74(1):469–488. https://doi.org/10.32604/cmc.2023.029641

Menaguale O (2023) Digital Twin and Cultural Heritage – The Future of Society built on history and art. In: The Digital Twin. Springer International Publishing, Cham, pp 1081–1111. https://doi.org/10.1007/978-3-031-21343-4_34

Choi H, Kim S (2023) Proposal of smart guide system for cultural heritage of archaeological sites using IoT-focusing on Hoeamsa Temple site in Yangju, Korea. Math Biosci Eng 20(5):8745–8765. https://doi.org/10.3934/mbe.2023384

D’Aniello G, Gaeta M, Reformat MZ (2017) Collective Perception in Smart Tourism Destinations with Rough Sets. In: 3rd IEEE International Conference on Cybernetics (CYBCONF). IEEE, pp 1–6. https://doi.org/10.1109/CYBConf.2017.7985765

Rossi M, Bournas D (2023) Structural Health Monitoring and Management of Cultural Heritage structures: a state-of-the-art review. Appl Sci 13(11):6450. https://doi.org/10.3390/app13116450

De Simone MC, Guida D (2020) Experimental investigation on structural vibrations by a new shaking table, pp 819–831. https://doi.org/10.1007/978-3-030-41057-5_66

Casillo M, Colace F, Gupta BB, Lorusso A, Marongiu F, Santaniello D (2022) A Deep Learning Approach to Protecting Cultural Heritage Buildings Through IoT-Based Systems. In: IEEE International Conference on Smart Computing (SMARTCOMP). IEEE, pp 252–256. https://doi.org/10.1109/SMARTCOMP55677.2022.00063

Dembele SP, Bellatreche L, Ordonez C (2020) Towards Green Query Processing - Auditing Power Before Deploying. In: IEEE International Conference on Big Data (Big Data). IEEE, pp 2492–2501. https://doi.org/10.1109/BigData50022.2020.9377819

Lorusso A, Celenta G (2023) Internet of things in the Construction industry: a General Overview, pp 577–584. https://doi.org/10.1007/978-3-031-31066-9_65

García-Valldecabres J, Galiano-Garrigós A, Meseguer LC, López González MC (2021) HBIM Work methodology applied to preventive maintenance. A State of the Art Review, pp 157–169. https://doi.org/10.2495/BIM210131

Bertolin C, Cavazzani S (2022) Potential of frost damage of off-ground foundation stones in Norwegian stave churches since 1950 using land surface temperature. Heliyon 8(11):e11591. https://doi.org/10.1016/j.heliyon.2022.e11591

Huang Q (2023) Current situation and path of foreign minority language protection based on Internet of Things from the perspective of ethnic identity. J Comput Methods Sci Eng 23(5):2677–2686. https://doi.org/10.3233/JCM-226899

Wang S, Chen Y, Liang D, Zhang L (2023) Development of Wushu culture industry using internet of things technology: a case study of Anhui Province, China. Heliyon 9(11):e21732. https://doi.org/10.1016/j.heliyon.2023.e21732

Tarani F, Manganelli Del Fà R, Riminesi C (2020) Towards IoT monitoring of street-side monuments: the Florentine Dietrofront as a case study. IOP Conf Ser Mater Sci Eng 949(1):012005. https://doi.org/10.1088/1757-899X/949/1/012005

Riminesi C, Manganelli Del Fà R, Vettori S, Tarani F, Tiano P (2022) Towards Preventive Conservation of Stone artefacts in Historical Gardens by Decay Monitoring. In: Handbook of Cultural Heritage Analysis. Springer International Publishing, Cham, pp 1121–1136. https://doi.org/10.1007/978-3-030-60016-7_38

Lucchi E (2023) Digital twins for the automation of the heritage construction sector. Autom Constr 156:105073. https://doi.org/10.1016/j.autcon.2023.105073

Yu S, Wang Y (2023) The application of traditional Chinese cultural elements in urban street landscape using the Internet of Things and deep learning. J Intell Fuzzy Syst 45(6):11381–11395. https://doi.org/10.3233/JIFS-232292

Kumoratih D, Aprilia HD, Syaravina S, Utoyo AW (2023) ‘My Ancestors were Seafarers!’: New Media Design to Restore Collective Memory of Maritime Cultural Heritage in Public Spaces. E3S Web Conf 426:02034. https://doi.org/10.1051/e3sconf/202342602034

Yun H (2023) Combining Cultural Heritage and Gaming experiences: enhancing location-based games for Generation Z. Sustainability 15(18):13777. https://doi.org/10.3390/su151813777

Muñoz R, Lourenço PB (2019) Mechanical behaviour of metal anchors in historic Brick Masonry: An Experimental Approach, pp 788–798. https://doi.org/10.1007/978-3-319-99441-3_85

De Simone MC, Lorusso A, Santaniello D (2022) Predictive maintenance and Structural Health Monitoring via IoT system. In: 2022 IEEE Workshop on Complexity in Engineering (COMPENG). IEEE, pp 1–4. https://doi.org/10.1109/COMPENG50184.2022.9905441

Yin C, Zhang S, Wang J, Xiong NN (2022) Anomaly Detection based on Convolutional Recurrent Autoencoder for IoT Time Series. IEEE Trans Syst Man Cybern Syst 52(1). https://doi.org/10.1109/TSMC.2020.2968516

Zhao T, Zheng Y, Gong J, Wu Z (2022) Machine learning-based reduced-order modeling and predictive control of nonlinear processes. Chem Eng Res Des 179. https://doi.org/10.1016/j.cherd.2022.02.005

Grincheva N (2023) ‘Contact zones’ of heritage diplomacy: transformations of museums in the (post)pandemic reality. Int J Cult Policy 29(1). https://doi.org/10.1080/10286632.2022.2141721

Cheng JCP, Chen W, Chen K, Wang Q (2020) Data-driven predictive maintenance planning framework for MEP components based on BIM and IoT using machine learning algorithms. Autom Constr 112:103087. https://doi.org/10.1016/j.autcon.2020.103087

Laborda J, García-Castillo AM, Mercado R, Peiró-Vitoria A, Perles A (2022) From concept to validation of a wireless environmental sensor for the integral application of preventive conservation methodologies in low-budget museums. Herit Sci 10(1):197. https://doi.org/10.1186/s40494-022-00837-9

Maksimovic M, Cosovic M (2019) Preservation of Cultural Heritage Sites using IoT. In: 2019 18th International Symposium INFOTEH-JAHORINA (INFOTEH). IEEE, pp 1–4. https://doi.org/10.1109/INFOTEH.2019.8717658

Lorenzoni F, Casarin F, Modena C, Caldon M, Islami K, da Porto F (2013) Structural health monitoring of the Roman Arena of Verona, Italy. J Civ Struct Health Monit 3(4):227–246. https://doi.org/10.1007/s13349-013-0065-0

Bezas K, Komianos V, Koufoudakis G, Tsoumanis G, Kabassi K, Oikonomou K (2020) Structural Health Monitoring in Historical Buildings: A Network Approach. Heritage 3(3):796–818. https://doi.org/10.3390/heritage3030044

Trigona C, Costa E, Politi G, Gueli AM (2022) IoT-Based microclimate and vibration monitoring of a painted canvas on a wooden support in the Monastero of Santa Caterina (Palermo, Italy). Sensors 22(14). https://doi.org/10.3390/s22145097

Mitro N, Krommyda M, Amditis A (2022) Smart tags: IoT sensors for Monitoring the Micro-climate of Cultural Heritage monuments. Appl Sci (Switzerland) 12(5). https://doi.org/10.3390/app12052315

Ribera F, Nesticò A, Cucco P, Maselli G (2020) A multicriteria approach to identify the Highest and best use for historical buildings. J Cult Herit 41:166–177. https://doi.org/10.1016/j.culher.2019.06.004

Wang Q, Yang C, Lu J, Wu F, Xu R (2020) Analysis of preservation priority of historic buildings along the subway based on matter-element model. J Cult Herit 45:291–302. https://doi.org/10.1016/j.culher.2020.03.003

Liu Y, Wang F, Liu K, Mostacci M, Yao Y, Sfarra S (2023) Deep convolutional autoencoder thermography for artwork defect detection. Quant Infrared Thermogr J. https://doi.org/10.1080/17686733.2023.2225246

Sushma SN, Sharada B (2022) A Convolutional Autoencoder based Keyword Spotting in Historical Handwritten Devanagari Documents. In: 5th International Conference on Inventive Computation Technologies (ICICT 2022), Proceedings. https://doi.org/10.1109/ICICT54344.2022.9850900

Dembele SP, Bellatreche L, Lorusso A, Marongiu F, Santaniello D (2023) In-Memory Database Query Energy Estimation: Modeling & Green Strategy Support. In: IEEE World Conference on Applied Intelligence and Computing (AIC). IEEE, pp 278–285. https://doi.org/10.1109/AIC57670.2023.10263900

Garouani M, Ahmad A, Bouneffa M, Hamlich M (2023) Autoencoder-kNN meta-model based data characterization approach for an automated selection of AI algorithms. J Big Data 10(1):14. https://doi.org/10.1186/s40537-023-00687-7

Shishido H, Kim H, Kitahara I (2019) Super Long Interval Time-Lapse Image Generation for Proactive Preservation of Cultural Heritage Using Crowdsourcing. In: Proceedings of the 2019 IEEE International Conference on Big Data (Big Data 2019). https://doi.org/10.1109/BigData47090.2019.9006399

Colace F, Elia C, Guida CG, Lorusso A, Marongiu F, Santaniello D (2021) An IoT-based Framework to Protect Cultural Heritage Buildings. In: Proceedings of the 2021 IEEE International Conference on Smart Computing (SMARTCOMP 2021). https://doi.org/10.1109/SMARTCOMP52413.2021.00076

Bäuerle A, van Onzenoodt C, Ropinski T (2021) Net2Vis – A visual grammar for automatically generating publication-tailored CNN architecture visualizations. IEEE Trans Vis Comput Graph 27(6). https://doi.org/10.1109/TVCG.2021.3057483

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.

Author information


Contributions

F.C. and D.S. led the conceptualization and design of the study, setting out the research objectives and methodological approach. M.C. and A.L. were involved in gathering data, while R.G. and C.V. contributed to data processing and result accuracy. A.L., R.G., and C.V. collaborated on the initial draft of the article, while M.C., F.C., and R.G. played a crucial role in revising and refining the manuscript to ensure consistency and quality. Throughout the research and writing process, F.C. and D.S. provided supervision and guidance, leveraging their academic and professional expertise to fulfill the research objectives.


Ethics declarations

Ethical approval

Every method employed in research with human participants was conducted under the ethical norms of the respective institutional and/or national research committee, as well as the 1964 Helsinki Declaration and its subsequent updates or equivalent ethical guidelines.

Informed consent

Consent was secured from all participants involved in the study.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Casillo, M., Colace, F., Gaeta, R. et al. Revolutionizing cultural heritage preservation: an innovative IoT-based framework for protecting historical buildings. Evol. Intel. (2024). https://doi.org/10.1007/s12065-024-00959-y


DOI: https://doi.org/10.1007/s12065-024-00959-y