Abstract

Eyewitnesses to crimes may seek the perpetrator on social media prior to participating in a formal identification procedure, but the effect of this citizen enquiry on the accuracy of eyewitness identification is unclear. The current study used a between-participants design to address this question. Participants viewed a crime video, and after a 1–2-day delay were either exposed to social media including the perpetrator, exposed to social media that substituted an innocent suspect for the perpetrator, or not exposed to social media. Seven days after viewing the crime video, all participants made an identification from a video lineup. It was predicted that exposure to social media that did not contain the guilty suspect would reduce the accuracy of subsequent identifications. Analysis revealed no association between social media exposure and lineup response for target present lineups. For target absent lineups, there was a significant association between social media exposure and lineup response, but this was driven by a higher number of correct rejections for participants who saw the guilty suspect on social media. The results suggest that at least in some circumstances, witnesses searching social media do not have a negative effect on formal ID procedures.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The easy access to information afforded by the worldwide web offers many advantages, but the negative impact it has had on the legal system is well documented. Case details that were easily guarded in the pre-internet age are now freely available, which may compromise a defendant’s right to a fair trial. High-profile contempt of court convictions for jurors who investigated a defendant’s previous convictions online (BBC News 2017), contacted a defendant via social media (BBC News 2011), or canvassed the opinions of their friends in deciding the outcome of a case (Khan 2008) illustrates the breadth of the problem; they also show that courts are responding to the threat within existing legal frameworks. However, online citizen enquiry is not limited to juror activity during criminal trials, as crime victims and eyewitnesses may also conduct online investigations shortly after experiencing a crime in order to identify the perpetrator (Mack and Sampson 2013). As this type of online citizen enquiry takes place during the investigatory stage, it may have implications for the evidence gathered by the police, and for the outcome of formal visual identification procedures.

The term ‘web sleuthing’ has been adopted to describe such public use of online resources to conduct amateur crime investigations (Yardley et al. 2018). A high-profile example of this occurred in response to the Boston Marathon bombing in 2013, when ‘Reddit’ users crowd-sourced imagery from the crime scene and conducted their own enquiry, concurrent with the official police investigation (Nhan et al. 2017). The citizen investigators publicly named several suspects, resulting in harassment and distress for the implicated individuals and their families (Lee 2013). The official investigation revealed that none of those accused by the ‘web sleuths’ had any involvement in the attacks (Lee 2013; Nhan et al. 2017), and Reddit issued a public apology for their role in the affair (BBC News 2013). While this case illustrates the problems of amateur investigations, there can also be advantages. For example, in response to a police request for help in identifying the perpetrators of a hate crime in Philadelphia, a group of Twitter users worked collectively to match police CCTV footage to social media images. They helped identify several of those involved in the attack, and an investigating officer ‘tweeted’ his thanks to the citizen investigators (Shaw 2014).

The previous examples are of large-scale collective public activity in response to high-profile crimes, but those who have directly witnessed a criminal act may also conduct investigations on a smaller scale. Taking advantage of the unrestricted access to images and personal data that may be offered by social media, an eyewitness might attempt to make a ‘Facebook identification’ (Mack and Sampson 2013), in advance of attending an official police lineup. These actions may be well-intentioned, and the appeal of an immediate online identification is easy to see; however, in many jurisdictions, visual identification of criminal suspects is governed by strict guidelines. In England and Wales, police follow guidelines for showing video lineups as set out by Code D of the Police and Criminal Evidence Act (PACE 1984; Codes of Practice 2017) and police officers must adhere to these regulations to avoid prejudicing the identification process. Informal identifications via social media bypass the safeguards enshrined in a properly conducted police lineup, which can create complications when these cases come to trial, and social media identifications have resulted in appeals in courts in the UK.

There have been several documented instances in the UK when witnesses have used Facebook to conduct their own investigations prior to a formal identification procedure. One example is the case of Daniel McGill and Gordon Alexander who were convicted of a robbery after being identified through Facebook by their victim, who relayed the information to the police (R v Alexander and McGill 2012). Both men were then selected from a subsequent video parade; however, the Court of Appeal claimed the identification from Facebook had been unfair, as the evidence had not been presented at court (Hargreaves 2020). In another more recent case, three eyewitnesses to a fight that involved the victim being stabbed were all shown a social media image of the defendant by a third party prior to a formal identification procedure (R v Phillips 2020). During the trial, the judge specifically addressed the identification from social media and asked each witness in turn whether the defendant was the person they had witnessed commit the offence or simply the person whose social media image they had been shown. The Court of Appeal emphasised that where an identification had been made via social media, the jury should consider the weakness of this form of identification, as during a formal identification procedure (e.g. lineup), the witness might be identifying the person viewed on social media, rather than the actual offender (Gledhill and Noble 2020).

In response to the increase of searches for defendants on social media, guidelines in England and Wales (PACE 1984; Codes of Practice 2017) have been updated to detail how evidence should be recorded in the wake of a social media identification, but the update does not consider the effect of social media use on the accuracy of subsequent formal identifications. There has, however, been guidance from the National Visual and Voice Identification Strategy Group (NVVISG) that recommends that the identification officer obtains as much information about the informal identification to ensure that the best evidence is produced for court. This evidence would include not only the image(s) that the witness saw but also what the witness did, such as how they searched social media or were able to see the image and also why they did it (Kirk et al. 2014). The guidance does not state whether the informal identification of social media images will influence later formal identification from a video lineup, and as this a relatively novel phenomenon, psychological research to inform this issue is also lacking.

Although there has been little, if any, research directly exploring the impact of searching social media on an eyewitness’s subsequent decision at a lineup, there has been a considerable amount of research exploring the impact of other forms of post-event information on eyewitness memory more generally. In a review, Loftus (2005) suggested that in the real-world, a witness’s memory may be affected by misinformation presented by conversing with other witnesses, leading questions asked by law enforcement personnel and by media coverage. Paterson and Kemp (2006) compared the impact of these three sources of potential post-event information, finding that where incorrect information was presented that all three can adversely affect memory. Interestingly, ‘co-witness’ information (resulting from two witnesses conferring) appeared to exert a more powerful influence than media reports and even leading questions, a result observed previously in research on co-witness effects (e.g. Shaw 1997).

Much of the research on the effects of post-event information on eyewitness memory has focused on memory for details of the event, but there are also studies that have shown both verbal and visual forms of post-event information can affect memory for the perpetrator’s face (Sporer 1996). Loftus and Greene (1980) demonstrated that memory of the perpetrator’s appearance could be distorted by reading a misleading verbal description, whilst the effects of visual forms of post-event information have been explored in studies examining informal identification procedures, such as mugshot inspection (where the police show eyewitnesses ‘mugshot’ images of suspects prior to holding an official lineup), and street identification (where the police take a witness to a particular place, often driving around the vicinity of the crime scene, to see if they can spot the perpetrator) illustrates the potential risks (.g. Blunt and McAllister 2009; Brown et al. 1977; Godfrey and Clark 2010; Gorenstein and Ellsworth 1980; Memon et al. 2002; Valentine et al. 2012). A meta-analysis of 32 studies examining the effect of an intervening mugshot task on lineup accuracy revealed that this both reduced the number of correct identifications and increased the incidence of innocent suspects being selected from a lineup (Deffenbacher, et al. 2006). In addition, there is evidence that as mugshot inspection involves the witness in multiple identification procedures, it can create a ‘commitment effect’, such that a witness who selects a mugshot image is more likely to select that same person in a subsequent lineup, regardless of the accuracy of the initial decision (e.g. Blunt and McAllister 2009; Brown et al. 1977; Gorenstein and Ellsworth 1980; Memon et al. 2002). This effect has also been observed in experimental investigations of street identification which has revealed that repeated identification procedures can increase the choosing of foils, and innocent suspects, but often have little effect on guilty suspects (e.g. Godfrey and Clark 2010; Valentine et al. 2012). Evidence for the commitment effect has also found in an analysis of real-world police data, where lineups were conducted following street identifications. In this research, the majority (84%) of suspects identified in the street were later identified from a video lineup; however, whether those suspects were later found guilty in court was not known (Davis et al. 2015).

In the UK video, lineups now largely replaced live lineups, and research has shown that video lineups are a less biased than live lineups in actual criminal cases (Valentine et al. 2003) and less stressful than live lineups (Brace et al. 2009). When comparing video lineups and static photo lineups, video lineups can reduce the false choosing of lineup members from target absent lineups as compared to photo lineups for adults and children, without reducing the correct identifications from target present lineups (Havard et al. 2010; Valentine et al. 2007). Furthermore, when witnesses are shown a video lineup, to provide safeguards against mistaken recognition, they are always told that person they saw ‘may, or may not, be present and if they cannot make an identification, they should say so’ and they are shown the lineup twice before being asked to make a decision (PACE 1984; Codes of Practice 2017).

Under PACE code D (PACE 1984; Codes of Practice 2017), a video lineup is the preferred method of suspect identification, and the shortcomings of informal police identification methods are recognised. If suspects are identified via uncontrolled viewing of films or photographs, such as social media, then according to the PACE guidelines, the witness should be asked to give as much detail as possible in regard to the circumstances and conditions under which the viewing took place. Although there is some limited guidance on procedures for when a witness views a social media image prior to seeing a formal identification procedure (Kirk et al. 2014; PACE 1984; Codes of Practice 2017), the police have no control over the amateur use of social media sites for suspect identification, and the impact that this practice has on the accuracy of a subsequent video line-up identification is unknown.

The aim of the current study is to investigate the influence of social media exposure of potential suspects on the accuracy of identification from a subsequent video lineup. As previous research has shown that intervening mugshot exposure increases the rate of false identifications in lineups, it is predicted that exposure to intervening target absent social media images will reduce accuracy in the subsequent lineup task in the same way.

Method

Participants

One hundred and twenty members of staff at The Open University participated in the experiment (96 female). Participants received a £5 shopping voucher as recompense for their time. Participants were aged between 22 and 67 years (M = 43.8, SD = 10.8), and all had normal, or corrected-to-normal, vision.

Design

The experiment employed a 3 × 2 between-participants design. The first factor was 'Type of Social Media Exposure’, with three levels: Guilty Suspect (exposure to social media containing the perpetrator), Innocent Suspect (exposure to social media containing an innocent suspect), and Control (no social media exposure). The factor was Lineup Type, with two levels: Target Present (guilty suspect included in the lineup) and Target Absent (innocent suspect included in the lineup in place of guilty suspect). The first dependent variable was accuracy on the lineup task. In the Target Present (TP) lineups, participants could make one of three response types: a correct identification (Hit), a foil identification (False Alarm), or an incorrect rejection (Miss). In Target Absent (TA) lineups, participants could make one of three response types: a correct rejection, an innocent suspect identification, or a foil identification. Data from the TP and TA lineups were analysed separately. The second dependent variable was confidence, measured on a 7-point scale from 1 (very unsure) to 7 (very sure).

Materials

A short mock crime video was created using a Caucasian male as the target. The film showed two seated women talking while a man stole a handbag from the back of one of their chairs. The man is then seen outside removing the handbag’s contents before throwing the bag away. The film lasted approximately 1 min and 30 s, and the target was visible in both full-face and profile views during the film.

To expose participants to social media images, an interactive mock social media site called “Friendface” was created using Microsoft PowerPoint. An event page was created for a fake community centre open day, where the mock crime was purported to have taken place. This page contained photos from the event, and names and thumbnail pictures of people who had indicated interest in attending. There were twelve identities in the “Attending” category, and a further twelve in the “Maybe” category, with equal numbers of men and women in each group. By clicking on these thumbnail images, participants could view the “profile pages” of these individuals; these pages included a larger version of the thumbnail image profile picture, and three further images of each individual.

Photos typical of those used on social media were used to populate the profile pages with images. For the experimental manipulation, two versions of a profile page were created for the character ‘David Brown’, one contained photos of the suspect in the crime video (the Guilty Suspect condition), and the other contained photos of the innocent suspect (the Innocent Suspect condition). Real photos were supplied by the guilty and innocent suspect actors, taken from their personal social media accounts. The actors were matched in terms of age, build, and general appearance.

The profile, and every other aspect of the social media site, was otherwise the same in both conditions, and the profile always appeared in the ‘maybe attending’ category on the event page. The images for the additional 23 profile pages were obtained from the People In Photo Albums (PIPA) dataset (Zhang et al. 2015), consisting of over 60,000 instances of around 2000 individuals, which originated from Flickr photo albums.

Four 9-person video lineups were created using PROMAT Video Identification Parade Software. Half of the lineups were Target Present (TP) and half Target Absent (TA). The Promat software was used to vary the position of the target in the line-up across participants. The guilty suspect and innocent suspect were filmed using standard PROMAT guidelines. The films showed the head and shoulders of each person framed against the green PROMAT background. First the suspects are shown looking straight at the camera, and then they are shown turning their head to the right, then to the left, then back to facing straight ahead. Foils were chosen from the PROMAT database by searching based on keywords related to the suspect’s description (sex, ethnicity, age range, hair style), which yielded a selection of potential foils that matched the suspect’s general appearance. The same foils were used for TP and TA conditions. All of the videos for the lineup were filmed under the same lighting conditions.

Procedure

The experiment took place over three sessions. During the first session, participants took part in a briefing session and provided basic demographic information, before viewing the crime video. This session lasted approximately 10 min.

The second session took place either 1 or 2 days later. At the start of the second session, all participants completed the filler task, the Need for Closure Questionnaire (Kruglanski et al. 2013), which was presented on-screen using Qualtrics software (Qualtrics, Provo, UT). The Need for Closure Questionnaire (Kruglanski et al. 2013), which consists of 42 questions designed to assess an individual’s need for cognitive closure (and a further five which form a ‘lie scale’), was administered to all participants as a filler task.

After completing the questionnaire, the participants were then randomly assigned to one of three social media exposure conditions. Those in the experimental conditions viewed the “Friendface” social media site and were given time to explore the site freely. In the ‘Guilty suspect’ condition, the perpetrator was included in the social media content; in the ‘Innocent Suspect’ condition, an innocent suspect replaced the perpetrator in the social media content. Participants in each of the two experimental conditions were asked to search the mock social media site for the person they had seen committing the theft in the video. They were free to explore the site for as long as they wanted. When they were finished exploring the site, they were asked to tell the experimenter if the perpetrator was present or not, and if present, to supply the name from the social media profile. Participants in the control group did not engage with social media after completing the filler task, so did not make any identification during this session.

The third session took place 7 days after the initial session. All participants were required to make an identification from a PROMAT video lineup and to rate their confidence in this decision. Each participant was tested individually. Half of the participants viewed a TP lineup, and half viewed a TA lineup. Prior to viewing the lineup, the participants were told ‘Today I am going to show you a video that has pictures of different people in it and the man you saw in the film may or may not be there. We will watch the video twice. When we’ve watched the video I will ask you if you can see the man from the film and if you see him tell me what number he is’.

Following the PROMAT protocol, and Code D of PACE (PACE 1984; Codes of Practice 2017), each lineup was presented sequentially via a laptop, and the lineup was shown twice before the witness was asked to make a decision.

Results

Social Media Identification

When participants were exposed to the guilty suspect social media condition, 76.2% (32 out of 42) were able to correctly identify the guilty suspect from the social media, 14.3% (6) made incorrect rejections, and 9.5% (4) falsely identified an alternative. When participants were exposed to social media in the Innocent Suspect condition, 56.4% (22 out of 39) made correct rejections, 7.7% (3) falsely identified the matched distractor, and 35.9% (14) falsely identified another person on the social media site.

Video Lineup Identification

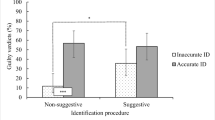

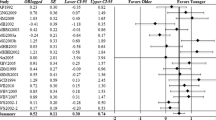

In the TP lineups, 85.2% of participants (52 out of 61) correctly identified the perpetrator, 6.6% (4) made an incorrect rejection, and the final 8.2% (5) made a false identification. In TA lineups, 45.8% made a correct rejection (27 out of 59), 13.6% (8) identified the matched distractor (I.e. the innocent suspect), and 54.2% (24) falsely identified another foil. Table 1 shows the percentage of response types in each category.

The Relationship Between Social Media Identification and Video Lineup Identification

The accuracy of social media identification was not significantly associated with accuracy of lineup identification for either TP (χ2 (1, N = 41) = .81, p = .37, V = .14) or TA (χ2 (1, N = 40) = .84, p = .36, V = .15] lineups.

To examine the effect of social media exposure on lineup identification decisions, separate analyses were conducted for TP and TA lineups.

Table 1 shows that for TP lineups, response patterns were similar across conditions, and the results of the chi-square analysis confirmed there was no significant effect of social media exposure on lineup response (χ2 (4, N = 61) = 2.1, p = .711, V = .13 n.s.). For TA lineups, the chi-square analysis showed the effect of social media exposure on lineup response was significant (χ2 (4, N = 59) = 9.6, p = .047, V = .29). Looking at Table 1, this is likely due to an elevated rate of correct rejections in the ‘Guilty Suspect’ condition compared to the other two conditions.

Confidence

The relationship between confidence and accuracy was examined separately for Target Absent and Target Present Lineups. A 3 x× 2 ANOVA with Social Media Condition (Control/Guilty Suspect/Innocent Suspect) and Lineup Accuracy (Correct/Incorrect) as factors was conducted for each of the two lineup types.

For TP lineups, the main effect of Lineup Accuracy was significant (F (1,55) = 7.67, p = .008, η2 = .122) and the mean confidence score was higher for those who made accurate lineup decisions (M = 5.6) than for those who made inaccurate decisions (M = 4.6). There was no main effect of social media condition on confidence (F (2,55) = 1.34, p = .268, η2 = .047), and the interaction was non-significant (F (2,55) = .106, p = .899, η2 = .004]. For TA lineups, the main effect of Lineup Accuracy was non-significant (F (1,53) = .003, p = .96, η2 < .000) and the mean confidence score was similar for those who made accurate lineup decisions (M = 4.84) and those who made inaccurate decisions (M = 4.82). There was no main effect of social media condition on confidence (F (2,53) = .585, p = .561, η2 = .022), and the interaction was non-significant (F (2,53) = 1.17, p = .319, η2 = .042).

Discussion

When the target was present in the lineup, accuracy was high across the three conditions, and exposure to social media did not significantly affect lineup identification decisions. When the target was absent from the lineup, social media exposure did affect identification decisions; however, this effect was driven by an increase in correct rejections for participants who saw the perpetrator on social media, rather than the predicted increase in false identifications following exposure to the guilty suspect on social media. For participants in the social media exposure groups, the accuracy of their social media identification was not related to the accuracy of their lineup identification.

Control participants did not make an identification from social media, so the PROMAT lineup, which took place 7 days after viewing the mock crime video, was the only form of identification for this group. While the PROMAT video lineup and the Social Media Identification differ on several dimensions, and therefore cannot be directly compared, the percentage correct identification for control TP PROMAT lineups (80%) is similar to the accuracy for the social media TP identification (76%). This suggests that where a guilty suspect is encountered on social media, the accuracy of the subsequent identification may not be substantially compromised by the medium in which they are encountered. The percentage of correct rejections in the social media identifications (56.4%) was higher than the percentage of correct rejections in the control TA PROMAT lineups (31.6%), which may reflect the higher number of realistic distractors present in the PROMAT lineups compared to the mock social media site, which contained few identities that resembled the guilty suspect.

The prediction that social media exposure would negatively affect the accuracy of subsequent identification decisions was not supported for either TA or TP lineups in the current study. This finding contrasts with the results of a meta-analysis on intervening mugshot inspection (Deffenbacher et al. 2006), which demonstrates that this practice is associated with a reduction in correct identifications, and an increase in false identifications, on subsequent lineup tasks. Similarly, research on street identifications suggests they negatively affect the accuracy of subsequent identifications (Godfrey and Clark 2010; Valentine et al. 2012). However, while exposure to intervening images is common to both the current study and previous research on intervening identification tasks, there are a number of differences between these approaches that may account for the conflicting findings.

First, mugshot studies aim to simulate situations in which police show the images to witnesses, so the images are standardised and are chosen to match the appearance of the perpetrator. In contrast, the current study aims to simulate the unconstrained viewing of social media images prior to making a formal identification, so the images and identities are necessarily more diverse. The fake social media site that was used to expose participants to facial images in the current study contained an array of 32 identities, 16 of which were male, but only one of these was specifically selected to be similar to the target in appearance. Examination of the false alarms from the social media identification data shows that only the matched distractor and two other identities were ever mistakenly identified from this stage of the study. This raises the possibility that there were few identities that could be confused for the target on the mock social-media site, and this may have contributed to a lower error rate in the current study; however, this explanation is not entirely consistent with the findings of Deffenbacher et al. (2006) meta-analysis.

In the studies included in Deffenbacher et al.’s meta-analysis, the number of mugshots participants viewed varied between 12 and 60 (median = 21), and studies in which participants viewed more than the median number of mugshots did not generate a reliably negative effect on the accuracy of subsequent identifications. The five studies that used fewer than the median number of interpolating mugshots did produce a reliable negative effect on subsequent identifications. This suggests that a small number of intervening mugshots may have a more damaging effect on accuracy than a large number. However, in the current study, the number of identities that could be confused with the target is low in comparison with the bottom half of the median-split in Deffenbacher et al.’s meta-analysis, so the possibility that there were too few realistic distractors on the mock social media site to generate an effect cannot be ruled out. On the other hand, the studies in the Deffenbacher et al. meta-analysis that exposed participants to a large number of images did not have a deleterious effect on the accuracy of subsequent lineup identifications. In the current study, participants were exposed to an array of 32 identities on a single page, so if the effect is driven by the quantity of faces seen, rather than the quality of the matches, this could explain the lack of impairment following social media exposure in the current task. Further research could vary the number and similarity of the identities viewed on social media to distinguish between these two competing explanations.

Although the current results contrast with research on mugshot exposure, they are similar to the findings of recent research investigating the effect of facial composite construction on the accuracy of subsequent lineup identification. Whilst a few studies have reported that composite construction appears to increase misidentification rates (e.g. Wells et al. 2005), the majority of studies have either reported no effect (e.g. Pike et al. 2020, 2019) or even a beneficial effect (e.g. Davis et al. 2014), and a recent meta-analysis concluded that composite construction does not appear to affect lineup decision (Tredoux et al. 2020).

Considering TP performance, accuracy was high across all three conditions, with relatively few participants incorrectly rejecting the target or making false identifications in the final lineup. This may indicate that the task was not difficult enough. The mock-crime video used in the current study was very high quality, and the perpetrator’s face was visible from a number of angles, including in close-up. While the aim was to simulate the experience of witnessing a live crime, the range and quality of the footage may have offered views that exceeded those of real-life encounters, leaving little room for error. In addition, for the TP lineups, even if participants picked someone else from the social media, that identity would not appear in the final lineup, so the perpetrator is the only identity in the lineup to which participants have been previously exposed. These factors should be explored in future studies.

Although there was no effect of social media exposure on TP lineup identifications there was a significant effect on TA lineups. Contrary to our prediction, this difference was driven by higher accuracy for those who saw the perpetrator on social media, relative to the control and distractor conditions. This suggests that social media exposure to the correct perpetrator helps participants to correctly reject target absent lineups, but social media exposure to an innocent suspect does not increase false alarms relative to controls. The finding that viewing additional images of the perpetrator is beneficial for subsequent facial identification is consistent with research on face memory conducted by Bruce (1982), which demonstrated that differences between images at study and test (e.g. change of expression) carry a high cost for correct identification. More recently, research on face matching has focussed on within-person variability, and the challenge of ‘telling people together’ (e.g. Jenkins et al. 2011; Burton et al. 2016). The current finding could be accommodated within this theoretical framework.

Jenkins et al. (2011) propose that an individual image is a poor representation of a face because appearance varies across images, and within-person variation can be greater than between-person variation. This means that two images of the same person may differ to a greater extent than images of two different people. In their study they administered a card-sorting task, which required people to divide sets of 40 facial images (20 × 2 individuals), into their constituent identities. When participants unfamiliar with the people in the images performed the task, the median number of piles was 7.5; when participants familiar with the identities sorted the same image sets, the median number of piles was 2. Interestingly, while participants made errors in separating each of the two identities into several different piles, they did not create piles that mixed the two identities. The errors were with ‘telling people together’, that is thinking there were more identities than were present, rather than mistaking two different identities as being the same person.

Jenkins et al. (2011) offer this finding as evidence that exposure to multiple images of a face under different conditions helps form a robust representation, incorporating individual variation in appearance. Importantly, as variation is idiosyncratic, the benefits of exposure to facial variation are specific and do not generalise to other identities. Further research has shown that exposure to multiple images of a face benefits performance on a subsequent matching task, which suggests that facial variability exposure offers a fast-track to face learning (Andrews et al. 2015). The pattern of results in the current study is consistent with the idea that exposure to multiple images of the perpetrator offered participants an understanding of the way in which his face varies, creating a more robust representation of that individual face that could not accommodate any of the foils. This finding builds on existing theory on within-person variability by demonstrating that exposure to facial variation also benefits face learning in an eyewitness memory paradigm.

While the current study offers useful insights into the effect of social media exposure on subsequent identification procedures, because the study was designed to simulate real-world conditions, it was necessarily very time-consuming and involved testing people over several sessions. As such, the study used a single target identity, and while it offers a useful starting point in investigating how social media exposure affects lineup identification, further research is required to validate the findings with multiple identities.

Importantly, this research demonstrates that exposure to interpolating images between witnessing a crime and taking part in a formal identification procedure does not always have a cost for accuracy. While previous research on mugshot exposure (e.g. Deffenbacher et al. 2006) and street identifications (e.g. Davis et al. 2015) demonstrates that these practices may harm subsequent lineup identifications, the current study offers the first indication that social media exposure does not have similar consequences. This suggests that the decisions of appeal courts to uphold convictions where a social media identification preceded a formal identification procedure may be well founded. However, in the current study the social media exposure was presented to participants without bias or additional context, which may not be reflective of most real-world experiences. In the appeal case cited (R v Alexander and McGill, 2012), there were additional factors (e.g. cues from friends, repeated viewing of the imagery) which may have a greater influence on subsequent decisions compared to social media exposure alone. Until the effect of these additional factors is established, social media identifications should be considered with caution.

As the use of social media by citizens conducting their own investigations is becoming more commonplace, policy makers need to consider the impact that this can have on more formal identification procedures. There have been some suggested guidelines from the National Visual and Voice Identification Strategy Group (NVVISG) that could help to inform legislation (Kirk et al. 2014) and from the legal profession (McGorrey 2016). Guidelines for the police suggest that suspect descriptions should be obtained as soon as possible from a witness, followed by a formal identification procedure, prior the opportunity for any self-directed searches on social media and that the police should also advice the witness to avoid searching for the suspect on social media (McGorrey 2016). The NVVISG guidelines suggest obtaining as much information as possible about the informal identification the witness had taken part in to ensure that the best evidence is produced. This evidence would consist of not only the image(s) that the witness saw but also the process by which they were obtained, and whether they were guided by another party to the images. Then when the evidence is presented in court, the jury should be made aware of the informal identification procedure and its potential pitfalls, such as a witness identifying someone they have seen on social media, rather than actually identifying the suspect they saw commit the offence.

References

Andrews S, Jenkins R, Cursiter H, Burton AM (2015) Telling faces together: Learning new faces through exposure to multiple instances. Q J Exp Psychol 68(10):2041–2050. https://doi.org/10.1080/17470218.2014.1003949

BBC News (2013) Reddit apologises for Boston bombings witch hunt. BBC News Technology. Retrieved from: http://www.bbc.co.uk/news/technology-22263020

BBC News (2011) Facebook juror sentenced to eight months for contempt. Retrieved from: http://www.bbc.co.uk/news/uk-13792080

BBC News (2017) Juror sentenced for internet research on convictions. Retrieved from: http://www.bbc.co.uk/news/uk-england-tyne-3981073

Blunt MR, McAllister HA (2009) Mug shot exposure effects: Does size matter? Law Hum Behav 33:175–182. https://doi.org/10.1007/s10979-008-9126-z

Brace NA, Pike GE, Kemp RI, Turner J (009) Eye-witness identification procedures and stress: a comparison of live and video identification parades. Int J Police Sci Manag 11(2):183–192. https://doi.org/10.1350/ijps.2009.11.2.122

Brown E, Deffenbacher K, Sturgill W (1977) Memory for faces and the circumstances of encounter. J Appl Psychol 62(3):311–318. https://doi.org/10.1037/0021-9010.62.3.311

Bruce V (1982) Changing faces: Visual and non-visual coding processes in face recognition. Bri J Psychol 73(1):105–116. https://doi.org/10.1111/j.2044-8295.1982.tb01795.x

Burton AM, Kramer RSS, Ritchie KL, Jenkins R (2016) Identity from variation: representations of faces derived from multiple instances. Cogn Sci 40(1):202–223. https://doi.org/10.1111/cogs.12231

Davis J, Valentine T, Memon A, Roberts A (2015) Identification on the street: a field comparison of police street identifications and video line-ups in England. Psychol Crime Law 21:9–27. https://doi.org/10.1080/1068316X.2014.915322

Davis JP, Gibson S, Solomon C (2014) The positive influence of creating a holistic facial composite on video line-up identification. Appl Cogn Psychol 28(5):634–639. https://doi.org/10.1002/acp.3045

Deffenbacher KA, Bornstein BH, Penrod SD (2006) Mugshot Exposure Effects: Retroactive Interference, Mugshot Commitment, Source Confusion, and Unconscious Transference. Law Hum Behav 30(3):287–307. https://doi.org/10.1007/s10979-006-9008-1

Gledhill S, Noble G (2020) The impact of social media on identification procedures. 3 Temple Gardens. https://www.3tg.co.uk/library/theimpactofsocialmediaonidentificationprocedures.pdf

Godfrey RD, Clark SD (2010) Repeated eyewitness identification procedures: Memory, decision-making, and probative value. Law Hum Behav 34:241–258. https://doi.org/10.1007/s10979-009-9187-7

Gorenstein GW, Ellsworth PC (1980) Effect of choosing an incorrect photograph on a later identification by an eyewitness. J Appl Psychol 65(5):616–622. https://doi.org/10.1037/0021-9010.65.5.616

Hargreaves, B. (2020, December 9) Social media and identification. Carmelite Chambers https://www.carmelitechambers.co.uk/blog/blog-social-media-and-identification

Havard C, Memon A, Clifford B, Gabbert F (2010) A comparison of video and static photo lineups with child and adolescent witnesses. Appl Cogn Psychol 24:1209–1221. https://doi.org/10.1002/acp.1645

Jenkins R, Van Montfort X, White D, Burton AM (2011) Variability in photos of the same face. Cognition 121(3):313–323

Kirk A, Waterman K, Monaghan A, Sherrington L (2014) Internet Social Media and Identification Procedures. Guidance produced by the National Visual and Voice Identification Strategy Group (NVVIS) https://library.college.police.uk/docs/APPREF/NVVIS-Guidance-on-Internet-Social-Media-and-Identification-Procedures.pdf

Khan U (2008, November 24) Juror dismissed from a trial after using Facebook to help make a decision. The Telegraph. Retrieved from: http://www.telegraph.co.uk

Kruglanski AW, Atash MN, De Grada E, Mannetti L, Pierro A (2013) Need for Closure Scale (NFC). Retrieved from https://www.mids.org, Measurement Instrument Database for the Social Sciences

Lee D (2013) Boston bombing: How internet detectives got it very wrong. BBC News Technology. Retrieved from http://www.bbc.co.uk/news/technology-22214511

Loftus EF (2005) Planting misinformation in the human mind: A 30-year investigation of the malleability of memory. Learn Mem 12(4):361–366

Loftus EF, Greene E (1980) Warning: Even memory for faces may be contagious. Law Hum Behav 4(4):323–334

Mack J, Sampson R. (2013) "Facebook Identifications". Criminal Law & Justice Weekly. 177, JPN 73.

McGorrey P (2016) But I was so sure if was him: How face could be making eyewitness identifications unreliable. Internet Law Bulletin. Retrieved from https://www.researchgate.net/profile/Paul-Mcgorrery/publication/298391277_But_I_was_so_sure_it_was_him_How_Facebook_could_be_making_eyewitness_identifications_unreliable/links/56e9101908aea51e7f3ba1ca/But-I-was-so-sure-it-was-him-How-Facebook-could-be-making-eyewitness-identifications-unreliable.pdf

Memon A, Hope L, Bartlett J, Bull, R (2002) Eyewitness recognition errors: The effects of mugshot viewing and choosing in young and old adults. Mem Cogn 30(8):1219–1227. https://doi.org/10.3758/BF03213404

Nhan J, Huey L, Broll R (2017) Digilantism: An analysis of crowdsourcing and the Boston Marathon bombings. British Journal of Criminology. 57(2):341–361. https://doi.org/10.1093/bjc/azv118

Paterson HM, Kemp RI (2006) Comparing methods of encountering post-event information: The power of co-witness suggestion. Appl Cogn Psychol 20(8):1083–1099

Pike GE, Brace NA, Turner J, Ness H, Vredeveldt A (2020) Advances in facial composite technology, utilizing holistic construction, do not lead to an increase in eyewitness misidentifications compared to older feature-based systems. Front Psychol 10, Article 1962. https://doi.org/10.3389/fpsyg.2019.01962

Pike GE, Brace NA, Turner J, Vredeveldt A (2019) The effect of facial composite construction on eyewitness identification accuracy in an ecologically valid paradigm. Crim Justice Behav 46(2):319–336. https://doi.org/10.1177/0093854818811376

Police and Criminal Evidence Act (1984) Codes of Practice, Code D (2017) Retrieved from: https://www.gov.uk/guidance/police-and-criminal-evidence-act-1984-pace-codes-of-practice

R v Alexander and McGill [2012] EWCA Crim 2768. https://www.3tg.co.uk/library/theimpactofsocialmediaonidentificationprocedures.pdf

R v Phillips (2020) EWCA CRIM126

Shaw E (2014) ‘Philly Hate Crime Suspects Tracked Down by Anonymous Twitter Hero’, Gawker, Retrieved from http://gawker.com/philly-hate-crime-suspects-tracked-down-by-anonymous-tw-1635661609/all

Shaw JS III, Garven S, Wood JM (1997) Co-witness information can have immediate effects on eyewitness memory reports. Law Hum Behav 21:503–523

Sporer SL (1996) Experimentally induced person mix-ups through media exposure and ways to avoid them. Adv Res Psychol Law pp 64–73

Tredoux CG, Sporer SL, Vredeveldt A, Kempsen K, Nortje A (2020) Does constructing a facial composite affect eyewitness memory? A research synthesis and meta-analysis. J Exp Criminol. Advance online publication. https://doi.org/10.1007/s11292-020-9432-z

Valentine T, Davis JP, Memon A, Roberts A (2012) Live showups and their influence on a subsequent video lineup. Appl Cogn Psychol 26(1):1–23. https://doi.org/10.1002/acp.1796

Valentine T, Darling S, Memon A (2007) Do strict rules and moving images increase the reliability of sequential identification procedures? Appl Cogn Psychol 21(7):933–949. https://doi.org/10.1002/acp.1306

Valentine T, Harris N, Colom Piera A, Darling S (2003) Are police video identifications fair to African-Caribbean suspects? Appl Cogn Psychol 17(4):459–476. https://doi.org/10.1002/acp.880

Wells GL, Charman SD, Olson EA (2005) Building face composites can harm lineup identification performance. Journal of Experimental Psychology: Applied 11(3):147–156. https://doi.org/10.1037/1076-898x.11.3.147

Yardley E, Thomas Lynes AG, Wilson D, Kelly E (2018) What’s the deal with ‘websleuthing’? News media representations of amateur detectives in networked spaces. Crime Media Cult 14(1):81–109. https://doi.org/10.1177/1741659016674045

Zhang N, Paluri M, Taigman Y, Fergus R, Bourdev L (2015) Beyond frontal faces: improving person recognition using multiple cues,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Boston: IEEE), 4804–4813.

Acknowledgements

We are grateful to Steff Reicher and Camilla Elphick who collected data for this study.

Funding

This work was supported by a Police Knowledge Fund grant from HEFCE and the Home Office.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Ethical Approval

The study adhered to the British Psychology Guidelines Code of Ethics and Conduct (2018) and received ethical approval from the Open University Human Research Ethics Committee (HREC).

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Havard, C., Strathie, A., Pike, G. et al. From Witness to Web Sleuth: Does Citizen Enquiry on Social Media Affect Formal Eyewitness Identification Procedures?. J Police Crim Psych 38, 309–317 (2023). https://doi.org/10.1007/s11896-021-09444-z

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11896-021-09444-z