Abstract

Different approaches to measuring instructional quality have been debated. Previous studies based on student ratings have primarily used class-average ratings. However, high within-classroom variability in students’ ratings might indicate that instruction caters to some, but not all, students. Therefore, we investigated student-reported instructional quality in mathematics classrooms by considering both the average student ratings and the rating heterogeneity within classrooms. Using a case-centered clustering approach, we aimed to detect meaningful configurations of the level and heterogeneity of student-reported instructional quality in terms of the Three Basic Dimensions (TBD): classroom management, cognitive activation, and student support. We analyzed data from N = 973 eighth-grade students in N = 106 classes. Using Latent Profile Analysis (LPA), we identified four classroom profiles, each comprising 20% to 28% of the sample. The profile with the lowest average ratings showed consistently high heterogeneity for all indicator variables, whereas the profile with the highest average ratings exhibited consistently low heterogeneity. Considering rating heterogeneity thus yielded additional insights into between-class differences in instructional quality. Furthermore, we explored how classrooms from the identified profiles differed regarding socio-economic status (SES) and mathematics-related characteristics (interest, intrinsic motivation, self-concept, and achievement).

1 Introduction

Over the last few decades, the structure of classroom teaching and quality of instruction have been investigated intensively, revealing a relationship with student outcomes (e.g., Hattie, 2009; Muijs et al., 2014).

Many researchers have examined the central dimensions of instructional quality, and numerous frameworks have been developed to analyze the complex phenomenon of instruction and its quality (e.g., Charalambous & Praetorius, 2018). While correlations between these dimensions have been studied extensively (e.g., Kunter et al., 2013; Lipowsky et al., 2009), little is known about how to characterize school classes regarding their overall instructional quality. It is still unclear whether there are typical configurations of instructional quality, for example, classes with consistently high or low quality across all dimensions, or classes with more complex patterns.

Prior research has measured objective instructional quality using, for example, video-based or live (in vivo) observations, teacher reports, or student ratings averaged over an entire class (Senden et al., 2022). However, instructional quality can also be seen from the perspective of individual students, raising the question of whether students within a given class perceive a specific dimension of instruction similarly. There is little evidence on whether students’ perceptions of the same lessons in the same class with the same teacher are homogeneous or heterogeneous. It is conceivable, for example, that high within-classroom variability might indicate that instruction caters to some, but not all, students.

Thus, this study aimed to investigate whether typical configurations of instructional quality in mathematics classrooms can be identified by considering both the average student ratings of instructional quality (aggregated at the class level) and their standard deviation, which expresses the heterogeneity of students’ perceptions. To this end, we identified classroom profiles of instructional quality based on both types of measures. The second, rather exploratory goal of this study was to investigate whether the classrooms in the identified profiles can be characterized by the demographic, subject-related motivational, and subject-related cognitive characteristics of their students. Specifically, we examined students’ socio-economic status (SES) as a demographic characteristic; mathematics-related interest, intrinsic motivation, and self-concept as motivational characteristics; and mathematics achievement as a cognitive characteristic.

In the context of the special issue, this study contributes to the consideration of instructional quality from the student perspective: it takes into account both average student ratings and the within-class heterogeneity of these ratings to describe and characterize typical configurations of student-reported instructional quality in mathematics classrooms.

2 Background

2.1 Conceptualizing and measuring instructional quality

Instructional practice is often regarded as a complex social situation in which many processes happen simultaneously (Doyle, 1986). Accordingly, researchers have adopted different approaches to conceptualizing instructional quality. According to Mu et al. (2022), instructional quality refers to observable characteristics of classroom instruction that are orchestrated by teachers and for which a connection to the desirable development of students’ learning outcomes is theoretically plausible or supported by empirical evidence. Following Berliner (2005), a conceptualization may center on whether teaching meets normative standards focusing on students’ holistic development (“good teaching”) or on whether students achieve the intended learning or motivational outcomes (“effective teaching”). Another possibility is to differentiate between surface structures (such as teaching methods or organizational characteristics) and deep structures, which relate to students’ engagement with learning materials or to the immediate teaching and learning process (Oser & Baeriswyl, 2001). Several reviews have described deep structures as positively associated with student learning (e.g., Helmke, 2009; Seidel & Shavelson, 2007).

To classify deep structures, Klieme et al. (2009) identified three core dimensions of instructional quality in video-based observations, which form the Three Basic Dimensions (TBD) framework: classroom management, cognitive activation, and student support. Classroom management involves coordinating and managing complex interactions in the classroom (such as dealing with and preventing disruptions), with the aim of making optimal use of learning time and minimizing non-instructional activities (Evertson & Weinstein, 2006). Cognitive activation involves exploiting the cognitive potential of challenging subject-related tasks, activating prior knowledge, and initiating content-related discourse in which students are encouraged to engage in higher-level thinking, for example, by explaining, sharing, and comparing their thoughts and solutions (Klieme et al., 2006; Lipowsky et al., 2009). Student support covers features of teacher-learner interaction, such as supportive teacher-student relationships, positive and constructive teacher feedback, a positive approach to student errors and misconceptions, individual learner support, and caring teacher behavior (Klieme et al., 2006). These three dimensions have formed the basis for a substantial body of national and international research, such as studies based on TIMSS (Nilsen & Gustafsson, 2016).

Other frameworks mainly differ in how instructional quality is operationalized and whether it is assessed based on subject-specific or generic aspects (e.g., Charalambous & Praetorius, 2018; Mu et al., 2022). For example, the Mathematical Quality of Instruction framework (MQI; Learning Mathematics for Teaching, 2011) advocates separating mathematical aspects from pedagogical methods. Such approaches focus primarily on conceptual clarity and address subject-related aspects. Some frameworks lie between the subject-specific and the generic, such as the TEDS-Instruct framework (Schlesinger et al., 2018), which incorporates both the TBD and content-specific aspects of instruction. Although many different frameworks have been developed, Mu et al. (2022) demonstrated the continued importance of the TBD: 63.5% of the identified measures of instructional quality dimensions (N = 1,351 out of 2,127) could be assigned to one of the core dimensions of the TBD. Thus, this widely used model provides a pragmatic clustering of a range of indicators identified in prior research.

Various approaches have been adopted to measure instructional quality; for a detailed discussion of their strengths and weaknesses, see Senden et al. (2022). Among these perspectives, student ratings have been controversial, as some authors doubt that students can rate instructional quality objectively (Aleamoni, 1999). However, recent studies have shown that student ratings provide reliable and valid measures, owing to students’ daily experiences with different teaching styles (e.g., Senden et al., 2023). Furthermore, based on longitudinal data from six education systems, Herbert et al. (2022) showed that student perceptions of instructional quality aggregated at the class level were reliable and stable across measurement points. Nevertheless, Fauth et al. (2014) pointed out that differences between the quality dimensions must be taken into account. Göllner et al. (2021) stated that student ratings are by no means generally inferior to alternative assessments, such as classroom observations or teacher self-reports. Still, they recommend a closer look at the characteristics of existing surveys to determine what can be learned about instructional quality from the students’ perspective.

Numerous studies have used student reports on TBD. For example, Kunter and Voss (2013) employed a questionnaire in the COACTIV study, which included scales on mathematics instruction and teachers (regarding instructional organization, the types of tasks used, or forms of social interaction). In the German BilWiss research program, Kunter et al. (2017) operationalized the three dimensions of TBD using several subdimensions, such as attention to student distractions and difficulties and dealing with disturbances, reflecting different classroom management aspects.

2.2 Students’ perspectives on instructional quality

Over the past four decades, research on instructional quality has shifted its focus away from discrete and directly observable teaching practices, teacher personalities, and teacher behavior in the classroom (Creemers & Kyriakides, 2015) towards an interactive Process-Mediation-Product Paradigm (Brophy, 2000, 2006; Brophy & Good, 1984). This approach highlights the interplay between instructional actions, methods, or approaches implemented by the teacher (processes) and the active engagement of students in utilizing the entailed learning opportunities (mediation), ultimately leading to student advancement (product; Praetorius et al., 2014).

Successful learning relies on students’ use of the learning opportunities provided, which may also be related to their individual perceptions of these opportunities. From this perspective, understanding student learning involves not only the objective instructional quality in a classroom but also how individual students perceive it. Lüdtke et al. (2006) described two levels of interpretation of student ratings of instructional quality: at the individual level, they represent individual students’ perceptions of the learning environment; aggregated at the classroom level, they indicate shared perceptions. Studies relying on student reports usually equate instructional quality with the average student ratings aggregated at the classroom level. However, it is also worth considering to what extent students within a class agree with each other about the quality of the instruction provided by their teacher (Lüdtke et al., 2009). For example, a teacher may generally handle students’ mistakes professionally; nevertheless, some students may perceive the teacher’s approach to their mistakes as disrespectful. Considering such deviations from the average perception could provide valuable insights for the ongoing discourse on instructional quality.

Examining classroom climate, Schenke et al. (2017) provided initial evidence that high within-classroom variability in perceptions of classroom climate can negatively affect students’ academic achievement. In this sense, measures of objective instructional quality must be interpreted in light of the heterogeneity of students’ perceptions of this quality: while high objective instructional quality with low heterogeneity may be desirable, a higher level of heterogeneity could be promising in cases of low instructional quality. In these (albeit undesirable) cases, at least some students appear to perceive the potential for gaining valuable insights from the instruction. When searching for typical configurations of instructional quality, both the average perceived level of instructional quality and its within-classroom variability should therefore be considered.

2.3 Instructional quality and classroom characteristics

As a process that arises from the interplay between teachers’ actions and students’ reactions to these actions, instructional quality can hardly be conceptualized completely independently of students’ learning prerequisites (Hattie, 2009; Seidel & Shavelson, 2007). Students’ prerequisites influence which learning opportunities they can benefit from; conversely, many teachers try to adapt their instruction to their students’ prerequisites (Tomlinson, 2014). While orchestrating individualized learning opportunities that cater to all students according to their prerequisites seems desirable (Brophy, 2000; Lipowsky et al., 2009), class-average prerequisites, such as average achievement, may be easier for teachers to diagnose and respond to (Baumert et al., 2010).

In addition to achievement, demographic characteristics such as socio-economic status and subject-specific motivational factors such as interest, intrinsic motivation, and self-concept may also be relevant to students’ use of learning opportunities. At both the individual student and the class level, the question arises as to what extent these characteristics are associated with a specific (higher or lower) level of instructional quality. Notably, bidirectional effects could explain such associations: high-quality teaching could lead to above-average student outcomes, and, conversely, teachers in higher-performing classes may be able to offer higher-quality and, in particular, more cognitively activating lessons. A similar reciprocal influence may exist at the class level between teaching and the development of motivational traits. Bidirectional effects are also conceivable at the individual level, for example, between the development of subject-related achievement and self-concept, or between perceived instructional quality and individual learning development.

3 Current study: goals and questions

This study identifies the typical configurations of the dimensions of student-reported instructional quality, considering average student ratings and their heterogeneity within classes. Following Klieme et al. (2009), we focused on the Three Basic Dimensions (TBD): classroom management, cognitive activation, and student support, further dividing student support into social and structuring support. Using a case-centered clustering approach that considers each classroom as a case (see Section 4.4), we addressed the following questions:

- Q1. Which classroom profiles can be identified among eighth-grade mathematics classrooms based on average student-reported instructional quality and its within-classroom variability? Specifically, we were interested in whether within-classroom variability contributes to cluster interpretability.

  - We expected four possible profiles characterized by different combinations of ratings and heterogeneity: (i) high ratings with low heterogeneity, (ii) low ratings with high heterogeneity, (iii) high ratings with high heterogeneity, and (iv) low ratings with low heterogeneity.

- Q2. Do the identified profiles of instructional quality differ regarding socio-economic status (SES) and mathematics-related characteristics (interest, intrinsic motivation, self-concept, and achievement)?

4 Method

The results presented here are part of the German research project Digitalization of classroom teaching in school (“Digitalisierung von Unterricht in der Schule” or “DigitUS” for short), funded by the Federal Ministry of Education and Research (01JD1830A). This project aimed to identify the key factors for successfully using digital tools in instruction (Stegmann et al., 2022). It comprised an intervention study on establishing professional learning communities to facilitate the effective use of digital tools in a train-the-trainer approach. This article presents the results of a student survey on the instructional quality of mathematics lessons conducted prior to the intervention study.

4.1 Sample

Data were collected in autumn 2021, at the first of two measurement points of the DigitUS project.

The initial sample included N = 2,396 eighth-grade students from N = 136 classes. Students responded voluntarily to questions on instructional quality regarding their last five lessons in either mathematics or biology (approximately half the class in mathematics and the other half in biology). This study focuses on the mathematics-related subsample, comprising N = 1,214 students from N = 134 classes. Furthermore, the students answered questions on their socio-economic status and mathematics-related characteristics (interest, intrinsic motivation, and self-concept) and participated in a test to assess their mathematics achievement. A total of 173 students were excluded because of incomplete organizational data (missing tokens specifying the students’ classes) or missing data on instructional quality. Thus, our preliminary sample included N = 1,041 students from N = 133 classes.

Further restrictions were applied: to obtain meaningful estimates of classroom means, only classes with data from at least five students were considered. As a result, 27 classes with a total of 68 students were excluded, yielding a final analysis sample of N = 973 students (48.4% female; 47.3% male; 1.8% diverse; 2.5% missing) from N = 106 classes.
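The class-size rule and the resulting sample flow can be checked with a few lines of code. The sketch below is illustrative only: the per-student list of class IDs and the helper name `eligible_classes` are hypothetical, and only the five-student threshold and the reported counts come from the text.

```python
from collections import Counter

MIN_STUDENTS = 5  # minimum class size stated in the text


def eligible_classes(class_ids, min_n=MIN_STUDENTS):
    """Return the IDs of classes with at least `min_n` students.

    `class_ids` holds one (hypothetical) class identifier per student.
    """
    counts = Counter(class_ids)
    return {cid for cid, n in counts.items() if n >= min_n}


# Sample flow reported in the text as (students, classes)
math_subsample = (1214, 134)
preliminary = (math_subsample[0] - 173, 133)        # incomplete records removed
final = (preliminary[0] - 68, preliminary[1] - 27)  # classes with < 5 students removed
print(preliminary, final)  # (1041, 133) (973, 106)
```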

These students attended 38 schools in three different school tracks, all in Bavaria, Germany (low-attaining track: Nstudents = 158, Nschools = 12; medium-attaining track: Nstudents = 359, Nschools = 13; and high-attaining track: Nstudents = 456, Nschools = 13).

4.2 Procedure

Before data collection, approval was obtained from the state Ministry of Education, and consent was obtained from the participants and their parents. Academic staff collected the data and informed the students that participation was anonymous and voluntary and that they could withdraw at any time. Including organizational details and breaks, the DigitUS survey lasted almost three school lessons (135 min).

4.3 Measures

Students’ individual perceptions of instructional quality over the last five mathematics lessons were measured using scales for classroom management, cognitive activation, and social and structuring student support. The reliability of the instructional quality scales ranges from acceptable to good (Table 1). Students responded to the items on a four-point Likert scale (0 = strongly disagree to 3 = strongly agree). For each student, we first calculated the mean score for each subscale and then a weighted mean score for each overall scale. For more information on the scales and subscales, see Kunter et al. (2017).
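The text does not specify how the subscale means were weighted. A minimal sketch, assuming each subscale mean is weighted by its number of items (so that, with complete data, the overall score equals the mean across all items of the scale), might look as follows; the item IDs and helper names are hypothetical, and missing responses are not handled.

```python
def subscale_means(responses, subscales):
    """Mean score (0-3) per subscale for one student.

    responses: dict item_id -> score, with reversed items already recoded
    subscales: dict subscale name -> list of item_ids
    """
    return {name: sum(responses[i] for i in items) / len(items)
            for name, items in subscales.items()}


def weighted_scale_score(sub_means, subscales):
    """Overall scale score: subscale means weighted by their item counts.

    The item-count weighting is an assumption; the text does not name the weights.
    """
    total_items = sum(len(items) for items in subscales.values())
    return sum(sub_means[name] * len(items)
               for name, items in subscales.items()) / total_items


# Hypothetical item IDs for two classroom-management subscales
subscales = {"monitoring": ["cm1", "cm2", "cm3"], "disturbances": ["cm4", "cm5"]}
responses = {"cm1": 3, "cm2": 2, "cm3": 1, "cm4": 0, "cm5": 2}
means = subscale_means(responses, subscales)   # {'monitoring': 2.0, 'disturbances': 1.0}
print(weighted_scale_score(means, subscales))  # 1.6
```

With this weighting, the example yields 1.6, the mean of all five items, whereas an unweighted average of the two subscale means would yield 1.5.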

4.3.1 Classroom management

Perceptions of classroom management were assessed using ten items from three subscales: attention to student distractions and difficulties / monitoring (e.g., “In class, our teacher made sure that we were paying attention.”), disturbances (e.g., “There was constant chatter in class.”, reversed), and wasting time (e.g., “Our teacher often started the lesson unpunctually.”, reversed).
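Reversed items, such as the disturbance and wasting-time examples, must be recoded before scale scores are computed. On the 0-3 response scale described above, a straightforward recoding is 3 minus the response. The sketch below assumes this standard approach (the text does not describe the recoding explicitly), and `reverse_code` is a hypothetical helper.

```python
SCALE_MIN, SCALE_MAX = 0, 3  # four-point response scale


def reverse_code(score, lo=SCALE_MIN, hi=SCALE_MAX):
    """Recode a negatively worded item so that higher values mean better quality."""
    if not lo <= score <= hi:
        raise ValueError(f"score {score} outside {lo}..{hi}")
    return lo + hi - score


# "There was constant chatter in class." answered with 3 (strongly agree)
print(reverse_code(3))  # 0
```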

4.3.2 Cognitive activation

For cognitive activation, 16 items across three subscales were employed: independence and obligation to provide reasoning while working on tasks / cognitive autonomy (e.g., “Our teacher often asks us to explain our work steps in detail.”), discursive treatment of different student solutions / discussion (e.g., “In class, we often discussed the different ways of working on the tasks.”), and high interaction pace without time for reflection (e.g., “Our teacher didn't give us enough time to think about questions.”, reversed).

4.3.3 Student support (social)

The scale for social student support encompassed three items from the subscale respectful and patient handling of mistakes / error culture (e.g., “Our teacher was patient when someone made a mistake in class.”).

4.3.4 Student support (structuring)

Structuring student support was measured with nine items from two subscales: diagnosis of difficulties in understanding / diagnostic competence of the teacher (e.g., “Our teacher noticed immediately if someone didn't understand something correctly.”) and adaptive relief for difficult tasks (e.g., “Our teacher gave good examples in class to make the tasks understandable.”).

Regarding Q2, we collected data on various student characteristics.

4.3.5 Socio-economic status (SES)

As in other studies (e.g., PISA; OECD, 2014), SES was assessed using a single item that asked about the number of books at home. Students could select one of the following five categories: 0–10, 11–25, 26–100, 101–200, or more than 200.

4.3.6 Mathematics-related student characteristics

The students’ mathematics-related characteristics were measured with scales on intrinsic motivation (four items, e.g., “I exert myself in mathematics lessons because I want to understand the material.”), interest (three items, e.g., “Mathematics is one of my favorite subjects.”), and self-concept (five items, e.g., “Math is easy for me.”). These scales were based on Wild et al. (2001). Students responded on a four-point Likert scale (0 = is not true to 3 = is exactly true).

Mathematics achievement was measured using a paper-and-pencil competence test developed in the German National Educational Panel Study (NEPS; Neumann et al., 2013). Students in the high-attaining track completed the medium test booklet for grade 9 (33 items), whereas students in the low- and medium-attaining tracks completed either the test booklet for grade 7 (26 items) or the easy test booklet for grade 9 (34 items). All items required either a multiple-choice or a short constructed response. The tests were IRT-scaled and showed good reliability.

4.4 Case-centered approaches and statistical analyses

Researchers have often employed variable-centered approaches to examine the key dimensions of instructional quality. Regarding TBD, for example, numerous empirical studies have confirmed the positive effects of successful classroom management on student learning (e.g., Doyle, 1986; Seidel & Shavelson, 2007). Similarly, cognitive activation is often described as positively associated with mathematics achievement when relevant learning prerequisites are controlled statistically (e.g., Baumert et al., 2010; Lipowsky et al., 2009). Student support, on the other hand, is mainly assumed to affect mathematics-related interest (e.g., König et al., 2021; Yu & Singh, 2018). However, the interplay of TBD requires a more holistic view, considering the complex interactions of these dimensions (Decristan et al., 2015). This is also suggested by Praetorius et al. (2018), whose analysis of the instructional quality of three lessons indicated that teachers may show different patterns regarding TBD.

Case-centered approaches (treating classrooms as cases) make it possible to consider all three dimensions simultaneously and thus to capture typical configurations of the TBD in a teacher’s instruction. In learner research, these approaches are commonly known as person-centered (e.g., Bauer, 2022). Latent Profile Analysis (LPA) can be advantageous compared with traditional cluster analysis methods because, as a model-based approach, it allows statistical fit indices to guide the choice of the number of profiles and assigns cases to profiles probabilistically. According to Bauer (2022), LPA is a model-based exploratory method that classifies similar individuals or objects (in our study, classrooms) into a priori unknown subgroups that are homogeneous regarding certain indicator variables (in our study, the perceived average level of instructional quality and its within-classroom variability). Notably, finer-grained case definitions, such as treating single lessons as separate cases, and coarser definitions, such as treating the teacher as a case, are also possible.
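Conceptually, an LPA is a finite mixture model estimated by maximum likelihood. The sketch below illustrates this with the expectation-maximization (EM) algorithm under assumptions that the text does not state: continuous indicators, profile-specific means, variances constrained equal across profiles, and zero within-profile covariances (local independence). As far as we know, this resembles Mplus's default mixture specification, but it is not the authors' Mplus setup. Unlike Mplus, it uses a single deterministic start instead of many random starts, so it may converge to a local maximum; `fit_lpa` and `_e_step` are hypothetical names.

```python
import math


def _e_step(data, weights, means, variances):
    """Posterior profile probabilities and total log-likelihood."""
    post, loglik = [], 0.0
    for x in data:
        logs = []
        for w, mu in zip(weights, means):
            lg = math.log(w)
            for xv, mv, var in zip(x, mu, variances):
                lg -= 0.5 * (math.log(2 * math.pi * var) + (xv - mv) ** 2 / var)
            logs.append(lg)
        top = max(logs)  # log-sum-exp trick for numerical stability
        denom = sum(math.exp(v - top) for v in logs)
        loglik += top + math.log(denom)
        post.append([math.exp(v - top) / denom for v in logs])
    return post, loglik


def fit_lpa(data, k, max_iter=500, tol=1e-9):
    """EM estimation of a k-profile LPA with profile-specific means,
    profile-invariant variances, and zero covariances (local independence)."""
    n, d = len(data), len(data[0])
    # Deterministic start: k cases spread along the first indicator
    order = sorted(range(n), key=lambda i: data[i][0])
    means = [list(data[order[(2 * j + 1) * n // (2 * k)]]) for j in range(k)]
    variances = [1.0] * d
    weights = [1.0 / k] * k
    prev = -math.inf
    for _ in range(max_iter):
        post, loglik = _e_step(data, weights, means, variances)
        if loglik - prev < tol:  # log-likelihood has stopped increasing
            break
        prev = loglik
        # M-step: mixing proportions, profile means, pooled variances
        for j in range(k):
            nj = sum(p[j] for p in post)
            weights[j] = nj / n
            means[j] = [sum(p[j] * x[v] for p, x in zip(post, data)) / nj
                        for v in range(d)]
        variances = [
            max(1e-6, sum(p[j] * (x[v] - means[j][v]) ** 2
                          for p, x in zip(post, data) for j in range(k)) / n)
            for v in range(d)
        ]
    post, loglik = _e_step(data, weights, means, variances)
    return weights, means, variances, post, loglik


# Two well-separated hypothetical groups of "classrooms", two indicators each
data = ([[0.1 * i, -0.05 * i] for i in range(10)]
        + [[5 + 0.1 * i, 5 + 0.05 * i] for i in range(10)])
weights, means, variances, post, loglik = fit_lpa(data, k=2)
```

Each row of `post` gives a classroom's estimated probabilities of belonging to each profile; the most likely profile is typically used for reporting profile sizes.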

The LPA results allow us to describe different profiles based on their specific patterns of mean values for the indicator variables. For example, two classes may be similar in average perceived classroom management but differ substantially in cognitive activation and student support. Analyzing the configuration of all dimensions jointly at the classroom level is therefore reasonable. To capture the similarity of students’ perceptions and obtain a more comprehensive picture of instructional quality, we also considered class-level standard deviations, which express the heterogeneity of students’ ratings within a class.

To examine our first question (Q1), we conducted an LPA using Mplus 8.3 (Muthén & Muthén, 2017). We used the four z-standardized class-level means of instructional quality (classroom management, cognitive activation, student support (social), and student support (structuring)) and the four corresponding z-standardized standard deviations as indicator variables. The choice of the best model and the number of profiles was based on the AIC, BIC, aBIC, entropy, and BLRT p-values, as well as on interpretability.
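The eight indicator variables are derived in two steps: student ratings are aggregated to a mean and a standard deviation per class, and each class-level variable is then z-standardized across classes. The sketch below illustrates both steps for one dimension under stated assumptions: the `(class_id, rating)` data layout and the helper names are hypothetical, and the use of the sample SD (n - 1 denominator) in both steps is our assumption, as the text does not specify the estimator.

```python
import statistics
from collections import defaultdict


def class_mean_sd(records):
    """Per-class mean and SD of student ratings on one dimension.

    records: iterable of (class_id, rating) pairs (hypothetical layout).
    Uses the sample SD (n - 1 denominator); the estimator is an assumption.
    """
    by_class = defaultdict(list)
    for cid, rating in records:
        by_class[cid].append(rating)
    return {cid: (statistics.mean(r), statistics.stdev(r)) for cid, r in by_class.items()}


def z_standardize(values):
    """Standardize a class-level variable across all classes."""
    m, s = statistics.mean(values), statistics.stdev(values)
    return [(v - m) / s for v in values]


records = [("A", 3), ("A", 3), ("A", 2), ("B", 1), ("B", 3), ("B", 0),
           ("C", 2), ("C", 2), ("C", 2)]
stats = class_mean_sd(records)
class_means = [stats[c][0] for c in sorted(stats)]
class_sds = [stats[c][1] for c in sorted(stats)]
# Each class contributes a z-scored mean (level) and a z-scored SD (heterogeneity)
indicators = list(zip(z_standardize(class_means), z_standardize(class_sds)))
```

Repeating this for all four dimensions yields the eight indicators per class. Class C, where all students gave the same rating, has an SD of 0, that is, perfectly homogeneous perceptions.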

To investigate differences in class-level aggregated SES and mathematics-related characteristics (interest, intrinsic motivation, self-concept, and achievement) across the identified profiles of instructional quality (Q2), we used the Bolck-Croon-Hagenaars (BCH) method (Asparouhov & Muthén, 2021), which compares auxiliary variables across profiles while accounting for classification uncertainty.

Because Little’s missing completely at random (MCAR) test was significant, that is, the data could not be assumed to be missing completely at random, missing data at the student level were imputed using the expectation-maximization (EM) algorithm. Missing values were imputed first for the instructional quality subscale means and then for the student characteristic variables (see Section 4.3). Across these variables, the overall missing rate was 11.2%.

5 Results

5.1 Preliminary analyses

Distribution

Each evaluated measure was examined for reliability, skewness, kurtosis, and outliers (Table 1). All reliabilities ranged from acceptable to good.

Correlations

Table 2 displays the class-level Pearson correlations among all assessed variables. The dimensions of instructional quality (classroom management, cognitive activation, student support (social), and student support (structuring)) and the mathematics-related student characteristics interest, intrinsic motivation, and self-concept generally show strong positive intercorrelations. In contrast, mathematics achievement was positively correlated only with SES. Moreover, SES was significantly negatively correlated with both dimensions of student support.

Intraclass Correlation Coefficients (ICCs)

Table 3 displays the ICCs for all measures. ICCs of 10% or higher, indicating substantial between-class variance, were observed for all instructional quality variables. SES also showed significant between-class variance.
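One common estimator of such ICCs is ICC(1) from a one-way random-effects ANOVA. The text does not state which estimator was used (a multilevel model in Mplus would be an alternative), so the sketch below is illustrative only. `icc1` is a hypothetical helper, and `k0` is the usual adjusted average group size for unequal class sizes.

```python
def icc1(groups):
    """ICC(1) from a one-way ANOVA, allowing unequal class sizes.

    groups: list of lists, each holding the scores of one class's students.
    """
    n_total = sum(len(g) for g in groups)
    n_groups = len(groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)
    # Adjusted average class size for unbalanced designs
    k0 = (n_total - sum(len(g) ** 2 for g in groups) / n_total) / (n_groups - 1)
    return (ms_between - ms_within) / (ms_between + (k0 - 1) * ms_within)


print(round(icc1([[1, 2, 3], [4, 5, 6]]), 3))  # 0.806
```

Under this estimator, a value of 0.10 would mean that about 10% of the variance in a measure lies between classes.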

5.2 Profile identification

Number of profiles

A Latent Profile Analysis (LPA) was conducted using eight indicator variables capturing the different aspects of instructional quality and the extent of within-classroom variability: the class-level means of classroom management, cognitive activation, student support (social), and student support (structuring), and the standard deviations of each of these four dimensions. Table 4 reports the fit statistics for the one- to six-profile solutions (k = 1 to k = 6); for AIC, BIC, and aBIC, lower values indicate a better fit. BIC favors a four-profile solution, whereas AIC and aBIC suggest that more profiles can be identified. The significant BLRT p-value for k = 5 and the non-significant p-value for k = 6 indicate that a five-profile solution fits better than a four-profile solution, whereas a six-profile solution offers no further improvement. However, one profile in the five-profile solution represented only a small proportion of the classes (approximately 8%), limiting its interpretability. The entropy values, which indicate the separation between profiles on a scale from 0 to 1, are satisfactory for all solutions considered (≥ 0.80; Bauer, 2022). Considering all indices and interpretability, we selected the four-profile solution.
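The information criteria and the entropy statistic used above have standard closed forms. The sketch below shows them under stated assumptions: the parameter count assumes an LPA with profile-specific means and profile-invariant variances (the text does not state the retained specification), the aBIC uses Sclove's sample-size adjustment n* = (n + 2) / 24, and n is the number of classrooms. The BLRT, which requires repeated bootstrap re-estimation, is omitted; all function names are hypothetical.

```python
import math


def lpa_free_parameters(k, d):
    """Free parameters of a k-profile LPA with d indicators: k - 1 mixing
    proportions, k * d profile means, and d profile-invariant variances
    (assumed specification)."""
    return (k - 1) + k * d + d


def information_criteria(loglik, n_params, n):
    """AIC, BIC, and sample-size-adjusted BIC (lower is better)."""
    return {
        "AIC": -2 * loglik + 2 * n_params,
        "BIC": -2 * loglik + n_params * math.log(n),
        "aBIC": -2 * loglik + n_params * math.log((n + 2) / 24),
    }


def relative_entropy(posteriors):
    """Normalized entropy in [0, 1]; values near 1 indicate clear separation.

    posteriors: one row of profile membership probabilities per case.
    """
    n, k = len(posteriors), len(posteriors[0])
    h = -sum(p * math.log(p) for row in posteriors for p in row if p > 0)
    return 1 - h / (n * math.log(k))


print(lpa_free_parameters(4, 8))  # 43 parameters, estimated from 106 classes
```

Because the BIC penalty grows with ln(n), whereas the AIC penalty does not, BIC tends to favor fewer profiles, which is consistent with the pattern reported in Table 4.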

Profiles of classrooms (Q1)

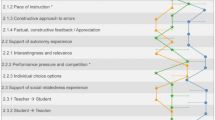

Table 5 shows the mean values and standard errors of all z-standardized indicator variables for each profile. Based on the mean values of the indicator variables (Figs. 1 and 2), we interpret the profiles as follows:

- Profile 1: Low quality / heterogeneous (N = 25). Profile 1 comprises classrooms with the lowest class-level means of instructional quality in the sample. Its average standard deviations are the highest for classroom management and social student support and the second highest for the other indicators.

- Profile 2: Average quality / homogeneous (N = 30). Profile 2 shows class-level means slightly above average for classroom management and cognitive activation and slightly below average for both student support indicators. Its average standard deviations are the lowest for cognitive activation and the second lowest for the other indicators.

- Profile 3: Supportive / heterogeneous (N = 21). Profile 3 exhibits the second-highest class-level means for both student support indicators and, similar to Profile 2, slightly above-average means for classroom management and cognitive activation. Its average standard deviations are the highest for cognitive activation and structuring student support and the second highest for the other indicators.

- Profile 4: High quality / homogeneous (N = 30). Profile 4 has the highest class-level means. Moreover, its average standard deviations are the lowest for all indicators except cognitive activation, for which the average quality profile shows a comparable but slightly lower value.
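The profile sizes listed above can be checked against the 20% to 28% range stated in the abstract. This small calculation assumes that the reported N values are the numbers of classes assigned to each profile (presumably by most likely membership; the assignment rule is not stated).

```python
profile_sizes = {"Profile 1": 25, "Profile 2": 30, "Profile 3": 21, "Profile 4": 30}
total = sum(profile_sizes.values())  # should equal the 106 classes analyzed
shares = {name: round(100 * n / total, 1) for name, n in profile_sizes.items()}
print(total, shares)
# 106 {'Profile 1': 23.6, 'Profile 2': 28.3, 'Profile 3': 19.8, 'Profile 4': 28.3}
```

The shares range from 19.8% to 28.3%, consistent with the rounded range of 20% to 28%.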

Contribution of within-classroom variability to cluster interpretation

As depicted on the right side of Figs. 1 and 2, the standard deviations (i.e., the within-classroom variability) provide valuable insights. For example, the average quality profile and the supportive profile differ mainly in student support but are similar in classroom management and cognitive activation. Focusing only on the mean values of these indicators, one might conclude that the supportive profile is superior to the average quality profile. However, when the standard deviations are considered, the two profiles differ in how heterogeneously cognitive activation is perceived within classrooms: the supportive profile exhibits the highest variability, whereas the average quality profile shows the lowest. In addition, perceived student support is more heterogeneous in the supportive profile than in the average quality profile.

Within-classroom variability also aids the interpretation of the low and high quality profiles. While the high quality profile has the highest average values and exhibits homogeneous perceptions of instructional quality among students within a class, the low quality profile displays high within-classroom variation in perceptions. This suggests that some students in these classes may perceive low instructional quality, whereas others may hold perceptions closer to the scale averages. Thus, considering within-classroom variability provides a more comprehensive understanding of the distinct instructional quality profiles.

Characterization of the profiles based on the subscales of instructional quality dimensions

To gain more differentiated insights into the perceptions of various aspects of instructional quality, we investigated how the four identified profiles could be characterized with respect to the TBD subscales described in Section 4.3. To this end, we performed a BCH analysis including the z-standardized class-level means and standard deviations of the subscales of the instructional quality dimensions as auxiliary variables. Figure 3 shows the patterns of the four profiles on the subscales.

Fig. 3 Mean values and standard errors of the four profiles for the z-standardized auxiliary variables (Note: the subscales disturbances (CM), wasting time (CM), and interaction pace (CA) are reversed; see also Section 4.3)

While the findings for most subscales are broadly consistent with the respective overall scale, some specific details emerge.

- **Classroom management (CM).** The low quality profile is mainly characterized by low attention to student distractions and difficulties/monitoring (“attention” in Fig. 3), with high heterogeneity, and by much time wasting.
- **Cognitive activation (CA).** The low quality and average quality profiles show similar mean values for a high interaction pace without time for reflection (“interaction pace”). For this subscale, the average quality profile exhibits moderate within-class variability. Its standard deviation is closer to those of the two heterogeneous profiles (low quality and supportive) than to that of the other homogeneous profile (high quality).
- **Structuring student support (ST).** The two subscales show no notable deviations from the overall scale.
- **Social support (SO).** This dimension was assessed with only one subscale.

5.3 Instructional quality profiles and classroom characteristics (Q2)

To investigate Q2, we performed a BCH analysis with class averages of mathematics-related interest, intrinsic motivation, self-concept, achievement, and socio-economic status as auxiliary variables in the LPA.
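The BCH approach (Asparouhov & Muthén, 2021) estimates profile-specific means of auxiliary variables without letting these variables shape the profiles. It does so by weighting each case with entries of the inverse of a classification error matrix D. Entry (c, s) of D is the estimated probability of being modally assigned to profile s given true membership in profile c. The following Python sketch illustrates only this weighting logic and is not the Mplus implementation used in the study. It assumes that posterior profile probabilities from a fitted LPA are already available and that cases are assigned modally. It omits the standard errors and Wald chi-square tests that Mplus reports. All function names are hypothetical.

```python
from typing import List, Sequence


def invert(matrix: List[List[float]]) -> List[List[float]]:
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("classification error matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def bch_means(posteriors: Sequence[Sequence[float]], aux: Sequence[float]) -> List[float]:
    """Profile-specific means of one auxiliary variable using BCH-type weights.

    posteriors[i][c]: posterior probability that case i belongs to profile c.
    aux[i]: value of the auxiliary variable for case i (e.g., class-average interest).
    """
    k = len(posteriors[0])
    modal = [max(range(k), key=lambda c: row[c]) for row in posteriors]
    # Expected profile sizes from the posterior probabilities
    sizes = [sum(row[c] for row in posteriors) for c in range(k)]
    # d[c][s] = estimated P(modal assignment s | true profile c)
    d = [[sum(row[c] for row, m in zip(posteriors, modal) if m == s) / sizes[c]
          for s in range(k)] for c in range(k)]
    d_inv = invert(d)
    # Weight of a case modally assigned to s, for profile c: entry (s, c) of D^-1
    weights = [[d_inv[m][c] for c in range(k)] for m in modal]
    means = []
    for c in range(k):
        total = sum(w[c] for w in weights)
        means.append(sum(w[c] * y for w, y in zip(weights, aux)) / total)
    return means
```

With error-free classification (posteriors of exactly 0 or 1), D is the identity matrix. The weights then reduce to 0/1 indicators, and the BCH means equal plain profile means. With classification error, individual weights can be negative, which is a known property of this correction.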

As shown in Table 6, the overall tests indicated that the four profiles differed significantly in mathematics-related interest, intrinsic motivation, and mathematics achievement, but not in mathematics self-concept or SES.

Table 7 presents the estimated mean values and standard errors of the four profiles for all classroom characteristics with a significant overall test.

Pairwise comparisons among the four profiles were performed to identify significant mean differences. After Bonferroni correction of the p-values, only two pairwise comparisons remained significant. Profile 4 (high quality) showed significantly higher interest than Profile 2 (average quality; \({\chi }^{2}(3)\) = 15.236, p < 0.001). Profile 3 (supportive) showed significantly higher achievement in the mathematics test than Profile 1 (low quality; \({\chi }^{2}(3)\) = 7.976, p < 0.05). For intrinsic motivation, none of the pairwise comparisons was significant.
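The Bonferroni logic can be sketched in Python. With four profiles, each variable yields six pairwise comparisons. Assuming the correction was applied within each variable (the text does not state the family size), each raw p-value is multiplied by six and capped at 1. This is equivalent to testing the raw p-value against α/6. The p-values below are hypothetical placeholders, not the study's results.

```python
from itertools import combinations

PROFILES = ["low quality", "average quality", "supportive", "high quality"]


def bonferroni(p_values):
    """Bonferroni-adjust a dict {comparison: raw p}; adjusted p = min(1, m * p)."""
    m = len(p_values)
    return {key: min(1.0, m * p) for key, p in p_values.items()}


# All pairwise profile comparisons for one auxiliary variable: 4 choose 2 = 6
pairs = list(combinations(PROFILES, 2))

# Hypothetical raw p-values, one per pair (placeholders only)
raw = dict(zip(pairs, [0.004, 0.030, 0.200, 0.010, 0.500, 0.0001]))
adjusted = bonferroni(raw)
significant = [pair for pair, p in adjusted.items() if p < 0.05]
```

A raw p of 0.010, for instance, would be significant on its own at α = 0.05 but not after correction (adjusted p = 0.06). This illustrates why a significant overall test can coexist with no significant pairwise comparison.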

We also explored how the three school tracks (low/medium/high attainment) were distributed across the profiles but found no significant differences.

6 Discussion

Our main goal was to identify distinct profiles of student-reported instructional quality in eighth-grade mathematics classrooms. An innovative aspect of our approach was clustering classrooms by average student ratings and within-classroom variability. Additionally, we explored whether these profiles varied in socio-economic status (SES) and mathematics-related characteristics (interest, intrinsic motivation, self-concept, and achievement).

6.1 Profiles of student-reported instructional quality: The role of within-classroom variability (Q1)

Our study used a case-centered clustering approach to investigate meaningful configurations of student-reported instructional quality in mathematics classrooms regarding the Three Basic Dimensions (Klieme et al., 2009). Our Latent Profile Analysis (LPA) revealed four distinct classroom profiles, with proportions between 20 and 28%, each with unique combinations of instructional quality levels and heterogeneity. We observed two profiles with anticipated characteristics: the profile with the lowest average ratings (Profile 1) consistently showed the highest or second highest heterogeneity. In contrast, the profile with the highest average ratings (Profile 4) consistently exhibited the lowest or second lowest heterogeneity.

However, two expected configurations did not occur: low quality with low heterogeneity, and high quality with high heterogeneity. Instead, an average quality profile (Profile 2) and a supportive profile (Profile 3) revealed unexpected patterns. Both profiles showed comparable levels of perceived cognitive activation. This perception, however, was homogeneous within classrooms in the average quality profile but very heterogeneous in the supportive profile. The supportive profile also exhibited above-average mean values for both student support indicators. Yet the elevated standard deviations suggest that some students may not have been able to use the support reported by their peers as intended. It is conceivable, for example, that the teacher directed a large portion of his or her resources towards a small number of students who seemed to be in particular need of support. Other students would then have received or perceived only minimal or average assistance.

The extent of heterogeneity also provides insights into the low and high quality profiles. Notably, the comparatively high within-classroom heterogeneity in the low quality profile may also have a positive connotation: despite the low average values, some students in these classes apparently still benefited from the learning environment created by the teacher. Furthermore, it is remarkable that even in classes with low instructional quality, no group dynamics seemed to arise that led all students to rate the instructional quality uniformly negatively. Conversely, it is striking that in consistently high-rated classes, the ratings of all students were very similar. This suggests that these teachers managed to engage and support all students to a similar extent. Overall, it appears worthwhile to consider within-classroom variability in addition to the average level of instructional quality ratings.

From a practical perspective, the results offer teachers insights into intra-class differences in how their instruction is perceived. This adds a new perspective to calls for supporting teachers in adapting their instruction to students’ needs (van de Pol et al., 2010). Teachers may need to consider not only students’ learning prerequisites but also their different needs and, resulting from these, their perceptions during instruction. Therefore, it may be promising to sensitize teachers to the diverse perceptions of their instruction among students. Suitable support measures and training opportunities could then help them address these differences.

6.2 The connection between instructional quality and classroom characteristics (Q2)

We further explored how the identified instructional quality profiles of mathematics classrooms differed according to various classroom characteristics.

Our results showed that the supportive profile had significantly higher mathematics achievement than the low quality profile. In addition, the high quality profile showed significantly higher mathematics-related interest than the average quality profile. The overall test for intrinsic motivation in mathematics was significant, but none of the pairwise comparisons remained significant after Bonferroni correction. Nevertheless, our descriptive findings suggest that these characteristics are associated with the perception of high instructional quality and may be fostered more effectively in environments with such quality. Socio-economic status (SES) did not differ significantly across profiles, which is remarkable given the high ICC of 25.5% for SES. Likewise, the distribution of profiles did not differ significantly across school tracks. This suggests that instructional quality may hinge more on individual-, teacher-, and class-level factors than on school type.
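The ICC cited for SES expresses the proportion of SES variance located between classes (25.5% corresponds to an ICC of 0.255). The estimator used in the study is not stated in this section. As one common option, the following Python sketch computes ICC(1) from a one-way ANOVA. It uses the adjusted average group size n0 to allow for unequal class sizes:

ICC(1) = (MSB − MSW) / (MSB + (n0 − 1) · MSW), with n0 = (N − Σ n_j² / N) / (J − 1)

The function name and example data are hypothetical.

```python
from statistics import mean


def icc1(groups):
    """ICC(1) from a one-way random-effects ANOVA.

    groups: list of lists, each inner list holding the student values of one class.
    """
    all_values = [v for g in groups for v in g]
    n_total, n_groups = len(all_values), len(groups)
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)
    # Adjusted average class size; equals n when every class has n students
    n0 = (n_total - sum(len(g) ** 2 for g in groups) / n_total) / (n_groups - 1)
    return (ms_between - ms_within) / (ms_between + (n0 - 1) * ms_within)


# Hypothetical data: two classes with clearly different SES levels
example_icc = icc1([[1, 2, 3], [4, 5, 6]])
```

When between-class differences are negligible, this estimator can become slightly negative. This is one reason why other ICC estimators, for example those derived from multilevel models, are also in use.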

Our findings indicate that the differences between instructional quality profiles are associated with both achievement and motivational variables, but more strongly with the latter. Our study should therefore be regarded as a preliminary exploration of characteristics that correlate with instructional quality, paving the way for future longitudinal and experimental studies that investigate the direction of causality. From an educational policy perspective, it is crucial to understand the complex relationship between two sets of measures: student and classroom characteristics on the one hand, and individual- and class-level measures of instructional quality on the other. Such understanding is needed to design interventions that address diverse learning needs and foster high instructional quality.

6.3 Limitations and outlook

This study has some limitations. Although the TBD model provides a solid theoretical foundation and has been extensively studied, it may not fully capture important subject-specific aspects of instructional quality (e.g., Charalambous & Praetorius, 2018). Future research should explore more comprehensive frameworks, such as the TEDS-Instruct framework (Schlesinger et al., 2018), that incorporate content-specific aspects (e.g., content-related correctness). Furthermore, Wagner et al. (2013) noted that the construct validity of student perceptions of teaching quality is high but not perfect. The generalizability of teaching quality ratings appears to depend to a considerable extent on the specific subject and on the specific students in the class.

In addition, exploring differences in student learning between the identified profiles was beyond the scope of this study. Such analyses could, however, build on Schenke et al. (2017), whose findings suggest that within-classroom variability in students’ perceptions of classroom climate can adversely affect their academic achievement. Compared with that work, we broadened the scope by incorporating additional aspects of instructional quality, particularly cognitive activation. Another interesting question in this context is the extent to which students’ individual ratings predict student-level learning beyond class-average ratings. Here, individual ratings serve as measures of each student's perception of instructional quality, and class-average ratings as an estimate of “real” instructional quality. Above all, the question of whether low within-classroom variability of perceived instructional quality is a characteristic of “good teaching” in the sense of Berliner (2005) merits a normative discussion. This discussion is independent of its effects on student outcomes (“effective teaching”).

Comparing student and teacher ratings of instructional quality could provide valuable insights; however, such investigations would require larger samples and more extensive teacher assessments. The identified profiles should also be validated with video-based or live (in vivo) classroom observations for a more comprehensive, multi-perspective understanding of instructional dynamics. Furthermore, incorporating teacher characteristics such as technological pedagogical knowledge would enrich the analysis.

Concerning Q2, it remains unclear whether the absence of SES differences between the profiles results from SES having been captured with only a single item, an approach commonly employed in other studies (e.g., PISA; OECD, 2014). From an educational policy perspective, it is essential to understand how the classroom characteristics examined for Q2 relate to instructional quality. Longitudinal (and possibly experimental) studies are required to examine the potential directions of these effects.

6.4 Conclusion

Our study revealed meaningful configurations of level and heterogeneity in student-reported instructional quality, with within-classroom variability playing a crucial role in interpreting the identified profiles. Future research should investigate the effects of within-classroom variability on content-related achievement and motivational development to gain a deeper understanding of its impact on student learning. Additionally, the instructional quality profiles differed significantly in students’ mathematics-related interest, intrinsic motivation, and achievement. Further studies should explore possible bidirectional effects between two sets of factors: individual and class-level student characteristics on the one hand, and instructional quality and student achievement on the other.

References

Aleamoni, L. M. (1999). Student Rating Myths Versus Research Facts from 1924 to 1998. Journal of Personnel Evaluation in Education, 13(2), 153–166. https://doi.org/10.1023/A:1008168421283

Asparouhov, T., & Muthén, B. (2021). Auxiliary Variables in Mixture Modeling: Using the BCH Method in Mplus to Estimate a Distal Outcome Model and an Arbitrary Secondary Model. Mplus Web Notes, 21(2), 1–22.

Bauer, J. (2022). A Primer to Latent Profile and Latent Class Analysis. In M. Goller, E. Kyndt, S. Paloniemi, & C. Damşa (Eds.), Methods for Researching Professional Learning and Development: Challenges, Applications and Empirical Illustrations (pp. 243–268). Springer International Publishing. https://doi.org/10.1007/978-3-031-08518-5_11

Baumert, J., Kunter, M., Blum, W., Brunner, M., Voss, T., Jordan, A., Klusmann, U., Krauss, S., Neubrand, M., & Tsai, Y.-M. (2010). Teachers’ Mathematical Knowledge, Cognitive Activation in the Classroom, and Student Progress. American Educational Research Journal, 47(1), 133–180. https://doi.org/10.3102/0002831209345157

Berliner, D. C. (2005). The Near Impossibility of Testing for Teacher Quality. Journal of Teacher Education, 56(3), 205–213. https://doi.org/10.1177/0022487105275904

Brophy, J. (2000). Teaching. Educational practices series 1. International Academy of Education.

Brophy, J. (2006). Observational Research on Generic Aspects of Classroom Teaching. In P. A. Alexander & P. H. Winne (Eds.), Handbook of educational psychology (pp. 755–780). Lawrence Erlbaum Associates Publishers.

Brophy, J., & Good, T. L. (1984). Teacher Behavior and Student Achievement. Occasional Paper No. 73. Institute for Research on Teaching, East Lansing.

Charalambous, C. Y., & Praetorius, A.-K. (2018). Studying mathematics instruction through different lenses: Setting the ground for understanding instructional quality more comprehensively. ZDM Mathematics Education, 50(3), 355–366. https://doi.org/10.1007/s11858-018-0914-8

Creemers, B., & Kyriakides, L. (2015). Process-Product Research: A Cornerstone in Educational Effectiveness Research. The Journal of Classroom Interaction, 50(2), 107–119.

Decristan, J., Klieme, E., Kunter, M., Hochweber, J., Büttner, G., Fauth, B., Hondrich, A. L., Rieser, S., Hertel, S., & Hardy, I. (2015). Embedded Formative Assessment and Classroom Process Quality: How Do They Interact in Promoting Science Understanding? American Educational Research Journal, 52(6), 1133–1159. https://doi.org/10.3102/0002831215596412

Doyle, W. (1986). Classroom organization and management. In M. C. Wittrock (Ed.), Handbook of research on teaching. A project of the American Educational Research Association (pp. 392–431). Macmillan.

Evertson, C. M., & Weinstein, C. S. (2006). Classroom Management as a Field of Inquiry. In C. M. Evertson & C. S. Weinstein (Eds.), Handbook of classroom management: Research, practice, and contemporary issues (pp. 3–15). Routledge.

Fauth, B., Decristan, J., Rieser, S., Klieme, E., & Büttner, G. (2014). Student ratings of teaching quality in primary school: Dimensions and prediction of student outcomes. Learning and Instruction, 29, 1–9.

Göllner, R., Fauth, B., & Wagner, W. (2021). Student ratings of teaching quality dimensions: Empirical findings and future directions. In W. Rollett, H. Bijlsma, & S. Röhl (Eds.), Student Feedback on Teaching in Schools (pp. 111–122). Springer International Publishing. https://doi.org/10.1007/978-3-030-75150-0_7

Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. Routledge. https://doi.org/10.4324/9780203887332

Helmke, A. (2009). Unterrichtsqualität und Lehrerprofessionalität: Diagnose, Evaluation und Verbesserung des Unterrichts. Klett.

Herbert, B., Fischer, J., & Klieme, E. (2022). How valid are student perceptions of teaching quality across education systems? Learning and Instruction, 82, 101652. https://doi.org/10.1016/j.learninstruc.2022.101652

Klieme, E., Lipowsky, F., Rakoczy, K., & Ratzka, N. (2006). Qualitätsdimensionen und Wirksamkeit von Mathematikunterricht. Theoretische Grundlagen und ausgewählte Ergebnisse des Projekts “Pythagoras.” In M. Prenzel & L. Allolio-Naecke (Eds.), Untersuchungen zur Bildungsqualität von Schule. Abschlussbericht des DFG-Schwerpunktprogramms (pp. 127–146). Waxmann.

Klieme, E., Pauli, C., & Reusser, K. (2009). The Pythagoras study: Investigating effects of teaching and learning in Swiss and German mathematics classrooms. In T. Janik & T. Seidel (Eds.), The power of video studies in investigating teaching and learning in the classroom (pp. 137–160). Waxmann.

König, J., Blömeke, S., Jentsch, A., Schlesinger, L., née Nehls, C. F., Musekamp, F., & Kaiser, G. (2021). The links between pedagogical competence, instructional quality, and mathematics achievement in the lower secondary classroom. Educational Studies in Mathematics, 107(1), 189–212. https://doi.org/10.1007/s10649-020-10021-0

Kunter, M., & Voss, T. (2013). The model of instructional quality in COACTIV: A multicriteria analysis. In M. Kunter, J. Baumert, W. Blum, U. Klusmann, S. Krauss, & M. Neubrand (Eds.), Cognitive activation in the mathematics classroom and professional competence of teachers: Results from the COACTIV project (pp. 97–124). Springer. https://doi.org/10.1007/978-1-4614-5149-5_6

Kunter, M., Klusmann, U., Baumert, J., Richter, D., Voss, T., & Hachfeld, A. (2013). Professional competence of teachers: Effects on instructional quality and student development. Journal of Educational Psychology, 105(3), 805–820. https://doi.org/10.1037/a0032583

Kunter, M., Baumert, J., Leutner, D., Terhart, E., Seidel, T., Dicke, T., Holzberger, D., Kunina-Habenicht, O., Linninger, C., Lohse-Bossenz, H., Schulze-Stocker, F., & Stürmer, K. (2017). Dokumentation der Erhebungsinstrumente der Projektphasen des BilWiss-Forschungsprogramms von 2009 bis 2016. Goethe-Universität Frankfurt.

Learning Mathematics for Teaching Project. (2011). Measuring the mathematical quality of instruction. Journal of Mathematics Teacher Education, 14(1), 25–47.

Lipowsky, F., Rakoczy, K., Pauli, C., Drollinger-Vetter, B., Klieme, E., & Reusser, K. (2009). Quality of geometry instruction and its short-term impact on students’ understanding of the Pythagorean Theorem. Learning and Instruction, 19(6), 527–537. https://doi.org/10.1016/j.learninstruc.2008.11.001

Lüdtke, O., Trautwein, U., Kunter, M., & Baumert, J. (2006). Reliability and agreement of student ratings of the classroom environment: A reanalysis of TIMSS data. Learning Environments Research, 9(3), 215–230. https://doi.org/10.1007/s10984-006-9014-8

Lüdtke, O., Robitzsch, A., Trautwein, U., & Kunter, M. (2009). Assessing the impact of learning environments: How to use student ratings of classroom or school characteristics in multilevel modeling. Contemporary Educational Psychology, 34(2), 120–131. https://doi.org/10.1016/j.cedpsych.2008.12.001

Mu, J., Bayrak, A., & Ufer, S. (2022). Conceptualizing and measuring instructional quality in mathematics education: A systematic literature review. Frontiers in Education, 7, 994739. https://doi.org/10.3389/feduc.2022.994739

Muijs, D., Kyriakides, L., van der Werf, G., Creemers, B., Timperley, H., & Earl, L. (2014). State of the art – teacher effectiveness and professional learning. School Effectiveness and School Improvement, 25(2), 231–256. https://doi.org/10.1080/09243453.2014.885451

Muthén, L. K., & Muthén, B. (2017). Mplus user’s guide: Statistical analysis with latent variables (8th ed.). Muthén & Muthén.

Neumann, I., Duchhardt, C., Grüßing, M., Heinze, A., Knopp, E., & Ehmke, T. (2013). Modeling and assessing mathematical competence over the lifespan. Journal for Educational Research Online, 5(2), 80–109.

Nilsen, T., & Gustafsson, J.-E. (2016). Teacher quality, instructional quality and student outcomes: Relationships across countries, cohorts and time. Springer Nature. https://doi.org/10.1007/978-3-319-41252-8

OECD. (2014). PISA 2012 Technical Report. OECD Publishing.

Oser, F. K., & Baeriswyl, F. J. (2001). Choreographies of teaching: Bridging instruction to learning. In V. Richardson (Ed.), Handbook of research on teaching (Vol. 4, pp. 1031–1065). American Educational Research Association.

Praetorius, A.-K., Pauli, C., Reusser, K., Rakoczy, K., & Klieme, E. (2014). One lesson is all you need? Stability of instructional quality across lessons. Learning and Instruction, 31, 2–12. https://doi.org/10.1016/j.learninstruc.2013.12.002

Praetorius, A.-K., Klieme, E., Herbert, B., & Pinger, P. (2018). Generic dimensions of teaching quality: The German framework of Three Basic Dimensions. ZDM Mathematics Education, 50(3), 407–426. https://doi.org/10.1007/s11858-018-0918-4

Schenke, K., Ruzek, E., Lam, A. C., Karabenick, S. A., & Eccles, J. S. (2017). Heterogeneity of student perceptions of the classroom climate: A latent profile approach. Learning Environments Research, 20(3), 289–306. https://doi.org/10.1007/s10984-017-9235-z

Schlesinger, L., Jentsch, A., Kaiser, G., König, J., & Blömeke, S. (2018). Subject-specific characteristics of instructional quality in mathematics education. ZDM Mathematics Education, 50(3), 475–490. https://doi.org/10.1007/s11858-018-0917-5

Seidel, T., & Shavelson, R. J. (2007). Teaching Effectiveness Research in the Past Decade: The Role of Theory and Research Design in Disentangling Meta-Analysis Results. Review of Educational Research, 77(4), 454–499. https://doi.org/10.3102/0034654307310317

Senden, B., Nilsen, T., & Blömeke, S. (2022). Instructional quality: A review of conceptualizations, measurement approaches, and research findings. In K. Klette, M. Blikstad-Balas, & M. Tengberg (Eds.), Ways of Analyzing Teaching Quality (pp. 140–172). Scandinavian University Press. https://doi.org/10.18261/9788215045054-2021-05

Senden, B., Nilsen, T., & Teig, N. (2023). The validity of student ratings of teaching quality: Factorial structure, comparability, and the relation to achievement. Studies in Educational Evaluation, 78, 101274. https://doi.org/10.1016/j.stueduc.2023.101274

Stegmann, K., Kastorff, T., Poluektova, I., Berger, S., Kosiol, T., Reith, S., Förtsch, C., Rutkowski, A., Mohr, M., Lindermayer, C., Aufleger, M., Traub, D., Haldenwang, V., Ufer, S., Neuhaus, B. J., Bannert, M., Oechslein, K., Lindner, M., Nerdel, C., Fischer, F., & Gräsel, C. (2022). Digitaler Wandel des Schulunterrichts durch professionelle Lerngemeinschaften: Der Einsatz von Multiplikatoren zur Etablierung von Lerngemeinschaften. MedienPädagogik: Zeitschrift für Theorie und Praxis der Medienbildung, 49(Schulentwicklung), 250–270. https://doi.org/10.21240/mpaed/49/2022.07.01.X

Tomlinson, C. A. (2014). The differentiated classroom: Responding to the needs of all learners. ASCD.

van de Pol, J., Volman, M., & Beishuizen, J. (2010). Scaffolding in Teacher-Student Interaction: A Decade of Research. Educational Psychology Review, 22(3), 271–296. https://doi.org/10.1007/s10648-010-9127-6

Wagner, W., Göllner, R., Helmke, A., Trautwein, U., & Lüdtke, O. (2013). Construct validity of student perceptions of instructional quality is high, but not perfect: Dimensionality and generalizability of domain-independent assessments. Learning and Instruction, 28, 1–11.

Wild, E., Gerber, J., Exeler, J., & Remy, K. (2001). Dokumentation der Skalen- und Item-Auswahl für den Kinderfragebogen zur Lernmotivation und zum emotionalen Erleben [Documentation of the scales and items of the questionnaire on motivation and emotional experience]. Universität Bielefeld.

Yu, R., & Singh, K. (2018). Teacher support, instructional practices, student motivation, and mathematics achievement in high school. The Journal of Educational Research, 111(1), 81–94. https://doi.org/10.1080/00220671.2016.1204260

Funding

Open Access funding enabled and organized by Projekt DEAL. This research was supported by the German Federal Ministry of Education and Research (BMBF), Grant Nr. 01JD1830A (PI: Frank Fischer).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation and analysis were performed by Christian Lindermayer, Timo Kosiol and Stefan Ufer. Data collection and coding were performed by Christian Lindermayer and Timo Kosiol. The first draft of the manuscript was written by Christian Lindermayer, Timo Kosiol and Stefan Ufer. All authors read and approved the final manuscript.

Ethics declarations

Informed consent

Participation in the study required informed consent.

Conflicts of interest

The authors have no conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lindermayer, C., Kosiol, T. & Ufer, S. Classroom profiles of instructional quality: contribution of level and variability of students’ perception. ZDM Mathematics Education (2024). https://doi.org/10.1007/s11858-024-01583-x

DOI: https://doi.org/10.1007/s11858-024-01583-x