Abstract

Electronic fetal monitoring is used to evaluate fetal well-being by assessing fetal heart activity. The signals produced by the fetal heart carry valuable information about fetal health, but because they are non-stationary and contaminated by interference, their processing, analysis and interpretation are considered very challenging. Therefore, medical technologies equipped with artificial intelligence algorithms are rapidly making their way into clinical practice and provide solutions in the key application areas: noise suppression, feature detection and fetal state classification. The use of artificial intelligence and machine learning in the field of electronic fetal monitoring has demonstrated the efficiency and superiority of such techniques compared to conventional algorithms, especially due to their ability to predict, learn and efficiently handle dynamic big data. Combining multiple algorithms and optimizing them for a given purpose enables timely and accurate diagnosis of the fetal health state. This review summarizes the algorithms based on artificial intelligence and machine learning currently used in the field of electronic fetal monitoring, and outlines their advantages and limitations as well as the future challenges that remain to be solved.

1 Introduction

Electronic fetal monitoring (EFM) is nowadays used primarily to monitor the heart activity of the fetus and assess its well-being. The main objective of monitoring is the early detection of life-threatening fetal hypoxia. If fetal hypoxia is not detected and rectified in time, the oxygen saturation of the blood becomes extremely low and the hypoxia progresses to asphyxia. The consequence of serious asphyxia is hypoxic-ischemic organ damage, which may, inter alia, lead to brain and heart damage and nervous system failure [1, 2]. Accurate and reliable fetal monitoring during pregnancy and birth may lead to early detection of states that may adversely affect the health of the fetus or cause perinatal death.

Fetal heart monitoring has its origins in the first half of the 19th century, when fetal heart sounds (fHSs) were detected thanks to the invention of the stethoscope. The assessment of fetal well-being was based on intermittent auscultation of fHSs and subsequent determination of the fetal heart rate (fHR) [3]. EFM became the basis of prenatal care and a common part of clinical practice only in the 1960s, when advances in science and technology led to the introduction of the first cardiotocograph (CTG), which was supposed to help doctors detect fetal hypoxia [4]. Nevertheless, some past studies [5,6,7] argued that the number of caesarian sections had increased after CTG equipment was introduced to delivery rooms, while there had been no decrease in perinatal mortality caused by hypoxia. This was attributed to the difficult interpretation of CTG data, influenced by the subjective assessment and experience of doctors, which led to a high false-positive rate in determining hypoxia and thus to a high number of unnecessary caesarian sections [4, 5]. This tendency persists and the numbers of caesarian sections are still high. According to the World Health Organization statement on caesarean section rates, the rate should lie within 10–15% [8]; however, the world average was around 21.1% according to data from 2015. In some countries (the Dominican Republic, Egypt or Brazil, for instance), the rate of caesarian sections exceeds 55% [9], which is alarming.
Since the caesarian section is an invasive procedure after which complications are more frequent than after a spontaneous delivery [10], current research is focused on the development of both classical CTG [11,12,13] and alternative techniques for non-invasive EFM such as fetal electrocardiography (fECG) [14, 15], fetal phonocardiography (fPCG) [16] or fetal magnetocardiography (fMCG) [17].

Such alternative techniques bring very important clinical information about fetal health and have great potential to complement conventional CTG or to replace it entirely, thanks to their non-invasiveness and the absence of ultrasound radiation. Nevertheless, the processing, analysis and interpretation of these biological signals are challenging, because it is rather difficult and computationally demanding to model a function of the human body with classical mathematics [18]. This is mainly due to the nonlinear and non-stationary nature of these signals and the presence of many types of interference. In addition, the useful signal and the interference often overlap in the time and frequency domains, causing conventional algorithms to fail [18]. According to the latest research [19,20,21,22], algorithms based on artificial intelligence (AI) appear to be very promising for the processing and analysis of these real-world signals, especially due to their ability to predict and learn.

1.1 Structure of the Review

This review focuses on the use of AI in the field of non-invasive electronic monitoring of fetal heart activity, summarizes the advantages and limitations of the presented methods, and lists future challenges. Section 2 provides a general overview of both the non-invasive techniques for EFM and the AI-based approaches used in this field. Section 3 describes the three main application areas of AI in EFM (noise suppression, feature detection and fetal state classification). Section 4 summarizes the efficiency of the presented algorithms, highlights the most important findings and outlines directions for further research. Section 5 discusses the remaining challenges associated with fetal monitoring and AI. Section 6 concludes the review.

1.2 Literature Search Strategy

To find relevant literature for this review, the Google Scholar, PubMed and Scopus search engines were used. The search phrases combined terms from the field of artificial intelligence (“Artificial intelligence”, “Machine learning”, “Artificial neural networks”, “Fuzzy logic”, “Nature-inspired optimization”) with fetal monitoring techniques (“Cardiotocography”, “Fetal electrocardiography”, “Fetal phonocardiography”, “Fetal magnetocardiography”). Combining the five AI terms with the four techniques gave a total of 20 queries, see Fig. 1. All results found were inspected and evaluated. Only studies published after 2000 were selected, and a large part of the conference papers was excluded.
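The count of 20 queries follows from pairing each of the five AI terms with each of the four monitoring techniques. A minimal sketch of that pairing is given below; the `AND` operator, the quoting and the function name `build_queries` are assumptions made for illustration, not the exact query syntax used in the search.

```python
from itertools import product

# Search terms quoted in the search strategy.
AI_TERMS = [
    "Artificial intelligence",
    "Machine learning",
    "Artificial neural networks",
    "Fuzzy logic",
    "Nature-inspired optimization",
]
MONITORING_TERMS = [
    "Cardiotocography",
    "Fetal electrocardiography",
    "Fetal phonocardiography",
    "Fetal magnetocardiography",
]


def build_queries(ai_terms, monitoring_terms):
    """Pair every AI term with every monitoring technique.

    The AND operator and the quoting are assumed for illustration only.
    """
    return [f'"{a}" AND "{m}"' for a, m in product(ai_terms, monitoring_terms)]


QUERIES = build_queries(AI_TERMS, MONITORING_TERMS)
print(len(QUERIES))  # 5 AI terms x 4 techniques
```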

2 Background

As in other areas, EFM is developing rapidly with the advancement of science and technology. The goal of scientists and obstetricians is to develop a highly reliable system, using the most modern approaches and technologies, that can detect fetal hypoxia with high accuracy and decrease the number of unnecessarily executed caesarian sections. AI technologies, which excel in solving complex tasks and processing large amounts of data, appear to be promising for the processing and interpretation of fetal signals [23]. This section gives an overview of the basic techniques for EFM and the AI-based approaches used in this field.

2.1 Electronic Fetal Monitoring

In the past, the term EFM was used exclusively as a synonym for the CTG technique. This turned out to be misleading, and since 2015, based on the consensus of The International Federation of Gynecology and Obstetrics, only the term CTG has been used for this monitoring technique. In this article, EFM is used only as a general term [4] covering several methods of fetal monitoring, for example:

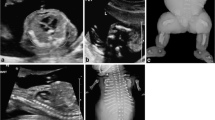

Illustration of different monitoring methods, their signals and placement of sensing electrodes and sensors. In CTG monitoring, uterine contractions are measured using a toco sensor (TS) and the fHR trace is obtained using an ultrasound transducer (UT). In the case of fECG measurements, aECG signals are obtained using AE\(_1\) and AE\(_2\) electrodes. AE\(_0\) refers to the reference electrode and N refers to the active ground. The placement of the sensing electrodes was inspired by Matonia et al. [38] and examples of aECG signals from the Fetal electrocardiograms, direct and abdominal with reference heartbeat annotations dataset were used. The fMCG signals are measured using a magnetometer system (MS). In examples of fECG and fMCG signals, the maternal and fetal components are labeled with m and f, respectively. The fPCG signal is measured by phonocardiographic sensor (PS). The diagram shows ideal fPCG signal (upper trace) and fPCG signal with ambient interference (lower trace) with S\(_1\) and S\(_2\) sounds labeled

1. Cardiotocography: CTG is based on the simultaneous measurement of uterine contractions and fHR (see Fig. 2). The fHR trace is obtained by sensing the movements of the fetal heart with a Doppler-based ultrasound transducer [4]. The recorded signal subsequently passes through modulation and autocorrelation to ensure sufficient quality. This process yields an approximation of the true fHR intervals. Uterine contractions are sensed by a pressure sensor, and the quality of the records strongly depends on the sensor placement and belt tightening [1]. The method has a number of disadvantages: the interpretation of CTG records depends heavily on the examining doctor’s experience (commonly leading to inaccurate detection of fetal hypoxia), signal quality depends on sensor placement, the recording is sensitive to movements of both the fetus and the mother, and sensitivity is lower in women with a higher body mass index (BMI) [24].

2. Fetal Electrocardiography: Non-invasive fECG (NI-fECG), based on recording electrical signals from the mother’s abdominal area, appears to be the most promising technique of fetal monitoring. At present, the invasive variant (the so-called direct fECG), which measures the fECG signal by means of a scalp electrode placed directly on the fetal head, is the one used in clinical practice. It is highly accurate, but many critics question its benefits because of the drawbacks of its invasiveness (for example the mother’s discomfort or the risk of infection). Apart from the fHR, the fECG signal also provides additional information about pathological states connected in particular with fetal hypoxia or metabolic acidosis [4, 25]. These manifest as changes in the morphology of fECG traces, such as changes in the T:QRS ratio or the QT interval [26, 27]. It has been demonstrated that simultaneous recording of fHR and the T:QRS ratio (known as ST analysis or STAN) led both to early detection of acidosis and to a decrease in the number of unnecessary caesarian sections [25, 28], thus eliminating the main aspects for which CTG has been criticized [5, 29]. For these reasons, NI-fECG has huge potential to become a complementary method to CTG or to replace it completely in clinical practice. Moreover, with NI-fECG neither the mother nor the fetus is exposed to ultrasound radiation, which allows continuous recording, even in home monitoring. Another advantage is that it can be used for women with a higher BMI. The main obstacle to NI-fECG recording is the presence of other disturbing signals, in particular the maternal electrocardiographic signal (mECG), which must be eliminated in order to obtain clinical information from NI-fECG [4, 25]. Figure 2 shows a schematic example of the electrode placement and the recorded abdominal signals (aECG) containing a mixture of mECG and fECG.

3. Fetal Phonocardiography: fPCG is a low-cost and completely passive technique based on monitoring fHSs, cardiac acoustic vibrations and murmurs by means of a microphone transducer [30, 31]. Attention has recently been paid to understanding the nature and origin of this signal, especially because an fPCG signal recorded in high quality may provide more information about cardiac pathologies than the commonly used CTG [32]. According to research focused on the development of fPCG [33], this technique might become a complement to classical CTG. fPCG could find its use especially in long-term monitoring and home monitoring, which might reduce the number of physical visits to the doctor [34]. In contrast to CTG, additional information about the fetal health state could be obtained through a complex analysis of heart murmurs. Unfortunately, the main disadvantages of fPCG are its high sensitivity to disturbing signals and the need for proper placement of the recording sensor [32, 33]. When fPCG signals are recorded from the maternal abdominal area, the useful signal is contaminated especially with ambient noise (e.g. speech, coughing, radio, air conditioning, closing doors or alarms). Moreover, maternal as well as fetal movement artifacts, such as breathing artifacts, uterine contractions, bowel sounds and maternal heart sounds (mHSs), are also considered to be disturbing [32]. Figure 2 shows a schematic example of the recording sensor placement, an ideal fPCG signal and an fPCG signal disturbed by ambient noise.

4. Fetal Magnetocardiography: fMCG is an alternative method based on recording the magnetic fields created by conduction currents in the fetal heart. The fMCG signal carries the same information as the fECG (i.e. the electrical activity of the fetal heart in the form of the PQRST complex) [35]. Figure 2 shows a schematic diagram of the magnetometer system and the recorded fMCG signals. The magnetic field produced by the fetal heart is sensed by the magnetometer system from outside the maternal abdomen, above the fetal heart [36]. fMCG signals have a much higher signal-to-noise ratio (SNR) than NI-fECG signals because the propagation of magnetic fields is less affected by the surrounding tissue than the propagation of the electrical signals recorded by NI-fECG [35]. Moreover, NI-fECG is almost impossible to record between the 28th and 32nd weeks of pregnancy because the fetus is covered by a layer of vernix caseosa, which insulates the fetal electrical cardiac activity [25]. However, this very promising method is not often used in clinical practice due to the high cost and complexity of the measuring device. Recording relies on superconducting quantum interference device technology, which requires cooling by liquid helium, and the measurement must be conducted in a large, magnetically shielded room [17, 37]. Moreover, fMCG must be measured by an experienced doctor, and skilled technical support must be available [4]. Nevertheless, current research focuses on the development of new magnetometric technologies, such as optically pumped magnetometers [17], which are less costly and simpler and more practical to use. This progress might allow fMCG to become part of common clinical practice and to provide invaluable information about fetal health [17].

All four of the above-mentioned EFM techniques are of great benefit to clinical practice. However, in order to obtain all important information regarding the fetal and maternal health state, further research and development is needed, especially in the field of signal-processing algorithms. There is a great need to design and implement reliable and intelligent methods that can improve the processing, analysis and interpretation of fetal and maternal heart signals.

2.2 Artificial Intelligence Methods

The function of most real systems, including human body, is represented by non-linear dynamic behaviour with a certain degree of variability and uncertainty. The processing of such data is difficult and computationally demanding for conventional algorithms [18]. AI-based algorithms are able to deal with this inaccuracy and uncertainty. In addition to that, these algorithms can use these properties to create an adaptive low-cost solution [18, 39]. AI-based algorithms generally imitate human intelligence to solve complex tasks and, at the same time, improve iteratively on the basis of obtained information [23]. There are many partial sub-areas of AI using scientific findings, in particular from the fields of mathematics, statistics or biology. With respect to EFM, it is possible to encounter algorithms based on machine learning (ML), artificial neural networks (ANNs), fuzzy logic or nature-inspired optimization techniques. This section and Fig. 3 provide a basic overview of these sub-areas.

1. Machine Learning: ML-based algorithms are able to learn and automatically improve their performance through experience with the data they work with [40]. According to the learning method, ML algorithms can be divided into four categories: supervised learning, unsupervised learning, semi-supervised learning and reinforcement learning. In supervised learning, a mathematical model is built from a data set containing both inputs and outputs (a class for classification or a value for regression) assigned by human experts [40, 41]. According to [39, 42], this category includes, for example, decision trees (random forest [12] or the C4.5 algorithm [43]), support vector machines (SVM) [11, 44], regression analysis [45], k-nearest neighbor (k-NN) [11] and naive Bayes [46]. In unsupervised learning, the algorithm has only input (unlabeled) data and the output is unknown to it. The algorithm learns to recognize complex processes and patterns and usually creates a suitable, simpler representation of the input data, which it subsequently clusters into groups without the possibility of assessing the correctness of the clustering [40]. According to [39, 42], some of the most well-regarded algorithms in this category are k-means [47, 48], k-medoids [47, 49], hierarchical clustering [47] and fuzzy c-means [47]. Semi-supervised learning combines both approaches: part of the input data contains the required output, while the other (usually larger) part does not [40]. Reinforcement learning is based on rewarding the required behaviour and, conversely, punishing undesired behaviour [41]. Learning thus works on the trial-and-error principle because no training data are available. Nevertheless, neither semi-supervised learning nor reinforcement learning is very widespread in the field of EFM.

2. Artificial Neural Networks: ANN-based algorithms are computing models inspired by the behaviour of biological structures. Such techniques mimic the learning and adapting mechanisms of biological neurons in the brain [39, 50]. The basic network unit is the neuron, which may have an arbitrary number of inputs but only one output. The neurons forming an ANN are mutually connected and transmit signals, which are transformed by means of transfer functions. According to the network topology (structure), ANNs may be divided into feed-forward networks and recurrent neural networks (RNNs) [50]. The simplest feed-forward model for binary classification, composed of a single neuron, is the perceptron; a more powerful single-neuron model is the adaptive linear neuron (ADALINE) [51, 52]. Due to the limited use of the perceptron, this model was expanded to the multilayer perceptron (MLP), a special type of multilayer network in which each layer is fully connected (each neuron in a layer is connected with all neurons in the preceding layer) [52]. Multilayer networks are usually organized into an input layer, intermediate hidden layers and an output layer [50]. ANNs with a large number of layers (tens of layers) are called deep ANNs and form the basis of deep learning for solving very complex linear and non-linear problems. For estimating the parameters (training) of ANNs, the back propagation (BP) algorithm is one of the most popular techniques [39]. This group of networks includes, for example, convolutional neural networks (CNNs) [12, 19, 53], probabilistic ANNs [54], polynomial ANNs [55, 56] and deep belief ANNs [57]. The adaptive neuro-fuzzy inference system (ANFIS) [58,59,60] is also used very often in this field. In RNNs, in addition to signal propagation from the input layer towards the output layer, information is also transmitted back from higher layers to lower layers, in contrast to feed-forward multilayer ANNs [50]. Within the framework of EFM, the RNNs encountered most often are the echo state network (ESN) [61, 62] and long short-term memory (LSTM) [22].

3. Fuzzy Logic: Fuzzy logic is a powerful tool for solving tasks where a solution cannot be found through classical propositional logic, which uses only the two logic values 1 and 0. Fuzzy logic makes it possible to work with all values in the interval \(\langle \)0;1\(\rangle \), so the number of values is infinite [63]. It better captures approximate reasoning, which is characteristic of human reasoning and of an environment full of uncertainties and inaccuracies [64]. The fuzzy model is usually created on the basis of fuzzy rules implemented as a series of if-then rules. Since the fuzzy concept is based on qualitative rather than quantitative expression, it is easier to connect mathematics with real-world applications [63, 64]. In the field of EFM, in addition to classical fuzzy logic [66,67,68], we may also encounter, for example, the fuzzy unordered rule induction algorithm (FURIA) [65].

4. Nature-Inspired Optimization Techniques: Nature-inspired algorithms typically draw on evolution, biological systems, or chemical or physical processes. Such approaches have been demonstrated to be very flexible and effective for solving highly non-linear problems, and their use is appropriate especially for challenging optimization tasks [69, 70]. In order to find an optimal (or near-optimal) solution effectively, they use iterative processes and intelligent learning strategies for the exploration and exploitation of the search space [42]. Within the framework of EFM we may encounter, in particular, evolutionary algorithms, which are inspired by biological evolution, such as genetic algorithms (GA) [71, 72], differential evolution (DE) [73, 74] or evolution strategy (ES) [75]. The second large group consists of swarm-based algorithms inspired by the behaviour of biological swarms, such as particle swarm optimization (PSO) [76, 77], the firefly algorithm (FA) [78] or the moth-flame optimization algorithm (MFO) [79].
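To make the fuzzy-logic item of the list above more concrete, the following minimal sketch evaluates a single if-then rule with membership degrees in the interval from 0 to 1. The rule, the variable names and all numeric breakpoints are hypothetical and chosen only for illustration; they are not clinical thresholds and are not taken from any of the cited studies. Using the minimum for the fuzzy AND is one common choice among several.

```python
def ramp_up(x, lo, hi):
    """Membership degree rising linearly from 0 at `lo` to 1 at `hi`."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)


def ramp_down(x, lo, hi):
    """Membership degree falling linearly from 1 at `lo` to 0 at `hi`."""
    return 1.0 - ramp_up(x, lo, hi)


def fuzzy_and(a, b):
    """Fuzzy conjunction implemented as the minimum (one common choice)."""
    return min(a, b)


def concern_level(baseline_bpm, variability_bpm):
    """Hypothetical rule: IF baseline is low AND variability is low
    THEN concern is high. Breakpoints are illustrative assumptions."""
    low_baseline = ramp_down(baseline_bpm, 100.0, 120.0)
    low_variability = ramp_down(variability_bpm, 3.0, 8.0)
    return fuzzy_and(low_baseline, low_variability)


# Both inputs are "half low", so the rule fires with degree 0.5.
print(concern_level(110.0, 5.5))
```

Unlike a two-valued rule, the output changes gradually as the inputs move across the breakpoints, which is the property the text attributes to fuzzy reasoning.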

3 Application Areas of Artificial Intelligence

In the field of EFM, AI-based algorithms are used in three main areas. The first is noise suppression, especially in fECG signals (the elimination of mECG) [19, 22, 57, 61, 80], but also in the processing of fPCG [60]. The second is feature detection, which in fECG signals includes the detection of fetal QRS (fQRS) complexes [47, 49, 53, 81,82,83,84,85] and the estimation of fHR without the need to detect R peaks [20]. Similarly, in fPCG signals, AI-based algorithms were used to detect fHSs [45, 48]. The last area is the classification of fetal health using signals recorded by means of CTG [11, 43, 86,87,88,89], but also by means of the alternative techniques of fPCG [66, 90, 91] or fMCG [44]. Figure 4 illustrates the areas where AI has been used in the past, marked by the red frame.

3.1 Noise Suppression

A high-quality signal is essential for precise signal analysis and for obtaining correct clinical information. In the case of CTG or fMCG, signal filtering is not as difficult a task as in the case of fECG or fPCG. The extraction of fECG is challenging due to the relatively low SNR of the useful signal (the fetal component), which is overlapped by mECG and other noise in both the time and frequency domains [4, 25]. Therefore, past research in the field of fECG extraction focused especially on testing advanced signal processing methods such as blind source separation methods [92, 93], wavelet transform (WT) [14], empirical mode decomposition (EMD) based algorithms [94, 95] or adaptive filters [15, 96]. Hybrid methods combining adaptive and non-adaptive algorithms turned out to be the most promising [97]. However, in low-quality recordings, even these algorithms failed to provide satisfactory results.

A number of authors (e.g. in [21, 98]) have recently shown that the non-linear fECG domain could be modelled more accurately by means of AI approaches. In the case of fPCG signal extraction, an effective suppression of most types of interference was achieved by means of a modified finite impulse response filter [99] or WT [100]. A more difficult task arises in suppressing mHSs, where there is room for using AI-based algorithms as in the case of fECG.

The AI-based methods for fetal component extraction (both in fPCG and fECG) work on the same principle as adaptive algorithms. The principle is illustrated on the example of fECG extraction, see Fig. 5. These methods use the following inputs: (1) the composite aECG signal, i.e. a mixture of the maternal and fetal components (mECG\(_\text{A}\), fECG) and noise, and (2) the reference thoracic mECG\(_\text{T}\). The AI-based algorithm adapts the thoracic mECG\(_\text{T}\) into the form of the mECG\(_\text{A}\) contained in the aECG input. The distortion of mECG\(_\text{A}\) is caused by measuring this signal far from its source (the mother’s heart) and by the non-linear transformation of the signal as it passes through different layers and tissues in the abdominal area [55]. The mECG\(_\text{T}\) signal adapted by the algorithm into the form of mECG\(_\text{A}\) is finally subtracted from the aECG to obtain the fECG.

A general scheme of fECG extraction by means of AI-based algorithms. The thoracic electrode records the reference mECG\(_\text{T}\) signal and the electrodes on the mother’s abdomen record the aECG, which is a mixture of mECG\(_\text{A}\), fECG and noise. The mECG\(_\text{T}\) and aECG signals enter the AI-based algorithm, which adjusts the mECG\(_\text{T}\) signal into the form of the mECG\(_\text{A}\) signal contained in the aECG. The adjusted mECG\(_\text{T}\) is finally subtracted from the aECG to obtain the final fECG
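The adapt-and-subtract principle described above can be sketched with the classical least mean squares (LMS) adaptive filter standing in for the AI-based block. This is a simplification under stated assumptions: LMS is a linear baseline, not one of the AI methods reviewed here, and it can only capture a linear relationship between the thoracic and abdominal maternal components. The signals are synthetic sinusoids rather than real ECG, and the function name `lms_cancel`, the filter order and the step size are illustrative choices.

```python
import numpy as np


def lms_cancel(reference, primary, order=8, mu=0.01):
    """Adapt `reference` towards its image in `primary` and subtract it.

    Returns the residual, which approximates whatever part of `primary`
    is not linearly predictable from `reference`.
    """
    weights = np.zeros(order)
    residual = np.zeros(len(primary))
    for n in range(order - 1, len(primary)):
        # Most recent `order` reference samples, newest first.
        window = reference[n - order + 1:n + 1][::-1]
        estimate = weights @ window            # estimate of mECG_A
        residual[n] = primary[n] - estimate    # approximates fECG plus noise
        weights += 2 * mu * residual[n] * window  # LMS weight update
    return residual


# Synthetic stand-ins (assumed values, not real recordings).
n = np.arange(4000)
mecg_t = np.sin(2 * np.pi * n / 100)       # reference "thoracic" maternal signal
mecg_a = 0.6 * np.roll(mecg_t, 2)          # attenuated, delayed abdominal image
fecg = 0.05 * np.sin(2 * np.pi * n / 37)   # weak "fetal" component
aecg = mecg_a + fecg                       # abdominal mixture

residual = lms_cancel(mecg_t, aecg)
tail = slice(-1000, None)
print(np.mean(aecg[tail] ** 2), np.mean(residual[tail] ** 2))
```

Once the weights have settled, the residual power in the tail of the record is far below that of the abdominal mixture, because the dominant maternal component has been removed. The AI-based methods reviewed here replace the linear filter with a model that can also follow non-linear distortion.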

3.1.1 Artificial Neural Networks in Noise Suppression

Different types of ANNs are appropriate for fECG extraction especially due to their adaptability to the non-linear and time-varying features of fECG signal [101]. Thanks to their ability to learn and predict, ANNs are able to separate useful signal (i.e. fECG) and noise (i.e. mECG), which overlap in time and frequency domain and reach higher accuracy in fECG extraction in comparison with classical adaptive algorithms, such as the least mean squares (LMS) [102], the normalized LMS (NLMS) [103] or the recursive least squares (RLS) [104]. Moreover, a lower number of calculations is necessary as demonstrated in [21].

- Polynomial Neural Networks: Polynomial ANNs are multilayer networks using a polynomial exponentiation activation function [105]. According to Ayat et al. [56], this method managed to extract the fECG and outperformed the singular value decomposition (SVD) algorithm. In some cases, however, it did not provide satisfactory results: if the thoracic mECG\(_\text{T}\) and aECG signals were recorded from different locations, the morphology of mECG\(_\text{T}\) and mECG\(_\text{A}\) differed and the final fECG signal was contaminated with a significant maternal residue. In the experiments conducted by Assaleh et al. [55], the polynomial ANN managed to sufficiently suppress the maternal component, even when fetal and maternal beats overlapped. On the other hand, the extracted fECG signal contained noise due to muscular activity, so additional post-processing techniques were recommended for its elimination. Interestingly, according to a visual assessment, polynomial expansions of order higher than three did not further improve the quality of the extracted signal. Ahmadi et al. [106] dealt with pre-processing and post-processing of the signal by means of WT before or after the application of the polynomial ANN. Three scenarios were tested: a) WT filtered the aECG signal before the application of the polynomial ANN, b) WT was applied to the extracted fECG signal obtained by the polynomial ANN and c) WT was used for pre-processing of the aECG and subsequently also for post-processing of the extracted fECG. Although the SNR improved in all three scenarios compared with the basic polynomial ANN, the biggest improvement was achieved in variant b), i.e. with WT applied as post-processing.

-

Convolutional Neural Networks CNNs belong to deep, feed-forward, multi-layer networks composed of an input layer, multiple hidden layers and an output layer. Hidden layers contain a convolutional layer, activation layer, pooling layer, fully-connected layer and normalization layers [107]. In convolutional layer, the operation of convolution is applied and multiple feature maps are obtained, which leads to the reduction of memory bulk used by CNNs. The activation layer maps the output of convolutional layer through the activation function to form the feature mapping relationship. The normalization layer serves for standardizing the input data of each layer during the training. Pooling layers appear periodically between convolutional layers going one after another to reduce the amount of data, the complexity of network, to speed up the calculation and to prevent overfitting. Thus, more information intensive features for final classification finally enter into the fully-connected layer (this is a classical MLP). After the fully-connected layer, there is a dropout layer preventing the overfitting in such a way that during the training process, there are several neural units which are with a certain probability temporarily dropped from the network. At the end, the classification network using the function of softmax is used to separate outputs [108]. The CNN-based approach introduced by Fotiadou et al. [19] was used to remove residual noise in extracted fECG signals in which the maternal component was already suppressed. The technique used the convolutional encoder-decoder network with symmetric skip-layer connections. The encoder as well as decoder contained eight convolutional layers, but the convolutional layers of the decoder were symmetrically transposed. Skip connections were placed between every two convolutional as well as transposed convolutional layers. 
When testing the algorithm on simulated signals, its efficiency was evaluated according to the SNR improvement (SNR\(_{\text{imp}}\)) parameter. The final fECG signals were improved (SNR\(_{\text{imp}}\) = 9.5 dB) and the benefit was the keeping of beat-to-beat morphological variations. Moreover, there was no need of any prior information on the power spectra of the noise. To increase the accuracy of fECG extraction, Almadani et al. [109] proposed a combination of two parallel fully CNNs (U-Nets [110]) with transformer encoding. In addition to efficient fECG extraction, the system was able to operate in real time and, according to the authors, could be implemented in a portable device. The CNN turned out to be powerful in combination with Bayesian filter as showed by Jagannath et al. [98] in 2019. The Bayesian filter managed to provide accurate mECG\(_\text{A}\) estimate in the aECG signal and the deep CNN estimated a non-linear relationship between the mECG\(_\text{A}\) and the thoracic mECG\(_\text{T}\), which was non-linearly transformed. A nine-layer network was used with three convolutional layers, three max-pooling layers for the compression of signal features and reduction of complexity and three fully-connected layers. The last layer contained one neuron representing the final fECG signal. The linear activation function was used for all layers, excluding the last one, and the softmax function was used in case of the last fully-connected layer. In terms of efficiency, this combined algorithm surpassed the classical adaptive LMS algorithm. The disadvantage of the algorithm is the high computational time during the training process, and therefore it was not suitable for training large datasets. In 2020, the same authors [57] compared their previously designed combination of CNN with the Bayesian filter to two other networks (the deep belief ANN and the conventional ANN using BP algorithm) which were also combined with the Bayesian filter. 
The conventional ANN had 72 neurons in the input layer, two hidden layers with 84 neurons each, and one output neuron. The deep belief ANN had 98 neurons in the input layer, two hidden layers comprising 84 neurons in total, and one neuron in the output layer. All three methods extracted a high-quality fECG, but the CNN achieved the best SNR improvement (SNR\(_{\text{imp}}\) = 39.73 dB). The limitation of all three algorithms was their high time complexity, which would not allow their implementation in real-time operating devices.
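
The SNR improvement quoted for these methods can be illustrated with a minimal sketch. It assumes the common definition SNR\(_{\text{imp}}\) = SNR\(_{\text{out}}\) − SNR\(_{\text{in}}\), with both terms computed against a known clean reference (which is only available for simulated signals); the cited studies may use a slightly different formulation. The function names and the toy signals are hypothetical.

```python
import numpy as np

def snr_db(clean, observed):
    """SNR in dB of `observed` relative to the known clean reference."""
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(observed, dtype=float) - clean
    return 10.0 * np.log10(np.sum(clean ** 2) / np.sum(noise ** 2))

def snr_improvement_db(clean, noisy_input, filtered_output):
    """Assumed definition: SNR_imp = SNR_out - SNR_in (in dB)."""
    return snr_db(clean, filtered_output) - snr_db(clean, noisy_input)

# Toy data (not ECG): a sine stands in for the clean fECG, and the
# hypothetical "filter" simply halves the noise amplitude.
t = np.arange(1000) / 1000.0
clean = np.sin(2 * np.pi * 5 * t)
noise = 0.5 * np.cos(2 * np.pi * 50 * t)
snr_imp = snr_improvement_db(clean, clean + noise, clean + 0.5 * noise)
print(f"SNR_imp = {snr_imp:.2f} dB")
```

Halving the noise amplitude corresponds to an improvement of 20·log10(2) ≈ 6.02 dB, which gives a sense of scale for the values reported above.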

-

Multilayer Perceptron It is a feed-forward multi-layer network with one or more hidden layers. The main feature of the network is that every neuron in a given layer is connected to all neurons in the preceding layer [52, 54]. Two rules are generally used to select the number of neurons in the hidden layer: a) their number should not exceed twice the number of neurons in the input layer and b) their number should lie between the number of neurons in the input layer and in the output layer [54]. Golzan et al. [80] suggested an MLP in which the connections between the input and hidden neurons were weighted by an adjustable weight parameter. The authors compared the efficiency of two training methods: maximum likelihood (which presumes that the weights have a fixed unknown value) and Bayesian learning (which accounts for the uncertainty of the weight vector by assigning it a probability distribution). The algorithms were tested on real recordings and their efficiency was evaluated using the accuracy (ACC), sensitivity (SE), and positive predictive value (PPV) parameters. The algorithm based on Bayesian learning turned out to be superior, with ACC = 94.86%, SE = 96.49% and PPV = 93.79%, which may be attributed to its more effective use of training data.
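
The evaluation parameters used here and in the rest of this section (ACC, SE, PPV, and later SP and F1) can be sketched from confusion-matrix counts. This is a minimal illustration that assumes the standard definitions; individual studies cited in this review may define ACC differently (for example, without true negatives in beat detection). The counts below are invented for the example.

```python
def detection_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics, returned as fractions in [0, 1]."""
    se = tp / (tp + fn)                      # sensitivity (recall)
    ppv = tp / (tp + fp)                     # positive predictive value
    sp = tn / (tn + fp)                      # specificity
    acc = (tp + tn) / (tp + fp + tn + fn)    # accuracy
    f1 = 2 * se * ppv / (se + ppv)           # harmonic mean of SE and PPV
    return {"ACC": acc, "SE": se, "PPV": ppv, "SP": sp, "F1": f1}

# Hypothetical counts, chosen only to exercise the formulas.
metrics = detection_metrics(tp=90, fp=10, tn=85, fn=15)
for name, value in metrics.items():
    print(f"{name} = {100 * value:.2f}%")
```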

-

Long Short Term Memory LSTM networks are a specific type of RNN able to remember information for a long period of time and to learn long-term dependencies. A chain structure of recurring ANN modules, each containing four layers, is typical for the LSTM. The classic LSTM unit contains an input gate, an output gate, a forget gate and a cell. The cell remembers values over time intervals and the gates decide which information will be removed and which will be retained [111]. A two-stage architecture combining slow and fast LSTM was employed by Zhou et al. [22]. The method used the slow LSTM to filter out mECG and the fast LSTM to highlight fECG, eliminate the remaining noise and improve the computing efficiency. Compared to the classic LSTM, the improved method reduced the total number of calculations by 50% without degrading the efficiency of the algorithm (on the contrary, the SNR was slightly improved: SNR\(_{\text{imp}}\) = 4.9 dB). Moreover, the high adaptability of the method was demonstrated on different types of signals with different levels of noise. However, the algorithm has been tested only on synthetic signals, so its efficiency still needs to be verified on real signals. The LSTM combined with the autoencoder (AE) model was tested on real data by Ghonchi et al. [112]. The AE is a multilayer symmetric network containing an encoder and a decoder, which was able to improve the feature representation of the input data. Extensive testing was performed on two datasets and in both cases an average F1 > 92% was achieved. The advantage of the approach was the preservation of prior and posterior information of the input signal. However, the performance of the algorithm decreased significantly for signals with low SNR.
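
The gate mechanism described above can be sketched as a single time step of a classic LSTM unit. This is a generic textbook formulation written for illustration, not the slow/fast architecture of Zhou et al. [22]; the weight shapes, the random initialisation and the toy input are assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step. Shapes: W (4H, D), U (4H, H), b (4H,)."""
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new content to store
    f = sigmoid(z[H:2 * H])        # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])    # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])    # candidate values for the cell
    c = f * c_prev + i * g         # updated cell state (the "memory")
    h = o * np.tanh(c)             # updated hidden state (the output)
    return h, c

# Toy run on a short sine segment with small random weights (hypothetical values).
rng = np.random.default_rng(0)
D, H = 1, 4
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for sample in np.sin(np.linspace(0, 2 * np.pi, 20)):
    h, c = lstm_step(np.array([sample]), h, c, W, U, b)
```

The three gates and the candidate layer are the four layers that each recurring module contains.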

-

Echo State Networks The ESN algorithm is a type of RNN in which the reservoirs (weights between the input and the hidden layers) are generated randomly and only the weights of the output layer are trained and updated [4, 61]. The hidden layer is very sparsely connected (typically 1% connectivity) [113]. In contrast to adaptive algorithms, ESNs can work with a certain degree of uncertainty. Their limitation is a strong dependence on the optimal setting of hyper-parameters. The challenge in implementing this method is therefore the optimization of a relatively large number of parameters, especially the number of units in the reservoir, the spectral radius of the reservoir connection matrix, the input scaling of the input weight matrix, and the leakage rate [4, 62]. Behar et al. [62] used a grid search to optimize the ESN parameters. Although this type of optimization led to effective suppression of mECG and a high-quality extracted fECG, it was not efficient in terms of time. Therefore, the same authors [61] suggested a random search for optimizing the ESN and compared its efficiency with the grid search. The ESN optimized by random search performed slightly worse (F1 = 85.6%) than the ESN optimized by grid search (F1 = 87.9%). However, only 512 repetitions were used for the random search, whereas the grid search was repeated 798 times. The final optimal parameters differed between the two methods. The optimal number of reservoir units was 90 according to the grid search and 135 according to the random search. The spectral radius of the reservoir connection matrix was set to 0.4 according to the grid search and 0.821 according to the random search. The input scaling of the input weight matrix was set to 1 for the grid search, whereas the optimal value for the random search was 0.622. 
The leakage rate also differed: it was set to 0.4 for the grid search and 0.974 for the random search.
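
The role of the four hyper-parameters can be made concrete with a minimal leaky-integrator ESN. The sketch below uses the grid-search values quoted above (90 units, spectral radius 0.4, input scaling 1, leakage rate 0.4), but everything else is an assumption: the task is a toy one-step-ahead prediction of a sine, the connectivity is raised to 10% so that the small demo reservoir is well behaved, and the ridge-regression readout is one common choice rather than the procedure of Behar et al.

```python
import numpy as np

def make_reservoir(n_units, spectral_radius, connectivity, rng):
    """Random sparse reservoir matrix rescaled to the requested spectral radius."""
    W = rng.uniform(-1.0, 1.0, size=(n_units, n_units))
    W = W * (rng.random((n_units, n_units)) < connectivity)
    radius = np.max(np.abs(np.linalg.eigvals(W)))
    return W * (spectral_radius / radius)

def run_reservoir(u, W_in, W, leak):
    """Collect reservoir states for a 1-D input sequence (leaky-integrator update)."""
    x = np.zeros(W.shape[0])
    states = []
    for u_t in u:
        pre = np.tanh(W_in @ np.array([1.0, u_t]) + W @ x)   # bias + input + recurrence
        x = (1.0 - leak) * x + leak * pre
        states.append(x.copy())
    return np.array(states)

rng = np.random.default_rng(0)
n_units, rho, in_scale, leak = 90, 0.4, 1.0, 0.4
W = make_reservoir(n_units, rho, connectivity=0.1, rng=rng)   # fixed, never trained
W_in = in_scale * rng.uniform(-1.0, 1.0, size=(n_units, 2))   # fixed, never trained

u = np.sin(0.2 * np.arange(600))
X = run_reservoir(u[:-1], W_in, W, leak)
y = u[1:]                                  # target: the next input sample
washout = 100                              # discard the initial transient
Xw, yw = X[washout:], y[washout:]

# Only the output weights are trained (ridge regression).
ridge = 1e-6
W_out = np.linalg.solve(Xw.T @ Xw + ridge * np.eye(n_units), Xw.T @ yw)
mse = np.mean((Xw @ W_out - yw) ** 2)
```

Because the reservoir and input weights stay fixed, each candidate hyper-parameter setting only requires solving one linear system, which is what makes grid or random search over these settings feasible.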

-

Adaptive Linear Neural Network ADALINE is a one-layer network with multiple neurons. The principle of the network consists in minimizing the mean squared error of the predictions of a linear function. The objective is therefore to find the weight values that give the lowest error (the difference between the required signal and the output signal) [114]. ADALINE shares the characteristics of the perceptron: it is a single-layer neural model, has a linear decision boundary, and is used for binary classification. However, it is considered an improved version of the perceptron, because ADALINE uses a continuous response to update the weights, whereas the perceptron uses a binary response. The continuous response allows the weight updates to reflect the actual error and thus makes the resulting extraction more efficient [52, 114]. The efficiency of the algorithm depends on the following parameters: the dimensionality of the input space, the learning rate, the momentum and the initial weights, which are adjusted until the difference between the thoracic mECG\(_\text{T}\) signal and the mECG\(_\text{A}\) in the aECG signal is minimized (in the case of fECG extraction). According to Amin et al. [115] and Reaz et al. [116], the algorithm worked efficiently when a high learning rate of 0.8, a low momentum of 0.5, and very small non-zero initial weights were used. Nevertheless, according to the results of Kahankova et al. [51], a lower learning rate of 0.07 performed better. The optimal input space dimensionality lay in the interval 20–30.
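
The weight update described above can be sketched as an ADALINE noise canceller in which a tapped delay line of the thoracic reference is mapped onto the maternal component of the abdominal signal, and the remaining error serves as the fetal estimate. This is a minimal sketch under stated assumptions: sinusoids stand in for the ECG components, and the learning rate, momentum and number of taps are demo values chosen for this toy input scaling, not the settings recommended in [51, 115, 116].

```python
import numpy as np

def adaline_cancel(primary, reference, n_taps, lr, momentum=0.0):
    """Delta-rule (LMS) update with momentum; returns the error signal and weights."""
    w = np.zeros(n_taps)
    dw_prev = np.zeros(n_taps)
    error = np.zeros(len(primary))
    for n in range(n_taps - 1, len(primary)):
        x = reference[n - n_taps + 1:n + 1][::-1]    # tapped delay line, newest first
        y = w @ x                                    # continuous (linear) response
        error[n] = primary[n] - y                    # what the reference cannot explain
        dw = lr * error[n] * x + momentum * dw_prev  # update proportional to the error
        w += dw
        dw_prev = dw
    return error, w

# Toy signals (not real ECG): one sinusoid per component.
n = np.arange(4000)
reference = np.sin(2 * np.pi * n / 50)                       # stand-in for mECG_T
maternal_abdominal = 0.8 * np.sin(2 * np.pi * n / 50 - 0.6)  # stand-in for mECG_A
fetal = 0.2 * np.sin(2 * np.pi * n / 17)                     # stand-in for fECG
abdominal = maternal_abdominal + fetal                       # stand-in for aECG

fetal_estimate, weights = adaline_cancel(abdominal, reference, n_taps=20,
                                         lr=0.002, momentum=0.5)
tail = slice(-1000, None)
residual = np.mean((fetal_estimate[tail] - fetal[tail]) ** 2)
print(f"residual power after convergence: {residual:.5f}")
```

The stable range of the learning rate depends on the input power and on the number of taps, which may partly explain why different studies report different optimal values.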

-

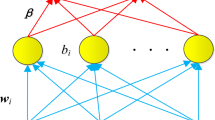

Adaptive Neuro-Fuzzy Inference System One of the most tested AI approaches for noise suppression and extraction of a usable fECG signal is the ANFIS method. It combines the ANN and the Takagi-Sugeno fuzzy inference system, and thus utilizes the advantages of both fuzzy expert systems (working with inaccurate data) and the ANN (learning from the environment) [4]. Apart from the classic Takagi-Sugeno type-1 structure, the Takagi-Sugeno type-2 structure proved to be suitable and, according to Ahmadieh et al. [117], surpassed the type-1 structure. The superiority of type-2 over type-1 is given mainly by its ability to capture the non-linearity of the model. The basic structure of ANFIS consists of five layers: the first is an adaptive input layer with two inputs (aECG and mECG\(_\text{T}\)), which performs fuzzification of the input linguistic variables; the second is a non-adaptive rule layer that multiplies the output signals of the first layer to determine the weight of each rule; the third is a non-adaptive normalising layer that determines the proportion of each rule’s weight to the sum of the weights of all rules; the fourth is an adaptive defuzzification layer; and the last is a summation layer represented by a single non-adaptive node that determines the output (fECG) [58]. According to Assaleh [58], the proper setting of the algorithm parameters had a substantial impact on the resulting quality of fECG extraction. Based on this research, the author recommended using four membership functions (this corresponded to 16 fuzzy rules, 53 nodes, 48 linear and 24 non-linear parameters). However, the setting was chosen by trial and error, and the efficiency of the algorithm on real recordings was assessed only visually from the extracted fECG signals. Emuoyibofarhe et al. 
[118] made an important remark concerning the use of the ANFIS method: the morphology of the extracted fECG signal remains unchanged with respect to the original fetal component in the aECG signal. However, the extracted fECG signal contained visible residues of the maternal component, and combining the ANFIS method with other techniques was recommended to enhance the filtration. For these reasons, many authors, including Saranya et al. [59], Nasiri et al. [77], and Elmansouri et al. [119], tuned the ANFIS structure using PSO, which led to the extraction of high-quality fECG. A combination of DE, ANFIS and extended Kalman smoother (EKS) designed by Panigrahy et al. [74] was also used to enhance the efficiency of ANFIS. The combined approach allowed fECG extraction with ACC = 90.66%, without requiring any user interaction for initialization of the parameters. When implementing the PSO-based variants, however, one must consider the high computational cost of PSO. Another method that can be used to enhance the quality of ANFIS extraction, especially in the post-processing phase, is the WT method. This combination was proposed, for instance, by Swarnalath et al. [120], Jothi et al. [121], and Hemajothi et al. [122]. An interesting comparison was published by Swarnalath et al. [120], who tested three scenarios: a) ANFIS only, b) WT for signal pre-processing followed by ANFIS, and c) ANFIS followed by WT for post-processing. The results showed that variant c) extracted a higher-quality fECG than the other two variants. Jothi et al. [121] used the undecimated WT combined with ANFIS instead of the classic discrete WT; this combination turned out to be superior to the combination of discrete WT and ANFIS. Current research also incorporates ANFIS and WT into systems for remote monitoring. Kumar et al. 
[123] used orthogonal frequency division multiplexing for the transmission of mECG\(_\text{T}\) and aECG signals, followed by a combination of ANFIS and WT for the extraction of fECG from the received signals. In fECG extraction, the WT algorithm was thus very effective as a post-processing method that could enhance fetal R-peaks. However, according to the findings in [4], its application was more suitable for the determination of fHR than for morphological analysis, because the WT altered the morphology of fQRS complexes and made the subsequent morphological analysis less accurate. Most of the studies mentioned above used the aECG signal and the mECG\(_\text{T}\) signal acquired from the mother’s chest as inputs of the ANFIS algorithm. However, the morphology of mECG\(_\text{T}\) differs from that of mECG\(_\text{A}\), and mECG\(_\text{T}\) is usually sensed with poor quality, resulting in deteriorated extraction by the ANFIS structure [4]. For these reasons, Al-Zaben et al. [124] focused on using aECG signals and the SVD algorithm only. SVD provided high-quality estimates of mECG\(_\text{A}\) and of an aECG with an emphasised fetal component from the aECG signals, which were subsequently used in ANFIS. According to Skutova et al. [60], ANFIS also proved suitable for suppressing interference in PCG signals. Two synthetic input signals were used for each tested recording; the first contained only a maternal PCG signal, while the other contained an abdominal PCG signal combining mHSs, fHSs, and biological and ambient noise. The number of tested membership functions ranged from 2 to 9, the number of epochs from 10 to 30, and four shapes of membership function were tested (triangular, trapezoidal, bell-shaped, and Gaussian). The research showed that a growing number of membership functions and epochs, and the use of trapezoidal and bell-shaped membership functions, led to higher-quality filtration (higher SNR values). 
As a compromise between extraction quality and computation time, the setting with 6 membership functions and 10 to 20 epochs was considered optimal. Nevertheless, the authors recommended combining the ANFIS method with another algorithm, such as PSO or GA, to suppress the residual mHSs.
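
The five-layer ANFIS structure described above can be sketched as a forward pass of a two-input, first-order Takagi-Sugeno system. The sketch assumes generalized bell membership functions (three parameters each), which is consistent with the parameter counts quoted for the configuration of Assaleh [58] (four functions per input give 16 rules, 48 linear and 24 non-linear parameters), but the actual shapes and values used in the cited studies are not reproduced here. Training of the adaptive layers is omitted.

```python
import numpy as np

def bell(x, a, b, c):
    """Generalized bell membership function with width a, slope b and centre c."""
    return 1.0 / (1.0 + np.abs((x - c) / a) ** (2 * b))

def anfis_forward(x1, x2, premise, consequent):
    """premise: (2, n_mf, 3) bell parameters; consequent: (n_mf**2, 3) rows [p, q, r]."""
    mu1 = bell(x1, *premise[0].T)                  # layer 1: fuzzification of input 1
    mu2 = bell(x2, *premise[1].T)                  # layer 1: fuzzification of input 2
    w = np.outer(mu1, mu2).ravel()                 # layer 2: rule firing strengths (product)
    w_norm = w / w.sum()                           # layer 3: normalised firing strengths
    f = consequent @ np.array([x1, x2, 1.0])       # rule outputs p*x1 + q*x2 + r
    return float(np.sum(w_norm * f))               # layers 4 and 5: weighted sum

n_mf = 4
centres = np.linspace(-1.0, 1.0, n_mf)
one_input = np.column_stack([np.full(n_mf, 0.5), np.full(n_mf, 2.0), centres])
premise = np.stack([one_input, one_input])         # non-linear (premise) parameters
consequent = np.zeros((n_mf * n_mf, 3))            # linear (consequent) parameters
consequent[:, 2] = 3.0                             # every rule outputs the constant 3

output = anfis_forward(0.2, -0.4, premise, consequent)
print(premise.size, consequent.size, output)
```

With identical constant consequents the normalised weights sum to one, so the output equals that constant regardless of the inputs, which is a convenient sanity check for the layer arithmetic.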

3.1.2 Nature-Inspired Optimization Techniques in Noise Suppression

Nature-inspired optimization algorithms are suitable for estimating the global optima of challenging optimization problems. They are usually implemented in combination with other algorithms to achieve better accuracy [42, 125]. Algorithms that require the optimization of a larger number of parameters (e.g. adaptive methods or ANNs) [59, 76, 79] can provide accurate and time-effective solutions thanks to nature-inspired intelligence algorithms, and could be implemented in a device operating in real time.

-

Differential Evolution DE is an evolutionary algorithm inspired by biological evolution. Like other evolutionary algorithms, DE uses the conventional operations of mutation, crossover, and selection [73, 126]. The search is based on the selection of an initial population and a subsequent simulation of its development under the control of evolution mechanisms. As the population evolves from one generation to the next, bad solutions tend to disappear, while good solutions frequently “interbreed,” creating even better solutions (the fitness function of the algorithm improves). The aim is therefore to find the generation of the population that offers the best solution to the problem [42]. Besides the ANFIS-EKS combination [74] (mentioned in the ANFIS subsection), DE was also implemented in combination with an adaptive algorithm for the purpose of fECG extraction [73]. The DE method helped to optimize the adaptive filter coefficients. The DE parameters were set to a population size of 20, a scaling factor of 0.5, a crossover ratio of 0.9, and a maximum of 500 cycles. The results were compared to the classic LMS filter. According to a visual comparison of the extracted fECG, residues of maternal QRS (mQRS) complexes were visible when LMS was used. These residues were fully suppressed when DE was used with the adaptive filter, which allowed accurate detection of the fQRS complexes.
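
The mutation, crossover and selection operations can be sketched with the parameter values quoted above (population size 20, scaling factor 0.5, crossover ratio 0.9, 500 cycles). The sketch assumes the common DE/rand/1/bin variant and a toy cost function (recovering three hypothetical FIR filter coefficients from noise-free data); the variant, the cost function and the bounds used in [73] are not specified here and may differ.

```python
import numpy as np

def differential_evolution(cost, bounds, pop_size=20, F=0.5, CR=0.9,
                           max_gen=500, seed=0):
    """Minimal DE/rand/1/bin minimiser (assumed variant)."""
    rng = np.random.default_rng(seed)
    low, high = np.asarray(bounds, dtype=float).T
    dim = low.size
    pop = rng.uniform(low, high, size=(pop_size, dim))        # initial population
    fitness = np.array([cost(p) for p in pop])
    for _ in range(max_gen):
        for i in range(pop_size):
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, size=3, replace=False)]
            mutant = np.clip(a + F * (b - c), low, high)      # mutation
            cross = rng.random(dim) < CR                      # binomial crossover mask
            cross[rng.integers(dim)] = True                   # keep at least one mutant gene
            trial = np.where(cross, mutant, pop[i])
            trial_fit = cost(trial)
            if trial_fit <= fitness[i]:                       # selection
                pop[i], fitness[i] = trial, trial_fit
    best = np.argmin(fitness)
    return pop[best], fitness[best]

# Toy problem: find the three filter taps that reproduce a desired signal.
rng = np.random.default_rng(1)
x = rng.standard_normal(300)
X = np.column_stack([x[2:], x[1:-1], x[:-2]])    # current sample and two delays
true_w = np.array([0.8, -0.3, 0.1])              # hypothetical "true" coefficients
d = X @ true_w

def mse_cost(w):
    return np.mean((d - X @ w) ** 2)

best_w, best_cost = differential_evolution(mse_cost, [(-1.0, 1.0)] * 3)
print(np.round(best_w, 4), best_cost)
```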

-

Particle Swarm Optimization The PSO algorithm belongs to a group of algorithms inspired by the behaviour of biological swarms, in this case a flock of birds searching for food. Particles, each defined by its position, speed, and memory of its previous successful searches, are created and then moved around the defined search space. Their positions and speeds are updated iteratively based on the fitness function. The search pattern of each particle is influenced by its own experience and by the experience of more successful particles within the flock [127]. In their tests of non-linear fECG extraction, most authors [59, 77, 119] used the PSO method in combination with ANFIS, as mentioned earlier. PSO is also a suitable tool for optimizing an adaptive algorithm, as mentioned by Anoop et al. [76]. According to Panigrahy et al. [128], another option is to use PSO to initialize and optimize the EKS parameters which participate in modelling the mECG\(_\text{T}\) signal according to the morphology of mECG\(_\text{A}\) in aECG. The combination of PSO and EKS yielded ACC = 89.7%, surpassing the basic EKS and the adaptive RLS method.
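
The interplay of position, speed and memory can be sketched with a basic global-best PSO. The inertia and acceleration coefficients, the swarm size and the toy cost function are assumptions chosen for illustration; they are not the settings used in the cited studies.

```python
import numpy as np

def pso(cost, bounds, n_particles=30, n_iter=200, inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal global-best PSO minimiser."""
    rng = np.random.default_rng(seed)
    low, high = np.asarray(bounds, dtype=float).T
    pos = rng.uniform(low, high, size=(n_particles, low.size))   # particle positions
    vel = np.zeros_like(pos)                                      # particle speeds
    best_pos = pos.copy()                                         # each particle's memory
    best_cost = np.array([cost(p) for p in pos])
    g = np.argmin(best_cost)
    g_pos, g_cost = best_pos[g].copy(), best_cost[g]              # best of the whole swarm
    for _ in range(n_iter):
        r1, r2 = rng.random(pos.shape), rng.random(pos.shape)
        vel = (inertia * vel
               + c1 * r1 * (best_pos - pos)      # pull towards own best position
               + c2 * r2 * (g_pos - pos))        # pull towards the swarm's best position
        pos = np.clip(pos + vel, low, high)
        costs = np.array([cost(p) for p in pos])
        improved = costs < best_cost
        best_pos[improved], best_cost[improved] = pos[improved], costs[improved]
        g = np.argmin(best_cost)
        g_pos, g_cost = best_pos[g].copy(), best_cost[g]
    return g_pos, g_cost

# Toy cost: squared distance to a hypothetical optimal parameter pair.
target = np.array([0.3, -0.2])

def toy_cost(p):
    return float(np.sum((p - target) ** 2))

found, found_cost = pso(toy_cost, [(-1.0, 1.0)] * 2)
print(np.round(found, 4), found_cost)
```

In the applications above, the cost function would instead score a candidate set of ANFIS, adaptive-filter or EKS parameters by the quality of the extracted fECG, so each cost evaluation is itself expensive.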

-

Moth-Flame Optimization Current research in the field of fECG focuses on implementing relatively new algorithms, one of which is MFO, designed in 2015 [129]. The algorithm was inspired by the navigation method used by moths. Moths maintain a fixed angle with respect to the moon, which allows them to travel long distances in a straight line. When moths see an artificial light, they try to maintain a similar angle with respect to it. However, the artificial light is much closer than the moon, causing the moths to spiral around the light instead of flying forward [129, 130]. Moths are therefore regarded as search agents moving in the search space, while the flames represent the best positions. First, the positions of the moths within the search space are generated randomly and evaluated with the fitness function, which designates the best positions. The positions of the moths are then updated to achieve better positions with respect to the flames. The whole procedure is repeated until the termination criterion is reached [130]. MFO combined with the adaptive LMS algorithm, designed by Jibia et al. [79], proved to be a suitable combination, in which the MFO method excelled in high global search capability, simplicity, flexibility, and easy implementation.

3.2 Feature Detection

In order to analyse the filtered signals, the key signal features must be detected and extracted. For these purposes, AI was used to detect fQRS complexes in an fECG signal [47, 49, 53, 81, 82, 83, 84, 85], to estimate fHR [20], or to detect fHSs in an fPCG signal [45, 48]. There are two types of fHSs in a noiseless fPCG signal (S\(_1\) and S\(_2\)). The S\(_1\) sound is generated by the closing of the mitral and tricuspid valves, while the S\(_2\) sound is generated by the closing of the aortic and pulmonary valves [32, 131]. The S\(_1\) sound is usually detected to determine the fHR in an fPCG signal and corresponds to the R peak in an fECG signal.
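
Once R peaks (or S\(_1\) sounds) have been detected, the fHR follows directly from the intervals between successive detections. The sketch below shows this step with invented peak times; the function name is hypothetical.

```python
import numpy as np

def fhr_from_peaks(peak_times_s):
    """Instantaneous heart rate (bpm) from successive peak times given in seconds."""
    intervals = np.diff(np.asarray(peak_times_s, dtype=float))   # beat-to-beat intervals
    return 60.0 / intervals

# Hypothetical fetal R-peak (or S1) times in seconds.
peaks = [0.00, 0.42, 0.85, 1.27, 1.70]
fhr = fhr_from_peaks(peaks)
print(np.round(fhr, 1))
```

A missed or falsely detected beat roughly halves or doubles the affected value, which is why detection accuracy matters so much for the subsequent fHR analysis.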

3.2.1 Artificial Neural Networks in Feature Detection

Analysis of fECG recordings, including the detection of fQRS complexes, is generally considered a challenging task. This is mainly due to the complicated suppression of mQRS complexes, residues of which make accurate detection of fQRS complexes difficult [47]. Various types of ANNs proved to be very promising for this task [53, 81, 82, 83, 84, 85]. According to [20], fHR could be estimated without having to detect R peaks.

-

Convolutional Neural Networks In 2018, Lee et al. [53] implemented a 10-layer CNN that was able to reliably detect fQRS complexes without having to eliminate mECG from the input signal. The aECG signals were fed into the CNN in short segments of 100 samples, and the network output yielded the probability of the input belonging to class 0 (not an fQRS complex) or class 1 (an fQRS complex). The network comprised a total of seven convolutional layers, two fully-connected layers, and one softmax layer. The designed approach provided better detection of fQRS (SE = 89.06%, PPV = 92.77%) than the earlier-tested CNN of Zhong et al. [85], which achieved SE = 80.54%, PPV = 75.33%, and F1 = 77.85%. This was probably because the former CNN was multi-channel and had a deeper architecture, whereas the less effective CNN had a single channel and only three convolutional layers. In addition to the time domain, CNNs can also detect fQRS complexes in the time-frequency domain. This approach was used by Lo et al. [81], who normalized and segmented four input aECG signals in the pre-processing phase and then applied a short-time Fourier transform (STFT) to acquire a time-frequency representation of the signals. The representation was used as the input of a CNN containing two convolutional layers, two pooling layers, and one fully-connected layer. Individual features were classified into four groups representing (1) mQRS complexes, (2) fQRS complexes, (3) overlapping mQRS and fQRS complexes, and (4) noise. The classification results of the individual aECG recordings were merged to derive the final detection of fQRS complexes. With ACC = 87.58%, the CNN substantially surpassed the k-NN algorithm, whose average ACC was 77.78%. Vo et al. [84] designed an innovative approach using a 1-D octave CNN for detecting fQRS complexes without the need for a reference mECG\(_\text{T}\). 
The method combined CNN and RNN with a residual network architecture. Although the combined approach did not require any substantial data pre-processing, it achieved very high performance (F1 = 91.1%). The method was able to acquire more contextual information and reduce temporal redundancy, thus decreasing the computational complexity. However, the need to set a large number of parameters appropriately can be considered a limitation of this algorithm. Fotiadou et al. [20] used a combination of a dilated inception CNN and LSTM to estimate fHR and to capture its short-term and long-term temporal dynamics. The fHR could thus be estimated without having to detect R peaks. The authors selected this approach because the SNR was low in some cases, making the detection of R peaks difficult. The CNN was used to extract features from the fECG signal, while the LSTM was used to model temporal dynamics and subsequently estimate fHR. The combination of algorithms was tested on real recordings and its effectiveness was evaluated using a positive predictive agreement (PPA). High accuracy of fHR determination was achieved, with an average PPA = 98.45%; however, the authors stated that the method is limited by the necessity to set up many parameters (e.g. number of layers, dilation rates, number of nodes in each layer, type of layers). To eliminate these disadvantages of CNNs, i.e. the high computational complexity and the need to set a large number of parameters, Krupa et al. [132] proposed a MobileNet-based approach for the detection of fQRS complexes. MobileNet is an improved type of CNN with low computational demands, low latency and low power consumption. The network uses a much lower number of parameters since its architecture is composed of depthwise separable convolutions. The efficiency of the network is not reduced, as high accuracy (ACC = 91.32%) was achieved when tested on real signals. 
According to the authors, this solution is also suitable for the Internet of Things environment and thus for remote fetal monitoring.
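
The parameter saving of depthwise separable convolutions can be illustrated with a short calculation. The sketch counts the weights of one 1-D layer (biases ignored) for an ordinary convolution and for its depthwise separable counterpart; the kernel size and channel counts are hypothetical and do not describe the network of Krupa et al. [132].

```python
def conv1d_params(kernel_size, c_in, c_out):
    """Weights of a standard 1-D convolution: every output channel sees every input channel."""
    return kernel_size * c_in * c_out

def depthwise_separable_params(kernel_size, c_in, c_out):
    """Depthwise step (one kernel per input channel) plus 1x1 pointwise mixing step."""
    depthwise = kernel_size * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

# Hypothetical layer: kernel of 9 samples, 32 input channels, 64 output channels.
standard = conv1d_params(9, 32, 64)
separable = depthwise_separable_params(9, 32, 64)
print(standard, separable, round(separable / standard, 3))
```

For these values the separable layer needs 2336 weights instead of 18432, i.e. roughly one eighth, which follows from the general ratio 1/c_out + 1/kernel_size.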

-

Echo State Networks A data-driven statistical learning approach combining ESN and statistics-based dynamic programming algorithms for detecting fQRS complexes was explored by Lukosevicius et al. [82]. In the first step, an ESN was trained to mark fetal R-peaks in a signal with cancelled mECG. The ESN was set up as follows: reservoir size of 1000 or 500, leakage rate of 0.9, spectral radius of the reservoir connection matrix of 0.9 or 0.94, and input scaling of the input weight matrix of 0.1 or 0.08. Dynamic programming then took the signal with marked fetal R-peaks produced by the ESN and interpreted it in a probabilistic way. Various probabilistic interpretations were tested, such as a direct interpretation based on threshold settings, an interpretation assessing the length of the R-R interval, an interpretation assessing the R-R interval variation, or a combination of the second and third interpretations. It was shown that using a substantially smaller reservoir had a lower impact on the accuracy of the algorithm than using a less powerful dynamic programming algorithm. The benefit of the algorithm is that no reference mECG\(_\text{T}\) signal is needed.

3.2.2 Machine Learning in Feature Detection

ML-based algorithms are able to automatically identify patterns in fECG or fPCG signals and classify them in an uncertain environment [42]. Both supervised and unsupervised learning algorithms proved to be effective.

-

K-means and K-medoids Both algorithms are based on unsupervised learning and belong to the group of partitioning clustering methods [49]. The goal is to partition the objects into a pre-selected number of clusters. The objects are grouped into clusters by minimizing the sum of squared distances between the objects and the cluster centroids. The k-means algorithm is iterative: it randomly selects the initial locations of the centroids, assigns objects to the closest centroids and thus creates clusters. The position of each centroid is then recalculated so that it represents the mean of the objects assigned to it. In the next step, objects are again assigned to the newly determined centroids, and the process repeats until the positions of the centroids stabilize [133, 134]. However, the k-means algorithm is susceptible to outliers. This drawback is eliminated by the k-medoids method, which uses medoids instead of centroids [133]. The k-means method proved to be more suitable for smaller datasets, while the k-medoids method was more effective for larger ones [134]. Castillo et al. [49] used the k-medoids clustering algorithm for partitioning the features of aECG signals. The input signals were pre-processed using WT and the features were clustered with k-medoids into three groups: (1) mQRS, (2) fQRS, and (3) noise. The clustering process was based on the amplitude and time distance of all local maxima followed by a local minimum. The method offered high accuracy (ACC = 97.19%) in detecting fQRS complexes and subsequently determining fHR across a wide range of signals with various characteristics, including signals with noisy sections, and surpassed classic threshold techniques. For the purpose of determining fHR from the fECG, Alvarez et al. [47] compared k-means, k-medoids, and two more clustering algorithms: hierarchical and fuzzy c-means. 
The input signals were pre-processed using the WT method and the signal features were clustered into three groups (mQRS complexes, fQRS complexes and noise) using the same rule as in [49]. The most accurate detection of fQRS complexes and determination of fHR, with ACC = 94.8%, was achieved using k-means based on city-block distances, followed by the k-medoids algorithm based on squared Euclidean distances, which yielded ACC = 94.5%. Fuzzy c-means yielded a less accurate detection (ACC = 93.7%), while hierarchical clustering provided the worst results (ACC = 87.8%). The k-means method combined with independent component analysis (ICA) for processing fPCG signals was presented by Jimenez-Gonzalez et al. [48]. Real abdominal recordings were decomposed by the ICA method into individual source components. With k-means, patterns of the individual ICA components were distinguished and the components were grouped into clusters corresponding to fHSs, mHSs, maternal respiration and noise based on their spectral similarities. The authors evaluated the combined method using the specificity (SP) and SE parameters and achieved a high SP = 99% but a low SE = 68%.
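
The assign-and-recompute loop of k-means can be sketched on synthetic peak features. The sketch uses plain k-means with squared Euclidean distances and a deterministic initialisation (both simplifications; the text above describes random initialisation, and the cited studies also used city-block distances and k-medoids). The two features per peak (amplitude and time distance) and their values are invented to mimic the three groups of noise, fQRS and mQRS.

```python
import numpy as np

def kmeans(points, k, n_iter=100):
    """Plain k-means; initial centroids are spread over the points sorted by feature 0."""
    points = np.asarray(points, dtype=float)
    order = np.argsort(points[:, 0])
    picks = np.linspace(0, len(points) - 1, k).astype(int)
    centroids = points[order[picks]].copy()
    for _ in range(n_iter):
        # Assignment step: each point goes to its closest centroid.
        dist = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        # Update step: each centroid becomes the mean of its assigned points.
        new = np.array([points[labels == j].mean(axis=0) if np.any(labels == j)
                        else centroids[j] for j in range(k)])
        if np.allclose(new, centroids):
            break                                   # centroids have stabilised
        centroids = new
    return labels, centroids

# Synthetic features: [peak amplitude, time distance to the previous peak in s].
rng = np.random.default_rng(0)
noise_peaks = rng.normal([0.05, 0.10], 0.01, size=(30, 2))
fqrs_peaks = rng.normal([0.30, 0.43], 0.01, size=(30, 2))
mqrs_peaks = rng.normal([1.00, 0.80], 0.01, size=(30, 2))
features = np.vstack([noise_peaks, fqrs_peaks, mqrs_peaks])

labels, centroids = kmeans(features, k=3)
print(np.round(centroids, 2))
```

Replacing the mean in the update step by the cluster member with the smallest total distance to the others would turn this into a k-medoids-style update, which is less sensitive to outliers.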

-

Random Forest Supervised learning classifiers called decision trees [135, 136] are based on recursive partitioning of the instance space. A decision tree is built from a sequence of nodes (points where a decision is made) and edges (decision-making variants, e.g. weight or probabilistic evaluation). Each node represents one signal feature; the root node is the highest-level node with no incoming edges, while all other nodes have exactly one incoming edge. Nodes with outgoing edges are called internal nodes, and nodes with no outgoing edges are called leaves or terminal nodes and represent class labels [136]. The classification procedure starts at the root node and ends with a decision at a leaf. The advantages of decision-tree algorithms include, in particular, low demands on data pre-processing and the ability to process data containing errors or missing values. In general, random forest profits from creating many (hundreds to thousands of) decision trees. The class most frequently selected by the individual trees is then taken as the outcome class. The selection of features is random and is based on the idea of bagging, which makes it possible to create a group of trees with controlled variance [137]. The drawback of random forest is its higher computational complexity compared to a single decision tree [135, 136]. According to the experiments conducted by Vican et al. [45], the random forest algorithm proved to be the most suitable for detecting fetal S\(_1\) sounds in fPCG compared to three other methods (logistic regression, SVM, MLP). All algorithms were tested in combination with EMD. The EMD method decomposed the input fPCG signal into three intrinsic mode functions (IMFs), which correspond to individual frequency bands of the input signal. Each IMF was split into 200 ms long windows with a hop of 50 ms, and a total of 18 statistical and 9 spectral features were extracted. The extracted features were used to predict the presence of S\(_1\) sounds. 
Accuracy of the classifiers was validated against the presence of peaks in Doppler signals acquired concurrently with the fPCG signals. Random forest achieved ACC = 72.8%, whereas all other algorithms yielded ACC below 67%.
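
The windowing step that precedes the classification (200 ms windows with a 50 ms hop) can be sketched as follows. The sampling rate, the stand-in signal and the three per-window features are assumptions for illustration; the 18 statistical and 9 spectral features used by Vican et al. [45] are not enumerated here.

```python
import numpy as np

def frame_signal(signal, fs, win_ms=200, hop_ms=50):
    """Split a 1-D signal into overlapping windows (one window per row)."""
    win = int(round(fs * win_ms / 1000))
    hop = int(round(fs * hop_ms / 1000))
    n_frames = 1 + (len(signal) - win) // hop
    return np.stack([signal[i * hop:i * hop + win] for i in range(n_frames)])

fs = 1000                                                  # assumed sampling rate in Hz
imf = np.sin(2 * np.pi * 30 * np.arange(2 * fs) / fs)      # stand-in for one IMF (2 s)
frames = frame_signal(imf, fs)

# Three illustrative per-window features; each row would feed the classifier.
features = np.column_stack([frames.mean(axis=1),
                            frames.std(axis=1),
                            np.abs(frames).max(axis=1)])
print(frames.shape, features.shape)
```

With a 50 ms hop, consecutive windows overlap by 75%, so every S\(_1\) sound is covered by several windows.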

3.3 Fetal State Classification

CTG recordings are currently used in clinical practice as the most common indicator of fetal medical condition and of fetal hypoxia. However, accurate identification of fetal hypoxia through visual evaluation of the fHR trace and the curve of uterine contractions by medical personnel did not lead to satisfactory results in the past. High variability of opinions among doctors interpreting CTG recordings led to large inter- and intra-observer disagreement [138].

In order to eliminate the inconsistencies and variability, the International Federation of Gynaecology and Obstetrics (FIGO) implemented a standard for evaluating CTG recordings and classifying them as normal, suspicious, or pathological. The newest FIGO2015 system [1] recommends evaluating the fHR baseline, fHR variability, and decelerations, see Table 1. Despite the implementation of these recommendations into clinical practice, the interpretation of CTG recordings, and thus the detection of fetal hypoxia, remains inaccurate even today [139]. This may be due to the length of the recording or its poor quality, as well as to its dynamics and complexity. Visual interpretation of these recordings is still very difficult for medical experts and is highly influenced by the human factor and the experience of the respective doctors [139, 140].

For these reasons, several recent works in the literature focus on developing automatic AI-based systems for evaluating fetal medical condition using computerised interpretation of the CTG recordings [87, 89, 108, 141]. Since the alternative techniques of fECG, fPCG and fMCG also allow fHR traces to be determined (in addition, fECG and fMCG allow the extraction of an electrohysterographic signal providing information about uterine contractions, as with CTG), several authors focused on the classification of fetal medical condition through fPCG [66, 90, 91] or fMCG [44]. These fHR traces were subsequently classified by an AI-based algorithm, similarly to CTG. Automated AI-based systems could distinguish and analyse fHR and uterine contraction patterns better than a human expert, allowing obstetricians to determine the fetal diagnosis faster and with higher accuracy. The processing and analysis of records using AI consists of the following three main steps: pre-processing and noise suppression, feature detection and extraction, and fetal state classification.

1. Pre-processing and noise suppression. CTG signals measured in clinical practice are usually noisy, mainly because of movements of the mother or the fetus [142]. These movements cause poor contact between the sensors and the mother, creating artefacts and short-term signal losses. They may also cause the maternal heart rate to be measured instead of the fHR, so that the fHR signal drops to a lower value [143]. Signal pre-processing, noise suppression [12, 13, 142, 143], and elimination of outliers [13, 43] have therefore proved to be important steps for the subsequent accurate classification of the fetal health state.

-

2.

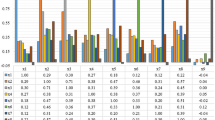

Feature detection and extraction Detection, extraction, and selection of appropriate signal features that will be used as classification algorithm inputs are key for successful classification as well. The combination of features in the time and frequency domain seems to the optimal. Cardiotocography dataset [144] containing prepared CTG signal features that are acquired from 2126 CTG recordings recorded in clinical practice is broadly used in many studies [46, 65, 72, 78, 88, 89, 145]. Each recording contains 21 features extracted from an fHR trace as well as from a uterine contractions curve (e.g. fHR baseline, number of accelerations per second, minimum/maximum of fHR histogram, or number of uterine contractions per second), and reference classification of the recording into one of three classes (normal, suspect, pathological) done by expert obstetricians. Georgieva et al. [146] also used clinical features such as fetal gestation age or maternal temperature during labour for the classification. For optimal feature subset selection, the FA [78] or GA [72] have proven to be effective. Many studies [72, 86, 147, 148] proved that lower amount of appropriate features leads to a more accurate classification compared to having a higher number of features that do not carry important information or distort the resulting classification.

3. Fetal state classification. After an appropriate classification algorithm has been selected, trained, and tuned, the final classification is performed on the test dataset. The fetal state classification usually comprises two classes (normal, abnormal) [147, 149, 150] or three classes (normal, suspect, pathological) [54, 86, 87].
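The pre-processing step can be illustrated with a minimal sketch. It assumes that signal losses and drops to the maternal heart rate appear as samples outside a plausible fHR range and that they are bridged by linear interpolation. The function name `clean_fhr` and the limits of 50 and 220 bpm are illustrative assumptions, not values taken from the cited studies.

```python
def clean_fhr(fhr, low=50.0, high=220.0):
    """Bridge signal losses and outliers in an fHR trace (bpm) by linear interpolation.

    `low` and `high` are assumed plausibility limits, not values from the literature.
    """
    valid = [i for i, value in enumerate(fhr) if low <= value <= high]
    if not valid:
        raise ValueError("trace contains no plausible fHR samples")

    cleaned = [float(value) for value in fhr]

    # Leading and trailing gaps have only one valid neighbour: hold that value.
    for i in range(valid[0]):
        cleaned[i] = cleaned[valid[0]]
    for i in range(valid[-1] + 1, len(fhr)):
        cleaned[i] = cleaned[valid[-1]]

    # Interior gaps are filled on a straight line between the surrounding valid samples.
    for left, right in zip(valid, valid[1:]):
        for i in range(left + 1, right):
            fraction = (i - left) / (right - left)
            cleaned[i] = cleaned[left] + fraction * (cleaned[right] - cleaned[left])
    return cleaned


# Hypothetical trace with two lost samples (0) and one outlier (300).
trace = [140, 0, 0, 146, 300, 150]
print(clean_fhr(trace))
```

Interpolation is only one possible choice here; the cited works combine it with other noise suppression methods, and long gaps are usually better excluded than interpolated.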

3.3.1 Artificial Neural Networks in Classification

In order to successfully classify a fetal state using ANNs, a suitable type of ANN must be selected, together with its architecture and hyper-parameter settings [87]. Using a classic feed-forward multilayer network requires setting the number of neurons in the input layer, the number of hidden layers and the number of neurons in each of them, the number of neurons in the output layer, the training algorithm (e.g. gradient descent BP, Levenberg-Marquardt BP, quasi-Newton BP) [87, 151], the training concept (incremental, where weights are updated in each iteration, or batch, where weights are updated only after all inputs have been presented to the network) [87], the number of epochs, the learning rate (usually a small positive value between 0 and 1) [152], and the activation function (e.g. linear, sigmoid, Gaussian) [151].
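The difference between the incremental and batch training concepts can be shown with a deliberately reduced sketch: a single sigmoid neuron trained by gradient descent on a squared error, not the multilayer networks used in the cited studies. The function names, the toy data, and the default hyper-parameter values are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_neuron(samples, targets, learning_rate=0.5, epochs=5000, mode="batch"):
    """Gradient descent for one sigmoid neuron; `mode` selects the training concept."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, t in zip(samples, targets):
            y = predict(weights, bias, x)
            delta = (y - t) * y * (1.0 - y)   # derivative of 0.5*(y - t)^2 w.r.t. the net input
            if mode == "incremental":
                # Incremental: weights change after every single training pattern.
                weights = [w - learning_rate * delta * xi for w, xi in zip(weights, x)]
                bias -= learning_rate * delta
            else:
                # Batch: gradients are only accumulated inside the epoch.
                grad_w = [g + delta * xi for g, xi in zip(grad_w, x)]
                grad_b += delta
        if mode == "batch":
            # Batch: one update per epoch, using the mean gradient over all patterns.
            n = len(samples)
            weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
            bias -= learning_rate * grad_b / n
    return weights, bias


# Hypothetical, linearly separable toy data (logical OR).
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
T = [0, 1, 1, 1]
for mode in ("incremental", "batch"):
    w, b = train_neuron(X, T, mode=mode)
    print(mode, [round(predict(w, b, x), 2) for x in X])
```

The learning rate and the number of epochs interact: with the batch concept the gradient is averaged, so the same learning rate produces smaller steps per epoch than with the incremental concept.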

Comert et al. [87] achieved accurate fetal state classification from CTG recordings with a feed-forward ANN whose structure included 21 input variables, 10 nodes in a hidden layer, and 3 nodes in an output layer. A total of 12 training algorithms were tested, including, for example, gradient descent BP, gradient descent with adaptive learning rate BP, resilient BP, conjugate gradient BP with Fletcher-Reeves restarts, and one-step secant BP. The authors achieved the best results with the resilient BP training algorithm and an average of 5707 epochs. Noguchi et al. [153] used substantially more nodes (30) in the hidden layer, together with 24 nodes in the input layer and 3 nodes in the output layer, in combination with the BP training algorithm. The study outcomes indicated that evaluating a CTG recording longer than 50 min was difficult, and the authors recommended a shorter measuring period to make the analysis more accurate, e.g. 15 min, analysed every 5 min. Georgieva et al. [146] employed only 2 neurons in a hidden layer, 12 neurons in an input layer, and 2 neurons in an output layer. Instead of implementing a single ANN, the authors tested a combination of 10 ANNs, each trained on a different section of the dataset, with the result determined as the average of all 10 outputs. The quasi-Newton BP algorithm [154] and the hyperbolic tangent transfer function were used in all networks. The basic assumption that multiple networks trained on different parts of the dataset can provide better performance was confirmed.

In 2007, Jezewski et al. [147] used a feed-forward ANN to classify fetal condition from CTG recordings and tested several configurations of the signal features used. The best results were achieved by reducing the number of features from 21 to 7 (some features did not carry important information about the fetal condition). Since more than 50% of the recordings came from patients for whom multiple CTG recordings had been made, the recording acquired closest to the date of labour proved to be the most suitable.

Comert et al. [149] designed an interesting extension for selecting and extracting features. In addition to the usual morphological, spectral, and statistical characteristics, they used new features such as contrast, correlation, energy, and homogeneity. These were obtained by transforming signal spectra containing time and frequency information into 8-bit grey images and creating a grey-level co-occurrence matrix (GLCM). The subsequent classification with an ANN led to a more accurate classification of the fetal condition from CTG than when traditional features were used alone. However, using solely GLCM features without the traditional ones did not yield satisfactory results.
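The four texture features named above can be sketched as follows. The sketch assumes the common textbook definitions of contrast, correlation, energy, and homogeneity on a normalised GLCM and a single horizontal pixel offset; whether the cited study used exactly these definitions and this offset is an assumption. The function names and the tiny two-level image are hypothetical.

```python
import math


def glcm(image, levels, offset=(0, 1)):
    """Normalised grey-level co-occurrence matrix for one (row, column) pixel offset."""
    d_row, d_col = offset
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + d_row, c + d_col
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("offset produces no pixel pairs for this image size")
    return [[n / total for n in row] for row in counts]


def glcm_features(p):
    """Contrast, correlation, energy, and homogeneity of a normalised GLCM `p`."""
    cells = [(i, j, p[i][j]) for i in range(len(p)) for j in range(len(p))]
    mean_i = sum(i * v for i, _, v in cells)
    mean_j = sum(j * v for _, j, v in cells)
    std_i = math.sqrt(sum((i - mean_i) ** 2 * v for i, _, v in cells))
    std_j = math.sqrt(sum((j - mean_j) ** 2 * v for _, j, v in cells))
    if std_i == 0.0 or std_j == 0.0:
        correlation = float("nan")   # undefined for a constant image
    else:
        covariance = sum((i - mean_i) * (j - mean_j) * v for i, j, v in cells)
        correlation = covariance / (std_i * std_j)
    return {
        "contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "correlation": correlation,
        "energy": sum(v * v for _, _, v in cells),
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),
    }


# Hypothetical 2 x 3 image with two grey levels; a real spectrogram image would use 256.
image = [[0, 0, 1],
         [0, 1, 1]]
print(glcm_features(glcm(image, levels=2)))
```

For an 8-bit image the matrix has 256 × 256 entries, so practical implementations often quantise to fewer grey levels first.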

-

Convolutional Neural Networks. A CNN was used by Tang et al. [12] to classify fetal medical state from CTG recordings. The network contained five convolution blocks (each with three convolution layers), three fully connected layers, and one softmax layer. The CNN classified the fetal medical state into two groups with substantially higher accuracy (ACC = 94.7%) than the SVM method (ACC = 83.46%), random forest (ACC = 84.5%), and RNN (ACC = 90.3%). A self-training CNN with eight layers (input, convolution, activation, normalization, pooling, fully-connected, dropout, and classification layer with softmax function) was implemented by Zhao et al. [108] to predict fetal acidaemia based on features acquired from CTG by means of the continuous WT (CWT). Daubechies and symlet wavelets of order two with wavelet scales of four, five and six were used. CWT was chosen for feature extraction because it can capture hidden signal characteristics in the time and frequency domains simultaneously, whereas the classic signal features were susceptible to distortion during extraction. The same authors [141] slightly improved the classification using a combination of a CNN and a recurrence plot, which was able to capture non-linear signal characteristics. However, the disadvantages of CNNs are their computational complexity and the need to set a large number of parameters optimally for the network to work effectively.
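A recurrence plot turns a one-dimensional trace into a binary image that a CNN can take as input. The sketch below shows the simplest thresholded form without time-delay embedding. The threshold value and the sample trace are hypothetical, and the configuration used in [141] may differ.

```python
def recurrence_plot(signal, threshold):
    """Binary recurrence matrix: entry (i, j) is 1 when samples i and j are close.

    Simplest form without time-delay embedding; `threshold` is an assumed parameter.
    """
    return [[1 if abs(a - b) <= threshold else 0 for b in signal] for a in signal]


# Hypothetical short fHR segment in bpm.
segment = [140.0, 141.0, 150.0, 140.5]
for row in recurrence_plot(segment, threshold=2.0):
    print(row)
```

The matrix is symmetric with ones on the main diagonal; recurring signal states appear as off-diagonal structures, which is the non-linear information the image-based classifier can exploit.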

-

Probabilistic Neural Networks. A probabilistic ANN is an implementation of the Parzen window probability density approximation. It is a feed-forward network consisting of four layers (input, pattern, summation and output) [54]. In 2016, Yılmaz [54] presented a probabilistic ANN for fetal medical state classification using CTG. The number of neurons in the input layer was given by the number of features in the dataset (21 neurons). The number of neurons in the pattern layer was given by the number of training patterns (1913 neurons for 6 iterations and 1914 for 4 iterations), and each of them was connected to all neurons in the input layer. The summation layer contained 3 neurons (the number of classes), and the output layer contained 1 neuron, which applied the Bayes decision rule and carried out the classification. The optimum value of the smoothing parameter was 0.13. The performance of the method was compared, among others, with an MLP containing 2 hidden layers with 20 neurons each. The classification accuracy of the two methods was similar: the probabilistic ANN (ACC = 92.15%) slightly surpassed the MLP (ACC = 90.35%). The high storage requirements can be considered a limitation of the method.
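The four-layer structure can be made concrete with a minimal sketch, assuming a Gaussian Parzen kernel, equal class priors, and equal misclassification costs. Only the smoothing parameter 0.13 comes from the text; the function name, the two-feature toy patterns, and the class labels are hypothetical.

```python
import math


def pnn_classify(train_patterns, train_labels, x, smoothing=0.13):
    """Classify `x` with a probabilistic neural network (Gaussian Parzen windows)."""
    sums, counts = {}, {}
    # Pattern layer: one neuron per stored training pattern.
    for pattern, label in zip(train_patterns, train_labels):
        squared_distance = sum((a - b) ** 2 for a, b in zip(pattern, x))
        activation = math.exp(-squared_distance / (2.0 * smoothing ** 2))
        # Summation layer: one neuron per class collects its pattern activations.
        sums[label] = sums.get(label, 0.0) + activation
        counts[label] = counts.get(label, 0) + 1
    densities = {label: sums[label] / counts[label] for label in sums}
    # Output layer: Bayes decision, here simply the class with the largest density
    # (equal priors and costs are assumed).
    return max(densities, key=densities.get), densities


# Hypothetical patterns with two normalised features each.
patterns = [[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.9, 1.0]]
labels = ["normal", "normal", "pathological", "pathological"]
print(pnn_classify(patterns, labels, [0.05, 0.0])[0])
print(pnn_classify(patterns, labels, [0.95, 1.0])[0])
```

Because every training pattern is kept as a pattern-layer neuron, memory use grows linearly with the training set, which is the storage limitation noted above.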

-

Legendre Neural Networks. Legendre ANNs are single-layer networks consisting of a functional expansion block based on Legendre polynomials and an output layer. Hidden layers are eliminated, since the input is transformed into a higher-dimensional space using the Legendre polynomials. These networks stand out for their simple implementation and speed [155]. Alsayyari [86] designed a highly accurate classification of fetal state from CTG recordings using a second-order Legendre ANN. With 21 attributes, 4 signals out of 1000 were misclassified; with 10 attributes, only 2 signals out of 1000 were misclassified. In addition, only a few iterations (5 to 10) were required for training. The Legendre ANN had lower computational requirements than the Volterra ANN and converged faster with a lower mean square error. The Legendre ANN performance could be improved by increasing the Legendre polynomial order in the testing phase.
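The functional expansion block can be sketched with the standard three-term recurrence for Legendre polynomials, (n + 1) P_{n+1}(x) = (2n + 1) x P_n(x) - n P_{n-1}(x). The sketch assumes that each feature has been scaled to [-1, 1] and that a single shared constant term is used; the exact layout of the expansion in [86] is not given here, so this arrangement and the function names are assumptions.

```python
def legendre_values(x, order):
    """Return [P_0(x), ..., P_order(x)] using the three-term recurrence."""
    values = [1.0, x]
    for n in range(1, order):
        values.append(((2 * n + 1) * x * values[n] - n * values[n - 1]) / (n + 1))
    return values[: order + 1]


def legendre_expand(features, order=2):
    """Map scaled features to the expanded input of a Legendre network.

    Output layout (an assumption): one constant term, then P_1..P_order per feature.
    """
    expanded = [1.0]
    for x in features:
        expanded.extend(legendre_values(x, order)[1:])
    return expanded


def linear_output(expanded, weights):
    """Output layer: weighted sum of the expanded inputs (no hidden layer)."""
    return sum(w * e for w, e in zip(weights, expanded))


print(legendre_values(0.5, 2))
print(len(legendre_expand([0.0] * 21, order=2)))
```

With 21 features and second-order polynomials, this layout yields 43 expanded inputs, so only 43 weights per output have to be trained, which is consistent with the low computational requirements described above.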

3.3.2 Machine Learning in Classification

ML algorithms have also proved effective for the classification of fetal medical state. Research in this area has focused particularly on testing supervised learning algorithms [43, 46, 72, 88, 89].

-

Support Vector Machine. One of the important representatives of ML is the SVM method [156], which is based on supervised learning. The main goal of the algorithm is to construct a hyperplane that optimally divides the feature space, so that data belonging to different classes lie in opposite half-spaces. In the optimal case, the smallest distance between the data points and the hyperplane is as large as possible; in other words, the margin on both sides of the hyperplane is maximised [89, 142]. The hyperplane is fully described by the points lying on the edge of the margin, of which there are usually only a few; these are called support vectors. The kernel transformation converts a task that is not linearly separable in the original space into a linearly separable one, to which an algorithm for finding the separating hyperplane can be applied [89]. SVM seems to be a very promising technique for classification and prediction of fetal medical state using CTG recordings. In the study of Nagendra et al. [88], the SVM method yielded accuracy similar to random forest (the average ACC > 99% in both cases). However, the SVM algorithm performed slightly better with suspect signals. Comparison studies [11, 13] had similar outcomes and concluded that the SVM method performed better than algorithms such as ANN, k-NN, or decision tree. The SVM method had another particular advantage over ANNs: it did not require many model parameters and always found a global solution during training.

An important factor for acquiring high-quality results from CTG recordings is the selection of suitable signal features that serve as the classification algorithm input. Lunghi et al. [148] used a total of nine features for the classification, comprising time-domain, frequency-domain, and morphological features as well as regularity parameters. They achieved the most accurate classification in the test phase using only six features. The other features carried less information and could therefore be omitted. Appropriate feature selection and dataset balancing were also highlighted as key prerequisites for accurate CTG classification using SVM by Ricciardi et al. [157]. The optimal feature subset could be found with FA according to Subha et al. [78] or GA according to Ocak et al. [72], which was able to detect the most important features and ignore the irrelevant ones. This maximised the accuracy of the subsequent SVM classification and, at the same time, reduced the number of features used (from 21 to 13). As Krupa et al. [142] showed, feature extraction from CTG could also be done with the EMD method, which decomposed the input signal into individual IMFs. High-frequency IMFs were eliminated and a standard deviation was calculated for each of the remaining ones. The standard deviations were treated as signal features and served as the input of an SVM classifier, which assigned the recordings to two groups: normal and at risk. Silwattananusarn et al. [89] proposed improving the stability of CTG signal features in order to improve the performance of SVM with a polynomial kernel function. To do this, a combination of two feature search strategies (correlation-based feature selection and information gain) was used. The selected features entered an ensemble of seven SVM classifiers that yielded ACC = 99.85%.

A modified version of the classic SVM, the least squares SVM (LS-SVM), was designed by Yilmaz et al. [145]. In addition, PSO was used to optimize the parameters, leading to an effective classification, but at the cost of increased computational complexity. When classifying a fetal state by means of an fHR trace estimated from fMCG, SVM proved to be the best choice compared to MLP and the J48 decision tree (also known as the C4.5 algorithm), as shown in the experiments of Snider et al. [44]. Signals were acquired from fetuses at 24–39 weeks of gestation, and the features used in the classification were selected from the time domain (e.g. RR interval mean, RR interval standard deviation) and the frequency domain (e.g. peak frequency, kurtosis). All three methods yielded similar accuracy, but SVM with ACC = 66.2% was slightly more accurate than the C4.5 algorithm with ACC = 65.4% and MLP with an overall ACC = 61.4%.
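The maximal-margin idea can be illustrated with a minimal sketch of a linear soft-margin SVM trained by subgradient descent on the regularised hinge loss. This is a simplification: the cited studies used kernel SVMs and, presumably, dedicated solvers. The function names, hyper-parameter values, and the two-feature toy data are assumptions made for illustration.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(samples, labels, regularisation=0.01, learning_rate=0.01, epochs=100):
    """Minimise 0.5*regularisation*||w||^2 + hinge loss; labels must be -1 or +1."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, t in zip(samples, labels):
            inside_margin = t * (dot(weights, x) + bias) < 1.0
            # The regularisation term always shrinks the weights (this widens the margin).
            weights = [w * (1.0 - learning_rate * regularisation) for w in weights]
            if inside_margin:
                # Only points inside the margin or misclassified move the hyperplane.
                weights = [w + learning_rate * t * xi for w, xi in zip(weights, x)]
                bias += learning_rate * t
    return weights, bias


def classify(weights, bias, x):
    return 1 if dot(weights, x) + bias >= 0.0 else -1


# Hypothetical, linearly separable data: -1 = normal, +1 = at risk.
X = [[-2, -1], [-1, -2], [-2, -2], [2, 1], [1, 2], [2, 2]]
y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, y)
print([classify(w, b, x) for x in X])
```

After training, only the points closest to the hyperplane keep triggering updates, which mirrors the role of the support vectors described above; a kernel version would replace the dot product with a kernel function.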

-