Abstract

The collective behaviour of fish schools, shoals and other swarms in nature has long inspired researchers to develop solutions for optimization problems. Instinct drives fish to group into schools to increase safety, enhance foraging success, and promote breeding. Inspired by these instinctive behaviours, several fish-inspired algorithms have been introduced to solve hard problems. This paper presents a comprehensive survey of fish-inspired heuristics, exploring their evolution within the context of general optimization problems. To our knowledge, this survey is the first to cover both of the main fish-inspired heuristics in the literature, namely, the artificial fish swarm algorithm (AFSA) and fish school search (FSS), in addition to other algorithms inspired by specific fish species. The review covers more than 50 papers indexed in the Web of Science and IEEE databases since 2000. We first review the basic fish heuristics, highlighting their advantages and drawbacks, and then detail attempts in the literature to improve their behaviour to solve complex, multi-objective and high-dimensional problems in several domains. Our work is intended to guide researchers and practitioners in further advancing research on fish-inspired heuristics, and we aspire to encourage their use for global optimization in various fields and in real-life applications. The survey findings indicate that fish-inspired heuristics are very much alive in the recent literature and still have great potential. The survey also identifies several challenges and future research directions that can help to enhance this vibrant line of research.

1 Introduction

Optimization is an invaluable tool for making decisions and analysing systems. An optimization problem refers to the process of finding solutions that are optimal or nearly optimal, with respect to certain goals, among all feasible solutions. Solving an optimization problem requires expressing the objective as a quantitative performance measure of the system being optimized, identifying the unknown variables of the system, and specifying the constraints that describe the relations between the variables and their allowed values; optimization algorithms then search for the best values of these variables. Some optimization problems are intrinsically harder than others, in that they cannot be solved optimally within an acceptable time frame. These problems are usually approached using metaheuristics, such as evolutionary algorithms and swarm intelligence [1].

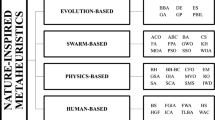

Swarm intelligence (SI) is a modern discipline within the field of artificial intelligence (AI) that is inspired by the collective behaviour of animal societies. The goal of SI is to take this inspiration and design intelligent multi-agent systems for applications, e.g., optimization problems [2]. A swarm in SI can be defined as a structured aggregation of interacting organisms or agents performing a collective behaviour. These organisms or agents are relatively simple and unsophisticated, yet their coordination directs the swarm towards a desired goal. This means that the self-organization of the whole system is the result of collective behaviour by means of local interactions among simple agents. Therefore, the main properties characterizing SI are the existence of simple entities, the distribution of control, and self-organization. In fact, the coordinated movement of animal groups (e.g., bird flocks and fish schools) has intrigued researchers for many decades. Thus, several swarm-inspired metaheuristics have recently emerged in the literature to solve complex optimization problems, because the underlying properties of SI lend themselves to successful application in such problems. While sacrificing the guarantee of an optimal solution that is attained from complete algorithms (e.g., exact search methods), SI-based algorithms are capable of obtaining good solutions in a significantly reduced amount of time. SI algorithms are also easier to implement, more flexible, and more robust than traditional optimization approaches [3]. 
Among the popular SI algorithms are ant colony optimization (ACO), which takes its inspiration from the swarm foraging behaviour of some ant species [4, 5]; the artificial bee colony (ABC) algorithm, inspired by the foraging behaviour of honeybees [6]; glowworm swarm optimization (GSO), inspired by the night-time activities of glowworms and their control over luciferin emissions [7]; cuckoo search, inspired by the obligate brood parasitism of some cuckoo species when laying eggs [8]; and the artificial fish swarm algorithm (AFSA), inspired by the collective movement of fish and their foraging behaviours [9], which is the focus of the current survey.

Fish are perhaps the most successful vertebrates and have existed since ancient times, yet throughout history they have largely been viewed as automatons whose behaviour was thought to be controlled by predispositions based on environmental cues. However, this behaviour has since diversified over the course of evolution. With the enormous diversity of fish comes an astounding array of physiological and behavioural adaptations that ensure their survival in nature [10]. A school of fish is a group of members of the same species that is led by a few fish towards a food source or a safe haven. Fish derive various benefits from group decisions within the school, such as reduced aggression, defence against predators, and better food localization. The ability of fish schools to coordinate movements in water has been extensively studied due to its implications for social cognition, animal behaviour, and artificial intelligence (e.g., [9, 11,12,13]). The AFSA is one of the earliest fish-inspired metaheuristics and a relatively recent addition to the field of natural computing [9]. It imitates fish behaviours such as preying, swarming, and following in order to reach the global optimum of a given problem. Fish school search (FSS) is another optimization algorithm based on the gregarious behaviour of fish schools; it considers their foraging, swimming, and breeding behaviours [13].

Although research on fish swarm optimization is a relatively recent innovation in the field of natural computing and SI, it has since found widespread applications in complex optimization domains such as networks [14,15,16,17,18], image processing [19,20,21], robotics and motion control [22,23,24,25,26,27], machine learning [28,29,30,31,32,33], path planning [34, 35], traffic control [36], industry [37,38,39], and automation [40].

The AFSA is a stochastic population-based SI algorithm that was inspired by the natural schooling behaviour of fish in water [9]. The algorithm uses artificial fish to imitate the specific behaviours of individual fish and the interactions that are carried out between fish in a school while foraging for sustenance. The characteristics of the AFSA include parallelism, simplicity, robustness, global search ability, fast convergence, and independence from the gradient information of the objective function [9, 41]. An extensive survey of the AFSA was presented in 2014, reviewing its evolution in the literature, improvements, and applications in various fields [42]. Nevertheless, to the best of our knowledge, no survey has been conducted on all fish-inspired heuristic techniques for general optimization problems. Hence, this paper reviews fish-inspired heuristics that either extend well-established algorithms (e.g., the AFSA and FSS) or are inspired by specific behaviours or species. The survey focuses on modifications that specifically address general optimization problems or improve optimization processes.

The remainder of this paper is organized as follows. Section 2 presents the biological foundation of fish behaviour. Next, the survey methodology is presented in Sect. 3. Section 4 is the main survey that describes the original AFSA and FSS algorithms and reviews the literature for modifications of fish-inspired algorithms for general optimization problems separately for each type, followed by a review of papers dealing with algorithms inspired by specific fish behaviour. Finally, Sect. 5 discusses the overall findings of the survey and provides insights into some open challenges and further research directions.

2 Biological Foundations

A school of fish is composed of a large number of fish of the same species moving together in a coordinated manner in the water. This behaviour is prevalent in nature, with almost 80% of fish species exhibiting it in one or more phases of their life cycle [43]. Fish derive benefits from belonging to a school, such as safety from predators, enhanced foraging success, and increased success in finding a mate. Individual fish are sorted within the structure of a school according to differences in speed, interaction range, age, nutritional status, and sex [44]. The positions of fish can change over time as a result of local interactions between fish in the school. Group size has also been shown to influence these changes, as individual fish can change their probability of being in a group of a certain size at a certain time.

Fish schools are often highly integrated with regard to their collective movement as a cohesive unit, where important information about the environment can be acquired based on the movement of the others in the school. Underlying the cohesive movements of fish schools are the fundamental social tendencies of the individuals within the group, including the avoidance of collisions with others and gravitation towards or alignment with others [10]. In the absence of external stimuli, these simple rules of collective motion can be observed. A school of fish often needs to respond to external stimuli and modify its behaviour with respect to the other fish and the environment. These behaviours require highly integrated social interactions among the individual fish in the school so that information about the location and direction of a predator attack is rapidly propagated.

The cohesion of the fish school is also imperative for facilitating information transfer while foraging or migrating [10]. Schools essentially form an integrated array of sensors that allows individuals to extend their effective interaction range with the environment. This means that a swarm is able to detect gradients of food concentration or appropriate habitats that an individual fish could not detect on its own. Foraging area copying is also a common behaviour in fish schools, in which individual observer fish (i.e., less-successful feeders, also known as copiers) gravitate towards the area where an informed individual fish (i.e., a successful forager, also known as a searcher) is seen feeding. This demonstrates that informed fish can guide uninformed individuals successfully without requiring a direct signal. Swarms also split when the strength of their attraction weakens [44].

3 Survey Methodology

This survey was prepared by searching the Web of Science and IEEE databases for fish-inspired heuristics applied to optimization problems in general. Papers that target a specific optimization problem, such as scheduling or task allocation, were considered beyond the scope of this paper. After a list of fish-inspired algorithms was generated, only publications from 2000 to April 2020 were included in the survey. This is a reasonable choice, since most studies that draw inspiration from fish behaviour emerged after the seminal AFSA paper [9]. The findings were grouped into three categories: the two prominent fish-inspired heuristics, the AFSA and FSS, which were inspired by the general behaviours of fish schools, and a third category for algorithms inspired by a specific fish species or behaviour. Figure 1 illustrates the distribution of the surveyed works across the three categories. Most of the works were categorized as the original AFSA paper and its variants (35 papers), followed by the original FSS paper and its variants (11 papers). The third category contained the fewest papers: three inspired by specific fish species and a fourth inspired by a particular fish behaviour. Figure 2 shows the year-wise distribution of the papers across these three high-level categories: the AFSA, FSS, and algorithms inspired by specific fish or behaviours.

4 Fish-Inspired Algorithms

4.1 Artificial Fish Swarm Algorithm (AFSA) and Variants

This section describes the detailed procedure of the standard AFSA and presents the fish behaviours that inspired it. The remaining subsections summarize works that have modified the standard AFSA to enhance its performance or to adapt it to other optimization problems.

The AFSA was first proposed in 2002 with the basic idea of imitating fish foraging behaviours, with individual fish performing local searches for the purpose of reaching the global optimum [9]. In water, a fish usually finds the area with the most food either by itself or by following other fish (i.e., swarming). Therefore, the area with the largest number of fish typically has the most plentiful food. Based on this characteristic, the algorithm comprises four foraging behaviours or functions: random moving (free moving), preying (searching), swarming, and following (chasing). An artificial fish resides in the solution space among other artificial fish and perceives its surroundings through its sense of sight. Each artificial fish maintains its current position, its visual range of exploration, and its maximum length of movement (i.e., the step limit). If a fish wants to move to a position within its visual field, the food concentration at that position is checked first. If the food concentration there is better, then the fish moves towards it; otherwise, the fish continues searching its visual area.

This information helps the artificial fish determine which of the four behaviours to perform. In other words, an artificial fish looks for positions that have better fitness values in the search space by performing these behaviours.

Artificial fish perceive their external surroundings with the sense of sight. The position of an artificial fish can be described as the vector $X = (x_1, x_2, \ldots, x_n)$, where each $x_k$ is a control variable. $X_v$ is a position within the artificial fish's view and is expressed as follows:

$$X_v = X + \mathit{Visual} \cdot \mathrm{rand}() \tag{1}$$

where the visual parameter indicates the sight field of the artificial fish, and the rand() function generates a random number with a uniform distribution.

$X_{next}$ is the next position of an artificial fish. If $X_v$ has a better food concentration than the current position, then the artificial fish moves a step towards it, reaching $X_{next}$. However, if $X_v$ does not have a better food concentration, then the artificial fish continues searching within its visual area. The movement step is expressed as follows, where the step parameter expresses the maximum step length:

$$X_{next} = X + \frac{X_v - X}{\lVert X_v - X \rVert} \cdot \mathit{Step} \cdot \mathrm{rand}() \tag{2}$$

In the AFSA, an artificial fish takes a random walk within its sight range in the problem space, as shown in Eqs. (1) and (2), to enlarge its search space.
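A minimal Python sketch of Eqs. (1) and (2) may help make the two steps concrete. It assumes positions are plain lists of floats and draws the offset in Eq. (1) from U(-1, 1) per coordinate so that every direction is reachable (formulations differ on the range of rand()); the function names `visual_point` and `step_towards` are illustrative and not taken from [9].

```python
import math
import random


def visual_point(x, visual, rng=random):
    """Eq. (1): sample a candidate position X_v inside the visual range of x."""
    # rand() is taken from U(-1, 1) per coordinate (an assumption of this sketch)
    return [xi + visual * rng.uniform(-1.0, 1.0) for xi in x]


def step_towards(x, target, step, rng=random):
    """Eq. (2): move from x towards target by a random fraction of `step`."""
    diff = [t - xi for xi, t in zip(x, target)]
    norm = math.sqrt(sum(d * d for d in diff))
    if norm == 0.0:
        return list(x)  # target coincides with x; there is no direction to move in
    scale = step * rng.random() / norm  # unit direction times Step * rand()
    return [xi + d * scale for xi, d in zip(x, diff)]
```

Because the direction is normalized before being multiplied by Step · rand(), the fish never moves farther than Step in one iteration, regardless of how far away $X_v$ lies.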

The second behaviour in the AFSA is preying, where each fish uses its senses to look for prey independently of the swarm. In the algorithm, preying is performed when an artificial fish randomly chooses a position in its field of vision. The new position is given by:

$$X_j = X_i + \mathit{Visual} \cdot \mathrm{rand}() \tag{3}$$

where $X_i$ is the artificial fish's position in the search space and $X_j$ is the candidate position. The candidate position's fitness value, i.e., the food concentration at that position, is then compared with the current position's fitness value. If the fitness value of the candidate position is better, then the artificial fish moves a step towards it (Eq. 4). Otherwise, the artificial fish selects another position in its field of view and again judges its suitability. If no better position is found after a certain number of trials, then the fish moves randomly (Eq. 5).

$$X_i^{t+1} = X_i^{t} + \frac{X_j - X_i^{t}}{\lVert X_j - X_i^{t} \rVert} \cdot \mathit{Step} \cdot \mathrm{rand}() \tag{4}$$

$$X_i^{t+1} = X_i^{t} + \mathit{Visual} \cdot \mathrm{rand}() \tag{5}$$

where $t$ is the iteration number, $X_i^{t}$ is the position of the artificial fish in the current iteration, $X_i^{t+1}$ is its position in the next iteration, $X_j$ is the candidate position, Step is the permitted step limit, and the rand() function generates a random number with a uniform distribution.
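The preying logic (Eqs. 3 to 5) can be sketched as follows. This is an illustration under assumptions, not the reference implementation: fitness (food concentration) is maximized, rand() is drawn from U(-1, 1) for the visual offsets and from U(0, 1) for the step length, and `try_number` is a hypothetical name for the number of trials before falling back to a random move.

```python
import math
import random


def prey(x, fitness, visual, step, try_number=5, rng=random):
    """Preying behaviour, Eqs. (3)-(5); fitness is maximized."""
    fx = fitness(x)
    for _ in range(try_number):
        # Eq. (3): pick a random candidate X_j inside the visual range
        xj = [xi + visual * rng.uniform(-1.0, 1.0) for xi in x]
        if fitness(xj) > fx:
            # Eq. (4): step of length Step * rand() in the direction of X_j
            diff = [a - b for a, b in zip(xj, x)]
            norm = math.sqrt(sum(d * d for d in diff))
            scale = step * rng.random() / norm
            return [xi + d * scale for xi, d in zip(x, diff)]
    # Eq. (5): every trial failed, so the fish moves randomly
    return [xi + visual * rng.uniform(-1.0, 1.0) for xi in x]
```

Note that a successful trial only guarantees that $X_j$ is better; the actual step towards it is a random fraction of Step, so the new position is not guaranteed to improve on $X_i$.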

In water, fish naturally assemble into groups to ensure their survival and to protect themselves from predators. In the AFSA, the swarm maintains its cohesion as artificial fish try to move towards a central position where there might be more food. This behaviour thus reduces the number of artificial fish trapped in local optima, as it gathers the fish in regions where a global optimum can be reached. An artificial fish compares the fitness at the centre of its companions with the fitness of its own position. If the centre has more food and the area is not overly crowded according to a crowd factor, then the artificial fish moves a step towards the centre (Eqs. 6 and 7). Otherwise, the preying behaviour is executed instead (Eq. 3).

$$X_c = \frac{1}{n_f} \sum_{j=1}^{n_f} X_j \tag{6}$$

$$X_i^{t+1} = X_i^{t} + \frac{X_c - X_i^{t}}{\lVert X_c - X_i^{t} \rVert} \cdot \mathit{Step} \cdot \mathrm{rand}() \tag{7}$$

where $X_c$ is the centre of the $n_f$ companions $X_j$ found within the visual range of fish $i$.
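A sketch of the swarming behaviour (Eqs. 6 and 7) follows. The crowding test `fitness(xc) / nf > delta * fitness(x)` is a common textbook form and is an assumption here, as is the requirement that fitness values be positive (the test is not meaningful otherwise); `delta` stands for the crowd factor, and the function returns `None` so that the caller can fall back to preying.

```python
import math
import random


def swarm(i, school, fitness, visual, step, delta, rng=random):
    """Swarming behaviour, Eqs. (6)-(7), for fish i; returns None if it fails."""
    x = school[i]
    # Companions are the other fish within the visual range of fish i
    mates = [p for j, p in enumerate(school) if j != i and math.dist(p, x) < visual]
    if not mates:
        return None
    nf = len(mates)
    # Eq. (6): centre of the companions
    xc = [sum(coord) / nf for coord in zip(*mates)]
    # Assumed crowding test: the centre is better and not too crowded
    if fitness(xc) / nf > delta * fitness(x):
        diff = [c - a for a, c in zip(x, xc)]
        norm = math.sqrt(sum(d * d for d in diff))
        if norm > 0.0:
            scale = step * rng.random() / norm
            # Eq. (7): random-length step towards the centre
            return [a + d * scale for a, d in zip(x, diff)]
    return None  # caller falls back to preying (Eq. 3)
```

Dividing by $n_f$ penalizes dense neighbourhoods: the more companions there are, the better the centre must be before the fish is allowed to join them.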

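As fish move in a swarm, they continually watch their nearest neighbours. If one neighbour finds a food source and moves towards it, the other fish trail this neighbour to reach the food quickly. In the AFSA, the following behaviour is performed when an artificial fish follows the neighbour with the highest fitness value, provided that this neighbour's position is better and not overly crowded (Eq. 8). Otherwise, the fish executes the preying behaviour (Eq. 3).

$$X_i^{t+1} = X_i^{t} + \frac{X_{max} - X_i^{t}}{\lVert X_{max} - X_i^{t} \rVert} \cdot \mathit{Step} \cdot \mathrm{rand}() \tag{8}$$

where $X_{max}$ is the position of the neighbour with the highest fitness within the visual range of fish $i$.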
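The following behaviour (Eq. 8) differs from swarming only in its target: the best visible neighbour instead of the centre. The sketch below reuses the same assumed crowding test with a positive, maximized fitness and the hypothetical crowd-factor parameter `delta`.

```python
import math
import random


def follow(i, school, fitness, visual, step, delta, rng=random):
    """Following behaviour, Eq. (8), for fish i; returns None if it does not apply."""
    x = school[i]
    mates = [p for j, p in enumerate(school) if j != i and math.dist(p, x) < visual]
    if not mates:
        return None
    nf = len(mates)
    x_max = max(mates, key=fitness)  # best neighbour in sight
    # Same assumed crowding test as for swarming (positive fitness values)
    if fitness(x_max) / nf > delta * fitness(x):
        diff = [m - a for a, m in zip(x, x_max)]
        norm = math.sqrt(sum(d * d for d in diff))
        if norm > 0.0:
            scale = step * rng.random() / norm
            return [a + d * scale for a, d in zip(x, diff)]
    return None  # caller falls back to preying (Eq. 3)
```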

Free (random) movement and preying are categorized as individual behaviours, while following and swarming are performed within a group. An artificial fish first attempts the swarming or following behaviour; if it cannot move to a better position using these group behaviours, then it attempts a preying movement. If a better position is not attainable via preying, then a random movement is carried out. Each behaviour contributes to the algorithm's capabilities: preying lays the foundation for convergence, swarming enhances the stability and global convergence of the algorithm, and following accelerates convergence. The AFSA also uses a bulletin to record the best position found by any swarm member. During each iteration, the fitness of the best artificial fish is compared with the value recorded in the bulletin; if it is better, then the bulletin is updated with the new position.
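Putting the pieces together, the behaviour order and the bulletin described above can be sketched as a minimal AFSA loop. This is a simplified illustration, not the reference implementation of [9]. It assumes a maximized, positive fitness (needed by the assumed crowding test `fitness(X_c) / n_f > delta * fitness(X_i)`), evaluates both the swarming and following candidates and keeps the better one only if it improves on the current position, and uses hypothetical parameter names and defaults (`n_fish`, `visual`, `step`, `delta`, `try_number`, `iters`, `lo`, `hi`, `seed`).

```python
import math
import random


def afsa(fitness, dim, n_fish=20, visual=1.0, step=0.3, delta=0.6,
         try_number=5, iters=100, lo=-5.0, hi=5.0, seed=0):
    """Minimal AFSA loop (maximization); returns the bulletin position."""
    rng = random.Random(seed)

    def towards(x, target):
        # Random-length step of at most `step` towards target (Eqs. 2, 4, 7, 8)
        diff = [t - a for a, t in zip(x, target)]
        norm = math.sqrt(sum(d * d for d in diff))
        if norm == 0.0:
            return list(x)
        s = step * rng.random() / norm
        return [a + d * s for a, d in zip(x, diff)]

    def random_move(x):
        # Random position within the visual range (Eqs. 1, 3, 5)
        return [a + visual * rng.uniform(-1.0, 1.0) for a in x]

    def prey(x):
        fx = fitness(x)
        for _ in range(try_number):
            xj = random_move(x)
            if fitness(xj) > fx:
                return towards(x, xj)
        return random_move(x)  # fallback: free movement

    school = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_fish)]
    bulletin = max(school, key=fitness)  # best position found so far

    for _ in range(iters):
        for i, x in enumerate(school):
            fx = fitness(x)
            mates = [p for j, p in enumerate(school)
                     if j != i and math.dist(p, x) < visual]
            candidates = []
            if mates:
                nf = len(mates)
                xc = [sum(c) / nf for c in zip(*mates)]  # Eq. (6)
                if fitness(xc) / nf > delta * fx:       # swarming, Eq. (7)
                    candidates.append(towards(x, xc))
                xmax = max(mates, key=fitness)
                if fitness(xmax) / nf > delta * fx:     # following, Eq. (8)
                    candidates.append(towards(x, xmax))
            # Keep the better group move if it improves on x; otherwise prey
            best_group = max(candidates, key=fitness, default=None)
            if best_group is not None and fitness(best_group) > fx:
                school[i] = best_group
            else:
                school[i] = prey(x)
        # Bulletin: record the best position seen by any fish
        champion = max(school, key=fitness)
        if fitness(champion) > fitness(bulletin):
            bulletin = list(champion)
    return bulletin
```

For instance, `afsa(lambda p: 1.0 / (1.0 + sum(v * v for v in p)), dim=2)` searches for the peak of a positive function located at the origin. Because the bulletin only ever accepts improvements, the returned position is never worse than the best fish in the initial school.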

Despite the advantages of the standard AFSA, several disadvantages were identified during its application. These include a lack of balance between global and local search, slow convergence at later stages of the optimization process, high time complexity, and a failure to exploit the experiences of group members when determining subsequent movements. Accordingly, several efforts in the literature aim to address the shortcomings of the standard AFSA and extend it to other optimization problems.

Our survey of the literature from the conception of the AFSA in 2002 until April 2020 discovered 35 publications that modified the standard AFSA to enhance its performance. This includes modifying the AFSA to improve its stability and ability to search for global optima; improving its convergence speed and optimization accuracy; and addressing multimodal, continuous, dynamic, and multi-objective optimization problems. Table 1 categorizes the AFSA and its variants based on their originality, multimodal testing, and optimization topics. The year-wise distribution of the AFSA and its variants is also illustrated in Fig. 3. Further details regarding these works are presented in the following subsections.

4.1.1 Improving the Stability and the Search for Global Optima

From the surveyed literature, some of the earliest efforts focused on enhancing the stability of the standard AFSA and its ability to find global optima. One of those works, the improved AFSA (IAFSA), introduced a leaping movement to the standard AFSA that is triggered once the swarm’s optimal values exhibit no further improvement [47]. Accordingly, a new feed-forward neural network optimization module based on the IAFSA was presented. A comparative study was conducted by training three feed-forward neural networks with back-propagation (BP), the AFSA, and the IAFSA. The results showed that the IAFSA had a better global convergence rate and stability than the other two algorithms. These results further show that the IAFSA is an effective method for training feed-forward neural networks.

Despite the many benefits of the AFSA, it can suffer from a lack of precision, as artificial fish can become trapped in local optima. To overcome this problem, the standard AFSA was enhanced with a chaotic search and a feedback strategy [48]. The modified algorithm uses the AFSA to perform global exploration, while a chaotic search is applied to exploit the region around the best artificial fish. Furthermore, a new behaviour uses the record in the bulletin as feedback to guide the movement of the artificial fish with a certain probability. Two multimodal test functions were used to analyse the optimization ability of the proposed algorithm; the results showed that the modified AFSA achieved better convergence speed, generation, and precision than the original algorithm.

Chaos search was also used in another improved AFSA, called the chaos search AFSA (CSAFSA), to overcome the tendency to become trapped in local optima in later evolution periods [36]. Exploiting the properties of chaos search (randomness, ergodicity, and regularity), which help to avoid local minima during the search process, the CSAFSA searches around the globally best fish within a certain radius. If a better position is found, then the globally best fish is replaced with the new solution. The performance of the CSAFSA was tested against the standard AFSA using four functions characterized as continuous, discontinuous, convex, and nonconvex. The results showed that the CSAFSA achieved faster convergence and better stability than the standard AFSA.
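The chaotic local search used by variants such as [36, 48] is commonly built on the logistic map $z \leftarrow 4z(1 - z)$. The sketch below assumes that map, one chaotic variable per dimension, a fixed search radius around the best fish, and a maximized fitness; the function name and parameters are hypothetical and may differ from the exact schemes in those papers.

```python
import random


def chaos_search(best, fitness, radius, n_steps=50, seed=0):
    """Logistic-map chaotic local search around `best` (maximization)."""
    rng = random.Random(seed)
    # One chaotic variable per dimension; a random start avoids the map's fixed points
    z = [rng.uniform(0.01, 0.99) for _ in best]
    current, f_current = list(best), fitness(best)
    for _ in range(n_steps):
        z = [4.0 * zk * (1.0 - zk) for zk in z]  # logistic map with mu = 4
        # Map each z in (0, 1) to an offset in (-radius, radius) around the best
        cand = [b + radius * (2.0 * zk - 1.0) for b, zk in zip(current, z)]
        f_cand = fitness(cand)
        if f_cand > f_current:  # replace the best fish only on improvement
            current, f_current = cand, f_cand
    return current
```

The logistic map with parameter 4 visits the whole interval (0, 1) ergodically without repeating, which is the property the text refers to; the greedy acceptance keeps the best fish from ever getting worse.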

A modified AFSA, the MFSA, was formed by hybridizing the standard AFSA with particle swarm optimization (PSO) to reduce the impact of the step parameter [49]. As a result, artificial fish can swim similarly to particles in PSO using the visual parameter. Moreover, a communication behaviour was added to the MFSA to construct the CMFSA, which allows fish to obtain the best position of the swarm from the bulletin. The findings showed that the CMFSA outperformed the standard PSO in terms of accuracy and stability on all tested functions.

In [50], a modified AFSA was applied to learning the parameters of a Bayesian network. To improve its stability and its ability to obtain global optima, the standard AFSA was hybridized with the variation factor of a genetic algorithm to modify the parameters of the artificial fish. In the experiments, both the standard AFSA and the proposed modification were applied to learning a Bayesian network to assess their performance comparatively. The findings showed that both algorithms can learn a Bayesian network's parameters with respectable convergence, with the modified AFSA converging better than the standard algorithm.

In summary, several variants of the original AFSA primarily aim to strengthen its stability and improve the search for global optima. Different approaches were taken to improve the algorithm's performance, including new fish behaviours [47], global communication [36, 48, 49], and a variation factor [50]. In one instance, the step parameter of the standard AFSA was eliminated due to its direct impact on the global search capacity of the artificial fish [47]. Frequently, the original behaviours of the AFSA (random movement, preying, swarming, and following) were modified to accommodate the changes applied in the improved algorithms [36, 47, 48, 50]. Modifications were often enabled by hybridizing the original algorithm with chaos search [36, 48], PSO [49], and a GA [50]. All variants were compared against the standard AFSA [36, 47,48,49,50]. Slightly under half of the variants in this section were also assessed against other algorithms, such as PSO [49] and BP [47]. The number of test functions used varied from two to eight [36, 48, 49]. Alternatively, other studies employed their modified AFSAs to train feed-forward neural networks [47] and learn Bayesian network parameters [50].

4.1.2 Improving the Convergence Speed and Optimization Accuracy

One of the shortcomings of the standard AFSA is its slow convergence during the later stages of its execution, which can impair its effectiveness as an optimization tool. Because the original algorithm uses only local information to search for better positions for the artificial fish, its optimization precision and convergence speed are unsatisfactory. One of the earliest variants addressing this problem is an improved AFSA (IAFSA) that uses global information to influence the behaviour of the artificial fish [52]. The position of each artificial fish is derived from a global value representing the best artificial fish. The algorithm further adopts the leaping [89] and swallowing [90] behaviours developed in the literature, respectively to help fish leap out of local extrema and to save memory space and speed up the iterative process. The experimental results showed that the proposed algorithm has a better convergence rate, higher global search accuracy, and lower computational complexity than the standard AFSA.
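The exact leaping and swallowing rules of [89] and [90] are not given here, so the following is only a hypothetical, simplified sketch of the two ideas: leaping perturbs a few randomly chosen fish once the best value has stalled for `patience` iterations, and swallowing removes fish whose fitness falls below a threshold. All names, thresholds, and the uniform perturbation are assumptions, and fitness is maximized.

```python
import random


def leap(school, best_history, patience=5, eps=1e-9, n_leap=2, beta=1.0, rng=random):
    """Hypothetical leaping: if the best value stalls, kick a few random fish."""
    stalled = (len(best_history) > patience and
               best_history[-1] - best_history[-1 - patience] < eps)
    if stalled:
        for i in rng.sample(range(len(school)), k=min(n_leap, len(school))):
            # Perturb every coordinate by up to +/- beta
            school[i] = [a + beta * rng.uniform(-1.0, 1.0) for a in school[i]]
    return school


def swallow(school, fitness, threshold):
    """Hypothetical swallowing: drop fish whose fitness is below `threshold`."""
    survivors = [x for x in school if fitness(x) >= threshold]
    # Never empty the school entirely: keep at least the best fish
    return survivors if survivors else [max(school, key=fitness)]
```

Swallowing shrinks the school, which is where the memory and time savings mentioned above would come from; leaping trades a little progress for a chance to escape a local extremum.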

A hybridization of the standard AFSA and PSO, the particle fish swarm algorithm (PFSA), was proposed to effectively search for global optimal solutions with higher precision and to overcome the weakness of the AFSA [53]. From PSO, the social concept was introduced to the PFSA with a globally best solution variable and adjustable step length to enhance the convergence speed. The crowd factor from the standard AFSA was also modified to allow the artificial fish to find solutions quickly and precisely. The PFSA proved better at producing optimization results for four well-known functions, with a faster convergence speed and higher precision than the standard AFSA.

An adaptive meta-cognitive AFSA (AMAFSA) was constructed in which artificial fish use their knowledge about their surrounding environment to improve the optimization performance [51]. The new algorithm introduces four variables to express meta-cognitive knowledge: the best state ever reached by the artificial fish, the best state ever tried, the number of times the first two variables were equal, and the count of the attenuation time. The performance of the AMAFSA was investigated on four benchmark functions against the standard AFSA and an adaptive AFSA, demonstrating that the approach elevates the adaptability of the artificial fish and accelerates the convergence rate.

Another modification to the standard AFSA, aimed at overcoming its low accuracy and slow convergence, was inspired by the symbiosis phenomenon observed as fish schools coordinate their movements in the water [54]. Symbiosis occurs when different fish develop cooperative interactions with each other to improve their survival. The symbiosis-based AFSA uses two artificial fish swarms to search the solution space cooperatively. Additionally, the step and visual parameters are adaptive, with their values determined by the average distance to all artificial fish in the swarm. Moreover, the swarming behaviour was modified with a weighted centre to draw the swarm towards superior artificial fish. Experiments compared the algorithm's performance against the unified PSO (UPSO) and the shuffled frog leaping algorithm (SFLA). The findings show that the new algorithm surpassed the other algorithms with faster convergence and higher accuracy on most functions, while retaining the same complexity as the standard AFSA.
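The two adaptive ingredients can be illustrated with a hypothetical sketch; it is not the formulation of [54]. It assumes that "average distance" means the mean pairwise Euclidean distance in the school, that Visual equals that distance and Step is a fixed fraction (`step_ratio`) of it, and that the weighted centre uses positive fitness values as weights.

```python
import math


def mean_distance(school):
    """Average pairwise Euclidean distance between fish in the school."""
    n = len(school)
    pairs = n * (n - 1) // 2
    if pairs == 0:
        return 0.0
    total = sum(math.dist(school[a], school[b])
                for a in range(n) for b in range(a + 1, n))
    return total / pairs


def adaptive_visual_step(school, step_ratio=0.5):
    """Hypothetical mapping: Visual = mean distance, Step = step_ratio * Visual."""
    visual = mean_distance(school)
    return visual, step_ratio * visual


def weighted_centre(mates, fitness):
    """Fitness-weighted centre; better fish pull harder (positive fitness assumed)."""
    weights = [fitness(p) for p in mates]
    total = sum(weights)
    dim = len(mates[0])
    return [sum(w * p[k] for w, p in zip(weights, mates)) / total for k in range(dim)]
```

As the school contracts around an optimum, the mean distance shrinks and so do Visual and Step, which is how such a scheme can shift automatically from exploration to fine-grained exploitation.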

To overcome the shortcomings discussed in this section, another algorithm improved upon the standard AFSA by incorporating the leader mode from the glowworm swarm optimization algorithm (GSOA) and the crossover and mutation operations from the genetic algorithm (GA) [55]. This self-adaptive artificial mutation-based fish school algorithm (AMAFSA) adjusts the visual and step parameters by taking into account the distance of each artificial fish from the other fish in the school. A neighbourhood sensing effect and a leader mode were also introduced to model repulsion/attraction effects and to attract other fish to better food concentrations, respectively. Mutation and crossover operations were added to escape local optima and maintain population diversity. The performance of the AMAFSA was compared with that of the standard AFSA and the GA; both the AFSA and the AMAFSA were able to find the global optima, but the AMAFSA converged significantly faster and with better precision than the standard AFSA.

An improved AFSA dynamically adjusts the visual and step parameters to improve the convergence rate and precision [56]. In addition, it introduces interactive learning between historical and global optimal information to improve the update strategy of the artificial fish. Experiments verified the performance of the proposed algorithm against the standard AFSA on three benchmark functions. The authors concluded that the proposed algorithm converges better than the other algorithms examined, with higher precision and accuracy.

The global AFSA (GAFSA) improved the global search ability of the AFSA by using the information of the globally optimal artificial fish to update the positions of the artificial fish. While the GAFSA performed better than the standard AFSA, its computational complexity increased, and it still exhibited slow convergence at later stages of the optimization process, as well as reduced accuracy. To further enhance convergence speed and accuracy, [57] incorporated two intelligent methods into the GAFSA, chaos search (CS) and a modified simplex method (MS), yielding the CS MS GAFSA, in which the visual parameter and crowding factor are dynamically adjusted to improve the global search capability, convergence speed, and optimization accuracy of the algorithm. Simulations comparing the CS MS GAFSA with the standard AFSA and the GAFSA demonstrated the improved convergence accuracy of the CS MS GAFSA.

A parallel adaptive AFSA based on differential evolution (PAAFSA-DE) was proposed to address several disadvantages of the standard AFSA [58]. In the PAAFSA-DE, the artificial fish population is divided into two subgroups of the same size, and adaptive mechanisms are applied to each subgroup to assign local search tasks to one group and global search tasks to the other. The local search group’s visual and step parameter values are adaptively changed by gradually decreasing them to enhance the convergence rate in the early stages of optimization and to improve the accuracy in the later stages. The global search group’s visual and step parameter values are large for the purpose of enlarging the search space. The two subgroups evolve independently from each other, and individual migration periodically occurs to ensure the exchange of information and the spread of superior individuals. Moreover, a differential evolution mechanism is employed when there is no change in the information for a certain time period, and thus, the algorithm avoids falling into a local optimum. The findings showed that PAAFSA-DE converged much faster and more accurately than the standard AFSA for all tested functions.
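Two ingredients of the PAAFSA-DE can be sketched independently of the fish behaviours: a differential evolution step applied when a subgroup stagnates, and periodic migration between the local and global subgroups. The sketch assumes the classic DE/rand/1/bin scheme with greedy selection and a "best replaces worst" migration rule; both are common choices and may not match [58] exactly, and all names are hypothetical. Fitness is maximized.

```python
import random


def de_generation(pop, fitness, F=0.5, CR=0.9, rng=random):
    """One DE/rand/1/bin generation with greedy selection (needs >= 4 fish)."""
    dim = len(pop[0])
    new_pop = []
    for i, x in enumerate(pop):
        # Three distinct partners, all different from the target fish i
        a, b, c = rng.sample([p for j, p in enumerate(pop) if j != i], 3)
        j_rand = rng.randrange(dim)  # guarantees at least one mutated coordinate
        trial = [a[k] + F * (b[k] - c[k])
                 if (rng.random() < CR or k == j_rand) else x[k]
                 for k in range(dim)]
        new_pop.append(trial if fitness(trial) >= fitness(x) else x)
    return new_pop


def migrate(local_group, global_group, fitness):
    """Exchange information: each subgroup's best replaces the other's worst."""
    best_local = list(max(local_group, key=fitness))
    best_global = list(max(global_group, key=fitness))
    worst_l = min(range(len(local_group)), key=lambda i: fitness(local_group[i]))
    worst_g = min(range(len(global_group)), key=lambda i: fitness(global_group[i]))
    local_group[worst_l] = best_global
    global_group[worst_g] = best_local
```

Greedy selection means a DE generation can never make any fish worse, so it is a safe perturbation to trigger when the information in a subgroup stops changing.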

Another modification to the standard AFSA introduced a dynamic adjustment scheme for the visual and step parameters to better balance convergence speed and optimization precision [59]. In this improved AFSA, the visual and step parameters are adjusted by a regulation factor that responds to changes during the iterations. The improved AFSA outperformed the standard AFSA in both convergence speed and optimization accuracy, and it also proved suitable for optimizing the parameters of a support vector machine.

In [61], an improved AFSA was proposed to enhance the convergence speed and search accuracy of the original algorithm using the adaptive mechanism and the crossover and mutation operators of the adaptive genetic algorithm. The main idea is that if the best AF does not change, or changes only slightly, during the iterations, the mutation operation preserves the state of the best individual and, with a certain probability, mutates a minority of the dimensions of each remaining AF. The algorithm was tested on five instances of the green wave traffic control problem, where it proved more feasible and effective than traditional methods.
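The stagnation-triggered mutation described above can be sketched as follows. This is a hypothetical Python illustration: stagnation is assumed to mean that the best fitness varied by less than a tolerance over a window of iterations, and "mutating a minority of dimensions" is assumed to mean resampling a few randomly chosen coordinates uniformly within their bounds. The parameter names (`window`, `tol`, `p_mut`, `n_dims`) are illustrative and not taken from [61].

```python
import random


def is_stagnant(best_history, window=5, tol=1e-8):
    """True if the best fitness varied by less than tol over the last `window` iterations."""
    if len(best_history) < window:
        return False
    recent = best_history[-window:]
    return max(recent) - min(recent) < tol


def mutate_on_stagnation(school, best_index, bounds, stagnant,
                         p_mut=0.1, n_dims=1, rng=random):
    """Hypothetical sketch: when the search stagnates, keep the best fish unchanged
    and, with probability p_mut, resample n_dims random coordinates of every other fish."""
    if not stagnant:
        return [list(fish) for fish in school]
    mutated = []
    for idx, fish in enumerate(school):
        fish = list(fish)  # copy so the caller's school is not modified
        if idx != best_index and rng.random() < p_mut:
            for d in rng.sample(range(len(fish)), n_dims):
                lo, hi = bounds[d]
                fish[d] = rng.uniform(lo, hi)
        mutated.append(fish)
    return mutated
```

Keeping `n_dims` small relative to the problem dimension preserves most of each fish's position, so the mutation perturbs rather than restarts the search.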

In [60], a hybrid artificial fish swarm algorithm was proposed to improve the convergence rate of the AFSA. The authors introduced the chemotactic behaviour of the bacterial foraging optimization algorithm (BFA) into the foraging behaviour of the fish and introduced Lévy flight into the random behaviour. The algorithm was tested with and without the newly introduced components, and the results showed that the proposed algorithm outperforms the other versions.
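Lévy flight is commonly implemented with Mantegna's algorithm, which draws heavy-tailed step lengths from the ratio of two Gaussian samples. The Python sketch below uses it to perturb a fish's position in place of the uniform random behaviour. The scaling factor `scale` and the exponent β = 1.5 are assumed values, and [60] may generate its Lévy steps differently.

```python
import math
import random


def mantegna_sigma(beta):
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """One Lévy-distributed step length (Mantegna's algorithm)."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    # Guard against the (practically impossible) v == 0 case.
    return u / max(abs(v), 1e-12) ** (1 / beta)


def levy_random_move(position, scale=0.01, beta=1.5, rng=random):
    """Hypothetical Lévy-flight variant of the AFSA random behaviour:
    each coordinate is shifted by an independent, scaled Lévy step."""
    return [x + scale * levy_step(beta, rng) for x in position]
```

Most Lévy steps are small, but occasional long jumps let a fish leave a poor region, which is the motivation for replacing the uniform random move.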

To summarize this section, most of the improvements made to the standard AFSA aimed to enhance the convergence rate and optimization accuracy. This was expected, as the main drawbacks of the original AFSA are its slow convergence in the later stages of execution and its limited overall precision. Slightly more than half of the AFSA variants surveyed in this section borrow behaviours from natural phenomena [54, 60], theoretical concepts [59], and other well-established optimization algorithms [51, 55, 58, 60]. Modifications were most commonly applied to the visual and step parameters, which is understandable, as their impact on convergence and optimization accuracy has previously been verified [52, 54,55,56,57,58,59]. In several instances, the crowding factor was dynamically adjusted to influence artificial fish migration [52, 53, 57]. Another AFSA variant adopted the leaping and swallowing fish behaviours proposed in other research to improve its performance [52]. Every AFSA variant in this section was assessed against the standard AFSA, and slightly more than half were also compared with other optimization algorithms. These include the adaptive AFSA [52], UPSO [54], the SFLA [54], two improved AFSAs [54], the GA [55, 56], PSO [56], and the GAFSA [57]. The test functions used in these comparative evaluations often differed across AFSA variants and varied in complexity.

4.1.3 Enhancing the Algorithm for Multimodal Optimization Problems

The standard AFSA has also been enhanced for multimodal optimization problems, which have multiple local optima around the global optimum [64]. In [62], two adaptive methods based on a fuzzy system were proposed. The first, fuzzy uniform fish (FUF), uniformly adjusts the step and visual parameters using the output of a fuzzy engine. The second, fuzzy autonomous fish (FAF), equips each artificial fish with its own fuzzy controller so that it can adjust its parameters independently of the swarm. The FAF and FUF algorithms considerably improved the effectiveness of the search, as the artificial fish were able to escape local optima more rapidly and search around the global optimum more accurately after convergence.

In another modification, niching was incorporated into the standard AFSA to enable the algorithm to find multiple optimal solutions [63]. In the resulting niche quantum AFSA (NQAFSA), the artificial fish are divided into several subswarms, each forming a niche, which explore the search space in parallel. A restricted competition selection strategy is used to maintain species diversity. The NQAFSA was compared with the niche genetic algorithm (NGA) on two test functions and found better solutions than the NGA on both. It was further compared with the standard AFSA on one of the test functions, where the artificial fish in the NQAFSA congregated around the optimal solution, while those in the standard AFSA failed to do so.

A novel cultured AFSA, named CAFAC, introduced a crossover operation to promote the diversification of the artificial fish and the inheritance of the parents' advantages in multimodal optimization problems [64]. Cultural algorithms (CAs) are a class of computational models derived from the principles of cultural evolution. In CAFAC, the AFSA is embedded in a CA to overcome the shortcomings of its uniform search process. Domain knowledge is extracted from the swarm and stored in a belief space that influences the evolution of the swarm at each iteration. Moreover, the group behaviours of the standard AFSA (swarming and following) use this knowledge to direct the movement steps of the artificial fish. On ten test functions, CAFAC achieved better performance and faster convergence than the crossover AFSA and the standard AFSA.

Another extension, the AFSAOCP, augments the standard AFSA with ocean current power (OCP) to influence the speed of fish activity [41]. The velocity of an ocean current affects the speed of the fish: fish swimming along the current are helped forward, while fish swimming against it are hampered. Accordingly, in the AFSAOCP, the artificial fish are divided into three subgroups depending on the influence of the current: positive, negative, and neutral. Each subgroup moves with a different step length, which can improve the evolution of the fish and the convergence speed of the algorithm. Ten multimodal test functions were used to compare the AFSAOCP with the standard AFSA, AAFSA1 (with an adaptive step length), AAFSA2 [73] (which introduces a new behaviour), and the IAFSA [53]. For most test functions, the AFSAOCP showed superior global search capability and faster convergence than the four other algorithms. The AFSAOCP also reduced computational complexity, although it proved more complex for simpler, lower-dimensional functions.

A hybrid swarm intelligence algorithm combining the standard AFSA with the artificial bee colony (ABC) algorithm was proposed in [65], using a two-stage treatment: reverse learning-based swarm initialization and interactive learning. To improve swarm diversity, a reverse solution was generated from the initial values, creating two candidate sequences for initialization, and the sequence with the better fitness was selected to improve the quality and efficiency of the solution. An interactive learning strategy was devised by dividing the swarm into two groups, where the group with the poorer fitness was replaced by the group with the better fitness. The proposed algorithm was compared with the dynamic weight niche-based artificial fish swarm algorithm (DN-AFS) and the random perturbation-based ABC algorithm (RP-ABC) on five well-known benchmark functions. The hybrid algorithm achieved better optimization accuracy and convergence speed, although at the cost of a longer running time.
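Reverse (opposition-based) learning is typically implemented by reflecting a point x within its bounds [lo, hi] to lo + hi − x. The Python sketch below applies it per individual, keeping the fitter of each random point and its opposite. Whether [65] compares individual solutions or whole sequences is not specified here, so the per-individual comparison, like the function names, is an assumption; minimization is assumed.

```python
import random


def opposite(x, bounds):
    """Opposite point of x: each coordinate reflected within its [lo, hi] bounds."""
    return [lo + hi - xi for xi, (lo, hi) in zip(x, bounds)]


def opposition_init(n, bounds, fitness, rng=random):
    """Opposition-based initialization (minimization): for each of n random
    points, keep whichever of the point and its opposite has lower fitness."""
    population = []
    for _ in range(n):
        x = [rng.uniform(lo, hi) for lo, hi in bounds]
        x_opp = opposite(x, bounds)
        population.append(x if fitness(x) <= fitness(x_opp) else x_opp)
    return population
```

Evaluating both the point and its opposite doubles the initialization cost, which is consistent with the longer running time reported for the hybrid algorithm.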

In [39], a modified version of the AFSA (MAFSA) was proposed to overcome the poor global search and weak local approaching abilities of the traditional AFSA. The modified algorithm mainly performs the preying behaviour; the group behaviours (following and swarming) are performed only when a fish cannot move one more step towards the optimal value, so that the fish keep moving in a better direction. Furthermore, the visual and step parameters are increased for the preying behaviour to achieve a better global search ability and decreased for the following and swarming behaviours to improve the local approaching ability of the algorithm. When tested on a representative multimodal function, the MAFSA performed better than the traditional AFSA under the same conditions.

In summary, the standard AFSA was originally intended for unimodal optimization problems, and several efforts have since been undertaken to adapt it to multimodal optimization. Modifications to the original algorithm have drawn on other technologies (fuzzy systems [62] and niche technology [63]), other optimization algorithms (the CA [64] and ABC algorithm [65]), or natural phenomena (OCP [41]). The majority of variants maintain the original fish behaviours of the AFSA (random movement, preying, swarming, and following), since the amendments were often applied to the overall procedure, parameters, or behaviour selection process. The exceptions are CAFAC [64] and the MAFSA [39], which modify the swarming, preying and following behaviours to direct the step movement of the artificial fish when performing group behaviours. Comparative assessments were often carried out against the standard AFSA [41, 62,63,64] using varying numbers of multimodal benchmark functions, ranging from as few as two [63] to ten [41, 64]. The AFSA variants were also assessed against other algorithms: GPSO [62], the NGA [63], three improved AFSAs [41], the DN-AFS [65], and RP-ABC [65].

4.1.4 Enhancing the Algorithm for Continuous Optimization Problems

A new algorithm, AFSA-CLA, hybridizes the standard AFSA with cellular learning automata (CLA) to solve optimization problems in continuous, static environments [71]. In the proposed algorithm, each search space is assigned to a cell of the CLA, and AFSA optimization takes place within that search space. The original AFSA was further changed so that the best artificial fish is not allowed to perform a random movement, which prevents it from moving to a worse fitness value. Six test functions were used to assess the performance of AFSA-CLA against the standard AFSA, the global version of PSO (GPSO), and cooperative PSO (CPSO). For most functions, AFSA-CLA and CPSO were more efficient than the AFSA and GPSO. AFSA-CLA also balanced global and local search better than CPSO and converged faster, because the CLA acts as a brain for each swarm and thus gives more control over the behaviour of the fish.

The traditional group search optimization (GSO) algorithm is an SI approach for continuous optimization problems that employs the producer-scrounger (PS) model as a framework representing the social foraging strategies of groups of animals. To overcome the tendency of GSO to become trapped in local optima, it was hybridized with the standard AFSA to form FSGSO [72]. The performance of FSGSO was compared with that of the standard GSO, PSO, and differential evolution (DE) algorithms on eight benchmark functions, and FSGSO achieved the best overall performance of the four algorithms.

4.1.5 Enhancing the AFSA for Dynamic Optimization Problems

Many real-world problems are riddled with uncertainty due to their dynamic environments and thus cannot be solved using static optimization techniques. To address dynamic optimization problems, a new algorithm extending the standard AFSA was proposed in [68]. In this modified AFSA (MAFSA), an artificial fish adopts the original AFSA behaviours but does not move unless the fitness of the new position is better than that of the current position. The MAFSA is further configured for dynamic environments (DMAFSA) by using multiple swarms that act independently of each other to cover all peaks in the moving peaks benchmark problem. The DMAFSA was compared with ten well-known algorithms for dynamic optimization, and the results showed that it is highly efficient owing to its ability to track optima, achieve suitable convergence rates, and perform local search.

The MAFSA in [68] was recently extended further in [70] to cope with dynamic environments. In the proposed parent–child AFSA (PCAFSA), the swarms are divided into parent, non-best child, and best child swarms with different configurations. Parent swarms are responsible for locating undiscovered peaks within an appropriate timeframe, whereas child swarms cover the discovered peaks and continue tracking them after an environmental change. The moving peaks benchmark problem was used to compare the efficiency of the PCAFSA against 22 state-of-the-art algorithms, and the proposed algorithm exhibited high convergence speed and high accuracy compared with the other algorithms.

An earlier modification, the new AFSA (NAFSA), was proposed to overcome several weaknesses of the standard algorithm and improve its convergence speed in dynamic environments [69]. A multiswarm NAFSA (mNAFSA) was proposed for dynamic optimization by using multiple swarms that act independently of each other according to the NAFSA procedure. To cope with dynamic environments, the visual parameter is updated according to the maximum number of peak movements in the problem space. The efficiency of the mNAFSA is further improved by a novel sleeping-awakening mechanism, in which awake swarms evaluate the fitness in the search space and asleep swarms do not. On the moving peaks benchmark, the basic NAFSA was compared with the standard AFSA and several PSO variants. As expected, the standard AFSA exhibited the worst results, while the NAFSA achieved a high convergence rate in dynamic environments.

4.1.6 Enhancing the AFSA for Multi-Objective Optimization Problems

The earliest effort, the global AFSA (GAFSA), adapted the standard AFSA for multi-objective optimization by using the concept of Pareto dominance to evaluate artificial fish: if an artificial fish Pareto dominates a second fish, the first fish is considered superior to the second [66]. The GAFSA maintains three sets: the artificial fish swarm set, a nondominated set, and an external record set. The first stores the positions of the artificial fish; the second stores the Pareto optimal solutions found in each iteration; and the third stores all Pareto optimal solutions and represents the final result of the algorithm. Four multi-objective functions were used to compare the performance of the GAFSA, the standard AFSA, and the nondominated sorting genetic algorithm (NSGA). All three algorithms found the Pareto optimal fronts for three of the four functions; however, the front found by the GAFSA was more uniformly distributed, and fewer of its solutions lay off the front. For the fourth test function, whose front was nonconvex and nonuniformly distributed, the GAFSA performed worse than the NSGA, as it required more iterations to find the Pareto optima.
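Pareto dominance and the external record set can be sketched as follows. This is a generic Python illustration for minimization, with objective vectors as plain tuples; the function names are hypothetical rather than taken from [66]. Here `nondominated` plays the role of the per-iteration nondominated set, and `update_archive` that of the external record set.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (minimization):
    a is no worse in every objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated(points):
    """Return the distinct points not dominated by any other point, in input order."""
    unique = list(dict.fromkeys(tuple(p) for p in points))
    return [p for p in unique if not any(dominates(q, p) for q in unique)]


def update_archive(archive, candidates):
    """Merge the current iteration's solutions into the external record set."""
    return nondominated(list(archive) + list(candidates))


if __name__ == "__main__":
    front = nondominated([(1, 3), (2, 2), (3, 1), (2, 3), (3, 3)])
    print(front)  # prints [(1, 3), (2, 2), (3, 1)]
```

The quadratic pairwise check is adequate for small swarms; larger archives usually rely on faster nondominated sorting.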

A global AFSA that uses chaos search and a differential evolution mechanism [67] was proposed for multi-objective optimization problems; it accelerates convergence by allowing only the best artificial fish to execute the global optimization search. On two test functions, the proposed algorithm showed better diversity and search ability for Pareto optimal solutions than the standard AFSA.

In [38], the authors improved the AFSA for multi-objective optimization problems by introducing an iterative deletion (ID) algorithm, yielding the IDAFSA. The IDAFSA nominates AFs for deletion every few iterations. To verify the effectiveness of the proposed algorithm, two groups of optimizations were carried out based on the basic AFSA and the IDAFSA, and the results showed that the IDAFSA outperformed the basic AFSA.

4.1.7 Enhancing the AFSA for Constrained Optimization Problems

To efficiently address constrained global optimization problems, two heuristics were incorporated into the standard AFSA: the modified AFSA (mAFS) and the modified AFSA with priority (mAFS-P) [73]. The first heuristic, mAFS, modifies the original random movement, the preying behaviour, and the inherited leaping movement. mAFS was further improved into mAFS-P by adding a priority-based technique that speeds up fish movements by reducing the number of function evaluations and executing the swarming and following behaviours based on priority. A numerical experiment on 25 test functions compared the two proposed algorithms with adaptive simulated annealing (ASA), a population-based evolution strategy with covariance matrix adaptation (CMA-ES), and a population-based particle swarm approach (PSwarm). On average, mAFS-P performed better than mAFS and outperformed both ASA and PSwarm. However, CMA-ES proved superior, with a better average performance than mAFS-P.

The AFSFilter algorithm extends the original AFSA with a filtering strategy for constrained global optimization problems [74]. At each iteration, the filter method is applied to a population of trial solutions to decide whether each trial solution is acceptable with respect to the current solution. AFSFilter was tested on six well-known engineering design problems and showed competitive performance on most of them. These findings encourage the application of the algorithm to more complex problems.
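For readers unfamiliar with filter methods, the sketch below shows the generic acceptance test used in filter-based constraint handling: each point is summarized by its constraint violation θ and its objective value f, and a trial point is accepted if, against every filter entry, it sufficiently reduces either θ or f. The margin `gamma`, the violation measure, and the function names are assumptions; the exact acceptance conditions of AFSFilter [74] may differ. Minimization with constraints of the form g(x) ≤ 0 is assumed.

```python
def constraint_violation(x, constraints):
    """theta(x): total violation of constraints written as g(x) <= 0."""
    return sum(max(0.0, g(x)) for g in constraints)


def acceptable(theta, f, filter_entries, gamma=1e-5):
    """A trial point (theta, f) is acceptable if, for every filter entry
    (theta_j, f_j), it sufficiently reduces the violation or the objective."""
    return all(
        theta <= (1 - gamma) * theta_j or f <= f_j - gamma * theta_j
        for theta_j, f_j in filter_entries
    )


def add_to_filter(theta, f, filter_entries):
    """Insert (theta, f) and drop the entries it dominates."""
    kept = [(t, v) for t, v in filter_entries if not (theta <= t and f <= v)]
    kept.append((theta, f))
    return kept
```

The filter avoids a penalty parameter: feasibility and objective improvement are treated as two separate goals, and a point only needs to improve one of them relative to each stored entry.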

4.2 Fish School Search (FSS) Algorithm and Variants

FSS is a stochastic, population-based SI search algorithm inspired by the behaviour of fish schools as they search for food, swim together, and breed [13]. The artificial school in FSS was designed to perform a search that automatically switches between exploitation and exploration depending on the success of its artificial fish. Unlike the AFSA, the original FSS uses the successes accumulated during the search process instead of local information. FSS was initially developed as a unimodal optimization algorithm that excelled at self-regulating the trade-off between exploration and exploitation, and it has since been extended to other optimization problems. The main characteristics of FSS include its global search ability, autonomy, scalability, minimal centralized control, and distinct diversity mechanisms. Nevertheless, the original FSS suffers from several disadvantages, mainly its low exploitation ability and high implementation complexity.

Several variations of FSS exist in the literature, many of which adjust the original model to extend its optimization capabilities. Our survey found 11 publications between the conception of FSS in 2008 and April 2020 that aimed to enhance FSS for multimodal, dynamic, and multi-objective optimization problems. Table 2 categorizes FSS and its variants by general optimization topic, and the year-wise distribution of the surveyed studies is shown in Fig. 4.

The rest of this section describes the original FSS algorithm, its procedures, and fish-inspired operations. Next, the section explores the various modifications applied to the original FSS to extend its performance for several optimization problems.

4.2.1 Fish School Search Algorithm

The FSS algorithm was first proposed in 2008 by Bastos-Filho et al. [13], drawing inspiration from the gregarious behaviour that helps fish schools survive in nature. The search process in FSS is carried out by a school of fish composed of individuals with limited memory. As in the AFSA [9], each fish in the FSS algorithm represents a single solution to a particular optimization problem. The algorithm uses information from every fish to direct the search process towards the best region of the search space. In contrast to the AFSA, the fish in FSS use their weights as an innate memory of their success; therefore, the algorithm does not need to maintain a memory of the best locations visited by all fish. FSS also incorporates the idea of evolution through a combination of information from the parents (breeding) and collective swimming.

The algorithm derives characteristics from real fish schools as they group to perform the following observable behaviours (i.e., the FSS operators): feeding, swimming, and breeding. The feeding and swimming behaviours were examined in more detail in a later publication [75].

Feeding was inspired by the inherent instinct of fish to search for food to survive and procreate. The feeding operator in FSS considers the weight of each fish, which increases when the search for food is successful (food intake) and decreases while swimming and when the search for food fails. All fish start with the same weight of 1. The variation in a fish's weight is proportional to the normalized difference between the fitness values at the current and new positions. The weight of each fish is updated as follows:

\[ W_i(t+1) = W_i(t) + \frac{\Delta f_i}{\max(\Delta f)} \]

where \(W_i\) is the weight of fish i, \(\Delta f_i\) is the difference in fitness between the current and new positions of fish i, and \(\max(\Delta f)\) is a function that returns the maximum fitness difference among all the fish.
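The feeding update can be written directly in Python. The sketch below assumes, as in the individual movement, that a fish only moves when it improves, so every Δf_i is non-negative and is 0 for fish that did not move. It leaves the weights unchanged when no fish improved (avoiding a division by zero) and optionally caps the weights at `w_scale`, an assumed upper bound that this section does not describe.

```python
def feed(weights, delta_f, w_scale=None):
    """FSS feeding operator: W_i <- W_i + delta_f_i / max(delta_f).

    delta_f[i] is the fitness gain of fish i in the individual movement
    (0 for fish that did not move). w_scale, if given, is an assumed upper
    bound on the weights.
    """
    max_df = max(delta_f)
    if max_df <= 0:
        return list(weights)  # no fish improved: weights unchanged
    new_weights = [w + df / max_df for w, df in zip(weights, delta_f)]
    if w_scale is not None:
        new_weights = [min(w, w_scale) for w in new_weights]
    return new_weights


if __name__ == "__main__":
    print(feed([1.0, 1.0, 1.0], [0.0, 2.0, 1.0]))  # prints [1.0, 2.0, 1.5]
```

Because the gains are normalized by the largest gain, the most successful fish gains exactly one unit of weight per iteration, regardless of the scale of the fitness function.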

The swimming behaviour was inspired by the coordinated movement of all the fish in a school. It is driven by feeding and guides the search process. Swimming in FSS is categorized into three forms of movement: individual, collective-instinctive, and collective-volitive movements.

Individual movement occurs for each fish in the school during every iteration of the FSS algorithm. Each fish selects a candidate position, which is evaluated with the fitness function. The candidate position is obtained by adding to each dimension of the current position a random number drawn from a uniform distribution on [−1, 1], multiplied by a predetermined step:

\[ n_i = x_i + \text{rand}(-1, 1)\, step_{ind} \]

where \(x_i\) is the current position of the fish in dimension i, \(n_i\) is the neighbouring (candidate) position of the fish in dimension i, rand(−1, 1) is a function that returns a random number from a uniform distribution in the specified interval, and \(step_{ind}\) is a percentage of the search space amplitude. However, the fish moves to the new position only if it has better fitness than the current position. The fitness difference and displacement are then evaluated as follows:

\[ \Delta f = f(\vec{n}) - f(\vec{x}), \qquad \Delta \vec{x} = \vec{n} - \vec{x} \]

where f is the fitness function, \(\Delta f\) is the fitness difference, and \(\Delta \vec{x}\) is the displacement. The displacement is evaluated only if the individual movement occurs; otherwise, \(\Delta \vec{x} = 0\). The individual step is computed as a percentage of the search space amplitude and decreases linearly with each iteration to improve exploitation in later iterations:

\[ step_{ind}(t+1) = step_{ind}(t) - \frac{step_{ind}^{initial} - step_{ind}^{final}}{iterations} \]

where \(step_{ind}^{initial}\) and \(step_{ind}^{final}\) are the initial and final step values and iterations is the total number of iterations.

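The collective-instinctive movement is then applied to every fish: each fish is displaced by the fitness-weighted average of the individual displacements of the school, so that the school drifts in the direction of the most successful individual moves:

\[ \vec{I}(t) = \frac{\sum_{i=1}^{N} \Delta \vec{x}_i\, \Delta f_i}{\sum_{i=1}^{N} \Delta f_i}, \qquad \vec{x}_i(t+1) = \vec{x}_i(t) + \vec{I}(t) \]

where N is the number of fish. Finally, the collective-volitive movement contracts the school towards its weighted barycentre \(\vec{B}\) if the total weight of the school increased during the iteration (a sign of successful search, favouring exploitation) and dilates it away from \(\vec{B}\) otherwise (favouring exploration):

\[ \vec{B}(t) = \frac{\sum_{i=1}^{N} \vec{x}_i(t)\, W_i(t)}{\sum_{i=1}^{N} W_i(t)}, \qquad \vec{x}_i(t+1) = \vec{x}_i(t) \mp step_{vol}\, \text{rand}(0, 1)\, \frac{\vec{x}_i(t) - \vec{B}(t)}{\text{distance}(\vec{x}_i(t), \vec{B}(t))} \]

where the minus sign (contraction) applies when the total weight has increased and the plus sign (dilation) otherwise, \(step_{vol}\) is a predetermined step used to control the displacement from/to the barycentre, and distance is a function that returns the Euclidean distance between the barycentre and the current position of the fish.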
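The three swimming movements (individual, collective-instinctive, and collective-volitive) can be sketched in Python following the standard FSS formulation: a fish accepts a random neighbour only if it improves; the instinctive movement shifts every fish by the fitness-weighted mean displacement; and the volitive movement contracts the school towards its weighted barycentre when the total weight increased and dilates it otherwise. The sketch assumes maximization (a minimization problem can be handled by maximizing −f), draws one rand(0, 1) value per fish in the volitive movement, and uses the closed form step(t) = step_initial − (step_initial − step_final)·t/T of the linear step decrease. Function names are illustrative.

```python
import math
import random


def linear_step(step_init, step_final, t, t_max):
    """Individual step at iteration t (closed form of the linear decrease)."""
    return step_init - (step_init - step_final) * t / t_max


def individual_move(school, f, step_ind, rng=random):
    """Move each fish to a random neighbour only if fitness improves (maximization).
    Returns the new school, the displacements and the fitness gains."""
    new_school, dx, df = [], [], []
    for x in school:
        n = [xi + rng.uniform(-1.0, 1.0) * step_ind for xi in x]
        gain = f(n) - f(x)
        if gain > 0:
            new_school.append(n)
            dx.append([ni - xi for ni, xi in zip(n, x)])
            df.append(gain)
        else:  # no improvement: the fish stays, with zero displacement and gain
            new_school.append(list(x))
            dx.append([0.0] * len(x))
            df.append(0.0)
    return new_school, dx, df


def instinctive_move(school, dx, df):
    """Shift every fish by the fitness-weighted mean of the individual displacements."""
    total = sum(df)
    if total == 0:
        return [list(x) for x in school]
    drift = [sum(d[k] * g for d, g in zip(dx, df)) / total for k in range(len(school[0]))]
    return [[xi + ik for xi, ik in zip(x, drift)] for x in school]


def barycentre(school, weights):
    """Weight-averaged position of the school."""
    total = sum(weights)
    return [sum(x[k] * w for x, w in zip(school, weights)) / total
            for k in range(len(school[0]))]


def volitive_move(school, weights, weight_increased, step_vol, rng=random):
    """Contract towards the barycentre if the school gained weight, else dilate."""
    b = barycentre(school, weights)
    sign = -1.0 if weight_increased else 1.0
    moved = []
    for x in school:
        dist = math.dist(x, b)
        if dist == 0:  # a fish sitting on the barycentre has no direction to move
            moved.append(list(x))
            continue
        r = rng.random()
        moved.append([xi + sign * step_vol * r * (xi - bi) / dist for xi, bi in zip(x, b)])
    return moved
```

One FSS iteration would call `individual_move`, then the feeding update, then `instinctive_move`, and finally `volitive_move`, with `weight_increased` comparing the school's total weight before and after feeding.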

Breeding was inspired by natural selection, where successful individuals are given the capacity to procreate, while weaker fish are more likely to perish. Breeding refines the search process: the algorithm selects candidates for breeding by considering all fish that reach a certain weight threshold (an indicator of success) and choosing the one with the maximum ratio of weight to distance. Each selected couple generates one child at a time. The weight of the child is the average of its parents' weights, and its initial position is the midpoint between its parents. This behaviour represents the refinement that is desired within a search process after successful operation, thus achieving exploitation. The breeding operations are carried out in FSS as follows:

\[ \overrightarrow{W}_k = \frac{\overrightarrow{W}_i + \overrightarrow{W}_j}{2}, \qquad \overrightarrow{x}_k = \frac{\overrightarrow{x}_i + \overrightarrow{x}_j}{2} \]

where \({\overrightarrow{W}}_{k}\) is the weight of the new fish k, \({\overrightarrow{W}}_{i}\) and \({\overrightarrow{W}}_{j}\) are the weights of its parents i and j, respectively, and \({\overrightarrow{x}}_{k}\) is the position of the new fish k based on the positions of its parents i and j.
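The breeding step can be sketched as follows. The text does not say which candidate acts as the first parent or what happens to the population size, so this Python sketch assumes that the heaviest fish above the threshold is the first parent, that its partner is the other candidate with the largest weight-to-distance ratio, and, through the hypothetical helper `replace_lightest`, that the child replaces the lightest fish so that the school size stays constant.

```python
import math


def breed(school, weights, threshold):
    """Hypothetical FSS breeding sketch. Returns (i, j, child_weight, child_position)
    or None if fewer than two fish reach the weight threshold."""
    candidates = [k for k, w in enumerate(weights) if w >= threshold]
    if len(candidates) < 2:
        return None
    i = max(candidates, key=lambda k: weights[k])  # assumption: heaviest fish breeds first

    def ratio(j):
        # Weight-to-distance ratio used to choose the partner of fish i.
        d = math.dist(school[i], school[j])
        return math.inf if d == 0 else weights[j] / d

    j = max((k for k in candidates if k != i), key=ratio)
    child_weight = (weights[i] + weights[j]) / 2
    child_position = [(a + b) / 2 for a, b in zip(school[i], school[j])]
    return i, j, child_weight, child_position


def replace_lightest(school, weights, child_weight, child_position):
    """Assumed population control: the child takes the slot of the lightest fish."""
    k = min(range(len(weights)), key=weights.__getitem__)
    school[k] = child_position
    weights[k] = child_weight
```

Placing the child at the midpoint of two successful parents concentrates the search between good regions, which is the exploitation effect described above.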

The performance of the original FSS [13] was compared with three versions of the PSO algorithm (the original PSO with the globally best topology, the constriction PSO with the globally best topology, and the constriction PSO with the locally best topology) on two simple unimodal problems and three highly complex multimodal functions containing many local optima. All five were minimization problems. FSS outperformed the original PSO with the globally best topology in all cases. Interestingly, on some multimodal functions FSS performed better than both the original PSO and its variations. Surprisingly, FSS performed much better on the multimodal functions than on the unimodal ones, although it remained effective on the latter. These results suggest that FSS is a promising search tool for unstructured high-dimensional spaces, and they indicate a desirable balance between the exploration and exploitation abilities of the algorithm.

In a subsequent publication, the influence of each FSS swimming operator (individual, collective-instinctive, and collective-volitive movements) on the search process was explored in depth [75]. Two main analyses were conducted: the first examined the influence of the swimming operators, and the second compared the performance of FSS with several PSO variants. For the first analysis, six minimization functions (three multimodal and three unimodal) were used as benchmarks. To isolate the influence of each swimming operator, the individual movement was first switched on alone, and then three configurations were tested on top of it: instinctive movement, volitive movement, and both instinctive and volitive movements. The volitive movement contributed most to improving the search process, followed by the instinctive movement. The individual movement nevertheless remains essential, as it triggers the other swimming operations. Performance comparisons were also carried out with three PSO variants (the original PSO with the globally best topology, the constriction PSO with the globally best topology, and the constriction PSO with the locally best topology) on the six functions used in the first analysis. As in the original FSS paper [13], FSS outperformed the original PSO on some of the multimodal functions. Moreover, FSS was reported to perform better on the simple functions, even though it evaluates the objective function twice per fish in each iteration and therefore performed only half as many iterations as PSO for the same number of fitness evaluations.

4.2.2 Enhancing FSS for Multimodal Optimization Problems

The earliest extensions to FSS aimed to address multimodal optimization problems, which are characterized by multiple peaks around the global optimum. One such enhancement introduced a density segregation mechanism to FSS to allow each fish to obtain a different optimal solution [76]. The new algorithm, dFSS, allows partitioning of the school into subgroups, where each subgroup corresponds to one possible solution. The dFSS was compared with NichePSO and Glowworm Swarm Optimization (GSO), where it showed better performance in terms of the total number of iterations and the total number of entities in the swarm.

The dFSS algorithm was further enhanced to overcome the high computational overheads by introducing weights for fish in subgroups [77]. The results, based on a set of multimodal benchmark functions, showed that the proposed algorithm outperformed dFSS in terms of the computational complexity.

Half a decade after the original FSS algorithm was presented, an enhanced version, FSS-II, was proposed to overcome several drawbacks of the original FSS [78], namely its low exploitation capability, the need to evaluate the fitness function twice per fish in each iteration, and its relatively high implementation complexity compared with other swarm-based algorithms (e.g., PSO). FSS-II evaluates the fitness function only once per fish in each iteration. To test its performance, simulations were performed on six benchmark minimization problems, comparing FSS-II with the original FSS and two variants of PSO: the standard version with the global topology (GPSO) and the standard version with the local topology (LPSO). FSS-II outperformed the other algorithms in these comparisons.

Similar to dFSS [77], a weight-based FSS (wFSS) determines the relationships between leader and follower fish, based on their weights, to find solutions for multimodal problems at low computational costs [79]. The performance of wFSS was compared with that of NichePSO and dFSS using seven benchmark functions (similar to those used in dFSS [76]). The results showed that wFSS was able to return a more diversified set of correct solutions, as its performance exceeded that of dFSS on almost all of the benchmark functions while using fewer fitness calls than dFSS.

The effectiveness of stagnation avoidance routines (SARs) in improving the exploratory abilities of the original FSS and wFSS [79] was investigated for combinatorial optimization problems with multi-plateau search spaces [80]. The new algorithms incorporate SARs into the individual component of the movement operator, allowing a fish to move to a position that does not improve its fitness if a randomized threshold is satisfied. However, only fish that have improved their fitness through the individual component contribute to the collective-instinctive movement. To assess the exploration abilities of FSS, wFSS, PSO, and the SAR extensions, two multi-plateau functions, L-shaped and C-shaped, were defined and used as benchmark functions. On the L-shaped function, the SAR versions and wFSS converged to optimal fitness values, whereas PSO did not. On the C-shaped function, wFSS converged, but more slowly than wFSS-SAR and FSS-SAR.
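The stagnation avoidance routine can be illustrated as a small change to the individual movement. In this hypothetical Python sketch (maximization assumed), a non-improving candidate is still accepted with probability `sar_threshold`, and the returned flag records whether the fish actually improved, since only improving fish contribute to the collective-instinctive movement. The exact form of the randomized threshold in [80] may differ.

```python
import random


def individual_move_sar(x, f, step_ind, sar_threshold, rng=random):
    """Individual movement with a stagnation avoidance routine (maximization).

    Returns (new_position, improved). A non-improving candidate is accepted
    with probability sar_threshold; improved is True only for a genuine gain,
    so only such fish should feed the collective-instinctive movement.
    """
    candidate = [xi + rng.uniform(-1.0, 1.0) * step_ind for xi in x]
    if f(candidate) > f(x):
        return candidate, True
    if rng.random() < sar_threshold:
        return candidate, False  # drift across a plateau without counting as success
    return list(x), False
```

On a plateau every candidate has the same fitness, so without the routine the fish would never move; the random acceptance lets it wander until it reaches a region where fitness changes.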

A new variant of the FSS algorithm, RL-FSSA, introduced a rate learning-based capability to refine the search strategy of FSS [81]. In its refinement phase, RL-FSSA seeks the positions of the best fish found so far and refines them through a rate-learning process. A set of five unimodal and multimodal benchmark functions was used to compare the performance of RL-FSSA with that of the original FSS. The results show that the proposed algorithm surpasses FSS with a shorter execution time and better convergence quality and stability.

More recently, FSS and wFSS were used to optimize the energy consumption of a lighting system [82]. wFSS is a weight-based version of FSS intended to provide multiple solutions to multimodal optimization problems. Its strategy relies on an operator called the link formator, which defines leaders that fish follow to form sub-schools [15]. The performance of FSS and wFSS was compared with that of two deterministic algorithms. The execution time of wFSS was only two seconds higher, which suggests that wFSS is suitable for solving real-time problems.
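A link formator along these lines can be sketched in Python. This is a hypothetical illustration assuming that each leaderless fish inspects one randomly chosen school-mate and follows it if it is heavier; it is not the exact operator of [79] or [82], and the rules wFSS uses to break links and to move followers are omitted. The helper names `link_formator` and `sub_schools` are our own.

```python
import random
from typing import Dict, List, Optional

def _root(leaders: List[Optional[int]], i: int) -> int:
    """Follow leader links from fish i to the top of its chain."""
    while leaders[i] is not None:
        i = leaders[i]
    return i

def link_formator(weights: List[float], leaders: List[Optional[int]],
                  rng: random.Random) -> List[Optional[int]]:
    """Hypothetical link formator: each leaderless fish may follow a heavier random peer."""
    n = len(weights)
    if n < 2:
        return leaders
    for i in range(n):
        if leaders[i] is not None:
            continue  # already a follower
        j = rng.randrange(n - 1)
        if j >= i:
            j += 1  # uniform choice among the other n - 1 fish
        # Follow only heavier fish, and never create a cycle of links.
        if weights[j] > weights[i] and _root(leaders, j) != i:
            leaders[i] = j
    return leaders

def sub_schools(leaders: List[Optional[int]]) -> Dict[int, List[int]]:
    """Group fish by the root leader of their chain; each group is one sub-school."""
    groups: Dict[int, List[int]] = {}
    for i in range(len(leaders)):
        groups.setdefault(_root(leaders, i), []).append(i)
    return groups
```

Because a link is only formed towards a heavier fish whose leader chain does not lead back to the follower, the links form a forest, and each tree corresponds to one sub-school.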

In summary, the FSS variants presented in this section illustrate different approaches to adapting the original FSS to multimodal optimization problems. Often, the original operators were refined, particularly the collective-instinctive and collective-volitive movements [76,77,78,79,80]. In some instances, new operators were introduced alongside modifications to the original behaviours. For instance, dFSS introduced memory and partitioning operations to mimic food-sharing behaviour in place of foraging as a single swarm [76], and the link formator operator was introduced to define leaders that fish follow to form sub-schools [82]. In other instances, the original operators were kept and a refinement phase was added to control the search process; one such case is RL-FSSA, where a learning-based refinement phase improves the local search ability of the original FSS [81]. Different numbers of multimodal benchmark functions were used to assess the FSS variants against the original FSS [77, 80, 81] and against other algorithms, including NichePSO [76, 78], GSO [76], dFSS [78, 79], GPSO [77], LPSO [77], wFSS [80] and constriction PSO [80].

4.2.3 Enhancing FSS for Dynamic Optimization Problems

Despite its many advantages, FSS also has some disadvantages. One way to overcome them is to combine FSS with other algorithms; conversely, some features of FSS can compensate for the weaknesses of other algorithms. One such case is volitive PSO, a hybrid that combines PSO with FSS to overcome PSO's inability to generate diversity after environmental changes (i.e., in dynamic optimization) [83]. The FSS collective-volitive operator autoregulates the exploration-exploitation balance during execution. In volitive PSO, each particle is assigned a weight that drives the collective-volitive movement, i.e., the expansion or contraction of the swarm. The hybrid was compared with three PSO variants (inertia PSO, restart PSO and charged PSO) and with FSS on the DF1 benchmark function. On average, volitive PSO achieved better results than the other four algorithms, with a higher mean fitness and a lower standard deviation.
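The collective-volitive operator that volitive PSO borrows from FSS can be sketched as follows. The sketch shows the FSS operator on its own, not the hybrid of [83]; it assumes a per-fish uniform random factor and a fixed volitive step `step_vol`.

```python
import numpy as np

def collective_volitive(positions, weights, prev_total_weight, step_vol, rng):
    """Collective-volitive movement of FSS.

    If the school's total weight increased since the last iteration, the search
    was successful and the school contracts towards its weighted barycenter
    (exploitation); otherwise it expands away from it (exploration).
    """
    barycenter = (positions * weights[:, None]).sum(axis=0) / weights.sum()
    diff = positions - barycenter
    dist = np.linalg.norm(diff, axis=1, keepdims=True)
    dist[dist == 0] = 1.0  # a fish on the barycenter has diff == 0 and stays put
    sign = -1.0 if weights.sum() > prev_total_weight else 1.0
    r = rng.uniform(0.0, 1.0, size=(len(positions), 1))
    return positions + sign * step_vol * r * diff / dist
```

Contraction when the total weight grows concentrates the school around promising regions, while expansion after an unsuccessful iteration restores diversity, which is the property volitive PSO relies on after environmental changes.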

4.2.4 Enhancing FSS for Multi-Objective Optimization Problems

Because FSS can self-regulate its search, it lends itself to extension to multi-objective optimization problems. The multi-objective FSS (MOFSS) was recently proposed for this purpose [84]. MOFSS adapts the operators of the original FSS and uses an external archive (EA) to store the best solutions found during the search. A turbulence operator is also applied to the EA at every iteration to prevent the school from becoming stuck in local minima. Seven multi-objective benchmark functions were used to compare MOFSS with well-known multi-objective optimizers: OMOPSO, MOPSO-CDR, SMPSO and SPEA2. MOFSS converged on most of the benchmark functions, with the fish becoming trapped in local minima in two instances. Although MOFSS performed promisingly on problems with few local minima, its relative performance suffered on problems with many local minima.
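An external archive of the kind used by MOFSS is typically maintained as a set of mutually non-dominated solutions. The sketch below assumes that every objective is minimized and that the archive is unbounded; practical archives usually cap their size (for example, with a crowding criterion), and the turbulence operator of [84] is not shown.

```python
from typing import Any, List, Sequence, Tuple

Entry = Tuple[Any, Tuple[float, ...]]  # (solution, objective vector)

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b when every objective is minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def update_archive(archive: List[Entry], candidates: List[Entry]) -> List[Entry]:
    """Add candidates to the archive, keeping only mutually non-dominated entries."""
    for sol, obj in candidates:
        obj = tuple(obj)
        # Reject a candidate that is dominated by, or duplicates, an archived point.
        if any(dominates(a_obj, obj) or a_obj == obj for _, a_obj in archive):
            continue
        # Drop archived points that the new candidate dominates, then insert it.
        archive = [(s, o) for s, o in archive if not dominates(obj, o)]
        archive.append((sol, obj))
    return archive
```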

4.3 Algorithms Inspired by Specific Fish Species

The algorithms discussed so far, the AFSA and FSS, were derived from generalized observations of fish. Several other publications drew inspiration from activities or unique behaviours exhibited by particular fish species. The ebb-tide-fish algorithm (ETFA) was proposed to address nonlinear and multimodal optimization problems [85]. It draws inspiration from the foraging behaviour of fish in ebb tides, where fish communicate via special sounds to lead other fish to areas with more abundant food. The algorithm uses a simple updating scheme that encourages both movement towards the global optimum and local search. ETFA was compared with PSO, the bat algorithm (BA), differential evolution (DE) and cat swarm optimization (CSO) on two benchmark functions. The results showed that ETFA achieved the best convergence speed and optimization precision of the five algorithms, and its simplicity was reflected in a lower time complexity than those of the other algorithms.

The distributed perception algorithm (DPA) drew inspiration from the behaviour of golden shiner fish [86], a strongly gregarious species whose members can form shoals of up to 250 individuals. The DPA models the perception mechanism of golden shiners, which prefer shaded areas, together with the individual and distributed perception that helps them navigate their environment. Four standard test functions were used to compare the DPA with the well-established PSO algorithm. The results indicated that the DPA is competitive with PSO, with much room for further improvement.

Elephant-nose fish search for food using active electrolocation: they sense their surroundings by generating electric fields and detecting distortions in those fields. Sharks, on the other hand, use passive electrolocation, sensing the bioelectric fields generated by other fish to locate them. These two behaviours inspired the fish electrolocation optimization (FEO) algorithm, which combines both techniques [87]. The fish in the algorithm can therefore both construct an electric wave to analyse electrical images and sense the electric pulses emitted by other fish. The fish divide the search space into multiple zones, and each fish toggles between passive and active electrolocation according to a toggle-switch judgement. The electric pulses are then used to compute the distance to a prey object. FEO was evaluated in simulation against a real-coded genetic algorithm (RCGA), accelerated particle swarm optimization (ACPSO), PSO, harmony search (HS), DE and simulated annealing on eleven well-known benchmark functions. FEO outperformed the other algorithms in terms of the mean number of function evaluations and the success rate.

In [88], the authors proposed the electric fish optimization (EFO) algorithm, inspired by the behaviour of electric fish, which rely on electrolocation to sense their environment. Active and passive electrolocation are modelled as local and global search mechanisms, respectively, as they are considered good candidates for balancing exploitation and exploration. To turn the behaviour of electric fish into a heuristic, the model assumes that the longer a fish stays at a good food source, the faster it grows, and thus the higher the amplitude of the electric signals it produces relative to other fish. EFO was evaluated extensively against six well-known heuristics: simulated annealing (SA), vortex search (VS), the genetic algorithm (GA), differential evolution (DE), particle swarm optimization (PSO) and the artificial bee colony (ABC) algorithm, using a wide selection of benchmark problem sets. The results indicated that EFO performed considerably better than almost all existing approaches in the literature on bound-constrained, clustering and real-world design problems.
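The growth-and-amplitude assumption can be made concrete with a small sketch. The formulas below are our assumption of one plausible formalization, not necessarily the equations of [88]: each fish receives a frequency in [`f_min`, `f_max`] from its normalized fitness (minimization), its amplitude is an exponential moving average of that frequency with smoothing factor `alpha`, and a fish chooses active (local) electrolocation when its frequency exceeds a uniform random number.

```python
import numpy as np

def update_amplitudes(fitness, prev_amplitude, alpha=0.5, f_min=0.0, f_max=1.0):
    """Assumed EFO-style frequency and amplitude update (minimization).

    The best fish gets frequency f_max and the worst gets f_min. The amplitude is
    an exponential moving average of the frequency, so a fish that keeps a good
    position over several iterations accumulates a high amplitude.
    """
    best, worst = fitness.min(), fitness.max()
    if worst == best:
        freq = np.full_like(fitness, f_max, dtype=float)
    else:
        freq = f_min + (worst - fitness) / (worst - best) * (f_max - f_min)
    amplitude = alpha * prev_amplitude + (1.0 - alpha) * freq
    return freq, amplitude

def choose_mode(freq, rng):
    """Assumed rule: higher-frequency fish are more likely to search locally (active)."""
    return np.where(freq > rng.uniform(size=freq.shape), "active", "passive")
```

Under this formulation, a fish that keeps finding good positions accumulates a large amplitude over successive iterations, matching the intuition that a well-fed fish grows and emits stronger signals.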

5 Discussion and Conclusions

Optimization algorithms are used to find optimal or near-optimal solutions to a given optimization problem. Such problems are often hard, and there is usually no efficient algorithm that can guarantee an optimal solution in a reasonable amount of time. To cope with this challenge, new search algorithms, some of them inspired by biological behaviours, have been introduced, since their underlying characteristics support their successful application to difficult optimization problems. Their strength lies in their ability to produce solutions much more quickly with simpler implementations; they are also more flexible and robust than existing approaches.

Fish-inspired heuristics are among the bioinspired approaches that have been widely applied in complex optimization domains, despite being relatively recent. Both main categories of fish heuristics, the AFSA (artificial fish swarm algorithm) and FSS (fish school search), offer several advantages, among them robustness, rapid convergence to global optima, a small number of parameters, applicability to both continuous and discrete optimization problems, insensitivity to initial settings and inherent parallelism. Nevertheless, some drawbacks have also been identified, such as slow convergence in the later stages of optimization, a lack of balance between global and local search, weak exploitation and high implementation complexity. Modifications to the original algorithms were therefore needed to improve their performance and overcome these drawbacks.

This paper reviewed seminal fish-heuristic articles and the various modifications proposed for their application to general optimization problems. The survey shows clear growth in the literature on fish-inspired heuristics over the past decade. The majority of works extended the well-established AFSA, which is understandable given that it predates FSS. The modifications reviewed were categorized by their aim: improving stability, the search for global optima, convergence speed, and constrained and continuous optimization (for the AFSA), and handling multimodal, dynamic and multi-objective optimization (for both the AFSA and FSS). As detailed in this survey, the proposed extensions of both the AFSA and FSS were compared against other optimization algorithms to examine their performance.

The AFSA and FSS algorithms were inspired by behaviours that are often shared by many fish species and schools. A few papers deviated from this approach, however, and were influenced by unique behaviours exhibited by certain species of fish. These algorithms have drawn inspiration from the behaviours of ebb-tide fish, golden shiner fish, elephant-nose fish, and electric fish for various optimization problems, as summarized in this survey.

The large number of papers on variants of fish-inspired heuristics in different domains shows that these optimization methods are very much alive in recent literature and are likely to remain prominent in the future. Nevertheless, as in other areas of this vibrant field, further research is still needed to overcome the challenges posed by different types of hard optimization problems. We suggest the following research directions:

1. Most fish-inspired heuristics have been oriented towards continuous optimization problems; in particular, new variants were mostly tested on continuous benchmark functions to demonstrate their advantages over the original methods. Combinatorial optimization problems, where the variables are discrete, are another very challenging category. Although some attempts have been made to tackle such problems with fish heuristics (mostly to improve other methods, such as clustering approaches, neural networks and support vector machines), many other combinatorial problems, such as vehicle routing, planning and scheduling, could still be addressed with fish heuristics. A related issue is constraint handling and how fish heuristics can be adapted to constraint satisfaction problems. We believe that applying fish heuristics to these problems will help to compare and contrast their utility with that of other optimization algorithms, such as evolutionary algorithms, which are well known for solving them.

2. Dynamic problems, where the search environment is not fixed and the optimal solution may change as the search progresses, pose another challenge in general optimization. Despite some attempts to tackle them, as in [68,69,70] for the AFSA and [83] for FSS, the developed methods remain mostly confined to benchmark dynamic functions. The use of fish-inspired heuristics for real-life dynamic and stochastic optimization problems remains largely unexplored.

3.